INTRODUCTION

The ability to hear and understand speech is important for classroom learning. To support students in these environments, standards for optimal acoustics recommend noise levels no greater than 35 dBA and reverberation times no greater than 0.6 sec in unoccupied classrooms less than 10,000 ft³ (American National Standards Institute [ANSI] 2010; American Speech-Language-Hearing Association, 1995). However, research indicates that the recommended standards often are not met (Crandell & Smaldino, 1994; Knecht et al. 2002; Sato & Bradley, 2008). For example, Knecht et al. (2002) evaluated acoustical conditions in 32 unoccupied classrooms across suburban, urban and rural school districts. They found noise levels ranging from 34.4 to 65.9 dBA and reverberation times from 0.2 to 1.25 sec. Of the classrooms tested, noise levels were below the recommended maximum in only four classrooms and reverberation times were below the maximum in 19. Sato and Bradley (2008) evaluated 20 unoccupied and occupied elementary-grade classrooms. The overall noise level across unoccupied classrooms was above the recommended maximum at 42.2 dBA while the reverberation time was below the maximum at 0.45 sec. Reported noise levels were even higher when classrooms were occupied (also see Picard & Bradley 2001 for a review).

Numerous studies have shown that noise and reverberation in classrooms can negatively affect speech intelligibility, conversational interactions, cognitive skills, comprehension, and academic performance (Bradley & Sato 2008; Dockrell & Shield 2006; Jamieson et al. 2004; Klatte et al. 2007; Klatte, Hellbruck et al. 2010; Klatte, Lachmann et al. 2010; McKellin et al. 2007; McKellin et al. 2011; Neuman et al. 2010; Shield & Dockrell 2008; Stansfield et al. 2005; Yang & Bradley, 2009). Adverse acoustic conditions can be especially problematic for children in elementary grades where noise levels may be higher than in classrooms for older students (Knecht et al. 2002; Bradley & Sato 2008). Dockrell and Shield (2006) found that young elementary-grade children’s performance on non-verbal time-limited tasks was negatively impacted by background speech babble and more so when the speech babble was combined with environmental noise. However, for verbal tasks that were not time limited, performance was negatively impacted by speech babble but not by babble combined with environmental noise. In a separate study, Shield and Dockrell (2008) reported that both internal and external noise affected elementary-grade children’s performance on standardized achievement measures; however, younger children were less affected by external noises than their older peers. Klatte, Lachmann et al. (2010) examined first- and third-grade children’s performance on a listening comprehension task conducted in a classroom with a favorable reverberation time in quiet and in two noise conditions: speech and environmental noise. Younger children were more affected by noise than older children, but both groups were more negatively impacted by speech than by environmental noise.

Elementary-grade children’s difficulties understanding speech in adverse acoustic environments may be exacerbated by the fact that speech perception skills in noise and reverberation are still developing (Bradley & Sato 2008; Elliot 1979; Fallon et al. 2000; 2002; Johnson 2000). Additionally, students often encounter new information presented under conditions requiring attention to both the teacher and other students, some of whom may not be easily visualized. The additional listening effort required during such tasks may impact higher level cognitive processes such as comprehension of educational material being presented.

When investigating how children understand speech in realistic acoustic conditions such as those found in classrooms, the type of task used to measure this skill impacts the interpretation of results. Speech intelligibility tasks that require only repetition of phonemes, words or sentences provide important information regarding speech understanding. However, they may not assess higher-level cognitive skills required for comprehension during more complex listening tasks (Klatte et al. 2007; Klatte, Hellbruck et al. 2010; Klatte, Lachmann et al. 2010; Prodi et al. 2010; Prodi et al. 2013). Klatte et al. (2007) reported a negative effect of speech noise on first-grade children’s performance for short-term memory and sentence-comprehension tasks even with no effect on a word identification task. Klatte and colleagues (Klatte, Lachmann et al. 2010) suggested that studies with listening tasks designed to simulate school lessons may be necessary to reveal the effects of acoustical environment on comprehension.

Examining performance using typical classroom tasks (e.g., tasks that include both auditory and visual input, comprehension versus identification tasks) presented in plausible classroom acoustical environments may provide a realistic model of the challenging situations children face. Investigating performance in conditions that are not representative of typical listening situations (e.g., noise or reverberation alone) lacks ecological validity as a measure of how children will perform in real-world listening environments. While testing in actual classrooms can provide ecological validity, the active nature of these environments may not provide experimental control necessary for some measurements.

To address the above issues, a simulated classroom environment was created to examine the effect of acoustic environment (signal to noise ratio (SNR), reverberation time (RT), visual component, talker location) on speech understanding (Valente et al. 2012). Valente et al. reported results of two experiments conducted to examine simple (sentence repetition) and complex (comprehension) speech understanding tasks in children and adults in this simulated environment. For the comprehension task, audiovisual recordings of a teacher and four students located around the listener (discussion condition) or a teacher only, located in front of the listener (lecture condition), reading lines from a play were used. Half of the participants listened in each condition and at the end, all answered questions about the content of the play. During this task, listeners’ looking behavior was monitored. It was hypothesized that looking behavior could have an impact on comprehension. Specifically, attempts to locate and look at talkers during the discussion condition could use cognitive resources that would otherwise be allocated for comprehension. This may be especially true in children, whose speech-perception in noise skills are still developing. For the sentence-repetition task, participants repeated sentences presented auditory-only by a single talker either from the front loudspeaker (lecture) or randomly from the five loudspeakers located around the listener (discussion). This task was chosen to allow comparisons to previous studies that used sentence-length materials to examine children’s speech understanding in a variety of acoustical environments (Crandell 1993; Kenworthy et al. 1990; Neuman et al. 2010; Yacullo & Hawkins 1987; Wroblewski et al. 2012).

In a first experiment, an SNR of 10 dB was chosen based on average levels from multiple classrooms reported by Bradley and Sato (2008) and an RT of 0.6 sec was selected to represent a reasonable classroom based on maximum recommended levels from ANSI (2010). In this acoustic environment, children’s performance for the comprehension task was poorer than adults and comprehension scores were significantly lower for those who participated in the discussion condition (five talkers) than for those in the lecture condition (one talker). Analysis of looking behavior revealed that children looked around more than adults during the discussion condition. Despite the fact that children were more likely to attempt to look toward talkers, their comprehension scores were poorer than those of adults, indicating that greater attempts to look at talkers did not improve comprehension for children. In contrast to the comprehension task, all participants scored above 95% correct for the sentence-repetition task, with no significant differences across age or listening condition. The results of the sentence-repetition task suggested that performance for the comprehension task could not be attributed to poor speech-recognition abilities under the typical classroom acoustics used in the study.

A second experiment was conducted in more adverse acoustical environments (SNR = 7 dB, RT = 0.6 sec; SNR = 10 dB, RT = 1.5 sec; SNR = 7 dB, RT = 1.5 sec). Although scores on the sentence-repetition task decreased, all listeners scored above 82%. Results for the comprehension task revealed that in increasingly poorer acoustics, comprehension scores for all age groups in the discussion condition decreased compared to the results for those in the lecture condition. However, younger children performed more poorly than older children and adults in either condition and in all acoustical environments, suggesting that they could not compensate for the greater listening effort required for the task. Together, these experiments suggest that tests using simple speech-recognition tasks, even with degrading acoustics, may not be sufficient to address how children will perform during tasks requiring higher-level cognitive processing.

While poor classroom acoustics can affect educational performance for all students, the impact for listeners with hearing loss may be greater than for listeners with normal hearing (NH) (Anderson et al. 2005; Anderson & Goldstein 2004; Bess et al. 1986; Blair et al. 1985; Crandell, 1993; Finitzo-Heiber & Tillman, 1978; Ruscetta et al, 2005). Approximately 11–15% of children between 6 and 16 years of age in the United States exhibit a hearing loss ≥16 dB HL in one or both ears and children with minimal/mild hearing loss (MMHL) represent greater than 33% of that population (Bess et al, 1998; Niskar et al, 1998). MMHL can be defined broadly as either bilateral hearing loss (3-frequency pure-tone average ≥20 and < 50 dB HL or thresholds >25 dB HL at one or more frequencies above 2 kHz in both ears) or unilateral hearing loss (3-frequency pure-tone average >20 dB HL in the poorer ear and ≤15 dB HL in the better ear or thresholds >25 dB HL at one or more frequencies above 2 kHz in the poorer ear).

Children with MMHL often are placed in mainstream classrooms with limited additional educational support services (Bess et al, 1998; English & Church, 1999; Lieu et al. 2012; Yoshinaga-Itano et al. 2008). Yet, children with MMHL may experience difficulties in speech perception in adverse listening conditions, as well as delays in speech/language and social/emotional development (Bess & Tharpe 1986; Bess et al. 1998; Blair et al. 1985; Bovo, et al. 1988; Crandell 1993; Johnson, et al. 1997; Stein 1983). The academic achievement of many children with MMHL also is poorer than for children with NH (Bess & Tharpe 1986; Bess et al. 1998; Oyler et al. 1988).

Children with MMHL are likely to experience inconsistent access to auditory information during periods of auditory-skill development due to the combined effects of non-optimal acoustical environments, elevated hearing thresholds, and the absence or limited use of amplification. Weaker foundational auditory skills may affect higher perceptual processes, particularly in complex listening environments. Classroom discussions that include rapidly changing talkers (with associated changes in talker location), less reliable visual cues, and the introduction of new information can pose particular difficulty for children with MMHL. The effort to locate talkers and decode the speech signal may expend cognitive resources that otherwise would be used for comprehension (Bess et al. 1998; Hicks & Tharpe 2002; McFadden & Pittman 2010).

To examine how MMHL affects children’s speech understanding in realistic acoustic environments, the current study compared sentence repetition and story comprehension for a group of children with MMHL and age-matched children with NH using the experimental task from the first experiment described in Valente et al. (2012). Children listened to a play read by the teacher and four students (SNR = 10 dB; RT = 0.6 sec). Looking behavior was monitored during this task. They also completed a sentence-repetition task in the same acoustic environment.

Linguistic abilities play a role in children’s comprehension of speech and children with MMHL have been shown to demonstrate poorer language skills than their peers with NH in some areas (Bess et al. 1998; Blair et al., 1985; Keller & Bundy, 1980; Lieu et al., 2010; Wake et al., 2006). Thus, standardized language measures also were included to examine potential contributions to performance on the comprehension task.

It was hypothesized that there would be little difference between children with NH and children with MMHL for sentence repetition but significant differences in comprehension. It also was hypothesized that children with MMHL would make more attempts to look at talkers during the comprehension task than their peers with NH.

METHODS

Participants

For the current study, MMHL was defined as bilateral hearing loss (3-frequency pure-tone average (PTA) ≥20 and ≤45 dB HL or thresholds >25 dB HL at one or more frequencies above 2 kHz in both ears) or unilateral hearing loss (3-frequency PTA >20 dB HL in the poorer ear and ≤15 dB HL in the better ear or thresholds >25 dB HL at one or more frequencies above 2 kHz in one ear). Eighteen 8- to 12-year-old children with MMHL (8 with unilateral hearing loss [UHL], 10 with bilateral hearing loss [BHL]) and 18 age-matched children with NH participated in the study. In order to recruit sufficient numbers of children with MMHL, the distribution of children across years of age was not equal. Children were divided into younger (8–10 yrs) and older (11–12 yrs) age categories. This division resulted in nearly equal numbers of children for the two age categories (8 and 10, respectively) for those with NH and those with MMHL. All children scored within 1.25 SD of the mean on the Wechsler Abbreviated Scale of Intelligence (WASI; 1999).

This study was approved by the Institutional Review Board (IRB) for Boys Town National Research Hospital and assent/consent was obtained for all children. Children were paid $15 per hour for their participation and received a book at the completion of the study.

Simulated acoustic environment

The simulated acoustic environment consisted of a physical room and a virtually-modeled room (a detailed description of the creation and validation of the environment is presented in Valente et al. 2012). In brief, the physical room was fitted with passive acoustical treatments and loudspeakers and LCD monitors on desks were arranged around a child’s location (Fig. 1). The ambient environment in the physical room had 0.35 sec reverberation time [1 kHz] and 32.4 dBA background noise at the child’s location. The virtually-modeled room was created using real-time simulation techniques (Braasch et al. 2008) with a virtual-room modeling technique (Virtual Microphone Control; ViMiC) that approximated the acoustics of a physical room. The virtual room had the same dimensions as the physical room and virtual microphones and sound sources were positioned at the locations of the physical loudspeakers and monitors. The simulated room included direct sound as well as first-order and late reflections. In addition, background noise had a spectrum comparable to that produced by heating, ventilation, and air-conditioning (HVAC) systems. Following the acoustic design used in the first experiment of Valente et al. (2012), the levels of speech and noise at the child’s location were 60 dBA and 50 dBA, respectively, resulting in a 10 dB SNR and RT was set to 0.6 sec.

Figure 1.

Simulated classroom set-up.
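The level arithmetic behind the acoustic design can be sketched as follows. This is a minimal illustration, not the calibration procedure used in the study: the function names are hypothetical, and the combined level assumes that speech and noise add as incoherent sources, a value that is not reported in the text.

```python
import math


def snr_db(speech_level_dba: float, noise_level_dba: float) -> float:
    """Signal-to-noise ratio in dB: the difference between the two levels."""
    return speech_level_dba - noise_level_dba


def combined_level_db(levels_db) -> float:
    """Energetic (incoherent) sum of several sound levels, in dB.

    Assumption for illustration only: the sources are uncorrelated,
    so their powers, not their pressures, add.
    """
    total_power = sum(10.0 ** (level / 10.0) for level in levels_db)
    return 10.0 * math.log10(total_power)


# Levels at the child's location, as stated in the text.
speech_dba = 60.0
noise_dba = 50.0

print(snr_db(speech_dba, noise_dba))                         # 10.0
print(round(combined_level_db([speech_dba, noise_dba]), 1))  # 60.4
```

With a 10 dB SNR the noise contributes only about 0.4 dB to the overall level, which is why the SNR rather than the total level is the quantity of interest here.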

Procedures

Procedures described below follow those of the discussion condition in Experiment 1 from Valente et al. (2012) with the exception that language measures were included as part of the current study.

Sentence recognition

Children listened to and repeated 50 auditory-only Bamford-Kowal-Bench sentences (BKB; Bench et al. 1979) presented quasi-randomly from each of the five loudspeakers. The BKB sentences have three or four target words each. Only those sentences with three target words were used for the current study. The sentences were spoken by a single female talker and digitally recorded in a sound booth using a condenser microphone (AKG Acoustics C535 EB) with a flat frequency response (±2 dB) from 0.2 to 20 kHz. Sentences were presented one at a time and the screen of each LCD monitor was illuminated red when a sentence was being presented from the corresponding loudspeaker. Responses were scored for correct repetition of each target word (total = 150 words).
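The word-level scoring described above reduces to a simple proportion. The sketch below is illustrative only; the function name and the example response pattern are hypothetical and are not data from the study.

```python
def percent_correct(words_correct_per_sentence, targets_per_sentence=3):
    """Percent of target words repeated correctly across all sentences.

    words_correct_per_sentence: one integer per sentence, giving the
    number of target words the child repeated correctly.
    """
    for n_correct in words_correct_per_sentence:
        if not 0 <= n_correct <= targets_per_sentence:
            raise ValueError("count must lie between 0 and targets_per_sentence")
    total_targets = targets_per_sentence * len(words_correct_per_sentence)
    return 100.0 * sum(words_correct_per_sentence) / total_targets


# Hypothetical child: 48 sentences fully correct, 2 sentences with one word missed.
responses = [3] * 48 + [2] * 2
print(len(responses) * 3)                     # 150 target words in total
print(round(percent_correct(responses), 1))   # 98.7
```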

Comprehension

Individual video recordings from Valente et al. (2012) of a teacher and four students reading lines from a 10-minute elementary-age-appropriate “Reader’s Theater” play (Shepard 2010) were used. The content of the play was unknown to all children. The talkers were located at −135, −90, 0, 30 and 135 degrees azimuth relative to the child. The talkers each acted as different characters and their speech did not overlap with any other talker during the task. After the play ended, children were asked a series of 18 factual questions (e.g., Who told Leif not to go and work for the troll?; What did the troll tell Master Maid to cook in the stew?). They responded orally and their answers were recorded by the experimenter for later scoring. None of the questions could be answered with yes/no. Fifteen of the questions were based on information provided individually by the five talkers (three for each talker), and three questions were based on information provided by multiple talkers.

Looking Behavior

Children were instructed to look around as much or as little as needed during the listening tasks. To monitor looking behavior during the classroom listening task, each child wore a custom-designed micro-electro-mechanical-system (MEMS) gyroscopic head-tracking device (Analog Devices, EVAL-ADXRS610) attached to a headband. The head tracker was polled every 100 ms for head rotation angle. Output was fed to a Teabox (Electrotap LLC) that converted the tracking data into a digital signal.

Following the procedures described in Valente et al. (2012), children’s looking behavior was analyzed in two ways. First, proportion of events visualized (POEV) represented the proportion of time a listener looked directly at each of the talkers when they were speaking. For this measure, the known visual angle of each of the simulated talkers relative to the listener was compared to the gyroscopic data for that listener. Second, overall looking behavior was determined by examining raw head-angle recordings for each child. For this measurement, the standard deviation (SD) of the head track represented the degree of head movement from midline.
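The two looking-behavior measures can be sketched from a sampled head-angle trace. This is a rough illustration under stated assumptions, not the analysis code from Valente et al. (2012): the ±15 degree tolerance for counting a sample as "looking at" a talker, the use of the sample standard deviation, and all function names are hypothetical choices made for the example, and the short trace is made up.

```python
import statistics


def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two azimuths, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def poev(head_angles, talker_angles, tolerance_deg=15.0):
    """Proportion of speaking samples in which the head points at the talker.

    head_angles: head rotation per sample (e.g., one sample per 100 ms).
    talker_angles: azimuth of the active talker per sample, or None when
    nobody is speaking. tolerance_deg is an assumed window, not a value
    taken from the study.
    """
    speaking = [(h, t) for h, t in zip(head_angles, talker_angles) if t is not None]
    if not speaking:
        raise ValueError("no samples with an active talker")
    hits = sum(1 for h, t in speaking if angular_difference(h, t) <= tolerance_deg)
    return hits / len(speaking)


def overall_looking_sd(head_angles):
    """Sample standard deviation of the head track, in degrees."""
    return statistics.stdev(head_angles)


# Made-up trace: talkers at 0, 30, -90 and 135 degrees take turns.
head = [0.0, 5.0, 28.0, 33.0, -80.0, -92.0, 10.0, 0.0]
talker = [0.0, 0.0, 30.0, 30.0, -90.0, -90.0, None, 135.0]

print(round(poev(head, talker), 2))  # 0.86 (6 of 7 speaking samples)
print(overall_looking_sd(head))
```

A listener who never turns away from the front would have an SD near zero and a POEV no higher than the share of time the front talker speaks, which is why the two measures capture different aspects of looking behavior.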

Language Measures

To examine potential effects of linguistic skills, all children completed the following norm-referenced tests: Peabody Picture Vocabulary Test (PPVT IV; Dunn & Dunn 2007) and three subtests of the Comprehensive Assessment of Spoken Language (CASL; Carrow-Woolfolk 2012): Grammaticality Judgment (GJ), Inferences (Inf), and Non-Literal Language (NLL). The PPVT and the GJ subtest assess vocabulary and syntax, respectively, and the Inf and NLL subtests assess metalinguistic competencies. These measures are widely used both clinically and experimentally to evaluate language skills.

RESULTS

The current study examined the effects of MMHL on speech understanding in noise and reverberation. Within the group of children with MMHL, it was possible that there would be differences across measures as a function of type of hearing loss (UHL versus BHL). To examine this possibility, separate ANOVAs were conducted of the MMHL group with type of hearing loss as the between subjects factor. Results revealed no significant differences across type of loss for performance on either the comprehension [F(1,16) = .009, p = .927, ηp2 = .001] or sentence repetition [F(1,16) = 2.959, p = .105, ηp2 = .156] tasks. In addition, there were no significant effects of type of loss for POEV [F(1,16) = .142, p = .712, ηp2 = .009] or overall looking behavior [F(1,16) = .031, p = .863, ηp2 = .002]. Thus, for the tasks examined in the current study, children with UHL and those with BHL performed similarly and were combined for the remaining analyses.
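For readers who wish to check the effect sizes, partial eta squared can be recovered from each F ratio and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the four values reported above; the function and variable names are illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared computed from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F ratios for type of hearing loss (UHL versus BHL), each with df = (1, 16).
reported = {
    "comprehension": (0.009, 1, 16),
    "sentence repetition": (2.959, 1, 16),
    "POEV": (0.142, 1, 16),
    "overall looking behavior": (0.031, 1, 16),
}

for label, (f_value, df_effect, df_error) in reported.items():
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.3f}")
# comprehension: 0.001
# sentence repetition: 0.156
# POEV: 0.009
# overall looking behavior: 0.002
```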

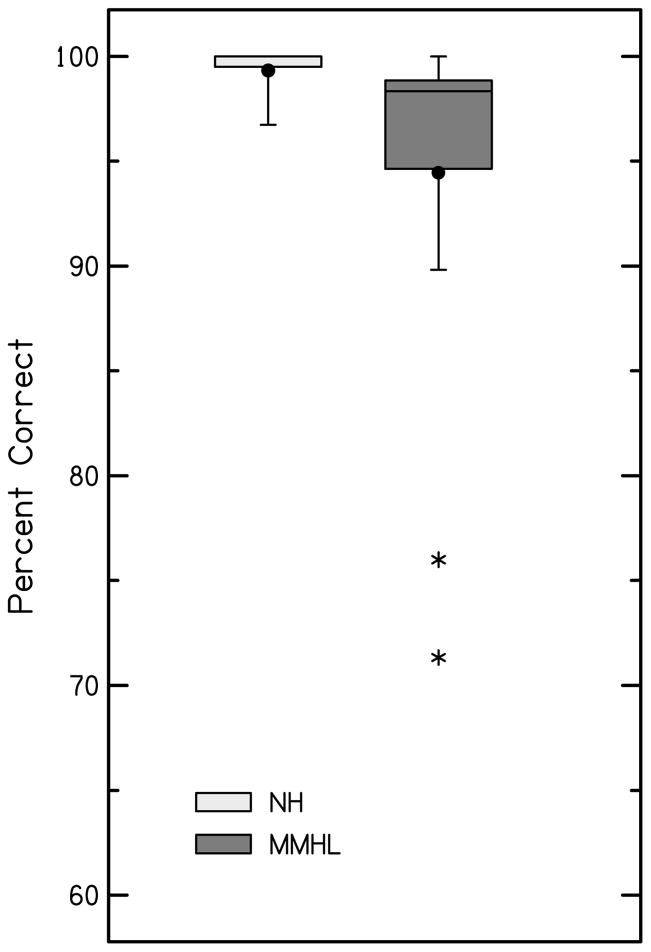

Results for the sentence recognition task indicated generally high scores for children with NH and with MMHL (Figure 2). All except two children with MMHL had scores ≥ 89% correct, in good agreement with results for adults and children with NH from Valente et al. (2012). The high levels of performance and limited variability for both groups precluded additional statistical analysis. However, these findings indicate that under the acoustic conditions used in this study, children with NH and those with MMHL may perform near ceiling on a simple sentence-repetition task.

Figure 2.

Scores (% correct) for the sentence recognition task for the children with NH (light gray) and children with MMHL (dark gray). Boxes represent the interquartile range and whiskers represent the 5th and 95th percentiles. For each box, lines represent the median and filled circles represent the mean scores. Asterisks represent values that fell outside the 5th or 95th percentiles.

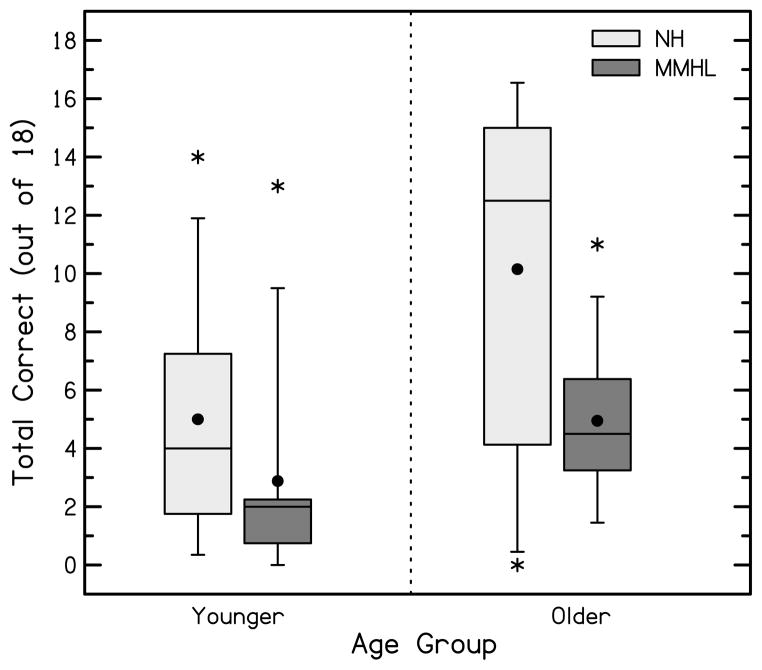

Results for the comprehension task are shown in Figure 3 in the form of box and whisker plots. In general, results revealed poorer performance and greater variability for children with NH and children with MMHL when compared to performance on the sentence-repetition task. At younger ages, mean performance for both groups of children was <9/18 and children with MMHL (M = 2.9; sd = 4.2) performed more poorly than children with NH (M = 5.0; sd = 4.6). Examination of individual scores revealed that one younger child with NH and one with MMHL scored considerably higher on the task than the remainder of the children in those groups. In the group with NH, the highest scorer answered 14/18 questions correctly compared to 8/18 for the next highest scoring child. In the group with MMHL, the child with the best performance answered 13/18 questions correctly compared to 3/18 for the next highest scorer. At older ages, mean performance for children with MMHL also was <9/18 (M = 5.0; sd = 2.9), and the highest older performer answered 11/18 questions correctly compared to 7/18 for the next highest scorer. Mean performance for older children with NH was higher (M = 10.2; sd = 6.6), with all but three children answering >9/18 questions correctly. The scores for these low performers ranged from 0 to 3/18. A two-way analysis of variance (ANOVA) with age (younger, older) and hearing loss (NH, MMHL) as independent variables revealed significant effects of age [F(1,32) = 5.015, p = .032, ηp2 = .135] and hearing loss [F(1,32) = 5.155, p = .030, ηp2 = .139] but no age x hearing loss interaction [F(1,32) = 0.908, p = .348, ηp2 = .028]. In general, most children with MMHL performed more poorly than their peers with NH, and younger children in both groups performed more poorly than older children.

Figure 3.

Scores (total correct/18) for the comprehension task for the children with NH (light gray) and children with MMHL (dark gray). Boxes represent the interquartile range and whiskers represent the 5th and 95th percentiles. For each box, lines represent the median and filled circles represent the mean scores. Asterisks represent values that fell outside the 5th or 95th percentiles.

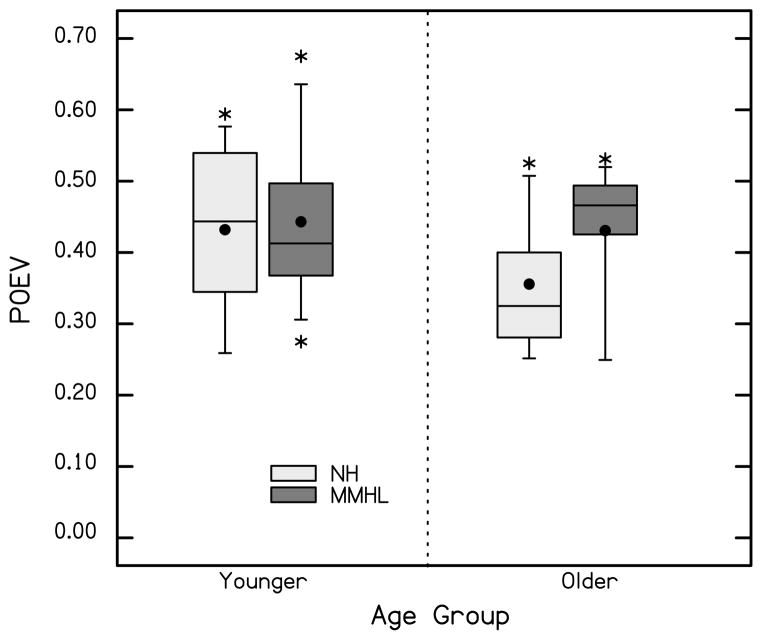

POEV as a function of age and group is shown in Figure 4. Looking behavior data were not available for one child with NH. On average, children with NH and children with MMHL were looking at individual talkers as they spoke less than 50% of the time (young NH: M = 0.43, sd = 0.13; older NH: M = 0.36, sd = 0.10; young MMHL: M = 0.44, sd = 0.13; older MMHL: M = 0.43, sd = 0.19). There was no significant difference in POEV across hearing loss [F(1,31) = 1.268, p = .269, ηp2 = .039] or age [F(1,31) = 1.347, p = .255, ηp2 = .042] and no age x hearing loss interaction [F(1,31) = 0.700, p = .409, ηp2 = .042]. In this task with rapidly changing talkers, children with NH and those with MMHL did not differ in how often they were looking directly at talkers when those talkers spoke.

Figure 4.

Proportion of events visualized (POEV) during the comprehension task for the children with NH (light gray) and children with MMHL (dark gray). Boxes represent the interquartile range and whiskers represent the 5th and 95th percentiles. For each box, lines represent the median and filled circles represent the mean scores. Asterisks represent values that fell outside the 5th or 95th percentiles.

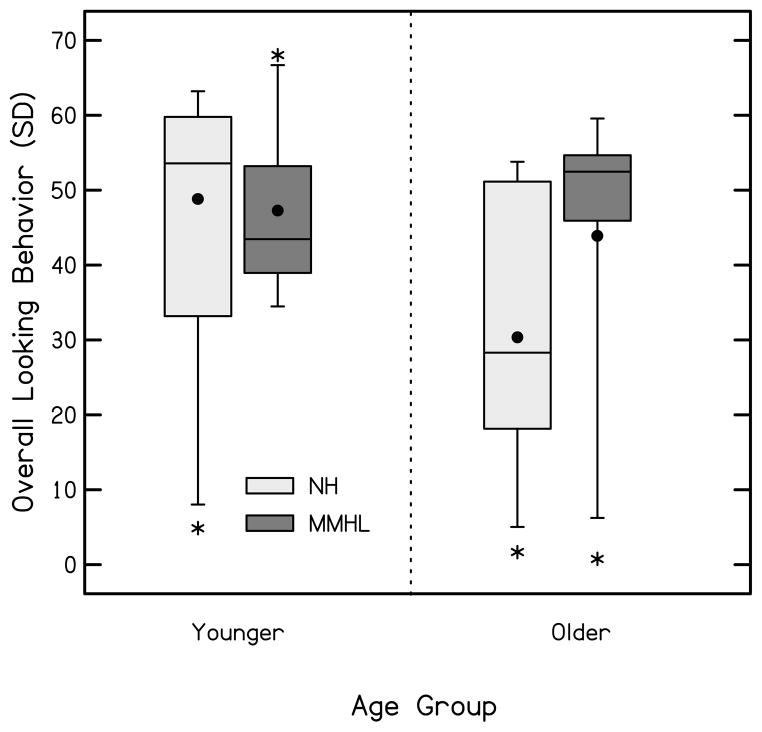

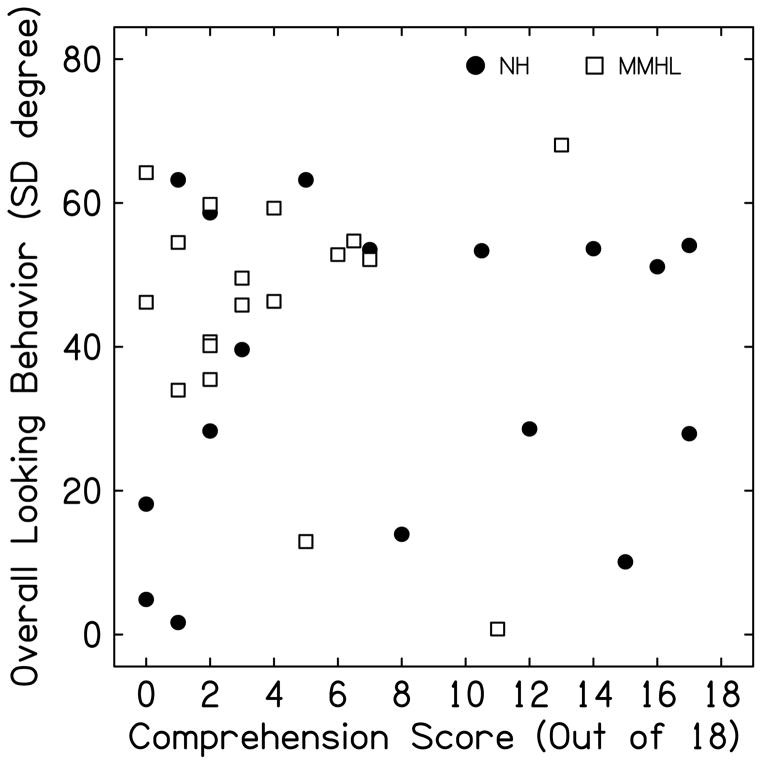

Overall looking behavior as a function of age and group is shown in Figure 5. For the younger children, the mean SD for those with NH was 43.8 (sd = 22.6) and for those with MMHL was 47.3 (sd = 12.7). For the older children, the mean SD for those with NH was 30.4 (sd = 19.1) and for those with MMHL was 43.9 (sd = 20.3). Statistical analysis revealed no significant differences across hearing loss [F(1,31) = 1.713, p = .200, ηp2 = .052] or age [F(1,31) = 1.680, p = .204, ηp2 = .051] and no age x hearing loss interaction [F(1,31) = 0.603, p = .443, ηp2 = .019]. The relationship between overall looking behavior and comprehension also was examined (Figure 6). The pattern of responses revealed no linear relationship (r = −0.024, p = .446).

Figure 5.

Overall looking behavior (SD) during the comprehension task for the children with NH (light gray) and children with MMHL (dark gray). Boxes represent the interquartile range and whiskers represent the 5th and 95th percentiles. For each box, lines represent the median and filled circles represent the mean scores. Asterisks represent values that fell outside the 5th or 95th percentiles.

Figure 6.

Comprehension scores (total correct/18) as a function of looking behavior (SD, in degrees) for children with NH (filled circles) and children with MMHL (open squares).

Although there were no statistically significant differences in overall looking behavior, the range of individual values was quite large. Examination of individual data revealed differing patterns across hearing loss. In general, the individual children with MMHL demonstrated SDs that were more similar to the majority of younger than to older children with NH. Such patterns suggest the possibility that older children with MMHL may continue to exhibit higher looking behaviors than older children with NH in the type of listening task used in the current study. Additional studies with larger groups of children with MMHL and NH across a variety of ages will be needed to further examine this possibility.

Recall that speech understanding in adverse listening environments may be affected by linguistic ability and children with MMHL may demonstrate poorer linguistic skills than peers with NH. To examine potential effects of MMHL on linguistic abilities, scores for the four norm-referenced language measures were compared between the children with MMHL and those with NH. A mixed-model ANOVA was conducted with group (NH, MMHL) as a between-subjects factor and language (PPVT, Grammaticality Judgment, Inferences, Non-Literal Language) as a within-subjects factor. Results revealed no significant effects of group [F(1,33) = 2.88, p = .099, ηp2 = .080] or language [F(2.34,77.35) = 1.407, p = .251, ηp2 = .041], and no group x language interaction [F(2.34,77.35) = 1.375, p = .259, ηp2 = .040].

Despite the absence of differences across groups, varying linguistic abilities could play a role in performance on the comprehension task for children in general. To examine this potential relationship, a multiple regression was conducted with performance on the comprehension task as the criterion variable and age and the four language measures entered together as predictor variables (Table 1). The overall model fit was significant [F(5,33) = 7.234, p < .0001]. This model accounted for 56.4% of the variance (R2). In this model, only Non-Literal Language (NLL) was a significant contributor to the variance when accounting for age, PPVT, Grammaticality Judgment (GJ), and Inferences (Inf).

Table 1.

Multiple regression examining the relationship between performance on the comprehension task as the criterion variable and age and performance on the language tests as the predictor variables.

| Predictor | Pearson’s r* | Beta | p | R2 |
|---|---|---|---|---|
| Overall model | | | | .564 |
| Age | .359 (.032) | .269 | .066 | |
| Grammaticality Judgment | .453 (.006) | .092 | .586 | |
| Inferences | .604 (<.001) | .286 | .129 | |
| Non-Literal Language | .587 (<.001) | .372 | .032 | |
| PPVT | .404 (.016) | .046 | .791 | |

*Bivariate correlation with comprehension score (p value)

DISCUSSION

The purpose of the current study was to examine the effect of MMHL on children’s performance on both a speech-recognition and a comprehension task in a simulated classroom environment. Results revealed that children with NH and those with MMHL performed at or near ceiling on the sentence recognition task in the acoustic conditions used in this study. These findings are in agreement with previous laboratory studies which have found minimal differences between children with MMHL and their peers with normal hearing under similar acoustic conditions of noise or noise plus reverberation (e.g., Bovo et al. 1988; Crandell 1993; Valente et al. 2012). Although such tasks are commonly used to assess children’s speech understanding in noise and reverberation, the high scores obtained in the current study suggest that simple speech-recognition tests may be limited in their ability to address potential difficulties experienced by children with MMHL for more cognitively demanding tasks in the same acoustic environments.

For the more challenging comprehension task, children with MMHL performed more poorly than their peers with NH. When the current results are examined with those of Valente et al. (2012), they indicate that children with NH performed more poorly than adults with NH on the comprehension task and children with MMHL performed more poorly than children with NH, despite near-ceiling performance for all groups on sentence repetition. In realistic acoustic environments, noise and reverberation can negatively impact speech understanding. As more cognitive resources are required for identification of the speech signal, fewer resources may be available for understanding. Research with children and adults with normal hearing has suggested that memory for speech may be impaired in noise and reverberation, even when the speech is intelligible (Ljung et al. 2009; McCreery & Stelmachowicz 2013). As a listening task becomes longer, greater demands are placed on both short- and long-term memory. In the comprehension task for the current study, children were required to actively process new information as it was presented and to incorporate that information with previous information that was stored in memory over an extended (10 min) period of time. During this task, misunderstanding or completely missing parts of the story could have a significant effect on an individual’s overall understanding. Kjellberg (2004) has proposed that attempts to listen to speech in reverberation for an extended period of time may result in fatigue and a reduction in processing resources. While children with MMHL may be able to perform comparably to their peers with NH on tasks with less cognitive load, they may not be able to maintain the same level of performance as the cognitive load increases. In the latter types of tasks, the children with MMHL may need to exert greater listening effort than their peers, leaving them with fewer resources to understand and remember content. 
Over time, the effects of attempting to listen and understand in adverse environments may result in the deficits in academic performance across a variety of areas that have been shown in numerous studies (Bess et al. 1986; Bess et al. 1998; Bovo et al. 1988; Lieu et al. 2012).

The results of looking behavior during the classroom task revealed that, on average, children with NH and those with MMHL looked at talkers as they were speaking less than 50% of the time (POEV). This finding is likely related, at least in part, to the nature of the task, which involved rapid changes across multiple talkers. During this task, even children who attempted to look at all talkers may not have been able to visualize them as they were speaking. Overall looking behavior provided an indication of children’s attempts to look at talkers during the task, with considerable variability across children and no statistically significant differences across age or hearing loss. However, mean overall looking behavior for all children in the current study was higher than that of adults from Valente et al. (2012). Results of that study indicated that children were more likely to choose to look than adults under similar conditions. In addition, the pattern of behaviors in the current study suggested that the majority of children with MMHL demonstrated looking behaviors similar to those of younger children with NH.

In the current study, looking behavior did not predict comprehension. It is possible that this finding resulted, at least partially, from some children not choosing an appropriate looking strategy for the task. Recall that children were instructed to look as much or as little as they wanted during the comprehension task. If it is assumed that listeners choose looking strategies with the intent of optimizing their understanding, then for some children comprehension scores may have represented their most advantageous combination of looking and listening. For other children, the chosen strategies for looking and listening may have negatively impacted performance. Further research is needed to explore these issues in greater depth.

Potential Limitations

The current study shows differences in speech understanding of children with NH and with MMHL in a complex multimodal listening task. However, only one speech-recognition measure and one comprehension measure were used in the study. Recall that the sentence repetition task was chosen to allow comparison with previous studies using similar sentence-length materials. Results for the current study showed good agreement with previous studies using similar materials and acoustic environments. The comprehension task was new and, as such, comparison with other studies is limited. Because there was a single audiovisual recording, the design limited our ability to examine within-subject effects, to compare auditory-only and audiovisual perception, or to vary the classroom task within this group of subjects. Additional studies are in development that will allow us to examine these effects as well as those of the number of talkers, their location relative to the listener, and the attentional demands of the task.

While looking behaviors provide information regarding how often children attempted to look at talkers during the task, they do not address why children did or did not attempt to look or whether different looking strategies might have affected performance for individual children. The benefit of audiovisual input for speech perception has been well-documented (e.g., Massaro & Cohen 1995; Ross et al. 2007; Sumby & Pollack 1954). However, in many real-world environments not all talkers are easily visualized. In such instances, attempts to visualize talkers may distract the listener from the message they are attempting to comprehend. Thus, the potential benefits of attempts to use audiovisual input may vary depending on the task. In an environment where talkers are easily visualized, listeners may benefit from looking at talkers. However, when talkers are not easily visualized and change rapidly, looking attempts may not be beneficial and could negatively impact comprehension. In listening activities similar to the one used in the current study, some children may look more often in an attempt to gain additional information from visual cues. However, when talkers change rapidly, that strategy may not result in additional visual input, and attempts to locate talkers may use cognitive resources that are needed for comprehension. The potential effects of looking-strategy choices could not be evaluated in the current study. Additional studies to examine these effects will be needed.

Although children with MMHL performed more poorly on the comprehension task than those with NH, the two groups did not differ on the four standardized language measures. Results of past studies examining speech/language skills in children with MMHL have been mixed, with some showing that these children perform more poorly than their peers with NH and others indicating similar performance (Kiese-Himmel 2002; Kiese-Himmel & Ohlwein 2003; Klee & Davis-Dansky 1986; Lieu et al. 2010; Lieu et al. 2012; Wake et al. 2006). Differences across studies may be related, at least in part, to the standardized measures used to assess language competency and how well those measures relate to performance in other areas (Klee & Davis-Dansky 1986). Further examination of language as well as memory and executive function in children with MMHL is needed to address this issue.

There were no differences between children with UHL and children with BHL on the measures assessed in the current study. This was not unexpected for the sentence recognition task, given the high levels of performance for all participants. For the comprehension task, the children with UHL could be expected to hear some of the talkers (those toward their NH ear) better than the children with BHL. Despite this potential acoustic benefit, results suggest that the high cognitive load of the task impacted both groups equally. Even if individual children missed different parts of the story, the final outcome was the same: poor comprehension of the story. It also is possible that differences between the two categories of children with MMHL were obscured by the small number of participants in each group. Given the effect sizes, this might be more of a possibility for the sentence repetition task (ηp² = .156) than for the comprehension task (ηp² = .001). Additional studies with greater numbers of subjects in both categories will be needed to address these issues.
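
For reference, the effect sizes reported above are partial eta squared values. Assuming the conventional ANOVA definition (the sum-of-squares symbols below are standard notation and are not taken from the analyses in this paper), the statistic can be written as:

```latex
\eta_p^2 = \frac{SS_{\text{effect}}}{SS_{\text{effect}} + SS_{\text{error}}}
```

Under this definition, a value of .156 would correspond to the UHL/BHL grouping accounting for roughly 16% of the combined effect and error variance on the sentence repetition task, compared with roughly 0.1% (.001) on the comprehension task. This is why limited statistical power is a more plausible explanation for the absence of a group difference on the former than on the latter.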

Conclusions

The findings in the current study have implications for children with MMHL in regular classrooms. While children with BHL and UHL may perform differently on some tasks, they may be similarly impacted during many classroom learning tasks. Results of the current study suggest that simple speech-recognition tasks may not be sufficient to assess how well children with MMHL will perform in classrooms, even when presented under typical acoustic conditions. Tasks that represent the types of listening and learning activities experienced in classrooms under plausible acoustic conditions may be better indicators of real-world speech understanding. In addition, behaviors that would be expected to improve speech understanding in poor acoustics (looking at talkers as they speak) may not be practical or possible under some conditions, and attempts to look may not be beneficial. In such instances, modifying the listening environment (e.g., moving all talkers to the front, facing their classmates) may be a simple and viable alternative to improve communication access.

In conclusion, both children with NH and children with MMHL performed at or near ceiling on the sentence repetition task presented under typical classroom acoustic conditions. In that same acoustic environment, older children performed better than younger children, and children with NH performed better than children with MMHL on the comprehension task. The current findings suggest that tasks representing the types of listening and learning activities experienced in classrooms under plausible acoustic conditions may be better indicators of real-world speech understanding than simple speech-recognition tasks. Further examination is needed to better understand how looking and listening behaviors interact for real-world speech understanding.

Acknowledgments

The authors thank Roger Harpster at Boys Town National Research Hospital for assistance with laboratory setup and video recording. This work was supported by NIH grants R03 DC009675, T32 DC000013, and P30 DC004662.

Footnotes

CASL: Test-retest reliability (M = .85 across the 3 subtests); criterion-related validity (correlations range from .72–.80 when compared to four standardized language measures). PPVT-IV: Test-retest reliability (M = .93); criterion-related validity (correlations range from .63–.82 when compared to three standardized language measures). Additional measures are available in the test manuals.

Data for one child with NH were excluded from this analysis because that child scored 4 SD above the mean on the PPVT. Two additional children with NH who scored just >2 SD above the mean on the PPVT were not excluded. No other scores for either group were >2 SD above the mean for any language measure.

The content of this paper is the responsibility of the authors and reflects their opinions; it does not represent the views of NIH or NIDCD.

Financial Disclosures/Conflicts of Interest:

This research was funded by the NIH/NIDCD.

References

- American Speech-Language-Hearing Association. Guidelines for addressing acoustics in educational settings. 2005 [Guidelines]. Available from www.asha.org/policy.

- Anderson K, Goldstein H. Speech perception benefits of FM and infrared devices to children with hearing aids in a typical classroom. Lang Speech Hear Serv Sch. 2004;35:169–184. doi: 10.1044/0161-1461(2004/017).

- Anderson K, Goldstein H, Colodzin L, Iglehart F. Benefits of S/N enhancing devices to speech perception of children listening in a typical classroom with hearing aids or a cochlear implant. J Educ Audiol. 2005;12:14–28.

- ANSI. 12.60–2010/Part 1, Acoustical Performance Criteria, Design Requirements and Guidelines for Schools, Part 1: Permanent Schools. Acoustical Society of America; New York: 2010.

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. Br J Audiol. 1979;13:108–112. doi: 10.3109/03005367909078884.

- Bess FH, Klee T, Culbertson JL. Identification, assessment, and management of children with unilateral sensorineural hearing loss. Ear Hear. 1986;7:43–51. doi: 10.1097/00003446-198602000-00008.

- Bess F, Dodd-Murphy J, Parker R. Children with minimal sensorineural hearing loss: Prevalence, educational performance, and functional status. Ear Hear. 1998;19:339–354. doi: 10.1097/00003446-199810000-00001.

- Bess F, Tharpe AM. Case history data on unilaterally hearing-impaired children. Ear Hear. 1986;7:14–19. doi: 10.1097/00003446-198602000-00004.

- Blair J, Peterson M, Viehwig S. The effects of mild hearing loss on academic performance of young school-age children. Volta Rev. 1985;87:87–93.

- Bovo R, Martini A, Agnoletto M, et al. Auditory and academic performance of children with unilateral hearing loss. Scand Audiol Suppl. 1988;30:71–74.

- Braasch J, Peters N, Valente DL. A loudspeaker-based projection technique for spatial music applications using virtual microphone control. Comput Music J. 2008;32:55–71.

- Bradley J, Sato H. The intelligibility of speech in elementary school classrooms. J Acoust Soc Am. 2008;123:2078–2086. doi: 10.1121/1.2839285.

- Carrow-Woolfolk E. Comprehensive Assessment of Spoken Language. San Antonio, TX: Pearson Education, Inc; 2012.

- Crandell C. Speech recognition in noise by children with minimal degrees of sensorineural hearing loss. Ear Hear. 1993;14:210–216. doi: 10.1097/00003446-199306000-00008.

- Crandell C, Smaldino J. An update of classroom acoustics for children with hearing impairment. Volta Rev. 1994;96:291–306.

- Dockrell J, Shield B. Acoustical barriers in classrooms: the impact of noise on performance in the classroom. Br Educ Res J. 2006;32:509–525.

- Dunn LM, Dunn DM. The Peabody Picture Vocabulary Test. 4th ed. Bloomington, MN: NCS Pearson, Inc; 2007.

- Elliott LL. Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. J Acoust Soc Am. 1979;66:651–653. doi: 10.1121/1.383691.

- English K, Church G. Unilateral hearing loss in children: An update for the 1990s. Lang Speech Hear Serv Sch. 1999;30:26–31. doi: 10.1044/0161-1461.3001.26.

- Fallon M, Trehub SE, Schneider BA. Children’s perception of speech in multitalker babble. J Acoust Soc Am. 2000;108:3023–3029. doi: 10.1121/1.1323233.

- Fallon M, Trehub SE, Schneider BA. Children’s use of semantic cues in degraded listening environments. J Acoust Soc Am. 2002;111:2242–2249. doi: 10.1121/1.1466873.

- Finitzo-Hieber T, Tillman T. Room acoustics effects on monosyllabic word discrimination ability for normal and hearing-impaired children. J Speech Hear Res. 1978;21:440–458. doi: 10.1044/jshr.2103.440.

- Hicks C, Tharpe AM. Listening effort and fatigue in school-age children with and without hearing loss. J Speech Lang Hear Res. 2002;45:573–584. doi: 10.1044/1092-4388(2002/046).

- Jamieson D, Kranjc G, Yu KW, et al. Speech intelligibility of young school-aged children in the presence of real-life classroom noise. J Am Acad Audiol. 2004;15:508–517. doi: 10.3766/jaaa.15.7.5.

- Johnson CE. Children’s phoneme identification in reverberation and noise. J Speech Lang Hear Res. 2000;43:144–157. doi: 10.1044/jslhr.4301.144.

- Johnson C, Stein R, Broadway A, et al. “Minimal” high-frequency hearing loss and school-age children: Speech recognition in a classroom. Lang Speech Hear Serv Sch. 1997;28:77–85.

- Keller W, Bundy R. Effects of unilateral hearing loss upon educational achievement. Child: Care Health Dev. 1980;6:93–100. doi: 10.1111/j.1365-2214.1980.tb00801.x.

- Kenworthy O, Klee T, Tharpe AM. Speech recognition ability of children with unilateral sensorineural hearing loss as a function of amplification, speech stimuli, and listening condition. Ear Hear. 1990;11:264–270. doi: 10.1097/00003446-199008000-00003.

- Kiese-Himmel C. Unilateral sensorineural hearing impairment in childhood: analysis of 31 consecutive cases. Int J Audiol. 2002;41:57–63. doi: 10.3109/14992020209101313.

- Kiese-Himmel C, Ohlwein S. Characteristics of children with mild hearing impairment. Folia Phoniatr Logop. 2003;55:70–79. doi: 10.1159/000070089.

- Kjellberg A. Effects of reverberation time on the cognitive load in speech communication: theoretical considerations. Noise Health. 2004;7:11–21.

- Klatte M, Hellbrück J, Seidel J, et al. Effects of classroom acoustics on performance and well-being in elementary school children: a field study. Environ Behav. 2010;42:659–692.

- Klatte M, Lachmann T, Meis M. Effects of noise and reverberation on speech perception and listening comprehension of children and adults in a classroom-like setting. Noise Health: Special Issue on Noise, Memory and Learning. 2010;12:270–282. doi: 10.4103/1463-1741.70506.

- Klatte M, Meis M, Sukowski H, et al. Effects of irrelevant speech and traffic noise on speech perception and cognitive performance in elementary school children. Noise Health. 2007;9:64–74. doi: 10.4103/1463-1741.36982.

- Klee T, Davis-Dansky E. A comparison of unilaterally hearing-impaired children and normal-hearing children on a battery of standardized language tests. Ear Hear. 1986;7:27–37. doi: 10.1097/00003446-198602000-00006.

- Knecht H, Nelson P, Whitelaw G, et al. Background noise levels and reverberation times in unoccupied classrooms: Predictions and measurements. Am J Audiol. 2002;11:65–71. doi: 10.1044/1059-0889(2002/009).

- Lieu J, Tye-Murray N, Karzon R, et al. Unilateral hearing loss is associated with worse speech-language scores in children. Pediatrics. 2010;125:e1348–e1355. doi: 10.1542/peds.2009-2448.

- Lieu J, Tye-Murray N, Fu Q. Longitudinal study of children with unilateral hearing loss. Laryngoscope. 2012;122:2088–2095. doi: 10.1002/lary.23454.

- Ljung R, Sorqvist P, Kjellberg A, et al. Poor listening conditions impair memory for intelligible lectures: Implications for acoustic classroom standards. Build Acoust. 2009;16:257–265.

- Massaro D, Cohen M. Perceiving talking faces. Curr Dir Psychol Sci. 1995;4:104–109.

- McCreery R, Stelmachowicz P. The effects of limited bandwidth and noise on verbal processing time and word recall in normal-hearing children. Ear Hear. 2013;34:585–591. doi: 10.1097/AUD.0b013e31828576e2.

- McFadden B, Pittman A. Effect of minimal hearing loss on children’s ability to multitask in quiet and in noise. Lang Speech Hear Serv Sch. 2008;39:342–351. doi: 10.1044/0161-1461(2008/032).

- McKellin W, Shahin K, Hodgson M, Jamieson J, Pichora-Fuller MK. Pragmatics of conversation and communication in noisy settings. J Pragmatics. 2007;39:2159–2184.

- McKellin W, Shahin K, Hodgson M, Jamieson J, Pichora-Fuller MK. Noisy zones of proximal development: Conversations in noisy classrooms. J Socioling. 2011;15:65–93.

- Neuman A, Hochberg I. Children’s perception of speech in reverberation. J Acoust Soc Am. 1983;73:2145–2149. doi: 10.1121/1.389538.

- Neuman A, Wroblewski M, Hajicek J, et al. Combined effects of noise and reverberation on speech recognition performance of normal-hearing children and adults. Ear Hear. 2010;31:336–344. doi: 10.1097/AUD.0b013e3181d3d514.

- Niskar A, Kieszak S, Holmes A, et al. Prevalence of hearing loss among children 6 to 19 years of age. J Am Med Assoc. 1998;279:1071–1075. doi: 10.1001/jama.279.14.1071.

- Oyler R, Oyler A, Matkin N. Unilateral hearing loss: Demographics and educational impact. Lang Speech Hear Serv Sch. 1988;19:201–210.

- Picard M, Bradley J. Revisiting speech interference in classrooms. Audiology. 2001;40:221–244.

- Prodi N, Visentin C, Farnetani A. Intelligibility, listening difficulty and listening efficiency in auralized classrooms. J Acoust Soc Am. 2010;128:172–181. doi: 10.1121/1.3436563.

- Prodi N, Visentin C, Feletti A. On the perception of speech in primary school classrooms: Ranking of noise interference and age of influence. J Acoust Soc Am. 2013;133:255–268. doi: 10.1121/1.4770259.

- Ross L, Saint-Amour D, Leavitt V, et al. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cerebral Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Ruscetta MN, Arjmand EM, Pratt SR. Speech recognition abilities in noise for children with severe-to-profound unilateral hearing impairment. Int J Ped Otorhinolaryngol. 2005;69:771–779. doi: 10.1016/j.ijporl.2005.01.010.

- Sato H, Bradley J. Evaluation of acoustical conditions for speech communication in working elementary schools. J Acoust Soc Am. 2008;123:2064–2077. doi: 10.1121/1.2839283.

- Shepard A. Aaron Shepard’s RT Page: Scripts and tips for reader’s theater. 2010 http://www.aaronshep.com/rt/indexhtml#RTE. Last viewed October 2, 2013.

- Shield B, Dockrell J. The effects of environmental and classroom noise on the academic attainments of primary school children. J Acoust Soc Am. 2008;123:133–144. doi: 10.1121/1.2812596.

- Stansfield S, Berglund B, Clark C, et al. Aircraft and road noise and children’s cognition and health: a cross-national study. Lancet. 2005;365:1942–1949. doi: 10.1016/S0140-6736(05)66660-3.

- Stein D. Psychosocial characteristics of school-age children with unilateral hearing loss. J Acad Rehabil Audiol. 1983;16:12–22.

- Sumby W, Pollack I. Visual contribution to speech intelligibility in noise. J Acoust Soc Am. 1954;26:212–215.

- Valente DL, Plevinsky HM, Franco JM, et al. Experimental investigation of the effects of the acoustical conditions in a simulated classroom on speech recognition and learning in children. J Acoust Soc Am. 2012;131:232–246. doi: 10.1121/1.3662059.

- Wake M, Tobin S, Cone-Wesson B, et al. Slight/mild sensorineural hearing loss in children. Pediatrics. 2006;118:1842–1851. doi: 10.1542/peds.2005-3168.

- Wechsler Abbreviated Scale of Intelligence. San Antonio, TX: NCS Pearson, Inc; 1999.

- Wroblewski M, Lewis D, Valente D, Stelmachowicz P. Effects of reverberation on speech recognition in stationary and modulated noise by school-aged children and young adults. Ear Hear. 2012;33:731–744. doi: 10.1097/AUD.0b013e31825aecad.

- Yacullo W, Hawkins D. Speech recognition in noise and reverberation by school-age children. Audiology. 1987;26:235–246. doi: 10.3109/00206098709081552.

- Yang W, Bradley J. Effects of room acoustics on the intelligibility of speech in classrooms for young children. J Acoust Soc Am. 2009;125:922–933. doi: 10.1121/1.3058900.

- Yoshinaga-Itano C, DeConde Johnson C, Carpenter K, et al. Outcomes of children with mild bilateral hearing loss and unilateral hearing loss. Sem Hear. 2008;29:196–211.