Abstract

Background

This study examines sustainment of an EBI implemented in 11 United States service systems across two states, and delivered in 87 counties. The aims are to 1) determine the impact of state and county policies and contracting on EBI provision and sustainment; 2) investigate the role of public, private, and academic relationships and collaboration in long-term EBI sustainment; 3) assess organizational and provider factors that affect EBI reach/penetration, fidelity, and organizational sustainment climate; and 4) integrate findings through a collaborative process involving the investigative team, consultants, and system and community-based organization (CBO) stakeholders in order to further develop and refine a conceptual model of sustainment to guide future research and provide a resource for service systems to prepare for sustainment as the ultimate goal of the implementation process.

Methods

A mixed-method prospective and retrospective design will be used. Semi-structured individual and group interviews will be used to collect information regarding influences on EBI sustainment including policies, attitudes, and practices; organizational factors and external policies affecting model implementation; involvement of or collaboration with other stakeholders; and outer- and inner-contextual supports that facilitate ongoing EBI sustainment. Document review (e.g., legislation, executive orders, regulations, monitoring data, annual reports, agendas and meeting minutes) will be used to examine the roles of state, county, and local policies in EBI sustainment. Quantitative measures will be collected via administrative data and web surveys to assess EBI reach/penetration, staff turnover, EBI model fidelity, organizational culture and climate, work attitudes, implementation leadership, sustainment climate, attitudes toward EBIs, program sustainment, and level of institutionalization. Hierarchical linear modeling will be used for quantitative analyses. Qualitative analyses will be tailored to each of the qualitative methods (e.g., document review, interviews). Qualitative and quantitative approaches will be integrated through an inclusive process that values stakeholder perspectives.

Discussion

The study of sustainment is critical to capitalizing on and benefiting from the time and fiscal investments in EBI implementation. Sustainment is also critical to realizing broad public health impact of EBI implementation. The present study takes a comprehensive mixed-method approach to understanding sustainment and refining a conceptual model of sustainment.

Electronic supplementary material

The online version of this article (doi:10.1186/s13012-014-0183-z) contains supplementary material, which is available to authorized users.

Keywords: Sustainment, Evidence-based intervention, Implementation, Leadership, Organizational culture, Organizational climate, Policy, Public sector

Background

Evidence-based interventions (EBIs) are increasingly being implemented in public-sector health and allied health service settings with little systematic knowledge about what factors facilitate or limit their sustainment. Without effective sustainment, investments in implementation are wasted and public health impact is limited. In the United States, the National Institutes of Health, Agency for Healthcare Research and Quality, Centers for Disease Control and Prevention (CDC), and other federal and state agencies and foundations are funding studies to facilitate more effective implementation of EBIs, but there are few systematic studies of sustainment. Adding to the need for research on sustainment is the fact that many EBIs are not sustained after initial implementation [1]-[4]. Leadership, policies, resource availability, collaborations, and organizational infrastructure are proposed as key determinants of long-term sustainment, yet these elements have not been widely examined [5],[6]. The current study is consistent with conceptual models that propose multiple phases or stages in the implementation process [5],[7]-[9], and focuses explicitly on the period of sustainment. Prospective and retrospective mixed methods are combined to examine three broad issues believed to be critical to EBI sustainment: 1) policies at the legislative, service system, and agency levels; 2) collaborations and partnerships; and 3) long-term bi-directional organizational and individual provider predictors of sustainment outcomes of reach/penetration, fidelity, and sustainment climate [5],[10].

Although some models of implementation invoke sustainment as a key component [1], little empirical work has systematically examined factors that either facilitate or hinder EBI sustainment in public-sector services [11]. In a comprehensive review of the sustainment literature, both outer (system) and inner (organizational) contexts emerged as dominant features across conceptual models [12]. In particular, Klein and colleagues [13] identified management support, organizational supports, and fiscal resources as key elements for implementation effectiveness and sustainment. Additionally, Edmondson [2] found leadership and positive team climate to be key factors in sustainment. Pertinent to the outer context, Pluye and colleagues [14] emphasized policies and processes as precursors to sustainment. Finally, Chambers and colleagues [10] proposed a model of sustainment that spans the ecological system (e.g., systems and policies) and practice setting (e.g., organizational context, service providers).

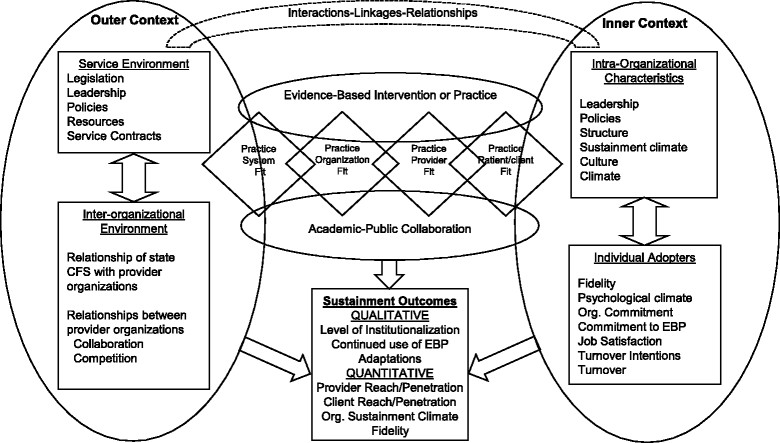

For this study, we build on the Exploration, Preparation, Implementation, Sustainment (EPIS) implementation framework [5] to guide our conceptualization of the outer (i.e., system) and inner (i.e., organizational) context factors that affect sustainment. As shown in Figure 1, our proposed conceptual model of sustainment illustrates how factors in the outer system and inner organizational contexts interact and involve relationships among multiple stakeholders. These stakeholders include federal and state governments and community-based organizations (CBOs, also known as non-governmental organizations or NGOs), as well as other community stakeholders, academic researchers (including EBI developers and purveyors), and funding agencies. Together, these collaborations support the reach of the EBI to providers and client populations and help achieve EBI model instantiation in service systems and, ultimately, EBI model fidelity [5].

Figure 1.

Sustainment conceptual model based on the Exploration, Preparation, Implementation, Sustainment (EPIS) multilevel conceptual framework (Aarons, Hurlburt, & Horwitz, [11]). Note: some constructs may be considered predictors or outcomes depending on particular hypotheses or research questions.

Outer-context issues in EBI sustainment

Conceptual models and reviews identify common outer-context (i.e., system) factors that can influence the capacity of systems and organizations to successfully implement and sustain EBIs [5],[11],[15],[16]. Governmental actions are particularly salient for EBI implementation and sustainment [17]. As such, the outer context of our conceptual model focuses on legislation, policies, public-sector fiscal resource availability, bid solicitations, reimbursement schemes, and how these factors are instantiated into service contracts. Legislation affects services by mandating funding streams or types of services to be provided [18]. Policies within state and county divisions allocate funding and set the parameters for service delivery (e.g., mandating EBIs) [19]. Service contracts can be structured to promote or reflect priorities of the state agency and represent an important mechanism for influencing organizational behaviors regarding EBIs [20]. Bid requirements can specify particular EBIs or leave this to CBO discretion. Reimbursement schemes can support key EBI activities such as quality control or fidelity monitoring to various degrees. Contractual arrangements are common in public-sector services because state or county governments rely on CBOs to provide services beyond their scope or expertise [5]. Such a multifaceted approach is likely necessary to sustain EBIs on a large scale [19].

Inner-context issues in EBI sustainment

Organizational and individual (i.e., provider) factors are important when implementing EBIs in real-world service contexts, and it is likely that these are at play in sustainment as well [21],[22]. For example, the social influence of others in an organization or work team can impact worker attitudes toward practice models [23],[24], and attitudes can affect implementation outcomes such as adoption and use of EBIs [25],[26]. The level of tangible organizational support for a practice is likely to affect adoption, implementation, and sustainment of EBIs [13],[22]. The culture and climate of an organization or work team are associated with service provider engagement in work and willingness to utilize EBIs [27],[28], as are individual provider characteristics, including job tenure and level of professional development [29] and attitudes [25]. Strategic organizational climates can support specific employee behaviors [30]-[32], such as EBI use and fidelity. Leadership that motivates providers and promotes effective interaction is linked to service provider attitudes toward adopting EBIs [33]. Professional and social networks within service systems spread knowledge about the perceived utility of an EBI and are likely to impact the quality of EBI uptake [34]. A service provider’s own ability to adapt and change can shape attitudes toward adopting new ways of working among his/her colleagues [29]. Finally, it is important to examine measures of strategic climates focused on implementation and sustainment. Sustainment climate is characterized by strong organizational practices, processes, and leader support for EBIs that demonstrate what is expected, supported, and rewarded in an organization [32],[35].

Collaboration/partnerships

Linkages between systems, organizations, their environments, and resources (e.g., financial, workforce, and knowledge) are needed for EBI implementation and sustainment [11]. Partnerships involving service system stakeholders can facilitate linkages during the implementation process and promote both positive EBI outcomes [16] and subsequent intervention sustainment [36]. In the context of implementing EBIs through multiple public-private service contracts with CBOs, the involvement of academic partners with a history of community and public-sector engagement can foster strong and supportive relationships among institutions and individual professionals. Although resulting partnerships are not always the product of a planned community-based participatory research [37],[38] or community-partnered participatory research initiative [39], they nonetheless appear to help align the interests of the policymakers, upper-level CBO administrators, direct service providers, and academic researchers in order to undertake activities that promote both initial and ongoing fit of the selected EBI within a service system in the face of competing interests and priorities. The World Health Organization recommends such partnerships as a viable means to implement and sustain services [40]. Interest in such partnerships for intervention development and implementation purposes is also blossoming within mental health services research [41]-[43].

The EBI

The current study contributes to implementation science by addressing the sustainment of SafeCare© (SC), a home-based, behavioral and psychosocial EBI developed to prevent child neglect, a pressing public health problem and by far the most common type of child maltreatment [44]. Child neglect results in negative health, emotional, and behavioral effects and a large economic burden on society [45]. Neglected youth have a higher likelihood of mental health problems including social and emotional withdrawal [46],[47], low self-esteem, and less confidence and assertiveness in learning tasks [48]. They also present the least positive and the most negative affect of all maltreated children [48]. Consequences of neglect include poor academic performance, risk for developmental delays and cognitive difficulties [49], delayed language development [50],[51], and delays in receptive and expressive language [52]. Followed into adulthood, neglected children are at high risk for substance abuse and related behaviors, such as violence and criminal behavior [53]. In addition, while physical and sexual abuse rates have declined markedly over the past 18 years, child neglect rates have remained high, with 32.6% of child maltreatment deaths attributable to neglect only [54]. Thus, addressing neglect is a critical public health concern affecting health, mental health, child development, and subsequent adult functioning.

The SC model incorporates principles of applied behavior analysis, is manualized, and uses classic behavioral intervention techniques (e.g., ongoing measurement of observable behaviors, skill modeling, direct skill practice with feedback, training skills to criterion) [55]. Behavioral theory conceptualizes child neglect in terms of caregiver skill deficits, particularly those skills that are most proximal to neglect, such as failing to provide adequate nutrition, healthcare, cleanliness, a safe home environment, parental disengagement, low levels of parental supervision, and inappropriate parenting or child management. SC comprises three modules addressing these issues: infant and child health, home safety and cleanliness, and parent-child (or parent-infant) interactions and skills development in problem solving and communications. When implemented at scale, the EBI has been found to reduce child welfare system recidivism over and above comparable services delivered without the SC curriculum [44], making sustainment of this benefit a significant public health imperative. SC involves provider training and ongoing fidelity coaching from SC model experts. Finally, the United States National SafeCare Training and Research Center (NSTRC) has developed a “train-the-trainer” model, in which selected providers can be trained and certified as SC coaches and trainers to expand or sustain local implementation [56].

The need for studies of sustainment

The study of sustainment is critical. For example, a review of home-based services found a 55% failure rate for implemented programs (i.e., services completely stopped being provided). Of those programs that were still “identifiable”, many of the key treatment elements (i.e., core elements) were no longer part of the services [57]. This highlights the need to increase our knowledge of how to effectively sustain EBIs with fidelity. Sustainment of EBIs that prevent neglect is critical for decreasing a child’s risk for neglect and its consequences, including mental health problems, substance abuse, mortality, and decrements in health functioning.

The study of sustainment is not a well-developed science; however, there is a small existing literature on sustainment of prevention and intervention programs in a variety of settings that is informative. For example, studies of sustainment of school-based programs have found that variability in implementation success was a function of the quality of the relationships of educators with program recipients [58], that academic-community partnerships to support implementation can be maintained over time [59], and that action-research approaches emphasizing stakeholder involvement in the research process can aid sustainment [60]. Research in community-based settings has found that sustainment is enhanced when costs are shifted from services as usual to the new service model without an overall increase in costs [61]. In addition, contextual factors at both the system and organizational levels can be important through diffusion stages [16], and system issues can exert a greater effect relative to individual provider factors [62]. Viewing implementation as a developmental process across EPIS stages, it is likely that different factors exert critical influences at each stage. There is a need to identify unique sustainment factors across system, organization, and individual levels that can lead to improvements in processes and efficiencies not considered during initial implementation [63]. Such factors should span outer and inner contexts and might include engaging strong leadership across system and organizational levels, use of specific management strategies, attending to both organizational and individual factors, and anchoring new programs across system levels [64]. It is also imperative to look across levels, because system-level instability negatively impacts sustainment [65]. For example, workforce policies may need to be tailored differently for urban and rural settings [66], and alternative funding sources may be necessary in some systems for particular types of interventions [67].

The present study

This study of EBI sustainment is funded by the U.S. National Institute of Mental Health (NIMH) and received institutional review board approval from the University of California, San Diego. The study builds on previous studies of implementation of SC [44],[68]-[70] in two U.S. states. Implementations began between 1 and 10 years prior to the beginning of the current study, providing variability in sustainment duration. Additionally, implementations spanned multiple types of service systems, including state/county departments of mental health, public health, and social services. This study examines sustainment from three complementary perspectives: policy, collaboration, and organizational and provider functioning. Further, this study utilizes mixed methods, involving stakeholders from the outer (i.e., service system) and inner (i.e., provider organizations) contexts, along with academic collaborators, to examine factors related to sustainment and refine a conceptual model of EBI sustainment.

Method/design

Study context

In this study, we examine sustainment of SC after implementation in 11 public-sector service systems across two states and 87 counties. One service system is state-operated with all services provided by CBOs contracted by the state government. The other ten have county-operated systems, in which services are guided, contracted, and/or delivered by county governments. The statewide implementation began 10 years prior to the inception of the current study with continuous involvement from university researchers as part of large-scale NIMH-funded effectiveness [44] and implementation [69] studies. The county-wide implementations were funded by the CDC, the NIMH, the Administration for Children and Families, state government, and community charitable foundations. These implementations involved university and community collaborations with specific projects to examine: 1) a cascading model of implementation featuring inter-agency collaborative teams [71],[72] and 2) utilization of the Dynamic Adaptation Process [73] to facilitate implementation. For the first study, EBI implementation began in a single large county 6 years prior to the onset of the current study. The second study involved ten counties that began implementation between 1 and 3 years prior to this study. Next, we present the specific aims and methods for accomplishing each aim.

Specific aims

Aim 1

Examine the impact of state and county policies and contracting on the provision and sustainability of an EBI within the publicly-funded service systems.

Document review

Documents offer a rich source of information on the intended and actual role of state policy in influencing the uptake and sustainment of SC and on the on-the-ground effects of policies within service systems and service organizations. Document collection and analysis procedures will be applied to assess the outer context of EBI sustainment described in Figure 1 [74],[75]. First, EBI-specific documents released by the state and county governments between the start of SC implementation and the present, including legislation, executive orders, governor’s speeches, regulations, monitoring data, and annual reports, will be systematically collected and indexed. Agendas and minutes for all meetings related to SC, in addition to requests for proposals (RFPs) and contracts with CBOs for SC provision, will also be collected. Over the course of this 5-year study, the document collection will be updated quarterly.

Traditional methods in both archival and qualitative research will be used to prepare and analyze the documents. Upon retrieval, each document will be entered into a computer database, assigned a unique identification number, and indexed according to type, purpose (reason for creation/issuance), source, and date. An abstract that provides a brief description or summary of contents will be created for each document. The research team will develop a coding scheme for each type of document (e.g., legislation, legal action) to facilitate further categorization of document content. For each document type or group, two researchers will randomly select a sample of documents and generate a list of codes or content categories relevant to SC sustainment [76]. Document contents that do not appear immediately relevant to the research topic at hand will be placed in a separate category, or “parking lot”, for possible future analysis. This standard set of codes will then be applied to the broader group. For within-group analyses, changes in the contents of specific documents (e.g., modified implementation requirements within CBO contracts and subcontracts) will be identified and analyzed. Analysis of documents will serve several purposes. For example, reviewing and comparing enabling legislation with annual RFPs and contracts will help to trace, retrospectively and prospectively, how SC has matured over time. Analysis of other documents may lend insight into the partnership dynamics explored under Specific Aim 2. For example, iterative review of meeting minutes may shed light on which partners are most active in the SC initiative, their roles and responsibilities, leadership and infrastructure issues, and planning and decision-making processes [36].
Document review will facilitate an in-depth understanding of the relationship between official pronouncements concerning SC and its statewide implementation, as well as factors that might affect reach/penetration and fidelity. Findings derived from the document review will also stimulate “paths of inquiry” to pursue via one-on-one and small group interviews with a broad array of SC stakeholders (see below) [77].
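The indexing and coding steps for Aim 1 can be illustrated with a small data structure. The sketch below is a hypothetical illustration, not the study's database: the class names `DocumentRecord` and `DocumentIndex`, their fields, and the rule that routes proposed codes outside the agreed codebook to a “parking lot” set are assumptions made for this example. It assigns sequential unique identification numbers, stores the index fields named above (type, purpose, source, date, abstract), and returns documents of one type in date order, which is the view needed to trace how RFPs and contracts change over time.

```python
from dataclasses import dataclass, field
from datetime import date
from itertools import count


# Hypothetical sketch of the Aim 1 document index; class names and fields
# are illustrative assumptions, not the study's actual database schema.
@dataclass
class DocumentRecord:
    doc_id: int          # unique identification number
    doc_type: str        # e.g., "legislation", "contract", "meeting minutes"
    purpose: str         # purpose or reason for creation/issuance
    source: str          # issuing body
    issued: date         # date of issuance
    abstract: str        # brief summary of contents
    codes: set[str] = field(default_factory=set)        # codebook codes applied
    parking_lot: set[str] = field(default_factory=set)  # content set aside for later


class DocumentIndex:
    def __init__(self) -> None:
        self._next_id = count(1)  # sequential unique IDs starting at 1
        self.records: dict[int, DocumentRecord] = {}

    def add(self, doc_type: str, purpose: str, source: str,
            issued: date, abstract: str) -> DocumentRecord:
        rec = DocumentRecord(next(self._next_id), doc_type, purpose,
                             source, issued, abstract)
        self.records[rec.doc_id] = rec
        return rec

    def apply_codes(self, doc_id: int, proposed: list[str],
                    codebook: set[str]) -> DocumentRecord:
        # Codes in the agreed codebook are applied; anything else is
        # routed to the "parking lot" for possible future analysis.
        rec = self.records[doc_id]
        for code in proposed:
            if code in codebook:
                rec.codes.add(code)
            else:
                rec.parking_lot.add(code)
        return rec

    def by_type(self, doc_type: str) -> list[DocumentRecord]:
        # Documents of one type in date order, e.g., to trace annual RFPs.
        return sorted((r for r in self.records.values() if r.doc_type == doc_type),
                      key=lambda r: r.issued)
```

Keeping the “parking lot” as a separate set on each record, rather than discarding unmatched codes, mirrors the stated intent of retaining such content for possible later analysis.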

Semi-structured interviews

Open-ended questions will be used to collect descriptive data on the development of policies that have influenced widespread adoption and utilization of SC. The experiences, motivations, and perceptions of state/county administrators, academic investigators, CBO executive directors, and regional directors involved in implementing these policies will also be assessed. Interview questions will elicit information on each participant’s current position and professional background; their respective roles in and shared vision for SC implementation, evaluation, and dissemination; the relative success of SC-related legislation, policies, and service contracts in facilitating these processes; and factors that will “make or break” sustainment of SC in the future. These digitally recorded interviews, lasting approximately 1.5 h, will give participants an opportunity to reflect on local circumstances (e.g., availability of and access to community-based resources) and on non-SC, macro-level contextual factors that might affect sustainment (e.g., yearly fluctuations in federal funding for programs, changes in state economies, and changes in political administration). Handwritten field notes will be organized according to a standard format (“debriefing form”), which includes information on the date, time, and length of the interaction, the physical setting, and the participants involved [78],[79]. Field notes and interview transcripts will be converted into analyzable text and stored electronically in a password-protected computer database.

Aim 2

Investigate the role of public, private, and academic relationships and collaboration in the long-term sustainment of an EBI among multiple public-sector service systems.

Qualitative and quantitative methods will be used to assess the role of public, private, and academic relationships in facilitating sustainment of EBIs. Semi-structured interviews will be used with state/county administrators, academic investigators, CBO executive directors, and CBO directors to examine the nature, quality, and degree of formalization of collaborative relationships that have facilitated large-scale implementation of SC in the public sector, and how these relationships might affect the level of institutionalization and sustainment of this intervention in the service system [80]. Thus, this examination will focus on three levels: 1) infrastructure, functions, and processes of extant (formal and informal) partnerships; 2) accomplishment of SC-related activities undertaken by these partnerships; and 3) impact of these activities on EBI sustainment. As part of these interviews conducted over the course of the study, we will collect information on the history of naturally evolving or devolving collaborations that have affected SC sustainment, as well as factors that have positively or negatively affected these collaborations over time (e.g., trust issues, power differentials, and prior partnerships) [81]. Participants will be asked to reflect upon their own involvement in SC-related collaborations, how these collaborations developed over time, and outcomes or accomplishments made possible by these collaborations. Participants will be prompted to discuss the types of interactions they have had with each set of SC stakeholders and how these interactions have affected (or will affect) SC sustainment. Stakeholder participants will be asked about specific processes that are identified in the literature as pivotal to successful collaborations (e.g., communication, problem solving, decision making, consensus-building processes, and conflict management and resolution) and how these processes play out in SC partnerships [36]. 
In addition to collaboration, participants will comment on other factors likely to influence SC institutionalization and the sustainment climate, including leadership at multiple levels (state/county, academic, CBO, and SC team), evaluation, infrastructure, and funding.

Aim 3

Assess a targeted range of organizational and individual provider factors within direct service agencies that affect reach/penetration, fidelity of SC, and organizational sustainment climate over time.

Semi-structured interviews

SC coaches and CBO supervisors will participate in one-on-one 1-h interviews to document their overall perceptions of and experiences with SC and their views regarding long-term sustainment. First, participants will be asked about their general involvement in SC (including history), with probes for positive and negative experiences and for challenges encountered during the implementation process that affect SC sustainment. Second, participants will be guided to discuss how the SC model is applied within CBO settings, how attitudes regarding the model have changed, and what changes within CBOs have influenced those attitudes. Third, participants will be asked to provide more targeted commentary on organizational factors within CBOs that affect SC model sustainment on a day-to-day basis, including team functioning and leadership, funding, and external policies (e.g., contract requirements, state regulations, legal actions). Fourth, participants will address prior or current involvement with non-CBO SC stakeholders, including participation in formal or informal collaborations. Finally, participants will comment on the type of support and resources needed within CBOs and teams to effectively provide SC on an ongoing basis. These interviews will also identify “lessons learned” during the coaching and supervisory process, which could help guide future implementation to support EBI sustainment.

Group interviews

Group interviews will be conducted with service providers assigned to SC teams [77]. Group interviews allow each participant’s voice to be included and are efficient because trained discussion facilitators will be on-site when teams regularly meet, thus reducing respondent and CBO burden. Each work group will consist of 4–10 providers. Each group interview will last 1–2 h, with the facilitator guiding participants in a process of collective reflection on and evaluation of issues pertinent to the institutionalization of SC within the service system and CBOs. The questions posed to group participants will parallel those asked of the coaches and supervisors and will thus focus on general involvement, changing attitudes and practices regarding SC, organizational factors and external policies affecting model implementation, involvement or collaboration with other SC stakeholders, and outer- and inner-contextual supports needed to facilitate ongoing implementation of SC at the individual and organizational levels.

Qualitative data preparation

Both the individual and group interviews will be digitally recorded, transcribed, and coded according to the procedures described above for Aim 1; debriefing forms will be completed for each interview. Similarly, transcripts will be analyzed according to the previously described qualitative methods. We will cluster for analysis those responses about factors at the policy, partnership, and organizational level that are likely to affect sustainment of SC [77]. The researchers will then prepare a summary of issues, ideas, and concerns about sustainment raised by the providers, which will be addressed during the next phase of the research, the development of a conceptual model.

Qualitative data analysis

To analyze the interviews, a descriptive coding scheme will be developed from transcripts and based on the specific questions and broader domains that make up the interviews. NVivo software will be used to organize and index data and aid in the identification of emergent categories and themes [78],[79]. Two types of coding will be used. First, “open coding” will be used to locate themes followed by “focused coding” to determine which themes repeat often and which represent unusual or particular concerns [82]. Coding will proceed in an iterative fashion; we will code sets of transcripts, create detailed memos linking codes to emergent themes, and review with the project’s lead investigators. Discrepancies in coding and analysis will be identified during this review process and resolved during regular team meetings.
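As a rough illustration of the step from open coding to focused coding, the sketch below tallies how many transcripts each open code appears in, separating codes that recur across many interviews from those raised in only one. The function name `summarize_themes`, the input shape (transcript ID mapped to a set of codes), and the 50% cutoff for “recurring” are hypothetical choices for this example. In the study, coding will be managed in NVivo and themes interpreted by the team, so a count like this would at most be a starting point for discussion, not a substitute for analytic judgment.

```python
from collections import Counter


def summarize_themes(coded_transcripts: dict[str, set[str]],
                     recurring_share: float = 0.5) -> tuple[dict[str, int], dict[str, int]]:
    """Hypothetical helper: split open codes into recurring and unusual ones.

    coded_transcripts maps a transcript ID to the set of open codes applied
    to it. recurring_share (an assumed 50% cutoff) is the minimum share of
    transcripts a code must appear in to count as recurring.
    """
    n = len(coded_transcripts)
    if n == 0:
        return {}, {}
    # Count the number of transcripts containing each code, not raw mentions.
    transcript_counts = Counter(
        code for codes in coded_transcripts.values() for code in set(codes)
    )
    recurring = {c: k for c, k in transcript_counts.items() if k / n >= recurring_share}
    # Codes raised in a single transcript may mark unusual or particular concerns.
    unusual = {c: k for c, k in transcript_counts.items() if k == 1}
    return recurring, unusual
```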

Throughout this project, the consistency of interview data collected at different times (Years 1, 3, and 5) and by different methods will be assessed. More specifically, the procedures will involve a) cross-checking interview data collected by individual staff, b) comparing interview data with findings from the document review, c) checking for consistency in what participants say about the implementation and sustainability of SC over time, and d) comparing perspectives of different stakeholder groups (e.g., state/county, academic, CBO).

Triangulation of qualitative data sources

Triangulation of findings derived from document review with those from individual and group interviews will be enacted to create a complete picture of sustainment issues to date. Interviews will shed light on “behind-the-scenes” events and processes that led to the establishment and dissemination of SC policy and practice and the range of outer- and inner-contextual variables likely to influence further sustainment. The combination of qualitative methods enables us to answer several questions concerning SC sustainment: 1) How does county/state-level legislation impact the service system? 2) How does this system implement policies that result in the issuance of RFPs and service contracts with CBOs? 3) How do CBOs within the service system then collaborate and compete to provide EBIs, such as SC? 4) How do CBOs carry out the terms and conditions of contracts to provide SC? 5) What organizational characteristics within CBOs affect individual providers, team structure and operations, and delivery of SC? 6) What system, organizational, and EBI adaptations support sustainment?

Quantitative methods

Participants and measures

Quantitative data will include administrative and service delivery data provided by the service system representatives, organizational and individual measures collected from direct service providers and supervisors using annual online surveys, and administrator and executive director key informant measures.

Measures from administrative data

Three measures from administrative data will be collected:

Reach/penetration - provider level. We will assess the proportion of providers who have a) received training in SC, b) achieved certification, and c) successfully completed SC services with clients (closed cases only).
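As a minimal sketch of how the three provider-level proportions could be tabulated, the Python below assumes a hypothetical per-provider record with one flag per stage (`ProviderRecord` and its field names are illustrative, not part of the study's data systems) and uses every provider in the records as the denominator; the protocol does not specify either the record layout or the denominator.

```python
from dataclasses import dataclass


@dataclass
class ProviderRecord:
    # Hypothetical fields; the actual administrative data layout is not specified.
    provider_id: str
    trained: bool
    certified: bool
    completed_closed_case: bool  # completed SC services with at least one closed case


def provider_reach(records: list[ProviderRecord]) -> dict[str, float]:
    """Proportion of providers reaching each reach/penetration stage."""
    n = len(records)
    if n == 0:
        raise ValueError("no provider records")
    return {
        "trained": sum(r.trained for r in records) / n,
        "certified": sum(r.certified for r in records) / n,
        "completed": sum(r.completed_closed_case for r in records) / n,
    }


records = [
    ProviderRecord("p1", True, True, True),
    ProviderRecord("p2", True, True, False),
    ProviderRecord("p3", True, False, False),
    ProviderRecord("p4", False, False, False),
]
print(provider_reach(records))  # {'trained': 0.75, 'certified': 0.5, 'completed': 0.25}
```

Because each stage presupposes the previous one, the three proportions should be non-increasing; a violation in real data would flag a recording problem worth checking.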

Reach/penetration - client level. We will use de-identified administrative data to determine the annual number of potential cases meeting criteria for referral to SC and the proportion of those cases actually receiving SC.

Staff turnover. Data will be gathered from administrative data and/or organization and provider reports. Post-turnover follow-up calls with SC providers will be used to gather more detail regarding reasons for turnover (e.g., system changes, voluntary or involuntary turnover, other job opportunities, family issues, concerns with the EBI).

Coach measures

Measures of SC model fidelity will be collected from SC coaches. Provider fidelity is a critical sustainment outcome [15] that affects clinical outcomes [83]. Directly observed fidelity is most closely related to client outcomes because observers are normally fluent with the constructs and behaviors they are rating. SC coaches are model experts trained in effective coaching practice and in SC fidelity rating. Coaches will code each observed session using an SC fidelity measure (one to four sessions per provider monthly, for a minimum of 12 observations per provider per year).

Provider measures

Data will be collected from providers using web-based surveys. The following measures will be included in the provider survey:

Organizational culture, climate, and work attitudes will be assessed with the Organizational Social Context Survey [84].

Leadership will be assessed with the Multifactor Leadership Questionnaire [85] and the Implementation Leadership Scale [86].

The knowledge/perceived value of SC will be assessed within the study workforce with 16 questions adapted from qualitative and quantitative studies examining service system functioning [34],[87],[88].

Sustainment climate is a strategic climate that captures providers’ perceptions of the extent to which the organization’s policies, practices, and procedures support sustainment. It will be assessed using an adaptation of the Implementation Climate Scale [32], which comprises 18 items in six subscales and has excellent psychometric characteristics (subscale α range = .81–.91).

Attitudes toward evidence-based practice will be assessed with the Evidence-Based Practice Attitude Scale [29],[89],[90] (total α = .76).

Administrator measures

Data will be collected from administrators using web-based surveys. The following measures will be included in the administrator survey:

Five subscales from the Program Sustainability Index [91],[92] will assess leadership competence (α = .81, 5 items), effective collaboration (α = .88, 10 items), program results (α = .85, 4 items), strategic funding (α = .76, 3 items), and staff involvement (α = .76, 4 items).

The Level of Institutionalization Scale [80] will be adapted for this study to assess the degree to which SC is institutionalized in the service system and provider organizations using the Cycles-Routines (α = .87) and Niche Saturation scales (α = .84).
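The α values reported for the survey scales above are internal-consistency coefficients, conventionally Cronbach's alpha. For reference, a minimal sketch of that coefficient for a respondents-by-items score matrix is shown below; the function name, data layout, and responses are illustrative assumptions, and this is not the published scoring procedure for any of the scales.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Population variances are used throughout; the n vs. n - 1 choice cancels
    in the ratio as long as it is applied consistently.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    # zip(*scores) yields one tuple of responses per item (column).
    item_variance_sum = sum(pvariance(item) for item in zip(*scores))
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical responses: 4 respondents x 3 items.
scores = [[2, 3, 3], [4, 4, 5], [3, 3, 4], [5, 4, 5]]
print(round(cronbach_alpha(scores), 3))  # 0.923
```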

Quantitative analyses

All data analytic strategies will adhere to recommendations of the Prevention Science and Methodology Group [93]. Primary analyses will be based on generalized linear mixed models [94]-[96] because the data will have a three-level hierarchical structure in which measurements over time are nested within individuals (i.e., providers), and individuals are nested within supervision groups. In general, this type of multilevel data will be modeled by including random coefficients at the provider and supervision group levels. While this approach is typically sufficient to control for dependency among observations, we will also test for residual dependency by fitting models that superimpose different autocorrelated error structures on the baseline random-effects models and comparing the fit of these nested models using a likelihood ratio test [94],[96]. The models tested involve both time-invariant (fixed) and time-varying covariates. Our modeling approach imposes a 1-year lag on observations for the response: for time-varying covariates, Time 1 covariate values are used to predict Time 2 values of the response, and Time 2 covariate values are used to predict Time 3 values of the response, controlling for Time 1 measurements of the response [97]. Given that the conventional mixed model imposes the untested constraint that each time-varying covariate has equal between- and within-subject effects, we will explore models that provide separate estimates of these effects and thereby relax this constraint [94],[98]. All models will be multivariable (i.e., testing all predictors simultaneously). Significance tests will focus on individual regression coefficients from these models.
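The lag structure and the separation of between- and within-subject effects both come down to how the long-format data are arranged before model fitting. A minimal sketch, assuming hypothetical field names (`provider`, `group`, `wave`, `y`, `x`) and using person-mean centering as one common way to split a time-varying covariate into between- and within-provider components, might look like this; the resulting rows would then be passed to a mixed-model routine with random effects for provider and supervision group, which the protocol does not tie to specific software.

```python
from collections import defaultdict
from statistics import mean


def build_lagged_rows(observations):
    """Restructure long-format survey data for a 1-year-lagged mixed model.

    observations: iterable of dicts with hypothetical keys
      "provider", "group", "wave", "y" (response), "x" (time-varying covariate).
    Returns one row per provider per wave t >= 2, pairing y at wave t with
    x at wave t - 1 and the baseline (wave 1) response. The lagged covariate is
    split into a provider mean (between) and a deviation from it (within).
    """
    by_provider = defaultdict(dict)
    for obs in observations:
        by_provider[obs["provider"]][obs["wave"]] = obs

    rows = []
    for provider, waves in by_provider.items():
        if 1 not in waves:
            continue  # no baseline response to control for
        # Waves whose previous-wave covariate value is available.
        eligible = [t for t in sorted(waves) if t >= 2 and (t - 1) in waves]
        if not eligible:
            continue
        x_between = mean(waves[t - 1]["x"] for t in eligible)
        for t in eligible:
            x_lag = waves[t - 1]["x"]
            rows.append({
                "provider": provider,
                "group": waves[t]["group"],  # supervision group at wave t
                "wave": t,
                "y": waves[t]["y"],
                "y_baseline": waves[1]["y"],
                "x_lag": x_lag,
                "x_between": x_between,
                "x_within": x_lag - x_between,
            })
    return rows


observations = [
    {"provider": "p1", "group": "g1", "wave": 1, "y": 10, "x": 2},
    {"provider": "p1", "group": "g1", "wave": 2, "y": 12, "x": 4},
    {"provider": "p1", "group": "g1", "wave": 3, "y": 15, "x": 6},
]
for row in build_lagged_rows(observations):
    print(row["wave"], row["y"], row["y_baseline"], row["x_lag"], row["x_within"])
```

Entering `x_between` and `x_within` as separate predictors is what lets the model estimate different between- and within-subject slopes instead of forcing them to be equal.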

Provider reach/penetration is a binary indicator (a provider either is or is not trained, certified, and delivering every indicated SC module to clients on their caseload) and will be modeled with a logit link function. Demographic variables (age, gender, race/ethnicity, education level, years of experience, job tenure, urban/rural), work attitudes, turnover intentions, team turnover rate, organizational climate, and sustainment climate will be included in the model. Given that the response and predictors are measured at each assessment period, we will lag the response, controlling for baseline measurements of the response [97].
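To make the binary outcome and its link concrete, the sketch below codes the reach indicator from hypothetical training, certification, and module-delivery records and shows the logit link and its inverse, which map between the model's linear predictor (log-odds) and the probability of reach. The module labels, the coefficient values in the usage line, and the choice to code providers with no indicated modules as 0 are all illustrative assumptions.

```python
import math


def reach_indicator(trained: bool, certified: bool,
                    indicated_modules: set[str], delivered_modules: set[str]) -> int:
    """1 if trained, certified, and delivering every indicated SC module; else 0.

    Assumption: a provider with no indicated modules on their caseload is coded 0.
    """
    return int(trained and certified and bool(indicated_modules)
               and indicated_modules <= delivered_modules)


def logit(p: float) -> float:
    """Logit link: probability -> log-odds (the scale of the linear predictor)."""
    return math.log(p / (1.0 - p))


def inverse_logit(eta: float) -> float:
    """Inverse link: linear predictor on the log-odds scale -> probability of reach."""
    return 1.0 / (1.0 + math.exp(-eta))


# Hypothetical module labels and coefficients, for illustration only.
print(reach_indicator(True, True, {"m1", "m2"}, {"m1", "m2", "m3"}))
eta = -0.5 + 0.8 * 1.0  # intercept + slope * sustainment climate score
print(round(inverse_logit(eta), 3))
```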

Client reach/penetration will be evaluated with two statistical models. Client penetration is defined as the number of clients served by SC divided by the number served by SC plus the number who need SC but are not served (i.e., served/[served + unmet need]). The proportion of clients served with SC in each implementation site will be compared using a chi-square goodness-of-fit test, followed by comparisons among sites conditional on a significant overall test. These comparisons will be conducted cross-sectionally; there is no hierarchical structure associated with this outcome. If statistically significant differences between sites are found, qualitative analyses will be conducted to identify the causes of the disparity.
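A worked sketch of the penetration formula and an overall test across sites follows. Note the swap: the protocol names a chi-square goodness-of-fit test, while this sketch computes Pearson's chi-square on a sites × (served, unmet need) table, which tests the null hypothesis that penetration is equal across sites; the site names and counts are hypothetical. For a p-value, `scipy.stats.chi2.sf(statistic, df)` could be applied to the result, and `scipy.stats.chi2_contingency` on the same table gives the same statistic provided `correction=False` is passed for a two-site table (otherwise Yates' continuity correction is applied).

```python
def penetration(served: int, unmet_need: int) -> float:
    """Client penetration = served / (served + unmet need)."""
    denominator = served + unmet_need
    if denominator == 0:
        raise ValueError("no clients in need of SC")
    return served / denominator


def chi_square_equal_penetration(sites: dict[str, tuple[int, int]]) -> tuple[float, int]:
    """Pearson chi-square on a sites x (served, unmet need) table.

    Tests whether penetration is the same in every site.
    Returns the statistic and its degrees of freedom (number of sites - 1).
    """
    total_served = sum(served for served, _ in sites.values())
    total_unmet = sum(unmet for _, unmet in sites.values())
    grand_total = total_served + total_unmet
    if len(sites) < 2 or total_served == 0 or total_unmet == 0:
        raise ValueError("need at least two sites and nonzero column totals")
    statistic = 0.0
    for served, unmet in sites.values():
        row_total = served + unmet
        if row_total == 0:
            raise ValueError("every site needs at least one client in need")
        for observed, column_total in ((served, total_served), (unmet, total_unmet)):
            expected = row_total * column_total / grand_total  # under equal penetration
            statistic += (observed - expected) ** 2 / expected
    return statistic, len(sites) - 1


# Hypothetical counts per site: (served, unmet need).
sites = {"site_a": (30, 70), "site_b": (50, 50)}
print({name: penetration(*counts) for name, counts in sites.items()})  # {'site_a': 0.3, 'site_b': 0.5}
print(chi_square_equal_penetration(sites))
```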

Fidelity ratings of SC providers will be treated as a continuous outcome, modeled using a link function selected on the basis of the observed distribution. Predictors include work attitudes, organizational climate, sustainment climate, and demographic variables. While predictor variables are measured at each assessment, fidelity ratings will be averaged for each provider over the period between two assessments and over the period leading up to the first assessment. Each averaged value is treated as a measurement occasion for the later assessment (e.g., a provider’s fidelity ratings averaged between the first and second assessments are treated as the response value at the second assessment).
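The windowing rule for fidelity ratings can be sketched as follows for a single provider. The boundary choice (a rating dated on an assessment day counts toward that assessment) and dropping ratings made after the last assessment are assumptions, not rules stated in the protocol; the dates and scores are hypothetical.

```python
from bisect import bisect_left
from datetime import date
from statistics import mean


def average_fidelity_by_assessment(ratings, assessment_dates):
    """Average one provider's fidelity ratings within each assessment window.

    ratings: list of (rating_date, score) pairs from coach observations.
    assessment_dates: sorted, distinct survey assessment dates.
    A rating dated after assessment k-1 and on or before assessment k counts
    toward assessment k; ratings before the first assessment count toward the
    first. Ratings after the last assessment fall outside every window and
    are dropped here.
    """
    windows = {d: [] for d in assessment_dates}
    for rated_on, score in ratings:
        k = bisect_left(assessment_dates, rated_on)  # first assessment on/after rating
        if k < len(assessment_dates):
            windows[assessment_dates[k]].append(score)
    return {d: mean(scores) for d, scores in windows.items() if scores}


assessments = [date(2014, 1, 15), date(2015, 1, 15)]
ratings = [
    (date(2013, 12, 1), 4.0),
    (date(2014, 1, 10), 5.0),
    (date(2014, 6, 1), 3.0),
    (date(2014, 12, 1), 4.0),
    (date(2015, 3, 1), 5.0),
]
print(average_fidelity_by_assessment(ratings, assessments))
```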

The sustainment climate response is continuous and, assuming it is normally distributed, will be modeled with an identity link function. The same demographic predictors described above will be included, as will work attitudes, transformational leadership, and organizational climate. Given that the response and predictors are measured at each assessment period, we will lag the response, controlling for baseline measurements [97].

Overall mixed-methods integration

We will integrate qualitative and quantitative results through an inclusive process that values the perspectives of all stakeholders (state/county and organizational participants, investigators, consultants). We will assess consistencies and discrepancies between qualitative and quantitative data and analyses to determine whether we are capturing the issues and constructs most relevant to SC sustainment in the service systems and organizations. We will consider each analysis (qualitative and quantitative) on its own terms and then examine how the two differ or converge in their findings as we work toward overall interpretations and conclusions [77],[99],[100]. Qualitative and quantitative data will be integrated through triangulation to examine convergence, expansion, and complementarity of the two data sets [70],[99],[101],[102]. Data sets will be merged by a) linking qualitative and quantitative databases and b) embedding one within the other so that each plays a supportive role for the other. Specifically, results of each data set will be placed side-by-side to examine 1) convergence (do results provide the same answer to the same question, e.g., do interview data concur with quantitative data regarding sustainment climate and SC use?), 2) expansion (are unanticipated findings produced by one data set explained by another, e.g., can survey data that suggest poor sustainment climate be explained by qualitative interview data?), and 3) complementarity (does embedding results of the qualitative analysis within the quantitative data set help contextualize overall results, i.e., does it explain variability represented by confidence intervals or variance estimates in statistical analyses on sustainment climate and leadership?).

Aim 4

Integrate findings from Aims 1, 2, and 3 through a collaborative process involving the investigative team, consultants, and system and community-based organization stakeholders in order to further develop and refine a conceptual model of sustainment to guide future research and to provide a resource for service systems to prepare for sustainment as the ultimate goal of the implementation process.

Discussion

The study of sustainment is at least as important as the study of implementation for a number of reasons. First, the potential public health impact of implementing an EBI will not be fully realized unless that practice can be sustained in the context in which it was implemented. Second, initial investments in EBI implementation may be diminished or wasted without sustainment, resulting in a lack of return on investment (ROI) and a failure to realize the cost-effectiveness of EBIs found in other studies; further research (beyond the scope of the present study) is needed to examine this issue. Indeed, although methods for assessing ROI have a long history, and despite the importance of ROI when considering the sunk costs of implementation efforts [103], ROI is rarely examined. Third, without ongoing practice, skills developed by clinicians will likely be lost or EBI fidelity will be jeopardized [104]. Although flexibility within fidelity is a recommended approach [105], without ongoing feedback or coaching, clinician or service provider behavior may drift from expected standards of practice associated with positive patient or client outcomes. Taken together, these negative consequences of failing to sustain EBIs seriously threaten the value of implementation efforts.

To understand the multiple factors that affect implementation and sustainment, mixed methods are needed [101]. For example, gaining a full understanding of the outer context affecting sustainment requires not only interviews with key stakeholders but also an analysis of the written and formalized policies that shape how services are funded, supported, and monitored at the system level. Where services are contracted, an evaluation of requests for proposals, contracts, and statements of work is necessary to better understand the legally binding mechanisms that encourage, support, dictate, or disrupt which services will be provided and how they will be provided. In the inner context, mixed methods may inform, converge, or expand the understanding of how leadership, group dynamics, and organizational context can affect the quality with which EBIs are sustained [70]. Finally, studies with prospective design characteristics, such as the one described in this protocol, are needed so that sustainment is not assessed at only one point in time; rather, changes in policy and organizational contexts can be examined over time for their impact on EBI sustainment processes and outcomes.

Acknowledgments

This study is supported by the U.S. National Institute of Mental Health grant R01MH072961 (Principal Investigator: Gregory A. Aarons). We thank participants from the service systems, organizations, and the participating providers for their collaboration and involvement in this study. We also thank Natalie Finn, B.A., for her assistance in the preparation of this manuscript.

Footnotes

Competing interests

GAA is an Associate Editor, and CEW and SCR are on the Editorial Board of Implementation Science; all decisions on this paper were made by another editor. The authors declare that they have no other competing interests.

Authors’ contributions

GAA is the principal investigator for this study. AEG and GAA drafted the manuscript. GAA, AEG, CEW, MGE, SCR, MJC, and DBH conceptualized and designed the study, edited the manuscript, and approved the final version of the manuscript.

Contributor Information

Gregory A Aarons, Email: gaarons@ucsd.edu.

Amy E Green, Email: a4green@ucsd.edu.

Cathleen E Willging, Email: cwillging@pire.org.

Mark G Ehrhart, Email: mehrhart@mail.sdsu.edu.

Scott C Roesch, Email: scroesch@sciences.sdsu.edu.

Debra B Hecht, Email: debra-hecht@ouhsc.edu.

Mark J Chaffin, Email: mchaffin@gsu.edu.

References

- 1.Edmondson AC. Framing for learning: lessons in successful technology implementation. Calif Manage Rev. 2003;45:35–54. [Google Scholar]

- 2.Edmondson AC. Speaking up in the operating room: how team leaders promote learning in interdisciplinary action teams. J Manage Stud. 2003;40:1419–1452. [Google Scholar]

- 3.Massatti RR, Sweeney HA, Panzano PC, Roth D. The de-adoption of innovative mental health practices (IMHP): why organizations choose not to sustain an IMHP. Adm Policy Ment Hlth. 2008;35:50–65. doi: 10.1007/s10488-007-0141-z. [DOI] [PubMed] [Google Scholar]

- 4.Romance NR, Vitale MR, Dolan M, Kalvaitis L: An empirical investigation of leadership and organizational issues associated with sustaining a successful school renewal initiative.Paper presented at the Annual Meeting of the American Educational Research Association April, 2002; New Orleans, LA.,

- 5.Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Hlth. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chambers DA, Azrin ST. Research and services partnerships: partnership: a fundamental component of dissemination and implementation research. Psychiatr Serv. 2013;64:509–511. doi: 10.1176/appi.ps.201300032. [DOI] [PubMed] [Google Scholar]

- 7.Chamberlain P, Brown CH, Saldana L. Observational measure of implementation progress in community based settings: the stages of implementation completion (SIC) Implement Sci. 2011;6:116–123. doi: 10.1186/1748-5908-6-116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, the National Implementation Research Network (FMHI Publication #231; 2005. [Google Scholar]

- 9.Tabak RG, Khoong EC, Chambers DB, Brownson RC. Models in dissemination and implementation research: useful tools in public health services and systems research. Frontiers in Public Health Services and Systems Research. 2013;2:8. [Google Scholar]

- 10.Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117. doi: 10.1186/1748-5908-8-117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82:581–629. doi: 10.1111/j.0887-378X.2004.00325.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Stirman SW, Kimberly J, Cook N, Calloway A, Castro F. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:12. doi: 10.1186/1748-5908-7-17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Klein KJ, Conn AB, Sorra JS. Implementing computerized technology: an organizational analysis. J Appl Psychol. 2001;86:811–824. doi: 10.1037/0021-9010.86.5.811. [DOI] [PubMed] [Google Scholar]

- 14.Pluye P, Potvin L, Denis JL. Making public health program last: conceptualizing sustainability. Eval Program Plann. 2004;24:121–133. [Google Scholar]

- 15.Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons GA, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Hlth. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Mendel P, Meredith L, Schoenbaum M, Sherbourne C, Wells K. Interventions in organizational and community context: a framework for building evidence on dissemination and implementation in health services research. Adm Policy Ment Hlth. 2008;35:21–37. doi: 10.1007/s10488-007-0144-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Bruns EJ, Hoagwood KE, Rivard JC, Wotring J, Marsenich L, Carter B. State implementation of evidence-based practice for youths, part II: recommendations for research and policy. J Am Acad Child Adolesc Psychiatry. 2008;47:499–504. doi: 10.1097/CHI.0b013e3181684557. [DOI] [PubMed] [Google Scholar]

- 18.Feldman S. The ‘millionaires tax’ and mental health policy in California. Health Aff. 2009;28:809–815. doi: 10.1377/hlthaff.28.3.809. [DOI] [PubMed] [Google Scholar]

- 19.Raghavan R, Bright CL, Shadoin AL. Toward a policy ecology of implementation of evidence-based practices in public mental health settings. Implement Sci. 2008;3:3–26. doi: 10.1186/1748-5908-3-26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–274. doi: 10.1146/annurev-publhealth-032013-182447. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Aarons GA. Measuring provider attitudes toward evidence-based practice: consideration of organizational context and individual differences. Child Adolesc Psychiatr Clin N Am. 2005;14:255–271. doi: 10.1016/j.chc.2004.04.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Aarons GA, Sommerfeld DH, Walrath-Greene CM. Evidence-based practice implementation: the impact of public versus private sector organization type on organizational support, provider attitudes, and adoption of evidence-based practice. Implement Sci. 2009;4:83–96. doi: 10.1186/1748-5908-4-83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Caldwell DF, O’Reilly CA., III The determinants of team-based innovation in organizations: the role of social influence. Small Group Res. 2003;34:497–517. [Google Scholar]

- 24.Willging CE, Lamphere L, Waitzkin H. Transforming administrative and clinical practice in a public behavioral health system: an ethnographic assessment of the context of change. Journal of Health Care for the Poor and the Underserved. 2009;20:873–890. doi: 10.1353/hpu.0.0177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Henggeler SW, Chapman JE, Rowland MD, Halliday-Boykins CA, Randall J, Shackelford J, Schoenwald SK. Statewide adoption and initial implementation of contingency management for substance abusing adolescents. J Consult Clin Psychol. 2008;76:556–567. doi: 10.1037/0022-006X.76.4.556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, Hurford MO, Hadley T, Barg F, Walsh LM, Adams D, Mandell D: Individual and organizational predictors of community therapists’ use of evidence-based practices in a large public mental health system.JAMA Psychiatry, in press.,

- 27.Glisson C. The organizational context of children’s mental health services. Clin Child Fam Psych. 2002;5:233–253. doi: 10.1023/a:1020972906177. [DOI] [PubMed] [Google Scholar]

- 28.Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychol Serv. 2006;3:61–72. doi: 10.1037/1541-1559.3.1.61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the evidence-based practice attitude scale (EBPAS) Ment Health Serv Res. 2004;6:61–74. doi: 10.1023/b:mhsr.0000024351.12294.65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ehrhart MG. Leadership and procedural justice climate as antecedents of unit-level organizational citizenship behavior. Pers Psychol. 2004;57:61–94. [Google Scholar]

- 31.Schneider B, Ehrhart MG, Mayer DM, Saltz JL, Niles-Jolly K. Understanding organization-customer links in service settings. Acad Manage J. 2005;48:1017–1032. [Google Scholar]

- 32.Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS) Implement Sci. 2014;9:157. doi: 10.1186/s13012-014-0157-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Aarons GA. Transformational and transactional leadership: association with attitudes toward evidence-based practice. Psychiatr Serv. 2006;57:1162–1169. doi: 10.1176/appi.ps.57.8.1162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Frambach RT, Schillewaert N. Organizational innovation adoption: a multi-level framework of determinants and opportunities for future research. Journal of Business Research Special Issue: Marketing Theory in the Next Millennium. 2002;55:163–176. [Google Scholar]

- 35.Jacobs SR, Weiner BJ, Bunger AC. Context matters: measuring implementation climate among individuals and groups. Implement Sci. 2014;9:46. doi: 10.1186/1748-5908-9-46. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Butterfoss FDB. Coalitions and Partnerships in Community Health. Hoboken, NJ: Jossey-Bass; 2007. [Google Scholar]

- 37.Wallerstein N, Duran B. The conceptual, historical, and practice roots of community based participatory research and related participatory traditions. In: Minkler M, Wallerstein N, editors. Community-based Participatory Research for Health. San Francisco: Jossey-Bass; 2003. [Google Scholar]

- 38.Wallerstein N, Duran B. Community-based participatory research contributions to intervention research: the intersection of science and practice to improve health equity. Am J Public Health. 2010;100:S40–S46. doi: 10.2105/AJPH.2009.184036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Wells K, Jones L. Research in community-partnered, participatory research. JAMA. 2009;302:320–321. doi: 10.1001/jama.2009.1033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Widdus R. Public-private partnerships for health: their main targets, their diversity, and their future directions. Bull World Health Organ. 2001;79:713–720. [PMC free article] [PubMed] [Google Scholar]

- 41.Garland AF, Hurlburt MS, Hawley KM. Examining psychotherapy processes in a services research context. Clin Psychol-Sci Pr. 2006;13:30–46. [Google Scholar]

- 42.Wells K, Miranda J, Bruce ML, Alegria M, Wallerstein N. Bridging community intervention and mental health services research. Am J Psychiatry. 2004;161:955–963. doi: 10.1176/appi.ajp.161.6.955. [DOI] [PubMed] [Google Scholar]

- 43.Institute of Medicine [IOM] Improving the Quality of Health Care for Mental and Substance-use Conditions. Washington DC: National Academies Press; 2006. [PubMed] [Google Scholar]

- 44.Chaffin M, Hecht D, Bard D, Silovsky JF, Beasley WH. A statewide trial of the SafeCare home-based services model with parents in Child Protective Services. Pediatrics. 2012;129:509–515. doi: 10.1542/peds.2011-1840. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Swenson CC, Brown EJ, Lutzker JR. Issues of maltreatment and abuse. In: Freeman A, editor. Personality Disorders in Childhood and Adolescence. Hoboken, NJ: John Wiley & Sons Inc; 2007. pp. 229–295. [Google Scholar]

- 46.Bousha DM, Twentyman T. Mother-child interactional style in abuse, neglect, and control groups: naturalistic observations in the home. J Abnorm Psychol. 1984;93:106–114. doi: 10.1037//0021-843x.93.1.106. [DOI] [PubMed] [Google Scholar]

- 47.Crittenden PM. Children’s strategies for coping with adverse home environments: an interpretation using attachment theory. Child Abuse Negl. 1992;16:329–343. doi: 10.1016/0145-2134(92)90043-q. [DOI] [PubMed] [Google Scholar]

- 48.Egeland B, Sroufe A, Erickson M. The developmental consequence of different patterns of maltreatment. Child Abuse Negl. 1983;7:459–469. doi: 10.1016/0145-2134(83)90053-4. [DOI] [PubMed] [Google Scholar]

- 49.Kent J. A follow-up study of abused children. J Pediatr Psychol. 1976;1:25–31. [Google Scholar]

- 50.Culp RE, Watkins RV, Lawrence H, Letts D, Kelly DJ, Rice ML. Maltreated children’s language and speech development: abused, neglected, and abused and neglected. First Language. 1991;11:377–389. [Google Scholar]

- 51.Fox L, Long SH, Langlois A. Patterns of language comprehension deficit in abused and neglected children. Journal of Speech & Hearing Disorders. 1988;53:239–244. doi: 10.1044/jshd.5303.239. [DOI] [PubMed] [Google Scholar]

- 52.Gaudin JM. Short-term and long-term outcomes. In: Dubowitz H, editor. Neglected Children: Research, Practice and Policy. London: Sage; 1999. pp. 89–108. [Google Scholar]

- 53.Widom CS, White HR. Problem behaviors in abused and neglected children grown-up: prevalence and co-occurrence of substance abuse, crime and violence. Criminal Behavior & Mental Health. 1998;7:287–310. [Google Scholar]

- 54.U.S. Department of Health and Human Services, Administration for Children and Families, Administration on Children, Youth and Families . Child Maltreatment. Washington, DC: Children’s Bureau; 2010. [Google Scholar]

- 55.Lutzker JR, Bigelow KM, Doctor RM, Kessler ML. Safety, health care, and bonding within an ecobehavioral approach to treating and preventing child abuse and neglect. J Fam Violence. 1998;13:163–185. [Google Scholar]

- 56.Whitaker DJ, Lutzker JR, Self-Brown S, Edwards AE. Implementing an evidence-based program for the prevention of child maltreatment: the SafeCare® program. Report on Emotional & Behavioral Disorders in Youth. 2008;8:55–62. [Google Scholar]

- 57.Wright C, Catty J, Watt H, Burns T. A systematic review of home treatment services. Soc Psychiatry Psychiatr Epidemiol. 2004;39:789–796. doi: 10.1007/s00127-004-0818-5. [DOI] [PubMed] [Google Scholar]

- 58.Fagen MC, Flay BR. Sustaining a school-based prevention program: results from the Aban Aya sustainability project. Health Educ Behav. 2009;36:9–23. doi: 10.1177/1090198106291376. [DOI] [PubMed] [Google Scholar]

- 59.Ozer E, Cantor J, Cruz G, Fox B, Hubbard E, Moret L. The diffusion of youth-led participatory research in urban schools: the role of the prevention support system in implementation and sustainability. Am J Community Psychol. 2008;41:278–289. doi: 10.1007/s10464-008-9173-0. [DOI] [PubMed] [Google Scholar]

- 60.Elias MJ, Zins JE, Graczyk PA, Weissberg RP. Implementation, sustainability and scaling up of social-emotional and academic innovations in public schools. School Psych Rev. 2003;32:303–319. [Google Scholar]

- 61.Foster EM, Kelsch CC, Kamradt B, Sosna T, Yang Z. Expenditures and sustainability in systems of care. J Emot Behav Disord. 2001;9:53–62. [Google Scholar]

- 62.Jacobs JA, Dodson EA, Baker EA, Deshpande AD, Brownson RC. Barriers to evidence-based decision making in public health: a national survey of chronic disease practitioners. Public Health Rep. 2010;125:736–742. doi: 10.1177/003335491012500516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Grimes J, Kurns S, Tilly WDI. Sustainability: an enduring commitment to success. School Psych Rev. 2006;35:224–244. [Google Scholar]

- 64.Larsen T, Samdal O. Implementing second step: balancing fidelity and program adaptation. J Educ Psychol Consult. 2007;17:1–29. [Google Scholar]

- 65.Jansen M, Harting J, Ebben N, Kroon B, Stappers J, Van Engelshoven E, de Vries N. The concept of sustainability and the use of outcome indicators. A case study to continue a successful health counselling intervention. Fam Pract. 2008;25:32–37. doi: 10.1093/fampra/cmn066. [DOI] [PubMed] [Google Scholar]

- 66.Baumann A, Hunsberger M, Blythe J, Crea M. Sustainability of the workforce: government policies and the rural fit. Health Policy. 2008;85:372–379. doi: 10.1016/j.healthpol.2007.09.004. [DOI] [PubMed] [Google Scholar]

- 67.Myers KM, Valentine JM, Melzer SM. Feasibility, acceptability, and sustainability of telepsychiatry for children and adults. Psychiatr Serv. 2007;58:1493–1496. doi: 10.1176/ps.2007.58.11.1493. [DOI] [PubMed] [Google Scholar]

- 68.Aarons GA, Sommerfeld DH. Leadership, innovation climate, and attitudes toward evidence-based practice during a statewide implementation. J Am Acad Child Psy. 2012;51:423–431. doi: 10.1016/j.jaac.2012.01.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Aarons GA, Sommerfeld DH, Hecht DB, Silovsky JF, Chaffin MJ. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: evidence for a protective effect. J Consult Clin Psychol. 2009;77:270–280. doi: 10.1037/a0013223. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreat. 2012;17:67–79. doi: 10.1177/1077559511426908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Hurlburt M, Aarons GA, Fettes DL, Willging C, Gunderson L, Chaffin MJ. Interagency collaborative team model for capacity building to scale-up evidence-based practice. Child Youth Serv Rev. 2014;39:160–168. doi: 10.1016/j.childyouth.2013.10.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Aarons GA, Fettes DL, Hurlburt MS, Palinkas LA, Gunderson L, Willging CE, Chaffin MJ. J Clin Child Adolesc. 2014. Collaboration, negotiation, and coalescence for interagency-collaborative teams to scale-up evidence-based practice; pp. 1–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, Silovsky JF, Hecht DB, Chaffin MJ. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci. 2012;7:1–9. doi: 10.1186/1748-5908-7-32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Waitzkin H, Williams R, Bock J, McCloskey J, Willging C, Wagner W. Safety-net institutions buffer the impacts of medicaid managed care: a multi-method assessment in a rural state. Am J Public Health. 2002;92:598–610. doi: 10.2105/ajph.92.4.598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Willging C, Waitzkin H, Wagner W. Medicaid managed care for mental health services in a rural state. J Health Care Poor Underserved. 2005;16:497–514. doi: 10.1353/hpu.2005.0060.

- 76.Stufflebeam DL, Shinkfield AJ. Evaluation Theory, Models, and Applications. San Francisco, CA: Jossey-Bass; 2007.

- 77.Patton MQ. Qualitative analysis and interpretation. In: Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks, CA: Sage Publications; 2002. pp. 431–539.

- 78.Miles MB, Huberman AM. Qualitative Data Analysis: An Expanded Sourcebook. Thousand Oaks, CA: Sage Publications, Inc.; 1994.

- 79.Fraser D. QSR NVivo Reference Guide. Melbourne: QSR International; 2000.

- 80.Steckler A, Goodman RM, McLeroy KR, Davis S, Koch G. Measuring the diffusion of innovative health promotion programs. Am J Health Promot. 1992;6:214–225. doi: 10.4278/0890-1171-6.3.214.

- 81.Kano M, Willging C, Rylko-Bauer B. Community participation in New Mexico’s behavioral health care reform. Med Anthropol Q. 2009;23:277–297. doi: 10.1111/j.1548-1387.2009.01060.x.

- 82.Emerson RM, Fretz RI, Shaw LL. Writing Ethnographic Fieldnotes. Chicago: University of Chicago Press; 1996.

- 83.Schoenwald SK, Sheidow AJ, Letourneau EJ. Toward effective quality assurance in evidence-based practice: links between expert consultation, therapist fidelity, and child outcomes. J Clin Child Adolesc. 2004;33:94–104. doi: 10.1207/S15374424JCCP3301_10.

- 84.Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood K, Mayberg S, Green P. Assessing the Organizational Social Context (OSC) of mental health services: implications for research and practice. Administration and Policy in Mental Health and Mental Health Services Research Special Issue: Improving Mental Health Services. 2008;35:98–113. doi: 10.1007/s10488-007-0148-5.

- 85.Bass BM, Avolio BJ. MLQ: Multifactor Leadership Questionnaire (Technical Report). Binghamton, NY: Center for Leadership Studies, Binghamton University; 1995.

- 86.Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9:45. doi: 10.1186/1748-5908-9-45.

- 87.Kraut RE, Rice RE, Cool C, Fish RS. Varieties of social influence: the role of utility and norms in the success of a new communication medium. Organ Sci. 1998;9:437–453.

- 88.Hellofs LL, Jacobson R. Market share and customers’ perceptions of quality: when can firms grow their way to higher versus lower quality? J Mark. 1999;63:16–25.

- 89.Aarons GA, McDonald EJ, Sheehan AK, Walrath-Greene CM. Confirmatory factor analysis of the evidence-based practice attitude scale (EBPAS) in a geographically diverse sample of community mental health providers. Adm Policy Ment Hlth. 2007;34:465–469. doi: 10.1007/s10488-007-0127-x.

- 90.Aarons GA, Cafri G, Lugo L, Sawitzky A. Expanding the domains of attitudes towards evidence-based practice: the Evidence-based Practice Attitude Scale-50. Adm Policy Ment Hlth. 2012;39:331–340. doi: 10.1007/s10488-010-0302-3.

- 91.Mancini JA, Marek LI. Sustaining community-based programs for families: conceptualization and measurement. Fam Relat. 2004;53:339–347.

- 92.Goodman RM, McLeroy KR, Steckler AB, Hoyle RH. Development of level of institutionalization scales for health promotion programs. Health Educ Q. 1993;20:161–178. doi: 10.1177/109019819302000208.

- 93.Brown CH, Wang W, Kellam SG, Muthen BO, Petras H, Toyinbo P, Poduska J, Ialongo N, Wyman PA, Chamberlain P, Sloboda Z, MacKinnon DP, Windham A; the Prevention Science and Methodology Group. Methods for testing theory and evaluating impact in randomized field trials: intent-to-treat analyses for integrating the perspectives of person, place, and time. Drug Alcohol Depend. 2008;95:74–104. doi: 10.1016/j.drugalcdep.2007.11.013.

- 94.Hedeker D, Gibbons RD. Longitudinal Data Analysis. 1st ed. Hoboken, NJ: Wiley-Interscience; 2006.

- 95.McCulloch CE, Searle SR. Generalized, Linear, and Mixed Models. New York: Wiley; 2001.

- 96.Raudenbush SW, Bryk AS. Hierarchical Linear Models: Applications and Data Analysis Methods. Thousand Oaks, CA: Sage Publications; 2002.

- 97.Singer JD, Willett JB. Applied Longitudinal Data Analysis: Modeling Change and Event Occurrence. New York: Oxford University Press; 2003.

- 98.Hedeker D. An introduction to growth modeling. In: Kaplan D, editor. The Sage Handbook of Quantitative Methodology for the Social Sciences. Thousand Oaks, CA: Sage Publications; 2004.

- 99.Creswell JW, Plano Clark VL. Designing and Conducting Mixed Methods Research. Thousand Oaks, CA: Sage Publications; 2007.

- 100.Tashakkori A, Teddlie C. Issues and dilemmas in teaching research methods courses in social and behavioural sciences: US perspective. Int J Social Res Methodol. 2003;6:61–77.

- 101.Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, Landsverk J. Mixed method designs in implementation research. Adm Policy Ment Hlth. 2011;38:44–53. doi: 10.1007/s10488-010-0314-z.

- 102.Teddlie C, Tashakkori A. Major issues and controversies in the use of mixed methods in the social and behavioral sciences. In: Tashakkori A, Teddlie C, editors. Handbook of Mixed Methods in Social and Behavioral Research. Thousand Oaks, CA: Sage Publications; 2003. pp. 3–50.

- 103.Phillips JJ. Return on Investment in Training and Performance Improvement Programs. New York, NY: Routledge; 2003.

- 104.McHugo GJ, Drake RE, Whitley R, Bond GR, Campbell K, Rapp CA, Goldman HH, Lutz WJ, Finnerty MT. Fidelity outcomes in the national implementing evidence-based practices project. Psychiatr Serv. 2007;58:1279–1284. doi: 10.1176/ps.2007.58.10.1279.

- 105.Kendall PC, Beidas RS. Smoothing the trail for dissemination of evidence-based practices for youth: flexibility within fidelity. Prof Psychol: Res Pract. 2007;38:13–20.