Abstract

Individuals of many animal species communicate with each other using sounds or “calls” that are made up of basic acoustic patterns and their combinations. We are interested in how communication calls are processed and represented within the mammalian auditory cortex. Our studies compare, in particular, two species for which a large body of data has accumulated: the mustached bat and the rhesus monkey. We conclude that the brains of both species share a number of functional and organizational principles, which differ only in the extent to which, and the way in which, they are implemented. For instance, neurons in both species use “combination-sensitivity” (nonlinear spectral and temporal integration of stimulus components) as a basic mechanism that enables exquisite sensitivity to, and selectivity for, particular call types. Whereas in bats combination-sensitivity is already abundant in the primary auditory cortex, and is present even at subcortical levels, in monkeys it becomes prevalent only at the level of the lateral belt in the secondary auditory cortex. Another common principle is parallel-hierarchical processing: of complex sounds up to the level of the auditory cortex in bats, and along cortico-cortical auditory processing streams in monkeys. Response specialization of neurons seems to be more pronounced in bats than in monkeys, whereas a functional specialization into “what” and “where” streams in the cerebral cortex is more pronounced in monkeys than in bats. These differences are due in part to the greater number and larger size of auditory areas in the parietal and frontal cortex of primates. In bats, by contrast, the computational power of the neural networks, and the functional hierarchy that gives rise to specialization, appear to be established early and to unfold across a more condensed sequence of brain regions.
The principles proposed here for the neural “management” of species-specific calls in bats and primates can be tested by studying the details of call processing in additional species. Also, computational modeling in conjunction with coordinated studies in bats and monkeys can help to clarify the fundamental question of perceptual invariance (or “constancy”) in call recognition, which has obvious relevance for understanding speech perception and its disorders in humans.

Keywords: Language, Speech, Neural coding, Bats, Primates, Review

2. INTRODUCTION

Communication sounds (or “calls”) mediate many interactions between conspecifics and can be important determinants of social order (1–5). Although animal calls can be used as information-bearing signals across species to aid in their survival, as in the case of alarm calls, those of greatest concern here are the species-specific calls that are used in a social context. These calls are of greatest importance for species that are highly social, such as most primates, bats and many other species that use calls to gauge motivational levels of others and modulate their own so as to interact with conspecifics in the most advantageous manner. How are such complex sounds perceived and how are they processed by the brain? How are they encoded into neural signals and quickly decoded within the neural system to generate the appropriate behaviors? These questions are only beginning to be addressed thanks in part to the advancement of new technologies that allow the structure of these sounds to be examined using quantitative methods and the sounds to be synthesized in an accurate manner outside the animal (6–8). In this article, we examine the state of our knowledge of these signals and how they are represented within the cerebral cortex, which likely constitutes the locus for their perception in the mammalian brain.

Rapid progress has been made in recent years towards elucidating some of the basic neural mechanisms that appear to play an important role in the coding and decoding of complex sounds, especially social calls (9–20). We focus here in particular on two species, namely the mustached bat, Pteronotus parnellii, and the rhesus monkey, Macaca mulatta, both of which have been studied intensively over the last decade (10–12, 20–29). New and interesting findings have also been made in the domestic cat, Felis domestica (16, 30–33), the house mouse, Mus musculus (34, 35), and the marmoset, Callithrix jacchus (36, 37), and these contribute to our general perspective on how the mammalian cortex manages complex sounds within the neural domain so as to make the most direct and efficient use of the information present in the acoustic domain. Each species may well have evolved different strategies that are most appropriate for its environment and sensory requirements. Nevertheless, many of the neural computational strategies are governed by commonalities in the physics of the environment and in the nature of complex sounds, as well as by developmental and evolutionary similarities among the neural structures involved. These commonalities likely extend to the adaptations for the use of sounds by humans in the form of speech and music (38, 39).

By examining the neural information processing strategies across two evolutionarily divergent species, the mustached bat and the rhesus monkey, we hope to illustrate the common principles of complex sound perception, which must also underlie the functional organization for processing or “management” of auditory information. We use the term “management” in its broadest sense to include the anatomical, neurophysiological, and computational strategies that must be exploited at multiple levels to arrive at a successful solution to the hypercomplex problem of complex sound perception in general and audiovocal communication in particular. It is no wonder that it has taken nature several million years to arrive at a (possibly) optimal solution for realizing the full potential of audiovocal communication in the form of speech and music. Several factors besides optimized auditory encoding and decoding have likely enabled the evolution of these uniquely human faculties. The end result is one of the most dramatic accomplishments of nature: it distinguishes humans from all other species and has played a very important role in our biological and cultural development. In the sections below, we examine the basic structure of all communication sounds, including speech sounds, and the common neural organization and mechanisms that may be present across species. We also address the significance of species-specific specializations, which are equally important to recognize when elucidating any common principles.

3. SPECIES-SPECIFIC COMMUNICATION CALLS IN MAMMALS

3.1. Acoustic features

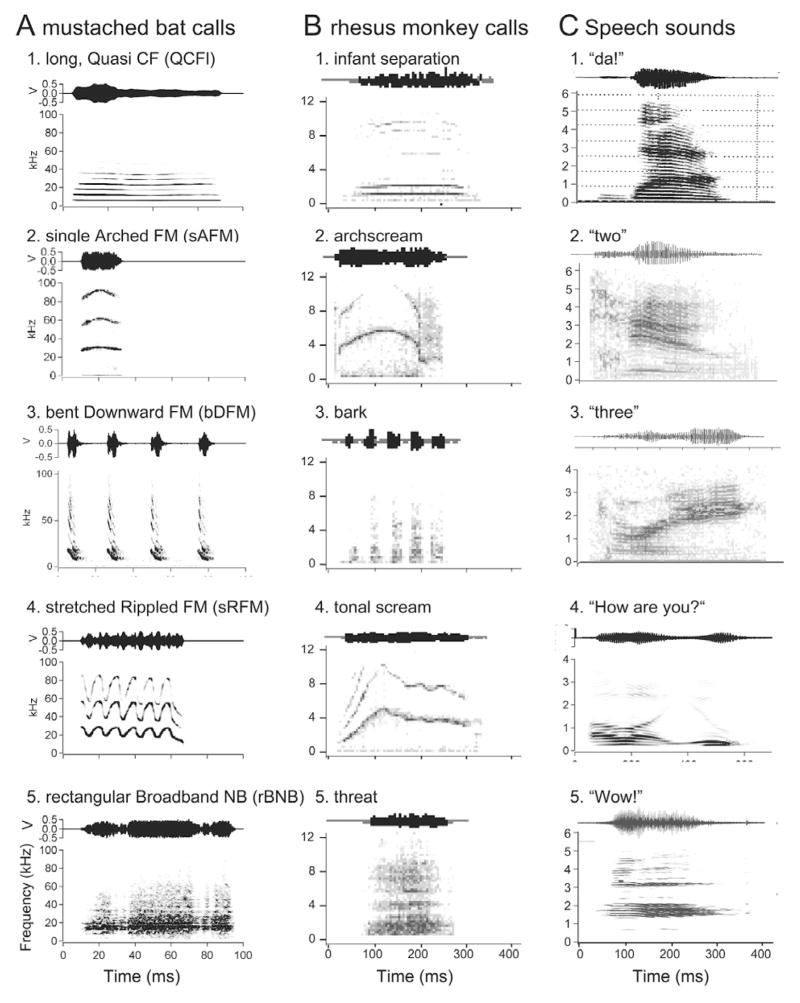

From an acoustic standpoint, all natural sounds, including communication calls, consist of three basic types of elemental sounds: constant-frequency (CF) sounds, frequency-modulated (FM) sounds, and noise bursts (NBs). Pure tones are rare in nature; accordingly, very few calls are strictly tonal, although many have a clear harmonic structure. Most complex calls consist of combinations of tones and/or FM sounds; the latter can be either broad- or narrow-band, whereas the former contain multiple or harmonic bands of predominant frequencies. Additional complexities may be present in both the spectral and temporal domains. Both animal and human sounds may contain varying levels of noisiness, making it difficult to identify distinct classes of calls. Also, sounds with two different fundamental frequencies may be emitted at the same time and modulated independently (40). In Figure 1, we illustrate the similarity in the spectrographic structure of “simple syllabic” call types in three different species of mammals. The simple syllables of bats are defined on the basis of statistically significant differences in their overall spectrographic patterns, such that a given pattern is not present in any other call type (40). The same basic patterns of acoustic structure are also present in other species, such as monkeys and cats, although the frequency scale is shifted down by nearly an order of magnitude and the meaning of each sound is obviously species-specific (41–44). Even in humans, the spectrograms of vocalized phonemes and short words look remarkably similar to the simple syllabic vocalizations of other mammalian species (45).
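To make the three elemental sound types concrete, the following Python sketch synthesizes a CF tone, a linear FM sweep, and a band-limited noise burst with NumPy. The sampling rate, all frequencies and durations, and the helper names (`cf_tone`, `linear_fm`, `noise_burst`) are illustrative assumptions rather than parameters taken from recorded calls, and the band-limiting is a simple FFT-masking approach, not a model of any real vocal apparatus.

```python
import numpy as np

FS = 250_000  # sampling rate in Hz (assumed; high enough for bat ultrasound)


def time_axis(duration, fs=FS):
    """Sample times in seconds for a sound of the given duration."""
    return np.arange(int(round(duration * fs))) / fs


def cf_tone(freq, duration, fs=FS):
    """CF element: a pure tone at a constant frequency (Hz)."""
    t = time_axis(duration, fs)
    return np.sin(2 * np.pi * freq * t)


def linear_fm(f_start, f_end, duration, fs=FS):
    """FM element: a linear sweep whose instantaneous frequency goes from f_start to f_end."""
    t = time_axis(duration, fs)
    rate = (f_end - f_start) / duration  # sweep rate in Hz/s (negative for a downward sweep)
    # The phase is the time integral of 2*pi*f(t), with f(t) = f_start + rate*t.
    return np.sin(2 * np.pi * (f_start * t + 0.5 * rate * t ** 2))


def noise_burst(f_low, f_high, duration, fs=FS, seed=0):
    """NB element: white noise band-limited to [f_low, f_high] by zeroing FFT bins."""
    rng = np.random.default_rng(seed)
    n = int(round(duration * fs))
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum[(freqs < f_low) | (freqs > f_high)] = 0.0
    burst = np.fft.irfft(spectrum, n=n)
    return burst / np.max(np.abs(burst))  # normalize peak amplitude to 1


# A hypothetical composite: a 20 ms CF element followed by a 5 ms downward FM element.
composite = np.concatenate([cf_tone(30_000, 0.020), linear_fm(30_000, 22_000, 0.005)])
```

Concatenating elements in time, as in the `composite` line, is only a crude stand-in for the temporal combination of syllables discussed in Section 3.2.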

Figure 1.

A. Amplitude envelopes (above) and spectrograms (below) of five examples of simple syllabic calls emitted by mustached bats (P. parnellii). B. Amplitude envelopes (above) and spectrograms (below) of calls emitted by rhesus monkeys. C. Speech sounds: the syllable /da/ and simple words.

3.2. Call structure and syntax

For social communication, two or more different categories of sounds may be combined in the time domain to generate “composites” that do not belong to any single class of elemental sounds (40). This raises the question of whether there are any “phonetic” rules for joining simple syllables to produce composites. The few available studies suggest that the answer is an emphatic “yes”. Rules for these types of combinations in the communication sounds of primates have been referred to as “phonetic-like” syntax (46) and in birdsong as syllabic syntax (47), although in the latter case short silent intervals are part of the syllable. Recordings of mustached bat calls show that only 11 of the 19 simple syllables are used to construct composites. For example, the bent upward FM (bUFM) component of a composite is never followed by an attached syllable whose fundamental is lower than the mean terminal frequency of the bUFM. Similarly, a fixed sinusoidal FM (fSFM) component in a composite typically precedes a short quasi CF (QCFs) or a QCFs-like component. In mustached bat calls, only the duration of a simple syllable (e.g., bUFM) appears to be significantly altered when that syllable is combined within a composite. The total duration of a composite is probably constrained by the lung volume and respiratory rate of the animal (48), and therefore the duration of one or more of its components may be significantly modified.
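The two combination rules described above for mustached bat composites can be written down as a small checker. This is a hypothetical sketch: the `Syllable` fields, the choice to compare the next syllable's onset fundamental with the bUFM's own terminal frequency (standing in for the species mean terminal frequency), and all frequency values are assumptions made for illustration. Because the fSFM rule is reported only as a tendency, it is flagged as atypical rather than as a violation.

```python
from dataclasses import dataclass


@dataclass
class Syllable:
    kind: str            # syllable label used in the text, e.g. "bUFM", "fSFM", "QCFs"
    f0_start_khz: float  # fundamental frequency at onset (hypothetical field)
    f0_end_khz: float    # fundamental frequency at offset (hypothetical field)


def check_composite(syllables):
    """Return (violations, atypical transitions) for a sequence of attached syllables."""
    violations, atypical = [], []
    for prev, nxt in zip(syllables, syllables[1:]):
        # Reported rule: a bUFM is never followed by a syllable whose fundamental lies
        # below the bUFM's terminal frequency (assumed here: the next syllable's onset f0).
        if prev.kind == "bUFM" and nxt.f0_start_khz < prev.f0_end_khz:
            violations.append((prev.kind, nxt.kind))
        # Reported tendency: an fSFM typically precedes a QCFs (or QCFs-like) component.
        if prev.kind == "fSFM" and nxt.kind != "QCFs":
            atypical.append((prev.kind, nxt.kind))
    return violations, atypical
```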

Simple syllabic calls may also be combined in different forms and contexts to produce a train or “stream” of sounds. Repetition of similar syllables during such vocalization bouts frequently indicates the urgency of a desired response. In mouse pups, wriggling (isolation) calls emitted at high repetition rates, and sometimes with increasing amplitudes, trigger a retrieval response from maternal females (49, 50). This type of sound sequencing is less common in animals than in human speech, except in the context of song, as in songbirds, bats, dolphins, and whales, or of chorus calls in some species of frogs and primates (51–54). It has been postulated, for bats and for some species of primates, that such multisyllabic combinations in the time domain follow syntactical rules much like those governing the production of a stream of speech sounds (40). Rules governing the phrasing of simple syllables and composites in a phrase, monologue, or dialogue relate to “lexical-like” syntax (46) and segmental syntax (55). These are less common, and more difficult to define, in bats because it is difficult to separate the sounds of different individuals in a large colony. The syntax for creating composites and “phrases” may be further constrained either by mechanisms of sound production or by other rules for constructing and perceiving the message (56). Such constraints cannot be identified without functional-anatomical and behavioral studies of sound communication in each species.

3.3. Call variability

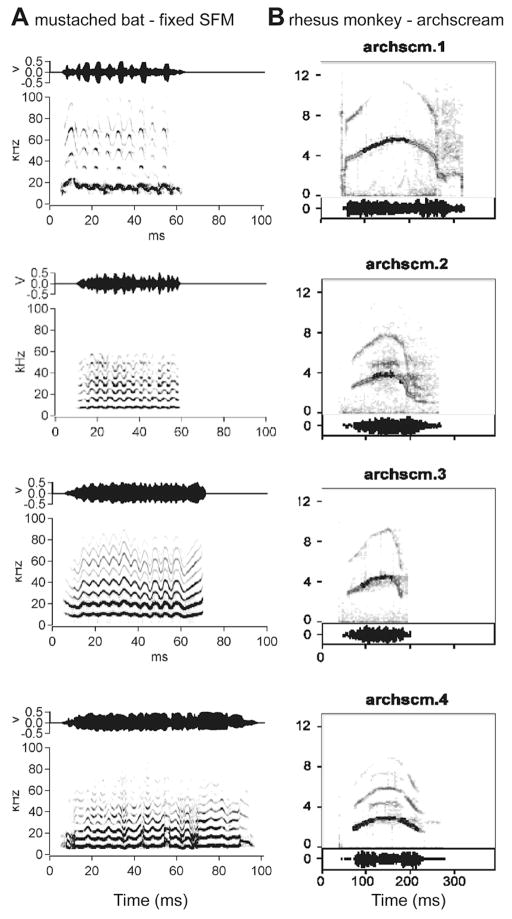

There is considerable variability among different examples of the same call type, as uttered by different individuals, in terms of both tempo and spectral position (see Figure 2). Males, females, and infants differ in their fundamental frequencies, or pitch. Yet acoustically different variants of the same call frequently elicit essentially the same type of behavioral reaction (49, 57). The neural system for processing and representing calls must therefore be capable of ignoring information that is irrelevant for establishing the identity of the sound and of forming a caller-invariant representation. Call components that vary from caller to caller may, in turn, be used to define caller identity. Understanding how such representations are formed will be crucial to understanding any form of communication system. Artificial systems for understanding speech and language still struggle enormously with this invariance problem, because variations of human speech sounds due to many different kinds of accents or physiological factors are commonplace. Learning how a successful biological system solves the invariance problem will be a highlight of research on call recognition.
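One simple way an engineered recognizer might discount pitch and tempo differences of the kind shown in Figure 2 is to compare frequency contours on a log-frequency axis after removing the mean and resampling to a fixed length. The sketch below is a hypothetical illustration of that normalization, assuming each call is summarized by a single frequency contour (e.g., its fundamental); the function names are invented for illustration, and the sketch makes no claim about how the auditory cortex solves the invariance problem.

```python
import numpy as np


def normalized_contour(freqs_hz, n_points=50):
    """Pitch- and tempo-normalized shape of a call's frequency contour.

    Pitch: a call shifted up or down by a constant factor differs only by an
    additive constant in log-frequency, so subtracting the mean log2 frequency
    removes it. Tempo: the contour is resampled onto a fixed number of points
    spanning normalized time 0..1, so calls of different durations align.
    """
    log_f = np.log2(np.asarray(freqs_hz, dtype=float))
    log_f = log_f - log_f.mean()
    old_t = np.linspace(0.0, 1.0, len(log_f))
    new_t = np.linspace(0.0, 1.0, n_points)
    return np.interp(new_t, old_t, log_f)


def contour_distance(a, b, n_points=50):
    """Largest difference (in octaves) between two normalized contours."""
    return float(np.max(np.abs(normalized_contour(a, n_points) - normalized_contour(b, n_points))))
```

With this representation, a higher-pitched, slower variant of a call lands close to the original, while a call with a different contour shape does not.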

Figure 2.

Spectrographic examples of communication calls showing several variants of a simple syllable, sinusoidal FM (sFM), emitted by mustached bats (A) and the archscream call emitted by rhesus monkeys (B).

4. THE AUDITORY BAUPLAN

The auditory system is one of the most complex sensory systems, with extensive interconnectivity between the two sides of the brain at multiple levels of processing. The crossing over of inputs from each side is considered to be important for sound localization at the level of the brainstem. These cross-over circuits yield clear maps of auditory space and underlie the computations for estimating the location of sound sources in 3-D space. The cortical level manages multiple parameters of complex sounds that lead to an enriched perception of calls. This is accomplished through excitatory and inhibitory postsynaptic potentials (EPSPs and IPSPs) that shape the spiking responses of neurons at all subcortical levels, starting with the cochlear and olivary nuclei and ascending through the lateral lemniscus and the inferior colliculus, as well as through loops of spiking activity within thalamocortical and corticothalamic circuits. Our concern in this article is mainly with what we know so far about the coding and representation of calls in the auditory cortex (AC) of mammals, especially of bats and primates.

4.1. Auditory cortex in primates

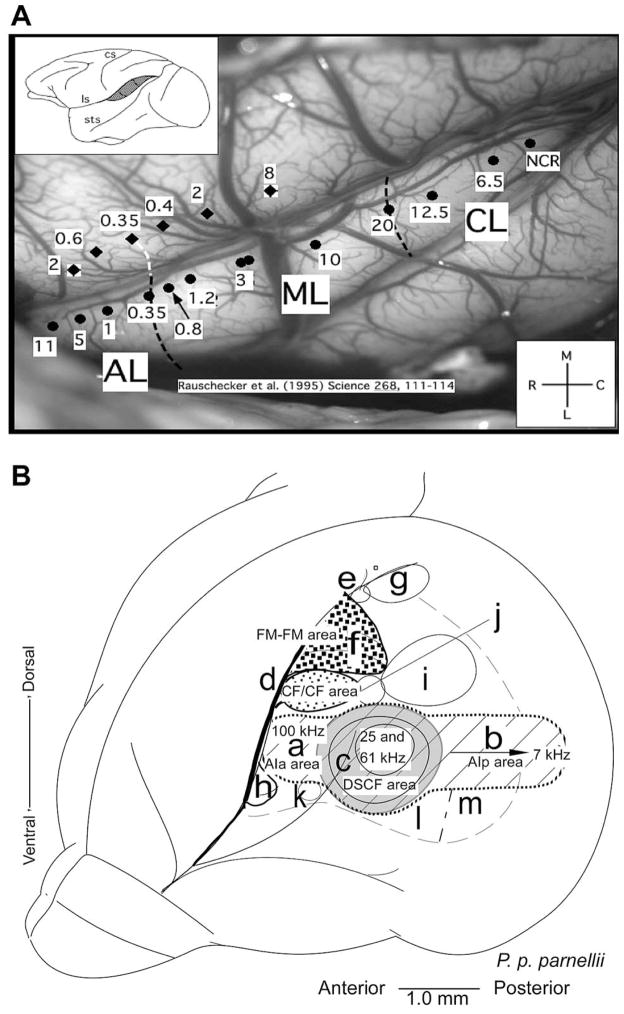

Auditory cortex in primates has traditionally been divided into three cytoarchitectonic fields: Brodmann areas 41, 42, and 22 (or TC, TB, and TA in the nomenclature of von Bonin and Bailey (58)). More recently, it has been shown that “primary” auditory cortex (area 41, or TC) can be subdivided into two or three “core” areas on the basis of both histochemistry and neurophysiological mapping (59) (see Figure 3A). Similarly, “secondary” auditory cortex, which surrounds the core both laterally and medially, is subdivided into at least six “belt” areas (26, 60, 61). Beyond the belt, there is a third level of processing in primate auditory cortex, termed the “parabelt”, whose organization is less well understood but which contains at least a rostral and a caudal subdivision (62). The rostral (or anterior) and caudal (or posterior) subdivisions of the belt and parabelt are thought to form the beginning, within cortex, of the functional processing streams that underlie the two major functions of hearing: identification of auditory patterns or objects (“what”) and spatial perception of sound, including motion in space (“where”) (11, 25, 26) (see Section 7 below).

Figure 3.

A. Organization of the AC in rhesus monkeys, showing the reversal of tonotopic mappings in the core, belt, and parabelt areas. B. Organization of the AC in mustached bats: lateral view of the bat’s brain showing the functionally defined subdivisions of the AC. The primary auditory cortex (AI) is shown cross-hatched. ‘a’ AI-anterior, ‘b’ AI-posterior, ‘c’ DSCF area (gray), ‘d’ CF/CF area (fine stippling), ‘e’ DIF area, ‘f’ FM-FM area (coarse stippling), ‘g’ DF area, ‘h’ VF area, ‘i’ DM area, ‘j’ TE area, ‘k’ H1-H2 area, ‘l’ VA area, ‘m’ VP area (adapted from Suga et al., 1987 (78)). Detailed representation of the FM-FM, CF/CF, DSCF, and AIp areas in the AC. The DSCF area occupies nearly 30% of the tonotopically organized primary AC and represents a small range of frequencies (60.6 to 62.3 kHz).

Evidence for multiple subdivisions of auditory cortex has been available in cats for some time (63, 64), although clues to their functional relevance have been harder to come by. The homologies between different fields in cats and monkeys, whether based on anatomy, physiology, or behavior, are also far from clear. Some authors have suggested that a reversal of cortical maps, and therefore of functions, between the rostral and caudal superior temporal (ST) region took place during evolution as a result of the disproportionate growth of the temporal lobe. This idea is supported by a reversal of the frequency gradients in primary auditory cortex between carnivores and primates (e.g., (27, 65)). If this theory is correct, anterior auditory areas in carnivores should be responsible for sound localization, and posterior areas should primarily manage communication calls and other auditory objects. Cryogenic deactivation of the posterior auditory field in cats, however, leads to deficits in sound localization behavior (66). This suggests that the anterior vs. posterior specializations may be maintained despite the reversal in frequency gradients. Equivalent tests of auditory pattern or object discrimination behavior in cats are still outstanding, but such studies in the dog (another carnivore) are consistent with the gradient-reversal idea (67).

4.2. Auditory cortex in bats

The auditory system and the auditory cortex (AC) of mustached bats are among the best studied in mammals. Most of the early studies on the auditory system of bats were conducted from the viewpoint of how the AC is functionally organized to manage echolocation information. These studies revealed that auditory information is processed in a parallel-hierarchical manner as it ascends along the lemniscal auditory pathways to the level of the AC (68). The AC is organized to represent and map different information-bearing elements (IBEs) and information-bearing parameters (IBPs). Highly specialized neurons within these areas compute different aspects of a moving target, such as its distance, velocity, size, and wing-beat frequency, via organized representations of pulse-echo delay (FM-FM and DF areas), Doppler shift in echo frequency (CF/CF area), and subtended angle (DSCF area) (see Figure 3B). Later, we will consider how calls are represented over this specialized organization.
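The target parameters listed above follow from simple acoustics. The sketch below converts a pulse-echo delay to target range and an echo's Doppler shift to relative velocity, assuming a speed of sound of 343 m/s and a bat flying directly toward a stationary target; the function names and example values are illustrative assumptions (61 kHz is chosen only because it lies within the DSCF frequency range given in the Figure 3 caption).

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)


def echo_delay_to_range(delay_s, c=SPEED_OF_SOUND):
    """Target range (m) from pulse-echo delay: sound travels out and back, so R = c*delay/2."""
    return c * delay_s / 2.0


def doppler_to_velocity(f_emitted_hz, f_echo_hz, c=SPEED_OF_SOUND):
    """Closing velocity (m/s) from an echo's Doppler shift.

    For a bat flying at speed v toward a stationary target, the two-way shift is
    f_echo = f_emitted * (c + v) / (c - v); solving for v gives
    v = c * (f_echo - f_emitted) / (f_echo + f_emitted).
    Positive v means the bat and target are approaching.
    """
    return c * (f_echo_hz - f_emitted_hz) / (f_echo_hz + f_emitted_hz)
```

For instance, a 2 ms delay corresponds to a range of about 0.34 m, and an echo returning at 61.5 kHz for a 61 kHz emission implies a closing speed of about 1.4 m/s.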

The FM-FM area has traditionally been considered part of the primary AC, or core area, because the frequencies represented there are absent from the primary tonotopic axis. However, recent comparative analyses using cytoarchitecture, cytochrome oxidase staining, and neuronal tract-tracing experiments in Rhinolophus suggest that this part of the auditory cortex in mustached bats is best regarded as a belt area, because it does not receive direct inputs from the main thalamic relay nucleus, the ventral division of the medial geniculate body (vMGB) (69–71). By this reckoning, the FM-FM area would be homologous to the dorsal field, and the DF area, which also processes FM combinations, would be included in the parietal cortex. The parabelt region likely corresponds to the ventral field, where call responses have not been well studied. In bats, as in other mammals, the auditory thalamus is divisible into ventral, dorsal, and medial divisions. An additional nucleus, the suprageniculate nucleus, relays auditory information directly to an auditory field in the frontal cortex of bats (72).

Overall, the belt areas are harder to delineate on the basis of pure-tone frequency representation alone, although tonotopic mapping has been used to define the ultrasonic field in the mouse (73), which represents a specific range of frequencies. More commonly, however, neurons in the higher-order fields of the AC are hard to drive with pure tones, to which they habituate rapidly if they respond at all. In primates, these areas respond largely or only to complex sounds, such as narrow-band noises, harmonic complexes, or frequency- and/or amplitude-modulated tones and noises (61, 74–77). Cochleotopic mapping based on the center frequencies of bandpass-filtered stimuli, however, can still be discerned (61). In bats, neurons in the proposed core and belt areas seem to be multifunctional in that they respond with comparable peak response magnitudes to both echolocation sounds and communication calls (22).

5. NEURAL MECHANISMS FOR CALL PROCESSING

5.1. Receptive fields and neural coding

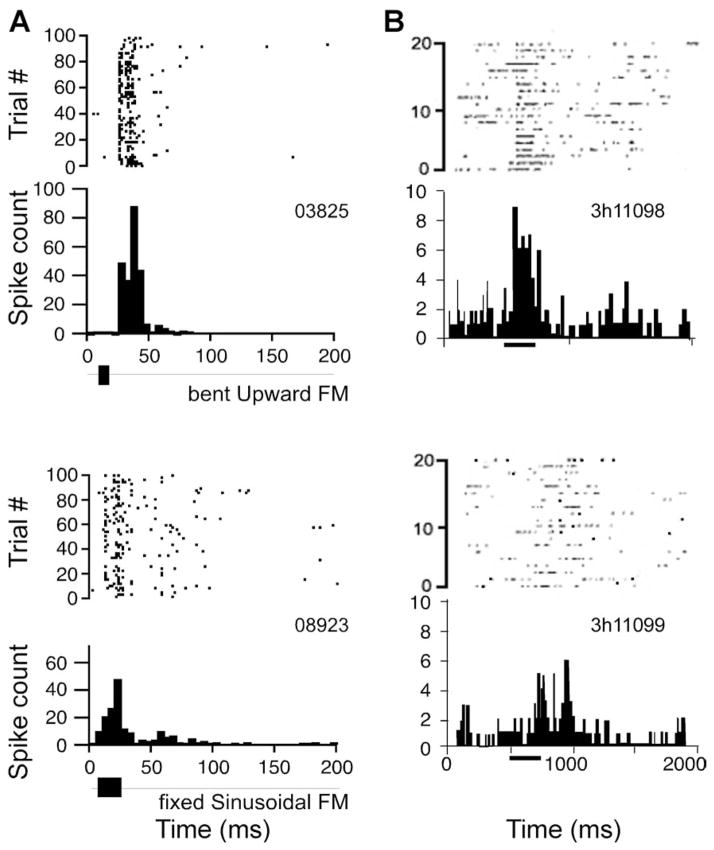

The receptive fields of auditory neurons can be defined in one or more of many different dimensions, ranging from frequency to amplitude, pitch, and the space around the head. Receptive fields can also be defined on the basis of either excitation and facilitation, or inhibition, of a neuron's spontaneous activity. Tone-based receptive fields can be considered simply as mappings and re-mappings resulting from the convergence and divergence of inputs from different regions of the cochlea. These cochleotopic receptive fields (or response areas) differ from binaural receptive fields, which result from interactions between the inputs from the two ears and are therefore considered computational by design. Other response properties, such as periodicity pitch and selectivity for complex acoustic features, depend on specialized neural mechanisms (see below) that extract spectro-temporal information from complex sounds and encode it in the pattern and timing of spikes. All of these “managerial skills” of neurons and neural networks are manifested in the final representation of complex sounds within the AC of bats and primates, which translates into robust responses of single neurons to communication sounds (see Figure 4). In the context of communication, it is therefore important to understand these representational schemes and the neural mechanisms underlying call processing in order to arrive at a complete understanding of how animals and humans perceive complex sounds that are used for social interactions and the exchange of information, e.g., via speech and language in humans. Below we briefly discuss some of these neural mechanisms and representational schemes in order to understand how the auditory system manages to extract relevant information from the auditory scene to support a robust, noise-tolerant system for audiovocal communication.
Since this field is still in its infancy and much work is needed to validate or discard the many hypotheses circulating in the scientific literature, we do not always provide conclusive answers for the questions we raise. Nevertheless, rapid advances have been made in recent years in a few species of primates and bats and we can at least now formulate the problem in the form of testable hypotheses. We discuss some of these advances in a generalized framework and briefly describe what we have learnt thus far about the neural mechanisms for the cortical management of communication calls.

Figure 4.

Dot raster and peristimulus time histogram (PSTH) plots showing neural responses to whole calls in A1 of mustached bats (A) and rhesus monkeys (B). Response patterns in both species are generally similar and show a phasic response onset with a clear peak firing rate that lasts for a few ms. Response duration is an order of magnitude longer in primates than in bats. Bin width = 5 ms.
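The PSTHs in Figures 4 and 5 summarize dot rasters by counting spikes in fixed time bins (5 ms here) across repeated stimulus presentations and converting the counts to a firing rate. A minimal NumPy sketch of that computation is shown below, assuming spike times are already aligned to stimulus onset; the function name and the example spike times are hypothetical.

```python
import numpy as np


def psth(trials, start, stop, bin_width=0.005):
    """Peri-stimulus time histogram averaged over trials.

    trials: list of 1-D arrays of spike times (s) relative to stimulus onset,
            i.e. one row of a dot raster per trial.
    Returns bin left edges (s) and mean firing rate per bin (spikes/s).
    """
    n_bins = int(round((stop - start) / bin_width))
    edges = start + bin_width * np.arange(n_bins + 1)
    counts = np.zeros(n_bins)
    for spikes in trials:
        counts += np.histogram(spikes, bins=edges)[0]
    # Divide by the number of trials and the bin width to get spikes per second.
    rate = counts / (len(trials) * bin_width)
    return edges[:-1], rate
```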

5.2. Integrative mechanisms: combination-sensitivity

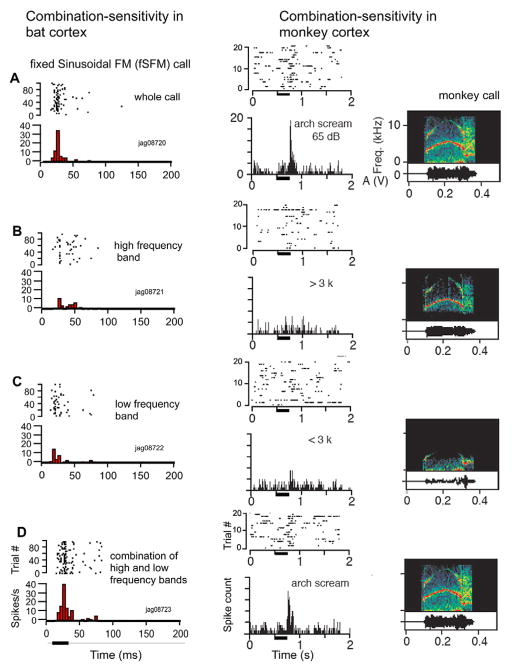

One of the most significant and astonishing similarities between bats and monkeys identified so far is the neuronal mechanism by which selectivity for complex sounds, including calls, is reached. Both species make use of nonlinear integration mechanisms, which have been termed “combination-sensitivity” in bats (78–83). Many calls can be split into two or more spectral and/or temporal components. Application of just one component often leads to no (or a relatively weak) response; combination of the components in the spectral and/or temporal domains leads to a nonlinear enhancement of the response so that it is greater than the sum of the responses to each component alone (Figure 5A). This phenomenon was first discovered for the processing of echolocation sounds in bats (84), but holds equally well for calls in both bats and rhesus monkeys (26, 79) (see Figure 5B). In fact, combination-sensitivity has been found in the auditory system of other vertebrates that are avid users of auditory communication signals, such as songbirds and frogs (86, 87). Combination-sensitivity may thus constitute a truly universal mechanism for generating neurons specialized for the decoding of complex auditory patterns, and possibly for complex patterns in most other sensory modalities as well.
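The defining test for combination-sensitivity described above, a combined response greater than the sum of the responses to each component alone, can be expressed as a ratio. In this hypothetical sketch, responses are firing rates (or spike counts) averaged over trials, spontaneous activity is subtracted before the comparison, and a ratio above 1 is read as facilitation; these choices and the function names are assumptions, and actual studies also test such differences statistically across trials, which this point estimate does not do.

```python
def combination_ratio(r_combined, r_components, spontaneous=0.0):
    """Driven response to the combined stimulus divided by the summed driven component responses.

    Spontaneous activity is subtracted and negative driven rates are clipped
    to zero (an assumption of this sketch).
    """
    combined = max(r_combined - spontaneous, 0.0)
    summed = sum(max(r - spontaneous, 0.0) for r in r_components)
    if summed == 0.0:
        # Components alone evoke nothing: any driven combined response is facilitation.
        return float("inf") if combined > 0.0 else 0.0
    return combined / summed


def is_facilitated(r_combined, r_components, spontaneous=0.0):
    """True if the combined response exceeds the linear sum of the component responses."""
    return combination_ratio(r_combined, r_components, spontaneous) > 1.0
```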

Figure 5.

Dot rasters (above) and PSTHs (below) demonstrating combination-sensitivity in the firing rate of single neurons when spectrographic components of a call are presented alone and in combination: (A) a neuron in the DSCF area of the mustached bat and (B) a neuron in the belt region of the rhesus monkey AC. Bin width = 5 ms.

One difference between bats and monkeys is that combination-sensitive neurons are found at earlier levels of the auditory pathway in bats than in monkeys. For instance, in bats combination-sensitive neurons are abundant in the primary auditory cortex (A1) and are already present at the level of the inferior colliculus (89), whereas in rhesus monkeys such neurons do not become prevalent until the level of the lateral belt (85). It has been suggested that combination-sensitivity in subcortical structures of bats could be mediated by back-projections (e.g., via corticothalamic loops) (88). In a similar vein, part of the combination-sensitivity observed within A1 of bats could be mediated by strong cortico-cortical feedback to A1 (85). In addition, the sound-processing hierarchy may comprise many more levels in the primate cortex and be more condensed in the much smaller brain of bats, leading to specialization at an earlier stage of auditory processing in bats.

5.3. Parallel-hierarchical processing

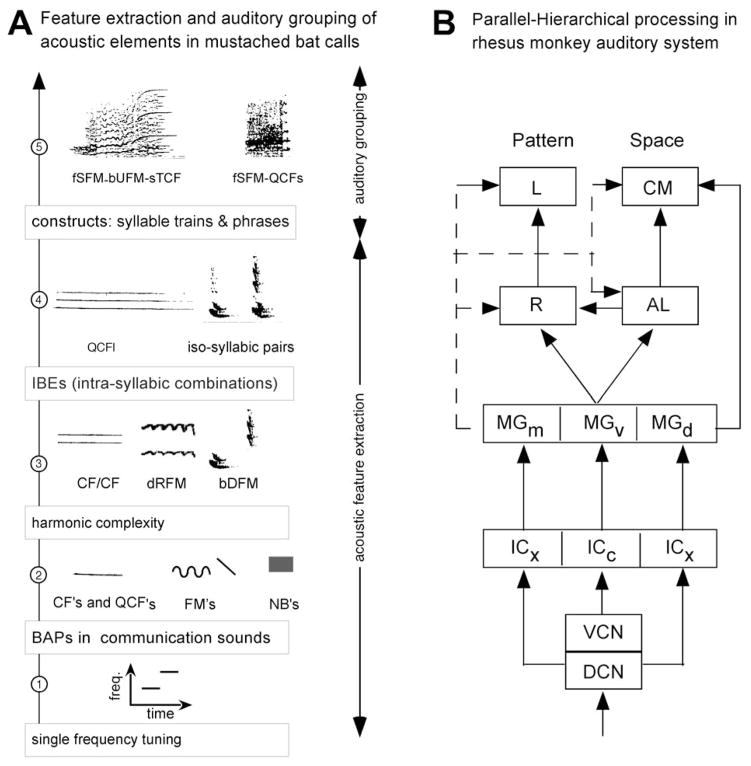

The concept of parallel-hierarchical processing has been very influential in research on sensory processing in bats and primates. In bats, this concept was reinforced by a top-down analysis of the processing of pulse and echo information for target tracking and capture via echolocation (78). The same type of parallel-hierarchical processing described for computing pulse-echo parameters is hypothesized to apply to the computation and representation of social calls (see Figure 6A). More detailed research on call processing at subcortical levels of the bat’s auditory system is needed, however, to validate this hypothesis. Along these lines, separate pathways may be present for processing FMs, sound duration, and/or spatial location and motion within complex sounds such as communication calls. Evidence for parallel-hierarchical processing may also come from examining the cortical representation of sound stimuli that vary in complexity. This is discussed in more detail in Section 6.

Figure 6.

A. Hypothetical scheme showing the steps for parallel-hierarchical processing of communication calls along a subcortical “what” stream in mustached bats. The scheme is based on what we know about processing of echolocation signals in the mustached bat’s auditory system (68). B. Flow-chart showing the parallel-hierarchical processing of auditory information and the origin of the “what” and “where” streams in the brain of rhesus monkeys. DCN, dorsal cochlear nucleus; VCN, ventral cochlear nucleus; ICx, external nucleus of the inferior colliculus; ICc, central nucleus of the inferior colliculus; MGm, MGv, MGd, medial, ventral, and dorsal divisions, respectively, of the medial geniculate body; bDFM, bent downward FM; bUFM, bent upward FM; dRFM, descending rippled FM; fSFM, fixed sinusoidal FM; QCFl, long quasi CF; QCFs, short quasi CF.

In primates, the subcortical processing of call parameters has not been analyzed extensively. Here, the concept of parallel-hierarchical processing emerged from studies of the visual cortex, thus bypassing the classical lemniscal pathways in the brainstem. In the primate auditory system, therefore, parallel-hierarchical processing refers mainly to the organization of the AC into core, belt, and parabelt areas (together with their thalamic inputs), where neurons show increasing levels of specialization (see Figure 6B). This concept has been further extended to processing at higher cortical levels, up to the frontal cortex, with the proposal of the “what” and “where” processing streams. These cortico-cortical processing streams are discussed further in Section 7.

6. REPRESENTING ACOUSTIC INFORMATION IN CALLS

6.1. Tones versus basic acoustic patterns

Tones ranging from very short durations (< 10 ms) to long durations of several hundred milliseconds are frequently present as integral components of most complex sounds. Even FMs are sometimes modeled as a series of short-duration tones stepped up or down in frequency. According to this model, a neural response to an FM is triggered largely by a single frequency present within the FM sweep. This frequency is sometimes referred to as a “trigger frequency” and may correspond to the neuron's best excitatory frequency (33). Accordingly, the neural representation of complex sounds can be equated to the coordinated firing of a population of neurons, each of which is tuned to a single best frequency or a relatively small range of best frequencies. Alternatively, neurons may be tuned to the FM sweeps themselves and not respond to any single frequency within the sweep presented in isolation. This model is supported by the finding of specialized neurons in the FM-FM area of the auditory cortex of mustached bats (15, 83). These neurons respond well only to combinations of a pair of downward-sweeping FMs matching the FM components of the bat’s echolocation pulse and its echo. Whether calls are encoded by the coordinated firing of a population of relatively unspecialized neurons, or by neurons specialized to respond to specific acoustic features within a call, such as FMs, is not completely clear and may vary with the level of specialization of neurons within a particular area and with the species in question.
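Under the trigger-frequency model, the timing of a response to an FM sweep is predictable from the sweep's parameters alone: the neuron fires when the instantaneous frequency reaches its trigger frequency. The sketch below computes that crossing time for linear and exponential sweeps; the function name, the omission of neural response latency, and the example values are assumptions. An FM-selective neuron of the kind found in the FM-FM area would not be described by this function, since it does not respond to any single frequency in isolation.

```python
import math


def trigger_time(f_start, f_end, duration, f_trigger, sweep="linear"):
    """Time (s) after sweep onset at which an FM sweep passes a neuron's trigger frequency.

    Neural latency is not modeled. Returns None if the sweep never passes
    through f_trigger.
    """
    lo, hi = min(f_start, f_end), max(f_start, f_end)
    if not lo <= f_trigger <= hi:
        return None
    if f_start == f_end:  # degenerate "sweep": a tone at the trigger frequency from onset
        return 0.0
    if sweep == "linear":  # f(t) = f_start + (f_end - f_start) * t / duration
        return duration * (f_trigger - f_start) / (f_end - f_start)
    if sweep == "exponential":  # f(t) = f_start * (f_end / f_start) ** (t / duration)
        return duration * math.log(f_trigger / f_start) / math.log(f_end / f_start)
    raise ValueError(f"unknown sweep type: {sweep}")
```

For a hypothetical 4 ms linear sweep from 30 to 24 kHz, a neuron with a 27 kHz trigger frequency would be driven 2 ms after sweep onset.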

Inner hair cells in the cochlea and auditory nerve fibers are tuned to single frequencies, which are mapped along a unidimensional gradient into a “tonotopic” map. After multiple levels of processing, are neurons within the AC also tuned only to single frequencies? In bats, cortical neurons in the CF/CF and DSCF areas are tuned to at least a pair of frequencies, and sometimes to multiple frequencies. In addition, most neurons are inhibited by a wide range of frequencies, and those in posterior A1 are tuned to multiple frequencies that are harmonically related (10, 24). In primates, tonotopic mapping holds true for the primary core areas. In both bats and primates, the tonotopic gradient runs along the antero-posterior (or rostro-caudal) axis. To evaluate single-parameter representations, such as frequency tuning, as a means of encoding communication calls, it is important to know how much the frequency content of different species-specific communication calls overlaps. In most calls of any species this overlap is quite large, making it difficult to imagine how a solely frequency-based representation of calls would generate an optimal code.
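The degree to which different calls share frequencies can be quantified in many ways. As one illustrative choice (an assumption, not a measure used in the studies cited here), the sketch below computes the histogram intersection of two Hann-windowed, normalized power spectra: values near 1 mean that a purely frequency-based code would struggle to tell the two sounds apart, and values near 0 mean their spectra barely overlap. The function names are hypothetical.

```python
import numpy as np


def normalized_power_spectrum(x, n_fft):
    """Hann-windowed power spectrum scaled to sum to 1."""
    power = np.abs(np.fft.rfft(x * np.hanning(len(x)), n=n_fft)) ** 2
    return power / power.sum()


def spectral_overlap(x, y):
    """Overlap of two sounds' normalized power spectra: 0 = disjoint, 1 = identical.

    Both signals must share a sampling rate; the shorter one is zero-padded so
    that the frequency bins line up.
    """
    n_fft = max(len(x), len(y))
    return float(np.minimum(normalized_power_spectrum(x, n_fft),
                            normalized_power_spectrum(y, n_fft)).sum())
```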

Basic acoustic patterns (BAPs) represent the first step up in complexity from single tones or pairs of tones. In an acoustic pattern, the frequency typically changes with time. Examples of BAPs present within calls include linear and exponential FM sweeps, sinusoidal frequency modulations (SFMs), and different types of noise bursts (NBs). NBs can vary in bandwidth and can have a rich acoustic structure, with one or more bands of predominant frequencies. These types of acoustic patterns could constitute the “basis functions” for representing calls, just as polar, hyperbolic, and Cartesian gratings have been postulated for the macaque visual cortex (90). Acoustic patterns are more difficult to formulate mathematically than visual stimuli, but some progress has been made in this direction by using independent component analysis (91). Data on any topographic mapping of these parameters in the AC are virtually nonexistent, except for FMs (see next section). Neurons in the inferior colliculus of bats have also been shown to respond selectively to, and be tuned to, SFMs (92). A systematic analysis of tuning and response selectivity to different call types, however, is lacking. Such data would be useful in designing the most relevant set of parameters to study in any one particular species.
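The BAPs listed above can be generated from their instantaneous-frequency functions. The sketch below is one possible construction, assuming a fixed sampling rate, phase accumulated by a simple running sum, and band-limited noise approximated by random-phase sinusoids; all function names and parameter values are illustrative and are not stimulus specifications from the cited work.

```python
import math
import random


def linear_fm(f0, f1, dur):
    """Instantaneous frequency (Hz) of a linear sweep from f0 to f1 over dur seconds."""
    return lambda t: f0 + (f1 - f0) * t / dur


def exponential_fm(f0, f1, dur):
    """Exponential sweep: frequency changes by a constant ratio per unit time."""
    return lambda t: f0 * (f1 / f0) ** (t / dur)


def sinusoidal_fm(fc, depth, rate):
    """SFM: carrier fc modulated by +/- depth Hz at a modulation rate of rate Hz."""
    return lambda t: fc + depth * math.sin(2 * math.pi * rate * t)


def synthesize(inst_freq, dur, fs):
    """Samples of sin(phase), with phase accumulated from the instantaneous frequency."""
    n = int(round(dur * fs))
    phase, samples = 0.0, []
    for i in range(n):
        samples.append(math.sin(phase))
        phase += 2 * math.pi * inst_freq(i / fs) / fs
    return samples


def noise_burst(f_low, f_high, dur, fs, n_components=50, seed=0):
    """Band-limited noise approximated by random-frequency, random-phase sinusoids."""
    rng = random.Random(seed)
    comps = [(rng.uniform(f_low, f_high), rng.uniform(0, 2 * math.pi))
             for _ in range(n_components)]
    n = int(round(dur * fs))
    return [sum(math.sin(2 * math.pi * f * i / fs + p) for f, p in comps) / n_components
            for i in range(n)]


fs = 250_000  # hypothetical sampling rate (Hz), high enough for bat ultrasound
down_fm = synthesize(linear_fm(30_000, 20_000, 0.005), 0.005, fs)
```

Defining each pattern by its instantaneous frequency keeps the parameters that might be mapped in the AC (start and end frequency, sweep rate, modulation depth and rate, bandwidth) explicit and easy to vary systematically.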

At present, it remains unclear whether BAPs actually represent the important IBEs within calls. If so, do they represent invariant features that a neuron and/or the organism can use as cues to identify different call types? The only finding potentially relevant to this question in monkey auditory cortex is the gradient for preferred bandwidth in the lateral belt of rhesus monkeys, which runs in a medio-lateral direction and is thus orthogonal to the frequency gradient in these areas (93). Although neurons in the auditory belt cortex of monkeys no longer respond well to tones, a gradient of best center frequency, or “cochleotopic” gradient, is still evident. However, complex spectral tuning with multiple peaks in the frequency-tuning curve becomes more common in the belt areas (94). In parabelt and beyond, most neurons are expected to be no longer tuned to a single frequency and, accordingly, to require complex spectral patterns to respond with a significant increase in firing rate. This is analogous to the situation in the visual cortex, where receptive fields become increasingly specialized and responsive to complex patterns with increasing distance from primary visual cortex.

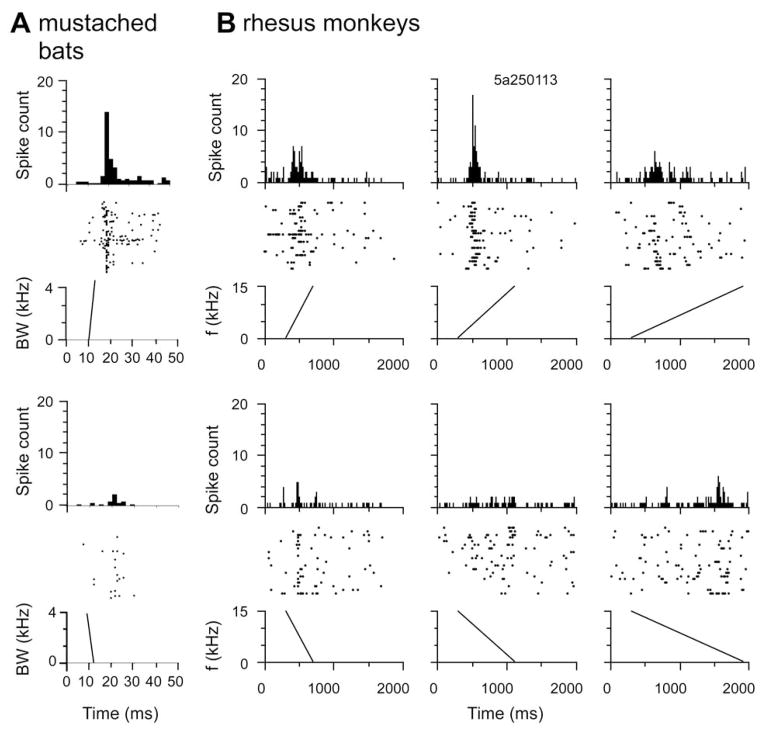

6.2. Frequency modulated sweeps

FMs are ubiquitous in natural sounds and especially in the communication calls emitted by bats and primates. FM transitions also underlie the distinction between different types of phonemes in speech sounds. Thus, /da/ has both an upward and a downward FM, or formant transition, at sound onset, followed by the appropriate vowel sound (see Figure 1C.1.), whereas /ba/ has two upward-sweeping FM transitions in the formant (dominant) frequencies. Analogous to the visual cortex, where neurons usually respond better to moving than to stationary stimuli, neurons in auditory cortex often respond significantly more strongly to tones moving in the spectral dimension across their best frequency, i.e., FM sweeps. Studies on echolocation in bats first yielded a clear map of the representation of combinations of FMs corresponding to the first harmonic in the pulse and the second, third, or fourth harmonic in the echo. These maps were restricted to the FM-FM and DF areas in the postulated belt regions of the AC. Recent studies in the DSCF area of the mustached bat's A1 are also revealing a rich representation of FMs, with neurons tuned to the direction and slope of FMs (see Figure 7A) (95). Neurons in both core and belt areas of cats and monkeys are selective for the direction and rate of FM sweeps (16, 27, 28) (see Figure 7B). A mapping of FM direction or other FM parameters, however, remains to be elucidated.
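Direction and rate preferences of the kind described above are often summarized with simple indices. The following is a minimal sketch, assuming that spike counts or mean firing rates have already been measured for upward and downward sweeps and for several sweep rates; the normalized contrast used here is one common convention, and the cited studies may define their indices differently. All values are hypothetical.

```python
def direction_selectivity_index(rate_up, rate_down):
    """Normalized contrast (R_up - R_down) / (R_up + R_down).

    +1: responds only to upward sweeps; -1: only to downward; 0: no preference.
    """
    total = rate_up + rate_down
    if total == 0:
        return 0.0
    return (rate_up - rate_down) / total


def best_fm_rate(responses):
    """Sweep rate (e.g., kHz/ms) that evoked the largest response.

    responses maps sweep rate -> firing rate (spikes/s).
    """
    return max(responses, key=responses.get)


# Hypothetical neuron preferring downward sweeps at an intermediate rate.
dsi = direction_selectivity_index(rate_up=10.0, rate_down=30.0)
preferred = best_fm_rate({0.5: 8.0, 1.0: 22.0, 2.0: 15.0, 4.0: 6.0})
print(dsi, preferred)  # -0.5 1.0
```

Because the index is normalized by the summed response, neurons with very different overall firing rates can be compared directly, which is what a map of FM direction across cortex would require.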

Figure 7.

Neural responses (PSTHs above and dot rasters below) showing preference for upward versus downward frequency modulated sweeps in (A) the DSCF area in mustached bats (95), and (B) the rhesus monkey auditory cortex. Responses in the rhesus monkey also show a tuning to the slope of the upward FM. Bin width = 5 ms.

These findings are significant for two reasons. First, they show that FM sweeps are universal elements of communication calls across species and are therefore prominent among the preferred stimuli of neurons in the AC. Second, FM sweeps, as auditory equivalents of moving light stimuli, suggest that one of the building blocks of cortical columns may be a module that detects change across the sensory receptor surface in a particular direction. While visual areas show a distinct columnar organization with regard to orientation and direction selectivity, no such organization for FM-sweep direction has yet been found in the auditory cortex of cats or primates. Of course, this does not mean that such an organization does not exist. It could, however, point to other forms of cortical order, reflecting the fact that the basilar membrane is essentially one-dimensional, whereas the retina is two-dimensional. A recent study in rat A1 showed that direction selectivity is topographically ordered in parallel with characteristic or best frequency (BF), such that low-BF neurons preferred upward sweeps, whereas high-BF neurons preferred downward sweeps (96).

6.3. Acoustic features within calls

It is possible that complex acoustic features, rather than single tones, pairs of tones, or any of the BAPs, represent the “minimal excitatory elements” that play an important role in the identification of a call type. In that case, important questions to address are: (i) Are any call parameters topographically organized within the AC? (ii) How many patterns are there, and how much do they overlap between call types? (iii) Do they constitute the presumptive IBEs? If not, which acoustic features are invariant among samples of the same call type?

A dissection of call types according to the excitatory tuning of neurons in the AC of bats has yielded interesting insights into the specialization of neurons for call processing. Recent studies in bats suggest that, at the level of the AC, neurons are dually specialized to represent both pulse-echo pairs and calls (20, 22, 79). Furthermore, temporal parameters of both calls and echoes are represented within the FM-FM area, whereas combinations of spectral parameters are represented in the CF/CF and DSCF areas (20, 22). Neurons in the FM-FM area compute the silent intervals between syllables, and those in the DSCF area compute the direction and possibly the slope of FMs within social calls (20, 95). Neurons with multi-peaked frequency tuning in the AI-p area do not appear to be selective for FM direction but are sensitive to the harmonic complexity of calls (21). Thus, combinations of temporal and spectral features within calls appear to be extracted and represented in different cortical areas (24).

Recognition of a call type is postulated to be accomplished on the basis of a “consensus,” which may be reflected in the near-synchronous or correlated firing of a small population of neurons scattered across different cortical areas (24). Such coordinated firing of groups of neurons in different cortical areas could convey information about a call type. In summary, studies in both monkeys and bats have shown that components of a call, when presented together, can trigger facilitatory interactions that lead to a nonlinear enhancement of a neuron's firing rate. At the level of the AC, information about call type may therefore be encoded in the firing rates of a small population of neurons distributed across the AC (24).
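The two ideas in this paragraph, nonlinear facilitation by call components and a “consensus” expressed as near-synchronous firing, can each be reduced to a simple measure. The sketch below assumes that responses are spike counts to component A, component B, and the A+B combination, and that spike trains are lists of spike times in milliseconds; the facilitation index and the coincidence window are illustrative choices, not the specific measures used in the cited studies.

```python
def facilitation_index(r_combined, r_a, r_b):
    """Relative gain of the response to A+B over the linear sum of responses to A and B.

    > 0: facilitation (supralinear summation, as in combination-sensitive neurons);
    0: linear summation; < 0: sublinear summation or suppression.
    """
    linear_sum = r_a + r_b
    if linear_sum == 0:
        # Any response to the combination alone counts as pure facilitation.
        return float("inf") if r_combined > 0 else 0.0
    return (r_combined - linear_sum) / linear_sum


def coincident_spikes(train_a, train_b, window_ms):
    """Count spikes in train_a that have at least one spike in train_b within +/- window_ms."""
    return sum(1 for t in train_a if any(abs(t - s) <= window_ms for s in train_b))


# Hypothetical combination-sensitive neuron: weak responses to each component alone.
print(facilitation_index(r_combined=24, r_a=3, r_b=5))  # 2.0
# Hypothetical spike times (ms) from two neurons in different cortical areas.
print(coincident_spikes([12.0, 20.0, 31.0], [11.5, 26.0, 30.2], window_ms=1.0))  # 2
```

In practice, coincidence counts would need to be compared with those expected by chance (for example, from shuffled trials) before being read as evidence of a population consensus.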

Although single-neuron activity in several different areas of the mustached bat's AC has been shown to be facilitated by call “bits” (see Figure 5), little is known about how different call bits or feature dimensions are organized tangentially across the surface of either a bat's or a monkey's AC. The best way to visualize any order in the organization of call parameters may be optical recording, which allows large numbers of neurons to be monitored simultaneously. Unfortunately, this technique has so far proven difficult to apply to call processing in the auditory cortex of an awake or lightly anesthetized animal. Tones and FM stimuli, however, have been optically mapped in the A1 of the guinea pig, suggesting that these stimuli are represented in a spatio- or spectro-temporal manner across A1 (97).

6.4. Perceptual invariance of call types

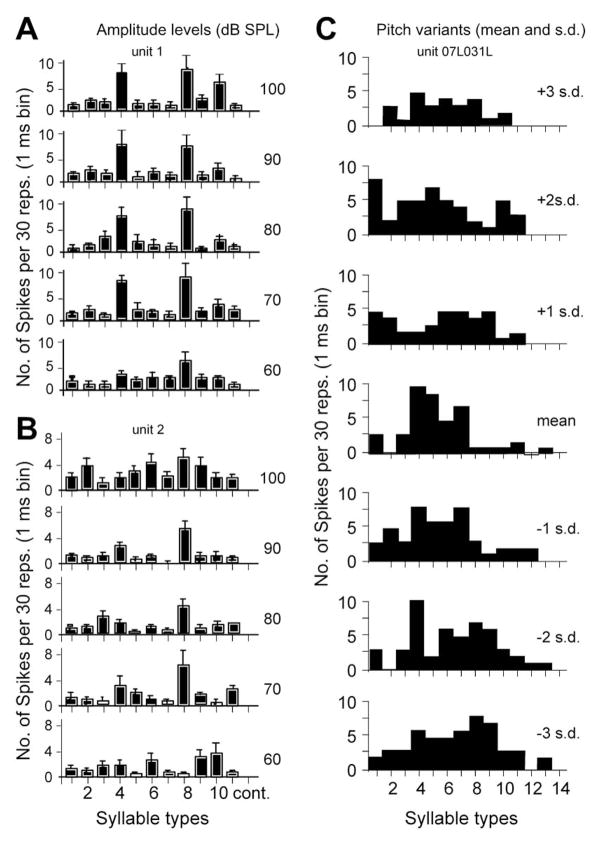

Neural “mappings” for the representation of pitch, amplitude, and bandwidth have been identified in the temporal lobe of humans and the AC of cats and marmosets (98, 99). However, the role of these mappings in generating perceptual invariance of call types is unclear. At present, these mappings are thought to contribute to a spatio-temporal, and possibly dynamic, representation of complex sounds across a large population of neurons. For a call type to be recognized as such and its meaning conveyed, neurons or networks of neurons must respond largely to one call and should continue to respond to that call irrespective of variations in its pitch, amplitude, and a few other parameters that may vary with the identity and mood of the emitter. Although the existence of such neurons is a distinct possibility, considering the computational models proposed for object recognition in the visual system (101), such invariant responses are not usually observed in the AC of bats and primates (see Figure 8).
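Whether a neuron's responses are invariant in this sense can be assessed by presenting systematically shifted variants of a call and comparing the responses. The sketch below assumes, for illustration only, variants whose fundamental frequency is shifted by whole multiples of the natural standard deviation (the Figure 8 caption describes shifts of ±1, ±2, and ±3 s.d.), and scores invariance with two generic measures, the min/max response ratio and the coefficient of variation. The syllable statistics and spike counts are hypothetical.

```python
import statistics


def f0_variants(mean_f0_hz, sd_hz, max_k=3):
    """Fundamental frequencies shifted by k s.d. for k = -max_k..max_k (k = 0 is the mean)."""
    return [mean_f0_hz + k * sd_hz for k in range(-max_k, max_k + 1)]


def invariance_ratio(responses):
    """Weakest response divided by strongest: 1.0 means fully invariant."""
    peak = max(responses)
    return min(responses) / peak if peak > 0 else 1.0


def coefficient_of_variation(responses):
    """Population s.d. divided by the mean: 0.0 means fully invariant."""
    mean = statistics.mean(responses)
    return statistics.pstdev(responses) / mean if mean > 0 else 0.0


variants = f0_variants(mean_f0_hz=8_000, sd_hz=400)  # hypothetical syllable statistics
invariant_cell = [20, 21, 19, 20, 20, 22, 19]         # hypothetical spike counts per variant
selective_cell = [2, 5, 14, 25, 11, 4, 1]
print(variants)  # [6800, 7200, 7600, 8000, 8400, 8800, 9200]
print(round(invariance_ratio(invariant_cell), 2), round(invariance_ratio(selective_cell), 2))  # 0.86 0.04
```

A neuron supporting perceptual invariance would score near 1.0 on the ratio and near 0.0 on the coefficient of variation for variants of its preferred call, while still responding weakly to other call types.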

Figure 8.

A and B. Bar graphs of the peak firing rate of two single neurons in the DSCF area of mustached bats, showing both invariance and variation of responses to different amplitudes of simple syllabic call types (1 to 14). C. Bar graphs of the responses of a cortical neuron to 14 syllables and their variants. Variants are produced by shifting the fundamental frequency and harmonic spectrum of the waveform by ±1, ±2, and ±3 standard deviations (s.d.) from the mean of the natural variation in call types. The neuron responds with different response magnitudes to several variants of the same syllable type. Neural response invariance possibly underlies the phenomenon of perceptual invariance, but appears to be absent in the AC. Each stimulus presentation was repeated 10 times. Bin width = 10 ms.

A search for cortical neurons that are highly specialized to respond to complex sounds, such as calls, is a neuroethologically valid approach. Neurons that respond exclusively to one or two whole calls in a level- and pitch-tolerant manner, however, are rare, if present at all, in the AC of bats (24); although such neurons may exist, they are difficult to find and have not yet been reported in any species. At higher levels of processing, such as the frontal cortex (FC), the presence of such neurons is more likely. There, neurons responding selectively to whole calls have been reported in both bats and primates, but their responses to BAPs and acoustic features have not been extensively studied (100, 101). Recordings from the frontal auditory field (FAF) in bats and from the ventrolateral prefrontal cortex (PFC) in monkeys show that responses of single neurons in these areas can last for a few hundred milliseconds and extend well beyond the duration of the stimulus (102). Also, FC neurons in both bats and primates are selective for a few call types but are not specialized to respond to only one call type. Studies of the neural basis of perceptual invariance of call types therefore represent uncharted territory and could lead to exciting discoveries about the neural mechanisms of object recognition and about how calls are represented in the AC and at other levels of the mammalian auditory system.

7. CORTICO-CORTICAL PROCESSING STREAMS

The visual system has long been thought to be organized hierarchically with increasingly complex feature dimensions emerging at successively higher processing levels. At the same time, it has also become clear that the visual system consists of several parallel channels, the most prominent of which are the magno (M)- and parvocellular (P) pathways, which originate at a level as early as the retinal ganglion cells (103). Dual pathways for the processing of form and motion/space at the cortical level were postulated independently (104) before it became clear that the P- and M-systems provide prominent input to these pathways.

Dual cortical pathways for the processing of patterns and space have recently also been proposed for the auditory system in primates (25, 26, 85) (see Figure 9A). While the evidence for such a division of labor at the cortical level has been accumulating, anatomical and physiological evidence for distinct input systems at subcortical levels, including the brainstem, is still lacking. The auditory equivalent of the retinal ganglion cell stage in vision would be the cochlear nuclei, so this is where one ought to start looking for auditory equivalents of M and P cells. Excellent progress has been made in defining cell types and their functional properties within the cochlear nucleus of cats and other species, but a neuroethological analysis at this level of the auditory system is lacking.

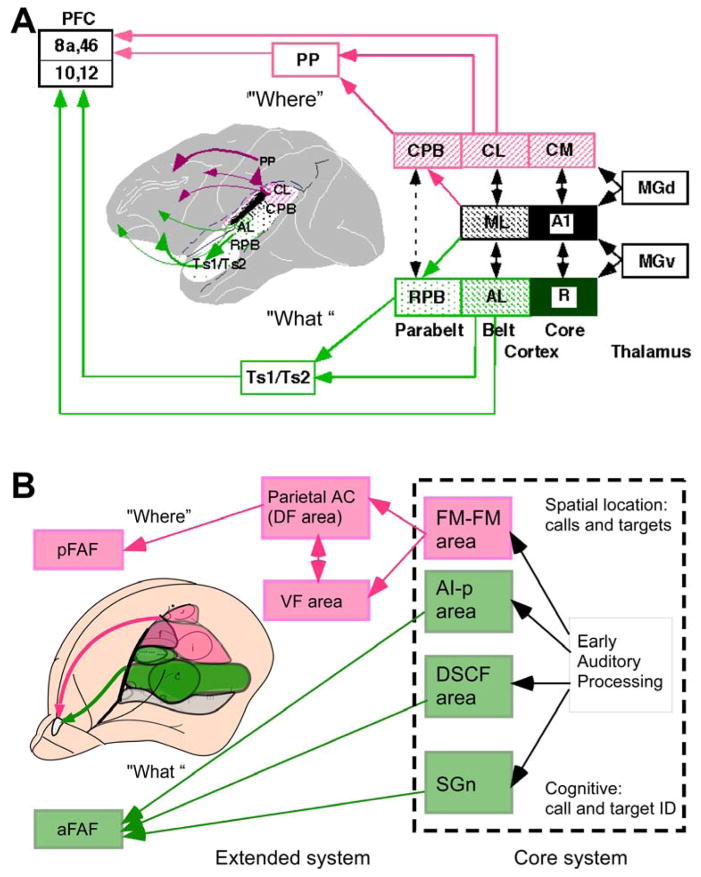

Figure 9.

Functional organization of the “what” (pink) and “where” (green) cortical processing streams in (A) primates and (B) mustached bats (hypothesized). The bat model is divided into a core system for auditory analysis of echoes and calls and an extended system for the processing of meaning gleaned from echoes and calls. aFAF and pFAF, anterior and posterior frontal auditory fields; MGd and MGv, dorsal and ventral medial geniculate nuclei; SGn, suprageniculate nucleus. Numbers refer to Brodmann areas.

The rostral and caudal subdivisions of the belt and parabelt regions of the AC are thought to form the starting points, within auditory cortex, of functional processing streams that underlie the two major functions of hearing: identification of auditory patterns or objects, and spatial perception of sound (including motion in space) (11, 25, 26). Evidence for this comes from single-unit recordings in rostral and caudal belt demonstrating increased selectivity (or “tuning”) for communication calls and for spatial position, respectively (29). In addition, rostral belt neurons have been shown to project to ventrolateral PFC, which is also involved in visual object working memory, whereas caudal belt projects to dorsolateral PFC, which is known to be involved in spatial working memory (105).

The role of caudal belt in spatial analysis has also been shown by work in awake behaving rhesus monkeys demonstrating a tighter coupling of neuronal activity in the caudomedial area (CM) with sound localization behavior (106). Furthermore, lesions of rostral and caudal portions of superior temporal (ST) cortex lead to deficits in frequency and space discrimination behavior, respectively (107). Similar organizational patterns are beginning to emerge in marmosets, with an anterolateral area seemingly involved in pitch analysis (99).

Even stronger evidence for dual cortical pathways comes from functional neuroimaging studies in humans, which have shown unequivocally a division of labor between rostral and caudal parts of ST cortex in terms of auditory processing of objects/patterns and space, respectively. Various recent studies have provided evidence for a role of rostral ST in identification of sound objects, including human voice and speech sounds (108–115). Conversely, several studies have shown that caudal STG and posterior parietal cortex are activated during processing of auditory space and motion (116–118), including a recent meta-analysis of 11 “spatial” and 27 “nonspatial” studies (119).

Evidence for cortico-cortical processing streams in bats, similar to those in primates, is beginning to emerge. A recent comparative analysis in Rhinolophus suggests that the FM-FM area in the auditory cortex of mustached bats is best regarded as a belt area and that the DF area possibly corresponds to a portion of the parietal cortex (69–71). The DM, FM-FM, and DF areas may thus belong to an auditory “where” stream (see Figure 9B). Nevertheless, a conceptual difference between bats and primates becomes evident: several bat studies support the possibility that different areas are multifunctional (12, 20, 79), whereas primate studies emphasize functional specialization. The concept of multifunctionality is based on the observation that single neurons in, e.g., the FM-FM area are selective for parameters important for ranging, a “where”-stream function; at the same time, neurons in this area may also be selective for temporal features within a call type (19, 20), a typical “what”-stream function. This differs from the situation in monkeys, where selectivity for calls is found largely in one area and tuning to sound location in another (29).

Only a small percentage (~15%) of neurons in area CL of macaques were of this kind, i.e., selective for both calls and sound location (29). Such neurons may be assumed to arise from convergent input from lower-order neurons with selectivity in just one parameter domain. The majority of neurons in the DSCF area are selective for both echolocation and call parameters, but the proportion of neurons in the FM-FM area of bat auditory cortex that are selective in both parameter domains remains to be estimated. Overall, functional specialization and multifunctionality may be two extremes on the same scale, with the actual proportions of neuronal selectivity lying somewhere in between. It is entirely possible that in bats, specialization for one function is expanded to a dual specialization within single neurons because of the compressed cortical hierarchy in this species. This does not mean, however, that the principle of a common bauplan in different mammalian species has to be abandoned.

8. PERSPECTIVE

The ability to hear complex sounds and communicate with conspecifics via vocalizations represents an important advancement for cooperative and social interactions among individuals within large societies. This ability underlies the development of speech and music in humans. How does the auditory system manage to instantly analyze sounds and differentiate between different types of complex sounds, as exemplified by communication calls in mammals? We know relatively little, but rapid progress is being made in a few bat and primate species in this exciting and important area of research. The studies on the AC of bats and primates represent a top-down approach for understanding the processing and perception of calls. Perception is considered to require a host of neural mechanisms and processing strategies that are not unlike the managerial operations that must be performed with split-second precision for the successful completion of any complex, mission-critical project. For auditory communication, these operations must be completed continuously and consistently within a fraction of a second and without losing accuracy.

An examination of how neurons in the AC differentiate between call types has revealed some of the mission-critical strategies adopted by the auditory system. These strategies include tuning to multiple sound parameters, such as tones and BAPs, and the development of combination-sensitivity at the single-cell level. At the level of neural populations, knowledge of the selected features represented within the AC reveals the potential IBEs (e.g., FMs) and IBPs (e.g., direction and slope of FMs) within calls. Studies of the response properties of neurons indicate that the acoustic features represented in the AC are most likely coded by the firing rates of neurons. This rate code may be transformed into a temporal code in the FC. An acoustic analysis of sounds and of the call selectivity of cortical neurons reveals the level of specialization achieved and the call features extracted and represented within each area of the AC. Generalizing from the common findings made thus far in bats and primates, it may be concluded that parallel-hierarchical processing and combination-sensitivity are two important evolutionary achievements underlying the functional organization of the auditory system and are required for efficient management of complex sounds. The complexity of the acoustic features represented, the level at which a particular neural mechanism is implemented, and the kinds of features extracted from communication sounds, as well as how they are extracted, may vary with the species in question.

Acknowledgments

This work was supported in part by NIH grants DC02054 and DC03789 to J.S.K. and J.P.R., respectively, and an NSF grant NS 052494 to J.P.R. from the National Institutes of Health. We also thank Georgetown University for an intramural collaborative research grant to J.S.K. to explore neurocomputational mechanisms of auditory object recognition in bats and primates.

References

- 1.Clement MJ, Dietz N, Gupta P, Kanwal JS. Audiovocal communication and social behavior in mustached bats. In: Kanwal JS, Ehret G, editors. Behavior And Neurodynamics for Auditory Communication. Cambridge University Press; Cambridge, England: 2005. [Google Scholar]

- 2.Kostan KM, Snowdon CT. Attachment and social preferences in cooperatively-reared cotton-top tamarins. Am J Primatol. 2002;57:131–139. doi: 10.1002/ajp.10040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Snowdon CT. Affiliative processes and vocal development. Ann N Y Acad Sci. 1997;807:340–351. doi: 10.1111/j.1749-6632.1997.tb51931.x. [DOI] [PubMed] [Google Scholar]

- 4.Pola YV, Snowdon CT. The vocalizations of pygmy marmosets (Cebuella pygmaea) Anim Behav. 1975;23:826–842. doi: 10.1016/0003-3472(75)90108-6. [DOI] [PubMed] [Google Scholar]

- 5.Kitchen DM, Cheney DL, Seyfarth RM. Male chacma baboons (Papio hamadryas ursinus) discriminate loud call contests between rivals of different relative ranks. Anim Cogn. 2005;8:1–6. doi: 10.1007/s10071-004-0222-2. [DOI] [PubMed] [Google Scholar]

- 6.Beeman K. Digital Signal Analysis, Editing and Synthesis. In: Hopp SL, Owren MJ, Evans CS, editors. Animal Acoustic Communication: Sound analysis and research methods. Springer-Verlag; Berlin, Germany: 1998. [Google Scholar]

- 7.Suga N. Philosophy and stimulus design for neuroethology of complex-sound processing. Phil Trans R Soc Lond. 1992;336:423–428. doi: 10.1098/rstb.1992.0078. [DOI] [PubMed] [Google Scholar]

- 8.Symmes D. On the use of natural stimuli in neurophysiological studies of audition. Hear Res. 1981;4:203–214. doi: 10.1016/0378-5955(81)90007-1. [DOI] [PubMed] [Google Scholar]

- 9.Doupe AJ, Kuhl PK. Birdsong and human speech: common themes and mechanisms. Annu Rev Neurosci. 1999;22:567–631. doi: 10.1146/annurev.neuro.22.1.567. [DOI] [PubMed] [Google Scholar]

- 10.Kanwal JS, Fitzpatrick DC, Suga N. Facilitatory and inhibitory frequency tuning of combination-sensitive neurons in the primary auditory cortex of mustached. J Neurophysiol. 1999;82:2327–2345. doi: 10.1152/jn.1999.82.5.2327. [DOI] [PubMed] [Google Scholar]

- 11.Rauschecker JP. Cortical processing of complex sounds. COIN. 1998;8:516–521. doi: 10.1016/s0959-4388(98)80040-8. [DOI] [PubMed] [Google Scholar]

- 12.Suga N. Multi-function theory for cortical processing of auditory information: implications of single-unit and lesion data for future research. J Comp Physiol [A] 1994;175:135–144. doi: 10.1007/BF00215109. [DOI] [PubMed] [Google Scholar]

- 13.Suga N, O’Neill WE, Kujirai K, Manabe T. Specialization of “combination-sensitive” neurons for processing of complex biosonar signals in the auditory cortex of the mustached bat. J Neurophysiol. 1983;49:1573–1626. doi: 10.1152/jn.1983.49.6.1573. [DOI] [PubMed] [Google Scholar]

- 14.Theunissen FE, Doupe AJ. Temporal and spectral sensitivity of complex auditory neurons in the nucleus HVc of male zebra finches. J Neurosci. 1998;18:3786–802. doi: 10.1523/JNEUROSCI.18-10-03786.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.O’Neill WE, Suga N. Encoding of target range and its representation in the auditory cortex of the mustached bat. J Neurosci. 1982;2:17–31. doi: 10.1523/JNEUROSCI.02-01-00017.1982. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tian B, Rauschecker JP. Processing of frequency-modulated sounds in the cat’s anterior auditory field. J Neurophysiol. 1994;71:1959–1975. doi: 10.1152/jn.1994.71.5.1959. [DOI] [PubMed] [Google Scholar]

- 17.Tian B, Rauschecker JP. On- and off-responses in rhesus monkey auditory cortex. Soc Neurosci abst. 2003;29 [Google Scholar]

- 18.Wang X. On cortical coding of vocal communication sounds in primates. Proc Natl Acad Sci USA. 2000;97:11843–11849. doi: 10.1073/pnas.97.22.11843. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Esser KH, Condon CJ, Suga N, Kanwal JS. Syntax processing by auditory cortical neurons in the FM-FM area of the mustached bat Pteronotus parnellii. Proc Natl Acad Sci USA. 1997;94:14019–14024. doi: 10.1073/pnas.94.25.14019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Ohlemiller KK, Kanwal JS, Suga N. Facilitative responses to species-specific calls in cortical FM-FM neurons of the mustached bat. Neuroreport. 1996;7:1749–1755. doi: 10.1097/00001756-199607290-00011. [DOI] [PubMed] [Google Scholar]

- 21.Medvedev AV, Kanwal JS. Local field potentials and spiking activity in the primary auditory cortex in response to social calls. J Neurophysiol. 2004;92:52–65. doi: 10.1152/jn.01253.2003. [DOI] [PubMed] [Google Scholar]

- 22.Kanwal JS, Peng JP, Esser K-H. Vocal communication and echolocation in the mustached bat: computing dual functions within single neurons. In: Thomas JA, Vater M, Moss CJ, editors. Advances in the Study of Echolocation in Bats and Dolphins. University of Chicago Press; Chicago, USA: 2004. [Google Scholar]

- 23.Kanwal JS, Washington SD, Lai Z. Preference for a complex sound in auditory cortical neurons shifts with stimulus amplitude. Soc Neurosci abst. 2005;35 [Google Scholar]

- 24.Kanwal JS. A distributed cortical representation of social calls. In: Kanwal JS, Ehret G, editors. Behavior And Neurodynamics For Auditory Communication. Cambridge University Press; Cambridge, England: 2005. [Google Scholar]

- 25.Rauschecker JP. Processing of complex sounds in the auditory cortex of cat, monkey and man. Acta Otolaryngol. 1997;532:34–38. doi: 10.3109/00016489709126142. [DOI] [PubMed] [Google Scholar]

- 26.Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Tian B, Rauschecker JP. Processing of frequency-modulated sounds in the cat’s posterior auditory field. J Neurophysiol. 1998;79:2629–2642. doi: 10.1152/jn.1998.79.5.2629. [DOI] [PubMed] [Google Scholar]

- 28.Tian B, Rauschecker JP. Processing of frequency-modulated sounds in the lateral auditory belt cortex of the rhesus monkey. J Neurophysiol. 2004;92:2993–3013. doi: 10.1152/jn.00472.2003. [DOI] [PubMed] [Google Scholar]

- 29.Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911. [DOI] [PubMed] [Google Scholar]

- 30.Schreiner CE, Mendelson JR. Functional topography of cat primary auditory cortex: distribution of integrated excitation. J Neurophysiol. 1990;64:1442–1459. doi: 10.1152/jn.1990.64.5.1442. [DOI] [PubMed] [Google Scholar]

- 31.Schreiner CE, Sutter ML. Topography of excitatory bandwidth in cat primary auditory cortex: single-neuron versus multiple-neuron recordings. J Neurophysiol. 1992;68:1487–502. doi: 10.1152/jn.1992.68.5.1487. [DOI] [PubMed] [Google Scholar]

- 32.Sutter ML, Schreiner CE. Physiology and topography of neurons with multipeaked tuning curves in cat primary auditory cortex. J Neurophysiol. 1991;65:1207–1226. doi: 10.1152/jn.1991.65.5.1207. [DOI] [PubMed] [Google Scholar]

- 33.Nelken I, Fishbach A, Las L, Ulanovsky N, Farkas D. Primary auditory cortex of cats: feature detection or something else? Biol Cyber. 2003;89:397–406. doi: 10.1007/s00422-003-0445-3. [DOI] [PubMed] [Google Scholar]

- 34.Ehret G. Left hemisphere advantage in the mouse brain for recognizing ultrasonic communication calls. Nature. 1987;325:249–251. doi: 10.1038/325249a0. [DOI] [PubMed] [Google Scholar]

- 35.Ehret G, Riecke S. Mice and humans perceive multiharmonic communication sounds in the same way. Proc Nat Acad Sci, USA. 2002;99:479–482. doi: 10.1073/pnas.012361999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Wang X, Kadia SC. Differential representation of species-specific primate vocalizations in the auditory cortices of marmoset and cat. J Neurophysiol. 2001;86:2616–2620. doi: 10.1152/jn.2001.86.5.2616. [DOI] [PubMed] [Google Scholar]

- 37.Wang X, Merzenich MM, Beitel R, Schreiner CE. Representation of a species-specific vocalization in the primary auditory cortex of the common marmoset: temporal and spectral characteristics. J Neurophysiol. 1995;74:2685–706. doi: 10.1152/jn.1995.74.6.2685. [DOI] [PubMed] [Google Scholar]

- 38.Sussman HM. Neural coding of relational invariance in speech: human language analogs to the barn owl. Psychological Review. 1989;96:631–642. doi: 10.1037/0033-295x.96.4.631. [DOI] [PubMed] [Google Scholar]

- 39.Sussman HM, McCaffrey HA, Matthews S. An investigation of locus equations as a source of relational invariance for stop place categorization. J Acoust Soc Am. 1991;90:1309–1325. [Google Scholar]

- 40.Kanwal JS, Matsumura S, Ohlemiller K, Suga N. Analysis of acoustic elements and syntax in communication sounds emitted by mustached bats. J Acoust Soc Am. 1994;96:1229–1254. doi: 10.1121/1.410273. [DOI] [PubMed] [Google Scholar]

- 41.Hauser MD. Articulatory and social factors influence the acoustic structure of rhesus monkey vocalizations: a learned mode of production? J Acoust Soc Am. 1992;91:2175–2179. doi: 10.1121/1.403676. [DOI] [PubMed] [Google Scholar]

- 42.Hauser MD. The Evolution of Communication. MIT Press; Cambridge, MA: 1996. [Google Scholar]

- 43.Smolders JW, Aertsen AM, Johannesma PI. Neural representation of the acoustic biotope: a comparison of the response of auditory neurons to tonal and natural stimuli in the cat. Biol Cybern. 1979;35:11–20. doi: 10.1007/BF01845840. [DOI] [PubMed] [Google Scholar]

- 44.Shipley C, Carterette EC, Buchwald JS. The effects of articulation on the acoustical structure of feline vocalizations. J Acoust Soc Am. 1991;89:902–909. doi: 10.1121/1.1894652. [DOI] [PubMed] [Google Scholar]

- 45.Hauser MD, Fowler CA. Fundamental frequency declination is not unique to human speech: evidence from nonhuman primates. J Acoust Soc Am. 1992;91:363–369. doi: 10.1121/1.402779. [DOI] [PubMed] [Google Scholar]

- 46.Snowdon CT. Linguistic and pschyolinguistic approaches to primate communication. In: Snowdon CT, Brown CH, Petersen MR, editors. Primate Communication. Cambridge University Press; Cambridge, England: 1982. [Google Scholar]

- 47.Marler P, Peters S. Birdsong and speech: evidence for special processing. In: Eimas PD, editor. Perspectives on the Study of Speech. Lawrence Erlbaum Associates, Publishers; Hillsdale: 1981.

- 48.Suthers RA, Fattu JM. Mechanisms of sound production by echolocating bats. Amer Zool. 1973;13:1215–1226.

- 49.Ehret G, Haack B. Categorical perception of mouse pup ultrasound by lactating females. Naturwissenschaften. 1981;68:208–209. doi: 10.1007/BF01047208.

- 50.Ehret G, Riecke S. Mice and humans perceive multiharmonic communication sounds in the same way. Proc Natl Acad Sci USA. 2002;99:479–482. doi: 10.1073/pnas.012361999.

- 51.Janik VM. Whistle matching in wild bottlenose dolphins (Tursiops truncatus). Science. 2000;289:1355–1357. doi: 10.1126/science.289.5483.1355.

- 52.Payne RS, McVay S. Songs of humpback whales. Science. 1971;173:585–597. doi: 10.1126/science.173.3997.585.

- 53.Brenowitz EA, Margoliash D, Nordeen KW. An introduction to birdsong and the avian song system. J Neurobiol. 1997;33:495–500.

- 54.Margoliash D. Acoustic parameters underlying the responses of song-specific neurons in the white-crowned sparrow. J Neurosci. 1983;3(5):1039–1057. doi: 10.1523/JNEUROSCI.03-05-01039.1983.

- 55.Marler P, Peters S. The role of song phonology and syntax in vocal learning preferences in the song sparrow (Melospiza melodia). Ethology. 1988;77.

- 56.Payne K, Payne R. Large scale changes over 19 years in songs of humpback whales in Bermuda. Zeitschrift für Tierpsychologie. 1985;68:89–114.

- 57.Petersen MR. The perception of species-specific vocalizations by primates: a conceptual framework. In: Snowdon CT, Brown CH, Petersen MR, editors. Primate Communication. Cambridge University Press; New York: 1982.

- 58.Bonin GV, Bailey P. The Neocortex of Macaca mulatta. Univ. of Illinois Press; Urbana, IL: 1947.

- 59.Pandya DN, Sanides F. Architectonic parcellation of the temporal operculum in rhesus monkey and its projection pattern. Z Anat Entwicklungsgesch. 1973;139:127–161. doi: 10.1007/BF00523634.

- 60.Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci USA. 2000;97:11793–11799. doi: 10.1073/pnas.97.22.11793.

- 61.Rauschecker JP, Tian B, Hauser M. Processing of complex sounds in the macaque nonprimary auditory cortex. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- 62.Hackett TA, Stepniewska I, Kaas JH. Thalamocortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998;400:271–286. doi: 10.1002/(sici)1096-9861(19981019)400:2<271::aid-cne8>3.0.co;2-6.

- 63.Imig TJ, Reale RA. Patterns of cortico-cortical connections related to tonotopic maps in cat auditory cortex. J Comp Neurol. 1980;192:293–332. doi: 10.1002/cne.901920208.

- 64.Merzenich MM, Brugge JF. Representation of the cochlear partition on the superior temporal plane of the macaque monkey. Brain Res. 1973;50:275–296. doi: 10.1016/0006-8993(73)90731-2.

- 65.Jones EG. The Thalamus. Plenum Press; New York: 1985.

- 66.Malhotra S, Hall AJ, Lomber SG. Cortical control of sound localization in the cat: unilateral cooling deactivation of 19 cerebral areas. J Neurophysiol. 2004;92:1625–1643. doi: 10.1152/jn.01205.2003.

- 67.Kusmierek P, Malinowska M, Kosmal A. Different effects of lesions to auditory core and belt cortex on auditory recognition in dogs. Exp Brain Res. 2007. doi: 10.1007/s00221-007-0868-5. Epub ahead of print.

- 68.Suga N, Kanwal JS. Echolocation: Creating computational maps via parallel-hierarchical processing. In: Arbib M, editor. Handbook of Brain Theory and Neural Networks. The MIT Press; 1995. pp. 344–351.

- 69.Radtke-Schuller S. Neuroarchitecture of the auditory cortex in the rufous horseshoe bat (Rhinolophus rouxi). Anat Embryol (Berl). 2001;204:81–100. doi: 10.1007/s004290100191.

- 70.Radtke-Schuller S. Cytoarchitecture of the medial geniculate body and thalamic projections to the auditory cortex in the rufous horseshoe bat (Rhinolophus rouxi). I. Temporal fields. Anat Embryol (Berl). 2004;209:59–76. doi: 10.1007/s00429-004-0424-z.

- 71.Radtke-Schuller S, Schuller G, O’Neill WE. Thalamic projections to the auditory cortex in the rufous horseshoe bat (Rhinolophus rouxi). II. Dorsal fields. Anat Embryol (Berl). 2004;209:77–91. doi: 10.1007/s00429-004-0425-y.

- 72.Kobler JB, Isbey SF, Casseday JH. Auditory pathways to the frontal cortex of the mustache bat, Pteronotus parnellii. Science. 1987;236:824–826. doi: 10.1126/science.2437655.

- 73.Hofstetter KM, Ehret G. The auditory cortex of the mouse: connections of the ultrasonic field. J Comp Neurol. 1992;323:370–386. doi: 10.1002/cne.903230306.

- 74.Rauschecker JP. Processing of complex sounds in the auditory cortex of cat, monkey, and man. Acta Otolaryngol Suppl. 1997;532:34–38. doi: 10.3109/00016489709126142.

- 75.Tian B, Rauschecker JP. Processing of frequency-modulated sounds in the cat’s posterior auditory field. J Neurophysiol. 1998;79:2629–2642. doi: 10.1152/jn.1998.79.5.2629.

- 76.Sutter ML, Schreiner CE. Physiology and topography of neurons with multipeaked tuning curves in cat primary auditory cortex. J Neurophysiol. 1991;65:1207–1226. doi: 10.1152/jn.1991.65.5.1207.

- 77.Geissler DB, Ehret G. Auditory perception vs. recognition: representation of complex communication sounds in the mouse auditory cortical fields. Eur J Neurosci. 2004;19:1027–1040. doi: 10.1111/j.1460-9568.2004.03205.x.

- 78.Suga N, Niwa H, Taniguchi I, Margoliash D. The personalized auditory cortex of the mustached bat: adaptation for echolocation. J Neurophysiol. 1987;58:643–654. doi: 10.1152/jn.1987.58.4.643.

- 79.Kanwal JS. Processing species-specific calls by combination-sensitive neurons in an echolocating bat. In: Hauser MD, Konishi M, editors. The Design of Animal Communication. The MIT Press; Cambridge, MA: 1999.

- 80.Kawasaki M, Margoliash D, Suga N. Delay-tuned combination-sensitive neurons in the auditory cortex of the vocalizing mustached bat. J Neurophysiol. 1988;59:623–635. doi: 10.1152/jn.1988.59.2.623.

- 81.Misawa H, Suga N. Multiple combination-sensitive neurons in the auditory cortex of the mustached bat. Hear Res. 2001;151:15–29. doi: 10.1016/s0300-2977(00)00079-6.

- 82.Fitzpatrick DC, Kanwal JS, Butman JA, Suga N. Combination-sensitive neurons in the primary auditory cortex of the mustached bat. J Neurosci. 1993;13:931–940. doi: 10.1523/JNEUROSCI.13-03-00931.1993.

- 83.Taniguchi I, Niwa H, Wong D, Suga N. Response properties of FM-FM combination-sensitive neurons in the auditory cortex of the mustached bat. J Comp Physiol [A]. 1986;159:331–337. doi: 10.1007/BF00603979.

- 84.Suga N. Specialization of the auditory system for reception and processing of species-specific sounds. Fed Proc. 1978;37:2342–2354.

- 85.Rauschecker JP. Parallel processing in the auditory cortex of primates. Audiology & Neuro-Otology. 1998;3:86–103. doi: 10.1159/000013784.

- 86.Fuzessery ZM, Feng AS. Mating call selectivity in the thalamus and midbrain of the Leopard frog (Rana p. pipiens): single and multiunit analyses. J Comp Physiol [A]. 1983;150:333–344.

- 87.Margoliash D, Fortune ES. Temporal and harmonic combination-sensitive neurons in the zebra finch’s HVc. J Neurosci. 1992;12:4309–4326. doi: 10.1523/JNEUROSCI.12-11-04309.1992.

- 88.Yan J, Suga N. Corticofugal amplification of facilitative auditory responses of subcortical combination-sensitive neurons in the mustached bat. J Neurophysiol. 1999;81:817–824. doi: 10.1152/jn.1999.81.2.817.

- 89.Mittmann DH, Wenstrup JJ. Combination-sensitive neurons in the inferior colliculus. Hear Res. 1995;90:185–191. doi: 10.1016/0378-5955(95)00164-x.

- 90.Gallant JL, Braun J, Van Essen DC. Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual cortex. Science. 1993;259:100–103. doi: 10.1126/science.8418487.

- 91.Averbeck BB, Romanski LM. Principal and independent components of macaque vocalizations: constructing stimuli to probe high-level sensory processing. J Neurophysiol. 2004;91:2897–2909. doi: 10.1152/jn.01103.2003.

- 92.Casseday JH, Covey E, Grothe B. Neural selectivity and tuning for sinusoidal frequency modulations in the inferior colliculus of the big brown bat, Eptesicus fuscus. J Neurophysiol. 1997;77:1595–1605. doi: 10.1152/jn.1997.77.3.1595.

- 93.Rauschecker JP, Tian B. Processing of bandpassed noise in the lateral auditory belt cortex of the rhesus monkey. J Neurophysiol. 2004;91:2578–2589. doi: 10.1152/jn.00834.2003.

- 94.Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing in rhesus monkey auditory cortex. J Comp Neurol. 1997;382:89–103.

- 95.Washington SD, Kanwal JS. Excitatory tuning to upward and downward directions of frequency-modulated sweeps in the primary auditory cortex. Soc Neurosci Abstr. 2005;35.

- 96.Zhang LI, Tan AY, Schreiner CE, Merzenich MM. Topography and synaptic shaping of direction selectivity in primary auditory cortex. Nature. 2003;424:201–205. doi: 10.1038/nature01796.

- 97.Horikawa J, Hess A, Hosokawa Y, TI. Spatiotemporal processing in the guinea pig auditory cortex. In: Kanwal JS, Ehret G, editors. Behavior and Neurodynamics for Auditory Communication. Cambridge University Press; Cambridge, England: 2005.

- 98.Read HL, Winer JA, Schreiner CE. Functional architecture of auditory cortex. Curr Opin Neurobiol. 2002;12:433–440. doi: 10.1016/s0959-4388(02)00342-2.

- 99.Bendor D, Wang X. The neuronal representation of pitch in primate auditory cortex. Nature. 2005;436:1161–1165. doi: 10.1038/nature03867.

- 100.Riesenhuber M, Poggio T. Neural mechanisms of object recognition. Curr Opin Neurobiol. 2002;12:162–168. doi: 10.1016/s0959-4388(02)00304-5.

- 101.Kanwal JS, Gordon M, Peng JP, Heinz-Esser K. Auditory responses from the frontal cortex in the mustached bat, Pteronotus parnellii. Neuroreport. 2000;11:367–372. doi: 10.1097/00001756-200002070-00029.

- 102.Romanski LM, Averbeck BB, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J Neurophysiol. 2005;93:734–747. doi: 10.1152/jn.00675.2004.

- 103.Livingstone MS, Hubel DH. Do the relative mapping densities of the magno- and parvocellular systems vary with eccentricity? J Neurosci. 1988;8:4334–4339. doi: 10.1523/JNEUROSCI.08-11-04334.1988.

- 104.Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behaviour. MIT Press; Cambridge, MA: 1982.

- 105.Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999;403:141–157. doi: 10.1002/(sici)1096-9861(19990111)403:2<141::aid-cne1>3.0.co;2-v.

- 106.Recanzone GH, Guard DC, Phan ML, Su TK. Correlation between the activity of single auditory cortical neurons and sound-localization behavior in the macaque monkey. J Neurophysiol. 2000;83:2723–2739. doi: 10.1152/jn.2000.83.5.2723.

- 107.Harrington IA, Heffner HE. A behavioral investigation of “separate processing streams” within macaque auditory cortex. Assoc Res Otolaryngol Abstr. 2002;25:120.

- 108.Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- 109.Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- 110.Bellmann A, Meuli R, Clarke S. Two types of auditory neglect. Brain. 2001;124:676–687. doi: 10.1093/brain/124.4.676.

- 111.Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- 112.Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD. Neural correlates of sensory and decision processes in auditory object identification. Nat Neurosci. 2004;7:295–301. doi: 10.1038/nn1198.

- 113.Clarke S, Bellmann A, Meuli RA, Assal G, Steck AJ. Auditory agnosia and auditory spatial deficits following left hemispheric lesions: evidence for distinct processing pathways. Neuropsychologia. 2000;38:797–807. doi: 10.1016/s0028-3932(99)00141-4.

- 114.Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran JP, Pittet A, Clarke S. Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage. 2001;14:802–816. doi: 10.1006/nimg.2001.0888.

- 115.Zatorre RJ, Bouffard M, Belin P. Sensitivity to auditory object features in human temporal neocortex. J Neurosci. 2004;24:3637–3642. doi: 10.1523/JNEUROSCI.5458-03.2004.

- 116.Krumbholz K, Schonwiesner M, Von Cramon DY, Rubsamen R, Shah NJ, Zilles K, Fink GR. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb Cortex. 2004. doi: 10.1093/cercor/bhh133. Epub ahead of print.

- 117.Lewis JW, Beauchamp MS, DeYoe EA. A comparison of visual and auditory motion processing in human cerebral cortex. Cereb Cortex. 2000;10:873–888. doi: 10.1093/cercor/10.9.873.

- 118.Zimmer U, Macaluso E. High binaural coherence determines successful sound localization and increased activity in posterior auditory areas. Neuron. 2005;47:893–905. doi: 10.1016/j.neuron.2005.07.019.