Abstract

Cognitive functioning can be assessed with performance-based assessments such as neuropsychological tests and with interview-based assessments. Both assessment methods have the potential to assess whether treatments for schizophrenia improve clinically relevant aspects of cognitive impairment. However, little is known about the reliability, validity and treatment responsiveness of interview-based measures, especially in the context of clinical trials. Data from two studies were utilized to assess these features of the Schizophrenia Cognition Rating Scale (SCoRS). One of the studies was a validation study involving 79 patients with schizophrenia assessed at 3 academic research centers in the US. The other study was a 32-site clinical trial conducted in the US and Europe comparing the effects of encenicline, an alpha-7 nicotinic agonist, to placebo in 319 patients with schizophrenia. The SCoRS interviewer ratings demonstrated excellent test-retest reliability in several different circumstances, including those that did not involve treatment (ICC>0.90), and during treatment (ICC>0.80). SCoRS interviewer ratings were related to cognitive performance as measured by the MCCB (r=−0.35), and demonstrated significant sensitivity to treatment with encenicline compared to placebo (P<.001). These data suggest that the SCoRS has potential as a clinically relevant measure in clinical trials aiming to improve cognition in schizophrenia, and may be useful for clinical practice. The weaknesses of the SCoRS include its reliance on informant information, which is not available for some patients, and reduced validity when patient self-report is the sole information source.

Keywords: cognition, schizophrenia, cognitive impairment, assessment, treatment response, SCoRS

INTRODUCTION

Cognitive impairment in schizophrenia has traditionally been assessed with performance-based cognitive measures (Chapman and Chapman, 1973). Many of these measures were derived from tests developed to assess neurocognitive function for the identification of strengths and weaknesses in patients with brain dysfunction or intellectual impairment, or for examining the effects of aging (Spreen & Strauss, 1998). More recently, tests measuring highly specific cognitive processes, often developed for neuroimaging paradigms, have been utilized as well (Barch et al., 2009). However, there are multiple practical constraints on the assessment of cognition conducted exclusively with performance-based tests. Most clinicians who might wish to evaluate the severity of cognitive impairment in their patients with schizophrenia do not have the required expertise and resources to conduct meaningful performance-based assessments. Furthermore, the interpretation of the clinical relevance of changes in performance-based measures is not immediately accessible to non-experts, including clinicians, consumers, and family members, and may require different approaches or supplemental assessments with greater face validity. Finally, there is no consensus among experts as to how much change on neuropsychological tests is clinically meaningful.

Regulatory bodies such as the United States Food and Drug Administration (FDA) and the European Medicines Agency (EMA) support the use of cognitive performance measures as primary endpoints in clinical trials for the treatment of cognitive impairment in schizophrenia. However, they have also noted the absence of face validity of performance-based cognitive measures as one of the reasons they require a pharmacologic treatment also to demonstrate efficacy on an endpoint that has greater clinical meaning to clinicians and consumers. These indices could include performance-based measures of functional capacity or interview-based assessments of clinically relevant and easily detectable cognitive change (Buchanan et al., 2005, 2011). In addition, assuming that some treatments become available, clinicians will need an assessment that they can utilize to assess cognitive change in their patients in situations where performance-based cognitive tests are not practically available. Interview-based assessments have the potential to meet these requirements.

Several interview-based measures of cognition are available. The two that have been utilized the most in large-scale studies with adequate methods, such as the Measurement and Treatment Research to Improve Cognition in Schizophrenia (MATRICS) project, have been the Schizophrenia Cognition Rating Scale (SCoRS) and the Cognitive Assessment Interview (CAI). These measures examine cognitive functioning through questions about functionally relevant, cognitively demanding tasks. As a result, they measure cognitive functioning from a different perspective than performance-based assessments, and a full overlap with performance-based measures is not expected.

We will focus in this paper on research recently completed with the SCoRS. Information on the psychometric properties of the SCoRS and its relationship to cognitive functioning and to other measures of functional capacity can be found in a variety of peer-reviewed publications, including Keefe et al. (2006), Green et al. (2008), and Harvey et al. (2011). Overall, the strengths of the SCoRS are its brief administration time, requiring about 15 minutes per interview (Keefe et al., 2006; Green et al., 2008); its relation to real-world functioning (Keefe et al., 2006); good test-retest reliability; and correlations with at least some performance-based measures of cognition (Keefe et al., 2006). However, several challenges remain. Due to the difficulties that patients with schizophrenia have reporting accurate information regarding cognition and everyday functioning (Bowie et al., 2007; Sabbag et al., 2011; also Durand et al., this issue), the validity of the SCoRS and its correlations with performance-based measures of cognition may depend upon the availability of an informant. Since some patients with schizophrenia may not have people who know them well (Patterson et al., 1996; Bellack et al., 2007), requirements for informant information may reduce the practicality of the SCoRS.

It is important to determine the contexts in which informant information is required and whether there are circumstances where it is not. Also, while the US FDA has expressed general acceptance of interview-based measures of cognition as secondary endpoints in clinical trials for drugs to improve cognitive impairment in schizophrenia (Buchanan et al., 2005, 2011) and the SCoRS in particular is being used as a co-primary endpoint in phase 3 registration clinical trials (www.clinicaltrials.gov, accessed May 9, 2014), the effect of treatment on the SCoRS is not well known. Finally, if the SCoRS and similar measures are to be useful for clinical applications, it may be helpful to begin to gather information on the reliability and sensitivity of specific items of the SCoRS for the purpose of shortening the assessment to its crucial components.

In this paper, we will address the following questions about the SCoRS:

1. What is the structure of the SCoRS items? Do the items measure a single factor or multiple factors? Based upon correlations with cognitive performance measures such as the MATRICS Consensus Cognitive Battery (MCCB), assessment of the reliability of items, and treatment responsiveness, are there opportunities for data reduction?
2. What is the relative benefit of informant information given the potential time and resource cost and unavailability of reliable informants?
   - What is the relative reliability of different sources of information?
   - What is the relative association of data from different sources with cognitive performance measures such as the MCCB?
   - What is the relative sensitivity to treatment of data from the different sources?
3. Are there differences in the reliability, validity and sensitivity of the SCoRS based upon geographical region and level of expertise and experience with the instrument?

EXPERIMENTAL PROCEDURES

In order to increase the sample size and the diversity of conditions in which SCoRS data were evaluated, we combined data from two very different studies. One study was an academic study conducted at three research centers in the United States. The second study was a phase 2 clinical trial conducted in the United States and Europe.

Validation Study

Seventy-six patients with DSM-IV schizophrenia were assessed in the context of a multi-center validation study of functional capacity outcome measures, including the SCoRS. Assessments were completed at two visits 7–14 days apart by highly trained raters at the University of Miami Medical Center, University of California at San Diego, and the University of South Carolina.

Treatment Study

A total of 319 patients with DSM-IV schizophrenia were randomized at 32 sites in the United States, Russia, Ukraine, and Serbia in the context of a phase 2 clinical trial to determine the safety and efficacy of encenicline, an alpha-7 nicotinic agonist, for the treatment of cognitive impairment in patients with schizophrenia. Considering Day 1 as the day that treatment was initiated, the SCoRS was administered in this study at Day −14, Day −7, twice on Day −4, and then on post-treatment visits at Day 28, Day 56 and Day 77. The MCCB was completed on Day 1, Day 44, and Day 84 only in the United States.

Schizophrenia Cognition Rating Scale (SCoRS)

The SCoRS is an interview-based measure of cognitive impairment with questions aimed at the degree to which this impairment affects day-to-day functioning. The SCoRS was developed specifically to assess aspects of cognitive functioning found in each of the seven MATRICS cognitive domains, and included 20 items. See Table 2 for a list of items. Each item is rated on a scale ranging from 1–4 with higher scores reflecting a greater degree of impairment. Each item has anchor points for all levels of the 4-point scale. The anchor points for each item focus on the degree of impairment in that ability and the degree to which the deficit impairs day-to-day functioning. Raters considered cognitive deficits only and attempted to rule out non-cognitive sources of the deficits. For example, a patient may have had severe difficulty reading because his reading experience was minimal, which would suggest that the limitation was related to level of education and not cognitive impairment.

Table 2.

Mean (± Standard Deviation) Baseline performance and Factor Weights on the SCoRS

| Question | Validation Study (N = 76) | Treatment Study (N = 224) | Combined (N = 300) | Factor Weights |
|---|---|---|---|---|
| 1. Remembering names of people you know or meet? | 2.03 ± 0.73 | 1.98 ± 0.68 | 1.99 ± 0.69 | 0.46 |
| 2. Remembering how to get places? | 1.67 ± 0.79 | 1.59 ± 0.71 | 1.61 ± 0.73 | 0.50 |
| 3. Following a TV show? | 1.96 ± 0.96 | 1.96 ± 0.80 | 1.96 ± 0.84 | 0.60 |
| 4. Remembering where you put things? | 2.08 ± 0.86 | 2.02 ± 0.77 | 2.04 ± 0.79 | 0.59 |
| 5. Remembering your chores and responsibilities? | 1.93 ± 0.91 | 1.90 ± 0.72 | 1.91 ± 0.77 | 0.55 |
| 6. Learning how to use new gadgets and equipment? | 1.92 ± 0.83 | 2.18 ± 0.78 | 2.12 ± 0.80 | 0.52 |
| 7. Remembering information and/or instructions recently given to you? | 2.26 ± 0.81 | 2.29 ± 0.70 | 2.29 ± 0.73 | 0.64 |
| 8. Remembering what you were going to say? | 1.97 ± 0.78 | 1.90 ± 0.69 | 1.92 ± 0.71 | 0.56 |
| 9. Keeping track of your money? | 2.31 ± 0.93 | 1.75 ± 0.76 | 1.89 ± 0.84 | 0.47 |
| 10. Keeping your words from being jumbled together? | 1.70 ± 0.78 | 1.64 ± 0.66 | 1.66 ± 0.69 | 0.52 |
| 11. Concentrating well enough to read a newspaper or a book? | 2.04 ± 0.87 | 2.30 ± 0.79 | 2.23 ± 0.82 | 0.62 |
| 12. With familiar tasks? | 1.51 ± 0.81 | 1.49 ± 0.66 | 1.49 ± 0.70 | 0.59 |
| 13. Staying focused? | 2.11 ± 0.74 | 2.30 ± 0.63 | 2.25 ± 0.67 | 0.47 |
| 14. Learning new things? | 1.84 ± 0.73 | 2.14 ± 0.76 | 2.07 ± 0.76 | 0.66 |
| 15. Speaking as fast as you would like? | 1.63 ± 0.76 | 1.77 ± 0.78 | 1.73 ± 0.78 | 0.45 |
| 16. Doing things quickly? | 1.75 ± 0.80 | 1.82 ± 0.73 | 1.80 ± 0.75 | 0.53 |
| 17. Handling changes in your daily routine? | 1.71 ± 0.85 | 1.92 ± 0.71 | 1.86 ± 0.75 | 0.67 |
| 18. Understanding what people mean when they are talking to you? | 2.01 ± 0.74 | 1.94 ± 0.69 | 1.96 ± 0.70 | 0.56 |
| 19. Understanding how other people feel about things? | 2.16 ± 0.86 | 2.25 ± 0.81 | 2.23 ± 0.83 | 0.58 |
| 20. Following conversations in a group? | 1.95 ± 0.86 | 2.22 ± 0.80 | 2.15 ± 0.82 | 0.69 |
| Interviewer SCoRS Total | 38.55 ± 9.99 | 39.35 ± 8.68 | 39.15 ± 9.02 | |
| Global Ratings SCoRS | 5.07 ± 1.65 | 4.89 ± 1.57 | 4.94 ± 1.59 | |

Complete administration of the SCoRS included two separate sources of information that generated three different ratings: a rating based on an interview with the patient, a rating based on an interview with an informant of the patient (family member, friend, social worker, etc.), and a rating generated by the interviewer who administered the scale to the patient and informant. The informant based his or her ratings on interaction with and knowledge of the patient; raters were instructed to identify an informant as the person who had the most regular contact with the patient in everyday situations. The interviewer’s rating on each item reflected a judgment-based combination of the two interviews incorporating the interviewer’s observations of the patient. Raters were instructed to conduct the informant ratings within 7 days of the administration of the patient rating. Raters also generated a global rating of overall impairment by incorporating all information from all sources. The global rating was scored from 1 to 10, with higher ratings indicating more impairment. A simple sum of the 20 SCoRS items referred to as the SCoRS total, which has been responsive to treatment effects (Hilt et al., 2011; Keefe et al., 2014), was also calculated.

MATRICS Consensus Cognitive Battery (MCCB)

The MCCB measures seven separable cognitive domains: speed of processing; attention/vigilance; working memory (verbal and nonverbal); verbal learning; visual learning; reasoning and problem solving; and social cognition. Administration of the MCCB requires about 75–90 minutes. The following tests were administered in this standard order:

Trail Making A

Brief Assessment of Cognition in Schizophrenia (BACS) Symbol Coding

Hopkins Verbal Learning Test – Revised (HVLT-R)

Wechsler Memory Scale III (WMS-III) Spatial Span

University of Maryland Letter Number Span

Neuropsychological Assessment Battery (NAB) – Mazes

Brief Visuospatial Memory Test – Revised (BVMT-R)

Category Fluency

Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT) Managing Emotions

Continuous Performance Test – Identical Pairs (CPT-IP)

All testers were trained on the administration and scoring of the MCCB using video and group training sessions and were individually certified by an expert on the MCCB. Training, data collection, and data quality assurance were implemented or supervised by an experienced psychologist as per the guidelines outlined in the MCCB manual (Nuechterlein & Green, 2006). The MCCB scoring program yields seven domain scores and a composite score which are standardized to the same T-score measurement scale with a mean of 50 and an SD of 10 based upon the normative data collected from a sample of 300 community participants as part of the MATRICS PASS and published in the MCCB manual and the MCCB scoring program (Nuechterlein & Green, 2006).
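The T-score scale described above can be illustrated with a minimal sketch. It assumes a plain linear rescaling against a normative mean and SD; the MCCB scoring program may apply additional corrections that are not modeled here, and the function name and the normative values in the example are hypothetical.

```python
def t_score(raw, norm_mean, norm_sd):
    """Rescale a raw score to a T-score (mean 50, SD 10) relative to normative values."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    return 50.0 + 10.0 * (raw - norm_mean) / norm_sd


# Hypothetical normative values, for illustration only.
# A raw score one normative SD below the normative mean maps to T = 40.
print(t_score(raw=30.0, norm_mean=40.0, norm_sd=10.0))  # 40.0
```

On this scale, the baseline MCCB composite means of about 30 reported in Table 1 correspond to roughly two normative SDs below the community mean.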

Analyses

Demographic information for patients from the two studies is presented. For the purposes of these analyses, the treatment study data includes only subjects from the intent-to-treat population (N=307).

In the treatment study, seven sequential assessments with the SCoRS over both pre-treatment visits (Day −14, Day −7, twice on Day −4) and post-treatment visits (Day 28, Day 56 and Day 77) allowed an assessment of the test-retest reliability of this measure in the context of treatment and in the context of a simple repeated measures design. Intraclass correlation coefficients (ICCs) were obtained from the estimated R-matrix of a mixed model for repeated measures with subject as a random effect using a compound symmetry covariance structure. Separate models were utilized for the SCoRS total and the 20 individual items from the 3 sources of information: patient, informant, and interviewer (i.e., 63 models). Additionally, reliability for the SCoRS global rating from the interviewer was also examined. Pre-treatment models included assessments from all patients on days −14, −7, and −4. ICCs were also examined in patients assigned to placebo across all 7 visits.
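Under compound symmetry, the ICC is the share of total variance attributable to stable between-subject differences. The sketch below is not the mixed-model procedure used in these analyses; it substitutes the classical one-way ANOVA estimator, which requires complete, balanced data. The function name `icc_oneway` and the example ratings are hypothetical.

```python
from statistics import mean


def icc_oneway(scores):
    """One-way random-effects ICC for n subjects, each rated at the same k visits.

    scores: list of per-subject lists of equal length (no missing values).
    """
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need >= 2 subjects with the same number (>= 2) of visits")
    grand = mean(x for row in scores for x in row)
    subj_means = [mean(row) for row in scores]
    # Between-subject and within-subject mean squares.
    msb = k * sum((m - grand) ** 2 for m in subj_means) / (n - 1)
    msw = sum((x - m) ** 2 for row, m in zip(scores, subj_means) for x in row) / (n * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)


# Hypothetical SCoRS totals for 4 subjects at 3 pre-treatment visits.
example = [[38, 40, 39], [52, 50, 51], [30, 31, 29], [45, 44, 46]]
print(round(icc_oneway(example), 3))
```

With unbalanced data or missing visits, as in the studies described here, a mixed model is the more appropriate tool; the ANOVA form is shown only because it makes the variance decomposition explicit.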

Visit was added as a fixed effect to the models that were used to determine the ICCs. The mean difference across visits was used to calculate effect size which was expressed as Cohen’s d. The values for Cohen’s d can thus be interpreted to represent systematic error in the SCoRS. Since some patients did not have an informant available, the sample size for the SCoRS analyses varied by the source of rating and the occurrence of missing data. If 5 or fewer SCoRS items were missing from an assessment, then the SCoRS total was imputed using the average of the non-missing items.
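The imputation rule for the SCoRS total can be sketched as follows. This assumes that each missing item is replaced by the mean of the non-missing items before summing, which is one reading of the rule stated above; the function name and the use of `None` to mark a missing item are hypothetical.

```python
def imputed_scors_total(items, n_items=20, max_missing=5):
    """Return the SCoRS total, imputing missing items with the mean of the observed items.

    items: list of n_items ratings (1-4), with None marking a missing item.
    Returns None when more than max_missing items are missing.
    """
    if len(items) != n_items:
        raise ValueError(f"expected {n_items} items, got {len(items)}")
    present = [x for x in items if x is not None]
    n_missing = n_items - len(present)
    if n_missing > max_missing:
        return None  # too many missing items: no total is computed
    observed_mean = sum(present) / len(present)
    return sum(present) + n_missing * observed_mean


# 17 observed ratings of 2 and 3 missing items: each missing item is imputed as 2.
print(imputed_scors_total([2] * 17 + [None] * 3))  # 40.0
```

Filling in the observed mean keeps the imputed total on the same 20 to 80 range as a complete assessment.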

A combined dataset was created from the treatment and validation studies which included the first assessment from both the SCoRS and MCCB administrations in those situations where an informant was present (N=300). This dataset was used to explore baseline performance on the SCoRS, the relationship between the SCoRS and the MCCB, the internal consistency of the items, and for an exploratory factor analysis of the SCoRS items.

Using the combined dataset, Pearson correlations were calculated between individual SCoRS items, total score, and global rating for the patient, informant, and interviewer and the MCCB domains and composite score. The results are restricted to sites in the US due to limited administration of the MCCB in the clinical trial (N=151). Correlations less than −0.20 were considered to be sufficiently robust to be meaningful and are reported here. Note that the expected direction of the relationship is negative since for the SCoRS, larger values indicate greater impairment while for the MCCB larger values indicate better cognitive function.
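The reporting rule for correlations can be made concrete with a short sketch. The Pearson formula is standard; the helper names and the toy data are hypothetical and are not study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def is_reportable(r, threshold=-0.20):
    """True when r is more negative than the threshold used in this paper."""
    return r < threshold


# Toy data: higher SCoRS ratings (more impairment) paired with lower T-scores.
scors_item = [1, 2, 2, 3, 4]
mccb_t = [45, 40, 38, 30, 25]
r = pearson_r(scors_item, mccb_t)
print(is_reportable(r))  # True
```

Because the threshold is one-sided, a positive correlation of any size would not be flagged, which matches the expected negative direction of the SCoRS and MCCB relationship.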

An exploratory factor analysis was run on the 20 interviewer items of the SCoRS using the baseline ratings from the combined dataset. Missing individual items resulted in the loss of 5 observations (N=295). Factors were retained in the model if they had both an eigenvalue > 1 and a cumulative proportion of variance > 0.8. Factor loadings were used as weights to create a factor score, which was then compared to the SCoRS total with respect to sensitivity to treatment effect using linear mixed models on the treatment study data. Cronbach’s alpha was used to calculate internal consistency.
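For reference, Cronbach's alpha for $k$ items is $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $s_i^2$ is the variance of item $i$ and $s_T^2$ is the variance of the total score. A minimal sketch of that formula follows; the function name and the toy ratings are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha from a list of per-subject item ratings (complete data only)."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2 or any(len(row) != k for row in rows):
        raise ValueError("need >= 2 subjects, each with the same number (>= 2) of items")
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy ratings for 4 subjects on 3 items (not study data).
toy = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 3, 4]]
print(round(cronbach_alpha(toy), 2))
```

Alpha approaches 1 when the items rise and fall together across subjects, which is the pattern expected if a single factor underlies the 20 SCoRS items.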

Finally, using the treatment study data (N=307), SCoRS items that responded to treatment were determined from 63 (20 items and total from 3 data sources) linear mixed models of the change from baseline. Covariates included baseline, visit, and treatment as fixed factors with subject as a random factor. Additionally, treatment comparisons were explored for the change from baseline in the SCoRS total based on the interviewer ratings. Regional effects on the interviewer SCoRS total were also explored with this model. Missing individual items resulted in the loss of 5 observations (N=302).

RESULTS

Demographics and Baseline Performance

Demographic and baseline characteristics of patients from the two studies are given in Table 1. Despite considerable differences in the aims and design of the two studies, their demographics were similar. The largest discrepancies were the percentages for Caucasians: 18% Caucasian (Hispanic) and 34% Caucasian (non-Hispanic) for the Validation study; 7% Caucasian (Hispanic) and 59% Caucasian (non-Hispanic) for the Treatment study. Mean performance on the first administration of the SCoRS items for each study in isolation and combined is presented in Table 2. A Wilcoxon-Mann-Whitney test was used to test for significant differences between the two studies. Questions 6, 9, 11, 13, 14, 17, and 20 were all significant at the α=0.05 level.

Table 1.

Demographics for the Validation and Treatment Studies

| | Validation Study (N=76) | Treatment Study (N=307) |
|---|---|---|
| Age | 42.6 ± 11.62 | 38.4 ± 10.04 |
| Female N(%) | 29 (38.16) | 96 (31.27) |
| Race/Ethnicity N(%) | | |
| African American | 31 (41) | 97 (32) |
| Hispanic | 14 (18) | 20 (7) |
| Caucasian | 26 (34) | 181 (59) |
| Other | 5 (6) | 9 (2) |
| Region N(%) | | |
| U.S. | 76 (100) | 166 (54) |
| Russia | | 59 (19) |
| Serbia | | 28 (9) |
| Ukraine | | 54 (18) |
| MCCB Composite T-Score | 30.3 ± 12.60 | 28.9 ± 11.30¹ |

¹ The MCCB was only administered in the U.S. for the treatment study (n = 154).

Informant Characteristics

There were considerable differences between regions in the percentage of patients who had informants and the frequency of contact that an informant had with the patient. All subjects in Europe brought an informant with them to their visit as this was a requirement for the study and these informants spent a median of 70 hours per week with the patient. Approximately 50% of the U.S. patients had an informant who spent a median of 20 hours per week with them. The European informants were all significant others, immediate family members, or extended family members. Of the U.S. informants, approximately half were family members who spent a median of 40 hours per week with the patient. Non-family member informants in the U.S. spent a median of 6 hours per week with the patient.

Exploratory Factor Analysis and Item Analysis

The exploratory factor analysis on the combined dataset (N=295) indicated that a single factor was the best structure. The factor loadings were used as weights to create a factor score (Table 2). Cronbach’s alpha was 0.90.

Test-retest Reliability

Pre-treatment - all patients

The ICCs were greater than 0.90 for the patient (N=299), informant (N=231) and interviewer (N=302) SCoRS total and interviewer global rating. When interviewer and patient ICCs were re-examined using only assessments where an informant was available (N=231), both still remained above 0.90. Effect sizes for systematic error in the SCoRS total, although significantly different from zero (p-values<0.10), were trivial (patient, d=0.10; informant, d=0.05; interviewer, d=0.06) indicating that the reported ICCs are reflective of random error. There was no systematic error present for the interviewer global ratings. ICCs for the pre-treatment period were also compared between sites in the US (when an informant was present, N=90) and Europe (informants present at all assessments, N=141). ICCs were higher in Europe than the US for the patient (0.95 vs. 0.86), informant (0.97 vs. 0.82), and interviewer (0.97 vs. 0.84) SCoRS total and interviewer global rating (0.99 vs. 0.86).

The ICCs for individual SCoRS items varied by question and source of information. In general, the ICC was lowest for the patient (19 of 20 items) and highest for the interviewer (12 of 20 items). The largest discrepancy was for “Keeping track of your money” between patient scores (ICC=0.72) and interviewer scores (ICC=0.81). For two items, the ICCs for patient and interviewer were equivalent: “Remembering information and/or instructions recently given to you” and “Speaking as fast as you would like” (ICC=0.80 for both items). Overall, the mean values (range) were 0.77 (0.69–0.82) for patient, 0.81 (0.74–0.88) for informant, and 0.82 (0.71–0.87) for interviewer. Among the items with the lowest test-retest reliability across all three sources were “Remembering what you were going to say,” “Staying focused,” and “Understanding what people mean when they are talking to you.” “Following a TV show” had the highest or second highest reliability.

Post-treatment - Placebo group

The reliability of the SCoRS was also examined for the treatment period in the placebo group only. The ICCs for the SCoRS total were 0.76 for the patient ratings (N=100), 0.85 for the informant ratings (N=71) and 0.81 for the interviewer ratings (N=101). Effect sizes for systematic error between Day −14 and Day 77 were substantial in this group (patient, d=0.70; informant, d=0.55; interviewer, d=0.69). If systematic error is removed from the total error and ICCs are recalculated, there is a slight increase overall (patient, ICC=0.81; informant, ICC=0.88; interviewer, ICC=0.85), although the test-retest reliability is still somewhat lower than observed for the SCoRS total in the pre-treatment period. The ICC for the interviewer global rating was somewhat lower than for the SCoRS total (0.76 vs. 0.81). Again, individual items varied considerably, and ICCs were substantially lower than for the pre-treatment comparisons. The mean (range) ICC was 0.68 (0.55–0.78) for the patient ratings, 0.71 (0.59–0.80) for the informant ratings, and 0.72 (0.47–0.83) for the interviewer ratings. “Following a TV show” and “Understanding how other people feel about things” consistently yielded higher ICCs, whereas “Remembering information and/or instructions recently given to you” had the lowest reliability for all 3 sources.

Correlations between SCoRS and MCCB

The SCoRS total based on the interviewer rating was significantly correlated with the MCCB composite score (r=−0.35) at baseline (Supplemental Table 1). The SCoRS total had correlations of r<−0.20 (p-value≤.01) with 6 of the 7 MCCB domains; the SCoRS global rating was similarly correlated with 5 of the 7 domains. Thirty-one of the 140 correlations among the individual SCoRS items from the interviewer ratings and the MCCB domains were <−0.20. Inspection of the pattern of correlations suggested that while items and domains that are conceptually related tended to have significant correlations (e.g., the SCoRS item asking about trouble speaking quickly (#15) was correlated with the speed of processing domain, r=−0.27), the more striking aspect of the matrix was that certain items had relationships with multiple MCCB domains while other items had more delineated relationships. For example, three of the items (#8, 10, 16) had correlations <−0.20 with 4 of the 7 MCCB domains, while 8 of the items (#1, 3, 4, 5, 13, 17, 19, 20) had none. Eleven of the items showed meaningful correlation with the Working Memory domain, whereas only 1 item was correlated with each of the Visual and Verbal Learning domains. Thus, the SCoRS items vary in the extent to which they converge with the performance-based domains from the MCCB.

The item ratings based solely on the patient’s report had fewer (12 of 140) correlations <−0.20, consistent with the notion that patients are less attuned to their cognitive performance (two sample proportions test, z=−3.21; P=.003). Fourteen of the 20 items from the patient report had no correlations <−0.20 with MCCB domains.
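The comparison of the two counts of reportable correlations (12 of 140 for patient ratings versus 31 of 140 for interviewer ratings) can be sketched as a two-sample proportions z statistic. The sketch assumes an unpooled standard error, which is an assumption about the variant used rather than something stated in the text; the function name is hypothetical.

```python
from math import sqrt


def two_proportion_z(x1, n1, x2, n2):
    """z statistic for the difference between two proportions, unpooled standard error."""
    p1 = x1 / n1
    p2 = x2 / n2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return (p1 - p2) / se


# Patient ratings: 12 of 140 correlations below -0.20; interviewer ratings: 31 of 140.
z = two_proportion_z(12, 140, 31, 140)
print(round(z, 2))  # -3.21
```

A pooled standard error would give a slightly smaller magnitude, so the choice of variant matters at the second decimal place.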

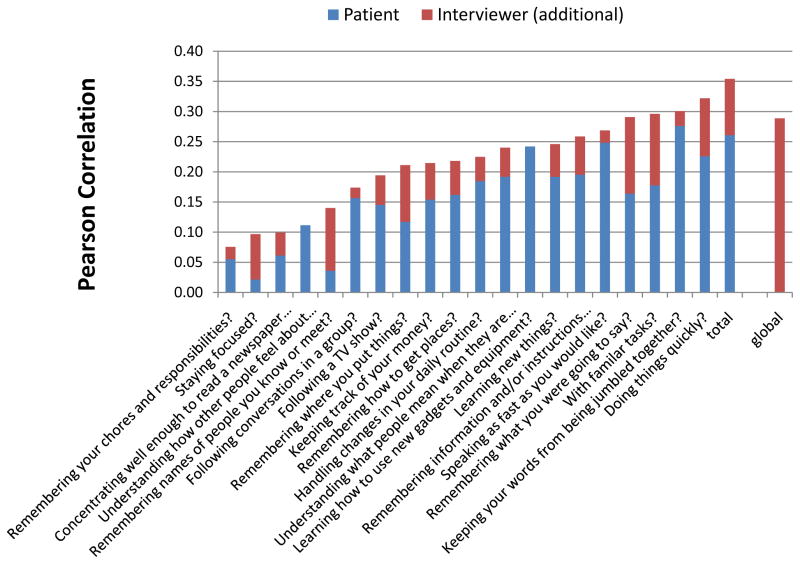

Figure 1 displays the correlations between the items and summary measures of the SCoRS and the MCCB composite for the patient and interviewer. There are two instances where the patient had slightly higher correlations than the interviewer as indicated by the completely blue bar (“understanding how other people feel”, “learning how to use new equipment”). However, in general, the information obtained by the interviewer from the informant improved the correlation with the MCCB.

Figure 1.

Pearson Correlations between SCoRS items and MCCB Composite T-Score at Baseline from Patient and Interviewer Ratings

Treatment Analyses

Both the total score and the factor score were modeled using the baseline score, treatment, visit, and an interaction term for treatment and visit. The main effects were found to be statistically significant for both outcomes (see Table 3) with minimal differences between them. The interaction terms were non-significant. Treatment effect sizes (Cohen’s d) were also similar from the two models and are reported here with the sign changed such that a positive effect size is associated with less impairment in the active treatment group. For the 0.3 mg encenicline versus placebo comparison: factor score model, d=−0.05; SCoRS total model, d<−0.01. For the 1.0 mg versus placebo comparison: factor score model, d=0.19; SCoRS total model, d=0.22. Because the two models yielded nearly identical results, we focused solely on the SCoRS total. The total score is a simpler statistic and is equivalent to using a factor-based score (with factor weights all equal to 1) rather than using the factor loadings as weights.
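The relationship between the two outcomes can be sketched in a few lines. The weights are the factor weights listed in Table 2; treating the factor score as a plain weighted sum of raw item ratings is an assumption (the analysis may have standardized items first), and the function names are hypothetical.

```python
# Factor weights for SCoRS items 1-20, copied from Table 2.
FACTOR_WEIGHTS = [
    0.46, 0.50, 0.60, 0.59, 0.55, 0.52, 0.64, 0.56, 0.47, 0.52,
    0.62, 0.59, 0.47, 0.66, 0.45, 0.53, 0.67, 0.56, 0.58, 0.69,
]


def weighted_score(items, weights):
    """Weighted sum of item ratings."""
    if len(items) != len(weights):
        raise ValueError("items and weights must have the same length")
    return sum(w * x for w, x in zip(weights, items))


def unit_weight_total(items):
    """SCoRS total: the same weighted sum with every weight set to 1."""
    return weighted_score(items, [1.0] * len(items))


ratings = [2] * 20  # hypothetical assessment with every item rated 2
print(unit_weight_total(ratings))  # 40.0
print(round(weighted_score(ratings, FACTOR_WEIGHTS), 2))
```

Because the 20 weights span a narrow range (0.45 to 0.69), the weighted and unit-weighted scores rank patients almost identically, which is consistent with the near-identical model results reported above.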

Table 3.

Comparison of the Treatment Effect model for the Factor Score and the SCoRS Total

Type 3 Tests of Fixed Effects

| Effect | Response | F Value | P-value |
|---|---|---|---|
| Baseline Factor Score | Factor Score | 48.11 | <0.001 |
| Treatment | Factor Score | 3.51 | 0.032 |
| Day | Factor Score | 84.05 | <0.001 |
| Treatment*Day | Factor Score | 0.53 | 0.713 |
| Baseline SCoRS Total | SCoRS Total | 62.37 | <0.001 |
| Treatment | SCoRS Total | 4.64 | 0.010 |
| Day | SCoRS Total | 81.54 | <0.001 |
| Treatment*Day | SCoRS Total | 0.43 | 0.789 |

In a separate exploratory analysis of the regional effect on the Interviewer SCoRS total, a statistical trend for a treatment by region interaction was found (p=0.091), suggesting that treatment response may be different for Europe and the U.S. In particular, there was a stronger treatment effect for European patients versus US patients in the SCoRS endpoint. This may be explained by the difference in the nature of the informant and the extent of contact with the patient.

Item Response Analysis

Table 4 lists the SCoRS items that responded to treatment based on results from 63 linear mixed models of the change from baseline. Significant p-values from the informant ratings are presented for the comparison of 1 mg encenicline to placebo for eight of the 20 items and the SCoRS total. Seven of these 8 items and the total from the interviewer ratings also responded. There was not a significant treatment effect for the SCoRS global rating (p=0.084). Only 1 item (“following a TV show”) responded to treatment for the patient ratings as well. It is not surprising that the test-retest reliability for this item was among the highest (≥0.78) for ratings from all 3 sources. Most of the items that responded for the informant and interviewer had ICCs>0.70. When the items were analyzed separately by geographical region (Table 5), two additional questions were responsive to 1.0 mg encenicline, making a total of 10 items plus the total score and global rating. However, only 1 item (“remembering where you put things”) responded to treatment in the US sites, and only for the informant ratings.

Table 4.

SCoRS items that responded to treatment with encenicline 1.0 mg compared to placebo: Effect sizes (ES) presented as Cohen’s d

| SCoRS Item | Interviewer ES (p-value) | Informant ES (p-value) | Patient ES (p-value) |
|---|---|---|---|
| 1. Remembering the names of people you know or meet | 0.32 (.027) | 0.55 (.001) | |
| 2. Remembering how to get places | 0.27 (.058) | 0.48 (.005) | |
| 3. Following a TV show | 0.44 (.003) | 0.49 (.004) | 0.38 (.008) |
| 4. Remembering where you put things | 0.32 (.024) | 0.56 (.001) | |
| 7. Remembering information recently given to you | | 0.36 (.035) | |
| 13. Staying Focused | 0.28 (.048) | 0.44 (.011) | |
| 16. Doing things quickly | 0.28 (.054) | 0.37 (.029) | |
| 20. Following conversations in a group | 0.37 (.010) | 0.36 (.033) | |
| Total Score | 0.37 (.011) | 0.57 (.001) | |

DISCUSSION

The SCoRS is an interview-based rating scale of cognitive impairment and related functioning in patients with schizophrenia. Previous work had suggested that the SCoRS has the potential to be used as a clinical measure of cognitive treatment response and to be used in clinical trials as a co-primary measure with functional relevance due to its good reliability and its relation to real-world functioning and performance-based measures of cognition. However, given its acceptance by the US FDA as a co-primary measure, and the increasing desire of clinicians to assess cognition with interview-based measures, several important issues arise, and are addressed by the data described here.

First, the structure of the SCoRS items suggests that a single factor best describes them, and significant shortening of the SCoRS is not recommended at this time. Second, the SCoRS appears to be responsive to pharmacologic treatment when compared to placebo, including total score response and robust single-item responses. Third, the need for an informant continues to be underscored by reliability, validity and treatment response data; however, in certain circumstances and for certain items, information based on patient report alone may have value. Finally, the reliability and the sensitivity of the instrument may vary based upon geographical region and the extent of training of raters.

The current version of the SCoRS includes 20 items. Although the interview and scoring of the measure usually require less than 15 minutes per interview, it would be helpful to identify aspects of the scale that would allow for the reduction of items or for a restructuring of the scale based upon cognitive domains. An exploratory factor analysis performed on 295 patients assessed in a diverse set of circumstances and geographical regions suggested that a single factor best describes the data. Since both the factor score and a simple total of all 20 items yielded almost identical reliability and treatment effects, we suggest that investigators use the total score, which is simpler to calculate and will be identical across studies. Most of the items had high reliability, yet only a few demonstrated a statistically significant response to treatment with encenicline. In addition, there was considerable variability in which items responded across geographical regions and sources of information. More work will need to be done to determine whether some of the less reliable items are those that respond best to treatment and therefore need to be retained in the instrument. At this point, we are therefore hesitant to reduce the SCoRS to fewer items.

The test-retest reliability of the SCoRS was excellent in the two studies described in this paper. The highest reliability was found for the sum of the 20 items, which was better than that of the single global ratings that are also a part of the instrument. The test-retest reliability was higher for ratings made by the interviewer when the interviewer considered information from the patient and an informant. In some circumstances, ratings made from information collected only from the patient had good reliability and modest responses to treatment. Several issues need to be considered before utilizing data collected from patients without informants. Significant experience and/or training for raters is important, as the psychometrics of the patient-only ratings were better at academic research centers than at clinical trial sites, although trained European raters in clinical trials were able to collect data from patients that were reliable and sensitive to treatment.
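
As an illustration of how a test-retest intraclass correlation coefficient (ICC) of the kind discussed above can be obtained, the sketch below computes a two-way random-effects, absolute-agreement, single-measurement ICC, often labeled ICC(2,1), from a patients-by-visits table of total scores. The specific ICC form is an assumption made for illustration; this section does not state which form was used. The function name `icc_2_1` and the example scores are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    `scores` is a list of rows, one per patient, each holding that
    patient's score at every assessment occasion (e.g. test and retest).
    """
    n = len(scores)        # number of patients
    k = len(scores[0])     # number of occasions
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Mean squares from the two-way ANOVA decomposition.
    ms_rows = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    ms_cols = n * sum((m - grand) ** 2 for m in col_means) / (k - 1)
    ss_err = sum(
        (scores[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n)
        for j in range(k)
    )
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


# Hypothetical total scores at two visits for five patients, not study data.
example = [[34, 36], [41, 40], [28, 29], [50, 47], [38, 38]]
print(round(icc_2_1(example), 3))
```

Because this form penalizes systematic shifts between occasions as well as random disagreement, it is more conservative than a consistency-type ICC when scores drift between visits.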

Although the test-retest reliability statistics of the total ratings and many individual items were excellent in the US, there were substantial differences in the reliability of the SCoRS between regions. The exact source of this discrepancy is not known and requires further research. It is possible that patients with schizophrenia in Serbia, Russia, and Ukraine have more cohesive families and communities and their societies are less isolating of patients with schizophrenia, which supports more reliable reports of cognition-related behavior.

Previous reports suggested variable correlations between interview-based assessments and cognitive performance measures. With the SCoRS, correlations ranged from −0.40 in a single-site study (Keefe et al., 2006) to −0.31 in the 5-site MATRICS validation study (Green et al., 2008); with the CAI, they ranged from −0.40 in a single-site study (Ventura et al., 2013) to −0.23 in the 4-site MATRICS Validation of Intermediate Measures study (Green et al., 2011). The current data collected in a three-site research center consortium suggest that overall MCCB composite scores and SCoRS total ratings can have high correlations (r=0.46) when interviewers are highly trained and have access to reliable informants. Overall, patient reports were less reliable and less related to cognitive performance, yet some of the items had significant correlations with MCCB scores even when the ratings were based on the patient report alone. Again, the generalizability of this relationship to clinical practice and clinical trials is not known. The MCCB domain scores tended to be correlated with specific items in a broad fashion, rather than with items that conceptually would seem related to that cognitive domain. It appears that many of the SCoRS items evaluate multiple cognitive domains. Further, a sharp distinction among MCCB domains is not well supported in factor analyses (Burton et al., 2013; Noh et al., 2010), and the MCCB may best be represented by a single factor (Keefe et al., 2006; Dickinson et al., 2006), with social cognition possibly separate.

The SCoRS was responsive to treatment. It was more responsive to treatment in Europe than in the United States. While some of this regionally-mediated responsiveness may have been due to the presence of high contact informants, the patient reports in Europe were also responsive to treatment. The individual items that were especially responsive to treatment appeared to measure visual memory functions (remembering where you put things; remembering how to get places) and attention in different contexts of human interaction (following a TV show; following conversations in a group). A few of the items were responsive to treatment even in the data collected solely from patients: remembering names, remembering chores, staying focused, and doing things quickly. It may be that certain items are more sensitive to cognitive processes that can be observed better by patients than by those who interact with them.

In summary, the SCoRS has excellent test-retest reliability, is strongly related to cognitive performance as measured by the MCCB, and is sensitive to treatment. Its weaknesses include its reliance on informant information, which is not available for some patients, and reliability and treatment sensitivity that may vary by region.

Acknowledgments

We thank Cathy Lefebvre, who assisted with the preparation of the manuscript.

ROLE OF FUNDING SOURCE

One of the studies was sponsored by NIMH SBIR grant 5R44MH084240-03. The other study was funded by FORUM Pharmaceuticals.

CONTRIBUTORS

Richard Keefe conceptualized the paper, designed the statistical analysis plan, and wrote the manuscript. Vicki Davis designed the statistical analysis plan, conducted statistical analyses and wrote the manuscript. Nathan Spagnola designed the statistical analysis plan, conducted statistical analyses and wrote the manuscript. Dana Hilt designed one of the studies. Nancy Dgetluck designed the statistical analysis plan and conducted data analysis for one of the studies. Stacy Ruse oversaw the collection of data for one of the studies. Tom Patterson and Meera Narasimhan each oversaw the collection of data at one of the sites in one of the studies. Philip Harvey conceptualized the paper, designed the statistical analysis plan, and edited the manuscript. All authors contributed to and have approved the final manuscript.

CONFLICT OF INTEREST

Dr. Richard Keefe currently or in the past 3 years has received investigator-initiated research funding support from the Department of Veterans Affairs, Feinstein Institute for Medical Research, GlaxoSmithKline, National Institute of Mental Health, Novartis, Psychogenics, Research Foundation for Mental Hygiene, Inc., and the Singapore National Medical Research Council. He currently or in the past 3 years has received honoraria or served as a consultant or advisory board member for Abbvie, Akebia, Amgen, Astellas, Asubio, AviNeuro/ChemRar, BiolineRx, Biogen Idec, Biomarin, Boehringer-Ingelheim, Eli Lilly, EnVivo, GW Pharmaceuticals, Helicon, Lundbeck, Merck, Minerva Neurosciences, Inc., Mitsubishi, Novartis, Otsuka, Pfizer, Roche, Shire, Sunovion, Takeda, and Targacept. Dr. Keefe receives royalties from the BACS testing battery, the MATRICS Battery (BACS Symbol Coding) and the Virtual Reality Functional Capacity Assessment Tool (VRFCAT). He is also a shareholder in NeuroCog Trials, Inc. and Sengenix.

Meera Narasimhan reports having received grant or research support over the last 3 years from the National Institute of Mental Health, the National Institute on Drug Abuse, the National Institute on Alcohol Abuse and Alcoholism, the National Institutes of Health, Astra Zeneca, Eli Lilly, Janssen Pharmaceutica, Forest Labs, and Pfizer.

Dana Hilt and Nancy Dgetluck are full time employees of FORUM Pharmaceuticals.

Vicki Davis, Nathan Spagnola, and Stacy Ruse are full time employees of NeuroCog Trials, Inc.

Dr. Patterson has no conflicts of interest to disclose.

Dr. Harvey reports having received consulting fees from Abbvie, Boehringer-Ingelheim, EnVivo, Genentech, Otsuka America, Sunovion Pharma, and Takeda Pharma. Dr. Harvey receives royalties from the Brief Assessment of Cognition in Affective Disorders (BAC-A) and the Brief Assessment of Cognition in Schizophrenia (BACS).

References

- Barch DM, Carter CS, Arnsten A, Buchanan RW, Cohen JD, Geyer M, Green MF, Krystal JH, Nuechterlein K, Robbins T, Silverstein S, Smith EE, Strauss M, Wykes T, Heinssen R. Selecting Paradigms From Cognitive Neuroscience for Translation into Use in Clinical Trials: Proceedings of the Third CNTRICS Meeting. Schizophr Bull. 2009;35:109–114. doi: 10.1093/schbul/sbn163.

- Bellack AS, Green MF, Cook JA, Fenton W, Harvey PD, Heaton RK, Laughren T, Leon AC, Mayo DJ, Patrick DL, Patterson TL, Rose A, Stover E, Wykes T. Assessment of community functioning in people with schizophrenia and other severe mental illnesses: a white paper based on an NIMH-sponsored workshop. Schizophr Bull. 2007;33:805–822. doi: 10.1093/schbul/sbl035.

- Bowie CR, Twamley EW, Anderson H, Halpern B, Patterson TL, Harvey PD. Self-assessment of functional status in schizophrenia. J Psychiatr Res. 2006;41:1012–1018. doi: 10.1016/j.jpsychires.2006.08.003.

- Buchanan RW, Davis M, Goff D, Green MF, Keefe RSE, Leon A, Nuechterlein K, Laughren T, Levin R, Stover E, Fenton W, Marder S. A Summary of the FDA-NIMH-MATRICS workshop on clinical trial designs for neurocognitive drugs for schizophrenia. Schizophr Bull. 2005;31:5–19. doi: 10.1093/schbul/sbi020.

- Buchanan RW, Keefe RSE, Umbricht D, Green MF, Laughren T, Marder SR. The FDA-NIMH-MATRICS guidelines for clinical trial design of cognitive-enhancing drugs: What do we know 5 years later? Schizophr Bull. 2011;37:1209–1217. doi: 10.1093/schbul/sbq038.

- Burton CZ, Vella L, Harvey PD, Patterson TL, Heaton RK, Twamley EW. Factor structure of the MATRICS Consensus Cognitive Battery (MCCB) in schizophrenia. Schizophr Res. 2013;146:244–248. doi: 10.1016/j.schres.2013.02.026.

- Chapman LJ, Chapman JP. Problems in the measurement of cognitive deficit. Psychol Bull. 1973;79:380–385. doi: 10.1037/h0034541.

- Green MF, Nuechterlein KH, Kern RS, Baade LE, Fenton WS, Gold JM, Keefe RSE, Mesholam-Gately R, Seidman LJ, Stover E, Marder SR. Functional co-primary measures for clinical trials in schizophrenia: Results from the MATRICS psychometric and standardization study. Am J Psychiatry. 2008;165:221–228. doi: 10.1176/appi.ajp.2007.07010089.

- Green MF, Schooler NR, Kern RS, Frese FJ, Granberry W, Harvey PD, Karson CN, Peters N, Stewart M, Seidman LJ, Sonnenberg J, Stone WS, Walling D, Stover E, Marder SR. Evaluation of functionally meaningful measures for clinical trials of cognition enhancement in schizophrenia. Am J Psychiatry. 2011;168:400–407. doi: 10.1176/appi.ajp.2010.10030414.

- Dickinson D, Ragland JD, Calkins ME, Gold JM, Gur RC. A comparison of cognitive structure in schizophrenia patients and healthy controls using confirmatory factor analysis. Schizophr Res. 2006;85:20–29. doi: 10.1016/j.schres.2006.03.003.

- Harvey PD, Ogasa M, Cucchiaro J, Loebel A, Keefe RS. Performance and interview-based assessments of cognitive change in a randomized, double-blind comparison of lurasidone vs. ziprasidone. Schizophr Res. 2011;127:188–194. doi: 10.1016/j.schres.2011.01.004.

- Hilt DC. EVP-6124, An Alpha-7 Nicotinic Partial Agonist, Produces Positive Effects on Cognition, Clinical Function, and Negative Symptoms in Patients with Chronic Schizophrenia on Stable Antipsychotic Therapy. Poster presentation at the 50th Annual ACNP Meeting; Hollywood, FL. December 4–8, 2011.

- Keefe RSE, Poe M, Walker TM, Kang JW, Harvey PD. The Schizophrenia Cognition Rating Scale (SCoRS): Interview-based assessment and its relationship to cognition, real-world functioning and functional capacity. Am J Psychiatry. 2006;163:426–432. doi: 10.1176/appi.ajp.163.3.426.

- Keefe RSE, Moghaddam B. Reward processing, cognition and perception during adolescent brain development and vulnerability for psychosis. Symposia presented at the 4th Annual SIRS meeting; Florence, Italy. April 5–9, 2014.

- Noh J, Kim JH, Hong KS, Kim N, Nam HJ, Lee D, Yoon SC. Factor structure of the neurocognitive tests: an application of the confirmative factor analysis in stabilized schizophrenia patients. J Korean Med Sci. 2010;25:276–282. doi: 10.3346/jkms.2010.25.2.276.

- Nuechterlein KH, Green MF. MATRICS Consensus Cognitive Battery Manual. MATRICS Assessment, Inc; Los Angeles, CA: 2006.

- Patterson TL, Semple SJ, Shaw WS, Grant I, Jeste DV. Researching the caregiver: family members who care for older psychotic patients. Psychiatr Ann. 1996;26:772–784.

- Sabbag S, Twamley EM, Vella L, Heaton RK, Patterson TL, Harvey PD. Assessing everyday functioning in schizophrenia: not all informants seem equally informative. Schizophr Res. 2011;131:250–255. doi: 10.1016/j.schres.2011.05.003.

- Spreen O, Strauss E. A Compendium of Neuropsychological Tests. 2. Oxford University Press; New York: 1998.

- Ventura J, Reise SP, Keefe RS, Hurford IM, Wood RC, Bilder RM. The Cognitive Assessment Interview (CAI): development and validation of an empirically derived, brief interview-based measure of cognition. Schizophr Bull. 2013;39:583–591. doi: 10.1093/schbul/sbs001.
