Abstract

People make determinations about the social characteristics of an agent (e.g., robot or virtual agent) by interpreting social cues displayed by the agent, such as facial expressions. Although a considerable amount of research has investigated age-related differences in emotion recognition of human faces (e.g., Sullivan & Ruffman, 2004), the effect of age on emotion identification of virtual agent facial expressions has been largely unexplored. Age-related differences in emotion recognition of facial expressions are an important factor to consider in the design of agents that may assist older adults in a recreational or healthcare setting. The purpose of the current research was to investigate whether age-related differences in facial emotion recognition extend to emotion-expressive virtual agents. Younger and older adults performed a recognition task with a virtual agent expressing six basic emotions. Larger age-related differences were expected for virtual agents displaying negative emotions, such as anger, sadness, and fear. In fact, the results indicated that older adults showed lower emotion recognition accuracy than younger adults for a virtual agent's emotions of anger, fear, and happiness.

INTRODUCTION

Facial expressions are one of the most important media for communicating emotional state (Collier, 1985), and a critical component in successful social interaction. Understanding the role of emotion in creating fluid and natural social interaction should not be limited to the study of human-human interaction. Emotion may also play an important role in interaction with an agent, such as a robot or animated software agent (i.e., a virtual agent). Hence, understanding issues related to the social characteristics of agents may be a crucial component in promoting optimal interaction between a human and an agent. As agents approach higher levels of intelligence and autonomy, the quality of their social capabilities will be a critical factor in their ability to interact with humans as a partner, rather than a tool (Breazeal, 2002).

There has been a growing interest in the development of socially interactive agents. Within the last decade, advances in computer and sensing capabilities have led to an increase in the development and research of social agents with the capability to assist or entertain humans in domestic or recreational settings. One population that could especially benefit from assistive agents is older adults.

Older adults prefer to age in place (Gitlin, 2003); that is, they prefer to age in their home setting. Robots, and other intelligent agents, have the potential to facilitate older adults’ independence, reduce healthcare needs, and provide everyday assistance, thus reducing care-giving needs. A better understanding of how older people socially interact with technology (e.g., how they interpret social cues such as emotion) will directly impact the design of emotionally expressive assistive agents.

It is generally accepted that people are willing to attribute social characteristics to technology (Nass, Moon, Fogg, & Reeves, 1995). Virtual agents in particular use facial expressions to facilitate social interaction (Cassell, Sullivan, Prevost, & Churchill, 2000). Although people have been shown to recognize agent facial expressions accurately (Bartneck, Reichenbach, & Breemen, 2004), it is currently unknown whether older adults recognize agent emotion as well as younger adults, given research showing age-related differences in emotion processing.

The ability to recognize emotions has been shown to change with normal aging (Sullivan & Ruffman, 2004; Isaacowitz et al., 2007). A considerable amount of research indicates an age-related decline in the identification of the emotions anger, sadness, and fear. However, emotions such as happiness, disgust, or surprise do not generally show age-related identification differences (for a summary, see Ruffman, Henry, Livingstone, & Phillips, 2008).

Although the aforementioned age-related differences in emotion recognition seem prevalent for human expression of emotion, the effect of age on emotion recognition of virtual agent expressions has been largely unexplored. Possible differences between younger and older adults in recognizing emotion are an important factor to consider when designing social agents. Given the critical role facial expressions may play in facilitating social interaction, it is important to understand how well an older adult can interpret the facial expressions a social agent is intended to portray.

The purpose of this experiment was to compare younger and older adults’ identification of emotion expressed by a virtual agent. Six basic emotions were examined, with varying levels of intensity. Based upon the literature investigating age-related differences in emotion recognition of human faces (Ruffman et al., 2008), we expected to find the largest differences between young and older adults when viewing virtual agents displaying the emotions of anger, sadness, and fear.

METHOD

Participants

The participants were 20 younger adults (10 females and 10 males) aged 18 to 27 years, and 20 community-dwelling older adults (10 females and 10 males) aged 65 to 75 years. The younger adults received course credit for participation. The older adults were compensated monetarily for their participation. The majority of older adults (n = 16) reported having a high school education or higher. All participants had visual acuity of 20/40 or better for near and far vision. Regarding previous experience, 9 younger adult participants and 2 older adult participants reported having experience with virtual agents, cartoons, or animation.

Materials

Stimuli were presented using E-Prime version 2.0 (Psychological Software Tools, 2008). Participants viewed the computer monitor from a distance of approximately 24 inches. Pictures of the virtual agent (displayed on a 17-inch monitor) were presented in an 8 x 8.5 display area, creating an average visual angle of 20.3 degrees. Participants made their responses on a keyboard. The keys were labeled with the words ‘yes’ and ‘no’ (the “f” and “j” keys, respectively) and with the six basic emotions: anger, disgust, fear, happiness, sadness, and surprise (labeled on the horizontal row of number keys above the QWERTY letter keys).
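The visual angle of a stimulus follows from the standard relation θ = 2·atan(size / (2·distance)). The following is a minimal sketch of that calculation, assuming the 8 x 8.5 display area is measured in inches (the text does not state the unit) and treating the approximate viewing distance as exactly 24 inches. The function name `visual_angle_deg` is illustrative.

```python
import math


def visual_angle_deg(size: float, distance: float) -> float:
    """Visual angle in degrees subtended by an extent `size` seen from `distance`.

    Both arguments must be expressed in the same unit.
    """
    return math.degrees(2 * math.atan(size / (2 * distance)))


# Assumed values: 8 x 8.5 display area (unit assumed to be inches), viewed from 24 inches.
width_angle = visual_angle_deg(8.0, 24.0)
height_angle = visual_angle_deg(8.5, 24.0)
average_angle = (width_angle + height_angle) / 2

print(f"width: {width_angle:.1f}, height: {height_angle:.1f}, average: {average_angle:.1f}")
```

Under these assumptions the computed average falls slightly below the reported 20.3 degrees. A somewhat shorter viewing distance would close the gap, which is compatible with the distance being reported as approximate.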

Stimuli

The Philips iCat robot is equipped with 11 servos that control different features of the face, such as the eyebrows, eyes, eyelids, mouth, and head position. The Virtual iCat, used in this study, is a 2D animated replica of the iCat robot, capable of creating the same facial expressions with the same level of control. The Virtual iCat emotions were created using Philips's Open Platform for Personal Robotics (OPPR) software. OPPR includes a Robot Animation Editor for creating animations, which provides control over each individual servo.

Emotions and Intensity

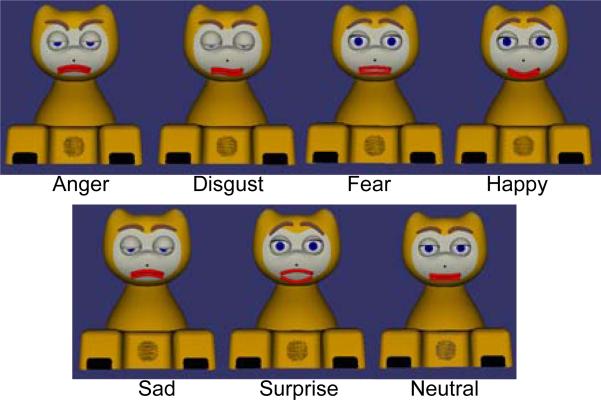

Six emotions were displayed (happiness, surprise, fear, anger, sadness and disgust), as well as a neutral expression (see Figure 1). The emotions were supplied by the OPPR software, with a pre-defined arrangement of facial features shown to commonly represent the intended emotions (Bartneck, Reichenbach & Breemen, 2004).

Figure 1.

The six basic emotions at maximum (i.e., 100%) intensity and neutral.

Each emotion was presented at five intensity levels (20%, 40%, 60%, 80%, 100%). The experimenter created each emotion's geometrical intensity levels by dividing the spatial difference of each facial component (i.e., lips, eyebrows, eyelids) between the neutral state and maximum (i.e., 100%) expression at 20% intervals.
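The intensity manipulation described above amounts to linear interpolation between the neutral pose and the full expression. The following sketch illustrates the idea under stated assumptions: the component names and numeric positions are hypothetical placeholders (arbitrary units), not values from the OPPR software, and `interpolate_expression` is an illustrative helper rather than the experimenters' actual procedure.

```python
def interpolate_expression(neutral, maximum, intensity):
    """Linearly interpolate each facial component between neutral and full expression.

    `intensity` is a fraction in [0, 1]: 0 gives the neutral pose, 1 the maximum pose.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    return {
        part: neutral[part] + intensity * (maximum[part] - neutral[part])
        for part in neutral
    }


# Hypothetical component positions in arbitrary units (not taken from OPPR).
neutral_pose = {"lips": 0.0, "eyebrows": 10.0, "eyelids": 5.0}
max_pose = {"lips": 20.0, "eyebrows": 30.0, "eyelids": 0.0}

# One pose per intensity level used in the study.
levels = {
    pct: interpolate_expression(neutral_pose, max_pose, pct / 100)
    for pct in (20, 40, 60, 80, 100)
}

for pct, pose in levels.items():
    print(pct, pose)
```

Each step of 20% moves every component by one fifth of its neutral-to-maximum distance, so the 100% level reproduces the maximum pose.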

Design

A mixed 6 (Emotion Type) x 5 (Intensity) x 2 (Age) factorial design was used. Emotion Type and Intensity were within-subject variables, and Age was a grouping variable. Dependent measures were Recognition Accuracy and Response Time for making an emotion identification response. Recognition Accuracy is the primary focus of this report.

Procedure

Participants completed an informed consent form outlining the general aspects of the study as well as their rights as participants. The participants were then trained on the task by completing 30 practice trials in which they were presented with a single word on the computer screen (surprise, happiness, fear, sadness, disgust, or anger) and asked to press the corresponding labeled key on the keyboard. The practice trials were designed to allow each participant to become familiar with the keyboard and learn where the labeled keys were located.

After completion of the practice trials, the participants began the experimental session. Participants were randomly presented with pictures of the Virtual iCat. The stimuli were presented in 4 blocks, with 40 pictures in each block (emotion word order was randomized). Within each block, each intensity level of every emotion was presented once; the neutral expression was presented 10 times. Sequence controls ensured that the same emotion was shown no more than twice in a row. Self-paced breaks were offered between blocks, as well as in the middle of each block.
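The block structure described above (6 emotions x 5 intensities plus 10 neutral trials, with a limit on consecutive repeats) can be sketched with simple rejection sampling. This is an illustration only: the study used E-Prime, and the text does not say how its sequence controls were implemented or whether the limit also applied to neutral trials. The sketch assumes it applies to every expression label, and all function names are hypothetical.

```python
import random

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]
INTENSITIES = [20, 40, 60, 80, 100]
NEUTRAL_REPEATS = 10
MAX_RUN = 2  # no more than two of the same expression in a row


def has_long_run(trials, max_run=MAX_RUN):
    """Return True if any expression label occurs more than `max_run` times in a row."""
    run = 1
    for prev, cur in zip(trials, trials[1:]):
        run = run + 1 if cur[0] == prev[0] else 1
        if run > max_run:
            return True
    return False


def build_block(rng, max_attempts=10_000):
    """Shuffle one 40-trial block, reshuffling until the run constraint is met."""
    trials = [(emotion, level) for emotion in EMOTIONS for level in INTENSITIES]
    trials += [("neutral", 0)] * NEUTRAL_REPEATS
    for _ in range(max_attempts):
        rng.shuffle(trials)
        if not has_long_run(trials):
            return list(trials)
    raise RuntimeError("no valid ordering found")


rng = random.Random(0)  # fixed seed only to make the sketch reproducible
blocks = [build_block(rng) for _ in range(4)]
print(len(blocks), "blocks of", len(blocks[0]), "trials")
```

Rejection sampling is adequate here because a large share of random orderings already satisfies the constraint, so only a few reshuffles are typically needed.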

Participants were first asked if emotion was present in the picture (yes/no response). If they answered ‘yes’ that there was emotion present, they were then asked to identify the emotion by selecting the correct basic emotion labeled on a keyboard. Immediately following the experimental session, the participants were debriefed and compensated for their time.

RESULTS

The focus of the present analysis is on trials where participants reported that an emotion was present. A 6 (Emotion Type) x 5 (Intensity) x 2 (Age) analysis of variance (ANOVA) was conducted; Age was a grouping variable, and Emotion Type and Intensity were within-subject variables. Huynh-Feldt corrections were applied where appropriate. The ANOVA revealed that the mean scores for age groups differed significantly, F(1,38) = 23.44, p < .001. The mean scores for the six emotions differed significantly, F(5,190) = 41.14, p < .001. The analysis also revealed that the mean scores for intensity level were significantly different, F(4,152) = 186.81, p < .001. The interaction between emotion type and age group was significant, F(5,190) = 4.82, p < .01. There was also a significant interaction between intensity and age group, F(4,152) = 5.84, p < .001, and between intensity and emotion type, F(20,760) = 10.81, p < .001. The analysis also revealed a significant three-way interaction between age group, intensity level, and emotion type, F(20,760) = 3.78, p < .001.
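The degrees of freedom reported for the omnibus ANOVA can be checked from the design alone. The following sketch applies the standard formulas for a mixed design with one between-subjects factor and two within-subject factors, using the sample size and factor levels given in the Method section. It reproduces the uncorrected degrees of freedom only; any Huynh-Feldt adjustment would scale the within-subject values.

```python
n_subjects, n_groups = 40, 2            # 20 younger + 20 older adults
emotion_levels, intensity_levels = 6, 5

df_age = n_groups - 1                   # between-subjects effect
df_subjects = n_subjects - n_groups     # between-subjects error
df_emotion = emotion_levels - 1
df_intensity = intensity_levels - 1
df_emo_int = df_emotion * df_intensity

# (effect df, error df) for each term; a within-subject effect and its
# interaction with Age share the same error term.
dfs = {
    "Age": (df_age, df_subjects),
    "Emotion": (df_emotion, df_emotion * df_subjects),
    "Intensity": (df_intensity, df_intensity * df_subjects),
    "Emotion x Age": (df_emotion * df_age, df_emotion * df_subjects),
    "Intensity x Age": (df_intensity * df_age, df_intensity * df_subjects),
    "Emotion x Intensity": (df_emo_int, df_emo_int * df_subjects),
    "Emotion x Intensity x Age": (df_emo_int * df_age, df_emo_int * df_subjects),
}

for effect, (df_effect, df_error) in dfs.items():
    print(f"{effect}: F({df_effect},{df_error})")
```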

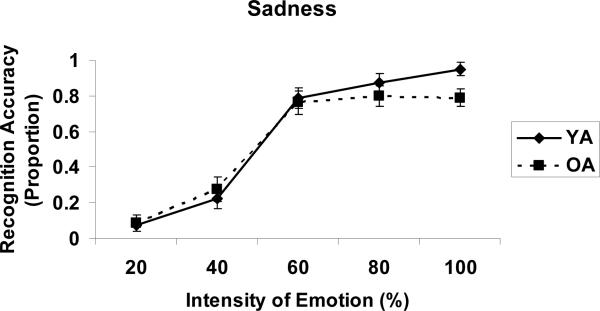

To address whether emotion recognition accuracy differed as a function of age and intensity, separate Age x Intensity Level ANOVAs were conducted for each emotion type. The main effect of emotion intensity was significant for all emotions: surprise, F(4,152) = 14.64, p < .001; anger, F(4,152) = 24.11, p < .01; happiness, F(4,152) = 6.84, p < .01; fear, F(4,152) = 26.03, p < .001; disgust, F(4,152) = 21.21, p < .001; and sadness, F(4,152) = 126.71, p < .001, indicating that both age groups’ recognition accuracy increased as the intensity of the emotion increased.

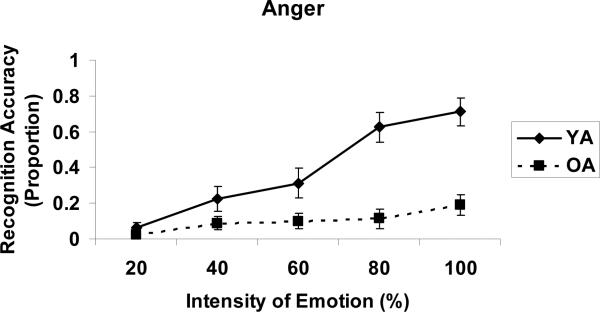

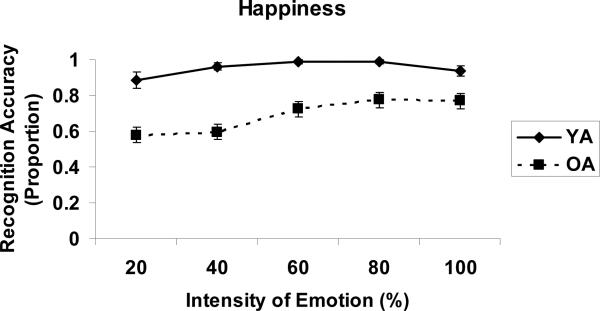

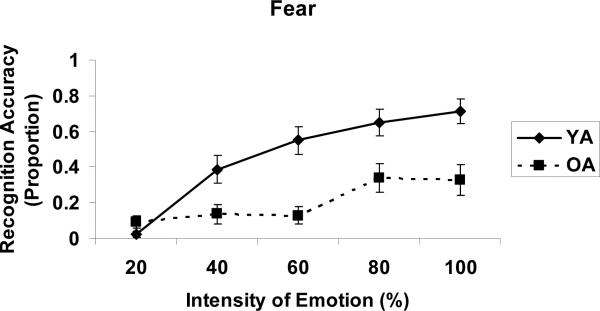

As expected, there were age-related differences in emotion recognition accuracy. A main effect of Age Group was found for the emotions anger, F(1,38) = 23.18, p < .001, happiness, F(1,38) = 13.53, p < .001, and fear, F(1,38) = 6.92, p < .001, with older adults being less accurate at identifying the emotion. An Emotion Type by Age Group interaction was examined, and indicated a significant interaction for the emotions anger, F(4,38) = 23.18, p < .001, and fear, F(4,38) = 15.56, p < .001. The Emotion by Age Group interaction for happiness was not statistically significant, F(4,38) = 2.73, p = .055. Recognition accuracy for all intensity levels for each separate emotion can be seen in Figures 2 through 7.

Figure 2.

Recognition accuracy of anger for young and older adults (error bars indicate standard error).

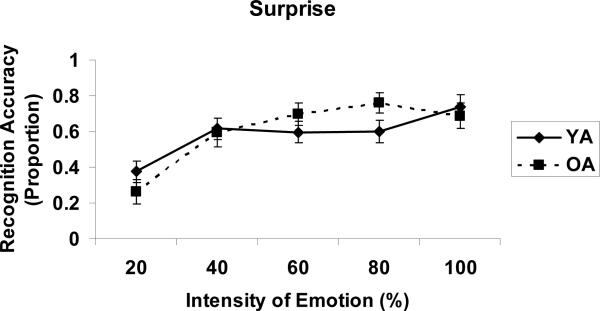

Figure 7.

Recognition accuracy of surprise for young and older adults (error bars indicate standard error).

Post hoc, independent samples t tests were conducted to further explore the interaction between age and intensity for each emotion type, with Bonferroni corrections used where appropriate. The post hoc analysis indicated that younger adults showed a significantly greater recognition accuracy benefit for the emotion anger at 80% and 100% intensity levels, and for the emotion fear at 60%, 80%, and 100% intensity levels. The emotion happiness demonstrated a ceiling effect for younger adults (demonstrating accuracy of 89% or higher for all intensities, see Figure 5), resulting in a significant age-related difference for all intensities except 100% (see Table 1).

Figure 5.

Recognition accuracy of happiness for young and older adults (error bars indicate standard error).

Table 1.

Differences in recognition accuracy between age groups.

| Intensity % | Anger t(1,38) | Anger p | Fear t(1,38) | Fear p | Happiness t(1,38) | Happiness p |
|---|---|---|---|---|---|---|
| 20 | 1.06 | .296 | −1.74 | .092 | 3.20 | .003* |
| 40 | 1.73 | .094 | 2.63 | .012 | 4.26 | .001* |
| 60 | 2.24 | .033 | 4.52 | .001* | 3.69 | .001* |
| 80 | 5.25 | .001* | 2.85 | .007* | 2.90 | .009* |
| 100 | 5.46 | .001* | 3.46 | .001* | 1.92 | .066 |

*p < .01
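The significance threshold used in Table 1 is consistent with a Bonferroni correction over the five intensity comparisons within each emotion. The sketch below rests on an assumption the text does not confirm: a familywise error rate of .05, which divided by five comparisons gives a per-test threshold of .01. The p values are copied from Table 1 as printed.

```python
FAMILY_ALPHA = 0.05  # assumed familywise error rate; not stated in the text
INTENSITIES = [20, 40, 60, 80, 100]

# p values from Table 1, one list per emotion, ordered by intensity level.
p_values = {
    "anger": [0.296, 0.094, 0.033, 0.001, 0.001],
    "fear": [0.092, 0.012, 0.001, 0.007, 0.001],
    "happiness": [0.003, 0.001, 0.001, 0.009, 0.066],
}

# Bonferroni: divide the familywise alpha by the number of comparisons.
per_test_alpha = FAMILY_ALPHA / len(INTENSITIES)

significant = {
    emotion: [level for level, p in zip(INTENSITIES, ps) if p < per_test_alpha]
    for emotion, ps in p_values.items()
}

for emotion, levels in significant.items():
    print(emotion, levels)
```

Under this assumption the flagged intensity levels match those described in the text: 80% and 100% for anger, 60% through 100% for fear, and every level except 100% for happiness.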

DISCUSSION

The purpose of this study was to investigate whether age-related differences in facial emotion recognition commonly found for human faces are also found in emotion-expressive virtual agents. The results for the virtual agent did reveal some age-related differences. In contrast to younger adults, older adults showed lowered emotion recognition accuracy for the Virtual iCat's emotions of anger, fear, and happiness.

Particularly for the emotions anger and fear, the results are aligned with previous research, indicating that older adults show impairment in identifying these emotions in human faces (Isaacowitz et al., 2007; Ruffman et al., 2008). However, the human facial recognition literature suggests that older adults generally show impairment in identifying the emotion sadness (Ruffman et al., 2008). This age-related difference was not found in this study.

Most surprising was the significant difference between age groups for the emotion happiness, with older adults showing lower accuracy. This finding was unexpected because previous research on emotion recognition of human faces has repeatedly found an absence of age-related differences for the recognition of happiness (Ruffman et al., 2008).

Differences between the results from this study and the age-related recognition differences often cited in the literature suggest that while basing agent facial expressions on human expressions may be a good place to start, the virtual design community may need data that transcend human faces.

Extending emotion identification research to include social agent emotions is an important advance in the study of emotion recognition. Age-related differences in emotion recognition of human faces, as reflected in the literature (Sullivan & Ruffman, 2004), point to the need to also understand recognition of virtual agent facial expressions. The findings from this study are particularly important because they provide evidence that some age-related differences in emotion identification may not be exclusive to human faces (i.e., age-related recognition differences of anger and fear).

By extending emotion recognition research to the field of social agents, researchers can examine the extent to which age-related differences in emotion recognition are generalizable to biologically inspired emotive agents. In an applied setting, this area of research is an important step in understanding how older adults may perceive emotionally expressive agents, such as assistive robots. These data have implications for designing assistive personal agents for older users. For example, developers might believe that negative emotions in a robot or virtual agent would be useful for conveying an error or a misunderstanding. However, if older adults do not accurately recognize such emotions, they may not interpret the intended message correctly. By investigating age-related differences in the emotion recognition of virtual agents, we have taken steps toward enriching the facial expression principles that designers use in developing emotionally expressive robots, virtual agents, and avatars.

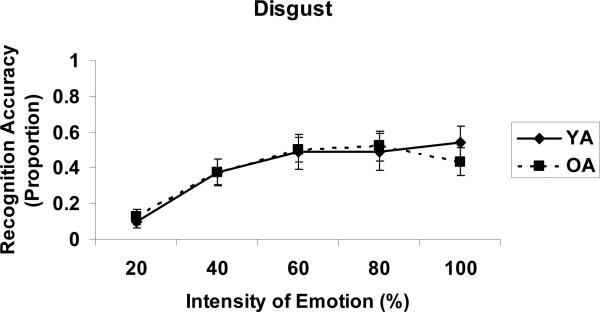

Figure 3.

Recognition accuracy of disgust for young and older adults (error bars indicate standard error).

Figure 4.

Recognition accuracy of fear for young and older adults (error bars indicate standard error).

Figure 6.

Recognition accuracy of sadness for young and older adults (error bars indicate standard error).

ACKNOWLEDGEMENTS

This research was supported in part by a grant from the National Institutes of Health (National Institute on Aging) Grant P01 AG17211 under the auspices of the Center for Research and Education on Aging and Technology Enhancement (CREATE).

REFERENCES

- Bartneck C, Reichenbach J, Breemen A.v. In your face robot! The influence of a character's embodiment on how users perceive its emotional expressions. Proceedings of the Design and Emotion; Ankara. 2004.

- Breazeal C. Emotion and sociable humanoid robots. International Journal of Human-Computer Studies. 2002;59:119–155.

- Cassell J, Sullivan J, Prevost S, Churchill E. Embodied conversational agents. MIT Press; Cambridge, MA: 2000.

- Collier G. Emotional expression. Lawrence Erlbaum Associates, Inc., Publishers; Hillsdale, NJ: 1985.

- Gitlin LN. Conducting research on home environments: Lessons learned and new directions. The Gerontologist. 2003;43(5):628–637. doi: 10.1093/geront/43.5.628.

- Isaacowitz DM, Löckenhoff CE, Lane RD, Wright R, Sechrest L, Riedel R, et al. Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging. 2007;22(1):147–159. doi: 10.1037/0882-7974.22.1.147.

- Nass C, Moon Y, Fogg BJ, Reeves B. Can computer personalities be human personalities? International Journal of Human-Computer Studies. 1995;43(2):223–239.

- Psychological Software Tools. E-Prime version 2.0. 2008. http://www.pstnet.com (Computer software and manuals)

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews. 2008;32(4):863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Sullivan S, Ruffman T. Emotion recognition deficits in the elderly. International Journal of Neuroscience. 2004;114(3):403–432. doi: 10.1080/00207450490270901.