Abstract

The sufficient-component cause framework assumes the existence of sets of sufficient causes that bring about an event. For a binary outcome and an arbitrary number of binary causes any set of potential outcomes can be replicated by positing a set of sufficient causes; typically this representation is not unique. A sufficient cause interaction is said to be present if within all representations there exists a sufficient cause in which two or more particular causes are all present. A singular interaction is said to be present if for some subset of individuals there is a unique minimal sufficient cause. Empirical and counterfactual conditions are given for sufficient cause interactions and singular interactions between an arbitrary number of causes. Conditions are given for cases in which none, some or all of a given set of causes affect the outcome monotonically. The relations between these results, interactions in linear statistical models and Pearl’s probability of causation are discussed.

Keywords: causal inference, counterfactual, epistasis, interaction, potential outcomes, synergism

1. Introduction

Rothman’s sufficient-component cause model [21] postulates a set of different causal mechanisms each sufficient to bring about the outcome under consideration. Rothman refers to these hypothesized causal mechanisms as “sufficient causes”, conceiving of them as minimal sets of actions, events or states of nature which together initiate a process resulting in the outcome.

Thus each sufficient cause is hypothesized to consist of a set of “component causes”. Whenever all components of a particular sufficient cause are present, the outcome occurs; within every sufficient cause, each component would be necessary for that sufficient cause to lead to the outcome. Models of this kind have a long history: a simple version is considered by Cayley [3]; it also corresponds to the INUS model introduced by Mackie [11] in the philosophical literature. Much recent work has sought to relate the model to other causal modelling frameworks [7, 8, 29, 31, 32].

In traditional sufficient-component cause [SCC] models the outcome and all the component causes are events, or equivalently, binary random variables. An SCC model with k component causes implies a set of $2^k$ potential outcomes. Conversely, in section 2 we show that for any given list of potential outcomes there is at least one SCC model which represents this set. However, in general there may be many such SCC models.

One question concerns whether, given a set of potential outcomes implied by some (unknown) SCC model, one may infer that two component causes are present within some sufficient cause in the unknown SCC model. In general, two SCC models may imply the same set of potential outcomes even though A and B occur together in some sufficient cause in the first model but in no sufficient cause in the second. In [32] two component causes are said to form a ‘sufficient cause interaction’ (or to be ‘irreducible’) if they are both present within at least one sufficient cause in every SCC model for a given set of potential outcomes. Of course, in general, the distribution of potential outcomes for a given population is also unknown, though it is constrained (marginally) by the observed data from a randomized experiment. In [32] empirical conditions are given which are sufficient to ensure that for any set of potential outcomes compatible with experimental data, all compatible SCC models will contain a sufficient cause involving A and B. These results improve upon earlier empirical tests for the existence of a two-way interaction in an SCC model [22], which required the assumption of monotonicity; see also [1, 9, 10, 15, 30]. The new results can establish the existence of an interaction in situations where monotonicity does not hold. In this paper we develop empirical conditions that are sufficient for the existence of a sufficient cause containing a given subset of an arbitrary number of variables, both with and without monotonicity assumptions.

As illustrative motivation for the theoretical development we will consider data from a case-control study of bladder cancer [26], summarized in Table 1, which examined possible three-way interaction between smoking (1 = present) and genetic variants on NAT2 (0 = R, 1 = S genotype) and NAT1 (1 for the *10 allele) among Caucasian individuals. We return to this example at the end of this paper to examine the evidence for a sufficient cause containing all three of smoking, the S genotype on NAT2 and the *10 allele on NAT1.

Table 1.

| Smoking | NAT2 | NAT1*10 | Cases (n = 215) | Controls (n = 191) | Odds ratio (95% CI) |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 6 | 13 | 1 (reference) |
| 0 | 0 | 1 | 8 | 16 | 1.1 (0.3, 3.9) |
| 0 | 1 | 0 | 16 | 31 | 1.1 (0.4, 3.5) |
| 0 | 1 | 1 | 6 | 10 | 1.3 (0.3, 5.3) |
| 1 | 0 | 0 | 42 | 32 | 2.8 (1.1, 8.3) |
| 1 | 0 | 1 | 41 | 26 | 3.4 (1.2, 10.1) |
| 1 | 1 | 0 | 61 | 51 | 2.6 (0.9, 7.3) |
| 1 | 1 | 1 | 35 | 12 | 6.3 (2.0, 20.3) |
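As a quick check on Table 1, the odds ratios can be recomputed from the cell counts. The sketch below computes the crude odds ratio of each exposure cell relative to the unexposed cell (0, 0, 0); the names `TABLE1` and `odds_ratios` are ours, not from [26]. It reproduces the point estimates in the table; the published confidence intervals may have been obtained by a different method, so they are not recomputed here.

```python
# Cell counts from Table 1, keyed by (smoking, NAT2, NAT1*10) -> (cases, controls).
TABLE1 = {
    (0, 0, 0): (6, 13),
    (0, 0, 1): (8, 16),
    (0, 1, 0): (16, 31),
    (0, 1, 1): (6, 10),
    (1, 0, 0): (42, 32),
    (1, 0, 1): (41, 26),
    (1, 1, 0): (61, 51),
    (1, 1, 1): (35, 12),
}


def odds_ratios(table, reference=(0, 0, 0)):
    """Crude odds ratio of each exposure cell relative to the reference cell."""
    ref_cases, ref_controls = table[reference]
    ref_odds = ref_cases / ref_controls
    return {cell: (cases / controls) / ref_odds
            for cell, (cases, controls) in table.items()}


if __name__ == "__main__":
    for cell, value in sorted(odds_ratios(TABLE1).items()):
        print(cell, round(value, 1))
```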

The remainder of this paper is organized as follows: Section 2 presents the sufficient-component cause framework as formalized by VanderWeele and Robins [32]. Section 3 describes general n-way irreducible interactions (aka ‘sufficient cause interactions’) and characterizes these in terms of potential outcomes. Section 4 derives empirical conditions for the existence of irreducible interactions both with and without monotonicity assumptions. Section 5 describes ‘singular’ interactions which arise in genetic contexts, provides a characterization, derives empirical conditions that are sufficient for their existence, and relates this notion to Pearl’s probability of causation. Section 6 discusses the relation between singular and sufficient cause interactions and linear statistical models. Section 7 provides some comments regarding stronger interpretations of sufficient cause models, and returns to the data presented in Table 1. Finally section 8 offers some possible extensions to the present work.

2. Notation and Basic Concepts

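We will use the following notation: An event is a binary random variable taking values in {0, 1}. We use uppercase roman to indicate events (X), boldface to indicate sets of events (C), and lowercase to indicate specific values both for single random variables (X = x), and, with slight abuse of notation, for sets {C = c} ≡ {∀i, (C)i = (c)i}, and {a ≤ b} ≡ {∀i, (a)i ≤ (b)i}; 1 and 0 are vectors of 1’s and 0’s; the cardinality of a set is denoted |C|. We use fraktur ($\mathfrak{B}$) to denote collections of sets of events.

The complement of some event X is denoted by $\bar{X} \equiv 1 - X$. A literal event associated with X is either X or $\bar{X}$. For a given set of events C, $\mathbb{L}(\mathbf{C})$ is the associated set of literal events:

$$\mathbb{L}(\mathbf{C}) \equiv \mathbf{C} \cup \{\bar{X} \mid X \in \mathbf{C}\}.$$

For a literal $L \in \mathbb{L}(\mathbf{C})$, and an assignment c to C, (L)c denotes the value assigned to L by c. The conjunction of a set of literal events $\mathbf{B} = \{F_1, \ldots, F_m\} \subseteq \mathbb{L}(\mathbf{C})$ is defined as:

$$\bigwedge(\mathbf{B}) \equiv \prod_{i=1}^{m} F_i = \min\{F_1, \ldots, F_m\};$$

note that Λ(B) = 1 iff for all i, Fi = 1. We also define B1 ∧ B2 ≡ Λ{B1, B2}. We will use $\mathbb{I}(A)$ to denote the indicator function for event A. There is a simple correspondence between conjunctions of literals and indicator functions: let B = {X1, …, Xs} and C = {Y1, …, Yt}, then

$$\bigwedge(\{X_1, \ldots, X_s, \bar{Y}_1, \ldots, \bar{Y}_t\}) = \mathbb{I}(\mathbf{B} = \mathbf{1}, \mathbf{C} = \mathbf{0}). \tag{2.1}$$

Similarly, the set of literals corresponding to an assignment c to C is defined:

$$\mathbf{B}[\mathbf{c}] \equiv \{X \mid X \in \mathbf{C}, (X)_{\mathbf{c}} = 1\} \cup \{\bar{X} \mid X \in \mathbf{C}, (X)_{\mathbf{c}} = 0\},$$

so that $\bigwedge(\mathbf{B}[\mathbf{c}]) = \mathbb{I}(\mathbf{C} = \mathbf{c})$; note that |B[c]| = |C|. The disjunction of a set of binary random variables is defined as:

$$\bigvee(\{Z_1, \ldots, Z_p\}) \equiv 1 - \prod_{j=1}^{p} (1 - Z_j) = \max\{Z_1, \ldots, Z_p\};$$

note that ∨({Z1, …, Zp}) = 1 iff for some j, Zj = 1. Similarly we let B1 ∨ B2 ≡ ∨{B1, B2}. Given a collection of sets of literals $\mathfrak{B} = \{\mathbf{B}_1, \ldots, \mathbf{B}_n\}$ we define:

$$\bigvee\bigwedge(\mathfrak{B}) \equiv \bigvee(\{\bigwedge(\mathbf{B}_1), \ldots, \bigwedge(\mathbf{B}_n)\}).$$

We use $\mathbb{P}(\mathbb{L}(\mathbf{C}))$ to denote the set of subsets of $\mathbb{L}(\mathbf{C})$ that do not contain both X and $\bar{X}$ for any X ∈ C; more formally:

$$\mathbb{P}(\mathbb{L}(\mathbf{C})) \equiv \{\mathbf{B} \mid \mathbf{B} \subseteq \mathbb{L}(\mathbf{C}),\ \text{there is no } X \in \mathbf{C} \text{ with } \{X, \bar{X}\} \subseteq \mathbf{B}\}.$$

Note that if $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$ and |B| = |C|, so that for all C ∈ C exactly one of C or $\bar{C}$ is in B, then an assignment of values b to B induces a unique assignment c to C and vice versa.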
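The notation above translates directly into code. In this sketch, assumed for illustration only, a literal is encoded as a pair (i, v) with causes indexed from 0, where v = 1 stands for the cause itself and v = 0 for its complement, and an assignment c is a tuple of 0/1 values. The helpers (hypothetical names) implement (L)c, the conjunction, the disjunction of conjunctions, B[c] and the family $\mathbb{P}(\mathbb{L}(\mathbf{C}))$; the last has $3^{|\mathbf{C}|}$ members, since each cause is absent, present, or present in complemented form.

```python
from itertools import product

# Encoding assumed for this sketch: a literal is a pair (i, v) over causes indexed
# 0..n-1; (i, 1) stands for X_{i+1} and (i, 0) for its complement. An assignment c
# is a tuple of 0/1 values, one per cause.


def literal_value(lit, c):
    """(L)_c: the value that assignment c gives to literal L."""
    i, v = lit
    return int(c[i] == v)


def conj(B, c):
    """(AND(B))_c: 1 iff every literal in B is 1 under c (the empty conjunction is 1)."""
    return int(all(literal_value(lit, c) for lit in B))


def disj_conj(family, c):
    """(OR AND(family))_c: 1 iff at least one set of literals in the family holds under c."""
    return int(any(conj(B, c) for B in family))


def literals_of(c):
    """B[c]: the |C| literals that take the value 1 under c."""
    return frozenset((i, ci) for i, ci in enumerate(c))


def consistent_subsets(n):
    """P(L(C)) for |C| = n: each cause is absent, present as X, or present as its complement."""
    return [frozenset((i, v) for i, v in enumerate(choice) if v is not None)
            for choice in product((None, 0, 1), repeat=n)]


if __name__ == "__main__":
    print(len(consistent_subsets(3)))  # 27 = 3 ** 3
```

The check that Λ(B[a]) evaluated at b equals 1 exactly when a = b is the code analogue of the identity $\bigwedge(\mathbf{B}[\mathbf{c}]) = \mathbb{I}(\mathbf{C} = \mathbf{c})$.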

2.1. Potential Outcomes Models

Consider a potential outcome model [14, 23, 24] with s binary factors, X1, …, Xs, which represent hypothetical interventions or causes and let D denote some binary outcome of interest. We use Ω to denote the sample space of individuals in the population and use ω for a particular sample point. Let Dx1…xs(ω) denote the counterfactual value of D for individual ω if the cause Xj were set to the value xj for j = 1, …, s. The potential outcomes framework we employ makes two assumptions: first, that for a given individual these counterfactual variables are deterministic; second, in asserting that the counterfactual Dx1…xs(ω) is well-defined, it is implicitly assumed that the value that D would take on for individual ω is determined solely by the values that X1, …, Xs are assigned for this individual, and not the assignments made to these variables for other individuals ω′. This latter assumption is often called ‘no interference’ [6], or the Stable Unit Treatment Value Assumption (SUTVA) [25]. An example of a situation where this assumption might fail is a vaccine trial where there is ‘herd’ immunity.

We will use Dx1…xs(ω), DX1=x1,…,Xs=xs(ω), Dc(ω) and DC=c(ω), with C = {X1, …, Xs}, interchangeably. In this setting there will be $2^s$ potential outcomes for each individual ω in the population, one potential outcome for each possible value of (X1, …, Xs); we use $\mathbb{D}(\mathbf{C}, \omega)$ to denote the set of all such potential outcomes for an individual, and $\mathbb{D}(\mathbf{C}, \Omega)$ for the population. Note that if G = g(C) is some deterministic function of C then GC=c(ω) = g(c), and hence is constant; thus our usage is consistent with the definition of (L)c in the previous section.

The actual observed value of D for individual ω will be denoted by D(ω) and similarly the actual value of X1, …, Xs by X1(ω), …, Xs(ω). Actual and counterfactual outcomes are linked by the Consistency Axiom which requires that

$$D_{X_1(\omega), \ldots, X_s(\omega)}(\omega) = D(\omega), \tag{2.2}$$

i.e. that the value of D which would have been observed if X1, …, Xs had been set to the values they actually took is equal to the value of D which was in fact observed. It follows from this axiom that the potential outcome $D_{X_1(\omega), \ldots, X_s(\omega)}(\omega)$ is observed, but it is the only potential outcome for individual ω that is observed.

Example 1. Consider a binary outcome D with three binary causes of interest, X1, X2 and X3. Suppose that the population consists of two individuals. The potential outcomes (LHS) and actual outcomes (RHS) are shown in Table 2.

Table 2.

All potential outcomes and actual outcomes for three binary causes X1, X2 and X3, in a population with two individuals.

| Individual | D000 | D001 | D010 | D011 | D100 | D101 | D110 | D111 | (X1, X2, X3) | D |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | (1, 0, 1) | 1 |
| 2 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | (0, 0, 0) | 0 |

We use the notation A ⫫ B | C to indicate that A is independent of B conditional on C in the population distribution.

2.2. Definitions for sufficient cause models

The following definitions generalize those in [32] to non-empty sub-populations Ω* ⊆ Ω:

Definition 2.1 (Sufficient Cause). A subset B of the putative (binary) causes for D forms a sufficient cause for D (relative to C) in sub-population Ω* if for all c ∈ {0, 1}|C| such that (Λ(B))c = 1, Dc(ω) = 1 for all ω ∈ Ω* ⊆ Ω. (We assume that there exists a c* such that (Λ(B))c* = 1.)

Observe that if B is a sufficient cause for D then any intervention setting the variables C to c with (Λ(B))c = 1 will ensure that Dc(ω) = 1 for all ω ∈ Ω*. We restrict the definition to non-empty sets Ω*, to preclude every set B being a sufficient cause in an empty sub-population. Likewise we require that there exists some c* such that (Λ(B))c* = 1 in order to avoid logically inconsistent conjunctions, e.g. $\{X, \bar{X}\}$, being classified (vacuously) as a sufficient cause. As a direct consequence, for any binary random variable X, at most one of X and $\bar{X}$ appears in any sufficient cause B.

Proposition 2.2. In Ω* if B is a sufficient cause for D relative to C then B is sufficient for D in any set C* with B ⊆ C* ⊆ C.

B may be sufficient for D relative to C in Ω*, but not relative to C′ ⊃ C.

Proposition 2.3. If B is a sufficient cause for D relative to C in Ω* then B is sufficient for D relative to C in any non-empty subset Ω** ⊆ Ω*.

B may be sufficient for D relative to C in Ω*, but not in Ω′ ⊃ Ω*.

Definition 2.4 (Minimal Sufficient Cause). A set $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$ forms a minimal sufficient cause for D (relative to C) in sub-population Ω* if B constitutes a sufficient cause for D in Ω* but no proper subset B* ⊂ B also forms a sufficient cause for D in Ω*.

Note that (in some Ω*) B may be a minimal sufficient cause for D relative to C, but not relative to C* ⊂ C, so the analog of Proposition 2.2 does not hold. For individual 2 in Table 2 {X1, X3} is a minimal sufficient cause relative to {X1, X2, X3}. However, if we suppose that for ω = 2, X2 is not caused by X1 and X3, so for all x1, x3, X2X1=x1,X3=x3(ω = 2) = X2(ω = 2), then {X1, X3} is not a minimal sufficient cause relative to {X1, X3}:

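$$D_{X_1 = x_1, X_3 = 1}(2) = D_{X_1 = x_1, X_2 = X_2(2), X_3 = 1}(2) = D_{x_1 0 1}(2) = 1 \quad \text{for } x_1 \in \{0, 1\},$$

where the first equality holds because intervening on X1 and X3 leaves X2 at its actual value, and the second since X2(ω = 2) = 0 (indeed D001(2) = D101(2) = 1). Hence X3 is a sufficient cause of D relative to {X1, X3}, so {X1, X3} is not minimal relative to {X1, X3} for ω = 2.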
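Definitions 2.1 and 2.4 can be checked by brute force when C is small. The following sketch (hypothetical helper names; literals encoded as pairs (i, v), with (0, 1) for X1 and (0, 0) for its complement) tests sufficiency and minimal sufficiency relative to C = {X1, X2, X3} for the individuals of Table 2. It confirms that {X1, X3} is a minimal sufficient cause for ω = 2 relative to C, but not a sufficient cause in Ω = {1, 2}. It does not model the reduced set {X1, X3}, which additionally needs the assumption that X2 is not causally influenced by X1 and X3.

```python
from itertools import combinations, product

# Potential outcomes D_{x1 x2 x3}(omega) for the two individuals of Table 2.
# Literals are encoded (an assumption of this sketch) as pairs (i, v):
# (0, 1) is X1, (0, 0) its complement, (1, 1) is X2, and so on.
ASSIGNMENTS = list(product((0, 1), repeat=3))
D = {
    1: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 0))),
    2: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 1))),
}


def holds(B, c):
    """True iff every literal in B equals 1 under assignment c."""
    return all(c[i] == v for i, v in B)


def is_sufficient(B, outcomes, individuals):
    """Definition 2.1, relative to all causes appearing in the assignment tuples."""
    if any(i == j and v != u for i, v in B for j, u in B):
        return False  # contains both X and its complement: excluded by definition
    return all(outcomes[w][c] == 1
               for w in individuals for c in outcomes[w] if holds(B, c))


def is_minimal_sufficient(B, outcomes, individuals):
    """Definition 2.4: B is sufficient and no proper subset of B is."""
    B = frozenset(B)
    if not is_sufficient(B, outcomes, individuals):
        return False
    return not any(is_sufficient(frozenset(S), outcomes, individuals)
                   for r in range(len(B)) for S in combinations(B, r))


if __name__ == "__main__":
    X1, X3 = (0, 1), (2, 1)
    print(is_minimal_sufficient({X1, X3}, D, [2]))  # True
    print(is_sufficient({X1, X3}, D, [1, 2]))       # False
```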

Similarly, if B is a minimal sufficient cause for D relative to C in Ω*, it does not follow that B is a minimal sufficient cause for D relative to C in subsets Ω** ⊆ Ω*, so the analog to Proposition 2.3 does not hold. In particular, it may be the case that for all ω ∈ Ω*, B is not a minimal sufficient cause for D in {ω}.

In the language of digital circuit theory [12], sufficient causes are termed ‘implicants’, and minimal sufficient causes are ‘prime implicants’.

Definition 2.5 (Determinative Set of Sufficient Causes). A set of sufficient causes for D, $\mathfrak{B} = \{\mathbf{B}_1, \ldots, \mathbf{B}_n\}$, is said to be determinative for D (relative to C) in sub-population Ω* if for all ω ∈ Ω* and for all c, Dc(ω) = 1 if and only if $(\bigvee\bigwedge(\mathfrak{B}))_{\mathbf{c}} = 1$.

We will refer to a determinative set of sufficient causes for D as a sufficient cause model. Observe that in any sub-population Ω* for which there exists a determinative set of sufficient causes, the vectors of potential outcomes for D are identical, so $\mathbb{D}(\mathbf{C}, \omega) = \mathbb{D}(\mathbf{C}, \omega')$ for all ω, ω′ ∈ Ω*.

Definition 2.6 (Non-redundant Set of Sufficient Causes). A determinative set of sufficient causes $\mathfrak{B}$ for D is said to be non-redundant (in Ω*, relative to C) if there is no proper subset $\mathfrak{B}' \subset \mathfrak{B}$ that is also determinative for D.

Note that sufficient causes are conjunctions, while sets of sufficient causes form disjunctions of conjunctions; minimality refers to the components in a particular conjunction, meaning that each component is required for the conjunction to be sufficient for D; non-redundancy means that each conjunction is required for the disjunction of the set of conjunctions to be determinative. If for some determinative set of sufficient causes $\mathfrak{B}$, for all X ∈ C and all $\mathbf{B} \in \mathfrak{B}$, either X ∈ B or $\bar{X}$ ∈ B, then $\mathfrak{B}$ is a non-redundant set of sufficient causes: each such B holds under exactly one assignment c, which no other member of $\mathfrak{B}$ covers.

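Example 1 (Revisited). The set $\mathfrak{B}_1 = \{\{X_1, X_2\}, \{X_2, \bar{X}_3\}, \{\bar{X}_2, X_3\}\}$ forms a determinative set of sufficient causes for the individual ω = 2, since:

$$D_{x_1 x_2 x_3}(2) = (x_1 \wedge x_2) \vee (x_2 \wedge \bar{x}_3) \vee (\bar{x}_2 \wedge x_3), \tag{2.3}$$

as does $\mathfrak{B}_2 = \{\{X_1, X_3\}, \{X_2, \bar{X}_3\}, \{\bar{X}_2, X_3\}\}$:

$$D_{x_1 x_2 x_3}(2) = (x_1 \wedge x_3) \vee (x_2 \wedge \bar{x}_3) \vee (\bar{x}_2 \wedge x_3). \tag{2.4}$$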

As this example shows, determinative sets of sufficient causes are not, in general, unique.
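The claims in (2.3) and (2.4) can be verified mechanically: both families reproduce the eight potential outcomes of individual 2, and removing any member breaks this, so both are non-redundant. A minimal sketch, with literals encoded (an assumption of the sketch) as pairs (i, v), where (i, 1) is X_{i+1} and (i, 0) its complement; the function names are hypothetical.

```python
from itertools import product

# Literal encoding assumed for this sketch: (i, 1) is X_{i+1}, (i, 0) its complement.
X1, X2, X3 = (0, 1), (1, 1), (2, 1)
NX2, NX3 = (1, 0), (2, 0)

# Potential outcomes of individual 2 in Table 2, keyed by (x1, x2, x3).
D2 = dict(zip(product((0, 1), repeat=3), (0, 1, 1, 0, 0, 1, 1, 1)))

B1 = [{X1, X2}, {X2, NX3}, {NX2, X3}]  # family in (2.3)
B2 = [{X1, X3}, {X2, NX3}, {NX2, X3}]  # family in (2.4)


def disj_conj(family, c):
    """1 iff some set of literals in the family holds under assignment c."""
    return int(any(all(c[i] == v for i, v in B) for B in family))


def is_determinative(family, outcomes):
    """Definition 2.5 for a single individual: the disjunction reproduces D_c for every c."""
    return all(disj_conj(family, c) == d for c, d in outcomes.items())


def is_non_redundant(family, outcomes):
    """Definition 2.6: determinative, and no proper subfamily is.

    Checking subfamilies with one member removed suffices: any subfamily lying
    between a determinative subfamily and the full family is itself determinative."""
    return is_determinative(family, outcomes) and not any(
        is_determinative(family[:k] + family[k + 1:], outcomes)
        for k in range(len(family)))


if __name__ == "__main__":
    print(is_non_redundant(B1, D2), is_non_redundant(B2, D2))  # True True
```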

2.3. Sufficient cause representations for a population

As noted, if B is a sufficient cause for D in Ω*, then all the units in Ω* will have D = 1 for any assignment c to C such that (Λ(B))c = 1. In most realistic settings it is unlikely that any set B will be sufficient to ensure D = 1 in an entire population. Consequently different sets of sufficient causes will be required within different sub-populations. A sufficient cause representation is a set of sub-populations, each with its own determinative set of sufficient causes:

Definition 2.7. A sufficient cause representation $(\mathbf{A}, \mathfrak{B})$ for $\mathbb{D}(\mathbf{C},\Omega)$ is an ordered set A = ⟨A1, …, Ap⟩ of binary random variables, with (Ai)c = Ai for all i, c, and a set $\mathfrak{B} = \langle \mathbf{B}_1, \ldots, \mathbf{B}_p \rangle$, with $\mathbf{B}_i \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$, such that for all ω, c, Dc(ω) = 1 ⇔ for some j, Aj(ω) = 1 and (∧(Bj))c = 1.

Note that the binary random variables Ai and the sets Bi are naturally paired via the orderings of A and $\mathfrak{B}$; we will refer to a pair (Ai, Bi) as occurring in the representation. The requirement that (Ai)c = Ai for all i, c implies that A ∩ C = ∅, and further that the Ai are unaffected by interventions on the Xi; this is in keeping with the interpretation of the Ai as defining pre-existing sub-populations with particular sets of potential outcomes for D.

Proposition 2.8. If $(\mathbf{A}, \mathfrak{B})$ is a sufficient cause representation for $\mathbb{D}(\mathbf{C},\Omega)$ then Bi is a sufficient cause of D in the sub-population in which Ai(ω) = 1.

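Proposition 2.9. If $(\mathbf{A}, \mathfrak{B})$ is a sufficient cause representation for $\mathbb{D}(\mathbf{C},\Omega)$, then for all A* ⊆ A, if

$$\Omega_{\mathbf{A}^*} \equiv \{\omega \mid A_i(\omega) = 1 \text{ for all } A_i \in \mathbf{A}^*,\ A_j(\omega) = 0 \text{ for all } A_j \in \mathbf{A} \setminus \mathbf{A}^*\} \neq \emptyset$$

then

$$\mathfrak{B}_{\mathbf{A}^*} \equiv \{\mathbf{B}_i \mid A_i \in \mathbf{A}^*\}$$

forms a determinative set of sufficient causes (relative to C) for $\Omega_{\mathbf{A}^*}$.

Note that $\Omega_{\mathbf{A}^*}$ consists of the sub-population in which Ai(ω) = 1 for all Ai ∈ A* and Aj(ω) = 0 for all Aj ∈ A\A*.

Proof: Suppose for some $\omega \in \Omega_{\mathbf{A}^*}$, $\mathbf{B}_j \in \mathfrak{B}_{\mathbf{A}^*}$, and c we have (Λ(Bj))c = 1. Since $A_j \in \mathbf{A}^*$, Aj(ω) = 1. It then follows from the definition of a sufficient cause representation that Dc(ω) = 1. Conversely, suppose Dc(ω) = 1. As $(\mathbf{A}, \mathfrak{B})$ is a sufficient cause representation, for some j, Aj(ω) = 1 and (Λ(Bj))c = 1. Since, by hypothesis, $\omega \in \Omega_{\mathbf{A}^*}$, it follows that Aj ∈ A*, hence $\mathbf{B}_j \in \mathfrak{B}_{\mathbf{A}^*}$.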

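Theorem 2.10. For any $\mathbb{D}(\mathbf{C},\Omega)$ there exists a sufficient cause representation $(\mathbf{A}, \mathfrak{B})$.

Proof: Let $p = 2^{|\mathbf{C}|}$, and define $\mathfrak{B} \equiv \{\mathbf{B} \mid \mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C})), |\mathbf{B}| = |\mathbf{C}|\}$, ordered arbitrarily. Further define $A_i(\omega) \equiv D_{\mathbf{B}_i = \mathbf{1}}(\omega)$. Given an arbitrary c, for some j, B[c] = Bj, by construction of $\mathfrak{B}$; moreover, since every Bi has |C| elements, (Λ(Bi))c = 1 iff Bi = B[c], i.e. iff i = j. We then have

$$\bigvee_{i=1}^{p} \big(A_i(\omega) \wedge (\bigwedge(\mathbf{B}_i))_{\mathbf{c}}\big) = A_j(\omega) = D_{\mathbf{B}[\mathbf{c}] = \mathbf{1}}(\omega) = D_{\mathbf{c}}(\omega),$$

as required. The last step follows since, by definition, Λ(B[c]) = 1 iff C = c, so setting B[c] to 1 is the same intervention as setting C to c.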

[32] proves this for the case of |C| = 2; see also [7] and [29] for discussion of the case |C| = 1.

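Example 1 (Revisited). The construction given in the proof of Theorem 2.10, with the assignments ordered from 000 to 111, would yield the following sets of sufficient causes to represent $\mathbb{D}(\mathbf{C},\Omega)$ shown in Table 2:

$$\mathfrak{B} = \langle \{\bar{X}_1, \bar{X}_2, \bar{X}_3\}, \{\bar{X}_1, \bar{X}_2, X_3\}, \{\bar{X}_1, X_2, \bar{X}_3\}, \{\bar{X}_1, X_2, X_3\}, \{X_1, \bar{X}_2, \bar{X}_3\}, \{X_1, \bar{X}_2, X_3\}, \{X_1, X_2, \bar{X}_3\}, \{X_1, X_2, X_3\} \rangle, \tag{2.5}$$

with A1 = A4 = A5 = 0, A2 = A3 = A6 = A7 = 1, and A8(ω) = D111(ω), so that A8(1) = 0 and A8(2) = 1.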
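The construction in the proof of Theorem 2.10 is easy to carry out explicitly. In this sketch (hypothetical names), each full-length conjunction B_i = B[c_i] is stored via its assignment c_i, and A_i(ω) is set to D_{c_i}(ω); the code checks that the resulting pairs reproduce every potential outcome in Table 2, and that the A_i take the values listed after (2.5).

```python
from itertools import product

# Potential outcomes for the two individuals of Table 2, keyed by (x1, x2, x3).
ASSIGNMENTS = list(product((0, 1), repeat=3))
D = {
    1: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 0))),
    2: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 1))),
}

# Construction from the proof of Theorem 2.10, with assignments in binary order:
# the i-th pair is (B_i, A_i), where B_i = B[c_i] is stored via its assignment c_i
# and A_i(omega) = D_{B_i = 1}(omega) = D_{c_i}(omega).
REPRESENTATION = [(c, {w: D[w][c] for w in D}) for c in ASSIGNMENTS]


def represented(w, c):
    """OR over i of (A_i(w) AND (AND(B_i))_c); B[c_i] holds under c iff c_i == c."""
    return int(any(A[w] == 1 and c_i == c for c_i, A in REPRESENTATION))


if __name__ == "__main__":
    for i, (c_i, A) in enumerate(REPRESENTATION, start=1):
        print(f"B_{i} = B[{c_i}]  A_{i}(1) = {A[1]}  A_{i}(2) = {A[2]}")
```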

3. Irreducible conjunctions

We saw in Example 1 above with ω = 2 that an individual’s potential outcomes may be such that there are two determinative sets of sufficient causes $\mathfrak{B}_1$ and $\mathfrak{B}_2$, and {X1, X2} is in $\mathfrak{B}_1$, but not in $\mathfrak{B}_2$. However, certain conjunctions are such that in every representation either the conjunction is present or it is contained in some larger conjunction; such conjunctions are said to be ‘irreducible’:

Definition 3.1. $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$ is said to be irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ if in every representation $(\mathbf{A}, \mathfrak{B})$ for $\mathbb{D}(\mathbf{C},\Omega)$ there exists $\mathbf{B}_i \in \mathfrak{B}$, with B ⊆ Bi.

[32] also refer to irreducibility of B for $\mathbb{D}(\mathbf{C},\Omega)$ as a ‘sufficient cause interaction’ between the components of B. (Note, however, that if B is irreducible, this does not in general imply that B is either a minimal sufficient cause, or even a sufficient cause, only that there is a sufficient cause that contains B.) It can be shown (via Theorem 3.2 below) that $\{X_2, \bar{X}_3\}$ and $\{\bar{X}_2, X_3\}$ are irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ in Table 2. In §7 we provide an interpretation of irreducibility in terms of the existence of a mechanism involving the variables in B. We now characterize irreducibility:

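Theorem 3.2. Let $\mathbf{C} = \mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$, $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}_1))$, |B| = |C1|; then B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ iff there exist ω* ∈ Ω and values $\mathbf{c}_2^*$ for C2 such that: (i) $D_{\mathbf{B} = \mathbf{1}, \mathbf{C}_2 = \mathbf{c}_2^*}(\omega^*) = 1$; (ii) for all L ∈ B, $D_{\mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{C}_2 = \mathbf{c}_2^*}(\omega^*) = 0$.

Here $\mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$ indicates the disjoint union of C1 and C2. Thus B is irreducible if and only if there exists an individual in Ω who would have response D = 1 if every literal in B is set to 1, but D = 0 whenever one literal is set to 0 and the rest to 1 (in some context $\mathbf{c}_2^*$). Note that conditions (i) and (ii) are equivalent to:

$$D_{\mathbf{B} = \mathbf{1}, \mathbf{C}_2 = \mathbf{c}_2^*}(\omega^*) - \sum_{L \in \mathbf{B}} D_{\mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{C}_2 = \mathbf{c}_2^*}(\omega^*) > 0. \tag{3.1}$$

Since every term is binary, the left-hand side is positive only if the first term is 1 and every term in the sum is 0.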

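Proof: (⇒) We adapt the proof of Theorem 2.10 to show the contrapositive: if for all ω ∈ Ω and all assignments $\mathbf{c}_2$ to C2, at least one of (i) or (ii) does not hold, then there exists a representation $(\mathbf{A}, \mathfrak{B})$ for $\mathbb{D}(\mathbf{C},\Omega)$ such that for all $\mathbf{B}_i \in \mathfrak{B}$, B ⊈ Bi.

Define:

$$\mathfrak{B}^\dagger \equiv \{\mathbf{B}' \mid \mathbf{B}' \in \mathbb{P}(\mathbb{L}(\mathbf{C})),\ |\mathbf{B}'| = |\mathbf{C}|,\ \mathbf{B} \not\subseteq \mathbf{B}'\},$$

$$\mathfrak{B}^\ddagger \equiv \{(\mathbf{B} \setminus \{L\}) \cup \mathbf{B}_2 \mid L \in \mathbf{B},\ \mathbf{B}_2 \in \mathbb{P}(\mathbb{L}(\mathbf{C}_2)),\ |\mathbf{B}_2| = |\mathbf{C}_2|\},$$

under arbitrary orderings. Thus $\mathfrak{B}^\dagger$ is the set of subsets of exactly |C| literals that do not include B as a subset, while $\mathfrak{B}^\ddagger$ contains those subsets of size |C| − 1 that contain all but one of the literals in B.

For $\mathbf{B}^\dagger_j \in \mathfrak{B}^\dagger$ define the corresponding $A^\dagger_j(\omega) \equiv D_{\mathbf{B}^\dagger_j = \mathbf{1}}(\omega)$.

For $\mathbf{B}^\ddagger_k = (\mathbf{B} \setminus \{L\}) \cup \mathbf{B}_2 \in \mathfrak{B}^\ddagger$ define $A^\ddagger_k(\omega) \equiv D_{\mathbf{B} = \mathbf{1}, \mathbf{B}_2 = \mathbf{1}}(\omega) \cdot D_{\mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{B}_2 = \mathbf{1}}(\omega)$, where L and B2 are determined by $\mathbf{B}^\ddagger_k$.

The representation is given by $(\mathbf{A}^\dagger \cup \mathbf{A}^\ddagger, \mathfrak{B}^\dagger \cup \mathfrak{B}^\ddagger)$, where $\mathbf{A}^\dagger = \langle A^\dagger_j \rangle$ and $\mathbf{A}^\ddagger = \langle A^\ddagger_k \rangle$; no element of $\mathfrak{B}^\dagger \cup \mathfrak{B}^\ddagger$ contains B. To see that this is a representation, first note that if for some ω and c, there is a pair (Aj, Bj) in it such that Aj(ω) = 1 and (Λ(Bj))c = 1 then by construction of A† and A‡ it follows that Dc(ω) = 1. For the converse, suppose that for some c and ω, Dc(ω) = 1. There are two cases to consider:

(Λ(B))c = 0. In this case B ⊈ B[c], so for some j, $\mathbf{B}^\dagger_j = \mathbf{B}[\mathbf{c}]$, hence $A^\dagger_j(\omega) = D_{\mathbf{c}}(\omega) = 1$ and $(\bigwedge(\mathbf{B}^\dagger_j))_{\mathbf{c}} = 1$, as required.

(Λ(B))c = 1. Let c be partitioned as (c1, c2). Since (i) holds with ω* = ω and $\mathbf{c}_2^* = \mathbf{c}_2$, (ii) does not. Thus for some L ∈ B, $D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega) = 1$. By construction of $\mathfrak{B}^\ddagger$, for some k, $\mathbf{B}^\ddagger_k = (\mathbf{B} \setminus \{L\}) \cup \mathbf{B}[\mathbf{c}_2]$, so $(\bigwedge(\mathbf{B}^\ddagger_k))_{\mathbf{c}} = 1$. Since $1 = D_{\mathbf{c}}(\omega) = D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega)$, we have $A^\ddagger_k(\omega) = 1$, as required.

(⇐) Suppose, for a contradiction, that for some ω* and $\mathbf{c}_2^*$, (i) and (ii) hold, but B is not irreducible. Then there exists a representation $(\mathbf{A}, \mathfrak{B})$ such that for all $\mathbf{B}_i \in \mathfrak{B}$, B ⊈ Bi. By (i), $D_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2^*}(\omega^*) = 1$. Thus for some pair (Aj, Bj), Aj(ω*) = 1 and $(\bigwedge(\mathbf{B}_j))_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2^*} = 1$. Since B ⊈ Bj there exists some L ∈ B \ Bj; as Bj holds when L = 1, it does not contain $\bar{L}$ either, so $(\bigwedge(\mathbf{B}_j))_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2^*} = 1$. Since Aj(ω*) = 1, we then have $D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2^*}(\omega^*) = 1$, which contradicts (ii).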

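Corollary 3.3. If B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ then for any Ω* ⊃ Ω, B is irreducible for $\mathbb{D}(\mathbf{C},\Omega^*)$.

Proof: By Theorem 3.2, since if some ω* ∈ Ω and $\mathbf{c}_2^*$ satisfy (i) and (ii), then so do ω* ∈ Ω* and $\mathbf{c}_2^*$.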

3.1. B irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ with |B| = |C|

In the special case where |B| = |C|, the concepts of minimal sufficient cause for some ω* and irreducibility coincide.

Proposition 3.4. If $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$ and |B| = |C| then B is a minimal sufficient cause for some ω* ∈ Ω relative to C iff B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$.

Proof: If |B| = |C| then condition (i) in Theorem 3.2 (taking C2 = ∅) holds iff B is a sufficient cause for D for ω*, and, given (i), condition (ii) holds iff B is a minimal sufficient cause for D (for ω*).

Thus we have the following:

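Corollary 3.5. If $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$, |B| = |C| and B is a minimal sufficient cause for D for some ω* ∈ Ω then $\mathbf{B} \in \mathfrak{B}$ for every representation $(\mathbf{A}, \mathfrak{B})$ for $\mathbb{D}(\mathbf{C},\Omega)$.

Proof: By Proposition 3.4, B is irreducible, so every representation contains some Bi ⊇ B; since no element of $\mathbb{P}(\mathbb{L}(\mathbf{C}))$ has more than |C| literals, Bi = B.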

3.2. B irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ with |B| < |C|

When |B| < |C| the conditions for irreducibility and for being a minimal sufficient cause are logically distinct. Condition (i) in Theorem 3.2 requires $D_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2^*}(\omega^*) = 1$ for one assignment $\mathbf{c}_2^*$ (and some ω*), while if B is a sufficient cause (for ω*) then this condition is required to hold for all assignments c2; in contrast condition (ii) in Theorem 3.2 requires that there exists a single $\mathbf{c}_2^*$ (and some ω*) such that $D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2^*}(\omega^*) = 0$ for all L ∈ B, while for B to be a minimal sufficient cause for ω* merely requires that for all L ∈ B there exists an assignment c2 such that $D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) = 0$.

Example 1 (Revisited). Let C = {X1, X2, X3} and consider the sub-population {2}. Relative to C, {X1, X2} is a minimal sufficient cause for ω = 2 since D111(2) = D110(2) = 1, and D011(2) = D100(2) = 0. However {X1, X2} is not irreducible for $\mathbb{D}(\mathbf{C},\{2\})$ because D101(2) = D010(2) = 1, hence condition (ii) in Theorem 3.2 is not satisfied for either X3 = 0 or X3 = 1. Conversely {X1} is irreducible for $\mathbb{D}(\mathbf{C},\{2\})$ since D111(2) = 1, while D011(2) = 0, but {X1} is not a sufficient cause because D100(2) = 0.
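Theorem 3.2 turns irreducibility into a finite search over individuals and contexts $\mathbf{c}_2^*$. The sketch below is a brute-force check of conditions (i) and (ii), equivalently (3.1), for the potential outcomes of Table 2; the function name and the literal encoding, with (i, 1) for X_{i+1} and (i, 0) for its complement, are illustrative assumptions. It confirms the claims of Example 1: $\{X_2, \bar{X}_3\}$ and $\{\bar{X}_2, X_3\}$ are irreducible in Ω = {1, 2}, {X1, X2} is not irreducible for individual 2, and {X1} is.

```python
from itertools import product

# Potential outcomes for the two individuals of Table 2, keyed by (x1, x2, x3).
ASSIGNMENTS = list(product((0, 1), repeat=3))
D = {
    1: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 0))),
    2: dict(zip(ASSIGNMENTS, (0, 1, 1, 0, 0, 1, 1, 1))),
}


def is_irreducible(B, outcomes, n):
    """Brute-force check of conditions (i) and (ii) in Theorem 3.2.

    B: literals (i, v) on distinct causes; (i, 1) is X_{i+1}, (i, 0) its complement.
    outcomes: {individual: {assignment tuple: D}}; n: number of causes |C|.
    """
    B = sorted(B)
    in_B = {i for i, _ in B}
    rest = [i for i in range(n) if i not in in_B]  # indices of the causes in C2

    def assemble(c2, flipped=None):
        # Every literal of B set to 1, except B[flipped], which is set to 0; C2 = c2.
        c = [0] * n
        for k, (i, v) in enumerate(B):
            c[i] = 1 - v if k == flipped else v
        for i, value in zip(rest, c2):
            c[i] = value
        return tuple(c)

    for outcome in outcomes.values():
        for c2 in product((0, 1), repeat=len(rest)):
            condition_i = outcome[assemble(c2)] == 1
            condition_ii = all(outcome[assemble(c2, k)] == 0 for k in range(len(B)))
            if condition_i and condition_ii:
                return True
    return False


if __name__ == "__main__":
    print(is_irreducible({(1, 1), (2, 0)}, D, 3))          # {X2, not X3}: True
    print(is_irreducible({(0, 1), (1, 1)}, {2: D[2]}, 3))  # {X1, X2} for omega = 2: False
```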

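Though irreducibility of B for $\mathbb{D}(\mathbf{C},\Omega)$ neither implies, nor is implied by, B being a minimal sufficient cause for some ω ∈ Ω, it does imply that every sufficient cause representation for $\mathbb{D}(\mathbf{C},\Omega)$ contains at least one conjunction Bj of which B is a (possibly proper) subset. However, prima facie this still leaves open the possibility that, for example, every representation includes either B ∪ {L} or $\mathbf{B} \cup \{\bar{L}\}$, for some L, with some representations containing only the former and others only the latter. However, this cannot occur:

Corollary 3.6. Let $\mathbf{C} = \mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$, $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}_1))$, |B| = |C1|. If B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ then there exists a set $\mathbf{B}^* \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$, with |B*| = |C|, such that in every representation $(\mathbf{A}, \mathfrak{B})$ for $\mathbb{D}(\mathbf{C},\Omega)$ there exists $\mathbf{B}_j \in \mathfrak{B}$ with B ⊆ Bj ⊆ B*.

Thus irreducibility of B further implies that there is a set B* of size |C| such that in every representation there is at least one conjunct containing B that is itself contained in B*. However, it should be noted that, in general, there may be more than one conjunct Bj with B ⊆ Bj ⊆ B*.

Proof: Immediate from the proof of the (⇐) direction of Theorem 3.2, taking $\mathbf{B}^* = \mathbf{B} \cup \mathbf{B}[\mathbf{c}_2^*]$, where ω* and $\mathbf{c}_2^*$ satisfy (i) and (ii): the conjunction Bj found there holds at B = 1, C2 = $\mathbf{c}_2^*$, so Bj ⊆ B*, and it must contain B.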

Finally, we note that a conjunction that is both irreducible and a minimal sufficient cause corresponds to an ‘essential prime implicant’ in digital circuit theory [12]. The Quine-McCluskey algorithm [13, 18, 19] finds the set of essential prime implicants for a given Boolean function, which here corresponds to the potential outcomes for an individual.
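For a single individual, the minimal sufficient causes relative to C are the prime implicants of the Boolean function c ↦ Dc(ω), and the text identifies those that are also irreducible with the essential prime implicants. The following sketch finds both by exhaustive enumeration rather than by the Quine-McCluskey algorithm, which is adequate for a handful of causes. A term is encoded (an assumption of the sketch) as a tuple over {None, 0, 1}, where None means the cause is absent. For individual 2 of Table 2 it returns the four prime implicants {X1, X2}, {X1, X3}, $\{X_2, \bar{X}_3\}$ and $\{\bar{X}_2, X_3\}$, of which only the last two are essential, in agreement with the irreducibility results of Example 1.

```python
from itertools import product

# A term is a tuple over {None, 0, 1}: None means the cause does not appear,
# 1 means X_i appears, 0 means its complement appears (encoding assumed here).


def covers(term, c):
    """True iff assignment c satisfies every literal in the term."""
    return all(t is None or t == ci for t, ci in zip(term, c))


def prime_implicants(ones, n):
    """Minimal sufficient causes for one individual, by exhaustive search.

    ones: set of assignments c with D_c = 1. A term is an implicant (sufficient cause)
    if every assignment it covers is in `ones`; it is prime (minimal) if dropping any
    single literal destroys this property.
    """
    points = list(product((0, 1), repeat=n))

    def is_implicant(term):
        return all(c in ones for c in points if covers(term, c))

    def drop_one(term):
        return [term[:i] + (None,) + term[i + 1:] for i, t in enumerate(term) if t is not None]

    return [t for t in product((None, 0, 1), repeat=n)
            if is_implicant(t) and not any(is_implicant(g) for g in drop_one(t))]


def essential(primes, ones):
    """Prime implicants that are the only prime implicant covering some c with D_c = 1."""
    return [p for p in primes
            if any(covers(p, c) and sum(covers(q, c) for q in primes) == 1 for c in ones)]


# Individual 2 of Table 2: D_c = 1 for c in {001, 010, 101, 110, 111}.
ONES2 = {(0, 0, 1), (0, 1, 0), (1, 0, 1), (1, 1, 0), (1, 1, 1)}

if __name__ == "__main__":
    primes = prime_implicants(ONES2, 3)
    print(primes)
    print(essential(primes, ONES2))
```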

3.3. Enlarging the set of potential causes

As noted in §2.2, a set B may be a minimal sufficient cause relative to C but not relative to a superset C′. Irreducibility is also not preserved without further conditions. To state these conditions we require the following:

Definition 3.7. X′ is said to be not causally influenced by a set C if for all ω ∈ Ω, the potential outcomes $X'_{\mathbf{C}=\mathbf{c}}(\omega)$ are constant as c varies.

We will also assume that if every X′ ∈ C′ is not causally influenced by C then the Relativized Consistency Axiom holds:

$$D_{\mathbf{C}=\mathbf{c}}(\omega) = D_{\mathbf{C}=\mathbf{c}, \mathbf{C}'=\mathbf{C}'(\omega)}(\omega), \tag{3.2}$$

i.e. that if variables in C′ are not causally influenced by the variables in C then the counterfactual value of D intervening to set C to c is the same as the counterfactual value of D intervening to set C to c and the variables in C′ to the values they actually took on.

We now have the following Corollary to Theorem 3.2:

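Corollary 3.8. Let $\mathbf{C} = \mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$, $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}_1))$, |B| = |C1|. If B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$, C′ ∩ C = ∅ and for all X′ ∈ C′, X′ is not causally influenced by C, then B is irreducible for $\mathbb{D}(\mathbf{C} \cup \mathbf{C}',\Omega)$.

Proof: By Theorem 3.2 there exist ω* ∈ Ω and an assignment $\mathbf{c}_2^*$ to C2 such that (i) and (ii) hold. Let c′ = C′(ω*). Since variables in C′ are not causally influenced by C, for all assignments b:

$$D_{\mathbf{B}=\mathbf{b}, \mathbf{C}_2=\mathbf{c}_2^*, \mathbf{C}'=\mathbf{c}'}(\omega^*) = D_{\mathbf{B}=\mathbf{b}, \mathbf{C}_2=\mathbf{c}_2^*, \mathbf{C}'=\mathbf{C}'(\omega^*)}(\omega^*) = D_{\mathbf{B}=\mathbf{b}, \mathbf{C}_2=\mathbf{c}_2^*}(\omega^*);$$

the second equality here follows from (3.2). It follows that ω* and $(\mathbf{c}_2^*, \mathbf{c}')$ obey (i) and (ii) in Theorem 3.2 with respect to C ∪ C′.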

The assumption that every variable in C′ is not causally influenced by C, is required because otherwise we may have C′(ω*) ≠ (C′)B=b* (ω*) for some assignment b* to B. For example, let C = {X1, X2, X3}, and suppose that

for all ω ∈ Ω. In this case {X1, X2} is irreducible for the potential outcomes defined with respect to the smaller set of causes, but not for those defined with respect to the larger one. We saw earlier that if B is a minimal sufficient cause relative to C then this does not imply that B is a minimal sufficient cause with respect to subsets of C. Here we see that if B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$ then this does not imply irreducibility for supersets C* ⊃ C, unless every variable in C* \ C is not causally influenced by a variable in C.

4. Tests for irreducibility

In this section we derive empirical conditions which imply that a given conjunction B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$. Our first approach is via condition (3.1).

4.1. Adjusting for Measured Confounders

To detect that (3.1) holds requires us to identify the mean of potential outcomes in certain subpopulations. This is only possible if we have no unmeasured confounders:

Definition 4.1. A set of covariates W suffices to adjust for confounding of (the effect of) C on D if:

$$D_{\mathbf{c}} \perp\!\!\!\perp \mathbf{C} \mid \mathbf{W} = \mathbf{w} \tag{4.1}$$

for all c, w.

Proposition 4.2. If a set W suffices to adjust for confounding of C on D and P(C = c, W = w) > 0 then

$$E[D_{\mathbf{c}} \mid \mathbf{W} = \mathbf{w}] = E[D \mid \mathbf{C} = \mathbf{c}, \mathbf{W} = \mathbf{w}].$$

The proof of this is standard and hence omitted.

Note that if W is sufficient to adjust for confounding of C on D, then W is also sufficient to adjust for confounding of B on D, where $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}))$, |B| = |C|.

4.2. Tests for irreducibility without monotonicity

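Theorem 4.3. Let $\mathbf{C} = \mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$, $\mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}_1))$, |B| = |C1|. If W is sufficient to adjust for confounding of C on D, and for some c2, w,

$$E[D \mid \mathbf{B} = \mathbf{1}, \mathbf{C}_2 = \mathbf{c}_2, \mathbf{W} = \mathbf{w}] - \sum_{L \in \mathbf{B}} E[D \mid \mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{C}_2 = \mathbf{c}_2, \mathbf{W} = \mathbf{w}] > 0 \tag{4.2}$$

then B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$.

Proof: We prove the contrapositive. Suppose that B is not irreducible for $\mathbb{D}(\mathbf{C},\Omega)$. Then by Theorem 3.2, for all ω ∈ Ω, and all c2,

$$D_{\mathbf{B} = \mathbf{1}, \mathbf{C}_2 = \mathbf{c}_2}(\omega) - \sum_{L \in \mathbf{B}} D_{\mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{C}_2 = \mathbf{c}_2}(\omega) \le 0.$$

Hence for any w,

$$E[D_{\mathbf{B} = \mathbf{1}, \mathbf{C}_2 = \mathbf{c}_2} \mid \mathbf{W} = \mathbf{w}] - \sum_{L \in \mathbf{B}} E[D_{\mathbf{B} \setminus \{L\} = \mathbf{1}, L = 0, \mathbf{C}_2 = \mathbf{c}_2} \mid \mathbf{W} = \mathbf{w}] \le 0.$$

Applying Proposition 4.2 to each term implies the negation of (4.2).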

The condition provided in Theorem 4.3 can be empirically tested with t-test type statistics if W consists of a small number of categorical or binary variables or using regression or inverse probability of treatment weighting methods [20, 34] otherwise.
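Condition (4.2) is a contrast of conditional means within a stratum W = w. One simple way to estimate it, sketched below under assumptions that are ours rather than the paper's, treats each cell (B, C2) = (b, c2) in the stratum as an independent binomial sample and forms a Wald-type z statistic; the function names are hypothetical and the counts in the usage example are invented for illustration, not taken from any study. With |B| = |C| = 2 the contrast is p11 − p10 − p01. A large positive z is evidence that (4.2) holds in that stratum; when many strata or contexts c2 are examined, some adjustment for multiple testing would presumably be needed.

```python
import math


def cell(B, c2, flipped=None):
    """Assignment with every literal of B set to 1 (except B[flipped], set to 0) and C2 = c2.

    B: list of literals (i, v), where (i, 1) is X_{i+1} and (i, 0) its complement;
    c2: dict {index: value} for the remaining causes. Returns a tuple ordered by index.
    """
    c = dict(c2)
    for k, (i, v) in enumerate(B):
        c[i] = 1 - v if k == flipped else v
    return tuple(c[i] for i in sorted(c))


def irreducibility_contrast(counts, B, c2):
    """Estimate of the left-hand side of (4.2) within one stratum W = w.

    counts maps an assignment c to (number with D = 1, number of units) in the stratum.
    Cells are treated as independent binomial samples (an assumption of this sketch);
    the z statistic is undefined if every cell proportion is 0 or 1.
    """
    terms = [(cell(B, c2), 1)] + [(cell(B, c2, k), -1) for k in range(len(B))]
    estimate, variance = 0.0, 0.0
    for c, sign in terms:
        events, n = counts[c]
        p = events / n
        estimate += sign * p
        variance += p * (1 - p) / n
    return estimate, estimate / math.sqrt(variance)


# Invented counts for two causes in a single stratum, keyed by (x1, x2).
EXAMPLE_COUNTS = {(1, 1): (60, 100), (1, 0): (25, 100), (0, 1): (20, 100), (0, 0): (10, 100)}

if __name__ == "__main__":
    est, z = irreducibility_contrast(EXAMPLE_COUNTS, [(0, 1), (1, 1)], {})
    print(round(est, 3), round(z, 2))
```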

It follows from Corollary 3.8 that condition (4.2) further establishes that B is irreducible for $\mathbb{D}(\mathbf{C} \cup \mathbf{C}',\Omega)$ so long as every variable in C′ is not causally influenced by variables in C.

It may be shown that the negation of condition (4.2), for all c2 and w, is the sole restriction on the law of (D, C, W) implied by the negation of irreducibility.

4.3. Graphs

In the next section we develop more powerful tests under monotonicity assumptions. However, to state these conditions we first introduce some concepts from graph theory:

Definition 4.4. A graph $\mathcal{G}$ defined on a set B is a collection of pairs of elements in B, $\mathcal{G} \subseteq \{E \mid E = \{B_1, B_2\} \subseteq \mathbf{B}, B_1 \neq B_2\}$.

This is the usual definition of a graph, except that the vertex set here is a set of literals. We will refer to sets in $\mathcal{G}$ as edges, which we will represent pictorially as B1 – B2.

Definition 4.5. Two elements L, L* ∈ B are said to be connected in $\mathcal{G}$ if there exists a sequence L = L1, …, Lp = L* of distinct elements in B such that $\{L_i, L_{i+1}\} \in \mathcal{G}$ for i = 1, …, p − 1.

The sequence of edges joining L and L* is said to form a path in $\mathcal{G}$.

Definition 4.6. A graph $\mathcal{T}$ on B is said to form a tree if $|\mathcal{T}| = |\mathbf{B}| - 1$, and any pair of distinct elements in B are connected in $\mathcal{T}$.

In a tree there is a unique path between any two elements.

Proposition 4.7. Let $\mathcal{T}$ be a tree on B. For each element R ∈ B there is a natural bijection:

$$\phi_R : \mathbf{B} \setminus \{R\} \to \mathcal{T}$$

given by $\phi_R(L) = e_L$, where $e_L$ is the last edge on the path from R to L.

It is not hard to show that for a graph $\mathcal{G}$, if the bijections described in Proposition 4.7 exist then $\mathcal{G}$ is a tree.

Theorem 4.8 (Cayley [4]). On a set B there are $|\mathbf{B}|^{|\mathbf{B}|-2}$ different trees.
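Cayley's formula can be checked for small vertex sets by enumerating every set of |B| − 1 edges and keeping those that connect all vertices, following Definition 4.6 directly. The brute-force sketch below (hypothetical function names) is practical only for a few vertices; applied to {X1, X2, X3} it lists the three trees of Figure 1.

```python
from itertools import combinations


def is_tree(vertices, edges):
    """Definition 4.6: |edges| = |vertices| - 1 and every pair of vertices is connected."""
    vertices = list(vertices)
    if len(edges) != len(vertices) - 1:
        return False
    reached, frontier = {vertices[0]}, [vertices[0]]
    while frontier:  # graph search from the first vertex
        u = frontier.pop()
        for a, b in edges:
            for x, y in ((a, b), (b, a)):
                if x == u and y not in reached:
                    reached.add(y)
                    frontier.append(y)
    return reached == set(vertices)


def trees(vertices):
    """All trees on the given (non-empty) vertex set, by brute force over edge subsets."""
    vertices = list(vertices)
    all_edges = list(combinations(vertices, 2))
    return [edges for edges in combinations(all_edges, len(vertices) - 1)
            if is_tree(vertices, edges)]


if __name__ == "__main__":
    for tree in trees(["X1", "X2", "X3"]):
        print(tree)  # the three trees of Figure 1
```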

4.4. Monotonicity

Sometimes it may be known that a certain cause has an effect on an outcome that is either always positive or always negative.

Definition 4.9. Bi has a positive monotonic effect on D relative to a set B (with Bi ∈ B) in a population Ω if for all ω ∈ Ω and all values b−i for the variables in B \ {Bi}, $D_{\mathbf{B}\setminus\{B_i\}=\mathbf{b}_{-i}, B_i=1}(\omega) \ge D_{\mathbf{B}\setminus\{B_i\}=\mathbf{b}_{-i}, B_i=0}(\omega)$.

Similarly we say that L has a negative monotonic effect relative to B ∪ {L} if $\bar{L}$ has a positive monotonic effect relative to $\mathbf{B} \cup \{\bar{L}\}$. Note that the case in which $D_{\mathbf{B}\setminus\{B_i\}=\mathbf{b}_{-i}, B_i=1}(\omega) = D_{\mathbf{B}\setminus\{B_i\}=\mathbf{b}_{-i}, B_i=0}(\omega)$ for all ω, and hence Bi has no effect on D relative to B, is included as a degenerate case.

The definition of a positive monotonic effect requires that the intervention does not decrease D for any individual, not simply on average, regardless of the values assigned to the other causes. This is thus a strong assumption; see [33] for further discussion.

Proposition 4.10. The number of vectors of potential outcomes $\mathbb{D}(\mathbf{C},\omega)$ compatible with every cause in C having a positive monotonic effect on D is the Dedekind number M(|C|), that is, the number of antichains (Sperner families) of subsets of C.
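For small |C| the count in Proposition 4.10 can be confirmed by enumeration. The sketch below (hypothetical function name) lists every vector of potential outcomes over {0, 1}^n and keeps those that never decrease when a single cause is switched from 0 to 1, which by transitivity is the same as being monotone in every argument. It reproduces the Dedekind numbers 2, 3, 6, 20, 168 for n = 0, …, 4; beyond that, brute force is infeasible.

```python
from itertools import product


def count_monotone(n):
    """Count maps D: {0,1}^n -> {0,1} that never decrease when one cause goes from 0 to 1.

    These are the potential-outcome vectors compatible with every cause having a
    positive monotonic effect (Definition 4.9). Brute force: feasible for n <= 4.
    """
    points = list(product((0, 1), repeat=n))
    # Pairs (a, b) differing in exactly one coordinate, where a has the 0 there.
    steps = [(a, b) for a in points for b in points
             if sum(x != y for x, y in zip(a, b)) == 1 and a < b]
    count = 0
    for values in product((0, 1), repeat=len(points)):
        outcome = dict(zip(points, values))
        if all(outcome[a] <= outcome[b] for a, b in steps):
            count += 1
    return count


if __name__ == "__main__":
    print([count_monotone(n) for n in range(5)])  # [2, 3, 6, 20, 168]
```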

4.5. Tests for irreducibility with monotonicity

Knowledge of the monotonicity of certain potential causes allows for the construction of more powerful statistical tests for irreducibility than those given by Theorem 4.3.

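Theorem 4.11. Let $\mathbf{C} = \mathbf{C}_1 \mathbin{\dot{\cup}} \mathbf{C}_2$, $\mathbf{B}^+ \subseteq \mathbf{B} \in \mathbb{P}(\mathbb{L}(\mathbf{C}_1))$, |B| = |C1| and suppose that every L ∈ B+ has a positive monotonic effect on D relative to C. If for some tree $\mathcal{T}$ on B+, ω* ∈ Ω and some c2 we have:

$$D_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) - \sum_{L \in \mathbf{B}} D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) + \sum_{\{L, L'\} \in \mathcal{T}} D_{\mathbf{B}\setminus\{L, L'\}=\mathbf{1}, L=L'=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) > 0 \tag{4.3}$$

then B is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$.

If we know that X has a negative monotonic effect on D, then we may use this Theorem to construct more powerful tests of the irreducibility of sets containing $\bar{X}$ with respect to $\mathbb{D}(\mathbf{C},\Omega)$. Under the assumption that every L ∈ C has a monotonic effect on D we have shown via direct calculation using cddlib that for |C| ≤ 4, the conditions (4.3) are the sole restrictions on the law of (D, C, W) implied by the negation of irreducibility. We conjecture that this holds in general.

Proof: By Theorem 3.2 it is sufficient to prove that, under the monotonicity assumption on B+, (4.3) implies (3.1). Suppose that (3.1) does not hold, so that for all values c2, and all ω* ∈ Ω,

$$D_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) - \sum_{L \in \mathbf{B}} D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) \le 0.$$

Then for each ω* ∈ Ω there exists R ∈ B+ such that

$$D_{\mathbf{B}=\mathbf{1}, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) - D_{\mathbf{B}\setminus\{R\}=\mathbf{1}, R=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) - \sum_{L \in \mathbf{B}\setminus\mathbf{B}^+} D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) \le 0.$$

(If the first term is 0, any R ∈ B+ will do, by monotonicity; otherwise some term of the sum in (3.1) equals 1, and we take R to be the corresponding literal if it lies in B+, and any R ∈ B+ otherwise.) For a given tree $\mathcal{T}$, the remaining terms on the left-hand side of (4.3) are:

$$-\sum_{L \in \mathbf{B}^+\setminus\{R\}} \Big( D_{\mathbf{B}\setminus\{L\}=\mathbf{1}, L=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) - D_{\mathbf{B}\setminus\{L, L'\}=\mathbf{1}, L=L'=0, \mathbf{C}_2=\mathbf{c}_2}(\omega^*) \Big), \quad \text{where } \phi_R(L) = \{L', L\},$$

by Proposition 4.7. Finally since L′ ∈ B+, L′ has a positive monotonic effect on D relative to C, so each bracketed difference is non-negative and this expression is non-positive. Consequently for all ω* ∈ Ω, (4.3) does not hold for any tree $\mathcal{T}$.

Example 2. In the case B = {X1, X2} = B+ = C, there is only one tree on B+, consisting of a single edge X1 – X2. Thus if X1 and X2 have a positive monotonic effect on D (relative to C) then Theorem 4.11 implies that if the following inequality holds for some ω ∈ Ω:

$$D_{11}(\omega) - D_{10}(\omega) - D_{01}(\omega) + D_{00}(\omega) > 0,$$

then {X1, X2} is irreducible for $\mathbb{D}(\mathbf{C},\Omega)$.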

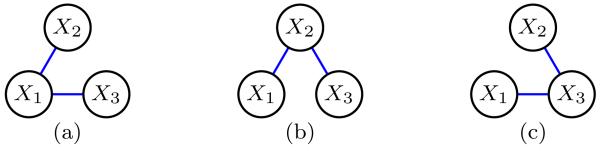

Example 3. If B = {X1, X2, X3} = B+ = C, then there are three trees on B+, see Figure 1. These correspond to the following conditions:

D111(ω) − (D110(ω) + D101(ω) + D011(ω)) + (D010(ω) + D001(ω)) > 0;

D111(ω) − (D110(ω) + D101(ω) + D011(ω)) + (D100(ω) + D001(ω)) > 0;

D111(ω) − (D110(ω) + D101(ω) + D011(ω)) + (D100(ω) + D010(ω)) > 0.

Thus B is irreducible relative to C if at least one of these inequalities holds for some ω ∈ Ω.

Fig 1. The three trees on {X1, X2, X3}.
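The three conditions of Example 3 can be evaluated mechanically for a single individual. The Python sketch below is illustrative only: storing potential outcomes in a dictionary keyed by the bit string x1x2x3, and the helper names, are assumptions of the sketch rather than notation from the paper. Each tree is given by its edge list (one tree per centre vertex), and each edge contributes the outcome in which both of its endpoints are set to 0; the individuals used are assumed to satisfy the monotonicity condition of Theorem 4.11.

```python
from itertools import product


def cell(n, zeros):
    """Bit string x1...xn with the positions in `zeros` set to 0 and all others set to 1."""
    return "".join("0" if i in zeros else "1" for i in range(n))


def tree_contrast(d, n, edges):
    """D_{1...1} minus every single-zero outcome plus one double-zero outcome per tree edge."""
    total = d[cell(n, set())]
    total -= sum(d[cell(n, {i})] for i in range(n))
    total += sum(d[cell(n, {i, j})] for i, j in edges)
    return total


# The three trees on {X1, X2, X3} (0-based indices), one per centre vertex.
TREES_3 = {
    "centre X1": [(0, 1), (0, 2)],  # adds D001 + D010
    "centre X2": [(1, 0), (1, 2)],  # adds D001 + D100
    "centre X3": [(2, 0), (2, 1)],  # adds D010 + D100
}


def some_tree_condition_holds(d):
    """True if at least one of the three Example 3 inequalities holds for this individual."""
    return any(tree_contrast(d, 3, edges) > 0 for edges in TREES_3.values())


keys = ["".join(bits) for bits in product("01", repeat=3)]
only_all_three = {k: int(k == "111") for k in keys}  # D = 1 only when X1 = X2 = X3 = 1
always_one = {k: 1 for k in keys}                    # D = 1 whatever the exposures

print(some_tree_condition_holds(only_all_three))  # True
print(some_tree_condition_holds(always_one))      # False
```

For the individual with D = 1 under every exposure pattern, each contrast equals 1 − 3 + 2 = 0, so none of the conditions holds, as one would expect for potential outcomes that do not depend on C.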

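Corollary 4.12. Let , |B| = |C1|. Suppose that every L ∈ B+ has a positive monotonic effect on D relative to C, and W is sufficient to adjust for confounding of C on D. If for some tree 𝒯 on B+ and some c2, w we have:

$$E[D \mid B=1, C_2=c_2, W=w] - \sum_{L\in B} E[D \mid B\setminus\{L\}=1, L=0, C_2=c_2, W=w] + \sum_{\{L,L'\}\in\mathcal{T}} E[D \mid B\setminus\{L,L'\}=1, L=L'=0, C_2=c_2, W=w] > 0, \tag{4.4}$$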

then B is irreducible for .

Proof: Directly analogous to the proof of Theorem 4.3.

The special case of the previous Corollary where |B+| = |C| = 2, and W = ∅, appears in Rothman and Greenland [22]; see also Koopman [10].

Theorem 4.8 implies that if every literal in B has a positive monotonic effect on D, then we will have $|B|^{|B|-2}$ conditions to test, one for each tree on B, each of which is sufficient to establish the irreducibility of B for . As before, the conditions (4.4) may be tested via t-test type statistics or using various statistical models.
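The count $|B|^{|B|-2}$ is Cayley's formula for the number of labelled trees on |B| vertices (cf. Cayley [4]). As a sketch that is not taken from the paper, the trees can be enumerated by decoding Prüfer sequences, which are in one-to-one correspondence with labelled trees; each tree then yields one condition of the form (4.4). Here the labels 0, …, n − 1 stand for the literals of B+, an assumption of the sketch.

```python
import heapq
from itertools import product


def prufer_to_tree(seq, n):
    """Decode a Prüfer sequence over labels 0..n-1 (length n - 2) into a set of edges."""
    degree = [1] * n
    for v in seq:
        degree[v] += 1
    leaves = [v for v in range(n) if degree[v] == 1]
    heapq.heapify(leaves)
    edges = set()
    for v in seq:
        leaf = heapq.heappop(leaves)  # smallest current leaf
        edges.add(frozenset((leaf, v)))
        degree[v] -= 1
        if degree[v] == 1:            # v has just become a leaf
            heapq.heappush(leaves, v)
    # Exactly two leaves remain; joining them gives the final edge.
    u, w = heapq.heappop(leaves), heapq.heappop(leaves)
    edges.add(frozenset((u, w)))
    return frozenset(edges)


def all_trees(n):
    """All labelled trees on n >= 2 vertices, each as a frozenset of 2-element edges."""
    return {prufer_to_tree(seq, n) for seq in product(range(n), repeat=n - 2)}


for n in range(2, 7):
    print(n, len(all_trees(n)), n ** (n - 2))
```

The last two columns should agree (1, 3, 16, 125, 1296), so the number of tree conditions grows quickly with |B|; for n = 3 the decoder returns the three paths of Fig 1.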

As with the results in §4.2, we may apply Corollary 3.8 to establish that B is irreducible for D(C ∪ C′,) if no variable in C′ is causally influenced by variables in C.

4.6. Tests for a minimal sufficient cause under monotonicity

As noted in §3.1, if |B| = |C|, then irreducible conjunctions are also minimal sufficient causes. Thus in this special case the tests of irreducibility given in Theorem 4.3 and Corollary 4.12 also establish that B is a minimal sufficient cause relative to C. When |B| < |C| these tests do not in general establish this. However, under positive monotonicity assumptions on C2, such tests may be obtained by taking c2 = 0:

Proposition 4.13. Let , , |B|=|C1|. Suppose every L ∈ C2 has a positive monotonic effect on D relative to C. If (i) DB=1,C2=0(ω*) = 1 and (ii) for all L ∈ B, DB\{L}=1,L=0,C2=0(ω*) = 0, then B is a minimal sufficient cause for D relative to C for ω*.

Proof: For any c2, DB=1,C2=c2(ω*) ≥ DB=1,C2=0(ω*) by the monotonicity assumption, so condition (i) gives DB=1,C2=c2(ω*) = 1 for every c2. Hence B is a sufficient cause for D relative to C for ω*. Minimality follows directly from condition (ii).

We have the following corollaries, which provide conditions under which B is a minimal sufficient cause for D relative to C for some ω ∈ Ω, in addition to being irreducible for :

Corollary 4.14. Let , |B| = |C1|. Suppose every L ∈ C2 has a positive monotonic effect on D relative to C and W is sufficient to adjust for confounding of C on D. If (4.2) holds with c2 = 0 for some w then B is a minimal sufficient cause of D relative to C for some ω ∈ Ω.

Proof: Immediate from Proposition 4.13 and Theorem 4.3.

Corollary 4.15. Let , , |B| = |C1|. Suppose that every L ∈ B+ ∪ C2 has a positive monotonic effect on D relative to C, and W is sufficient to adjust for confounding of C on D. If (4.4) holds with c2 = 0 for some w and some tree on B+, then B is a minimal sufficient cause of D relative to C for some ω ∈ Ω.

Proof: Immediate from Proposition 4.13 and Corollary 4.12.

5. Singular interactions

In the genetics literature, in the context of two binary genetic factors, X1 and X2, ‘biologic’ or ‘compositional’ epistasis [2, 5, 17] is said to be present if for some ω*, D11(ω*) = 1 but D10(ω*) = D01(ω*) = D00(ω*) = 0; in this case the effect of one genetic factor is effectively masked when the other genetic factor is absent. If {X1, X2} is a minimal sufficient cause of D relative to {X1, X2} for ω* then although this implies D11(ω*) = 1 and D10(ω*) = D01(ω*) = 0, it does not imply D00(ω*) = 0. This motivates the following:

Definition 5.1. A minimal sufficient cause B for D relative to C for ω* is said to be singular if there is no , B′ ≠ B, forming a minimal sufficient cause for D relative to C for ω*. B is singular for if B is singular relative to C for some ω* ∈ Ω.

If B is singular for then we will also refer to a singular interaction between the components of B. We now characterize singularity in terms of potential outcomes:

Theorem 5.2. Let C = C1∪C2, , |B| = |C1| then B is singular for iff there exists ω* ∈ Ω such that

| (5.1) |

Note that (5.1) is equivalent to:

| (5.2) |

Thus if B is singular for then there is some individual ω* whose potential outcomes are given by the single conjunction B.

Proof: By definition, B is a sufficient cause for D for ω* iff . Thus it is sufficient to show that, assuming B is a minimal sufficient cause for D for ω*, there are no other minimal sufficient causes of D for ω* iff .

Suppose B is the only minimal sufficient cause for D for ω*, but that for some b* ≠ 1, . Let , B† forms a sufficient cause for D for ω*, and B ⊈ B†. Hence there is some B′ ⊆ B† that is a minimal sufficient cause for D for ω*, and B′ ≠ B because B ⊈ B†; this is a contradiction.

Conversely, suppose but there exists another minimal sufficient cause B′ ≠ B for D for ω*. Since B′ is minimal, B ⊈ B′. Thus there exists a such that , but and hence , a contradiction.

Corollary 5.3. For , if B is singular then B is irreducible.

Proof: Immediate from (5.1) and the definition of irreducibility.

Theorem 5.4 relates singular interactions to properties of the set of sufficient cause representations for .

Theorem 5.4. Let . B is singular for iff there exists ω* ∈ Ω such that in every representation (A, ) for , (i) for all , with |B*| = |C| and B ⊆ B* there exists with Bi ⊆ B* and Ai(ω*) = 1; (ii) for all such that B ⊈ Bi, Ai(ω*) = 0.

Proof: Let , where , and |B| = |C1|.

(⇒) Suppose B is singular for , so that some ω* ∈ Ω satisfies (5.2). Then for any representation (A, ) for and any B* such that |B*| = |C| and B ⊆ B* we can select values so that . Since there exists Ai ∈ A, with Ai (ω*) = 1 and . Thus Bi ⊆ B*, so (i) holds. For all such that B ⊈ Bi, we can choose , with and values so that . Since we have Ai(ω*) = 0 since , so (ii) holds as required.

(⇐) Suppose there exists ω* ∈ Ω such that every representation (A, ) satisfies (i) and (ii). We will show that (5.1) holds. For any values let , so |B*| = |C| and B ⊆ B*. Thus by (i) there exists with Bi ⊆ B* and Ai(ω*) = 1. Hence since Ai(ω*) = 1 and . Conversely, for any b′ ≠ 1, let B′ ≡ B[B=b′], so |B′| = |C1| with B′ ≠ B. Thus for all such that , B ⊈ Bi and thus by (ii) Ai(ω*) = 0. Hence .

We now consider results relevant for testing for singular interactions with or without monotonicity assumptions.

Theorem 5.5. Let , |B| = |C| and suppose that every L ∈ B+ has a positive monotonic effect on D relative to C. If for some tree on B+ and some ω* ∈ Ω we have

| (5.3) |

then B is singular for .

Proof: By Theorem 5.4 B is singular for iff

| (5.4) |

Suppose for a contradiction that (5.4) does not hold but (5.3) holds for some ω* ∈ Ω. Since B+ has a positive monotonic effect on D relative to C, if is such that then implies for some . Hence for all ω ∈ Ω

| (5.5) |

By applying to the first two terms on the LHS of (5.5) the same argument as was applied in the proof of Theorem 4.11, we find that (5.3) fails for every ω ∈ Ω, which is a contradiction.

The following corollary to Theorem 5.5 generalizes the discussion in [27,28] to an arbitrary number of dichotomous factors:

Corollary 5.6. Let , |B| = |C|. Suppose that every L ∈ B+ has a positive monotonic effect on D relative to B, and W is sufficient to adjust for confounding of C on D. If for some tree on B+, and some w we have:

| (5.6) |

then B is singular for .

Proof: By applying Proposition 4.2 to each term in (5.3).

Condition (5.6) leads directly to a statistical test of compositional epistasis. This is notable since some claims in the genetics literature appear to suggest that such tests did not exist [5].
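As a minimal sketch of what such a test might look like, consider the simplest case: two factors, no confounding (W = ∅) and no monotonicity assumptions (B+ = ∅). On our reading, the contrast to be shown positive is then p11 − p10 − p01 − p00, where pc is the proportion with D = 1 among those with C = c; this is the expectation analogue of the pattern D11 = 1, D10 = D01 = D00 = 0. The sketch uses a normal-approximation Wald statistic for four independent binomial samples; the function name and the counts are hypothetical.

```python
import math


def singular_contrast_test(counts):
    """One-sided Wald test that p11 - p10 - p01 - p00 > 0.

    `counts` maps each cell "11", "10", "01", "00" to (number with D = 1, group size),
    assuming four independent groups such as the arms of a randomized experiment.
    """
    signs = {"11": 1, "10": -1, "01": -1, "00": -1}
    estimate, variance = 0.0, 0.0
    for c, s in signs.items():
        cases, n = counts[c]
        p = cases / n
        estimate += s * p
        variance += p * (1 - p) / n  # independent binomials: variances add, signs drop out
    se = math.sqrt(variance)
    if se == 0:
        raise ValueError("standard error is zero; the normal approximation is unusable")
    z = estimate / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # upper tail of the standard normal
    return estimate, se, z, p_value


# Hypothetical counts, invented purely for illustration (not data from the paper).
hypothetical = {"11": (60, 200), "10": (10, 200), "01": (12, 200), "00": (8, 200)}
estimate, se, z, p_value = singular_contrast_test(hypothetical)
print(round(estimate, 3), round(z, 2), p_value)
```

With covariates W one would instead estimate the cell probabilities within strata of W, or from a regression model; the simple binomial variance used here does not cover that case.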

It follows from Theorem 5.5, as stated in the next corollary, that if all, or all but one, of the elements of B have positive monotonic effects on D, then singularity and irreducibility coincide:

Corollary 5.7. Suppose |B| = |C| and that for all, or all but one, of Bi ∈ B, Bi has a positive monotonic effect on D relative to B. Then B is singular for iff B is irreducible for .

An important consequence of this corollary is that when at most one variable does not have a positive monotonic effect, condition (4.4) establishes that B is singular in addition to being irreducible for .

Proof: Let B′ denote the set (of size zero or one) of elements of B that do not have a positive monotonic effect on D relative to C. If B is irreducible for then by Theorem 4.11,

Since the third term on the LHS of (5.3) vanishes when |B′| ≤ 1, it follows that B is singular for . The converse is given in Corollary 5.3.
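For two factors (B = C = {X1, X2}) the coincidence asserted by Corollary 5.7 can be checked by brute force over the 16 possible response types (D11, D10, D01, D00). The sketch below takes a positive monotonic effect of X1 at the individual level to mean D1x ≥ D0x for both values x of X2 (and symmetrically for X2), and uses the irreducible and singular patterns given by Theorems 3.2 and 5.2 in this case. The check is made type by type, which is stronger than, and implies, the population-level statement.

```python
from itertools import product

# A response type is (D11, D10, D01, D00) for one individual.


def irreducible(t):
    d11, d10, d01, d00 = t
    return d11 == 1 and d10 == 0 and d01 == 0


def singular(t):
    return irreducible(t) and t[3] == 0


def monotone_x1(t):
    d11, d10, d01, d00 = t
    return d11 >= d01 and d10 >= d00


def monotone_x2(t):
    d11, d10, d01, d00 = t
    return d11 >= d10 and d01 >= d00


types = list(product((0, 1), repeat=4))

# With at least one positively monotone factor, the two notions agree type by type.
assert all(irreducible(t) == singular(t)
           for t in types if monotone_x1(t) or monotone_x2(t))

# Without monotonicity they can differ.
gaps = [t for t in types if irreducible(t) != singular(t)]
print(gaps)  # [(1, 0, 0, 1)]
```

The only separating type, (1, 0, 0, 1), violates monotonicity in both factors; it is the case noted at the start of §5 in which {X1, X2} is a minimal sufficient cause but D00(ω*) = 1.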

Corollary 5.8. Suppose that |B| = |C|; that for all, or all but one, of , Bi has a positive monotonic effect on D relative to B ∪ C; that no X′ ∈ C′ is causally influenced by C; and that B is singular for . Then B is singular for .

Proof: By Corollary 5.7, B is irreducible relative to . Hence by Corollary 3.8 B is irreducible relative to . The conclusion then follows from a further application of Corollary 5.7.

5.1. Relation to Pearl’s Probability of Causation

Pearl [16, chapter 9] defined the probability of necessity and sufficiency (PNS) of cause X for outcome D to be P (DX=1(ω) = 1, DX=0(ω) = 0). In other words PNS(D, X) is the probability that D would occur if X occurred and would not have done so had X not occurred. We generalize this to the setting in which there are multiple causes B:

Definition 5.9. For , the probability of necessity and sufficiency of B causing D, is:

Thus PNS(D, B) is the probability that D would occur if every literal L ∈ B occurred and would not have done so had at least one literal in B not occurred.

Proposition 5.10. If |B| = |C| then PNS(D, B) > 0 iff B is singular for .

Proof: Follows directly from Theorem 5.4 and Definition 5.9.

This connection also provides an interpretation for condition (5.6).

Proposition 5.11. Under the conditions of Corollary 5.6, with W = ∅ = B+, PNS(D, B) is bounded below by the RHS of (5.6).

Proof:

which is the RHS of (5.6) with W = ∅ = B+.

This generalizes some of the lower bounds on PNS(D, X) given by Pearl [16, §9.2]. The assumptions in Proposition 5.11 that B and D are unconfounded (so W = ∅) and that no monotonicity assumptions are made (so B+ = ∅) are for expositional convenience.
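The bound can be illustrated numerically for two factors with W = ∅ = B+. There PNS(D, {X1, X2}) is the probability of the response type D11 = 1, D10 = D01 = D00 = 0, and, on our reading, the bound of Proposition 5.11 is P(D11 = 1) − P(D10 = 1) − P(D01 = 1) − P(D00 = 1), which follows from the union bound. The sketch draws arbitrary distributions over the 16 response types (a device of the sketch, not part of the paper) and checks the inequality.

```python
import random
from itertools import product

TYPES = list(product((0, 1), repeat=4))  # (D11, D10, D01, D00)


def pns(dist):
    """P(D11 = 1, D10 = D01 = D00 = 0): the mass on the singular response type."""
    return dist.get((1, 0, 0, 0), 0.0)


def lower_bound(dist):
    """P(D11 = 1) - P(D10 = 1) - P(D01 = 1) - P(D00 = 1)."""
    marginals = [sum(p for t, p in dist.items() if t[k] == 1) for k in range(4)]
    return marginals[0] - marginals[1] - marginals[2] - marginals[3]


def random_distribution(rng):
    """An arbitrary probability distribution over the 16 response types."""
    weights = [rng.random() for _ in TYPES]
    total = sum(weights)
    return {t: w / total for t, w in zip(TYPES, weights)}


rng = random.Random(0)
for _ in range(1000):
    dist = random_distribution(rng)
    assert pns(dist) >= lower_bound(dist) - 1e-12

# The bound is attained when everyone has the singular response type.
point_mass = {(1, 0, 0, 0): 1.0}
print(pns(point_mass), lower_bound(point_mass))  # 1.0 1.0
```

Only the four marginal probabilities are identified by a randomized experiment; PNS depends on the joint distribution of the potential outcomes, which is why a lower bound rather than a point value is obtained.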

6. Relation to Statistical Models with Linear Links

In related work [32] it is noted that the presence of interaction terms in statistical models does not in general correspond to sufficient conditions for irreducibility. Consider for example a saturated Bernoulli regression model for D with identity link and binary regressors C = {X1, …, Xp}:

| (6.1) |

Note that with c = (x1, …, xp) then , the usual multiplicative interaction term. The conditions, given earlier, for detecting the presence of irreducibility and singularity lead to linear restrictions on the regression coefficients :

| (6.2) |

Note that (6.2) includes an intercept β∅. First we define the degree of L in a tree 𝒯 to be the number of edges in 𝒯 that contain L.

Proposition 6.1. Under the conditions of Theorem 4.11, with B = C, condition (4.3) is equivalent to restriction (6.2) with where:

| (6.3) |

Note that since is a tree on B+, the last term in (6.3) has a natural graphical interpretation as the number of edges in the ‘induced subgraph’ of on the subset . Definition (6.3) also subsumes condition (4.2) given in Theorem 4.3 (without monotonicity), in which case the last three terms in (6.3) vanish. Though Proposition 6.1 assumes that C2 = ∅, the condition given by (6.2) and (6.3) continues to apply in the case where c2 = 0 as in Corollaries 4.14 and 4.15; obvious extensions apply to the case where c2 ≠ 0.

Proof: This follows by counting the number (and sign) of expectations in (4.3) for which is a subset of the variables assigned the value 1 in the conditioning event. The first two terms in (4.3) correspond, respectively, to the first two terms in (6.3). The remaining three terms in (6.3) correspond to the last sum in (4.3): ||, the number of edges in , is the total number of terms in the sum. The sum over degrees subtracts the number of terms in which some is assigned zero. Since this double counts terms corresponding to edges contained in , the last term corrects for this.

Proposition 6.2. Under the conditions of Theorem 5.5, with B = C, condition (5.3) is equivalent to restriction (6.2) with where:

| (6.4) |

Proof: Expression (6.4) follows from another counting argument similar to the proof of Proposition 6.1, together with the observation that conditions (4.3) and (5.3) only differ in that the terms in the sum over L in (4.3) for L ∈ B′ are replaced by a sum over all subsets of B′.
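The counting arguments behind Propositions 6.1 and 6.2 can be checked mechanically. In the saturated identity-link model, E[D | C = c] is the sum of the coefficients βS over all subsets S of the variables set to 1 in c, so any signed sum of cell means becomes a linear combination of the βS. The sketch below (helper names hypothetical; a cell is encoded by the set of indices equal to 1) expands the two-factor contrasts discussed in Example 4.

```python
from collections import Counter
from itertools import combinations


def subsets(indices):
    """All subsets of `indices`, as frozensets (including the empty set)."""
    items = sorted(indices)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def beta_coefficients(contrast):
    """Turn {cell: weight} into {S: coefficient of beta_S}, using E[D | cell] = sum of beta_S over S ⊆ cell."""
    coef = Counter()
    for ones, weight in contrast.items():
        for s in subsets(ones):
            coef[s] += weight
    return {s: c for s, c in coef.items() if c != 0}


def show(coef):
    """Readable form, writing b0 for the intercept beta_∅, b12 for beta_12, and so on."""
    ordered = sorted(coef.items(), key=lambda kv: (len(kv[0]), sorted(kv[0])))
    return " ".join(f"{c:+d}*b{''.join(map(str, sorted(s))) or '0'}" for s, c in ordered)


def cell(*ones):
    return frozenset(ones)


irreducible = {cell(1, 2): 1, cell(1): -1, cell(2): -1}  # D11 - D10 - D01
singular = {**irreducible, cell(): -1}                   # ... - D00
both_monotone = {**irreducible, cell(): 1}               # ... + D00 (the edge X1 - X2)

print(show(beta_coefficients(irreducible)))    # -1*b0 +1*b12
print(show(beta_coefficients(singular)))       # -2*b0 +1*b12
print(show(beta_coefficients(both_monotone)))  # +1*b12
```

These reproduce the two-factor conditions β12 > β∅, β12 > 2β∅ and β12 > 0; entering the three-factor cells in the same way gives the conditions of Example 5.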

Example 4 (Two-way interactions). Consider the saturated Bernoulli regression with identity link and C = {X1, X2}:

$$E[D \mid X_1 = x_1, X_2 = x_2] = \beta_\emptyset + \beta_1 x_1 + \beta_2 x_2 + \beta_{12} x_1 x_2$$

Suppose that X1 and X2 are unconfounded with respect to D, so (4.1) holds with W = ∅. Proposition 6.1 implies that {X1, X2} is irreducible relative to C if β12 > β∅; Proposition 6.2 implies that {X1, X2} is singular relative to C if β12 > 2β∅. If only one of X1 or X2 has a positive monotonic effect on D relative to C, then Proposition 6.1 and Corollary 5.7 imply that {X1, X2} is both irreducible and singular relative to C if β12 > β∅. If both X1 and X2 have positive monotonic effects on D relative to C, then Proposition 6.1 and Corollary 5.7 imply that {X1, X2} is both irreducible and singular relative to C if β12 > 0.

Thus only under the assumption of positive monotonic effects for both X1 and X2 does the sufficient condition for the irreducibility and singularity of {X1, X2} coincide with the classical two-way interaction term β12 being positive.

It also follows from Proposition 3.4 that if {X1, X2} is irreducible relative to C then there exists some ω ∈ Ω for whom {X1, X2} is a minimal sufficient cause relative to C (since |B| = |C|).

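Example 5 (Three-way interactions). The saturated Bernoulli regression with three binary variables and an identity link can be written as:

$$E[D \mid X_1 = x_1, X_2 = x_2, X_3 = x_3] = \beta_\emptyset + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_{12} x_1 x_2 + \beta_{13} x_1 x_3 + \beta_{23} x_2 x_3 + \beta_{123} x_1 x_2 x_3$$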

Suppose that C = {X1, X2, X3} is unconfounded for D. Proposition 6.1 implies that {X1, X2, X3} is irreducible relative to C if

$$\beta_{123} > 2\beta_\emptyset + \beta_1 + \beta_2 + \beta_3 \tag{6.5}$$

It follows from Proposition 3.4 that if {X1, X2, X3} is irreducible relative to C then there exists some ω ∈ Ω for whom {X1, X2, X3} is a minimal sufficient cause relative to C (since |B| = |C|).

Proposition 6.2 implies {X1, X2, X3} is singular relative to C if

$$\beta_{123} > 6\beta_\emptyset + 2\beta_1 + 2\beta_2 + 2\beta_3.$$

However, if X1, X2 and X3 have positive monotonic effects on D (relative to C) then Proposition 6.1 implies {X1, X2, X3} is irreducible relative to C if any of the following hold:

$$\beta_{123} > \beta_1, \qquad \beta_{123} > \beta_2, \qquad \beta_{123} > \beta_3; \tag{6.6}$$

equivalently, β123 > min{β1, β2, β3}. By Corollary 5.7 this also establishes that {X1, X2, X3} is singular relative to C.

If only X1 and X2 have positive monotonic effects on D relative to C then Proposition 6.1 implies that {X1, X2, X3} is irreducible relative to C if

$$\beta_{123} > \beta_\emptyset + \beta_1 + \beta_2. \tag{6.7}$$

By Corollary 5.7 condition (6.7) also implies that {X1, X2, X3} is singular relative to C (since only X3 does not have a positive monotonic effect on D). As we would expect condition (6.7) is weaker than (6.5) but stronger than any of the conditions (6.6). If only one potential cause has a monotonic effect on D relative to C then we can only use (6.5) to establish irreducibility.

Thus for three-way interactions β123 > 0 does not correspond to any of the sufficient conditions for irreducibility or singularity of {X1, X2, X3} relative to C, regardless of whether or not monotonicity assumptions hold.

7. Interpretation of sufficient cause models

As mentioned in §2.1, the observed data for an individual, (C(ω), D(ω)), represent a strict subset of the potential outcomes ; this is the ‘fundamental problem of causal inference’. Further, as we have seen, a given set of potential outcomes can admit several different determinative sets of minimal sufficient causes B; see (2.3) and (2.4). Thus we have the following for an individual:

| (7.1) |

It is typically impossible to know the set of potential outcomes for an individual , even when C = {X} and even with data from randomized experiments. However, possession of this knowledge would permit one to predict how a given individual would respond under any given pattern of exposures C = c.

The results in this paper demonstrate that, given data from a randomized experiment (or when sufficient variables are measured to adjust for confounding), it is possible to infer the existence of an individual for whom all sets of minimal sufficient causes have certain features in common. However, given the double many-one relationship (7.1), and the fact that the set of potential outcomes , if they were known, would apparently address all potential counterfactual queries, it is natural to ask what is to be gained by considering such representations. We now motivate our results by presenting several different interpretations of sufficient cause representations.

7.1. The descriptive interpretation

Under this view sets of minimal sufficient causes are merely a way to describe the set of potential outcomes . The representation (A, ) may be more compact; compare Table 2 and (2.5). Extending this to a population Ω, the variables A in a representation (A, ) merely describe subpopulations with particular patterns of potential outcomes. Knowing that there exists an individual for whom all representations have certain features in common provides qualitative information about the set of potential outcomes.

For two binary causes {X1, X2}, Theorem 3.2 implies that {X1, X2} is irreducible relative to C for ω* if D11(ω*) = 1 and D10(ω*) = D01(ω*) = 0. Such a pattern is of interest insofar as it indicates that the causal process resulting in this individual’s potential outcomes D(C, ω*) is such that (for some setting of the variables in C \ {X1, X2}), D = 1 if both X1 = 1 and X2 = 1, but not when X1 = 1 and X2 = 0 or vice versa.

Similarly, it follows from Theorem 5.2 that {X1, X2} is singular relative to C for ω* if D11(ω*) = 1 and D10(ω*) = D01(ω*) = D00(ω*) = 0. Hence the causal process producing D(C, ω*) is such that, for some setting of the variables in C \ {X1, X2}, D = 1 if both X1 = 1 and X2 = 1, but not when either X1 = 0 or X2 = 0.

In contrast to the classical notions of interaction arising in linear models (see §6), irreducibility and singularity are causal in that they relate to the potential outcomes. §4 and §5 contain empirical tests for the presence of irreducible or singular interactions.

7.2. Generative mechanism interpretations

A minimal sufficient cause representation may be interpreted in terms of a ‘generative mechanism’:

Definition 7.1. A mechanism M(ω) relative to C takes as input an assignment c to C, and outputs a ‘state’ Mc(ω) which is either ‘active’ (1) or ‘inactive’ (0). A mechanism is said to be generative for D if whenever it is active, the event D = 1 is caused, so that Mc(ω) = 1 implies Dc(ω) = 1. Conversely, a mechanism is said to be preventive for D if whenever Mc(ω) = 1, Dc(ω) = 0 is caused.

Though this definition refers to a mechanism ‘causing’ D = 1 or D = 0, we abstain from defining this more formally in terms of potential outcomes until the next section. Our reason for proceeding in this way is that there may be circumstances in which an investigator is able to posit the existence of a causal mechanism causing D = 1 or D = 0, e.g. based on experiments manipulating the inputs C and output D, but lacks sufficiently detailed information to posit well-defined counterfactual outcomes involving interventions on these (hypothesized) mechanisms.

Definition 7.2. A set of generative mechanisms M = 〈M1, …, Mp〉 will be said to be exhaustive for a given set of potential outcomes if for all ω ∈ Ω, and all c, if Dc(ω) = 1 then for some Mi ∈ M, .

Note that if M forms an exhaustive set of mechanisms for , then it follows that in a context in which no mechanism Mi is active, D = 0.

Proposition 7.3. If M = 〈M1, …, Mp〉 forms an exhaustive set of generative mechanisms for then and .

Proof: Follows from Definitions 7.1 and 7.2.

Proposition 7.4. Suppose M forms an exhaustive set of generative mechanisms for . If , |B| = |C| and B is irreducible for then there exists an individual ω* and a mechanism Mi such that but for all L ∈ B, .

Thus if there exists an exhaustive set of generative mechanisms for and B is irreducible then there is an individual ω* and a mechanism Mi such that Mi is active when all the literals in B take the value 1, and is inactive when any one literal is 0 and the rest continue to take the value 1.

Proof: By Theorem 3.2, since B is irreducible for , there exists ω* ∈ Ω such that DB=1(ω*) = 1 and for all L ∈ B, DB\{L}=1,L=0(ω*) = 0. Since M is an exhaustive set of generative mechanisms for , we have that for all c, . Since DB=1(ω*) = 1, for some Mi ∈ M, . Since for all L ∈ B, DB\{L}=1,L=0(ω*) = 0, we have that .

Proposition 7.5. Suppose M forms an exhaustive set of generative mechanisms for . If , |B| = |C| and B is singular for then there exists an individual ω* and a mechanism Mi such that iff b = 1.

Hence under the conditions of Proposition 7.5, if B is singular then there is an individual ω* and a mechanism Mi such that Mi is active if and only if all the literals in B take the value 1.

Proof: Similar to the proof of Proposition 7.4, replacing Theorem 3.2 by Theorem 5.2.

As the next example shows, the assumption that there exists an exhaustive set of generative mechanisms is substantive, and does not hold in all cases.

Example 6. Suppose C = {X1, X2} where X1 and X2 denote the presence of a variant allele at two particular loci. Let M1 and M2 denote two different proteins such that Mi is produced iff Xi = 0, i.e. the associated allele is not present. Finally let D denote some characteristic whose occurrence is blocked by the presence of either M1 or M2 (or both). In this example

By De Morgan’s Law, the second equation here may also be expressed as:

The mechanisms M1 and M2 are preventive for D, so that D = 1 only occurs when both mechanisms are inactive. An exhaustive set of generative mechanisms does not exist because in this example there are no generative mechanisms.
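Example 6 can be checked by brute force. The sketch below encodes the two proteins and the blocking rule exactly as described above (the function names are ours) and confirms that, by De Morgan's law, D = 1 exactly when X1 = X2 = 1.

```python
from itertools import product


def proteins(x1, x2):
    """M_i is produced iff X_i = 0, i.e. the variant allele is absent."""
    return int(x1 == 0), int(x2 == 0)


def outcome(x1, x2):
    """D is blocked by the presence of M1 or M2 (or both)."""
    m1, m2 = proteins(x1, x2)
    return int(not (m1 or m2))


table = {(x1, x2): outcome(x1, x2) for x1, x2 in product((0, 1), repeat=2)}
print(table)  # {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}

# De Morgan: not (M1 or M2) == (not M1) and (not M2), and not M_i == X_i.
assert all(d == int(x1 == 1 and x2 == 1) for (x1, x2), d in table.items())
```

On our reading, the resulting potential outcomes, D11 = 1 and D10 = D01 = D00 = 0, are those of the single conjunction X1X2 even though both mechanisms are preventive, so the potential outcomes alone do not reveal whether generative mechanisms exist.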

It is natural to suppose that mechanisms are ‘modular’ and thus may be isolated or rendered inactive without affecting other such mechanisms. This may be formalized via potential outcomes:

Definition 7.6. An exhaustive set of generative mechanisms M for is said to support counterfactuals if there exist well-defined potential outcomes DC=c,M=m(ω) and DM=m(ω) such that

The important assumption here is the existence of the potential outcomes Dm(ω) and Dc,m(ω). Note that if M supports counterfactuals then interventions on C do not affect D if interventions are also made on M.

Proposition 7.7. If the exhaustive set of generative mechanisms M supports counterfactuals, then

so that consistency holds for the potential outcomes Dm(ω) and Dc,m(ω).

Proof: This follows because

7.3. Counterfactual interpretation of a minimal sufficient cause representation

If we have an exhaustive set of generative mechanisms which supports counterfactuals, and further each mechanism is a conjunction of literals, then there will be a minimal sufficient cause representation that itself supports counterfactuals.

Definition 7.8. A representation (A, ) for will be said to be structural if for each pair (Ai, Bi), Ai ∈ A, there exists a generative mechanism (or mechanisms) Mi such that Mi = Ai ∧ (Λ(Bi)) and

Thus if (A, ) is structural, then each pair (Ai, Bi), Ai ∈ A, corresponds to a mechanism Mi. In this case the variables Ai(ω) may be interpreted as indicating whether the corresponding mechanism(s) Mi are ‘present’ in individual ω. We may thus associate potential outcomes with the Ai, corresponding to removing (or inserting) the corresponding mechanism(s). This interpretation of the Ai’s is consistent with the notion of ‘co-cause’ which arises in the literature on minimal sufficient causes.

We note that ‘structural’ is often used as a synonym for ‘causal’. However, even under the weak interpretation, a sufficient cause representation is causal in that it represents a set of potential outcomes. The word is used in Definition 7.8 to connote that the structure of the representation itself represents (additional) potential outcomes arising from intervention on a set of mechanisms M that correspond with the pairs (Ai, Bi), Ai ∈ A, . Note that there need not be a unique structural representation (A, ) for . There might be several functionally equivalent generative mechanisms corresponding to a given pair (Ai, Bi).

Proposition 7.9. If a representation (A, ) for , where A = 〈A1, …, Ap〉, is structural then the associated set of generative mechanisms 〈M1, …, Mp〉 is exhaustive.

Proof: This follows from Definitions 2.7 and 7.2.

Proposition 7.10. Suppose that M forms an exhaustive set of generative mechanisms for and M supports counterfactuals. If for all Mi ∈ M there exists , and an Ai such that for all c, and ω ∈ Ω, if Ai(ω) = 1 then (Mi)c(ω) = Λ(Bi)c, then (A = 〈A1, …, Ap〉, ) forms a representation for that is structural.

Proof: This follows from Definitions 2.7 and 7.8.

Proposition 7.11. If there is some representation (A, ) that is structural and is irreducible for then there exists a mechanism Mi that is active only if B = 1.

Proof: If B is irreducible for then there exists with ; the mechanism Mi = Ai ∧ (Λ(Bi)) is such that Mi = 1 only if B = 1.

Note that the conclusion of Proposition 7.11, unlike those of Propositions 7.4 and 7.5, does not make reference to an individual ω*. This is because Proposition 7.11 assumes that there is a representation (A, ) that is structural: in this representation the Ai variables may be seen as a constituent part of the corresponding mechanism Mi.

Note that there may exist a set of exhaustive generative mechanisms, but these mechanisms may not themselves be conjunctions of literals, so that there is no sufficient cause representation for that is structural:

Example 7. Suppose C = {X1, X2} where X1 and X2 again denote the presence of variant alleles, acquired by maternal and paternal inheritance respectively, at a particular locus. Let M denote a protein that is produced iff either X1 = X2 = 1 or X1 = X2 = 0 and let D denote some characteristic that occurs iff M = 1. Suppose we can intervene to remove or add the protein. We then have that

Thus {M} constitutes an exhaustive set of generative mechanisms for . We have a representation for :

with A1(ω) = A2(ω) = 1 for all ω ∈ Ω. However, this representation is not structural because A1(ω)X1X2 and A2(ω)(1−X1)(1−X2) do not constitute separate mechanisms for which interventions are conceivable; there is only one mechanism M, the protein. Since for any ω ∈ Ω, D11(ω) = 1 and D10(ω) = D01(ω) = 0, {X1, X2} is irreducible relative to C; however, it is not the case that there is a mechanism Mi that is active only if X1X2 = 1, since for the only mechanism M it is the case that M = 1 if X1 = X2 = 0. Note, however, that in this example there is still a mechanism, namely M, that will be ‘active’ if X1 = X2 = 1 but ‘inactive’ if only one of X1 or X2 is 1.
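A brute-force check of Example 7, with function names of our own choosing: the single mechanism M, and hence D, equals 1 exactly when X1 = X2, which agrees with the representation A1X1X2 ∨ A2(1 − X1)(1 − X2) with A1 = A2 = 1. The pattern D11 = 1, D10 = D01 = 0 gives irreducibility, while D00 = 1 shows that the same mechanism is also active when X1 = X2 = 0.

```python
from itertools import product


def protein(x1, x2):
    """M is produced iff X1 = X2 = 1 or X1 = X2 = 0."""
    return int(x1 == x2)


def d(x1, x2):
    """D occurs iff M = 1."""
    return protein(x1, x2)


def representation(x1, x2, a1=1, a2=1):
    """A1*X1*X2 OR A2*(1 - X1)*(1 - X2), with A1 = A2 = 1 as in the text."""
    return int((a1 and x1 and x2) or (a2 and (1 - x1) and (1 - x2)))


cells = list(product((0, 1), repeat=2))
assert all(d(*c) == representation(*c) for c in cells)

# Irreducible pattern for {X1, X2}: D11 = 1 and D10 = D01 = 0 ...
assert d(1, 1) == 1 and d(1, 0) == 0 and d(0, 1) == 0
# ... but D00 = 1 as well, because the one mechanism also fires at X1 = X2 = 0.
assert d(0, 0) == 1
```

The check only confirms that the representation reproduces the potential outcomes; whether it is structural is a substantive question about mechanisms that no truth table can settle.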

Example 8. To illustrate the results in the paper we consider again the data presented in Table 1. We let D denote bladder cancer, X1 smoking, X2 the S NAT2 genotype, and X3 the *10 allele on NAT1. As discussed in Example 5, if the effect of C = {X1, X2, X3} is unconfounded for D and we fit the model

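$$E[D \mid X_1 = x_1, X_2 = x_2, X_3 = x_3] = \beta_\emptyset + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_{12} x_1 x_2 + \beta_{13} x_1 x_3 + \beta_{23} x_2 x_3 + \beta_{123} x_1 x_2 x_3 \tag{7.2}$$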

then, if X1, X2 and X3 have positive monotonic effects on D (relative to C), {X1, X2, X3} is irreducible relative to C if any of the following hold:

$$\beta_{123} > \beta_1, \qquad \beta_{123} > \beta_2, \qquad \beta_{123} > \beta_3.$$

We cannot fit the model (7.2) directly with case control data. However, under the assumption that the outcome is rare (reasonable with bladder cancer) so that odds ratios approximate risk ratios, we can fit the model:

| (7.3) |

and the conditions for the irreducibility of {X1, X2, X3} relative to C under monotonicity of {X1, X2, X3} become:

If we fit model (7.3) using maximum likelihood, we find that

In each case the point estimate suggests evidence of irreducibility, under monotonicity of {X1, X2, X3}, but the sample size is not sufficiently large to draw this conclusion confidently. With monotonicity of {X1, X2, X3}, irreducibility also implies a singular interaction for {X1, X2, X3}. If we assume that only {X1, X2} or {X1, X3} or {X2, X3} are monotonic relative to C then the conditions for irreducibility in Example 5 can be expressed respectively as:

From model (7.3) we have that

Again, in each case the point estimate suggests evidence of irreducibility, under monotonicity of just two of the three exposures, but the sample size is not sufficiently large to draw this conclusion confidently. With monotonicity of two of the three exposures, irreducibility also implies a singular interaction for {X1, X2, X3}. The test for irreducibility in Example 5 without assumptions about monotonicity can be expressed as:

From model (7.3) we have that

In this case, not even the point estimate is positive.

If {X1, X2, X3} is in fact irreducible and if there exists a representation that is structural, then it follows by Proposition 7.11 that there exists a mechanism that is active only if X1 = 1, X2 = 1, X3 = 1.

8. Concluding Remarks

In this paper we have developed general results for notions of interaction that we referred to as ‘irreducibility’ (aka ‘a sufficient cause interaction’) and ‘singularity’ for sufficient cause models with an arbitrary number of dichotomous causes. The theory could be extended by developing notions of sufficient cause, irreducibility and singularity for causes and outcomes that are categorical and/or ordinal in nature.

Acknowledgments

We thank Stephen Stigler for pointing us to earlier work by Cayley on minimal sufficient cause models. We thank James Robins for helpful conversations. Tyler VanderWeele was supported by the National Institutes of Health (R01 ES017876). Thomas Richardson was supported by the National Science Foundation (CRI 0855230), the National Institutes of Health (R01 AI032475), and The Institute of Advanced Studies, University of Bologna.

Footnotes

AMS 2000 subject classifications: Primary 62A01; secondary 68T30, 62J99

Contributor Information

Tyler J. VanderWeele, Harvard School of Public Health, Department of Epidemiology, 677 Huntington Avenue, Boston, MA 02115, tvanderw@hsph.harvard.edu, URL: http://www.hsph.harvard.edu/faculty/tyler-vanderweele/

Thomas S. Richardson, University of Washington, Department of Statistics, Box 354322, Seattle, WA 98195, thomasr@uw.edu

References

- [1]. Aickin M. Causal Analysis in Biomedicine and Epidemiology Based on Minimal Sufficient Causation. Marcel Dekker; New York: 2002.

- [2]. Bateson W. Mendel’s Principles of Heredity. Cambridge University Press.

- [3]. Cayley A. Note on a question in the theory of probabilities. London, Edinburgh and Dublin Philosophical Magazine. 1853;VI:259.

- [4]. Cayley A. A theorem on trees. Quart. J. Math. 1889;23:376–378.

- [5]. Cordell H. Epistasis: what it means, what it doesn’t mean, and statistical methods to detect it in humans. Hum. Mol. Genet. 2002;11:2463–2468. doi: 10.1093/hmg/11.20.2463.

- [6]. Cox DR. The Planning of Experiments. Wiley; New York: 1958.

- [7]. Flanders D. Sufficient-component cause and potential outcome models. Eur. J. Epidemiol. 2006;21:847–853. doi: 10.1007/s10654-006-9048-3.

- [8]. Greenland S, Brumback B. An overview of relations among causal modelling methods. Int. J. Epidemiol. 2002;31:1030–1037. doi: 10.1093/ije/31.5.1030.

- [9]. Greenland S, Poole C. Invariants and noninvariants in the concept of interdependent effects. Scand. J. Work Environ. Health. 1988;14:125–129. doi: 10.5271/sjweh.1945.

- [10]. Koopman JS. Interaction between discrete causes. Am. J. Epidemiol. 1981;113:716–724. doi: 10.1093/oxfordjournals.aje.a113153.

- [11]. Mackie JL. Causes and conditions. American Philosophical Quarterly. 1965;2:245–255.

- [12]. Marcovitz AB. Introduction to Logic Design. McGraw-Hill; 2001.

- [13]. McCluskey EJ. Minimization of Boolean functions. The Bell System Technical Journal. 1956;35:1417–1444.

- [14]. Neyman J. Sur les applications de la théorie des probabilités aux expériences agricoles: Essai des principes. 1923. Excerpts in English: Dabrowska D, Speed T, editors. Statistical Science. 1990;5:463–472.

- [15]. Novick LR, Cheng PW. Assessing interactive causal influence. Psychological Review. 2004;111:455–485. doi: 10.1037/0033-295X.111.2.455.

- [16]. Pearl J. Causality: Models, Reasoning, and Inference. Cambridge University Press; Cambridge: 2000.

- [17]. Phillips P. Epistasis – the essential role of gene interactions in the structure and evolution of genetic systems. Nature Reviews Genetics. 2008;9:855–867. doi: 10.1038/nrg2452.

- [18]. Quine WV. The problem of simplifying truth functions. American Math. Monthly. 1952;59:521–531.

- [19]. Quine WV. A way to simplify truth functions. American Math. Monthly. 1955;62:627–631.

- Robins JM. A new approach to causal inference in mortality studies with sustained exposure period - application to control of the healthy worker survivor effect. Mathematical Modelling. 1986;7:1393–1512.

- [20]. Robins JM. Marginal structural models versus structural nested models as tools for causal inference. In: Halloran E, Berry D, editors. Statistical Models in Epidemiology: the Environment and Clinical Trials. Springer; New York: 1999. pp. 95–134.

- Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

- [21]. Rothman KJ. Causes. Am. J. Epidemiol. 1976;104:587–592. doi: 10.1093/oxfordjournals.aje.a112335.

- [22]. Rothman KJ, Greenland S. Modern Epidemiology. Lippincott-Raven; Philadelphia: 1998.

- [23]. Rubin DB. Estimating causal effects of treatments in randomized and nonrandomized studies. J. Educ. Psychol. 1974;66:688–701.

- [24]. Rubin DB. Bayesian inference for causal effects: The role of randomization. Ann. Statist. 1978;6:34–58.

- [25]. Rubin DB. Comment on Neyman (1923) and causal inference in experiments and observational studies. Statistical Science. 1990;5:472–480.

- [26]. Taylor JA, Umbach DM, Stephens E, Castranio T, Paulson D, Robertson G, Mohler JL, Bell DA. The role of N-acetylation polymorphisms in smoking-associated bladder cancer: Evidence of a gene-gene-exposure three-way interaction. Cancer Research. 1998;58:3603–3610.

- [27]. VanderWeele T. Empirical tests for compositional epistasis. Nature Reviews Genetics. 2010;11:166. doi: 10.1038/nrg2579-c1.

- [28]. VanderWeele T. Epistatic interactions. Statistical Applications in Genetics and Molecular Biology. 2010;9:1–22. doi: 10.2202/1544-6115.1517.

- [29]. VanderWeele TJ, Hernán MA. From counterfactuals to sufficient component causes, and vice versa. Eur. J. Epidemiol. 2006;21:855–858. doi: 10.1007/s10654-006-9075-0.

- [30]. VanderWeele TJ, Robins JM. Directed acyclic graphs, sufficient causes and the properties of conditioning on a common effect. Am. J. Epidemiol. 2007;166:1096–1104. doi: 10.1093/aje/kwm179.

- [31]. VanderWeele TJ, Robins JM. The identification of synergism in the sufficient-component cause framework. Epidemiol. 2007;18:329–339. doi: 10.1097/01.ede.0000260218.66432.88.

- [32]. VanderWeele TJ, Robins JM. Empirical and counterfactual conditions for sufficient cause interactions. Biometrika. 2008;95:49–61.

- [33]. VanderWeele TJ, Robins JM. Signed directed acyclic graphs for causal inference. J. Roy. Statist. Soc., Series B. 2010;72:111–127. doi: 10.1111/j.1467-9868.2009.00728.x.

- [34]. Vansteelandt S, Goetghebeur E. Causal inference with generalized structural mean models. J. Roy. Statist. Soc., Series B. 2003;65:817–835.