Significance

Building on recent electrophysiological evidence showing that novel communicative behavior relies on computations that operate over temporal scales independent from transient sensorimotor behavior, here we report that those computations occur simultaneously in pairs with a shared communicative history, but not in pairs without a shared history. This pair-specific interpersonal synchronization was driven by communicative episodes in which communicators needed to mutually adjust their conceptualizations of a signal’s use. That interpersonal cerebral synchronization was absent when communicators used stereotyped signals. These findings indicate that establishing mutual understanding is implemented through simultaneous in-phase coordination of cerebral activity across communicators, consistent with the notion that pair members temporally synchronize their conceptualizations of a signal’s use.

Keywords: social interaction, theory of mind, experimental semiotics, dual functional magnetic resonance imaging, conceptual knowledge

Abstract

How can we understand each other during communicative interactions? An influential suggestion holds that communicators are primed by each other’s behaviors, with associative mechanisms automatically coordinating the production of communicative signals and the comprehension of their meanings. An alternative suggestion posits that mutual understanding requires shared conceptualizations of a signal’s use, i.e., “conceptual pacts” that are abstracted away from specific experiences. Both accounts predict coherent neural dynamics across communicators, aligned either to the occurrence of a signal or to the dynamics of conceptual pacts. Using coherence spectral-density analysis of cerebral activity simultaneously measured in pairs of communicators, this study shows that establishing mutual understanding of novel signals synchronizes cerebral dynamics across communicators’ right temporal lobes. This interpersonal cerebral coherence occurred only within pairs with a shared communicative history, and at temporal scales independent from signals’ occurrences. These findings favor the notion that meaning emerges from shared conceptualizations of a signal’s use.

Human sociality is built on the capacity for mutual understanding, but its principles and mechanisms remain poorly understood (1). Given the pervasive ambiguity of communicative signals (2), how can we expect to understand each other? For instance, I might think of tacitly asking my friend Tom to enter a pub by virtue of a pointing gesture toward a nearby bike, believing that both of us recognized the bike of his girlfriend Emma, only to realize how my gesture would be interpreted differently as Tom tells me about his recent split from Emma (2, 3).

An influential suggestion holds that communicators are mutually primed by each other’s behaviors, with associative mechanisms automatically coordinating the production of communicative signals and the comprehension of their meanings (4–8). In this framework, mutual understanding arises by virtue of individual experiences with a signal’s properties, as when linguistic features of a word are biased by recent experience of those features (9, 10). Alternatively, mutual understanding might require shared conceptualizations of a signal’s use, abstracted away from specific experiences during a communicative interaction (11–14). In this framework, mutual understanding arises from what communicators mutually know, “conceptual pacts” that are negotiated by communicators over the course of their interactions (11). Although both possibilities emphasize that communicative signals are context dependent (15), they put different emphasis on the relevance of the communicative signal. Both possibilities predict that mutual understanding is neurally implemented through temporally coherent and spatially overlapping activity across communicators (7, 16–18), but with different cerebral dynamics. If meaning is shared by virtue of signals’ features, then communicators’ cerebral coherence should be synchronized to the occurrence of those signals (7, 19, 20). If meaning is shared through conceptual pacts, then communicators’ cerebral coherence should be synchronized to abstractions generalized over multiple communicative episodes, without reference to the occurrence of a specific experience (1, 11, 14). Those predictions can be tested by manipulating the dynamics of mutual understanding across communicators, while capturing the dynamics of their interpersonal cerebral coherence.

Mutual understanding was manipulated with an experimentally controlled communicative task (16, 21). This task precludes the use of communication channels and preexisting shared representations used during daily communication (e.g., a common idiom, body emblems, facial expressions), thereby gaining control over the communicative environment and the history of that environment (16, 21). The cerebral characteristics of mutual understanding were isolated through three nested analyses performed on functional magnetic resonance imaging (fMRI) activities simultaneously recorded in pairs of communicators engaged in understanding each other over a series of communicative interactions (22, 23). First, a model-based analysis isolated cerebral signals whose temporal profile matched the behavioral dynamics of mutual understanding observed across Communicators and Addressees. Second, a model-free analysis determined the frequency and phase characteristics of the interpersonal cerebral coherence of Communicator–Addressee pairs. Third, a model-based analysis tested whether interpersonal cerebral coherence in Communicator–Addressee pairs is specifically driven by the creation of novel shared meanings, independently from responses to transient signals.

Results

Twenty-seven pairs of participants were asked to jointly create a goal configuration of two geometrical tokens, using the movements of the tokens on a digital game board as the only available communicative signal. One member of a pair, the “Communicator,” knew the goal configuration, and he moved his token on the game board with his right hand to inform the other member, the “Addressee,” where and how to position her token (Fig. 1A, Movie S1, and Fig. S1). A systematic analysis of the communicative signals used by each pair of participants indicates that Communicators and Addressees do not independently learn overlapping interpretations of the behaviors. Rather, Communicators take into account how Addressees interpreted their signals, and adjust them accordingly (see SI Materials and Methods and Fig. S2 on how pairs converge on a shared meaning). Furthermore, the same sequence of movements (a signal) could be used by different pairs to represent different meanings, and the same meaning could be conveyed by different signals across different pairs (e.g., Movies S1–S4). The same signal could even be used to convey different goal states in different interactions within the same pair (16). These observations indicate that, in this task, despite the asymmetric load on signal production, signal–meaning mappings are dynamically and jointly constructed by each communicative pair of Communicators and Addressees from a vast space of possibilities (16, 21, 24). Despite the obvious surface differences between this communicative task and daily conversations, in both situations effective communication arises only when a pair converges on a shared meaning (1, 12). We experimentally manipulated the dynamics of shared meaning by using two types of communicative problems distributed over 84 successive interactions. 
There were problems in which the pairs converged on stereotyped signal–meaning mappings in a training session before the fMRI experiment (“Known” interactions), and there were problems in which signal–meaning mappings were open for negotiation (“Novel” interactions). Accordingly, during novel interactions, the same pair could solve multiple instances of the same problem type through different communicative behaviors, depending on the recent history of interactions of that pair (Movies S5–S7), an indication of the ability of this game to capture the dynamics of pair-specific conceptual pacts (11).

Fig. 1.

Behavioral and cerebral markers of mutual understanding. (A) Communicative interaction: to achieve a joint goal configuration of their tokens (top left grid), the Communicator needed to convince the Addressee to move her token (in orange) to a location and orientation unknown to her. The Communicator could achieve this only by moving his token (in blue) across the digital grid, knowing that the Addressee will observe and interpret those movements as best as she can, before moving her token in response (see Fig. S1A and Movie S1 for full sequence of events). Successful performance requires each pair to share the meanings of those communicative signals. (B) There were communicative problems for which the pairs had previously established mutual understanding (Known, with communicative success at ceiling level), and problems in which shared meaning of the communicative signals had yet to be established (Novel, with logarithmically increasing success). Scan time is binned in seven blocks of six communicative problems each. Error bars indicate ±1 SEM. (C) A whole-brain model-based analysis (P < 0.05, familywise error corrected) indicated that activity in the right superior temporal gyrus (rSTG) ([58, −8, 5]) was higher during Known than Novel interactions and followed the same logarithmically increasing dynamics as communicative success during Novel interactions, both in Communicators producing communicative signals and in Addressees comprehending those signals (see Figs. S3 and S4, and Table S1 for masking procedures). For anatomical reference, the location of Heschl’s gyrus is indicated in red. The two lower panels indicate parameter estimates of activity evoked in rSTG over scan time as a function of problem type (Known, Novel), separately for Communicators and Addressees. Continuous lines indicate a significant fit to a logarithmic function.

Matched Brain–Behavior Dynamics of Mutual Understanding.

First, we searched across the whole brain for cerebral dynamics matching the behavioral dynamics of mutual understanding. Over time, Communicator–Addressee pairs logarithmically increased their communicative success when dealing with Novel problems [F(1,40) = 51.6; P < 0.001; adjusted R² = 0.55], against a stable and fully successful performance during Known problems (Fig. 1B). During Known trials, performance can largely rely on retrieval of mutual knowledge preestablished for each problem. Multiple regression analysis revealed that the medial prefrontal cortex and the right temporal lobe were more involved during Known interactions than Novel interactions (Fig. S3), two cortical regions previously shown to be more involved during communicative than noncommunicative control interactions (16). Within the right temporal lobe, an anterior portion of the superior temporal gyrus [right superior temporal gyrus (rSTG)] showed cerebral dynamics that matched the behavioral dynamics of mutual understanding, namely the same logarithmic increase as communicative success over the course of Novel problems (Fig. 1C). This region had the peculiarity that, despite large differences in the sensory inputs and motor outputs experienced by Communicators and Addressees, its temporal dynamics were closely matched across both members of a communicative pair (Fig. 1C). This finding opens the possibility of isolating the interpersonal coupling mechanisms that drive those increases in blood oxygen level-dependent (BOLD) signal across Communicators and Addressees, with both increases matched to the dynamics of mutual understanding.
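
The logarithmic trend described above can be illustrated with a minimal sketch. It fits y = a + b·ln(x) by ordinary least squares and reports the adjusted R². The block-wise success rates below are invented for illustration and are not the data of this study; the function name `fit_logarithmic` is hypothetical, and the source does not state which software or exact model specification was used.

```python
import math


def fit_logarithmic(x, y):
    """Least-squares fit of y = a + b * ln(x); returns (a, b, adjusted R^2)."""
    log_x = [math.log(v) for v in x]
    n = len(x)
    mean_lx = sum(log_x) / n
    mean_y = sum(y) / n
    sxx = sum((u - mean_lx) ** 2 for u in log_x)
    sxy = sum((u - mean_lx) * (v - mean_y) for u, v in zip(log_x, y))
    b = sxy / sxx
    a = mean_y - b * mean_lx
    ss_res = sum((v - (a + b * u)) ** 2 for u, v in zip(log_x, y))
    ss_tot = sum((v - mean_y) ** 2 for v in y)
    r2 = 1.0 - ss_res / ss_tot
    # Adjusted R^2 for a model with one predictor (n - 2 residual df).
    r2_adj = 1.0 - (1.0 - r2) * (n - 1) / (n - 2)
    return a, b, r2_adj


# Hypothetical proportion of solved Novel problems in seven successive blocks.
blocks = [1, 2, 3, 4, 5, 6, 7]
success = [0.42, 0.52, 0.64, 0.66, 0.74, 0.74, 0.81]

intercept, slope, r2_adj = fit_logarithmic(blocks, success)
print(f"success = {intercept:.2f} + {slope:.2f} * ln(block), adjusted R^2 = {r2_adj:.2f}")
```

A positive slope on ln(block) corresponds to fast early gains that level off, which is the shape reported for both communicative success and rSTG activity during Novel problems.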

Interpersonal Cerebral Coherence During Mutual Understanding.

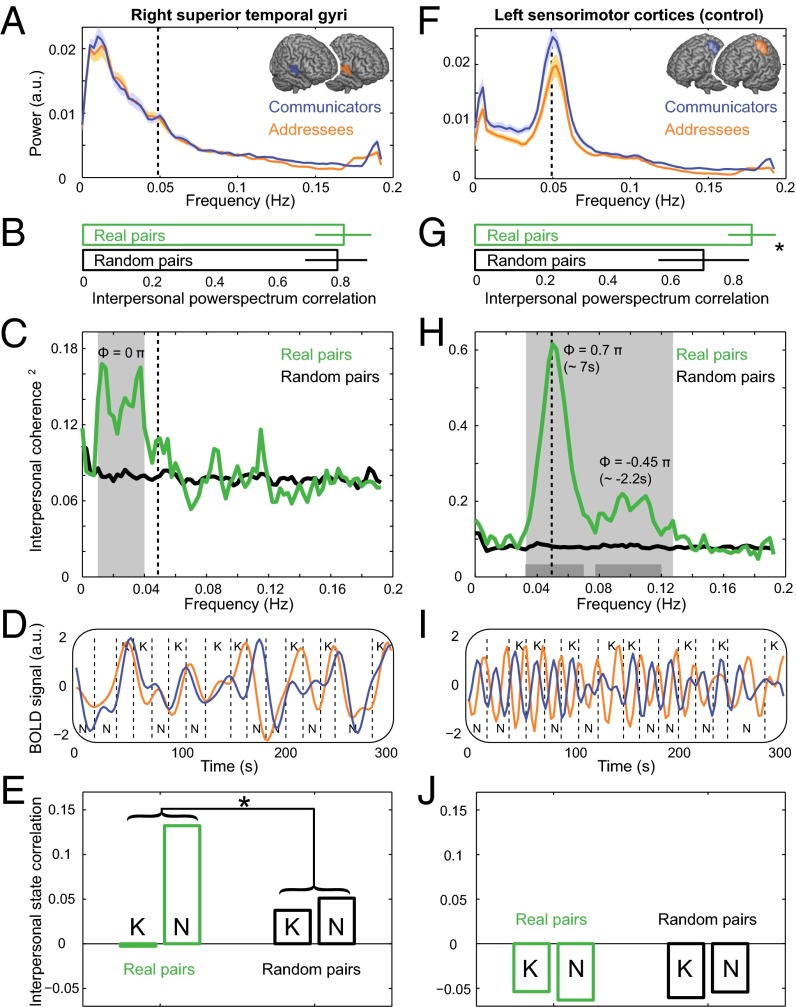

Second, we defined the characteristics of interpersonal cerebral coupling of Communicator–Addressee pairs. We reasoned that mutual understanding should be particularly evident in real communicative pairs that were in the process of creating shared meanings. In contrast, mutual understanding cannot emerge in random pairs engaged in solving the same communicative problems as real pairs, but without a shared communicative ground. Cerebral correlates of mutual understanding that fulfilled this criterion were characterized with coherence spectral-density analysis of BOLD time series from the rSTG of pairs of participants. This analysis provides model-free estimates of interpersonal coherence, namely frequency and phase-lag of cerebral dynamics of Communicators and Addressees. There was interpersonal coherence in the rSTG dynamics at 0.01–0.04 Hz (25–100 s), only within real communicative pairs, with zero phase-lag [0.05 ± 0.23 π (mean ± SD); Fig. 2C and Fig. S5]. This data-driven finding is particularly relevant for distinguishing between the two possible mechanisms of mutual understanding because coherence was present at frequencies well below the ∼0.05-Hz periodic occurrence of individual communicative problems and behaviors (every ∼20 s; vertical dashed line in Fig. 2C). For comparison, a control region (around the left central sulcus; Fig. 2F), expected a priori to show interpersonal couplings driven by the sensorimotor events occurring in the experiment, was coherent across real pairs at a frequency of 0.05 Hz (20 s) with a phase-lag corresponding to 7 s (0.7 ± 0.11 π; Fig. 2H and Fig. S5). These parameters closely match the dominant experimental frequency and the average temporal gap between the token movements of the participants in a pair (Fig. S1A). Inspection of the BOLD time series revealed that this region, unlike rSTG, remained indifferent to the Known or Novel nature of the communicative problems (compare Fig. 2 D and I), despite a strong sensitivity to the trial-by-trial timing differences between real and random pairs. Given that communicative problems and behaviors were also designed to be out of phase (Fig. 1A), the zero phase-lag low-frequency cerebral coherence found in the rSTG indicates that mutual understanding cannot emerge from mutual priming linking production and comprehension of individual communicative behaviors (5, 6, 25). Rather, zero phase-lag low-frequency cerebral coherence limited to those pairs with a shared communicative history indicates that mutual understanding relies on pair-specific computations decoupled from the occurrence of specific communicative events within communicating pairs.
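
The two quantities reported in this section, magnitude-squared coherence and phase-lag at a given frequency, can be illustrated with a minimal pure-Python sketch of a Welch-style estimator. This is not the analysis pipeline of the study. The signals are simulated white noise, and the 1-s sampling interval, the 100-sample segments, the built-in 7-s delay, and the function names `windowed_coefficient` and `coherence_and_phase` are all assumptions chosen for illustration.

```python
import cmath
import math
import random


def windowed_coefficient(segment, freq_hz, dt):
    """Fourier coefficient of a mean-removed, Hann-windowed segment at freq_hz."""
    n = len(segment)
    mean = sum(segment) / n
    coeff = 0j
    for k, value in enumerate(segment):
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1))
        coeff += hann * (value - mean) * cmath.exp(-2j * math.pi * freq_hz * k * dt)
    return coeff


def coherence_and_phase(x, y, freq_hz, dt, seg_len):
    """Magnitude-squared coherence and phase (radians) at one frequency.

    Spectra are averaged over half-overlapping segments.
    A positive phase means that y lags x.
    """
    pxx = pyy = 0.0
    pxy = 0j
    for start in range(0, len(x) - seg_len + 1, seg_len // 2):
        fx = windowed_coefficient(x[start:start + seg_len], freq_hz, dt)
        fy = windowed_coefficient(y[start:start + seg_len], freq_hz, dt)
        pxx += abs(fx) ** 2
        pyy += abs(fy) ** 2
        pxy += fx * fy.conjugate()
    return abs(pxy) ** 2 / (pxx * pyy), cmath.phase(pxy)


# Assumed simulation settings (not taken from the study).
DT = 1.0      # sampling interval in seconds
FREQ = 0.05   # frequency of interest in Hz (20-s period)
DELAY = 7     # delay of y relative to x, in samples (7 s at DT = 1)
N = 1000

rng = random.Random(0)
shared = [rng.gauss(0, 1) for _ in range(N + DELAY)]
x = [shared[k + DELAY] + rng.gauss(0, 0.2) for k in range(N)]
y = [shared[k] + rng.gauss(0, 0.2) for k in range(N)]   # delayed copy of x plus noise
unrelated = [rng.gauss(0, 1) for _ in range(N)]         # stands in for a random pair

msc_real, phase_real = coherence_and_phase(x, y, FREQ, DT, seg_len=100)
msc_random, _ = coherence_and_phase(x, unrelated, FREQ, DT, seg_len=100)

# Convert phase to a time lag: lag = phase / (2 * pi * f).
lag_seconds = phase_real / (2 * math.pi * FREQ)
print(f"coupled signals:   coherence = {msc_real:.2f}, phase = {phase_real / math.pi:.2f} pi, lag = {lag_seconds:.1f} s")
print(f"unrelated signals: coherence = {msc_random:.2f}")
```

The last step is the same conversion that links the values reported for the sensorimotor control region: a phase of 0.7 π at 0.05 Hz corresponds to 0.7 π / (2 π × 0.05 Hz) = 7 s, whereas a phase near zero, as found in the rSTG, corresponds to simultaneous fluctuations.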

Fig. 2.

Pair- and state-specific cerebral synchronization during mutual understanding. (A) Power spectral densities of BOLD signal in rSTG reveal predominantly low-frequency effects in both Communicators and Addressees. Shades indicate ±1 SEM. (B) Power spectra of real and random communicative pairs were matched, ruling out power differences as a source of pair-specific phase coupling. Error bars indicate ±1 SD. (C) Coherence spectra of real communicative pairs differed from those of random pairs at frequencies lower than the dominant experimental frequency (indicated by dashed line, ∼0.05 Hz). Gray surface indicates frequencies with a statistically significant difference in coherence (P < 0.05, corrected for multiple comparisons across frequencies, nonparametric randomization across participant pairs). Coherence values are magnitude squared to indicate explained variance per frequency bin. (D) Representative BOLD signal time courses of a real communicative pair (bandpass filtered between 0.01 and 0.04 Hz). Dashed lines indicate onsets and offsets of communicative interactions. K and N denote Known and Novel problem types, respectively. (E) Pair-specific (real vs. random pairs) and state-specific (Novel vs. Known episodes) synchronization of rSTG activity, estimated independently for each group of communicative problems. Synchronization was stronger in real pairs dealing with Novel problem types. (F) Power spectral densities of BOLD signal in left sensorimotor cortex ([−38, −19, 55]) reveal consistent activation at the dominant experimental frequency. (G) Power spectra of real communicative pairs were more similar than those of random pairs. Asterisk denotes P < 0.001. (H) Coherence spectra of real communicative pairs differed from those of random pairs at the dominant experimental frequency, with a phase difference matching the average temporal gap between the movements of the participants in a pair. 
Gray surface indicates frequencies with a statistically significant difference in coherence. Dark gray bars at Bottom indicate frequencies with a statistically significant phase difference. (I) BOLD signal time courses of the same communicative pair as in D (bandpass filtered between 0.03 and 0.07 Hz) from the left sensorimotor cortex. (J) BOLD signal synchronization of left sensorimotor cortex was not influenced by pair or state.

State-Specific Cerebral Synchronization During Mutual Understanding.

Third, we tested whether the interpersonal cerebral coherence found in real pairs is specifically driven by the creation of novel shared meanings. Following previous behavioral findings (16, 21), we expected that mutual understanding should be particularly evident when real communicative pairs create shared meanings, i.e., during Novel trials, and less when the same pairs retrieve stereotyped signal–meaning mappings, i.e., during Known trials. Behaviorally, real pairs had stronger interaction-by-interaction couplings than random pairs during Novel interactions [cross-correlation of planning times; real pairs: r = 0.15 ± 0.18, mean ± SD; random pairs: r = 0.06 ± 0.16; t(727) = 2.8; P = 0.006, two-sided independent t test], whereas no differential mutual adjustments were observed over the course of the Known interactions (real pairs: r = 0.09 ± 0.17; random pairs: r = 0.06 ± 0.17). The cerebral sources of this stronger interpersonal coupling in real pairs during Novel trials were isolated with a cross-correlation analysis on independent fMRI time series for each set of Known and Novel problems, i.e., communicative episodes with dynamics corresponding to the frequency characteristics of interpersonal coupling. Furthermore, to test whether this interpersonal coupling was independent from responses to transient events within each trial, a multiple regression analysis estimated subject-specific time series of rSTG activity. This model-based analysis generated independent fMRI time series for each task state, defined as one or more consecutive communicative problems of the same type (Novel, Known), having accounted for and removed variance related to transient task events within the occurrence of each communicative problem (Fig. S1A). Differential cross-correlation effects between Novel and Known task states were estimated on both real and random pairs. 
This analysis isolates a cerebral index of mutual understanding with a clear behavioral correlate, having controlled for noncommunicative shared experiences across real pairs, e.g., the effects of being engaged in the same task in the scanner, systematic differences in task difficulty between Known and Novel problems, shared experimental structure, and task duration. There was stronger cross-correlation between rSTG activities evoked during episodes involving Novel than Known problems, but only in real pairs [F(1,1454) = 4.6, P = 0.032; Fig. 2E]. This interaction was driven by stronger cross-correlation of the Novel than the Known task states, t(26) = 3.3, P = 0.003 (two-sided paired t test), and by stronger real than random pair cross-correlation of the Novel task states, t(727) = 2.0, P = 0.044 (two-sided independent t test).
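
The degrees of freedom reported above can be reproduced with a short counting sketch. It assumes that random pairs were formed by combining each Communicator with every Addressee from a different pair; this construction is an inference that happens to match the reported values, not a procedure stated in this section.

```python
from itertools import product

N_PAIRS = 27  # communicative pairs reported in the study

# Real pairs: Communicator i interacted with Addressee i.
real_pairs = [(i, i) for i in range(N_PAIRS)]

# Random pairs (assumed construction): each Communicator combined with
# every Addressee he did not interact with.
random_pairs = [(c, a) for c, a in product(range(N_PAIRS), repeat=2) if c != a]

n_real = len(real_pairs)
n_random = len(random_pairs)

# Independent-samples t test, real vs. random pairs.
df_independent = n_real + n_random - 2
# Paired t test, Novel vs. Known within real pairs.
df_paired = n_real - 1
# Error df of a 2 x 2 (pair type x task state) design with all pairings as observations.
df_interaction = 2 * (n_real + n_random) - 4

print(n_real, n_random, df_independent, df_paired, df_interaction)
```

Under this assumption there are 27 real and 702 random pairings, giving 727, 26, and 1,454 degrees of freedom, which agree with the reported t(727), t(26), and F(1,1454).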

These results lead to two observations. First, pair-specific coherence consisted in simultaneous changes occurring when interlocutors needed to mutually adjust their conceptualizations of a signal’s use (Novel task states), but not when interlocutors were using stereotyped signal–meaning mappings (Known task states). Second, pair-specific coherence in the rSTG was driven by coupled activity related to whole communicative episodes, rather than to specific task events within each individual communicative problem. These two observations provide empirical evidence for the notion that mutual understanding pertains to a mutually known conceptual structure that gives meaning to individual communicative signals (11, 12).

Interpretational Issues.

It could be argued that the cerebral dynamics and interpersonal couplings quantified in this study reflect the dynamics of the task structure, rather than mutual understanding. In fact, several task features and control analyses qualify the specificity of these findings. The first analysis isolated common dynamics across Communicators and Addressees, excluding the contribution of sensorimotor or cognitive factors limited to only one member of a communicative pair. The second analysis indicates that the increase in coherence among real communicative pairs occurred in the context of matched amplitude and distribution of spectral power in Communicators and Addressees (Fig. 2 A and B). This finding ensures that the increased coherence was due to pair-specific phase coupling. Furthermore, the zero phase-lag low-frequency cerebral coherence could not be a consequence of task-driven sensorimotor events, like eye movements, given that communicative problems and behaviors were designed to be out of phase (Fig. 1A). Shared knowledge of the task structure, e.g., whether the current problem is Known or Novel, cannot be driving the interpersonal coherence, given that only the Communicator had access to that knowledge. The third analysis indicates that interpersonal coherence in the rSTG followed the behavioral profile of interpersonal couplings in planning times, while being independent from transient task events. This finding rules out the possibility that low-frequency interpersonal coherence reflects any shared experience of the two communicators that extends across more than one interaction. Furthermore, a number of control analyses rule out the possibility that the findings are a consequence of pair-specific coherent changes in attention to auditory stimulation from the MR scanners (Fig. S6), changes in visual attention (Fig. S7), changes in the state of the default-mode network as task difficulty decreased (Fig. S8), or shared trial-specific features (Fig. S9). 
Finally, the pair-specific and state-specific cerebral synchronization observed in rSTG was anatomically specific, being clearly absent from regions previously suggested to be involved in social action understanding and in theory of mind computations (Fig. S10).

Discussion

This study shows that establishing mutual understanding relies on cerebral activity that is spatially and temporally coherent across communicators. This cerebral activity followed the dynamics of mutual understanding over the course of the experiment and emerged from a region embedded in a circuit previously shown to be relevant for human communication (16). The interpersonal coherence of this cerebral activity followed temporal scales independent from transient sensorimotor behavior, and it occurred simultaneously, only in pairs with a shared communicative history. Furthermore, pair-specific temporal synchronization of this activity occurred when novel communicative knowledge was generated among pairs with a shared communicative history, but not when those communicators could rely on stereotyped signal–meaning mappings that did not require equally frequent updating. Taken together, these findings indicate that establishing mutual understanding is implemented through matched cerebral dynamics across communicators, independently from responses to transient signals.

These observations discriminate between two current accounts of how humans can understand each other. This study indicates that interpersonal cerebral coherence related to the emergence of mutual understanding was decoupled from the occurrence of specific communicative events within communicating pairs. This observation provides an empirical argument against the hypothesis that mutual understanding arises by virtue of individual experiences with a signal’s properties (5, 6), and more generally against models of human referential communication that emphasize signal transmission (7, 19). In fact, this study indicates that communicators’ interpersonal cerebral coherence operates across multiple communicative episodes, supporting the hypothesis that mutual understanding arises from shared conceptualizations of a signal’s use (11, 12). The anatomical findings of this study reinforce that conclusion. Interpersonal cerebral coherence emerged from a site, the rSTG, necessary for interpreting visual motion events as driven by mental states (26, 27), and distinct from posterior temporal sites involved in processing biological motion (28, 29). A neighboring portion of the rSTG (<12-mm distance) has also been shown to interpret pragmatic features of linguistic events, embedding utterances into their conversational context (30). Taken together, those reports and the current study converge in linking the rSTG to processing conceptual knowledge attributed to other human agents, dynamically adjusting that knowledge to the current situation. In contrast, anatomical sites previously suggested to support human referential communication [e.g., “action-observation network” (31, 32), “perspective-taking network” (33), and the “social brain” (34)] showed interpersonal coherence effects that were not influenced by the emergence of mutual understanding (Fig. S10). Those sites lacked the pair-specific and state-specific response profile observed in the rSTG.

Building on previous studies focused on communicative behaviors during live social interactions (16, 18, 22, 23, 35–38), this study has experimentally manipulated the dynamics of mutual understanding to assess its consequences on interpersonal cerebral coherence. It remains to be seen which neural mechanism can support the extremely long integration window (∼100 s) through which the rSTG contributes to mutual understanding. Tonic up-regulation of broadband neural activity, previously shown to integrate driving afferences with contextual information (16, 39), may support the integration of the current communicative signal within the conceptual space defined by the recent history of communicative interactions. It also remains to be seen whether the present findings generalize to communicative situations involving faster turn-taking and multiple communication channels, e.g., the situation faced by interlocutors in daily conversations. Faster and more complex signals might modulate the cerebral circuits supporting our ability to develop meaning from shared conceptualizations of a signal’s use (40, 41). However, it remains unclear whether faster and more complex signals, per se, can resolve the fundamental computational bottleneck of the multiple ambiguities of those signals (1–3, 42). This bottleneck becomes obvious in a number of situations requiring access to mutual understanding without preexisting shared meanings, e.g., communicative interactions with artificial agents (43). The findings of this study suggest that those agents might better satisfy human communicative expectations by using a cognitive architecture that embeds communicative signals into a conceptual space continuously updated by shared communicative history, over and above rapid searches from a large array of preestablished associations (7).

Materials and Methods

Participants.

Fifty-four right-handed male participants (assigned to 27 communicative pairs, 18–27 y of age) were recruited to take part in this study. They were screened for a history of psychiatric and neurological problems and had normal or corrected-to-normal vision. Participants gave written informed consent according to institutional guidelines of the local ethics committee (Committee on Research Involving Human Subjects, region Arnhem-Nijmegen, The Netherlands) and were either offered a financial payment or given credits toward completing a course requirement.

Task.

The task was organized in a series of trials, each constituting a single communicative interaction. At interaction onset, each player is assigned a token (epoch 1: token assignment, Fig. S1A). Then the Communicator (and the Communicator only) is shown the goal configuration of that interaction (epoch 2: planning). The goal configuration contains the tokens of the Communicator (in blue) and the Addressee (in orange), at the grid locations and orientations they should have at the end of the interaction. Both interlocutors know that the Addressee does not see the goal configuration, and that the Communicator cannot move the Addressee’s token. Therefore, the Communicator needs to communicate to the Addressee the location and orientation that her token should have at the end of the interaction. To comply with the task requirements, the Communicator also needs to ensure that at the end of his turn his token is at the location and orientation specified by the goal configuration. In this game, the only means available to the Communicator for communicating with the Addressee is by moving his own token around the grid, namely horizontal translations, vertical translations, or clockwise and counterclockwise rotations. Both Communicator and Addressee also know that the Communicator has unlimited time available for planning his moves, but only 5 s for moving his token on the grid. The Communicator signals his readiness to move by pressing the start/stop button. At this point, the goal configuration disappears, the Communicator’s token appears in the center of the grid, and he can start moving his token (epoch 3: movement). After 5 s, or earlier if the Communicator hits the start/stop button again, the Communicator’s token cannot move further and the Addressee’s token appears alongside of the game board. This event indicates that the Addressee has acquired control over her token. 
The Addressee has unlimited time to infer the goal location and orientation of her token on the basis of the observed movements of the Communicator (epoch 4: planning). After the Addressee presses the start/stop button, her token appears at a random location on the game board (excluding goal positions of the Communicator’s and Addressee’s tokens) and she has 5 s to move her token (epoch 5: movement). Finally, after 5 s, or earlier if the Addressee hits the start/stop button again, the same feedback is presented to both players in the form of a green tick or red cross (positive or negative feedback, respectively; epoch 6: feedback). The feedback indicates whether the participants had matched the location and orientation of their tokens with those of the goal configuration.

The Addressee cannot solve the communicative task by reproducing the movements of the Communicator’s token. Rather, the Addressee needs to disambiguate communicative and instrumental components of the Communicator’s movements, and find a relationship between the Communicator’s movements and their meaning. There are no a priori correct solutions to the communicative task, or a limited set of options from which the Communicator could choose. The behaviors and neural activity evoked by this communication game are described in detail in SI Materials and Methods and in previous publications (16, 17, 21, 24, 44–47).

Experimental Design.

An experiment comprised three parts: two training sessions outside the scanners, followed by one fMRI session in which functional brain images were acquired from both participants of a pair simultaneously. In the first training session, the participants were individually trained in using a hand-held controller. Afterward, both members of a communicative pair were jointly familiarized with the general procedures and events of the interactive task (10 interactions with no communicative demands). In the second training session, each communicative pair was jointly familiarized with the communicative aspect of the task and their communicative roles, which were fixed across the experiment. In fact, unbeknownst to the participants themselves, within this session the participants were driven to establish mutual understanding on a set of communicative problems. The training session was completed only when the participants had successfully solved at least 25 communicative problems, without communicative failures in any of their last 10 problems.
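
The completion rule of the second training session can be written as a small check. This is a sketch of the criterion as worded above, with a hypothetical function name and a hypothetical representation of the session as an ordered list of outcomes; it is not the software used in the experiment.

```python
def training_complete(outcomes, min_solved=25, clean_streak=10):
    """Return True once the stated training criterion is met.

    outcomes: ordered list of booleans, True for a solved communicative problem.
    The criterion requires at least `min_solved` solved problems in total and
    no failure among the most recent `clean_streak` problems.
    """
    recent = outcomes[-clean_streak:]
    return (
        sum(outcomes) >= min_solved
        and len(recent) == clean_streak
        and all(recent)
    )


# Hypothetical session: five early failures followed by 25 solved problems.
session = [False] * 5 + [True] * 25
print(training_complete(session))
```

Because both conditions must hold, a pair that fails late in training continues until it has produced a new run of 10 consecutive successes.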

The fMRI session consisted of 84 communicative interactions. One-half of these interactions consisted of problem types previously faced by the participant pair within the training session, now labeled as Known interactions. One-half of the interactions were Novel interactions, i.e., problem types that had not been presented to the pair before. The participant pairs were not informed about the nature of the interactions. Known and Novel interactions were pseudorandomly intermixed, with a maximum of three interactions of the same condition in a row. Known and Novel interactions used different combinations of token shapes and orientations, and different goal configurations. During Known interactions, the Communicator–Addressee token pairs were chosen from triangle–rectangle, rectangle–circle, triangle–circle, and triangle*–circle pairs (with the label “triangle*” indicating a triangle pointing outward from the game board). During Novel interactions, the Communicator–Addressee token pairs were chosen from rectangle–triangle, circle–rectangle, circle–triangle (e.g., Fig. 1A), and circle–triangle*. During Novel interactions, the Communicator’s token could be moved through fewer orientations than the Addressee’s token. For instance, when the Communicator’s token was a circle and the Addressee’s token was a triangle, the Communicator needed to find a way to indicate to the Addressee the orientation of her token, because rotations of the circle token were not visible. A further level of difficulty could be introduced by using a triangle* as the Addressee’s goal configuration, the Communicator’s token being a circle. During Novel interactions, when a pair jointly solved four consecutive problems with the same type of goal configuration, it was assumed that the pair had established a consistent way to solve that communicative problem. Accordingly, that goal configuration was substituted with a new goal configuration for the remainder of the experiment. The rationale of this intervention was to drive participants to continuously create new communicative behaviors, rather than exploiting already established communicative conventions.
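The two scheduling rules described above (pseudorandom intermixing with at most three same-condition interactions in a row, and substitution of a goal configuration after four consecutive successes) can be illustrated as follows. This is a hedged Python sketch under stated assumptions, not the procedure used in the study. The function names, the weighted sequential sampling scheme, and the equal split of 42 Known and 42 Novel interactions implied by "one-half" are assumptions made for illustration.

```python
import random


def pseudorandom_order(n_known=42, n_novel=42, max_run=3, rng=None):
    """Draw a Known/Novel sequence with no run longer than `max_run`.

    Conditions are drawn one at a time, weighted by how many trials of
    each condition remain; a draw that would extend a full run is not
    allowed. If no condition is allowed (a dead end), start over.
    """
    rng = rng or random.Random()
    while True:
        remaining = {"Known": n_known, "Novel": n_novel}
        order = []
        while len(order) < n_known + n_novel:
            run_full = (len(order) >= max_run
                        and len(set(order[-max_run:])) == 1)
            options = [c for c, n in remaining.items()
                       if n > 0 and not (run_full and c == order[-1])]
            if not options:
                break                      # dead end: restart the draw
            weights = [remaining[c] for c in options]
            choice = rng.choices(options, weights=weights)[0]
            order.append(choice)
            remaining[choice] -= 1
        else:
            return order                   # full sequence was built


def should_substitute(outcomes_for_configuration, streak=4):
    """True once the last `streak` problems of one goal-configuration
    type were all solved (assumed reading of the substitution rule)."""
    recent = outcomes_for_configuration[-streak:]
    return len(recent) == streak and all(recent)
```

Calling `pseudorandom_order(rng=random.Random(0))` returns a list of 84 condition labels. The seed is only there to make the illustration reproducible.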

fMRI Image Acquisition and Analysis.

Functional magnetic resonance images of a Communicator and Addressee pair were acquired simultaneously in separate MRI scanners. Communicators were scanned using a 3-T Trio scanner (Siemens). BOLD-sensitive functional images were acquired using a single-shot gradient echo-planar imaging (EPI) sequence [repetition time (TR)/echo time (TE), 2.60 s/40 ms; 34 transversal slices; interleaved acquisition; voxel size, 3.5 × 3.5 × 3.5 mm³]. Structural images were acquired using a magnetization-prepared rapid gradient echo (MP-RAGE) sequence (TR/TE, 2.30 s/3.9 ms; voxel size, 1 × 1 × 1 mm³). Addressees were scanned using a 1.5-T Sonata scanner (Siemens) and a single-shot gradient EPI sequence (TR/TE, 2.70 s/40 ms; 34 transversal slices; ascending acquisition; voxel size, 3.5 × 3.5 × 3.5 mm³). Structural images were acquired using an MP-RAGE sequence (TR/TE, 2.25 s/3.68 ms; voxel size, 1 × 1 × 1 mm³).

The images were preprocessed and statistically analyzed using FSL (FMRIB Software Library; fsl.fmrib.ox.ac.uk/fsl/) and SPM8 (Statistical Parametric Mapping; www.fil.ion.ucl.ac.uk/spm). Preprocessing of the functional scans included brain extraction (48), spatial realignment (rigid-body transformations using a sinc interpolation algorithm), slice-time correction, coregistration [of functional and anatomical images, after prior coregistration of both image types to a Montreal Neurological Institute (MNI) template], reslicing (1.5 × 1.5 × 1.5 mm), spatial nonlinear normalization [to MNI space (49)], and spatial smoothing (isotropic 8-mm FWHM Gaussian kernel). Each anatomical image was segmented into three different tissue compartments (gray matter, white matter, cerebrospinal fluid). Mean signals in the latter two compartments and head movement-related parameters (including derivatives of translations and rotations to the mean as obtained during the spatial realignment) were entered as regressors in all first-level fMRI analyses. During model estimation, the data were high-pass filtered (cutoff, 128 s), and temporal autocorrelation was modeled as a first-order autoregressive [AR(1)] process.

The seven epochs shown in Fig. S1A were considered for the first-level fMRI analyses of both Communicators and Addressees. The feedback event (epoch 6, Fig. S1A) was modeled separately for positive and negative outcomes of an interaction. Task epochs pertaining to Communicators planning their moves and Addressees observing the Communicators’ moves were modeled separately for Known and Novel interactions, with durations set to the trial-specific epoch durations. Each epoch time series was convolved with a canonical hemodynamic response function and used as a regressor in the SPM general linear model. In addition, the emergence of meaning over the course of the experiment in the Novel interactions was modeled as a parametric (logarithmic) modulation of BOLD signal over scanning time during planning (for Communicators) and observation epochs (for Addressees). This choice was based on the logarithmic modulation of communicative success observed in the pairs’ behavior during Novel interactions (green curve in Fig. S1D). For visualization of these time-modulatory effects (Fig. 1C), we considered planning and observation regressors that each encompassed six consecutive interactions (labeled as a “scan time bin”; Fig. 1) rather than one, to obtain more reliable estimates of cerebral activation during those epochs over the course of the Known and Novel interactions.
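To make the logarithmic parametric modulation concrete, the sketch below builds one such regressor in plain Python. It is an illustration under several assumptions and not the SPM implementation used in the study. The modulator is assumed to be the mean-centered natural logarithm of each epoch's onset time in seconds, and the double-gamma function is a commonly used approximation standing in for the canonical hemodynamic response function. All function and parameter names are hypothetical.

```python
import math


def double_gamma_hrf(t):
    """Double-gamma response (peak near 5 s, undershoot near 15 s).
    Assumed stand-in for the canonical HRF."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def log_modulated_regressor(onsets, durations, n_scans, tr=2.60, dt=0.1):
    """Epoch boxcars scaled by mean-centered log(onset), convolved with
    the HRF on a fine time grid and sampled once per scan."""
    weights = [math.log(o) for o in onsets]          # onsets must be > 0
    mean_w = sum(weights) / len(weights)
    weights = [w - mean_w for w in weights]          # mean-center

    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset, dur, w in zip(onsets, durations, weights):
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + dur) / dt)))
        for i in range(start, stop):
            boxcar[i] = w

    hrf = [double_gamma_hrf(k * dt) for k in range(int(32 / dt))]

    regressor = []
    for s in range(n_scans):
        i = int(round(s * tr / dt))                  # fine-grid index of scan s
        total = 0.0
        for k, h in enumerate(hrf):
            if i - k < 0:
                break
            total += boxcar[i - k] * h
        regressor.append(total * dt)                 # discrete convolution
    return regressor
```

Because the modulator is mean-centered, early epochs contribute negative values and late epochs positive values, so the regressor captures a logarithmic change over time rather than the average response to the epoch.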

Interpersonal coherence analysis was performed using the FieldTrip toolbox for magnetoencephalography/electroencephalography analysis (fieldtrip.fcdonders.nl/) (50) and custom MATLAB code (MathWorks). Whole-experiment BOLD signal time series were extracted from regions of interest, synchronized to experiment onset, bandpass filtered (0.0025- to 0.02-Hz frequency-domain brick-wall filter), and nuisance-corrected using mean signals of white matter and cerebrospinal fluid compartments and head movement-related parameters. The BOLD signal time series of Addressees were resampled to match the sampling frequency of Communicators using linear interpolation (TR of 2.70 s compared with 2.60 s). For each participant, the time series was segmented into multiple consecutive overlapping windows of 400 s (75% overlap). The windows were then tapered with a set of three orthogonal Slepian tapers before spectral estimation and calculation of the magnitude-squared coherence. This resulted in a spectral smoothing of ±0.005 Hz.
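The resampling and windowing steps above, and the relation between window length, spectral smoothing, and number of tapers, can be sketched in a few lines. This is an illustrative Python sketch, not the FieldTrip or MATLAB code used in the study. The function names are hypothetical, the rounding of window length and step to whole samples is an assumption, and the Slepian tapers and the coherence estimate itself are not computed here. The taper count uses the standard multitaper rule K = 2TW − 1, which gives the three tapers reported for a 400-s window and ±0.005-Hz smoothing.

```python
def resample_linear(values, tr_in, tr_out):
    """Linearly interpolate a series sampled every `tr_in` seconds
    onto a grid sampled every `tr_out` seconds (same start time)."""
    duration = (len(values) - 1) * tr_in
    n_out = int(duration / tr_out + 1e-9) + 1
    out = []
    for k in range(n_out):
        pos = k * tr_out / tr_in               # fractional input index
        i = min(int(pos), len(values) - 2)
        frac = pos - i
        out.append(values[i] * (1 - frac) + values[i + 1] * frac)
    return out


def window_starts(n_samples, tr=2.60, window_s=400.0, overlap=0.75):
    """Window length in samples and start indices of overlapping windows."""
    win = int(round(window_s / tr))
    step = max(1, int(round(win * (1 - overlap))))
    return win, list(range(0, n_samples - win + 1, step))


def taper_count(window_s, half_bandwidth_hz):
    """Number of Slepian tapers from the rule K = 2 * T * W - 1."""
    return int(round(2 * window_s * half_bandwidth_hz)) - 1
```

For example, `taper_count(400.0, 0.005)` evaluates to 3. At a TR of 2.60 s, a 400-s window spans about 154 samples, and a 75% overlap corresponds to a step of about 38 samples.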

Interpersonal state correlation analysis was performed using cross-correlation of subject-specific time series of cerebral activity estimated independently for each task state. These independent fMRI time series (composed of regressor weights) were obtained using multiple linear regression, modeling the BOLD signal of each participant’s task state separately for Known and Novel problem types (26 task states per problem type), with each task state including one or more consecutive communicative problems. We additionally considered eight different task epochs (the seven epochs indicated in Fig. S1A, with feedback epochs modeled separately for outcome) to account for variance related to transient task events within each task state.
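A cross-correlation between two participants' task-state time series can be sketched as a Pearson correlation evaluated at a range of lags. The Python sketch below is illustrative only. The function names are hypothetical, and the lag range is an assumption because the text does not specify it. Both series are assumed to have the same length (e.g., 26 regressor weights for one problem type).

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def cross_correlation(x, y, max_lag=0):
    """Map each lag to the correlation of x[t] with y[t + lag].

    A positive lag means that y follows x by `lag` task states.
    """
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            xs, ys = x[:len(x) - lag], y[lag:]
        else:
            xs, ys = x[-lag:], y[:len(y) + lag]
        result[lag] = pearson(xs, ys)
    return result
```

With `max_lag=0` the function reduces to the zero-lag correlation between a Communicator's and an Addressee's state time series.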

Statistical Inference at the Group Level.

To test for the consequences of experimentally manipulating mutual understanding on cerebral activity, we performed a group-level whole-brain search for BOLD signal that had the same temporal profile as communicative success over the course of the experiment (Fig. 1C). This search involved localizing cerebral activity that was higher during Known than Novel interactions (Fig. S3) and followed a logarithmic increase during Novel interactions (Fig. S4), both in Communicators producing communicative signals and in Addressees comprehending those signals. We also estimated the change in cerebral activity over the course of Known interactions to control for unspecific changes in BOLD signal over time (see Table S1 for masking procedures). These contrasts were entered into a full factorial model with Role (Communicator, Addressee) as a between-subjects variable, and Problem type (Known, Novel) and Time (No modulation, Parametric modulation) as within-subjects variables, treating subjects as a random variable. Unequal variance between the conditions was assumed, and the degrees of freedom were corrected for nonsphericity at each voxel. We report the results of a random-effects analysis, with inferences drawn at the cluster level, corrected for multiple comparisons over the whole brain using random field theory-based familywise error correction [P < 0.05 (51)]. Clusters were obtained on the basis of a cluster-generating threshold (u) of P < 0.05. We used conjunction analyses to isolate neural effects overlapping between the two roles, Communicators and Addressees (52).

Pair specificity of BOLD signal synchronization was tested by comparing interpersonal cerebral coherence calculated on BOLD signals of participants forming real pairs (N = 27 real pairs) with cerebral coherence calculated on BOLD signals of participants who did not share a communicative history (e.g., Communicator from pair 1 with Addressee from pair 2; N = 702 random pairs, i.e., 27 × 26 combinations). The coherence measures were entered into a second-level random-effects analysis correcting for multiple comparisons at the cluster level [Monte Carlo P < 0.05; 10,000 randomizations across participant pairs (53)]. Pair and state specificity of BOLD signal synchronization was tested by comparing cross-correlations of Known and Novel task state time series estimated on both real and random pairs using a 2 × 2 univariate ANOVA.
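The count of random pairs follows from combining every Communicator with every Addressee from a different pair. A minimal Python sketch of this enumeration is given below. The function name and the use of integer pair indices are hypothetical and serve only to illustrate the 27 × 26 arithmetic.

```python
from itertools import product


def pairings(n_pairs=27):
    """Enumerate (communicator_pair, addressee_pair) index tuples.

    Real pairs share a communicative history (same pair index);
    random pairs combine a Communicator and an Addressee drawn
    from two different pairs.
    """
    real = [(p, p) for p in range(n_pairs)]
    random_pairs = [(c, a)
                    for c, a in product(range(n_pairs), repeat=2)
                    if c != a]
    return real, random_pairs
```

For 27 pairs this yields 27 real pairings and 27 × 26 = 702 random pairings, matching the numbers reported above.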

Acknowledgments

We thank Roger and Sarah Newman-Norlund, Paul Gaalman, Frank Leone, Rogier Mars, Lawrence O’Dwyer, and Arie Verhagen for technical advice and comments on drafts of this manuscript. This research was supported by VICI Grant 453-08-002 and Gravitation Grant 024-001-006 of the Language in Interaction Consortium from the Netherlands Organization for Scientific Research (to I.T.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. A.A.G. is a guest editor invited by the Editorial Board.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1414886111/-/DCSupplemental.

References

- 1. Levinson S. On the human interactional engine. In: Enfield N, Levinson S, editors. Roots of Human Sociality. Berg Publisher; Oxford: 2006. pp. 39–69.

- 2. Sperber D, Wilson D. Relevance: Communication and Cognition. Blackwell Publishers; Oxford: 2001.

- 3. Tomasello M. Origins of Human Communication. MIT; Cambridge, MA: 2008.

- 4. Pulvermüller F, Moseley RL, Egorova N, Shebani Z, Boulenger V. Motor cognition-motor semantics: Action perception theory of cognition and communication. Neuropsychologia. 2014;55:71–84. doi: 10.1016/j.neuropsychologia.2013.12.002.

- 5. Pickering MJ, Garrod S. An integrated theory of language production and comprehension. Behav Brain Sci. 2013;36(4):329–347. doi: 10.1017/S0140525X12001495.

- 6. Pickering MJ, Garrod S. Toward a mechanistic psychology of dialogue. Behav Brain Sci. 2004;27(2):169–190, discussion 190–226. doi: 10.1017/s0140525x04000056.

- 7. Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C. Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn Sci. 2012;16(2):114–121. doi: 10.1016/j.tics.2011.12.007.

- 8. Rizzolatti G, Arbib MA. Language within our grasp. Trends Neurosci. 1998;21(5):188–194. doi: 10.1016/s0166-2236(98)01260-0.

- 9. Segaert K, Menenti L, Weber K, Petersson KM, Hagoort P. Shared syntax in language production and language comprehension—an FMRI study. Cereb Cortex. 2012;22(7):1662–1670. doi: 10.1093/cercor/bhr249.

- 10. Sass K, Krach S, Sachs O, Kircher T. Lion—tiger—stripes: Neural correlates of indirect semantic priming across processing modalities. Neuroimage. 2009;45(1):224–236. doi: 10.1016/j.neuroimage.2008.10.014.

- 11. Brennan SE, Clark HH. Conceptual pacts and lexical choice in conversation. J Exp Psychol Learn Mem Cogn. 1996;22(6):1482–1493. doi: 10.1037//0278-7393.22.6.1482.

- 12. Clark HH. Using Language. Cambridge Univ Press; Cambridge, UK: 1996.

- 13. Wittgenstein L. Philosophical Investigations. 4th Ed. Wiley-Blackwell; Oxford: 2009.

- 14. Binder JR, Desai RH. The neurobiology of semantic memory. Trends Cogn Sci. 2011;15(11):527–536. doi: 10.1016/j.tics.2011.10.001.

- 15. Lerner Y, Honey CJ, Silbert LJ, Hasson U. Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J Neurosci. 2011;31(8):2906–2915. doi: 10.1523/JNEUROSCI.3684-10.2011.

- 16. Stolk A, et al. Neural mechanisms of communicative innovation. Proc Natl Acad Sci USA. 2013;110(36):14574–14579. doi: 10.1073/pnas.1303170110.

- 17. Noordzij ML, et al. Brain mechanisms underlying human communication. Front Hum Neurosci. 2009;3:14. doi: 10.3389/neuro.09.014.2009.

- 18. Menenti L, Gierhan SM, Segaert K, Hagoort P. Shared language: Overlap and segregation of the neuronal infrastructure for speaking and listening revealed by functional MRI. Psychol Sci. 2011;22(9):1173–1182. doi: 10.1177/0956797611418347.

- 19. Shannon C. A mathematical theory of communication. Bell Syst Tech J. 1948;27:379–423.

- 20. Tognoli E, Lagarde J, DeGuzman GC, Kelso JA. The phi complex as a neuromarker of human social coordination. Proc Natl Acad Sci USA. 2007;104(19):8190–8195. doi: 10.1073/pnas.0611453104.

- 21. de Ruiter JP, et al. Exploring the cognitive infrastructure of communication. Interact Stud. 2010;11(1):51–77.

- 22. Montague PR, et al. Hyperscanning: Simultaneous fMRI during linked social interactions. Neuroimage. 2002;16(4):1159–1164. doi: 10.1006/nimg.2002.1150.

- 23. Anders S, Heinzle J, Weiskopf N, Ethofer T, Haynes JD. Flow of affective information between communicating brains. Neuroimage. 2011;54(1):439–446. doi: 10.1016/j.neuroimage.2010.07.004.

- 24. Blokpoel M, et al. Recipient design in human communication: Simple heuristics or perspective taking? Front Hum Neurosci. 2012;6:253. doi: 10.3389/fnhum.2012.00253.

- 25. Gallese V, Sinigaglia C. What is so special about embodied simulation? Trends Cogn Sci. 2011;15(11):512–519. doi: 10.1016/j.tics.2011.09.003.

- 26. Han Z, et al. Distinct regions of right temporal cortex are associated with biological and human-agent motion: Functional magnetic resonance imaging and neuropsychological evidence. J Neurosci. 2013;33(39):15442–15453. doi: 10.1523/JNEUROSCI.5868-12.2013.

- 27. Zahn R, et al. Social conceptual impairments in frontotemporal lobar degeneration with right anterior temporal hypometabolism. Brain. 2009;132(Pt 3):604–616. doi: 10.1093/brain/awn343.

- 28. Grossman ED, Battelli L, Pascual-Leone A. Repetitive TMS over posterior STS disrupts perception of biological motion. Vision Res. 2005;45(22):2847–2853. doi: 10.1016/j.visres.2005.05.027.

- 29. Schultz J, Friston KJ, O’Doherty J, Wolpert DM, Frith CD. Activation in posterior superior temporal sulcus parallels parameter inducing the percept of animacy. Neuron. 2005;45(4):625–635. doi: 10.1016/j.neuron.2004.12.052.

- 30. Bašnáková J, Weber K, Petersson KM, van Berkum J, Hagoort P. Beyond the language given: The neural correlates of inferring speaker meaning. Cereb Cortex. 2014;24(10):2572–2578. doi: 10.1093/cercor/bht112.

- 31. Kilner JM, Neal A, Weiskopf N, Friston KJ, Frith CD. Evidence of mirror neurons in human inferior frontal gyrus. J Neurosci. 2009;29(32):10153–10159. doi: 10.1523/JNEUROSCI.2668-09.2009.

- 32. Chong TT, Cunnington R, Williams MA, Kanwisher N, Mattingley JB. fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Curr Biol. 2008;18(20):1576–1580. doi: 10.1016/j.cub.2008.08.068.

- 33. Young L, Camprodon JA, Hauser M, Pascual-Leone A, Saxe R. Disruption of the right temporoparietal junction with transcranial magnetic stimulation reduces the role of beliefs in moral judgments. Proc Natl Acad Sci USA. 2010;107(15):6753–6758. doi: 10.1073/pnas.0914826107.

- 34. Lewis PA, Rezaie R, Brown R, Roberts N, Dunbar RI. Ventromedial prefrontal volume predicts understanding of others and social network size. Neuroimage. 2011;57(4):1624–1629. doi: 10.1016/j.neuroimage.2011.05.030.

- 35. Bahrami B, et al. Optimally interacting minds. Science. 2010;329(5995):1081–1085. doi: 10.1126/science.1185718.

- 36. Schippers MB, Roebroeck A, Renken R, Nanetti L, Keysers C. Mapping the information flow from one brain to another during gestural communication. Proc Natl Acad Sci USA. 2010;107(20):9388–9393. doi: 10.1073/pnas.1001791107.

- 37. Stephens GJ, Silbert LJ, Hasson U. Speaker-listener neural coupling underlies successful communication. Proc Natl Acad Sci USA. 2010;107(32):14425–14430. doi: 10.1073/pnas.1008662107.

- 38. Silbert LJ, Honey CJ, Simony E, Poeppel D, Hasson U. Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc Natl Acad Sci USA. 2014;111(43):E4687–E4696. doi: 10.1073/pnas.1323812111.

- 39. Miller KJ, et al. Cortical activity during motor execution, motor imagery, and imagery-based online feedback. Proc Natl Acad Sci USA. 2010;107(9):4430–4435. doi: 10.1073/pnas.0913697107.

- 40. Straube B, Green A, Bromberger B, Kircher T. The differentiation of iconic and metaphoric gestures: Common and unique integration processes. Hum Brain Mapp. 2011;32(4):520–533. doi: 10.1002/hbm.21041.

- 41. Skipper JI, Goldin-Meadow S, Nusbaum HC, Small SL. Gestures orchestrate brain networks for language understanding. Curr Biol. 2009;19(8):661–667. doi: 10.1016/j.cub.2009.02.051.

- 42. van Rooij I, et al. Intentional communication: Computationally easy or difficult? Front Hum Neurosci. 2011;5:52. doi: 10.3389/fnhum.2011.00052.

- 43. Levesque HJ. On our best behaviour. Artif Intell. 2014;212:27–35.

- 44. Volman I, Noordzij ML, Toni I. Sources of variability in human communicative skills. Front Hum Neurosci. 2012;6:310. doi: 10.3389/fnhum.2012.00310.

- 45. Newman-Norlund SE, et al. Recipient design in tacit communication. Cognition. 2009;111(1):46–54. doi: 10.1016/j.cognition.2008.12.004.

- 46. Stolk A, Hunnius S, Bekkering H, Toni I. Early social experience predicts referential communicative adjustments in five-year-old children. PLoS One. 2013;8(8):e72667. doi: 10.1371/journal.pone.0072667.

- 47. Stolk A, et al. Understanding communicative actions: A repetitive TMS study. Cortex. 2014;51:25–34. doi: 10.1016/j.cortex.2013.10.005.

- 48. Smith SM. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17(3):143–155. doi: 10.1002/hbm.10062.

- 49. Friston KJ, et al. Spatial registration and normalization of images. Hum Brain Mapp. 1995;3(3):165–189.

- 50. Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- 51. Worsley KJ, Evans AC, Marrett S, Neelin P. A three-dimensional statistical analysis for CBF activation studies in human brain. J Cereb Blood Flow Metab. 1992;12(6):900–918. doi: 10.1038/jcbfm.1992.127.

- 52. Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25(3):653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- 53. Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.
