Significance

Real-world decisions frequently involve tradeoffs between multiple economic dimensions, and integration of benefits and costs into a common currency of subjective value is fundamental to action selection. Phasic dopamine is widely regarded as a critical teaching signal for learning the values assigned to actions, and these stored (“cached”) values can be read out from cue-evoked dopamine responses. Here, we observed a significant inversion between animals’ behavioral preferences and the rank ordering of dopamine-reported cached values, indicating that these cached values cannot be the sole determinant of choices in simple economic decision making. These data challenge the fundamental tenet of contemporary theories of decision making which posit that dopamine-associated cached values are sufficient to serve as the basis for action selection.

Keywords: decision making, action selection, cached values, dopamine

Abstract

Phasic dopamine transmission is posited to act as a critical teaching signal that updates the stored (or “cached”) values assigned to reward-predictive stimuli and actions. It is widely hypothesized that these cached values determine the selection among multiple courses of action, a premise that has provided a foundation for contemporary theories of decision making. In the current work we used fast-scan cyclic voltammetry to probe dopamine-associated cached values from cue-evoked dopamine release in the nucleus accumbens of rats performing cost–benefit decision-making paradigms to evaluate critically the relationship between dopamine-associated cached values and preferences. By manipulating the amount of effort required to obtain rewards of different sizes, we were able to bias rats toward preferring an option yielding a high-value reward in some sessions and toward instead preferring an option yielding a low-value reward in others. Therefore, this approach permitted the investigation of dopamine-associated cached values in a context in which reward magnitude and subjective preference were dissociated. We observed greater cue-evoked mesolimbic dopamine release to options yielding the high-value reward even when rats preferred the option yielding the low-value reward. This result identifies a clear mismatch between the ordinal utility of the available options and the rank ordering of their cached values, thereby providing robust evidence that dopamine-associated cached values cannot be the sole determinant of choices in simple economic decision making.

In contemporary theories of economic decision making, values are assigned to reward-predictive states in which animals can take action to obtain rewards, and these state-action values are stored (“cached”) for the purpose of guiding future choices based upon their rank order (1–5). It is believed that these cached values are represented as synaptic weights within corticostriatal circuitry, reflected in the activity of subpopulations of striatal projection neurons (6–9), and are updated by dopamine-dependent synaptic plasticity (10–12). Indeed, a wealth of evidence suggests that the phasic activity of dopamine neurons reports instances in which current reward or expectation of future reward differs from current expectations (13–24). This pattern of activity resembles the prediction-error term from temporal-difference reinforcement-learning algorithms, which is considered the critical teaching signal for updating cached values. A notable feature of models that integrate dopamine transmission into this computational framework is that the cached value of an action is explicitly read out by the phasic dopamine response to the unexpected presentation of a cue that designates the transition into a state in which that action yields reward. Therefore, cue-evoked dopamine signaling provides a neural representation of the cached values of available actions, and if these cached values serve as the basis for action selection, then cue-evoked dopamine responses should be rank ordered in a manner that is consistent with animals’ behavioral preferences.
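The readout described above can be illustrated with a toy temporal-difference (TD) learner. This is a generic TD(0) sketch under simplifying assumptions, not a model taken from the cited studies. It assumes a single cue state per option, reward delivered at the end of each trial, a hypothetical learning rate (ALPHA) and discount factor (GAMMA), and an intertrial-interval state whose value is held at 0 because cue onset is unpredictable. Once learning has converged, the TD error at cue onset equals the cue's cached value, so an option with a larger cached value evokes a larger cue response.

```python
# Generic TD(0) sketch: a cached value V(cue) is learned from the reward-time
# prediction error, and the prediction error at an unpredicted cue onset then
# reads that cached value back out.
# Assumptions (illustrative only): one cue state per option, reward at the end
# of the trial, hypothetical ALPHA and GAMMA, and an intertrial-interval (ITI)
# state whose value is fixed at 0 because cue onset cannot be anticipated.

ALPHA = 0.1  # hypothetical learning rate
GAMMA = 1.0  # hypothetical discount factor (no discounting within a trial)


def learn_cached_value(reward: float, n_trials: int = 500, v_cue: float = 0.0) -> float:
    """Update V(cue) over repeated trials in which the cue is always followed by `reward`."""
    for _ in range(n_trials):
        # Reward ends the trial, so the value of the next state is 0.
        delta_at_reward = reward + GAMMA * 0.0 - v_cue
        v_cue += ALPHA * delta_at_reward
    return v_cue


def cue_prediction_error(v_cue: float, v_iti: float = 0.0) -> float:
    """TD error at cue onset: no reward yet, transition from the ITI state to the cue state."""
    return 0.0 + GAMMA * v_cue - v_iti


v_small = learn_cached_value(reward=1.0)  # e.g., one pellet
v_large = learn_cached_value(reward=4.0)  # e.g., four pellets
print(cue_prediction_error(v_small), cue_prediction_error(v_large))
```

In this sketch the cue-evoked error scales with the learned reward, which is the property that lets cue-evoked dopamine serve as a readout of cached value.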

Numerous studies that recorded cue-evoked dopamine signaling have reported correlations with the expected utility (subjective value) of actions (24–36). For example, risk-preferring rats demonstrated greater cue-evoked dopamine release for a risky option than for a certain option with equivalent objective expected value (reward magnitude times probability), whereas risk-averse rats showed greater dopamine release for the certain than for the risky option (30). Likewise, the cached values reported by dopamine neurons in macaque monkeys accounted for individual monkeys’ subjective flavor and risk preferences, with each attribute weighted according to its influence on behavioral preferences (31, 32). These observations, which are consistent across measures of dopamine neuronal activity and dopamine release, reinforce the prevailing notion that the dopamine-associated cached values could be the primary determinant of decision making (2–5, 17, 28–32) because the cue-evoked dopamine responses were rank ordered according to the animals’ subjective preferences. However, there have been some reports that other economic attributes, such as effortful response costs (35–38) or the overt aversiveness of an outcome (39), are represented inconsistently by cue-evoked dopamine responses. For example, Gan et al. (35) showed that independent manipulations of two different dimensions (reward magnitude and effort) that had equivalent effects on behavior did not have equivalent effects on dopamine release. Paralleling these findings, a recent report reached a similar conclusion that dopamine transmission preferentially encodes an appetitive dimension but is relatively insensitive to aversiveness (39).

Because these cue-evoked dopamine signals represent cached values that are purported to determine action selection, their differential encoding of economic dimensions has potentially problematic implications in the context of decision making. Namely, by extrapolating from these studies (35–39), one might infer that when a decision involves the tradeoff between these economic dimensions, the rank order of the dopamine-associated cached values for each of the available options would not consistently reflect the ordinal utility of these options, and therefore these cached values could not, on their own, be the basis of choices. However, this counterintuitive prediction was not tested explicitly by any of these previous studies; thus, it remains a provocative notion that merits direct examination, because it is contrary to the prevailing hypothesis described above, which is fundamental to contemporary theories of decision making. Therefore, we investigated interactions between dimensions that previously have been shown during independent manipulations to be weakly or strongly incorporated into these cached values. Specifically, we increased the amount of effort required to obtain a large reward so that animals instead preferred a low-effort option yielding a smaller reward, and we used fast-scan cyclic voltammetry to record cue-evoked mesolimbic dopamine release as a neurochemical proxy for each option’s cached value. These conditions permitted us to test whether the cached values reported via cue-evoked dopamine indeed align with animals’ subjective preferences across these mixed cost–benefit attributes.

Results

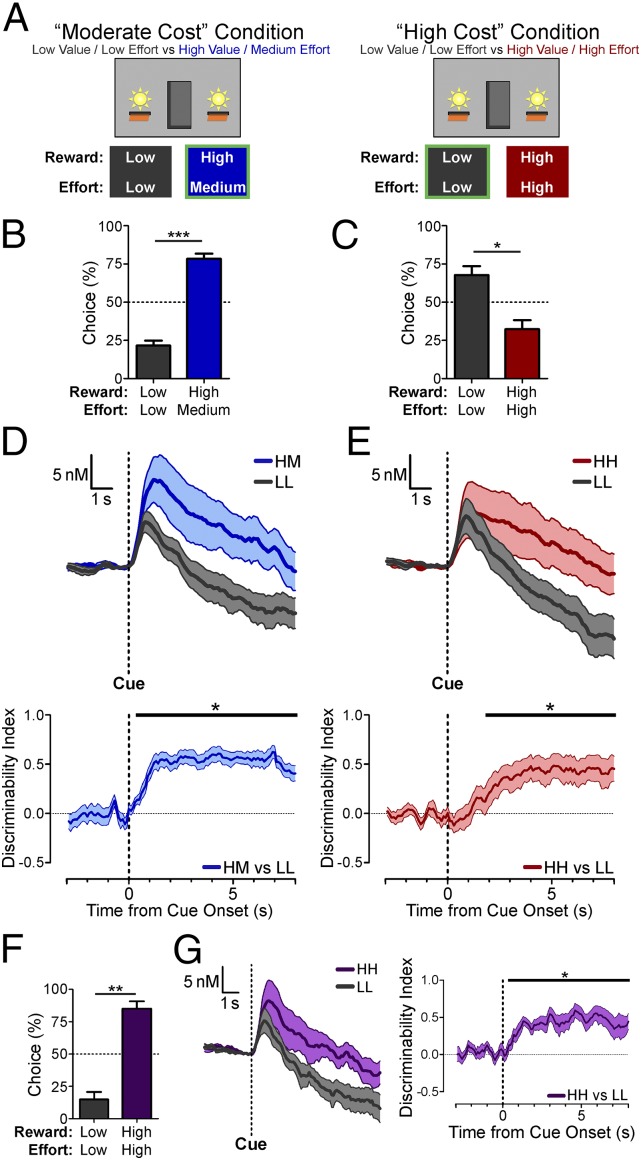

Food-restricted rats performed an instrumental decision-making task with mixed reward and effort contingencies (Fig. 1A). Sessions consisted of repeating blocks of four single-option forced trials, in which only one of the two options was available, followed by four choice trials in which both options were available concurrently. After a 45 ± 15 s variable intertrial interval (ITI), each trial began with the onset of one or both cue lights and the simultaneous extension of the corresponding lever(s). One lever was a low-value/low-effort (LL) reference option, yielding one food pellet for four lever presses. The alternative option yielded a high-value reward (four pellets) for a medium-effort requirement (eight presses) in the moderate-cost condition (high value/medium effort, HM) or for a high-effort requirement in the high-cost condition (high value/high effort, HH). During initial training, before dopamine transmission in the high-cost condition was recorded, this high-effort requirement was determined individually for each rat so that it preferred the LL option. This high response cost ranged from 32 to 48 lever presses among rats but remained constant within each session for a given rat. A pair of voltammetry recordings (one session for each of the two counterbalanced lever-side assignments of the high- and low-value options) was conducted for each condition. The behavioral criterion was defined as 75% choice for the HM option in moderate-cost sessions and for the LL option in high-cost sessions within a sliding window of 12 consecutive choice trials. After reaching this criterion, rats performed four additional blocks (32 trials), which provided the data analyzed from each session. Rats’ postcriterion choices revealed significant preferences for the HM option in the moderate-cost condition (Fig. 1B; t₈ = 7.095, P = 0.0001) and for the LL option in the high-cost condition (Fig. 1C; t₆ = 2.923, P = 0.0265).
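The behavioral criterion can be sketched as a sliding-window check over choice trials. This is a minimal illustration, assuming that forced trials are excluded from the window, that the criterion is met when the target option accounts for at least 75% (9 of 12) of the most recent 12 choices, and that the function name criterion_trial is hypothetical.

```python
from collections import deque
from typing import Optional, Sequence


def criterion_trial(choices: Sequence[str], target: str,
                    window: int = 12, threshold: float = 0.75) -> Optional[int]:
    """Return the 0-based index of the choice trial at which `target` first
    makes up at least `threshold` of the last `window` choice trials.

    Hypothetical helper. Forced trials are assumed to have been removed from
    `choices` beforehand. Returns None if the criterion is never reached.
    """
    recent: deque = deque(maxlen=window)  # the oldest choice drops out automatically
    for i, choice in enumerate(choices):
        recent.append(choice == target)
        if len(recent) == window and sum(recent) / window >= threshold:
            return i
    return None


# Example: 3 LL choices followed by 9 HM choices gives 9/12 = 75% HM.
print(criterion_trial(["LL"] * 3 + ["HM"] * 9, target="HM"))
```

Using a bounded deque keeps the window update constant-time; the four postcriterion blocks would then be taken from the trials after the returned index.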

Fig. 1.

Task design, behavioral performance, and voltammetry results from forced trials with simultaneous cue and lever onset (first cohort). (A) Reward and effort contingencies for moderate-cost and high-cost sessions (the box outlined in green signifies the preferred option). (B and C) Mean (+ SEM) postcriterion percent choice for moderate-cost sessions (***P = 0.0001, n = 9 rats) (B) and high-cost sessions (*P = 0.0265, n = 7 rats) (C). (D and E) Mean (± SEM) cue-evoked dopamine release (Upper) and discriminability index time series (Lower) in moderate-cost sessions (D, n = 11 recording sites) and in LL-preferred high-cost sessions (E, n = 10 recording sites). (F and G) Mean (+ SEM) postcriterion percent choice (**P = 0.001, n = 8 rats) (F) and mean (± SEM) cue-evoked dopamine release (G, Left) and discriminability index time series (G, Right) in HH-preferred high-cost sessions (n = 11 recording sites). For each discriminability index time course in D, E, and G, the horizontal bar indicates time points of significant discriminability (*P < 0.05, permutation tests).

To monitor mesolimbic dopamine transmission, we used fast-scan cyclic voltammetry at carbon-fiber microelectrodes chronically implanted in the nucleus accumbens core (Fig. S1) (40). Voltammetric recordings from postcriterion trials revealed phasic increases in dopamine concentration following cue onset for all trial types. Cue-evoked dopamine release during forced trials in the moderate-cost condition was greater in HM trials than in LL trials (Fig. 1D). To quantify this selectivity, we calculated at each time point a dopamine discriminability index (41) based on the area under the receiver operating characteristic (auROC) curve (42), which indicates the probability that an ideal observer could correctly classify the trial type to which a randomly selected response belongs. The auROC values, which range from 0 to 1, were transformed [discriminability index = (auROC − 0.5) × 2] so that an index approaching 1 indicates that the dopamine response to an HM trial can be discriminated reliably as greater than the response to an LL trial, an index of 0 indicates that the responses from each trial type cannot be discriminated, and an index approaching −1 indicates greater dopamine release in response to the LL than to the HM option. This discriminability index time course confirmed that the cue-evoked dopamine response to the HM option was significantly greater than the response to the LL option, an effect we observed regardless of the side to which each option was assigned in the operant chamber (i.e., across the counterbalanced pairs of recorded sessions within this group of rats; Fig. S2A). The greater dopamine response to the preferred HM option is consistent with numerous previous observations that options with greater subjective value are associated with greater dopamine-reported cached values than less-preferred options (24–36).
However, in these moderate-cost sessions, animals’ preferences are driven predominantly by the HM option’s greater reward value, a dimension that is robustly incorporated into dopamine-associated cached values (24, 27, 32–36). Thus, this condition demonstrated that these signals encode value-related information but did not allow us to determine whether dopamine-reported cached values still correlate with subjective value when preferences are driven by a weakly encoded economic dimension.
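The discriminability index described above can be sketched in a few lines. This is a minimal illustration, assuming per-trial dopamine values binned on a common time base; the helper names are hypothetical, and the two-sided label-shuffling permutation test is an assumed form, since the text does not spell out the exact permutation procedure used for the significance bars.

```python
import random
from typing import List, Sequence


def auroc(a: Sequence[float], b: Sequence[float]) -> float:
    """Probability that a random value from `a` exceeds a random value from `b`
    (ties count 0.5); this equals the area under the ROC curve."""
    wins = 0.0
    for x in a:
        for y in b:
            if x > y:
                wins += 1.0
            elif x == y:
                wins += 0.5
    return wins / (len(a) * len(b))


def discriminability_index(high: Sequence[float], low: Sequence[float]) -> float:
    """Rescale auROC from [0, 1] to [-1, 1]: (auROC - 0.5) * 2."""
    return (auroc(high, low) - 0.5) * 2


def index_time_series(high_trials: Sequence[Sequence[float]],
                      low_trials: Sequence[Sequence[float]]) -> List[float]:
    """Index at each time bin; each trial is a list of dopamine values per bin."""
    n_bins = len(high_trials[0])
    return [discriminability_index([t[i] for t in high_trials],
                                   [t[i] for t in low_trials])
            for i in range(n_bins)]


def permutation_p(high: Sequence[float], low: Sequence[float],
                  n_perm: int = 2000, seed: int = 0) -> float:
    """Assumed two-sided permutation test: shuffle trial-type labels and count
    shuffles whose |index| is at least the observed |index|."""
    rng = random.Random(seed)
    observed = abs(discriminability_index(high, low))
    pooled = list(high) + list(low)
    n_high = len(high)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(discriminability_index(pooled[:n_high], pooled[n_high:])) >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # add-one correction avoids p = 0
```

The pairwise auROC is quadratic in trial count, which is adequate for the tens of trials per session described here.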

The critical test of this hypothesis was provided by the high-cost sessions in which animals reached the behavioral criterion for preferring the LL option, because the option yielding a larger reward was rendered the nonpreferred option by its high-effort requirement, an attribute to which cue-evoked dopamine was relatively insensitive in previous studies (35–38). If the cached value signaled by cue-evoked dopamine reliably reflects subjective value, we would expect greater dopamine release in response to the preferred LL option. On the other hand, if the dopamine-reported cached value is more sensitive to expected reward value than to anticipated effort, we would expect a greater response to the HH option that yields a larger reward, despite the animals’ subjective preferences for the LL alternative. In these high-cost sessions, cue-evoked dopamine release in the nucleus accumbens core was discriminable between HH and LL forced trials, but only after the peak response (Fig. 1E and Fig. S2B). Remarkably, the greater of these responses was for the HH option, even though the LL option was significantly preferred. Therefore, in these high-cost sessions, the relative ordering of the cached values reported by cue-evoked dopamine release was inconsistent with the subjective value of each option. In other high-cost sessions in which rats failed to reach criterion for the LL option but instead preferred the HH option (Fig. 1F; t₇ = 5.380, P = 0.001), dopamine release again was greater for the HH option (Fig. 1G). Thus, across all session types, there was a greater dopamine-associated cached value for the high-value option (HM or HH) than for the LL option, regardless of whether the cost to obtain this high-value option was only moderately higher or was much higher and regardless of whether or not it was preferred over the LL option.
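The two competing predictions above can be made concrete with a toy calculation. This sketch assumes a hypothetical linear cost model, value = pellets − w × presses, with illustrative effort weights (a large weight for behavioral preference and a small one for the dopamine-reported cached value) and an illustrative HH requirement of 40 presses taken from the 32 to 48 range; none of these weights is estimated from the data.

```python
# Hypothetical linear cost model: value = pellets - effort_weight * presses.
# Reward and effort contingencies follow the task; the HH press count (40)
# and both effort weights are illustrative assumptions.
OPTIONS = {
    "LL": (1, 4),   # (pellets, lever presses)
    "HM": (4, 8),
    "HH": (4, 40),  # illustrative value within the 32-48 range
}

BEHAVIOR_WEIGHT = 0.1    # hypothetical: effort strongly discounts subjective value
DOPAMINE_WEIGHT = 0.01   # hypothetical: effort weakly discounts the cached value


def value(option: str, effort_weight: float) -> float:
    pellets, presses = OPTIONS[option]
    return pellets - effort_weight * presses


def best_option(pair: tuple, effort_weight: float) -> str:
    """Option with the larger value under the given effort weight."""
    return max(pair, key=lambda name: value(name, effort_weight))


for high in ("HM", "HH"):
    pair = ("LL", high)
    print(f"{high} session: preferred = {best_option(pair, BEHAVIOR_WEIGHT)}, "
          f"larger cached value = {best_option(pair, DOPAMINE_WEIGHT)}")
```

Under these assumptions the two rankings agree when the high-value option costs only moderately more (HM), but invert when it costs much more (HH), which is the kind of mismatch the high-cost sessions were designed to detect.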

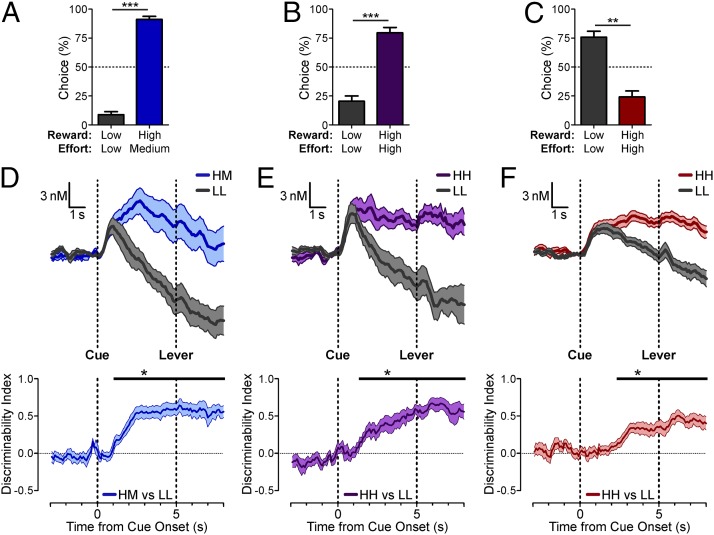

The cue-evoked mesolimbic dopamine response consistently was more sustained for the high-value than for the low-value option. However, during this sustained response, the remaining cost to obtain the reward becomes incrementally smaller with each lever press, and so this encoding pattern may reflect the dynamically increasing subjective value. To rule out this possibility, we conducted another set of experiments that allowed us to assess the cached values reported via cue-evoked dopamine release in a 5-s period before the lever was available (Fig. 2 A–C; significant postcriterion behavioral preferences in moderate-cost sessions: t₇ = 12.98, P = 3.74 × 10⁻⁶; HH-preferred high-cost sessions: t₉ = 5.267, P = 0.0005; and LL-preferred high-cost sessions: t₁₁ = 4.319, P = 0.0012). In this interval between cue and lever presentation, the pattern of dopamine responses for each of the sessions was comparable to the previous results, in that mesolimbic dopamine release was greater for the option yielding the high-value reward in all session types regardless of whether or not it was preferred (Fig. 2 D–F). Importantly, there was significant discriminability between the high- and low-value options before lever presentation, demonstrating that the greater sustained response observed in high-value trials was not caused by an increase in subjective value as the remaining response cost was reduced with each lever press.

Fig. 2.

Behavioral performance and voltammetry results from forced trials with a 5-s cue-to-lever delay (second cohort). (A–C) Mean (+ SEM) postcriterion percent choice for moderate-cost sessions (***P = 3.74 × 10⁻⁶, n = 8 rats) (A), HH-preferred high-cost sessions (***P = 0.0005, n = 10 rats) (B), and LL-preferred high-cost sessions (**P = 0.0012, n = 12 rats) (C). (D–F) Mean (± SEM) cue-evoked dopamine release (Upper) and discriminability index time series (Lower) in moderate-cost sessions (n = 9 recording sites) (D), in HH-preferred high-cost sessions (n = 11 recording sites) (E), and in LL-preferred high-cost sessions (n = 15 recording sites) (F). For each discriminability index time course in D–F, the horizontal bar indicates time points of significant discriminability (*P < 0.05, permutation tests).

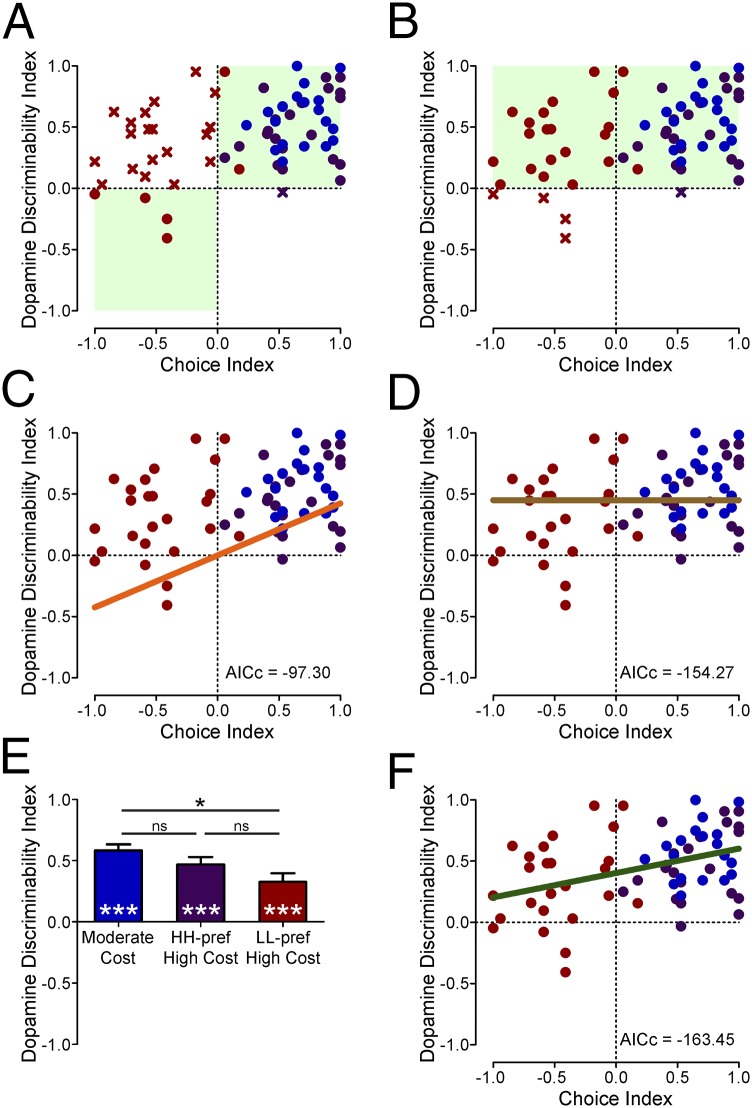

To examine further the relationship between these behavioral and neurochemical data, we pooled the per-session data across all conditions and all rats. We plotted the dopamine discriminability index (the ability to discern the dopamine signal over the 5 s following cue onset as being greater for the larger-reward option than for the smaller-reward option) as a function of behavioral preferences in each session, using a choice index from postcriterion behavior: choice index = [p(H) − 0.5] × 2, where p(H) is the proportion of choices for the high-value option (HM or HH). Thus, a choice index of 1 corresponds to 100% choice for the high-value option, −1 indicates 100% choice for the LL option, and 0 indicates indifference between the two options. This analysis allowed us to test the prevailing hypothesis that the dopamine-reported cached values reflect subjective value. According to this hypothesis, the data should occupy the upper-right and lower-left quadrants of the graph exclusively. That is, the dopamine discriminability index should be positive when rats preferred the high-value option (positive choice index) and negative when rats preferred the LL option (negative choice index). Indeed, the majority of points with a positive choice index were in the upper-right quadrant (43 of 44 sessions), a proportion significantly higher than expected by chance (P = 5.16 × 10⁻¹², binomial test). However, the data diverged from this model in sessions with a negative choice index, because the majority of these sessions did not have a negative dopamine discriminability index. In fact, a significant majority of the sessions with a negative choice index had a positive dopamine discriminability index (19 of 23 sessions, P = 0.0026, binomial test; Fig. 3A), favoring an alternative model in which the dopamine discriminability index is positive regardless of the animal’s preference (62 of 67 sessions, P = 1.42 × 10⁻¹³, binomial test; Fig. 3B).
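The choice index and the binomial tests above can be computed with the standard library alone. This is a sketch under stated assumptions: chance is taken as p = 0.5, the test is two-sided, and the function names choice_index and binomial_two_sided are hypothetical. With those assumptions, the 19 of 23 and 62 of 67 counts give P ≈ 0.0026 and P ≈ 1.42 × 10⁻¹³, in line with the reported values.

```python
from math import comb


def choice_index(n_high: int, n_choices: int) -> float:
    """[p(H) - 0.5] * 2, where p(H) is the proportion of high-value choices."""
    return (n_high / n_choices - 0.5) * 2


def binomial_two_sided(k: int, n: int) -> float:
    """Exact two-sided binomial test of k successes in n trials against p = 0.5.

    With p = 0.5 the distribution is symmetric, so the smaller tail is doubled
    (an assumed convention; capped at 1).
    """
    total = 2 ** n
    upper = sum(comb(n, i) for i in range(k, n + 1)) / total
    lower = sum(comb(n, i) for i in range(0, k + 1)) / total
    return min(1.0, 2 * min(upper, lower))


print(choice_index(24, 32))        # 75% high-value choices
print(binomial_two_sided(19, 23))  # negative-choice-index sessions with a positive index
print(binomial_two_sided(62, 67))  # all sessions with a positive index
```

Exact integer arithmetic via math.comb avoids the underflow that can occur when very small tail probabilities are accumulated in floating point.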

Fig. 3.

Models testing the relationship between dopamine-associated cached values and subjective preferences. In all panels, blue points are moderate-cost sessions (n = 20), red points are LL-preferred high-cost sessions (n = 25), and purple points are HH-preferred high-cost sessions (n = 22). (A and B) The x’s designate sessions violating the categorical models’ predictions. (A) The expected utility categorical model predicts that all data should fall within the upper-right and lower-left quadrants (green). (B) The expected benefits categorical model predicts that all data should fall within the upper quadrants (green). (C) The expected utility regression model: linear regression constrained through the origin (y = β₁ × x; β₀ = 0). (D) The expected benefits regression model: constant line, no slope (y = β₀; β₁ = 0). (E) The average dopamine discriminability index was significantly greater than 0 for each session type (***P < 0.001) but was lower in the LL-preferred high-cost sessions than in the moderate-cost sessions (*P = 0.015). (F) The standard linear regression model (y = β₁ × x + β₀). Both the slope and intercept differ significantly from 0 (P < 0.001).

We next carried out a more detailed analysis of a utility model relating the dopamine-reported cached values to choice and compared this model with a model in which utility has no influence. We constructed the utility model as a regression line (y = β₁ × x + β₀) constrained through the origin (β₀ = 0; Fig. 3C) and the alternative model as a constant with no slope (β₁ = 0; Fig. 3D). When goodness of fit was compared using the second-order bias-corrected Akaike Information Criterion (AICc) (43, 44), computed from the residual sum of squares, the utility model fit the data worse than did the alternative model, which explicitly did not account for utility (AICc = −97.30 vs. −154.27, respectively; weight of evidence favoring the origin-constrained slope = 4.26 × 10⁻¹³, vs. >0.999 favoring the constant with no slope). Although the preference-independent positive bias in the dopamine discriminability index captured by the alternative model was evident in all session types (Fig. 3E; one-sample t tests vs. zero: moderate-cost sessions, t₁₉ = 11.90, P = 2.98 × 10⁻¹⁰; HH-preferred high-cost sessions, t₂₁ = 7.78, P = 1.28 × 10⁻⁷; LL-preferred high-cost sessions, t₂₄ = 4.67, P = 9.61 × 10⁻⁵), its magnitude differed among these conditions (one-way ANOVA: F₂,₆₄ = 4.28, P = 0.018; Bonferroni-corrected post hoc tests: moderate-cost vs. LL-preferred high-cost sessions, P = 0.015; LL-preferred vs. HH-preferred high-cost sessions, P = 0.312; moderate-cost vs. HH-preferred high-cost sessions, P = 0.638). Indeed, a standard linear regression model including both a slope and an intercept term as free parameters provided an improved fit (Fig. 3F; AICc = −163.45) over the origin-constrained slope (Fig. 3C), the constant line without slope (Fig. 3D), and discontinuous lines (Fig. S3 and Table S1).
In this unconstrained model, the slope of the linear term differed significantly from 0 (β₁ = 0.199 ± 0.057, t = 3.468, P = 9.34 × 10⁻⁴) and explained a small proportion of the variance in the dopamine discriminability index (r² = 0.156). Nonetheless, the y-intercept of this regression model also was significantly greater than 0 (β₀ = 0.403 ± 0.038, t = 10.737, P = 4.89 × 10⁻¹⁶), suggesting that behaviorally indifferent rats show greater cue-evoked dopamine release to a high-value option than to a low-value option despite their lack of preference. Moreover, this regression line remained positive for all possible choice indices (from −1 to 1), meaning that dopamine release is greater for the high-value option regardless of preference. Importantly, the type of model that provided the best fit did not change if we used the data only from the cohort of animals with the 5-s cue-to-lever delay (Fig. S4 and Table S2), if the counterbalanced session pairs were treated as independent data points (Fig. S5 and Table S3), or if we analyzed the peak dopamine release rather than the auROC-based discriminability index (Fig. S6 and Table S4). Collectively, these data indicate that, although the dopamine-reported cached values showed a modest correlation with utility in these experimental paradigms, this relationship is not sufficient to make them a reliable instrument for determining choices.
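The model comparison above can be sketched as follows. This is an illustration under assumptions: the AICc is computed from the residual sum of squares in the usual least-squares form, n ln(RSS/n) + 2k plus the small-sample correction 2k(k + 1)/(n − k − 1); k counts the fitted coefficients plus the residual variance (the text does not state this convention); and the function names are hypothetical. Akaike weights depend only on AICc differences, so the reported values −97.30 and −154.27 give a weight of about 4.26 × 10⁻¹³ for the origin-constrained model regardless of how k was counted.

```python
import math
from typing import Dict, Sequence, Tuple


def fit_models(x: Sequence[float], y: Sequence[float]) -> Dict[str, Tuple[float, int]]:
    """Least-squares fits of the three models; returns {name: (RSS, k)}.

    k counts fitted coefficients plus one for the residual variance
    (an assumed convention).
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n

    # Origin-constrained slope: y = b1 * x
    b1_origin = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
    # Constant with no slope: y = b0
    b0_const = mean_y
    # Unconstrained line: y = b1 * x + b0 (ordinary least squares)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x

    def rss(pred):
        return sum((yi - pi) ** 2 for yi, pi in zip(y, pred))

    return {
        "origin_slope": (rss([b1_origin * xi for xi in x]), 2),
        "constant": (rss([b0_const] * n), 2),
        "linear": (rss([b1 * xi + b0 for xi in x]), 3),
    }


def aicc(rss_value: float, n: int, k: int) -> float:
    """AIC from the residual sum of squares plus the small-sample correction."""
    aic = n * math.log(rss_value / n) + 2 * k
    return aic + 2 * k * (k + 1) / (n - k - 1)


def akaike_weights(scores: Dict[str, float]) -> Dict[str, float]:
    """Relative likelihood exp(-delta/2) of each model, normalized to sum to 1."""
    best = min(scores.values())
    rel = {name: math.exp(-(s - best) / 2) for name, s in scores.items()}
    total = sum(rel.values())
    return {name: r / total for name, r in rel.items()}


# Weights recomputed from the two reported AICc values:
print(akaike_weights({"origin_slope": -97.30, "constant": -154.27}))
```

Because both restricted models are special cases of the unconstrained line, the unconstrained RSS can never be larger; the AICc penalty is what decides whether the extra parameter is worth it.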

Discussion

A preponderance of evidence supports the notion that phasic dopamine transmission functions as a neural instantiation of the temporal-difference prediction errors that drive reinforcement learning (13–24). Accordingly, changes in dopamine transmission are evoked whenever there is an unexpected reward-related event, both when reward delivery differs from expectations and when reward-predictive cues drive changes in expectation of available reward. The latter exemplifies how the dopamine response to unexpected cue presentation provides a readout of the cached value assigned to that cue through temporal-difference learning. These cached values are theorized to be used to determine action selection, so that the preferred action is the one associated with the cue with the greatest cached value (1–5). To subserve this role in decision making, the cached values need to incorporate any and all economic attributes insofar as those attributes influence subjective preferences; that is, by definition, the cached values must reliably reflect the ordinal utility of the available actions as revealed by animals’ behavioral preferences. Consistent with this premise, there have been numerous reports in which dopamine-associated cached values incorporate many economic attributes affecting animals’ behavioral preferences, such as objective expected value (24–27, 35, 36), and some subjective attributes, including risk preference (30–33), temporal discounting (24, 28, 29), flavor or reward-type preference (31), perceptual uncertainty (45, 46), and even preference for advance information (34). Even though this system is based on a cached-value (“model-free”) architecture, there have been suggestions in the literature that it has access to “model-based” information derived from inferential online computation (47), further supporting the notion that the dopamine-associated cached values are a common currency for economic decision making (17).
However, no matter how many positive correlations between dopamine-associated cached values and subjective preferences are observed, the existence of counterexamples in which this relationship is reversed is sufficient to demonstrate that the fundamental claim of decision-making theories—that cached values are all that is required to determine action selection—simply cannot hold as a general principle. Accordingly, the current work identifies circumstances in which there was a significant inversion between the options’ ordinal utility and the rank ordering of their dopamine-reported cached values. This breakdown in the relationship between cached values and subjective value arose in situations where the animals’ preferences were guided predominantly by effortful response cost, an economic dimension which previous voltammetry (35, 38) and electrophysiology (36, 37) studies have found to be weakly incorporated into the dopamine-associated cached values. Therefore, despite robust evidence that the dopamine-associated cached values do incorporate several subjective attributes such as risk preference (30–33) and temporal discounting (24, 28, 29), the current results by necessity imply that these cached values alone are insufficient for determining economic choices.

We have demonstrated that dopamine-associated cached values are positively correlated with behavioral preferences in some circumstances—those in which the benefits overshadow the costs—but are diametrically opposed to preference order in others in which the costs predominate in guiding animals’ choices. Therefore, valuations used in decision making cannot be based on this simple cached-value–based system alone, because they must account for costs that influence action selection. Theorists have proposed that coexisting valuation systems compete for the control of behavioral resources (1–5, 48). Based on this competition framework, one could speculate that choices were determined by an alternative valuation system under circumstances in which costs outweigh benefits (i.e., when the dopamine-associated cached values did not correlate with preference) and that the cached values were used as the determinant of choice only when benefits overshadow costs. However, this marginalized use of the cached-value system would be quite limited, because real-world choices often are strongly influenced by aversive or energetic costs. Moreover, even when benefits do overshadow costs, there still are gradations in preferences for incremental changes in response cost (49), and so even these preferences cannot be based on a valuation system that is relatively insensitive to costs. Therefore, additional information on costs is required to perform these decision-making computations. Representations of costs indeed have been observed in areas such as the anterior cingulate cortex (50–54), insular cortex (55, 56), and basolateral amygdala (49, 57), all of which provide glutamatergic inputs that converge on striatal projection neurons. Therefore, a more integrative valuation system could arise from the downstream combination of benefit information from the dopamine-associated cached values and cost information from other neural sources. 
However, this concept of an incomplete valuation system requiring additional information is not accommodated in current theories (1–5, 48), whether they describe the dopamine-associated valuation system alone or used in parallel with alternative systems. Alternatively, it remains possible that dopamine-associated cached values do not contribute to the selection process at all but rather play a more nuanced role in decision making that pertains to the performance or execution of the selected action. This scenario would place the burden of action selection on other reward-related structures. Indeed, representations of subjective value have been observed in multiple cortical regions (53, 58–60). Perturbation of the cached values (9, 21, 23) to test the extent of their contribution to action selection could distinguish between these possibilities in future experiments. Under any of these scenarios, however, the current findings demonstrate that the dopamine-associated cached values alone are not sufficient to serve as the basis of simple economic choices.

In the current work, we used cue-evoked mesolimbic dopamine transmission as a means to examine cached values assigned to reward options and identified circumstances in which animals preferentially selected the option that did not have the greatest cached value. This situation arose in the cost–benefit decisions of the present experiment when the differences in response costs overshadowed the differences in benefits. These findings demonstrate a direct violation of the fundamental principle that these cached values reflect animals’ subjective preferences and are sufficient for determining choices. Therefore, we conclude that dopamine-associated cached values cannot be used as the sole determinant of cost–benefit decision making.

Materials and Methods

See SI Materials and Methods for additional details.

Subjects and Surgery.

All procedures were approved by the University of Washington Institutional Animal Care and Use Committee. Male Sprague–Dawley rats (Charles River Laboratories), 250–300 g upon arrival, were anesthetized with isoflurane for bilateral implantation of carbon-fiber microelectrodes (40) targeting the nucleus accumbens core (1.3 mm anterior, 1.3 mm lateral, 6.8–7.0 mm ventral to bregma) and an Ag/AgCl reference electrode. After at least 1 wk of postsurgery recovery, rats were food-restricted to 90% of their ad libitum body weight with free access to water in the animals’ home cages.

Mixed-Contingency Decision-Making Task.

All sessions consisted of blocks of four single-option forced trials in which only one of the two options was available followed by four choice trials in which both options were concurrently available. A 45 ± 15 s variable ITI separated each discrete trial, with a maximum of 120 trials per session. As in initial training (SI Materials and Methods), for the first cohort of rats each trial began immediately after the ITI with the onset of one or both cue lights and the simultaneous extension of the corresponding lever(s); for the second cohort the lever(s) extended 5 s after the onset of the cue light(s). The mixed-contingency decision-making task consisted of two types of sessions: moderate-cost and high-cost conditions (Fig. 1A). In both conditions, one lever served as a low value/low effort (LL) reference option, yielding one food pellet for four lever presses. The alternative option yielded a high-value reward (four pellets) for a medium effort requirement (eight presses) in the moderate-cost condition (high value/medium effort, HM) or for a high effort requirement in the high-cost condition (high value/high effort, HH). Before voltammetric recordings were conducted (SI Materials and Methods), this high effort requirement was determined individually for each rat such that it preferred the LL option. The final high effort requirements used in recording sessions ranged from 32–48 lever presses for rats in the first cohort and 32–128 presses for rats in the second cohort. Behavioral criterion was defined as 75% choice for the HM option in the moderate-cost condition and for the LL option in the high-cost condition within a sliding 12-choice window. After reaching this criterion, rats performed four additional blocks (32 trials), which provided the data analyzed from each recording session. 
We also obtained recordings from high-cost sessions in which rats did not reach the intended criterion for the LL option and instead reached the opposite criterion, preferring the HH option. The high effort requirements in these HH-preferred high-cost sessions ranged from 32 to 64 presses for the first cohort and from 32 to 128 presses for the second cohort.
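
The behavioral criterion, and the sorting of high-cost sessions into LL-preferred and HH-preferred sessions, can be sketched as a sliding-window check over the sequence of choice-trial outcomes. The function names are hypothetical, and the sketch assumes that forced trials are excluded from the window and that the window is evaluated only once 12 choices have accumulated; 75% of a 12-choice window corresponds to at least 9 choices of the target option.

```python
from collections import deque


def find_criterion(choices, target, window=12, threshold=0.75):
    """Index of the choice at which `target` first makes up >= threshold of the
    last `window` choices; None if the criterion is never reached."""
    recent = deque(maxlen=window)  # the oldest choice drops out automatically
    for i, choice in enumerate(choices):
        recent.append(choice)
        # ASSUMPTION: criterion is only evaluated once the window is full.
        if len(recent) == window and sum(c == target for c in recent) >= threshold * window:
            return i
    return None


def classify_high_cost_session(choices, window=12, threshold=0.75):
    """Label a high-cost session by whichever criterion (LL or HH) is met first."""
    ll = find_criterion(choices, "LL", window, threshold)
    hh = find_criterion(choices, "HH", window, threshold)
    if ll is None and hh is None:
        return None  # neither preference criterion reached
    if hh is None or (ll is not None and ll < hh):
        return "LL-preferred"
    return "HH-preferred"
```

Because the threshold exceeds 50%, the two criteria cannot be met on the same choice. Once criterion was reached, the analyzed data came from the next four blocks: 32 trials, 16 of them choice trials.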

Fast-Scan Cyclic Voltammetry Recording Sessions.

Chronically implanted carbon-fiber microelectrodes were connected to a head-mounted voltammetric amplifier for dopamine detection by fast-scan cyclic voltammetry as previously described (40). During recording sessions, a potential of −0.4 V (versus the Ag/AgCl reference electrode) was applied to the carbon fiber and ramped to +1.3 V and back at 400 V/s, with this scan repeated at 10 Hz. To confirm that electrodes were capable of detecting chemically verified dopamine, a series of unexpected food pellets was delivered before and after each recording session. Voltammetry data from a recording session were included in the analysis only if the pre- and postsession pellet deliveries elicited dopamine release whose cyclic voltammogram (electrochemical signature) was highly correlated with that of a dopamine standard (r² ≥ 0.75 by linear regression).
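
The scan parameters above fix the timing of each voltammetric sweep: rising from −0.4 V to +1.3 V and falling back at 400 V/s takes 2 × 1.7 V / (400 V/s) = 8.5 ms, repeated every 100 ms at 10 Hz. The Python sketch below builds one such cycle and applies the session-inclusion rule, computing r² as the squared Pearson correlation between a pellet-evoked cyclic voltammogram and a dopamine standard (equivalent to r² of a simple linear regression). The function names, the 100 kHz sampling rate, and the assumption that the electrode is held at −0.4 V between sweeps are illustrative choices, not details taken from the source.

```python
import math


def scan_duration_s(hold_v=-0.4, peak_v=1.3, rate_v_per_s=400.0):
    """Time for one triangular sweep: hold -> peak -> hold."""
    return 2 * (peak_v - hold_v) / rate_v_per_s


def fscv_cycle(fs_hz=100_000, hold_v=-0.4, peak_v=1.3, rate_v_per_s=400.0, rep_hz=10.0):
    """Applied potential (V) sampled over one repetition period (1 / rep_hz s)."""
    n = round(fs_hz / rep_hz)
    half = (peak_v - hold_v) / rate_v_per_s  # duration of the rising ramp
    volts = []
    for k in range(n):
        t = k / fs_hz
        if t < half:
            volts.append(hold_v + rate_v_per_s * t)           # anodic ramp
        elif t < 2 * half:
            volts.append(peak_v - rate_v_per_s * (t - half))  # cathodic ramp
        else:
            volts.append(hold_v)  # ASSUMPTION: held at -0.4 V between sweeps
    return volts


def r_squared(x, y):
    """Squared Pearson correlation (= r^2 of a simple linear regression)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def session_included(pre_cv, post_cv, standard_cv, r2_min=0.75):
    """Keep a session only if both the pre- and postsession pellet-evoked
    voltammograms match the dopamine standard with r^2 >= r2_min."""
    return (r_squared(pre_cv, standard_cv) >= r2_min
            and r_squared(post_cv, standard_cv) >= r2_min)
```

Requiring both the pre- and postsession checks to pass guards against an electrode that degraded during the session, since a single check would only establish detection at one time point.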

Acknowledgments

We thank S. Ng-Evans, K. Reinelt, and K. Dofredo for technical assistance and A. Hart, G. Boynton, and G. Horwitz for advice on data analysis. This work was supported by a National Science Foundation Graduate Research Fellowship (to N.G.H.), a Wellcome Trust Research Career Development Fellowship (to M.E.W.), and National Institutes of Health Grants GM007108 and DA036278 (to N.G.H.), DA007278 (to M.M.A.), and MH079292, DA027858, and AG044839 (to P.E.M.P.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1419770111/-/DCSupplemental.

References

1. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; Cambridge, MA: 1998.

2. Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol. 2006;16(2):199–204. doi: 10.1016/j.conb.2006.03.006.

3. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9(7):545–556. doi: 10.1038/nrn2357.

4. Kable JW, Glimcher PW. The neurobiology of decision: Consensus and controversy. Neuron. 2009;63(6):733–745. doi: 10.1016/j.neuron.2009.09.003.

5. Lee D, Seo H, Jung MW. Neural basis of reinforcement learning and decision making. Annu Rev Neurosci. 2012;35:287–308. doi: 10.1146/annurev-neuro-062111-150512.

6. Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310(5752):1337–1340. doi: 10.1126/science.1115270.

7. Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron. 2008;58(3):451–463. doi: 10.1016/j.neuron.2008.02.021.

8. Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69(1):170–182. doi: 10.1016/j.neuron.2010.11.041.

9. Tai LH, Lee AM, Benavidez N, Bonci A, Wilbrecht L. Transient stimulation of distinct subpopulations of striatal neurons mimics changes in action value. Nat Neurosci. 2012;15(9):1281–1289. doi: 10.1038/nn.3188.

10. Reynolds JN, Hyland BI, Wickens JR. A cellular mechanism of reward-related learning. Nature. 2001;413(6851):67–70. doi: 10.1038/35092560.

11. Shen W, Flajolet M, Greengard P, Surmeier DJ. Dichotomous dopaminergic control of striatal synaptic plasticity. Science. 2008;321(5890):848–851. doi: 10.1126/science.1160575.

12. Pawlak V, Kerr JN. Dopamine receptor activation is required for corticostriatal spike-timing-dependent plasticity. J Neurosci. 2008;28(10):2435–2446. doi: 10.1523/JNEUROSCI.4402-07.2008.

13. Houk JC, Adams JL, Barto AG. A model of how the basal ganglia generate and use neural signals that predict reinforcement. In: Houk JC, Davis JL, Beiser DG, editors. Models of Information Processing in the Basal Ganglia. MIT Press; Cambridge, MA: 1995. pp. 249–270.

14. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16(5):1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

15. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

16. Glimcher PW. Understanding dopamine and reinforcement learning: The dopamine reward prediction error hypothesis. Proc Natl Acad Sci USA. 2011;108(Suppl 3):15647–15654. doi: 10.1073/pnas.1014269108.

17. Schultz W. Updating dopamine reward signals. Curr Opin Neurobiol. 2013;23(2):229–238. doi: 10.1016/j.conb.2012.11.012.

18. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412(6842):43–48. doi: 10.1038/35083500.

19. Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47(1):129–141. doi: 10.1016/j.neuron.2005.05.020.

20. Cohen JY, Haesler S, Vong L, Lowell BB, Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature. 2012;482(7383):85–88. doi: 10.1038/nature10754.

21. Steinberg EE, et al. A causal link between prediction errors, dopamine neurons and learning. Nat Neurosci. 2013;16(7):966–973. doi: 10.1038/nn.3413.

22. Hart AS, Rutledge RB, Glimcher PW, Phillips PEM. Phasic dopamine release in the rat nucleus accumbens symmetrically encodes a reward prediction error term. J Neurosci. 2014;34(3):698–704. doi: 10.1523/JNEUROSCI.2489-13.2014.

23. Stopper CM, Tse MT, Montes DR, Wiedman CR, Floresco SB. Overriding phasic dopamine signals redirects action selection during risk/reward decision making. Neuron. 2014;84(1):177–189. doi: 10.1016/j.neuron.2014.08.033.

24. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10(12):1615–1624. doi: 10.1038/nn2013.

25. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299(5614):1898–1902. doi: 10.1126/science.1077349.

26. Morris G, Arkadir D, Nevet A, Vaadia E, Bergman H. Coincident but distinct messages of midbrain dopamine and striatal tonically active neurons. Neuron. 2004;43(1):133–143. doi: 10.1016/j.neuron.2004.06.012.

27. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307(5715):1642–1645. doi: 10.1126/science.1105370.

28. Kobayashi S, Schultz W. Influence of reward delays on responses of dopamine neurons. J Neurosci. 2008;28(31):7837–7846. doi: 10.1523/JNEUROSCI.1600-08.2008.

29. Day JJ, Jones JL, Wightman RM, Carelli RM. Phasic nucleus accumbens dopamine release encodes effort- and delay-related costs. Biol Psychiatry. 2010;68(3):306–309. doi: 10.1016/j.biopsych.2010.03.026.

30. Sugam JA, Day JJ, Wightman RM, Carelli RM. Phasic nucleus accumbens dopamine encodes risk-based decision-making behavior. Biol Psychiatry. 2012;71(3):199–205. doi: 10.1016/j.biopsych.2011.09.029.

31. Lak A, Stauffer WR, Schultz W. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc Natl Acad Sci USA. 2014;111(6):2343–2348. doi: 10.1073/pnas.1321596111.

32. Stauffer WR, Lak A, Schultz W. Dopamine reward prediction error responses reflect marginal utility. Curr Biol. 2014;24(21):2491–2500. doi: 10.1016/j.cub.2014.08.064.

33. Nasrallah NA, et al. Risk preference following adolescent alcohol use is associated with corrupted encoding of costs but not rewards by mesolimbic dopamine. Proc Natl Acad Sci USA. 2011;108(13):5466–5471. doi: 10.1073/pnas.1017732108.

34. Bromberg-Martin ES, Hikosaka O. Midbrain dopamine neurons signal preference for advance information about upcoming rewards. Neuron. 2009;63(1):119–126. doi: 10.1016/j.neuron.2009.06.009.

35. Gan JO, Walton ME, Phillips PEM. Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat Neurosci. 2010;13(1):25–27. doi: 10.1038/nn.2460.

36. Pasquereau B, Turner RS. Limited encoding of effort by dopamine neurons in a cost-benefit trade-off task. J Neurosci. 2013;33(19):8288–8300. doi: 10.1523/JNEUROSCI.4619-12.2013.

37. Ravel S, Richmond BJ. Dopamine neuronal responses in monkeys performing visually cued reward schedules. Eur J Neurosci. 2006;24(1):277–290. doi: 10.1111/j.1460-9568.2006.04905.x.

38. Wanat MJ, Kuhnen CM, Phillips PEM. Delays conferred by escalating costs modulate dopamine release to rewards but not their predictors. J Neurosci. 2010;30(36):12020–12027. doi: 10.1523/JNEUROSCI.2691-10.2010.

39. Fiorillo CD. Two dimensions of value: Dopamine neurons represent reward but not aversiveness. Science. 2013;341(6145):546–549. doi: 10.1126/science.1238699.

40. Clark JJ, et al. Chronic microsensors for longitudinal, subsecond dopamine detection in behaving animals. Nat Methods. 2010;7(2):126–129. doi: 10.1038/nmeth.1412.

41. Kepecs A, Uchida N, Zariwala HA, Mainen ZF. Neural correlates, computation and behavioural impact of decision confidence. Nature. 2008;455(7210):227–231. doi: 10.1038/nature07200.

42. Green DM, Swets JA. Signal Detection Theory and Psychophysics. Wiley; New York: 1966.

43. Akaike H. A new look at the statistical model identification. IEEE Trans Automat Contr. 1974;19(6):716–723.

44. Burnham KP, Anderson DR. Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach. Springer; New York: 2002.

45. Nomoto K, Schultz W, Watanabe T, Sakagami M. Temporally extended dopamine responses to perceptually demanding reward-predictive stimuli. J Neurosci. 2010;30(32):10692–10702. doi: 10.1523/JNEUROSCI.4828-09.2010.

46. de Lafuente V, Romo R. Dopamine neurons code subjective sensory experience and uncertainty of perceptual decisions. Proc Natl Acad Sci USA. 2011;108(49):19767–19771. doi: 10.1073/pnas.1117636108.

47. Bromberg-Martin ES, Matsumoto M, Hong S, Hikosaka O. A pallidus-habenula-dopamine pathway signals inferred stimulus values. J Neurophysiol. 2010;104(2):1068–1076. doi: 10.1152/jn.00158.2010.

48. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8(12):1704–1711. doi: 10.1038/nn1560.

49. Ghods-Sharifi S, St Onge JR, Floresco SB. Fundamental contribution by the basolateral amygdala to different forms of decision making. J Neurosci. 2009;29(16):5251–5259. doi: 10.1523/JNEUROSCI.0315-09.2009.

50. Walton ME, Bannerman DM, Alterescu K, Rushworth MF. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23(16):6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

51. Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9(9):1161–1168. doi: 10.1038/nn1756.

52. Hillman KL, Bilkey DK. Neurons in the rat anterior cingulate cortex dynamically encode cost-benefit in a spatial decision-making task. J Neurosci. 2010;30(22):7705–7713. doi: 10.1523/JNEUROSCI.1273-10.2010.

53. Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14(12):1581–1589. doi: 10.1038/nn.2961.

54. Amemori K, Graybiel AM. Localized microstimulation of primate pregenual cingulate cortex induces negative decision-making. Nat Neurosci. 2012;15(5):776–785. doi: 10.1038/nn.3088.

55. Prévost C, Pessiglione M, Météreau E, Cléry-Melin ML, Dreher JC. Separate valuation subsystems for delay and effort decision costs. J Neurosci. 2010;30(42):14080–14090. doi: 10.1523/JNEUROSCI.2752-10.2010.

56. Palminteri S, et al. Critical roles for anterior insula and dorsal striatum in punishment-based avoidance learning. Neuron. 2012;76(5):998–1009. doi: 10.1016/j.neuron.2012.10.017.

57. McHugh SB, et al. Aversive prediction error signals in the amygdala. J Neurosci. 2014;34(27):9024–9033. doi: 10.1523/JNEUROSCI.4465-13.2014.

58. Padoa-Schioppa C. Neurobiology of economic choice: A good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

59. Kim S, Hwang J, Lee D. Prefrontal coding of temporally discounted values during intertemporal choice. Neuron. 2008;59(1):161–172. doi: 10.1016/j.neuron.2008.05.010.

60. Louie K, Glimcher PW. Separating value from choice: Delay discounting activity in the lateral intraparietal area. J Neurosci. 2010;30(16):5498–5507. doi: 10.1523/JNEUROSCI.5742-09.2010.
