Abstract

What makes people willing to pay costs to benefit others? Does such cooperation require effortful self-control, or do automatic, intuitive processes favor cooperation? Time pressure has been shown to increase cooperative behavior in Public Goods Games, implying a predisposition towards cooperation. Consistent with the hypothesis that this predisposition results from the fact that cooperation is typically advantageous outside the lab, it has further been shown that the time pressure effect is undermined by prior experience playing lab games (where selfishness is the more advantageous strategy). Furthermore, a recent study found that time pressure increases cooperation even in a game framed as a competition, suggesting that the time pressure effect is not the result of social norm compliance. Here, we successfully replicate these findings, again observing a positive effect of time pressure on cooperation in a competitively framed game, but not when using the standard cooperative framing. These results suggest that participants' intuitions favor cooperation rather than norm compliance, and also that simply changing the framing of the Public Goods Game is enough to make it appear novel to participants and thus to restore the time pressure effect.

Introduction

Cooperation is, at once, both an essential feature of human social life and an enduring puzzle: why would anyone incur the personal costs that cooperative behavior demands in order to benefit the group when he or she could just as easily behave selfishly and hope to reap the rewards of others' prosociality? As such, the evolution and maintenance of cooperation are topics of major interest across the social and biological sciences [1]–[32].

Research has attempted to better understand the cognitive mechanics of cooperative decision-making by appealing to dual process models of judgment. These models posit the existence of two qualitatively distinct modes of thought: one that is relatively automatic, rapid, spontaneous, holistic, and intuitive, and another that is relatively controlled, slow, sequential, deliberative and rational [33]–[39]. Through the lens of this dual process perspective, a key question on the nature of cooperative decision-making concerns the extent to which individuals possess an intuition to be selfish that is only overridden through deliberative efforts to be prosocial, or, instead, possess an intuition towards prosociality that is overridden by deliberative selfishness.

To assess these two possibilities, a number of recent studies have attempted to experimentally manipulate the extent to which intuitive or deliberative processes are engaged during decision-making in economic cooperation games. It has been found that time pressure [40]–[43], cognitive load [44]–[46], conceptual priming of intuition [40], deciding about present rather than future allocations of money [47], [48], and disruption of the right lateral prefrontal cortex [49] can increase participants' willingness to pay money to benefit others in both unilateral and multilateral cooperation games. Other studies find null effects of some of these manipulations [50]–[53], suggesting the presence of important moderators. (Studies correlating reaction times with cooperation yield conflicting results [40], [54]–[58], but this is likely because decisions involving conflict take longer, regardless of the extent to which intuitive versus deliberative processes are invoked [59]. Furthermore, ego depletion seems to affect Dictator Game prosociality in the opposite direction from the other manipulations of cognitive processing [60]–[63], suggesting that in this context depletion may both reduce self-control and change participants' intuitive preferences.)

Further evidence for an intuitive predisposition towards cooperation comes from the finding that participants treat neutrally framed games the same as cooperatively framed games [64]; that previous play of long versus short repeated games spills over into subsequent one-shot games, but only for participants who rely on heuristics [65]; that people with low self-control are more likely to sacrifice to benefit their romantic partners [66]; that people who risk their lives to save strangers overwhelmingly describe their decision processes as automatic and intuitive [67]; that text analysis of participants' descriptions of their decision process during economic games finds that positive emotion predicts cooperation while inhibition predicts selfishness [68]; and that cooperative choices involve less conflict than non-cooperative choices in mouse-tracking studies [69].

Theoretical Motivation

To explain this overall relationship between deliberation and selfishness, and to predict specific moderators, the Social Heuristics Hypothesis (SHH) has been proposed [41]. The SHH adds an explicitly dual process framework to theories of cultural evolution, norm internalization and spillover effects [8], [70]–[75]. It posits that cooperative decision-making is guided by heuristic strategies that have generally been successful in one's previous social interactions and have, over time, become internalized and automatically applied to social interactions that resemble situations one has encountered in the past. When one encounters a new or atypical social situation that is unlike previous experience, one generally tends to rely on these heuristics as an intuitive default response. However, through additional deliberation about the details of the situation, one can override this heuristic response and arrive at a response that is more tailored to the current interaction. Thus, misapplication and over-generalization of heuristics are at the heart of the SHH.

An important prediction that stems from this over-generalization is that the effects of promoting intuition should be moderated by one's past experience. Because cooperative decision-making in daily life often involves repeated interactions in which reputation or the threat of sanctions is an important consideration, cooperation is typically the payoff-maximizing strategy outside the lab, and intuitions should therefore generally favor cooperation. However, not everyone acquires such experience in their daily lives, and individuals who report having less general trust of their interaction partners in daily life have been shown to exhibit less of a tendency to cooperate when induced to rely more heavily on their intuitions [40], [42].

Moreover, even if individuals generally have cooperative interactions in their daily lives, they may nonetheless have a great deal of exposure to situations or contexts in which cooperation is not the payoff-maximizing strategy, leading them to be less inclined to trust their intuitions in these cases. That is, experience with settings where one's typically advantageous response is non-optimal should reduce the spillover effects that the SHH argues drive the intuitive cooperation effect. In support of this contention, people who report more experience with economic games (games in which the selfish strategy is typically payoff-maximizing) tend to exhibit a smaller effect of promoting intuition on their cooperative decision-making [40]–[42]. Furthermore, in a longitudinal analysis of the effects of time pressure in economic games conducted online on Mechanical Turk (MTurk), the intuitive cooperation effect steadily declined over a two-year period, suggesting that as economic games became more popular on MTurk and participants acquired greater experience with them, participants became less inclined to trust intuitions to cooperate [41].

If this effect of prior experience with economic games is indeed driven by a learned suppression of spillover effects in the context of familiar game paradigms, then it should be possible to re-induce the intuitive cooperation effect by modifying the paradigm such that it once again appears novel. One way to achieve this may be to change the way in which the cooperative decision is framed. The results of a recent study [43] (hereafter RNW) are consistent with this suggestion. In their second study, RNW applied time pressure or delay to cooperation games while also manipulating how the cooperative decision was framed to participants (RNW's first study crossed time pressure with an ingroup/outgroup manipulation). In the competitive frame condition, the interaction was described as a competition with other competitors, with a winner being declared at the end of the game on the basis of their earned payoffs. In the cooperative frame condition, the game used the language typical of many cooperation experiments (inspired by the seminal work of [76]) in which the game was described as a decision about how much to contribute to a common project. Previous research has suggested that such differences in framing can have strong effects on participants' overall levels of cooperation [64], [77]. RNW asked whether, in addition to having a main effect on cooperation, the framing manipulation would moderate the effects of time pressure on participants' choices in a Public Goods Game (PGG). They found main effects of context and time pressure in the predicted directions. With respect to moderation, they found a non-significant but trending interaction, such that the positive effect of time pressure on cooperation was driven primarily by those in the competition condition (rather than the cooperatively framed baseline typically used in PGG experiments).

This result is surprising if one believes that the effect of time pressure on cooperation is explained by increasing adherence to the social norms dictated by the situation: on this view, one would expect time pressure to lead to greater selfishness in the competitive domain and greater cooperation in the cooperative domain. If, conversely, people have a domain-general heuristic favoring cooperation (rather than norm compliance) that gets degraded by prior experience with specific settings where cooperation is not advantageous, the observed pattern should be expected: many participants on MTurk have prior experience with the standard cooperative-framed PGG instructions (undermining the intuitive cooperation effect), whereas the competitive frame is novel (leaving the intuitive cooperation effect intact). Thus, the results of RNW provide evidence that the intuitive cooperation effect is not unique to situations where a cooperative norm is projected, and they further support the experience hypothesis.

The Present Study

Here we test whether this pattern of results is replicable. Above and beyond the general importance of replication studies, we had several additional motivations. First, although RNW found a significant positive simple effect of time pressure in the competition condition and no simple effect in the baseline, the interaction between time pressure and condition was non-significant in their data. Thus, it is difficult to definitively conclude that the novel competition frame increased the time pressure effect (as would be predicted by the experience hypothesis). Second, RNW's main analysis in their framing experiment excluded participants who failed to obey the time constraint; because compliance may itself be affected by the manipulation, such exclusion can undermine random assignment and impair causal inference [50] (note that this was not true of RNW's Study 1, which demonstrated the robustness of the time pressure effect to interaction with in-group vs. out-group members). Finally, general questions have been raised about the replicability of the effect of time pressure on cooperation [50], [51], [78], and so further tests are valuable.

To this end, we sought to replicate RNW's framing experiment (their Study 2) using a large sample drawn from the same study population as the original study. Additionally, we aggregate these new data with those of RNW to assess the overall effect of frame and time pressure on cooperative decision-making.

Methods

Participants

Participants were 751 (319 women; Mage = 30.5) Mechanical Turk (MTurk) workers [79]–[83] who were located in the United States. They participated in exchange for a $0.50 show-up fee as well as the opportunity to earn up to an additional $1 based on their decisions in an economic game. These studies were approved by the Yale University Human Subjects Committee IRB Protocol #1307012383. All subjects provided written informed consent prior to participating, and this was approved by the Human Subjects Committee. For raw data, see Material S1.

Procedure

Measure of cooperation

To assess participants' level of cooperation, we had them complete a one-shot, four-player Public Goods Game (PGG). Each participant decided how much of an endowment of $0.40 to contribute to a shared public resource, in increments of $0.02. Participants were informed that any money contributed to the shared resource would be doubled by the experimenter and distributed evenly among all four members of the group. Thus, if all participants contributed their entire $0.40 endowment, everyone would double their earnings and receive $0.80. However, a participant who kept their $0.40 while the other three group members contributed their full endowments would receive $1.00 (and each of the others only $0.60), thus maximizing their own earnings. Our primary dependent measure was the amount of money contributed.
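The payoff arithmetic above can be checked with a short sketch. It assumes amounts in cents, a multiplier of 2 and an even split among four players, exactly as stated in the instructions; the function name `pgg_payoffs` is our own hypothetical helper, not part of the original study materials.

```python
def pgg_payoffs(contributions, endowment=40, multiplier=2):
    """Return each player's earnings (cents) in a one-shot linear Public Goods Game.

    Each player keeps whatever they do not contribute; the pooled contributions
    are multiplied and split evenly among all players.
    """
    n = len(contributions)
    for c in contributions:
        # Contributions must be even amounts (2-cent increments) within the endowment.
        if not 0 <= c <= endowment or c % 2:
            raise ValueError("contributions must be even amounts between 0 and the endowment")
    share = multiplier * sum(contributions) / n
    return [endowment - c + share for c in contributions]

# Everyone contributes everything: each player doubles their 40 cents.
print(pgg_payoffs([40, 40, 40, 40]))
# One player free-rides while the other three contribute fully.
print(pgg_payoffs([0, 40, 40, 40]))
```

Because each contributed cent returns only multiplier / n = 2 / 4 = 0.5 cents to the contributor, contributing nothing maximizes individual earnings whatever the others do, while full contribution maximizes the group total.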

Framing manipulation

To manipulate participants' construal of the economic game as either cooperative or competitive, we altered the wording of the instructions by condition. In the cooperative condition, the game was described as a decision about how much to contribute to a common project, and the other participants were referred to as other members of the group:

“You have been randomly assigned to interact with 3 other people. All of you receive this same set of instructions. You cannot participate in this study more than once. Each person in your group is given 40 cents for this interaction (in addition to the 50 cents you received already for participating). You each decide how much of your 40 cents to keep for yourself, and how much (if any) to contribute to the group's common project (in increments of 2 units: 0, 2, 4, 6 etc). All money contributed to the common project is doubled, and then split evenly among the 4 group members. Thus, for every 2 cents contributed to the common project, each group member receives 1 cent. If everyone contributes all of their 40 cents, everyone's money will double: each of you will earn 80 cents. But if everyone else contributes their 40 cents, while you keep your 40 cents, you will earn 100 cents, while the others will earn only 60 cents. That is because for every 2 cents you contribute, you get only 1 cent back. Thus you personally lose money on contributing. The other people are REAL and will really make a decision – there is no deception in this study. Once you and the other people have chosen how much to contribute, the interaction is over. Neither you nor the other people receive any bonus other than what comes out of this interaction.”

In contrast, in the competitive condition, the interaction with the other participants was described as a competition with four competitors:

“You have been randomly assigned to compete with 3 other opponents. All of you receive this same set of instructions. You cannot participate in this study more than once. Each person in your group is given 40 cents for this interaction (in addition to the 50 cents you received already for participating). You each decide how much of your 40 cents to keep for yourself, and how much (if any) to contribute (in increments of 2 units: 0, 2, 4, 6 etc). All money contributed is doubled, and then split evenly among the 4 competitors. Thus, for every 2 cents contributed, each group member receives 1 cent. If everyone contributes all of their 40 cents, everyone's money will double: each of you will earn 80 cents. But if everyone else contributes their 40 cents, while you keep your 40 cents, you will earn 100 cents, while the others will earn only 60 cents. That is because for every 2 cents you contribute, you get only 1 cent back. Thus you personally lose money on contributing. Your opponents are REAL and will really make a decision – there is no deception in this study. Once you and the other people have chosen how much to contribute, the interaction is over. Neither you nor the other competitors receive any bonus other than what comes out of this interaction.”

(Note that in our experiment the competition instructions were more closely matched to the cooperative condition than the instructions used in the competitive condition in RNW.)

Manipulation of time constraint

We manipulated the cognitive processing of participants' cooperative decision-making by imposing a time constraint on their decision in the PGG. Following the instructions page (which was self-paced), participants moved to a new screen that differed by condition. In the time constraint condition, participants were asked, at the top of the screen, to make their decision as quickly as possible, and to take no longer than 10 seconds to make their choice. In the time delay condition, participants were asked to consider their decision very carefully and were told not to make a decision for at least 10 seconds. In both cases, participants made their decision using a slider initialized to a 50% contribution (as in [40], [43]).

A number of participants did not obey the time constraint instructions, either failing to make their decision within the allotted time in the time constraint condition (140 participants; 36% of that condition) or failing to wait the allotted time in the time delay condition (61 participants; 17% of that condition). Nevertheless, the manipulation had a substantial effect: the median decision time was 9 seconds in the time constraint condition and 21 seconds in the time delay condition. We include all participants (regardless of whether they obeyed the time constraint) in our analyses, because excluding non-compliant participants can break random assignment and thereby compromise the causal interpretation of our results (see [50]). We note, however, that our results are robust to excluding those who failed to follow the time constraint instructions.
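The compliance bookkeeping described above can be sketched as follows. This is an illustration under assumptions: decision times are taken to be recorded in seconds, a decision at exactly 10 s is treated as compliant in both conditions (the text does not say), and the function names and decision times are hypothetical, invented for illustration rather than taken from the study data. Compliance is only reported, never used to drop anyone, mirroring the intention-to-treat analysis.

```python
from statistics import median

TIME_LIMIT_S = 10  # the 10-second threshold given in both sets of instructions

def complied(condition, decision_time_s):
    """True if a decision time obeys the instruction for its condition.

    Assumption: a decision made at exactly 10 s counts as compliant in both conditions.
    """
    if condition == "pressure":
        return decision_time_s <= TIME_LIMIT_S
    if condition == "delay":
        return decision_time_s >= TIME_LIMIT_S
    raise ValueError(f"unknown condition: {condition!r}")

def summarize(records):
    """Summarize (condition, decision_time_s) pairs per condition.

    Every participant is kept (intention-to-treat); compliance is reported only.
    """
    times_by_condition = {}
    for condition, t in records:
        times_by_condition.setdefault(condition, []).append(t)
    summary = {}
    for condition, times in times_by_condition.items():
        n_failed = sum(not complied(condition, t) for t in times)
        summary[condition] = {
            "n": len(times),
            "noncompliance_rate": n_failed / len(times),
            "median_time_s": median(times),
        }
    return summary

# Hypothetical decision times (seconds), invented for illustration only.
records = [("pressure", 6.0), ("pressure", 9.0), ("pressure", 14.0),
           ("delay", 8.0), ("delay", 21.0), ("delay", 25.0)]
print(summarize(records))
```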

Assessing Comprehension

We assessed comprehension with two questions that appeared after the decision (so as not to induce a reflective mindset, following the Supplemental Study of [40]): “What level of contribution earns the highest payoff for the group as a whole?” and “What level of contribution earns the highest payoff for you personally?” In total, 216 participants (28.8%) answered at least one of these questions incorrectly (69, or 9.2%, answered the first question incorrectly; 205, or 27.3%, answered the second incorrectly). As we demonstrate below, our results are robust to inclusion or exclusion of those who failed the comprehension questions.
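A minimal sketch of how these comprehension checks might be scored. It assumes answers are recorded as contribution amounts in cents (the actual response format is not described), and the names are hypothetical; the correct answers themselves follow from the payoff structure of the game.

```python
# Correct answers follow from the payoff structure: each contributed cent is
# doubled and split four ways, so it returns 0.5 cents to the contributor but
# 2 cents to the group as a whole.
GROUP_OPTIMUM_CENTS = 40    # contribute the full endowment
PERSONAL_OPTIMUM_CENTS = 0  # contribute nothing

def score_comprehension(answers):
    """answers: list of (group_answer, personal_answer) pairs, in cents.

    Returns error counts per question and the number of participants who
    missed at least one question (the exclusion criterion described above).
    """
    wrong_q1 = [group != GROUP_OPTIMUM_CENTS for group, _ in answers]
    wrong_q2 = [personal != PERSONAL_OPTIMUM_CENTS for _, personal in answers]
    return {
        "wrong_q1": sum(wrong_q1),
        "wrong_q2": sum(wrong_q2),
        "failed_any": sum(a or b for a, b in zip(wrong_q1, wrong_q2)),
    }

# Hypothetical answers from four participants, for illustration only.
print(score_comprehension([(40, 0), (40, 20), (20, 40), (40, 0)]))
```

Applied to the reported counts, inclusion–exclusion implies that 69 + 205 − 216 = 58 participants missed both questions, and excluding all 216 leaves 751 − 216 = 535 participants, which matches the error degrees of freedom of 531 (= 535 − 4 cells) reported for the analyses with non-comprehenders excluded.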

Results

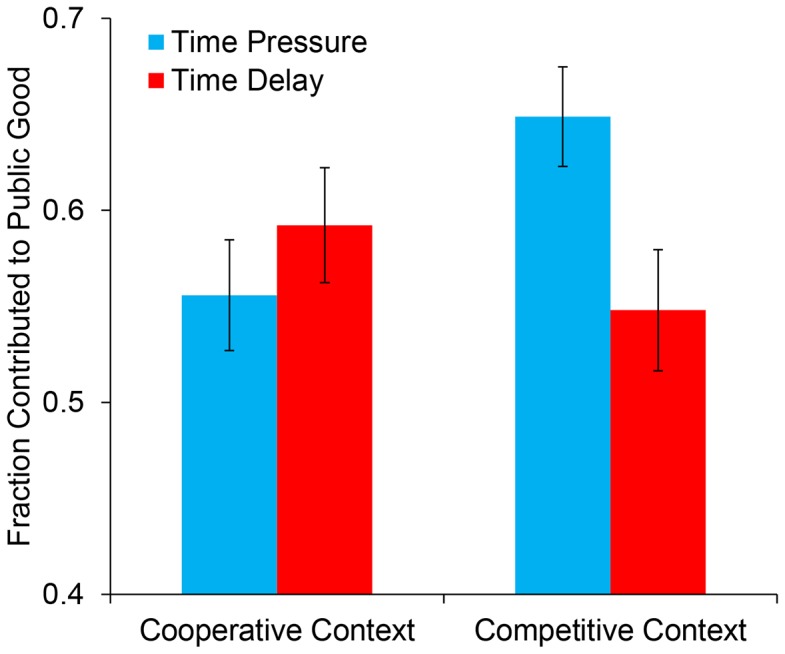

To assess the effects of our manipulations on participants' levels of cooperation, we submitted PGG contributions to a 2 (time constraint or time delay) × 2 (competitive or cooperative frame) analysis of variance (ANOVA). This analysis revealed a significant interaction between time constraint and contextual framing, F(1,747) = 5.605, p = .018 (see Fig. 1; with non-comprehenders excluded: F(1,531) = 5.118, p = .024).

Figure 1. Average cooperation (% of endowment contributed to public good) by time constraint and social context in our experiment.

Error bars indicate standard errors of the mean.

Examining the simple effects, we find that in the competitive framing, participants contributed significantly more to the public good when under time pressure than when forced to deliberate, F(1,747) = 6.075, p = .014 (with non-comprehenders excluded: F(1,531) = 6.499, p = .011). Conversely, there was no significant simple effect of time constraint in the cooperative framing, F(1,747) = .789, p = .375 (with non-comprehenders excluded: F(1,531) = .378, p = .539).
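Because every effect in a 2 × 2 between-subjects design has a single degree of freedom, the interaction and the simple effects can each be written as a contrast on the four cell means and tested against the pooled within-cell error. That the omnibus and simple-effect tests above share the error df of 747 (= 751 − 4 cells) is consistent with this formulation. The sketch below assumes this standard cell-means approach (which, for unequal cell sizes, gives the Type III tests); we do not know which software produced the reported statistics, and the cell labels and toy data are hypothetical, not the study's data.

```python
from statistics import mean

def contrast_f(cells, weights):
    """Single-df contrast F test on cell means using the pooled within-cell error.

    cells:   dict mapping a cell label to a list of observations
    weights: dict mapping cell labels to contrast weights (missing labels get 0)
    Returns (F, (1, df_error)); a p-value could be obtained separately,
    e.g. with scipy.stats.f.sf(F, 1, df_error).
    """
    n_total = sum(len(obs) for obs in cells.values())
    df_error = n_total - len(cells)
    # Pooled within-cell (error) mean square, as in the full factorial ANOVA.
    sse = sum(sum((x - mean(obs)) ** 2 for x in obs) for obs in cells.values())
    mse = sse / df_error
    # Contrast estimate and its sum of squares.
    estimate = sum(weights.get(c, 0) * mean(obs) for c, obs in cells.items())
    scale = sum(weights.get(c, 0) ** 2 / len(obs) for c, obs in cells.items())
    return (estimate ** 2 / scale) / mse, (1, df_error)

# Hypothetical toy data (% of endowment contributed), NOT the study's data.
cells = {
    ("pressure", "competitive"): [60, 80, 70],
    ("delay", "competitive"): [40, 50, 45],
    ("pressure", "cooperative"): [60, 70, 65],
    ("delay", "cooperative"): [60, 70, 65],
}
interaction = {("pressure", "competitive"): 1, ("delay", "competitive"): -1,
               ("pressure", "cooperative"): -1, ("delay", "cooperative"): 1}
simple_competitive = {("pressure", "competitive"): 1, ("delay", "competitive"): -1}
simple_cooperative = {("pressure", "cooperative"): 1, ("delay", "cooperative"): -1}

for name, w in [("interaction", interaction), ("competitive", simple_competitive),
                ("cooperative", simple_cooperative)]:
    F, df = contrast_f(cells, w)
    print(name, round(F, 3), df)
```

Using the pooled error term for the simple effects, rather than a separate t-test within each frame, keeps every test on the same error df as the omnibus model.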

These results successfully replicate the simple effects found in RNW. Moreover, we find a significant interaction between time pressure and framing, whereas RNW found only a non-significant trend.

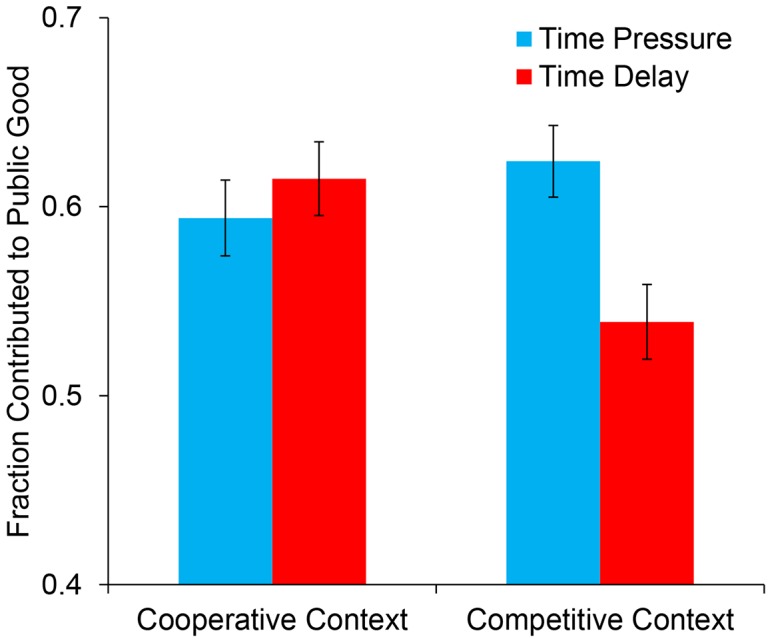

To further assess the robustness of our result, we aggregated our data with the N = 899 observations from RNW Study 2. We conducted a 2 (study: current or RNW) × 2 (time constraint or time delay) × 2 (competitive or cooperative frame) ANOVA. This analysis again revealed a significant interaction between time constraint and contextual framing, F(1,1642) = 7.04, p = .008 (see Fig. 2; with non-comprehenders excluded: F(1,1222) = 4.127, p = .042). Importantly, this interaction was not qualified by study (three-way interaction: F(1,1642) = .699, p = .403; with non-comprehenders excluded: F(1,1222) = 1.88, p = .17).
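In the pooled 2 × 2 × 2 analysis, the time × frame interaction (averaged over study) and the three-way study × time × frame interaction are again single-df contrasts on cell means, with weights formed as products of ±1 codes for the factors involved. The error df of 1642 (= 751 + 899 − 8 cells) is consistent with a pooled within-cell error term. The sketch assumes this cell-means formulation; the factor codes, helper names, and toy data are hypothetical, and the toy data copy one pattern into both studies so that the three-way interaction is exactly zero.

```python
from itertools import product
from statistics import mean

CODES = {"study": {"current": 1, "rnw": -1},
         "time": {"pressure": 1, "delay": -1},
         "frame": {"competitive": 1, "cooperative": -1}}
FACTORS = ("study", "time", "frame")

def effect_weights(effect):
    """Cell-mean contrast weights for an effect in a 2 x 2 x 2 design.

    `effect` names the factors involved, e.g. ("time", "frame") for the two-way
    interaction averaged over study; weights are products of +1/-1 codes.
    """
    weights = {}
    for levels in product(*(CODES[f] for f in FACTORS)):
        w = 1
        for factor, level in zip(FACTORS, levels):
            if factor in effect:
                w *= CODES[factor][level]
        weights[levels] = w
    return weights

def contrast_f(cells, weights):
    """Single-df contrast F using pooled within-cell error (df = N - number of cells)."""
    df_error = sum(map(len, cells.values())) - len(cells)
    mse = sum(sum((x - mean(v)) ** 2 for x in v) for v in cells.values()) / df_error
    est = sum(weights[c] * mean(v) for c, v in cells.items())
    scale = sum(weights[c] ** 2 / len(v) for c, v in cells.items())
    return est ** 2 / scale / mse, (1, df_error)

# Hypothetical toy data, identical in both "studies"; NOT the real data.
base = {("pressure", "competitive"): [60, 80, 70], ("delay", "competitive"): [40, 50, 45],
        ("pressure", "cooperative"): [60, 70, 65], ("delay", "cooperative"): [60, 70, 65]}
cells = {(study, t, f): obs for study in ("current", "rnw") for (t, f), obs in base.items()}

two_way = contrast_f(cells, effect_weights(("time", "frame")))
three_way = contrast_f(cells, effect_weights(("study", "time", "frame")))
print(two_way, three_way)
```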

Figure 2. Average cooperation (% of endowment contributed to public good) by time constraint and social context when aggregating data from our experiment and from Rand, Newman, & Wurzbacher (2014).

Error bars indicate standard errors of the mean.

In the competitive framing, participants once again contributed significantly more to the public good when under time pressure than when forced to deliberate, F(1,1642) = 9.152, p = .003 (with non-comprehenders excluded: F(1,1222) = 6.848, p = .009). Conversely, there was no significant simple effect of time constraint in the cooperative framing, F(1,1642) = .533, p = .465 (with non-comprehenders excluded: F(1,1222) = .051, p = .821).

These results thus clarify how framing moderates the effect of time pressure: the interaction between frame and time pressure is significant, and time pressure has a positive effect on cooperation only in the competitive framing, in which the context presumably made the decision feel less familiar.

Discussion

Using a time constraint manipulation, we replicate RNW's finding that, in a Public Goods Game framed as a competition, participants were more inclined to cooperate with others when under time pressure than when asked to deliberate and think carefully about their decision. These findings thus suggest that the effects of intuitive cooperation demonstrated in previous research [40]–[43] are not merely about obeying the social norms dictated by the situation or about participants assuming that cooperation is what is implicitly expected or required of them in the experimental context. Even when the interaction was specifically framed as a competition, and the only way to win that competition was by not contributing to a public good, participants were nonetheless more inclined to cooperate when forced to make their decision quickly rather than deliberately.

These results also suggest that the intuitive cooperation effect is the result of a broad over-generalization, in that it was still observed in a setting where cooperation was less favorable. This is inconsistent with the idea that different social defaults exist for different scenarios, such that different frames would elicit different heuristic responses matched to the frame. On the contrary, the intuitive response in the competitively framed interaction was much more cooperative than the intuitive response in the cooperatively framed interaction. We also note that the time pressure effect we observe cannot be explained by increasing randomness or errors, as contribution rates are further from 50% (chance) and closer to 100% under time pressure than under time delay.
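The argument against a noise explanation can be made concrete with a simple mixture model. It is a sketch under an explicit assumption: noisy decisions (uniformly random choices over 0, 2, ..., 40 cents, or leaving the slider at its 50% default) average 50% of the endowment. Under that assumption, mixing any share of noise into decisions can only pull the mean contribution toward 50%, never push it toward 100%. The function name is hypothetical.

```python
def mean_with_noise(true_mean_pct, noise_share, noise_mean_pct=50.0):
    """Expected mean contribution (% of endowment) when a share of decisions is noise.

    Assumption: noisy decisions average 50%, as uniformly random choices over
    0, 2, ..., 40 cents (mean 20 cents) or an untouched 50% slider would.
    """
    if not 0 <= noise_share <= 1:
        raise ValueError("noise_share must lie in [0, 1]")
    return (1 - noise_share) * true_mean_pct + noise_share * noise_mean_pct

# Distance from 50% shrinks by the factor (1 - noise_share), whatever the true mean.
for true_mean in (30, 70, 90):
    print(true_mean, [round(mean_with_noise(true_mean, e), 1) for e in (0.0, 0.25, 0.5)])
```

Since |mean_with_noise(m, e) − 50| = (1 − e)·|m − 50|, a manipulation that merely added noise would move contributions toward 50%, not above the level observed under time delay.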

The fact that we do not observe an effect of time pressure in the baseline, cooperatively framed condition is surprising from a ‘different heuristics for different situations’ perspective, but consistent with recent evidence regarding the ability of prior experience with economic games to undermine cooperative intuitions [40]–[42]. It is noteworthy, then, that in the control condition of our study (the condition most likely to match the standard presentation of PGGs on MTurk, and thus most likely to be familiar to experienced turkers) we find no evidence for an effect of time constraint on participants' contributions. Yet only a few small changes to the wording of the instructions and presentation of the game in our competitive framing condition appear to have been enough to restore the effect of time pressure on cooperation. If this analysis is correct, even small changes to the presentation of standard economic games (even those that might lead to an overall decrease in cooperative behavior) should result in larger effects of time pressure on decisions to cooperate.

Supporting Information

Material S1. Raw data. (CSV)

Acknowledgments

We thank Owen Wurzbacher for assistance in running the experiments.

Data Availability

The authors confirm that all data underlying the findings are fully available without restriction. All data and code are available on David Rand's personal website, www.DaveRand.org.

Funding Statement

Funding was graciously provided by the John Templeton Foundation. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Hamilton WD (1964) The genetical evolution of social behaviour. I. J Theor Biol 7:1–16. [DOI] [PubMed] [Google Scholar]

- 2. Trivers R (1971) The evolution of reciprocal altruism. Quarterly Review of Biology 46:35–57. [Google Scholar]

- 3. Axelrod R, Hamilton WD (1981) The evolution of cooperation. Science 211:1390–1396. [DOI] [PubMed] [Google Scholar]

- 4. Fudenberg D, Maskin ES (1990) Evolution and cooperation in noisy repeated games. American Economic Review 80:274–279. [Google Scholar]

- 5. Nowak MA, May RM (1992) Evolutionary games and spatial chaos. Nature 359:826–829. [Google Scholar]

- 6. Nowak MA, Sigmund K (1992) Tit for tat in heterogeneous populations. Nature 355:250–253. [Google Scholar]

- 7. Milinski M, Semmann D, Krambeck HJ (2002) Reputation helps solve the 'tragedy of the commons'. Nature 415:424–426. [DOI] [PubMed] [Google Scholar]

- 8.Bowles S, Gintis H (2003) Origins of human cooperation. Genetic and cultural evolution of cooperation: 429–443.

- 9. Boyd R, Gintis H, Bowles S, Richerson PJ (2003) The evolution of altruistic punishment. Proc Natl Acad Sci USA 100:3531–3535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Henrich J, Boyd R, Bowles S, Camerer C, Fehr E, et al. (2005) “Economic man” in cross-cultural perspective: Behavioral experiments in 15 small-scale societies. Behavioral and brain science 28:795–855. [DOI] [PubMed] [Google Scholar]

- 11. Bartlett MY, DeSteno D (2006) Gratitude and Prosocial Behavior: Helping When It Costs You. Psychological Science 17:319–325. [DOI] [PubMed] [Google Scholar]

- 12. Rockenbach B, Milinski M (2006) The efficient interaction of indirect reciprocity and costly punishment. Nature 444:718–723. [DOI] [PubMed] [Google Scholar]

- 13. Nowak MA (2006) Five rules for the evolution of cooperation. Science 314:1560–1563. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Herrmann B, Thoni C, Gächter S (2008) Antisocial punishment across societies. Science 319:1362–1367. [DOI] [PubMed] [Google Scholar]

- 15. Fu F, Hauert C, Nowak MA, Wang L (2008) Reputation-based partner choice promotes cooperation in social networks. Physical Review E 78:026117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Cushman F, Macindoe O (2009) The coevolution of punishment and prosociality among learning agents.

- 17. Helbing D, Szolnoki A, Perc M, Szabó G (2010) Evolutionary Establishment of Moral and Double Moral Standards through Spatial Interactions. PLOS Comput Biol 6:e1000758. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Perc M, Szolnoki A (2010) Coevolutionary games–A mini review. Biosystems 99:109–125. [DOI] [PubMed] [Google Scholar]

- 19.Sigmund K (2010) The calculus of selfishness. Princeton: Princeton Univ Press.

- 20. Rand DG, Arbesman S, Christakis NA (2011) Dynamic social networks promote cooperation in experiments with humans. Proceedings of the National Academy of Sciences 108:19193–19198. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Apicella CL, Marlowe FW, Fowler JH, Christakis NA (2012) Social networks and cooperation in hunter-gatherers. Nature 481:497–501. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Fu F, Tarnita CE, Christakis NA, Wang L, Rand DG, et al. (2012) Evolution of in-group favoritism. Sci Rep 2:460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Manapat ML, Nowak MA, Rand DG (2012) Information, irrationality and the evolution of trust. Journal of Economic Behavior and Organization [Google Scholar]

- 24. Rand DG, Nowak MA (2013) Human Cooperation. Trends in Cognitive Sciences 17:413–425. [DOI] [PubMed] [Google Scholar]

- 25. Crockett MJ (2013) Models of morality. Trends in cognitive sciences 17:363–366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Yoeli E, Hoffman M, Rand DG, Nowak MA (2013) Powering up with indirect reciprocity in a large-scale field experiment. Proceedings of the National Academy of Sciences 110:10424–10429. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Hauser OP, Rand DG, Peysakhovich A, Nowak MA (2014) Cooperating with the future. Nature 511:220–223. [DOI] [PubMed] [Google Scholar]

- 28.Jordan JJ, Peysakhovich A, Rand DG (In press) Why we cooperate. In: Decety J, Wheatley T, editors. The Moral Brain: Multidisciplinary Perspectives. Cambridge, MA: MIT Press.

- 29. Piff P, Stancato D, Cote S, Mendoza-Denton R, Keltner D (2012) Higher social class predicts increased unethical behavior. Proceedings of the National Academy of Sciences 109:4086–4091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Jin Q, Wang L, Xia C-Y, Wang Z (2014) Spontaneous Symmetry Breaking in Interdependent Networked Game. Sci Rep 4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Boccaletti S, Bianconi G, Criado R, del Genio CI, Gómez-Gardeñes J, et al. (2014) The structure and dynamics of multilayer networks. Physics Reports 544:1–122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Rand DG, Nowak MA, Fowler JH, Christakis NA (2014) Static Network Structure Can Stabilize Human Cooperation. Proceedings of the National Academy of Sciences [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Sloman SA (1996) The empirical case for two systems of reasoning. Psychological Bulletin 119:3. [Google Scholar]

- 34.Kahneman D (2011) Thinking, Fast and Slow. New York, NY: Farrar, Straus and Giroux.

- 35. Kahneman D (2003) A perspective on judgment and choice: Mapping bounded rationality. American Psychologist 58:697–720. [DOI] [PubMed] [Google Scholar]

- 36. Frederick S (2005) Cognitive Reflection and Decision Making. The Journal of Economic Perspectives 19:25–42. [Google Scholar]

- 37. Stanovich KE, West RF (1998) Individual Differences in Rational Thought. Journal of Experimental Psychology: General 127:161–188. [Google Scholar]

- 38. Miller EK, Cohen JD (2001) An integrative theory of prefrontal cortex function. Annual Review of Neuroscience 24:167–202. [DOI] [PubMed] [Google Scholar]

- 39.Chaiken S, Trope Y (1999) Dual-process theories in social psychology. New York: Guilford Press.

- 40. Rand DG, Greene JD, Nowak MA (2012) Spontaneous giving and calculated greed. Nature 489:427–430.

- 41. Rand DG, Peysakhovich A, Kraft-Todd GT, Newman GE, Wurzbacher O, et al. (2014) Social Heuristics Shape Intuitive Cooperation. Nature Communications 5:3677.

- 42. Rand DG, Kraft-Todd GT (2014) Reflection Does Not Undermine Self-Interested Prosociality. Frontiers in Behavioral Neuroscience 8:300.

- 43. Rand DG, Newman GE, Wurzbacher O (2014) Social context and the dynamics of cooperative choice. Journal of Behavioral Decision Making doi:10.1002/bdm.1837.

- 44. Schulz JF, Fischbacher U, Thöni C, Utikal V (2014) Affect and fairness: Dictator games under cognitive load. Journal of Economic Psychology 41:77–87.

- 45. Cornelissen G, Dewitte S, Warlop L (2011) Are Social Value Orientations Expressed Automatically? Decision Making in the Dictator Game. Personality and Social Psychology Bulletin 37:1080–1090.

- 46. Roch SG, Lane JAS, Samuelson CD, Allison ST, Dent JL (2000) Cognitive Load and the Equality Heuristic: A Two-Stage Model of Resource Overconsumption in Small Groups. Organizational Behavior and Human Decision Processes 83:185–212.

- 47. Kovarik J (2009) Giving it now or later: Altruism and discounting. Economics Letters 102:152–154.

- 48. Dreber A, Fudenberg D, Levine DK, Rand DG (2014) Altruism and Self-Control. Available at SSRN.

- 49. Ruff CC, Ugazio G, Fehr E (2013) Changing Social Norm Compliance with Noninvasive Brain Stimulation. Science 342:482–484.

- 50. Tinghög G, Andersson D, Bonn C, Böttiger H, Josephson C, et al. (2013) Intuition and cooperation reconsidered. Nature 497:E1–E2.

- 51. Verkoeijen PPJL, Bouwmeester S (2014) Does Intuition Cause Cooperation? PLOS ONE 9:e96654.

- 52. Hauge KE, Brekke KA, Johansson L-O, Johansson-Stenman O, Svedsäter H (2014) Keeping others in our mind or in our heart? Distribution games under cognitive load. University of Gothenburg Working Papers in Economics.

- 53. Kinnunen SP, Windmann S (2013) Dual-processing altruism. Frontiers in Psychology 4:1–8.

- 54. Piovesan M, Wengström E (2009) Fast or fair? A study of response times. Economics Letters 105:193–196.

- 55. Fiedler S, Glöckner A, Nicklisch A, Dickert S (2013) Social Value Orientation and information search in social dilemmas: An eye-tracking analysis. Organizational Behavior and Human Decision Processes 120:272–284.

- 56. Rubinstein A (2007) Instinctive and cognitive reasoning: a study of response times. The Economic Journal 117:1243–1259.

- 57. Rubinstein A (2013) Response time and decision making: An experimental study. Judgment and Decision Making 8:540–551.

- 58. Cappelen AW, Nielsen UH, Tungodden B, Tyran JR, Wengström E (2014) Fairness is intuitive. Available: http://ssrn.com/abstract=2430774.

- 59. Evans AM, Dillon KD, Rand DG (2014) Reaction Times and Reflection in Social Dilemmas: Extreme Responses are Fast, But Not Intuitive. Available: http://ssrn.com/abstract=2436750.

- 60. Balliet D, Joireman J (2010) Ego depletion reduces proselfs' concern with the well-being of others. Group Processes & Intergroup Relations 13:227–239.

- 61. DeWall CN, Baumeister RF, Gailliot MT, Maner JK (2008) Depletion Makes the Heart Grow Less Helpful: Helping as a Function of Self-Regulatory Energy and Genetic Relatedness. Personality and Social Psychology Bulletin 34:1653–1662.

- 62. Xu H, Bègue L, Bushman BJ (2012) Too fatigued to care: Ego depletion, guilt, and prosocial behavior. Journal of Experimental Social Psychology 48:1183–1186.

- 63. Halali E, Bereby-Meyer Y, Ockenfels A (2013) Is it all about the self? The effect of self-control depletion on ultimatum game proposers. Frontiers in Human Neuroscience 7:240.

- 64. Engel C, Rand DG (2014) What does “clean” really mean? The implicit framing of decontextualized experiments. Economics Letters 122:386–389.

- 65. Peysakhovich A, Rand DG (2013) Habits of Virtue: Creating Norms of Cooperation and Defection in the Laboratory. Available: http://ssrn.com/abstract=2294242.

- 66. Righetti F, Finkenauer C, Finkel EJ (2013) Low Self-Control Promotes the Willingness to Sacrifice in Close Relationships. Psychological Science.

- 67. Rand DG, Epstein ZG (2014) Risking Your Life Without a Second Thought: Intuitive Decision-Making and Extreme Altruism. PLOS ONE 9:e109687.

- 68. Rand DG, Kraft-Todd GT, Gruber J (2014) Positive Emotion and (Dis)Inhibition Interact to Predict Cooperative Behavior. Available: http://ssrn.com/abstract=2429787.

- 69. Kieslich PJ, Hilbig BE (2014) Cognitive conflict in social dilemmas: An analysis of response dynamics. Judgment and Decision Making 9:510–522.

- 70. Bowles S, Gintis H (2002) Prosocial emotions. In: Blume LE, Durlauf SN, editors. The Economy as an Evolving Complex System III. pp. 339–364.

- 71. Chudek M, Henrich J (2011) Culture-gene coevolution, norm-psychology and the emergence of human prosociality. Trends in Cognitive Sciences 15:218–226.

- 72. Van Lange PA, De Bruin E, Otten W, Joireman JA (1997) Development of prosocial, individualistic, and competitive orientations: theory and preliminary evidence. Journal of Personality and Social Psychology 73:733.

- 73. Kiyonari T, Tanida S, Yamagishi T (2000) Social exchange and reciprocity: confusion or a heuristic? Evolution and Human Behavior 21:411–427.

- 74. Yamagishi T (2007) The social exchange heuristic: A psychological mechanism that makes a system of generalized exchange self-sustaining. In: Radford M, Ohnuma S, Yamagishi T, editors. Cultural and ecological foundation of the mind. Sapporo: Hokkaido University Press. pp. 11–37.

- 75. Delton AW, Krasnow MM, Cosmides L, Tooby J (2011) Evolution of direct reciprocity under uncertainty can explain human generosity in one-shot encounters. Proceedings of the National Academy of Sciences 108:13335–13340.

- 76. Fehr E, Gächter S (2000) Cooperation and punishment in public goods experiments. American Economic Review 90:980–994.

- 77. Liberman V, Samuels SM, Ross L (2004) The Name of the Game: Predictive Power of Reputations versus Situational Labels in Determining Prisoner's Dilemma Game Moves. Personality and Social Psychology Bulletin 30:1175–1185.

- 78. Rand DG, Greene JD, Nowak MA (2013) Rand et al. reply. Nature 497:E2–E3.

- 79. Amir O, Rand DG, Gal YK (2012) Economic Games on the Internet: The Effect of $1 Stakes. PLOS ONE 7:e31461.

- 80. Horton JJ, Rand DG, Zeckhauser RJ (2011) The Online Laboratory: Conducting Experiments in a Real Labor Market. Experimental Economics 14:399–425.

- 81. Rand DG (2012) The promise of Mechanical Turk: How online labor markets can help theorists run behavioral experiments. Journal of Theoretical Biology 299:172–179.

- 82. Buhrmester MD, Kwang T, Gosling SD (2011) Amazon's Mechanical Turk: A New Source of Inexpensive, Yet High-Quality, Data? Perspectives on Psychological Science 6:3–5.

- 83. Paolacci G, Chandler J, Ipeirotis PG (2010) Running Experiments on Amazon Mechanical Turk. Judgment and Decision Making 5:411–419.


Supplementary Materials

(CSV)

Data Availability Statement

The authors confirm that all data underlying the findings are fully available without restriction. All data and code are available on David Rand's personal website, www.DaveRand.org.