Abstract

Previous studies in infants have shown that face-sensitive components of the ongoing EEG (the event-related potential, or ERP) are larger in amplitude to negative emotions (e.g., fear, anger) versus positive emotions (e.g., happy). However, it is still unclear whether the negative emotions linked with the face or the negative emotions alone contribute to these amplitude differences. We simultaneously recorded infant looking behaviors (via eye-tracking) and face-sensitive ERPs while 7-month-old infants viewed human faces or animals displaying happy, fear, or angry expressions. We observed that the amplitude of the N290 was greater (i.e., more negative) to angry animals compared to happy or fearful animals; no such differences were obtained for human faces. Eye-tracking data highlighted the importance of the eye region in processing emotional human faces. Infants that spent more time looking to the eye region of human faces showing fearful or angry expressions had greater N290 or P400 amplitudes, respectively.

Keywords: face processing, infant development, event-related potentials, eye tracking, emotions

1. Introduction

Facial expressions are a powerful form of non-verbal communication that convey information about the internal states of others; moreover, the ability to discriminate between emotional expressions is an important tool in social interactions, particularly before the onset of language. While the ability to recognize and understand emotional expressions develops slowly, discrimination between different emotions progresses rapidly over the first year (Leppänen & Nelson 2006; Righi & Nelson, 2013). There is substantial evidence from looking-time studies that by 12 months, infants are able to discriminate a range of facial expressions, particularly positive expressions (e.g., happy) from negative expressions (e.g., fear; de Haan & Nelson, 1998; Nelson, 1987). However, substantially less is known about the neural bases of this ability. The focus of the present study was to examine the neural correlates of the infant’s ability to discriminate different facial expressions.

The most common method by which investigators have examined the neural correlates of perceptual discrimination has been the recording of event-related potentials (ERPs). Previous studies have identified two components of the ERP in infants that are particularly sensitive to processing and interpreting faces: the N290, a negative deflection occurring approximately 290ms after stimulus onset; and the P400, a positive peak occurring around 400ms after stimulus onset. Both components are maximal over occipito-temporal scalp regions (de Haan, Johnson, & Halit, 2003). The N290 and P400 reflect face-sensitive processes similar to the adult N170. Like the N170, the N290 has a greater peak amplitude to faces than to non-face or inverted-face stimuli (de Haan, Pascalis, & Johnson, 2002; Halit, Csibra, Volein, & Johnson, 2004; Halit, de Haan, & Johnson, 2003), and the P400 peaks earlier to faces than to objects (de Haan & Nelson, 1999) and has greater amplitude to upright than to inverted faces (de Haan et al., 2002) between 6 and 12 months.

Research in children and adults has shown that the type of emotional expression modulates the amplitude of the N170; fearful faces evoke a greater amplitude than other emotional expressions, including neutral faces, between 4 and 15 years (Batty & Taylor, 2006) and in adults (Batty & Taylor, 2003; Eimer & Holmes, 2002; Leppänen, Moulson, Vogel-Farley, & Nelson, 2007; Rigato, Farroni, & Johnson, 2010). The evidence supporting the effects of emotional expressions on the amplitude and latency of the N290 and P400 in infants is limited to a small number of studies that varied in the emotion exemplars used, their presentation of stimuli, and the age of the infants.

Leppänen et al. (2007) contrasted the neural responses of 7-month-old infants to a female model expressing a happy, fearful, or neutral face. The N290 in this sample did not vary by emotion in amplitude or peak latency, whereas the P400 had greater amplitude to a fearful face compared to both the happy and neutral expressions. In another study of 7-month-olds, Kobiella, Grossman, Reid, and Striano (2008) showed infants fearful and angry expressions. Consistent with the findings of Leppänen et al., the P400 was greater for the fearful face compared to the angry face. Additionally, they observed a greater (i.e., more negative) N290 to angry compared to fearful faces. Taken together, these studies suggest that the P400 serves as an index of discrimination between emotion categories.

Indeed, this hypothesis was tested in a study of 7-month-olds observing morphed faces along a happy – sad continuum. Leppänen, Richmond, Vogel-Farley, Moulson, & Nelson (2009) familiarized infants to a model exhibiting either a happy (within) or sad (between) expression. Then, they presented infants with a novel model expressing a different intensity of happy; importantly, the difference in intensity between the face used for familiarization and the subsequent novel face was equated to approximately 30%. The results indicated that the P400 was greater for the novel face when infants viewed the between-emotion condition. However, when collapsing across the within- and between- groups, there were no P400 differences between the familiar and novel faces. This finding suggests that the difference between familiar and novel faces in the between-emotion condition was not due to the novel identity of the face; instead, it was due to the novel emotion. Thus, the difference between the P400s was a marker of infants’ categorization of emotion and not of facial identities.

One question that has been asked is whether the discrimination of certain expressions is driven by particular facial features or regions of the face. Evidence in adults suggests that the eye region of the face is important for face processing (Bentin, Golland, Flevaris, Robertson, & Moscovitch, 2006; Schyns, Jentzsch, Johnson, Schweinberger, & Gosselin, 2003) and that features of the eyes, particularly for fear, preferentially activate different brain regions (Whalen, 1998). Infants show a preference for looking at the eye region in the first month (Haith, Bergman, & Moore, 1977) that may contribute to the rapid development of brain regions involved in processing eye information (Farroni, Johnson, & Csibra, 2004; Taylor, Edmonds, McCarthy, & Allison, 2001).

There is some evidence that the N290 and P400 are modulated by changes in the features of the face (Key, Stone, & Williams, 2009; Scott & Nelson, 2006). Key and colleagues presented 9-month-old infants with a visual oddball task in which infants viewed a standard face for 70% of trials. The remaining trials displayed either a face with the eye region replaced by the eyes of another model or the mouth region replaced by the mouth of another model. Changing the mouth region shortened the latency of the N290 but did not affect the amplitude of either the N290 or P400; however, when the infants saw the faces with the different model’s eyes, they exhibited a larger N290 peak and a decrease in the P400 amplitude. Further, both of these components had a shorter latency when compared to the standard face. These findings suggest that at 9 months, infants are more sensitive to changes in the eye region of the face than in the mouth region.

This effect is also modulated by emotion. In a study manipulating whether the gaze of a happy or fearful expression affects face-processing ERPs, Rigato et al. (2010) presented 4-month-olds with happy or fearful expressions with either a direct or averted gaze. Interestingly, gaze direction did not affect the N290 or P400 for fearful faces, but when the gaze of the happy expression was directed towards the infant, the N290 peaked earlier, suggesting that infants are tuned to the direction of the gaze of the model displaying the emotion. The lack of findings in the fearful condition was likely a result of the young age of the infants, as attentional bias to fearful faces emerges between 5 and 7 months (Peltola, Leppänen, Mäki, & Hietanen, 2009).

In the studies reported above, the stimuli presented to the infants consisted entirely of human expressions of emotion. An important question remains: are there higher-order neural systems that are capable of extracting emotion information in general, regardless of the medium through which the emotion is expressed? For example, would infants be able to recognize the emotion “fear” if displayed by a human face as easily as if displayed by an animal face, or a scene that represents emotion? To examine this question, we presented infants with pictures of animals rated as expressing the emotions of happy, anger, and fear. By comparing ERP responses to happy, fearful, and angry expressions between human faces and animals, we can begin to tease apart the contributions of the human face and each emotion to the face-processing ERPs.

In addition to understanding the contribution of emotion to the morphology of the N290 and P400, we sought to examine how individual looking behavior impacts the neural responses to faces. The evidence presented above demonstrates the importance of the eye region in processing facial stimuli; however, the evidence to date relied on altering the eye region of the stimuli. Here we recorded eye position data during the ERP task to explore how individual looking behavior may modify the neural response to emotion faces.

2. Methods

2.1 Participants

Typically developing 7-month-old infants were recruited from the greater Boston area to participate in a longitudinal study of emotion processing, temperament and genetics (n = 55). All infants were born full-term and had no known pre- or peri- natal complications (M age = 214 days, SD = 4.76, range 205–221). The experimental protocol was approved by the local IRB.

From the study sample, 34 participants (12 females) were included in the final sample for analysis. The additional 21 infants were excluded due to excessive eye and/or body movements that resulted in recording artifacts (n = 18), EEG net refusal (n = 2) or fussiness that resulted in too few trials recorded (n = 1).

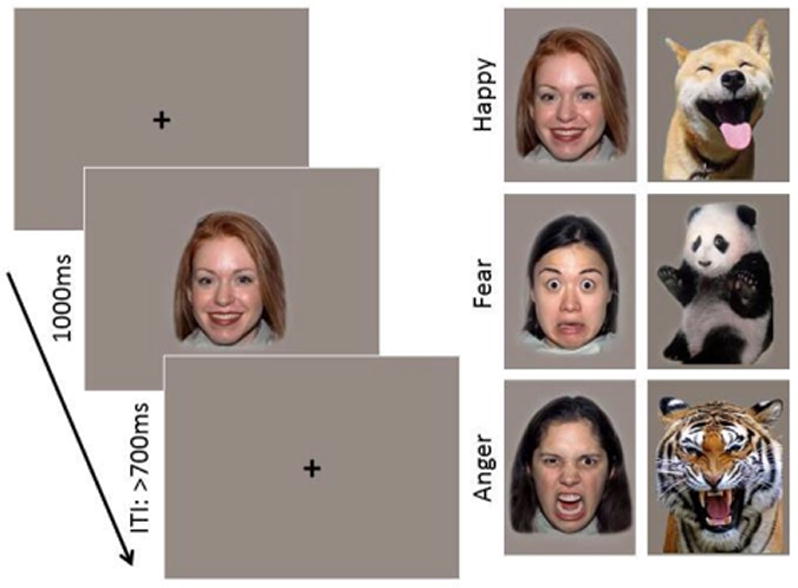

2.2 Stimuli

Stimuli were color images of either female faces or animals exhibiting happy, angry and fearful expressions (Figure 1). Human faces were drawn from the NimStim set of images (Tottenham et al., 2009). Previous research has demonstrated that infants are sensitive to race and gender information in faces during their first year of life; therefore, the race of the faces shown was matched to the race of the infant’s mother (Vogel, Monesson, & Scott, 2012). The animal stimulus set was drawn from a larger set of images selected from an extensive search using Google Images of animal faces or whole bodies depicting an anthropomorphic emotion. Images were rated on perceived emotion and intensity by a group of 12 female adults (M = 31.58 years, SD = 4.32). On average, anger animal images were rated as angry by 96.67% (SD = .06); fear animals as fearful by 90.83% (SD = .09); and happy animals as happy by 98.33% (SD = .04) of the participants. Intensity was rated on a 5-point Likert scale from 1 (neutral) to 5 (very intense). Average intensity ratings were M = 4.23 (SD = .29) for anger, M = 3.91 (SD = .78) for fear, and M = 3.92 (SD = .38) for happy animal images. Two final image sets were selected in which there were no significant differences on any of the rated dimensions. Stimulus sets were counterbalanced across participants. The animal stimulus set is available upon request from the authors.

Figure 1.

Task design and examples of face and animal stimuli expressing happy, fearful, and angry expressions.

For each experiment there were a total of 150 trials, 50 of each emotional category (happy, anger, fear). Each category consisted of 5 individual exemplars; therefore, each stimulus could repeat a maximum of 10 times. The stimuli were presented on a gray background; animals subtended 20.3° × 14.3° of visual angle and human faces subtended 14.3° × 12.2° of visual angle.
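The reported visual angles can be related to on-screen stimulus size with the standard relation size = 2 × d × tan(θ/2). The sketch below is illustrative only: the function names are hypothetical, and it assumes the approximate 65 cm viewing distance described in the experimental procedure, so the resulting sizes are estimates rather than values reported by the authors.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by `size_cm` at `distance_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Assumption: infants sat approximately 65 cm from the screen.
VIEWING_DISTANCE_CM = 65.0

# Angles reported for the two stimulus types (first x second dimension).
animal_size = tuple(size_from_visual_angle(a, VIEWING_DISTANCE_CM) for a in (20.3, 14.3))
face_size = tuple(size_from_visual_angle(a, VIEWING_DISTANCE_CM) for a in (14.3, 12.2))

print("animal stimulus extent (cm):", [round(v, 1) for v in animal_size])
print("face stimulus extent (cm):", [round(v, 1) for v in face_size])
```

Because infants were seated on a parent's lap, the true viewing distance would vary from trial to trial, so these sizes are best read as approximations.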

2.3 Experimental Procedure

Testing took place in an electrically-shielded and sound-attenuated room with low lighting. Infants were seated on a parent’s lap approximately 65 cm from a Tobii corneal reflection eye tracker. Continuous electroencephalogram (EEG) and eye gaze location were simultaneously recorded during a passive viewing emotion discrimination task. The testing procedure began with running a 5-point calibration procedure two times in a row in order to ensure the eye tracker was adequately tracking gaze. The calibration sequence involved a red dot appearing in each of the four corners of the computer monitor and in the center of the screen. Experimenters aimed to successfully track 3 or more out of the 5 calibration points each time. Calibration was completed using Tobii Studio (Tobii Technology AB, Sweden). A keyboard piano was used to present sounds from speakers positioned on each side of the monitor in order to attract the infant’s attention to the calibration object.

During testing, image presentation was controlled by an experimenter in an adjacent room using E-Prime 2.0 (Psychology Software Tools, Pittsburgh, PA). The experimenter monitored the infant’s looking behavior using a video camera and only presented a stimulus if the infant was looking at the computer monitor. Half of the infants in the study saw human faces and half saw images of animals. Each image was presented for 1000ms, followed by a fixation cross that remained on the screen until the experimenter initiated the next trial with a minimum inter-stimulus interval of 700ms (Figure 1). A second experimenter was seated next to the infant throughout the test, maintaining the infant’s attention to the monitor. Testing continued until the maximum number of trials had been reached or the infant’s attention could no longer be maintained (M trials completed = 129, SD = 19.99, range = 85–150).

2.3.1 EEG Recording and Analysis

Continuous scalp EEG was recorded from a 128-channel HydroCel Geodesic Sensor Net¹ (Electrical Geodesics, Inc., Eugene, OR) that was connected to a NetAmps 300 amplifier (Electrical Geodesics, Inc., Eugene, OR) and referenced online to a single vertex electrode (Cz). Channel impedances were kept at or below 100 kΩ and signals were sampled at 500 Hz. EEG data were preprocessed offline using NetStation 4.5 (Electrical Geodesics, Inc., Eugene, OR).

The EEG signal was segmented to 1200ms post-stimulus onset, with a baseline period beginning 100ms prior to stimulus onset. Data segments were filtered using a .3–30 Hz bandpass filter and baseline-corrected using mean voltage during the 100ms pre-stimulus period. Automated artifact detection was applied to the segmented data in order to detect individual sensors that showed > 200 μV voltage changes within the segment period. The entire trial was excluded if more than 18 sensors (15%) overall had been rejected; this trial-rejection criterion is consistent with previous infant ERP studies (Luyster, Powell, Tager-Flusberg, & Nelson, 2014; Righi, Westerlund, Congdon, Troller-Renfree, & Nelson, 2014) and is based on greater movement evinced by infants compared to adults (where the threshold is generally set at 10%). On average, only 2 sensors (SD = 1.34) were rejected in our region of interest. Data segments were then inspected manually to confirm the results of the automatic artifact detection algorithms. Segments containing eye blinks, eye movements, or drift were also rejected. Bad segments identified by either procedure were excluded from further analysis. Of the remaining trials, individual channels containing artifact were replaced using spherical spline interpolation. Average waveforms were generated separately for each participant and within each emotional category, and data were re-referenced to the average reference. The mean number of trials per condition average was not different between the two stimulus groups or between the emotional categories (Faces: M happy trials = 20.39, SD = 7.92, range 10–37; M anger trials = 21.17, SD = 6.84, range 12–36; M fear trials = 20.50, SD = 7.85, range 9–36; Animals: M happy trials = 20.56, SD = 6.07, range 9–31; M anger trials = 21.31, SD = 4.94, range 13–29; M fear trials = 20.00, SD = 5.82, range 10–31).
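The baseline-correction and automated rejection steps described above could be sketched as follows. This is not the NetStation implementation: the function names are hypothetical, and treating a "voltage change" as the max-minus-min range within the segment is an assumption made for illustration. A segment is represented as a list of channels, each a list of samples in μV (650 samples for a 1300 ms segment at 500 Hz).

```python
def baseline_correct(samples, n_baseline=50):
    """Subtract the mean of the first `n_baseline` samples
    (100 ms at 500 Hz = 50 samples) from every sample of one channel."""
    baseline = sum(samples[:n_baseline]) / n_baseline
    return [v - baseline for v in samples]


def bad_sensors(segment, threshold_uv=200.0):
    """Indices of channels whose voltage range within the segment exceeds
    the threshold. Assumption: 'voltage change' is taken as max - min."""
    return [
        i for i, samples in enumerate(segment)
        if max(samples) - min(samples) > threshold_uv
    ]


def keep_trial(segment, max_bad=18, threshold_uv=200.0):
    """A trial is excluded when MORE than `max_bad` sensors are flagged."""
    return len(bad_sensors(segment, threshold_uv)) <= max_bad


# Synthetic illustration (made-up data, 124 recording channels).
flat = [0.0] * 650
noisy = [0.0] * 649 + [250.0]          # one 250 uV excursion
trial_kept = [noisy] * 18 + [flat] * 106
trial_dropped = [noisy] * 19 + [flat] * 105

print(keep_trial(trial_kept), keep_trial(trial_dropped))
```

In this sketch a trial with exactly 18 flagged sensors is retained, matching the "more than 18 sensors" wording; manual inspection and spline interpolation are not modeled.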

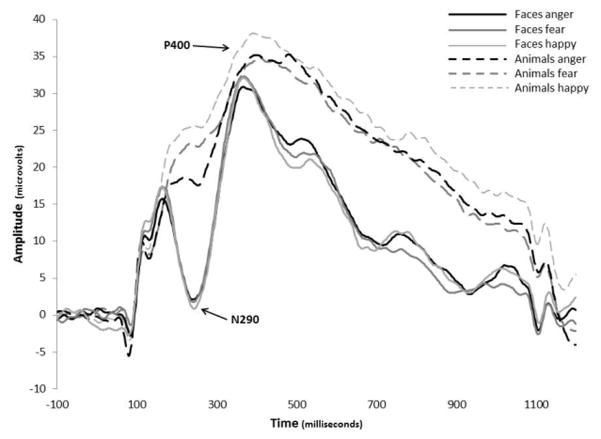

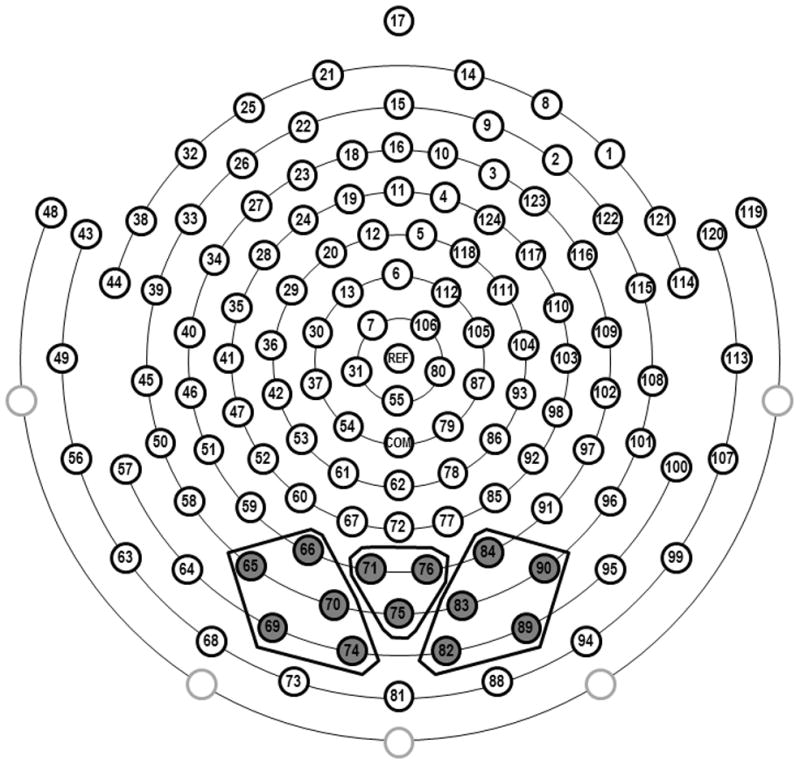

Inspection of the grand-averaged waveforms revealed two well-defined components that were prominent over occipito-temporal scalp: N290 and P400. Based on grand-averaged data and individual data, the N290 was analyzed within a time window of 200ms to 325ms and the P400 was analyzed within a time window of 325–500ms. Electrode groupings were selected based on previous research (e.g., de Haan et al., 2002; Leppänen et al., 2007; Halit et al., 2003; Rigato et al., 2010; Scott & Nelson, 2006), as well as on visual inspection of both the grand-averaged and individual waveforms. Thirteen occipital electrodes were identified and separated into regions of interest for the N290 and P400 (left: 65, 66, 69, 70, 74; midline: 71, 75, 76; right: 82, 83, 84, 89, 90). Figure 2 shows a schematic of the sensor net and the clusters of electrodes used in the analyses. Peak amplitude and latency were extracted for the N290; because the P400 had a less defined peak, its mean amplitude was computed instead.
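As an illustration of the two measures, the following sketch extracts the most negative point in the 200–325 ms window (N290 peak amplitude and latency) and the mean voltage in the 325–500 ms window (P400 mean amplitude) from a single averaged waveform. The function names are hypothetical, and the sketch assumes a 500 Hz waveform that starts 100 ms before stimulus onset; window edges that fall between samples are rounded down to the preceding sample, which is a simplification.

```python
SAMPLING_RATE_HZ = 500   # from the recording description
BASELINE_MS = 100        # waveform is assumed to start 100 ms before onset


def ms_to_index(t_ms: int) -> int:
    """Sample index for a post-stimulus time (rounded down to a sample)."""
    return (t_ms + BASELINE_MS) * SAMPLING_RATE_HZ // 1000


def index_to_ms(index: int) -> float:
    """Post-stimulus time in ms for a sample index."""
    return index * 1000 / SAMPLING_RATE_HZ - BASELINE_MS


def n290_peak(waveform, start_ms=200, end_ms=325):
    """Most negative amplitude in the window and its latency in ms."""
    lo, hi = ms_to_index(start_ms), ms_to_index(end_ms)
    window = waveform[lo:hi + 1]
    amplitude = min(window)
    return amplitude, index_to_ms(lo + window.index(amplitude))


def p400_mean(waveform, start_ms=325, end_ms=500):
    """Mean amplitude across the window."""
    lo, hi = ms_to_index(start_ms), ms_to_index(end_ms)
    window = waveform[lo:hi + 1]
    return sum(window) / len(window)


# Synthetic waveform (made-up values): a -5 uV dip at 290 ms and a
# 4 uV plateau across the P400 window.
waveform = [0.0] * 650
waveform[ms_to_index(290)] = -5.0
for i in range(ms_to_index(325), ms_to_index(500) + 1):
    waveform[i] = 4.0

print(n290_peak(waveform), p400_mean(waveform))
```

In practice the waveform passed in would be the average across the electrodes of one region of interest; that averaging step is omitted here.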

Figure 2.

Schematic of the 128-channel sensor net with corresponding regions of interest used for extracting the face-sensitive N290 and P400 ERPs. Note that the four eye-lead channels are removed for infant comfort.

2.3.2 Eye Tracking Recording and Analysis

During stimulus presentation, gaze location for both eyes was recorded using a Tobii T120 (Tobii Technology AB, Sweden) eye tracker. Gaze information was sampled at 60 Hz and collected by E-Prime. Monitor specifications included an accuracy of 0.5 degrees of the visual angle and a tolerance of head movements within a range of 44 × 22 × 30 cm.

For each stimulus, areas of interest (AOI) that delineated the top, bottom, and contour of each image were traced onto the stimuli using transparent objects in E-Prime. Subsequent analyses were based on gaze data within these particular AOIs.

Gaze data files were run through a custom-made Python script (Python Software Foundation, http://www.python.org/) which extracted gaze information on each trial. Trial data were subsequently processed using SAS software version 9.3 for Windows (Copyright 2002–2010 by SAS Institute Inc., Cary, NC, USA). Trials were included for further analysis if gaze information was recorded for at least 500ms of the 1000ms total image time. Because there was considerable variability in the size of the eye and mouth regions across the animal stimuli, we were not confident in interpreting the summary statistics of eye-tracking behavior in the animal group. In the human faces group, the mean number of trials per condition average was not different between the emotional categories (M happy trials = 32.39, SD = 9.61, range 16–44; M anger trials = 32.50, SD = 8.83, range 11–45; M fear trials = 32.33, SD = 8.98, range 15–47).
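The authors' script is not reproduced in the text, so the following is only a sketch of how the trial-inclusion rule and per-region looking time might be computed. All names are hypothetical; lost gaze samples are represented as `None`, and rectangular areas of interest are an assumption (the AOIs in the study were traced onto each image).

```python
GAZE_RATE_HZ = 60  # gaze sampling rate reported above


def include_trial(samples, min_ms=500):
    """True when gaze was recorded for at least `min_ms` of the trial.
    `samples` holds one (x, y) tuple per gaze sample, or None if lost."""
    n_valid = sum(1 for s in samples if s is not None)
    # Compare in sample-count terms to avoid floating-point rounding.
    return n_valid * 1000 >= min_ms * GAZE_RATE_HZ


def looking_time_ms(samples, aoi):
    """Total time (ms) with gaze inside a rectangular AOI given as
    (left, top, right, bottom) in screen coordinates."""
    left, top, right, bottom = aoi
    n_inside = sum(
        1 for s in samples
        if s is not None and left <= s[0] <= right and top <= s[1] <= bottom
    )
    return n_inside * 1000.0 / GAZE_RATE_HZ


# Made-up example: a 1000 ms trial (60 samples) with half the samples lost.
trial = [(100.0, 100.0)] * 30 + [None] * 30
eye_region = (50.0, 50.0, 150.0, 150.0)   # hypothetical AOI

print(include_trial(trial), looking_time_ms(trial, eye_region))
```

Summing these per-trial looking times within each emotion category would give a total looking time per region of the kind used in the brain-behavior correlations.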

3. Results

3.1 ERP Data

Peak amplitude and latency of the N290 and mean amplitude of the P400 were analyzed using repeated-measures analyses of variance in IBM SPSS Statistics version 19 for Windows (IBM Corp, Armonk, NY). Within-participant factors included emotion (happy, anger, fear) and electrode region (left, right, midline); stimulus type (human, animal) was included as a between-participants factor. Greenhouse-Geisser corrections were applied when the assumption of sphericity was violated. When significant (p≤ .05) main or interaction effects emerged, post hoc comparisons were conducted and a Bonferroni correction for multiple comparisons was applied.

3.1.1 N290

Analysis of N290 peak amplitude revealed significant main effects of emotion, F(2,64) = 5.007, p = .010, ηp² = .135, and stimulus type, F(1,32) = 48.969, p < .001, ηp² = .605. These main effects were qualified by an emotion × stimulus type interaction, F(2,31) = 5.660, p = .005, ηp² = .150. Post hoc comparisons revealed that infants showed a significantly larger N290 to human faces compared to animal images for each of the 3 emotions (Figure 3). There were no differences between the emotions for human faces (M happy = −1.27 μV, SD = 8.9; M anger = −1.05 μV, SD = 8.1; M fear = −2.37 μV, SD = 7.1). However, angry animals (M = 11.54 μV, SD = 6.7) elicited a significantly larger N290 than happy animals (M = 18.53 μV, SD = 7.5; t(15) = 4.51, p = .001) and a marginally larger response than fearful animals (M = 15.01 μV, SD = 8.3; t(15) = 2.44, p = .082). There was no difference between happy and fearful animals.
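Partial eta squared can be recovered from an F ratio and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the two main effects reported above; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported for N290 peak amplitude.
emotion = partial_eta_squared(5.007, 2, 64)
stimulus_type = partial_eta_squared(48.969, 1, 32)

print(round(emotion, 3), round(stimulus_type, 3))  # 0.135 0.605
```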

Figure 3.

Grand average ERP waveforms showing the N290 and P400 in the right posterior electrodes. Solid lines are the response to human faces and dashed lines are the response to animals.

Analysis of N290 latency revealed no significant main or interaction effects of emotion, stimulus type, or region.

3.1.2 P400

Visual inspection of waveforms revealed that amplitude differences of the P400 may be driven by differences in the preceding N290 component. In order to control for the differences at the N290 in the analysis of the P400, a peak-to-trough subtraction was computed. The difference score variable for the P400 was entered in the ANOVA. Analyses revealed that after correcting for the preceding N290 effects, there was a main effect of stimulus type, F(1,32) = 4.634, p = .039, ηp² = .126, in that human faces (M = 25.33 μV, SD = 10.3) elicited a significantly larger P400 component than animal images (M = 18.69 μV, SD = 7.2). Analyses also revealed a stimulus type × electrode region interaction, F(2,31) = 11.719, p < .001, ηp² = .268. Post hoc comparisons indicated that human faces elicited larger amplitudes than animals in the left and right electrode regions. For animal images there were no differences between any of the electrode regions.

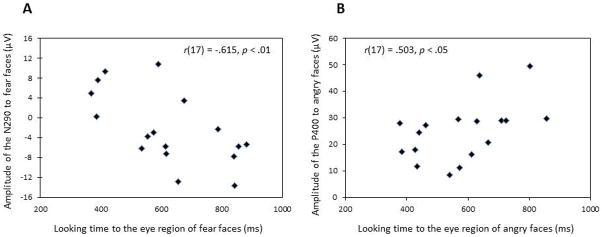

3.2 Brain-Behavior Correlations

We next examined whether the amplitude and latency of the N290 and P400 were modulated by the looking behaviors of the infants. Because these ERPs demonstrated specificity to human faces and because there was considerable variability in the size of the eye and mouth regions between animal stimuli, we only examined the relations between the total time infants spent looking at each region of the human faces and the ERP waveforms within each emotion. One infant was found to be an outlier in looking time (<1.5 SD) to all three emotion faces and was excluded from the present analyses².

3.2.1 N290

Pearson correlations between the total looking time to the eye and mouth regions of each emotion and the average amplitude of the N290 across the three posterior regions were computed. Looking time to the eye region of fear faces was negatively correlated with the N290 peak amplitude to fear faces (r(17) = −.615, p = .009); in contrast, looking to the mouth region of fear faces resulted in a positive correlation with the N290 amplitude to fear faces (r(17) = .521, p = .032). These results suggest that increased looking time to the eye region, compared to the mouth region, was related to a larger amplitude (i.e., more negative) of the N290 for fear faces (Figure 4a). Larger amplitude responses are generally interpreted as greater activation of the neuronal population thought to underpin a specific component (e.g., greater neural synchrony).
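For readers who want to reproduce this kind of analysis, a minimal sketch of a Pearson correlation and the t statistic used to test it is given below. The function names are hypothetical and the example data are made up; only the final line uses values from the text (r = −.615 with the N = 17 given in the note to Table 1).

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


# Made-up data: looking time to the eyes (ms) and N290 amplitude (uV).
looking_ms = [200.0, 350.0, 400.0, 520.0, 610.0]
n290_uv = [1.5, -0.5, -1.0, -3.2, -2.8]
print(round(pearson_r(looking_ms, n290_uv), 3))

# t statistic implied by the reported fear/eye-region correlation.
print(round(t_from_r(-0.615, 17), 2))
```

A negative r here means that longer looking goes with a more negative, and therefore larger, N290.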

Figure 4.

Scatter-plot of looking time to the eye-region of (A) fear faces and N290 amplitude to fear faces and (B) angry faces and P400 amplitude to angry faces.

There were no significant relations between looking to the eye or mouth regions in happy or angry faces and N290 amplitude or between any emotion and N290 latency (Table 1).

Table 1.

Correlations between Eye Tracking Behavior and Face-Sensitive ERPs

| Total looking / ERP | Eyes: Happy | Eyes: Angry | Eyes: Fear | Mouth: Happy | Mouth: Angry | Mouth: Fear |
|---|---|---|---|---|---|---|
| N290 amplitude | −.146 | −.421 | −.615** | .047 | .277 | .521* |
| N290 latency | −.016 | .052 | −.132 | .010 | .070 | .212 |
| P400 mean amplitude | .189 | .503* | .401 | −.167 | −.453 | −.442 |

Note: N = 17; \*\* p < .01, \* p < .05

To examine if the significant correlation between the N290 amplitude and looking to the eye region of fear faces was unique to fear, we compared the correlations for happy and angry against fear using the Pearson-Filon test. The analyses revealed a significant difference between fear and happy (z = 1.72, p = .042) but not between fear and angry (z = .771, p = .22).

3.2.2 P400

Pearson correlations between the total looking time to the eye and mouth regions of each emotion and the average amplitude of the P400 across the three posterior regions were computed. There was a positive relation between looking time to the eye region of angry faces and P400 amplitude to angry faces (r(17) = .503, p = .040); in contrast, there was a trend toward a negative relation with looking to the mouth region of angry faces (r(17) = −.453, p = .068). These results suggest that increased looking time to the eye region, compared to looking to the mouth region, was related to a more positive amplitude of the P400 (Figure 4b).

There were no significant relations between looking to the eye or mouth regions in happy or fear faces and P400 amplitude (Table 1).

To examine if the correlation between the P400 amplitude and looking to the eye region of angry faces was unique to anger, we again used the Pearson-Filon test to compare the anger correlation to the correlations for happy and fear. The analyses revealed no significant differences between anger and fear (z = 1.23, p = .11) or between anger and happy (z = .404, p = .34).

4. Discussion

The present study examined the effects of human expressions of emotion conveyed through human face and animal stimuli on face-sensitive ERPs in 7-month-old infants. The results suggest that higher order emotion processors may be affecting the amplitude of face-sensitive ERPs. When infants viewed animals exhibiting happy, fearful, and angry expressions, angry expressions resulted in a more negative deflection in the N290 compared to happy or fear expressions. This finding is in line with the results reported in Kobiella et al. (2008) contrasting fear and anger in human faces; however, in our sample of infants, these similarities were restricted to the N290 response to animal stimuli and were not found for the human faces. The results of this study further extend the findings of previous studies demonstrating the sensitivity of the N290 and P400 to face stimuli over non-human face stimuli. Johnson and colleagues have shown that 3- to 12-month-old infants have an increased amplitude of the N290 to human faces when compared to monkey faces (de Haan et al., 2002; Halit et al., 2003) or noise stimuli (Halit et al., 2004) and an increased amplitude of the P400 to human faces compared to inverted faces (de Haan et al., 2002). Here, we found that compared to the animals, both the N290 and P400 had increased amplitudes to the human face stimuli. However, the effect of emotions on the face-sensitive components emerged when we examined infants’ looking behaviors during the ERP task.

Interestingly, the amplitude of the N290 to fearful expressions was greater (i.e., more negative) the longer infants viewed the eye region of the human face; moreover, this effect was not observed for viewing the eye region of happy or angry faces. Similarly, looking to the eye region of angry faces resulted in larger P400 amplitude, an effect that was not observed in the other emotion conditions. Previous studies have suggested that the eye region plays an important role in face perception by manipulating the eye region of the face (Key et al., 2009; Rigato et al., 2010); however, those studies were dependent on infants’ ability to detect the changes in the eye region of the stimuli. The advantage of the current study was the simultaneous acquisition of eye tracking and ERPs in order to examine how individual differences in looking behaviors affect the face-sensitive N290 and P400. Comparison of the relations between looking time to the eyes and face-sensitive ERPs suggests that the eye region may be particularly important for the perception of negative emotions.

It has been proposed that the brain’s bias for efficiently processing fearful and angry expressions emerges between 6 and 12 months (for a review see Leppänen & Nelson, 2009). Moreover, evidence from fMRI studies in adults has outlined distinct neural structures involved in processing fear and angry faces. Threatening faces routinely activate the amygdala (Harris, Young, & Andrews, 2014; Mattavelli et al., 2013), but the amygdala’s response is still greater to fear compared to angry faces (Whalen et al., 2001). Conversely, angry faces activate the insula, cingulate, basal ganglia, thalamus, and hippocampus (Strauss et al., 2005). It is possible that the correlations between infants’ looking to the eyes of fear faces and the N290 and between looking to angry eyes and the P400 may have been a result of activation of these different networks. Ongoing data collection in younger and older infants in our lab may provide answers to these developmental questions.

Although inconsistent with previous studies that found effects of emotion on N290 and P400 amplitude, we did not observe an overall effect of emotion in the human face group. However, our study differs from others in that the ratio of exemplars to emotions is greater in the current study than in previous research (i.e., we used 5 individual exemplars expressing 3 emotions compared to the use of only one or two models expressing two emotions; Kobiella et al., 2008; Leppänen et al., 2007; Leppänen et al., 2009). Rigato and colleagues (2010) found latency differences in the N290 and P400 using 6 models, but again, only contrasting two emotion categories. Behavioral evidence suggests that at 7 months, infants should be able to categorize emotion across multiple exemplars. Using a habituation paradigm, Kestenbaum and Nelson (1990) presented infants with 3 different smiling women; following habituation, infants were shown a novel face displaying a happy, fearful, or angry expression. Infants increased looking to fear and angry expressions but not to the novel smiling face. By familiarizing the infants to one emotion, Kestenbaum and Nelson may have made discrimination of the remaining two emotions easier for the infants. Therefore, it is possible that our task was too complex for infants to be able to categorize the three different emotions across the five individual identities. This is still an open question and future studies will be needed to examine the capacity of 7-month-old infants’ understanding of emotions across multiple exemplars.

Interpretation of the looking behaviors and ERP amplitude is limited by the lack of direct correspondence between the trials included in the ERPs and those used in calculation of looking times. The rate of trial loss was different between the two methods due to trials rejected for EEG artifact or loss of signal from the eye-tracking equipment. Comparing the amplitude of each component on trials in which the individual was looking to the eye region versus the mouth region for the majority of the stimulus presentation would allow us to measure the impact of the eyes versus the mouth on face-sensitive ERPs. Additionally, we were unable to examine any correlations between looking behavior and the ERPs in the animal stimuli due to the large variability in size and location of the eye and mouth regions across emotion categories. It would be interesting to see if processing the emotions in the animal stimuli showed a comparable pattern of fear in the N290 and anger in the P400 as was observed in the human faces.

The results of this study highlight the importance of the eye region in the development of face processing. We found that differential activation of face-sensitive ERPs to fearful and angry expressions was directly related to the amount of time infants spent looking at the eye region of the face stimuli. Moreover, the results suggest that the different neural circuitry involved in processing fearful versus angry faces in adults is emerging in 7-month-olds and may be detected in scalp-recorded ERPs.

Footnotes

Eye electrodes were removed from the nets for infants’ comfort, resulting in 124 recording channels.

Analyses were also run including the outlier, and the main findings did not change.

References

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Bentin S, Golland Y, Flevaris A, Robertson C, Moscovitch M. Processing the trees and the forest during initial stages of face perception: Electrophysiological evidence. Journal of Cognitive Neuroscience. 2006;18:1406–1421. doi: 10.1162/jocn.2006.18.8.1406.

- de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy. International Journal of Psychophysiology. 2003;51:45–58. doi: 10.1016/s0167-8760(03)00152-1.

- de Haan M, Nelson CA. Discrimination and categorization of facial expressions of emotion during infancy. In: Slater A, editor. Perceptual Development: Visual, Auditory, and Language Development in Infancy. London: University College Press; 1998. pp. 287–309.

- de Haan M, Nelson CA. Brain activity differentiates face and object processing in 6-month-old infants. Developmental Psychology. 1999;35:1113–1121. doi: 10.1037//0012-1649.35.4.1113.

- de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience. 2002;14:199–209. doi: 10.1162/089892902317236849.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. NeuroReport. 2002;13:427–431. doi: 10.1097/00001756-200203250-00013.

- Farroni T, Johnson MH, Csibra G. Mechanisms of eye gaze perception during infancy. Journal of Cognitive Neuroscience. 2004;16:1320–1326. doi: 10.1162/0898929042304787.

- Haith MM, Bergman T, Moore MJ. Eye contact and face scanning in early infancy. Science. 1977;198:853–855. doi: 10.1126/science.918670.

- Halit H, Csibra G, Volein A, Johnson MH. Face-sensitive cortical processing in early infancy. Journal of Child Psychology and Psychiatry. 2004;45:1228–1234. doi: 10.1111/j.1469-7610.2004.00321.x.

- Halit H, de Haan M, Johnson MH. Cortical specialization for face processing: Face-sensitive event-related potential components in 3- and 12-month-old infants. NeuroImage. 2003;19:1180–1193. doi: 10.1016/s1053-8119(03)00076-4.

- Harris RJ, Young AW, Andrews TJ. Dynamic stimuli demonstrate a categorical representation of facial expression in the amygdala. Neuropsychologia. 2014;56:47–52. doi: 10.1016/j.neuropsychologia.2014.01.005.

- Kestenbaum R, Nelson CA. The recognition and categorization of upright and inverted emotional expressions by 7-month-old infants. Infant Behavior and Development. 1990;13:497–511.

- Key APF, Stone W, Williams SM. What do infants see in faces? ERP evidence of different roles of eyes and mouth for face perception in 9-month-old infants. Infant and Child Development. 2009;18:149–162. doi: 10.1002/icd.600.

- Kobiella A, Grossmann T, Reid VM, Striano T. The discrimination of angry and fearful facial expressions in 7-month-old infants: An event-related potential study. Cognition & Emotion. 2008;22:134–146.

- Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007;78:232–245. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen JM, Nelson CA. The development and neural bases of facial emotion recognition. In: Kail RV, editor. Advances in Child Development and Behavior. Vol. 34. San Diego, CA: Academic Press; 2006.

- Leppänen JM, Nelson CA. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience. 2009;10:37–47. doi: 10.1038/nrn2554.

- Leppänen JM, Richmond J, Vogel-Farley VK, Moulson MC, Nelson CA. Categorical representation of facial expressions in the infant brain. Infancy. 2009;14:346–362. doi: 10.1080/15250000902839393.

- Luyster RJ, Powell C, Tager-Flusberg H, Nelson CA. Neural measures of social attention across the first year of life: Characterizing typical development and markers of autism risk. Developmental Cognitive Neuroscience. 2014;8:113–143. doi: 10.1016/j.dcn.2013.09.006.

- Mattavelli G, Sormaz M, Flack T, Asghar AUR, Fan S, Frey J, Andrews TJ. Neural responses to facial expressions support the role of the amygdala in processing threat. Social Cognitive and Affective Neuroscience. 2013:1–6. doi: 10.1093/scan/nst162.

- Nelson CA. The recognition of facial expressions in the first two years of life: Mechanisms of development. Child Development. 1987;58:889–909.

- Peltola MJ, Leppänen JM, Mäki S, Hietanen JK. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Social Cognitive and Affective Neuroscience. 2009;4:134–142. doi: 10.1093/scan/nsn046.

- Rigato S, Farroni T, Johnson MH. The shared signal hypothesis and neural responses to expressions and gaze in infants and adults. Social Cognitive and Affective Neuroscience. 2010;5:88–97. doi: 10.1093/scan/nsp037.

- Righi G, Nelson CA III. The neural architecture and developmental course of face processing. In: Rakic P, Rubenstein J, editors. Comprehensive Developmental Neuroscience. Vol. 3. San Diego, CA: Elsevier; 2013.

- Righi G, Westerlund A, Congdon EL, Troller-Renfree S, Nelson CA. Infants’ experience-dependent processing of male and female faces: Insights from eye tracking and event-related potentials. Developmental Cognitive Neuroscience. 2014;8:144–152. doi: 10.1016/j.dcn.2013.09.005.

- Schyns PG, Jentzsch I, Johnson M, Schweinberger SR, Gosselin F. A principled method for determining the functionality of brain responses. NeuroReport. 2003;14:1665–1669. doi: 10.1097/00001756-200309150-00002.

- Scott LS, Nelson CA. Featural and configural face processing in adults and infants: A behavioral and electrophysiological investigation. Perception. 2006;35:1107–1128. doi: 10.1068/p5493.

- Strauss MM, Makris N, Aharon I, Vangel MG, Goodman J, Kennedy DN, Breiter HC. fMRI of sensitization to angry faces. NeuroImage. 2005;26:389–413. doi: 10.1016/j.neuroimage.2005.01.053.

- Taylor MJ, Edmonds GE, McCarthy G, Allison T. Eyes first! Eye processing develops before face processing in children. NeuroReport. 2001;12:1671–1676. doi: 10.1097/00001756-200106130-00031.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vogel M, Monesson A, Scott LS. Building biases in infancy: The influence of race on face and voice emotion matching. Developmental Science. 2012;15:359–372. doi: 10.1111/j.1467-7687.2012.01138.x.

- Whalen PJ. Fear, vigilance, and ambiguity: Initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7:177–188.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expression of fear versus anger. Emotion. 2001;1:70–83. doi: 10.1037/1528-3542.1.1.70.