Abstract

Objective To assess whether publicly funded adult cancer trials satisfy the uncertainty principle, which states that physicians should enrol a patient in a trial only if they are substantially uncertain which of the treatments in the trial is most appropriate for the patient. This principle is violated if trials systematically favour either the experimental or the standard treatment.

Design Retrospective cohort study of completed cancer trials, with randomisation as the unit of analysis.

Setting Two cooperative research groups in the United States.

Studies included 93 phase III randomised trials (103 randomisations) that completed recruitment of patients between 1981 and 1995.

Main outcome measures Whether the randomisation favoured the experimental treatment, the standard treatment, or neither treatment; effect size (outcome of the experimental treatment compared with outcome of the standard treatment) for each randomisation.

Results Three randomisations (3%) favoured the standard treatment, 70 (68%) found no significant difference between treatments, and 30 (29%) favoured the experimental treatment. The average effect size was 1.20 (95% confidence interval 1.13 to 1.28), reflecting a slight advantage for the experimental treatment.

Conclusions In cooperative group trials in adults with cancer, there is a measurable average improvement in disease control associated with assignment to the experimental rather than the standard arm. However, the heterogeneity of outcomes and the small magnitude of the advantage suggest that, as a group, these trials satisfy the uncertainty principle.

Introduction

Randomised trials offer the best evidence about the safety and efficacy of new therapies but require substantial investment of time, resources, and patients. Before a trial starts, preliminary data should therefore support the study hypothesis. Such data, however, can raise ethical objections that some participants might receive predictably inferior therapy.1,2

Clinicians and patients generally have preconceptions about the relative merits of the study treatments.3 It is therefore important to consider under what conditions of prior knowledge and belief trials may ethically proceed. Many commentators argue that “equipoise,” defined by Freedman as the absence of “consensus within the expert clinical community about the comparative merits of the alternatives to be tested,” must exist.4 Others invoke the “uncertainty principle”5 (box), in part to avoid the “etymological connotation of an equal balance between... the alternatives to be tested.”6 Despite continued controversy,7 most authors agree that when the better treatment can be identified with reasonable confidence, it is both unethical and scientifically unnecessary to conduct the trial.8

Concerns about violations of the uncertainty principle, which have adverse practical and ethical consequences, are widespread.1,2,8-11 Surveys indicate that physicians involved with trials commonly have treatment preferences.12 Such preferences, whether well founded or not, reduce the likelihood that they will offer patients the chance to participate in a trial.13-15 Similarly, treatment preferences among patients decrease their willingness to enrol.14-17

For a physician or patient considering a trial, deciding whether the uncertainty principle is satisfied requires a set of expectations about how the new treatment compares with the standard treatment. These expectations are generally cited as grounds for determining whether offering a trial is ethical, though they may reflect inaccurate or even biased predictions about the trial's outcome. Although it is difficult to predict the outcomes of specific trials, such judgments need not be entirely subjective. Chalmers, taking a Bayesian perspective and considering trials as elements of a coherent system rather than as isolated events, suggests that outcomes of completed trials can inform estimates of the “prior probability of a proposed new treatment being superior to an established treatment.”18 In the absence of bias, the expectations of physicians and patients should bear some relation to estimates of prior probabilities derived in this way.8 Furthermore, the finding that new treatments prove superior to standard treatments most of the time would suggest a systematic violation of the uncertainty principle. Several authors have conducted empirical studies under this premise.10,19,20

The US National Cancer Institute funds several cooperative groups to conduct oncology trials. These groups provide a favourable setting for estimating prior probabilities in randomised trials. Firstly, they permit access to results of unpublished trials. This is important because publication bias could skew the proportion of trials with positive results. Secondly, though they study diverse diseases and interventions, their institutional continuity makes them relatively stable platforms for therapeutic development. Finally, as publicly funded organisations, their trials may be less subject to biases associated with industry sponsorship.10,21

The uncertainty principle

A patient can be entered [in a trial] if, and only if, the responsible clinician is substantially uncertain which of the trial treatments would be most appropriate for that particular patient. A patient should not be entered if the responsible clinician or the patient are for any medical or non-medical reasons reasonably certain that one of the treatments that might be allocated would be inappropriate for this particular individual (in comparison with either no treatment or some other treatment that could be offered to the patient in or outside the trial)5

To evaluate whether publicly funded cancer trials satisfy the uncertainty principle, we examined outcomes in an historical cohort of cooperative group trials.

Methods

Study sample

We identified phase III randomised trials coordinated by the Eastern Cooperative Oncology Group (ECOG) or Cancer and Leukemia Group B (CALGB). These two groups were among the larger multi-cancer groups supported by the National Cancer Institute that were active during the study period. To ensure that all trials had had time to complete recruitment and follow-up and to report results, we selected studies that finished recruiting participants between 1981 and 1995. We limited the cohort to trials testing whether a new anticancer therapy was more effective than a control. We excluded studies that closed early because of poor recruitment, as these were uninformative for our purposes, but included studies that closed early for other reasons (for example, on the basis of interim results).

Data collection

We used original protocols to assess study design. For study results, we used (in preplanned order) statistician's reports, published articles, or meeting abstracts. We obtained unpublished documents from the statistical offices of the two groups.

A research assistant abstracted design and outcome data using standard forms. Each randomisation required abstraction of at least 45 data points. Administrative data included protocol number, disease, stage, dates opened and closed to recruitment, and reason for closure. Design data included number of randomisations, number of arms, intervention(s), placebo/observation controls, primary end point(s), and power calculations. When no primary end point was specified, we coded end points used in power calculations as primary. We coded each arm as experimental or standard on the basis of its description in the protocol's background, objectives, or statistics section. We excluded randomisations without defined experimental and standard arms.

Outcome data included recruitment, findings for each arm and end point, and results of hypothesis tests. To minimise retrospective biases, we evaluated outcomes according to the planned analysis specified in the protocol. Only rarely did the criterion for significance used in the report differ from that in the protocol (for example, one sided comparison planned, two sided used). In these circumstances, we used criteria defined in the protocol to determine significance.

For quality control, SJ independently re-abstracted about 10% of randomisations (n=12). The item error rate was less than 1.1%.

We identified 120 trials that met the criteria for eligibility. Because of factorial designs, 10 trials contributed two randomisations. Thus 130 randomisations (the unit of analysis) were eligible. Of these, 23 closed early because of poor recruitment and were excluded. Complete design and outcome data were available for 103 of the remaining 107 randomisations (96%). These 103 randomisations from 93 trials constituted our study cohort. The Eastern Cooperative Oncology Group contributed 65 randomisations (59 trials) and the Cancer and Leukemia Group B contributed 38 randomisations (34 trials). In total, 34 943 patients were included in study analyses.
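The cohort-assembly arithmetic above can be checked in a few lines. This is a minimal sketch; every count comes from the text, and the variable names are illustrative only.

```python
# Cohort-assembly arithmetic; all counts are taken from the text.
eligible_trials = 120
factorial_trials = 10  # trials contributing two randomisations each

eligible_randomisations = eligible_trials + factorial_trials  # one extra per factorial trial
closed_for_poor_recruitment = 23
remaining = eligible_randomisations - closed_for_poor_recruitment
with_complete_data = 103

completeness = with_complete_data / remaining
print(f"{eligible_randomisations} eligible, {remaining} after exclusions, "
      f"{with_complete_data} analysed ({completeness:.0%})")

# Cross-check: the two groups' contributions sum to the cohort totals.
ecog = {"randomisations": 65, "trials": 59}
calgb = {"randomisations": 38, "trials": 34}
assert ecog["randomisations"] + calgb["randomisations"] == with_complete_data
assert ecog["trials"] + calgb["trials"] == 93
```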

Protocols were available for all trials. We abstracted outcomes from statistical reports (n=60), articles (n=29), and meeting abstracts (n=4).

Analysis

We aimed to describe how experimental treatments in phase III cooperative group cancer trials fare when compared with standard treatments. We addressed this in two ways. In the main analysis, we calculated the proportions of randomisations that favoured the experimental treatment, the standard treatment, or neither treatment, based on significance as specified in the protocol. In a supporting analysis, we calculated an effect size (for example, the ratio of median survival times or of response rates, experimental relative to standard) for each two way comparison, then estimated the average effect size for the study cohort. Because effect sizes involving time to event and response rate end points differ mathematically and conceptually, we report them both together and separately. Also, because the distributions of ratios are inherently asymmetrical (ratios of 0.5 and 2 represent effects of equal magnitude in opposite directions), we report geometric means. We limited analyses to primary end points.
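Because a geometric mean is the exponentiated mean of log effect sizes, its confidence interval can be computed on the log scale and back-transformed. The sketch below assumes a normal approximation on the log scale (the text does not say exactly how its intervals were derived), and the input values are hypothetical, not data from the cohort.

```python
import math
import statistics


def geometric_mean_ci(effect_sizes, z=1.96):
    """Geometric mean of ratio effect sizes with an approximate 95% CI.

    Works on the log scale, where ratios such as 0.8 and 1.25 are
    symmetric about 0, then exponentiates back. The normal approximation
    (z=1.96) is an assumption, not necessarily the paper's method.
    """
    logs = [math.log(x) for x in effect_sizes]
    mean_log = statistics.fmean(logs)
    se = statistics.stdev(logs) / math.sqrt(len(logs))
    return (math.exp(mean_log),
            math.exp(mean_log - z * se),
            math.exp(mean_log + z * se))


# Hypothetical effect sizes (experimental / standard); >1 favours experimental.
illustrative = [0.9, 1.0, 1.1, 1.2, 1.3, 1.5]
gm, lo, hi = geometric_mean_ci(illustrative)
print(f"geometric mean {gm:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

The choice matters for ratios: the arithmetic mean of 0.8 and 1.25 is 1.025, suggesting a small advantage where there is none, whereas their geometric mean is exactly 1.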

The analysis was complicated because some randomisations involved multiple two way comparisons. This occurred for three reasons: multi-arm studies compared two or more experimental treatments with a control; some studies had two or more primary end points; and some analyses presented outcomes separately for subgroups. To avoid overweighting trials involving multiple two way comparisons, we first averaged results within randomisations. For categorical analyses, we classified a randomisation as favouring experimental treatment if at least half of its two way comparisons favoured that treatment, and as favouring standard treatment if at least half of its comparisons favoured the standard.
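The within-randomisation rules described above might be sketched as follows. The function names and string labels are hypothetical, and the text does not say how a randomisation with exactly half its comparisons favouring each arm was coded, so this sketch flags that case as "conflicting" rather than guessing.

```python
import math
from statistics import fmean


def classify_randomisation(comparison_results):
    """Apply the 'at least half' rule to one randomisation.

    comparison_results: one label per two way comparison (arm pair,
    end point, or subgroup): "experimental", "standard", or "neither".
    """
    n = len(comparison_results)
    exp_share = comparison_results.count("experimental") / n
    std_share = comparison_results.count("standard") / n
    if exp_share >= 0.5 and std_share >= 0.5:
        # Exactly half each way: handling not described in the text (assumption).
        return "conflicting"
    if exp_share >= 0.5:
        return "experimental"
    if std_share >= 0.5:
        return "standard"
    return "neither"


def randomisation_effect_size(effect_sizes):
    """Average one randomisation's effect sizes on the log scale, so a
    multi-arm or multi-end point randomisation contributes one value."""
    return math.exp(fmean(math.log(x) for x in effect_sizes))


# Hypothetical randomisation with two primary end points.
print(classify_randomisation(["experimental", "neither"]))
print(round(randomisation_effect_size([1.35, 1.05]), 2))
```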

Finally, we conducted an exploratory logistic regression analysis to identify attributes of studies that favoured experimental treatment. We evaluated five independent variables: end point, date closed to recruitment, disease setting, placebo/observation control, and average sample size per arm. When a study reported multiple two way comparisons, we considered each as a separate data point but used clustering to account for non-independence within randomisations. We report two sided P values. We used Stata 8 for Windows (StataCorp, College Station, TX) for analyses.

Results

Sample description

Table 1 shows administrative details of the 93 trials. The most studied cancers were breast, small cell lung, acute myeloid leukaemia, colorectal, and Hodgkin's and non-Hodgkin's lymphomas. Recruitment took a median of 4.3 years, and the median ratio of actual to planned recruitment duration was 1.2 (that is, 20% longer than anticipated). Seven trials closed early on the basis of interim data.

Table 1.

Description of protocols and study designs. Figures are numbers (percentages) unless stated otherwise

| Characteristic | No or median | (% or IQR) |
|---|---|---|
| Type of cancer (n=93 protocols): | | |
| Breast | 20 | (21.5) |
| Small cell lung | 10 | (10.8) |
| Acute myeloid leukaemia | 9 | (9.7) |
| Colorectal | 8 | (8.6) |
| Hodgkin's disease | 7 | (7.5) |
| Non-Hodgkin's lymphoma | 7 | (7.5) |
| Non-small cell lung | 4 | (4.3) |
| Multiple myeloma | 4 | (4.3) |
| Other* | 24 | (25.8) |
| Date closed (n=93 protocols): | | |
| 1981-5 | 35 | (37.6) |
| 1986-90 | 28 | (30.1) |
| 1991-5 | 30 | (32.3) |
| Reason closed (n=93 protocols): | | |
| Reached recruitment target | 86 | (92.5) |
| Interim analysis | 7† | (7.5) |
| No of arms (n=103 randomisations): | | |
| 2 | 77 | (74.8) |
| 3 | 20 | (19.4) |
| ≥4 | 6 | (5.8) |
| Type of control (n=103 randomisations): | | |
| Active | 86 | (83.5) |
| Placebo or observation‡ | 17 | (16.5) |
| Sidedness of hypothesis test used in power calculations (n=97 randomisations that provided power calculations): | | |
| One sided | 49 | (50.5) |
| Two sided | 40 | (41.2) |
| Not specified | 8 | (8.3) |
| Power (n=94 randomisations that provided a unique study power): | | |
| <0.80 | 3 | (3.2) |
| 0.80-0.84 | 62 | (66.0) |
| 0.85-0.89 | 15 | (16.0) |
| ≥0.90 | 14 | (14.9) |
| Alpha error (n=97 randomisations that provided power calculations)§: | | |
| ≤0.025 | 6 | (6.2) |
| 0.026-0.05 | 47 | (48.5) |
| 0.051-0.10 | 39 | (40.2) |
| >0.10 | 5 | (5.2) |
| Effect size used in power calculations (n=88 randomisations for which a planned effect size could be calculated)¶: | | |
| ≤1.25 | 2 | (2.3) |
| 1.26-1.50 | 42 | (47.7) |
| 1.51-1.75 | 27 | (30.7) |
| 1.76-2.0 | 13 | (14.8) |
| >2.0 | 4 | (4.6) |
| Median (interquartile range): | | |
| Duration of study accrual (years) (n=93 protocols)** | 4.3 | (3.2-5.3) |
| Ratio of actual to planned accrual duration (n=93 protocols)** | 1.2 | (1.0-1.8) |
| Sample size, total (n=103 randomisations) | 299 | (204-465) |
| Sample size, per arm (n=103 randomisations) | 125 | (92-184) |
| Ratio of actual to planned sample size (n=103 randomisations) | 1.0 | (1.0-1.1) |

*Includes acute lymphoblastic leukaemia (3), melanoma (3), sarcoma (3), chronic lymphocytic leukaemia (3), prostate (2), bladder (2), pancreas (1), head and neck (1), ovarian (1), germ cell (1), oesophageal (1), renal-cell (1), thyroid (1), and myelodysplastic syndrome (1).

†Four of these favoured the experimental arm.

‡Participants had received active treatment before randomisation in 15 of 17 studies with placebo/observation control.

§Two sided equivalent. Where sidedness was unspecified, we assumed power calculations involved two sided alpha errors.

¶Indicates hazard ratios for time to event comparisons, and response rate ratios for response comparisons.

**Denominator is 86 studies (94 randomisations). Excludes studies that closed early on the basis of interim data.

Table 1 also shows details of study design, including power calculations, for the 103 randomisations. About a quarter involved three or more arms. Seventeen involved placebo or observation controls, although participants received active treatment before randomisation in 15 of these studies.

The median sample size was 299 patients per randomisation, or 125 per arm. The median ratio of actual to planned sample size was 1.0.

Study outcomes

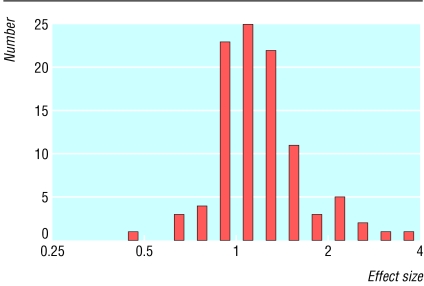

Table 2 reports comparisons between experimental and control treatments. Thirty randomisations (29%) favoured experimental treatment, three (3%) favoured standard treatment, and 70 (68%) favoured neither. The average effect size was 1.20 (95% confidence interval 1.13 to 1.28), reflecting an advantage for experimental treatment (figure). Comparisons involving time to event end points apart from survival favoured experimental treatment more often than those involving survival or response end points, whether they were analysed as categorical outcomes or as effect sizes.

Table 2.

Results of comparisons between experimental and control treatments. Figures are numbers (percentages)

| Comparison | Control better | No significant difference | Experimental better | Geometric mean effect size (95% CI) |
|---|---|---|---|---|
| All comparisons (n=103) | 3 (2.9) | 70 (68.0) | 30 (29.1) | 1.20* (1.13 to 1.28) |
| Response rate comparisons (n=42)† | 1 (2.4) | 33 (78.6) | 8 (19.1)‡ | 1.11§ (1.01 to 1.22) |
| Survival comparisons (n=41)† | 2 (4.9) | 33 (80.5) | 6 (14.6)‡ | 1.11§¶ (1.03 to 1.19) |
| Other time to event comparisons (n=59)† | 1 (1.7) | 35 (59.3) | 23 (39.0)‡ | 1.28§** (1.16 to 1.41) |

*Effect sizes could be calculated for 101 randomisations.

†Some randomisations reported two or more primary end points. Consequently, numbers for response rate, survival, and other time to event comparisons total more than 103.

‡P=0.03 for comparison of study outcome by end point (Fisher's exact test).

§P=0.01 for comparison of effect sizes by end point (one way analysis of variance).

¶Effect size could be calculated for 40 randomisations evaluating survival.

**Effect size could be calculated for 57 randomisations evaluating other time to event end points.

Figure 1.

Distribution of effect sizes among Eastern Cooperative Oncology Group and Cancer and Leukemia Group B randomised controlled trials, 1981-95. Effect sizes >1 favour experimental treatment; effect sizes <1 favour standard treatment

Published manuscripts were available for 86/103 randomisations (or 77/93 trials). Among published randomisations, 27 (31%) favoured experimental treatment. Among unpublished randomisations, three (18%) favoured experimental treatment (P=0.38, Fisher's exact test).
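The published versus unpublished comparison can be reproduced with a two sided Fisher's exact test on the 2x2 table of counts given above. This is a minimal standard-library sketch (the authors used Stata); it follows the common convention of summing the probabilities of all tables with the same margins that are no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, row1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x,
        # holding all row and column totals fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # A small relative tolerance guards against floating point ties.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= p_obs * (1 + 1e-7))


# Rows: published, unpublished; columns: favoured experimental, did not.
p = fisher_exact_two_sided(27, 86 - 27, 3, 17 - 3)
print(f"P = {p:.2f}")
```

With these counts the sketch gives P of about 0.38, in line with the reported value.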

In the logistic regression analysis, the likelihood of favouring experimental treatment varied by end point, date of study closure, and disease setting, but not by type of control or sample size (table 3). Comparisons that used time to event end points apart from survival, closed in 1986-90, and involved locoregional solid tumours most often favoured experimental treatment.

Table 3.

Results of logistic regression model evaluating attributes of randomisations in which, compared with the control arm, the experimental arm met the statistical criteria for superiority defined in the protocol

| Independent variable‡ | Adjusted odds ratio (95% CI) | P value |
|---|---|---|
| End point: | | |
| Overall survival | Reference | |
| Other time to event | 4.7 (1.8 to 12.7) | 0.008 |
| Response rate | 2.8 (0.8 to 9.5) | |
| Date protocol closed to recruitment: | | |
| 1981-5 | Reference | |
| 1986-90 | 4.4 (1.6 to 12.1) | 0.009 |
| 1991-5 | 1.7 (0.5 to 5.5) | |
| Type of malignancy: | | |
| Advanced solid tumour | Reference | |
| Haematological | 1.9 (0.7 to 5.0) | 0.015 |
| Locoregional solid tumour | 5.8 (1.8 to 19.0) | |

Some randomisations report more than one two way comparison of experimental with control arm. The model therefore uses clustering by randomisation to adjust for lack of independence within randomisations.

‡Use of placebo/observation control and number of patients per arm were not associated with a significant difference in favour of the experimental arm.
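The odds ratios and confidence intervals in table 3 are, by the usual convention for logistic regression, symmetric on the log scale. Assuming Wald intervals of the form exp(log OR ± 1.96 SE), which the text does not state explicitly, the standard error of each log odds ratio can be recovered from the published limits, and the geometric midpoint of the limits should approximate the reported estimate. A minimal sketch:

```python
import math


def log_scale_summary(lower, upper, z=1.96):
    """Point estimate and SE implied by a Wald interval on the log odds scale.

    Assumes the interval was computed as exp(log OR +/- z * SE); this is
    the usual default but is an assumption here.
    """
    log_lo, log_hi = math.log(lower), math.log(upper)
    se = (log_hi - log_lo) / (2 * z)
    odds_ratio = math.exp((log_lo + log_hi) / 2)  # geometric midpoint of limits
    return odds_ratio, se


# Table 3 rows: reported odds ratio, lower limit, upper limit.
rows = {
    "Other time to event": (4.7, 1.8, 12.7),
    "Closed 1986-90": (4.4, 1.6, 12.1),
    "Locoregional solid tumour": (5.8, 1.8, 19.0),
}
for label, (reported, lo, hi) in rows.items():
    implied, se = log_scale_summary(lo, hi)
    print(f"{label}: reported {reported}, implied {implied:.1f}, "
          f"SE(log OR) {se:.2f}")
```

Small discrepancies (an implied 4.8 against a reported 4.7 for other time to event end points) are consistent with rounding of the published limits.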

Discussion

We reviewed 93 randomised trials conducted by two US oncology cooperative groups over a 15 year period. We found that, on average, experimental treatment resulted in slightly better disease control than standard therapy did. Experimental therapy rarely proved less effective than the contemporary standard.

Despite this apparent imbalance, the heterogeneity of outcomes and small average effect sizes suggest that overall these trials satisfied the uncertainty principle. However, some might disagree with this interpretation, saying that the observed difference violates the uncertainty constraint.22 Others might argue that given the urgent need for advances against most cancers in adults, the proportion of successful experimental treatments is disappointingly low.

What did other studies find?

Few studies, all of which have looked at published articles or abstracts, have asked related questions in oncology. Chlebowski and Lillington found that 16% of trials comparing adjuvant therapies for localised breast cancer, but only 2% of trials for advanced breast cancer, favoured experimental treatment.23 We similarly found that trials for advanced solid tumours were less likely than other trials to favour experimental treatment. Machin et al observed that eight of 29 (28%) UK Medical Research Council trials for solid tumours favoured experimental treatment.20 Finally, Djulbegovic and colleagues observed that 56% of multiple myeloma trials published between 1996 and 1998 favoured experimental treatment.10 This advantage was most apparent among placebo controlled trials and trials funded by industry. There were no differences by type of control in our study, though few trials lacked active controls. The higher proportion of studies with positive results in the analysis of Djulbegovic et al may reflect the prevalence of placebo controlled trials and trials funded by industry, the use of qualitative conclusions rather than hypothesis tests to define outcomes,21 the inclusion of one disease, or publication bias.

Study implications

Our findings have additional implications for cancer trials. Firstly, with advances in design of targeted drugs, the proportion of trials with positive results may rise. While this would herald encouraging progress, the stronger biological rationales for experimental therapies and the consequent shift in physicians' and patients' subjective prior probabilities might make randomised trials ethically and logistically more difficult.

Secondly, that so few studies favoured experimental treatment suggests that the major impact of trials during this period was to prevent treatments that offer little incremental advantage from moving forward. Framing their purpose in this way might alter our perspective on what constitutes “success.” Trials with negative results have an essential role in public health and should not be viewed as failures. Recent trials of high dose treatment for metastatic breast cancer, which despite initial enthusiasm proved no better than standard chemotherapy, illustrate this critical function.24

Analytic and interpretive limitations

Our analysis has several limitations. The trials we studied involved diverse patient populations, interventions, and study designs, so a single estimate of prior probabilities is an oversimplification. Also, the prior evidence favouring the experimental treatment, possibly the strongest predictor of a trial's outcome, probably varied among trials. Unfortunately, we could not quantify the weight of evidence favouring the experimental treatment that was available at the start of each trial. Nor could we formally incorporate considerations of morbidity into the analysis. Had we been able to do so, the risk-benefit ratio of the two arms would probably have been even more closely balanced, as the toxicity of experimental treatment is often greater than that of standard treatment. Application of our results to current trials requires caution, as temporal trends might alter the underlying probability distributions. Finally, our data do not address the ethically important question of what physicians and others actually believed when these trials began. Studies that prospectively assess physicians' prior beliefs and then correlate those beliefs with trial outcomes would be worthwhile.

Sample size problems could partly explain the high proportion of trials that found no significant difference between treatments. Most protocols reported reasonable power, but some calculations may have used optimistic estimates of effect sizes. Such trials are effectively underpowered and can miss meaningful differences. However, observed effect sizes were less than 1.25 in 54 studies (79%) that found no significant differences between treatments. The fact that effect sizes among equivocal trials were generally small indicates that any bias from inadequate power among the trials we included is limited.
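To illustrate how an optimistic planning assumption leaves a trial underpowered for smaller true effects, the sketch below uses Schoenfeld's approximation for the number of events needed in a 1:1 log-rank comparison. The formula, the one sided alpha of 0.05, and the hazard ratios are assumptions chosen for illustration; the protocols' own calculations may have differed.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def events_required(hazard_ratio, alpha=0.05, power=0.80):
    """Schoenfeld approximation: events needed for a one sided log-rank
    test with 1:1 allocation to detect the given hazard ratio."""
    z_a, z_b = Z.inv_cdf(1 - alpha), Z.inv_cdf(power)
    return math.ceil(4 * (z_a + z_b) ** 2 / math.log(hazard_ratio) ** 2)


def power_at(true_hazard_ratio, events, alpha=0.05):
    """Approximate power of the same test when the true effect differs."""
    z_a = Z.inv_cdf(1 - alpha)
    return Z.cdf(math.sqrt(events) * abs(math.log(true_hazard_ratio)) / 2 - z_a)


planned = events_required(1.5)  # trial designed around a hazard ratio of 1.5
print(planned, round(power_at(1.5, planned), 2), round(power_at(1.2, planned), 2))
```

Under these assumptions, about 150 events give roughly 80% power if the true hazard ratio is 1.5, but only about 30% if it is 1.2.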

We do not suggest that the proportions or effect sizes reported here can be used in isolation to estimate prior probabilities for individual trials. Other information about the study intervention, including biological plausibility and results of preliminary research, can indicate whether and how expectations for particular trials should differ from population norms. Nevertheless, such average probabilities can serve both as starting points for determining expectations about individual trials and to gauge the degree of uncertainty inherent in the system of trials as a whole.
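As a starting point of the kind described above, the cohort's proportions can be treated as empirical base rates with an interval reflecting sampling uncertainty. This sketch uses a Wilson score interval as an illustrative choice; the paper does not report intervals for these proportions, and treating them as base rates assumes the cohort resembles the trials to which they are applied.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# Counts from table 2 (all comparisons, n=103 randomisations).
for label, k in [("favoured experimental", 30), ("favoured standard", 3)]:
    lo, hi = wilson_interval(k, 103)
    print(f"{label}: {k}/103 = {k / 103:.0%} (95% CI {lo:.0%} to {hi:.0%})")
```

For 30 of 103 randomisations favouring experimental treatment, this gives roughly 21% to 39%.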

Prior probabilities in other contexts, including paediatric oncology, research sponsored by industry, or non-cancer trials, probably differ from those we observed. Factors such as the inherent responsiveness of the conditions under study, sponsors' financial incentives, regulatory mandates (for example, about placebo controls), and customs regarding confirmatory trials undoubtedly affect the outcomes of trials. Such variation suggests that the uncertainty principle has ethical implications for trial systems as well as for individual studies and therefore should influence decision models about which therapies to advance to randomised trials. Determining the optimum distribution of prior probabilities across a system of trials is complex, involving the need to offer current patients the most effective therapies available, the obligation to avoid harming some participants by giving them experimental therapy that proves less effective than existing standards, and the mandate for efficient clinical progress. Ultimately, defining the limits of acceptable uncertainty will require that we address substantive questions about the relationships between individual patients and communities as mediated through the institution of clinical trials.

What is already known on this topic

The uncertainty principle is often cited as the ethical basis for randomised trials

In oncology, studies that evaluate whether this principle is satisfied give conflicting results and are subject to publication bias

What this study adds

In a cohort of publicly funded adult cancer trials that included unpublished studies, fewer than one third favoured the experimental treatment

On average, experimental treatments were associated with about a 20% improvement in disease control

The heterogeneity of results and small average effects suggest that as a group these trials satisfy the uncertainty principle

Conclusions

To summarise, we observed measurable average improvements in disease control associated with the receipt of experimental rather than standard therapy in adult cooperative group cancer trials. Nevertheless, the small magnitude of the advantage and the heterogeneity of results suggest that as a group these trials satisfy the uncertainty principle. Our results may encourage recognition that prior beliefs about the relative merits of the treatments being compared are tenuous at best. By highlighting this uncertainty, we hope patients and physicians will be more willing to help to advance cancer therapy through participation in controlled trials.

We thank the Cancer and Leukemia Group B and the Eastern Cooperative Oncology Group for allowing access to primary study documents.

Contributors: SJ, DPH, SLG, EJE, and JCW designed the study. SJ and LAB abstracted study data. SJ, DPH, and JCW were responsible for the analysis. SJ wrote the study report, which was reviewed by all authors. All authors read and approved the final draft. SJ is guarantor.

Funding: Greenwall Foundation and the US National Cancer Institute (CA96872). The Eastern Cooperative Oncology Group (CA23318) and the Cancer and Leukemia Group B (CA33601) receive funding from the National Cancer Institute.

Competing interests: DPH is the former group statistician of the Eastern Cooperative Oncology Group. SLG is the current group statistician and director of the statistical centre for the Cancer and Leukemia Group B.

Ethical approval: Not required.

References

1. Hellman S, Hellman DS. Of mice but not men. Problems of the randomized clinical trial. N Engl J Med 1991;324:1585-9.
2. Fried C. Medical experimentation: personal integrity and social policy. New York: American Elsevier, 1974.
3. Lilford RJ. Ethics of clinical trials from a bayesian and decision analytic perspective: whose equipoise is it anyway? BMJ 2003;326:980-1.
4. Freedman B. Equipoise and the ethics of clinical research. N Engl J Med 1987;317:141-5.
5. Peto R, Baigent C. Trials: the next 50 years. Large scale randomised evidence of moderate benefits. BMJ 1998;317:1170-1.
6. Enkin MW. Against: clinical equipoise and not the uncertainty principle is the moral underpinning of the randomised controlled trial. BMJ 2000;321:757-8.
7. Miller FG, Brody H. A critique of clinical equipoise. Therapeutic misconception in the ethics of clinical trials. Hastings Cent Rep 2003;33:19-28.
8. Edwards SJ, Lilford RJ, Braunholtz DA, Jackson JC, Hewison J, Thornton J. Ethical issues in the design and conduct of randomised controlled trials. Health Technol Assess 1998;2:i-vi,1-132.
9. Marquis D. Leaving therapy to chance. Hastings Cent Rep 1983;13:40-7.
10. Djulbegovic B, Lacevic M, Cantor A, Fields KK, Bennett CL, Adams JR, et al. The uncertainty principle and industry-sponsored research. Lancet 2000;356:635-8.
11. Lau J, Antman EM, Jimenez-Silva J, Kupelnick B, Mosteller F, Chalmers TC. Cumulative meta-analysis of therapeutic trials for myocardial infarction. N Engl J Med 1992;327:248-54.
12. Alderson P. Equipoise as a means of managing uncertainty: personal, communal and proxy. J Med Ethics 1996;22:135-9.
13. Taylor KM, Margolese RG, Soskolne CL. Physicians' reasons for not entering eligible patients in a randomized clinical trial of surgery for breast cancer. N Engl J Med 1984;310:1363-7.
14. Hunter CP, Frelick RW, Feldman AR, Bavier AR, Dunlap WH, Ford L, et al. Selection factors in clinical trials: results from the community clinical oncology program physician's patient log. Cancer Treat Rep 1987;71:559-65.
15. Gotay CC. Accrual to cancer clinical trials: directions from the research literature. Soc Sci Med 1991;33:569-77.
16. Ubel PA, Merz JF, Shea J, Asch DA. How preliminary data affect people's stated willingness to enter a hypothetical randomized controlled trial. J Investig Med 1997;45:561-6.
17. Jenkins V, Fallowfield L. Reasons for accepting or declining to participate in randomized clinical trials for cancer therapy. Br J Cancer 2000;82:1783-8.
18. Chalmers I. What is the prior probability of a proposed new treatment being superior to established treatments? BMJ 1997;314:74-5.
19. Gilbert JP, McPeek B, Mosteller F. Statistics and ethics in surgery and anesthesia. Science 1977;198:684-9.
20. Machin D, Stenning SP, Parmar MK, Fayers PM, Girling DJ, Stephens RJ, et al. Thirty years of Medical Research Council randomized trials in solid tumours. Clin Oncol 1997;9:100-14.
21. Als-Nielsen B, Chen W, Gluud C, Kjaergard LL. Association of funding and conclusions in randomized drug trials: a reflection of treatment effect or adverse events? JAMA 2003;290:921-8.
22. Lee SJ, Zelen M. Clinical trials and sample size considerations: another perspective. Stat Sci 2000;15:95-110.
23. Chlebowski RT, Lillington LM. A decade of breast cancer clinical investigation: results as reported in the program/proceedings of the American Society of Clinical Oncology. J Clin Oncol 1994;12:1789-95.
24. Welch HG, Mogielnicki J. Presumed benefit: lessons from the American experience with marrow transplantation for breast cancer. BMJ 2002;324:1088-92.