Abstract

In the sparse linear regression setting, we consider testing the significance of the predictor variable that enters the current lasso model, in the sequence of models visited along the lasso solution path. We propose a simple test statistic based on lasso fitted values, called the covariance test statistic, and show that when the true model is linear, this statistic has an Exp(1) asymptotic distribution under the null hypothesis (the null being that all truly active variables are contained in the current lasso model). Our proof of this result for the special case of the first predictor to enter the model (i.e., testing for a single significant predictor variable against the global null) requires only weak assumptions on the predictor matrix X. On the other hand, our proof for a general step in the lasso path places further technical assumptions on X and the generative model, but still allows for the important high-dimensional case p > n, and does not necessarily require that the current lasso model achieves perfect recovery of the truly active variables.

Of course, for testing the significance of an additional variable between two nested linear models, one typically uses the chi-squared test, comparing the drop in residual sum of squares (RSS) to a distribution. But when this additional variable is not fixed, and has been chosen adaptively or greedily, this test is no longer appropriate: adaptivity makes the drop in RSS stochastically much larger than under the null hypothesis. Our analysis explicitly accounts for adaptivity, as it must, since the lasso builds an adaptive sequence of linear models as the tuning parameter λ decreases. In this analysis, shrinkage plays a key role: though additional variables are chosen adaptively, the coefficients of lasso active variables are shrunken due to the penalty. Therefore, the test statistic (which is based on lasso fitted values) is in a sense balanced by these two opposing properties—adaptivity and shrinkage—and its null distribution is tractable and asymptotically Exp(1).

Key words and phrases: Lasso, least angle regression, p-value, significance test

1. Introduction

We consider the usual linear regression setup, for an outcome vector and matrix of predictor variables :

| (1) |

where are unknown coefficients to be estimated. [If an intercept term is desired, then we can still assume a model of the form (1) after centering y and the columns of X; see Section 2.2 for more details.] We focus on the lasso estimator [Tibshirani (1996), Chen, Donoho and Saunders (1998)], defined as

| (2) |

where λ ≥ 0 is a tuning parameter, controlling the level of sparsity in . Here, we assume that the columns of X are in general position in order to ensure uniqueness of the lasso solution [this is quite a weak condition, to be discussed again shortly; see also Tibshirani (2013)].

There has been a considerable amount of recent work dedicated to the lasso problem, both in terms of computation and theory. A comprehensive summary of the literature in either category would be too long for our purposes here, so we instead give a short summary: for computational work, some relevant contributions are Friedman et al. (2007), Beck and Teboulle (2009), Friedman, Hastie and Tibshirani (2010), Becker, Bobin and Candès (2011), Boyd et al. (2011), Becker, Candès and Grant (2011); and for theoretical work see, for example, Greenshtein and Ritov (2004), Fuchs (2005), Donoho (2006), Candes and Tao (2006), Zhao and Yu (2006), Wainwright (2009), Candès and Plan (2009). Generally speaking, theory for the lasso is focused on bounding the estimation error or , or ensuring exact recovery of the underlying model, [with supp(·) denoting the support function]; favorable results in both respects can be shown under the right assumptions on the generative model (1) and the predictor matrix X. Strong theoretical backing, as well as fast algorithms, have made the lasso a highly popular tool.

Yet, there are still major gaps in our understanding of the lasso as an estimation procedure. In many real applications of the lasso, a practitioner will undoubtedly seek some sort of inferential guarantees for his or her computed lasso model—but, generically, the usual constructs like p-values, confidence intervals, etc., do not exist for lasso estimates. There is a small but growing literature dedicated to inference for the lasso, and important progress has certainly been made, with many methods being based on resampling or data splitting; we review this work in Section 2.5. The current paper focuses on a significance test for lasso models that does not employ resampling or data splitting, but instead uses the full data set as given, and proposes a test statistic that has a simple and exact asymptotic null distribution.

Section 2 defines the problem that we are trying to solve, and gives the details of our proposal—the covariance test statistic. Section 3 considers an orthogonal predictor matrix X, in which case the statistic greatly simplifies. Here, we derive its Exp(1) asymptotic distribution using relatively simple arguments from extreme value theory. Section 4 treats a general (nonorthogonal) X, and under some regularity conditions, derives an Exp(1) limiting distribution for the covariance test statistic, but through a different method of proof that relies on discrete-time Gaussian processes. Section 5 empirically verifies convergence of the null distribution to Exp(1) over a variety of problem setups. Up until this point, we have assumed that the error variance σ2 is known; in Section 6, we discuss the case of unknown σ2. Section 7 gives some real data examples. Section 8 covers extensions to the elastic net, generalized linear models, and the Cox model for survival data. We conclude with a discussion in Section 9.

2. Significance testing in linear modeling

Classic theory for significance testing in linear regression operates on two fixed nested models. For example, if M and M ∪ {j} are fixed subsets of {1,…, p}, then to test the significance of the jth predictor in the model (with variables in) M ∪ {j}, one naturally uses the chi-squared test, which computes the drop in residual sum of squares (RSS) from regression on M ∪ {j} and M,

| (3) |

and compares this to a distribution. (Here, σ2 is assumed to be known; when σ2 is unknown, we use the sample variance in its place, which results in the F-test, equivalent to the t-test, for testing the significance of variable j.)

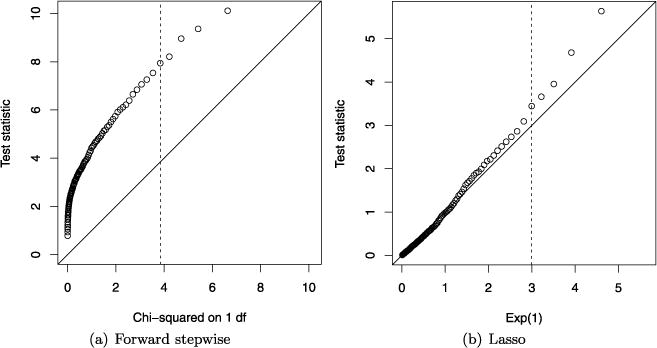

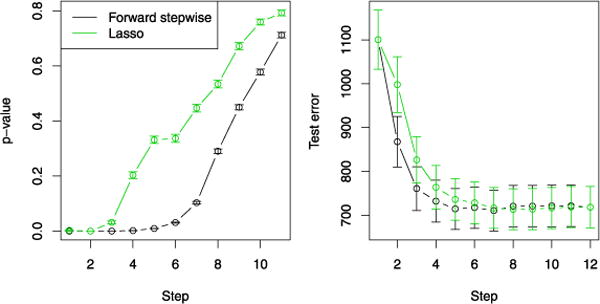

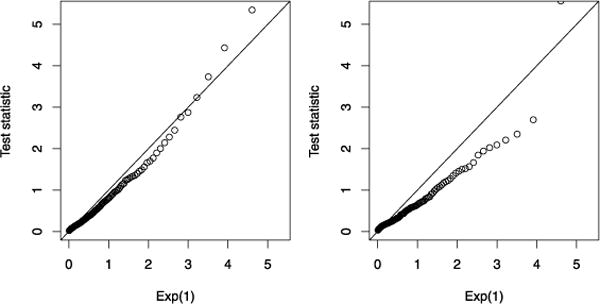

Often, however, one would like to run the same test for M and M ∪ {j} that are not fixed, but the outputs of an adaptive or greedy procedure. Unfortunately, adaptivity invalidates the use of a null distribution for the statistic (3). As a simple example, consider forward stepwise regression: starting with an empty model M = ∅, we enter predictors one at a time, at each step choosing the predictor j that gives the largest drop in residual sum of squares. In other words, forward stepwise regression chooses j at each step in order to maximize Rj in (3), over all j ∉ M. Since Rj follows a distribution under the null hypothesis for each fixed j, the maximum possible Rj will clearly be stochastically larger than under the null. Therefore, using a chi-squared test to evaluate the significance of a predictor entered by forward stepwise regression would be far too liberal (having type I error much larger than the nominal level). Figure 1(a) demonstrates this point by displaying the quantiles of R1 in forward stepwise regression (the chisquared statistic for the first predictor to enter) versus those of a variate, in the fully null case (when β* = 0). A test at the 5% level, for example, using the cutoff of 3.84, would have an actual type I error of about 39%.

FIG. 1.

A simple example with n = 100 observations and p = 10 orthogonal predictors. All true regression coefficients are zero, β* = 0. On the left is a quantile–quantile plot, constructed over 1000 simulations, of the standard chi-squared statistic R1 in (3), measuring the drop in residual sum of squares for the first predictor to enter in forward stepwise regression, versus the distribution. The dashed vertical line marks the 95% quantile of the distribution. The right panel shows a quantile–quantile plot of the covariance test statistic T1 in (5) for the first predictor to enter in the lasso path, versus its asymptotic null distribution Exp(1). The covariance test explicitly accounts for the adaptive nature of lasso modeling, whereas the usual chi-squared test is not appropriate for adaptively selected models, for example, those produced by forward stepwise regression.

The failure of standard testing methodology when applied to forward stepwise regression is not an anomaly—in general, there seems to be no direct way to carry out the significance tests designed for fixed linear models in an adaptive setting.6 Our aim is hence to provide a (new) significance test for the predictor variables chosen adaptively by the lasso, which we describe next.

2.1. The covariance test statistic

The test statistic that we propose here is constructed from the lasso solution path, that is, the solution in (2) a function of the tuning parameter λ ∈ [0, ∞). The lasso path can be computed by the well-known LARS algorithm of Efron et al. (2004) [see also Osborne, Presnell and Turlach (2000a, 2000b)], which traces out the solution as λ decreases from ∞ to 0. Note that when rank(X) < p there are possibly many lasso solutions at each λ and, therefore, possibly many solution paths; we assume that the columns of X are in general position,7 implying that there is a unique lasso solution at each λ > 0, and hence a unique path. The assumption that X has columns in general position is a very weak one [much weaker, e.g., than assuming that rank(X) = p]. For example, if the entries of X are drawn from a continuous probability distribution on , then the columns of X are almost surely in general position, and this is true regardless of the sizes of n and p; see Tibshirani (2013).

Before defining our statistic, we briefly review some properties of the lasso path.

The path is a continuous and piecewise linear function of λ, with knots (changes in slope) at values (these knots depend on y, X).

At λ = ∞, the solution has no active variables (i.e., all variables have zero coefficients); for decreasing λ, each knot λk marks the entry or removal of some variable from the current active set (i.e., its coefficient becomes nonzero or zero, resp.). Therefore, the active set, and also the signs of active coefficients, remain constant in between knots.

At any point λ in the path, the corresponding active set of the lasso solution indexes a linearly independent set of predictor variables, that is, rank(XA) = |A|, where we use XA to denote the columns of X in A.

For a general X, the number of knots in the lasso path is bounded by 3p (but in practice this bound is usually very loose). This bound comes from the following realization: if at some knot λk, the active set is and the signs of active coefficients are , then the active set and signs cannot again be A and sA at some other knot λℓ ≠ λk. This in particular means that once a variable enters the active set, it cannot immediately leave the active set at the next step.

For a matrix X satisfying the positive cone condition (a restrictive condition that covers, e.g., orthogonal matrices), there are no variables removed from the active set as λ decreases and, therefore, the number of knots is p.

We can now precisely define the problem that we are trying to solve: at a given step in the lasso path (i.e., at a given knot), we consider testing the significance of the variable that enters the active set. To this end, we propose a test statistic defined at the kth step of the path.

First, we define some needed quantities. Let A be the active set just before λk, and suppose that predictor j enters at λk. Denote by the solution at the next knot in the path λk+1, using predictors A ∪ {j}. Finally, let be the solution of the lasso problem using only the active predictors XA, at λ = λk+1. To be perfectly explicit,

| (4) |

We propose the covariance test statistic defined by

| (5) |

Intuitively, the covariance statistic in (5) is a function of the difference between and , the fitted values given by incorporating the jth predictor into the current active set, and leaving it out, respectively. These fitted values are parameterized by λ, and so one may ask: at which value of λ should this difference be evaluated? Well, note first that , that is, the solution of the reduced problem at λk is simply that of the full problem, restricted to the active set A (as verified by the KKT conditions). Clearly then, this means that we cannot evaluate the difference at λ = λk, as the jth variable has a zero coefficient upon entry at λk, and hence

Indeed, the natural choice for the tuning parameter in (5) is λ = λk+1: this allows the jth coefficient to have its fullest effect on the fit before the entry of the next variable at λk+1 (or possibly, the deletion of a variable from A at λk+1).

Secondly, one may also ask about the particular choice of function of . The covariance statistic in (5) uses an inner product of this difference with y, which can be roughly thought of as an (uncentered) covariance, hence explaining its name.8 At a high level, the larger the covariance of y with compared to that with , the more important the role of variable j in the proposed model A ∪ {j}. There certainly may be other functions that would seem appropriate here, but the covariance form in (5) has a distinctive advantage: this statistic admits a simple and exact asymptotic null distribution. In Sections 3 and 4, we show that under the null hypothesis that the current lasso model contains all truly active variables, A ⊇ supp(β*),

that is, Tk is asymptotically distributed as a standard exponential random variable, given reasonable assumptions on X and the magnitudes of the nonzero true coefficients. [In some cases, e.g., when we have a strict inclusion , the use of an Exp(1) null distribution is actually conservative, because the limiting distribution of Tk is stochastically smaller than Exp(1).] In the above limit, we are considering both n, p → ∞; in Section 4, we allow for the possibility p > n, the high-dimensional case.

See Figure 1(b) for a quantile–quantile plot of T1 versus an Exp(1) variate for the same fully null example (β* = 0) used in Figure 1(a); this shows that the weak convergence to Exp(1) can be quite fast, as the quantiles are decently matched even for p = 10. Before proving this limiting distribution in Sections 3 (for an orthogonal X) and 4 (for a general X), we give an example of its application to real data, and discuss issues related to practical usage. We also derive useful alternative expressions for the statistic, present a connection to degrees of freedom, review related work, and finally, discuss the null hypothesis in more detail.

2.2. Prostate cancer data example and practical issues

We consider a training set of 67 observations and 8 predictors, the goal being to predict log of the PSA level of men who had surgery for prostate cancer. For more details, see Hastie, Tibshirani and Friedman (2008) and the references therein. Table 1 shows the results of forward stepwise regression and the lasso. Both methods entered the same predictors in the same order. The forward stepwise p-values are smaller than the lasso p-values, and would enter four predictors at level 0.05. The latter would enter only one or maybe two predictors. However, we know that the forward stepwise p-values are inaccurate, as they are based on a null distribution that does not account for the adaptive choice of predictors. We now make several remarks.

Table 1.

Forward stepwise and lasso applied to the prostate cancer data example. The error variance is estimated by , the MSE of the full model. Forward stepwise regression p-values are based on comparing the drop in residual sum of squares (divided by ) to an F(1, n − p) distribution (using instead produced slightly smaller p-values). The lasso p-values use a simple modification of the covariance test (5) for unknown variance, given in Section 6. All p-values are rounded to 3 decimal places

| Step | Predictor entered | Forward stepwise | Lasso |

|---|---|---|---|

| 1 | lcavol | 0.000 | 0.000 |

| 2 | lweight | 0.000 | 0.052 |

| 3 | svi | 0.041 | 0.174 |

| 4 | lbph | 0.045 | 0.929 |

| 5 | pgg45 | 0.226 | 0.353 |

| 6 | age | 0.191 | 0.650 |

| 7 | lcp | 0.065 | 0.051 |

| 8 | gleason | 0.883 | 0.978 |

Remark 1

The above example implicitly assumed that one might stop entering variables into the model when the computed p-value rose above some threshold. More generally, our proposed test statistic and associated p-values could be used as the basis for multiple testing and false discovery rate control methods for this problem; we leave this to future work.

Remark 2

In the example, the lasso entered a predictor into the active set at each step. For a general X, however, a given predictor variable may enter the active set more than once along the lasso path, since it may leave the active set at some point. In this case, we treat each entry as a separate problem. Our test is specific to a step in the path, and not to a predictor variable at large.

Remark 3

For the prostate cancer data set, it is important to include an intercept in the model. To accommodate this, we ran the lasso on centered y and column-centered X (which is equivalent to including an unpenalized intercept term in the lasso criterion), and then applied the covariance test (with the centered data). In general, centering y and the columns of X allows us to account for the effect of an intercept term, and still use a model of the form (1). From a theoretical perspective, this centering step creates a weak dependence between the components of the error vector ε ∈ ℝn. If originally we assumed i.i.d. errors, εi ~ N(0, σ2), then after centering y and the columns of X, our new errors are of the form , where . It is easy see that these new errors are correlated:

One might imagine that such correlation would cause problems for our theory in Sections 3 and 4, which assumes i.i.d. normal errors in the model (1). However, a careful look at the arguments in these sections reveals that the only dependence on y is through XTy, the inner products of y with the columns of X. Furthermore,

which is the same as it would have been without centering (here 11T is the matrix of all 1s, and we used that the columns of X are centered). Therefore, our arguments in Sections 3 and 4 apply equally well to centered data, and centering has no effect on the asymptotic distribution of Tk.

Remark 4

By design, the covariance test is applied in a sequential manner, estimating p-values for each predictor variable as it enters the model along the lasso path. A more difficult problem is to test the significance of any of the active predictors in a model fit by the lasso, at some arbitrary value of the tuning parameter λ. We discuss this problem briefly in Section 9.

2.3. Alternate expressions for the covariance statistic

Here, we derive two alternate forms for the covariance statistic in (5). The first lends some insight into the role of shrinkage, and the second is helpful for the convergence results that we establish in Sections 3 and 4. We rely on some basic properties of lasso solutions; see, for example, Tibshirani and Taylor (2012), Tibshirani (2013). To remind the reader, we are assuming that X has columns in general position.

For any fixed λ, if the lasso solution has active set and signs , then it can be written explicitly (over active variables) as

In the above expression, the first term simply gives the regression coefficients of y on the active variables XA, and the second term can be thought of as a shrinkage term, shrinking the values of these coefficients toward zero. Further, the lasso fitted value at λ is

| (6) |

where denotes the projection onto the column space of XA, and is the (Moore–Penrose) pseudoinverse of .

Using the representation (6) for the fitted values, we can derive our first alternate expression for the covariance statistic in (5). If A and sA are the active set and signs just before the knot λk, and j is the variable added to the active set at λk, with sign s upon entry, then by (6),

where . We can equivalently write , the concatenation of sA and the sign s of the jth coefficient when it entered (as no sign changes could have occurred inside of the interval [λk, λk+1], by definition of the knots). Let us assume for the moment that the solution of reduced lasso problem (4) at λk+1 has all variables active and —remember, this holds for the reduced problem at λk, and we will return to this assumption shortly. Then, again by (6),

and plugging the above two expressions into (5),

| (7) |

Note that the first term above is , which is exactly the chi-squared statistic for testing the significance of variable j, as in (3). Hence, if A, j were fixed, then without the second term, Tk would have a distribution under the null. But of course A, j are not fixed, and so much like we saw previously with forward stepwise regression, the first term in (7) will be generically larger than , because j is chosen adaptively based on its inner product with the current lasso residual vector. Interestingly, the second term in (7) adjusts for this adaptivity: with this term, which is composed of the shrinkage factors in the solutions of the two relevant lasso problems (on X and XA), we prove in the coming sections that Tk has an asymptotic Exp(1) null distribution. Therefore, the presence of the second term restores the (asymptotic) mean of Tk to 1, which is what it would have been if A, j were fixed and the second term were missing. In short, adaptivity and shrinkage balance each other out.

This insight aside, the form (7) of the covariance statistic leads to a second representation that will be useful for the theoretical work in Sections 3 and 4. We call this the knot form of the covariance statistic, described in the next lemma.

Lemma 1

Let A be the active set just before the kth step in the lasso path, that is, , with λk being the kth knot. Also, let sA denote the signs of the active coefficients, , j be the predictor that enters the active set at λk, and s be its sign upon entry. Then, assuming that

| (8) |

or in other words, all coefficients are active in the reduced lasso problem (4) at λk+1 and have signs sA, we have

| (9) |

where

and sA∪{j} is the concatenation of sA and s.

The proof starts with expression (7), and arrives at (9) through simple algebraic manipulations. We defer it until Appendix A.1.

When does the condition (8) hold? This was a key assumption behind both of the forms (7) and (9) for the statistic. We first note that the solution of the reduced lasso problem has signs sA at λk, so it will have the same signs sA at λk+1 provided that no variables are deleted from the active set in the solution path for λ ∈ [λk+1, λk]. Therefore, assumption (8) holds:

When X satisfies the positive cone condition (which includes X orthogonal), because no variables ever leave the active set in this case. In fact, for X orthogonal, it is straightforward to check that C(A, sA, j, s) = 1, so Tk = λk(λk − λk+1)/σ2.

When k = 1 (we are testing the first variable to enter), as a variable cannot leave the active set right after it has entered. If k = 1 and X has unit normed columns, ‖Xi‖2 = 1 for i = 1,…, p, then we again have C(A, sA, j, s) = 1 (note that A = ∅), so T1 = λ1(λ1 − λ2)/σ2.

When sA = sign((XA)+y), that is, sA contains the signs of the least squares coefficients on XA, because the same active set and signs cannot appear at two different knots in the lasso path (applied here to the reduced lasso problem on XA).

The first and second scenarios are considered in Sections 3 and 4.1, respectively. The third scenario is actually somewhat general and occurs, for example, when ; in this case, both the lasso and least squares on XA recover the signs of the true coefficients. Section 4.2 studies the general X and k ≥ 1 case, wherein this third scenario is important.

2.4. Connection to degrees of freedom

There is an interesting connection between the covariance statistic in (5) and the degrees of freedom of a fitting procedure. In the regression setting (1), for an estimate ŷ [which we think of as a fitting procedure ŷ = ŷ(y)], its degrees of freedom is typically defined [Efron (1986)] as

| (10) |

In words, df(ŷ) sums the covariances of each observation yi with its fitted value ŷi. Hence, the more adaptive a fitting procedure, the higher this covariance, and the greater its degrees of freedom. The covariance test evaluates the significance of adding the jth predictor via something loosely like a sample version of degrees of freedom, across two models: that fit on A ∪ {j}, and that on A. This was more or less the inspiration for the current work.

Using the definition (10), one can reason [and confirm by simulation, just as in Figure 1(a)] that with k predictors entered into the model, forward stepwise regression had used substantially more than k degrees of freedom. But something quite remarkable happens when we consider the lasso: for a model containing k nonzero coefficients, the degrees of freedom of the lasso fit is equal to k (either exactly or in expectation, depending on the assumptions) [Efron et al. (2004), Zou, Hastie and Tibshirani (2007), Tibshirani and Taylor (2012)]. Why does this happen? Roughly speaking, it is the same adaptivity versus shrinkage phenomenon at play. [Recall our discussion in the last section following the expression (7) for the covariance statistic.] The lasso adaptively chooses the active predictors, which costs extra degrees of freedom; but it also shrinks the nonzero coefficients (relative to the usual least squares estimates), which decreases the degrees of freedom just the right amount, so that the total is simply k.

2.5. Related work

There is quite a lot of recent work related to the proposal of this paper. Wasserman and Roeder (2009) propose a procedure for variable selection and p-value estimation in high-dimensional linear models based on sample splitting, and this idea was extended by Meinshausen, Meier and Bühlmann (2009). Meinshausen and Bühlmann (2010) propose a generic method using resampling called “stability selection,” which controls the expected number of false positive variable selections. Minnier, Tian and Cai (2011) use perturbation resampling-based procedures to approximate the distribution of a general class of penalized parameter estimates. One big difference with the work here: we propose a statistic that utilizes the data as given and does not employ any resampling or sample splitting.

Zhang and Zhang (2014) derive confidence intervals for contrasts of high-dimensional regression coefficients, by replacing the usual score vector with the residual from a relaxed projection (i.e., the residual from sparse linear regression). Bühlmann (2013) constructs p-values for coefficients in high-dimensional regression models, starting with ridge estimation and then employing a bias correction term that uses the lasso. Even more recently, van de Geer and Bühlmann (2013), Javanmard and Montanari (2013a, 2013b) all present approaches for debiasing the lasso estimate based on estimates of the inverse covariance matrix of the predictors. (The latter work focuses on the special case of a predictor matrix X with i.i.d. Gaussian rows; the first two consider a general matrix X.) These debiased lasso estimates are asymptotically normal, which allows one to compute p-values both marginally for an individual coefficient, and simultaneously for a group of coefficients. All of the work mentioned in the present paragraph provides a way to make inferential statements about preconceived predictor variables of interest (or preconceived groups of interest); this is in contrast to our work, which instead deals directly with variables that have been adaptively selected by the lasso procedure. We discuss this next.

2.6. What precisely is the null hypothesis?

The referees of a preliminary version of this manuscript expressed some confusion with regard to the null distribution considered by the covariance test. Given a fixed number of steps k ≥ 1 along the lasso path, the covariance test examines the set of variables A selected by the lasso before the kth step (i.e., A is the current active set not including the variable to be added at the kth step). In particular, the null distribution being tested is

| (11) |

where β* is the true underlying coefficient vector in the model (1). For k = 1, we have A = ∅ (no variables are selected before the first step), so this reduces to a test of the global null hypothesis: β* = 0. For k > 1, the set A is random (it depends on y), and hence the null hypothesis in (11) is itself a random event. This makes the covariance test a conditional hypothesis test beyond the first step in the path, as the null hypothesis that it considers is indeed a function of the observed data. Statements about its null distribution must therefore be made conditional on the event that A ⊇ supp(β*), which is precisely what is done in Sections 3.2 and 4.2.

Compare the null hypothesis in (11) to a null hypothesis of the form

| (12) |

where S ⊆ {1,…, p} is a fixed subset. The latter hypothesis, in (12), describes the setup considered by Zhang and Zhang (2014), Bühlmann (2013), van de Geer and Bühlmann (2013), Javanmard and Montanari (2013a, 2013b). At face value, the hypotheses (11) and (12) may appear similar [the test in (11) looks just like that in (12) with S = {1,…, p} \ A], but they are fundamentally very different. The difference is that the null hypothesis in (11) is random, whereas that in (12) is fixed; this makes the covariance test a conditional hypothesis test, while the tests constructed in all of the aforementioned work are traditional (unconditional) hypothesis tests. It should be made clear that the goal of our work and these works also differ. Our test examines an adaptive subset of variables A deemed interesting by the lasso procedure; for such a goal, it seems necessary to consider a random null hypothesis, as theory designed for tests of fixed hypotheses would not be valid here.9 The main goal of Zhang and Zhang (2014), Bühlmann (2013), van de Geer and Bühlmann (2013), Javanmard and Montanari (2013a, 2013b), it appears, is to construct a new set of variables, say A, based on testing the hypotheses in (12) with S = {j} for j = 1,…, p. Though the construction of this new set à may have started from a lasso estimate, it need not be true that à matches the lasso active set A, and ultimately it is this new set à (and inferential statements concerning Ã) that these authors consider the point of interest.

3. An orthogonal predictor matrix X

We examine the special case of an orthogonal predictor matrix X, that is, one that satisfies XTX = I. Even though the results here can be seen as special cases of those for a general X in Section 4, the arguments in the current orthogonal X case rely on relatively straightforward extreme value theory and are hence much simpler than their general X counterparts (which analyze the knots in the lasso path via Gaussian process theory). Furthermore, the Exp(1) limiting distribution for the covariance statistic translates in the orthogonal case to a few interesting and previously unknown (as far as we can tell) results on the order statistics of independent standard χ1 variates. For these reasons, we discuss the orthogonal X case in detail.

As noted in the discussion following Lemma 1 (see the first point), for an orthogonal X, we know that the covariance statistic for testing the entry of the variable at step k in the lasso path is

Again using orthogonality, we rewrite for a constant C (not depending on β) in the criterion in (2), and then we can see that the lasso solution at any given value of λ has the closed-form:

where X1,…, Xp are columns of X, and Sλ : ℝ → ℝ is the soft-thresholding function,

Letting , j = 1,…, p, the knots in the lasso path are simply the values of λ at which the coefficients become nonzero (i.e., cease to be thresholded),

where |U(1)| ≥ |U(2)| ≥ … ≥ |U(p)| are the order statistics of |U1|,…,|Up| (somewhat of an abuse of notation). Therefore,

Next, we study the special case k = 1, the test for the first predictor to enter the active set along the lasso path. We then examine the case k ≥ 1, the test at a general step in the lasso path.

3.1. The first step, k = 1

Consider the covariance test statistic for the first predictor to enter the active set, that is, for k = 1,

We are interested in the distribution of T1 under the null hypothesis; since we are testing the first predictor to enter, this is

Under the null, U1,…, Up are i.i.d., Uj ~ N(0, σ2), and so |U1|/σ,…, |Up|/σ follow a χ1 distribution (absolute value of a standard Gaussian). That T1 has an asymptotic Exp(1) null distribution is now given by the next result.

Lemma 2

Let be the order statistics of an independent sample of χ1 variates (i.e., they are the sorted absolute values of an independent sample of standard Gaussian variates). Then

This lemma reveals a remarkably simple limiting distribution for the largest of independent χ1 random variables times the gap between the largest two; we skip its proof, as it is a special case of the following generalization.

Lemma 3

If are the order statistics of an independent sample of χ1 variates, then for any fixed k ≥ 1,

where the limiting distribution (on the right-hand side above) has independent components. To be perfectly clear, here and throughout we use Exp(α) to denote the exponential distribution with scale parameter α (not rate parameter α), so that if Z ~ Exp(α), then .

Proof

The χ1 distribution has CDF

where Φ is the standard normal CDF. We first compute

the last equality using Mills’ ratio. Theorem 2.2.1 in de Haan and Ferreira (2006) then implies that, for constants ap = F−1 (1 − 1/p) and bp = pF′(ap),

where E0 is a standard exponential variate, so − log E0 has the standard (or type I) extreme value distribution. Hence, according to Theorem 3 in Weissman (1978), for any fixed k ≥ 1, the random variables W0 = bp (Vk+1 − ap) and Wi = bp(Vi − Vi+1), i = 1,…, k, converge jointly:

where G0, E1,…, Ek are independent, G0 is Gamma distributed with scale parameter 1 and shape parameter k, and E1,…, Ek are standard exponentials. Now note that

We claim that ap/bp → 1; this would give the desired result as the second term converges to zero, using bp→∞. Writing ap, bp more explicitly, we see that 1 − 1/p = 2Φ(ap) − 1, that is, 1 − Φ(ap) = 1/(2p), and bp = 2pϕ(ap). Using Mills’ inequalities,

and multiplying by 2p,

Since ap → ∞, this means that bp/ap → 1, completing the proof. □

Practically, Lemma 3 tells us that under the global null hypothesis y ~ N(0, σ2), comparing the covariance statistic Tk at the kth step of the lasso path to an Exp(1) distribution is increasingly conservative [at the first step, T1 is asymptotically Exp(1), at the second step, T2 is asymptotically Exp(1/2), at the third step, T3 is asymptotically Exp(1/3), and so forth]. This progressive conservatism is favorable, if we place importance on parsimony in the fitted model: we are less and less likely to incur a false rejection of the null hypothesis as the size of the model grows. Moreover, we know that the test statistics T1, T2, … at successive steps are independent, and hence so are the corresponding p-values; from the point of view of multiple testing corrections, this is nearly an ideal scenario.

Of real interest is the distribution of Tk, k ≥ 1, not under the global null hypothesis, but rather, under the weaker null hypothesis that all variables excluded from the current lasso model are truly inactive (i.e., they have zero coefficients in the true model). We study this in next section.

3.2. A general step, k ≥ 1

We suppose that exactly k0 components of the true coefficient vector β* are nonzero, and consider testing the entry of the predictor at step k = k0 + 1. Let A* = supp(β*) denote the true active set (so k0 = |A*|), and let B denote the event that all truly active variables are added at steps 1, …, k0,

| (13) |

We show that under the null hypothesis (i.e., conditional on B), the test statistic is asymptotically Exp(1), and further, the test statistic at a future step k = k0 + d is asymptotically Exp(1/d).

The basic idea behind our argument is as follows: if we assume that the nonzero components of β* are large enough in magnitude, then it is not hard to show (relying on orthogonality, here) that the truly active predictors are added to the model along the first k0 steps of the lasso path, with probability tending to one. The test statistic at the (k0 + 1)st step and beyond would therefore depend on the order statistics of |Ui| for truly inactive variables i, subject to the constraint that the largest of these values is smaller than the smallest |Uj| for truly active variables j. But with our strong signal assumption, that is, that the nonzero entries of β* are large in absolute value, this constraint has essentially no effect, and we are back to studying the order statistics from a χ1 distribution, as in the last section. This is made precise below.

Theorem 1

Assume that X ∈ ℝn×p is orthogonal, and y ℝn is drawn from the normal regression model (1), where the true coefficient vector β* has k0 nonzero components. Let A* = supp(β*) be the true active set, and assume that the smallest nonzero true coefficient is large compared to ,

Let B denote the event in (13), namely, that the first k0 variables entering the model along the lasso path are those in A*. Then ℙ(B) → 1 as p → ∞, and for each fixed d ≥ 0, we have

The same convergence in distribution holds conditionally on B.

Proof

We first study ℙ(B). Let , and choose cp such that

Note that , independently for j = 1,…, p. For j ∈ A*,

so

At the same time,

Therefore, ℙ(B) → 1. This in fact means that ℙ(E|B) − ℙ(E) → 0 for any sequence of events E, so only the weak convergence of remains to be proved. For this, we let m = p − k0, and V1 ≥ V2 ≥ ⋯ ≥ Vm denote the order statistics of the sample |Uj|, j ∉ A* of independent χ1 variates. Then, on the event B, we have

As ℙ(B) → 1, we have in general

Hence, we are essentially back in the setting of the last section, and the desired convergence result follows from the same arguments as those for Lemma 3. □

4. A general predictor matrix X

In this section, we consider a general predictor matrix X, with columns in general position. Recall that our proposed covariance test statistic (5) is closely intertwined with the knots λ1 ≥ … ≥ λr in the lasso path, as it was defined in terms of difference between fitted values at successive knots. Moreover, Lemma 1 showed that (provided there are no sign changes in the reduced lasso problem over [λk+1, λk]) this test statistic can be expressed even more explicitly in terms of the values of these knots. As was the case in the last section, this knot form is quite important for our analysis here. Therefore, it is helpful to recall [Efron et al. (2004), Tibshirani (2013)] the precise formulae for the knots in the lasso path. If A denotes the active set and sA denotes the signs of active coefficients at a knot λk,

then the next knot λk+1 is given by

| (14) |

Where and are the values of λ at which, if we were to decrease the tuning parameter from λk and continue along the current (linear) trajectory for the lasso coefficients, a variable would join and leave the active set A, respectively. These values are10

| (15) |

where recall ; and

| (16) |

As we did in Section 3 with the orthogonal X case, we begin by studying the asymptotic distribution of the covariance statistic in the special case k = 1 (i.e., the first model along the path), wherein the expressions for the next knot (14), (15), (16) greatly simplify. Following this, we study the more difficult case k ≥ 1. For the sake of readability, we defer the proofs and most technical details until the Appendix.

4.1. The first step, k = 1

We assume here that X has unit normed columns: ‖Xi‖2 = 1, for i =1,…,p; we do this mostly for simplicity of presentation, and the generalization to a matrix X whose columns are not unit normed is given in the next section (though the exponential limit is now a conservative upper bound). As per our discussion following Lemma 1 (see the second point), we know that the first predictor to enter the active set along the lasso path cannot leave at the next step, so the constant sign condition (8) holds, and by Lemma 1 the covariance statistic for testing the entry of the first variable can be written as

(the leading factor C being equal to one since we assumed that X has unit normed columns). Now let , and R = XTX. With λ0 = ∞, we have A = ∅, and trivially, no variables can leave the active set. The first knot is hence given by (15), which can be expressed as

| (17) |

Letting j1, s1 be the first variable to enter and its sign (i.e., they achieve the maximum in the above expression), and recalling that j1 cannot leave the active set immediately after it has entered, the second knot is again given by (15), written as

The general position assumption on X implies that |Rj,j1| < 1, and so 1 − ss1Rj,j1 > 0, all j ≠ j1, s ∈ {−1,1}. It is easy to show then that the indicator inside the maximum above can be dropped, and hence

| (18) |

Our goal now is to calculate the asymptotic distribution of T1 = λ1(λ1 − λ2)/σ2, with λ1 and λ2 as above, under the null hypothesis; to be clear, since we are testing the significance of the first variable to enter along the lasso path, the null hypothesis is

| (19) |

The strategy that we use here for the general X case—which differs from our extreme value theory approach for the orthogonal X case—is to treat the quantities inside the maxima in expressions (17), (18) for λ1, λ2 as discrete-time Gaussian processes. First, we consider the zero mean Gaussian process

| (20) |

We can easily compute the covariance function of this process:

where the expectation is taken over the null distribution in (19). From (17), we know that the first knot is simply

In addition to (20), we consider the process

| (21) |

An important property: for fixed j1, s1, the entire process is independent of g(j1, s1). This can be seen by verifying that

and noting that g(j1, s1) and , all j ≠ j1, s ∈ {−1, 1}, are jointly

| (22) |

and from the above we know that for fixed j1, s1, M(j1, s1) is independent of g(j1, s1). If j1, s1 are instead treated as random variables that maximize g(j, s) (the argument maximizers being almost surely unique), then from (18) we see that the second knot is λ2 = M(j1, s1). Therefore, to study the distribution of T1 = λ1(λ1 − λ2)/σ2, we are interested in the random variable

on the event

It turns out that this event, which concerns the argument maximizers of g, can be rewritten as an event concerning only the relative values of g and M [see Taylor, Takemura and Adler (2005) for the analogous result for continuous-time processes].

Lemma 4

With g, M as defined in (20), (21), (22), we have

This is an important realization because the dual representation g(j1, s1) > M(j1, s1)} is more tractable, once we partition the space over the possible argument minimizers j1, s1, and use the fact that M(j1, s1) is independent of g(j1, s1) for fixed j1, s1. In this vein, we express the distribution of T1 = λ1(λ1 − λ2)/σ2 in terms of the sum

The terms in the above sum can be simplified: dropping for notational convenience the dependence on j1, s1, we have

where , which follows by simply solving for g in the quadratic equation g(g − M)/σ2 = t. Therefore,

| (23) |

where is the standard normal function (i.e., , for Φ the standard normal CDF), is the distribution of M(j1, s1), and we have used the fact that g(j1, s1) and M(j1, s1) are independent for fixed j1, s1, as well as M(j1, s1) > 0. Continuing from (23), we can write the difference between and the standard exponential tail, , as

| (24) |

where we used the fact that

We now examine the term inside the braces in (24), the difference between a ratio of normal survival functions and e−t; our next lemma shows that this term vanishes as m → ∞.

Lemma 5

For any t ≥ 0,

Hence, loosely speaking, if each M(j1, s1) → ∞ fast enough as p → ∞, then the right-hand side in (24) converges to zero, and T1 converges weakly to Exp(1). This is made precise below.

Lemma 6

Consider M(j1, s1) defined in (21), (22) over j1 = 1, …, p and s1 ∈ {−1,1}. If for any fixed m0 > 0

| (25) |

then the right-hand side in (24) converges to zero as p → ∞, and so ℙ(T1 > t) → e−t for all t ≥ 0.

The assumption in (25) is written in terms of random variables whose distributions are induced by the steps along the lasso path; to make our assumptions more transparent, we show that (25) is implied by a conditional variance bound involving the predictor matrix X alone, and arrive at the main result of this section.

Theorem 2

Assume that X ∈ ℝn×p has unit normed columns in general position, and let R = XTX. Assume also that there is some δ > 0 such that for each j = 1, …, p, there exists a subset of indices S ⊆ {1,…, p} \ {j} with

| (26) |

and the size of S growing faster than log p,

| (27) |

The under the null distribution in (19) [i.e., y is drawn from the regression model (1) with β* = 0], we have ℙ(T1 > t) → e−t as p → ∞ for all t ≥ 0.

Remark

Conditions (26) and (27) are sufficient to ensure (25), or in other words, that each M(j1, s1) grows as in ℙ(M(j1, s1) m0) = o(1/p), for any fixed m0. While it is true that will typically grow as p grows, some assumption is required so that M(j1, s1) concentrates around its mean faster than standard Gaussian concentration results (such as the Borell-TIS inequality) imply.

Generally speaking, the assumptions (26) and (27) are not very strong. Stated differently, (26) is a lower bound on the variance of , conditional on for all ℓ ∈ S \ {i}. Hence, for any j, we require the existence of a subset S not containing j such that the variables Ui, i ∈ S, are not too correlated, in the sense that the conditional variance of any one given all the others is bounded below. This subset S has to be larger in size than log p, as made clear in (27). Note that, in fact, it suffices to find a total of two disjoint subsets S1, S2 with the properties (26) and (27), because then for any j, either one or the other will not contain j.

An example of a matrix X that does not satisfy (26) and (27) is one with fixed rank as p grows. (This, of course, would also not satisfy the general position assumption.) In this case, we would not be able to find a subset of the variables , i = 1, …, p, that is both linearly independent and has size larger than r = rank(X), which violates the conditions. We note that in general, since |S| ≤ rank(X) ≤ n, and |S|/ log p → ∞, conditions (26) and (27) require that n/ log p → ∞.

4.2. A general step, k ≥ 1

In this section, we no longer assume that X has unit normed columns (in any case, this provides no simplification in deriving the null distribution of the test statistic at a general step in the lasso path). Our arguments here have more or less the same form as they did in the last section, but overall the calculations are more complicated.

Fix an integer k0 ≥ 0, subset A0 ⊆ {1, …, p} containing the true active set A0 ⊇ A* = supp(β*), and sign vector . Consider the event

| (28) |

We assume that ℙ(B) → 1 as p → ∞. In words, this is assuming that with probability approaching one: the lasso estimate at step k0 in the path has support A0 and signs ; the least squares estimate on A0 has the same signs as this lasso estimate; the knots at steps k0 + 1 and k0 + 2 correspond to joining events; and in particular, the maximization defining the joining event at step k0 + 1 can be taken to be unrestricted, that is, without the indicators constraining the individual arguments to be . Our goal is to characterize the asymptotic distribution of the covariance statistic Tk at the step k = k0 + 1, under the null hypothesis (i.e., conditional on the event B). We will comment on the stringency of the assumption that ℙ(B) → 1 following our main result in Theorem 3.

First note that on B, we have sA = sign((XA)+y), and as discussed in the third point following Lemma 1, this implies that the solution of the reduced problem (4) on XA cannot incur any sign changes over the interval [λk, λk+1]. Hence, we can apply Lemma 1 to write the covariance statistic on B as

where , A and sA are the active set and signs at step k − 1, and jk is the variable added to the active set at step k, with sign sk. Now, analogous to our definition in the last section, we define the discrete-time Gaussian process

| (29) |

For any fixed A, sA, the above process has mean zero provided that A ⊇ A*. Additionally, for any such fixed A, sA, we can compute its covariance function

| (30) |

Note that on the event B, the kth knot in the lasso path is

For fixed jk, sk, we also consider the process

| (31) |

(above, is the concatenation of sA and sk) and its achieved maximum value, subject to being less than the maximum of g(A,sA),

| (32) |

If jk, sk indeed maximize g(A,sA), that is, they correspond to the variable added to the active set at λk and its sign (note that these are almost surely unique), then on B, we have . To study the distribution of Tk on B, we are therefore interested in the random variable

on the event

| (33) |

Equivalently, we may write

Since ℙ(B) → 1, we have in general

| (34) |

where we have replaced all instances of A and sA on the right-hand side above with the fixed subset A0 and sign vector . This is a helpful simplification, because in what follows we may now take A = A0 and sA = as fixed, and consider the distribution of the random processes and . With A = A0 and sA = fixed, we drop the notational dependence on them and write these processes as g and M. We also write the scaling factor C(A0, , jk, sk) as C(jk, sk).

The setup in (34) looks very much like the one in the last section [and to draw an even sharper parallel, the scaling factor C(jk, sk) is actually equal to one over the variance of g(jk, sk), meaning that is standard normal for fixed jk, sk, a fact that we will use later in the proof of Lemma 8]. However, a major complication is that g(jk, sk) and M (jk, sk) are no longer independent for fixed jk, sk. Next, we derive a dual representation for the event (33) (analogous to Lemma 4 in the last section), introducing a triplet of random variables M+, M−, M0—it turns out that g is independent of this triplet, for fixed jk, sk.

Lemma 7

Let g be as defined in (29) (with A, sA fixed at A0, ). Let Σj,j′ denote the covariance function of g [short form for the expression in (30)].11 Define

| (35) |

| (36) |

| (37) |

Then the event E(jk, sk) in (33), that jk, sk maximize g, can be written as an intersection of events involving M+, M−, M0:

| (38) |

As a result of Lemma 7, continuing from (34), we can decompose the tail probability of Tk as

| (39) |

A key point here is that, for fixed jk, sk, the triplet M+(jk, sk), M−(jk, sk), M0(jk, sk) is independent of g(jk, sk), which is true because

and g(jk, sk), along with , for all j, s, form a jointly Gaussian collection of random variables. If we were to now replace M by M+ in the first line of (39), and define a modified statistic via its tail probability,

| (40) |

then arguments similar to those in the second half of Section 4.1 give a (conservative) exponential limit for .

Lemma 8

Consider g as defined in (29) (with A, sA fixed at A0, ), and M+, M−, M0 as defined in (35), (36), (37). Assume that for any fixed m0,

| (41) |

Then the modified statistic in (40) satisfies , for all t ≥ 0.

Of course, deriving the limiting distribution of was not the goal, and it remains to relate to . A fortuitous calculation shows that the two seemingly different quantities M+ and M—the former of which is defined as the maximum of particular functionals of g, and the latter concerned with the joining event at step k + 1—admit a very simple relationship: M+(jk, sk) ≤ M(jk, sk) for the maximizing jk, sk. We use this to bound the tail of Tk.

Lemma 9

Consider g, M as defined in (29), (31), (32) (with A, sA fixed at A0, ), and consider M+ as defined in (36). Then for any fixed jk, sk, on the event E(jk, sk) in (33), we have

Hence, if we assume as in Lemma 8 the condition (41), then limp→∞ ℙ(Tk > t) ≤ e−t for all t ≥ 0.

Though Lemma 9 establishes a (conservative) exponential limit for the covariance statistic Tk, it does so by enforcing assumption (41), which is phrased in terms of the tail distribution of a random process defined at the kth step in the lasso path. We translate this into an explicit condition on the covariance structure in (30), to make the stated assumptions for exponential convergence more concrete.

Theorem 3

Assume that X ∈ ℝn×p has columns in general position, and y ∈ ℝn is drawn from the normal regression model (1). Assume that for a fixed integer k0 ≥ 0, subset A0 ⊆ {1, …, p with A0 ⊇ A* = supp(β*) and sign vector ∈ {−1, 1}|A0|, the event B in} (28) satisfies ℙ(B) → 1 as p → ∞. Assume that there exists a constant 0 < η ≤ 1 such that

| (42) |

Define the matrix R by

Assume that the diagonal elements in R are all of the same order, that is, Rii/Rjj ≤ C for all i, j and some constant C > 0. Finally assume that, for each fixed j ∉ A0, there is a set S ⊆ {1, …, p} \ (A0 ∪ {j}) such that for all i ∈ S,

| (43) |

| (44) |

| (45) |

where δ > 0 is a constant (not depending on j), and the size of S grows faster than log p,

| (46) |

Then at step k = k0 + 1, we have limp→∞ ℙ(Tk > t) ≤ e−t for all t ≥ 0. The same result holds for the tail of Tk conditional on B.

Remark 5

If X has unit normed columns, then by taking k0 = 0 (and accordingly, A0 = ∅, ) in Theorem 3, we essentially recover the result of Theorem 2. To see this, note that with k0 = 0 (and A0, ), we have ℙ(B) = 1 for all finite p (recall the arguments given at the beginning of Section 4.1). Also, condition (42) trivially holds with η = 1 because A0 = ∅. Next, the matrix R defined in the theorem reduces to R = XTX, again because A0 = ∅; note that R has all diagonal elements equal to one, because X has unit normed columns. Hence, (43) is the same as condition (26) in Theorem 2. Finally, conditions (44) and (45) both reduce to |Rij| < 1, which always holds as X has columns in general position. Therefore, when k0 = 0, Theorem 3 imposes the same conditions as Theorem 2, and gives essentially the same result—we say “essentially” here is because the former gives a conservative exponential limit for T1, while the latter gives an exact exponential limit.

Remark 6

If X is orthogonal, then for any A0, conditions (42) and (43)–(46) are trivially satisfied [for the latter set of conditions, we can take, e.g., S = {1, …, p\(A0∪}{j})]. With an additional condition on the strength of the true nonzero coefficients, we can assure that ℙ(B) → 1 as p → ∞ with A0 = A*, = sign , and k0 = |A0|, and hence prove a conservative exponential limit for Tk; note that this is precisely what is done in Theorem 1 (except that in this case, the exponential limit is proven to be exact).

Remark 7

Defining for i ∉ A0, the condition (43) is a lower bound on the ratio of the conditional variance of Ui on Uℓ, ℓ ∉ S, to the unconditional variance of Ui. Loosely speaking, conditions (43), (44), and (45) can all be interpreted as requiring, for any j ∉ A0, the existence of a subset S not containing j (and disjoint from A0) such that the variables Ui, i ∈ S, are not very correlated. This subset has to be large in size compared to log p, by (46). An implicit consequence of (43)–(46), as argued in the remark following Theorem 2, is that n/log p → ∞.

Remark 8

Some readers will likely recognize condition (42) as that of mutual incoherence or strong irrepresentability, commonly used in the lasso literature on exact support recovery [see, e.g., Wainwright (2009), Zhao and Yu (2006)]. This condition, in addition to a lower bound on the magnitudes of the true coefficients, is sufficient for the lasso solution to recover the true active set A* with probability tending to one, at a carefully chosen value of λ. It is important to point out that we do not place any requirements on the magnitudes of the true nonzero coefficients; instead, we assume directly that the lasso converges (with probability approaching one) to some fixed model defined by A0, at the (k0)th step in the path. Here, A0 is large enough that it contains the true support, A0 ⊇ A*, and the signs are arbitrary—they may or may not match the signs of the true coefficients over A0. In a setting in which the nonzero coefficients in β* are well separated from zero, a condition quite similar to the irrepresentable condition can be used to show that the lasso converges to the model with support A0 = A* and signs , at step k0 = |A0| of the path. Our result extends beyond this case, and allows for situations in which the lasso model converges to a possibly larger set of “screened” variables A0, and fixed signs .

Remark 9

In fact, one can modify the above arguments to account for the case that A0 does not contain the entire set A* of truly nonzero coefficients, but rather, only the “strong” coefficients. While “strong” is rather vague, a more precise way of stating this is to assume that β* has nonzero coefficients both large and small in magnitude, and with A0 corresponding to the set of large coefficients, we assume that the (left-out) small coefficients must be small enough that the mean of the process g in (29) (with A = A0 and sA = ) grows much faster than M+. The details, though not the main ideas, of the arguments would change, and the result would still be a conservative exponential limit for the covariance statistic Tk at step k = k0 + 1. We may pursue this extension in future work.

5. Simulation of the null distribution

We investigate the null distribution of the covariance statistic through simulations, starting with an orthogonal predictor matrix X, and then considering more general forms of X.

5.1. Orthogonal predictor matrix

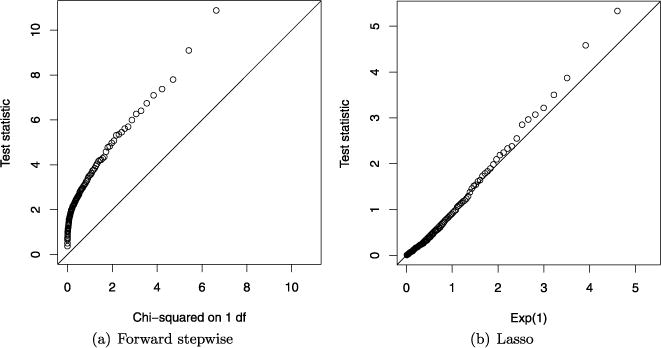

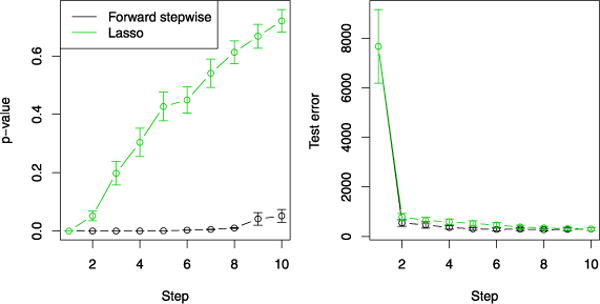

Similar to our example from the start of Section 2, we generated n = 100 observations with p = 10 orthogonal predictors. The true coefficient vector β* contained 3 nonzero components equal to 6, and the rest zero. The error variance was σ2 = 1, so that the truly active predictors had strong effects and always entered the model first, with both forward stepwise and the lasso. Figure 2 shows the results for testing the 4th (truly inactive) predictor to enter, averaged over 500 simulations; the left panel shows the chi-squared test (drop in RSS) applied at the 4th step in forward stepwise regression, and the right panel shows the covariance test applied at the 4th step of the lasso path. We see that the Exp(1) distribution provides a good finite-sample approximation for the distribution of the covariance statistic, while is a poor approximation for the drop in RSS.

FIG. 2.

An example with n = 100 and p = 10 orthogonal predictors, and the true coefficient vector having 3 nonzero, large components. Shown are quantile–quantile plots for the drop in RSS test applied to forward stepwise regression at the 4th step and the covariance test for the lasso path at the 4th step.

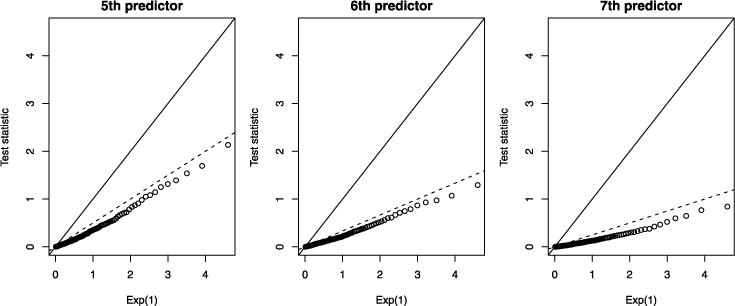

Figure 3 shows the results for testing the 5th, 6th and 7th predictors to enter the lasso model. An Exp(1)-based test will now be conservative: at a nominal 5% level, the actual type I errors are about 1%, 0.2% and 0.0%, respectively. The solid line has slope 1, and the broken lines have slopes 1/2, 1/3, 1/4, as predicted by Theorem 1.

FIG. 3.

The same setup as in Figure 2, but here we show the covariance test at the 5th, 6th and 7th steps along the lasso path, from left to right, respectively. The solid line has slope 1, while the broken lines have slopes 1/2, 1/3, 1/4, as predicted by Theorem 1.

5.2. General predictor matrix

In Table 2, we simulated null data (i.e., β* = 0), and examined the distribution of the covariance test statistic T1 for the first predictor to enter. We varied the numbers of predictors p, correlation parameter ρ, and structure of the predictor correlation matrix. In the first two correlation setups, the correlation between each pair of predictors was ρ, in the data and population, respectively. In the AR(1) setup, the correlation between predictors j and j′ is ρ|j−j′|. Finally, in the block diagonal setup, the correlation matrix has two equal-sized blocks, with population correlation ρ in each block. We computed the mean, variance and tail probability of the covariance statistic T1 over 500 simulated data sets for each setup. We see that the Exp(1) distribution is a reasonably good approximation throughout.

TABLE 2.

Simulation results for the first predictor to enter for a global null true model. We vary the number of predictors p, correlation parameter ρ and structure of the predictor correlation matrix. Shown are the mean, variance and tail probability of the covariance statistic T1, where q0.95 is the 95% quantile of the Exp(1) distribution, computed over 500 simulated data sets for each setup. Standard errors are given by “se.” (The panel in the bottom left corner is missing because the equal data correlation setup is not defined for p > n.)

| Equal data corr

|

Equal pop’n corr

|

AR(1)

|

Block diagonal

|

|||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ρ | Mean | Var | Tail pr | Mean | Var | Tail pr | Mean | Var | Tail pr | Mean | Var | Tail pr |

| n = 100, p = 10 | ||||||||||||

| 0 | 0.966 | 1.157 | 0.062 | 1.120 | 1.951 | 0.090 | 1.017 | 1.484 | 0.070 | 1.058 | 1.548 | 0.060 |

| 0.2 | 0.972 | 1.178 | 0.066 | 1.119 | 1.844 | 0.086 | 1.034 | 1.497 | 0.074 | 1.069 | 1.614 | 0.078 |

| 0.4 | 0.963 | 1.219 | 0.060 | 1.115 | 1.724 | 0.092 | 1.045 | 1.469 | 0.060 | 1.077 | 1.701 | 0.076 |

| 0.6 | 0.960 | 1.265 | 0.070 | 1.095 | 1.648 | 0.086 | 1.048 | 1.485 | 0.066 | 1.074 | 1.719 | 0.086 |

| 0.8 | 0.958 | 1.367 | 0.060 | 1.062 | 1.624 | 0.092 | 1.034 | 1.471 | 0.062 | 1.062 | 1.687 | 0.072 |

| se | 0.007 | 0.015 | 0.001 | 0.010 | 0.049 | 0.001 | 0.013 | 0.043 | 0.001 | 0.010 | 0.047 | 0.001 |

| n = 100, p = 50 | ||||||||||||

| 0 | 0.929 | 1.058 | 0.048 | 1.078 | 1.721 | 0.074 | 1.039 | 1.415 | 0.070 | 0.999 | 1.578 | 0.048 |

| 0.2 | 0.920 | 1.032 | 0.038 | 1.090 | 1.476 | 0.074 | 0.998 | 1.391 | 0.054 | 1.064 | 2.062 | 0.052 |

| 0.4 | 0.928 | 1.033 | 0.040 | 1.079 | 1.382 | 0.068 | 0.985 | 1.373 | 0.060 | 1.076 | 2.168 | 0.062 |

| 0.6 | 0.950 | 1.058 | 0.050 | 1.057 | 1.312 | 0.060 | 0.978 | 1.425 | 0.054 | 1.060 | 2.138 | 0.060 |

| 0.8 | 0.982 | 1.157 | 0.056 | 1.035 | 1.346 | 0.056 | 0.973 | 1.439 | 0.060 | 1.046 | 2.066 | 0.068 |

| se | 0.010 | 0.030 | 0.001 | 0.011 | 0.037 | 0.001 | 0.009 | 0.041 | 0.001 | 0.011 | 0.103 | 0.001 |

| n = 100, p = 200 | ||||||||||||

| 0 | 1.004 | 1.017 | 0.054 | 1.029 | 1.240 | 0.062 | 0.930 | 1.166 | 0.042 | |||

| 0.2 | 0.996 | 1.164 | 0.052 | 1.000 | 1.182 | 0.062 | 0.927 | 1.185 | 0.046 | |||

| 0.4 | 1.003 | 1.262 | 0.058 | 0.984 | 1.016 | 0.058 | 0.935 | 1.193 | 0.048 | |||

| 0.6 | 1.007 | 1.327 | 0.062 | 0.954 | 1.000 | 0.050 | 0.915 | 1.231 | 0.044 | |||

| 0.8 | 0.989 | 1.264 | 0.066 | 0.961 | 1.135 | 0.060 | 0.914 | 1.258 | 0.056 | |||

| se | 0.008 | 0.039 | 0.001 | 0.009 | 0.028 | 0.001 | 0.007 | 0.032 | 0.001 | |||

In Table 3, the setup was the same as in Table 2, except that we set the first k coefficients of the true coefficient vector equal to 4, and the rest zero, for k = 1, 2, 3. The dimensions were also fixed at n = 100 and p = 50. We computed the mean, variance, and tail probability of the covariance statistic Tk+1 for entering the next (truly inactive) (k + 1)st predictor, discarding those simulations in which a truly inactive predictor was selected in the first k steps. (This occurred 1.7%, 4.0% and 7.0% of the time, resp.) Again, we see that the Exp(1) approximation is reasonably accurate throughout.

TABLE 3.

Simulation results for the (k + 1)st predictor to enter for a model with k truly nonzero coefficients, across k = 1, 2, 3. The rest of the setup is the same as in Table 2 except that the dimensions were fixed at n = 100 and p = 50. The values are conditional on the event that the k truly active variables enter in the first k steps

| Equal data corr

|

Equal pop’n corr

|

AR(1)

|

Block diagonal

|

|||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ρ | Mean | Var | Tail pr | Mean | Var | Tail pr | Mean | Var | Tail pr | Mean | Var | Tail pr |

| k = 1 and 2nd predictor to enter | ||||||||||||

| 0 | 0.933 | 1.091 | 0.048 | 1.105 | 1.628 | 0.078 | 1.023 | 1.146 | 0.064 | 1.039 | 1.579 | 0.060 |

| 0.2 | 0.940 | 1.051 | 0.046 | 1.039 | 1.554 | 0.082 | 1.017 | 1.175 | 0.060 | 1.062 | 2.015 | 0.062 |

| 0.4 | 0.952 | 1.126 | 0.056 | 1.016 | 1.548 | 0.084 | 0.984 | 1.230 | 0.056 | 1.042 | 2.137 | 0.066 |

| 0.6 | 0.938 | 1.129 | 0.064 | 0.997 | 1.518 | 0.079 | 0.964 | 1.247 | 0.056 | 1.018 | 1.798 | 0.068 |

| 0.8 | 0.818 | 0.945 | 0.039 | 0.815 | 0.958 | 0.044 | 0.914 | 1.172 | 0.062 | 0.822 | 0.966 | 0.037 |

| se | 0.010 | 0.024 | 0.002 | 0.011 | 0.036 | 0.002 | 0.010 | 0.030 | 0.002 | 0.015 | 0.087 | 0.002 |

| k = 2 and 3rd predictor to enter | ||||||||||||

| 0 | 0.927 | 1.051 | 0.046 | 1.119 | 1.724 | 0.094 | 0.996 | 1.108 | 0.072 | 1.072 | 1.800 | 0.064 |

| 0.2 | 0.928 | 1.088 | 0.044 | 1.070 | 1.590 | 0.080 | 0.996 | 1.113 | 0.050 | 1.043 | 2.029 | 0.060 |

| 0.4 | 0.918 | 1.160 | 0.050 | 1.042 | 1.532 | 0.085 | 1.008 | 1.198 | 0.058 | 1.024 | 2.125 | 0.066 |

| 0.6 | 0.897 | 1.104 | 0.048 | 0.994 | 1.371 | 0.077 | 1.012 | 1.324 | 0.058 | 0.945 | 1.568 | 0.054 |

| 0.8 | 0.719 | 0.633 | 0.020 | 0.781 | 0.929 | 0.042 | 1.031 | 1.324 | 0.068 | 0.771 | 0.823 | 0.038 |

| se | 0.011 | 0.034 | 0.002 | 0.014 | 0.049 | 0.003 | 0.009 | 0.022 | 0.002 | 0.013 | 0.073 | 0.002 |

| k = 3 and 4th predictor to enter | ||||||||||||

| 0 | 0.925 | 1.021 | 0.046 | 1.080 | 1.571 | 0.086 | 1.044 | 1.225 | 0.070 | 1.003 | 1.604 | 0.060 |

| 0.2 | 0.926 | 1.159 | 0.050 | 1.031 | 1.463 | 0.069 | 1.025 | 1.189 | 0.056 | 1.010 | 1.991 | 0.060 |

| 0.4 | 0.922 | 1.215 | 0.048 | 0.987 | 1.351 | 0.069 | 0.980 | 1.185 | 0.050 | 0.918 | 1.576 | 0.053 |

| 0.6 | 0.905 | 1.158 | 0.048 | 0.888 | 1.159 | 0.053 | 0.947 | 1.189 | 0.042 | 0.837 | 1.139 | 0.052 |

| 0.8 | 0.648 | 0.503 | 0.008 | 0.673 | 0.699 | 0.026 | 0.940 | 1.244 | 0.062 | 0.647 | 0.593 | 0.015 |

| se | 0.014 | 0.037 | 0.002 | 0.016 | 0.044 | 0.003 | 0.014 | 0.031 | 0.003 | 0.016 | 0.073 | 0.002 |

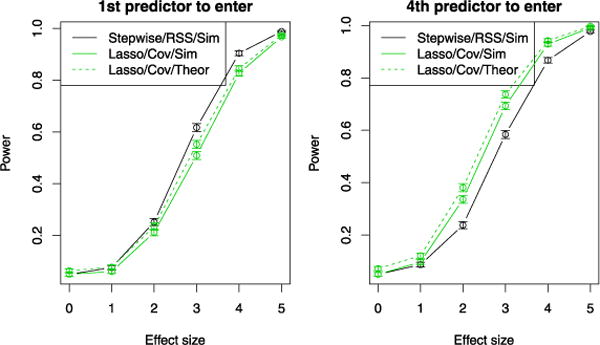

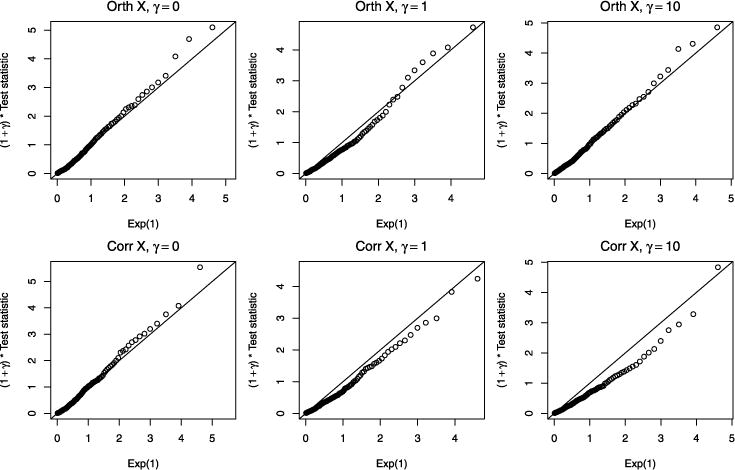

In Figure 4, we estimate the power curves for significance testing via the drop in RSS test for forward stepwise regression, and the covariance test for the lasso. In the former, we use simulation-derived cutpoints, and in the latter we use the theoretically-based Exp(1) cutpoints, to control the type I error at the 5% level. We find that the tests have similar power, though the cutpoints for forward stepwise would not be typically available in practice. For more details, see the figure caption.

FIG. 4.

Estimated power curves for significance tests using forward stepwise regression and the drop in RSS statistic, as well as the lasso and the covariance statistic. The results are averaged over 1000 simulations with n = 100 and p = 10 predictors drawn i.i.d. from N(0, 1) and σ2 = 1. On the left, there is one truly nonzero regression coefficient, and we varied its magnitude (the effect size parameter on the x-axis). We examined the first step of the forward stepwise and lasso procedures. On the right, in addition to a nonzero coefficient with varying effect size (on the x-axis), there are 3 additional large coefficients in the true model. We examined the 4th step in forward stepwise and the lasso, after the 3 strong variables have been entered. For the power curves in both panels, we use simulation-based cutpoints for forward stepwise to control the type I error at the 5% level; for the lasso we do the same, but also display the results for the theoretically-based [Exp(1)] cutpoint. Note that in practice, simulation-based cutpoints would not typically be available.

6. The case of unknown σ2

Up until now, we have assumed that the error variance σ2 is known; in practice it will typically be unknown. In this case, provided that n > p, we can easily estimate it and proceed by analogy to standard linear model theory. In particular, we can estimate σ2 by the mean squared residual error , with being the regression coefficients y on X (i.e., the full model). Plugging this estimate into the covariance statistic in (5) yields a new statistic Fk that has an asymptotic F-distribution under the null:

| (47) |

This follows because , the numerator Tk being asymptotically , the denominator being asymptotically , and we claim that the two are independent. Why? Note that the lasso solution path is unchanged if we replace y by PXy, so the lasso fitted values in Tk are functions of PXy; meanwhile, is a function of (I−PX)y. The quantities PXy and (I − PX)y are uncorrelated, and hence independent (recalling normality of y), so Tk and are functions of independent quantities and, therefore, independent.

As an example, consider one of the setups from Table 2, with n = 100, p = 80 and predictor correlation of the AR(1) form ρ|j−j′|. The true model is null, and we test the first predictor to enter along the lasso path. (We choose n, p of roughly equal sizes here to expose the differences between the σ2 known and unknown cases.) Table 4 shows the results of 1000 simulations from each of the ρ = 0 and ρ = 0.8 scenarios. We see that with σ2 estimated, the F2,n−p distribution provides a more accurate finite-sample approximation than does Exp(1).

TABLE 4.

Comparison of Exp(1), F2,N−p, and the observed (empirical) null distribution of the covariance statistic, when σ2 has been estimated. We examined 1000 simulated data sets with n = 100, p = 80 and the correlation between predictors j and j′ equal to ρ|j−j′|. We are testing the first step of the lasso path, and the true model is the global null. Results are shown for ρ = 0.0 and 0.8. The third column shows the tail probability computed over the 1000 simulations, where q0.95 is the 95% quantile from the appropriate distribution [either Exp(1) or F2,n−p]

| Mean | Variance | 95% quantile | Tail prob | |

|---|---|---|---|---|

| ρ = 0 | ||||

| Observed | 1.17 | 2.10 | 3.75 | |

| Exp(1) | 1.00 | 1.00 | 2.99 | 0.082 |

| F2,n−p | 1.11 | 1.54 | 3.49 | 0.054 |

| ρ = 0.8 | ||||

| Observed | 1.14 | 1.70 | 3.77 | |

| Exp(1) | 1.00 | 1.00 | 2.99 | 0.097 |

| F2,n−p | 1.11 | 1.54 | 3.49 | 0.064 |

When p ≥ n, estimation of σ2 is not nearly as straightforward; one idea is to estimate σ2 from the least squares fit on the support of the model selected by cross-validation. One would then hope that the resulting statistic, with this plug-in estimate of σ2, is close in distribution to F2,n−r under the null, where r is the size of the model chosen by cross-validation. This is by analogy to the low-dimensional n > p case in (47), but is not supported by rigorous theory. Simulations (withheld for brevity) show that this approximation is not too far off, but that the variance of the observed statistic is sometimes inflated compared that of an F2,n−r distribution (this unaccounted variability is likely due to the model selection process via cross-validation). Other authors have argued that using cross-validation to estimate σ2 when p ≫ n is not necessarily a good approach, as it can be anti-conservative; see, for example, Fan, Guo and Hao (2012), Sun and Zhang (2012) for alternative techniques. In future work, we will address the important issue of estimating σ2 in the context of the covariance statistic, when p ≥ n.

7. Real data examples

We demonstrate the use of covariance test with some real data examples. As mentioned previously, in any serious application of significance testing over many variables (many steps of the lasso path), we would need to consider the issue of multiple comparisons, which we do not here. This is a topic for future work.

7.1. Wine data

Table 5 shows the results for the wine quality data taken from the UCI database. There are p = 11 predictors, and n = 1599 observations, which we split randomly into approximately equal-sized training and test sets. The outcome is a wine quality rating, on a scale between 0 and 10. The table shows the training set p-values from forward stepwise regression (with the chi-squared test) and the lasso (with the covariance test). Forward stepwise enters 6 predictors at the 0.05 level, while the lasso enters only 3.

TABLE 5.

Wine data: forward stepwise and lasso p-values. The values are rounded to 3 decimal places. For the lasso, we only show p-values for the steps in which a predictor entered the model and stayed in the model for the remainder of the path (i.e., if a predictor entered the model at a step but then later left, we do not show this step—we only show the step corresponding to its last entry point)

| Forward stepwise

|

Lasso

|

||||||

|---|---|---|---|---|---|---|---|

| Step | Predictor | RSS test | p-value | Step | Predictor | Cov test | p-value |

| 1 | Alcohol | 315.216 | 0.000 | 1 | Alcohol | 79.388 | 0.000 |

| 2 | Volatile_acidity | 137.412 | 0.000 | 2 | Volatile_acidity | 77.956 | 0.000 |

| 3 | Sulphates | 18.571 | 0.000 | 3 | Sulphates | 10.085 | 0.000 |

| 4 | Chlorides | 10.607 | 0.001 | 4 | Chlorides | 1.757 | 0.173 |

| 5 | pH | 4.400 | 0.036 | 5 | Total_sulfur_dioxide | 0.622 | 0.537 |

| 6 | Total_sulfur_dioxide | 3.392 | 0.066 | 6 | pH | 2.590 | 0.076 |

| 7 | Residual_sugar | 0.607 | 0.436 | 7 | Residual_sugar | 0.318 | 0.728 |

| 8 | Citric_acid | 0.878 | 0.349 | 8 | Citric_acid | 0.516 | 0.597 |

| 9 | Density | 0.288 | 0.592 | 9 | Density | 0.184 | 0.832 |

| 10 | Fixed_acidity | 0.116 | 0.733 | 10 | Free_sulfur_dioxide | 0.000 | 1.000 |

| 11 | Free_sulfur_dioxide | 0.000 | 0.997 | 11 | Fixed_acidity | 0.114 | 0.892 |

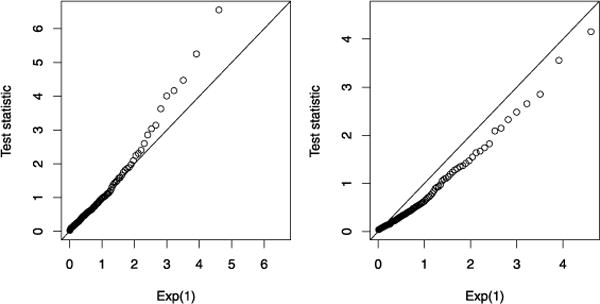

In the left panel of Figure 5, we repeated this p-value computation over 500 random splits into training test sets. The right panel shows the corresponding test set prediction error for the models of each size. The lasso test error decreases sharply once the 3rd predictor is added, but then somewhat flattens out from the 4th predictor onward; this is in general qualitative agreement with the lasso p-values in the left panel, the first 3 being very small, and the 4th p-value being about 0.2. This also echoes the well-known difference between hypothesis testing and minimizing prediction error. For example, the Cp statistic stops entering variables when the p-value is larger than about 0.16.

FIG. 5.

Wine data: the data were randomly divided 500 times into roughly equal-sized training and test sets. The left panel shows the training set p-values for forward stepwise regression and the lasso. The right panel show the test set error for the corresponding models of each size.

7.2. HIV data

Rhee et al. (2003) study six nucleotide reverse transcriptase inhibitors (NRTIs) that are used to treat HIV-1. The target of these drugs can become resistant through mutation, and they compare a collection of models for predicting the (log) susceptibility of the drugs, a measure of drug resistance, based on the location of mutations. We focused on the first drug (3TC), for which there are p = 217 sites and n = 1057 samples. To examine the behavior of the covariance test in the p > n setting, we divided the data at random into training and test sets of size 150 and 907, respectively, a total of 50 times. Figure 6 shows the results, in the same format as Figure 5. We used the model chosen by cross-validation to estimate σ2. The covariance test for the lasso suggests that there are only one or two important predictors (in marked contrast to the chi-squared test for forward stepwise), and this is confirmed by the test error plot in the right panel.

FIG. 6.

HIV data: the data were randomly divided 50 times into training and test sets of size 150 and 907, respectively. The left panel shows the training set p-values for forward stepwise regression and the lasso. The right panel shows the test set error for the corresponding models of each size.

8. Extensions

We discuss some extensions of the covariance statistic, beyond significance testing for the lasso. The proposals here are supported by simulations [in terms of having an Exp(1) null distribution], but we do not offer any theory. This may be a direction for future work.

8.1. The elastic net

The elastic net estimate [Zou and Hastie (2005)] is defined as

| (48) |

where γ ≥ 0 is a second tuning parameter. It is not hard to see that this can actually be cast as a lasso estimate with predictor matrix and outcome . This shows that, for a fixed γ, the elastic net solution path is piecewise linear over λ, with each knot marking the entry (or deletion) of a variable from the active set. We therefore define the covariance statistic in the same manner as we did for the lasso; fixing γ, to test the predictor entering at the kth step (knot λk) in the elastic net path, we consider the statistic