Abstract

Features of the retinal vasculature, such as vessel widths, are considered biomarkers for systemic disease. The aim of this work is to present a supervised approach to vessel segmentation in ultra-wide field of view scanning laser ophthalmoscope (UWFoV SLO) images and to evaluate its performance in terms of segmentation and vessel width estimation accuracy. The results of the proposed method are compared with ground truth measurements from human observers and with existing state-of-the-art techniques developed for fundus camera images that we optimized for UWFoV SLO images. Our algorithm is based on multi-scale matched filters, a neural network classifier and hysteresis thresholding. After spline-based refinement of the detected vessel contours, the vessel widths are estimated from the binary maps. Such analysis is performed on SLO images for the first time. The proposed method achieves the best results, both in vessel segmentation and in width estimation, in comparison to other automatic techniques.

OCIS codes: (100.2960) Image analysis; (100.3008) Image recognition, algorithms and filters; (100.4996) Pattern recognition, neural networks; (170.4470) Ophthalmology

1. Introduction

Evidence shows that changes in morphological features associated with retinal blood vessels such as widths, tortuosity and branching angles are biomarkers of systemic diseases such as hypertension, arteriosclerosis, stroke, myocardial infarction and cardiovascular disease [1–5]. The investigation of biomarkers has traditionally been confined to limited areas of the retina around the macula and the optic disc (OD). Examples are the Central Retinal Artery / Vein Equivalent [6] and Artery Vein Ratio [7], which are measured in an annulus that covers the surface from 1 to 1.5 optic disc diameters (ODD’s) around the center of the OD. Alternative devices such as the scanning laser ophthalmoscope (SLO) [8], with an ultra-wide field of view (UWFoV) of approximately 200° (compared to 30–60° with a fundus camera), can capture in a single image a larger part of the retina, allowing more extensive analysis of the associated vasculature. The automatic and objective quantification of morphometric vascular features is crucial, especially in population studies, where the manual annotation of large numbers of images would be an extremely time-consuming process. For this reason, since the work by Chaudhuri et al. [9], automatic tools for the segmentation of blood vessels and the estimation of their widths in fundus images have been extensively investigated and different approaches, such as [10–12] among others, have been presented in the literature. To the best of our knowledge, the vast majority of these techniques have been developed for images acquired with fundus cameras and are less frequently applied to SLO images [13]. The task of vessel segmentation presents different challenges in UWFoV SLO images than in conventional fundus images. For instance, the illumination is usually less uniform across such a large field of view. This leads to a lower contrast between the vessel edges and the background, especially in the periphery of the retina, and has to be taken into account.

The aim of this study is to present a supervised approach to vessel segmentation that is specifically tailored to UWFoV SLO images and to assess its performance in terms of segmentation accuracy and vessel width estimation error. We are the first group to investigate both tasks at the same time on this particular type of image. The proposed algorithm extends our previous unsupervised approach [14] based on Gaussian matched filters, morphological operations and hysteresis thresholding. This time multiple matched filters are adopted to take into account vessel width variations. The resulting maps, as well as information on vessel width and direction, are used as input to a neural network classifier. Segmentation results are evaluated using a ground truth set of images manually segmented by trained human observers. In addition, two well-known and publicly available segmentation techniques [15, 16] that give state-of-the-art results on fundus camera images are adapted to work specifically on UWFoV SLO images and used for comparison. The performance of the proposed method is also evaluated in terms of accuracy of width estimation. The comparison is made not only with the human observers and the two segmentation algorithms cited previously, but also with a version of the width estimation technique proposed by Lupascu et al. [17] that was adapted and modified for UWFoV SLO images.

No conclusions are drawn on associations between retinal vascular features and systemic disease but the method developed will enable future association studies.

2. Methods

This research followed the tenets of the Declaration of Helsinki and was approved by the Research Ethics Committee of the Universities of Dundee and Edinburgh, UK.

Informed consent was obtained from all the participants involved in the data collection.

2.1 Materials

For generality, the UWFoV SLO images (3900 x 3072 pixels) used in this study are acquired from a variety of volunteers with different medical histories. Ten images from volunteers (smokers and non-smokers, hypertensive and normotensive, mean age = 58.4 ± 17.5 years) involved in the SCOT-HEART Trial [18, 19], are captured using an Optos P200C SLO device. This non-mydriatic device makes use of a green laser source, operating at 532 nm, and of a red laser source, operating at 633 nm, to build a false color image of the retina and sub-retinal layers.

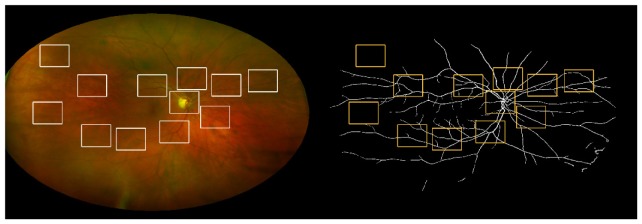

From each image [Fig. 1], 12 rectangular windows of size 2 x 1.5 ODD’s are extracted, manually segmented by two trained observers and used as ground truth to evaluate vessel segmentation. One window is centered on the OD. Four windows are located around the first one in order to capture the largest vessels usually visible in conventional fundus images with a narrower field of view. Three windows are located in the periphery of the image, avoiding eyelashes and other possible artifacts. Four additional windows are located in the area between the last two groups. Apart from the one centered on the OD, the exact location of the other windows is different for each image, especially in the periphery, to ensure that windows not showing vessels are not selected.

Fig. 1.

Original UWFoV SLO image (left) and binary map segmented using the proposed method (right). The windows used for the segmentation evaluation are also shown.

The choice of this 120-window data set is made so that the selected windows are representative of the range of vessel widths and background intensities that can be found in a UWFoV SLO image at different distances from the OD. This is also a good trade-off between the portion of the area covered by the 12 windows and the time cost of the manual segmentation of an entire image. We have determined empirically that approximately 18 hours are needed for an observer to manually segment an entire UWFoV SLO image while only 4 hours are required for 12 windows.

In the same set of images, 32 vessel widths per image, for a total of 144 widths, are manually annotated by three trained observers (Obs 1, Obs 2, Obs 3) and used to assess the accuracy of width estimation. Every width is measured once by each observer at selected points along the blood vessel paths chosen by Obs 1. Respectively, 8 vessels are annotated in the annulus between 1.0 and 1.5 ODD’s from the OD center, called zone B [20], 8 vessels between 1.5 and 2.0 ODD’s, 8 vessels between 2.0 and 2.5 ODD’s and the last 8 vessels in the region outside the last annulus. In each region 4 arteries and 4 veins are selected. All the annotations are made using specific tools from the VAMPIRE [21] software suite.
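The annular sampling regions described above can be expressed as a simple distance test. The following is a minimal sketch, assuming pixel coordinates, a known OD center and a known ODD in pixels; the function name and the labels other than "zone B" are hypothetical and are not part of the VAMPIRE tools.

```python
import math

def width_zone(point, od_center, odd_px):
    """Return the sampling region of a point, given its distance from the OD center.

    Distances are expressed in optic disc diameters (ODD's). Only "zone B" is a
    name used in the text; the other labels are illustrative placeholders.
    """
    distance_odd = math.dist(point, od_center) / odd_px
    if distance_odd < 1.0:
        return None                      # closer than 1.0 ODD: not sampled
    if distance_odd < 1.5:
        return "zone B"                  # annulus 1.0 to 1.5 ODD's
    if distance_odd < 2.0:
        return "annulus 1.5-2.0"
    if distance_odd < 2.5:
        return "annulus 2.0-2.5"
    return "outer region"                # outside the last annulus

print(width_zone((120.0, 0.0), (0.0, 0.0), 100.0))
```

How points lying exactly on a boundary are assigned is an assumption of this sketch.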

2.2 Pre-processing steps, morphological cleaning and matched filtering

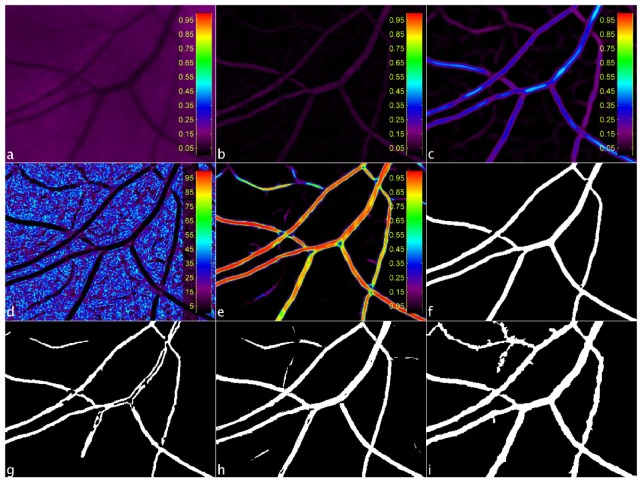

As reported in detail elsewhere [14], the green channel of the image [Fig. 2(a)] undergoes a number of pre-processing steps to produce a map, Mm, where the background has been suppressed and the vascular network highlighted [Fig. 2(b)]. The retinal vasculature is further enhanced by convolving the map with a battery of orthogonally oriented Gaussian and Laplacian of Gaussian (LoG) kernels rotated in 15° steps to account for varying vessel orientation. The Gaussian filter is used to smooth the vessel along its direction while the LoG enhances the contrast of the vessel’s cross-sectional profile. The maximum intensity value at each pixel location is extracted to form a map [Fig. 2(c)], MC. The process is repeated at four different scales using different values of standard deviation σ for the LoG and the Gaussian filters to accommodate the range of vessel widths expected after a visual inspection of the UWFoV SLO images. In particular, σ = w/(2√2) is used for the LoG kernel, where the vessel widths are w = [3, 7, 11, 15] pixels, and a value equal to 4σ is used for the respective Gaussian kernel.

Fig. 2.

Example of window extracted from a UWFoV SLO image at different stages of the segmentation process: green channel of the image (a), Mm (b), MC (c), MSd (d), MNN (e), MB (f). Binary maps obtained by [14] (g), by [15] (h), by [16] (i).
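The multi-scale, multi-orientation filter bank of Section 2.2 can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that each kernel is the product of a 1-D Gaussian along the vessel direction and a negated 1-D second derivative of a Gaussian across it, that vessels are brighter than the background in Mm, and that the kernel support is three Gaussian standard deviations. Only the scale rule σ = w/(2√2), the 4σ Gaussian, the four widths and the 15° rotation step come from the text.

```python
import numpy as np

def oriented_kernel(width_px, angle_deg, half_size=None):
    """Matched-filter kernel: LoG-like profile across the vessel, Gaussian along it."""
    sigma_log = width_px / (2.0 * np.sqrt(2.0))   # sigma = w / (2*sqrt(2)), as in the text
    sigma_gauss = 4.0 * sigma_log                 # smoothing along the vessel: 4*sigma
    if half_size is None:
        half_size = int(np.ceil(3.0 * sigma_gauss))   # assumed support
    ax = np.arange(-half_size, half_size + 1, dtype=float)
    x, y = np.meshgrid(ax, ax)
    theta = np.deg2rad(angle_deg)
    u = x * np.cos(theta) + y * np.sin(theta)     # coordinate along the vessel
    v = -x * np.sin(theta) + y * np.cos(theta)    # coordinate across the vessel
    along = np.exp(-u**2 / (2.0 * sigma_gauss**2))
    # Negated second derivative of a Gaussian: positive at the vessel center
    across = (1.0 - v**2 / sigma_log**2) * np.exp(-v**2 / (2.0 * sigma_log**2))
    kernel = along * across
    return kernel - kernel.mean()                 # zero mean: flat background gives no response

WIDTHS = (3, 7, 11, 15)                # vessel widths in pixels
ANGLES = tuple(range(0, 180, 15))      # 15 degree rotation steps
bank = {(w, a): oriented_kernel(w, a) for w in WIDTHS for a in ANGLES}
print(len(bank), bank[(3, 0)].shape)
```

For each width, a map corresponding to MC would then be obtained by convolving Mm with the twelve rotated kernels and keeping the maximum response at each pixel.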

A further step of morphological grayscale reconstruction is performed at this stage to recover small details lost during the process. A parametric map [22], MW, encoding the width range from the set of four maps from the convolution stage is created by taking the maximum value at each pixel location. Two direction maps are computed by measuring the local orientation using eigenvalue analysis of the Hessian matrix [23] on two orthogonal versions of Mm. By computing the local standard deviation (SD) of each of these direction maps and taking the minimum value at each pixel location, a SD map [Fig. 2(d)], MSd, is created without large SD values.

2.3 Neural network classification and hysteresis thresholding

The six parameterized maps from the previous processing steps (four MC’s, MW and MSd) form the input vector to the neural network classifier. A fully connected, two-layer, feed-forward neural network with nine neurons in the hidden layer is used at this stage. The activation function for the hidden layer is the log-sigmoid function, $\mathrm{logsig}(n) = 1/(1 + e^{-n})$, while the output layer has a softmax activation function, $\mathrm{softmax}(n) = e^{n}/\sum e^{n}$. The output is a map, MNN [Fig. 2(e)], where the intensity, at each pixel location, is the likelihood estimated by the neural network of that pixel belonging to a vessel.
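The forward pass of such a classifier can be sketched as follows. This is a minimal illustration with random, untrained weights; the use of two output units (background and vessel), the ordering of those units and all variable names are assumptions, since the text specifies only the six inputs, the nine hidden neurons and the two activation functions.

```python
import numpy as np

def logsig(n):
    """Log-sigmoid activation of the hidden layer."""
    return 1.0 / (1.0 + np.exp(-n))

def softmax(n):
    """Softmax activation of the output layer, computed row by row."""
    e = np.exp(n - n.max(axis=-1, keepdims=True))   # shift for numerical stability
    return e / e.sum(axis=-1, keepdims=True)

def vessel_likelihood(features, w_hidden, b_hidden, w_out, b_out):
    """features: (n_pixels, 6) array; returns the (n_pixels,) vessel likelihood."""
    hidden = logsig(features @ w_hidden + b_hidden)  # (n_pixels, 9)
    probs = softmax(hidden @ w_out + b_out)          # (n_pixels, 2)
    return probs[:, 1]                               # column 1 = vessel (assumed ordering)

rng = np.random.default_rng(0)
w_hidden, b_hidden = rng.normal(size=(6, 9)), np.zeros(9)   # placeholder weights
w_out, b_out = rng.normal(size=(9, 2)), np.zeros(2)
features = rng.random((5, 6))        # five pixels, six map values each
likelihood = vessel_likelihood(features, w_hidden, b_hidden, w_out, b_out)
print(likelihood)
```

Reshaping the returned vector to the image dimensions would give a map playing the role of MNN.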

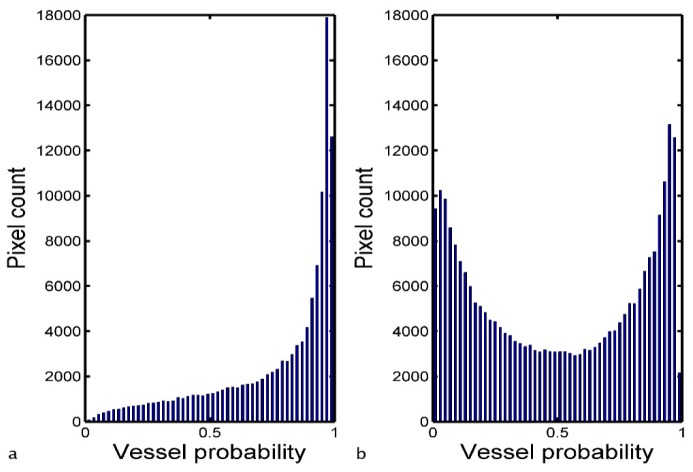

The final step in the processing towards the binary vessel map is to threshold MNN. Hysteresis thresholding is chosen due to its ability to include vessel pixels of lower likelihood that could otherwise be lost with a single global threshold level. Lower and upper bounds are set and any pixels with intensity higher than the upper bound are considered vessels and set to 1 in the final binary map. All the pixels with intensity values between the two bounds and connected to those above the upper bound are considered vessels as well and set to 1. The rest of the pixels are considered background and set to 0 in the final binary map. To aid in the thresholding step, a binary map of estimated vessels, ME, is obtained by setting all the pixels in MSd with intensity value ≤ 10 and connected to areas ≥ 1000 pixels (values determined by experiment) as vessel candidates and the rest as background. In the first instance, all pixel values in MNN that fall in the vessel regions defined by ME are identified and a vessel probability histogram is formed. Depending on the shape of this histogram one of two possible sets of hysteresis threshold bounds is chosen. If the histogram is well fitted ($R^2 > 0.85$) by a power function, $f(x) = a + b x^{c}$, with a single peak located between a vessel probability of 0.9 and 1, the lower and upper threshold bounds are fixed respectively at 0.50 and 0.65 [Fig. 3(a)]. If the two conditions are not both satisfied, the threshold bounds are set respectively to $M_h - 0.15\sigma_h$ and $M_h + 0.15\sigma_h$, where $M_h$ and $\sigma_h$ are the mean value and the SD of the vessel probability histogram [Fig. 3(b)]. This second case occurs 20% of the time in our image set.

Fig. 3.

Examples of vessel probability histograms of all pixel values in MNN that fall in the vessel regions defined by ME: histogram well fitted by a power function with a single peak between 0.9 and 1 (a), histogram that does not satisfy the two conditions (b).
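The thresholding rule of Section 2.3 can be sketched in a few lines. This is a minimal illustration on nested Python lists; 8-connectivity and the propagation of connectivity through chains of intermediate pixels are assumptions, and the histogram fit itself is replaced by a boolean flag supplied by the caller.

```python
from collections import deque

def choose_bounds(well_fitted, mean_h, sd_h):
    """Return (lower, upper) bounds following the two cases described in the text."""
    if well_fitted:                       # power-law fit and single peak in [0.9, 1]
        return 0.50, 0.65
    return mean_h - 0.15 * sd_h, mean_h + 0.15 * sd_h

def hysteresis_threshold(prob, low, high):
    """Binary map: pixels above `high`, plus pixels >= `low` connected to them."""
    rows, cols = len(prob), len(prob[0])
    out = [[0] * cols for _ in range(rows)]
    queue = deque()
    for r in range(rows):                 # seed with pixels above the upper bound
        for c in range(cols):
            if prob[r][c] > high:
                out[r][c] = 1
                queue.append((r, c))
    while queue:                          # grow into connected pixels above the lower bound
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if (0 <= rr < rows and 0 <= cc < cols
                        and not out[rr][cc] and prob[rr][cc] >= low):
                    out[rr][cc] = 1
                    queue.append((rr, cc))
    return out

low, high = choose_bounds(True, 0.0, 0.0)
print(hysteresis_threshold([[0.9, 0.6, 0.55, 0.1, 0.6]], low, high))
```

In the example row, the last pixel (0.6) lies between the bounds but is not connected to any pixel above the upper bound, so it stays background.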

At this stage, ME is skeletonized and added to the upper bound hysteresis map to connect potential vessel pixels of lower probability. This can be particularly effective in peripheral regions of UWFoV SLO images where contrast may be low but ME can still predict vessel presence. Lastly, the binary map undergoes morphological cleaning to produce the final binary vessel map, MB [Fig. 2(f)].

2.4 Adaptation of fundus-camera image segmentation techniques

Two fundus-camera image segmentation techniques are adapted to achieve the best segmentation performance on UWFoV SLO images and used for comparison. First, the supervised algorithm by Soares et al. [15] is re-trained on our data set (leave-one-image-out cross-validation) and the Gabor wavelet coefficients are re-scaled to fit the widths of blood vessels on UWFoV SLO images. Second, the algorithm by Bankhead et al. [16] is adapted by rescaling the wavelet levels of the IUWT and lowering the threshold percentage that yields the binary map, to account for the lower percentage of vessel pixels visible in the 200° field of view.
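Leave-one-image-out cross-validation on a ten-image data set can be sketched as below. The function name and the integer image identifiers are hypothetical; the sketch only shows how the splits are formed, not how any classifier is trained.

```python
def leave_one_image_out(image_ids):
    """Yield (training_ids, held_out_id) pairs, holding out one image at a time."""
    for held_out in image_ids:
        training = [i for i in image_ids if i != held_out]
        yield training, held_out

splits = list(leave_one_image_out(list(range(10))))   # ten images, as in the data set
for training, held_out in splits[:2]:
    print(held_out, training)
```

Because all windows of the held-out image are excluded from training together, no pixel of a test image contributes to the model that segments it.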

2.5 Width estimation

Four different binary maps are obtained from the segmentation of each image using the aforementioned techniques: the proposed method, our previous unsupervised approach by Robertson et al. [Fig. 2(g)], Soares et al. [Fig. 2(h)] and Bankhead et al. [Fig. 2(i)]. After a step of spline-refinement of the vessel edges [24] is applied to the outputs of each segmentation algorithm, the widths of all the vessels annotated in the WE-Data set are measured. The width estimation algorithm by Lupascu et al. [17] is re-implemented adding a pre-processing step of contrast enhancement and adapting the Gaussian fit for the detection of the vessel boundaries. The algorithm is then re-trained on our data set (leave-one-image-out cross-validation).

2.6 Statistics

The inter-observer agreement between the trained annotators that manually segmented the vessels is evaluated in terms of Cohen’s Kappa coefficient [25]. With a κ value equal to 0.83 the agreement is considered “almost perfect” according to the guidelines in [26].
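For reference, Cohen's Kappa for two pixel-wise labelings can be computed as in the following minimal sketch. The label lists are toy data, not values from this study, and the function name is hypothetical.

```python
def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa between two equally long lists of categorical labels."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    categories = set(labels_a) | set(labels_b)
    # Agreement expected by chance, from each observer's label frequencies
    expected = sum((labels_a.count(k) / n) * (labels_b.count(k) / n)
                   for k in categories)
    return (observed - expected) / (1.0 - expected)

observer_1 = [1, 1, 0, 0, 1, 0, 0, 0]   # 1 = vessel, 0 = background (toy data)
observer_2 = [1, 0, 0, 0, 1, 0, 0, 1]
print(cohens_kappa(observer_1, observer_2))
```

The statistic corrects the raw agreement (0.75 in the toy data) for the agreement expected by chance, which matters in retinal images where background pixels dominate.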

To evaluate the performance of vessel segmentation algorithms, standard metrics are computed according to the guidelines in [20]. Mean values and SD of true positive rate (TPR), false positive rate (FPR), positive predictive value (PPV), negative predictive value (NPV), accuracy (Acc) and area under the curve (AUC) of the receiver operating characteristic (ROC) of the segmentation techniques are calculated. Assuming the OD size to be constant and according to the number of pixels that a window covers, mean and SD are weighted to account for the variation of window size among images. The values achieved using the first observer as ground truth and those achieved using the second observer as ground truth are then averaged to obtain the final results reported in Section 3 [Table 1]. A McNemar’s test [27] is used to assess whether the difference in segmentation accuracy between the two best performing algorithms is statistically significant or not.

Table 1. Vessel segmentation results.

| Segmentation Method | TPR | FPR | PPV | NPV | Acc | AUC |
|---|---|---|---|---|---|---|
| 2nd Observer | 0.833 (0.026) | 0.015 (0.003) | 0.833 (0.026) | 0.985 (0.003) | 0.972 (0.003) | n.a. |
| Proposed method | 0.702 (0.059) | 0.011 (0.006) | 0.865 (0.048) | 0.973 (0.006) | 0.965 (0.006) | 0.97 |
| Robertson et al. [14] | 0.593 (0.073) | 0.009 (0.005) | 0.858 (0.062) | 0.963 (0.010) | 0.957 (0.008) | 0.87 |
| Soares et al. [15] | 0.674 (0.062) | 0.017 (0.004) | 0.786 (0.056) | 0.970 (0.006) | 0.957 (0.006) | 0.96 |
| Bankhead et al. [16] | 0.819 (0.038) | 0.033 (0.007) | 0.697 (0.049) | 0.983 (0.004) | 0.954 (0.006) | 0.95 |

Values are expressed as average and (SD).
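The per-window metrics and their size-weighted summary can be sketched as follows. The metric definitions are the standard ones; the weighted SD formula (a weighted population SD) is an assumption, since the text states only that mean and SD are weighted by the number of pixels in each window. The counts in the example are toy values.

```python
def segmentation_metrics(tp, fp, tn, fn):
    """Standard pixel-wise metrics from the four confusion-matrix counts."""
    return {
        "TPR": tp / (tp + fn),
        "FPR": fp / (fp + tn),
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "Acc": (tp + tn) / (tp + fp + tn + fn),
    }

def weighted_mean_sd(values, weights):
    """Mean and SD of per-window values, weighted by window size in pixels."""
    total = sum(weights)
    mean = sum(v * w for v, w in zip(values, weights)) / total
    variance = sum(w * (v - mean) ** 2 for v, w in zip(values, weights)) / total
    return mean, variance ** 0.5

window = segmentation_metrics(tp=80, fp=20, tn=880, fn=20)   # toy counts
print(window)
print(weighted_mean_sd([0.9, 0.7], [1000, 1000]))
```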

For every vessel width, the average of the values measured by the three observers (Obs average) is considered as ground truth. The set of vessel widths is divided in three subgroups. This choice is motivated by the fact that we are interested in assessing the algorithm performances in detail at different scales. Large vessels are those generally taken into account for the investigation of biomarkers in zone B. Medium vessels are the most numerous across the large field of view of the images in our data set. Small vessels could be relevant for the investigation of different types of biomarkers such as the fractal dimension of the retinal vasculature [28]. The choice of the thresholds (6.5 and 9.0 pixels) between the three subgroups is determined by visual inspection of the histogram of the manually annotated vessel widths so that the groups contain approximately the same number of samples. The smallest width measured in the data set is equal to 3.6 pixels while the largest one is equal to 14.4 pixels.
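The construction of the ground truth and the assignment to a subgroup can be sketched as below. The thresholds of 6.5 and 9.0 pixels come from the text; the function names and the treatment of a width lying exactly on a threshold are assumptions of this sketch.

```python
def ground_truth_width(obs_1, obs_2, obs_3):
    """Ground truth for one vessel width: average of the three observers (pixels)."""
    return (obs_1 + obs_2 + obs_3) / 3.0

def width_group(width_px, small_max=6.5, medium_max=9.0):
    """Assign a ground-truth width to the small, medium or large subgroup."""
    if width_px < small_max:
        return "small"
    if width_px < medium_max:
        return "medium"
    return "large"

width = ground_truth_width(6.0, 7.0, 8.0)   # toy annotations
print(width, width_group(width))
```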

Since no statistically significant difference is found between the distributions of width estimation errors of arteries and veins (unpaired t-test, α = 0.05, p-value = 0.19), no further distinction is made in that sense in our analysis. We report width ranges and results [Table 2] using mean and SD of the ground truth, and mean and SD of the differences between estimated value and ground truth. The Pearson’s correlation coefficients (r) between the ground truth and the widths, either measured by the observers or estimated from the binary vessel maps, are also reported in the table. Lastly, paired t-tests are performed between the ground truth and the set of widths estimated from the binary maps obtained by the different segmentation techniques.

Table 2. Vessel width results.

| Width range | Obs average | Obs 1 | Obs 2 | Obs 3 | Robertson et al. [14] | Soares et al. [15] | Bankhead et al. [16] | Lupascu et al. [17] | Proposed method |
|---|---|---|---|---|---|---|---|---|---|
| Small 3.5-6.5 | 5.51 (0.72) | 0.16 (0.51) | −0.04 (0.41) | −0.11 (0.63) | −0.40 (1.09) | −0.20 (1.52) | 1.80 (1.41) | 1.54 (1.08) | 0.65 (0.94) |
| Medium 6.5-9.0 | 7.54 (0.73) | 0.41 (0.63) | 0.11 (0.55) | −0.53 (0.80) | −1.22 (1.65) | −0.40 (1.90) | 2.00 (1.24) | 0.84 (0.99) | 0.16 (1.12) |
| Large 9.0-14.5 | 10.53 (1.23) | 0.30 (0.75) | 0.13 (0.51) | −0.44 (0.85) | −3.69 (2.64) | −2.18 (3.92) | 1.36 (1.06) | −0.64 (1.64) | −0.55 (1.02) |
| Total 3.5-14.5 | 7.27 (1.94) | 0.30 (0.62) | 0.06 (0.50) | −0.35 (0.77) | −1.33 (2.03) | −0.63 (2.36) | 1.81 (1.29) | 0.85 (1.38) | 0.22 (1.11) |
| Pearson’s r | 1.00 | 0.96 | 0.97 | 0.92 | 0.42 | 0.49 | 0.81 | 0.71 | 0.82 |

The columns from Obs 1 to Proposed method report differences with respect to the ground truth (Obs average). Except for the last row, all values are expressed in pixels as average and (SD).
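The quantities reported in Table 2 can be computed as in the following minimal sketch: the mean (bias) and SD of the differences between estimated widths and ground truth, and Pearson's r between the two sets. The use of the sample SD (n − 1 denominator) is an assumption, and the widths in the example are toy values.

```python
import math

def width_error_stats(estimated, ground_truth):
    """Mean (bias) and sample SD of the differences estimated - ground truth."""
    diffs = [e - g for e, g in zip(estimated, ground_truth)]
    n = len(diffs)
    bias = sum(diffs) / n
    sd = math.sqrt(sum((d - bias) ** 2 for d in diffs) / (n - 1))
    return bias, sd

def pearson_r(x, y):
    """Pearson's correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

estimated = [5.0, 8.0, 11.0]       # toy widths in pixels
ground_truth = [4.0, 8.0, 12.0]
print(width_error_stats(estimated, ground_truth), pearson_r(estimated, ground_truth))
```

The toy data show why both statistics are reported: the bias is zero and r is 1, yet individual errors are one pixel, which only the SD reveals.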

3. Results

The performance of the vessel segmentation algorithms is shown in Table 1. From a McNemar’s test (α = 0.05, p-value < 0.001) we can infer that the proposed method achieves a significantly better segmentation accuracy with respect to the second best performing technique [15].
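A McNemar's test on paired pixel classifications can be sketched as follows. It uses only the discordant counts, that is, the pixels that exactly one of the two methods classifies correctly. The continuity correction is an assumption (the text does not say whether it was applied), and the counts in the example are toy values.

```python
import math

def mcnemar_test(only_a_correct, only_b_correct):
    """Continuity-corrected McNemar statistic and its p-value (chi-square, 1 d.o.f.)."""
    b, c = only_a_correct, only_b_correct
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of a chi-square variable with one degree of freedom
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value

statistic, p_value = mcnemar_test(30, 10)   # toy discordant counts
print(statistic, p_value)
```

Pixels on which both methods agree do not enter the statistic, which is why the test suits comparisons of two classifiers evaluated on the same pixels.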

The evaluation of the vessel width estimation performance is shown [Table 2]. All figures are expressed in pixels. Given the UWFoV, the conversion pixel-µm depends on the location where the width is measured. This conversion can be calculated, following the instrument specifications provided by the manufacturers, after a stereographic projection (proprietary OPTOS software) of the image [29] that takes into account the gaze angle of the patient, determined by the location of the fovea. Based on a theoretical calculation on a subset of the annotated vessel widths, assuming a constant size of the eye bulb, we have determined that 1 pixel = 17.2 µm on average in zone B while at 4 ODD’s from the OD, where the farthest vessel width is measured, 1 pixel = 20.6 µm on average.
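As a worked illustration of the two conversion factors, an error of 0.22 pixels (the overall bias of the proposed method in Table 2) corresponds to slightly different physical lengths depending on where it is measured. This is only indicative, since the widths in Table 2 are pooled over all locations.

```latex
\begin{align*}
0.22\ \text{px} \times 17.2\ \mu\text{m/px} &\approx 3.8\ \mu\text{m} && \text{(zone B)} \\
0.22\ \text{px} \times 20.6\ \mu\text{m/px} &\approx 4.5\ \mu\text{m} && \text{(4 ODD's from the OD)}
\end{align*}
```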

The proposed method is the only one that does not show a statistically significant difference (α = 0.05, p-value = 0.13) in the distribution of the estimated vessel widths with respect to the ground truth. Paired t-tests between every other set of estimated widths and the ground truth result in a rejection of the null hypothesis (α = 0.05, p-value < 0.001 in all four cases).
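The statistic behind a paired t-test can be sketched as below. Only the t value and the degrees of freedom are computed; obtaining the p-value requires the cumulative distribution of Student's t, which is left to a statistics library. The widths in the example are toy values and the function name is hypothetical.

```python
import math

def paired_t_statistic(estimated, ground_truth):
    """t statistic and degrees of freedom of a paired t-test on the differences."""
    diffs = [e - g for e, g in zip(estimated, ground_truth)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    sd_d = math.sqrt(sum((d - mean_d) ** 2 for d in diffs) / (n - 1))
    return mean_d / (sd_d / math.sqrt(n)), n - 1

t_value, dof = paired_t_statistic([2.0, 4.0, 6.0, 9.0], [1.0, 2.0, 3.0, 5.0])
print(t_value, dof)
```

The null hypothesis is that the mean difference is zero, so a method with a small bias relative to the spread of its errors yields a small t value and is not rejected.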

4. Discussion

We presented a supervised vessel segmentation technique based on multi-scale matched filters, a neural network classifier and hysteresis thresholding. We addressed vessel segmentation in UWFoV SLO images as well as evaluated performance in terms of accuracy in vessel width estimation for the first time. The effectiveness of a segmentation algorithm in this second task is important since metrics based on vessel widths are considered biomarkers of systemic diseases. Therefore automatic segmentation algorithms producing inaccurate measures of widths could lead to erroneous conclusions in biomarker studies.

The proposed method achieves the best results in our comparison tests in vessel segmentation accuracy (Acc = 0.965 ± 0.006) and AUC (0.97). The low value of SD of the segmentation accuracy suggests that the method performs consistently on the entire set of windows. The largest vessels are most visible in windows located close to the OD. Thin vessels, instead, are more abundant in windows taken from the periphery of the image. A low SD in segmentation accuracy is therefore an indication that the proposed technique segments vessels well at all scales. These results represent a considerable improvement with respect to our previous approach [14] and have proven to be significantly better than the performance achieved by the best of the techniques [15, 16] developed for fundus camera images that we adapted to UWFoV SLO images.

At the same time, the proposed method presents the lowest overall bias (0.22 pixels), which is comparable to those between human observers, and the lowest SD (1.11 pixels) in width estimation errors among the automatic algorithms [14–17] used for comparison. The results achieved by the proposed method are the only ones that do not show a statistically significant difference from the ground truth. Lastly, the values of Pearson’s r coefficients indicate that the widths estimated from the binary vessel maps automatically segmented with the proposed method are the most correlated (r = 0.82) to the ground truth.

It is worth noting that a good value of vessel segmentation accuracy does not necessarily imply good results in vessel width estimation. This is made explicit by the performance in the two tasks (see Table 1 and Table 2) of [14] and [15]. Both techniques show the second highest value of segmentation accuracy (Acc = 0.957) but at the same time the two lowest correlations (r respectively equal to 0.42 and 0.49) to the vessel width ground truth.

One known limitation of the proposed algorithm is its supervised nature, which requires a tedious and time-consuming step of manual segmentation of retinal images, necessary to train the neural network classifier. After the training phase, the time needed to process a whole UWFoV SLO image by our method is comparable (approximately 200 seconds) to the time required by the other supervised method [15] that has been tested. The unsupervised technique by Bankhead et al. [16] is considerably faster (10 seconds) given the same computer configuration (i5-3450 CPU @ 3.10 GHz, 8.00 GB of RAM).

A limitation of this study is that the OD dimension is assumed to be constant among participants as previously reported by other authors [7]. The study of the refraction of each subject is also beyond the scope of this work.

A more comprehensive investigation of the performance of the proposed method in conventional fundus camera images and the possible differences with respect to UWFoV SLO ones acquired from the same subject is currently being carried out.

Acknowledgements

The authors would like to thank Soares, Bankhead and colleagues for making their software publicly available. This project is supported by a joint grant from Optos plc and Scottish Imaging Network – a Platform of Scientific Excellence (SINAPSE). The Clinical Research Imaging Centre is supported by NHS Research Scotland (NRS) through NHS Lothian.

References and links

1. Patton N., Aslam T. M., MacGillivray T., Deary I. J., Dhillon B., Eikelboom R. H., Yogesan K., Constable I. J., “Retinal image analysis: concepts, applications and potential,” Prog. Retin. Eye Res. 25(1), 99–127 (2006). doi:10.1016/j.preteyeres.2005.07.001

2. McClintic B. R., McClintic J. I., Bisognano J. D., Block R. C., “The relationship between retinal microvascular abnormalities and coronary heart disease: a review,” Am. J. Med. 123, 374 (2010).

3. Wong T. Y., Islam F. M. A., Klein R., Klein B. E. K., Cotch M. F., Castro C., Sharrett A. R., Shahar E., “Retinal vascular caliber, cardiovascular risk factors, and inflammation: the multi-ethnic study of atherosclerosis (MESA),” Invest. Ophthalmol. Vis. Sci. 47(6), 2341–2350 (2006). doi:10.1167/iovs.05-1539

4. Ogagarue E. R., Lutsey P. L., Klein R., Klein B. E., Folsom A. R., “Association of ideal cardiovascular health metrics and retinal microvascular findings: the Atherosclerosis Risk in Communities Study,” J. Am. Heart Assoc. 2(6), 000430 (2013). doi:10.1161/JAHA.113.000430

5. Wang J. J., Liew G., Wong T. Y., Smith W., Klein R., Leeder S. R., Mitchell P., “Retinal vascular calibre and the risk of coronary heart disease-related death,” Heart 92(11), 1583–1587 (2006). doi:10.1136/hrt.2006.090522

6. Parr J. C., Spears G. F., “General caliber of the retinal arteries expressed as the equivalent width of the central retinal artery,” Am. J. Ophthalmol. 77(4), 472–477 (1974). doi:10.1016/0002-9394(74)90457-7

7. Knudtson M. D., Lee K. E., Hubbard L. D., Wong T. Y., Klein R., Klein B. E. K., “Revised formulas for summarizing retinal vessel diameters,” Curr. Eye Res. 27(3), 143–149 (2003). doi:10.1076/ceyr.27.3.143.16049

8. Jones W., Karamchandani G., Panoramic Ophthalmoscopy, Optomap Images and Interpretation (SLACK Incorporated, 2007).

9. Chaudhuri S., Chatterjee S., Katz N., Nelson M., Goldbaum M., “Detection of blood vessels in retinal images using two-dimensional matched filters,” IEEE Trans. Med. Imaging 8(3), 263–269 (1989). doi:10.1109/42.34715

10. Staal J., Abràmoff M. D., Niemeijer M., Viergever M. A., van Ginneken B., “Ridge-based vessel segmentation in color images of the retina,” IEEE Trans. Med. Imaging 23(4), 501–509 (2004). doi:10.1109/TMI.2004.825627

11. Mendonça A. M., Campilho A., “Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction,” IEEE Trans. Med. Imaging 25(9), 1200–1213 (2006). doi:10.1109/TMI.2006.879955

12. Ricci E., Perfetti R., “Retinal blood vessel segmentation using line operators and support vector classification,” IEEE Trans. Med. Imaging 26(10), 1357–1365 (2007). doi:10.1109/TMI.2007.898551

13. Xu J., Ishikawa H., Wollstein G., Schuman J. S., “Retinal vessel segmentation on SLO image,” Conf. Proc. IEEE Eng. Med. Biol. Soc. 2008, 2258–2261 (2008).

14. Robertson G., Pellegrini E., Gray C., Trucco E., MacGillivray T., “Investigating post-processing of scanning laser ophthalmoscope images for unsupervised retinal blood vessel detection,” in Proceedings of IEEE 26th International Symposium on Computer-Based Medical Systems (IEEE, 2013), pp. 441–444. doi:10.1109/CBMS.2013.6627836

15. Soares J. V. B., Leandro J. J. G., Cesar Júnior R. M., Jelinek H. F., Cree M. J., “Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification,” IEEE Trans. Med. Imaging 25(9), 1214–1222 (2006). doi:10.1109/TMI.2006.879967

16. Bankhead P., Scholfield C. N., McGeown J. G., Curtis T. M., “Fast retinal vessel detection and measurement using wavelets and edge location refinement,” PLoS ONE 7(3), e32435 (2012). doi:10.1371/journal.pone.0032435

17. Lupaşcu C. A., Tegolo D., Trucco E., “Accurate estimation of retinal vessel width using bagged decision trees and an extended multiresolution Hermite model,” Med. Image Anal. 17(8), 1164–1180 (2013). doi:10.1016/j.media.2013.07.006

18. Newby D. E., Williams M. C., Flapan A. D., Forbes J. F., Hargreaves A. D., Leslie S. J., Lewis S. C., McKillop G., McLean S., Reid J. H., Sprat J. C., Uren N. G., van Beek E. J., Boon N. A., Clark L., Craig P., Flather M. D., McCormack C., Roditi G., Timmis A. D., Krishan A., Donaldson G., Fotheringham M., Hall F. J., Neary P., Cram L., Perkins S., Taylor F., Eteiba H., Rae A. P., Robb K., Barrie D., Bissett K., Dawson A., Dundas S., Fogarty Y., Ramkumar P. G., Houston G. J., Letham D., O’Neill L., Pringle S. D., Ritchie V., Sudarshan T., Weir-McCall J., Cormack A., Findlay I. N., Hood S., Murphy C., Peat E., Allen B., Baird A., Bertram D., Brian D., Cowan A., Cruden N. L., Dweck M. R., Flint L., Fyfe S., Keanie C., MacGillivray T. J., Maclachlan D. S., MacLeod M., Mirsadraee S., Morrison A., Mills N. L., Minns F. C., Phillips A., Queripel L. J., Weir N. W., Bett F., Divers F., Fairley K., Jacob A. J., Keegan E., White T., Gemmill J., Henry M., McGowan J., Dinnel L., Francis C. M., Sandeman D., Yerramasu A., Berry C., Boylan H., Brown A., Duffy K., Frood A., Johnstone J., Lanaghan K., MacDuff R., MacLeod M., McGlynn D., McMillan N., Murdoch L., Noble C., Paterson V., Steedman T., Tzemos N., “Role of multidetector computed tomography in the diagnosis and management of patients attending the rapid access chest pain clinic, The Scottish computed tomography of the heart (SCOT-HEART) trial: study protocol for randomized controlled trial,” Trials 13(1), 184 (2012). doi:10.1186/1745-6215-13-184

19. Robertson G., Peto T., Dhillon B., Williams M. C., Trucco E., van Beek E. J. R., Houston G., Newby D. E., MacGillivray T., “Wide-field Ophthalmic Imaging for Biomarkers Discovery in Coronary Heart Disease,” presented at the ARVO ISIE Imaging Conference, Seattle, US, May 2013.

20. Fraz M. M., Remagnino P., Hoppe A., Uyyanonvara B., Rudnicka A. R., Owen C. G., Barman S. A., “Blood vessel segmentation methodologies in retinal images--a survey,” Comput. Methods Programs Biomed. 108(1), 407–433 (2012). doi:10.1016/j.cmpb.2012.03.009

21. MacGillivray T., Perez-Rovira A., Trucco E., Chin K. S., Giachetti A., Lupascu C., Tegolo D., Wilson P. J., Doney A., Laude A., Dhillon B., “VAMPIRE: Vessel Assessment and Measurement Platform for Images of the Retina,” in Human Eye Imaging and Modelling, Ng E. Y. K., Tan J. H., Acharya U. R., Suri J. S., eds. (CRC Press, 2012).

22. Jan J., Odstrcilik J., Gazarek J., Kolar R., “Retinal image analysis aimed at blood vessel tree segmentation and early detection of neural-layer deterioration,” Comput. Med. Imaging Graph. 36(6), 431–441 (2012). doi:10.1016/j.compmedimag.2012.04.006

23. Frangi A. F., Niessen W. J., Vincken K. L., Viergever M. A., “Multiscale vessel enhancement filtering,” in MICCAI 1998 Lecture Notes in Computer Science, Wells W. M., Colchester A. C. F., Delp S. L., eds. (Springer, 1998).

24. Cavinato A., Ballerini L., Trucco E., Grisan E., “Spline-based refinement of vessel contours in fundus retinal images for width estimation,” in Proceedings of IEEE International Symposium on Biomedical Imaging: from Nano to Macro (IEEE, 2013), pp. 860–863. doi:10.1109/ISBI.2013.6556614

25. Carletta J., “Assessing agreement on classification tasks: the kappa statistics,” Comput. Linguist. 22(2), 249–254 (1996).

26. Landis J. R., Koch G. G., “The measurement of observer agreement for categorical data,” Biometrics 33(1), 159–174 (1977). doi:10.2307/2529310

27. McNemar Q., “Note on the sampling error of the difference between correlated proportions or percentages,” Psychometrika 12(2), 153–157 (1947). doi:10.1007/BF02295996

28. Doubal F. N., MacGillivray T. J., Patton N., Dhillon B., Dennis M. S., Wardlaw J. M., “Fractal analysis of retinal vessels suggests that a distinct vasculopathy causes lacunar stroke,” Neurology 74(14), 1102–1107 (2010). doi:10.1212/WNL.0b013e3181d7d8b4

29. Croft D. E., van Hemert J., Wykoff C. C., Clifton D., Verhoek M., Fleming A., Brown D. M., “Precise montaging and metric quantification of retinal surface area from ultra-widefield fundus photography and fluorescein angiography,” Ophthalmic Surg. Lasers Imaging Retina 45(4), 312–317 (2014). doi:10.3928/23258160-20140709-07