Abstract

We address the problem of sparse selection in linear models. A number of nonconvex penalties have been proposed in the literature for this purpose, along with a variety of convex-relaxation algorithms for finding good solutions. In this article we pursue a coordinate-descent approach for optimization, and study its convergence properties. We characterize the properties of penalties suitable for this approach, study their corresponding threshold functions, and describe a df-standardizing reparametrization that assists our pathwise algorithm. The MC+ penalty is ideally suited to this task, and we use it to demonstrate the performance of our algorithm. Certain technical derivations and experiments related to this article are included in the Supplementary Materials section.

Keywords: Degrees of freedom, LASSO, Nonconvex optimization, Regularization surface, Sparse regression, Variable selection

1. INTRODUCTION

Consider the usual linear regression setup

$$y = X\beta + \epsilon \tag{1}$$

with n observations and p features X = [x1, …, xp], response y, coefficient vector β and (stochastic) error ε. In many modern statistical applications with p ≫ n, the true β vector is often sparse, with many redundant predictors having coefficient zero. We would like to identify the useful predictors and also obtain good estimates of their coefficients. Identifying a set of relevant features from a list of many thousand is in general combinatorially hard and statistically troublesome. In this context, convex relaxation techniques such as the LASSO (Chen and Donoho 1994; Tibshirani 1996) have been effectively used for simultaneously producing accurate and parsimonious models. The LASSO solves

$$\min_{\beta}\; \tfrac{1}{2}\|y - X\beta\|^2 + \lambda\|\beta\|_1. \tag{2}$$

The ℓ1 penalty shrinks coefficients towards zero, and can also set many coefficients to be exactly zero. In the context of variable selection, the LASSO is often thought of as a convex surrogate for best-subset selection:

$$\min_{\beta}\; \tfrac{1}{2}\|y - X\beta\|^2 + \lambda\sum_{j=1}^{p} I(|\beta_j| > 0). \tag{3}$$

The ℓ0 penalty penalizes the number of nonzero coefficients in the model.

The LASSO enjoys attractive statistical properties (Knight and Fu 2000; Donoho 2006; Meinshausen and Bühlmann 2006; Zhao and Yu 2006). Under certain regularity conditions on X, it produces models with good prediction accuracy when the underlying model is reasonably sparse. Zhao and Yu (2006) established that the LASSO is model selection consistent: Pr(Â = A₀) → 1, where A₀ corresponds to the set of nonzero coefficients (active) in the true model and Â those recovered by the LASSO. Typical assumptions limit the pairwise correlations between the variables.

However, when these regularity conditions are violated, the LASSO can be suboptimal in model selection (Zou 2006; Friedman 2008; Zhang and Huang 2008; Zou and Li 2008; Zhang 2010a). Since the LASSO both shrinks and selects, it often selects a model that is overly dense in its effort to relax the penalty on the relevant coefficients. Typically in such situations greedier methods like subset regression and the nonconvex methods we discuss here achieve sparser models than the LASSO for the same or better prediction accuracy, and enjoy superior variable-selection properties.

There are computationally attractive algorithms for the LASSO. The piecewise-linear LASSO coefficient paths can be computed efficiently via the LARS (homotopy) algorithm (Osborne, Presnell, and Turlach 2000; Efron et al. 2004). Coordinate-wise optimization algorithms (Friedman, Hastie, and Tibshirani 2009) appear to be the fastest for computing the regularization paths for a variety of loss functions, and scale well. One-at-a-time coordinate-wise methods for the LASSO make repeated use of the univariate soft-thresholding operator

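$$S(\tilde\beta, \lambda) = \arg\min_{\beta}\left\{\tfrac{1}{2}(\beta - \tilde\beta)^2 + \lambda|\beta|\right\} = \operatorname{sgn}(\tilde\beta)\,(|\tilde\beta| - \lambda)_+. \tag{4}$$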

In solving (2), the one-at-a-time coordinate-wise updates are given by

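$$\tilde\beta_j = S\!\left(\sum_{i=1}^{n}\bigl(y_i - \tilde y_i^{\,j}\bigr)x_{ij},\; \lambda\right), \quad j = 1, \ldots, p, \tag{5}$$

where $\tilde y_i^{\,j} = \sum_{k \ne j} x_{ik}\tilde\beta_k$ (assuming each xj is standardized to have mean zero and unit ℓ2 norm). Starting with an initial guess for β̃ (typically a solution at the previous value for λ), we cyclically update the parameters using (5) until convergence.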
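The cyclic update described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the function names `soft_threshold` and `lasso_cd`, the list-of-rows layout for X, and the stopping rule are all assumptions made for the example, and the columns of X are assumed to have unit ℓ2 norm as in the text.

```python
def soft_threshold(b, lam):
    """Soft-thresholding: sgn(b) * max(|b| - lam, 0)."""
    if b > lam:
        return b - lam
    if b < -lam:
        return b + lam
    return 0.0


def lasso_cd(X, y, lam, beta=None, n_sweeps=1000, tol=1e-10):
    """Cyclic coordinate descent for 0.5*||y - X b||^2 + lam*||b||_1.

    X is a list of n rows; every column is assumed to have unit l2 norm.
    `beta` is an optional warm start (hypothetical argument name).
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p if beta is None else list(beta)
    # Full residual r = y - X beta, kept up to date after every update.
    r = [y[i] - sum(X[i][k] * beta[k] for k in range(p)) for i in range(n)]
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # x_j' (partial residual): add back the contribution of beta_j.
            z = sum(X[i][j] * r[i] for i in range(n)) + beta[j]
            new = soft_threshold(z, lam)
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    r[i] -= X[i][j] * delta
                beta[j] = new
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta
```

Passing the solution at the previous value of λ as `beta` gives the warm start mentioned in the text.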

The LASSO can fail as a variable selector. In order to get the full effect of a relevant variable, we have to relax the penalty, which lets in other redundant but possibly correlated features. This is in contrast to best-subset regression; once a strong variable is included and fully fit, it drains the effect of its correlated surrogates.

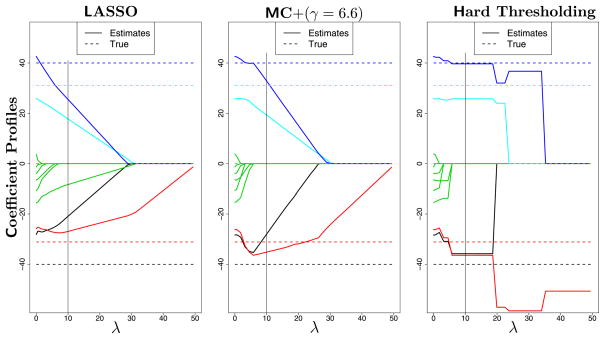

As an illustration, Figure 1 shows the regularization path of the LASSO coefficients for a situation where it is suboptimal for model selection. (The simulation setup is defined in Section 7. Here n = 40, p = 10, SNR = 3, β = (−40, −31, 0_{1×6}, 31, 40) and X ~ MVN(0, Σ), where Σ = diag[Σ(0.65; 5), Σ(0; 5)].)

Figure 1.

Regularization path for LASSO (left), MC+ (nonconvex) penalized least-squares for γ = 6.6 (center) and γ = 1+ (right), corresponding to the hard-thresholding operator (best subset). The true coefficients are shown as horizontal dotted lines. Here LASSO is suboptimal for model selection, as it can never recover the true model. The other two penalized criteria are able to select the correct model (vertical lines), with the middle one having smoother coefficient profiles than best subset on the right.

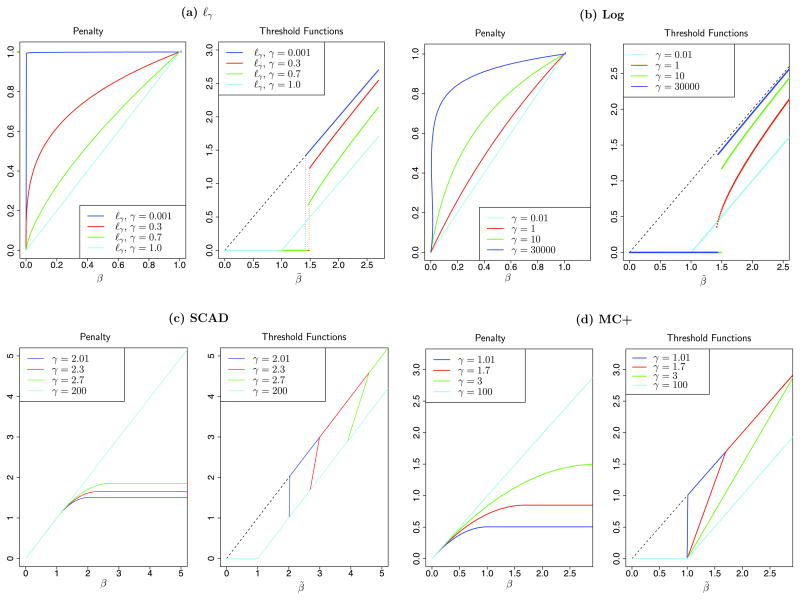

Figure 2.

Nonconvex penalty families and their corresponding threshold functions. All are shown with λ = 1 and different values for γ.

This motivates going beyond the ℓ1 regime to more aggressive nonconvex penalties (see the left-hand plots for each of the four penalty families in Figure 2), bridging the gap between ℓ1 and ℓ0 (Fan and Li 2001; Zou 2006; Friedman 2008; Zou and Li 2008; Zhang 2010a). Similar to (2), we minimize

$$Q(\beta) = \tfrac{1}{2}\|y - X\beta\|^2 + \lambda\sum_{j=1}^{p} P(|\beta_j|; \lambda; \gamma), \tag{6}$$

where P(|β|; λ; γ) defines a family of penalty functions concave in |β|, and λ and γ control the degrees of regularization and concavity of the penalty respectively.

The main challenge here is in the minimization of the possibly nonconvex objective Q(β). As one moves down the continuum of penalties from ℓ1 to ℓ0, the optimization potentially becomes combinatorially hard (the optimization problems become nonconvex when the nonconvexity of the penalty is no longer dominated by the convexity of the squared error loss).

Our contributions in this article are as follows:

We propose a coordinate-wise optimization algorithm SparseNet for finding minima of Q(β). Our algorithm cycles through both λ and γ, producing solution surfaces β̂λ, γ for all families simultaneously. For each value of λ, we start at the LASSO solution, and then update the solutions via coordinate descent as γ changes, moving us towards best-subset regression.

We study the generalized univariate thresholding functions Sγ(β̃, λ) that arise from different nonconvex penalties (6), and map out a set of properties that make them more suitable for coordinate descent. In particular, we seek continuity (in β̃) of these threshold functions for both λ and γ.

We prove convergence of coordinate descent for a useful subclass of nonconvex penalties, generalizing the results of Tseng and Yun (2009) to nonconvex problems. Our results go beyond those of Tseng (2001) and Zou and Li (2008); they study stationarity properties of limit points, and not convergence of the sequence produced by the algorithms.

We propose a reparametrization of the penalty families that makes them even more suitable for coordinate-descent. Our reparametrization constrains the coordinate-wise effective degrees of freedom at any value of λ to be constant as γ varies. This in turn allows for a natural transition across neighboring solutions as we move through values of γ from the convex LASSO towards best-subset selection, with the size of the active set decreasing along the way.

We compare our algorithm to the state of the art for this class of problems, and show how our approaches lead to improvements.

Note that this article is about an algorithm for solving a nonconvex optimization problem. What we produce is a good estimate for the solution surfaces. We do not go into methods for selecting the tuning parameters, nor the properties of the resulting estimators.

The article is organized as follows. In Section 2 we study four families of nonconvex penalties, and their induced thresholding operators. We study their properties, particularly from the point of view of coordinate descent. We propose a degree of freedom (df) calibration, and lay out a list of desirable properties of penalties for our purposes. In Section 3 we describe our SparseNet algorithm for finding a surface of solutions for all values of the tuning parameters. In Section 5 we illustrate and implement our approach using the MC+ penalty (Zhang 2010a) or the firm shrinkage threshold operator (Gao and Bruce 1997). In Section 6 we study the convergence properties of our SparseNet algorithm. Section 7 presents simulations under a variety of conditions to demonstrate the performance of SparseNet. Section 8 investigates other approaches, and makes comparisons with SparseNet and multistage local linear approximation (MLLA/LLA) (Candes, Wakin, and Boyd 2008; Zou and Li 2008; Zhang 2010b). The proofs of lemmas and theorems are gathered in the Appendix, and certain technical discussions/examples are relegated to the Supplementary Materials section.

2. GENERALIZED THRESHOLDING OPERATORS

In this section we examine the one-dimensional optimization problems that arise in coordinate descent minimization of Q(β). With squared-error loss, this reduces to minimizing

$$Q^{(1)}(\beta) = \tfrac{1}{2}(\beta - \tilde\beta)^2 + \lambda P(|\beta|; \lambda; \gamma). \tag{7}$$

We study (7) for different nonconvex penalties, as well as the associated generalized threshold operator

$$S_\gamma(\tilde\beta, \lambda) = \arg\min_{\beta}\, Q^{(1)}(\beta). \tag{8}$$

As γ varies, this generates a family of threshold operators Sγ(·, λ) : ℜ → ℜ. The soft-threshold operator (4) of the LASSO is a member of this family. The hard-thresholding operator (9) can also be represented in this form

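$$H(\tilde\beta, \lambda) = \arg\min_{\beta}\left\{\tfrac{1}{2}(\beta - \tilde\beta)^2 + \tfrac{\lambda^2}{2}\, I(|\beta| > 0)\right\} = \tilde\beta\, I(|\tilde\beta| \ge \lambda). \tag{9}$$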

Our interest in thresholding operators arose from the work of She (2010), who also uses them in the context of sparse variable selection, and studies their properties for this purpose. Our approaches differ, however, in that our implementation uses coordinate descent, and exploits the structure of the problem in this context.

For a better understanding of nonconvex penalties and the associated threshold operators, it is helpful to look at some examples. For each penalty family (a)–(d), there is a pair of plots in Figure 2; the left plot is the penalty function, the right plot the induced thresholding operator.

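(a) The ℓγ penalty, given by λP(t; λ; γ) = λ|t|^γ for γ ∈ [0, 1], also referred to as the bridge or power family (Frank and Friedman 1993; Friedman 2008).

(b) The log-penalty is a generalization of the elastic net family (Friedman 2008) to cover the nonconvex penalties from LASSO down to best subset:

$$\lambda P(t; \lambda; \gamma) = \frac{\lambda}{\log(\gamma + 1)}\,\log(\gamma|t| + 1), \quad \gamma > 0, \tag{10}$$

where for each value of λ we get the entire continuum of penalties from ℓ1 (γ → 0+) to ℓ0 (γ → ∞).

(c) The SCAD penalty (Fan and Li 2001) is defined via

$$\frac{d}{dt}P(t; \lambda; \gamma) = I(t \le \lambda) + \frac{(\gamma\lambda - t)_+}{(\gamma - 1)\lambda}\, I(t > \lambda) \quad \text{for } t > 0,\ \gamma > 2, \qquad P(t; \lambda; \gamma) = P(-t; \lambda; \gamma), \quad P(0; \lambda; \gamma) = 0. \tag{11}$$

(d) The MC+ family of penalties (Zhang 2010a) is defined by

$$\lambda P(t; \lambda; \gamma) = \lambda\int_0^{|t|}\left(1 - \frac{x}{\gamma\lambda}\right)_+ dx = \lambda\left(|t| - \frac{t^2}{2\lambda\gamma}\right) I(|t| < \lambda\gamma) + \frac{\lambda^2\gamma}{2}\, I(|t| \ge \lambda\gamma). \tag{12}$$

For each value of λ > 0 there is a continuum of penalties and threshold operators, varying from γ → ∞ (soft threshold operator) to γ → 1+ (hard threshold operator). The MC+ is a reparametrization of the firm shrinkage operator introduced by Gao and Bruce (1997) in the context of wavelet shrinkage.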

Other examples of nonconvex penalties include the transformed ℓ1 penalty (Nikolova 2000) and the clipped ℓ1 penalty (Zhang 2010b).

Although each of these four families bridges ℓ1 and ℓ0, they have different properties. The two in the top row in Figure 2, for example, have discontinuous univariate threshold functions, which would cause instability in coordinate descent. The threshold operators for the ℓγ, log-penalty and the MC+ form a continuum between the soft and hard-thresholding functions. The family of SCAD threshold operators, although continuous, does not include H(·, λ). We study some of these properties in more detail in Section 4.

3. SparseNet: ALGORITHM TO CONSTRUCT THE REGULARIZATION SURFACE β̂λ,γ

We now present our SparseNet algorithm for obtaining a family of solutions β̂γ, λ to (6). The X matrix is assumed to be standardized with each column having zero mean and unit ℓ2 norm. For simplicity, we assume γ = ∞ corresponds to the LASSO and γ = 1+ to the hard-thresholding members of the penalty families. The basic idea is as follows. For γ = ∞, we compute the exact solution path for Q(β) as a function of λ using coordinate-descent. These solutions are used as warm-starts for the minimization of Q(β) at a smaller value of γ, corresponding to a more nonconvex penalty. We continue in this fashion, decreasing γ, until we have the solution paths across a grid of values for γ. The details are given in Algorithm 1.

In Section 4 we discuss certain properties of penalty functions and their threshold functions that are suited to this algorithm. We also discuss a particular form of df recalibration that provides attractive warm-starts and at the same time adds statistical meaning to the scheme.

We have found two variants of Algorithm 1 useful in practice:

In computing the solution at (γk, λℓ), the algorithm uses as a warm start the solution β̂γk+1,λℓ. We run a parallel coordinate descent with warm start β̂γk,λℓ+1, and then pick the solution with a smaller value for the objective function. This often leads to improved and smoother objective-value surfaces.

It is sometimes convenient to have a different λ sequence for each value of γ —for example, our recalibrated penalties lead to such a scheme using a doubly indexed sequence λkℓ.

Algorithm 1.

SparseNet

|
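The warm-start scheme described in this section can be sketched as follows. This is a rough illustration under stated assumptions, not a transcription of Algorithm 1: it uses the MC+ threshold in its usual piecewise-linear (firm shrinkage) form without the df calibration of Section 5.1, treats `gamma = math.inf` as the LASSO member, and the names `mcplus_threshold`, `coordinate_descent`, and `sparsenet` are hypothetical.

```python
import math


def mcplus_threshold(b, lam, gamma):
    """Assumed MC+ (firm shrinkage) threshold; gamma = inf gives soft thresholding."""
    a = abs(b)
    if a <= lam:
        return 0.0
    if a <= lam * gamma:
        return math.copysign((a - lam) / (1.0 - 1.0 / gamma), b)
    return b


def coordinate_descent(X, y, lam, gamma, beta, n_sweeps=500, tol=1e-9):
    """Cyclic coordinate-wise updates from the warm start `beta`."""
    n, p = len(X), len(X[0])
    beta = list(beta)
    r = [y[i] - sum(X[i][k] * beta[k] for k in range(p)) for i in range(n)]
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # Columns of X are assumed to have unit l2 norm.
            z = sum(X[i][j] * r[i] for i in range(n)) + beta[j]
            delta = mcplus_threshold(z, lam, gamma) - beta[j]
            if delta != 0.0:
                for i in range(n):
                    r[i] -= X[i][j] * delta
                beta[j] += delta
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


def sparsenet(X, y, lambdas, gammas):
    """Return {(gamma, lam): beta} over the grid.

    For each lam (largest first) the largest gamma is solved first, warm-started
    from the largest-gamma solution at the previous lam; each smaller gamma is
    then warm-started from its neighbour at the same lam.
    """
    surface = {}
    start = [0.0] * len(X[0])
    for lam in sorted(lambdas, reverse=True):
        beta = start
        for k, gamma in enumerate(sorted(gammas, reverse=True)):
            beta = coordinate_descent(X, y, lam, gamma, beta)
            surface[(gamma, lam)] = beta
            if k == 0:
                start = beta  # warm start for the next, smaller lam
    return surface
```

A call such as `sparsenet(X, y, lambdas=[1.0, 0.5], gammas=[math.inf, 3.0, 1.5])` returns one coefficient vector per grid point. The first variant mentioned above would additionally run a second descent from the solution at (γk, λℓ+1) and keep whichever attains the smaller objective.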

In Section 5 we implement SparseNet using the calibrated MC+ penalty, which enjoys all the properties outlined in Section 4.3 below. We show that the algorithm converges (Section 6) to a stationary point of Q(β) for every (λ, γ).

4. PROPERTIES OF FAMILIES OF NONCONVEX PENALTIES

Not all nonconvex penalties are suitable for use with coordinate descent. Here we describe some desirable properties, and a recalibration suitable for our optimization Algorithm 1.

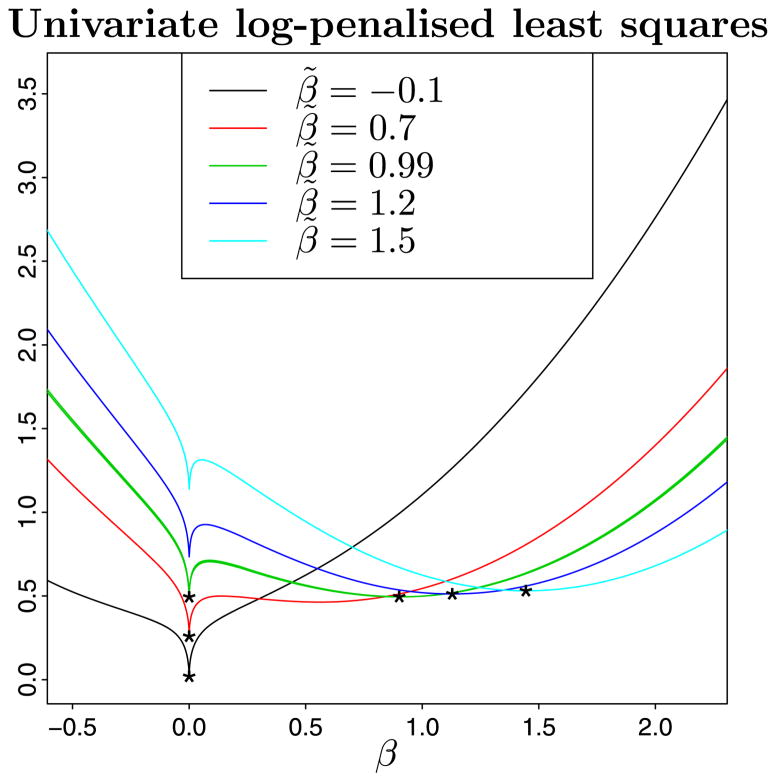

4.1 Effect of Multiple Minima in Univariate Criteria

Figure 3 shows the univariate penalized criterion (7) for the log-penalty (10) for certain choices of β̃ and a (λ, γ) combination. Here we see the nonconvexity of Q(1)(β), and the transition (with β̃) of the global minimizers of the univariate functions. This causes the discontinuity of the induced threshold operators β̃ ↦ Sγ(β̃, λ), as shown in Figure 2(b). Multiple minima and the consequent discontinuity appear in the ℓγ, γ < 1 penalty as well, as seen in Figure 2(a).

Figure 3.

The penalized least-squares criterion (7) with the log-penalty (10) for (γ, λ) = (500, 0.5) for different values of β̃. The “*” denotes the global minimum of each function. The “transition” of the minimizers creates discontinuity in the induced threshold operators.

It has been observed (Breiman 1996; Fan and Li 2001) that discontinuity of the threshold operators leads to increased variance (and hence poor risk properties) of the estimates. We observe that for the log-penalty (for example), coordinate-descent can produce multiple limit points (without converging)—creating statistical instability in the optimization procedure. We believe discontinuities such as this will naturally affect other optimization algorithms; for example, those based on sequential convex relaxation such as MLLA. In the Supplementary Materials Section B.4 we see that MLLA gets stuck in a suboptimal local minimum, even for the univariate log-penalty problem. This phenomenon is aggravated for the ℓγ penalty.

Multiple minima, and hence the discontinuity problem, are not an issue in the case of the MC+ penalty or the SCAD [Figures 2(c) and (d)].

Our study of these phenomena leads us to conclude that if the univariate functions Q(1)(β) (7) are strictly convex, then the coordinate-wise procedure is well behaved and converges to a stationary point. This turns out to be the case for the MC+ for γ > 1 and the SCAD penalties. Furthermore we see in Section 5 in (18) that this restriction on the MC+ penalty for γ still gives us the entire continuum of threshold operators from soft to hard thresholding.

Strict convexity of Q(1)(β) also occurs for the log-penalty for some choices of λ and γ, but not enough to cover the whole family. For example, the ℓγ family with γ < 1 does not qualify. This is not surprising since with γ < 1 it has an unbounded derivative at zero, and is well known to be unstable in optimization.
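The contrast between a discontinuous and a continuous threshold operator can be checked numerically. The sketch below minimizes the univariate criterion by brute-force grid search and reports the largest jump of the resulting threshold function between neighbouring inputs. The two penalty formulas are the commonly stated forms of the log-penalty and the MC+ penalty and are assumptions of this example, as are the function names, the grid resolutions, and the choice γ = 3 for MC+. The log-penalty setting (γ, λ) = (500, 0.5) follows Figure 3.

```python
import math


def log_penalty(t, lam, gamma):
    """Assumed log-penalty: lam * log(gamma*|t| + 1) / log(gamma + 1)."""
    return lam * math.log(gamma * abs(t) + 1.0) / math.log(gamma + 1.0)


def mcplus_penalty(t, lam, gamma):
    """Assumed MC+ penalty: quadratic up to |t| = lam*gamma, constant beyond."""
    a = abs(t)
    if a < lam * gamma:
        return lam * (a - a * a / (2.0 * lam * gamma))
    return 0.5 * lam * lam * gamma


def numeric_threshold(b, penalty, lam, gamma, h=1e-3):
    """Grid-search minimizer of 0.5*(beta - b)^2 + penalty(beta).

    The penalties are symmetric and nondecreasing in |beta|, so the
    minimizer lies between 0 and b and the search is limited to [0, |b|].
    """
    a = abs(b)
    grid = [i * h for i in range(int(round(a / h)) + 1)]
    best = min(grid, key=lambda t: 0.5 * (t - a) ** 2 + penalty(t, lam, gamma))
    return math.copysign(best, b)


def max_jump(penalty, lam, gamma, step=0.01, upper=2.0):
    """Largest change of the threshold function between neighbouring inputs."""
    bs = [i * step for i in range(int(round(upper / step)) + 1)]
    vals = [numeric_threshold(b, penalty, lam, gamma) for b in bs]
    return max(abs(v1 - v0) for v0, v1 in zip(vals, vals[1:]))


jump_log = max_jump(log_penalty, lam=0.5, gamma=500.0)
jump_mcp = max_jump(mcplus_penalty, lam=0.5, gamma=3.0)
print(f"largest jump, log-penalty: {jump_log:.3f}")
print(f"largest jump, MC+:         {jump_mcp:.3f}")
```

A jump far larger than the input spacing of 0.01 indicates a discontinuity, which is the behaviour the text attributes to the log-penalty, while the MC+ jumps stay on the order of the spacing.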

4.2 Effective df and Nesting of Shrinkage-Thresholds

For a LASSO fit μ̂λ(x), λ controls the extent to which we (over)fit the data. This can be expressed in terms of the effective degrees of freedom of the estimator. For our nonconvex penalty families, the df are influenced by γ as well. We propose to recalibrate our penalty families so that for fixed λ, the coordinate-wise df do not change with γ. This has important consequences for our pathwise optimization strategy.

For a linear model, df is simply the number of parameters fit. More generally, under an additive error model, df is defined by (Stein 1981; Efron et al. 2004)

$$\mathrm{df}(\hat\mu_{\lambda,\gamma}) = \sum_{i=1}^{n} \operatorname{Cov}\bigl(\hat\mu_{\lambda,\gamma}(x_i),\, y_i\bigr)/\sigma^2, \tag{14}$$

where $\{(x_i, y_i)\}_{i=1}^{n}$ is the training sample and σ² is the noise variance. For a LASSO fit, the df is estimated by the number of nonzero coefficients (Zou, Hastie, and Tibshirani 2007). Suppose we compare the LASSO fit with k nonzero coefficients to an unrestricted least-squares fit in those k variables. The LASSO coefficients are shrunken towards zero, yet have the same df? The reason is that the LASSO is “charged” for identifying the nonzero variables. Likewise a model with k coefficients chosen by best-subset regression has df greater than k (here we do not have exact formulas). Unlike the LASSO, these are not shrunk, but are being charged the extra df for the search.

Hence for both the LASSO and best subset regression we can think of the effective df as a function of λ. More generally, penalties corresponding to a smaller degree of nonconvexity shrink more than those with a higher degree of nonconvexity, and are hence charged less in df per nonzero coefficient. For the family of penalized regressions from ℓ1 to ℓ0, df is controlled by both λ and γ.

In this article we provide a recalibration of the family of penalties P(·; λ; γ) such that for every value of λ the coordinate-wise df across the entire continuum of γ values are approximately the same. Since we rely on coordinate-wise updates in the optimization procedures, we will ensure this by calibrating the df in the univariate thresholding operators. Details are given in Section 5.1 for a specific example.

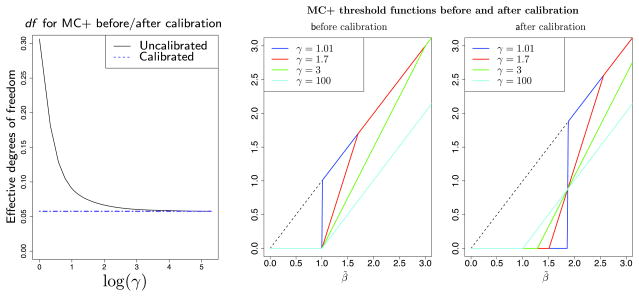

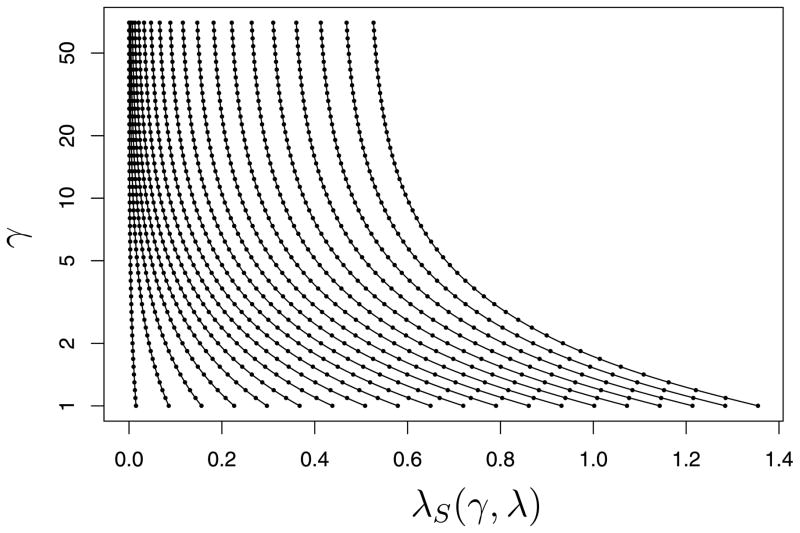

We define the shrinkage-threshold λS of a thresholding operator as the largest (absolute) value that is set to zero. For the soft-thresholding operator of the LASSO, this is λ itself. However, the LASSO also shrinks the nonzero values toward zero. The hard-thresholding operator, on the other hand, leaves all values that survive its shrinkage threshold λH alone; in other words it fits the data more aggressively. So for the df of the soft and hard thresholding operators to be the same, the shrinkage-threshold λH for hard thresholding should be larger than the λ for soft thresholding. More generally, to maintain a constant df there should be a monotonicity (increase) in the shrinkage-thresholds λS as one moves across the family of threshold operators from the soft to the hard threshold operator. Figure 4 illustrates these phenomena for the MC+ penalty, before and after calibration.

Figure 4.

Left: The df (solid line) for the (uncalibrated) MC+ threshold operators as a function of γ, for a fixed λ = 1. The dotted line shows the df after calibration. Middle: Family of MC+ threshold functions, for different values of γ, before calibration. All have shrinkage threshold λS = 1. Right: Calibrated versions of the same. The shrinkage threshold of the soft-thresholding operator is λ = 1, but as γ decreases, λS increases, forming a continuum between soft and hard thresholding.

Why the need for calibration? Because of the possibility of multiple stationary points in a nonconvex optimization problem, good warm-starts are essential for avoiding suboptimal solutions. The calibration assists in efficiently computing the doubly regularized (γ, λ) paths for the coefficient profiles. For fixed λ we start with the exact LASSO solution (large γ). This provides an excellent warm start for the problem with a slightly decreased value for γ. This is continued in a gradual fashion as γ approaches the best-subset problem. The df calibration provides a natural path across neighboring solutions in the (λ, γ) space:

λS increases slowly, decreasing the size of the active set

at the same time, the active coefficients are shrunk successively less.

We find that the calibration keeps the algorithm away from sub-optimal stationary points and accelerates the speed of convergence.

4.3 Desirable Properties for a Family of Threshold Operators

Consider the family of threshold operators

$$S_\gamma(\cdot, \lambda) : \Re \to \Re, \qquad \gamma \in (\gamma_0, \gamma_1).$$

Based on our observations on the properties and irregularities of the different penalties and their associated threshold operators, we have compiled a list of properties we consider desirable:

1. γ ∈ (γ0, γ1) should bridge the gap between soft and hard thresholding, with the following continuity at the end points

$$\lim_{\gamma \to \gamma_1-} S_\gamma(\tilde\beta, \lambda) = \operatorname{sgn}(\tilde\beta)\,(|\tilde\beta| - \lambda)_+ \quad\text{and}\quad \lim_{\gamma \to \gamma_0+} S_\gamma(\tilde\beta, \lambda) = \tilde\beta\, I(|\tilde\beta| \ge \lambda_H), \tag{15}$$

where λ and λH correspond to the shrinkage thresholds of the soft and hard threshold operators respectively.

2. λ should control the effective df in the family Sγ(·, λ); for fixed λ, the effective df of Sγ(·, λ) for all values of γ should be the same.

3. For every fixed λ, there should be a strict nesting (increase) of the shrinkage thresholds λS as γ decreases from γ1 to γ0.

4. The map β̃ ↦ Sγ(β̃, λ) should be continuous.

5. The univariate penalized least squares function Q(1)(β) (7) should be convex for every β̃. This ensures that coordinate-wise procedures converge to a stationary point. In addition this implies continuity of β̃ ↦ Sγ(β̃, λ) in the previous item.

6. The function γ ↦ Sγ(·, λ) should be continuous on γ ∈ (γ0, γ1). This assures a smooth transition as one moves across the family of penalized regressions, in constructing the family of regularization paths.

We believe these properties are necessary for a meaningful analysis for any generic nonconvex penalty. Enforcing them will require reparametrization and some restrictions in the family of penalties (and threshold operators) considered. In terms of the four families discussed in Section 2:

The threshold operator induced by the SCAD penalty does not encompass the entire continuum from the soft to hard thresholding operators (all the others do).

None of the penalties satisfy the nesting property of the shrinkage thresholds (item 3) or the degree of freedom calibration (item 2). In Section 5.1 we recalibrate the MC+ penalty so as to achieve these.

The threshold operators induced by ℓγ and log-penalties are not continuous (item 4); they are for MC+ and SCAD.

Q(1)(β) is strictly convex for both the SCAD and the MC+ penalty. Q(1)(β) is nonconvex for every choice of (λ > 0, γ) for the ℓγ penalty and some choices of (λ > 0, γ) for the log-penalty.

In this article we explore the details of our approach through the study of the MC+ penalty; indeed, it is the only one of the four we consider for which all the properties above are achievable.

5. ILLUSTRATION VIA THE MC+ PENALTY

Here we give details on the recalibration of the MC+ penalty, and the algorithm that results. The MC+ penalized univariate least-squares objective criterion is

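$$Q^{(1)}(\beta) = \tfrac{1}{2}(\beta - \tilde\beta)^2 + \lambda\int_0^{|\beta|}\left(1 - \frac{x}{\gamma\lambda}\right)_+ dx. \tag{16}$$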

This can be shown to be convex for γ ≥ 1, and nonconvex for γ < 1. The minimizer for γ > 1 is a piecewise-linear thresholding function (see Figure 4, middle) given by

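$$S_\gamma(\tilde\beta, \lambda) = \begin{cases} 0 & \text{if } |\tilde\beta| \le \lambda, \\ \operatorname{sgn}(\tilde\beta)\,\dfrac{|\tilde\beta| - \lambda}{1 - 1/\gamma} & \text{if } \lambda < |\tilde\beta| \le \lambda\gamma, \\ \tilde\beta & \text{if } |\tilde\beta| > \lambda\gamma. \end{cases} \tag{17}$$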

Observe that in (17), for fixed λ > 0,

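$$S_\gamma(\tilde\beta, \lambda) \to H(\tilde\beta, \lambda) \ \text{ as } \gamma \to 1+, \qquad S_\gamma(\tilde\beta, \lambda) \to S(\tilde\beta, \lambda) \ \text{ as } \gamma \to \infty. \tag{18}$$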

Hence γ0 = 1+ and γ1 = ∞. It is interesting to note here that the hard-threshold operator, which is conventionally understood to arise from a highly nonconvex ℓ0 penalized criterion (9), can be equivalently obtained as the limit of a sequence {Sγ(β̃, λ)}γ > 1 where each threshold operator is the solution of a convex criterion (16) for γ > 1. For a fixed λ, this gives a family {Sγ(β̃, λ)}γ with the soft and hard threshold operators as its two extremes.
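The two limits can be seen directly in code. The sketch below assumes the usual piecewise-linear form of the MC+ (firm shrinkage) threshold for γ > 1: zero up to λ, a rescaled soft threshold up to λγ, and the identity beyond. The function name `mcplus_threshold` is hypothetical.

```python
def mcplus_threshold(b, lam, gamma):
    """Assumed MC+ threshold for gamma > 1 (piecewise linear in b)."""
    if gamma <= 1.0:
        raise ValueError("gamma must exceed 1")
    a = abs(b)
    if a <= lam:
        return 0.0                      # below the shrinkage threshold
    if a <= lam * gamma:
        sign = 1.0 if b > 0 else -1.0   # rescaled soft threshold
        return sign * (a - lam) / (1.0 - 1.0 / gamma)
    return b                            # large inputs are left alone


lam = 1.0
for b in (0.5, 1.5, 2.5):
    near_hard = mcplus_threshold(b, lam, 1.0 + 1e-9)  # gamma -> 1+
    near_soft = mcplus_threshold(b, lam, 1e9)         # gamma -> infinity
    print(b, near_hard, near_soft)
```

For inputs above λ the near-hard value is essentially the input itself, while the near-soft value is the input shrunk by λ. The middle segment has slope γ/(γ − 1), which grows without bound as γ → 1+ and is what produces the hard-threshold jump in the limit.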

5.1 Calibrating MC+ for df

For a fixed λ, we would like all the thresholding functions Sγ(β̃, λ) to have the same df; this will require a reparametrization. It turns out that this is tractable for the MC+ threshold function.

Consider the following univariate regression model

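$$y_i = x_i\beta + \epsilon_i, \quad i = 1, \ldots, n, \qquad \epsilon_i \overset{\text{iid}}{\sim} N(0, \sigma^2). \tag{19}$$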

Without loss of generality, assume the xi’s have sample mean zero and sample variance one (the xi’s are assumed to be non-random). The df for the threshold operator Sγ(·, λ) is defined by (14) with

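$$\hat\mu_{\gamma,\lambda} = \mathbf{x}\cdot S_\gamma(\tilde\beta, \lambda), \tag{20}$$

where $\mathbf{x} = [x_1, \ldots, x_n]$ and $\tilde\beta = \sum_i x_i y_i / \sum_i x_i^2$. The following theorem gives an explicit expression of the df.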

Theorem 1

For the model described in (19), the df of μ̂γ,λ (20) is given by

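$$\mathrm{df}(\hat\mu_{\gamma,\lambda}) = \frac{\lambda\gamma}{\lambda\gamma - \lambda}\,\Pr(\lambda \le |\tilde\beta| < \lambda\gamma) + \Pr(|\tilde\beta| > \lambda\gamma), \tag{21}$$

where these probabilities are to be calculated under the law β̃ ~ N(β, σ²/n).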

Proof

We make use of Stein’s unbiased risk estimation (Stein 1981, SURE) result. It states that if μ̂ : ℜⁿ → ℜⁿ is an almost differentiable function of y, and y ~ N(μ, σ²Iₙ), then there is a simplification to the df(μ̂) formula (14)

$$\mathrm{df}(\hat\mu) = \sum_{i=1}^{n} \operatorname{Cov}(\hat\mu_i, y_i)/\sigma^2 = E(\nabla\cdot\hat\mu), \tag{22}$$

where $\nabla\cdot\hat\mu = \sum_{i=1}^{n} \partial\hat\mu_i/\partial y_i$. Proceeding along the lines of proof in Zou, Hastie, and Tibshirani (2007), the function μ̂γ,λ : ℜ → ℜ defined in (20) is uniformly Lipschitz (for every λ ≥ 0, γ > 1), and hence almost everywhere differentiable. The result follows easily from the definition (17) and that of β̃, which leads to (21).

As γ → ∞, μ̂γ,λ → μ̂λ, and from (21), we see that

$$\mathrm{df}(\hat\mu_{\lambda}) = \Pr(|\tilde\beta| > \lambda), \tag{23}$$

which corresponds to the expression obtained by Zou, Hastie, and Tibshirani (2007) for the df of the LASSO in the univariate case.

Corollary 1

The df for the hard-thresholding function H(β̃, λ) is given by

$$\mathrm{df}(\hat\mu_{1+,\lambda}) = \lambda\,\phi^*(\lambda) + \Pr(|\tilde\beta| > \lambda), \tag{24}$$

where ϕ* is taken to be the pdf of the absolute value of a normal random variable with mean β and variance σ²/n.

For the hard threshold operator, Stein’s simplified formula does not work, since the corresponding function y ↦ H(β̃, λ) is not almost differentiable. But observing from (21) that

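$$\lim_{\gamma \to 1+} \frac{\lambda\gamma}{\lambda\gamma - \lambda}\,\Pr(\lambda \le |\tilde\beta| < \lambda\gamma) = \lambda\,\phi^*(\lambda), \tag{25}$$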

we get an expression for df as stated. We note here that this limiting process also requires a standard dominated convergence argument, where the second moments are finite due to the Gaussian distribution of the errors.

These expressions are consistent with simulation studies based on Monte Carlo estimates of df. Figure 4(left) shows df as a function of γ for a fixed value λ = 1 for the uncalibrated MC+ threshold operators Sγ(·, λ). For the figure we used β = 0 and σ² = 1.
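The df expressions of this subsection are easy to evaluate numerically. The sketch below assumes the forms that follow from differentiating the piecewise-linear MC+ threshold: a slope of γ/(γ − 1) on the event λ ≤ |β̃| < λγ and a slope of 1 beyond it, with the soft and hard operators as the two limits. The function names are hypothetical, and `sd` stands for the standard deviation σ/√n of β̃.

```python
from statistics import NormalDist


def prob_abs_in(dist, lo, hi):
    """Pr(lo <= |Z| < hi) for Z ~ dist (continuous, so endpoints do not matter)."""
    return (dist.cdf(hi) - dist.cdf(lo)) + (dist.cdf(-lo) - dist.cdf(-hi))


def df_mcplus(lam, gamma, beta=0.0, sd=1.0):
    """df of the uncalibrated MC+ threshold operator, gamma > 1."""
    dist = NormalDist(beta, sd)
    middle = prob_abs_in(dist, lam, lam * gamma)      # sloped segment
    tail = 1.0 - prob_abs_in(dist, 0.0, lam * gamma)  # identity segment
    return gamma / (gamma - 1.0) * middle + tail


def df_soft(lam, beta=0.0, sd=1.0):
    """df of soft thresholding: Pr(|beta_tilde| > lam)."""
    return 1.0 - prob_abs_in(NormalDist(beta, sd), 0.0, lam)


def df_hard(lam, beta=0.0, sd=1.0):
    """df of hard thresholding: lam * phi_star(lam) + Pr(|beta_tilde| > lam)."""
    dist = NormalDist(beta, sd)
    phi_star = dist.pdf(lam) + dist.pdf(-lam)  # density of |beta_tilde| at lam
    return lam * phi_star + df_soft(lam, beta, sd)


for gamma in (1.01, 1.5, 3.0, 10.0, 100.0):
    print(gamma, round(df_mcplus(1.0, gamma), 4))
print("soft:", round(df_soft(1.0), 4), "hard:", round(df_hard(1.0), 4))
```

With β = 0 and unit variance, as used for Figure 4(left), the printed df values fall between the soft and hard limits and grow as γ decreases, which is the behaviour the calibration in the next subsection is designed to remove.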

5.1.1 Reparametrization of the MC+ Penalty

We argued in Section 4.2 that for the df to remain constant for a fixed λ and varying γ, the shrinkage threshold λS = λS(λ, γ) should increase as γ moves from γ1 to γ0. Theorem 3 formalizes this observation.

Hence for the purpose of df calibration, we reparametrize the family of penalties as follows:

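$$\lambda P^*(|t|; \lambda; \gamma) = \lambda_S(\lambda, \gamma)\int_0^{|t|}\left(1 - \frac{x}{\gamma\lambda_S(\lambda, \gamma)}\right)_+ dx. \tag{26}$$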

With the reparametrized penalty, the thresholding function is the obvious modification of (17):

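$$S^*_\gamma(\tilde\beta, \lambda) = \arg\min_{\beta}\left\{\tfrac{1}{2}(\beta - \tilde\beta)^2 + \lambda P^*(|\beta|; \lambda; \gamma)\right\} = S_\gamma(\tilde\beta, \lambda_S(\lambda, \gamma)). \tag{27}$$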

Similarly for the df we simply plug into the formula (21)

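$$\mathrm{df}(\hat\mu^*_{\gamma,\lambda}) = \frac{\gamma\lambda_S}{\gamma\lambda_S - \lambda_S}\,\Pr(\lambda_S \le |\tilde\beta| < \gamma\lambda_S) + \Pr(|\tilde\beta| > \gamma\lambda_S), \tag{28}$$

where $\hat\mu^*_{\gamma,\lambda} = \mathbf{x}\cdot S^*_\gamma(\tilde\beta, \lambda)$.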

To achieve a constant df, we require the following to hold for λ > 0:

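- The shrinkage-threshold for the soft threshold operator is λS(λ, γ = ∞) = λ and hence $\hat\mu^*_{\gamma=\infty,\lambda} = \hat\mu_\lambda$ (a boundary condition in the calibration).

- $\mathrm{df}(\hat\mu^*_{\gamma,\lambda}) = \mathrm{df}(\hat\mu_\lambda)$ for all γ > 1.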

The definitions for df depend on β and σ²/n. Since the notion of df centers around variance, we use the null model with β = 0. We also assume without loss of generality that σ²/n = 1, since this gets absorbed into λ.

Theorem 2

For the calibrated MC+ penalty to achieve constant df, the shrinkage-threshold λS = λS(λ, γ) must satisfy the following functional relationship

$$\Phi(\gamma\lambda_S) - \gamma\,\Phi(\lambda_S) = -(\gamma - 1)\,\Phi(\lambda), \tag{29}$$

where Φ is the standard normal cdf and ϕ the pdf of the same.

Theorem 2 is proved in the Supplementary Materials Section B.2, using (28) and the boundary constraint. The next theorem establishes some properties of the map (λ, γ) ↦ (λS(λ, γ), γλS(λ, γ)), including the important nesting of shrinkage thresholds.

Theorem 3

For a fixed λ:

γλS(λ, γ) is increasing as a function of γ.

λS(λ, γ) is decreasing as a function of γ.

Note that as γ increases, we approach the LASSO; see Figure 4(right). Both these relationships can be derived from the functional Equation (29). Theorem 3 is proved in the Supplementary Materials Section B.3.
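The calibration can also be carried out numerically, without the closed-form approximation discussed next. The sketch below assumes the null model β = 0 with σ²/n = 1 and equates the calibrated df to the LASSO df 2(1 − Φ(λ)), which rearranges to Φ(γλS) − γΦ(λS) = −(γ − 1)Φ(λ). The left-minus-right side is decreasing in λS for λS > 0, so plain bisection finds the unique root. The function names are hypothetical, and this is not the recursive scheme of the Supplementary Materials.

```python
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal cdf


def calibrated_threshold(lam, gamma, n_iter=200):
    """Solve Phi(g*s) - g*Phi(s) + (g - 1)*Phi(lam) = 0 for s = lambda_S."""
    if lam <= 0.0 or gamma <= 1.0:
        raise ValueError("need lam > 0 and gamma > 1")

    def f(s):
        return PHI(gamma * s) - gamma * PHI(s) + (gamma - 1.0) * PHI(lam)

    lo, hi = lam, 2.0 * lam          # f(lam) > 0, so the root lies above lam
    while f(hi) > 0.0:               # expand until the root is bracketed
        hi *= 2.0
    for _ in range(n_iter):          # bisection on a decreasing function
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def df_calibrated(lam, gamma):
    """Univariate df of the calibrated MC+ threshold under beta = 0, variance 1."""
    s = calibrated_threshold(lam, gamma)
    middle = 2.0 * (PHI(gamma * s) - PHI(s))
    tail = 2.0 * (1.0 - PHI(gamma * s))
    return gamma / (gamma - 1.0) * middle + tail


for gamma in (1.5, 3.0, 10.0, 100.0):
    s = calibrated_threshold(1.0, gamma)
    print(gamma, round(s, 4), round(gamma * s, 4), round(df_calibrated(1.0, gamma), 6))
```

In the printed table the second column decreases and the third increases with γ, in line with Theorem 3, while the last column stays at the LASSO value for λ = 1.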

5.1.2 Efficient Computation of Shrinkage Thresholds

In order to implement the calibration for MC+, we need an efficient method for evaluating λS(λ, γ)—that is, solving Equation (29). For this purpose we propose a simple parametric form for λS based on some of its required properties: the monotonicity properties just described, and the df calibration. We simply give the expressions here, and leave the details for the Supplementary Materials Section B.3:

| (30) |

where

| (31) |

The above approximation turns out to be a reasonably good estimator for all practical purposes, achieving a calibration of df within an accuracy of 5%, uniformly over all (γ, λ). The approximation can be improved further, if we take this estimator as the starting point, and obtain recursive updates for λS(λ, γ). Details of this approximation along with the algorithm are explained in the Supplementary Materials Section B.3. Numerical studies show that a few iterations of this recursive computation can improve the degree of accuracy of calibration up to an error of 0.3%; Figure 4(left) was produced using this approximation. Figure 5 shows a typical pattern of recalibration for the example we present in Figure 7 in Section 8.

Figure 5.

Recalibrated values of λ via λS(γ, λ) for the MC+ penalty. The values of λ at the top of the plot correspond to the LASSO. As γ decreases, the calibration increases the shrinkage threshold to achieve a constant univariate df.

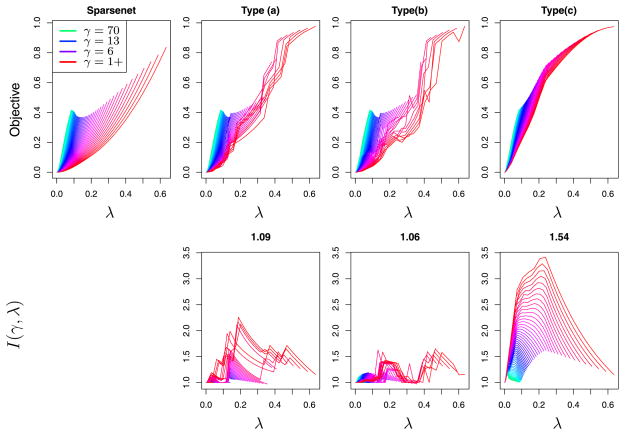

Figure 7.

Top row: objective function for SparseNet compared to other coordinate-wise variants—Type (a), (b), and MLLA Type (c)—for a typical example. Plots are shown for 50 values of γ (some are labeled in the legend) and at each value of γ, 20 values of λ. Bottom row: relative increase I(γ, λ) in the objective compared to SparseNet, with the average Ī reported at the top of each plot.

As noted below Algorithm 1 this leads to a lattice of values λk, ℓ.

6. CONVERGENCE ANALYSIS

In this section we present results on the convergence of Algorithm 1. Tseng and Yun (2009) show convergence of coordinate-descent for functions that can be written as the sum of a smooth function (loss) and a separable nonsmooth convex function (penalty). This is not directly applicable to our case as the penalty is nonconvex.

Denote the coordinate-wise updates βk+1 = Scw(βk), k = 1, 2, … with

| (32) |

Theorem 4 establishes that under certain conditions, SparseNet always converges to a minimum of the objective function; conditions that are met, for example, by the SCAD and MC+ penalties (for suitable γ).

Theorem 4

Consider the criterion in (6), where the given data (y, X) lies on a compact set and no column of X is degenerate (i.e., multiple of the unit vector). Suppose the penalty λP(t; λ; γ) ≡ P(t) satisfies P(t) = P(−t), P′(|t|) is nonnegative, uniformly bounded and inft P″(|t|) > −1; where P′(|t|) and P″(|t|) are the first and second derivatives (assumed to exist) of P(|t|) wrt |t|.

Then the univariate maps β ↦ Q(1)(β) are strictly convex and the sequence of coordinate-updates {βk}k converge to a (local) minimum of the function Q(β).

Note that the condition on the data (y, X) is a mild assumption (as it is necessary for the variables to be standardized). Since the columns of X are mean-centered, the nondegeneracy assumption is equivalent to assuming that no column is identically zero.

The proof is provided in Appendix A.1. Lemma A.1 and Lemma 3, under milder regularity conditions, establish that every limit point of the sequence {βk} is a stationary point of Q(β).

Remark 1

Note that Theorem 4 includes the case where the penalty function P(t) is convex in t.

7. SIMULATION STUDIES

In this section we compare the simulation performance of a number of different methods with regard to (a) prediction error, (b) the number of nonzero coefficients in the model, and (c) “misclassification error” of the variables retained. The methods we compare are SparseNet, local linear approximation (LLA) and multistage local linear approximation (MLLA) (Candes, Wakin, and Boyd 2008; Zou and Li 2008; Zhang 2010b), LASSO, forward-stepwise regression and best-subset selection. Note that SparseNet, LLA and MLLA are all optimizing the same MC+ penalized criterion.

We assume a linear model Y = Xβ + ε with multivariate Gaussian predictors X and Gaussian errors. The signal-to-noise ratio (SNR) and standardized prediction error (SPE) are defined as

| (33) |

The minimal achievable value for SPE is 1 (the Bayes error rate), if x is nonrandom. In our simulations we take x to be random, so the minimal value is likely to be larger. For each model, the optimal tuning parameters (λ for the penalized regressions, subset size for best-subset selection and stepwise regression) are chosen based on minimization of the prediction error on a separate large validation set of size 10K. We use SNR = 3 in all the examples.
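As a rough illustration of how these quantities might be computed, the sketch below assumes the definitions SNR = √(β′Σβ)/σ and SPE = E(y − xβ̂)²/σ² with x ∼ N(0, Σ) independent of the noise. These forms are assumptions made for the example (the displayed equation is not reproduced here), and the function names are hypothetical.

```python
import numpy as np


def noise_sd_for_snr(beta, Sigma, snr):
    """Noise sd sigma such that sqrt(beta' Sigma beta) / sigma equals snr."""
    return float(np.sqrt(beta @ Sigma @ beta) / snr)


def spe(beta_hat, beta, Sigma, sigma):
    """Population SPE for x ~ N(0, Sigma): E(y - x beta_hat)^2 / sigma^2."""
    d = beta_hat - beta
    return float(1.0 + d @ Sigma @ d / sigma**2)


p = 30
Sigma = 0.6 * np.eye(p) + 0.4 * np.ones((p, p))   # equicorrelation 0.4
beta = np.concatenate([[0.03, 0.07, 0.1, 0.9, 0.93, 0.97], np.zeros(24)])
sigma = noise_sd_for_snr(beta, Sigma, snr=3.0)

spe_true = spe(beta, beta, Sigma, sigma)          # using the true coefficients
spe_null = spe(np.zeros(p), beta, Sigma, sigma)   # using the all-zero model
```

Under these assumed definitions the true coefficient vector attains SPE = 1, and the all-zero model attains 1 + SNR².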

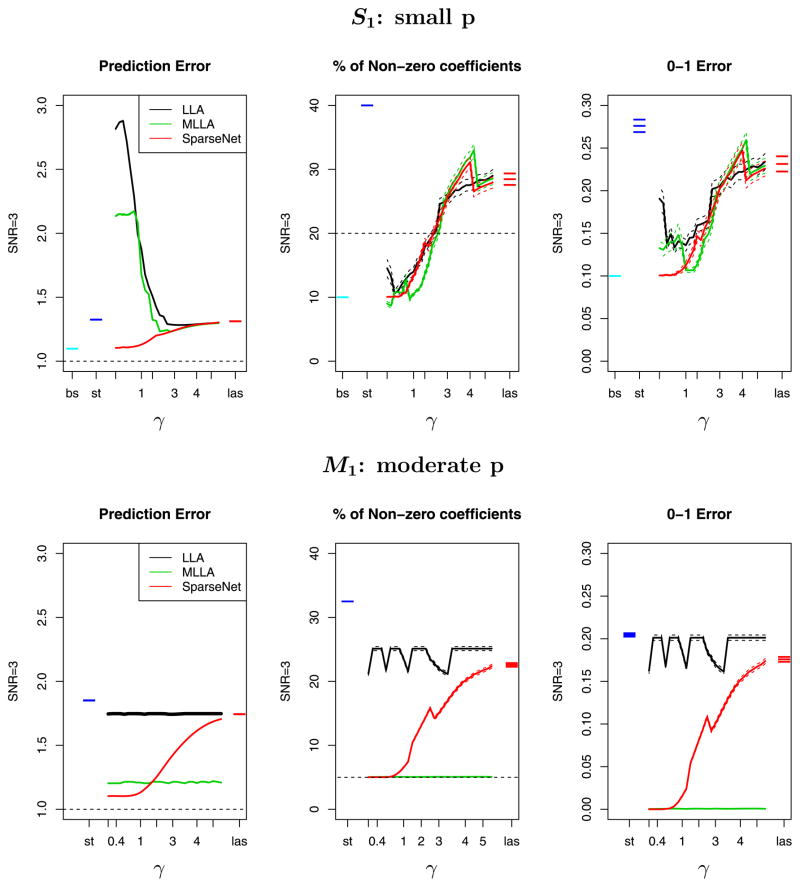

Since a primary motivation for considering nonconvex penalized regressions is to mimic the behavior of best subset selection, we compare its performance with best-subset for small p = 30. For notational convenience Σ(ρ;m) denotes an m × m matrix with 1’s on the diagonal, and ρ’s on the off-diagonal.

We consider the following examples:

- S1: n = 35, p = 30, ΣS1 = Σ(0.4; p), and βS1 = (0.03, 0.07, 0.1, 0.9, 0.93, 0.97, 0_{1×24}).

- M1: n = 100, p = 200, ΣM1 = {0.7^|i−j|}1≤i, j≤p, and βM1 has 10 nonzeros such that , i = 0, 1, …, 9; and otherwise.
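The two covariance structures used in S1 and M1 can be generated along the following lines. This is a sketch with hypothetical helper names (`equicorr`, `ar1`) and an arbitrary random seed; only the S1 coefficient vector is constructed, since it is fully specified above.

```python
import numpy as np


def equicorr(rho, m):
    """Sigma(rho; m): ones on the diagonal, rho off the diagonal."""
    return (1.0 - rho) * np.eye(m) + rho * np.ones((m, m))


def ar1(rho, m):
    """Matrix with (i, j) entry rho^|i - j|."""
    idx = np.arange(m)
    return rho ** np.abs(idx[:, None] - idx[None, :])


rng = np.random.default_rng(1)

# Example S1: n = 35, p = 30, equicorrelated Gaussian predictors
Sigma_s1 = equicorr(0.4, 30)
beta_s1 = np.concatenate([[0.03, 0.07, 0.1, 0.9, 0.93, 0.97], np.zeros(24)])
X_s1 = rng.multivariate_normal(np.zeros(30), Sigma_s1, size=35)

# Example M1: n = 100, p = 200, covariance 0.7^|i - j|
Sigma_m1 = ar1(0.7, 200)
X_m1 = rng.multivariate_normal(np.zeros(200), Sigma_m1, size=100)
```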

In example M1 best-subset was not feasible and hence was omitted. The starting point for both LLA and MLLA was taken as a vector of all ones (Zhang 2010b). Results are shown in Figure 6 with discussion in Section 7.1. These two examples, chosen from a battery of similar examples, show that SparseNet compares very favorably with its competitors.

Figure 6.

Examples S1 and M1. The three columns of plots represent prediction error, recovered sparsity and zero-one loss (all with standard errors over the 25 runs). Plots show the recalibrated MC+ family for different values of γ (on the log-scale) via SparseNet, LLA, MLLA, LASSO (las), step-wise (st), and best-subset (bs) (for S1 only).

We now consider some larger examples and study the performance of SparseNet varying γ.

- M1(5): n = 500, p = 1000, ΣM1(5) = blockdiag(ΣM1, …, ΣM1) and βM1(5) = (βM1, …, βM1) (five blocks).

- M1(10): n = 500, p = 2000 (same as above with 10 blocks instead of five).

- M2(5): n = 500, p = 1000, ΣM2(5) = blockdiag(Σ(0.5, 200), …, Σ(0.5, 200)) and βM2(5) = (βM2, …, βM2) (five blocks). Here βM2 = (β1, β2, …, β10, 0_{1×190}) is such that the first 10 coefficients form an equispaced grid on [0, 0.5].

- M2(10): n = 500, p = 2000, and is like M2(5) with 10 blocks.
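The block-diagonal designs can be assembled from a single block with a Kronecker product. The sketch below builds M2(5); it assumes that the equispaced grid on [0, 0.5] includes both endpoints, which the text does not state explicitly, and the variable names are illustrative.

```python
import numpy as np


def equicorr(rho, m):
    """Sigma(rho; m): ones on the diagonal, rho off the diagonal."""
    return (1.0 - rho) * np.eye(m) + rho * np.ones((m, m))


# M2(5): five diagonal blocks Sigma(0.5; 200), so p = 1000
Sigma_block = equicorr(0.5, 200)
Sigma_m2_5 = np.kron(np.eye(5), Sigma_block)

# beta_M2: ten equispaced values on [0, 0.5] (endpoints included by assumption),
# followed by 190 zeros; the full vector repeats this block five times
beta_m2 = np.concatenate([np.linspace(0.0, 0.5, 10), np.zeros(190)])
beta_m2_5 = np.tile(beta_m2, 5)
```

Replacing 5 by 10 in both the Kronecker product and the tiling gives the corresponding 10-block version.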

The results are summarized in Table 1, with discussion in Section 7.1.

Table 1.

Standardized prediction error (SPE), percentage of nonzeros and zero–one error in the recovered model via SparseNet, for different problem instances. The last column shows the standard errors, averaged across the four γ values. The true number of nonzero coefficients in the model is in square brackets. Results are averaged over 25 runs.

| | log(γ) = 3.92 | log(γ) = 1.73 | log(γ) = 1.19 | log(γ) = 0.10 | Average std. error |
|---|---|---|---|---|---|
| Example: M1(5) | | | | | |
| SPE | 1.6344 | 1.3194 | 1.2015 | 1.1313 | 5.648 × 10−4 |
| % of nonzeros | 16.3 | 13.3 | 8.2 | 5.000 | 0.0702 |
| 0–1 error | 0.1137 | 0.0825 | 0.0319 | 0.0002 | 8.508 × 10−4 |
| Example: M2(5) | | | | | |
| SPE | 1.3797 | 1.467 | 1.499 | 1.7118 | 2.427 × 10−3 |
| % of nonzeros | 21.4 | 9.9 | 6.7 | 2.5 | 0.1037 |
| 0–1 error | 0.18445 | 0.08810 | 0.0592 | 0.03530 | 1.118 × 10−3 |
| Example: M1(10) | | | | | |
| SPE | 3.6819 | 4.4838 | 4.653 | 5.4729 | 1.152 × 10−2 |
| % of nonzeros | 23.0 | 12.0 | 8.5 | 6.2625 | 0.066 |
| 0–1 error | 0.1873 | 0.0870 | 0.0577 | 0.0446 | 1.2372 × 10−3 |
| Example: M2(10) | | | | | |
| SPE | 1.693 | 1.893 | 2.318 | 2.631 | 1.171 × 10−2 |
| % of nonzeros | 15.5 | 8.3 | 5.7 | 2.2 | 0.0685 |
| 0–1 error | 0.1375 | 0.0870 | 0.0678 | 0.047 | 0.881 × 10−3 |
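The two selection summaries in Table 1 could be computed as in the sketch below. It assumes that the zero–one error is the fraction of coordinates whose zero/nonzero status is misclassified, which is an interpretation rather than a definition given in the text; the function name and the toy vectors are hypothetical.

```python
import numpy as np


def selection_summary(beta_hat, beta_true):
    """Percentage of nonzero estimates and fraction of misclassified coordinates."""
    est = np.asarray(beta_hat) != 0
    true = np.asarray(beta_true) != 0
    return {
        "pct_nonzero": 100.0 * est.mean(),
        "zero_one_error": float(np.mean(est != true)),
    }


# Toy example: one false positive and one false negative out of four coordinates
summary = selection_summary([0.5, 0.1, 0.0, 0.0], [1.0, 0.0, 0.0, 2.0])
```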

7.1 Discussion of Simulation Results

In both S1 and M1, the aggressive nonconvex SparseNet outperforms its less aggressive counterparts in terms of prediction error and variable selection. Due to the correlation among the features, the LASSO and the less aggressive penalties estimate a considerable proportion of zero coefficients as nonzero. SparseNet estimates for γ ≈ 1 are almost identical to the best-subset procedure in S1. Step-wise performs worse in both cases. LLA and MLLA show similar behavior in S1, but are inferior to SparseNet in predictive performance for smaller values of γ. This shows that MLLA and SparseNet reach very different local optima. In M1 LLA/MLLA seem to show similar behavior across varying degrees of nonconvexity. This is undesirable behavior, and is probably because MLLA/LLA get stuck in local minima. MLLA does perfect variable selection and shows good predictive accuracy.

In M1(5) (n = 500, p = 1000), the prediction error decreases steadily with decreasing γ, and the variable selection properties improve as well. In M1(10) (n = 500, p = 2000) the prediction error increases overall, and there is a trend reversal, with the more aggressive nonconvex penalties performing worse. The variable selection properties of the aggressive penalties, however, are superior to those of their counterparts. The less aggressive nonconvex selectors include a larger number of variables with high shrinkage, and hence perform well in predictive accuracy.

In M2(5) and M2(10), the prediction accuracy decreases marginally with decreasing γ (see Table 1). However, as before, the variable selection properties of the more aggressive nonconvex penalties are far better.

In summary, SparseNet is able to mimic the prediction performance of best subset regression in these examples. In the high-p settings, it repeats this behavior, mimicking the best, and is the outright winner in several situations since best-subset regression is not available. In situations where the less aggressive penalties do well, SparseNet often reduces the number of variables for comparable prediction performance, by picking a γ in the interior of its range. The solutions of LLA and MLLA are quite different from those of SparseNet, and they often show similar performance across γ (as also pointed out in Candes, Wakin, and Boyd 2008 and Zou and Li 2008).

It appears that the main differences among the different strategies MLLA, LLA and SparseNet lie in their roles of optimizing the objective Q(β). We study this from a well-grounded theoretical framework in Section 8, simulation experiments in Section 8.1 and further examples in Section B.4 in the Supplementary Materials.

8. OTHER METHODS OF OPTIMIZATION

We shall briefly review some of the state-of-the-art methods proposed for optimization with general nonconvex penalties.

Fan and Li (2001) used a local quadratic approximation (LQA) of the SCAD penalty. The method can be viewed as a majorize-minimize algorithm which repeatedly performs a weighted ridge regression. LQA gets rid of the singularity of the penalty at zero, depends heavily on the initial choice β0, and is hence suboptimal (Zou and Li 2008) in searching for sparsity.

The MC+ algorithm of Zhang (2010a) cleverly tracks multiple local minima of the objective function and seeks a solution to attain desirable statistical properties. The algorithm is complex, and since it uses a LARS-type update, is potentially slower than coordinate-wise procedures.

Friedman (2008) proposes a path-seeking algorithm for general nonconvex penalties. Except in simple cases, it is unclear what criterion of the form “loss + penalty” it optimizes.

Multistage Local Linear Approximation

Zou and Li (2008), Zhang (2010b) and Candes, Wakin, and Boyd (2008) propose majorize-minimize (MM) algorithms for minimization of Q(β) (6), and consider the reweighted ℓ1 criterion

| (34) |

for some starting point β1. If βk+1 is obtained by minimizing (34), we denote the update via the map βk+1 := Mlla(βk). There is currently a lot of attention paid to MLLA in the literature, so we analyze and compare it to our coordinate-wise procedure in more detail.

We have observed (Sections 8.1 and B.4.1) that the starting point is critical; see also Candes, Wakin, and Boyd (2008) and Zhang (2010a). Zou and Li (2008) suggest stopping at k = 2 after starting with an “optimal” estimator, for example, the least-squares estimator when p < n. Choosing an optimal initial estimator β1 is essentially equivalent to knowing a priori the zero and nonzero coefficients of β. The adaptive LASSO of Zou (2006) is very similar in spirit to this formulation, though the weights (depending upon a good initial estimator) are allowed to be more general than derivatives of the penalty (34).
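One MLLA update can be sketched as a weighted LASSO fit whose weights are the penalty derivatives at the current iterate. The code below is an illustration under stated assumptions: the reweighted criterion is taken to be ½‖y − Xβ‖² + Σj wj|βj| with wj = λP′(|βj^k|; λ; γ) for the MC+ penalty, the columns of X have unit norm, and the inner problem is solved approximately by a fixed number of coordinate-descent sweeps. All function names and the simulated data are hypothetical.

```python
import numpy as np


def mcp_objective(X, y, beta, lam, gamma):
    """0.5 * ||y - X beta||^2 plus the MC+ penalty summed over coordinates."""
    a = np.abs(beta)
    pen = np.where(a <= lam * gamma,
                   lam * a - a**2 / (2.0 * gamma),
                   0.5 * gamma * lam**2)
    r = y - X @ beta
    return 0.5 * (r @ r) + pen.sum()


def mcp_derivative(beta, lam, gamma):
    """lam * P'(|beta_j|) for MC+: lam * (1 - |beta_j| / (gamma * lam))_+."""
    return lam * np.maximum(0.0, 1.0 - np.abs(beta) / (gamma * lam))


def weighted_lasso(X, y, w, beta0, n_sweeps=200):
    """Coordinate descent for 0.5*||y - X beta||^2 + sum_j w_j |beta_j|."""
    beta = beta0.copy()
    r = y - X @ beta
    for _ in range(n_sweeps):
        for j in range(X.shape[1]):
            b = X[:, j] @ r + beta[j]
            new = np.sign(b) * max(abs(b) - w[j], 0.0)   # soft threshold at w_j
            r -= X[:, j] * (new - beta[j])
            beta[j] = new
    return beta


def mlla_step(X, y, beta_k, lam, gamma):
    """One MLLA update: reweight at beta_k, then solve the weighted LASSO."""
    return weighted_lasso(X, y, mcp_derivative(beta_k, lam, gamma), beta_k)


rng = np.random.default_rng(0)
X = rng.standard_normal((50, 20))
X -= X.mean(axis=0)
X /= np.linalg.norm(X, axis=0)
beta_true = np.zeros(20)
beta_true[:3] = 2.0
y = X @ beta_true + 0.1 * rng.standard_normal(50)
y -= y.mean()

lam, gamma = 0.3, 3.0
beta = np.ones(20)                       # all-ones start, as in Zhang (2010b)
values = [mcp_objective(X, y, beta, lam, gamma)]
for _ in range(5):
    beta = mlla_step(X, y, beta, lam, gamma)
    values.append(mcp_objective(X, y, beta, lam, gamma))
```

Because the surrogate majorizes the criterion and the inner solver is warm-started at the current iterate, the recorded objective values should be nonincreasing even when the inner problem is solved only approximately.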

Convergence properties of SparseNet and MLLA can be analyzed via properties of fixed points of the maps Scw(·) and Mlla(·) respectively. If the sequences produced by Mlla(·) or Scw(·) converge, they correspond to stationary points of the function Q.

The convergence analysis of MLLA is based on the fact that Q(β; βk) majorizes Q(β). If βk is the sequence produced via MLLA, then {Q(βk)}k≥1 converges. This does not address the convergence properties of {βk}k≥1 nor properties of its limit points. Zou and Li (2008) point out that if the map Mlla satisfies Q(β) = Q(Mlla(β)) for limit points of the sequence {βk}k≥1, then the limit points are stationary points of the function Q(β). It appears, however, that solutions of Q(β) = Q(Mlla(β)) are not easy to characterize by explicit regularity conditions on the penalty P. The analysis does not address the fate of all the limit points of the sequence {βk}k≥1 in case the sufficient conditions of Proposition 1 in Zou and Li (2008) fail to hold true. Our analysis in Section 6 for SparseNet addresses all the concerns raised above. Fixed points of the map Scw(·) are explicitly characterized through some regularity conditions of the penalty functions as described in Section 6. Explicit convergence can be shown under additional conditions (Theorem 4).
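The majorization argument referred to above can be written out in a few lines. The sketch assumes that t ↦ P(t) is concave and differentiable on t ≥ 0 (as for MC+ and SCAD), that P absorbs the factor λ as in Theorem 4, and that Q(β; βk) includes the constant terms that make it agree with Q at β = βk.

```latex
\begin{align*}
P(|\beta_j|) &\le P(|\beta_j^{k}|) + P'(|\beta_j^{k}|)\,\bigl(|\beta_j| - |\beta_j^{k}|\bigr),
  \qquad j = 1, \dots, p, \\
\Longrightarrow\quad Q(\beta) &\le Q(\beta;\beta^{k}) \ \text{ for all } \beta,
  \qquad Q(\beta^{k}) = Q(\beta^{k};\beta^{k}), \\
\Longrightarrow\quad Q(\beta^{k+1}) &\le Q(\beta^{k+1};\beta^{k}) \le Q(\beta^{k};\beta^{k}) = Q(\beta^{k}).
\end{align*}
```

The chain shows only that the objective values are nonincreasing; it says nothing about the iterates themselves, which is the gap discussed in the text.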

Furthermore, a fixed point of the map Mlla(·) need not be a fixed point of Scw(·)—for specific examples see Section B.4 in the Supplementary Materials. Every stationary point of the coordinate-wise procedure, however, is also a fixed point of MLLA.

The formal framework being laid, we proceed to perform some simple numerical experiments in Section 8.1. Further discussions and comparisons between coordinate-wise procedures and MLLA can be found in Section B.4 in the Supplementary Materials.

8.1 Empirical Performance and the Role of Calibration

In this section we show the importance of calibration and the specific manner in which the regularization path is traced out via SparseNet in terms of the quality of the solution obtained. In addition we compare the optimization performance of LLA/MLLA versus SparseNet.

In Figure 7 we consider an example with n = p = 10, Σ = {0.8^|i−j|}, 1 ≤ i, j ≤ p, SNR = 1, β1 = βp = 1, all other βj = 0. We consider 50 values of γ and 20 values of λ, and compute the solutions for all methods at the recalibrated pairs (γ, λS(γ, λ)); see Figure 5 for the exact λk,ℓ values used in these plots.

We compare SparseNet with

Type (a) Coordinate-wise procedure that computes solutions on a grid of λ for every fixed γ, with warm-starts across λ (from larger to smaller values). No df calibration is used here.

Type (b) Coordinate-wise procedure “cold-starting” with a zero vector (She 2010) for every pair (λ, γ).

Type (c) MLLA with the starting vector initialized to a vector of ones (Zhang 2010b).

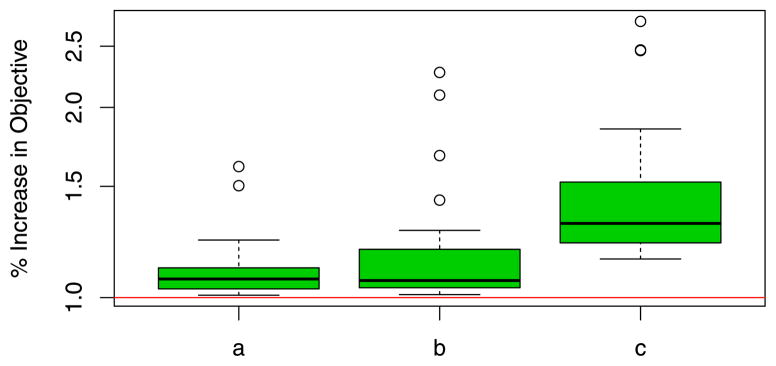

In all cases SparseNet shows superior performance. For each (γ, λ) pair we compute the relative increase I(γ, λ) = [Q(β*) + 0.05]/[Q(βs) + 0.05], where Q(β*) denotes the objective value obtained for procedure * [types (a), (b), or (c) above], and Q(βs) represents SparseNet. These values are shown in the second row of plots in Figure 7. They are also averaged over all values of (γ, λ) to give Ī (shown at the top of each plot). This is one of 24 different examples, over six different problem setups with four replicates in each. Figure 8 summarizes Ī for these 24 scenarios; the curves of the objective values, as in Figure 7, are shown for all 24 scenarios in the Supplementary Materials section. The figures show clearly the benefits of using the proposed calibration in terms of optimization performance. MLLA appears to get “stuck” in local optima, supporting our claim in Section 7.1. For larger values of γ the different coordinate-wise procedures perform similarly, since the problem is close to convex.
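The relative increase and its average are simple to compute once the objective values are available on the (γ, λ) grid. The sketch below uses the offset 0.05 from the formula above; the function name and the objective values are made up purely for illustration.

```python
import numpy as np


def relative_increase(q_other, q_sparsenet, offset=0.05):
    """I(gamma, lambda) = [Q(beta*) + offset] / [Q(beta_s) + offset], elementwise."""
    q_other = np.asarray(q_other, dtype=float)
    q_sparsenet = np.asarray(q_sparsenet, dtype=float)
    return (q_other + offset) / (q_sparsenet + offset)


# Hypothetical objective values on a 2 x 3 grid of (gamma, lambda) pairs
q_s = np.array([[0.95, 1.95, 2.95], [0.45, 0.95, 1.45]])
q_o = np.array([[1.00, 1.95, 3.10], [0.45, 1.05, 1.45]])

I = relative_increase(q_o, q_s)
I_bar = I.mean()   # average over the grid, analogous to the reported I-bar
```

Values of I above 1 indicate pairs at which the competing procedure ends at a larger objective value than SparseNet.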

Figure 8.

Boxplots of Ī over 24 different examples. In all cases SparseNet shows superior performance. The online version of this figure is in color.

Supplementary Material

Acknowledgments

Trevor Hastie was supported in part by grant DMS-1007719 from the National Science Foundation and grant RO1-EB001988-12 from the National Institutes of Health. The authors would like to thank the Associate Editor and two referees for valuable comments and suggestions that helped to improve and shorten this presentation.

APPENDIX A

A.1 Convergence Analysis for Algorithm 1

Lemma A.1 provides (weaker) conditions (than Theorem 4) under which limit point(s) of the sequence of coordinate-wise updates are local minimizers of Q(β). To prove Theorem 4 we require Lemma A.1.

Lemma A.1

Suppose the data (y, X) lies on a compact set. In addition, we assume the following:

1. The penalty function P(t) (symmetric around 0) is differentiable on t ≥ 0; P′(|t|) is nonnegative, continuous and uniformly bounded, where P′(|t|) is the derivative of P(|t|) wrt |t|.

2. The sequence generated {βk}k is bounded.

3. For every convergent subsequence {βnk}k ⊂ {βk}k the successive differences converge to zero: βnk − βnk−1 → 0.

If β∞ is any limit point of the sequence {βk}k, then β∞ is a minimum for the function Q(β); that is, for any δ = (δ1, …, δp) ∈ ℜp

| (A.1) |

In the Supplementary Materials Section B we present the proof of Lemma A.1. We also show via Lemma 2 that assumption 2 is by no means restrictive. Lemma 3 gives a sufficient condition under which assumption 3 of Lemma A.1 is true.

It is useful to note here that assumptions 2, 3 of Lemma A.1 follow from the assumptions of Theorem 4 (we make a further note of this in the proof below).

We are now in a position to present the proof of Theorem 4.

Proof of Theorem 4

We will assume WLOG that the data is standardized. For fixed i and (β1, …, βi−1, βi+1, …, βp), we will write χ(u) for the criterion viewed as a function of the ith coordinate u alone (as defined in Lemma 3 in the Supplementary Materials), for the sake of notational convenience.

The subgradient(s) (Borwein and Lewis 2006) of χ(u) at u are given by

| (A.2) |

Also observe that

| (A.3) |

| (A.4) |

Line (A.4) is obtained from (A.3) by Taylor series expansions of f (wrt the ith coordinate) and of |t| ↦ P(|t|). The second derivative of the function f wrt the ith coordinate equals 1, as the features are all standardized; |u*| is some number between |u + δ| and |u|. The rhs of (A.4) can be simplified as follows:

| (A.5) |

Since χ(u) is minimized at u0; 0 ∈ ∂χ(u0). Thus using (A.2) we get

| (A.6) |

If u0 = 0, then the above holds true for some value of sgn(u0) ∈ [−1, 1].

By definition of subgradient of x ↦ |x| and P′(|x|) ≥ 0 we have

| (A.7) |

Using (A.6) and (A.7) in (A.5) and (A.4) at u = u0 we have

| (A.8) |

Observe that (|u0 + δ| − |u0|)² ≤ δ². If P″(|u*|) ≤ 0 then . If P″(|u*|) ≥ 0 then .

Combining these two we get

| (A.9) |

By the conditions of this theorem, 1 + inf_t P″(|t|) > 0. Hence there exists a θ > 0, which is independent of (β1, …, βi−1, βi+1, …, βp), such that

| (A.10) |

[For the MC+ penalty, inf_t P″(|t|) = −1/γ; γ > 1. For the SCAD, inf_t P″(|t|) = −1/(γ − 1); γ > 2.]
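For the MC+ case, the curvature condition can be verified directly. The derivation below assumes the MC+ penalty in the integral form of Zhang (2010a), with the factor λ absorbed into P as in Theorem 4; it is a sketch of the calculation, not a reproduction of the elided expressions.

```latex
\begin{align*}
P(t) &= \lambda \int_0^{|t|} \Bigl(1 - \frac{x}{\gamma\lambda}\Bigr)_{+} \, dx, \\
P'(|t|) &= \lambda \Bigl(1 - \frac{|t|}{\gamma\lambda}\Bigr)_{+} \;\ge\; 0, \\
P''(|t|) &=
  \begin{cases}
    -1/\gamma, & |t| < \gamma\lambda, \\
    0,         & |t| > \gamma\lambda,
  \end{cases} \\
\inf_t P''(|t|) &= -\frac{1}{\gamma} > -1 \iff \gamma > 1 .
\end{align*}
```

The same calculation for the SCAD, whose derivative decays linearly on (λ, γλ) with slope −1/(γ − 1), leads to the requirement γ > 2.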

In fact the dependence on u0 above in (A.10) can be further relaxed. Following the above arguments carefully, we see that the function χ(u) is strictly convex (Borwein and Lewis 2006) as it satisfies

| (A.11) |

We will use this result to show the convergence of {βk}. Using (A.10) we have

| (A.12) |

where . (A.12) shows the boundedness of the sequence βm, for every m > 1 since the starting point β1 ∈ ℜp.

Using (A.12) repeatedly across every coordinate, we have for every m

| (A.13) |

Using the fact that the (decreasing) sequence Q(βm) converges, it is easy to see from (A.13) that the sequence βk cannot cycle without convergence, i.e., it must have a unique limit point. This completes the proof of convergence of βk.

Observe that (A.13) implies that assumptions 2 and 3 of Lemma A.1 are satisfied. Hence, using Lemma A.1, the limit of βk is a minimum of Q(β). This completes the proof.

Contributor Information

Rahul Mazumder, Email: rahulm@stanford.edu, Ph.D. Student, Department of Statistics, Stanford University, Stanford, CA 94305.

Jerome H. Friedman, Email: jhf@stanford.edu, Professor Emeritus, Department of Statistics, Stanford University, Stanford, CA 94305

Trevor Hastie, Email: hastie@stanford.edu, Professor, Departments of Statistics and Health, Research and Policy, Stanford University, Stanford, CA 94305.

References

- Borwein JM, Lewis AS. Convex Analysis and Nonlinear Optimization. 2. New York: Springer; 2006.

- Breiman L. Heuristics of Instability and Stabilization in Model Selection. The Annals of Statistics. 1996;24:2350–2383.

- Candes EJ, Wakin MB, Boyd S. Enhancing Sparsity by Reweighted l1 Minimization. Journal of Fourier Analysis and Applications. 2008;14(5):877–905.

- Chen S, Donoho D. On Basis Pursuit. Technical report. Dept. of Statistics, Stanford University; 1994.

- Donoho D. For Most Large Underdetermined Systems of Equations, the Minimal ℓ1-Norm Solution Is the Sparsest Solution. Communications on Pure and Applied Mathematics. 2006;59:797–829.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least Angle Regression (with discussion). The Annals of Statistics. 2004;32(2):407–499.

- Fan J, Li R. Variable Selection via Nonconcave Penalized Likelihood and Its Oracle Properties. Journal of the American Statistical Association. 2001;96(456):1348–1360.

- Frank I, Friedman J. A Statistical View of Some Chemometrics Regression Tools (with discussion). Technometrics. 1993;35(2):109–148.

- Friedman J. Fast Sparse Regression and Classification. Technical report. Dept. of Statistics, Stanford University; 2008.

- Friedman J, Hastie T, Tibshirani R. glmnet: Lasso and Elastic-Net Regularized Generalized Linear Models. R package version 1.1-4. 2009. Available at http://www-stat.stanford.edu/~hastie/Papers/glmnet.pdf.

- Gao H-Y, Bruce AG. Waveshrink With Firm Shrinkage. Statistica Sinica. 1997;7:855–874.

- Knight K, Fu W. Asymptotics for Lasso-Type Estimators. The Annals of Statistics. 2000;28(5):1356–1378.

- Meinshausen N, Bühlmann P. High-Dimensional Graphs and Variable Selection With the Lasso. The Annals of Statistics. 2006;34:1436–1462.

- Nikolova M. Local Strong Homogeneity of a Regularized Estimator. SIAM Journal of Applied Mathematics. 2000;61:633–658.

- Osborne M, Presnell B, Turlach B. A New Approach to Variable Selection in Least Squares Problems. IMA Journal of Numerical Analysis. 2000;20:389–404.

- She Y. Sparse Regression With Exact Clustering. Electronic Journal of Statistics. 2010;4:1055–1096.

- Stein C. Estimation of the Mean of a Multivariate Normal Distribution. The Annals of Statistics. 1981;9:1131–1151.

- Tibshirani R. Regression Shrinkage and Selection via the Lasso. Journal of the Royal Statistical Society, Ser B. 1996;58:267–288.

- Tseng P. Convergence of a Block Coordinate Descent Method for Nondifferentiable Minimization. Journal of Optimization Theory and Applications. 2001;109:475–494.

- Tseng P, Yun S. A Coordinate Gradient Descent Method for Nonsmooth Separable Minimization. Mathematical Programming B. 2009;117:387–423.

- Zhang C-H. Nearly Unbiased Variable Selection Under Minimax Concave Penalty. The Annals of Statistics. 2010a;38:894–942.

- Zhang C-H, Huang J. The Sparsity and Bias of the Lasso Selection in High-Dimensional Linear Regression. The Annals of Statistics. 2008;36(4):1567–1594.

- Zhang T. Analysis of Multi-Stage Convex Relaxation for Sparse Regularization. Journal of Machine Learning Research. 2010b;11:1081–1107.

- Zhao P, Yu B. On Model Selection Consistency of Lasso. Journal of Machine Learning Research. 2006;7:2541–2563.

- Zou H. The Adaptive Lasso and Its Oracle Properties. Journal of the American Statistical Association. 2006;101:1418–1429.

- Zou H, Li R. One-Step Sparse Estimates in Nonconcave Penalized Likelihood Models. The Annals of Statistics. 2008;36(4):1509–1533. doi: 10.1214/009053607000000802.

- Zou H, Hastie T, Tibshirani R. On the Degrees of Freedom of the Lasso. The Annals of Statistics. 2007;35(5):2173–2192.
