Abstract

We previously demonstrated that, within a passive viewing task, fearful facial expressions implicitly facilitate memory for contextual events, while angry facial expressions do not (Davis et al., 2011). The current study sought to more directly address the implicit effect of fearful expressions on attention for contextual events within a classic attentional paradigm (i.e., the attentional blink) where memory is tested on a trial-by-trial basis, thereby providing subjects with a clear explicit attentional strategy. Neutral faces of a single gender were presented via rapid serial visual presentation (RSVP) while bordered by four gray pound signs. Participants were told to watch for a gender change within the sequence (T1). Critically, the T1 face displayed either a neutral, fearful, or angry expression. Subjects were also told to detect a color change (i.e., gray to green; T2) at one of the four pound sign locations appearing after T1. This T2 color change could appear at one of six temporal positions. Participants were told to respond via button press immediately when a T2 target was presented. We found that fearful, compared to the neutral T1 faces, significantly increased target detection ability at four of the six temporal locations (all p’s < .05) while angry expressions showed no such effects. The results of this study suggest that fearful facial expressions can uniquely and implicitly enhance environmental monitoring above and beyond explicit attentional effects related to task instructions.

Extant research has demonstrated that, when images are presented rapidly, salient images will inhibit the ability to detect and process subsequently presented items within a critical temporal window (Raymond, Shapiro, & Arnell, 1992). Raymond and colleagues (1992) referred to this effect as the ‘attentional blink’ and nicely demonstrated that any presented stimulus can be made salient simply by cueing an individual to explicitly watch for its presentation. This seminal work led researchers to examine how the effect would hold for both cued and non-cued targets. In other words, would non-cued items that possessed some inherent salience influence subsequent attention independent of task demands? Indeed, the effects of both cued and non-cued, but inherently salient, items have been observed for various types of stimuli including faces (de Jong, Koster, van Wees, & Martens, 2009; de Jong, Koster, van Wees, & Martens, 2010; Milders, Sahraie, Logan, & Donnellon, 2006), scenes (Most, Chun, Widders, & Zald, 2005; Smith, Most, Newsome, & Zald, 2006), words (Arnell, Killman, & Fijavz, 2007; Keil & Ihssen, 2004), letters and symbols (Chun & Potter, 1995), and schematic drawings (Maratos, Mogg, & Bradley, 2008; Miyazawa & Iwasaki, 2010). We focused on the ability of non-cued, but emotionally meaningful images (i.e. facial expressions) to inherently capture attention as the starting point for the present experimental design.

Images of facial expressions of emotion are an example of stimuli that possess inherent salience. Maratos (2011) used schematic faces in an attentional blink paradigm to show that negatively valenced expressions (i.e., angry) presented in the T1 position produced a greater blink than either happy or neutral faces. Alternatively, other studies have focused on inherently salient images presented in the T2 position within the attentional blink paradigm. These studies have demonstrated that emotionally salient faces (De Martino, Kalisch, Rees, & Dolan, 2009; Maratos et al., 2008) or words (Anderson & Phelps, 2001) presented as the T2 target mitigate the magnitude of the blink following T1 detection. Thus, to date, facial expressions in the T1 position produce the attentional blink, whereas they mitigate or ‘break through’ the attentional blink in the T2 position. Here we sought to demonstrate that fearful facial expressions presented in the T1 position would, based on their unique predictive information value, facilitate one particular form of T2 target detection.

Though fearful and angry facial expressions are usefully thought of together as negatively valenced and threat related (Hariri, Tessitore, Mattay, Fera, & Weinberger, 2002), previous research suggests a critical difference between the two expressions. Davis and colleagues (2011), in a passive viewing paradigm, showed that words presented following fearful facial expressions were better remembered than those presented after angry facial expressions, though the expressions had been matched for valence intensity and arousal value. This finding was interpreted to mean that since fearful expressions offer no information about the source of the current threat, they induce the viewer to direct their attention to the context. Angry expressions, on the other hand, embody a present threat and call for attention to remain focused on the individual who is angry. Two key facets of the Davis and colleagues (2011) study are as follows: 1) there were no instructions concerning what subjects would be asked about prior to this passive viewing study, so any memory effects were interpreted as an implicit effect of the expressions on subsequent memory; 2) memory for the words in the context was assessed at the end of the experiment, thus there was no trial-by-trial feedback or memory questions that might have impacted subjects’ attentional strategies. Therefore, the present study aimed to more directly assess the implicit effect of fearful faces on contextual memory within a classic attentional blink paradigm where subjects report on trial events on a trial-by-trial basis, thereby providing subjects with information that would overtly direct their attention on subsequent trials.

Specifically, within a rapid serial visual presentation (RSVP) paradigm, we presented faces of a single gender that were bordered by four gray pound signs (i.e., #). Subjects were instructed to watch for a gender change (T1). Subjects were not informed that the T1 face that just changed gender was either fearful, angry, or neutral with respect to expression. Subjects were instructed that after they detected the T1 gender change, one of the peripheral pound signs would change color (T2). Subjects were instructed to press a button when they detected the subsequent peripheral pound sign color change (T2), which could occur at one of six temporal lags. At the end of each trial, subjects were asked to report the identity of the T1 face and the location of the T2 pound sign color change. Since our working hypothesis was that fearful expressions uniquely diffuse attention to the context, we considered it critical that the to-be-detected T2 event be of a peripheral nature. We expected that the T1 gender change would produce an attentional blink, and hypothesized that fearful facial expressions in the T1 position would mitigate the temporal duration of this ‘blink’ and yield greater overall peripheral T2 detection, while angry and neutral expressions in the T1 position would not.

Materials and Methods

Participants

Thirty-seven healthy individuals (ages 18–33; 8 men and 29 women) participated in this experiment in exchange for monetary compensation. Participants were recruited using flyers and online postings seeking volunteers for a behavioral study of attention. Participants were screened for psychiatric illness with an abbreviated version of the non-patient edition of the Structured Clinical Interview for the DSM (First et al., 1995), which assessed for current and past history of major depressive disorder, dysthymia, hypomania, bipolar disorder, specific phobia, social anxiety disorder, generalized anxiety disorder, and obsessive–compulsive disorder. Participants gave informed consent prior to participation, as per Dartmouth College’s Center for the Protection of Human Subjects guidelines.

Materials

The visual stimuli consisted of 200 × 300 pixel (2.78 × 4.17 inches) black and white photographs of male and female faces with neutral, angry, or fearful expressions taken from the NimStim facial set (Tottenham et al., 2009). Critically, previous work has shown fearful and angry facial expressions to be matched in terms of valence intensity and arousal value when measured as either subjective report (Davis et al., 2011; Ekman, 1997; Johnsen, Thayer, & Hugdahl, 1995; Matsumoto, Kasri, & Kooken, 1999) or objective GSR responses (Johnsen et al., 1995). Because most of these previous studies tested fearful and angry faces from the Ekman face stimuli (Ekman & Friesen, 1976), we note that Davis and colleagues (2011) found no significant difference in valence or arousal ratings between fearful and angry faces from the NimStim face stimuli set (Tottenham et al., 2009).

All pictures were converted to gray scale and edited using Adobe Photoshop (Adobe Systems Incorporated) to control for contrast and luminance. All stimuli were presented using E-Prime (Psychology Software Tools, Incorporated) on a Lenovo ThinkPad T410 laptop with a 14-inch (35.5 cm), 60 Hz display screen and were viewed from a distance of approximately 57 cm. All analyses were performed using IBM SPSS Statistics (IBM Corporation).

Procedure

Overview

All faces were presented upright with four gray pound signs (#) surrounding the face at equal distances from the tip of the nose (i.e., one was located above, below, to the left, and to the right of each face). Noncritical distractor face images (N=15 per trial) had neutral expressions and were of the same gender. Subjects were explicitly instructed to look for a ‘gender change’ as the critical target event (T1). Critically, subjects were not informed that the T1 faces also differed in facial expression, being either neutral, angry, or fearful. This manipulation enabled us to directly assess the impact of the different emotional expressions amidst the consistent gender change event. Subjects were informed that after they observed a gender change in the repeating neutral faces (T1), they were to look for a change in color in one of the four pound signs (i.e., from gray to green, T2). This change could occur at any one of six temporal lags. Subjects pressed a button to signal that they had noticed the color change and the multiple temporal lags ensured that subjects had actually detected a change at the appropriate time. Further, the paradigm included ‘catch trials’ where subjects were told that sometimes there would be no color change and to not press the button in these instances. After each trial, subjects were asked two questions: first to report at which of the four locations the pound sign had changed color, and second, to identify the T1 face that had changed gender from three possible choices presented to the subject in a forced choice format (e.g., three different individuals displaying whatever expression was presented in the T1 position). These questions were asked to ensure that participants were following task instructions.

Although the task was quite demanding, assessment of trials where the target was correctly detected showed that participants were also able to report the T2 location consistently and accurately [98.7% – Fear; 98.9% – Anger; 99.0% – Neutral; with no significant differences between expressions (all p’s > .05)].

Memory for the T1 face identity was also assessed to ensure task compliance. That is, given the unique aspect of the present design where the T2 event requires attention to the periphery it was necessary to ensure that subjects were monitoring the faces at T1 and not just monitoring the periphery exclusively. Subjects performed above chance for T1 identification across all trials [75.9% – Fear; 73.1% – Anger; 62.1% Neutral; with a significant difference between fear and anger, t(29) = 2.061, p = 0.048, fear and neutral, t(29) = 10.642, p < 0.001, and anger and neutral, t(29) = 7.538, p < 0.001]. This indicated that participants did follow task instructions. Since T1 memory performance differed between conditions, T1 memory was included in our analysis as a covariate of interest to assess its impact on T2 detection.

Participants received detailed explanations and examples of the task and also completed several practice trials in the presence of the experimenter before beginning the experiment.

Trial Timings

Each trial began with a fixation cross (+) presented at the center of the screen for 500 ms followed by sequentially presented face stimuli, surrounded by the four gray pound signs. All faces were presented for 128 ms, with each presented immediately after the last. We based this timing on previous attentional blink work using faces in the T1 position. Maratos and colleagues (2008) presented participants with schematic faces for 128.5 ms each. We adopted a similar presentation time so that any differential effects between the present study and the work of Maratos et al. (2008) could not be related to presentation time per se.

In an example trial, a subject would view 15 neutral female faces. At a variable point within the 15 presentations (i.e., 256 or 512ms from the beginning of the trial) a neutral, fearful, or angry male face would be presented (T1). Following the T1 event, the T2 event (pound sign color change) could occur at one of six possible lags (i.e., 128, 256, 384, 512, 640, or 768ms). Subjects were to report if they observed the T2 event via button press, except of course on catch trials. The allowable response window for detection was limited to 512ms after the T2 target event. This allowable response window was utilized because of the brief amount of time each face was on the screen and to ensure that T2 targets appearing at earlier lags did not receive a longer response time window than those appearing later. Further, this limited response window was used to reduce the likelihood that participants could benefit from random button pressing, e.g. indicating they detected a target at the end of every trial even if they were unsure that one had been presented. Because of this reaction time window ‘ceiling,’ we chose to analyze accuracy data as opposed to RT data. Any responses made outside of this window were counted as inaccurate. To ensure that there were no systematic differences in the number of responses excluded, a post-hoc ANOVA was conducted exploring the mean number of responses excluded as a function of emotional expression and found no difference between expressions [Fear = 18.9%, Anger = 20.7%, Neutral = 24%; F(2,89) = 2.810, p > .05].
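The timing and scoring rules just described can be sketched in a few lines of Python. This is an illustrative sketch only, not the E-Prime script used in the study. The names `score_trial`, `T2_LAGS_MS`, and the argument names are hypothetical. Three details are assumptions that the text does not state: a press at exactly 512 ms counts as inside the window, a press before T2 onset counts as incorrect, and a catch trial is scored correct only when no press occurs.

```python
FACE_MS = 128              # duration of each face in the RSVP stream
RESPONSE_WINDOW_MS = 512   # allowed time to respond after T2 onset

# T2 onset relative to T1 onset for Lags 1-6 (multiples of the face duration)
T2_LAGS_MS = [lag * FACE_MS for lag in range(1, 7)]


def score_trial(is_catch, response_ms, t2_onset_ms=None):
    """Return True if the trial is scored as correct.

    response_ms and t2_onset_ms are measured from the same reference point
    (for example, trial start); response_ms is None when no button was pressed.
    """
    if is_catch:
        # Assumption: catch trials are correct only if the button is withheld.
        return response_ms is None
    if response_ms is None:
        return False
    reaction_time = response_ms - t2_onset_ms
    # Assumption: early presses and presses after the window are both misses.
    return 0 <= reaction_time <= RESPONSE_WINDOW_MS


print(T2_LAGS_MS)
print(score_trial(False, response_ms=1000, t2_onset_ms=600))  # RT = 400 ms
print(score_trial(False, response_ms=1200, t2_onset_ms=600))  # RT = 600 ms
```

Because the window has the same length at every lag, no lag receives a longer opportunity to respond than another, which is the property the text emphasizes.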

All trials where subjects provided a target detection response within the 512 ms window were used in this analysis because a) we see this as a more direct measure of attention and b) the multiple lags and catch trials ensure the accuracy of this measure. That said, attentional blink studies typically use memory for the T2 target as the criteria for inclusion of a trial, and the present design allows for a consideration of the effect of emotional expression on detection per se and how these effects relate to subsequent memory for the T2 event location. Thus, we also provide data in the Results section on memory for the T2 events.

Experimental Timings and counterbalancing

Participants completed four runs of the task – each run consisted of 84 trials with a rest period between every 21 trials. Each of the six T2 lags comprised 48 trials while each of the three T1 facial expression categories comprised 112 trials. There were 48 catch trials. T1 gender and expression as well as T2 pound sign location and lag position were all pseudorandomized so that there were equal numbers of events in each category and so no systematic order effects existed within any one category. The experimenter reminded the participant of the instructions before beginning each new run.
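The trial counts above can be checked with simple arithmetic. The sketch below uses only the numbers given in the text; the variable names are hypothetical. The counts reconcile only if the 112 trials per expression include catch trials, which is an inference from the totals rather than something the text states.

```python
RUNS, TRIALS_PER_RUN = 4, 84
N_LAGS, TRIALS_PER_LAG = 6, 48
N_EXPRESSIONS, TRIALS_PER_EXPRESSION = 3, 112
CATCH_TRIALS = 48

total_trials = RUNS * TRIALS_PER_RUN                  # all trials in the session
target_trials = N_LAGS * TRIALS_PER_LAG               # trials containing a T2 target
trials_per_cell = target_trials // (N_LAGS * N_EXPRESSIONS)  # expression x lag cell

# Target trials plus catch trials should account for every trial.
assert total_trials == target_trials + CATCH_TRIALS
# Expression totals should also sum to the session total (catch trials included).
assert total_trials == N_EXPRESSIONS * TRIALS_PER_EXPRESSION

print(total_trials, target_trials, trials_per_cell)  # 336 288 16
```

The 16 trials per expression-by-lag cell correspond to the "out of 16 per category" scoring used in the accuracy analysis.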

Results

Of our original 37 participants, five were excluded because their performance did not reach an acceptable criterion (These subjects could not or perhaps would not do the task – four performed at below 10% correct, while another subject reported detecting a target on 79% of catch trials). Given the difficult nature of this task, it was important to account for any “non-responders”, i.e. individuals who failed to complete necessary task demands and who failed to appropriately follow instructions.

From the 32 subjects who complied with task instructions, we followed the findings of Bush, Hess, and Wolford (1993) and a priori excluded the best and worst performers from the analysis. The rationale for removing these two subjects was to assess a truncated mean, which controls for potential outliers without negatively impacting power. This left us with 30 (24 females) participants to be included in the analysis.
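As a minimal sketch of this trimming step, the following Python function drops the single best and single worst performer before averaging. The function name, the subject labels, and the scores are all hypothetical and are not data from the study; how ties at the extremes were handled is not described in the text, so the sketch simply relies on sort order.

```python
from statistics import mean


def trim_extremes(scores):
    """Return a copy of `scores` without the lowest and highest scorer.

    `scores` maps a subject label to an overall accuracy value.
    """
    if len(scores) < 3:
        raise ValueError("need at least three subjects to trim both extremes")
    ranked = sorted(scores, key=scores.get)      # labels ordered by score
    return {label: scores[label] for label in ranked[1:-1]}


# Hypothetical accuracies, for illustration only.
example_scores = {"s01": 0.40, "s02": 0.90, "s03": 0.60, "s04": 0.70}
kept = trim_extremes(example_scores)
print(sorted(kept), round(mean(kept.values()), 2))
```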

T2 Detection Accuracy Data

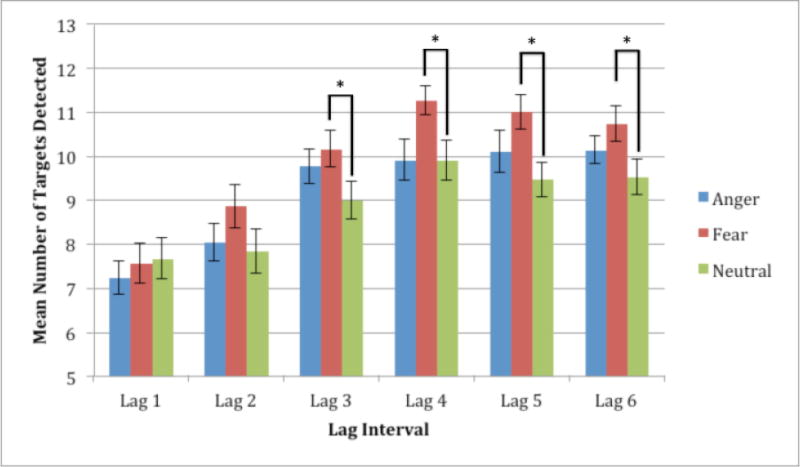

A 3 (T1 emotional expression) × 6 (T2 Lag) repeated measures ANOVA of number of trials correct (out of 16 per category) revealed a significant main effect of emotion, F(2,58) = 15.412, p < 0.001 and of lag, F(5,145) = 32.662, p < 0.001, with no significant interaction between Emotion and Lag, F(10,290) = 0.963, p = 0.476.
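The degrees of freedom reported above follow directly from the design. The sketch below reproduces them for a fully within-subject two-factor design, assuming no sphericity correction was applied (the text does not mention one). The function name `rm_anova_df` is hypothetical.

```python
def rm_anova_df(n_subjects, levels_a, levels_b):
    """Degrees of freedom (effect, error) for a two-way repeated measures ANOVA.

    Each effect is tested against its own effect-by-subject error term,
    so error df = effect df * (n_subjects - 1).
    """
    df_a = levels_a - 1
    df_b = levels_b - 1
    df_ab = df_a * df_b
    df_subjects = n_subjects - 1
    return {
        "A": (df_a, df_a * df_subjects),
        "B": (df_b, df_b * df_subjects),
        "AxB": (df_ab, df_ab * df_subjects),
    }


# 30 participants, 3 expressions (A), 6 lags (B)
print(rm_anova_df(30, 3, 6))
# {'A': (2, 58), 'B': (5, 145), 'AxB': (10, 290)}
```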

Planned paired-samples t-tests, conducted to test our primary hypothesis that fearful expressions would augment the ability to detect a subsequent target compared to neutral faces, revealed a significant difference in target detection accuracy following Fear vs. Neutral faces at four of the six lag times: Lag 3 – t(29) = 2.522, p = 0.017; Lag 4 – t(29) = 2.749, p = 0.010; Lag 5 – t(29) = 3.724, p = 0.001; Lag 6 – t(29) = 2.536, p = 0.017. Additionally, the difference between fear and neutral approached significance at Lag 2 – t(29) = 1.963, p = 0.059. There was no significant difference between target accuracy following Anger vs. Neutral trials at any of the lag times. Thus, the presentation of fearful expressions significantly increased the ability to detect a subsequent target compared to neutral faces at four of the six lag times examined.
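For readers who want to see how such comparisons are computed, here is a minimal standard-library sketch of a paired-samples t-test and of the two-tailed p value for a given t and df. It is not the SPSS procedure used in the study. The function names and the small example data set are hypothetical, and the p value is obtained by numerically integrating the Student t density with Simpson's rule rather than by a library routine.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Return (t, df) for a paired-samples t-test on equal-length sequences."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


def t_pdf(x, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p value via Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3          # probability mass between 0 and |t|
    return 1 - 2 * central_half


# Hypothetical per-subject detection counts, for illustration only.
fear = [3, 5, 7, 9]
neutral = [2, 3, 6, 6]
t_value, df = paired_t(fear, neutral)
print(round(t_value, 3), df, round(two_tailed_p(t_value, df), 3))

# Recomputing p from a reported statistic, e.g. Lag 3: t(29) = 2.522
print(round(two_tailed_p(2.522, 29), 3))
```

Applied to the reported Lag 3 statistic, the recomputed value should land close to the reported p = 0.017.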

Results are very similar when only examining trials where the T2 location was correctly reported, with a significant difference between fearful and neutral expressions at Lag 4, t(29) = 2.332, p = 0.027; Lag 5, t(29) = 2.548, p = 0.016; and Lag 6, t(29) = 2.641, p = 0.013, and no significant differences between angry and neutral expressions (all p’s > 0.05). Further, examination of trials where the T2 was both accurately detected and recalled also revealed a significant difference between fearful and neutral expressions at Lag 3, t(29) = 2.564, p = 0.016; Lag 4, t(29) = 2.579, p = 0.015; Lag 5, t(29) = 3.717, p = 0.001; and Lag 6, t(29) = 2.361, p = 0.025, with no differences between angry and neutral (all p’s > 0.05).

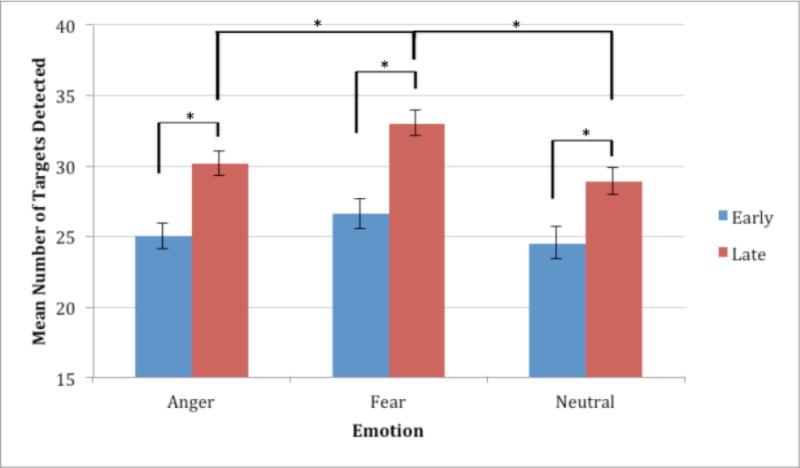

Raymond and colleagues (1992) suggested that target detection would be impaired within a 500 ms window following T1 presentation. With that in mind, performance for Lags 1–3 and Lags 4–6 was combined to examine early and late lag performance. This also allowed for greater statistical power in order to directly compare T2 target detection following fearful vs. angry expressions. As would be expected from previous work, performance was significantly better for late lag times than early for anger, t(29) = 5.611, p < 0.001, fear, t(29) = 6.095, p < 0.001, and neutral, t(29) = 4.114, p < 0.001. Further, performance was significantly better on late fear trials than late anger trials, t(29) = 3.601, p = 0.001, and trended towards significance for early fear trials compared to early anger trials, t(29) = 1.917, p = 0.065. In addition, we also found that performance on fear trials was significantly better than neutral trials at both early, t(29) = 2.649, p = 0.013, and late, t(29) = 4.505, p < 0.001, lag times. It should also be noted that anger and neutral did not differ at either early or late lag times.

Given the differences in memory for the T1 faces, an analysis of covariance (ANCOVA) was conducted which included T1 memory performance as a covariate of interest. The results of this analysis again showed a main effect of emotion, F(2,520) = 6.015, p = 0.0026, and a main effect of lag, F(5,520) = 26.3, p < 0.001, with no significant interaction between emotion and lag, F(10,520) = 0.66, p = 0.76175. The analysis also revealed a main effect of the covariate, F(1,520) = 18.670, p < 0.001. Although the covariate significantly contributes to T2 target detection, the finding that the main effects of emotion and lag are still significant when accounting for the covariate suggests that T1 memory is not driving the main effects of emotion and lag.

Discussion

The results of this study clearly show that the ability to detect a subsequent peripheral target is augmented by the presentation of a fearful face in a way that is not observed for either angry or neutral facial expressions. This effect was observed in an attentional blink paradigm that augmented attention to the T2 peripheral target event. By randomly altering the location of the T2 target, we demonstrated that attention in this case was widened or diffused throughout the environmental context to maximize the chance of detecting a peripheral target that could occur at any one of four peripheral locations. In addition, this study documents that not all negatively valenced facial expressions presented in the T1 position within an attentional blink paradigm should be expected to necessarily impact subsequent attention in the same way – in the present example emotional expressions in the T1 position can augment detection of events in the periphery.

The present finding fits nicely with our working hypotheses suggesting that fearful expressions possess greater predictive ‘source ambiguity’ compared to angry faces (Whalen, 1998; Whalen, 2007) and thus should diffuse attention and elicit greater attention to the surrounding context. That is, though fearful and angry facial expressions both signal an increase in the probability of threat, fearful expressions are more context dependent in that they offer no information about the source of that threat [see (Davis et al., 2011; Whalen, 1998) for further discussion]. These data offer an attentional mechanism to explain the facilitation in contextual memory benefits bestowed by fearful expressions (Davis et al., 2011; see also Awh, Vogel, & Oh, 2006 for general discussion).

Comparison of the present study with previous attentional blink studies

Anderson and Phelps (2001) showed that presentation of a negatively valenced or arousing target word at the T2 location could reduce the effect of the attentional blink following T1 target detection and allow for more accurate processing of the T2 target (see also De Martino et al., 2009; Maratos et al., 2008). The current work shows that fearful facial expressions presented at the T1 target location can uniquely augment T2 detection in a way that another negatively valenced facial expression (i.e., anger) does not. Thus, emotion can have differential effects on attention depending on the position of its occurrence in the attentional blink paradigm (i.e., T1 or T2).

The procedural design of the present task was based upon several previous studies exploring the attentional blink that have shown robust and consistent results using a variety of stimuli (Arnell et al., 2007; Chun & Potter, 1995; de Jong et al., 2009; de Jong et al., 2010; Keil & Ihssen, 2004; Maratos et al., 2008; Milders et al., 2006; Miyazawa & Iwasaki, 2010; Most et al., 2005; Smith et al., 2006; Raymond et al., 1992). These studies, although variable in procedural details, tend to present individuals with a string of images. At some point in the string, a critical “distractor” (T1) is presented before a critical target (T2). These studies have consistently found that the ability to report about or recall aspects of the T2 event is impaired within a critical temporal window. Although these studies served as the background for the current work, a few alterations were incorporated and deserve further consideration.

First, the present study design deliberately rendered aspects of the T1 target both explicit and implicit to allow for examination of the interaction between attention and emotion. That is, participants were directed to explicitly monitor a T1 gender change, which would be the basis for the attentional blink, while we measured the implicit effect of emotion. Although it is true that the emotional expressions inherently imbue the T1 event with salience, we chose to manipulate gender in order to make all T1 events, including those with neutral expressions, salient. This manipulation allowed us to directly compare fearful and angry expressions against neutral expressions while ensuring that all T1 events, including neutral were salient in terms of attention to gender, for a more clear comparison of the effect of expression on our three conditions.

Second, requiring participants to indicate in real time when the T2 target was presented allowed us to determine whether a participant ‘detected’ or ‘missed’ the target in a way that was independent of whether details about the target were accurately remembered. This manipulation allowed for the examination of target detection independent of any working memory effects. Previous research, which has traditionally required participants to report on a given aspect of the T2 event following each trial, has consistently demonstrated that recall of the T2 event is impaired if it occurred within a critical temporal window following the T1. We analyzed trials based on correct detection of the T2 event, rather than post-trial memory, to make the attentional nature of the present effect clearer. That said, in the Results we provide comparison data for trials based on accurate memory for T2, showing that they provide nearly identical results. It is interesting to note that while detection was augmented at Lag 3, location memory was not, suggesting that it is possible to show enhanced detection that ‘something’ has occurred without being able to report exactly what that ‘something’ was.

Comparison of the present results with previous facial expression studies

The fact that angry faces had a lesser effect on T2 target detection might, at first, appear to conflict with the work of Maratos (2011) who showed that angry expressions in the T1 target position attenuate T2 target detection. Indeed, since fearful and angry expressions are at once interesting, arousing, and negatively valenced, it would be reasonable to expect that both of these expressions would affect attention in similar ways (Davis et al., 2011; Shapiro, Raymond, & Arnell, 1997; Smith et al., 2006). However, we demonstrate here that fearful expressions produce a widening of attention not observed following angry expressions. We suggest the difference between these two studies lies in the fact that Maratos (2011) tested the effect of T1 angry face targets on the detection of a centrally located T2 target, whereas the present design specifically sought to assess detection of a peripheral T2 target.

Memory for T1 faces differed across expressions (fearful > angry > neutral), raising the possibility that memory for T1 faces might be driving the observed differences in T2 detection. Although our analysis of covariance shows that attention to and explicit memory for the T1 faces did impact T2 detection performance, it also shows that the reported effects hold even when accounting for T1 memory.

It is interesting to compare the T1 memory effect observed here to that observed by Davis and colleagues (2011). Whereas we observed better memory for fearful vs. angry expressions, Davis and colleagues (2011) observed better memory for angry vs. fearful expressions. There are several methodological differences between these two studies that might explain this difference. First, we asked participants to choose which of three faces they saw at the end of every trial. Davis and colleagues (2011) asked participants to report which faces they saw at the end of the study. We used T1 recall as a means to ensure task compliance and, consequently, we directed participants to attend to the T1 event, whereas Davis and colleagues (2011) gave no such instruction about attention to the faces. Consequently, we biased attentional control on a trial-by-trial basis in a way that was not present in the work of Davis and colleagues (2011), which focused on the implicit effect of emotional expressions on covert orienting. It is possible that the overt instruction to attend to the T1 and T2 events enhanced memory for both fearful and angry expressions and produced a memory effect that overshadowed the implicit memory effects reported by Davis et al. (2011).

In the present experimental design, we essentially used angry facial expressions as a control condition for negative valence intensity and general arousal as possible explanations for the effects observed here to fearful expressions. As noted in the methods, subjective ratings of valence intensity in the present study or previous studies do not differ between fearful and angry expressions (Davis et al., 2011; Ekman, 1997; Johnsen et al., 1995; Matsumoto et al., 1999), nor does an objective measure of arousal (Johnsen et al., 1995 [i.e., GSR]). This is true whether measured using the Ekman face stimulus set (Ekman, 1997; Johnsen et al., 1995; Matsumoto et al., 1999) or the Nimstim face stimulus set (Davis et al., 2011) which we used in the present study. Thus, the possibility that fearful expressions produced greater arousal responses is an unlikely explanation for the present findings. Indeed, the fact that we found better target detection performance following fearful expressions is entirely consistent with the present working hypothesis, since an arousal hypothesis based on data from previous attentional blink studies would predict that emotionally arousing images would impair T2 detection.

Conclusions

The notion that the predictive ‘source ambiguity’ inherent to fearful expressions diffuses attention to the context is consistent with previous work showing that individuals consistently rely on context when resolving ambiguity (Barrett, Lindquist, & Gendron, 2007; Bouton, 1994; Carroll & Russell, 1996; Masuda, Ellsworth, & Veerdonk, 2008). Here we show that a similar diffusion of attention following presentation of a fearful expression allows for a more reliable and accurate detection of a critical target presented in the periphery. The present results might influence future neuroimaging studies assessing the role of the amygdala in biologically relevant learning, given the documented role of the amygdala in processing fearful expressions (Whalen et al., 2009) and a non-human animal literature showing that the amygdala serves to facilitate sensory information processing (i.e., increases attention) in the immediate environment in response to predictors of biologically relevant events (Kapp, Silvestri, & Guarraci, 1998; Vuilleumier & Driver, 2007; Whalen, 1998; Whalen, 2007).

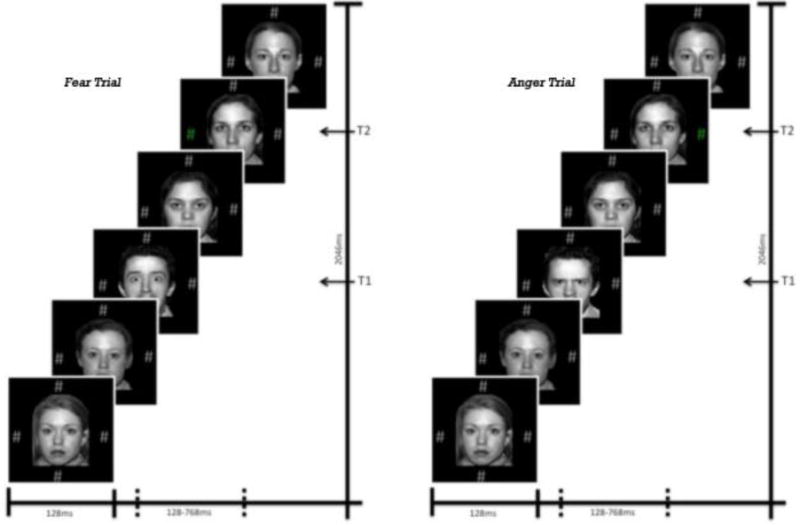

Figure 1. Examples of fear and anger trials.

Participants were presented with faces of a single gender and told to watch for a gender change (T1) that could signal the presentation of a target, i.e. a change in color at one of the four pound sign locations (T2). Faces were presented for 128ms each and the T2 target could appear at one of six temporal locations.

Figure 2. Mean number of targets detected at each of the six lag times.

The mean number of targets detected at each of the six lag times was examined to determine whether the expression that preceded the target at the T1 location influenced the ability to detect the target. Indeed, fear significantly increased the likelihood that targets would be detected at four of the six lag times (all p’s < 0.05) when compared to neutral while anger had no such effect.

Figure 3. Mean number of targets detected at early and late lag times.

To be certain that an attentional blink effect had occurred, performance was collapsed across early and late trials. For all emotional expressions, participants performed significantly better on late compared to early trials.

Acknowledgments

Funded by NIMH MH087016. We would like to thank George Wolford for his statistical advice as well as Sophie Palitz, Jennifer Buchholz, Holly Wakeman, and Iliana Meza-Gonzalez for their help with data collection.

References

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411(6835):305–9. doi: 10.1038/35077083.

- Arnell KM, Killman KV, Fijavz D. Blinded by emotion: target misses follow attention capture by arousing distractors in RSVP. Emotion (Washington, DC) 2007;7(3):465–77. doi: 10.1037/1528-3542.7.3.465.

- Awh E, Vogel EK, Oh SH. Interactions between attention and working memory. Neuroscience. 2006;139(1):201–8. doi: 10.1016/j.neuroscience.2005.08.023.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11(8) doi: 10.1016/j.tics.2007.06.003.

- Bouton M. Context, ambiguity, and classical conditioning. Current Directions in Psychological Science. 1994;3(2):49–53.

- Bush LK, Hess U, Wolford G. Transformations for within-subject designs: A Monte Carlo investigation. Psychological Bulletin. 1993;113(3):566–579. doi: 10.1037/0033-2909.113.3.566.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70(2):205–218. doi: 10.1037//0022-3514.70.2.205.

- Chun MM, Potter MC. A two-stage model for multiple target detection in rapid serial visual presentation. Journal of Experimental Psychology: Human Perception and Performance. 1995;21(1):109–27. doi: 10.1037//0096-1523.21.1.109.

- Davis FC, Somerville LH, Ruberry EJ, Berry ABL, Shin LM, Whalen PJ. A tale of two negatives: Differential memory modulation by threat-related facial expressions. Emotion (Washington, DC) 2011;11(3):647–55. doi: 10.1037/a0021625.

- de Jong PJ, Koster EHW, van Wees R, Martens S. Emotional facial expressions and the attentional blink: Attenuated blink for angry and happy faces irrespective of social anxiety. Cognition & Emotion. 2009;23(8):1640–1652.

- de Jong PJ, Koster EHW, van Wees R, Martens S. Angry facial expressions hamper subsequent target identification. Emotion (Washington, DC) 2010;10(5):727–32. doi: 10.1037/a0019353.

- De Martino B, Kalisch R, Rees G, Dolan RJ. Enhanced processing of threat stimuli under limited attentional resources. Cerebral Cortex (New York, NY: 1991) 2009;19(1):127–33. doi: 10.1093/cercor/bhn062.

- Ekman P. Should we call it expression or communication? Innovations in Social Science Research. 1997;10:333–344.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- First MB, Spitzer RL, Gibbon M, Williams JBW, Davies M, Borus J, Howes MJ, Kane J, Pope HG, Jr, Rounsaville B. The structured clinical interview for DSM-III-R personality disorders (SCID-II). Part II: Multi-site test-retest reliability study. Journal of Personality Disorders. 1995;9(2):92–104.

- Hariri AR, Tessitore A, Mattay VS, Fera F, Weinberger DR. The amygdala response to emotional stimuli: A comparison of faces and scenes. NeuroImage. 2002;17(1):317–323. doi: 10.1006/nimg.2002.1179.

- Johnsen BH, Thayer JF, Hugdahl K. Affective judgment of the Ekman faces: A dimensional approach. Journal of Psychophysiology. 1995;9:193–202.

- Kapp BS, Silvestri AJ, Guarraci FA. Vertebrate Models of Learning and Memory. In: Martinez JL Jr, Kesner RP, editors. Neurobiology of Learning and Memory. Academic Press; San Diego: 1998. pp. 289–332.

- Keil A, Ihssen N. Identification facilitation for emotionally arousing verbs during the attentional blink. Emotion (Washington, DC) 2004;4(1):23–35. doi: 10.1037/1528-3542.4.1.23.

- Maratos FA. Temporal processing of emotional stimuli: The capture and release of attention by angry faces. Emotion (Washington, DC) 2011;11(5):1242–7. doi: 10.1037/a0024279.

- Maratos FA, Mogg K, Bradley BP. Identification of angry faces in the attentional blink. Cognition & Emotion. 2008;22(7):1340–1352. doi: 10.1080/02699930701774218.

- Masuda T, Ellsworth PC, Veerdonk EVD. Placing the face in context: Cultural differences in the perception of facial emotion. Journal of Personality and Social Psychology. 2008;94(3):365–381. doi: 10.1037/0022-3514.94.3.365.

- Matsumoto D, Kasri F, Kooken K. American-Japanese cultural differences in judgments of expression intensity and subjective experience. Cognition and Emotion. 1999;13:201–218.

- Milders M, Sahraie A, Logan S, Donnellon N. Awareness of faces is modulated by their emotional meaning. Emotion (Washington, DC) 2006;6(1):10–7. doi: 10.1037/1528-3542.6.1.10.

- Miyazawa S, Iwasaki S. Do happy faces capture attention? The happiness superiority effect in attentional blink. Emotion (Washington, DC) 2010;10(5):712–6. doi: 10.1037/a0019348.

- Most SB, Chun MM, Widders DM, Zald DH. Attentional rubbernecking: cognitive control and personality in emotion-induced blindness. Psychonomic Bulletin & Review. 2005;12(4):654–61. doi: 10.3758/bf03196754.

- Raymond J, Shapiro K, Arnell K. Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception and Performance. 1992;18(3):849–860. doi: 10.1037//0096-1523.18.3.849.

- Shapiro KL, Raymond JE, Arnell KM. The attentional blink. Trends in Cognitive Sciences. 1997;1(8):291–6. doi: 10.1016/S1364-6613(97)01094-2.

- Smith SD, Most SB, Newsome LA, Zald DH. An emotion-induced attentional blink elicited by aversively conditioned stimuli. Emotion (Washington, DC) 2006;6(3):523–7. doi: 10.1037/1528-3542.6.3.523.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vuilleumier P, Driver J. Modulation of visual processing by attention and emotion: windows on causal interactions between human brain regions. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2007;362(1481):837–55. doi: 10.1098/rstb.2007.2092.

- Whalen PJ. Fear, vigilance, and ambiguity: Initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7(6):177–188.

- Whalen PJ. The uncertainty of it all. Trends in Cognitive Sciences. 2007;11(12):499–500. doi: 10.1016/j.tics.2007.08.016.

- Whalen PJ, Davis FC, Oler JA, Kim H, Kim MJ, Neta M. Human amygdala responses to facial expressions of emotion. In: Whalen PJ, Phelps EA, editors. The Human Amygdala. New York: Guilford Press; 2009. pp. 265–288.