Abstract

Most papers on high-dimensional statistics are based on the assumption that none of the regressors are correlated with the regression error, namely, that they are exogenous. Yet endogeneity can arise incidentally from a large pool of regressors in a high-dimensional regression. This causes the inconsistency of the penalized least-squares method and possible false scientific discoveries. A necessary condition for model selection consistency of a general class of penalized regression methods is given, which allows us to prove the inconsistency claim formally. To cope with the incidental endogeneity, we construct a novel penalized focused generalized method of moments (FGMM) criterion function. The FGMM effectively achieves dimension reduction and applies instrumental variable methods. We show that it possesses the oracle property even in the presence of endogenous predictors, and that the solution is also near the global minimum under the over-identification assumption. Finally, we show how semi-parametric efficiency of estimation can be achieved via a two-step approach.

Keywords: Focused GMM, Sparsity recovery, Endogenous variables, Oracle property, Conditional moment restriction, Estimating equation, Over-identification, Global minimization, Semi-parametric efficiency

1. Introduction

In high-dimensional models, the overall number of regressors p grows extremely fast with the sample size n. It can be of order exp(n^α), for some α ∈ (0, 1). What makes statistical inference possible are the sparsity and exogeneity assumptions. For example, in the linear model

$$Y = X^T\beta_0 + \varepsilon, \tag{1.1}$$

it is assumed that the number of elements in S = {j : β0j ≠ 0} is small and EεX = 0, or more stringently

$$E(\varepsilon \mid X) = 0. \tag{1.2}$$

The latter is called “exogeneity”. One of the important objectives of high-dimensional modeling is to achieve variable selection consistency and to make inference on the coefficients of important regressors. See, for example, Fan and Li (2001), Hunter and Li (2005), Zou (2006), Zhao and Yu (2006), Huang, Horowitz and Ma (2008), Zhang and Huang (2008), Wasserman and Roeder (2009), Lv and Fan (2009), Zou and Zhang (2009), Städler, Bühlmann and van de Geer (2010), and Bühlmann, Kalisch and Maathuis (2010). In these papers, (1.2) (or EεX = 0) has been assumed either explicitly or implicitly. A condition of this kind is also required by the Dantzig selector of Candès and Tao (2007), which solves an optimization problem with the constraint $\max_{j\le p}\big|\frac{1}{n}\sum_{i=1}^n X_{ij}(Y_i - X_i^T\beta)\big| < C\sqrt{(\log p)/n}$ for some C > 0.

In high-dimensional models, requiring that ε and all the components of X be uncorrelated as in (1.2), or, even more specifically,

$$E(\varepsilon X_j) = 0, \qquad j = 1, \dots, p, \tag{1.3}$$

can be restrictive, particularly when p is large. Yet (1.3) is a necessary condition for popular model selection techniques to be consistent. However, violations of either assumption (1.2) or (1.3) can arise from selection biases, measurement errors, autoregression with autocorrelated errors, omitted variables, and many other sources (Engle, Hendry and Richard 1983). They can also arise from unknown causes in a large pool of regressors, some of which are incidentally correlated with the random noise Y − XTβ0. For example, in genomics studies, clinical or biological outcomes are frequently collected along with the expressions of tens of thousands of genes. After applying variable selection techniques, scientists obtain a set of genes Ŝ that are responsible for the outcome. Whether (1.3) holds, however, is rarely validated. Because there are tens of thousands of restrictions in (1.3) to validate, it is likely that some of them are violated. Indeed, unlike in low-dimensional least squares, the sample correlations between the residuals ε̂, based on the selected variables XŜ, and the predictors X are unlikely to be small, because none of the variables in the large set Ŝᶜ are used in computing the residuals. When some of these correlations are unusually large, endogeneity arises incidentally. In such cases, we will show that Ŝ can be inconsistent. In other words, violation of assumption (1.3) can lead to false scientific claims.
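As a rough numerical illustration of incidental endogeneity, the Python sketch below (our own, not part of the original analysis) draws p predictors that are, in population, independent of the noise and records the largest absolute sample correlation with the noise. The Gaussian design, the choice n = 100, and the helper names `sample_corr` and `max_abs_corr` are illustrative assumptions; √(2 log p / n) is printed only as the usual heuristic scale for the maximum of p approximately N(0, 1/n) correlations.

```python
import math
import random


def sample_corr(x, y):
    """Pearson sample correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def max_abs_corr(n, p, seed=0):
    """Largest |corr(X_j, eps)| over p predictors drawn independently of eps."""
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
    best = 0.0
    for _ in range(p):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        best = max(best, abs(sample_corr(x, eps)))
    return best


n = 100
for p in (10, 100, 1000):
    benchmark = math.sqrt(2.0 * math.log(p) / n)
    print(p, round(max_abs_corr(n, p), 3), round(benchmark, 3))
```

With a fixed seed, the first predictors drawn are shared across values of p, so the reported maximum can only grow as p increases, even though every population correlation is zero.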

We aim to consistently estimate β0 and recover its sparsity under conditions that are weaker than (1.2) or (1.3) and easier to validate. Let us assume that $\beta_0 = (\beta_{0S}^T, 0^T)^T$ and that X can be partitioned accordingly as $X = (X_S^T, X_N^T)^T$. Here XS corresponds to the nonzero coefficients β0S, which we call important regressors, and XN represents the unimportant regressors throughout the paper, whose coefficients are zero. We borrow the terminology of endogeneity from the econometric literature: a regressor is said to be endogenous when it is correlated with the error term, and exogenous otherwise. Motivated by the aforementioned issue, this paper aims to select XS with probability approaching one and to make inference about β0S, allowing components of X to be endogenous. We propose a unified procedure that can address endogeneity present in the important regressors, the unimportant regressors, or both, and we do not require knowledge of which case is present in the true model. The identities of XS are unknown before the selection.

The main assumption we make is that there is a vector of observable instrumental variables W such that

$$E(\varepsilon \mid W) = E(Y - X^T\beta_0 \mid W) = 0. \tag{1.4}$$

Briefly speaking, W is called an “instrumental variable” when it satisfies (1.4) and is correlated with the explanatory variable X. In particular, as noted in the footnote, W = XS is allowed, so that the instruments are unknown but no additional data are needed. Instrumental variables (IV) have been commonly used in both the econometrics and the statistics literature in the presence of endogenous regressors, to achieve identification and consistent estimation (e.g., Hall and Horowitz 2005). An advantage of such an assumption is that it can be validated more easily. For example, when W = XS, one needs only to check whether the correlations between ε̂ and XŜ are small, with XŜ being a relatively low-dimensional vector, or, more generally, whether the moments that are actually used in the model fitting, such as (1.5) below, hold approximately. In short, our setup weakens the assumption (1.2) to some verifiable moment conditions.

What makes variable selection consistency possible in the presence of endogeneity is the idea of over-identification. Briefly speaking, a parameter is called “over-identified” if there are more restrictions than are needed to ensure its identifiability (for linear models, for instance, when the parameter satisfies more equations than its dimension). Let (f1, …, fp) and (h1, …, hp) be two different sets of transformations, which can be taken as a large number of series terms, e.g., B-splines and polynomials. Here each fj and hj is a scalar function. Then (1.4) implies

$$E[\varepsilon f_j(W)] = E[\varepsilon h_j(W)] = 0, \qquad j = 1, \dots, p.$$

Write F = (f1(W), …, fp(W))T and H = (h1(W), …, hp(W))T. We then have EεF = EεH = 0. Let S be the set of indices of important variables, and let FS and HS be the subvectors of F and H corresponding to the indices in S. Since EεF = EεH = 0 and $Y = X_S^T\beta_{0S} + \varepsilon$, there exists a solution βS = β0S to the over-identified equations (with respect to βS)

$$E[(Y - X_S^T\beta_S)F_S] = 0 \quad\text{and}\quad E[(Y - X_S^T\beta_S)H_S] = 0. \tag{1.5}$$

In (1.5), we have twice as many linear equations as unknowns, yet a solution exists and is given by βS = β0S. Because β0S satisfies more equations than its dimension, we call β0S over-identified. On the other hand, for any other set S̃ of variables with S ⊄ S̃, the following 2|S̃| equations (with |S̃| = dim(βS̃) unknowns)

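$$E[(Y - X_{\tilde S}^T\beta_{\tilde S})F_{\tilde S}] = 0 \quad\text{and}\quad E[(Y - X_{\tilde S}^T\beta_{\tilde S})H_{\tilde S}] = 0 \tag{1.6}$$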

have no solution as long as the basis functions are chosen such that FS̃ ≠ HS̃. The above setup includes W = XS with F = X and H = X² as a specific example (or H = cos(X) + 1 if X contains many binary variables).

We show that in the presence of endogenous regressors, the classical penalized least squares method is no longer consistent. Under the model

$$E(Y - X^T\beta_0 \mid W) = 0,$$

we introduce a novel penalized method, called focused generalized method of moments (FGMM), which differs from the classical GMM (Hansen 1982) in that the working instrument V(β) in the moment functions for FGMM also depends irregularly on the unknown parameter β (V(β) also depends on (F, H); see Section 3 for details). With the help of over-identification, the FGMM successfully eliminates those subsets S̃ such that S ⊄ S̃. As we will see in Section 3, penalization is still needed to avoid over-fitting. This results in a novel penalized FGMM.

We would like to comment that FGMM differs from the low-dimensional techniques of either moment selection (Andrews 1999, Andrews and Lu 2001) or shrinkage GMM (Liao 2013) in dealing with mis-specifications of moment conditions and dimension reductions. The existing methods in the literature on GMM moment selections cannot handle high-dimensional models. Recent literature on the instrumental variable method for high-dimensional models can be found in, e.g., Belloni et al. (2012), Caner and Fan (2012), García (2011). In these papers, the endogenous variables are in low dimensions. More closely related work is by Gautier and Tsybakov (2011), who solved a constrained minimization as an extension of Dantzig selector. Our paper, in contrast, achieves the oracle property via a penalized GMM. Also, we study a more general conditional moment restricted model that allows nonlinear models.

The remainder of this paper is organized as follows. Section 2 gives a necessary condition for a general penalized regression to achieve the oracle property. We also show that in the presence of endogenous regressors, the penalized least squares method is inconsistent. Section 3 constructs a penalized FGMM and discusses the rationale of our construction. Section 4 shows the oracle property of FGMM. Section 5 discusses global optimization. Section 6 focuses on semi-parametric efficient estimation after variable selection. Section 7 discusses numerical implementation. We present simulation results in Section 8. Finally, Section 9 concludes. Proofs are given in the appendix.

Notation

Throughout the paper, let λmin(A) and λmax(A) be the smallest and largest eigenvalues of a square matrix A. We denote by ‖A‖F, ‖A‖ and ‖A‖∞ the Frobenius, operator and element-wise norms of a matrix A, defined respectively as ‖A‖F = tr^{1/2}(ATA), $\|A\| = \lambda_{\max}^{1/2}(A^TA)$, and ‖A‖∞ = max_{i,j} |Aij|. For two sequences an and bn, write an ≪ bn (equivalently, bn ≫ an) if an = o(bn). Moreover, |β|0 denotes the number of nonzero components of a vector β. Finally, $P_n'(t)$ and $P_n''(t)$ denote the first and second derivatives of a penalty function Pn(t), if they exist.

2. Necessary Condition for Variable Selection Consistency

2.1. Penalized regression and necessary condition

Let s denote the dimension of the true vector of nonzero coefficients β0S. The sparse structure assumes that s is small compared to the sample size. A penalized regression problem, in general, takes the form

$$\min_{\beta\in\mathbb{R}^p}\ L_n(\beta) + \sum_{j=1}^p P_n(|\beta_j|),$$

where Pn(·) denotes a penalty function. Relatively little attention has been paid to necessary conditions for the penalized estimator to achieve the oracle property. Zhao and Yu (2006) derived an almost necessary condition for sign consistency, which is similar to that of Zou (2006) for the least squares loss with the Lasso penalty. To the best of the authors' knowledge, there has so far been no necessary condition on the loss function for selection consistency in the high-dimensional framework. Such a necessary condition is important because it provides a way to check whether a specific loss function can result in consistent variable selection.

Theorem 2.1 (Necessary Condition)

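Suppose:

(i) Ln(β) is twice differentiable, and $\max_{1\le l,j\le p}\big|\partial^2 L_n(\beta_0)/\partial\beta_l\partial\beta_j\big| = O_p(1)$;

(ii) There is a local minimizer β̂ = (β̂S, β̂N)T of $L_n(\beta) + \sum_{j=1}^p P_n(|\beta_j|)$ such that P(β̂N = 0) → 1 and √s ‖β̂ − β0‖ = op(1);

(iii) The penalty satisfies: Pn(·) ≥ 0, Pn(0) = 0, $P_n'(t)$ is non-increasing when t ∈ (0, u) for some u > 0, and $\lim_{n\to\infty}\lim_{t\to 0^+} P_n'(t) = 0$.

Then for any l ≤ p,

$$\frac{\partial L_n(\beta_0)}{\partial\beta_l} \to_p 0. \tag{2.1}$$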

The implication (2.1) is fundamentally different from the “irrepresentable condition” in Zhao and Yu (2006) and that of Zou (2006). It imposes a restriction on the loss function Ln(·), whereas the “irrepresentable condition” is derived under the least squares loss and E(εX) = 0. For least squares, (2.1) reduces to either $\frac{1}{n}\sum_{i=1}^n X_{il}\varepsilon_i \to_p 0$ or EεXl = 0, which requires an exogenous relationship between ε and X. In contrast, the irrepresentable condition requires a type of relationship between important and unimportant regressors and is specific to Lasso. It also differs from the Karush-Kuhn-Tucker (KKT) condition (e.g., Fan and Lv 2011) in that it concerns the gradient vector evaluated at the true parameters rather than at the local minimizer.
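For the least-squares loss Ln(β) = n⁻¹ Σi (Yi − XiTβ)², the l-th partial derivative at β0 is −2n⁻¹ Σi Xil εi. The sketch below evaluates it in a hypothetical two-regressor design in which X1 is important and exogenous while X2 is unimportant but loads on ε with coefficient 0.5; all numbers are illustrative assumptions.

```python
import random


def ls_gradient_at_truth(n, seed=0):
    """Gradient of L_n(beta) = mean((Y - X'beta)^2), evaluated at beta0."""
    rng = random.Random(seed)
    beta0 = (2.0, 0.0)  # X1 important, X2 unimportant
    grad = [0.0, 0.0]
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        x1 = rng.gauss(0.0, 1.0)              # exogenous
        x2 = rng.gauss(0.0, 1.0) + 0.5 * eps  # endogenous: E(X2 * eps) = 0.5
        y = beta0[0] * x1 + beta0[1] * x2 + eps
        resid = y - (beta0[0] * x1 + beta0[1] * x2)  # equals eps
        grad[0] += -2.0 * x1 * resid / n
        grad[1] += -2.0 * x2 * resid / n
    return grad


print([round(v, 3) for v in ls_gradient_at_truth(20000)])
```

The first coordinate concentrates around 0, while the second concentrates around −2E(X2ε) = −1, so (2.1) fails for the endogenous but unimportant regressor.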

The conditions on the penalty function in condition (iii) are very general, and are satisfied by a large class of popular penalties, such as Lasso (Tibshirani 1996), SCAD (Fan and Li 2001) and MCP (Zhang 2010), as long as their tuning parameter λn → 0. Hence this theorem should be understood as a necessary condition imposed on the loss function instead of the penalty.

2.2. Inconsistency of least squares with endogeneity

As an application of Theorem 2.1, consider a linear model:

$$Y = X^T\beta_0 + \varepsilon = X_S^T\beta_{0S} + \varepsilon, \tag{2.2}$$

where we may not have E(εX) = 0.

The conventional penalized least squares (PLS) problem is defined as

$$\min_{\beta}\ \frac{1}{n}\sum_{i=1}^n (Y_i - X_i^T\beta)^2 + \sum_{j=1}^p P_n(|\beta_j|).$$

In the simpler case when s, the number of nonzero components of β0, is bounded, it can be shown that if some regressors are correlated with the regression error ε, PLS does not achieve variable selection consistency. This is because (2.1) does not hold for the least squares loss function. Hence, without the possibly ad hoc exogeneity assumption, PLS no longer works, as stated more formally below.

Theorem 2.2 (Inconsistency of PLS)

Suppose the data are i.i.d., s = O(1), and X has at least one endogenous component, that is, there is l such that |E(Xlε)| > c for some c > 0. Assume that , Eε⁴ < ∞, and Pn(t) satisfies the conditions in Theorem 2.1. If $\tilde\beta = (\tilde\beta_S^T, \tilde\beta_N^T)^T$, corresponding to the coefficients of (XS, XN), is a local minimizer of

$$\frac{1}{n}\sum_{i=1}^n (Y_i - X_i^T\beta)^2 + \sum_{j=1}^p P_n(|\beta_j|),$$

then either ‖β̃S − β0S‖ ≠ op(1), or lim sup_{n→∞} P(β̃N = 0) < 1.

The index l in the condition of the above theorem does not have to be an index of an important regressor. Hence the consistency for penalized least squares will fail even if the endogeneity is only present on the unimportant regressors.

We conduct a simple simulated experiment to illustrate the impact of endogeneity on the variable selection. Consider

In this design, the unimportant regressors are endogenous. Penalized least squares (PLS) with the SCAD penalty was used for variable selection. The values of λ in the table are the tuning parameters of the SCAD penalty. The results are based on the estimates $\hat\beta = (\hat\beta_S^T, \hat\beta_N^T)^T$, obtained by minimizing the PLS and FGMM criterion functions respectively (we discuss the construction of the FGMM loss function and its numerical minimization in detail subsequently). Here β̂S and β̂N are the estimators of the coefficients of the important and unimportant regressors respectively.

From Table 1, PLS selects many unimportant regressors (FP). In contrast, the penalized FGMM performs well both in selecting the important regressors and in eliminating the unimportant ones. However, the larger MSES of β̂S under FGMM is due to the moment conditions used in the estimation; it can be improved further, as discussed in Section 6. Also, when endogeneity is present in the important regressors, the PLS estimator has a larger bias (see the additional simulation results in Section 8).

Table 1.

Performance of PLS and FGMM over 100 replications. p = 50, n = 200

| | PLS, λ = 0.05 | PLS, λ = 0.1 | PLS, λ = 0.5 | PLS, λ = 1 | FGMM, λ = 0.05 | FGMM, λ = 0.1 | FGMM, λ = 0.2 |
|---|---|---|---|---|---|---|---|
| MSES | 0.145 (0.053) | 0.133 (0.043) | 0.629 (0.301) | 1.417 (0.329) | 0.261 (0.094) | 0.184 (0.069) | 0.194 (0.076) |
| MSEN | 0.126 (0.035) | 0.068 (0.016) | 0.072 (0.016) | 0.095 (0.019) | 0.001 (0.010) | 0 (0) | 0.001 (0.009) |
| TP | 5 (0) | 5 (0) | 4.82 (0.385) | 3.63 (0.504) | 5 (0) | 5 (0) | 5 (0) |
| FP | 37.68 (2.902) | 35.36 (3.045) | 8.84 (3.334) | 2.58 (1.557) | 0.08 (0.337) | 0 (0) | 0.02 (0.141) |

MSES is the average of ‖β̂S − β0S‖ for the nonzero coefficients, and MSEN is the average of ‖β̂N − β0N‖ for the zero coefficients. TP is the number of correctly selected variables, and FP is the number of incorrectly selected variables. Standard errors are reported in parentheses.
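For readers reproducing a table of this kind, the following sketch computes the four measures for a single replication, following the definitions in the note above; Table 1 reports their averages over 100 replications. The function name `selection_metrics` and the toy vectors are hypothetical.

```python
import math


def selection_metrics(beta_hat, beta0):
    """One-replication versions of MSES, MSEN, TP and FP as defined for Table 1."""
    support = [j for j, b in enumerate(beta0) if b != 0]
    null = [j for j, b in enumerate(beta0) if b == 0]
    mse_s = math.sqrt(sum((beta_hat[j] - beta0[j]) ** 2 for j in support))
    mse_n = math.sqrt(sum(beta_hat[j] ** 2 for j in null))  # beta0_N = 0
    tp = sum(1 for j in support if beta_hat[j] != 0)  # correctly selected
    fp = sum(1 for j in null if beta_hat[j] != 0)     # incorrectly selected
    return {"MSES": mse_s, "MSEN": mse_n, "TP": tp, "FP": fp}


m = selection_metrics([4.7, -4.4, 0.0, 0.1], [5.0, -4.0, 0.0, 0.0])
print(m)
```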

3. Focused GMM

3.1. Definition

Because of the presence of endogenous regressors, we introduce an instrumental variable (IV) regression model. Consider a more general nonlinear model:

$$E[g(Y, X^T\beta_0) \mid W] = 0, \tag{3.1}$$

where Y stands for the dependent variable and g : ℝ × ℝ → ℝ is a known function. For simplicity, we require g to be one-dimensional; it should be thought of as a possibly nonlinear residual function. Our results can be naturally extended to a multi-dimensional g function. Here W is a vector of observed random variables, known as instrumental variables.

Model (3.1) is called a conditional moment restricted model, which has been extensively studied in the literature, e.g., Newey (1993), Donald et al. (2009), Kitamura et al. (2004). The high-dimensional model is also closely related to the semi/nonparametric model estimated by sieves with a growing sieve dimension, e.g., Ai and Chen (2003). Recently, van de Geer (2008) and Fan and Lv (2011) considered generalized linear models without endogeneity. Some interesting examples of the generalized linear model that fit into (3.1) are:

linear regression, g(t1, t2) = t1 − t2;

logit model, g(t1, t2) = t1 − exp(t2)/(1 + exp(t2));

probit model, g(t1, t2) = t1 − Φ(t2) where Φ(·) denotes the standard normal cumulative distribution function.
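The three residual functions can be coded directly. The sketch below assumes t1 plays the role of Y and t2 the role of the index XTβ, and writes Φ through `math.erfc`; the numerically stable logistic helper is an implementation choice, not part of the model.

```python
import math


def g_linear(t1, t2):
    return t1 - t2


def logistic(t):
    """Numerically stable exp(t) / (1 + exp(t))."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def g_logit(t1, t2):
    return t1 - logistic(t2)


def std_normal_cdf(t):
    """Phi(t) via the complementary error function."""
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def g_probit(t1, t2):
    return t1 - std_normal_cdf(t2)


# Residuals g(Y_i, X_i'beta) for one observation with Y_i = 1 and index 0.3.
print(g_linear(1.0, 0.3), g_logit(1.0, 0.3), g_probit(1.0, 0.3))
```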

Let (f1, …, fp) and (h1, …, hp) be two different sets of transformations of W, which can be taken as a large number of series basis functions, e.g., B-splines, Fourier series, or polynomials (see Chen 2007 for discussions of the choice of sieve functions). Here each fj and hj is a scalar function. Write F = (f1(W), …, fp(W))T and H = (h1(W), …, hp(W))T. The conditional moment restriction (3.1) then implies that

$$E[g(Y, X_S^T\beta_{0S})F_S] = 0 \quad\text{and}\quad E[g(Y, X_S^T\beta_{0S})H_S] = 0, \tag{3.2}$$

where FS and HS are the subvectors of F and H whose supports are on the oracle set S = {j ≤ p : β0j ≠ 0}. In particular, when all the components of XS are known to be exogenous, we can take F = X and H = X² (the vector of squares of X taken coordinatewise), or H = cos(X) + 1 if X is a binary variable. A typical estimator based on moment conditions like (3.2) can be obtained via the generalized method of moments (GMM, Hansen 1982). However, in the problem considered here, (3.2) cannot be used directly to construct the GMM criterion function, because the identities of XS are unknown.

Remark 3.1

One seemingly workable solution is to define V as a vector of transformations of W, for instance V = F, and apply GMM to the moment condition E[g(Y, XTβ0)V] = 0. However, one has to take dim(V) ≥ dim(β) = p to guarantee that the GMM criterion function has a unique minimizer (in the linear model, for instance). Since p ≫ n, the dimension of V is then too large, and the sample analogue of the GMM criterion function may not converge to its population version due to the accumulation of high-dimensional estimation errors.

Let us introduce some additional notation. For any β ∈ ℝ^p \ {0} and i = 1, …, n, let r = |β|0 and define the r-dimensional vectors

Fi(β) = (fl1(Wi), …, flr(Wi))T and Hi(β) = (hl1(Wi), …, hlr(Wi))T, where (l1, …, lr) are the indices of the nonzero components of β. For example, if p = 3 and β = (−1, 0, 2)T, then Fi(β) = (f1(Wi), f3(Wi))T and Hi(β) = (h1(Wi), h3(Wi))T, i ≤ n.

Our focused GMM (FGMM) loss function is defined as

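$$L_{FGMM}(\beta) = \sum_{j=1}^p I(\beta_j \neq 0)\left\{w_{j1}\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)f_j(W_i)\Big]^2 + w_{j2}\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)h_j(W_i)\Big]^2\right\}, \tag{3.3}$$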

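where wj1 and wj2 are given weights. For example, we will take $w_{j1} = 1/\widehat{\mathrm{var}}(f_j(W))$ and $w_{j2} = 1/\widehat{\mathrm{var}}(h_j(W))$ to standardize the scale (here $\widehat{\mathrm{var}}$ denotes the sample variance). Writing in matrix form, for $V_i(\beta) = (F_i(\beta)^T, H_i(\beta)^T)^T$,

$$L_{FGMM}(\beta) = \Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)V_i(\beta)\Big]^T J(\beta)\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)V_i(\beta)\Big],$$

where $J(\beta) = \mathrm{diag}\{w_{l_1 1}, \dots, w_{l_r 1}, w_{l_1 2}, \dots, w_{l_r 2}\}$.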
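As we read (3.3), the loss sums, over the coordinates j with βj ≠ 0, the weighted squares of the two sample moments n⁻¹ Σi g(Yi, XiTβ)fj(Wi) and n⁻¹ Σi g(Yi, XiTβ)hj(Wi), with weights equal to inverse sample variances. The pure-Python sketch below implements that reading. The toy data (exogenous Gaussian regressors, so that F = X and H = X² are valid instruments), the n − 1 divisor of the sample variance, and the name `fgmm_loss` are assumptions for illustration.

```python
import random
import statistics


def fgmm_loss(beta, Y, X, F, H, g):
    """Sample FGMM loss, following our reading of (3.3).

    F[i][j] = f_j(W_i) and H[i][j] = h_j(W_i); g(t1, t2) is the residual
    function. Weights are inverse sample variances (n - 1 divisor, assumed).
    """
    n, p = len(Y), len(beta)
    resid = [g(Y[i], sum(X[i][k] * beta[k] for k in range(p))) for i in range(n)]
    loss = 0.0
    for j in range(p):
        if beta[j] == 0:  # indicator I(beta_j != 0) drops the j-th instrument pair
            continue
        fj = [F[i][j] for i in range(n)]
        hj = [H[i][j] for i in range(n)]
        mf = sum(r * v for r, v in zip(resid, fj)) / n
        mh = sum(r * v for r, v in zip(resid, hj)) / n
        loss += mf ** 2 / statistics.variance(fj) + mh ** 2 / statistics.variance(hj)
    return loss


def linear(t1, t2):
    return t1 - t2


# Toy data: exogenous Gaussian regressors, Y = 1.5 * X_1 + eps, F = X, H = X^2.
rng = random.Random(0)
n, p = 2000, 3
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
Y = [1.5 * row[0] + rng.gauss(0.0, 1.0) for row in X]
F = X
H = [[v * v for v in row] for row in X]
print(fgmm_loss([1.5, 0.0, 0.0], Y, X, F, H, linear))  # true beta: small
print(fgmm_loss([0.0, 0.0, 1.0], Y, X, F, H, linear))  # wrong support: much larger
```

Because every indicator is switched off at β = 0, the loss is trivially zero there, which is consistent with restricting the definitions above to β ≠ 0.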

Unlike in the traditional GMM, the “working instrumental variables” V(β) depend irregularly on the unknown β. As will be explained further, this ensures dimension reduction and allows us to focus only on the equations with the IVs whose support is on the oracle space; hence the name focused GMM, or FGMM for short.

We then define the FGMM estimator by minimizing the following criterion function:

$$Q_{FGMM}(\beta) = L_{FGMM}(\beta) + \sum_{j=1}^p P_n(|\beta_j|). \tag{3.4}$$

Sufficient conditions on the penalty function Pn(|βj|) for the oracle property will be presented in Section 4. Penalization is needed because otherwise small coefficients in front of unimportant variables would still be kept in minimizing LFGMM(β). As will become clearer in Section 6, the FGMM focuses on model selection and estimation consistency without paying much attention to the efficient estimation of β0S.
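The penalized criterion adds Σj Pn(|βj|) to LFGMM(β). A minimal sketch with the SCAD penalty of Fan and Li (2001) follows; the default a = 3.7 is the value commonly used with SCAD, and `fgmm_objective`, which accepts any loss callable, is a hypothetical interface for illustration.

```python
def scad(t, lam, a=3.7):
    """SCAD penalty of Fan and Li (2001), evaluated at |t|."""
    t = abs(t)
    if t <= lam:
        return lam * t
    if t <= a * lam:
        return (2.0 * a * lam * t - t * t - lam * lam) / (2.0 * (a - 1.0))
    return (a + 1.0) * lam * lam / 2.0  # constant beyond a * lam


def fgmm_objective(loss_fn, beta, lam, a=3.7):
    """Q(beta) = loss_fn(beta) + sum_j P_n(|beta_j|) with a SCAD penalty."""
    return loss_fn(beta) + sum(scad(b, lam, a) for b in beta)


# Zero coefficients cost nothing; a large coefficient pays the capped amount.
print(fgmm_objective(lambda b: 1.0, [0.0, 10.0], lam=0.5))
```

Because the SCAD penalty is flat beyond aλn, large coefficients are not shrunk, which is the asymptotic unbiasedness the text relies on later.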

3.2. Rationales behind the construction of FGMM

3.2.1. Inclusion of V(β)

We construct the FGMM criterion function using

$$V(\beta) = (F(\beta)^T, H(\beta)^T)^T.$$

A natural question arises: why not just use one set of IVs, so that V(β) = F(β)? We now explain the rationale behind the inclusion of the second set of instruments H(β). To simplify notation, let Fij = fj(Wi) and Hij = hj(Wi) for j ≤ p and i ≤ n. Then Fi = (Fi1, …, Fip) and Hi = (Hi1, …, Hip). Also write Fj = fj(W) and Hj = hj(W) for j ≤ p.

Let us consider a linear regression model (2.2) as an example. If H(β) were not included and V(β) = F(β) had been used, the GMM loss function would have been constructed as

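$$Q_{FGMM}(\beta) = \Big[\frac{1}{n}\sum_{i=1}^n (Y_i - X_i^T\beta)F_i(\beta)\Big]^T J(\beta)\Big[\frac{1}{n}\sum_{i=1}^n (Y_i - X_i^T\beta)F_i(\beta)\Big] + \sum_{j=1}^p P_n(|\beta_j|), \tag{3.5}$$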

where, for simplicity of illustration, J(β) is taken as the identity matrix. We also use the L0-penalty Pn(|βj|) = λnI(|βj| ≠ 0) for illustration. Suppose that the true $\beta_0 = (\beta_{01}, \dots, \beta_{0s}, 0, \dots, 0)^T$, where only the first s components are nonzero, and that s > 1. If, however, we restrict ourselves to βp = (0, …, 0, βp), the criterion function becomes

$$Q_{FGMM}(\beta_p) = \Big[\frac{1}{n}\sum_{i=1}^n (Y_i - X_{ip}\beta_p)F_{ip}\Big]^2 + \lambda_n.$$

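It is easy to see that its minimum is just λn, attained at $\beta_p = \sum_i Y_iF_{ip}\big/\sum_i X_{ip}F_{ip}$. On the other hand, if we optimize QFGMM on the oracle space $\{\beta = (\beta_S^T, 0^T)^T : \beta_j \neq 0 \text{ for all } j\in S\}$, then

$$\min Q_{FGMM}(\beta) \ge s\lambda_n > \lambda_n.$$

As a result, the minimizer of (3.5) is inconsistent for variable selection.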
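The failure of the single-instrument criterion is easy to reproduce numerically. In the sketch below (a hypothetical design with s = 3 important regressors, one unimportant regressor, F = X, and λn = 0.1), the criterion restricted to a model containing only the unimportant regressor is minimized in closed form at βp = Σi YiFip / Σi XipFip, and its value is compared with the lower bound sλn that the L0 penalty imposes on any β supported on S.

```python
import random


def one_instrument_criterion(bp, Y, Xp, Fp, lam):
    """Criterion restricted to beta = (0, ..., 0, bp), with J = I and the L0 penalty."""
    n = len(Y)
    moment = sum((y - x * bp) * f for y, x, f in zip(Y, Xp, Fp)) / n
    return moment ** 2 + lam  # exactly one nonzero coefficient is penalized


rng = random.Random(0)
n, s, lam = 500, 3, 0.1
X = [[rng.gauss(0.0, 1.0) for _ in range(s + 1)] for _ in range(n)]
Y = [sum(row[:s]) + rng.gauss(0.0, 1.0) for row in X]  # beta0 = (1, 1, 1, 0)
Xp = [row[s] for row in X]  # the unimportant regressor
Fp = Xp                     # single instrument set F = X
bp_star = sum(y * f for y, f in zip(Y, Fp)) / sum(x * f for x, f in zip(Xp, Fp))
q_wrong = one_instrument_criterion(bp_star, Y, Xp, Fp, lam)
oracle_lower_bound = s * lam  # any beta supported on S pays s * lam
print(q_wrong, oracle_lower_bound)
```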

The use of the L0-penalty is not essential in the above illustration. The problem persists if the L1-penalty is used, and it is not merely due to the bias of the L1-penalty. For instance, recall that for the SCAD penalty with hyperparameters (a, λn), Pn(·) is non-decreasing, and Pn(t) = (a + 1)λn²/2 when t ≥ aλn. Given that minj∈S|β0j| ≫ λn,

$$Q_{FGMM}(\beta_0) \ge \sum_{j\in S} P_n(|\beta_{0j}|) = \frac{s(a+1)\lambda_n^2}{2}.$$

On the other hand, $\min_{\beta_p} Q_{FGMM}(\beta_p) \le (a+1)\lambda_n^2/2$, which is strictly less than QFGMM(β0) because s > 1. So the problem is still present when an asymptotically unbiased penalty (e.g., SCAD, MCP) is used.

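Including an additional term H(β) in V(β) can overcome this problem. For example, if we still restrict to βp = (0, …, 0, βp) but include an additional, different IV Hip, the criterion function becomes, for the L0 penalty,

$$Q_{FGMM}(\beta_p) = \Big[\frac{1}{n}\sum_{i=1}^n (Y_i - X_{ip}\beta_p)F_{ip}\Big]^2 + \Big[\frac{1}{n}\sum_{i=1}^n (Y_i - X_{ip}\beta_p)H_{ip}\Big]^2 + \lambda_n.$$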

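In general, the first two terms cannot both be op(1) as long as the two sets of transformations {fj(·)} and {hj(·)} are chosen differently. Indeed, both terms vanish asymptotically only if βp solves E[(Y − Xpβp)Fp] = 0 and E[(Y − Xpβp)Hp] = 0 simultaneously, which is impossible so long as n is large and

$$E(YF_p)\,E(X_pH_p) \neq E(YH_p)\,E(X_pF_p). \tag{3.6}$$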

As a result, QFGMM(βp) is bounded away from zero with probability approaching one.
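With J equal to the identity, the restricted two-instrument criterion is (a1 − b1βp)² + (a2 − b2βp)² + λn, where a1, b1 (resp. a2, b2) are the sample means of YiFip and XipFip (resp. YiHip and XipHip). By Lagrange's identity, its minimum over βp is (a1b2 − a2b1)²/(b1² + b2²) + λn, which exceeds λn unless the two moment equations share a root. The sketch below reports the part above λn in a hypothetical design where E(Y | Xp) is nonlinear in Xp, with Fp = Xp and Hp = Xp².

```python
import random


def mean(v):
    return sum(v) / len(v)


def two_instrument_min(a1, b1, a2, b2):
    """min over b of (a1 - b1*b)^2 + (a2 - b2*b)^2, via Lagrange's identity."""
    return (a1 * b2 - a2 * b1) ** 2 / (b1 ** 2 + b2 ** 2)


rng = random.Random(1)
n = 5000
xp = [rng.gauss(0.0, 1.0) for _ in range(n)]
x1 = [v * v + rng.gauss(0.0, 1.0) for v in xp]  # important regressor, nonlinear in xp
y = [a + rng.gauss(0.0, 1.0) for a in x1]        # Y = X1 + eps, so beta0 = (1, 0)
# Sample moments of the restricted criterion with F_p = X_p and H_p = X_p^2:
a1 = mean([yi * f for yi, f in zip(y, xp)])      # (1/n) sum Y_i F_ip
b1 = mean([f * f for f in xp])                   # (1/n) sum X_ip F_ip
a2 = mean([yi * f * f for yi, f in zip(y, xp)])  # (1/n) sum Y_i H_ip
b2 = mean([f ** 3 for f in xp])                  # (1/n) sum X_ip H_ip
# Population counterpart: (0 * 0 - 3 * 1)^2 / (1^2 + 0^2) = 9.
print(two_instrument_min(a1, b1, a2, b2))
```

With F alone, the corresponding minimum is exactly zero whenever b1 ≠ 0, so the second instrument set is what keeps the criterion bounded away from λn on the wrong model.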

To better understand the behavior of QFGMM (β), it is more convenient to look at the population analogues of the loss function. Because the number of equations in

$$E[g(Y, X^T\beta)V(\beta)] = 0 \tag{3.7}$$

is twice the number of unknowns (the nonzero components of β), if we denote by S̃ the support of β, then in the linear model (2.2), (3.7) has a solution only when $(EF_{\tilde S}X_{\tilde S}^T)^{-1}EF_{\tilde S}Y = (EH_{\tilde S}X_{\tilde S}^T)^{-1}EH_{\tilde S}Y$ (assuming these matrices are invertible), which does not hold in general unless S̃ = S, the index set of the true nonzero coefficients. Hence it is natural for (3.7) to have a unique solution β = β0. As a result, if we define

$$G(\beta) = \|E[g(Y, X^T\beta)V(\beta)]\|^2,$$

the population version of LFGMM, then as long as β is not close to β0, G should be bounded away from zero. Therefore, it is reasonable for us to assume that for any δ > 0, there is γ(δ) > 0 such that

$$\inf_{\beta\neq 0,\ \|\beta-\beta_0\|_\infty \ge \delta} G(\beta) > \gamma(\delta). \tag{3.8}$$

On the other hand, (3.2) implies G(β0) = 0.

Our FGMM loss function is essentially a sample version of G(β), so minimizing LFGMM(β) forces the estimator to be close to β0, but small coefficients in front of unimportant but exogenous regressors may still be allowed. Hence a concave penalty function is added to LFGMM to define QFGMM.

3.2.2. Indicator function

Readers may also ask why we do not define LFGMM(β), for some weight matrix J, as

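$$L_{FGMM}(\beta) = \Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)V_i\Big]^T J\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)V_i\Big], \tag{3.9}$$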

that is, why not replace the irregular β-dependent V(β) with V, and use the entire 2p-dimensional V = (FT, HT)T as the IV? This is equivalent to the question why the indicator function in (3.3) cannot be dropped.

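The indicator function is used to prevent the accumulation of estimation errors under high dimensionality. To see this, take a diagonal J = diag(J1, …, J2p) and rewrite (3.9) as

$$L_{FGMM}(\beta) = \sum_{j=1}^{2p} J_j\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta)V_{ij}\Big]^2.$$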

Since dim(Vi) = 2p ≫ n, even if each individual term evaluated at β = β0 is $O_p(1/n)$, the sum of 2p such terms becomes stochastically unbounded. In general, (3.9) does not converge to its population analogue when p ≫ n, because the accumulation of high-dimensional estimation errors has a non-negligible effect.

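In contrast, the indicator function effectively reduces the dimension and prevents the accumulation of estimation errors. Once the indicator function is included, the proposed FGMM loss function evaluated at β0 becomes

$$L_{FGMM}(\beta_0) = \sum_{j\in S}\Big\{w_{j1}\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta_0)F_{ij}\Big]^2 + w_{j2}\Big[\frac{1}{n}\sum_{i=1}^n g(Y_i, X_i^T\beta_0)H_{ij}\Big]^2\Big\},$$

which is small because E[g(Y, XTβ0)FS] = E[g(Y, XTβ0)HS] = 0 and there are only s = |S| terms in the summation.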
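The contrast between (3.9) and the focused loss can be seen in a small simulation. The sketch below assumes g(Yi, XiTβ0) = εi is independent of all instruments, takes J to be the identity, uses standard normal draws for fj and hj = fj² − 1, and treats the first s indices as S; all of these are illustrative assumptions. Each squared sample moment is of order 1/n, so the sum over all 2p instruments grows like p/n, while the focused sum involves only 2s terms.

```python
import random


def moment_sums(n, p, s, seed=0):
    """Squared sample moments at beta0: sum over all 2p instruments vs. the s focused pairs."""
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n)]  # plays the role of g(Y_i, X_i'beta0)
    terms = []
    for _ in range(p):
        f = [rng.gauss(0.0, 1.0) for _ in range(n)]
        h = [v * v - 1.0 for v in f]  # a second, different transformation
        mf = sum(e * v for e, v in zip(eps, f)) / n
        mh = sum(e * v for e, v in zip(eps, h)) / n
        terms.append(mf ** 2 + mh ** 2)
    return sum(terms), sum(terms[:s])


full, focused = moment_sums(n=200, p=1000, s=3)
print(full, focused)  # full is of order 3p/n = 15; focused is of order 3s/n
```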

Recently, there has been a growing literature on shrinkage GMM, e.g., Caner (2009), Caner and Zhang (2013) and Liao (2013), on estimation and variable selection based on a set of moment conditions like (3.2). The models considered by these authors are restricted to either a low-dimensional parameter space or a low-dimensional vector of moment conditions, where the problem of error accumulation does not arise.

4. Oracle Property of FGMM

FGMM involves a non-smooth loss function. In the appendix, we develop a general asymptotic theory for high-dimensional models to accommodate the non-smooth loss function.

Our first assumption defines the penalty function we use. We consider a class of folded concave penalty functions similar to that in Fan and Li (2001).

For any β = (β1,…, βs)T ∈ ℝs, and |βj| ≠ 0, j = 1,…, s, define

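$$\eta(\beta) = \limsup_{\epsilon\to 0^+}\ \max_{j\le s}\ \sup_{t_1<t_2;\ t_1,t_2\in(|\beta_j|-\epsilon,\,|\beta_j|+\epsilon)} -\frac{P_n'(t_2)-P_n'(t_1)}{t_2-t_1}, \tag{4.1}$$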

which equals $\max_{j\le s} -P_n''(|\beta_j|)$ if the second derivative of Pn is continuous. Let

represent the strength of signals.

Assumption 4.1

The penalty function Pn(t) : [0, ∞) → ℝ satisfies:

Pn(0) = 0

Pn(t) is concave, non-decreasing on [0, ∞), and has a continuous derivative when t > 0.

.

There exists c > 0 such that supβ∈B(β0S,cdn) η(β) = o(1).

These conditions are standard. The concavity of Pn(·) implies that η(β) ≥ 0 for all β ∈ ℝs. It is straightforward to check that, with properly chosen tuning parameters, the Lq penalty (for q ≤ 1), hard-thresholding (Antoniadis 1996), SCAD (Fan and Li 2001), and MCP (Zhang 2010) all satisfy these conditions. As thoroughly discussed by Fan and Li (2001), a penalty function that is desirable for achieving the oracle properties should result in an estimator with three properties: unbiasedness, sparsity and continuity. These properties motivate the need for a folded concave penalty.
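The first two conditions of Assumption 4.1 (Pn(0) = 0, and Pn concave and non-decreasing) can be sanity-checked on a grid. The sketch below does so for MCP (Zhang 2010) and for the Lq penalty with q = 1/2, and shows that a convex penalty fails; the grid check is a heuristic, not a proof, and γ = 3, λ = 0.5 are arbitrary illustrative values.

```python
def mcp(t, lam, gamma=3.0):
    """Minimax concave penalty (Zhang 2010), evaluated at |t|."""
    t = abs(t)
    if t <= gamma * lam:
        return lam * t - t * t / (2.0 * gamma)
    return gamma * lam * lam / 2.0


def looks_folded_concave(pen, grid, tol=1e-12):
    """Grid check of P(0) = 0, monotonicity and concavity (evenly spaced grid required)."""
    vals = [pen(t) for t in grid]
    zero_ok = pen(0.0) == 0.0
    nondecreasing = all(b >= a - tol for a, b in zip(vals, vals[1:]))
    concave = all(vals[k - 1] - 2.0 * vals[k] + vals[k + 1] <= tol
                  for k in range(1, len(vals) - 1))
    return zero_ok and nondecreasing and concave


grid = [k / 100.0 for k in range(0, 1001)]
print(looks_folded_concave(lambda t: mcp(t, 0.5), grid))  # MCP
print(looks_folded_concave(lambda t: t ** 0.5, grid))     # L_q with q = 1/2
print(looks_folded_concave(lambda t: t * t, grid))        # convex ridge penalty
```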

The following assumptions are further imposed. Recall that for j ≤ p, Fj = fj (W) and Hj = hj (W).

Assumption 4.2

(i) The true parameter β0 is uniquely identified by E(g(Y, XTβ0)|W) = 0.

(ii) (Y1, X1), …, (Yn, Xn) are independent and identically distributed.

Remark 4.1

Condition (i) above is standard in the GMM literature (e.g., Newey 1993, Donald et al. 2009, Kitamura et al. 2004). This condition is closely related to the “over-identifying restriction”, and ensures that we can always find two sets of transformations F and H such that the equations in (3.2) are uniquely satisfied by βS = β0S. In linear models, this is a reasonable assumption, as discussed in Section 3.2. In nonlinear models, however, requiring identifiability from either E(g(Y, XTβ0)|W) = 0 or (3.2) may be restrictive. Indeed, Dominguez and Lobato (2004) showed that the identification condition in (i) may depend on the marginal distributions of W. Furthermore, in nonparametric regression problems as in Bickel et al. (2009) and Ai and Chen (2003), sufficient conditions for Condition (i) are even more complicated: they also depend on the conditional distribution of X|W and are known to be statistically untestable (see Newey and Powell 2003, Canay et al. 2013).

Assumption 4.3

There exist b1, b2, b3 > 0 and r1, r2, r3 > 0 such that for any t > 0,

(i) P(|g(Y, XTβ0)| > t) ≤ exp(−(t/b1)^r1);

(ii) maxl≤p P(|Fl| > t) ≤ exp(−(t/b2)^r2) and maxl≤p P(|Hl| > t) ≤ exp(−(t/b3)^r3);

(iii) minj∈S var(g(Y, XTβ0)Fj) and minj∈S var(g(Y, XTβ0)Hj) are bounded away from zero;

(iv) var(Fj) and var(Hj) are bounded away from both zero and infinity uniformly in j = 1, …, p and p ≥ 1.

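We will assume g(·,·) to be twice differentiable, and in the following assumptions, let $m(t_1, t_2) = \partial g(t_1, t_2)/\partial t_2$ and $q(t_1, t_2) = \partial^2 g(t_1, t_2)/\partial t_2^2$.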

Assumption 4.4

(i) g(·,·) is twice differentiable.

(ii) sup_{t1,t2} |m(t1, t2)| < ∞ and sup_{t1,t2} |q(t1, t2)| < ∞.

It is straightforward to verify Assumption 4.4 for linear, logistic and probit regression models.
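Taking m and q to be the first and second partial derivatives of g with respect to its second argument (our reading of the notation above), the linear model gives m = −1 and q = 0, while the logit and probit models give bounded functions. The sketch below evaluates them on a grid; the closed-form suprema 1/4 and 1/(6√3) for the logit, and 1/√(2π) and ϕ(1) for the probit, are what the grid maxima should approach.

```python
import math


def logistic(t):
    """Numerically stable exp(t) / (1 + exp(t))."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def std_normal_pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


# For g(t1, t2) = t1 - mu(t2): m = -mu'(t2), q = -mu''(t2); neither depends on t1.
def m_logit(t2):
    p = logistic(t2)
    return -p * (1.0 - p)


def q_logit(t2):
    p = logistic(t2)
    return -p * (1.0 - p) * (1.0 - 2.0 * p)


def m_probit(t2):
    return -std_normal_pdf(t2)


def q_probit(t2):
    return t2 * std_normal_pdf(t2)


grid = [k / 100.0 for k in range(-1000, 1001)]
sups = {name: max(abs(fn(t)) for t in grid)
        for name, fn in [("m_logit", m_logit), ("q_logit", q_logit),
                         ("m_probit", m_probit), ("q_probit", q_probit)]}
print(sups)
```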

Assumption 4.5

There exist C1 > 0 and C2 > 0 such that

These conditions require that the instrument VS not be weak, that is, VS should not be weakly correlated with the important regressors. In the generalized linear model, Assumption 4.5 is satisfied if proper conditions on the design matrices are imposed. For example, in the linear regression model and probit model, we assume the eigenvalues of and are bounded away from both zero and infinity respectively, where ϕ(·) is the standard normal density function. Conditions in the same spirit are also assumed in, e.g., Bradic et al. (2011) and Fan and Lv (2011).

Define

| (4.2) |

Assumption 4.6

For some c > 0, λmin(ϒ) > c.

, , and .

and sup‖β − β0S ‖≤dn/4η(β)=o((s log p)−1/2).

.

This assumption imposes a further condition jointly on the penalty, the strength of the minimal signal and the number of important regressors. Condition (i) is needed for the asymptotic normality of the estimated nonzero coefficients. When either SCAD or MCP is used as the penalty function with a tuning parameter λn, and when λn = o(dn). Thus Conditions (ii)-(iv) in the assumption are satisfied as long as . This requires the signal dn be strong and s be small compared to n. Such a condition is needed to achieve the variable selection consistency.

Under the foregoing regularity conditions, we can show the oracle property of a local minimizer of QFGMM (3.4).

Theorem 4.1

Suppose s³ log p = o(n). Under Assumptions 4.1-4.6, there exists a local minimizer $\hat\beta = (\hat\beta_S^T, \hat\beta_N^T)^T$ of QFGMM(β), with β̂S and β̂N being sub-vectors of β̂ whose coordinates are in S and Sᶜ respectively, such that:

-

for any unit vector α ∈ ℝs, ‖α‖ = 1, where ,

-

In addition, the local minimizer β̂ is strict with probability at least 1 – δ for an arbitrarily small δ > 0 and all large n.

-

Let Ŝ = {j ≤ p : β̂j ≠ 0}. Then

$$\lim_{n\to\infty} P(\hat S = S) = 1.$$

Remark 4.2

As was shown in an earlier version of this paper (Fan and Liao 2012), when it is known that E[g(Y, XTβ0)|XS] = 0 but likely E[g(Y, XTβ0)|X] ≠ 0, we can take V = (FT, HT)T to be transformations of X that satisfy Assumptions 4.3-4.6. In this way, we do not need an extra instrumental variable W, and Theorem 4.1 still goes through, while traditional methods (e.g., penalized least squares in the linear model) can still fail, as shown by Theorem 2.2. In the high-dimensional linear model, compared to the classical assumption E(ε|X) = 0, our condition E(ε|XS) = 0 is easier to validate because XS is a low-dimensional vector.

Remark 4.3

We now explain our required lower bound on the signal . When a penalized regression is used, which takes the form , it is required that if Ln (β) is differentiable, . This often leads to a requirement of the lower bound of dn. Therefore, such a lower bound of dn depends on the choice of both the loss function Ln(β) and the penalty. For instance, in the linear model when least squares with a SCAD penalty is employed, this condition is equivalent to . It is also known that the adaptive lasso penalty requires the minimal signal to be significantly larger than (Huang, Ma and Zhang 2008). In our framework, the requirement arises from the use of the new FGMM loss function. Such a condition is stronger than that of the least squares loss function, which is the price paid to achieve variable selection consistency in the presence of endogeneity. This condition is still easy to satisfy as long as s grows slowly with n.

Remark 4.4

Similar to the “irrepresentable condition” for the Lasso, the FGMM requires that important and unimportant explanatory variables not be strongly correlated. This is fulfilled by Assumption 4.6(iv). For instance, in the linear model where VS contains XS, as in our earlier version, this condition implies . Strong correlation between XS and XN is also ruled out by the identifiability condition, Assumption 4.2. To illustrate the idea, consider a case of perfect linear correlation: XSTα = XNTδ for some (α, δ) with δ ≠ 0. Then XTβ0 = XSTβ0S = XST(β0S − α) + XNTδ. As a result, the FGMM can be variable selection inconsistent, because β0 and (β0S − α, δ) are observationally equivalent, violating Assumption 4.2.
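A quick numerical check of the observational-equivalence argument, assuming the perfect linear correlation takes the form XSTα = XNTδ. With the hypothetical values below, where this relation holds exactly by construction, β0 and (β0S − α, δ) produce identical linear indices, so no criterion built on XTβ can tell them apart. All numbers are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, s = 500, 3
X_S = rng.standard_normal((n, s))          # important regressors
alpha = np.array([1.0, -0.5, 0.0])         # hypothetical alpha
delta = 2.0                                # hypothetical delta, nonzero
X_N = (X_S @ alpha / delta)[:, None]       # one unimportant regressor: X_S' alpha = X_N * delta
beta0_S = np.array([1.0, 2.0, -1.0])

index_true = X_S @ beta0_S                 # X' beta0, since beta0_N = 0
beta_alt = np.concatenate([beta0_S - alpha, [delta]])
index_alt = np.hstack([X_S, X_N]) @ beta_alt

# The largest discrepancy is at floating-point rounding level.
print(np.max(np.abs(index_true - index_alt)))
```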

5. Global minimization

With the over-identification condition, we can show that the local minimizer in Theorem 4.1 is nearly global. To this end, define an l∞ ball centered at β0 with radius δ:

Assumption 5.1 (over-identification)

For any δ > 0, there is γ > 0 such that

This high-level assumption is hard to avoid in high-dimensional problems. It is the empirical counterpart of (3.8). In classical low-dimensional regression models, this assumption has often been imposed in the econometric literature, e.g., Andrews (1999), Chernozhukov and Hong (2003), among many others. Let us illustrate it by the following example.

Example 5.1

Consider a linear regression model of low dimensions: , which implies and where p is either bounded or slowly diverging with n. Now consider the following problem:

Once for all index sets S̃ ≠ S, the objective function is then minimized to zero uniquely by β = β0. Moreover, for any δ > 0 there is γ > 0 such that when β ∉ Θδ ∪ {0}, we have G(β) > γ > 0. Assumption 5.1 then follows from the uniform weak law of large numbers: with probability approaching one, uniformly in β ∉ Θδ ∪ {0},

When p is much larger than n, the accumulation of fluctuations from applying the law of large numbers is no longer negligible. It is then challenging to show that ‖E[g(Yi, XTβ)V(β)]‖ is close to uniformly for high-dimensional β's, which is why we impose Assumption 5.1 on the empirical counterpart instead of the population.

Theorem 5.1

Assume . Under Assumption 5.1 and those of Theorem 4.1, the local minimizer β̂ in Theorem 4.1 satisfies: for any δ > 0, there exists γ > 0,

The above theorem demonstrates that β̂ is a nearly global minimizer. For SCAD and MCP penalties, the condition holds when λn = o(s−1), which is satisfied if s is not large.

Remark 5.1

We exclude the set {0} from the searching area in both Assumption 5.1 and Theorem 5.1 because we do not include the intercept in the model so X(0) = 0 by definition, and hence QFGMM(0) = 0. It is reasonable to believe that zero is not close to the true parameter, since we assume there should be at least one important regressor in the model. On the other hand, if we always keep X1 = 1 to allow for an intercept, there is no need to remove {0} in either Assumption 5.1 or the above theorem. Such a small change is not essential.

Remark 5.2

Assumption 5.1 can be slightly relaxed so that γ is allowed to decay slowly at a certain rate. The lower bound of such a rate is given by Lemma D.2 in the appendix. Moreover, Theorem 5.1 is based on an over-identification assumption, which is essentially different from the global minimization theory in the recent high-dimensional literature, e.g., Zhang (2010), Bühlmann and van de Geer (2011, ch 9), and Zhang and Zhang (2012).

6. Semi-parametric efficiency

The results in Section 4 demonstrate that the choice of the basis functions {fj, hj}j≤p forming F and H influences the asymptotic variance of the estimator. The resulting estimator is in general not efficient. To obtain a semi-parametric efficient estimator, one can employ a second-step post-FGMM procedure. In the linear regression, a similar idea has been used by Belloni and Chernozhukov (2013).

After achieving the oracle properties in Theorem 4.1, we have identified the important regressors with probability approaching one, that is,

This reduces the problem to a low-dimensional one. For simplicity, we restrict attention to s = O(1). The problem of constructing a semi-parametric efficient estimator (in the sense of Newey (1990) and Bickel et al. (1998)) in a low-dimensional model

has been well studied in the literature (see, for example, Chamberlain (1987), Newey (1993)). The optimal instrument that leads to the semi-parametric efficient estimation of β0S is given by D(W)σ(W)−2, where

Newey (1993) showed that the semi-parametric efficient estimator of β0S can be obtained by GMM with the moment condition:

| (6.1) |

In the post-FGMM procedure, we replace XS with the selected X̂S obtained from the first-step penalized FGMM. Suppose there exist consistent estimators D̂(W) and σ̂(W)2 of D(W) and σ(W)2. Let us assume the true parameter satisfies ‖β0S‖∞ < M for a large constant M > 0. We then estimate β0S by solving

| (6.2) |

on {βS : ‖βS‖∞ ≤ M}, and the solution is assumed to be unique.

Assumption 6.1

-

There exist C1 > 0 and C2 > 0 so that

In addition, there exist σ̂(w)2 and D̂ (w) such that

where χ is the support of W.

The consistent estimators for D(w) and σ(w)2 can be obtained in many ways. We present a few examples below.

Example 6.1 (Homoskedasticity)

Suppose for some nonlinear function h(·). Then σ(w)2 = E(ε2|W = w) = σ2, which does not depend on w under homoskedasticity. In this case, equations (6.1) and (6.2) do not depend on σ2.

Example 6.2 (Simultaneous linear equations)

In the simultaneous linear equation model, XS linearly depends on W as:

for some coefficient matrix Π, where u is independent of W. Then D(w) = E(XS|W = w) = Πw. Let X̂ = (X̂S1, …, X̂Sn) and W̄ = (W1, …, Wn). We then estimate D(w) by Π̂w, where Π̂ = (X̂W̄T)(W̄W̄T)−1.
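The two steps of Example 6.2 can be sketched in Python under simplifying assumptions: a linear model with g(Y, XSTβS) = Y − XSTβS, homoskedastic errors (so σ̂(W)2 drops out, as in Example 6.1), and simulated data with a hypothetical Π. The first stage computes Π̂ = (X̂W̄T)(W̄W̄T)−1; the second solves the sample estimating equation Σi D̂(Wi)(Yi − X̂SiTβS) = 0 with D̂(w) = Π̂w. In this special case the solution coincides with two-stage least squares. The naive OLS fit is included only to show the endogeneity bias.

```python
import numpy as np

def estimate_pi(X_hat, W_bar):
    """Pi_hat = (X_hat W_bar^T)(W_bar W_bar^T)^{-1}; X_hat is s x n, W_bar is d x n."""
    # Solving (W W^T) M = W X^T gives M = Pi_hat^T without forming an explicit inverse.
    return np.linalg.solve(W_bar @ W_bar.T, W_bar @ X_hat.T).T

def post_fgmm_linear(Y, X_hat, W_bar):
    """Solve sum_i D_hat(W_i) (Y_i - X_hat_i^T beta) = 0 with D_hat(w) = Pi_hat w."""
    Pi_hat = estimate_pi(X_hat, W_bar)
    D_hat = Pi_hat @ W_bar                        # s x n estimated optimal instruments
    beta = np.linalg.solve(D_hat @ X_hat.T, D_hat @ Y)
    return beta, Pi_hat

# Hypothetical simulated design: s = 2 selected regressors, d = 3 instruments.
rng = np.random.default_rng(1)
n = 20_000
Pi = np.array([[1.0, 0.5, 0.0], [0.0, 1.0, -0.5]])
W_bar = rng.standard_normal((3, n))
u = rng.standard_normal((2, n))
eps = 0.8 * u[0] + 0.6 * rng.standard_normal(n)   # X_S1 is endogenous through u[0]
X_hat = Pi @ W_bar + u
beta0 = np.array([1.0, -1.0])
Y = beta0 @ X_hat + eps

beta_post, Pi_hat = post_fgmm_linear(Y, X_hat, W_bar)
beta_ols = np.linalg.solve(X_hat @ X_hat.T, X_hat @ Y)   # biased under endogeneity
print(np.round(beta_post, 3), np.round(beta_ols, 3))
```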

Example 6.3 (Semi-nonparametric estimation)

We can also assume a semi-parametric structure on the functional forms of D(w) and σ(w)2:

where D(·;θ1) and σ2(·;θ2) are semi-parametric functions parameterized by θ1 and θ2. Then D(w) and σ(w)2 are estimated using a standard semi-parametric method. More generally, we can proceed by a pure nonparametric approach via respectively regressing and on W, provided that the dimension of W is either bounded or growing slowly with n (see Fan and Yao, 1998).

Theorem 6.1

Suppose s = O(1), Assumption 6.1 and those of Theorem 4.1 hold. Then

and [E(σ(W)−2D(W)D(W)T)]–1 is the semi-parametric efficiency bound in Chamberlain (1987).

7. Implementation

We now discuss the implementation for numerically minimizing the penalized FGMM criterion function.

7.1. Smoothed FGMM

As discussed previously, including an indicator function helps achieve dimension reduction. However, it also makes LFGMM non-smooth. Hence, minimizing QFGMM(β) = LFGMM(β) + Penalty is generally NP-hard.

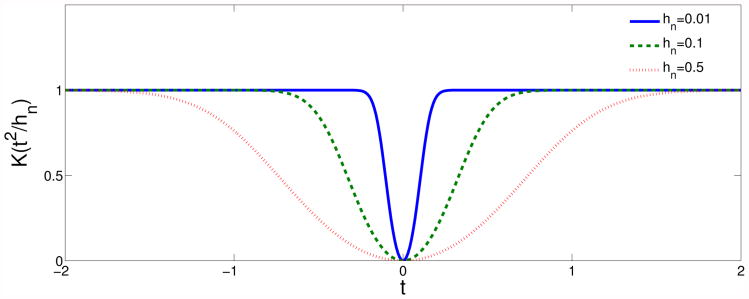

We overcome this discontinuity problem by applying the smoothing technique as in Horowitz (1992) and Bondell and Reich (2012), which approximates the indicator function by a smooth kernel K : (−∞,∞) → ℝ that satisfies

0 ≤ K(t) < M for some finite M and all t ≥ 0.

K(0) = 0 and lim|t|→∞ K(t) = 1.

lim sup|t|→∞ |K′(t)t| = 0, and lim sup|t|→∞ |K″(t)t2| < ∞.

We can set , where F(t) is a twice differentiable cumulative distribution function. For a pre-determined small number hn, LFGMM is approximated by a continuous function LK(β) with the indicator replaced by . The objective function of the smoothed FGMM is given by

As hn→0+, converges to I(βj≠0), and hence LK(β) is simply a smoothed version of LFGMM (β). As an illustration, Figure 1 plots such a function.
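The Python sketch below shows one kernel that satisfies the three conditions listed above. It assumes K(t) = (F(t) − F(0))/(1 − F(0)) with F the logistic CDF (the CDF used in the simulations of Section 8), and that the indicator I(βj ≠ 0) is replaced by K(βj2/hn). The exact expression used in the paper is not reproduced in this excerpt, so treat both choices as assumptions. With the logistic F, this K equals tanh(t/2).

```python
import numpy as np

def logistic_cdf(t):
    return 1.0 / (1.0 + np.exp(-t))

def kernel_K(t):
    """K(t) = (F(t) - F(0)) / (1 - F(0)) with logistic F; this equals tanh(t/2)."""
    F0 = logistic_cdf(0.0)
    return (logistic_cdf(t) - F0) / (1.0 - F0)

def smooth_indicator(beta, h):
    """Smoothed I(beta != 0) = K(beta**2 / h); it tends to the indicator as h -> 0+."""
    return kernel_K(np.asarray(beta, dtype=float) ** 2 / h)

# Shrinking h sharpens the approximation around zero.
for h in (1.0, 0.1, 0.01):
    print(h, np.round(smooth_indicator([0.0, 0.05, 0.3, 1.0], h), 4))
```

Squaring βj before dividing by hn keeps the argument of K non-negative, so only the t ≥ 0 part of the conditions on K matters.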

Fig 1. as an approximation to I(t≠0).

Smoothing the indicator function is common in the literature on high-dimensional variable selection. Recently, Bondell and Reich (2012) approximate I(t≠0) by to obtain a tractable non-convex optimization problem. Intuitively, we expect the smoothed FGMM also to achieve variable selection consistency. Indeed, the following theorem formally proves this claim.

Theorem 7.1

Suppose for a small constant γ ∈ (0, 1). Under the assumptions of Theorem 4.1 there exists a local minimizer β̂′ of the smoothed FGMM QK(β) such that, for ,

In addition, the local minimizer β̂′ is strict with probability at least 1 − δ for an arbitrarily small δ > 0 and all large n.

The asymptotic normality of the estimated nonzero coefficients can be established very similarly to that of Theorem 4.1, which is omitted for brevity.

7.2. Coordinate descent algorithm

We employ the iterative coordinate algorithm for the smoothed FGMM minimization, which has been used by Fu (1998), Daubechies et al. (2004), and Fan and Lv (2011), among others. The iterative coordinate algorithm minimizes one coordinate of β at a time, keeping the other coordinates fixed at their values obtained from previous steps, and successively updates each coordinate. The penalty function can be approximated by local linear approximation as in Zou and Li (2008).

Specifically, we run the regular penalized least squares to obtain an initial value, from which we start the iterative coordinate algorithm for the smoothed FGMM. Suppose β(l) is obtained at step l. For k ∈ {1, …, p}, denote by a (p − 1)-dimensional vector consisting of all the components of β(l) but . Write as the p-dimensional vector that replaces with t. The minimization with respect to t while keeping fixed is then a univariate minimization problem, which is not difficult to implement. To speed up convergence, we can also use the second-order approximation of along the kth component at :
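Local linear approximation replaces the concave penalty by its tangent line at the current iterate, Pn(|t|) ≈ Pn(|t0|) + P′n(|t0|)(|t| − |t0|), which turns each coordinate update into a weighted-ℓ1 problem. Because SCAD is concave on [0, ∞), this tangent line majorizes the penalty. The Python sketch below (hypothetical helper names, a = 3.7 assumed) checks this numerically.

```python
import numpy as np

def scad_penalty(t, lam, a=3.7):
    t = np.abs(np.asarray(t, dtype=float))
    quad = (2 * a * lam * t - t**2 - lam**2) / (2 * (a - 1))
    return np.where(t <= lam, lam * t, np.where(t <= a * lam, quad, lam**2 * (a + 1) / 2))

def scad_derivative(t, lam, a=3.7):
    t = np.abs(np.asarray(t, dtype=float))
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))

def lla_scad(t, t0, lam, a=3.7):
    """Tangent-line (LLA) approximation of p_lam(|t|) around |t0| (Zou and Li, 2008)."""
    return scad_penalty(t0, lam, a) + scad_derivative(t0, lam, a) * (np.abs(t) - np.abs(t0))

grid = np.linspace(-3.0, 3.0, 601)
gap = lla_scad(grid, t0=1.0, lam=0.5) - scad_penalty(grid, lam=0.5)
print(gap.min())  # non-negative up to rounding: the tangent line lies above SCAD
```

The weight of the resulting ℓ1 term is P′n(|t0|), which is zero once |t0| > aλ; large coefficients are then left unpenalized in the next update.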

| (7.1) |

where is a quadratic function of t. We solve for

| (7.2) |

which admits an explicit analytical solution, and keep the remaining components at step l. Accept t* as an updated kth component of β(l) only if strictly decreases. The coordinate descent algorithm runs as follows.

-

Step 1. Set l = 1. Initialize β(1) = β̂*, where β̂* solves

using the coordinate descent algorithm as in Fan and Lv (2011).

-

Step 2. Successively for k = 1, …, p, let t* be the minimizer of

Update as t* if

Otherwise set . Increase l by one when k = p.

-

Step 3. Repeat Step 2 until |QK(β(l)) − QK(β(l+1))| < ε, for a pre-determined small ε.
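Steps 2 and 3 can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation. A least-squares loss on simulated data stands in for the smoothed FGMM loss LK. The coordinate-wise second-order expansion uses the exact gradient and diagonal Hessian of that toy loss. The SCAD penalty is handled by local linear approximation, so each update is a soft-threshold. The sketch starts from zero rather than from a penalized least-squares fit. As in Step 2, a proposal is accepted only if the full objective strictly decreases, which makes the recorded objective values non-increasing.

```python
import numpy as np

def scad_penalty(t, lam, a=3.7):
    t = np.abs(np.asarray(t, dtype=float))
    quad = (2 * a * lam * t - t**2 - lam**2) / (2 * (a - 1))
    return np.where(t <= lam, lam * t, np.where(t <= a * lam, quad, lam**2 * (a + 1) / 2))

def scad_derivative(t, lam, a=3.7):
    t = np.abs(np.asarray(t, dtype=float))
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))

def soft_threshold(z, w):
    """argmin_t 0.5*(t - z)**2 + w*|t| for w >= 0."""
    return float(np.sign(z)) * max(abs(z) - w, 0.0)

def coordinate_descent(loss, grad_k, hess_kk, beta_init, lam, a=3.7, eps=1e-10, max_sweeps=500):
    def Q(b):  # full objective: loss plus SCAD penalty
        return loss(b) + float(scad_penalty(b, lam, a).sum())

    beta = np.array(beta_init, dtype=float)
    history = [Q(beta)]
    for _ in range(max_sweeps):
        for k in range(beta.size):
            g, h = grad_k(beta, k), hess_kk(beta, k)
            if h <= 0:
                continue  # quadratic model not convex along k: keep beta_k
            z = beta[k] - g / h                          # minimizer of the quadratic part
            w = float(scad_derivative(beta[k], lam, a))  # LLA weight at current beta_k
            candidate = beta.copy()
            candidate[k] = soft_threshold(z, w / h)      # argmin of (h/2)(t - z)^2 + w|t|
            if Q(candidate) < Q(beta):                   # accept only a strict decrease
                beta = candidate
        history.append(Q(beta))
        if abs(history[-2] - history[-1]) < eps:         # stopping rule of Step 3
            break
    return beta, history

# Hypothetical toy problem: least squares stands in for the smoothed FGMM loss L_K.
rng = np.random.default_rng(3)
n, p = 200, 10
X = rng.standard_normal((n, p))
beta_true = np.zeros(p)
beta_true[:2] = [3.0, -2.0]
y = X @ beta_true + 0.5 * rng.standard_normal(n)

def loss(b):
    return 0.5 * np.mean((y - X @ b) ** 2)

def grad_k(b, k):
    return -X[:, k] @ (y - X @ b) / n

def hess_kk(b, k):
    return X[:, k] @ X[:, k] / n

beta_hat, history = coordinate_descent(loss, grad_k, hess_kk, np.zeros(p), lam=0.3)
print(np.round(beta_hat, 3))
```

Because the toy loss is exactly quadratic along each coordinate, the expansion is exact here. For the smoothed FGMM loss it is only an approximation, which is why the acceptance check matters.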

When the second order approximation (7.1) is combined with SCAD in Step 2, the local linear approximation of SCAD is not needed. As demonstrated in Fan and Li (2001), when Pn(t) is defined using SCAD, the penalized optimization of the form has an analytical solution.
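For the one-dimensional problem min_t ½(t − z)2 + Pn(|t|) with the SCAD penalty, Fan and Li (2001) give the closed-form thresholding rule sketched below in Python (a = 3.7 assumed; the function name is ours). The sketch assumes the quadratic in (7.2) has been rescaled to unit curvature. With a general curvature, the thresholds change.

```python
import numpy as np

def scad_threshold(z, lam, a=3.7):
    """argmin_t 0.5*(t - z)**2 + p_lam(|t|) for the SCAD penalty (requires a > 2)."""
    z = np.asarray(z, dtype=float)
    abs_z = np.abs(z)
    soft = np.sign(z) * np.maximum(abs_z - lam, 0.0)          # |z| <= 2*lam: soft threshold
    mid = ((a - 1) * z - np.sign(z) * a * lam) / (a - 2)      # 2*lam < |z| <= a*lam
    return np.where(abs_z <= 2 * lam, soft, np.where(abs_z <= a * lam, mid, z))  # else: no shrinkage

print(scad_threshold([0.5, 1.5, 3.0, 5.0, -1.5], lam=1.0))
```

The three branches join continuously at |z| = 2λ and |z| = aλ, and inputs beyond aλ are returned unchanged, which is the source of SCAD's near-unbiasedness for large coefficients.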

We can show that the evaluated objective values {QK(β(l))}l≥1 form a bounded Cauchy sequence. Hence, for an arbitrarily small ε > 0, the above algorithm stops after finitely many steps. Let M(β) denote the map defined by the algorithm from β(l) to β(l+1). We define a stationary point of the function QK(β) to be any point β at which the gradient vector of QK(β) is zero. Similar to the local linear approximation of Zou and Li (2008), we have the following result regarding the property of the algorithm.

Theorem 7.2

The sequence {QK(β(l))}l≥1 is a bounded non-increasing Cauchy sequence. Hence, for an arbitrarily small ε > 0, the coordinate descent algorithm stops after finitely many iterations. In addition, if QK(β) = QK(M(β)) only for stationary points of QK(·) and if β* is a limit point of the sequence {β(l)}l≥1, then β* is a stationary point of QK(β).

Theoretical analysis of non-convex regularization in the recent decade has focused on numerical procedures that can find local solutions (Hunter and Li 2005, Kim et al. 2008, Breheny and Huang 2011). Proving that the algorithm achieves a solution that possesses the desired oracle properties is technically difficult. Our simulation results demonstrate that the proposed algorithm indeed reaches the desired sparse estimator. Further work along the lines of Zhang and Zhang (2012) and Loh and Wainwright (2013) is needed to study the statistical properties of the solution to non-convex optimization problems, which we leave for future research.

8. Monte Carlo Experiments

8.1. Endogeneity in both important and unimportant regressors

To test the performance of FGMM for variable selection, we simulate from a linear model:

with p = 50 or 200. Regressors are classified as either exogenous (independent of ε) or endogenous. For each component of X, we write if Xj is endogenous, and if Xj is exogenous, and and are generated according to:

where {ε, u1, …, up} are independent N(0, 1). Here F = (F1, …, Fp)T and H = (H1, …, Hp)T are the transformations (to be specified later) of a three-dimensional instrumental variable W = (W1, W2, W3)T ∼ N3(0, I3). There are m endogenous variables (X1, X2, X3, X6, …, X2+m)T, with m = 10 or 50. Hence three of the important regressors (X1, X2, X3) are endogenous while two are exogenous (X4, X5).

We apply the Fourier basis as the working instruments:

The data contain n = 100 i.i.d. copies of (Y, X, F, H). PLS and FGMM are carried out separately for comparison. In our simulation we use the SCAD penalty with pre-determined values of the tuning parameter λ. The logistic cumulative distribution function with h = 0.1 is used for smoothing:

There are 100 replications per experiment. Four performance measures are used to compare the methods. The first measure is the mean standard error (MSES) of the important regressors, determined by the average of ‖β̂S − β0S‖ over the 100 replications, where S = {1, …, 5}. The second measure is the average of the MSE of unimportant regressors, denoted by MSEN. The third measure is the number of correctly selected non-zero coefficients, that is, the true positives (TP); finally, the fourth measure is the number of incorrectly selected coefficients, the false positives (FP). In addition, the standard error of each measure over the 100 replications is also reported. In each simulation, we initialize β(0) = (0, …, 0)T and run penalized least squares (SCAD(λ)) with λ = 0.5 to obtain the initial value for the FGMM procedure. The results of the simulation are summarized in Table 2, which compares the performance measures of PLS and FGMM.
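The four performance measures can be computed from a stack of replicated estimates as in the Python sketch below (function and argument names are ours). Indices in S are zero-based, so S = {1, …, 5} corresponds to [0, 1, 2, 3, 4]. The spread is reported as the sample standard deviation across replications (ddof = 1). We assume this is what the parenthesized standard errors in the tables denote: it reproduces the 0.171 reported next to TP = 4.97 when 3 of 100 replications select 4 variables.

```python
import numpy as np

def performance_measures(beta_hats, beta0, S):
    """Mean and sample s.d. (ddof=1) across replications of MSES, MSEN, TP and FP.

    beta_hats: (replications x p) array of estimates; beta0: true vector of length p;
    S: zero-based indices of the important regressors.
    """
    beta_hats = np.atleast_2d(np.asarray(beta_hats, dtype=float))
    beta0 = np.asarray(beta0, dtype=float)
    in_S = np.zeros(beta0.size, dtype=bool)
    in_S[list(S)] = True
    err = beta_hats - beta0
    selected = beta_hats != 0
    per_rep = {
        "MSES": np.linalg.norm(err[:, in_S], axis=1),   # ||beta_hat_S - beta0_S||
        "MSEN": np.linalg.norm(err[:, ~in_S], axis=1),  # ||beta_hat_N - beta0_N||
        "TP": selected[:, in_S].sum(axis=1),            # important regressors selected
        "FP": selected[:, ~in_S].sum(axis=1),           # unimportant regressors selected
    }
    return {name: (vals.mean(), vals.std(ddof=1)) for name, vals in per_rep.items()}

# Two hypothetical replications with p = 4 and S = {0, 1}.
res = performance_measures([[1.0, 2.0, 0.0, 0.5], [0.0, 2.0, 0.0, 0.0]],
                           [1.0, 2.0, 0.0, 0.0], [0, 1])
print(res)
```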

Table 2. Endogeneity in both important and unimportant regressors, n = 100.

| | PLS, λ = 1 | PLS, λ = 3 | PLS, λ = 4 | FGMM, λ = 0.08 | FGMM, λ = 0.1 | FGMM, λ = 0.3 | post-FGMM |
|---|---|---|---|---|---|---|---|
| p = 50, m = 10 | | | | | | | |
| MSES | 0.190 (0.102) | 0.525 (0.283) | 0.491 (0.328) | 0.106 (0.051) | 0.097 (0.043) | 0.102 (0.037) | 0.088 (0.026) |
| MSEN | 0.171 (0.059) | 0.240 (0.149) | 0.183 (0.149) | 0.090 (0.030) | 0.085 (0.035) | 0.048 (0.034) | |
| TP | 5 (0) | 5 (0) | 4.97 (0.171) | 5 (0) | 5 (0) | 5 (0) | |
| FP | 27.69 (6.260) | 14.63 (5.251) | 10.37 (4.539) | 3.76 (1.093) | 3.5 (1.193) | 1.63 (1.070) | |
| p = 200, m = 50 | | | | | | | |
| MSES | 0.831 (0.787) | 0.966 (0.595) | 1.107 (0.678) | 0.111 (0.048) | 0.104 (0.041) | 0.231 (0.431) | 0.092 (0.032) |
| MSEN | 1.286 (1.333) | 0.936 (0.799) | 0.828 (0.656) | 0.062 (0.018) | 0.063 (0.021) | 0.053 (0.075) | |
| TP | 5 (0) | 4.9 (0.333) | 4.73 (0.468) | 5 (0) | 5 (0) | 4.94 (0.246) | |
| FP | 86.760 (27.41) | 42.440 (15.08) | 35.070 (13.84) | 4.726 (1.358) | 4.276 (1.251) | 2.897 (2.093) | |

m is the number of endogenous regressors. MSES is the average of ‖β̂S − β0S‖ for nonzero coefficients. MSEN is the average of ‖β̂N − β0N‖ for zero coefficients. TP is the number of correctly selected variables, and FP is the number of incorrectly selected variables. The standard error of each measure is reported in parentheses.

PLS has non-negligible false positives (FP). The average FP decreases as the penalty parameter increases, but at the cost of a relatively large MSES for the estimated nonzero coefficients, and the FP count remains large compared to that of FGMM. PLS also misses some important regressors for larger λ. It is worth noting that the larger MSES for PLS is due to the bias of least squares estimation in the presence of endogeneity. In contrast, FGMM performs well both in selecting the important regressors and in correctly eliminating the unimportant ones. The average MSES of FGMM is significantly smaller than that of PLS, since instrumental variable estimation is applied instead. In addition, after the regressors are selected by FGMM, the post-FGMM step further reduces the mean squared error of the estimators.

8.2. Endogeneity only in unimportant regressors

Consider a similar linear model in which only the unimportant regressors are endogenous and all the important regressors are exogenous, as designed in Section 2.2, so the true model is the usual one without endogeneity. In this case, we apply (F, H) = (X, X2) as the working instruments for FGMM with the SCAD(λ) penalty, which requires only the data X and Y = (Y1, …, Yn). We again compare the FGMM procedure with PLS. The results are reported in Table 3.

Table 3. Endogeneity only in unimportant regressors, n = 200.

| | PLS, λ = 0.1 | PLS, λ = 0.5 | PLS, λ = 1 | FGMM, λ = 0.05 | FGMM, λ = 0.1 | FGMM, λ = 0.2 |
|---|---|---|---|---|---|---|
| p = 50 | | | | | | |
| MSES | 0.133 (0.043) | 0.629 (0.301) | 1.417 (0.329) | 0.261 (0.094) | 0.184 (0.069) | 0.194 (0.076) |
| MSEN | 0.068 (0.016) | 0.072 (0.016) | 0.095 (0.019) | 0.001 (0.010) | 0 (0) | 0.001 (0.009) |
| TP | 5 (0) | 4.82 (0.385) | 3.63 (0.504) | 5 (0) | 5 (0) | 5 (0) |
| FP | 35.36 (3.045) | 8.84 (3.334) | 2.58 (1.557) | 0.08 (0.337) | 0 (0) | 0.02 (0.141) |
| p = 300 | | | | | | |
| MSES | 0.159 (0.054) | 0.650 (0.304) | 1.430 (0.310) | 0.274 (0.086) | 0.187 (0.102) | 0.193 (0.123) |
| MSEN | 0.107 (0.019) | 0.071 (0.023) | 0.086 (0.027) | 5 × 10−4 (0.006) | 0 (0) | 5 × 10−4 (0.005) |
| TP | 5 (0) | 4.82 (0.384) | 3.62 (0.487) | 5 (0) | 5 (0) | 4.99 (0.100) |
| FP | 210.47 (11.38) | 42.78 (11.773) | 7.94 (5.635) | 0.11 (0.37) | 0 (0) | 0.01 (0.10) |

Even though only the unimportant regressors are endogenous, PLS still does not seem to select the true model correctly. This illustrates the variable selection inconsistency of PLS even when the true model has no endogeneity. In contrast, the penalized FGMM still performs relatively well.

8.3. Weak minimal signals

To study the effect on variable selection when the strength of the minimal signal is weak, we run another set of simulations with the same data generating process as in Design 1 but we change β4 = −0.5 and β5 = 0.1, and keep all the remaining parameters the same as before. The minimal nonzero signal becomes |β5| = 0.1. Three of the important regressors are endogenous as in Design 1. Table 4 indicates that the minimal signal is so small that it is not easily distinguishable from the zero coefficients.

Table 4. FGMM for weak minimal signal β4 = −0.5, β5 = 0.1.

| | p = 50, m = 10, λ = 0.05 | p = 50, m = 10, λ = 0.1 | p = 50, m = 10, λ = 0.5 | p = 200, m = 50, λ = 0.05 | p = 200, m = 50, λ = 0.1 | p = 200, m = 50, λ = 0.5 |
|---|---|---|---|---|---|---|
| MSES | 0.128 (0.020) | 0.107 (0.000) | 0.118 (0.056) | 0.138 (0.061) | 0.125 (0.074) | 0.238 (0.154) |
| MSEN | 0.155 (0.054) | 0.097 (0.000) | 0.021 (0.033) | 0.134 (0.052) | 0.108 (0.043) | 0.084 (0.062) |
| TP | 4.12 (0.327) | 4 (0) | 4 (0) | 4.04 (0.281) | 3.98 (0.141) | 3.8 (0.402) |
| FP | 4.93 (1.578) | 5 (0) | 2.08 (0.367) | 4.72 (1.198) | 4.3 (0.948) | 1.95 (1.351) |

9. Conclusion and Discussion

Endogeneity can arise easily in the high-dimensional regression due to a large pool of regressors, which causes the inconsistency of the penalized least-squares methods and possible false scientific discoveries. Based on the over-identification assumption and valid instrumental variables, we propose to penalize an FGMM loss function. It is shown that FGMM possesses the oracle property, and the estimator is also a nearly global minimizer.

We would like to point out that this paper focuses on correctly specified sparse models, and the achieved results are “pointwise” for the true model. An important issue is the uniform inference where the sparse model may be locally misspecified. While the oracle property is of fundamental importance for high-dimensional methods in many scientific applications, it may not enable us to make valid inference about the coefficients uniformly across a large class of models (Leeb and Pötscher 2008, Belloni et al. 2013)5. Therefore, the “post-double-selection” method with imperfect model selection recently proposed by Belloni et al. (2013) is important for making uniform inference. Research along that line under high-dimensional endogeneity is important and we shall leave it for the future agenda.

Finally, as discussed in Bickel et al. (2009) and van de Geer (2008), high-dimensional regression problems can be thought of as an approximation to a nonparametric regression problem with a “dictionary” of functions or growing number of sieves. Then in the presence of endogenous regressors, model (3.1) is closely related to the non-parametric conditional moment restricted model considered by, e.g., Newey and Powell (2003), Ai and Chen (2003), and Chen and Pouzo (2008). While the penalization in the latter literature is similar to ours, it plays a different role and is introduced for different purposes. It will be interesting to find the underlying relationships between the two models.

Supplementary Material

Acknowledgments

We would like to thank the anonymous reviewers, Associate Editor and Editor for their helpful comments that helped to improve the paper.

This project was supported by the National Science Foundation grant DMS-1206464 and the National Institute of General Medical Sciences of the National Institutes of Health through Grant Numbers R01GM100474 and R01-GM072611. The bulk of the research was carried out while Yuan Liao was a postdoctoral fellow at Princeton University.

Appendix A: Proofs for Section 2

Throughout the Appendix, C will denote a generic positive constant that may be different in different uses. Let sgn(·) denote the sign function.

A.1. Proof of Theorem 2.1

Proof. When β̂ is a local minimizer of Qn(β), by the Karush-Kuhn-Tucker (KKT) condition, ∀l ≤ p,

where if β̂l ≠ 0; if β̂l = 0, and we denote . By the monotonicity of , we have . By Taylor expansion and the Cauchy-Schwarz inequality, there is β̃ on the segment joining β̂ and β0 so that, on the event β̂N = 0, (β̂j − β0j = 0 for all j ∉ S)

The Cauchy-Schwarz inequality then implies that maxl≤p |∂Ln(β̂)/∂βl − ∂Ln(β0)/∂βl| is bounded by

By our assumption, √s‖β̂S − β0S‖ = op(1). Because P(β̂N = 0) → 1,

| (A.1) |

This yields that ∂Ln(β0)/∂βl = op(1).

A.2. Proof of Theorem 2.2

Proof. Let be the i.i.d. data of Xl, where Xl is an endogenous regressor. For the penalized LS, . Under the theorem assumptions, by the strong law of large numbers, almost surely, which does not satisfy (2.1) of Theorem 2.1.
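The failure mechanism in this proof can be seen numerically. Assuming the least-squares loss Ln(β) = n−1Σi(Yi − XiTβ)2, its partial derivative at β0 is −(2/n)ΣiXilεi. For an endogenous Xl, this converges to −2E(Xlε) ≠ 0, violating the condition ∂Ln(β0)/∂βl = op(1) from the proof of Theorem 2.1. The simulated design below is hypothetical: one exogenous important regressor and one endogenous unimportant regressor with E(Xlε) = 1.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000
eps = rng.standard_normal(n)
x_exo = rng.standard_normal(n)            # exogenous: independent of eps
x_endo = eps + rng.standard_normal(n)     # endogenous: E(x_endo * eps) = 1
X = np.column_stack([x_exo, x_endo])
beta0 = np.array([1.0, 0.0])              # the endogenous regressor is unimportant
Y = X @ beta0 + eps

# Gradient of L_n(beta) = mean((Y - X beta)^2) at beta0, i.e. -(2/n) sum_i X_i eps_i.
score = -2.0 * X.T @ (Y - X @ beta0) / n
print(score)  # first entry near 0, second entry near -2
```

The exogenous coordinate's score shrinks at rate n−1/2, while the endogenous coordinate's score stays near −2 however large n is.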

Appendix B: General Penalized Regressions

We present some general results for the oracle properties of penalized regressions. These results will be employed to prove the oracle properties for the proposed FGMM. Consider a penalized regression of the form:

Lemma B.1

Under Assumption 4.1, if β = (β1, …, βs)T is such that maxj≤s |βj − β0S,j| ≤ dn, then

Proof. By Taylor's expansion, there exists β* ( for each j) lying on the line segment joining β and β0S, such that

Then .

Since is non-increasing (as Pn is concave), for all j ≤ s. Therefore .

In the theorems below, with S = {j : β0j ≠ 0}, define a so-called “oracle space” ℬ = {β ∈ ℝp : βj = 0 if j ∉ S}. Write Ln(βS, 0) = Ln(β) for . Let βS = (βS1,…, βSs) and

Theorem B.1 (Oracle Consistency)

Suppose Assumption 4.1 holds. In addition, suppose Ln(βS, 0) is twice differentiable with respect to βS in a neighborhood of β0S restricted on the subspace ℬ, and there exists a positive sequence an = o(dn) such that:

-

For any ε > 0, there is Cε > 0 so that for all large n,

(B.1) -

For any ε > 0, δ > 0, and any nonnegative sequence αn = o(dn), there is N > 0 such that when n > N,

(B.2)

Then there exists a local minimizer of

such that . In addition, for an arbitrarily small ε > 0, the local minimizer β̂ is strict with probability at least 1 − ε, for all large n.

Proof. The proof is a generalization of the proof of Theorem 3 in Fan and Lv (2011). Let . It is our assumption that kn = o(1). Write Q1(βS) = Qn(βS, 0), and L1(βS) = Ln(βS, 0). In addition, write

Define 𝒩τ = {β ∈ ℝs : ‖β − β0S‖ ≤ knτ} for some τ > 0. Let ∂𝒩τ denote the boundary of 𝒩τ. Now define an event

On the event Hn(τ), by the continuity of Q1, there exists a local minimizer of Q1 inside 𝒩τ. Equivalently, there exists a local minimizer of Qn restricted on ℬ inside {β = (βS, 0) : βS ∈ 𝒩τ}. Therefore, it suffices to show that ∀ε > 0, there exists τ > 0 so that P(Hn(τ)) > 1 − ε for all large n, and that the local minimizer is strict with probability arbitrarily close to one.

For any βS ∈ ∂𝒩τ, that is, ‖βS − β0S‖ = knτ, there is β* lying on the segment joining βS and β0S such that, by Taylor's expansion of L1(βS),

By Condition (i), ‖∇L1(β0S)‖ = Op(an), so for any ε > 0 there exists C1 > 0 such that the event H1 satisfies P(H1) > 1 − ε/4 for all large n, where

| (B.3) |

In addition, Condition (ii) yields that there exists Cε > 0 such that the following event H2 satisfies P(H2) ≥ 1 − ε/4 for all large n, where

| (B.4) |

Define another event H3 = {‖∇2L1(β0S) − ∇2L1(β*)‖F < Cε/4}. Since ‖βS − β0S‖ = knτ, by Condition (B.2), for any τ > 0, P(H3) > 1 − ε/4 for all large n. On the event H2 ∩ H3, the following event H4 holds:

By Lemma B.1,

. Hence for any βS ∈ ∂𝒩τ, on H1 ∩ H4,

For

, we have

. Therefore, we can choose τ > 8(C1 + 1)/(3Cε) so that Q1(βS) − Q1(β0S) ≥ 0 uniformly for βS ∈ ∂𝒩τ. Thus for all large n, when τ > 8(C1 + 1)/(3Cε),

It remains to show that the local minimizer in 𝒩τ (denoted by β̂S) is strict with probability arbitrarily close to one. For each h ∈ ℝ∖{0}, define

By the concavity of Pn(·), ψ(·) ≥ 0. We know that L1 is twice differentiable on ℝs. For βS ∈ 𝒩τ, let A(βS) = ∇2L1(βS) − diag{ψ(βS1), …, ψ(βSs)}. It suffices to show that A(β̂S) is positive definite with probability arbitrarily close to one. On the event H5 = {η(β̂S) ≤ supβ∈B(β0S,cdn) η(β)} (where cdn is as defined in Assumption 4.1(iv)),

Also define events H6 = {‖∇2L1(βS) − ∇2L1(β0S)‖F < Cε/4} and H7 = {λmin(∇2L1(β0S)) > Cε}. Then on H5 ∩ H6 ∩ H7, for any α ∈ ℝs satisfying ‖α‖ = 1, by Assumption 4.1(iv),

for all large n. This then implies λmin(A(β̂S)) ≥ Cε/4 for all large n.

We know that P(λmin[∇2L1(β0S)] > Cε) > 1 − ε. It remains to show that P(H5 ∩ H6) > 1 − ε for arbitrarily small ε. Because kn = o(dn), for an arbitrarily small ε > 0, P(H5) ≥ P(β̂S ∈ B(β0S, cdn)) ≥ 1 − ε/2 for all large n. Finally,

The previous theorem assumes that the true support S is known, which is not practical. We therefore need to derive the conditions under which S can be recovered from the data with probability approaching one. This can be done by demonstrating that the local minimizer of Qn restricted on ℬ is also a local minimizer on ℝp. The following theorem establishes the variable selection consistency of the estimator, defined as a local solution to a penalized regression problem on ℝp.

For any β ∈ ℝp, define the projection function

| (B.5) |

Theorem B.2 (Variable selection)

Suppose Ln : ℝp → ℝ satisfies the conditions in Theorem B.1, and Assumption 4.1 holds. Assume the following Condition A holds:

Condition A: With probability approaching one, for β̂S in Theorem B.1, there exists a neighborhood ℋ ⊂ ℝp of , such that for all but βN ≠ 0,

| (B.6) |

Then (i) with probability approaching one, is a local minimizer in ℝp of

(ii) For an arbitrarily small ε > 0, the local minimizer β̂ is strict with probability at least 1 − ε, for all large n.

Proof. Let with β̂S being the local minimizer of Q1(βS) as in Theorem B.1. We now show: with probability approaching one, there is a random neighborhood of β̂, denoted by ℋ, so that ∀β = (βS, βN) ∈ ℋ with βN ≠ 0, we have Qn(β̂) < Qn(β). The last inequality is strict.

To show this, first note that we can take ℋ sufficiently small so that Q1(β̂S) ≤ Q1(βS), because β̂S is a local minimizer of Q1(βS) from Theorem B.1. Recall the projection 𝒫β defined in (B.5), and note that Qn(𝒫β) = Q1(βS) by the definition of Q1. We have Qn(β̂) = Q1(β̂S) ≤ Q1(βS) = Qn(𝒫β). Therefore, it suffices to show that, with probability approaching one, there is a sufficiently small neighborhood ℋ of β̂ such that for any β = (βS, βN) ∈ ℋ with βN ≠ 0, Qn(𝒫β) < Qn(β).

In fact, this is implied by Condition (B.6):

| (B.7) |

The above inequality, together with the last statement of Theorem B.1 implies part (ii) of the theorem.

Appendix C: Proofs for Section 4

Throughout the proof, we write FiS = Fi(β0S), HiS = Hi(β0S) and .

Lemma C.1

supβ∈ℝp λmax(J(β)) = Op(1), and λmin(J(β0)) is bounded away from zero with probability approaching one.

Proof. Parts (i)(ii) follow from an application of the standard large deviation theory by using Bernstein inequality and Bonferroni's method. Part (iii) follows from the assumption that var(Fj) and var(Hj) are bounded uniformly in j ≤ p.

C.1. Verifying conditions in Theorems B.1, B.2

C.1.1. Verifying conditions in Theorem B.1

For any β ∈ℝp, we can write . Define

Then L̃FGMM (βS) = LFGMM(βS, 0).

Condition (i)

, where

| (C.1) |

By Assumption 4.5, ‖An(β0)‖ = Op(1). In addition, the elements in J(β0) are uniformly bounded in probability due to Lemma C.1. Hence . Due to , using the exponential-tail Bernstein inequality with Assumption 4.3 plus Bonferroni inequality, it can be shown that there is C > 0 such that for any t > 0,

which implies . Similarly, . Hence .

Condition (ii)

Straightforward but tedious calculation yields

where Σ(β0S) = 2An(β0S)J(β0)An(β0S)T, and M(β0S) = 2Z(β0S)B(β0S), with (suppose XiS = (Xil1, …, Xils)T)

It is not hard to obtain , and ‖Z(β0S)‖F = Op(s), and hence .

Moreover, there is a constant C > 0, and for all large n and any ε > 0. This then implies P(λmin[J(β0)] > C) > 1 − ε. Recall Assumption 4.5 that λmin(EAn(β0S)EAn(β0S)T) > C2 for some C2 > 0. Define events

Then on the event ,

Note that . Hence Condition (B.1) is then satisfied.

Condition (iii)

It can be shown that for any nonnegative sequence αn = o(dn) where dn = mink∈S |β0k|/2, we have

| (C.2) |

holds for any ε and δ > 0. As for Σ(βS), note that for all βS such that ‖βS − β0S‖ < dn/2, we have βS,k ≠ 0 for all k ≤ s. Thus J(βS) = J(β0S). Then P(sup‖βS−β0S‖<αn‖Σ(βS) − Σ(β0S)‖F ≤ δ) > 1 − ε holds since P(sup‖βS−β0S‖<αn‖An(βS) − An(β0S)‖F ≤ δ) > 1 − ε.

C.1.2. Verifying conditions in Theorem B.2

Proof. We verify Condition A of Theorem B.2, that is, with probability approaching one, there is a random neighborhood ℋ of , such that for any with βN ≠ 0, condition (B.6) holds.

Let F(𝒫β) = {Fl : l ∈ S, βl ≠ 0} and H(𝒫β) = {Hl : l ∈ S, βl ≠ 0} for any fixed β. Define

where J1(𝒫β) and J2(𝒫β) are the upper-|S|0 and lower-|S|0 submatrices of J(𝒫β). Hence LFGMM(𝒫β) = Ξ(𝒫β). Then LFGMM(β) − Ξ(β) equals

where wl1 = 1/var̂(Fl) and wl2 = 1/var̂(Hl). So LFGMM(β) ≥ Ξ(β). This then implies LFGMM(β̄) − LFGMM(β) ≤ Ξ(β̄) − Ξ(β). By the mean value theorem, there exists λ ∈ (0,1) such that, for h = λβ + (1 − λ)β̄,

Let ℋ be a neighborhood of β̂ (to be determined later). We have shown that Ξ(β̄) − Ξ(β) = Σl∉S,βl≠0 βl(al(β) + bl(β)) for any β ∈ ℋ, where

and bl(β) is defined similarly based on H. Note that h lies in the segment joining β and β̄, and is determined by β, hence should be understood as a function of β. By our assumption, there is a constant M such that |m(t1, t2)| and |q(t1, t2)|, the first and second partial derivatives of g, and

are all bounded by M uniformly in t1, t2 and l, k ≤ p. Therefore the Cauchy-Schwarz and triangle inequalities imply

Hence there is a constant M1 such that if we define the event (again, keep in mind that h is determined by β)

then P(Bn) → 1. In addition with probability one,

where . For some deterministic sequence rn (to be determined later), we define the neighborhood ℋ above to be

then supβ∈ℋ‖β − β̂‖1 < rn. By the mean value theorem and the Cauchy-Schwarz inequality, there is β̃ such that

Hence there is a constant M2 such that P(Z1 < M2√srn) → 1.

Let . By the triangle inequality and the mean value theorem, there are h̃ and lying in the segment between β̂ and β0 such that

where we used the assumption that . We showed that in the proof of verifying conditions in Theorem B.1. Hence by Theorem B.1, . Thus

By the assumption , hence , where M1 is defined in the event Bn. Consequently, if we define an event , then P(Bn ∩ Dn) → 1, and on the event Bn ∩ Dn,

We can , and thus .

On the other hand, because β̄j = βj whenever j ∈ S or βj = 0, there exists λ2 ∈ (0,1) such that

For all l ∉ S, |βl| ≤ ‖β − β0‖1 < rn. Due to the non-increasingness of , . We can choose rn smaller still so that , which is satisfied, for example, when rn < λn if the SCAD(λn) penalty is used. Hence

Using the same argument, we can show

. Hence LFGMM(β̄) − LFGMM(β) < Σl∉S,βl≠0 βl(al(β) + bl(β)) ≤ Σl∉S Pn(|βl|) for all β ∈ {β : ‖β − β̂‖1 < rn} under the event Bn ∩ Dn. Here rn is chosen such that

and

. This proves Condition A of Theorem B.2, since P(Bn ∩ Dn) → 1.
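The argument above uses only two properties of the penalty derivative: it is non-increasing on [0, ∞), and under SCAD(λn) it equals its maximum λn on [0, λn], so that the derivative at |βl| equals λn whenever |βl| < rn < λn. A minimal sketch of the SCAD derivative of Fan and Li (2001) checks both properties numerically. The conventional choice a = 3.7 is an assumption (the properties hold for any a > 2), and the function name scad_derivative is hypothetical.

```python
def scad_derivative(t: float, lam: float, a: float = 3.7) -> float:
    """Derivative of the SCAD penalty (Fan and Li, 2001) at t >= 0.

    P'(t) = lam                          for t <= lam,
          = max(a*lam - t, 0) / (a - 1)  for t > lam.
    """
    if t < 0:
        raise ValueError("t must be nonnegative")
    if t <= lam:
        return lam
    return max(a * lam - t, 0.0) / (a - 1.0)


lam = 0.5
r_n = 0.4  # any r_n < lam
# Every |beta_l| < r_n lies in the flat part, so the derivative equals lam there.
print([scad_derivative(t, lam) for t in (0.0, 0.2, r_n)])
grid = [k * 0.01 for k in range(300)]
values = [scad_derivative(t, lam) for t in grid]
print(all(x >= y for x, y in zip(values, values[1:])))  # non-increasing on the grid
```

For the L1 penalty the derivative is constant, so the same step goes through; for SCAD the restriction rn < λn is what keeps every |βl| in the flat region.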


C.2. Proof of Theorem 4.1: parts (ii) (iii)

We apply Theorem B.2 to infer that with probability approaching one, is a local minimizer of QFGMM(β). Note that under the event that is a local minimizer of QFGMM (β), we then infer that Qn (β) has a local minimizer such that β̂N = 0. This reaches the conclusion of part (ii). This also implies P(Ŝ ⊂ S) → 1.

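By Theorem B.1, and as shown when verifying the conditions of Theorem B.1, we have ‖β0S − β̂S‖ = op(dn). Since dn = mink∈S |β0k|/2, the triangle inequality gives minj∈S |β̂j| ≥ minj∈S |β0j| − ‖β̂S − β0S‖ = 2dn − op(dn), so

P(minj∈S |β̂j| > dn) → 1.

This implies P(S ⊂ Ŝ) → 1. Hence P(Ŝ = S) → 1.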

C.3. Proof of Theorem 4.1: part (i)

Let .

Lemma C.2

Under Assumption 4.1,

where ∘ denotes the element-wise product.

Proof. Write , where . By the triangle inequality and Taylor expansion,

where β* lies on the segment joining β̂S and β0S. For any ε > 0 and all large n,

This implies η(β*) = Op(max‖βS−β0S‖<dn/4 η(β)). Therefore, is upper-bounded by

which implies the result since .

Lemma C.3

Let Ωn = √nΓ−1/2. Then for any unit vector α ∈ℝs,

Proof. We have ∇L̃FGMM(β0S) = 2An(β0S)J(β0)Bn, where . We write , and Γ = 4AJ(β0)ϒJ(β0)TAT.

By the weak law of large numbers and the central limit theorem for i.i.d. data,

for any unit vector α̃ ∈ ℝ2s. Hence, by Slutsky's theorem,

Proof of Theorem 4.1: part (i)

Proof. The KKT condition of β̂S gives

| (C.3) |

By the mean value theorem, there exists β* lying on the segment joining β0S and β̂S such that

Let D = (∇2L̃FGMM(β*) − ∇2L̃FGMM(β0S))(β̂S − β0S). It then follows from (C.3) that for , and any unit vector α,

In verifying Condition (ii) of Theorem B.1, we showed that ∇2L̃FGMM(β0S) = Σ + op(1). Hence by Lemma C.3, it suffices to show .

By Assumptions 4.5 and 4.6(i), λmin(Γn)−1/2 = Op(1). Thus ‖αTΩn‖ = Op(√n). Lemma C.2 then implies is bounded by .

It remains to prove ‖D‖ = op(n−1/2), and it suffices to show that

| (C.4) |

due to , and Assumption 4.6 that . Showing (C.4) is straightforward given the continuity of ∇2L̃FGMM.

Appendix D: Proofs for Sections 5 and 6

The local minimizer in Theorem 4.1 is denoted by , and P(β̂N = 0) → 1. Let .

D.1. Proof of Theorem 5.1

Lemma D.1

Proof. We have, . By Taylor expansion, with some β̂ in the segment joining β0S and β̂S,

Note that is bounded due to Assumption 4.5. Applying the Taylor expansion again, with some β̂*, the above term is bounded by

Note that supt1,t2| q(t1, t2)| < ∞ by Assumption 4.4. The second term in the above is bounded by . Combining these terms, is bounded by .

Lemma D.2

Under the theorem's assumptions

Proof. By the foregoing lemma, we have

Now, for some β̃Sj in the segment joining β̂Sj and β0j,

The result then follows.

Note that ∀δ > 0,

Hence by Assumption 5.1, there exists γ > 0,

On the other hand, by Lemma D.2, QFGMM(β̂G) = op(1). Therefore,

Q.E.D.

D.2. Proof of Theorem 6.1

Lemma D.3

Define . Under the theorem assumptions, supβS ∈ Θ ‖ρ(βS) − ρn(βS)‖ = op(1).

Proof. We first show three convergence results:

| (D.1) |

| (D.2) |

| (D.3) |

Because both supw ‖D̂(w) − D(w)‖ and supw |σ̂(w)2 − σ(w)2| are op(1), proving (D.1) and (D.2) is straightforward. In addition, given the assumption that , (D.3) follows from the uniform law of large numbers. Hence we have,

In addition, the event XS = X̂S occurs with probability approaching one, given the selection consistency P(Ŝ = S) → 1 achieved in Theorem 4.1.

The result then follows because .

Given Lemma D.3, Theorem 6.1 follows from a standard argument for the asymptotic normality of GMM estimators as in Hansen (1982) and Newey and McFadden (1994, Theorem 3.4). The asymptotic variance achieves the semi-parametric efficiency bound derived by Chamberlain (1987) and Severini and Tripathi (2001). Therefore, β̂* is semi-parametric efficient.
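The "standard argument" invoked here concerns GMM estimators of the Hansen (1982) type. As a hedged illustration of that estimator class only (not of β̂*, which uses the estimated optimal instruments built from D̂(w) and σ̂(w)2), the sketch below runs two-step efficient GMM in a hypothetical scalar linear IV model with one endogenous regressor and two instruments: step one uses the weight (Z′Z/n)−1, step two re-weights with the inverse of the estimated moment covariance. All function names and the simulated design are assumptions.

```python
import random


def simulate(n, seed=0):
    """Hypothetical linear IV design: y = 1.0 * x + eps, with x endogenous."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2, v, e = (rng.gauss(0.0, 1.0) for _ in range(4))
        x = z1 + z2 + v            # regressor driven by the instruments and by v
        eps = 0.5 * v + e          # error shares v with x, so E[x eps] = 0.5
        rows.append((x + eps, x, z1, z2))   # (y, x, z1, z2); true beta0 = 1
    return rows


def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def gmm_scalar(rows, W):
    """argmin_b g(b)' W g(b) with g(b) = n^{-1} sum_i z_i (y_i - x_i b), in closed form."""
    n = len(rows)
    zx = [sum(r[2 + k] * r[1] for r in rows) / n for k in range(2)]
    zy = [sum(r[2 + k] * r[0] for r in rows) / n for k in range(2)]
    num = sum(zx[k] * W[k][l] * zy[l] for k in range(2) for l in range(2))
    den = sum(zx[k] * W[k][l] * zx[l] for k in range(2) for l in range(2))
    return num / den


def two_step_gmm(rows):
    n = len(rows)
    zz = [[sum(r[2 + k] * r[2 + l] for r in rows) / n for l in range(2)] for k in range(2)]
    b1 = gmm_scalar(rows, inv2(zz))   # step 1: W = (Z'Z/n)^{-1}, i.e. 2SLS
    u2 = [(r[0] - r[1] * b1) ** 2 for r in rows]
    omega = [[sum(u * r[2 + k] * r[2 + l] for u, r in zip(u2, rows)) / n
              for l in range(2)] for k in range(2)]
    return gmm_scalar(rows, inv2(omega))  # step 2: inverse estimated moment covariance


rows = simulate(5000)
b_ols = sum(r[0] * r[1] for r in rows) / sum(r[1] ** 2 for r in rows)
b_gmm = two_step_gmm(rows)
print(f"OLS {b_ols:.3f}  two-step GMM {b_gmm:.3f}  (true value 1.0)")
```

In this design OLS converges to 1 + 0.5/3 ≈ 1.17, because E[x ε] = 0.5 and E[x2] = 3, while the GMM estimate is consistent; in the simplest possible case this mirrors the inconsistency of least squares under endogeneity discussed in the Introduction.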

Appendix E: Proofs for Section 7

The proof of Theorem 7.1 is very similar to that of Theorem 4.1 and is given in the online supplementary material, downloadable from http://terpconnect.umd.edu/∼yuanliao/high/supp.pdf

Proof of Theorem 7.2

Proof. Define . We first show Ql,k ≤ Ql,k−1 for 1 < k ≤ p and Ql+1,1 ≤ Ql,p. For 1 < k ≤ p, Ql,k − Ql,k−1 equals

Note that and differ only in the kth position. The kth position of is while that of is . Hence, by the updating criterion, Ql,k ≤ Ql,k−1 for 1 < k ≤ p.

Because is the first update in the (l + 1)th iteration, . Hence

On the other hand, for ,

Hence . Note that differs from β(l) only in the first position. By the updating criterion, Ql+1,1 − Ql,p ≤ 0.

Therefore, if we define {Lm}m≥1 = {Q1,1, …, Q1,p, Q2,1, …, Q2,p, …}, then we have shown that {Lm}m≥1 is a non-increasing sequence. In addition, Lm ≥ 0 for all m ≥ 1. Hence {Lm} is a bounded monotone sequence, so it converges and is therefore Cauchy. By the definition of QK(β(l)), we have QK(β(l)) = Ql,p, and thus {QK(β(l))}l≥1 is a subsequence of {Lm}. Hence it is also a bounded Cauchy sequence. Therefore, for any ε > 0, there is N > 0 such that |QK(β(l1)) − QK(β(l2))| < ε whenever l1, l2 > N, which implies that the iterations stop after finitely many steps.

The rest of the proof is similar to the proof of Lyapunov's theorem in Lange (1995, Prop. 4). Consider a limit point β* of {β(l)}l≥1, so that there is a subsequence with limk→∞ β(lk) = β*. Because both QK(·) and M(·) are continuous, and QK(β(l)) is a Cauchy sequence, taking limits yields

Hence β* is a stationary point of QK(β).
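Theorem 7.2 concerns the coordinate-wise minimization of QK, whose definition is not reproduced in this appendix. The mechanics of the proof (a non-increasing sequence {Lm} of objective values recorded after every coordinate update, termination once successive sweeps agree, and stationarity of the limit point) can be seen on a simpler stand-in. The sketch below swaps in the convex lasso objective (2n)−1‖y − Xβ‖2 + λ‖β‖1 with exact coordinate updates by soft-thresholding; this is an illustrative assumption, not the paper's QK or its update map M(·), and all names and the simulated data are hypothetical.

```python
import random


def soft_threshold(z: float, lam: float) -> float:
    """Minimizer over b of 0.5 * (b - z)**2 + lam * |b|."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def objective(beta, X, y, lam):
    """Stand-in Q(beta) = (2n)^{-1} ||y - X beta||^2 + lam * ||beta||_1."""
    n, p = len(y), len(beta)
    rss = sum((y[i] - sum(X[i][j] * beta[j] for j in range(p))) ** 2 for i in range(n))
    return rss / (2 * n) + lam * sum(abs(b) for b in beta)


def coordinate_descent(X, y, lam, tol=1e-12, max_sweeps=1000):
    """Cyclic coordinate descent; returns beta and the trace {L_m} of objective values."""
    n, p = len(y), len(X[0])
    beta = [0.0] * p
    trace = []
    prev = objective(beta, X, y, lam)
    for _ in range(max_sweeps):
        for k in range(p):
            # Exact minimization over coordinate k with the other coordinates fixed.
            partial = [y[i] - sum(X[i][j] * beta[j] for j in range(p) if j != k) for i in range(n)]
            rho = sum(X[i][k] * partial[i] for i in range(n)) / n
            scale = sum(X[i][k] ** 2 for i in range(n)) / n
            beta[k] = soft_threshold(rho / scale, lam / scale)
            trace.append(objective(beta, X, y, lam))  # one L_m per coordinate update
        if prev - trace[-1] < tol:  # stop once a full sweep barely decreases Q
            break
        prev = trace[-1]
    return beta, trace


def is_stationary(beta, X, y, lam, atol=1e-3):
    """KKT check: n^{-1} X_j'(y - X beta) = lam*sign(beta_j) if beta_j != 0, else |.| <= lam."""
    n, p = len(y), len(beta)
    resid = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    for j in range(p):
        grad = sum(X[i][j] * resid[i] for i in range(n)) / n
        if beta[j] != 0 and abs(grad - lam * (1 if beta[j] > 0 else -1)) > atol:
            return False
        if beta[j] == 0 and abs(grad) > lam + atol:
            return False
    return True


rng = random.Random(3)
n, p, lam = 100, 5, 0.1
X = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
y = [2.0 * X[i][0] - 1.0 * X[i][1] + rng.gauss(0, 1) for i in range(n)]
beta, trace = coordinate_descent(X, y, lam)
print(len(trace), [round(b, 3) for b in beta], is_stationary(beta, X, y, lam))
```

For a nonconvex objective such as a SCAD-penalized criterion the same monotonicity argument applies, but the limit is only guaranteed to be a stationary point, which is exactly what the theorem asserts.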

Footnotes

In fixed designs, e.g., Zhao and Yu (2006), it has been implicitly assumed that for all j < p.

We thank the AE and referees for suggesting the use of a general vector of instruments W, which extends the method to the more general endogeneity problem, allowing the presence of endogenous important regressors. In particular, W is allowed to be XS, which amounts to assuming that E(ε|XS) = 0 by (1.4), while allowing E(ε|X) ≠ 0. In this case, the instruments W = XS may be unknown, and the F and H defined below can be transformations of X. This is the setup of an earlier version of this paper, which is much weaker than (1.2) and allows some of XN to be endogenous.

The compatibility of (1.6) requires very stringent conditions. If are invertible, then a necessary condition for (1.6) to have a common solution is that , which does not hold in general when F ≠ H.

For technical reasons we use a diagonal weight matrix and it is likely non-optimal. However, it does not affect the variable selection consistency in this step.

We thank a referee for reminding us of this important research direction.

Contributor Information

Jianqing Fan, Email: jqfan@princeton.edu, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544.

Yuan Liao, Email: yuanliao@umd.edu, Department of Mathematics, University of Maryland, College Park, MD 20742.

References

- Ai C, Chen X. Efficient estimation of models with conditional moment restrictions containing unknown functions. Econometrica. 2003;71:1795–1843.

- Andrews D. Consistent moment selection procedures for generalized method of moments estimation. Econometrica. 1999;67:543–564.

- Andrews D, Lu B. Consistent model and moment selection procedures for GMM estimation with application to dynamic panel data models. J Econometrics. 2001;101:123–164.

- Antoniadis A. Smoothing noisy data with tapered coiflets series. Scand J Stat. 1996;23:313–330.

- Belloni A, Chen D, Chernozhukov V, Hansen C. Sparse models and methods for optimal instruments with an application to eminent domain. Econometrica. 2012;80:2369–2429.

- Belloni A, Chernozhukov V. Least squares after model selection in high-dimensional sparse models. Bernoulli. 2013;19:521–547.

- Belloni A, Chernozhukov V, Hansen C. Inference on treatment effects after selection amongst high-dimensional controls. Review of Economic Studies. 2013. Forthcoming.

- Bickel P, Klaassen C, Ritov Y, Wellner J. Efficient and adaptive estimation for semiparametric models. Springer; New York: 1998.

- Bickel P, Ritov Y, Tsybakov A. Simultaneous analysis of Lasso and Dantzig selector. Ann Statist. 2009;37:1705–1732.

- Bondell H, Reich B. Consistent high-dimensional Bayesian variable selection via penalized credible regions. J Amer Statist Assoc. 2012;107:1610–1624. doi: 10.1080/01621459.2012.716344.

- Bradic J, Fan J, Wang W. Penalized composite quasi-likelihood for ultrahigh-dimensional variable selection. J R Stat Soc Ser B. 2011;73:325–349. doi: 10.1111/j.1467-9868.2010.00764.x.

- Breheny P, Huang J. Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. Ann Appl Statist. 2011;5:232–253. doi: 10.1214/10-AOAS388.

- Bühlmann P, Kalisch M, Maathuis M. Variable selection in high-dimensional models: partially faithful distributions and the PC-simple algorithm. Biometrika. 2010;97:261–278.

- Bühlmann P, van de Geer S. Statistics for High-Dimensional Data: Methods, Theory and Applications. Springer; New York: 2011.

- Canay I, Santos A, Shaikh A. On the testability of identification in some nonparametric models with endogeneity. Econometrica. 2013;81:2535–2559.

- Caner M. Lasso-type GMM estimator. Econometric Theory. 2009;25:270–290.

- Caner M, Fan Q. Hybrid generalized empirical likelihood estimators: instrument selection with adaptive lasso. Manuscript. 2012.

- Caner M, Zhang H. Adaptive elastic net GMM with diverging number of moments. Journal of Business and Economic Statistics. 2013. Forthcoming. doi: 10.1080/07350015.2013.836104.

- Candès E, Tao T. The Dantzig selector: statistical estimation when p is much larger than n. Ann Statist. 2007;35:2313–2404.