Abstract

Unhealthy behavior is responsible for much human disease, and a common goal of contemporary preventive medicine is therefore to encourage behavior change. However, while behavior change often seems easy in the short run, it can be difficult to sustain. This article provides a selective review of research from the basic learning and behavior laboratory that provides some insight into why. The research suggests that methods used to create behavior change (including extinction, counterconditioning, punishment, reinforcement of alternative behavior, and abstinence reinforcement) tend to inhibit, rather than erase, the original behavior. Importantly, the inhibition, and thus behavior change more generally, is often specific to the “context” in which it is learned. In support of this view, the article discusses a number of lapse and relapse phenomena that occur after behavior has been changed (renewal, spontaneous recovery, reinstatement, rapid reacquisition, and resurgence). The findings suggest that changing a behavior can be an inherently unstable and unsteady process; frequent lapses and relapse should be expected to occur. In the long run, behavior-change therapies might benefit from paying attention to the context in which behavior change occurs.

Keywords: behavior change, contingency management, behavioral inhibition, context, relapse

Behavior causes a surprising amount of human disease. For example, an estimated 40% of premature deaths in the U.S. can be attributed to unhealthy behaviors, such as smoking and inactivity (e.g., Schroeder, 2007). Eliminating such behaviors, and replacing them with healthier ones, is therefore one of the most important strategies for improving U.S. population health. But a persistent challenge to the field is that sustaining behavior change is not easy. Classic data suggest that roughly 70% of individuals who successfully quit illicit drug use, cigarette smoking, or problem drinking return to their old behaviors within a year (Hunt, Barnett, & Branch, 1971). More recent data suggest similar outcomes (e.g., Hughes, Keely, & Naud, 2004; Kirshenbaum, Olsen, & Bickel, 2009). Even patients who enter an incentive-based “contingency-management” treatment that explicitly reinforces healthy behavior with vouchers or prizes (e.g., Higgins, Silverman, & Heil, 2008; Higgins, Silverman, Sigmon, & Naito, 2012; Fisher, Green, Calvert, & Glasgow, 2011) often return to their unwanted behaviors over time. That is, once contingency management stops, and the reinforcers are discontinued, many individuals return to the original behavior (e.g., John, Loewenstein, Troxel, Norton, Fassbender, & Volpp, 2011; Silverman, DeFulio, & Sigurdsson, 2012). Despite the fact that contingency management is one of the most successful behavioral intervention strategies, for the case of drug dependence, “the development of more enduring solutions to sustain abstinence over years and lifetimes is perhaps the greatest challenge facing the substance abuse treatment research community today” (Silverman et al., 2012, p. S47).

The purpose of the present article is to present some research from the basic behavioral laboratory that might shed light on why it is so difficult to sustain behavior change. The issue has been discussed in other papers (e.g., Bouton, 2000, 2002); the current article focuses on behavior change in general with an emphasis on recent work addressing instrumental (operant) learning. Roughly three decades of basic research on behavior change suggests two main conclusions. First, changing or replacing an old behavior with a new behavior does not erase the original one. Second, behavior change can be remarkably specific to the “context” in which it occurs. Both of these features of behavior change appear to be general across different treatment strategies for creating change. They might provide some insight into why behavior change can be so difficult to maintain.

Behavior change is not erasure

Behavior change can be studied in the laboratory with variations of two well-known behavioral methods. In the first, organisms like rats or pigeons learn to perform specific behaviors (such as pressing a lever or pecking at a disk) to obtain food, water, or drug reinforcers. The study of such operant conditioning provides a method that allows behavioral scientists to study how “free” or “voluntary” behavior is influenced by its consequences. In the second method, Pavlovian or classical conditioning, the organism learns to associate a signal (such as presentation of a tone or light) with upcoming reinforcers or punishers (e.g., food, water, drugs, or a mild shock). This kind of learning in turn allows the organism to adapt to significant events in the environment by making anticipatory responses in the presence of the signal. Both Pavlovian and operant learning are widely represented in human experience and provide the building blocks of many complex behaviors and actions (e.g., Baldwin & Baldwin, 2001).

In either type of learning, behavior change can be studied by altering the relationship between the action or the signal and the reinforcing or punishing outcome. In extinction, perhaps the most basic form of behavior change, the strength or rate of the behavior declines when the reinforcing outcome is eliminated. The behavior eventually goes away, and is said to be “extinguished.” Extinction is a reliable way to reduce a learned behavior, and it is thought to be the mechanism behind various cognitive behavior therapies that eliminate unwanted behaviors, thoughts, or emotions by repeatedly exposing the patient to the cues or situations that trigger them (e.g., Craske, Kircanski, Zelikowsky, Mystkowski, Chowdhury, & Baker, 2008). It is tempting to conclude that extinction erases or destroys the original learning. But the evidence suggests that extinction is best thought of as producing a kind of behavioral inhibition. That is, the original behavior is still in the brain or memory system, but is inhibited and ready to return to performance under certain conditions. Learning theorists have long emphasized a distinction between learning and performance. Just because a behavior is not manifest in performance does not mean that its underlying basis is gone. It is potentially available to produce lapse or relapse.

Since the 1970s, extinction has been studied extensively with Pavlovian methods. As noted above, when the significant event is no longer presented, anticipatory responses to the signal go away. However, the extinguished response can readily return with any of several experimental manipulations (see Bouton, 2004; Bouton & Woods, 2008, for more extensive discussions). These are summarized in Table 1. In what is probably the most fundamental example, the renewal effect, extinguished responding to the signal (the conditioned stimulus or “CS”) returns if the CS is simply tested in a different context (e.g., Bouton & Bolles, 1979; Bouton & King, 1983; Bouton & Peck, 1989). (In the animal laboratory, “contexts” are usually provided by the Skinner boxes in which learning and testing occur; they usually differ in their visual, olfactory, tactile, and spatial respects.) In spontaneous recovery, the extinguished response can return if the CS is tested again after some time has elapsed after extinction. The phenomenon can be viewed as another example of the renewal effect in which extinction is shown to be specific to its temporal context (e.g., Bouton, 1988). In reinstatement (e.g., Rescorla & Heth, 1975), mere exposure to the significant event (the unconditioned stimulus or “US”) again after extinction can make responding return to the CS. Importantly, the reinstating effect of presenting the US alone is also a context effect. For example, in Pavlovian learning, presentation of the US must occur in the context in which testing will take place in order for the response to return (Bouton, 1984; Bouton & Bolles, 1979; Bouton & King, 1983; Bouton & Peck, 1989; see also Westbrook, Iordanova, Harris, McNally, & Richardson, 2002). The picture that emerges is that behavior after extinction is quite sensitive to the current context. When the trigger cue is returned to the acquisition context, when the context is merely changed, or when the context is associated with the reinforcer again, the cue (CS) can readily trigger responding again.

Table 1.

Context, lapse, and relapse effects that can interfere with lasting behavior change

| Phenomenon | Description |
|---|---|
| Renewal | After behavior change, a return of the first behavior that occurs when the context is changed |
| ABA renewal | Renewal of behavior in its original context (A) after behavior change in another context (B) |
| ABC renewal | Renewal of behavior in a new context (C) after behavior change in a context (B) that is different from the original context (A) |
| AAB renewal | Renewal of behavior in a new context (B) after behavior has been changed in its original context (A) |
| Spontaneous recovery | Return of the first behavior that occurs when time elapses after behavior change |
| Reinstatement | Return of the first behavior that occurs when the individual is exposed to the original US or reinforcer again after behavior change. Can depend on contextual conditioning. |
| Rapid reacquisition | Fast return of the first behavior when it (or the signal that elicits it) is paired with the reinforcer or US again |
| Resurgence | Return of an extinguished operant behavior that can occur when a behavior that has replaced it is extinguished |

A fourth phenomenon is rapid reacquisition. In this case, when CS-US pairings are resumed after extinction, the return of responding can be very rapid (Napier, Macrae, & Kehoe, 1992; Ricker & Bouton, 1996). Rapid reacquisition may be especially relevant to behavior change in the natural world, because the US or reinforcer is usually presented whenever a lapsing drug user or over-eater consumes the drug or junk food again. The evidence suggests that reacquisition is rapid because the reinforced trials were part of the “context” of original conditioning (Bouton, Woods, & Pineno, 2004; Ricker & Bouton, 1996). Thus, when the CS and US are paired again, the organism is returned to the original context, and responding recovers because it is a form of an ABA renewal effect. Once again, performance after extinction depends on context. And the meaning of “context” can be very broad and include not only the physical background, but recent events, mood states, drug states, deprivation states, and time (e.g., see Bouton, 1991, 2002).

It is important to note that what we know about extinction also applies to other Pavlovian behavior-change procedures (Bouton, 1993). For example, in counterconditioning, the CS is paired with a new US in Phase 2 instead of simply being presented alone. Here we also find little evidence for erasure and a lot for the role of context. For example, when CS-shock pairings are followed by CS-food pairings, renewal of fear occurs after a context change (Peck & Bouton, 1990), spontaneous recovery occurs after the passage of time (Bouton & Peck, 1992), and reinstatement of fear to the tone occurs if shock is presented alone again (Brooks, Hale, Nelson, & Bouton, 1995). Renewal and spontaneous recovery of appetitive behavior can also occur when tone-shock follows tone-food (Peck & Bouton, 1990; Bouton & Peck, 1992). We have also seen renewal and spontaneous recovery after discrimination reversal learning in which tone-shock and light-no shock were followed by tone-no shock and light-shock (Bouton & Brooks, 1992). And when an inhibitory CS that signals “no reinforcer” is converted into an excitor that now signals that the reinforcer will occur, the original inhibitory meaning can return upon return to the original inhibitory conditioning context (Peck, 1995; see also Fiori, Barnet, & Miller, 1994). All of these findings suggest that extinction can be viewed as a representative form of retroactive inhibition in which new learning replaces the old (Bouton, 1993). Learning something new about a stimulus does not necessarily erase the earlier learning. It involves inhibition that is sensitive to context change.

The variety of different lapse and relapse effects suggests that behavior change can be an intrinsically unsteady affair. Given the many possible context changes that can occur in the natural world after a behavior is inhibited, repeated lapses should always be expected. One rule of thumb is that after extinction the signal has had a history of two associations with the US (CS-US learned in conditioning and CS-no US learned in extinction). Its meaning is therefore ambiguous. And like the current meaning of an ambiguous word (or the verbal response it evokes), the current response evoked by the trigger cue depends on the current context. More detailed reviews of extinction in Pavlovian conditioning with an eye toward making it more enduring can be found in Bouton and Woods (2008) and Laborda, McConnell, and Miller (2011).

Extinction and inhibition of voluntary behavior

More recent research has asked whether similar principles apply to extinction in operant learning. As noted above, operant learning may be especially relevant for understanding factors that influence voluntary behaviors, such as over-eating, smoking, problem drinking, and illicit drug use. Here again, extinction can be created (and behavior “eliminated”) by allowing the organism to make the response without the reinforcer. And although the procedure makes the behavior go away, it does not necessarily erase it. For example, recent experiments have demonstrated the ABA, ABC, and AAB renewal effects after operant extinction (Bouton, Todd, Vurbic, & Winterbauer, 2011). ABA and AAB renewal have also been demonstrated in discriminated operant learning, where the response is only reinforced in the presence of a discriminative stimulus (SD), such as a tone or a light, which consequently sets the occasion for the response (ABA renewal: Nakajima, Tanaka, Urushihara, & Imada, 2000; Vurbic, Gold, & Bouton, 2011; Todd, Vurbic, & Bouton, 2014; AAB renewal: Todd et al., 2014). The discriminated operant situation may be especially relevant to problematic human behavior, because so much of the latter takes place in the presence of cues that regularly set the occasion for them (for example, overeating takes place in the presence of stimuli, such as a bag of chips or a bucket of fried chicken, that set the occasion for eating). Moreover, reinstatement, spontaneous recovery, and rapid reacquisition have also been demonstrated in operant learning. They have all occurred in drug self-administration experiments in which animals are reinforced for responding with drugs of abuse, such as heroin, cocaine, or alcohol (see Bouton, Winterbauer, & Vurbic, 2013 for a review). Thus, as in Pavlovian conditioning, operant extinction depends on inhibition that is specific to the context in which it is learned.

Recent evidence from my laboratory further suggests that the organism learns something very specific when an operant behavior is extinguished: It learns not to make a specific response in a specific context (e.g., Todd, 2013; Todd et al., 2014). We know this, for example, because extinction of one behavior (e.g., pressing a lever) in the presence of an SD (e.g., a tone) can prevent renewal of the same response occasioned by other SDs (e.g., a light) in the same context (Todd et al., 2014, Experiment 3). In contrast, extinction of a different behavior (e.g., pulling a chain) is not as effective. Similarly, renewal is also not prevented by simple unreinforced exposure to the context without allowing the animal to make the response (Bouton et al., 2011, Experiment 4). This result is consistent with the finding that simple exposure to contextual cues might not weaken drug taking (Conklin & Tiffany, 2002). Our evidence suggests that extinction of operant behavior may require that the individual be given an opportunity to learn to inhibit the response directly. Interestingly, at least one effective extinction-based treatment of overeating in children required the children to make the response (eating) at least a little in the presence of food cues during cue exposure (Boutelle, Zucker, Peterson, Rydell, Cafri, & Harnack, 2011).

We also know that our understanding of extinction applies to other retroactive interference arrangements in the operant paradigm. For example, Marchant, Khuc, Pickens, Bonci, and Shaham (2013) reported that alcohol seeking in rats could be suppressed by punishment. In their experiment, lever pressing was first trained by reinforcing it with alcohol. In a second phase, responses also produced a mild footshock, which reduced the behavior’s probability to near zero. However, when punishment occurred in Context B after the original training occurred in Context A, the response returned (was renewed) when it was tested in Context A. We recently examined renewal after punishment in more detail (Bouton & Schepers, 2014). We found that the context-specificity of punishment was not merely due to the subject associating the shock with Context B (which could have suppressed behavior on its own), and that renewal also occurred if testing was conducted in a neutral Context C (i.e., we observed ABC renewal). We also found that, paralleling research on operant extinction (Todd, 2013), training one response (R1, e.g., chain pulling) in Context A and a different response (R2, e.g., lever pressing) in Context B and then punishing each in the opposite context (i.e., R1 in Context B and R2 in Context A) allowed a renewal of responding when the responses were tested in their original training contexts. The test context also affected choice of R1 vs. R2 when both were made available at the same time. In punishment, as in extinction, the organism thus learns not to make a specific response in a specific context. Interestingly, re-exposure to a drug reinforcer can also reinstate operant drug self-administration behavior after it has been punished (Panlilio, Thorndike, & Schindler, 2003; see also Panlilio, Thorndike, & Schindler, 2005). The fact that what we know about extinction might also apply to punishment is important because the knowledge of aversive consequences of a behavior is another reason why humans might stop over-eating or drug-taking.

Renewal of instrumental behavior occurs after still other forms of behavioral inhibition. For example, instead of studying extinction, Nakajima, Urushihara, and Masaka (2002) introduced a negative contingency between the operant response and getting the reinforcer in Phase 2 (see also Kearns & Weiss, 2007). After first training rats to lever press for food pellets, they made pressing the lever postpone a reinforcer that otherwise occurred freely. This suppressed responding, of course. But when the rats were returned to Context A after the negative contingency training had occurred in Context B, responding was renewed. These results, like the punishment results, suggest that other forms of behavioral inhibition besides extinction create a context-dependent form of inhibition—and not erasure or unlearning.

Resurgence after behavior change

These ideas come together further in a paradigm that may have an especially direct connection to the contingency management or incentivized treatments mentioned at the start of this article (e.g., Higgins et al., 2008; Fisher et al., 2011). Replacing an operant behavior with a new behavior while the first is being extinguished can still allow relapse to occur when the replacement behavior is itself extinguished (e.g., Leitenberg, Rawson, & Bath, 1970). The basic method is as follows. In an initial phase, pressing a lever (R1) is reinforced. Then, in a response-elimination phase, R1 is extinguished (it no longer produces the reinforcer) at the same time an alternative behavior (pressing a second lever, R2) is reinforced. At the end of the response elimination phase, R2 has replaced R1. But when R2 is no longer reinforced, the animal returns to and makes a number of responses on R1. R1 is said to have “resurged.” Once again, extinction (of R1) did not erase it. This phenomenon, the last one listed in Table 1, may be a more direct laboratory model of what occurs in either therapy or the natural world when a problem behavior is replaced with a healthier one.

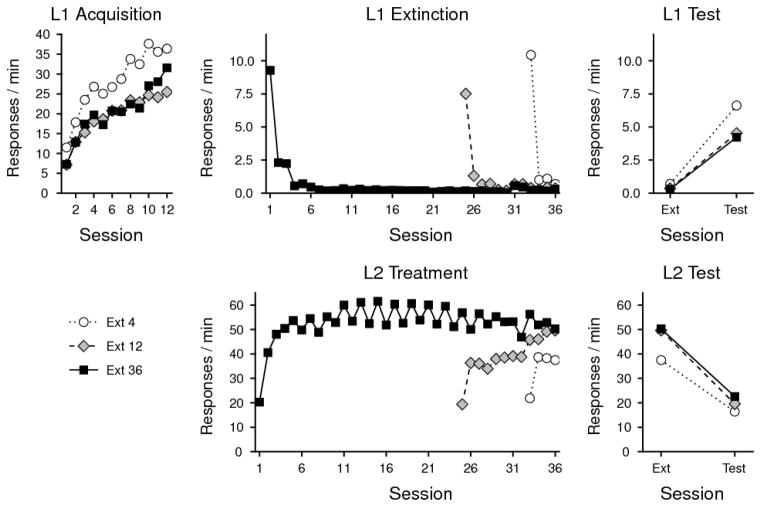

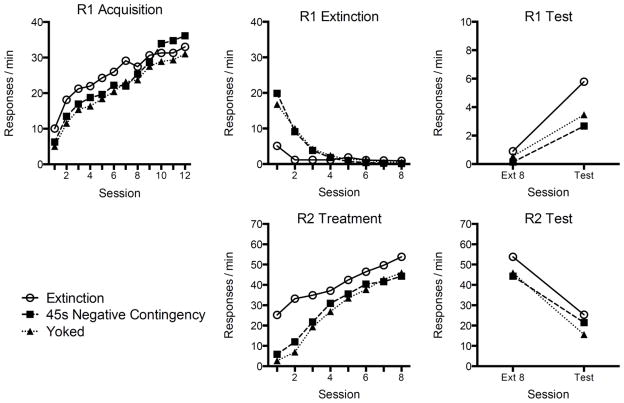

The field has recently begun to study resurgence in some detail (e.g., Bouton & Schepers, 2014; Cançado & Lattal, 2011; Lieving & Lattal, 2003; Shahan & Sweeney, 2011; Sweeney & Shahan, 2013; Winterbauer & Bouton, 2010, 2012; Winterbauer, Lucke, & Bouton, 2013). My colleagues and I have shown that under certain conditions resurgence may survive very extensive response elimination training. As illustrated in Figure 1, 36 sessions of response elimination did nothing to decrease resurgence beyond what we observed after 4 or 12 sessions (Winterbauer et al., 2013; but see Leitenberg, Rawson, & Mulick, 1975). In other experiments, we found that introducing an “abstinence” contingency into the response elimination phase did not abolish the effect either (Bouton & Schepers, 2014; see Figure 2). In this case, instead of merely extinguishing R1 while R2 was being reinforced, a reinforcer was delivered for R2 only if R1 had not been emitted for a minimum period of time (e.g., 45, 90, or 135 s). The addition of this abstinence contingency weakened the final resurgence effect, but it did not eliminate it. This result may not be surprising based on the evidence, reviewed above, that an original behavior can survive many different retroactive interference treatments.
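The abstinence contingency described above can be stated as a simple decision rule. The following Python sketch is an illustration only, not the control code used in the experiments: the function name, the use of timestamps in seconds, and the assumption that every R2 response is otherwise eligible for reinforcement are hypothetical simplifications.

```python
def r2_is_reinforced(r2_time, r1_times, abstinence_s):
    """Decide whether an R2 response earns a reinforcer.

    Hypothetical rule: R2 is reinforced only if no R1 response occurred
    during the `abstinence_s` seconds leading up to the R2 response.
    An R1 made exactly `abstinence_s` seconds earlier counts as
    sufficient abstinence. All times are in seconds from session start.
    """
    window_start = r2_time - abstinence_s
    recent_r1 = [t for t in r1_times if window_start < t <= r2_time]
    return len(recent_r1) == 0


# Illustrative session: R1 responses at 10 s and 100 s, 45-s requirement.
r1_times = [10.0, 100.0]
outcomes = {t: r2_is_reinforced(t, r1_times, 45) for t in (50.0, 60.0, 120.0, 145.0)}
print(outcomes)
```

In this sketch, an R2 response at 50 s goes unreinforced because R1 occurred 40 s earlier, whereas one at 60 s is reinforced. Lengthening the required interval (e.g., to 90 or 135 s) makes reinforcers harder to earn, which is consistent with the point made later in the article that the contingency reduces reinforcer frequency.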

Figure 1.

Resurgence of extinguished operant behavior. Left to right, the top panels illustrate the rate at which subjects performed the target first behavior (L1 for Lever 1) during the acquisition phase, response elimination (extinction) phase, and the resurgence test phase (respectively). The bottom panels illustrate the rate of the replacement behavior (L2 for Lever 2) during the response elimination and resurgence testing phases. Groups that received 4 (Ext 4), 12 (Ext 12), or 36 (Ext 36) sessions of response elimination training showed reliable resurgence of L1 responding that did not differ significantly (upper right). From Winterbauer et al. (2013).

Figure 2.

Resurgence of operant behavior that was suppressed by an abstinence contingency. Left to right, the top panels show the rate at which subjects performed the target first behavior (R1 for Response 1) during acquisition, response elimination (Extinction), and resurgence testing. The bottom panels illustrate the acquisition of the replacement behavior (R2) during response elimination and testing. The groups differed in their treatment during response elimination: Group Extinction received R1 extinction while R2 was reinforced; Group 45 s Negative Contingency was required to abstain from R1 for at least 45 s if an R2 response was to be reinforced; subjects in Group Yoked received reinforcement at the same points in time as a subject in the Negative Contingency group but without being required to abstain from R1. Note that the latter groups showed the same reduced, but not eliminated, resurgence (upper right). From Bouton and Schepers (2014).

My colleagues and I have argued that resurgence may simply be another example of the context-specificity of extinction. That is, the organism might learn to inhibit its performance of R1 in the “context” of a second behavior (R2) being reinforced. Then, when R2 reinforcers are themselves discontinued, the context changes, and R1 responding returns in the form of an ABC renewal effect (Bouton et al., 2011; Todd, 2013; Todd et al., 2012). Consistent with this idea, resurgence can be reduced, and possibly eliminated, if we allow the animal to learn extinction of R1 in a “context” that is more similar to the one that prevails during resurgence testing. For example, resurgence is reduced if the rate at which reinforcers are delivered for R2 is gradually decreased or “thinned” over the course of the response elimination phase (Winterbauer & Bouton, 2012; see also Sweeney & Shahan, 2013). Thinner schedules of reinforcement may allow the rat to learn not to make R1 in the context of fewer and fewer reinforcers, a context more like the one that prevails during resurgence testing. In related, yet-to-be-published work, Sydney Trask and I found that resurgence can be eliminated if very thin schedules of reinforcement are used to reinforce R2 from the beginning (see also Leitenberg, Rawson, & Mulick, 1975; Sweeney & Shahan, 2013). Finally, our experiments on the effects of adding an abstinence contingency (Bouton & Schepers, 2014) discovered that the reason why an abstinence contingency between R1 and the reinforcer weakened resurgence had nothing to do with the abstinence contingency per se. Instead, requiring abstinence from R1 made it difficult to earn reinforcers for R2 and reduced their frequency. It therefore gave the subject the opportunity to learn that R1 was extinguished during prolonged periods without a reinforcer. We know this because rats that were allowed to earn the reinforcer at the same rate as an abstinence group, but without an actual abstinence contingency, showed the same reduced, but not eliminated, level of resurgence (Figure 2). Across experiments, then, a reliable way to reduce resurgence is to give the subject an opportunity to learn not to perform R1 in the absence of frequent reinforcement.

All in all, one of the messages of our work on resurgence is that response-elimination therapies might benefit from encouraging generalization from the behavior-change context to new contexts that might otherwise allow relapse in the form of the renewal effect. Another possibility, of course, would be to maintain abstinence reinforcement indefinitely, as suggested by Silverman et al. (2012), who demonstrated prolonged abstinence among cocaine users when they were given prolonged abstinence-contingent employment.

The general context-dependence of operant behavior

Encouraging generalization to new contexts is also important for another reason. Our recent work on operant behavior has further discovered that operant behaviors are always context-dependent to some extent. That is, if a rat is reinforced for pressing a lever or pulling a chain in one context, merely testing the response in a second context consistently seems to weaken it. This effect of changing the context after operant learning appears to occur regardless of reinforcement schedule, the amount of training, whether the behavior is a discriminated or a non-discriminated operant, and whether the changed-to context is equally associated with reinforcers or the training of a different operant response (Bouton et al., 2011; Bouton, Todd, & León, 2014; Thrailkill & Bouton, submitted; Todd, 2013). The context thus appears to play a rather general role in enabling operant behavior. This idea has been a revelation to us because unlike operant responses, Pavlovian responses (fear or appetitive behaviors triggered by signals for shock or food) are often not weakened by changing the context (e.g., Bouton, Frohardt, Waddell, Sunsay, & Morris, 2008; Bouton & King, 1983; Bouton & Peck, 1989). Recent research thus suggests that there may be something especially important about the context in supporting voluntary, operant behavior.

The revelation is worth mentioning in a discussion of behavior change because it suggests that any new, healthy behavior that a patient learns might also be disrupted by a change of context. Thus, in addition to the effects summarized in Table 1 (which can make first-learned unhealthy behaviors return), merely changing the context in which a healthy behavior is performed may be another factor that weakens it. This is another reason to encourage generalization to new contexts, perhaps by training healthy behavior in the contexts where the patient or client will most need it. Another possibility is to reinforce the new behavior in multiple contexts (cf. Gunther, Denniston, & Miller, 2008; Wasserman & Bhatt, 1992). From a theoretical perspective, practice in multiple contexts might help, because contexts are made up of many “stimulus elements,” and generalization to a new context might depend on the number of elements it has in common with the training contexts (e.g., Estes, 1955). By training a behavior in multiple contexts, one increases the breadth of elements that can occasion the behavior, thereby increasing the likelihood that a new context will contain an already-treated element. Behavior change can be made more permanent if therapies take operant behavior’s inherent context dependence into account.
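The stimulus-element argument can be made concrete with a small sketch. The Python example below is a deliberately simplified illustration, not Estes's (1955) formal model: it treats each context as a set of elements, assumes every element present during training becomes able to occasion the behavior, and scores generalization as the proportion of a test context's elements that were present in training. The context names and their elements are invented for illustration.

```python
def generalization(trained_contexts, test_context):
    """Proportion of the test context's elements seen in any training context.

    Simplifying assumption: an element either can or cannot occasion the
    behavior, with no graded strengths or sampling probabilities.
    """
    if not test_context:
        raise ValueError("test_context must contain at least one element")
    conditioned = set().union(*trained_contexts)
    return len(conditioned & test_context) / len(test_context)


# Hypothetical contexts, each described by a handful of stimulus elements.
clinic = {"therapist", "office_smell", "daytime", "calm_mood"}
home = {"kitchen", "evening", "calm_mood", "family"}
gym = {"daytime", "music", "friends"}
bar = {"evening", "friends", "music", "alcohol_cues"}  # novel test context

single = generalization([clinic], bar)            # training in one context
multi = generalization([clinic, home, gym], bar)  # training in three contexts
print(single, multi)
```

With these invented sets, training in the clinic alone shares no elements with the test context, whereas training in three contexts covers three of its four elements. The numbers carry no empirical weight; they only show why broader training raises the chance that a new context contains an already-treated element.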

The context-specificity of operant behavior makes ABC and AAB renewal after extinction especially interesting (e.g., Bouton et al., 2011; Todd, 2013; Todd et al., 2014). In both the ABC and AAB situations, inhibited responding returns in a context that is different from both the conditioning context and the extinction context. The fact that responding returns tells us that, despite the fact that operant behavior is at least somewhat context-specific, its inhibition is even more so. Therefore, the unhealthy first-learned behaviors that we may want to get rid of may still generalize better to new contexts than their inhibition will. This, coupled with the fact that behavior change does not cause erasure, provides the familiar imbalance that can make sustained behavior change so difficult.
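The renewal designs listed in Table 1 differ only in which contexts are used for acquisition, behavior change, and testing. As a compact restatement of those definitions, the hypothetical Python helper below (the function name and the convention of returning `None` when testing occurs in the behavior-change context are assumptions made for this illustration) labels a design from its three contexts.

```python
def renewal_design(acquisition, behavior_change, test):
    """Label a renewal design from the contexts used in each phase.

    Returns "ABA", "ABC", or "AAB" following the definitions in Table 1,
    or None when the test occurs in the behavior-change context, in which
    case there is no context change at test and no renewal design applies.
    """
    if test == behavior_change:
        return None
    if acquisition == behavior_change:
        return "AAB"  # changed in the original context, tested elsewhere
    if test == acquisition:
        return "ABA"  # returned to the original context
    return "ABC"      # tested in a third, new context


# Context names are arbitrary labels chosen for illustration.
print(renewal_design("home", "clinic", "home"))    # ABA
print(renewal_design("home", "clinic", "office"))  # ABC
print(renewal_design("home", "home", "office"))    # AAB
```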

Conclusions

This brief review of basic research on behavior change can be summarized in three points. First, successful learning of a new behavior does not permanently replace an earlier one. Behavior change does not equal unlearning; just because an old behavior has achieved a zero value does not mean that it has been erased. Second, the new behavior may be easy to disrupt because it may be especially context-dependent. Although our recent results suggest that all operant behavior may be inherently context-specific, the second-learned behavior appears to be more so (e.g., Nelson, 2002). Third, the fact that context change can disrupt the new behavior (and cause a lapse of the original one) underscores the importance of finding ways to make new behaviors generalize. For contingency management/incentives interventions, if prolonged or “lifetime” continuation of abstinence reinforcement (e.g., Silverman et al., 2012) is not possible, our resurgence results suggest that giving the client practice inhibiting her unhealthy behavior in the absence of explicit reinforcement (e.g., by thinning the rate at which abstinence is reinforced) might help behavior change to persist. Another possibility is to make sure the new behaviors are practiced in the contexts where lapse and relapse are most likely, including the contexts in which the first behavior was learned. A final tool for encouraging generalization to new contexts would be to practice the new behavior in multiple contexts. The point is that therapies must be designed to anticipate the possible deleterious consequences of context change.

There may be other ways of encouraging generalization between the treatment and relapse contexts. One method involves presenting cues during lapse or relapse testing that can remind the organism of extinction. Brooks and Bouton (1993, 1994) found that a discrete cue that was presented intermittently during extinction could reduce spontaneous recovery or renewal if it was presented just before the test (see also, e.g., Brooks, 2000; Collins & Brandon, 2002; Mystkowski, Craske, Echiverri, & Labus, 2006). Reminder cues can also reduce renewal after the extinction of operant alcohol-seeking (Willcocks & McNally, 2014). In another method of encouraging generalization between the treatment and relapse contexts, Woods and Bouton (2007) modified an operant extinction procedure so that the response was occasionally reinforced after gradually lengthening intervals (see also Bouton, Woods, & Pineno, 2004). When the response was paired with the reinforcer again during a reacquisition phase, the rats given this treatment responded less than those given a traditional extinction treatment after each of the new response-reinforcer pairings. Woods and Bouton argued that the rat had learned that reinforced responses signaled more extinction instead of more imminent response-reinforcer pairings, and this allowed extinction to generalize more effectively to the reacquisition test. The method may be related to a smoking-reduction procedure introduced by Cinciripini et al. (1994, 1995) in which smokers slowly reduce their cigarette consumption by smoking only at predetermined intervals. From our point of view, occasional but distributed cigarettes (response-reinforcer pairings) may reduce the tendency of a single smoke to set the occasion for another one.

Can other new methods be developed that will help promote behavior change? Several possibilities have been proposed. First, since response elimination involves new learning, drug compounds that can facilitate the learning process may be able to facilitate response elimination. One example is d-cycloserine (DCS), a partial agonist of the NMDA receptor, which is involved in long-term potentiation, a cellular model of learning. Although administering DCS during extinction can facilitate the rate at which Pavlovian fear extinction is learned (e.g., Walker, Ressler, Lu, & Davis, 2002), it does not necessarily reduce extinction’s context-dependency (Bouton, Vurbic, & Woods, 2008; Woods & Bouton, 2006). That is, renewal can still occur when the extinguished fear signal is tested in the original fear-conditioning context. There is also evidence that DCS can facilitate operant extinction learning (e.g., Leslie, Norwood, Kennedy, Begley, & Shaw, 2012), although our own attempts to produce such an effect have been unsuccessful (Vurbic et al., 2011). Vurbic et al. suggested that the drug may be mostly effective in Pavlovian extinction, which can be a part of operant procedures that involve explicit extinction of conditioned reinforcers (see Thanos et al., 2011). A second possibility for promoting permanent behavior change is reconsolidation (e.g., Nader, Schafe, & LeDoux, 2000). The idea here is that when a memory is retrieved, it becomes temporarily vulnerable to disruption by administration of certain drugs before it is reconsolidated (made permanent again) (e.g., Nader et al., 2000; Kindt, Soeter, & Vervliet, 2009). The argument is that the disrupted memory is at least partly erased. There is evidence that the process is most effective with weak (undertrained) or old memories (Wang, de Oliveira Alvares, & Nader, 2009). There is also evidence that extinction conducted soon after a memory is retrieved can interfere with reconsolidation (Monfils, Cowansage, Klann, & LeDoux, 2009; Xue et al., 2012; but see Chan, Leung, Westbrook, & McNally, 2010; Kindt & Soeter, 2013; Soeter & Kindt, 2011). We are a long way from understanding the latter phenomenon, however; for example, it is not clear why the first trial of any extinction procedure does not retrieve the memory and produce the same effect. At this point in time, we do not know enough about the conditions that permit reconsolidation to take place.

Until we do, the safest approach to promoting behavior change may be to assume that lapse and relapse can potentially occur, especially with a change of context. The animal research reviewed here encourages a very broad definition of “context.” Although exteroceptive apparatus or room cues support both animal and human memory performance (e.g., see Smith & Vela, 2001), the results reviewed in the present article suggest that it is useful to think that time, recent reinforcers, and recent signal-reinforcer or response-reinforcer pairings can also serve as contexts (as in spontaneous recovery, resurgence, and rapid reacquisition, respectively). As noted earlier, previous reviews of the literature (e.g., Bouton, 1991, 2002) have suggested that drug states, hormonal states, mood states, and deprivation states can also play the role of context. At this point in time, research from the basic behavior laboratory mainly provides ideas and principles. More research with humans in applied settings will be necessary to provide more specific information about the kinds of contextual cues that may be most important to people who are undertaking behavior change.

Highlights.

When behavior changes, the new behavior does not erase the old

The new learning can be relatively specific to the context in which it is learned

These principles explain several forms of lapse and relapse that can interfere with sustained behavior change

They are consistent with a great deal of basic laboratory research on behavior change

Acknowledgments

Supported by Grant RO1 DA033123 from the National Institute on Drug Abuse. I thank Stephen Higgins, Scott Schepers, Eric Thrailkill, and Sydney Trask for their comments and discussion of the manuscript.

References

- Baldwin JD, Baldwin JI. Behavior principles in everyday life. 4th ed. Upper Saddle River, NJ: Prentice-Hall, Inc; 2001.

- Boutelle KN, Zucker NL, Peterson CB, Rydell SA, Cafri G, Harnack L. Two novel treatments to reduce overeating in overweight children: A randomized controlled trial. Journal of Consulting and Clinical Psychology. 2011;79:759–771. doi: 10.1037/a0025713.

- Bouton ME. Differential control by context in the inflation and reinstatement paradigms. Journal of Experimental Psychology: Animal Behavior Processes. 1984;10:56–74.

- Bouton ME. A contextual analysis of fear extinction. In: Martin PR, editor. Handbook of Behavior Therapy and Psychological Science: An Integrative Approach. Elmsford, NY: Pergamon Press; 1991. pp. 435–453.

- Bouton ME. Context, time, and memory retrieval in the interference paradigm of Pavlovian learning. Psychological Bulletin. 1993;114:80–99. doi: 10.1037/0033-2909.114.1.80.

- Bouton ME. A learning theory perspective on lapse, relapse, and the maintenance of behavior change. Health Psychology. 2000;19:57–63. doi: 10.1037/0278-6133.19.suppl1.57.

- Bouton ME. Context, ambiguity, and unlearning: Sources of relapse after behavioral extinction. Biological Psychiatry. 2002;52:976–986. doi: 10.1016/s0006-3223(02)01546-9.

- Bouton ME. Context and behavioral processes in extinction. Learning & Memory. 2004;11:485–494. doi: 10.1101/lm.78804.

- Bouton ME, Bolles RC. Contextual control of the extinction of conditioned fear. Learning and Motivation. 1979a;10:445–466.

- Bouton ME, Bolles RC. Role of conditioned contextual stimuli in reinstatement of extinguished fear. Journal of Experimental Psychology: Animal Behavior Processes. 1979b;5:368–378. doi: 10.1037//0097-7403.5.4.368.

- Bouton ME, Brooks DC. Time and context effects on performance in a Pavlovian discrimination reversal. Journal of Experimental Psychology: Animal Behavior Processes. 1993;19:165–179. doi: 10.1037//0097-7403.19.1.77.

- Bouton ME, Frohardt RJ, Sunsay C, Waddell J, Morris RW. Contextual control of inhibition with reinforcement: Adaptation and timing mechanisms. Journal of Experimental Psychology: Animal Behavior Processes. 2008;34:223–236. doi: 10.1037/0097-7403.34.2.223.

- Bouton ME, King DA. Contextual control of the extinction of conditioned fear: Tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes. 1983;9:248–265.

- Bouton ME, Peck CA. Context effects on conditioning, extinction, and reinstatement in an appetitive conditioning preparation. Animal Learning & Behavior. 1989;17:188–198.

- Bouton ME, Peck CA. Spontaneous recovery in cross-motivational transfer (counter-conditioning). Animal Learning & Behavior. 1992;20:313–321.

- Bouton ME, Schepers ST. Resurgence of instrumental behavior after an abstinence contingency. Learning & Behavior. 2014;42:131–143. doi: 10.3758/s13420-013-0130-x.

- Bouton ME, Schepers ST. Renewal of instrumental behavior after punishment. In preparation.

- Bouton ME, Todd TP, León SP. Contextual control of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition. 2014;40:92–105. doi: 10.1037/xan0000002.

- Bouton ME, Todd TP, Vurbic D, Winterbauer NE. Renewal after the extinction of free-operant behavior. Learning & Behavior. 2011;39:57–67. doi: 10.3758/s13420-011-0018-6.

- Bouton ME, Vurbic D, Woods AM. d-Cycloserine facilitates context-specific fear extinction learning. Neurobiology of Learning and Memory. 2008;90:504–510. doi: 10.1016/j.nlm.2008.07.003.

- Bouton ME, Winterbauer NE, Vurbic D. Context and extinction: Mechanisms of relapse in drug self-administration. In: Haselgrove M, Hogarth L, editors. Clinical Applications of Learning Theory. East Sussex, UK: Psychology Press; 2012. pp. 103–134.

- Bouton ME, Woods AM. Extinction: Behavioral mechanisms and their implications. In: Byrne JH, Sweatt D, Menzel R, Eichenbaum H, Roediger H, editors. Learning and Memory: A Comprehensive Reference. Vol. 1. 2008. pp. 151–171.

- Bouton ME, Woods AM, Pineño O. Occasional reinforced trials during extinction can slow the rate of rapid reacquisition. Learning and Motivation. 2004;35:371–390. doi: 10.1016/j.lmot.2006.07.003.

- Brooks DC. Recent and remote extinction cues reduce spontaneous recovery. Quarterly Journal of Experimental Psychology. 2000;53B:25–58. doi: 10.1080/027249900392986.

- Brooks DC, Bouton ME. A retrieval cue for extinction attenuates spontaneous recovery. Journal of Experimental Psychology: Animal Behavior Processes. 1993;19:77–89. doi: 10.1037//0097-7403.19.1.77.

- Brooks DC, Bouton ME. A retrieval cue for extinction attenuates response recovery (renewal) caused by a return to the conditioning context. Journal of Experimental Psychology: Animal Behavior Processes. 1994;20:366–379. doi: 10.1037//0097-7403.19.1.77.

- Brooks DC, Hale B, Nelson JB, Bouton ME. Reinstatement after counterconditioning. Animal Learning & Behavior. 1995;23:383–390.

- Cançado CRX, Lattal KA. Resurgence of temporal patterns of responding. Journal of the Experimental Analysis of Behavior. 2011;95:271–287. doi: 10.1901/jeab.2011.95-271.

- Chan WY, Leung HT, Westbrook RF, McNally GP. Effects of recent exposure to a conditioned stimulus on extinction of Pavlovian fear conditioning. Learning & Memory. 2010;17:512–521. doi: 10.1101/lm.1912510.

- Cinciripini PM, Lapitsky L, Seay S, Wallfisch A, Kitchens K, Vunakis HV. The effects of smoking schedules on cessation outcome: Can we improve on common methods of gradual and abrupt nicotine withdrawal? Journal of Consulting and Clinical Psychology. 1995;63:388–399. doi: 10.1037//0022-006x.63.3.388.

- Cinciripini PM, Lapitsky LG, Wallfisch A, Mace R, Nezami E, Vunakis HV. An evaluation of a multicomponent treatment program involving scheduled smoking and relapse prevention procedures: Initial findings. Addictive Behaviors. 1994;19:13–22. doi: 10.1016/0306-4603(94)90047-7.

- Collins BN, Brandon TH. Effects of extinction context and retrieval cues on alcohol cue reactivity among nonalcoholic drinkers. Journal of Consulting and Clinical Psychology. 2002;70:390–397.

- Conklin CA, Tiffany ST. Applying extinction research and theory to cue-exposure addiction treatments. Addiction. 2002;97:155–167. doi: 10.1046/j.1360-0443.2002.00014.x.

- Craske MG, Kircanski K, Zelikowsky M, Mystkowski J, Chowdhury N, Baker A. Optimizing inhibitory learning during exposure therapy. Behaviour Research and Therapy. 2008;46:5–27. doi: 10.1016/j.brat.2007.10.003.

- Estes WK. Statistical theory of distributional phenomena in learning. Psychological Review. 1955;62:369–377. doi: 10.1037/h0046888.

- Fiori LM, Barnet RC, Miller RR. Renewal of Pavlovian conditioned inhibition. Animal Learning & Behavior. 1994;22:47–52.

- Fisher EB, Green L, Calvert AL, Glasgow RE. Incentives in the modification and cessation of cigarette smoking. In: Schachtman TR, Reilly S, editors. Associative Learning and Conditioning Theory: Human and Non-Human Applications. Oxford: Oxford University Press; 2011. pp. 321–342.

- Gunther LM, Denniston JC, Miller RR. Conducting exposure treatment in multiple contexts can prevent relapse. Behaviour Research and Therapy. 1998;36:75–91. doi: 10.1016/s0005-7967(97)10019-5.

- Higgins ST, Silverman K, Heil SH, editors. Contingency Management in Substance Abuse Treatment. New York: Guilford Press; 2008.

- Higgins ST, Silverman K, Sigmon SC, Naito NA. Incentives and health: An introduction. Preventive Medicine. 2012;55(Suppl):S2–S6. doi: 10.1016/j.ypmed.2012.04.008.

- Hughes JR, Keely J, Naud S. Shape of relapse curve and long-term abstinence among untreated smokers. Addiction. 2004;99:29–38. doi: 10.1111/j.1360-0443.2004.00540.x.

- Hunt WA, Barnett LW, Branch LG. Relapse rates in addiction programs. Journal of Clinical Psychology. 1971;27:455–456. doi: 10.1002/1097-4679(197110)27:4<455::aid-jclp2270270412>3.0.co;2-r.

- John LK, Loewenstein G, Troxel AB, Norton L, Fassbender JE, Volpp K. Financial incentives for extended weight loss: A randomized control trial. Journal of General Internal Medicine. 2011;26:621–626. doi: 10.1007/s11606-010-1628-y.

- Kearns DN, Weiss SJ. Contextual renewal of cocaine seeking in rats and its attenuation by the conditioned effects of an alternative reinforcer. Drug and Alcohol Dependence. 2007;90:193–202. doi: 10.1016/j.drugalcdep.2007.03.006.

- Kindt M, Soeter M, Vervliet B. Beyond extinction: Erasing human fear responses and preventing the return of fear. Nature Neuroscience. 2009;12:256–258. doi: 10.1038/nn.2271.

- Kirshenbaum AP, Olsen DM, Bickel WK. A quantitative review of the ubiquitous relapse curve. Journal of Substance Abuse Treatment. 2009;36:8–17. doi: 10.1016/j.jsat.2008.04.001.

- Laborda MA, McConnell BL, Miller RR. Behavioral techniques to reduce relapse after exposure therapy: Applications of studies of experimental extinction. In: Schachtman TR, Reilly S, editors. Associative Learning and Conditioning Theory: Human and Non-Human Applications. New York: Oxford; 2011. pp. 79–103.

- Leitenberg H, Rawson RA, Bath K. Reinforcement of competing behavior during extinction. Science. 1970;169:301–303. doi: 10.1126/science.169.3942.301.

- Leitenberg H, Rawson RA, Mulick JA. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Leslie JC, Norwood K, Kennedy PJ, Begley M, Shaw D. Facilitation of extinction of operant behaviour in C57Bl/6 mice by chlordiazepoxide and D-cycloserine. Psychopharmacology. 2012;223:223–235. doi: 10.1007/s00213-012-2710-4.

- Lieving GA, Lattal KA. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Marchant NJ, Khuc TN, Pickens CL, Bonci A, Shaham Y. Context-induced relapse to alcohol seeking after punishment in a rat model. Biological Psychiatry. 2013;73:256–262. doi: 10.1016/j.biopsych.2012.07.007.

- Monfils MH, Cowansage KK, Klann E, LeDoux JE. Extinction-reconsolidation boundaries: Key to persistent attenuation of fear memories. Science. 2009;324:951–955. doi: 10.1126/science.1167975.

- Myers KM, Ressler KJ, Davis M. Different mechanisms of fear extinction dependent on length of time since fear acquisition. Learning & Memory. 2006;13:216–223. doi: 10.1101/lm.119806.

- Mystkowski JL, Craske MG, Echiverri AM, Labus JS. Mental reinstatement of context and return of fear in spider-fearful participants. Behavior Therapy. 2006;37:49–60. doi: 10.1016/j.beth.2005.04.001.

- Nader K, Schafe GE, LeDoux JE. Fear memories require protein synthesis in the amygdala for reconsolidation after retrieval. Nature. 2000;406:722–726. doi: 10.1038/35021052.

- Nakajima S, Tanaka S, Urushihara K, Imada H. Renewal of extinguished lever-press responses upon return to the training context. Learning and Motivation. 2000;31:416–431.

- Nakajima S, Urushihara K, Masaki T. Renewal of operant performance formerly eliminated by omission or noncontingency training upon return to the acquisition context. Learning and Motivation. 2002;33:510–525.

- Napier RM, Macrae M, Kehoe EJ. Rapid reacquisition in conditioning of the rabbit’s nictitating membrane response. Journal of Experimental Psychology: Animal Behavior Processes. 1992;18:182–192. doi: 10.1037//0097-7403.18.2.182.

- Nelson JB. Context specificity of excitation and inhibition in ambiguous stimuli. Learning and Motivation. 2002;33:284–310.

- Panlilio LV, Thorndike EB, Schindler CW. Reinstatement of punishment-suppressed opioid self-administration in rats: An alternative model of relapse to drug abuse. Psychopharmacology. 2003;168:229–235. doi: 10.1007/s00213-002-1193-0.

- Panlilio LV, Thorndike EB, Schindler CW. Lorazepam reinstates punishment-suppressed remifentanil self-administration in rats. Psychopharmacology. 2005;179:374–382. doi: 10.1007/s00213-004-2040-2.

- Pavlov IP. Conditioned reflexes. Anrep GV, translator and editor. London: Oxford University Press; 1927.

- Peck CA. Context-specificity of Pavlovian conditioned inhibition. Unpublished doctoral dissertation. University of Vermont; 1995.

- Peck CA, Bouton ME. Context and performance in aversive-to-appetitive and appetitive-to-aversive transfer. Learning and Motivation. 1990;21:1–31.

- Rescorla RA, Heth CD. Reinstatement of fear to an extinguished conditioned stimulus. Journal of Experimental Psychology: Animal Behavior Processes. 1975;1:88–96.

- Ricker ST, Bouton ME. Reacquisition following extinction in appetitive conditioning. Animal Learning & Behavior. 1996;24:423–436.

- Schroeder SA. We can do better: Improving the health of the American people. The New England Journal of Medicine. 2007;357:1221–1228. doi: 10.1056/NEJMsa073350.

- Shahan TA, Sweeney MM. A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior. 2011;95:91–108. doi: 10.1901/jeab.2011.95-91.

- Silverman K, DeFulio A, Sigurdsson SO. Maintenance of reinforcement to address the chronic nature of drug addiction. Preventive Medicine. 2012;55(Suppl):S46–S53. doi: 10.1016/j.ypmed.2012.03.013.

- Smith SM, Vela E. Environmental context-dependent memory: A review. Psychonomic Bulletin & Review. 2001;8:203–220. doi: 10.3758/bf03196157.

- Soeter M, Kindt M. Disrupting reconsolidation: Pharmacological and behavioral manipulations. Learning & Memory. 2011;18:357–366. doi: 10.1101/lm.2148511.

- Sweeney MM, Shahan TA. Effects of high, low, and thinning rates of alternative reinforcement on response elimination and resurgence. Journal of the Experimental Analysis of Behavior. 2013;100:102–116. doi: 10.1002/jeab.26.

- Thanos PK, Subrize M, Lui W, Puca Z, Ananth M, Michaelides M, Wang GJ, Volkow ND. D-cycloserine facilitates extinction of cocaine self-administration in C57 mice. Synapse. 2011;65:1099–1105. doi: 10.1002/syn.20944.

- Thrailkill EA, Bouton ME. Contextual control of instrumental actions and habits. In preparation. doi: 10.1037/xan0000045.

- Todd TP. Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2013;39:193–207. doi: 10.1037/a0032236.

- Todd TP, Vurbic D, Bouton ME. Mechanisms of renewal after the extinction of discriminated operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition. 2014; in press. doi: 10.1037/xan0000021.

- Todd TP, Winterbauer NE, Bouton ME. Effects of amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior. 2012;40:145–157. doi: 10.3758/s13420-011-0051-5.

- Tronson NC, Corcoran KA, Jovasevic V, Radulovic J. Fear conditioning and extinction: Emotional states encoded by distinct signaling pathways. Trends in Neurosciences. 2008;35:145–155. doi: 10.1016/j.tins.2011.10.003.

- Vurbic D, Gold B, Bouton ME. Effects of d-cycloserine on the extinction of appetitive operant learning. Behavioral Neuroscience. 2011;125:551–559. doi: 10.1037/a0024403.

- Walker DL, Ressler KJ, Lu KT, Davis M. Facilitation of conditioned fear extinction by systemic administration or intra-amygdala infusions of D-cycloserine as assessed with fear-potentiated startle in rats. Journal of Neuroscience. 2002;22:2343–2351. doi: 10.1523/JNEUROSCI.22-06-02343.2002.

- Wang SH, de Oliveira Alvares L, Nader K. Cellular and systems mechanisms of memory strength as a constraint on auditory fear reconsolidation. Nature Neuroscience. 2009;12:905–913. doi: 10.1038/nn.2350.

- Wasserman EA, Bhatt RS. Conceptualization of natural and artificial stimuli by pigeons. In: Honig WK, Fetterman JG, editors. Cognitive aspects of stimulus control. Hillsdale, NJ: Erlbaum; 1992. pp. 203–223.

- Westbrook RF, Iordanova M, Harris JA, McNally G, Richardson R. Reinstatement of fear to an extinguished conditioned stimulus: Two roles for context. Journal of Experimental Psychology: Animal Behavior Processes. 2002;28:97–110.

- Willcocks AL, McNally GP. An extinction retrieval cue attenuates renewal but not reacquisition of alcohol seeking. Behavioral Neuroscience. 2014;128:83–91. doi: 10.1037/a0035595.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence of an extinguished instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2010;36:343–353. doi: 10.1037/a0017365.

- Winterbauer NE, Bouton ME. Effects of thinning the rate at which the alternative behavior is reinforced on resurgence of an extinguished instrumental response. Journal of Experimental Psychology: Animal Behavior Processes. 2012;38:279–291. doi: 10.1037/a0028853.

- Winterbauer NE, Lucke S, Bouton ME. Some factors modulating the strength of resurgence after extinction of an instrumental behavior. Learning and Motivation. 2013;44:60–71. doi: 10.1016/j.lmot.2012.03.003.

- Woods AM, Bouton ME. D-cycloserine facilitates extinction but does not eliminate renewal of the conditioned emotional response. Behavioral Neuroscience. 2006;120:1159–1162. doi: 10.1037/0735-7044.120.5.1159.

- Woods AM, Bouton ME. Occasional reinforced responses during extinction can slow the rate of reacquisition of an operant response. Learning and Motivation. 2007;38:56–74. doi: 10.1016/j.lmot.2006.07.003.

- Xue Y, Luo Y, Wu P, Shi H, Xue L, Chen C, Zhu W, Ding Z, Bao Y, Shi J, Epstein DH, Shaham Y, Lu L. A memory retrieval-extinction procedure to prevent drug craving and relapse. Science. 2012;336:241–245. doi: 10.1126/science.1215070.