Abstract

We describe a new smartphone app called BLaDE (Barcode Localization and Decoding Engine), designed to enable a blind or visually impaired user to find and read product barcodes. Developed at The Smith-Kettlewell Eye Research Institute, the BLaDE Android app has been released as open source software, which can be used free of charge or modified for commercial or non-commercial use. Unlike popular commercial smartphone apps, BLaDE provides real-time audio feedback to help visually impaired users locate a barcode, which is a prerequisite to being able to read it. We describe experiments performed with five blind/visually impaired volunteer participants demonstrating that BLaDE is usable and that the audio feedback is key to its usability.

Keywords: Visual impairment, blindness, assistive technology, product identification

Introduction

The ability to identify products such as groceries and other packaged goods is very useful for blind and visually impaired persons, for whom such identification information may be inaccessible. There is thus considerable interest among these persons in barcode readers, which read the product barcodes that uniquely identify almost all commercial products.

The smartphone is a potentially convenient tool for reading product barcodes, since many people carry smartphones and would prefer not to carry a dedicated barcode reader (even if dedicated readers may be more effective than smartphone readers). A variety of smartphone apps are available for reading barcodes, such as RedLaser and the ZXing project (for iPhone and Android, respectively), and a large amount of research has been published on this topic (Wang; Wachenfeld). However, almost all of these systems are intended for users with normal vision and require them to center the barcode in the image.

Aside from past work by the authors (“An Algorithm Enabling Blind Users…”; “A Mobile Phone Application…”), the only published work we are aware of that is closely related to BLaDE is that of Kulyukin and collaborators, who have also developed a smartphone video-based barcode reader for visually impaired users (Kutiyanawala; Kulyukin). However, this reader requires the user to align the camera frame to the barcode so that the barcode lines appear horizontal or vertical in the camera frame. By contrast, BLaDE lifts this restriction (see next section), thereby placing fewer constraints on the user and simplifying the challenge of finding and reading barcodes.

At the time this manuscript was originally submitted, the one commercially available smartphone barcode reader expressly designed for visually impaired users, Digit-Eyes (http://www.digit-eyes.com/), did not provide explicit feedback to alert the user to the presence of a barcode before it could be read. After submission, such feedback was added to a later version of Digit-Eyes in response to requests from Digit-Eyes customers (“Digit-Eyes Announces…”). The Codecheck iPhone app (http://www.codecheck.info/) for Swiss users, which also provides such feedback, is based on an early version of BLaDE (“Codecheck”).

BLaDE Description

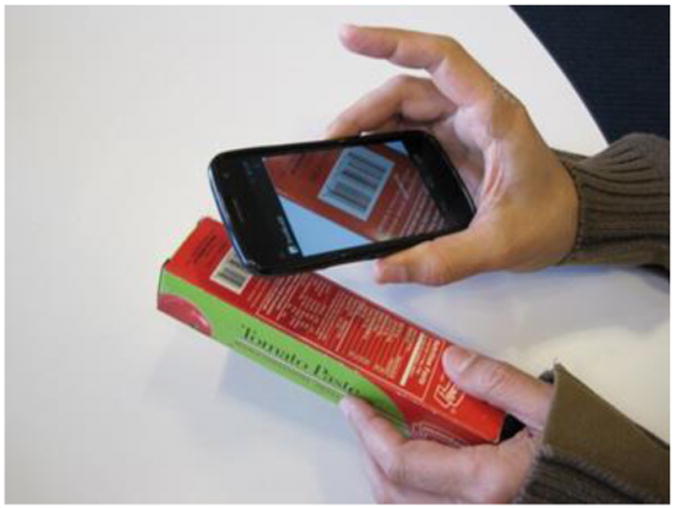

The BLaDE system (see Fig. 1) provides real-time feedback to first help the user find the barcode on a product using a smartphone camera or webcam and then help orient the camera to read the barcode. Earlier versions of the computer vision algorithms and user interface were described in (“An Algorithm Enabling Blind Users…”; “A Mobile Phone Application…”), and details of the latest version are described in our technical report (“BLaDE”). BLaDE has been implemented both as an Android smartphone app and for use on a Linux desktop computer with a webcam. It has been released as open source code, available at http://sourceforge.net/p/sk-blade, so that anyone can use the software or modify it free of charge.

Figure 1.

A user running the BLaDE app on a smartphone pointed toward a product barcode.

BLaDE takes several video frames per second and analyzes each frame to detect the presence of a barcode in it. The detection algorithm functions even when only part of the barcode is visible in the image, or when the barcode is too far away from the camera to be read. Moreover, the barcode can appear at any orientation in the image and need not appear with its bars aligned horizontally or vertically. Whenever a barcode has been detected in an image, an audio tone is issued to alert the user.
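The detection algorithm itself is not described here (see the technical report). To illustrate how a detector can respond to bars at any orientation, the sketch below computes a generic, swapped-in measure, the coherence of the image-gradient structure tensor, over a grayscale patch. This is not BLaDE's algorithm; the function names `orientation_coherence`, `stripes` and `bowl` are hypothetical, and patches are plain lists of rows so that only the standard library is needed.

```python
import math


def orientation_coherence(img):
    """Structure-tensor coherence and dominant gradient angle of a patch.

    img: grayscale patch as a list of equal-length rows of floats.
    Returns (coherence, angle_deg). coherence is in [0, 1]: close to 1 when
    all gradients share one direction (parallel bars at ANY angle), close
    to 0 when gradient directions are spread out. angle_deg is the dominant
    gradient direction in image coordinates, in (-90, 90].
    """
    h, w = len(img), len(img[0])
    jxx = jyy = jxy = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = img[y][x + 1] - img[y][x - 1]  # central differences
            gy = img[y + 1][x] - img[y - 1][x]
            jxx += gx * gx
            jyy += gy * gy
            jxy += gx * gy
    trace = jxx + jyy
    if trace == 0.0:
        return 0.0, 0.0  # flat patch: no edges, nothing to detect
    # (lambda1 - lambda2) / (lambda1 + lambda2) of the 2x2 tensor
    coherence = math.hypot(jxx - jyy, 2.0 * jxy) / trace
    angle_deg = 0.5 * math.degrees(math.atan2(2.0 * jxy, jxx - jyy))
    return coherence, angle_deg


def stripes(size, angle_deg, period):
    """Synthetic sinusoidal bars whose intensity varies along angle_deg."""
    t = math.radians(angle_deg)
    return [[math.sin(2 * math.pi * (x * math.cos(t) + y * math.sin(t)) / period)
             for x in range(size)] for y in range(size)]


def bowl(size):
    """Radially symmetric patch: gradients point in every direction."""
    c = (size - 1) / 2
    return [[(x - c) ** 2 + (y - c) ** 2 for x in range(size)]
            for y in range(size)]


for angle in (0, 30, 75):
    coh, est = orientation_coherence(stripes(32, angle, 8))
    print(f"bars at {angle:2d} deg: coherence {coh:.3f}, estimated angle {est:.1f}")
print(f"isotropic bowl: coherence {orientation_coherence(bowl(16))[0]:.3f}")
```

The bars score a coherence of essentially 1 at every angle, while the isotropic patch scores 0. The estimated angle differs slightly from the true one (about 31° for 30° bars with this period) because central differences only approximate derivatives. A practical detector would also require enough gradient energy and would evaluate many small patches per frame; such thresholds and patch sizes would need tuning and are not taken from BLaDE.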

The audio tone is modulated to help the user center the barcode in the image and bring the camera close enough to the barcode to capture detailed images of it. Specifically, the tone volume reflects the size of the barcode in the image, with higher volume indicating a more appropriate size (not too small or too big) and hence a more appropriate viewing distance; the degree of tone continuity (from stuttered to continuous) indicates how well centered the barcode is in the image, with a more continuous tone corresponding to better centering. A visually impaired user first moves the camera slowly to find the barcode; further feedback then guides the user in moving the camera until the barcode is resolved well enough to be decoded. If BLaDE reads a barcode and is sufficiently confident of its reading, the system reads aloud the barcode number (or information associated with the barcode, such as the name of the product).
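The mapping from barcode geometry to tone can be sketched as a small function. The text specifies only the qualitative behavior (louder when the apparent size is appropriate, more continuous when better centered). The target width, tolerance, floor values and linear fall-off below are illustrative assumptions, and `tone_feedback` is a hypothetical name, not part of BLaDE's code.

```python
def tone_feedback(width_frac, offset_frac, target_width=0.5, tolerance=0.4):
    """Map a detected barcode to (volume, duty_cycle), each in [0.2, 1.0].

    Hypothetical mapping for illustration; parameters are assumptions.
    width_frac:  apparent barcode width as a fraction of the image width.
    offset_frac: distance of the barcode center from the image center,
                 normalized so 0 = centered and 1 = at the image edge.
    volume:      highest when width_frac == target_width; lower when the
                 barcode looks too small (too far) or too big (too close).
    duty_cycle:  fraction of each beep period the tone is on; 1.0 is a
                 continuous tone (well centered), lower values stutter.
    """
    closeness = max(0.0, 1.0 - abs(width_frac - target_width) / tolerance)
    centering = 1.0 - min(1.0, max(0.0, offset_frac))
    # Floors of 0.2 keep the tone audible whenever a barcode is detected.
    volume = 0.2 + 0.8 * closeness
    duty_cycle = 0.2 + 0.8 * centering
    return volume, duty_cycle


print(tone_feedback(0.5, 0.0))  # right size, centered: loud, continuous
print(tone_feedback(0.1, 0.6))  # too far and off-center: quiet, stuttered
```

Keeping both outputs above zero reflects the fact that a tone is issued whenever a barcode is detected. Because the size cue (volume) and the centering cue (continuity) vary independently, the user can adjust distance and lateral position separately.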

Experiments with Visually Impaired Users

The development of BLaDE has been driven by ongoing feedback from blind and visually impaired users. Preliminary experiments with two blind volunteer participants led to the current version of BLaDE as well as a training procedure for new users. We conducted a controlled experiment with five additional blind/visually impaired participants (all of whom have either no light perception or insufficient vision to find a barcode on a package) using the current BLaDE version.

Before the actual experiment, a training session acquainted each participant with the purpose and operation of BLaDE. It focused on three topics:

(1) Holding the smartphone camera properly. This entails maintaining an appropriate distance from and orientation to a product of interest (specifically, with the smartphone screen held roughly parallel to the surface of the product, at a distance of approximately 4-6 inches) and taking care not to cover the camera lens with the fingers. An important concept that was explained to participants who were not already familiar with it was the camera's field of view, which encompasses a limited range of viewing angles; this explains why an entire barcode may fit within the field of view at one viewing distance but not at a closer distance.

(2) How to use BLaDE to search for a barcode, emphasizing likely and unlikely locations of barcodes on packages and the need for slow, steady movement. Several barcode placement rules were explained: for example, on a flat, rectangular surface the barcode is rarely in the center and is usually closer to an edge, and on a can the barcode is usually on the sleeve, rarely on the top or bottom lid. Participants were told that searching for a product barcode usually requires exploring the entire surface of the package using two kinds of movement: translation (hovering above the product while maintaining a roughly constant height above the surface) and approaching or receding from the surface.

(3) How to adjust camera movement based on BLaDE feedback. Participants were advised to first translate the camera to seek a tone that is as continuous as possible (indicating that the barcode is centered), and then to vary the distance to maximize the volume (indicating that the appropriate distance has been reached); if the tone became stuttered, they were to translate again to seek a continuous tone and continue as before.
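The field-of-view concept in topic (1) can be made concrete with a pinhole-camera approximation. Assuming a horizontal field of view $\theta$ and a barcode of width $w$, the width of surface visible at distance $d$, and the closest distance at which the whole barcode still fits in the frame, are:

$$
W(d) = 2d\tan\frac{\theta}{2}, \qquad d_{\min} = \frac{w}{2\tan(\theta/2)}.
$$

With illustrative values that are assumptions rather than measurements ($\theta = 60^\circ$, $w = 1.5$ in), $W(4\text{ in}) = 8\tan 30^\circ \approx 4.62$ in, so the barcode fits comfortably at the recommended 4-6 inches, whereas $d_{\min} \approx 1.30$ in; at 1 inch only about 1.15 in of the surface is visible, too little to contain the whole barcode.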

Each participant continued with a practice session, in which he/she searched for and read barcodes on products that were not used in the subsequent experiment. The experimenter turned off the audio feedback in some practice trials in preparation for the experiment, which compared trials with feedback on and feedback off.

In the experiment, each participant had to find and read barcodes on 18 products, consisting of 4 barcodes, each printed on a sheet of paper, and 14 grocery products including boxes, cans, bottles and tubes. In advance of the experiment, 10 of the products were chosen at random to be read with feedback and 8 without; the sequence of products was also fixed at random, as was the starting orientation of each product (e.g., for a box, which face was up and at what orientation).
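The randomization described above (10 of 18 products with feedback, a random presentation order and a random starting orientation, all fixed in advance) could be generated as follows. This is a hypothetical sketch: `make_schedule`, the face labels, the 90° rotation steps and the seed are assumptions, and the paper does not say how its random choices were drawn.

```python
import random

# Hypothetical orientation labels; for a can, only some of these apply.
FACES = ["top", "bottom", "front", "back", "left", "right"]


def make_schedule(products, n_feedback, seed):
    """Fix, in advance, the feedback condition, order and starting pose."""
    rng = random.Random(seed)  # seeded so the same schedule can be reused
    with_feedback = set(rng.sample(products, n_feedback))
    order = products[:]
    rng.shuffle(order)
    return [
        {
            "product": p,
            "feedback": p in with_feedback,
            "start_face": rng.choice(FACES),
            "rotation_deg": rng.choice([0, 90, 180, 270]),
        }
        for p in order
    ]


products = [f"product_{i}" for i in range(18)]  # placeholder names
schedule = make_schedule(products, n_feedback=10, seed=2013)
for trial in schedule[:3]:
    print(trial)
```

Generating the schedule once from a fixed seed and reusing it for every participant matches the description of choices made in advance, and it makes the 10/8 split per participant explicit.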

Each participant was given up to 5 minutes to search for and read the barcode on each of the 18 products, for a total of 90 trials (5 participants × 18 products); if the barcode was not located and read within that time, the trial for that product was terminated. Of the 50 trials conducted with feedback on, the barcodes were located and read successfully in 41 (82% success rate, with a median time of 41 s among successes); of the 40 trials conducted with feedback off, the barcodes were located and read successfully in 26 (65% success rate, with a median time of 68 s among successes). (The data are summarized in Table 1.) In all trials, whenever a barcode was read aloud to the user, it had been correctly decoded.
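The trial counts and success rates quoted above follow directly from the design; the short check below uses only numbers stated in the text.

```python
# Trial counts implied by the design: 5 participants, 18 products each,
# 10 products assigned to feedback-on and 8 to feedback-off.
participants = 5
on_products, off_products = 10, 8

trials_on = participants * on_products     # 50
trials_off = participants * off_products   # 40
total_trials = trials_on + trials_off      # 90 = 5 x 18

# Success counts reported in the text (see Table 1).
successes_on, successes_off = 41, 26
rate_on = successes_on / trials_on         # 0.82
rate_off = successes_off / trials_off      # 0.65
print(f"feedback on: {successes_on}/{trials_on} = {rate_on:.0%}")
print(f"feedback off: {successes_off}/{trials_off} = {rate_off:.0%}")
```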

Table 1.

2×2 contingency table comparing the number of successes and failures (among 90 total trials, aggregated over all volunteer participants) for two different conditions, feedback on and feedback off.

| Condition | Number of successes | Number of failures |
|---|---|---|
| Feedback on | 41 | 9 |
| Feedback off | 26 | 14 |

A 2×2 contingency table analysis comparing success rates with and without feedback yields p = 0.056. Although this falls just short of statistical significance at the conventional 0.05 level (likely because of the small number of participants), it suggests a trend that feedback improves users' chances of finding and reading barcodes. This is consistent with comments from the users themselves, who reported that the feedback was helpful. In our opinion, BLaDE's ability to help users locate barcodes is a crucial function of the system that greatly mitigates the challenge of searching for and reading barcodes.
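The text does not name the test behind p = 0.056. As a check, the sketch below implements Fisher's exact test for a 2×2 table using only the standard library (`fisher_exact` is our own name for it). Applied to Table 1, the one-sided version (testing whether feedback raises the success rate) gives approximately 0.056 and the two-sided version approximately 0.09, which suggests, though the text does not confirm it, that the reported value is one-sided.

```python
from math import comb


def fisher_exact(a, b, c, d):
    """Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are conditions, columns are (successes, failures). Under the null
    hypothesis of equal success rates, with all margins fixed, the number of
    successes X in row 1 is hypergeometric.
    Returns (p_one_sided, p_two_sided):
      one-sided: P(X >= a), i.e. row 1 does at least as well as observed;
      two-sided: sum of P over all tables no more probable than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    total = comb(n, row1)

    def prob(k):
        return comb(col1, k) * comb(n - col1, row1 - k) / total

    p_obs = prob(a)
    one_sided = sum(prob(k) for k in range(a, hi + 1))
    # Small relative tolerance so ties are not lost to floating-point error.
    two_sided = sum(p for p in map(prob, range(lo, hi + 1))
                    if p <= p_obs * (1 + 1e-9))
    return one_sided, min(1.0, two_sided)


p_one, p_two = fisher_exact(41, 9, 26, 14)  # Table 1: feedback on / off
print(f"one-sided p = {p_one:.3f}, two-sided p = {p_two:.3f}")
```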

Discussion

Our experience with BLaDE underscores the difficulty that blind users face in using a barcode reader, which requires them to search for barcodes on products in order to read them. This search can take longer on a smartphone-based system due to the much slower rate of processing, smaller detection range and narrower field of view compared with a dedicated system, implying a tradeoff between ease of detection and the burden of having to own and carry a separate device. Empirically, we found that the search process tends to be shorter for smaller products (which present less surface area to be searched) and for rectangular packages (curved surfaces are awkward to search, forcing the user to rotate the package relative to the camera). Audio feedback is important for speeding up the search process, and the key to improving BLaDE or other smartphone-based barcode readers in the future lies in improving this feedback.
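The dependence of search effort on package size, detection range and field of view can be made roughly quantitative by counting how many camera positions are needed to sweep one flat face, using a pinhole-camera approximation. The field-of-view angles, the 20% overlap between neighboring positions and the function names are illustrative assumptions, not measurements from BLaDE.

```python
import math


def positions_along(length, visible, overlap):
    """Camera positions needed to sweep `length` with a window of size `visible`."""
    if visible >= length:
        return 1
    step = visible * (1 - overlap)  # advance between successive positions
    return 1 + math.ceil((length - visible) / step)


def frames_to_cover(face_w, face_h, distance, hfov_deg, vfov_deg, overlap=0.2):
    """Rough count of camera positions needed to search one flat face.

    Pinhole approximation: at distance d the camera sees a patch
    2*d*tan(fov/2) across in each direction. `distance` is taken to be the
    maximum distance at which a barcode can still be detected.
    All lengths share one unit (inches here).
    """
    vis_w = 2 * distance * math.tan(math.radians(hfov_deg) / 2)
    vis_h = 2 * distance * math.tan(math.radians(vfov_deg) / 2)
    return (positions_along(face_w, vis_w, overlap)
            * positions_along(face_h, vis_h, overlap))


# Assumed 60 x 45 degree field of view; faces in inches.
print(frames_to_cover(6, 8, distance=4, hfov_deg=60, vfov_deg=45))
print(frames_to_cover(3, 4, distance=4, hfov_deg=60, vfov_deg=45))
print(frames_to_cover(6, 8, distance=12, hfov_deg=60, vfov_deg=45))
```

Under these assumptions a 6 × 8 in face needs 6 positions at a 4 in detection distance, a 3 × 4 in face needs 2, and tripling the detection distance to 12 in lets the camera see the whole 6 × 8 in face at once, consistent with the idea that a long enough detection range would allow an entire side to be searched in a single frame.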

Two main improvements to the audio feedback can be considered. First, the maximum distance at which a barcode can be detected could be extended, which would allow the user to search a larger region of a package at one time. (If the maximum detection distance were long enough, an entire side of a rectangular package could be searched in a single video frame.) This will require using higher-resolution video frames when searching for barcodes without sacrificing processing speed (currently several frames per second), which may be achievable by improving the search algorithm to take advantage of the continually increasing processing power of modern smartphones. Second, it may be worthwhile to experiment with different types of audio feedback. For example, while the current BLaDE feedback indicates how well centered a barcode is in the image, explicit audio feedback could be added to indicate the direction (left, right, up or down) in which the user needs to translate the smartphone to center the barcode. While some users may find this additional information useful, it would complicate the user interface (and may introduce additional lag if the information is conveyed verbally), which could be unappealing to other users.
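Directional feedback of the kind proposed above is not part of the current BLaDE; the sketch below only illustrates how a detected barcode position might be turned into a direction cue. The function name `direction_cue`, the dead-zone size and the sign convention are assumptions: it presumes the image axes match how the user holds the phone, and that with a rear-facing camera, moving the phone toward the side on which the barcode appears brings the barcode toward the image center.

```python
def direction_cue(cx, cy, width, height, dead_zone=0.15):
    """Suggest which way to move the phone to center a detected barcode.

    Hypothetical helper. (cx, cy) is the barcode center in pixels, origin at
    the top-left, y pointing down. dead_zone is a fraction of the half-width
    and half-height within which the barcode counts as centered.
    Returns e.g. ["left", "up"], or ["centered"].
    """
    dx = (cx - width / 2) / (width / 2)    # -1 at left edge, +1 at right edge
    dy = (cy - height / 2) / (height / 2)  # -1 at top edge, +1 at bottom edge
    cues = []
    if dx > dead_zone:
        cues.append("right")
    elif dx < -dead_zone:
        cues.append("left")
    if dy > dead_zone:
        cues.append("down")
    elif dy < -dead_zone:
        cues.append("up")
    return cues or ["centered"]


print(direction_cue(600, 240, 640, 480))  # barcode near the right edge
print(direction_cue(20, 20, 640, 480))    # barcode near the top-left corner
```

The dead zone suppresses cues when the barcode is already close to center, which limits how often speech or extra tones would interrupt the user; as noted above, whether such cues help or distract is likely to vary between users.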

Conclusion

Experiments with blind/visually impaired volunteer participants demonstrate the feasibility of BLaDE and suggest that its usability is considerably enhanced by real-time feedback that helps the user find barcodes before they are read.

We are exploring commercialization options for BLaDE, including collaboration with an organization interested in releasing a consumer-oriented smartphone app that includes detailed information associated with a barcode (e.g., preparation instructions for packaged items). Since BLaDE has been released as an open source project, developers can add functionality to suit their needs. In this way, we aim to facilitate the development of high-quality barcode scanners that can be used by blind and visually impaired persons.

Acknowledgments

The authors acknowledge support from the National Institutes of Health (grant number 1 R01 EY018890-01) and from the Department of Education, NIDRR (grant number H133E110004).

Contributor Information

Ender Tekin, Email: ender@ski.org.

David Vásquez, Email: david@ski.org.

James M. Coughlan, Email: coughlan@ski.org.

Works cited

- Kulyukin, Vladimir, Aliasgar Kutiyanawala, and Tanwir Zaman. Eyes-Free Barcode Detection on Smartphones with Niblack's Binarization and Support Vector Machines. In: Arabnia HR, editor. 16th International Conference on Image Processing, Computer Vision, and Pattern Recognition; July 16-19, 2012; Las Vegas, Nevada. pp. 284–290.

- Kutiyanawala, Aliasgar, Vladimir Kulyukin, and John Nicholson. Toward Real Time Eyes-Free Barcode Scanning on Smartphones in Video Mode. In: Bailey N, editor. 2011 Rehabilitation Engineering and Assistive Technology Society of North America Conference (RESNA 2011); June 5-8, 2011; Toronto, Canada.

- Tekin, Ender, and James Coughlan. An Algorithm Enabling Blind Users to Find and Read Barcodes. In: Morse B, editor. 2009 IEEE Workshop on Applications of Computer Vision (WACV 2009); Dec. 7-8, 2009; Snowbird, Utah.

- Tekin, Ender, and James Coughlan. A Mobile Phone Application Enabling Visually Impaired Users to Find and Read Product Barcodes. In: Miesenberger K, Klaus J, Zagler W, Karshmer A, editors. 12th International Conference on Computers Helping People with Special Needs (ICCHP '10); July 14-16, 2010; Vienna, Austria. pp. 290–295.

- Tekin, Ender, and James Coughlan. BLaDE: Barcode Localization and Decoding Engine. Technical Report 2012-RERC01. Dec. 2012. Web. 20 May 2013. <http://www.ski.org/Rehab/Coughlan_lab/BLaDE/BLaDE_TechReport.pdf>.

- Wachenfeld, Steffen, Sebastian Terlunen, and Xiaoyi Jiang. Robust recognition of 1-D barcodes using camera phones. In: Ejiri M, Kasturi R, di Baja GS, editors. 19th International Conference on Pattern Recognition (ICPR 2008); Dec. 8-11, 2008; Tampa, FL.

- Wang, Kongqiao, Yanming Zou, and Hao Wang. 1D barcode reading on camera phones. International Journal of Image and Graphics. 2007;7(3):529–550. Print.

- Codecheck: iPhone app helps blind people to recognize products. EuroSecurity, 2012. Web. 20 May 2013. <http://www.eurosecglobal.de/codechek-iphone-app-helps-blind-people-to-recognize-products.html>.

- Digit-Eyes Announces Enhanced Image Detection, More Recording Features, Support of VCard QR Codes. Digital Miracles press release, 2013. Web. 20 May 2013. <http://www.pr.com/press-release/479519>.