Summary

We propose a novel class of models for functional data exhibiting skewness or other shape characteristics that vary with spatial or temporal location. We use copulas so that the marginal distributions and the dependence structure can be modeled independently. Dependence is modeled with a Gaussian or t-copula, so that there is an underlying latent Gaussian process. We model the marginal distributions using the skew t family. The mean, variance, and shape parameters are modeled nonparametrically as functions of location. A computationally tractable inferential framework for estimating heterogeneous asymmetric or heavy-tailed marginal distributions is introduced. This framework provides a new set of tools for increasingly complex data collected in medical and public health studies. Our methods were motivated by and are illustrated with a state-of-the-art study of neuronal tracts in multiple sclerosis patients and healthy controls. Using the tools we have developed, we were able to find those locations along the tract most affected by the disease. However, our methods are general and highly relevant to many functional data sets. In addition to the application to one-dimensional tract profiles illustrated here, higher-dimensional extensions of the methodology could have direct applications to other biological data including functional and structural MRI.

Keywords: Gaussian and t-copulas, Quantile modeling, Skewed functional data, Tractography data

1. Introduction

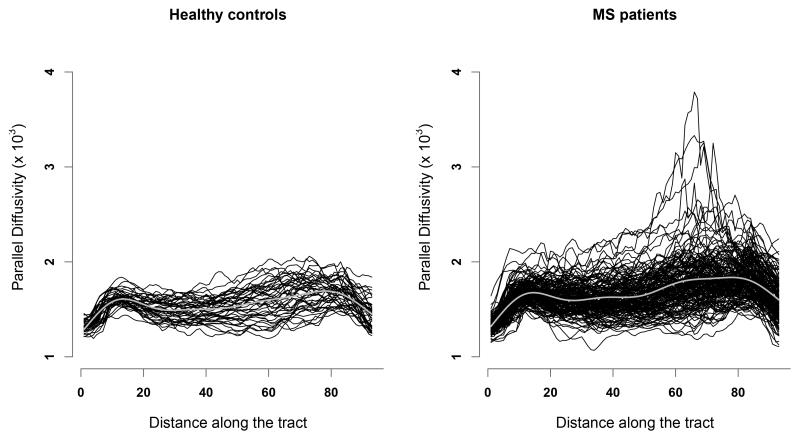

Most models used in functional data analysis (FDA) focus on modeling the mean and covariance functions. Although such models are often adequate, there are important cases where interest is focused on skewness or other shape characteristics. For example, the right panel of Figure 1 illustrates profiles of multiple sclerosis (MS) patients, recorded along the corpus callosum tract of the brain. The functions have skewed pointwise distributions, with the amount of skewness varying spatially. Modeling data with such characteristics represents the main focus and novelty of our work.

Figure 1.

Parallel diffusivity profiles within the corpus callosum tract in 162 MS patients (right panel) and 42 healthy control subjects (left panel). Penalized spline estimates of the mean functions are shown as thick grey curves. Distance units are arbitrary.

In this paper, we develop models and inferential methods for the analysis of skewed functional data. Unlike the common moment-based approaches to modeling functional data (Di et al., 2009; Crainiceanu, Staicu, and Di, 2009; Staicu, Crainiceanu, and Carroll, 2010), our methods are based on pointwise distributional assumptions about the underlying stochastic process. The parametric assumptions are used to capture higher-order moments of the pointwise distributions, whereas the nonparametric approaches use only the first two moments. Specifically, our approach assumes that the pointwise distributions of the underlying stochastic process belong to a parametric family with one or more shape parameters, for example, the skew normal or skew t family. The shape parameters, as well as the mean and variance, are modeled nonparametrically as functions of location.

A third novel feature is the use of copulas to model spatial dependence. We use Gaussian or t-copulas since, unlike Archimedean copulas, they are parameterized by correlation functions, which are fundamental to FDA. The use of copulas allows a modular approach in which the marginal distributions and the dependence can be modeled separately. In our model, the observed functions are transformations of an underlying latent Gaussian process and, for a t-copula, of a chi-squared random variable that induces tail dependence. Principal components analysis (PCA) and a Karhunen-Loève expansion can be applied to the latent Gaussian process, much as they would be applied if the observed data were Gaussian.

1.1 The diffusion tensor imaging study

The methodology described in this paper is motivated by a state-of-the-art diffusion tensor imaging (DTI) study that analyzes and compares white matter tracts in healthy individuals and MS patients. DTI is an in vivo magnetic resonance technique for imaging the white matter tracts in the brain by measuring the three-dimensional directions of water diffusion (Basser, et al. 1994, 2000). In the DTI study, we are interested in modeling DTI profiles, such as mean diffusivity and parallel diffusivity, along the corpus callosum, the major tract connecting the two cerebral hemispheres. Mean diffusivity is an orientation-independent measure of water movement within the tract, whereas parallel diffusivity estimates the component of that movement co-axial with the tract’s axon bundles.

Figure 1 displays parallel diffusivity profiles for healthy controls and MS patients. Parallel diffusivity is recorded at many locations along the tract, so that the tract profile can be viewed as a continuous curve sampled at a discrete set of points. Scientific interest includes comparing the means, quantiles, and correlation functions of the two samples; see Greven, et al. (2010) for more details on the scientific questions. At first glance, the control and MS data seem to differ in several ways, such as the mean and variability along the tract, but perhaps even more so with respect to skewness. The MS subjects' profiles exhibit more skewness than those of the controls, especially around locations 50–80 along the corpus callosum tract.

These data suggest a need for methods that can accommodate strong temporally or spatially varying skewness and within-function correlation. Computational speed is essential, since FDA faces ever larger and more complex data sets. Moreover, inferential methods are needed, for example, to test whether differences in skewness between spatial locations, or between groups of subjects, are statistically significant. In addition to the application to one-dimensional tract profiles illustrated here, higher-dimensional extensions of the methodology could have direct applications to other biological data, including functional and structural MRI. In these applications, accurate modeling of the tails of distributions is a critical step in identifying abnormalities as well as in limiting false positive results.

1.2 Current methods

A reasonable approach for the analysis of tractography data is functional data analysis (FDA)—see, for example, the excellent monographs of Ramsay and Silverman (2005), Ferraty and Vieu (2006), and Ramsay, Hooker, and Graves (2009). A fundamental idea in FDA is to decompose the space of curves into principal directions of variation by a Principal Component Analysis (PCA) of the raw data or smoothed curves. PCA provides a simple recipe for dimension reduction by including only eigenvalue–eigenvector pairs where the estimated eigenvalue is relatively large. However, PCA is a second-order methodology in that it uses only the mean and covariance functions, and the Karhunen-Loève expansion based on its eigen-decomposition assumes a joint Gaussian distribution (Yao, Müller, and Wang, 2005).
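As a minimal illustration of this dimension-reduction recipe, the Python sketch below keeps the smallest number L of eigenvalue–eigenvector pairs whose eigenvalues explain a target share of the total variance, the rule referred to later as "the percentage of explained variance". The function name `choose_truncation` and the 95% default are illustrative assumptions, not values taken from this paper.

```python
def choose_truncation(eigvals, threshold=0.95):
    """Smallest L such that the L largest eigenvalues explain >= threshold of the total."""
    vals = sorted((v for v in eigvals if v > 0), reverse=True)  # drop non-positive estimates
    total = sum(vals)
    running = 0.0
    for L, v in enumerate(vals, start=1):
        running += v
        if running >= threshold * total:
            return L
    return len(vals)


# Hypothetical estimated eigenvalues, for illustration only.
eigenvalues = [1.0, 0.5, 0.25, 0.01, 0.005]
L = choose_truncation(eigenvalues, threshold=0.95)
```

Discarding non-positive eigenvalues matters in practice because smoothed covariance estimates are not guaranteed to be positive semidefinite.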

The focus of current FDA methods on mean and covariance functions ignores subtler differences in distributions that could be better characterized by quantiles. Quantiles, like means, have tremendous data-compression potential and are easy to interpret, a sine qua non in the century of data. In simulation experiments, we have shown that the quantiles of asymmetric data are estimated with substantial bias if the Gaussian model typical of FDA is assumed; see Section 6 and Figure S2 of the supplemental materials.

The paper is organized as follows. Section 2 introduces functional processes with heterogeneous shape characteristics and describes the modeling methodology. Section 3 develops the estimation procedure and Section 4 discusses prediction. Section 5 contains an extensive simulation study. Section 6 presents a comparison of our methodology with alternatives. Applications to the tractography data are presented in Section 7. A brief discussion is given in Section 8.

2. Functional models with spatially-varying marginal distributions

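Let $\{Y_{ij} = Y_i(t_{ij})\}$, $j = 1, \ldots, m_i$, be the data for subject $i$, $i = 1, \ldots, N$. We assume that $Y_i$ is a random curve defined on a domain $\mathcal{T}$ and sampled at a grid of points $\{t_{ij} : j = 1, \ldots, m_i\} \subset \mathcal{T}$; typically we take $\mathcal{T} = [0, 1]$. Furthermore, suppose that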

$$Y_i(t) = \mu(t) + \sigma(t)\, G^{-1}\{W_i(t); \alpha(t)\}, \qquad (1)$$

where μ(t) is the mean function, σ(t) is the standard deviation function, and Wi(t) is a latent process such that Wi(t) is uniformly distributed on (0, 1) for each t. Here G(·; α) is a cumulative distribution function in a parametric family of distributions with zero mean, unit variance, and shape parameter vector α. For example, α can be the scalar shape parameter of the skew normal distribution (Azzalini, 1985), the two-dimensional shape parameter vector of the skew t distribution of Azzalini and Capitanio (2003), or that of the somewhat different skew t distribution of Fernandez and Steel (1998). Also, G−1(·; α) denotes the usual inverse CDF. We assume that the shape parameter α(t) varies smoothly with t. To simplify notation, we sometimes write Gt := G{·; α(t)}. Our main objectives are to estimate the population functions μ(t), σ(t), and α(t) and to describe the dependence of the functional process.

We refer to model (1) as the quantile-induced functional model, because model (1) implies the following model for the pth quantile: Qp(t) = μ(t)+σ(t)Gt−1(p), 0 < p < 1. The advantage of representing the functional data using model (1) is that it allows the specification of the dependence of the random process Yi through the latent process Wi. Since Wi(t) is uniformly distributed for each t, the joint distribution of {Wi(ti1), …, Wi(timi)} is a copula, which we will model parametrically using Gaussian or t copulas.
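To make the quantile representation concrete, the Python sketch below evaluates Qp(t) = μ(t) + σ(t)Gt−1(p) and the Gaussian-copula version of model (1), Y(t) = μ(t) + σ(t)G−1[Φ{Z(t)}; α(t)], when G is the skew normal standardized to mean 0 and variance 1. It is a dependency-free illustration under stated assumptions: the skew normal CDF is computed through Owen's T function by Simpson's rule, G−1 by bisection, and the helper names and the functions μ, σ, α in the usage lines are hypothetical. A tested implementation such as the R sn package would be the natural choice in practice.

```python
import math


def norm_cdf(x):
    """Standard normal CDF Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def owens_t(h, a, n=400):
    """Owen's T(h, a) = (1/2pi) int_0^a exp{-h^2(1 + x^2)/2}/(1 + x^2) dx, Simpson's rule (n even)."""
    if a == 0:
        return 0.0
    step = a / n
    total = 0.0
    for k in range(n + 1):
        x = k * step
        weight = 1 if k in (0, n) else (4 if k % 2 else 2)
        total += weight * math.exp(-0.5 * h * h * (1.0 + x * x)) / (1.0 + x * x)
    return total * step / 3.0 / (2.0 * math.pi)


def sn_moments(alpha):
    """Mean m and standard deviation s of the skew normal with density 2 phi(z) Phi(alpha z)."""
    delta = alpha / math.sqrt(1.0 + alpha * alpha)
    m = delta * math.sqrt(2.0 / math.pi)
    return m, math.sqrt(1.0 - m * m)


def std_sn_cdf(x, alpha):
    """G(x; alpha): skew normal CDF after standardizing to mean 0 and variance 1."""
    m, s = sn_moments(alpha)
    z = m + s * x
    return norm_cdf(z) - 2.0 * owens_t(z, alpha)


def std_sn_ppf(p, alpha, lo=-20.0, hi=20.0, iters=100):
    """G^{-1}(p; alpha) by bisection; G is continuous and strictly increasing."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if std_sn_cdf(mid, alpha) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def quantile_curve(ts, mu, sigma, alpha, p):
    """Pointwise quantile Q_p(t) = mu(t) + sigma(t) G^{-1}{p; alpha(t)} on a grid."""
    return [mu(t) + sigma(t) * std_sn_ppf(p, alpha(t)) for t in ts]


def latent_to_response(z, t, mu, sigma, alpha):
    """Model (1) with a Gaussian copula: W = Phi(Z), then Y = mu + sigma * G^{-1}(W; alpha)."""
    return mu(t) + sigma(t) * std_sn_ppf(norm_cdf(z), alpha(t))


# Hypothetical population functions, for illustration only.
mu = lambda t: 1.0 + t
sigma = lambda t: 0.5
alpha = lambda t: -10.0 * math.sin(2.0 * math.pi * t)

grid = [0.25, 0.5, 0.75]
q10 = quantile_curve(grid, mu, sigma, alpha, 0.10)
q90 = quantile_curve(grid, mu, sigma, alpha, 0.90)
```

A latent value z = 0 maps to the pointwise median, which for a skewed G differs from the mean μ(t).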

An alternative to our methodology is to estimate the marginal distributions and the correlation structure separately. The marginal distributions could be estimated, for example, by quantile regression or by fitting a univariate distribution at each time point by maximum likelihood or the method of moments. The correlation structure could be estimated by PCA. What is missing from this approach is a technique for combining the marginal distributions and the correlation structure into a probability model for the joint distribution of the data. Our copula methodology supplies this missing technique.

2.1 Population-level parameters

We model the mean function μ(t), variance function σ²(t), and shape parameter functions α(t) using penalized splines, though other basis expansion approximations can be used. Because the variance is positive, we use log-splines to model σ²(t). Let $B(t) = \{1, t, \ldots, t^p, (t - \kappa_1)_+^p, \ldots, (t - \kappa_K)_+^p\}^T$, where $x_+ = \max(x, 0)$, be a pth degree truncated power series spline basis with dimension q = 1 + p + K and fixed knots (κ1, …, κK). Because the penalty prevents overfitting, the number of knots has very little effect on a penalized spline fit, provided the number of knots is sufficiently large to accommodate fine-level features in the data (Ruppert, 2002; Li and Ruppert, 2008). However, an excess of knots slows the computations. Therefore, in practice, we allow a different number of knots for μ, σ, and α, since some of these functions may require more knots than others. To keep notation simple, our exposition uses the same spline basis for all three functions. We write $\mu(t) = B(t)^T\beta_\mu$ and $\log\{\sigma^2(t)\} = B(t)^T\beta_\sigma$, where the spline bases and coefficient vectors are q-dimensional. In some cases it is desirable to model not α(t) but a suitable transformation h{α(t)}, where h applies a one-to-one transformation to each coordinate of α(t); for example, if a component of α(t) must be positive, we could log-transform that component. Our model for α(t) is $\alpha(t) = h^{-1}\{B(t)^T\beta_\alpha\}$, where βα is a q × dim{α(t)} matrix of parameters.
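A minimal Python sketch of the pth degree truncated power basis B(t), with q = 1 + p + K entries, and of the penalty matrix diag(0, …, 0, 1, …, 1) that leaves the p + 1 polynomial coefficients unpenalized. The equally spaced knot placement and the helper names are assumptions for illustration; the text does not prescribe knot locations.

```python
import math


def truncated_power_basis(t, degree, knots):
    """B(t) = (1, t, ..., t^p, (t - k_1)_+^p, ..., (t - k_K)_+^p); assumes degree p >= 1."""
    row = [t ** d for d in range(degree + 1)]
    row += [max(t - k, 0.0) ** degree for k in knots]
    return row


def penalty_matrix(degree, n_knots):
    """D = diag(0, ..., 0, 1, ..., 1): p + 1 unpenalized polynomial terms, K penalized terms."""
    q = 1 + degree + n_knots
    return [[1.0 if (i == j and i > degree) else 0.0 for j in range(q)] for i in range(q)]


def equally_spaced_knots(n_knots):
    """Interior knots equally spaced in (0, 1); an illustrative choice only."""
    return [(k + 1) / (n_knots + 1) for k in range(n_knots)]


def spline_value(beta, t, degree, knots):
    """B(t)^T beta, e.g. mu(t) = B(t)^T beta_mu or log sigma^2(t) = B(t)^T beta_sigma."""
    return sum(b * x for b, x in zip(beta, truncated_power_basis(t, degree, knots)))


knots = equally_spaced_knots(5)
b = truncated_power_basis(0.1, 3, knots)      # cubic basis: q = 1 + 3 + 5 = 9 entries
D = penalty_matrix(3, len(knots))
beta_sigma = [-1.0] + [0.0] * 8                # hypothetical: log sigma^2(t) = -1 for all t
sigma_t = math.exp(0.5 * spline_value(beta_sigma, 0.4, 3, knots))
```

Modeling log σ²(t) rather than σ²(t) keeps the fitted standard deviation positive without constraints on βσ.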

2.2 Functional dependence and copulas

A copula is a multivariate distribution function whose univariate marginal distributions are all uniform on (0,1). If X = (X1, …, Xn) is a random vector and Fi is the continuous marginal distribution of Xi, then the distribution of {F1(X1), …, Fn(Xn)} is called the copula of X. Copulas offer a convenient way of modeling multivariate observations because the modeling is broken into two separate parts: (1) modeling the dependencies through a copula; and (2) modeling the univariate marginal distributions (Sklar, 1959).

A Gaussian copula is the copula of some multivariate Gaussian distribution. The copula of a random vector is unchanged by strictly increasing transformations of its components, so a Gaussian copula is completely specified by a correlation matrix. If X has a Gaussian copula, this does not imply that X is Gaussian, only that X has the same copula as some Gaussian random vector. Stated differently, X has the same dependence structure as some multivariate normal random vector, but its marginal distributions need not be Gaussian. Similarly, a t-copula is the copula of some multivariate t-distribution and is specified by a correlation matrix and a scalar degrees of freedom parameter.

Gaussian copulas offer a convenient way to model multivariate dependencies, because these are determined by a familiar quantity, a correlation matrix. The limitation of the Gaussian copula concerns its tail dependence behavior. The coefficient of (upper) tail dependence between a pair (X, Y) of continuous random variables with marginal CDFs $F_X$ and $F_Y$ is $\lambda = \lim_{u \uparrow 1} P\{F_Y(Y) > u \mid F_X(X) > u\}$, which is easily shown to be symmetric in X and Y. For a bivariate Gaussian pair, this coefficient is zero unless the correlation equals 1 (Embrechts, McNeil & Straumann, 2002). This means that under a Gaussian copula, the components behave in the extreme tails as if they were independent, unless they are perfectly correlated. In contrast, t-copulas exhibit tail dependence even with zero correlation; see McNeil, Frey, and Embrechts (2005). We found evidence of strong tail dependence in the DTI data; see Section 7.
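The contrast can be seen in a small Monte Carlo experiment. The Python sketch below estimates P{F_Y(Y) > u | F_X(X) > u} at u = 0.99 for a bivariate Gaussian pair and a bivariate t pair (ν = 3), both with correlation parameter zero, using empirical marginal quantiles. This finite-u quantity only approximates the limiting coefficient and is not an estimator used in this paper; the sample size, seeds and ν are arbitrary choices.

```python
import math
import random


def simulate_pairs(n, rho, df=None, seed=1):
    """Bivariate Gaussian pairs (df=None) or bivariate t pairs with the same rho."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        if df is not None:
            # A shared chi-squared mixing variable makes both coordinates large together.
            w = math.sqrt(rng.gammavariate(df / 2.0, 2.0) / df)
            z1, z2 = z1 / w, z2 / w
        pairs.append((z1, z2))
    return pairs


def upper_tail_conditional(pairs, u=0.99):
    """Empirical P{F_Y(Y) > u | F_X(X) > u} using empirical marginal u-quantiles.

    Only ranks enter, so the value depends on the copula, not on the margins."""
    k = int(u * len(pairs))
    x_thr = sorted(p[0] for p in pairs)[k]
    y_thr = sorted(p[1] for p in pairs)[k]
    exceed = [p for p in pairs if p[0] > x_thr]
    return sum(1 for p in exceed if p[1] > y_thr) / len(exceed)


gauss = simulate_pairs(100_000, rho=0.0)
t3 = simulate_pairs(100_000, rho=0.0, df=3, seed=2)
lam_gauss = upper_tail_conditional(gauss)   # close to 1 - u = 0.01: no tail dependence
lam_t = upper_tail_conditional(t3)          # markedly larger, despite zero correlation
```

Because the estimate uses only ranks, applying any strictly increasing transformation to either coordinate leaves it unchanged, which mirrors the invariance of the copula itself.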

3. Estimation Methodology

We use penalized maximum likelihood to estimate the mean, standard deviation and shape parameter functions, μ(t), σ(t) and α(t). Each of these functions contains a reasonably large number of parameters and the correlation matrix of the Gaussian or t-copula is also of sizable dimension. Because of the large number of parameters, simultaneous estimation of all parameters can take an excessive amount of computational time. To circumvent this problem, we have developed a more rapid multi-stage estimation procedure.

3.1 Mean, variance, and shape parameter functions

To speed computations, the estimates of μ(t), σ(t) and α(t) are obtained in two steps. In the first, the sample of curves is reduced to undersmoothed estimates of these three functions. In the second, these curve estimates are further smoothed by penalized splines.

Step 1: For simplicity, we start with the case where the functions are sampled on a common dense grid of points, so that tij = tj for j = 1, …, m and all i. Initial estimates of the mean, variance and shape parameter functions are constructed as follows: for each fixed j, define μj = μ(tj), σj = σ(tj) and αj = α(tj). Then, the parametric estimates of μj, σj and αj are obtained by maximizing the pointwise likelihood function

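$$L(\mu_j, \sigma_j, \alpha_j) = \prod_{i=1}^{N} \frac{1}{\sigma_j}\, g\left(\frac{Y_{ij} - \mu_j}{\sigma_j}; \alpha_j\right), \qquad (2)$$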

where g(x; αj) = ∂G(x; αj)/∂x is the density function corresponding to the distribution Gtj. For example, when Gt is in the skew normal or skew t family of distributions, the functions sn.mle or st.mle of the sn package for R (Azzalini, 2010) can compute these estimates.
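Python's standard library has no counterpart of sn.mle, so the sketch below only illustrates the ingredients of the pointwise likelihood (2) at a single grid point for the standardized skew normal: the log density log g(x; α), the log-likelihood, and a deliberately crude fit in which μj and σj are set to the sample mean and standard deviation (which target μj and σj because G has mean 0 and variance 1) and αj is chosen over a grid. This is a simplification, not the joint three-parameter maximization that sn.mle performs; the grid range and the simulated data are assumptions.

```python
import math
import random


def sn_moments(alpha):
    """(delta, mean, sd) of the skew normal with density 2 phi(z) Phi(alpha z)."""
    delta = alpha / math.sqrt(1.0 + alpha * alpha)
    m = delta * math.sqrt(2.0 / math.pi)
    return delta, m, math.sqrt(1.0 - m * m)


def std_sn_logpdf(x, alpha):
    """log g(x; alpha), where g is the skew normal density standardized to mean 0, variance 1."""
    _, m, s = sn_moments(alpha)
    z = m + s * x                                             # undo the standardization
    log_phi = -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)
    big_phi = 0.5 * math.erfc(-alpha * z / math.sqrt(2.0))    # Phi(alpha z)
    return math.log(2.0 * s) + log_phi + math.log(max(big_phi, 1e-300))


def pointwise_loglik(ys, mu, sigma, alpha):
    """Logarithm of likelihood (2) at one grid point t_j."""
    return sum(std_sn_logpdf((y - mu) / sigma, alpha) - math.log(sigma) for y in ys)


def sample_std_sn(n, alpha, rng):
    """Draws from G(.; alpha) via Z = delta|U0| + sqrt(1 - delta^2) U1, then standardizing."""
    delta, m, s = sn_moments(alpha)
    draws = []
    for _ in range(n):
        z = delta * abs(rng.gauss(0.0, 1.0)) + math.sqrt(1.0 - delta * delta) * rng.gauss(0.0, 1.0)
        draws.append((z - m) / s)
    return draws


def crude_pointwise_fit(ys, grid=None):
    """mu_j, sigma_j by sample moments; alpha_j maximizes (2) over a grid (a simplification)."""
    n = len(ys)
    mu = sum(ys) / n
    sigma = math.sqrt(sum((y - mu) ** 2 for y in ys) / n)
    grid = grid or [k / 10.0 for k in range(-150, 151)]
    alpha = max(grid, key=lambda a: pointwise_loglik(ys, mu, sigma, a))
    return mu, sigma, alpha


rng = random.Random(7)
ys = [1.5 + 0.3 * x for x in sample_std_sn(1000, 4.0, rng)]   # hypothetical data at one t_j
mu_j, sigma_j, alpha_j = crude_pointwise_fit(ys)
```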

Maximum likelihood estimation of α often requires some care. For example, if g is a skew normal density or a skew t density with known degrees of freedom, then the estimate of α (the skewness parameter) is infinite with positive probability for moderate sample sizes (Azzalini, 1985; Genton, 2004; Sartori, 2006). This instability problem is due to the choice of parameterization in that large changes in α lead to small changes in the density. It should be noted, however, that infinite values of α give valid densities, the half-normal (or half t) densities. The problem is that some estimation methods, for example, spline smoothing, have problems accommodating infinite values. A possible remedy for this problem is mentioned in “Alternative 1” below.
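The simplest instance of this boundary problem can be checked directly. With location and scale fixed at 0 and 1, the skew normal log-likelihood is Σi log{2φ(xi)Φ(αxi)}; if every observation is positive, each term increases with α, so the likelihood has no finite maximizer and approaches the half-normal log-likelihood as α → ∞. The Python sketch below verifies this numerically on made-up data. It illustrates the mechanism only and does not reproduce the moderate-sample results cited above.

```python
import math


def sn_loglik(xs, alpha):
    """Skew normal log-likelihood with location 0, scale 1: sum log{2 phi(x) Phi(alpha x)}."""
    total = 0.0
    for x in xs:
        log_phi = -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)
        log_big_phi = math.log(0.5 * math.erfc(-alpha * x / math.sqrt(2.0)))
        total += math.log(2.0) + log_phi + log_big_phi
    return total


def half_normal_loglik(xs):
    """The alpha -> +infinity limit for positive data: sum log{2 phi(x)}."""
    return sum(math.log(2.0) - 0.5 * x * x - 0.5 * math.log(2.0 * math.pi) for x in xs)


positive_data = [0.05, 0.3, 0.8, 1.1, 2.0]   # made-up observations, all positive
path = [sn_loglik(positive_data, a) for a in (0.0, 1.0, 10.0, 100.0)]
```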

When the curves are sparsely sampled, another estimation method is needed. Note that the estimates of μj, σj and αj in (2) are obtained by local polynomial estimating equations (Carroll, Ruppert, and Welsh, 1998), with degree-zero polynomials and a bandwidth so small that only data exactly at tj are used to estimate the functions at tj. For sparse and irregularly spaced data, local estimating equations with larger bandwidths and higher polynomial degrees can be used to compute estimates on a grid t1, …, tm, say. The estimates should be undersmoothed, since they will be smoothed further in Step 2. Suppose that the ith subject's curve is observed at tij, j = 1, …, mi. For estimation at tk using Lth degree local polynomial estimation, define $\mu(t) \approx \sum_{\ell=0}^{L} \mu_\ell (t - t_k)^\ell$, $\sigma(t) \approx \sum_{\ell=0}^{L} \sigma_\ell (t - t_k)^\ell$, and $\alpha_q(t) \approx \sum_{\ell=0}^{L} \alpha_{\ell,q} (t - t_k)^\ell$ for the qth component function of α(t). Then the estimating equation approach minimizes

$$-\sum_{i=1}^{N} \sum_{j=1}^{m_i} K_b(t_{ij} - t_k) \log\left[\frac{1}{\sigma(t_{ij})}\, g\left\{\frac{Y_{ij} - \mu(t_{ij})}{\sigma(t_{ij})}; \alpha(t_{ij})\right\}\right]$$

over all coefficients in μ(t), σ(t), and α(t). The estimates of μ, σ, and α at tk are μ0, σ0, and (α0,1, …, α0,Q), respectively, where Q is the dimension of α. Here Kb(·) is a kernel function and b is a bandwidth.

In summary, regardless of whether we have sparse or dense data, at the end of Step 1 we have undersmoothed estimates on a grid t1, …, tm. These estimates will be denoted by $\hat\mu_j$, $\hat\sigma_j$, and $\hat\alpha_j$, j = 1, …, m. Step 2 uses $\hat\mu_j$ and $\hat\sigma_j$; in Alternative 1, $\hat\alpha_j$ is also used.

Step 2: The final estimates of μ(t), σ(t) and α(t) are constructed by smoothing the Step 1 estimates using penalized splines. The mean parameter βμ, where $\mu(t) = B(t)^T\beta_\mu$, is estimated by minimizing the penalized criterion $\sum_{j=1}^{m} (\hat\mu_j - B_j^T\beta_\mu)^2 + \lambda_\mu \beta_\mu^T D_\mu \beta_\mu$, where $\hat\mu_j$ is the Step 1 estimate at tj, λμ is a smoothing parameter, $B_j = B(t_j)$, and $D_\mu = \mathrm{diag}(0, \ldots, 0, 1, \ldots, 1)$ (p + 1 zeros followed by K ones) is a q × q penalty matrix. This choice of penalty matrix shrinks the pth degree spline towards a pth degree polynomial, since the polynomial coefficients are not penalized; only the coefficients of the truncated power functions, which give the deviation of the spline from a polynomial, are penalized. The penalty matrices Dσ and Dα used below have the same form as Dμ; see Ruppert et al. (2003) for a discussion of penalty matrices. In a similar way, $\hat\beta_\sigma$ is obtained by minimizing $\sum_{j=1}^{m} (\log\hat\sigma_j^2 - B_j^T\beta_\sigma)^2 + \lambda_\sigma \beta_\sigma^T D_\sigma \beta_\sigma$.
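For a fixed smoothing parameter this criterion has a closed-form minimizer: β solves (BᵀB + λD)β = Bᵀŷ, where the rows of B are Bjᵀ and ŷ holds the Step 1 estimates. The dependency-free Python sketch below forms and solves these normal equations by Gaussian elimination. Smoothing-parameter selection (REML or corrected AIC) is not shown, and the knots, degree, λ and data in the usage lines are illustrative assumptions. For the variance, the same routine would be applied to log σ̂j² and the fitted values exponentiated.

```python
def basis(t, degree, knots):
    """Truncated power basis row B(t)^T."""
    return [t ** d for d in range(degree + 1)] + [max(t - k, 0.0) ** degree for k in knots]


def solve(a, b):
    """Solve a x = b for a small dense nonsingular matrix (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def pspline_smooth(ts, y_hat, degree, knots, lam):
    """Minimize sum_j (y_hat_j - B_j^T beta)^2 + lam * beta^T D beta, D = diag(0,..,0,1,..,1)."""
    rows = [basis(t, degree, knots) for t in ts]
    q = len(rows[0])
    lhs = [[sum(r[i] * r[j] for r in rows) for j in range(q)] for i in range(q)]
    for i in range(degree + 1, q):
        lhs[i][i] += lam                      # only truncated power coefficients are penalized
    rhs = [sum(r[i] * y for r, y in zip(rows, y_hat)) for i in range(q)]
    beta = solve(lhs, rhs)
    fitted = [sum(b * x for b, x in zip(beta, r)) for r in rows]
    return beta, fitted


ts = [j / 39 for j in range(40)]
knots = [0.2, 0.4, 0.6, 0.8]
# Made-up "Step 1" mean estimates: a quadratic plus alternating noise.
mu_hat = [1 + 2 * t - 3 * t * t + (0.05 if j % 2 else -0.05) for j, t in enumerate(ts)]
beta_mu, mu_smooth = pspline_smooth(ts, mu_hat, degree=2, knots=knots, lam=0.01)
```

Because the polynomial part is unpenalized, a pth degree polynomial is reproduced exactly for any λ, and increasing λ pulls the fit towards the least-squares polynomial.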

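For the shape function parameters, we propose a penalized marginal likelihood criterion in which the mean and variance function parameters are fixed at their estimates (see also Sartori, 2006). More specifically, let $\hat\epsilon_{ij} = \{Y_{ij} - \hat\mu(t_{ij})\}/\hat\sigma(t_{ij})$ be the standardized observations, using the estimates of the mean and variance functions obtained above. Define the penalized marginal likelihood function

$$PL_\alpha(\beta_\alpha) = -\sum_{i=1}^{N}\sum_{j=1}^{m_i} \ell_{ij}(\beta_\alpha) + \lambda_\alpha \beta_\alpha^T D_\alpha \beta_\alpha,$$

where $\ell_{ij}(\beta_\alpha) = \log g[\hat\epsilon_{ij}; h^{-1}\{B(t_{ij})^T\beta_\alpha\}]$ is, up to an additive constant, the log-likelihood function corresponding to the distribution of $\hat\epsilon_{ij}$. We use $\lambda_\alpha \beta_\alpha^T D_\alpha \beta_\alpha$ as the roughness penalty, where Dα is the roughness penalty matrix. The shape parameter βα is obtained as the minimizer of the penalized criterion PLα(βα).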

For the mean and variance estimation, we selected the smoothing parameters λμ and λσ using restricted maximum likelihood (REML) (Wood, 2006). For the shape parameter function, we selected λα using the corrected AIC criterion (Ruppert et al., 2003); other choices, such as GCV or AIC, can be used. The function optim of the stats package for R with the BFGS algorithm can be used for the optimization. Let $\hat\mu(t)$, $\hat\sigma(t)$ and $\hat\alpha(t)$ be the estimated mean, standard deviation and shape parameter functions, respectively.

Alternative 1

One fast alternative is to estimate the shape parameter functions using the same approach as for the mean and variance functions. To handle infinite values of $\hat\alpha_j$, we model transformed parameters $h(\hat\alpha_j)$ instead. For example, if G(·; α), −∞ ≤ α ≤ ∞, is the skew normal distribution, then an appropriate transformation is h(·) = Φ(·/s), the normal CDF with standard deviation s, which maps [−∞, ∞] to [0, 1]; the choice s = 5 was used in our simulation study and worked satisfactorily. This transformation eliminates the infinite values that cause problems. The shape parameter βα minimizes the penalized criterion $\sum_{j=1}^{m} \{h(\hat\alpha_j) - B_j^T\beta_\alpha\}^2 + \lambda_\alpha \beta_\alpha^T D_\alpha \beta_\alpha$, with λα and Dα defined as above. While this approach is extremely fast, it estimates the shape parameter function with higher variability.
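A minimal Python sketch of the transformation in Alternative 1, taking h to be the normal CDF with standard deviation s = 5, as described in the text: infinite pointwise estimates become exactly 0 or 1 and can then be smoothed like any other values, while h−1 maps smoothed values back to the α scale. The example estimates are hypothetical, and the treatment of smoothed values at or beyond the endpoints (mapped to ±∞ here) is an assumption of this sketch.

```python
import math
from statistics import NormalDist

S = 5.0  # the scale s = 5 reported for the simulation study


def h(alpha, s=S):
    """h(alpha) = Phi(alpha / s): maps [-inf, inf] onto [0, 1]."""
    return NormalDist(0.0, s).cdf(alpha)


def h_inv(u, s=S):
    """Inverse of h; values at or beyond the endpoints map to -inf or +inf."""
    if u <= 0.0:
        return -math.inf
    if u >= 1.0:
        return math.inf
    return NormalDist(0.0, s).inv_cdf(u)


alpha_hat = [2.1, math.inf, -math.inf, 0.4, -7.5]   # hypothetical Step 1 estimates
transformed = [h(a) for a in alpha_hat]            # all finite, ready for penalized smoothing
```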

Alternative 2

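The estimation method described in this section estimates μ(t), σ(t) and α(t) without accounting for the dependence of the process. One way to incorporate the functional dependence is via a penalized full likelihood approach. We briefly outline this idea. Let C(·; Ω) be a copula distribution function and c(·; Ω) the corresponding copula density, where Ω is the vector of copula parameters. It follows that the log-likelihood of the full model can be written as

$$\ell(\beta_\mu, \beta_\sigma, \beta_\alpha, \Omega) = \sum_{i=1}^{N}\Big[\log c\{U_{i1}, \ldots, U_{im}; \Omega\} + \sum_{j=1}^{m} \ell_{ij}(\beta_\mu, \beta_\sigma, \beta_\alpha)\Big], \qquad (3)$$

where ti = (ti1, …, tim), Yij = Yi(tij), $U_{ij} = G[\{Y_{ij} - \mu(t_{ij})\}/\sigma(t_{ij}); \alpha(t_{ij})]$, and $\ell_{ij} = \log\big(\sigma(t_{ij})^{-1}\, g[\{Y_{ij} - \mu(t_{ij})\}/\sigma(t_{ij}); \alpha(t_{ij})]\big)$ is the marginal log-likelihood of Yij, with $\mu(t_{ij}) = B_{ij}^T\beta_\mu$, $\log\sigma^2(t_{ij}) = B_{ij}^T\beta_\sigma$ and $\alpha(t_{ij}) = h^{-1}(B_{ij}^T\beta_\alpha)$. Here Bij = B(tij) for all i and j. To avoid undersmoothing of the population-level functions, which may be caused by large-dimensional function bases, a penalized criterion is used. The mean, variance and shape function parameters βμ, βσ and βα are chosen to minimize the penalized pseudo log-likelihood $PL(\beta_\mu, \beta_\sigma, \beta_\alpha, \hat\Omega) = -\ell(\beta_\mu, \beta_\sigma, \beta_\alpha, \hat\Omega) + \Omega_\mu(\beta_\mu) + \Omega_\sigma(\beta_\sigma) + \Omega_\alpha(\beta_\alpha)$, where $\hat\Omega$ is a consistent nonparametric estimate of the copula parameter Ω and Ωμ, Ωσ and Ωα are roughness penalties, for example $\Omega_\mu(\beta_\mu) = \lambda_\mu \beta_\mu^T D_\mu \beta_\mu$. Estimation of Ω, which does not depend on the β parameters, is discussed in Section 3.2.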

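Minimization of $PL(\beta_\mu, \beta_\sigma, \beta_\alpha, \hat\Omega)$ does not have a closed-form solution. To avoid a challenging joint numerical optimization, we use a three-step iterative algorithm. Denote by $\hat\beta_\mu^{(k)}$, $\hat\beta_\sigma^{(k)}$ and $\hat\beta_\alpha^{(k)}$ the current estimates of the mean, variance and shape parameters, respectively. In the first step, the mean parameters are updated by $\hat\beta_\mu^{(k+1)} = \arg\min_{\beta_\mu} PL(\beta_\mu, \hat\beta_\sigma^{(k)}, \hat\beta_\alpha^{(k)}, \hat\Omega)$. In the second step, the variance parameters are updated by $\hat\beta_\sigma^{(k+1)} = \arg\min_{\beta_\sigma} PL(\hat\beta_\mu^{(k+1)}, \beta_\sigma, \hat\beta_\alpha^{(k)}, \hat\Omega)$. Finally, in the third step, the shape parameters are updated by $\hat\beta_\alpha^{(k+1)} = \arg\min_{\beta_\alpha} PL(\hat\beta_\mu^{(k+1)}, \hat\beta_\sigma^{(k+1)}, \beta_\alpha, \hat\Omega)$. At each step the corresponding smoothing parameter is chosen using the corrected AIC criterion (Ruppert et al., 2003). These three steps are iterated until convergence; estimates under working independence can be used as initial estimates of the mean, variance and shape parameters.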

Our experience from simulation studies is that, provided the copula parameters are estimated accurately, the Alternative 2 estimates of the population functions μ(·), σ(·), and α(·) do not differ much, in bias or variability, from the estimates that use the working independence assumption. However, the computational cost of Alternative 2 is considerably higher.

3.2 Calibrating copulas

The approach for estimating the copula parameters depends on the type of copula used. A Gaussian copula requires only estimation of the correlation matrix of the underlying Gaussian distribution. A t-copula requires estimation of a correlation matrix and a degrees-of-freedom parameter. First, we describe estimation of the correlation matrix, which is similar for the two copula families, and then estimation of the degrees-of-freedom parameter.

If a Gaussian copula is assumed, then the copula correlation is precisely the Pearson correlation of the Gaussian process

$$Z_i(t) = \Phi^{-1}\{W_i(t)\}. \qquad (4)$$

This process has standard normal marginal distributions, so K(s, t) = cov{Zi(s), Zi(t)} is both the covariance and the correlation function of the process, and thus it is the latent copula correlation function. One straightforward way to estimate K(·, ·) is to use method of moments estimators of the covariance function of the approximately Gaussian processes obtained by replacing the population functions by their estimates, $\hat Z_i(t) = \Phi^{-1}\big(G[\{Y_i(t) - \hat\mu(t)\}/\hat\sigma(t); \hat\alpha(t)]\big)$. We model $\hat Z_i(t)$ as the sum of two independent components: a finite basis expansion process, say $Z_i^*(t)$ with covariance function $K^*(s, t)$, and a white noise process with variance $\sigma_\epsilon^2$. By a finite basis expansion process, we mean a Karhunen-Loève expansion truncated at a finite, and generally small, number of eigenvectors (Di et al., 2009).

Let $\hat C(s, t)$ be the method of moments estimator of $\operatorname{cov}\{\hat Z_i(s), \hat Z_i(t)\}$. Let $\hat K^*(s, t) = \hat C(s, t)$ for s ≠ t; for s = t, $K^*(t, t)$ is estimated using a bivariate thin-plate spline smoother applied to $\hat C(s, t)$ for s ≠ t. This approach to estimating the diagonal elements removes the "nugget effect" due to the white noise term and was proposed by Staniswalis and Lee (1998). Because $Z_i^*$ is modeled by a finite basis expansion process, it makes sense to use a reduced rank approximation of its covariance $\hat K^*$. Let $\hat\sigma_\epsilon^2$ denote the estimate of $\sigma_\epsilon^2$; if this estimate is not positive, it is replaced by a small positive number. Assume that the reduced rank approximation of $\hat K^*$ is $\Psi\Lambda_L\Psi^T$, where $\Lambda_L$ is a diagonal matrix of dimension L < m and Ψ is an m × L matrix with orthonormal columns. Here L is a finite truncation, determined, for example, by the percentage of explained variance (Di et al., 2009). The correlation matrix of the copula density is the correlation matrix corresponding to the covariance matrix $\Psi\Lambda_L\Psi^T + \hat\sigma_\epsilon^2 I_m$. Because $\hat\sigma_\epsilon^2 > 0$, this correlation matrix is guaranteed to be positive definite.
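The final steps of this construction, namely a rank-L approximation ΨΛLΨᵀ of the smoothed covariance, the addition of a positive nugget, and rescaling to a correlation matrix, can be sketched in Python as follows. Assumptions: the input is symmetric and positive semidefinite, eigenpairs are computed by power iteration with deflation (adequate only for a few leading, well-separated eigenvalues), and the floor 1e-6 stands in for "a small positive number". The moment estimation and thin-plate smoothing steps are not shown.

```python
import math
import random


def top_eigenpairs(mat, n_pairs, iters=1000, seed=0):
    """Leading eigenpairs of a symmetric PSD matrix by power iteration with deflation."""
    n = len(mat)
    work = [row[:] for row in mat]
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_pairs):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm0 = math.sqrt(sum(x * x for x in v))
        v = [x / norm0 for x in v]
        for _ in range(iters):
            w = [sum(work[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * work[i][j] * v[j] for i in range(n) for j in range(n))
        pairs.append((lam, v))
        work = [[work[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]  # deflate
    return pairs


def copula_correlation(k_star, n_components, noise_var, floor=1e-6):
    """Correlation matrix of Psi Lambda_L Psi^T + noise_var * I, with noise_var kept positive."""
    n = len(k_star)
    noise_var = noise_var if noise_var > 0 else floor
    pairs = top_eigenpairs(k_star, n_components)
    cov = [[sum(lam * v[i] * v[j] for lam, v in pairs) + (noise_var if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    return [[cov[i][j] / math.sqrt(cov[i][i] * cov[j][j]) for j in range(n)] for i in range(n)]


# Hypothetical smoothed covariance of rank 2 on m = 6 grid points.
n = 6
v1 = [1 / math.sqrt(n)] * n
v2 = [(1 if i % 2 == 0 else -1) / math.sqrt(n) for i in range(n)]
k_star = [[0.8 * v1[i] * v1[j] + 0.2 * v2[i] * v2[j] for j in range(n)] for i in range(n)]
corr = copula_correlation(k_star, 2, 0.1)
```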

The estimates of the correlation matrix just described use the estimates of the population mean, variance, and shape functions, and will be degraded by errors in the estimation of these functions. As an alternative, the sample Kendall's tau matrix can be used to estimate the latent correlation matrix K (McNeil et al., 2005, Section 5.3.2). Estimation of the correlation matrix using Kendall's tau is easiest when tij = tj for all i, which is the case we discuss. The sample Kendall's tau is invariant to increasing transformations, so using the data Yi(tj) or their Gaussian-scale transforms gives the same result. Using (5.32) of McNeil et al. (2005), let $\hat\tau_{jj'}$ be the sample Kendall's tau between {Y1(tj), …, YN(tj)} and {Y1(tj′), …, YN(tj′)} and define $\hat K(t_j, t_{j'}) = \sin(\pi\hat\tau_{jj'}/2)$. Then the estimation of K proceeds as above.
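A dependency-free Python sketch of the Kendall's tau route: compute the sample tau for every pair of grid points and map it through sin(πτ/2). It assumes no ties (tau-a) and uses an O(N²) loop, which is fine for illustration but slow for large N. The simulated data use skewed margins on top of a Gaussian copula with correlation 0.6, an arbitrary choice, so the invariance to increasing transformations can be checked.

```python
import math
import random


def kendall_tau(x, y):
    """Sample Kendall's tau (tau-a; assumes no ties), by an O(N^2) pass over all pairs."""
    n = len(x)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            prod = (x[i] - x[j]) * (y[i] - y[j])
            s += (prod > 0) - (prod < 0)
    return 2.0 * s / (n * (n - 1))


def latent_corr_matrix(columns):
    """K_hat(t_j, t_j') = sin(pi * tau_jj' / 2), where columns[j] = (Y_1(t_j), ..., Y_N(t_j))."""
    m = len(columns)
    k = [[1.0] * m for _ in range(m)]
    for j in range(m):
        for jj in range(j + 1, m):
            k[j][jj] = k[jj][j] = math.sin(math.pi * kendall_tau(columns[j], columns[jj]) / 2.0)
    return k


rng = random.Random(3)
rho = 0.6
z1 = [rng.gauss(0.0, 1.0) for _ in range(500)]
z2 = [rho * a + math.sqrt(1 - rho ** 2) * rng.gauss(0.0, 1.0) for a in z1]
# Skewed, non-Gaussian margins that share the Gaussian copula of (z1, z2).
y1 = [math.exp(a) for a in z1]
y2 = [b ** 3 for b in z2]
k_hat = latent_corr_matrix([y1, y2])
```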

In our extensive simulation study, the two methods of estimating the copula correlation matrix performed almost identically. When the data are sparse and tij = tj for all i does not hold, then one can estimate the correlation matrix using the techniques in Yao et al. (2005). A full investigation of the sparse case is beyond the scope of this paper.

Suppose now that a t-copula is assumed. Consider the transformed random functions $\tilde Z_i(t) = T_\nu^{-1}\{W_i(t)\}$, where Tν is the t-CDF with ν degrees of freedom; these curves are distributed according to a t-process with ν degrees of freedom. One easy way to understand the t-process is through the following explicit construction. Let $Z_i(t)$ be a Gaussian process with mean 0 and covariance function K(s, t) = cov{Zi(s), Zi(t)} such that K(t, t) = 1, so that K(s, t) is also the correlation function. Write $\tilde Z_i(t) = Z_i(t)/\sqrt{V_i/\nu}$, where $V_i$ is a chi-squared random variable with ν degrees of freedom, independent of Zi. If ν > 2, then the covariance function of $\tilde Z_i$ is $\{\nu/(\nu - 2)\}K(s, t)$, and so its correlation function is K(s, t). If ν ≤ 2, then $\tilde Z_i$ has infinite second moments, so it does not have a covariance or a correlation function. However, K(·, ·) can still be regarded as an "association function" of $\tilde Z_i$, and it is the correlation function of Zi.
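The explicit construction is easy to check by simulation. The Python sketch below draws pairs (Z̃(s), Z̃(t)) = (Z(s), Z(t))/√(V/ν) with corr{Z(s), Z(t)} = ρ and V ~ χ²ν, and compares the sample variance and correlation with ν/(ν − 2) and ρ. The values ρ = 0.5 and ν = 6, the sample size and the seed are arbitrary choices made for the illustration.

```python
import math
import random


def simulate_t_pair(rho, nu, rng):
    """(Z(s), Z(t)) / sqrt(V / nu) with corr{Z(s), Z(t)} = rho, unit variances, V ~ chi^2_nu."""
    z1 = rng.gauss(0.0, 1.0)
    z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
    scale = math.sqrt(rng.gammavariate(nu / 2.0, 2.0) / nu)   # chi^2_nu = Gamma(nu/2, scale 2)
    return z1 / scale, z2 / scale


def sample_moments(pairs):
    """Sample variance of the first coordinate and sample correlation of the pair."""
    n = len(pairs)
    mx = sum(a for a, _ in pairs) / n
    my = sum(b for _, b in pairs) / n
    sxx = sum((a - mx) ** 2 for a, _ in pairs) / n
    syy = sum((b - my) ** 2 for _, b in pairs) / n
    sxy = sum((a - mx) * (b - my) for a, b in pairs) / n
    return sxx, sxy / math.sqrt(sxx * syy)


rng = random.Random(11)
rho, nu = 0.5, 6
draws = [simulate_t_pair(rho, nu, rng) for _ in range(100_000)]
var_hat, corr_hat = sample_moments(draws)
# Theory for nu > 2: variance nu / (nu - 2) = 1.5 and correlation rho = 0.5.
```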

Calibrating the t-copula requires estimation of both the correlation matrix K and the degrees-of-freedom parameter ν. Estimation of K using Kendall's tau is as for Gaussian copulas. To estimate ν, we use a “pseudo-likelihood” method. Pseudo-likelihood means estimating certain parameters by maximizing the likelihood with all other parameters fixed at estimates (Gong and Samaniego, 1981). Denote by $\hat K$ the estimate of the latent correlation matrix. Let $\hat F_j$ be the empirical distribution function of {Yi(tj) : i = 1, …, N}, except that the denominator is N + 1 rather than N. Let $\hat U_{ij} = \hat F_j\{Y_i(t_j)\} = R_{ij}/(N + 1)$, where Rij is the rank of Yi(tj) among {Yi(tj) : i = 1, …, N} and j = 1, …, m. The “full pseudo-likelihood” method for estimation of ν is to fit the t-copula to the $\hat U_{ij}$ with K fixed at the estimate $\hat K$.
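The pseudo-observations are simple rank transforms computed separately at each grid point. A minimal Python sketch, assuming no ties, is below. Fitting ν would then maximize the t-copula log-density of these values with K̂ held fixed; that step is not shown because it needs the t quantile function (for example, scipy.stats.t.ppf) and a multivariate t density.

```python
def pseudo_observations(column):
    """U_ij = R_ij / (N + 1): ranks of Y_1(t_j), ..., Y_N(t_j) divided by N + 1 (no ties)."""
    n = len(column)
    order = sorted(range(n), key=lambda i: column[i])
    u = [0.0] * n
    for rank, i in enumerate(order, start=1):
        u[i] = rank / (n + 1)
    return u


def pseudo_observation_matrix(data):
    """Apply the rank transform at each grid point; data[i][j] = Y_i(t_j)."""
    cols = [pseudo_observations([row[j] for row in data]) for j in range(len(data[0]))]
    return [[cols[j][i] for j in range(len(cols))] for i in range(len(data))]


data = [[3.2, 0.1], [-1.0, 0.7], [0.5, -2.0]]   # hypothetical: N = 3 subjects, m = 2 grid points
U = pseudo_observation_matrix(data)
```

Dividing by N + 1 rather than N keeps every value strictly inside (0, 1), so the copula density and the t quantile function remain finite.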

4. Predicting response trajectories

Our methodology allows prediction of the trajectory Y for a subject from irregular measurements by prediction of the latent process W. Let $\hat W_i(t)$ be the predicted latent process for the ith subject at a new time point t; the predicted response at time point t is then

$$\hat Y_i(t) = \hat\mu(t) + \hat\sigma(t)\, G^{-1}\{\hat W_i(t); \hat\alpha(t)\}. \qquad (5)$$

The prediction of the trajectory of the latent process differs according to the copula model assumed, and is detailed next.

Gaussian copulas

Consider first the case where the dependence is modeled with a Gaussian copula. Using (4), prediction of Wi is equivalent to prediction of Zi(t) = Φ−1{Wi(t)}, or in fact of the de-noised Zi. This is now easy since, under our model assumptions, Zi(t) can be expressed using the Karhunen-Loève expansion as $Z_i(t) = \sum_{\ell \ge 1} \lambda_\ell^{1/2}\xi_{i\ell}\psi_\ell(t) + \epsilon_i(t)$, where {ψℓ : ℓ ≥ 1} forms an orthonormal basis of the space of square-integrable functions and the ξiℓ are uncorrelated, with mean zero and variance one. Also, εi(t) is assumed to have mean zero and variance $\sigma_\epsilon^2$ for all t, and to be independent of the ξ's.

Estimates of the covariance function of the Zi's are obtained using the method of moments along with smoothing; see Section 3.2. Furthermore, estimates of the functional principal components (FPC) ψℓ, of the eigenvalues λℓ and of the noise variance $\sigma_\epsilon^2$ are obtained by applying Mercer's theorem to the covariance function of the de-noised curves. It follows that prediction of the trajectory {Zi(t) : t} reduces to prediction of the FPC scores ξiℓ. For this step we assume that the ξiℓ and εi(t) are jointly Gaussian. The best linear prediction of the FPC scores for the ith subject, given the data from that individual, is then the usual conditional expectation, $\hat\xi_{i\ell} = \lambda_\ell^{1/2}\psi_{i\ell}^T\Sigma_{Z_i}^{-1}Z_i$. Here Zi = {Zi(ti1), …, Zi(timi)}ᵀ is the transformed data for the ith individual, ψiℓ = {ψℓ(ti1), …, ψℓ(timi)}ᵀ, and $\Sigma_{Z_i}$ is the mi × mi covariance matrix of Zi, with (j, j′)th entry equal to $\sum_{\ell}\lambda_\ell\psi_\ell(t_{ij})\psi_\ell(t_{ij'}) + \sigma_\epsilon^2\,1(j = j')$.

Then the prediction of the de-noised transformed trajectory Zi(t) for the ith subject, using the first L eigenfunctions, is $\hat Z_i(t) = \sum_{\ell=1}^{L}\hat\lambda_\ell^{1/2}\hat\xi_{i\ell}\hat\psi_\ell(t)$, where the $\hat\psi_\ell$ and $\hat\lambda_\ell$ are the estimated FPC eigenfunctions and eigenvalues, respectively, and the $\hat\xi_{i\ell}$ are the estimated FPC scores. Here L is a finite truncation, determined, for example, by the percentage of explained variance (Di et al., 2009). It follows that the prediction of Wi(t) is $\hat W_i(t) = \Phi\{\hat Z_i(t)\}$.
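For a single subject, the conditional-expectation step and the back-transformation can be sketched in Python as follows. The sketch assumes the eigenfunctions, eigenvalues and noise variance have already been estimated (the ones in the usage lines are hypothetical) and that the scores have unit variance, as in the expansion above. The system ΣZi x = Zi is solved by Gaussian elimination instead of forming an explicit inverse. The predicted response would then follow from (5) by applying G−1{·; α̂(t)}, which is not repeated here.

```python
import math


def solve(a, b):
    """Solve a x = b for a small dense nonsingular matrix (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def predict_scores(ts, zs, eigfuns, eigvals, noise_var):
    """xi_hat_l = lambda_l^{1/2} psi_l^T Sigma^{-1} Z for one subject (unit-variance scores)."""
    m = len(ts)
    psi = [[f(t) for t in ts] for f in eigfuns]                  # psi[l][j] = psi_l(t_j)
    sigma = [[sum(lam * p[j] * p[k] for lam, p in zip(eigvals, psi)) + (noise_var if j == k else 0.0)
              for k in range(m)] for j in range(m)]
    x = solve(sigma, zs)                                         # Sigma^{-1} Z
    return [math.sqrt(lam) * sum(pj * xj for pj, xj in zip(p, x)) for lam, p in zip(eigvals, psi)]


def predict_latent(t, scores, eigfuns, eigvals):
    """De-noised Z_hat(t) = sum_l lambda_l^{1/2} xi_hat_l psi_l(t) and W_hat(t) = Phi{Z_hat(t)}."""
    z_hat = sum(math.sqrt(lam) * s * f(t) for lam, s, f in zip(eigvals, scores, eigfuns))
    return z_hat, 0.5 * (1.0 + math.erf(z_hat / math.sqrt(2.0)))


# Hypothetical estimated quantities, for illustration only.
eigfuns = [lambda t: math.sqrt(2.0) * math.sin(2.0 * math.pi * t),
           lambda t: math.sqrt(2.0) * math.cos(2.0 * math.pi * t)]
eigvals = [0.6, 0.3]
noise_var = 0.1
ts_i = [0.1, 0.3, 0.45, 0.8]        # irregular time points of subject i
z_i = [0.5, 0.9, 0.2, -1.1]         # transformed data Z_i(t_ij)
scores = predict_scores(ts_i, z_i, eigfuns, eigvals, noise_var)
z_hat, w_hat = predict_latent(0.6, scores, eigfuns, eigvals)
```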

t-copulas

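We consider the case of a t-copula model and assume that the degrees-of-freedom parameter ν is larger than 2. Now we need to work with $\tilde Z_i(t) = T_\nu^{-1}\{W_i(t)\}$, which requires transforming the data using the Tν distribution, instead of Zi(t), which uses the standard normal transformation. Notice that $\tilde Z_i(t) = \sum_{\ell \ge 1}\lambda_\ell^{1/2}\tilde\xi_{i\ell}\psi_\ell(t) + \tilde\epsilon_i(t)$, where $\tilde\xi_{i\ell} = \xi_{i\ell}/\sqrt{V_i/\nu}$ and $\tilde\epsilon_i(t) = \epsilon_i(t)/\sqrt{V_i/\nu}$, with Vi a chi-squared random variable with ν degrees of freedom as in Section 3.2, and the λℓ and ψℓ are defined as before. Although the Gaussian assumptions no longer hold in this case, there is empirical evidence (Yao et al., 2005) that the above formula for the predicted scores still provides the best linear prediction of the FPC scores $\tilde\xi_{i\ell}$, given the transformed data for the ith subject. Two modifications are needed: the variance of the noise becomes $\sigma_\epsilon^2\nu/(\nu - 2)$, and the variance of the scores becomes ν/(ν − 2). The prediction of the de-noised transformed trajectory for the ith subject is then $\hat{\tilde Z}_i(t) = \sum_{\ell=1}^{L}\hat\lambda_\ell^{1/2}\hat{\tilde\xi}_{i\ell}\hat\psi_\ell(t)$, and thus the prediction of Wi(t) is $\hat W_i(t) = T_{\hat\nu}\{\hat{\tilde Z}_i(t)\}$, where $\hat\nu$ is the estimated degrees of freedom of the t-copula.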

Prediction can also be done with standard functional PCA tools; Section 5.2 reports the prediction errors obtained by using conditional expectation directly (Di et al., 2009), and they are similar to those obtained by our method. However, our method also estimates the pointwise quantile functions, which are essential for prediction intervals. Pointwise quantile functions estimated by FPCA rely on a normality assumption for the scores and can fail badly, as discussed in Section 6 and illustrated in the supplementary material. Prediction methods currently do not exist for quantile regression because dependence is not modeled. However, it is possible to combine quantile regression for modeling the marginal distributions with our copula methodology for modeling dependence, as mentioned at the end of Section 6. For these reasons, we view our modeling approach as a unifying approach to both prediction and quantile estimation.

5. Simulation studies

We conducted an extensive simulation study to assess the performance of the estimation procedures described in Section 3. For each of the combinations of mean, standard deviation, shape parameter, and covariance function described below, 100 data sets, each consisting of N = 200 random trajectories, were generated from model (1). Each curve was sampled on a grid of equi-spaced time points {tij : j = 1, …, 80} in [0, 1], where i = 1, …, N. The simulated processes use $W_i(t) = F_{X,t}\{X_i(t)\}$, where the Xi are a sample of mean-zero Gaussian curves and $F_{X,t}(\cdot)$ is the CDF of Xi(t), 0 ≤ t ≤ 1.

We set Xi(t) = Zi(t) + εi(t), where the εi(t) are independent N(0, σ² = 0.10). Two main covariance structures of the underlying process Zi are considered:

I. Finite basis expansion. We assume that the Zi's are a sample of random curves with mean 0 and covariance function KZ(s, t) = cov{Zi(t), Zi(s)}. The covariance function has the expansion $K_Z(s, t) = \sum_{\ell=1}^{L} \lambda_\ell \psi_\ell(s) \psi_\ell(t)$ in terms of eigenfunctions ψℓ's and eigenvalues λℓ's. In the simulations, L = 3, and there were two cases:

(i) , and ; or

(ii) , and ,

where 0 ≤ t ≤ 1. We chose $\lambda_\ell = (1/2)^{\ell-1}$ for ℓ = 1, 2, 3.

II. Matérn covariance structure. We let Zi be a Gaussian process with mean zero, variance equal to 2, and Matérn autocorrelation function

| (6) |

with (i) range φ = 0.07 and order κ = 1; and (ii) with range φ = 0.14 and order κ = 1. Here Kκ is the modified Bessel function of order κ.
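The latent Matérn covariance of design II can be computed on the 80-point grid as below. Equation (6) is not reproduced here, so this sketch assumes the common parametrization ρ(d) = (d/φ)^κ K_κ(d/φ) / {2^(κ−1) Γ(κ)}; the paper's exact scaling of the range may differ. The function names are hypothetical.

```python
import numpy as np
from scipy.special import gamma, kv


def matern_corr(d, phi, kappa):
    """Matérn autocorrelation at lag(s) d.

    Assumed parametrization (may differ from (6)):
        rho(d) = (d/phi)^kappa K_kappa(d/phi) / (2^(kappa-1) Gamma(kappa)),
    with rho(0) = 1 by continuity.
    """
    x = np.abs(np.asarray(d, dtype=float)) / phi
    x_safe = np.where(x > 0, x, 1.0)            # avoid evaluating K_kappa at 0
    val = x_safe**kappa * kv(kappa, x_safe) / (2.0 ** (kappa - 1.0) * gamma(kappa))
    return np.where(x > 0, val, 1.0)


def matern_covariance(grid, phi, kappa, variance=2.0):
    """Covariance matrix of Z on a grid (variance 2, as in design II)."""
    lags = grid[:, None] - grid[None, :]
    return variance * matern_corr(lags, phi, kappa)


grid = np.linspace(0.0, 1.0, 80)                         # 80 equi-spaced points in [0, 1]
cov_i = matern_covariance(grid, phi=0.07, kappa=1.0)     # design (II i)
cov_ii = matern_covariance(grid, phi=0.14, kappa=1.0)    # design (II ii)
```

Doubling φ stretches the correlation, so design (II ii) has stronger dependence than design (II i) at every nonzero lag.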

We set Gt to be the standardized skew normal distribution with shape parameter α(t), which is implemented in the sn package of R. The standardization is such that the resulting distribution has mean 0 and variance 1. We consider all the possible combinations from the following scenarios:

(1) Mean function: (a) μ(t) = 6; and (b) μ(t) = −2.2t⁵ + 3t³ − 1.2t + 0.7;

(2) Variance function: (a) σ²(t) = exp(−5); and (b) σ²(t) = {2.2t⁵ − 3t³ + 1.2t + 0.3}/exp(4);

(3) Shape parameter function: (a) α(t) = 0; (b) α(t) = −2·1(t ≤ 0.5) + 4·1(t > 0.5); (c) α(t) = 5t² − 19t + 5; and (d) α(t) = −10 sin(2πt), for t ∈ [0, 1], where 1(·) denotes the indicator function.
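One simulated data set for scenario (1b), (2b), (3d) can be generated roughly as follows. This is a sketch under stated assumptions: model (1) is taken to have the location-scale form Yi(t) = μ(t) + σ(t) Gt⁻¹{Wi(t)}, with Wi(t) = FX,t{Xi(t)} as described above; the latent covariance is passed in as an argument, and all function names are hypothetical. The standardization uses the fact that a skew normal with shape α has mean δ√(2/π) and variance 1 − 2δ²/π, where δ = α/√(1 + α²).

```python
import numpy as np
from scipy import stats


def mu_1b(t):
    """Mean function (1b)."""
    return -2.2 * t**5 + 3 * t**3 - 1.2 * t + 0.7


def sigma2_2b(t):
    """Variance function (2b)."""
    return (2.2 * t**5 - 3 * t**3 + 1.2 * t + 0.3) / np.exp(4)


def alpha_3d(t):
    """Shape parameter function (3d)."""
    return -10 * np.sin(2 * np.pi * t)


def std_skewnorm_ppf(u, alpha):
    """Quantile of the skew normal with shape alpha, rescaled to mean 0, variance 1."""
    delta = alpha / np.sqrt(1 + alpha**2)
    mean = delta * np.sqrt(2 / np.pi)
    sd = np.sqrt(1 - 2 * delta**2 / np.pi)
    return (stats.skewnorm.ppf(u, alpha) - mean) / sd


def simulate_curves(cov_z, grid, n, rng, noise_var=0.10):
    """Draw n curves, assuming Y_i(t) = mu(t) + sigma(t) G_t^{-1}{W_i(t)}."""
    m = len(grid)
    z = rng.multivariate_normal(np.zeros(m), cov_z, size=n)       # latent Z_i(t)
    x = z + rng.normal(scale=np.sqrt(noise_var), size=(n, m))      # X_i(t) = Z_i(t) + eps_i(t)
    sd_x = np.sqrt(np.diag(cov_z) + noise_var)                     # sd of X_i(t)
    w = stats.norm.cdf(x / sd_x)                                   # W_i(t) = F_{X,t}{X_i(t)}
    g = std_skewnorm_ppf(w, alpha_3d(grid))                        # G_t^{-1}{W_i(t)}
    return mu_1b(grid) + np.sqrt(sigma2_2b(grid)) * g
```

Any 80 × 80 latent covariance matrix can be supplied, for example one from design I or design II; the data-generating step itself does not depend on that choice.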

5.1 Model estimation performance

For the estimation of the mean and variance functions, the smoothing parameters were selected using REML implemented in the R package mgcv (Wood, 2006). For the estimation of the shape parameter functions, we used cubic regression splines with 5 knots, with the smoothing parameter selected by the corrected AIC criterion. We used a Gaussian copula to model dependence. For bivariate smoothing of the covariance function, tensor product penalized cubic regression splines with 10 knots per dimension were used, and REML estimation was used for the selection of the smoothing parameter (Wood, 2006).

The supplementary material (Figure S1) includes an illustration of a data set generated from covariance structure (IIii), mean function (1b), variance function (2b), and shape function (3d), along with the estimates of the mean, log-variance and shape parameter functions from 100 simulated data sets. The estimates of all population-level functions show nearly no bias and various degrees of smoothness. In particular, the log-variance estimates are somewhat undersmoothed, which is expected when positively correlated data are smoothed under a working independence assumption (Altman, 1990; Hart, 1991). Nonetheless, the undersmoothing does not inflate the MSE by much, as shown below. In Table 1, we report estimates of the square root of the integrated mean squared error (IMSE), the integrated squared bias (ISBIAS), and the integrated variance (IVAR). For example, for the mean function estimator $\hat\mu(t)$, these quantities are defined as $\mathrm{IMSE} = \int_0^1 E\{\hat\mu(t) - \mu(t)\}^2\,dt$, $\mathrm{ISBIAS} = \int_0^1 \{\bar\mu(t) - \mu(t)\}^2\,dt$ and $\mathrm{IVAR} = \int_0^1 S^2(t)\,dt$. Here $\bar\mu(t)$ and $S^2(t)$ denote the sample mean and sample variance of $\hat\mu(t)$ over the simulated data sets, for 0 ≤ t ≤ 1. The results confirm our previous observations: the mean function estimator has very small IMSE, irrespective of the covariance structure. The somewhat larger IMSE of the log-variance function estimator is caused by its larger variability. For the shape parameter function estimator, both the bias and the variability contribute to a larger IMSE. In general, estimation of the mean, log-variance and shape functions is not affected by the structure of the dependence of the latent process when the latent process exhibits stronger dependence. As expected, when the latent process has very weak dependence, as in scenario (II i), better estimates are obtained for all the population-level functions.
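The three summaries in Table 1 can be computed from the replicated estimates on the grid as sketched below. The sketch assumes the usual definitions, IMSE = ∫ E{f̂(t) − f(t)}² dt, ISBIAS = ∫ {f̄(t) − f(t)}² dt and IVAR = ∫ S²(t) dt, with f̄ and S² the pointwise mean and variance of the estimates over simulated data sets, and integrates with the trapezoidal rule. Using divisor n_sim for S² makes the identity IMSE = ISBIAS + IVAR hold exactly; the function names are hypothetical.

```python
import numpy as np


def _trapezoid(f, x):
    """Trapezoidal rule for samples f on the grid x."""
    return float(np.sum((f[1:] + f[:-1]) * np.diff(x)) / 2.0)


def integrated_errors(estimates, truth, grid):
    """Square roots of ISBIAS, IVAR and IMSE over replicated simulations.

    estimates : array (n_sim, n_grid), one estimated function per data set
    truth     : array (n_grid,), the true function on the grid
    grid      : array (n_grid,), evaluation points in [0, 1]
    """
    mean_est = estimates.mean(axis=0)                  # pointwise sample mean
    var_est = estimates.var(axis=0)                    # pointwise variance, divisor n_sim
    mse = ((estimates - truth) ** 2).mean(axis=0)      # pointwise mean squared error
    isbias = _trapezoid((mean_est - truth) ** 2, grid)
    ivar = _trapezoid(var_est, grid)
    imse = _trapezoid(mse, grid)
    return np.sqrt(isbias), np.sqrt(ivar), np.sqrt(imse)
```

The last column of Table 1 can be checked against the first two in this way: for example, √(0.27² + 0.68²) ≈ 0.73, close to the reported 0.72 for the mean function under (I i).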

Table 1.

Square roots of ISBIAS, IVAR and IMSE for the estimates of the mean function (1b), the log-variance function (2b) and the shape parameter function (3d) when the latent process is specified as in (I i), (I ii), (II i) or (II ii).

| Latent covariance | Population-level function | √ISBIAS | √IVAR | √IMSE |
|---|---|---|---|---|
| (I i) | Mean | 0.27 | 0.68 | 0.72 |
| (I ii) | Mean | 0.27 | 0.70 | 0.75 |
| (II i) | Mean | 0.27 | 0.55 | 0.61 |
| (II ii) | Mean | 0.27 | 0.63 | 0.69 |
| (I i) | Log-variance | 1.71 | 10.06 | 10.16 |
| (I ii) | Log-variance | 1.45 | 10.45 | 10.50 |
| (II i) | Log-variance | 1.69 | 7.58 | 7.73 |
| (II ii) | Log-variance | 1.68 | 9.51 | 9.61 |
| (I i) | Shape | 67.35 | 111.36 | 129.67 |
| (I ii) | Shape | 66.44 | 112.39 | 130.07 |
| (II i) | Shape | 61.30 | 57.72 | 84.00 |
| (II ii) | Shape | 65.54 | 71.76 | 96.25 |

Figure 2 illustrates the performance of our methodology with respect to capturing the true dependence of the functional process. Figure 2 displays the estimates of the first three eigenfunctions and eigenvalues of the copula correlation matrix in 100 simulated data sets from scenario (Ii). The true eigenfunctions and eigenvalues correspond to the correlation function derived from the model specified by the covariance structure (Ii); these eigenfunctions/eigenvalues are different from the ones defining the covariance functions. The copula correlation was estimated using Kendall’s tau; however, similar performance was noted when the sample Pearson correlation of the transformed curves was used.

Figure 2.

Estimated eigenfunctions (gray lines, top panels) and eigenvalues (bottom panels) and their corresponding true eigenfunctions (black lines) / eigenvalues (dotted line) derived from the true model, when the model uses mean function (1b), variance function (2b), shape function (3d), and covariance structure (Ii).
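The Kendall's tau route to the copula correlation can be sketched as follows. The sketch assumes the standard relation ρ = sin(πτ/2), which holds for elliptical copulas such as the Gaussian and t-copulas; the details of the procedure in Section 3.2 may differ, and the function names are hypothetical. The resulting matrix is not guaranteed to be positive definite, and its eigenvectors are on the grid scale rather than normalized as eigenfunctions.

```python
import numpy as np
from scipy import stats


def copula_correlation_kendall(y):
    """Copula correlation matrix from pairwise Kendall's tau.

    y : array (n_subjects, n_timepoints); any monotone transformation of a
        column leaves tau, and hence the estimate, unchanged.
    """
    m = y.shape[1]
    r = np.eye(m)
    for j in range(m):
        for k in range(j + 1, m):
            tau, _ = stats.kendalltau(y[:, j], y[:, k])
            r[j, k] = r[k, j] = np.sin(np.pi * tau / 2.0)   # elliptical-copula relation
    return r


def leading_eigen(r, n_comp=3):
    """Largest eigenvalues of r and their eigenvectors, in decreasing order."""
    vals, vecs = np.linalg.eigh(r)                 # eigh returns ascending order
    order = np.argsort(vals)[::-1][:n_comp]
    return vals[order], vecs[:, order]
```

Since ranks are invariant to the pointwise marginal transformations, this estimator does not require the marginal distributions to be estimated first.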

5.2 Prediction performance

We investigate the mean prediction error in order to assess the performance of our prediction approach. Consider first a Gaussian copula and let , where is an estimate of the pointwise distribution of Yi(t); notice that can be obtained using estimates of the mean, standard deviation and shape parameter functions, or by using the pointwise empirical distribution function. Also let be the trajectory predicted by the approach in Section 4. Then the prediction error is determined as . If {ti1, … , timi} is the set of points at which the ith curve is observed for i = 1, … , n, then the IPE can be estimated by , where is determined using (5). Table 3 reports the estimated square root of the IPE using 100 simulated data sets from a few scenarios (IPEo), when Ft is estimated using the empirical CDF. In addition, it presents the average square root of the IPE for the trajectory of a new subject, which is observed either on the first half of the sampling points (IPEnew1) or at randomly selected time points (IPEnew2). More specifically, let {t1, … , tm} be the sampling points in our data, and let be the observed data corresponding to a new subject, where k < m. Our methodology allows us to predict the trajectory of this new subject at the sampling points at which it has not been observed; Table 3 gives the estimate of the average square root of the IPE, calculated as , where is the set of time points at which the subject has not been observed. For example, IPEnew1 uses for j = 1, … , k, while for IPEnew2 we select < … < randomly from {t1, … , tm}; in both scenarios k = 41. In all cases, the estimates of the mean, variance and shape parameter functions are obtained using the approach described in Section 3; for the shape parameter function, Alternative 1 was used.
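The prediction of a new subject's unobserved points can be illustrated on the latent Gaussian scale. This is a simplified stand-in, not formula (5): instead of the FPC-score predictor of Section 4, it uses the Gaussian conditional mean given the transformed observed values, in the spirit of the direct conditional-expectation approach of Di et al. (2009). The correlation matrix, the optional noise variance and the function names are assumptions for illustration.

```python
import numpy as np


def predict_unobserved(z_obs, obs_idx, corr, noise_var=0.0):
    """Conditional mean of the latent Gaussian curve at unobserved grid points.

    z_obs     : transformed observations, e.g. Phi^{-1}(F_t{Y(t)}), at obs_idx
    obs_idx   : indices of the observed grid points
    corr      : latent correlation matrix on the full grid
    noise_var : measurement-error variance on observed points (assumption)
    """
    m = corr.shape[0]
    mis_idx = np.setdiff1d(np.arange(m), obs_idx)
    s_oo = corr[np.ix_(obs_idx, obs_idx)] + noise_var * np.eye(len(obs_idx))
    s_mo = corr[np.ix_(mis_idx, obs_idx)]
    z_mis = s_mo @ np.linalg.solve(s_oo, z_obs)    # E[Z_mis | Z_obs = z_obs]
    return mis_idx, z_mis


def root_ipe(pred, truth):
    """Square root of the average squared error over the unobserved points."""
    return float(np.sqrt(np.mean((pred - truth) ** 2)))
```

With 80 grid points, the IPEnew1 design corresponds to obs_idx = np.arange(41), and the IPEnew2 design to 41 sorted indices drawn at random without replacement.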

Table 3.

Estimates of the square root of IPE using the observed data (IPEo) and for a new trajectory observed either at the first 41 sampling design points (IPEnew1) or at 41 random time points (IPEnew2). The counterparts obtained with common functional PCA tools (Di et al., 2009) are shown in parentheses. The scenarios use the skew normal distribution with mean (1b), log-variance (2b), shape parameter (3d) and covariance structures (I i)-(II ii). The correct copula model is used in the estimation.

| Latent cov. | Gaussian copula: IPEo | Gaussian copula: IPEnew1 | Gaussian copula: IPEnew2 | t-copula (4 d.f.): IPEo | t-copula (4 d.f.): IPEnew1 | t-copula (4 d.f.): IPEnew2 |
|---|---|---|---|---|---|---|
| (I i) | 0.025 (0.025) | 0.029 (0.036) | 0.025 (0.027) | 0.020 (0.020) | 0.023 (0.025) | 0.020 (0.020) |
| (I ii) | 0.029 (0.029) | 0.030 (0.037) | 0.028 (0.029) | 0.023 (0.023) | 0.023 (0.028) | 0.022 (0.023) |
| (II i) | 0.056 (0.055) | 0.125 (0.122) | 0.075 (0.077) | 0.045 (0.044) | 0.094 (0.096) | 0.056 (0.059) |
| (II ii) | 0.038 (0.038) | 0.139 (0.125) | 0.049 (0.052) | 0.031 (0.030) | 0.096 (0.099) | 0.037 (0.040) |

It appears that the latent covariance structure has a slight effect on the performance of the prediction, especially when forecasting the trajectory of a new subject. For both Gaussian and t-copulas, the prediction performance for the observed data, IPEo, is very good for designs (I i) and (I ii), but somewhat poorer when the latent correlation has a shorter range, as in designs (II i) and (II ii). We notice similar performance for the prediction of a new trajectory, although it also depends on the design of the time points at which the new subject is observed. The accuracy of the prediction is closer to that based on the observed data when the new subject is observed at 41 randomly selected time points (fourth and seventh columns of Table 3) than when it is observed at the first 41 time points (third and sixth columns of Table 3). Overall, our prediction approach performs well, and its accuracy is very close to that of common functional PCA tools (Di et al., 2009), as illustrated by Table 3.

6. Comparison between quantile regression and a Karhunen-Loève expansion

One main advantage of our model is that it both estimates the pointwise quantile functions and provides a Karhunen-Loève (KL) expansion to model dependence. We are not aware of any competing methodology with this feature. Nonetheless, nonparametric quantile regression and a KL expansion might be considered as alternatives to our methodology, so a comparison with them is in order. For fixed p ∈ (0, 1), our estimated quantile function is $\hat q_p(t) = \hat F_t^{-1}(p)$, the p-quantile of the estimated marginal distribution at t. Table 2 gives the square roots of the ISBIAS, IVAR and IMSE of $\hat q_p(t)$ for p = 1%, 2%, 5%, 10% and 50%, for one particular scenario. These results are compared with quantile regression estimates using constrained linear splines with an AIC-chosen roughness penalty, implemented in the R package cobs (Ng and Maechler, 2007). Our method outperforms cobs with respect to ISBIAS, IVAR and IMSE, especially at more extreme quantile levels. Of course, modeling dependence through the KL expansion is an advantage of our methodology over quantile regression, because the KL expansion provides a probability model for the data-generating mechanism, which facilitates prediction (Section 4) and allows model-based bootstrapping. However, nonparametric quantile regression is a good competitor with respect to marginal quantile estimation. For an additional comparison, in Figure S2 of the supplementary material, we plot for one scenario the quantile functions estimated with our method, with the cobs software, and with a KL expansion that assumes normally distributed scores. As expected, the quantile functions from the latter method are badly biased when the data are asymmetric. Moreover, although the KL expansion can model correlation, its Gaussian assumption means that it cannot accommodate tail dependence.
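The contrast between a skew-normal quantile and a quantile computed under a normal assumption can be sketched pointwise. This is an illustration under assumptions: the marginal at t is taken to be μ(t) + σ(t) times a standardized skew normal with shape α(t), and the "normal" version simply replaces that standardized law with the standard normal. The function names are hypothetical and this is not the cobs or FPCA procedure.

```python
import numpy as np
from scipy import stats


def std_skewnorm_ppf(p, alpha):
    """Skew normal quantile, standardized to mean 0 and variance 1."""
    delta = alpha / np.sqrt(1 + alpha**2)
    mean = delta * np.sqrt(2 / np.pi)
    sd = np.sqrt(1 - 2 * delta**2 / np.pi)
    return (stats.skewnorm.ppf(p, alpha) - mean) / sd


def model_quantile(p, mu, sigma, alpha):
    """Pointwise p-quantile mu(t) + sigma(t) * G_t^{-1}(p) (assumed marginal form)."""
    return mu + sigma * std_skewnorm_ppf(p, alpha)


def gaussian_quantile(p, mu, sigma):
    """The same quantile when the marginal is taken to be normal."""
    return mu + sigma * stats.norm.ppf(p)


# Strong left skewness (alpha = -10): the normal approximation puts the
# 1% quantile too high and the 99% quantile far too high.
for p in (0.01, 0.5, 0.99):
    print(p, model_quantile(p, 0.0, 1.0, -10.0), gaussian_quantile(p, 0.0, 1.0))
```

With matching mean and variance, the two versions agree only when α(t) = 0; elsewhere the normal-based quantiles are biased in both tails.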

Table 2. Square roots of ISBIAS, IVAR and IMSE for the estimates of the quantile functions at levels 1%, 2%, 5%, 10% and 50%, when the true mean function is (1b), the log-variance function is (2b), the shape parameter function is (3d) and the latent process is specified by (I i).

| Method | Quantile level | √ISBIAS | √IVAR | √IMSE |
|---|---|---|---|---|
| Model based | 1% | 0.46 | 1.58 | 1.64 |
| COBS | 1% | 1.40 | 1.95 | 2.39 |
| Model based | 2% | 0.40 | 1.40 | 1.45 |
| COBS | 2% | 1.12 | 1.45 | 1.83 |
| Model based | 5% | 0.33 | 1.17 | 1.21 |
| COBS | 5% | 0.71 | 1.18 | 1.37 |
| Model based | 10% | 0.30 | 0.99 | 1.03 |
| COBS | 10% | 0.41 | 1.04 | 1.11 |
| Model based | 50% | 0.31 | 0.65 | 0.72 |
| COBS | 50% | 0.39 | 0.77 | 0.85 |

Our methodology uses a parametric model for the marginal distributions, and we have focused on the skew normal and skew t families, with only 3 and 4 parameters, respectively. In situations where the marginal distributions are not well approximated by either of these models, one could use a more highly parameterized model, such as a normal mixture. Alternatively, one could use quantile regression to estimate the marginal distributions in combination with our copula approach to modeling dependence.

7. Application: DTI tractography

We apply our proposed method to a study of the diffusion characteristics of white matter tracts in patients with multiple sclerosis (MS) and healthy controls. White matter tracts consist of axons that convey information between parts of the brain. These axons are covered with myelin, a white fatty coating. The myelin sheath helps the nerve transmit signals at a very fast rate. Myelin damage, as seen in MS and other demyelinating diseases, impairs axonal conduction and can be associated with axonal degeneration. Inflammatory demyelination and axon damage in the corpus callosum tract are prominent features of MS and may partially account for impaired performance on complex tasks (Ozturk et al., 2010).

DTI reveals microscopic details about the architecture of the white matter tracts by measuring the three-dimensional directions of water diffusion in the brain (Basser et al. 1994, 2000). Our study uses measurements of the parallel diffusivity within the corpus callosum for 162 MS patients and 42 healthy controls. Goldsmith et al. (2010) used parallel diffusivity within left intracranial cortico-spinal tracts to classify subjects as MS cases or controls. Figure 1 displays tract profiles sampled at 93 locations in MS patients and controls.

A quick inspection of Figure 1 suggests that the controls and MS patients have somewhat similar means but different variability and asymmetry across tract locations. We assumed that, at each tract location, the parallel diffusivity for each group has a skew t distribution with constant, but unknown, degrees of freedom and location-specific skewness. The degrees-of-freedom parameter (ν) was estimated by maximum likelihood under a working independence assumption. This approach yields for the MS group and for the control group. Because the estimate of ν for the control group is very large, and since the skew t distribution reduces to the skew normal when ν = ∞, we used the skew normal for this group.

The estimated mean, variance and skewness functions in the healthy volunteer and MS groups, along with confidence intervals for the corresponding difference functions, are included in the supplementary material, Figure S3. The results indicate a slightly larger mean parallel diffusivity for cases than for controls. Furthermore, 90% bootstrap confidence intervals (based on 1000 resamples) show significantly higher variability and considerably larger third-moment skewness coefficient functions for the parallel diffusivity in the MS group than in the control group. For the control group, the skewness function depends solely on the shape parameter of the skew normal distribution, whereas for the MS group it depends on both the degrees of freedom and the shape parameter. Figure S3 shows that the skewness function of parallel diffusivity in the MS sample is significantly larger than that in the control sample at locations 30-85 along the corpus callosum tract.
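The statement that the skew normal skewness depends only on the shape parameter can be checked directly. With δ = α/√(1 + α²) and b = δ√(2/π), the third-moment skewness coefficient is (4 − π)/2 · b³/(1 − b²)^(3/2), which is free of location and scale. The sketch below, with a hypothetical function name, compares this formula with SciPy's skew normal; it does not cover the skew t case used for the MS group, whose skewness also involves ν.

```python
import numpy as np
from scipy import stats


def skewnorm_skewness(alpha):
    """Third-moment skewness coefficient of the skew normal with shape alpha."""
    delta = alpha / np.sqrt(1.0 + alpha**2)
    b = delta * np.sqrt(2.0 / np.pi)                 # mean of the unit skew normal
    return (4.0 - np.pi) / 2.0 * b**3 / (1.0 - b**2) ** 1.5


alphas = np.array([-10.0, -2.0, 0.0, 2.0, 10.0])
print(np.round(skewnorm_skewness(alphas), 3))
```

The coefficient is odd in α and bounded in absolute value by about 0.995, so large pointwise skewness differences between groups reflect differences in the shape function rather than in the mean or variance.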

Figure 3 illustrates the comparison between the quantile functions of the parallel diffusivity profiles corresponding to the two groups at the quantile levels 1%, 50%, 95% and 99%. The lower quantile functions seem comparable for the two groups: this is expected when the mean functions are close to each other and the variance functions are small, which is our case. The higher level quantiles are indicative of the differences between the two groups in both the shape parameter and the variance. Figure 3 (bottom panel) shows the 90% pointwise confidence intervals of the estimated difference between the quantile functions of the two groups: the quantile functions for the MS group are significantly larger than the corresponding ones for the control group for probabilities of 50% and higher.

Figure 3.

Estimated quantiles of the parallel diffusivity profiles within the corpus callosum tracts of the MS patients and controls. Top panel: Estimated quantile functions for the MS group (black lines) and the control group (grey lines). Bottom panel: Estimated difference between the quantiles of the MS and control groups (solid lines) with 90% pointwise confidence intervals (dashed lines) from 1000 bootstrap replicates.
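The pointwise bootstrap intervals for a quantile difference can be sketched as below. This is a simplified percentile bootstrap under assumptions: whole curves (subjects) are resampled with replacement within each group, which preserves the within-curve dependence, and empirical pointwise quantiles are used as a stand-in for the model-based quantile estimates. The function name is hypothetical.

```python
import numpy as np


def bootstrap_quantile_diff(y_ms, y_ctrl, p, n_boot=1000, level=0.90, seed=0):
    """Pointwise percentile-bootstrap CI for q_p^MS(t) - q_p^control(t).

    y_ms, y_ctrl : arrays (n_subjects, n_locations), one curve per row
    p            : quantile level in (0, 1)
    """
    rng = np.random.default_rng(seed)
    est = np.quantile(y_ms, p, axis=0) - np.quantile(y_ctrl, p, axis=0)
    boots = np.empty((n_boot, y_ms.shape[1]))
    for b in range(n_boot):
        i_ms = rng.integers(0, y_ms.shape[0], y_ms.shape[0])      # resample MS curves
        i_c = rng.integers(0, y_ctrl.shape[0], y_ctrl.shape[0])   # resample control curves
        boots[b] = np.quantile(y_ms[i_ms], p, axis=0) - np.quantile(y_ctrl[i_c], p, axis=0)
    a = (1.0 - level) / 2.0
    lower, upper = np.quantile(boots, [a, 1.0 - a], axis=0)
    return est, lower, upper
```

A location is flagged when the interval excludes zero; with 90% pointwise intervals, some locations will be flagged by chance, so the intervals are not simultaneous bands.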

We first used Gaussian copulas, with group-specific parameters, to model the dependence in the parallel diffusivity profiles. Figure 4 shows the estimated eigenfunctions and eigenvalues of the correlation matrices for the MS patient and control groups. The similarity between these estimates in the two groups is remarkable. It suggests that models with common correlation functions can be used in future analyses to compare these groups of curves. At the same time, it indicates that the difference in the distributions of the parallel diffusivity profiles between the MS group and controls can be summarized solely by the marginal distributions. In particular, one could use the pointwise quantile functions obtained in the control group to identify the most unusual parallel diffusivity values in the MS group. Furthermore, because the mean and the variance are significantly larger in the MS group than in the control group irrespective of the tract location, one can use the pointwise skewness to identify the most interesting parts of the tract.

Figure 4.

Estimated eigenfunctions of the latent Gaussian copula correlation functions in the MS (black) and control (grey) groups. Percentages of explained variance for the MS and healthy subjects are given in parentheses.

In addition, t-copulas were fit to the MS and control groups. The correlation matrices, estimated by the Kendall’s tau approach in Section 3.2, are very similar to the Gaussian copula correlation matrices. The maximum likelihood estimates of copula degrees of freedom were 3.30 for the control group and 4.88 for the MS group. (These estimates should not be confused with the estimates of ν for the marginal skew t distributions.) Such small values of the copula degrees of freedom parameter imply substantial tail dependence. The tail dependence would have been missed if Gaussian models had been used, since there is no tail dependence in a multivariate normal distribution, except in the case of perfect correlation. The tail dependence also seems apparent in the data, for example in Figure 1 where individual curves that are extreme at one tract location tend to be extreme at other locations. This behavior would not occur if the data had a multivariate Gaussian distribution, even with high correlation, unless the correlation were perfect.
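The amount of tail dependence implied by these copula degrees of freedom can be quantified with the standard coefficient for a bivariate t-copula (see, e.g., McNeil et al., 2005): λ = 2 t_{ν+1}(−√{(ν + 1)(1 − ρ)/(1 + ρ)}), where t_{ν+1} is the t CDF with ν + 1 degrees of freedom. The sketch below uses a hypothetical function name, and the correlation ρ = 0.5 is an arbitrary illustrative value rather than an estimate from the data.

```python
import numpy as np
from scipy import stats


def t_copula_tail_dependence(rho, nu):
    """Tail-dependence coefficient of a bivariate t-copula.

    lambda = 2 * t_{nu+1}( -sqrt((nu + 1) * (1 - rho) / (1 + rho)) );
    upper and lower coefficients coincide by symmetry.
    """
    arg = -np.sqrt((nu + 1.0) * (1.0 - rho) / (1.0 + rho))
    return 2.0 * stats.t.cdf(arg, df=nu + 1.0)


# Copula d.f. estimates reported above; rho = 0.5 is an arbitrary illustration.
for group, nu in [("control", 3.30), ("MS", 4.88)]:
    print(group, round(float(t_copula_tail_dependence(0.5, nu)), 3))
```

The coefficient decreases toward 0 as ν grows, matching the Gaussian copula, whose tail-dependence coefficient is 0 whenever |ρ| < 1.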

Our findings are consistent with previous studies of diffusivity, which have shown that acute axonal injury at first decreases parallel diffusivity, but, as the tissue repairs, parallel diffusivity normalizes and then increases above normal. This is likely due to increased overall diffusivity and is probably not specific to a particular kind of tissue injury (e.g., not specific to MS). Another possibility is that parallel diffusivity is overestimated because, as the tract atrophies, one gets contributions from adjacent cerebrospinal fluid (where diffusivity is high). However, the investigators have tried to minimize this effect, and it is not considered to be a major problem. The main contributions of this new method to the understanding of MS are (1) identification of the most severely affected cases and (2) identification of the interesting parts of the tract.

8. Conclusion

This paper introduces a model for functional data that exhibit non-Gaussian characteristics such as skewness, heavy tails, and tail dependence. Our model includes a Karhunen-Loève expansion for a latent, but estimable, Gaussian process that induces dependencies. Our approach is based on copula methodology and combines elements of parametric and nonparametric modeling. However, robustness of our methodology to the choice of the parametric families of marginal and copula distributions and goodness-of-fit testing of these families remain open problems.

Acknowledgments

We are grateful to the Editor, Associate Editor and one Referee for their insightful comments. Staicu’s research was partially supported by NSF grant DMS 1007466. The research of Crainiceanu and Ruppert was supported by NIH grant R01NS060910. The authors would like to acknowledge Peter Calabresi for providing the data and the National Multiple Sclerosis Society and EMD Serono for providing funds for data collection.

Footnotes

Supplementary material

Figures S1-S3 referenced in Sections 5–6 are available under the Paper Information link at the Biometrics website http://www.biometrics.tibs.org.

References

- Altman N. Kernel smoothing of data with correlated errors. Journal of the American Statistical Association. 1990;85:749–759.

- Azzalini A. A class of distributions which includes the normal ones. Scandinavian Journal of Statistics. 1985;12:171–178.

- Azzalini A, Capitanio A. Distributions generated by perturbation of symmetry with emphasis on a multivariate skew t distribution. Journal of the Royal Statistical Society, Series B. 2003;65:367–389.

- Azzalini A. R package 'sn': The skew-normal and skew-t distributions (version 0.4-15). 2010. URL http://azzalini.stat.unipd.it/SN.

- Basser P, Mattiello J, LeBihan D. MR diffusion tensor spectroscopy and imaging. Biophysical Journal. 1994;66:259–267. doi: 10.1016/S0006-3495(94)80775-1.

- Basser P, Pajevic S, Pierpaoli C, Duda J. In vivo fiber tractography using DT-MRI data. Magnetic Resonance in Medicine. 2000;44:625–632. doi: 10.1002/1522-2594(200010)44:4<625::aid-mrm17>3.0.co;2-o.

- Carroll RJ, Ruppert D, Welsh AH. Local Estimating Equations. Journal of the American Statistical Association. 1998;93:214–227.

- Crainiceanu CM, Staicu AM, Di C. Generalized Multilevel Functional Regression. Journal of the American Statistical Association. 2009;104:1550–1561. doi: 10.1198/jasa.2009.tm08564.

- Di C, Crainiceanu CM, Caffo BS, Punjabi NM. Multilevel functional principal component analysis. Annals of Applied Statistics. 2009;3:458–488. doi: 10.1214/08-AOAS206SUPP.

- Embrechts P, McNeil A, Straumann D. Correlation and dependency in risk management: properties and pitfalls. In: Dempster MAH, editor. Risk Management: Value at Risk and Beyond. Cambridge University Press; Cambridge: 2002. pp. 176–223.

- Fernandez C, Steel MFJ. On Bayesian Modelling of Fat Tails and Skewness. Journal of the American Statistical Association. 1998;93:359–371.

- Ferraty F, Vieu P. Nonparametric Functional Data Analysis: Theory and Practice. Springer; New York: 2006.

- Genton MG, editor. Skew-Elliptical Distributions and Their Applications: A Journey Beyond Normality. Chapman & Hall/CRC; Boca Raton, FL: 2004.

- Goldsmith J, Bobb J, Crainiceanu CM, Caffo B, Reich D. Penalized Functional Regression. Journal of Computational and Graphical Statistics. 2011. doi: 10.1198/jcgs.2010.10007.

- Gong G, Samaniego FJ. Pseudo Maximum Likelihood Estimation: Theory and Applications. Annals of Statistics. 1981;9:861–869.

- Greven S, Crainiceanu CM, Caffo B, Reich D. Longitudinal Functional Principal Component Analysis. Electronic Journal of Statistics. 2010;4:1022–1054. doi: 10.1214/10-EJS575.

- Hart JD. Kernel regression estimation with time series errors. Journal of the Royal Statistical Society, Series B. 1991;53:173–187.

- Li Y, Ruppert D. On The Asymptotics Of Penalized Splines. Biometrika. 2008;95:415–436.

- McNeil A, Frey R, Embrechts P. Quantitative Risk Management. Princeton University Press; Princeton and Oxford: 2005.

- Ng P, Maechler M. A fast and efficient implementation of qualitatively constrained quantile smoothing splines. Statistical Modelling. 2007;7:315–328.

- Ozturk A, Smith SA, Gordon-Lipkin EM, Harrison DM, Shiee N, Pham DL, Caffo BS, Calabresi PA, Reich DS. MRI of the corpus callosum in multiple sclerosis: association with disability. Multiple Sclerosis. 2010;16:166–177. doi: 10.1177/1352458509353649.

- Ramsay JO, Silverman BW. Functional Data Analysis. 2nd ed. Springer; New York: 2005.

- Ramsay JO, Hooker G, Graves S. Functional Data Analysis with R and MATLAB. Springer; New York: 2009.

- Ruppert D. Selecting the number of knots for penalized splines. Journal of Computational and Graphical Statistics. 2002;11:735–757.

- Ruppert D, Wand M, Carroll R. Semiparametric Regression. Cambridge University Press; Cambridge: 2003.

- Sartori N. Bias prevention of maximum likelihood estimates for scalar skew normal and skew t distributions. Journal of Statistical Planning and Inference. 2006;136:4259–4275.

- Sklar A. Fonctions de répartition à n dimensions et leurs marges. Publ Inst Stat Univ Paris. 1959;8:229–231.

- Staicu A-M, Crainiceanu CM, Carroll RJ. Fast Methods for Spatially Correlated Multilevel Functional Data. Biostatistics. 2010;11:177–194. doi: 10.1093/biostatistics/kxp058.

- Staniswalis JG, Lee JJ. Nonparametric regression analysis of longitudinal data. Journal of the American Statistical Association. 1998;93:1403–1418.

- Wood S. Generalized Additive Models: An Introduction with R. Chapman and Hall/CRC; Boca Raton, FL: 2006.

- Yao F, Müller H-G, Wang J-L. Functional data analysis for sparse longitudinal data. Journal of the American Statistical Association. 2005;100:577–590.
