Abstract

Complex community oriented health care prevention and intervention partnerships fail or only partially succeed at alarming rates. In light of the current rapid expansion of critically needed programs targeted at health disparities in minority populations, we have designed and are testing a “logic model plus” evaluation model that combines classic logic model and query based evaluation designs (CDC, NIH, Kellogg Foundation) with advances in community engaged designs derived from industry-university partnership models. These approaches support the application of a “near real time” feedback system (diagnosis and intervention) that draws on organizational theory and social network theory to assess partnership dynamics, combined with logic model metrics.

Keywords: Partnership failure, Partnership success, Logic model design, Organizational theory, Partnership best practices, Health disparities evaluation, Cancer program evaluation, Community engaged evaluation, Organizational culture evaluation, Social network models of partnerships

1. Introduction

Partnerships, or structured collaborations across organizations, are increasingly promoted as a strategy to achieve both synergy and efficiency. Complex research and educational partnerships have enormous potential for innovation, productivity, and failure at high cost. The organizational research literature demonstrates that voluntary partnerships fail between 70 and 90 percent of the time, especially when the partnerships include some combination of business, industry, academics, communities, and government. The classic, deficit model on partnership failure is well documented (Arino & de la Torre, 1998; Duysters, Kok, & Vaandrager, 1999; Gulati, Khanna, & Nohria, 1994; Meschi, 1997; Nahavandi & Malekzadeh, 1988) and there are increasingly sophisticated best practices models available to help overcome the deficits (Briody & Trotter, 2008; Leonard, 2011; Mendel, Damberg, Sorbero, Varda, & Farley, 2009; Walsh, 2006). Following these cautions and models, we are defining our partnership (the Partnership for Native American Cancer Prevention – NACP) as a voluntary association of individuals embedded in a formal cross-institutional collaborative program (U-54). NACP is focused on an over-arching set of goals, objectives, and principles for accomplishing research on cancer health disparities in Native American communities. The partnership has both formal and informal elements (goals, objectives, processes, behaviors) that are accomplished through both formal and informal activities and relationships.

In spite of the grim statistics on partnership failure, the federal government is constantly funding large multisite, multi-institutional projects like NACP that are designed to produce breakthrough “translation science” for health and medical care innovations. These innovation partnerships are increasingly complicated and often made up of “silos” trying to be integrated systems. Examples of “silos” abound in academic and research organizations, and also exist in communities and other partnerships. A silo is an organizational structure that is focused on the successful completion of a relatively narrow but integral part of the organizational mission, and as a consequence is resistant to intrusions (or extrusions) that challenge the existing paradigm. Even within single educational or research institutions, academic departments often have virtually impermeable boundaries that reduce the opportunity for cross-, multi-, and inter-disciplinary creativity, cooperation and productivity. This issue becomes even more problematic when multiple institutions, each with their own silos, are involved.

The overall goal of NACP is to connect researchers across disciplines and to integrate the sometimes disparate missions of outreach to communities and feedback from communities (especially ones that are significantly culturally different from the universities and researchers) while supporting the differing missions of training and research. Such connection and integration must deliberately span natural and artificial boundaries (i.e., the boundary or “siloing” mechanisms natural to people who effectively live in different cultural milieus). One consequence of the need to reduce “siloing” is the need for an evaluation model that shows the integration of organizational units across boundaries, as well as the impact of diversity of viewpoint on the productivity of the units within those boundaries. A recent review (Uzzi, Mukherjee, Stringer, & Jones, 2013) found that scientific publications bringing or citing novel perspectives into an otherwise “conventional” approach were likely to have higher than average citation rates (interpreted as having the highest impact overall). Such papers were more often produced by teams of collaborating authors than by single authors. Thus the success of teams in melding differences of perspective can be critical to achieving the hoped-for outcomes for collaboration, and in turn this highlights the importance of being able to monitor and manage the effectiveness of the team or partnership. This approach attempts to improve the process needed to move innovations “from bench to bed to community” and to have a significant impact on the new directions for personalized medicine, precision medicine and population medicine at the broadest level. Small simple projects have not produced the desired results.
Successful translation science and health disparities programs need to effectively engage multiple, often competing, and always complex stakeholder groups in research partnerships in order to move innovation and translation of health care innovations from bench to bed to community and back again. This ongoing change in both the scale and the complexity of translation science partnerships presents a number of new, not simply larger, challenges to evaluation theory and science.

We are proposing (and currently testing) an evaluation model that brings together standard evaluation design elements informed by recent organizational theory focused on partnership dynamics (Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008, Chap. 2). Several key elements of the challenges for complex health partnership assessment have been clearly addressed by Scarinci and colleagues (Scarinci, Johnson, Hardy, Marron, & Partridge, 2009) in their innovative evaluation design for a complex community engaged cancer health disparities program. Our model, which is being deployed in the Partnership for Native American Cancer Prevention,1 follows and expands on the Scarinci et al. model through the incorporation of a “Logic Model Plus” design which includes partnership dynamics feedback (partnership metrics) in addition to traditional output metrics (Logic Model). The model uses both query-based evaluation approaches and standard output metrics, but additionally evaluates the dynamics of the partnership relationships, which unfortunately are the most common source of partnership failure (Trotter & Briody, 2006).

Our model acknowledges the fact that community engaged translational research is especially challenging in projects that include community partners, governmental partners, and university–research institution partners in a complex mix (Brinkerhoff, 2002; Brown et al., 2013; Scott & Thurston, 2004; Zhang, 2005). Collaborating partners are frequently engaged in very different cultures, organizational structures, goals, and measures of success, requiring differentiated approaches to the appropriate assessment process (that is, both metrics and qualitative assessments; cf. Brett, Heimendinger, Boender, Morin, & Marshall, 2002; Ross et al., 2010). Care providers function under different expectations and constraints than do government agencies, social service entities, advocacy groups, and the general public, each of which has its own culture and all of which are commonly grouped under the heading “community” (Bryan, 2005; Reid & Vianna, 2001). Meanwhile, academic researchers have their own distinctive cultures and reward systems, which frequently clash with the timelines and value systems of the other partners. Investments in major partnerships are intended to further collaborative efforts, but these efforts can be sabotaged by cultural differences among partners if those differences are not acknowledged and specifically accounted for, especially in any on-going evaluation design (Hindle, Anderson, Giberson, & Kayseas, 2005). A well-designed and responsive evaluation system can do much to monitor and to facilitate management of such cultural differences and their impact on effective functioning within a partnership. This paper proposes an expanded evaluation and intervention model for creating, maintaining, and exiting successful university, government, and community partnerships for cancer workforce training, community outreach, and basic translational science projects.

2. Previous models and previous lessons learned

The growth rate for institutionally based research partnerships has exploded, with serious scholarly attention to that expansion beginning around 2000 (American Council on Education, 2001; Brinkerhoff, 2002; Ertel, Weiss, & Vision, 2001). Part of that attention results from the failure statistics cited above, as well as close attention to a “deficit” approach to the evaluation of failed programs. The reasons behind unacceptable failure rates are increasingly well known (Arino & de la Torre, 1998; Gill & Butler, 2003; Mohr & Spekman, 1994), predictable (Sastry, 1997; Spekman & Lamb, 1997; Turpin, 1999), and in many cases preventable with a solid diagnostic and feedback evaluation design (Sengir et al., 2004a, 2004b; Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008). The benchmark literature has been confirmed repeatedly and the reasons for success and failure have remained stable through time. Partnerships most commonly fail because of a breakdown in relationships among partners, and only secondarily from lack of resources, technology failures, or unsuccessful science, even though the partners often cite the other three issues as the causal explanation for the relationship failure. As mentioned above, one critical design advancement has been the expansion and refinement of the model to include both institutional and community impacts, as well as outcomes (Scarinci et al., 2009). This article adds a third element, relationship dynamics, to the other elements and presents a diagnostic and maintenance evaluation model derived from a university–industry generated asset based best practices model that more closely predicts the success and failure of partnerships (Trotter & Briody, 2006; Trotter, Briody, et al., 2008).

3. Context: the Partnership for Native American Cancer Prevention

In 2001 the National Cancer Institute established a program of investment in academic partnerships aimed at reducing minority health disparities in cancer burdens, using the U54 (collaborative partnership program) funding mechanism now known as PACHE (Partnerships to Advance Cancer Health Equity). These partnerships are designed to link one of the nation's comprehensive cancer centers (highly productive and successful research centers at major research universities) with an institution of higher education that serves significant numbers of students (and communities) from under-represented groups encountering cancer health disparities (Native American, African American, Hispanic, Pacific Islander, etc.). The specific aims of these partnerships are to reduce cancer disparities by: (1) building cancer research capacity at the non-cancer-center partner institution; (2) recruiting and training an increasingly diverse and culturally sensitive biomedical workforce by building synergies across the two institutions; and (3) designing and carrying out research efforts aimed directly at understanding and mitigating cancer disparities. The Native American Cancer Research Partnership (CA 96281) was first funded by NCI in 2002 as a collaborative project between the University of Arizona's Arizona Cancer Center (located in Tucson, AZ) and Northern Arizona University (Flagstaff, AZ). The Partnership is focused on cancer burdens experienced by Native American tribal communities in the American Southwest, especially Arizona. There are 23 federally recognized tribes in Arizona, and the Partnership seeks to engage specific tribal nations in collaborative research and outreach programs aimed at understanding and reducing cancer impacts in those communities. 
After an initial five years of funding, the Partnership experienced several leadership changes and a hiatus of funding, as well as a drastic change in evaluation strategy (from an annual, minimal external review by an independent evaluator, to an integrated multi-layered evaluation and feedback system). In 2008, renamed as the Partnership for Native American Cancer Prevention (NACP), the group was successful in competing for full support as a U54 through mid-2014. A new evaluation team and process were established at that point, aimed at more complete tracking of short, mid-, and long-term outcomes relevant to each of the specific aims, and additionally at a more complete assessment of the overall partnership dynamics. Critical concerns for the Partnership included communication and decision making mechanisms within and between institutions (e.g., among the researchers, outreach personnel, project leadership, and students) as well as issues of communication and management between university and tribal governments. While often unacknowledged, there are significant cultural differences between the two universities (even though they are part of a single state university enterprise), one being a Top 25 research university and the other a doctoral high-research institution that continues to view a high-quality undergraduate education as its highest mission. The leadership team for NACP has sought to use evaluation data and reports to do formative assessments useful in management and guidance of Partnership activities, including assessment of communication and challenges in collaboration within and across institutions. Evaluation efforts are also being used to prepare data on accomplishments as well as identify opportunities for future work, for use in future proposals for external funding.

4. Development of the NACP evaluation model

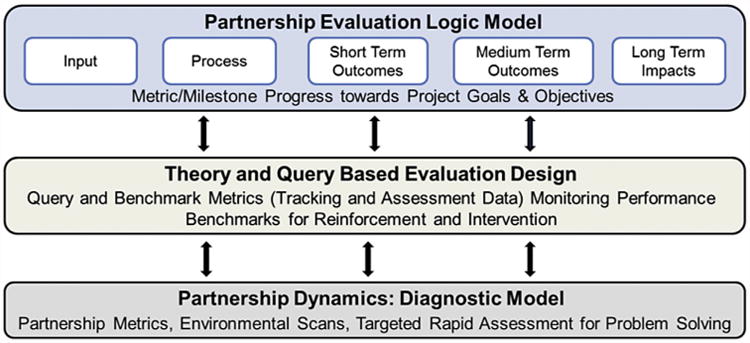

The NACP evaluation model is a synthesis of several well tested evaluation designs, including the work of the CDC Evaluation Working Group (CDC Local Program Evaluation Planning Guide), the W. K. Kellogg Evaluation handbook, the Urban Institute evaluation design, plus the National Cancer Institute's models for program evaluation, each of which can be found at easily accessible web sites,2 combined with a university–industry best practices and asset based model for research development (Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008). The former models are primarily either logic model designs, or logic model plus benchmarked and milestone (process assessment) designs. Based on empirical evidence of the success of a more integrated monitoring, feedback and intervention design (Sengir et al., 2004a, 2004b; Trotter, Sengir, et al., 2008), the NACP leadership team decided to adopt a more complex evaluation model and test it as a part of the overall scientific enterprise for the U-54 partnership. Because of the extremely high failure rate of complex research partnerships, we have added a significant “partnership dynamic” element to our design in order to address the “non-science” threats to the success of our partnerships. The resulting composite design can be described as a “Logic Model Plus” design. The integrated evaluation model has three components, graphically represented in Fig. 1.
These are (1) a solid logic model evaluation design that allows for the development of short, medium, and long term metrics and milestones for each sub-project or component in the partnership (individual research projects, outreach activities, training activities); (2) a query based evaluation model (qualitative query and quantitative hypothesis driven approaches to provide a theory based evaluation design) that also includes an environmental scanning element to identify emerging problems and resolve them; and (3) a relationship dynamics component to monitor and provide feedback on the partnership elements of the program. The model integrates an NIH standard “logic model” evaluation enhanced by a “theory based” query and milestone assessment approach. The final element is the inclusion of a targeted diagnostic and intervention capacity that incorporates contemporary organizational and evaluation theories into the evaluation design, diagnostic process and intervention targeting process.

Fig. 1.

Integrated NACP evaluation model.

This overall evaluation model for NACP also includes a standard mix of process, impact, and outcome evaluation procedures that assess how well and to what extent the NACP program is achieving its proposed outcomes. The model combines a quasi-independent evaluation with an administrative “diagnostic and intervention” feedback mechanism.

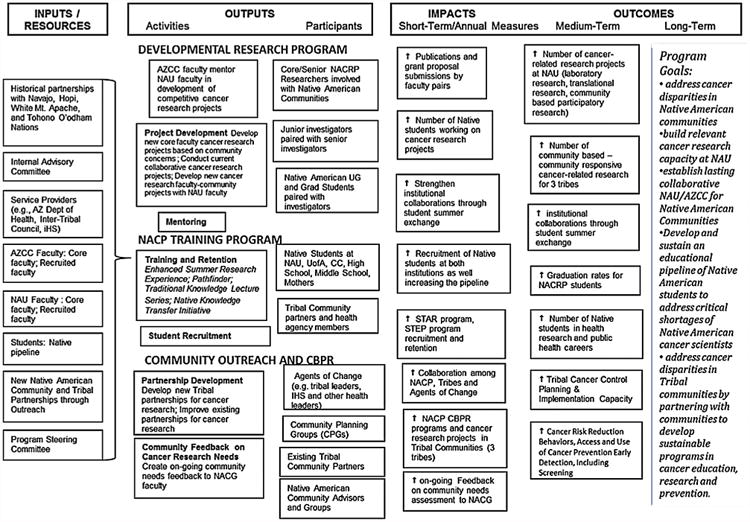

4.1. NACP logic model

The NACP logic model incorporates the basic outcome measures identified for each of the resources and activities in the program (administration, research, outreach, and training) with the necessary benchmarks and baseline data for the assessment of each disparate or overlapping program element. These metrics are enhanced by milestone (both process and outcome evaluation benchmarks) assessment and feedback, plus student and program tracking systems. The overall structure of the partnership logic model design is informed by the NCI Prevention Research Center recommendations for program evaluation metrics (Manning, McGeary, Estabrook, & Committee for Assessment of NIH Centers of Excellence Program, 2004). This part of the integrated evaluation model provides excellent guidance for creating both baseline and outcome metrics for specific parts of any complex program. The following NACP logic model visually demonstrates the complexity and the potential for integration of various elements. The NACP logic model provides a critical framework for the instrument development and data collection employed in our evaluation design. Each short, medium and long-term outcome identified in the logic model has a specific data collection instrument associated with it, segmented by core activity (training, research, outreach, administration). The existing data collection instruments are used to monitor and report programmatic progress. Feedback on specific metrics is provided on a monthly, quarterly, six-month, and/or annual basis to key personnel to monitor progress and correct under-performance if necessary (Fig. 2).

Fig. 2.

Native American Cancer Prevention Project Logic model.

Combining the expert identified metrics of the logic model with our key theory based queries and the relationship dynamics model resulted in the development of 27 “logic model plus” data collection instruments. A small set of examples of these instruments (where data is collected across potential organizational boundaries and disciplinary silos) is presented in Table 1. Full descriptions of instruments are available on request.

Table 1.

Examples of NACP logic model plus associated data instruments.

| Instrument name | Description of data collected/metrics |
|---|---|
| Outreach | |
| Community event form/evaluation of event | Includes data on tribal affiliation, date, community event information, contact for meeting organizer and outcomes/future tasks. Monitors community outreach outcomes and impacts, including events that engage researchers and training elements of NACP in the outreach effort. |
| Training | |
| Mentor/Mentee evaluation forms | Student data, core participation (research, training, outreach), academic information (majors, research focus, research project title, learning goals, expected degree date), progress on research activities (level of participation, skills acquired), satisfaction with the research activities performed, comments, mentor/mentee relationship (based on guidance/support provided, approachability, feedback/comments on work submitted). Provides both process and outcome metrics for student training and training outcomes. |
| Research | |
| Research progress form | Project title (NACP funded); UA partner researcher/amount of time spent each week collaborating, communicating, or working with NACP students and faculty, etc.; manuscripts in press, published, in progress; current funded research projects; proposals for external review funded, declined, in review; Research Aims. This database provides quarterly updates on all research outputs and dissemination activities, plus grant activity related to meeting NIH/NCI goals for the research program elements of NACP. |
| Administration/evaluation | |
| Milestone/benchmark monitoring | Short term updates based on the short term goals outlined in the NACP logic model. Medium term goal updates based on the medium term goals outlined in the NACP logic model. Provides quarterly feedback on milestones and benchmarks met, in progress, or needing intervention. |
| Partnership questionnaire | Deployed to NACP core, IAC, PSC, funded project PIs and all students involved in research labs, summer training program students and mentors. Questions include: frequency of communication, importance of communication compared with others on the list, importance of the shared work you do, level of cooperation with this person, level of conflict with this person. Purpose is to monitor NACP partnership health and test the “logic model plus” evaluation design and outcomes. |

4.2. Partnership evaluation query/theory based design

All individual elements of the NACP logic model, and the short to long term outcomes, are organized in relation to the query based evaluation design in the Logic Plus model. The query based element in the evaluation design allows us to test project specific metrics and milestones (performance metrics and benchmarks) that can be framed as either hypotheses, using an evaluation theory based approach, or qualitative inquiries that can be addressed in a mixed methods approach. These query based questions were derived through two parallel approaches. First, once the formal goals, metrics and milestones for each element of the partnership (administration, evaluation, research, outreach, and training) were established, we reviewed the existing literature to see what formal hypotheses had been assessed in the organizational literature, focusing on the goals and objectives of each program element. Second, we did an expert-based query design based on asking the PIs what questions (or hypotheses) they felt should be answered in relation to their area of endeavor and the other partnership elements. We are now in the process of answering the expert questions, and testing a subset of the hypotheses for the query based design, based on a total of 4.5 years of evaluation data collection. This analysis relates, primarily, to the final impact measures for the project, since the short and medium term output and the process measures have been provided on a continuing basis. Combining the logic model metrics with a “query based” theoretical approach allows us to set and test hypotheses about both the partnership dynamics and the individual program outputs, or more importantly, about the interaction of the two.
Examples of some of the process and outcome queries for NACP include “Is the program working as planned?” “Are the program's goals being met?” “To what extent and in what time frame are the three primary programs (collaborative research mentoring, educational programs, community outreach and CBPR) being implemented as planned?” “To what extent are new, enhanced, or expanded (multi-site and multi-disciplinary) research projects, methods, approaches, tools, or technologies being identified and initiated?” “To what extent are the outreach, community based, participatory efforts (1) maintaining existing historic collaborative relationships, (2) developing new collaborative relationships beyond the baseline, (3) creating the infrastructure to identify and transmit the community needs (cancer disparities) to the researchers, and (4) providing information, program direction and feedback from the researchers to both individuals and institutional representatives for Native American communities?” These and other theory driven queries will be answered in depth in future analyses and publications of the NACP evaluation data.

4.3. Relationship dynamics evaluation design

The relationship dynamics portion of our evaluation model grew out of a “best practices” assessment of university–industry research collaboration programs (Sengir et al., 2004a, 2004b; Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008). Trotter and colleagues were asked to document the reasons for the success of four industry–university collaborative research laboratories to allow for expansion of the research partnership paradigm. Two of the very useful findings, which have subsequently been incorporated into our current design, were (1) that there is a predictive/diagnostic life cycle for partnerships that captures the structural dynamics of a partnership over time and can be used to identify and fix structural level problems, and (2) that there is also an excellent diagnostic of individual contributions and relationships embedded within the “relationship dynamics” elements of the evaluation model that can be used to identify individual and sub-group (i.e., siloing) issues (working groups, partnership bridges, and succession plan replacement functions when people are added to or lost from the partnership). Partnerships succeed when they have the appropriate structure, acceptable joint vision (aims, objectives), and positive relationship dynamics (Briody & Trotter, 2008). Following the basic evaluation aphorism that “you get what you measure,” our Logic Model Plus design measures and monitors partnership “health” in addition to measuring benchmarks, milestones, and logic model metrics. The original research that led to the current model focused on university–industry research collaborations and produced a diagnostic model plus a set of best practices that we have modified to fit a university–NIH-community partnership, rather than a university–industry partnership.
The original research demonstrated that complex voluntary partnerships have predictable “stage-based” life cycles and that the partnership dynamics for each stage can be monitored for either health or intervention in terms of the institutional structure of the partnership or the individual relationship dynamics (positive or needing intervention) (Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008). That research also demonstrated that a basic systems dynamics model of relationship dynamics was a useful tool for both diagnosis and maintenance of partnership health (Briody & Trotter, 2008; Sengir et al., 2006, 2007; GP-303794: 845OR-66). The baseline and ongoing evaluation and tracking milestones, along with process measures (qualitative and quantitative), should be seen as the primary theory-driven measures that are identified in the evaluation questions.
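
The sub-group (siloing) diagnostic described above can be illustrated with a small sketch. It is not the systems dynamics model used in the cited research. As a simple stand-in, it uses the E-I index from social network analysis (external ties minus internal ties, divided by total ties), applied to ties of the kind a partnership questionnaire might report. The function name, respondent names, group labels, and ties are all hypothetical.

```python
from collections import defaultdict


def ei_index(ties, group_of):
    """Return the E-I index per group: (external - internal) / (external + internal).

    ties: iterable of (person_a, person_b) pairs, treated as undirected.
    group_of: dict mapping each person to a group label (e.g. a core or institution).
    Values near -1 suggest an inward-looking ("siloed") group; values near +1
    suggest that most ties span the group boundary. Groups with no ties get None.
    """
    internal = defaultdict(int)
    external = defaultdict(int)
    for a, b in ties:
        group_a, group_b = group_of[a], group_of[b]
        if group_a == group_b:
            internal[group_a] += 1
        else:
            # A boundary-spanning tie counts as external for both groups.
            external[group_a] += 1
            external[group_b] += 1
    scores = {}
    for group in set(group_of.values()):
        total = internal[group] + external[group]
        scores[group] = None if total == 0 else (external[group] - internal[group]) / total
    return scores


# Hypothetical respondents and reported communication ties (illustration only).
group_of = {
    "ana": "research", "ben": "research", "cy": "research",
    "dee": "outreach", "eli": "outreach",
}
ties = [("ana", "ben"), ("ana", "cy"), ("ben", "cy"), ("cy", "dee"), ("dee", "eli")]
scores = ei_index(ties, group_of)
```

In this made-up example the research group has three internal ties and one external tie, so its score is negative, which is the kind of signal that might prompt a closer look at whether a working group is becoming isolated. Tie weights such as frequency of communication or level of conflict are ignored here for brevity.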

4.4. Implementation of the evaluation

The first six months of NACP start-up were devoted to the basic logistics of the evaluation implementation. The goals, objectives, and milestones for each core were converted into short, medium and long term metrics supported by appropriate data collection instruments and database design. Instruments were designed as fillable Word forms, PDF forms, or web based (LimeSurvey) electronic forms. The forms were pilot tested and some adjustments were made in terms of variables and metrics that fit the overall goals of each element of the partnership, with serious attention paid to response burden and frequency of data collection. The average time spent on the instruments, per person, per month is approximately 12 min (with a subjective time estimate by the participants of about 1 h). Only a small subset of the 27 instruments is deployed each month. One of the key elements of this part of the evaluation process was to design and test the feedback systems to meet both the administrative and external oversight needs of the program. The logic model data are collected at varying intervals, and reported within those same intervals. Outreach activities are constant and on-going, and require monthly data collection, leading to both monthly and quarterly reporting. Research activity is continuous, but the metrics (publications, grant writing, research milestones, etc.) are best collected and reported on a quarterly basis. Training activity (mentoring, research skills development) has multiple data collection and reporting time schedules depending on the context and timing of training program processes, since those training activities include student lab experiences and mentoring experiences (data collected once each semester), summer training programs (pre-post data collected for each type of program), internship experiences, and some forms of formal training (data collected in relation to each experience).
Interspersed with this scheduled activity (data collection forms go out once a month to the appropriate individual, reports go to appropriate stakeholders once a month based on the previous month's data collection), we also conduct special (mixed method) assessments of specific program features at the request of the PIs or investigators. Feedback is given on those special projects as special reports. Cumulative quarterly reports are provided on all of the evaluation metrics. As a consequence, the fourth quarter report is also a cumulative annual report. Both the reports and the data collection are iterative processes and are expected to evolve over time as needed.
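
The collection and reporting cadence described above can be sketched as a small lookup. This is only an illustration under stated assumptions: the dictionary layout, function name, and report labels are hypothetical, and training is left out because its schedule depends on semesters and individual program dates rather than a fixed monthly cycle.

```python
# Cadences as described in the text; the names and layout are hypothetical.
COLLECTION_EVERY_N_MONTHS = {
    "outreach": 1,  # outreach data are collected monthly
    "research": 3,  # research metrics are collected quarterly
}


def due_this_month(month_index):
    """Return (cores collecting data, reports due) for a month of the project year.

    month_index: 1..12, counted from the start of the project year.
    """
    if not 1 <= month_index <= 12:
        raise ValueError("month_index must be between 1 and 12")
    cores = sorted(
        core for core, every in COLLECTION_EVERY_N_MONTHS.items()
        if month_index % every == 0
    )
    reports = ["monthly"]
    if month_index % 3 == 0:
        # The fourth quarter report doubles as the cumulative annual report.
        reports.append("annual cumulative" if month_index == 12 else "quarterly cumulative")
    return cores, reports
```

Under these assumptions, `due_this_month(6)` lists both outreach and research as collecting data and returns a monthly plus a cumulative quarterly report, while month 12 returns the cumulative annual report in place of a separate fourth quarter report.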

The complexity of our data collection, analysis and reporting procedures produced several generic challenges to implementing our evaluation model. The first challenge was a lack of experience on the part of a significant portion of the NACP participants in understanding the expanded data collection needs in all three areas of focus (research, training, and outreach), beyond simple counts (number of publications, number of students, number of community events). The enhanced data collection process led to grumbling and very slow responses or occasional failures to submit data. We were very fortunate that both the lead PIs and the external advisory committee understood the long term value of the overall evaluation data and supported the data collection in spite of the grumbling, so we were able to collect the data with minimal late or missing data (although a significant amount of follow up was needed to achieve that goal). Now, virtually all of the PIs are using the evaluation data for (1) competitive renewals, (2) ancillary grants, and (3) publications on the accomplishments of the program. The second challenge was the labor intensity involved in our evaluation design. Once set up, the data collection is fairly user friendly. In order to minimize the response burden, the various instruments are deployed with the best periodicity for our reporting requirements to both the administration and the external and internal advisory committees. On the other hand, the evaluation staff is under constant time pressure to conduct the analysis and prepare the reports (monthly, quarterly, annual, and occasional special reports). Correct staffing and workflow improvements are a constant challenge. The third challenge was securing the attention of the appropriate individuals in relation to the organizational feedback (diagnostics and corrections) and, where necessary, corrective action.
The volume of data produced for the whole group was challenging, as was the need to do targeted corrections in workflow, productivity and relationships.

On the positive side, the process of feedback and stakeholder engagement is a major element that promotes the synthesis of our qualitative and quantitative data. While the evaluation core can produce critical insights, including an on-going synthesis of both the qualitative and quantitative data simultaneously from all three parts of the model (logic model, queries, and relationship dynamics), no amount of synthesis is useful without action. Our model uses a multi-targeted, hierarchical engagement model for the evaluation data. Different levels of synthesis are presented to each of the primary target audiences. Action only happens if those audiences hear and understand the connections between the data and their desired outcomes. The primary responsibility for synthesis of our mixed methods data falls on the evaluation core; however, the ultimate responsibility for acting on that synthesis rests with the internal and external advisory committees and the administrative core.

4.5. Example of logic model data produced by the logic plus design

The following table is an example of the feedback provided to the training and outreach cores, all core PIs, and administration in relation to the quarterly data collection and the cumulative annual data collection. We chose the metrics on the NACP training program as an example of typical quarterly and annual feedback for the training core, as well as feedback for the outreach core (increase in tribal consultation activities and increase in CBPR activities, both of which require forms of data collection beyond the typical counts of publications and grants that are required for NIH funded projects) (Table 2).

Table 2.

Examples of short term, cumulative outcome progress.

| Logic model outcome measures | NACP short term outcome progress Year 4 Quarter 1 |
|---|---|
| Increase the number of Native students working on cancer research projects | NACP has trained 198 students (Summer Training Programs and Research Projects combined) Years 1–4 (as of Y4/Q1) |
| | 45.95% of the students trained (N = 91) were American Indian or Alaska Native |
| | Students being mentored in NACP labs/research projects |
| | Year 1: Percent of AI/AN Students working on NACP projects = 34% |
| | Year 2: Percent of AI/AN Students working on NACP projects = 43% |
| | Year 3: Percent of AI/AN Students working on NACP projects = 55% |
| | Year 4: Percent of AI/AN Students working on NACP projects = 67% |
| Increase recruitment of Native students at both institutions as well as increasing the pipeline | Summer Training Program AI/AN student participation: |
| | Year 1 (N = 16) AI/AN participated in summer training programs |
| | Year 2 (N = 21) AI/AN participants in summer training programs |
| | Year 3 (N = 18) AI/AN participants in summer training programs |
| | NACP Pipeline: |
| | 198 students participated in NACP programs in the 4 year project period, 66% (N = 131) of NACP students moved through an informal NACP pipeline (that is, students moved from one NACP program or activity to another) |
| | NACP needs to formalize the Pipeline program goals & pathways for students |
| Increase collaboration among NACP, tribes, and agents of change | Ongoing collaborations are occurring among NAU, UACC/UA and Tribal Cancer Partnership, Tribal Cancer Support Services, and Tribal Human Research Review Board, Tribal Department of Health, and the Intertribal Council of Arizona (ITCA) |
| Increase NACP CBPR programs and cancer research projects in Tribal communities | Starting with the 2011 NACP Call for Proposals a specific focus was directed at research that includes collaboration with Native American Communities |
| | Summer 2012 Research Retreats held July 2011 & 2012 |
| | Number of Outreach Events/Activities that occurred in the three tribal communities (Cumulative Years 1–4, through Y4Q1) (N = 317) |
| | Number of Outreach Meetings that occurred in the three communities (cumulative as of Y4Q1) (N = 836) |

These data were used for monitoring and for both annual and quarterly planning exercises, in order to identify and reinforce success in the training program. In addition, our milestone data have been particularly useful in monitoring and reporting progress on key elements of our processes and procedures and in assuring that major goals remain on track.

4.6. Example of mixed method assessments

The overall NACP methodological approach to data collection and analysis follows a “mixed-methods” design that includes both qualitative and quantitative data collection and analysis. As an example, student progression through training, mentoring, and support is measured simultaneously through training program metrics and through qualitative assessment tools such as interviews and focus groups. The numbers identify changes in outcomes through time, and the qualitative data explain the context and the reasons for those changing numbers. The result is a set of evidence based best practices for program development and program maintenance. Table 3 includes the totals for all student participants in NACP programs to date, including the total number of students by year across all research projects and the percent of American Indian and Alaska Native (AI/AN) students. These numbers show considerable progress in recruiting Native American students into the short training programs, the mentoring programs, and the various long term laboratory experiences.

Table 3.

Cumulative student participation NACP training (Summer Programs & Research Projects).

| Training program type | Year 1 total students | Year 1 percent AI/AN | Year 2 total students | Year 2 percent AI/AN | Year 3 total students | Year 3 percent AI/AN | Year 4 (b) total students | Year 4 (b) percent AI/AN |
|---|---|---|---|---|---|---|---|---|
| Training programs | 35 | 48 | 50 | 60 | 48 | 60 | 17 | 82 |
| NACP research projects | 40 | 22 | 60 | 28 | 52 | 61 | 34 | 67 |
| Cumulative (a) | N = 73 | 34 | N = 104 | 43 | N = 85 | 55 | N = 40 | 67 |

(a) The total numbers for each program (summer & NACP research projects) do not equal the cumulative totals because some students participated in more than one program in a year. Each student is only counted once in the cumulative totals.

(b) Year 4 data do not include summer training programs, as they are scheduled for summer 2013. Year 4 data also do not include spring 2013 students working on research projects.
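The counting rule in the first table note (each student is counted once in the cumulative row, even when enrolled in more than one program) can be sketched as follows. This is a minimal illustration, assuming enrollment records carry a student identifier and an AI/AN flag; the record layout, the function name, and the sample records are hypothetical and are not NACP data.

```python
from collections import namedtuple

# Hypothetical record layout: one row per student per program.
Enrollment = namedtuple("Enrollment", ["student_id", "program", "is_aian"])


def cumulative_summary(enrollments):
    """Return (unique_students, percent_aian), counting each student once."""
    unique = {}
    for record in enrollments:
        # A later record for the same student overwrites the earlier one,
        # so a student in two programs still contributes a single entry.
        unique[record.student_id] = record.is_aian
    total = len(unique)
    aian = sum(1 for flag in unique.values() if flag)
    percent = round(100 * aian / total) if total else 0
    return total, percent


# Illustrative records only.
records = [
    Enrollment("s1", "summer", True),
    Enrollment("s1", "research", True),
    Enrollment("s2", "research", False),
    Enrollment("s3", "summer", True),
    Enrollment("s4", "research", False),
]
total_students, percent_aian = cumulative_summary(records)
program_rows = len(records)  # per-program rows add up to more than the unique total
```

In this toy example the per-program rows sum to five while the cumulative count is four, which is the same pattern that makes the program rows in Table 3 exceed the cumulative row.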

Qualitative student feedback was collected as a part of the evaluation instruments for the NACP Program, and these data were subsequently integrated into the analysis of the overall progress of the training program. For example, one student shared what they learned during the program: “I did do a lot but I learned a lot. It was tons of work but necessary. Some of it was not explained to me but I learned through trial and error.” Students also commented on their interest in being involved in research in the future. One participant said, “I enjoyed working in the X Laboratory very much. I hope to work in the X Lab again soon.” The coding and analysis of the qualitative data have led directly to mentoring and student experience improvements for the program as a whole. In addition, with the students' permission, requests for future engagement in specific mentoring arrangements are both encouraged and accommodated whenever possible through informal channels.

4.7. Partnership diagnostics and dynamics

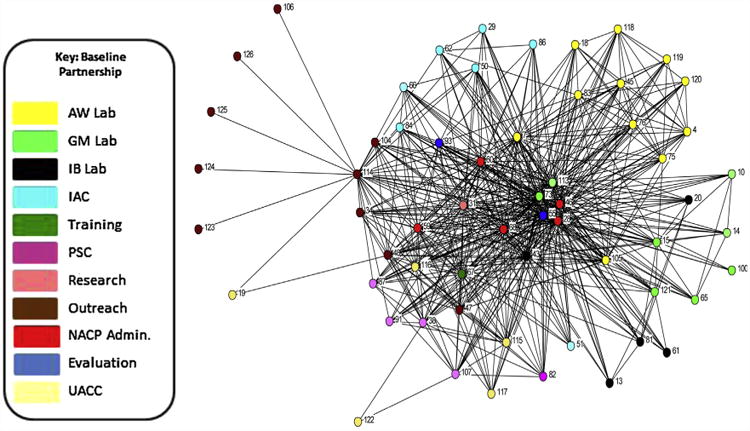

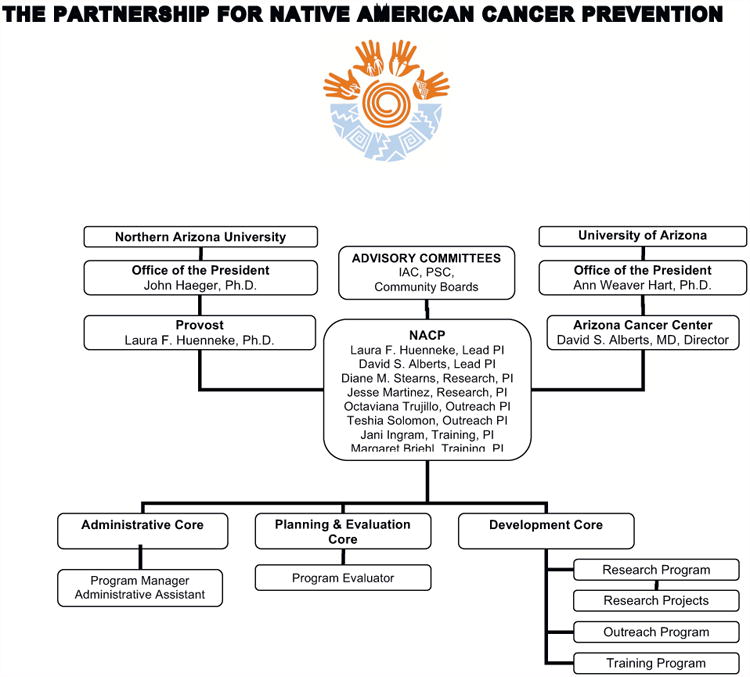

Complex organizational structures are often displayed as organizational charts. NACP's structure can be described as a multiple PI structure with three cores being collaboratively administered across institutions. When depicted in an organizational chart, it has a hierarchical (ladder-like) structure with paired PIs working across each organizational boundary.

In contrast, the partnership dynamics data, collected primarily through the annual partnership survey, depict the actual (network based) relationships and dynamics (communication, joint work, trust, cooperation, conflict, etc.) at work in the partnership. The partnership metrics (Trotter, Sengir, et al., 2008) include monitoring the structural elements (size, evolutionary stages, structural holes, roles, fragmentation, reach (communication), and structural distance patterns) of the partnership as it transitions through a predictable life cycle (Trotter, Briody, Catlin, Meerwarth, & Sengir, 2004). The partnership dynamics include identification of key player positions (Borgatti, 2003; Trotter & Briody, 2006), as well as timely or premature transitional stages. The overall model also identifies potential succession candidates for key positions, communication issues that need to be reinforced or mitigated, the level of conflict and cooperation in the system, and the basic partnership structure compared with best practices models of partnership structure.

As an example, the Year 1 partnership structural diagram (Fig. 4) depicts the actual relationships that developed within the partnership as a whole during its first six months of operation. As can be seen, the official organizational chart (Fig. 3) is a poor representation of the multiplex relationships in the working partnership. The circles in the diagram represent each of the named individuals in the partnership, and the lines between them show a composite view of their interconnected relationships. Fig. 4 represents the dynamic aspects of the partnership structure, including the two universities and the communities receiving outreach activities during the early (start-up) stage of the partnership. At this stage, the expected structure of the partnership is a very densely connected core (globular structure) with sub-regions (note the clustering of the yellow and green dots representing research labs) that are connected working groups embedded in the structure. The core is the glue that holds the structure together over time by maintaining goals, objectives, and processes and transmitting those to the nascent working groups, while also monitoring progress and in some cases intervening if problems arise. The figure also shows how outside groups (in this case community outreach activity) start at the periphery of the organizational core (note the fan shaped structure on the left). Over time, that working group will become more connected (integrated) in the overall structure, with additional connections beyond the original bridge (outreach coordinator). Through time, as NACP is successful, the structure will evolve in line with the research partnership life stage predictive model (Sengir et al., 2004a, 2004b; Trotter et al., 2004).

Fig. 4.

Year 1 NACP partnership structure.

Fig. 3.

NACP formal organizational structure.

The NACP relationship dynamics analysis aims at assessing the overall health of the partnership by comparing the NACP partnership structure and dynamics with the anticipated model developed for industry–university partnerships (Sengir et al., 2006; Trotter, Briody, et al., 2008; Trotter, Sengir, et al., 2008). When compared to the model's ideal “life stage based” partnership structure (Trotter et al., 2004), the NACP partnership shows appropriate structure and development for its evolutionary stage. The colored dots represent different functioning parts of the partnership structure (labs, administrative core, outreach, training, etc.), and also show an appropriate level of connection and communication for this stage of development. This structure represents a solid and stable (well connected) core of individuals who provide the relationship “glue” holding the partnership together and on course. The clusters of colored dots represent coherent working groups that are sub-groups within the overall structure. These are well connected within the subgroup, but also connected, through the core, to other subgroups. This provides a mechanism for rapid communication of goals and directions, while also preserving close cooperation within subunits. Additional analyses of roles, key player attributes, fragmentation statistics, and other sociometrics (Trotter & Briody, 2006) are then available as diagnostic or reinforcement (best practices) information that can be used to enhance the partnership aspects of the project, in addition to the logic model and query based metrics.
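To make the idea of structural sociometrics concrete, the following minimal sketch computes two widely used network statistics from an undirected tie list: density (the share of possible ties that are present) and a component-based fragmentation score (the share of pairs of people who cannot reach one another through any chain of ties). The text does not specify the formulas used in the NACP analysis, so these definitions, the function names, and the toy network are assumptions chosen for illustration only.

```python
def unique_edges(edges):
    """Collapse repeated or reversed pairs into one undirected tie; drop self-ties."""
    return {frozenset(pair) for pair in edges if pair[0] != pair[1]}


def density(nodes, edges):
    """Share of all possible undirected ties that are actually present."""
    n = len(nodes)
    if n < 2:
        return 0.0
    return 2 * len(unique_edges(edges)) / (n * (n - 1))


def components(nodes, edges):
    """Return connected components as sets (all tie endpoints must be in nodes)."""
    adjacency = {node: set() for node in nodes}
    for edge in unique_edges(edges):
        a, b = tuple(edge)
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen, found = set(), []
    for node in nodes:
        if node in seen:
            continue
        stack, component = [node], set()
        while stack:
            current = stack.pop()
            if current in component:
                continue
            component.add(current)
            stack.extend(adjacency[current] - component)
        seen |= component
        found.append(component)
    return found


def fragmentation(nodes, edges):
    """Share of ordered pairs of people with no connecting path (0 = fully connected)."""
    n = len(nodes)
    if n < 2:
        return 0.0
    reachable = sum(len(c) * (len(c) - 1) for c in components(nodes, edges))
    return 1 - reachable / (n * (n - 1))


# Toy network (hypothetical roles, not NACP survey data).
nodes = ["core1", "core2", "lab1", "lab2", "outreach1", "community1"]
edges = [("core1", "core2"), ("core1", "lab1"), ("core2", "lab2"),
         ("lab1", "lab2"), ("core1", "outreach1")]

d = density(nodes, edges)
f = fragmentation(nodes, edges)
# Adding a bridge from the outreach coordinator to the isolated member
# connects the whole network, so fragmentation drops to zero.
f_bridged = fragmentation(nodes, edges + [("outreach1", "community1")])
```

In the toy network a single bridging tie removes all fragmentation, which mirrors the role the text assigns to the outreach coordinator as the original bridge between the core and a peripheral group.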

Going forward, the logic model metrics and the relationship dynamics data will be longitudinally compared (over the 5 year life cycle) with the original best practices partnership model in order to (1) compare the basic structural and relationship dynamics of university–industry partnership models with those of university–university–community models of partnership and (2) test the efficacy and organizational impact of the logic model plus design of the NACP evaluation model against the outcomes and impacts of the first (differently evaluated) period of the U-54 project, and potentially against matched case studies of other U-54s if possible.

5. Lessons learned

One of the key lessons learned is that our multifaceted evaluation strategy and data collection process produce different “value-added” elements for various partnership stakeholders, within different timeframes, and oriented to differing expectations, needs, and perceptions. Understanding this lesson has been crucial to effectively disseminating information and understandings about the partnership. When our partnering Native communities have been concerned about not deriving value from the Partnership, we have found it both necessary and beneficial to modify our evaluation, data collection, and dissemination frameworks to meet their expectations, based on both community requests and our own concern for following appropriate, culturally sensitive, ethical guidelines. Another lesson learned is that there is a certain amount of ambiguity about the protection of human subjects in research (IRB concerns, concerns over the responsible conduct of science, concerns over cross-cultural applicability of processes and procedures) when general program evaluation data are compared with research data that will be disseminated. Our standard procedure, based on our desire to include the new evaluation model as a part of the overall research orientation of the NACP program, has been to consistently, from the start, take the most conservative approach: to request IRB approval and oversight for all of the data collected within the scope of the NACP evaluation model, and to follow all required informed consent procedures for any qualitative, quantitative, or administrative data.

It has also been important to understand that higher education is not a monolithic culture. The assumption that the institutional and academic value of the partnership (funding, long-term employment prospects) is “obvious” to both academic partners fails the partnership dynamics test, because there are significant differences in culture, resources, assumptions, reward systems, and relationship assumptions between the two partner research universities, although both are managed by a single state's public education enterprise (cf. Franz, Childers, & Sanderlin, 2012). Since standard evaluation processes sometimes omit sampling or tracking these issues (community or inter-university), they are kept underground, with consequent minor, and sometimes major, partnership failures. Some of the stakeholders in the partnership are actively engaged, but others are less directly engaged, which makes some of the outcome data of less direct interest to some stakeholders. For example, our pipeline students constitute an audience that is asked to put a lot into evaluation (surveys, skill tests, mentoring activities) but may not personally see or derive much benefit from the short or even medium term outcomes of the evaluation process, even though their input is vital to the overall program success (Hensel & Paul, 2012). As a consequence, there is a constantly changing view of the utility and the perceived burden of our evaluation activities throughout the life cycle of the partnership that must be consistently addressed. Evaluation results must be clearly presented in terms and in formats that are appropriately designed to provide the maximum value to each stakeholder group (communities, institutions, NIH, scientists, students, educators, etc.). There is no single “one size fits all” process for effectively transmitting or disseminating the Logic Model Plus evaluation data to everyone.

We have certainly learned that our partnership works best when there is a clear common vision, integrated interaction, and strong positive relationship dynamics between and among the administration and the three functional core working groups (Research, Training, Outreach). The short and longer term outcomes clearly suffer when the partnership becomes fragmented or siloed. This seems obvious, but it is a central tenet of our model. The integrated evaluation methods serve to link the program components if they are used as a diagnostic and monitoring tool. However, even where the specific stakeholders or the larger group helped shape the outcomes to be tracked and produced the metrics encompassed in the evaluation tools, it is easy to forget that the model only works at full capacity when partners USE the results. Otherwise, it becomes an exercise to check off, a milestone to report, but not a tool to use. Consequently, we have learned that there is a need to build in formal time and effort to review evaluation results, discuss them, and use them for continuous improvement or for re-orientation of efforts. Even the periodicity of the evaluation data collection has turned out to be a useful feedback tool. Since data are collected (and reported) on a monthly, quarterly, semester, and annual basis with minimal delay, it is harder for stakeholders to ignore the actual outcomes and processes of the partnership when they have to report results on a regular basis. The structural (social network) element of the evaluation model has the ability to identify the “weakest links” in the partnership efforts. These weak links are identified in several ways: by low productivity (poor metrics from the logic model), by “weak structural ties” (connectivity, communication) in the relationship survey, and finally by process evaluation considerations (benchmarks, etc.). The evaluation model then allows mid-course corrections on a timely basis that otherwise would not have been made or might have been made only after serious damage had been done.
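The three ways of identifying weak links named above (logic model productivity, structural ties from the relationship survey, and process benchmarks) can be combined in a simple screening step. The sketch below is illustrative only: the field names, the thresholds, and the sample units are hypothetical assumptions, not values used in the NACP evaluation.

```python
from dataclasses import dataclass


@dataclass
class UnitIndicators:
    name: str
    output_ratio: float      # achieved / targeted logic model outputs
    reported_ties: int       # ties reported in the relationship survey
    milestones_missed: int   # process benchmarks missed this period


def flag_weak_links(units, min_ratio=0.5, min_ties=2, max_missed=1):
    """Return {unit name: [reasons]} for units that trip any threshold.

    The default thresholds are placeholders for illustration.
    """
    flagged = {}
    for unit in units:
        reasons = []
        if unit.output_ratio < min_ratio:
            reasons.append("low productivity")
        if unit.reported_ties < min_ties:
            reasons.append("weak structural ties")
        if unit.milestones_missed > max_missed:
            reasons.append("missed benchmarks")
        if reasons:
            flagged[unit.name] = reasons
    return flagged


# Hypothetical units (not NACP data).
units = [
    UnitIndicators("Lab A", output_ratio=0.9, reported_ties=6, milestones_missed=0),
    UnitIndicators("Lab B", output_ratio=0.4, reported_ties=1, milestones_missed=0),
    UnitIndicators("Outreach site C", output_ratio=0.8, reported_ties=5, milestones_missed=3),
]
weak_links = flag_weak_links(units)
```

Listing the reasons alongside each flagged unit keeps the output diagnostic rather than punitive, so that feedback can target the specific workflow, productivity, or relationship issue.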

Finally, one of the lessons learned is that while the full data from the Logic Model Plus evaluation model must be collected throughout the project, there is definitely a time sensitive (life cycle) differential in the utilization of the data. In the early stages of the program, the primary need is for monitoring and feedback on the basic metrics and milestones for the project, especially short term output metrics and organizational/process milestones that relate to startup and baseline productivity. The relationship dynamics data at that point in time are useful for determining whether the startup structure fits the overall predicted structure for the startup stage (Trotter & Briody, 2006). The midlife stage (starting at 12–18 months after project initiation, assuming a 5 year life cycle) focuses on a combination of short term metrics (quarterly progress toward annual goals and objectives) and medium range metrics (overall programmatic progress), with some additional information from the relationship dynamics modeling to identify “weak links” and difficult program structures that may be contributing to the health or lack of health of the partnership. The final stage, the transitional stage, is where all of the logic model metrics, query based metrics, and relationship dynamics data come into full and integrated, rather than particularized, use. The transition stage of any partnership produces a set of options that include dissolution of the partnership, as-is maintenance, or transformation and reinvigoration (Trotter, Briody, et al., 2008), depending on the cumulative power of the medium and longer term metrics (including impact metrics) and the mature relationship dynamics that would support one of these transitional options. On a very practical level, these evaluation results are highly valuable at this stage for answering key institutional questions about the risk and benefit of going after competing renewal opportunities for complex programmatic grants; the outcomes of the current partnership are available to demonstrate success, and the stability and future potential for survival of the partnership are well documented.

6. Summary

All of our information to date indicates that the logic model plus evaluation design is a successful innovation for complex inter-institutional and community engaged research and training partnerships. The data and the evaluation model design for the Native American Cancer Prevention project have already spun off a number of related partnership projects, including Bridges to Baccalaureate (NIH Grant #1R25GM102788; see footnote 3) and the Center for American Indian Resiliency (NIH Grant #1P20MD006872; see footnote 4), as well as other pending partnership grants that include Northern Arizona University and Native American communities in Arizona, using the success of the NACP model as a foundation for new initiatives. The Native American Cancer Prevention project is now in its mature stage (year 4) and is beginning to create transitional strategies (competing renewal efforts), using the cumulative data available through our evaluation model. The application of the Logic Model Plus approach will be more fully tested by the level of success in that transitional effort.

Biographies

Robert T. Trotter II is an Arizona Regents' Professor and Associate Vice President for Health Research Initiatives, Northern Arizona University. He serves as the evaluator for the National Cancer Institute funded Native American Cancer Prevention Program U54 grant, as well as the NIH funded Center for American Indian Resiliency grant (C.A.I.R.), and several R25 programs. He is a medical anthropologist and has published extensively in the areas of cross-cultural health care delivery systems, university–industry–community–health care partnerships, complementary and alternative medicine, cross-cultural applicability models, alcohol and drug abuse, and social networks.

Kelly Laurila, MA is the Evaluation Coordinator for four programs: (1) Partnership for Native American Cancer Prevention (1U54CA143925-01), (2) University of Arizona Cancer Center: Cancer Prevention and Control Translational Research (2R25CA078447-11), (3) Center for American Indian Resiliency (1P20MD006872-01), and (4) Bridging Arizona Native American Students to Bachelor's Degrees (1R25GM102788-01). She is the NAU Program Coordinator for the Clinical and Translation Science Institute. She has experience working with diverse populations conducting program evaluation, rapid assessment evaluation activities, and community-based needs assessments.

David S. Alberts, MD is a Regents Professor of Medicine, Pharmacology, Public Health and Nutritional Science and Director of the University of Arizona Cancer Center. He serves as the Contact Principal Investigator for the National Cancer Institute funded Native American Cancer Prevention U54 Grant in partnership with Northern Arizona University, Arizona. He is a clinical pharmacologist and medical oncologist, who specializes in women's cancer prevention and treatment and has published more than 700 journal articles, book chapters, and books. He serves as Chair of the Cancer Prevention Committee in the GOG.

Laura Huenneke, Ph.D. is Provost at Northern Arizona University; she served previously as dean and as vice president for research. She earlier spent 16 years in the Department of Biology at New Mexico State University. She earned a Ph.D. in Ecology & Evolutionary Biology from Cornell University; her research interests focus on the influence of biological diversity on ecosystem structure and function. She is also committed to effective communication of scientific and environmental understanding to the public. Currently she is the university's Lead Investigator for the Partnership for Native American Cancer Prevention, an NIH program building research and training capacity.

Footnotes

1. The Partnership for Native American Cancer Prevention is a cooperative partnership between Northern Arizona University (NAU, a minority serving institution), the University of Arizona Cancer Center (UACC), and the National Cancer Institute (Grant # 1U54CA143925-01). Funded by the National Cancer Institute.

2. Websites for evaluation design included in our model: www.cdc.gov/eval; www.dhs.cahwnet.gov/ps/cdic/ccb/TCS/html/Evaluation_Resources.htm; http://www.wkkf.org/knowledge-center/resources/2010/W-K-Kellogg-Foundation-Evaluation-Handbook.aspx; https://www.bja.gov/evaluation/guide/documents/evaluation_strategies.html; http://www.ncbi.nlm.nih.gov/books/NBK24675/.

3. The Bridges to Baccalaureate Program is an R25 training grant targeted at creating a successful pipeline program for Native American students. The program provides both laboratory and summer enhancement programs and experiences that support student career advancement in the biomedical sciences. The program includes partnerships between Northern Arizona University, Native American communities, and both tribal and community colleges.

4. The Center for American Indian Resiliency (C.A.I.R.) is a small center innovation grant focused on research, training, outreach, and community based participatory research and capacity development for Native American communities in Arizona. The program includes partnership activities between Northern Arizona University, Dine College, the University of Arizona, and tribal communities.

Contributor Information

Robert T. Trotter, II, Email: Robert.trotter@nau.edu.

Kelly Laurila, Email: Kelly.Laurila@nau.edu.

David Alberts, Email: dalberts@uacc.arizona.edu.

Laura F. Huenneke, Email: Laura.huenneke@nau.edu.

References

- American Councilon Education. Working together, creating knowledge. Washington, DC: University–Industry Research Collaboration Initiative; 2001. [Google Scholar]

- Arino A, de la Torre J. Learning from failure: Towards an evolutionary model of collaborative ventures. Organization Science. 1998;9(3):306–326. [Google Scholar]

- Borgatti SP. dynamic social network modeling and analysis: Workshop summary and papers. National Academy of Sciences; 2003. The Key Player Problem. [Google Scholar]

- Brett J, Heimendinger J, Boender C, Morin C, Marshall J. Using ethnography to improve intervention design. American Journal of Health Promotion. 2002;16(6):331–340. doi: 10.4278/0890-1171-16.6.331. [DOI] [PubMed] [Google Scholar]

- Brinkerhoff JM. Assessing and improving partnership relationships and outcomes: a proposed framework. Evaluation and Program Planning. 2002;25:215–231. [Google Scholar]

- Briody EK, Trotter RT., II . Partnering for performance: Collaboration and culture from the inside out. New York: Roman and Littlefield; 2008. [Google Scholar]

- Brown LD, Alter TR, Brown LG, Corbin MA, Flaherty-Craig C, McPhail LG, et al. Rural Embedded Assistants for Community Health (REACH) Network: First-person accounts in a community–university partnership. American Journal of Community Psychology. 2013 Mar;51(1–2):206–216. doi: 10.1007/s10464-012-9515-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bryan J. Fostering educational resilience and achievement in urban schools through school–family–community partnerships. Professional School Counseling. 2005 Feb;8(3):219–227. [Google Scholar]

- Duysters G, Kok G, Vaandrager M. Crafting successful strategic technology partnerships. R&D Management. 1999;29(4):343–351. [Google Scholar]

- Ertel D, Weiss J, Visioni LJ. Managing alliance relationships: A cross-industry study of how to build and manage successful alliances. Brighton, MA: Vantage Partners; 2001. [Google Scholar]

- Franz N, Childers J, Sanderlin N. Assessing the culture of engagement on a university campus. Journal of Community Engagement and Scholarship. 2012;5(2):29–40. [Google Scholar]

- Gill J, Butler RJ. Managing instability in cross-cultural alliances. Long Range Planning. 2003;36(6):543–563. [Google Scholar]

- Gulati R, Khanna T, Nohria N. Unilateral commitments and the importance of process in alliances. Sloan management review. 1994 Spring:61–69. [Google Scholar]

- Hensel NH, Paul EL, editors. Faculty support and undergraduate research: Innovations in faculty role definition, workload, and reward. Washington, DC: Council on Undergraduate Research; 2012. p. 159. [Google Scholar]

- Hindle K, Anderson RB, Giberson RJ, Kayseas B. Relating practice to theory in indigenous entrepreneurship: A pilot investigation of the Kitsaki Partnership Portfolio. American Indian Quarterly. 2005;29(Winter/Spring):1–23. [Google Scholar]

- Leonard J. Using Bronfenbrenner's ecological theory to understand community partnerships: A historical case study of one urban high school. Urban Education. 2011 Sep;46(5):987–1010. [Google Scholar]

- Schmitz C. LimeSurvey Project Team. LimeSurvey: An Open Source survey tool. Hamburg, Germany: LimeSurvey Project; 2012. http://www.limesurvey.org. [Google Scholar]

- Manning FJ, McGeary M, Estabrook R, editors. Committee for Assessment of NIH Centers of Excellence Program. NIH Extramural Center Programs: Criteria for Initiation and Evaluation. National Academies Press; 2004. p. 230. 0-309-53028-8, http://www.nap.edu/catalog/10919.html. [PubMed] [Google Scholar]

- Mendel P, Damberg CL, Sorbero MES, Varda DM, Farley DO. The growth of partnerships to support patient safety practice adoption. Health Services Research. 2009 Apr;44(2 Pt 2):717–738. doi: 10.1111/j.1475-6773.2008.00932.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Meschi P. Longevity and cultural differences of international joint ventures: towards time-based cultural management. Human Relations. 1997;50(2):211–228. [Google Scholar]

- Mohr J, Spekman R. Characteristics of partnership success: partnership attributes, communication behavior, and conflict resolution techniques. Strategic Management Journal. 1994;15:135–152. [Google Scholar]

- Nahavandi A, Malekzadeh AR. Acculturation in mergers and acquisitions. Academy of Management Review. 1988;13(1):79–90. [Google Scholar]

- Reid PT, Vianna E. Negotiating partnerships in research on poverty with community-based agencies. Journal of Socials Issues. 2001 Summer;57(2):337–355. [Google Scholar]

- Ross LF, Loup A, Nelson RM, Botkin JR, Kost R, Smith GR, et al. The challenges of collaboration for academic and community partners in a research partnership: points to consider. Journal of Empirical Research on Human Research Ethics. 2010;5(March):19–32. doi: 10.1525/jer.2010.5.1.19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sastry MA. Problems and paradoxes in a model of punctuated organizational change. Administrative Science Quarterly. 1997;42(2):237–275. [Google Scholar]

- Scarinci I, Johnson RE, Hardy C, Marron J, Partridge EE. Planning and implementation of a participatory evaluation strategy: A viable approach in the evaluation of community-based participatory programs addressing cancer disparities. Evaluation and Program Planning. 2009;32:221–228. doi: 10.1016/j.evalprogplan.2009.01.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scott CM, Thurston WE. The influence of social context on partnerships in Canadian health systems. Gender, Work & Organization. 2004 Sep;11(5):481–505. [Google Scholar]

- Sengir GH, Trotter RT, II, Briody EK, Kulkarni DM, Catlin LB, Meerwarth TL. Modeling relationship dynamics in GM's research-institution partnerships. GM Research & Development Center Publication; 2004. Mar, General Motors R&D 9747. [Google Scholar]

- Sengir GH, Trotter RT, II, Briody EK, Kulkarni DM, Catlin LB, Meerwarth TL. Modeling relationship dynamics in GM's research-institution partnerships. Journal of Manufacturing Technology Management. 2004;15(7):541–559.

- Sengir GH, Trotter RT, II, Kulkarni DM, Catlin LB, Briody EK, Meerwarth TL. System and model for performance value based collaborative relationships. U.S. Patent 7,280,977 (GP-303794: 845OR-66). Issued 2007 Oct 9.

- Spekman R, Lamb CJ. Fruit fly alliances: The rise of short-lived partnerships. Alliance Analyst. 1997;15(August):1–4.

- Trotter RT, II, Briody EK. “It's all about relationships” not just buying and selling ideas: improving partnership success through reciprocity. General Motors R&D, Vehicle Dynamics Research Lab; 2006 Jun.

- Trotter RT, II, Briody EK, Catlin LB, Meerwarth TL, Sengir GH. The evolving nature of GM R&D's collaborative research labs: Learning from stages and roles. General Motors R&D 9907. GM Research & Development Center Publication; 2004 Oct.

- Trotter RT, II, Briody EK, Sengir GH, Meerwarth TL. The life cycle of collaborative partnerships: evolutionary structure in industry–university research networks. Connections. 2008 Jun;28(1):40–58.

- Trotter RT, II, Sengir GH, Briody EK. The cultural processes of partnerships. In: Briody EK, Trotter RT II, Sengir GH, editors. Partnering for performance: Collaboration and culture from the inside out. New York: Rowman & Littlefield; 2008.

- Turpin T. Managing the boundaries of collaborative research: A contribution from cultural theory. International Journal of Technology Management. 1999;18(3–4):232–246.

- Uzzi B, Mukherjee S, Stringer M, Jones B. Atypical combinations and scientific impact. Science. 2013;342:468–472. doi: 10.1126/science.1240474.

- Walsh D. Best practices in university–community partnerships: lessons learned from a physical-activity-based program. The Journal of Physical Education Recreation & Dance. 2006 Apr;77(4):45–56.

- Zhang X. Critical success factors for public–private partnerships in infrastructure development. Journal of Construction Engineering & Management. 2005 Jan;131(1):3–14.