Abstract

For the consequences of our actions to guide behavior, the brain must represent different types of outcome-related information. For example, an outcome can be construed as negative because an expected reward was not delivered or because an outcome of low value was delivered. Thus behavioral consequences can differ in terms of the information they provide about outcome probability and value. We investigated the role of the striatum in processing probability-based and value-based negative feedback by training participants to associate cues with food rewards and then employing a selective satiety procedure to devalue one food outcome. Using functional magnetic resonance imaging, we examined brain activity related to receipt of expected rewards, receipt of devalued outcomes, omission of expected rewards, omission of devalued outcomes, and expected omissions of an outcome. Nucleus accumbens activation was greater for rewarding outcomes than devalued outcomes, but activity in this region did not correlate with the probability of reward receipt. Activation of the right caudate and putamen, however, was largest in response to rewarding outcomes relative to expected omissions of reward. The dorsal striatum (caudate and putamen) at the time of feedback also showed a parametric increase correlating with the trialwise probability of reward receipt. Our results suggest that the ventral striatum is sensitive to the motivational relevance, or subjective value, of the outcome, while the dorsal striatum codes for a more complex signal that incorporates reward probability. Value and probability information may be integrated in the dorsal striatum, to facilitate action planning and allocation of effort.

Keywords: fMRI, striatum, caudate, nucleus accumbens, reward processing

Decision making is guided by information about the value and probability of the outcomes of one's choices. Value is a subjective measure that varies with internal motivational states and goals. For example, ordering chocolate almond cake may be rewarding, but not to someone with a nut allergy or to someone who is too full for dessert. Estimates of outcome probability, on the other hand, are derived by learning the contingencies that exist between actions and outcomes. For some actions, the contingency between action and outcome is very strong, such as when one turns on a faucet and water pours out. Other actions, such as playing a slot machine, have less predictable consequences.

The subjective value of an outcome, defined here as the desirability of the outcome, if obtained, can be altered through changes in motivational state. In both animals and humans, a selective satiety procedure has been used to reduce outcome value; individuals are fed a food reward to satiety, so that the value of that outcome decreases (Balleine and Dickinson 1998; Tricomi et al. 2009). Further delivery of that food constitutes an outcome of low subjective value. For instance, the receipt of an extra slice of cake when you are sated would be an outcome of low value, even though the first slice may have had a high subjective value.

In contrast, the omission of an expected positive outcome constitutes a violation of a learned action-outcome contingency, without resulting in a change in outcome value. For example, turning on a faucet and having no water come out indicates a change in outcome probability; however, the value of the outcome itself does not change. Thus although delivery of an unpleasant outcome and omission of an expected rewarding outcome are both less favorable than receipt of a reward, they differ in terms of the information they provide about outcome value and probability.

A network consisting of the striatum and its afferents from midbrain, prefrontal, and limbic structures has been implicated in various aspects of feedback-based decision making (Doya 2008; Rushworth and Behrens 2008). The head of the caudate nucleus has been shown to be necessary for acquiring action-outcome associations. Its activity in humans is modulated by perceived action-outcome contingency (Tricomi et al. 2004; Yin et al. 2005). The nucleus accumbens, on the other hand, is required for the modulation of action vigor by motivational signals and is active during presentations of rewarding stimuli (de Borchgrave et al. 2002; O'Doherty et al. 2004). It is unclear, however, whether these striatal structures differentially process different forms of negative feedback.

In this fMRI experiment, we investigated the role of the striatum in processing value-based and probability-based feedback. Participants were initially trained to associate fractal cues with food rewards. Then, value was altered through a selective satiety procedure, which devalued one food outcome. Probability of outcome receipt was altered by omitting the outcome on a majority of trials presented in a second phase of the experiment. This allowed us to examine brain activity related to receipt and omission of expected rewards and devalued outcomes.

METHODS

Participants

Twenty-five participants were initially recruited for this study (16 women, 9 men; mean age = 22.4 yr, SD = 2.4 yr). Four participants were excluded from analysis because of excessive motion during scanning (n = 1), signal-to-noise ratio or mean intensity abnormality (n = 2), or equipment malfunction during the session (n = 1). The final behavioral and neuroimaging analyses were conducted on 21 participants (14 women, 7 men; mean age = 22.3 yr, SD = 2.5 yr). Participants responded to a posted advertisement, and all gave written informed consent. All participants were prescreened to ensure that they were not dieting and that they enjoyed eating chocolate and cheddar crackers. Average weight for female subjects was 123.4 lb (SD = 17.42 lb, range = 105–166 lb) and for male subjects was 160.33 lb (SD = 24.94 lb, range = 130–200 lb). Since the experiment involved food consumption, the Eating Attitudes Test (EAT-26; Garner et al. 1982) was administered prior to the experiment, which indicated no eating disorders in any of the participants [mean score: 4.2 ± 3.8 (SD); range: 0–10]. All scores were under the 11-point cutoff, a threshold for screening that ensures the exclusion of anyone at high risk for an eating disorder (Orbitello et al. 2006). Participants were asked to fast for at least 4 h prior to the experiment, although they were allowed to drink water. The experiment was approved by the Institutional Review Board of the University of Medicine and Dentistry of New Jersey as well as the Institutional Review Board of Rutgers University.

Experimental Procedure

At the beginning of the experiment, participants were asked to rate on a scale from 0 to 5 how pleasant they would find eating M&Ms (Mars, McLean, VA) and cheddar-flavored Goldfish crackers (Pepperidge Farm, Norwalk, CT). Then they performed the training phase of a computerized learning task. During each trial, a fractal image was shown on the screen for 1.5 s, along with a row of three squares directly below it. One of the three squares was highlighted in yellow, indicating which button press was the correct one for that particular trial (1, 2, or 3). Subjects were instructed that if they pressed this response button on the keyboard within the 1.5-s time interval, they would have the chance to earn a food reward. After a jittered interstimulus interval of 1–8 s, if the button was pressed, either a picture of an M&M or a Goldfish cracker would be shown (indicating a food reward of the corresponding type, to be consumed after the training phase) or a Ø symbol would be shown, indicating no reward. These outcomes were probabilistically associated with the five fractal stimuli, in the proportions shown in Table 1. Specifically, two of the fractal images were more likely to be associated with Goldfish rewards and two were more likely to yield M&Ms. In all cases in which no button was pressed in the allotted time, the Ø symbol was shown. A jittered intertrial interval (ITI) of 1–8 s followed each trial. The total number of M&Ms and Goldfish earned was in proportion to the number of times these outcomes were displayed, and after 120 training trials (24 for each fractal), the subject was given his/her earnings to eat.

Table 1.

Fractal-outcome probabilities in training phase and test phase

| | Outcome 1 | Outcome 2 | No Outcome |
|---|---|---|---|
| Training | | | |
| Fractals 1–4 | 75% | 17% | 8% |
| Fractal 5 | 4% | 8% | 88% |
| Test | | | |
| Fractals 1 and 3 | 75% | 16% | 9% |
| Fractals 2 and 4 | 9% | 3% | 88% |
| Fractal 5 | 3% | 9% | 88% |

Outcome 1 = M&Ms for fractals 1 and 2, Goldfish for fractals 3 and 4; vice versa for outcome 2 (see Fig. 1). One outcome is devalued prior to the test phase.
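As a reading aid for Table 1, the short sketch below converts the listed percentages into whole-number trial counts, using the per-fractal trial numbers given in the text (24 in training, 32 in test). That the percentages correspond to a fixed number of trials per outcome is an assumption made here for illustration; the names `TABLE_1`, `TRIALS_PER_FRACTAL`, and `implied_counts` are hypothetical.

```python
# Percentages transcribed from Table 1 (outcome 1, outcome 2, no outcome).
TABLE_1 = {
    ("training", "fractals 1-4"): (0.75, 0.17, 0.08),
    ("training", "fractal 5"): (0.04, 0.08, 0.88),
    ("test", "fractals 1 and 3"): (0.75, 0.16, 0.09),
    ("test", "fractals 2 and 4"): (0.09, 0.03, 0.88),
    ("test", "fractal 5"): (0.03, 0.09, 0.88),
}

# Trials per fractal in each phase, as stated in the text.
TRIALS_PER_FRACTAL = {"training": 24, "test": 32}


def implied_counts(phase, probabilities):
    """Round each percentage to the nearest whole number of trials.

    Assumption: the schedule used fixed counts per outcome; the source
    only reports the rounded percentages.
    """
    n_trials = TRIALS_PER_FRACTAL[phase]
    return tuple(round(p * n_trials) for p in probabilities)


COUNTS = {key: implied_counts(key[0], probs) for key, probs in TABLE_1.items()}

for (phase, fractal), counts in COUNTS.items():
    print(phase, fractal, counts, "total:", sum(counts))
```

Under this reading, every row sums to the stated number of trials per fractal, which suggests the small differences between the training and test percentages (e.g., 17% vs. 16%) reflect rounding of trial counts rather than a change in design.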

After this training phase, participants consumed their earned food rewards (generally, between 5 and 7 Goldfish crackers and M&Ms). In addition, one of the two foods was randomly chosen to be devalued through a selective satiety procedure (e.g., Gottfried et al. 2003; Tricomi et al. 2009), in which subjects were asked to eat that food until it was no longer pleasant to them. Thus participants ate only a small amount of one food, so that its subjective value remained high, whereas they ate much more of the other food, so that its subjective value would decrease through satiation. To encourage subjects to eat a large amount of the food to be devalued, they were provided with ∼1 cup of this food in a bowl in front of them. Most subjects consumed this amount, although a few consumed more or less than this. In every case, participants ate more than twice as much of the devalued food as they ate of the still-rewarded food. After the subjects finished eating, they rated the pleasantness of the two foods again. Then, they performed the test phase of the experiment during acquisition of fMRI data. In this phase, the same basic task was utilized; however, the outcome for two of the fractals was omitted on a majority of trials (see Table 1). A jittered ITI of 1–8 s between trials was used to aid in the estimation of the BOLD signal produced on each trial. Participants were instructed to maintain responding for all conditions, and since the fractal-outcome associations were probabilistic, there was a possibility of reward on every trial. Trials were divided into four runs of ∼7.5 min each, and presentation of the five trial types was randomized (32 trials of each type; 160 trials overall). The BOLD responses for the following conditions could then be compared: 1) receipt of expected reward, 2) omission of expected reward, 3) receipt of devalued outcome, 4) omission of devalued outcome, and 5) expected omission of outcome (see Fig. 1). Participants were told that at the end of the study they would be asked to eat the amount of each food reward earned during the task.

Fig. 1.

Example stimulus-outcome associations. Note that the fractal-outcome pairings and the food chosen for devaluation were randomized by subject. Additionally, the outcomes were delivered probabilistically, according to the probabilities listed in Table 1.

fMRI Data Acquisition

A 3-T Siemens Allegra head-only scanner and a Siemens standard head coil were used for data acquisition at the University of Medicine and Dentistry of New Jersey. Anatomical images were acquired with a T1-weighted protocol (256 × 256 matrix, 176 1-mm sagittal slices). Functional images were acquired with a single-shot gradient echo EPI sequence (TR = 2,500 ms, TE = 25 ms, flip angle = 80°, FOV = 192 × 192 mm, slice gap = 0 mm). Forty-three contiguous oblique-axial slices (3 mm × 3 mm × 3 mm voxels) were acquired in an oblique orientation of 30° to the anterior commissure-posterior commissure (AC-PC) axis, which reduces signal dropout in the ventral prefrontal cortex relative to AC-PC aligned images (Deichmann et al. 2003).

Data Analysis

Analysis of imaging data was conducted with BrainVoyager QX software, version 2.0 (Brain Innovation, Maastricht, The Netherlands). The data were initially corrected for motion and slice scan time by cubic spline interpolation. Furthermore, spatial smoothing was performed with a three-dimensional Gaussian filter (8-mm FWHM), along with voxelwise linear detrending and high-pass filtering of frequencies (3 cycles per time course). Structural and functional data of each participant were then transformed to standard Talairach stereotaxic space (Talairach and Tournoux 1988).

After preprocessing, the Talairach-transformed fMRI data were analyzed with a random-effects general linear model (GLM). The onsets of each cue (fractal presentation) and outcome event were modeled as stick functions and then convolved with a canonical hemodynamic response function to create regressors of interest for the different conditions: 1) receipt of expected reward, 2) omission of expected reward, 3) receipt of devalued outcome, 4) omission of devalued outcome, and 5) expected omission of outcome. We conducted pairwise comparisons between each of these conditions, both at presentation of cue and at presentation of outcome. Regressors of no interest were also generated using the realignment parameters from the image preprocessing to further correct for residual subject motion. Missed trials and trials in which feedback was incongruent with the dominant cue-outcome contingencies (e.g., when omission of reward followed the reward cue) were modeled as confound predictors.
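The regressor construction described above (event onsets as stick functions, convolved with a canonical hemodynamic response function) can be sketched as follows. This is a minimal illustration, not the BrainVoyager implementation: the double-gamma shape parameters (peak shape 6, undershoot shape 16, undershoot ratio 1/6), the 0.1-s fine time grid, the 32-s kernel length, and the example onsets are all assumptions chosen for illustration. Only the TR of 2.5 s comes from the acquisition parameters.

```python
import math

TR = 2.5  # seconds per volume, from the acquisition parameters


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, defined as 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape
    )


def canonical_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF; parameter values are illustrative assumptions."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def build_regressor(onsets_s, n_volumes, tr=TR, dt=0.1, hrf_length_s=32.0):
    """Convolve stick functions at the onsets with the HRF, sampled once per TR."""
    n_fine = int(round(n_volumes * tr / dt))
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0

    kernel = [canonical_hrf(i * dt) for i in range(int(round(hrf_length_s / dt)))]

    # Direct convolution, skipping the (many) empty time points.
    convolved = [0.0] * n_fine
    for i, stick in enumerate(sticks):
        if stick == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j >= n_fine:
                break
            convolved[i + j] += stick * k

    # Sample the fine-grid prediction at the start of each volume.
    step = int(round(tr / dt))
    return [convolved[v * step] for v in range(n_volumes)]


# Hypothetical outcome onsets (in seconds) for one condition in one run.
regressor = build_regressor([10.0, 40.0], n_volumes=40)
```

One such regressor would be built per condition and event type (cue or outcome) and entered into the GLM alongside the motion parameters as regressors of no interest.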

A 2 × 2 ANOVA with time of task (first or second half) and receipt of reward (reward or reward omission) as factors was performed at the time of the cue, in order to check for learning of fractal-outcome contingencies over time. Additional analyses focused on identifying regions that showed differential responses to the different fractal cues and different types of outcome events. In addition to generation of whole brain statistical maps, a priori regions in the caudate and nucleus accumbens were selected for testing; parameter estimates were also extracted from these regions to graph activation across conditions. These anatomically based regions of interest (ROIs) ensure statistical independence when comparing parameter estimates across all conditions (Kriegeskorte et al. 2009), whereas the whole brain analyses show the full extent of the brain regions exhibiting significant differences between conditions. In the caudate, cubic regions of 8 mm3 were centered at x = ±11, y = 11, z = 8, based on the average of coordinates reported elsewhere (Delgado et al. 2000, 2004; Tricomi et al. 2004; Zink et al. 2004). The nucleus accumbens regions were the same size and were centered at x = ±10, y = 8, z = −4; these coordinates have been used to define nucleus accumbens ROIs in previous studies (Bischoff-Grethe et al. 2009; Cools et al. 2002), based on Talairach atlas location and peak activation coordinates from prior work (Breiter et al. 2001; Delgado et al. 2000; Knutson et al. 2001). These regions were selected because of their previously evidenced roles in the processing of rewarding feedback. For example, the nucleus accumbens region has shown a specific role for processing of the valence of a stimulus (Knutson et al. 2005), while the caudate nucleus region has been shown to encode receipt of large versus small reward (Delgado et al. 2003).

A complementary analysis included the trialwise probability of reward receipt as a parametric modulator. Thus this analysis identified brain regions that demonstrated an increase in BOLD signal at the time of feedback, correlating with the probability that the valued outcome (i.e., the still-rewarding food) would be received on any given trial. This probability value was calculated separately for each fractal, based on the reinforcement history of that fractal cue. Therefore, the probability value on any given trial was the running average of the reinforcement of the fractal cue up until that trial, with 0 indicating no receipt of reward and 1 indicating receipt of reward.
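The running-average probability described above can be sketched for a single fractal cue as follows. Two details are assumptions rather than statements from the text: the estimate on a given trial is computed from the preceding trials only (excluding the current outcome), and the value on a cue's first trial, where no history exists, is left to a hypothetical `first_trial_value` argument.

```python
def running_reward_probability(rewards, first_trial_value=None):
    """Per-trial probability of reward receipt for one fractal cue.

    rewards: sequence of 1 (valued outcome received) or 0 (not received),
             in the order the cue's trials occurred.
    Returns a list in which entry i is the mean of rewards[0:i]. The first
    entry has no history and is set to first_trial_value (an assumption).
    """
    estimates = []
    total = 0
    for n_prior, reward in enumerate(rewards):
        if n_prior == 0:
            estimates.append(first_trial_value)
        else:
            estimates.append(total / n_prior)
        total += reward
    return estimates


# Hypothetical reinforcement history for one cue.
probabilities = running_reward_probability([1, 0, 1, 1])
```

Each value then serves as the parametric modulator for the outcome event on the corresponding trial.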

Finally, we estimated an additional model that included the expected value as a parametric modulator of the cue regressor and the prediction error as a parametric modulator of the outcome regressor. These measures were calculated with the following equations: B(1) = 1 for cues associated with the still-valued food, or 0 otherwise; and for all t > 1, PE(t) = V(t) − B(t); B(t + 1) = B(t) + αPE(t), where PE is the prediction error, t is the trial number, V(t) = 1 for still-valued outcomes or 0 otherwise, α = 0.65, and B is the expected value. The expected value was calculated separately for each fractal cue. Since the task did not involve acquisition of behavioral choice data, the α value could not be calculated from behavioral data. Instead, the fMRI data from the first five subjects were modeled iteratively, using α values ranging from 0.1 to 0.7 in steps of 0.05 (cf. Hare et al. 2008); α = 0.65 was found to provide the best fit to the data from these subjects, so this value was used for the complete data set.
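The update equations above can be written out as a short sketch for one fractal cue. The function and variable names are hypothetical, the example outcome sequence is invented for illustration, and the update is applied from the first trial onward, which is one reading of the trial indexing in the text. The grid helper simply enumerates the candidate α values (0.1 to 0.7 in steps of 0.05); how model fit was scored for each candidate is not specified here and is not reproduced.

```python
ALPHA = 0.65  # learning rate reported in the text


def expected_value_and_pe(outcomes, still_valued_cue, alpha=ALPHA):
    """Trialwise expected value B(t) and prediction error PE(t) for one cue.

    outcomes: sequence of V(t), 1 for a still-valued outcome and 0 otherwise.
    still_valued_cue: True if the cue was associated with the still-valued
                      food, which sets B(1) = 1; otherwise B(1) = 0.
    """
    b = 1.0 if still_valued_cue else 0.0
    expected_values, prediction_errors = [], []
    for v in outcomes:
        pe = v - b                 # PE(t) = V(t) - B(t)
        expected_values.append(b)
        prediction_errors.append(pe)
        b = b + alpha * pe         # B(t + 1) = B(t) + alpha * PE(t)
    return expected_values, prediction_errors


def alpha_grid(start=0.1, stop=0.7, step=0.05):
    """Candidate learning rates from start to stop inclusive."""
    n_values = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(n_values)]


# Hypothetical outcome sequence for a cue paired with the still-valued food.
values, errors = expected_value_and_pe([1, 0, 1], still_valued_cue=True)
candidates = alpha_grid()
```

In the model, the expected values modulate the cue regressor and the prediction errors modulate the outcome regressor.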

Throughout this report, all t-tests are two-tailed. For our whole brain analyses, all significant clusters were identified at P = 0.005 and withstood a contiguous cluster threshold of 5 voxels (i.e., >135 mm3). The cluster threshold was used to correct for multiple comparisons and was determined with the Cluster Threshold Estimator plug-in in BrainVoyager QX, which identifies the threshold at which the mapwise probability of a false detection (i.e., type I error rate, or corrected threshold) remains lower than 0.05. As this tool provides information on the type I error rate across the whole brain, it constitutes a principled correction and is compliant with recent recommendations to avoid arbitrary cluster thresholds (Bennett et al. 2009).
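The correspondence between the 5-voxel cluster threshold and 135 mm3 follows from the 3 mm × 3 mm × 3 mm functional voxel size, as the small sketch below shows. The function name is hypothetical, and the 4-voxel case is included only as an example of a cluster that falls below the threshold.

```python
VOXEL_SIDE_MM = 3        # functional voxels were 3 x 3 x 3 mm
MIN_CLUSTER_VOXELS = 5   # contiguous cluster threshold from the text


def cluster_volume_mm3(n_voxels, side_mm=VOXEL_SIDE_MM):
    """Volume of a cluster of n_voxels isotropic voxels, in cubic millimeters."""
    return n_voxels * side_mm ** 3


THRESHOLD_MM3 = cluster_volume_mm3(MIN_CLUSTER_VOXELS)  # 5 * 27
FOUR_VOXEL_MM3 = cluster_volume_mm3(4)                  # 4 * 27

print(THRESHOLD_MM3, FOUR_VOXEL_MM3)
```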

RESULTS

Behavioral Results

Participants indicated the pleasantness of each food type both before and after the selective satiety procedure on a Likert scale [ranging from 0 (very unpleasant) to 5 (very pleasant); see Table 2]. A 2 × 2 ANOVA of the Likert scale ratings with time (before/after selective satiety procedure) and food type (valued/devalued) as factors revealed a significant interaction (F1,20 = 40.4, P < 0.0001). Post hoc t-tests confirm that after the devaluation procedure pleasantness ratings of the devalued food decreased significantly more than those for the valued food (t20 = 8.698, P < 0.0001). This cannot be attributed to a general satiety or decrease in pleasantness over the training phase, because the pleasantness ratings for the valued food did not change significantly over time (t20 = −0.77, P = 0.45). Also, the ratings of both foods at the beginning of the task did not differ, demonstrating that, overall, there were no individual preference biases toward either of the food items (t20 = 0.491; P = 0.63). Therefore, the ratings were consistent with the interpretation that the subjects became sated on the devalued but not the valued food.

Table 2.

Pleasantness ratings of foods before and after selective satiety procedure

| Food Type | Mean | SD |
|---|---|---|
| Presatiety procedure pleasantness ratings (0–5) | | |
| Reward | 4.19 | 0.93 |
| Devalued | 4.10 | 0.83 |
| Postsatiety procedure pleasantness ratings (0–5) | | |
| Reward | 4.33 | 0.73 |
| Devalued | 1.86 | 1.46 |

Values are pleasantness ratings of foods before and after the selective satiety procedure, on a scale of 0 (very unpleasant) to 5 (very pleasant).

fMRI Results

Results at cue.

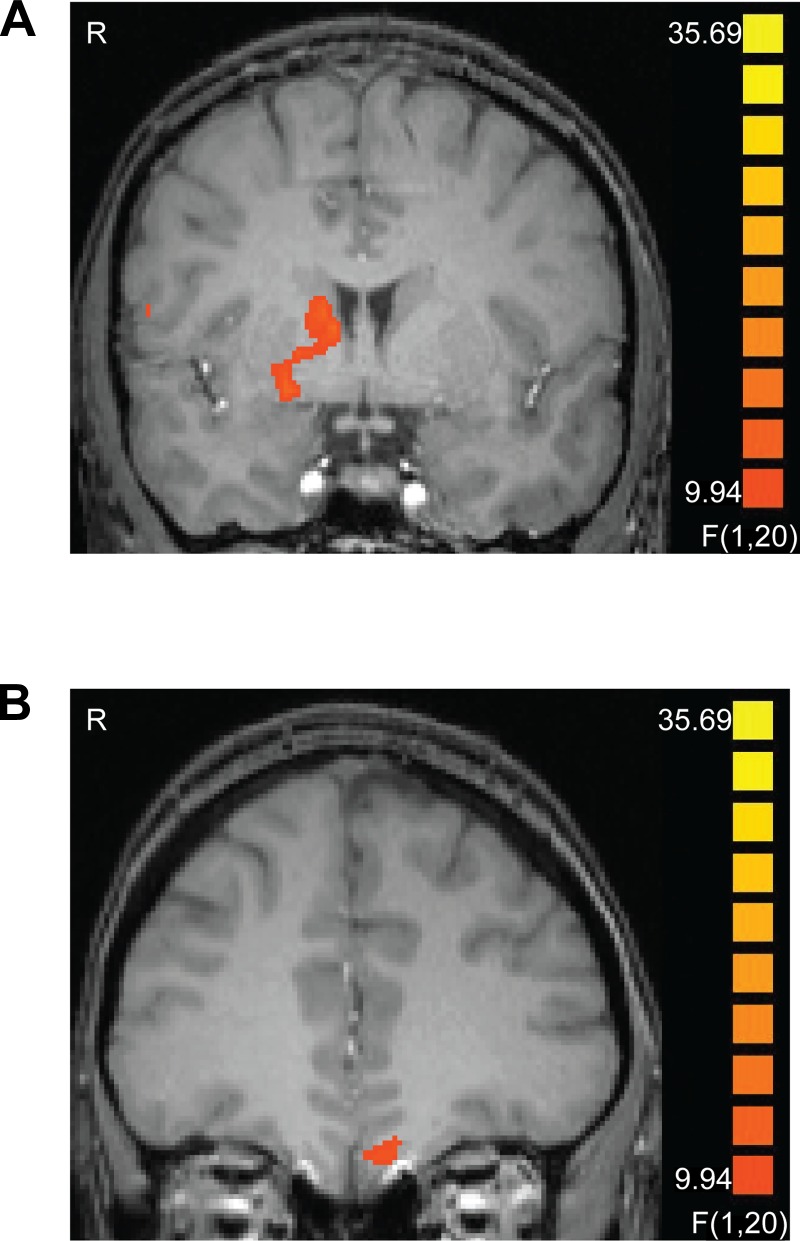

We analyzed the cue data with respect to the type of outcome each fractal predicted. We did not identify any significant effects in the striatum for any pairwise contrasts of the experimental conditions when collapsing across trials from the entire length of the scan. However, since the fractal-outcome contingencies during the scan were altered from the training phase, we expected that BOLD responses at the time of the cue might change over time, as the new contingencies were learned. To identify regions showing this pattern, we performed a 2 × 2 ANOVA with half of task (first half/last half) and cue signifying presence/omission of reward (i.e., still-valued food) as factors. This analysis yielded an interaction effect, whereby the reward cue elicited more activity in the striatum (ventral putamen, extending dorsally to the caudate) and medial orbitofrontal cortex (OFC) than the reward omission cue did in the second half of the task (F1,20 > 9.9, P < 0.05, corrected). The activation peaks are listed in Table 3, and a cluster-threshold corrected map of this ANOVA is featured in Fig. 2.

Table 3.

Regions showing a significant cue condition (reward cue vs. reward omission cue) by task half interaction

| Region | Cluster Size, mm3 | Max F | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Precentral gyrus | 233 | 25.41 | 56 | 4 | 12 |
| Ventral putamen/caudate nucleus | 766 | 25.32 | 20 | 4 | −3 |
| Medial orbitofrontal cortex | 236 | 15.85 | −1 | 31 | −22 |
| Posterior insula | 229 | 34.70 | −34 | −14 | −6 |

Regions are those identified as showing a significant cue condition (reward cue vs. reward omission cue) by task half (1st half vs. 2nd half) interaction (P < 0.05, corrected).

Fig. 2.

The right striatum (A) and the left medial orbitofrontal cortex (OFC) (B) showed a significant interaction of cue condition and task half, with more activation elicited by the reward cue than the reward omission cue in the second half of the task only (P < 0.05, corrected). Images are left-right reversed.

Additionally, the regions identified as showing a significant effect of expected value at the time of cue presentation are listed in Table 4. The right putamen, as well as several cortical regions, showed this effect. We did not find significant effects in our a priori ROIs in the nucleus accumbens (left: t20 = 0.287, P = 0.77; right: t20 = 0.428, P = 0.67) or caudate (left: t20 = 0.068, P = 0.94; right: t20 = 0.699, P = 0.49), however.

Table 4.

Regions showing significant parametric modulation of cue by expected value and of outcome by prediction error

| Region | Cluster Size, mm3 | Max t | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Expected value at cue | | | | | |
| Putamen | 178 | 4.90 | 26 | 13 | 6 |
| Precuneus | 131 | 4.15 | −7 | −47 | 60 |
| Superior frontal gyrus | 168 | 4.88 | −13 | 52 | 18 |
| Middle frontal gyrus | 391 | 4.28 | −40 | 46 | 9 |
| Superior temporal gyrus | 140 | 4.93 | −46 | −53 | 27 |
| Prediction error at outcome | | | | | |
| Inferior frontal gyrus (BA47) | 191 | −5.03 | −43 | 28 | −6 |

Regions are those identified as showing significant parametric modulation of cue by expected value and of outcome by prediction error (P < 0.05, corrected).

Results at outcome.

We identified significant effects in the striatum for three pairwise contrasts at the time of outcome presentation: reward receipt vs. devalued outcome, reward receipt vs. expected omission of an outcome, and reward receipt vs. reward omission. The regions showing a significant effect for each of these contrasts are listed in Table 5. Of interest, the reward > devalued outcome contrast identified a region in the right ventral striatum, the reward > expected omission feedback contrast identified regions in the right caudate nucleus and right putamen, and the reward > reward omission contrast identified a region in the left putamen. We also note that an additional region in the left medial prefrontal cortex was identified as showing a reward > devalued outcome effect at P < 0.005 (peak Talairach coordinates: x = −7, y = 45, z = 5), but this region was only 4 voxels in size and therefore did not survive our cluster threshold correction.

Table 5.

Regions showing significant differences between outcome conditions

| Region | Cluster size, mm3 | Max t | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Reward feedback > devalued feedback | | | | | |
| Nucleus accumbens | 148 | 4.25 | 11 | 10 | −3 |
| Reward feedback > expected omission feedback | | | | | |
| Inferior temporal gyrus | 296 | 4.30 | 56 | −14 | −27 |
| Right cerebellum, posterior lobe (declive) | 229 | 5.10 | 44 | −65 | −15 |
| Middle frontal gyrus | 200 | 5.25 | 29 | 58 | 3 |
| Right cerebellum, posterior lobe (cerebellar tonsil) | 381 | 4.64 | 35 | −47 | −36 |
| Right cerebellum, posterior lobe (declive) | 139 | 4.46 | 32 | −71 | −21 |
| Putamen | 188 | 4.94 | 26 | 13 | 9 |
| Caudate nucleus | 547 | 5.62 | 5 | 7 | 6 |
| Cingulate gyrus | 227 | 4.40 | −4 | −23 | 36 |
| Posterior cingulate gyrus | 197 | 4.90 | −1 | −29 | 24 |
| Angular gyrus | 188 | 5.07 | −40 | −59 | 36 |
| Anterior lobe of cerebellum | 1350 | 6.22 | −43 | −53 | −27 |
| Middle occipital gyrus | 691 | 5.60 | −49 | −74 | −6 |
| Inferior temporal gyrus | 475 | 5.47 | −49 | −56 | −12 |
| Reward receipt > reward omission | | | | | |
| Anterior lobe of cerebellum | 382 | 4.87 | 35 | −47 | −24 |
| Inferior occipital gyrus | 299 | 4.88 | 26 | −92 | −6 |
| Lingual gyrus | 277 | 5.39 | 23 | −77 | −9 |
| Anterior lobe of cerebellum | 159 | 5.28 | 29 | −38 | −18 |
| Putamen | 291 | 5.38 | −19 | 4 | 9 |
| Precuneus | 149 | 5.57 | −37 | −71 | 36 |
| Anterior lobe of cerebellum | 540 | 4.58 | −37 | −50 | −21 |
| Middle temporal gyrus | 246 | 4.45 | −40 | −56 | 24 |
| Inferior frontal gyrus | 372 | 4.78 | −49 | 7 | 33 |

Regions are those identified as showing a significant effect for each of the outcome-related contrasts (P < 0.05, corrected).

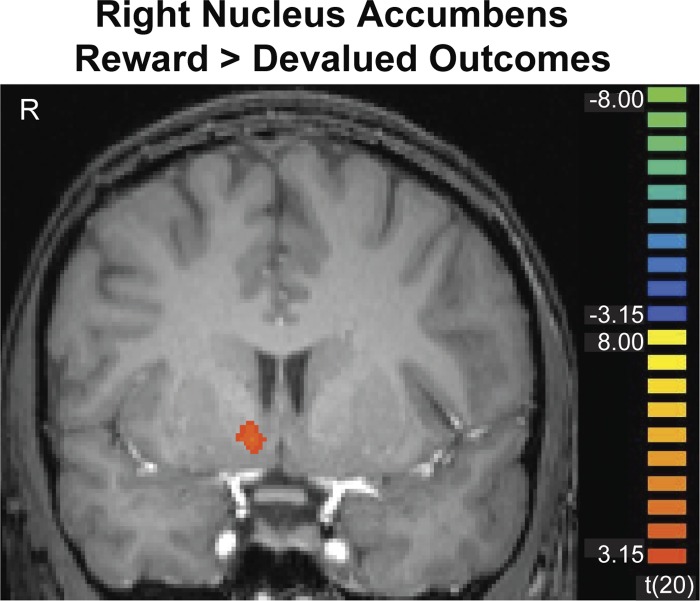

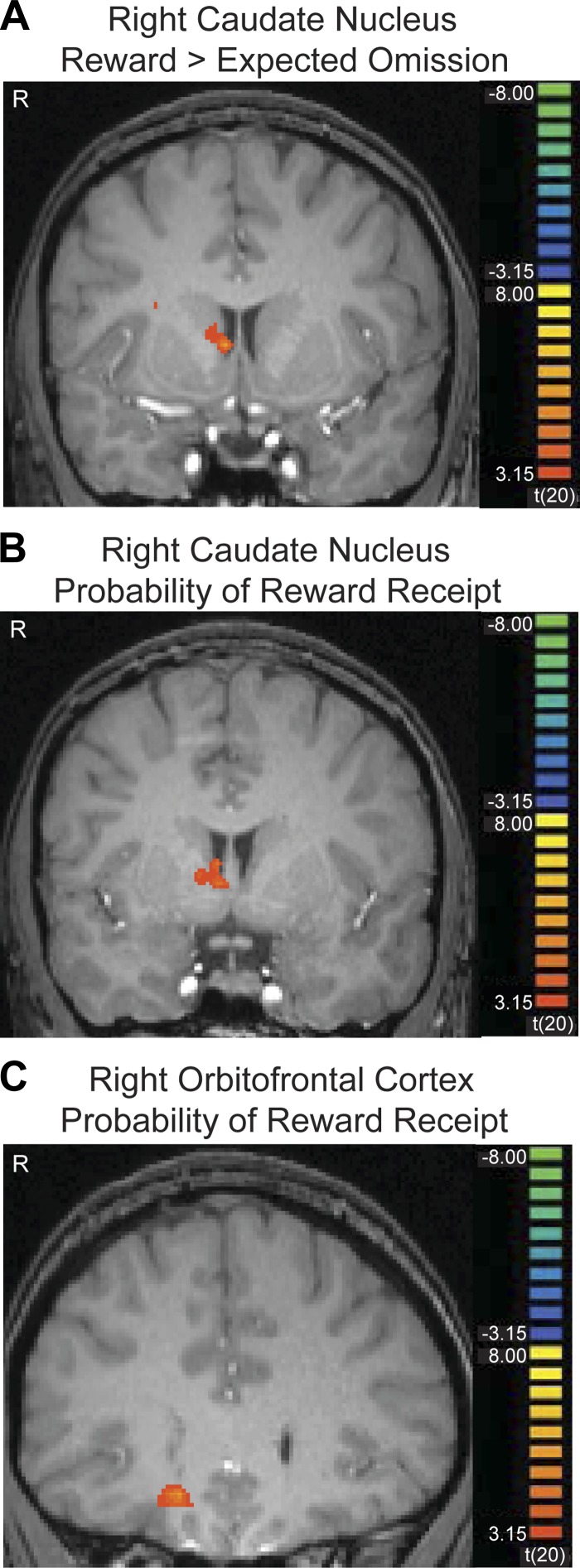

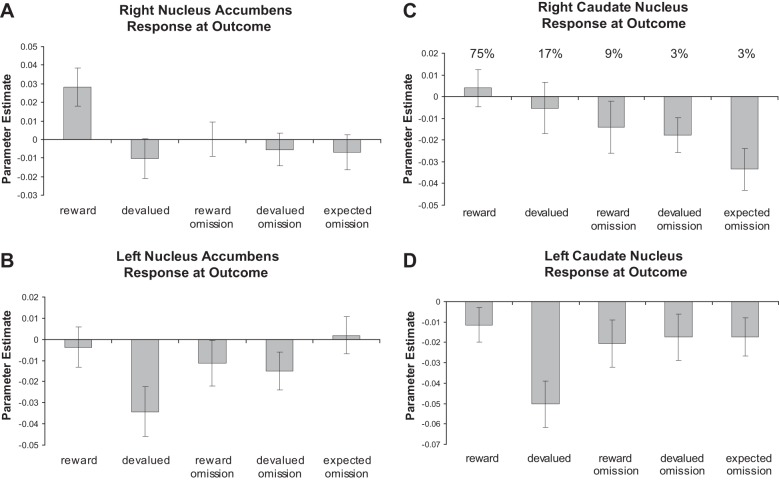

Figures 3 and 4 show cluster-threshold corrected maps depicting the striatal regions we identified at the time of the outcome. Figure 3 depicts a right nucleus accumbens region that showed a significant effect for the reward > devalued outcome contrast (P < 0.05, corrected). Figure 4A depicts a right caudate nucleus region that showed a significant effect for the reward > expected omission contrast (P < 0.05, corrected). Also shown in Fig. 5 are plots of the regression coefficients for all conditions in the caudate and nucleus accumbens, which were extracted based on a priori cubic regions of 8 mm3, centered on coordinates from previous work (Bischoff-Grethe et al. 2009; Cools et al. 2002; Delgado et al. 2000, 2004; Tricomi et al. 2004; Zink et al. 2004). Since the regression coefficients shown in these graphs were made with a priori ROIs, they are not subject to a bias that could be introduced by displaying the coefficients based on a particular statistical contrast. The graphs of the regression coefficients based on our significant clusters of activation look very similar, however.

Fig. 3.

The right nucleus accumbens showed significantly greater activation after rewarding outcomes than devalued outcomes (P < 0.05, corrected). Image is left-right reversed.

Fig. 4.

The right caudate nucleus (A) showed significantly greater activation after rewarding outcomes than expected omission of feedback (P < 0.05, corrected). The right caudate (B) and the right central OFC (C) also show a significant parametric modulation of activation by probability of reward receipt; as the probability of reward increases, these regions are activated more. Images are left-right reversed.

Fig. 5.

Plots of the parameter estimates across all conditions are shown for right (A) and left (B) nucleus accumbens regions of interest (ROIs) centered on Talairach coordinates of (10, 8, −4) and (−10, 8, −4), as well as right (C) and left (D) caudate ROIs centered on Talairach coordinates of (11, 11, 8) and (−11, 11, 8), based on previous work. The average probability of reward receipt for each condition is also shown.

In the a priori right nucleus accumbens region, rewarding outcomes yield significantly greater activity than devalued outcomes (t20 = 3.85; P < 0.001). This region also shows significantly greater activation for rewarding outcomes versus expected omissions (t20 = 4.22; P < 0.001). Similarly, the left nucleus accumbens shows a significant difference between rewarding and devalued outcomes (t20 = 2.87, P = 0.009). This effect is also significant in the left caudate nucleus (t20 = 3.32; P = 0.003). The right caudate, however, shows an increase in BOLD signal at the rewarding feedback and a decrease at the expected omission of an outcome, producing a significant difference between these conditions (t20 = 4.30; P < 0.001).

The results of the t-tests above suggest that the nucleus accumbens may be more sensitive to information about outcome valence (reward vs. devalued), while the caudate may be more sensitive to reward probability. However, an expected reward omission may be interpreted as both an outcome of low value (a null sign) and a low probability of reward receipt. Furthermore, a devalued outcome both has low motivational value and is associated with a low probability of reward. Therefore, we performed a complementary analysis, identifying brain regions that showed a significant parametric increase in BOLD signal at the time of outcome correlating with the trialwise probability of reward receipt; as the probability of reward increases, these regions are activated more (Table 6). We found a significant effect in the right caudate nucleus (Fig. 4; P < 0.05, corrected). A region in the right central OFC was also significantly activated in this analysis. Although this analysis accounted for trialwise fluctuations in probability, the average probability of reward receipt for each condition is included in Fig. 5, to help illustrate this effect.

Table 6.

Regions showing significant parametric modulation of feedback by probability of reward receipt

| Region | Cluster size, mm3 | Max t | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Precentral gyrus | 163 | 5.99 | 35 | −5 | 33 |
| Anterior middle frontal gyrus | 193 | 5.00 | 32 | 58 | 9 |
| Putamen | 195 | 5.82 | 26 | 13 | 9 |
| Middle frontal gyrus | 245 | 4.65 | 29 | 43 | −3 |
| Central orbitofrontal cortex | 485 | 5.49 | 14 | 25 | −9 |
| Caudate nucleus | 245 | 4.67 | 5 | 1 | 3 |
| Cingulate gyrus | 334 | 5.73 | −1 | −29 | 27 |
| Angular gyrus | 343 | 4.72 | −40 | −59 | 33 |
| Anterior lobe of cerebellum | 488 | 4.94 | −43 | −53 | −21 |

Regions are those identified as showing a significant parametric modulation of feedback by probability of reward receipt (P < 0.05, corrected).

Finally, as shown in Table 4, the only region identified as showing an effect of prediction error at the time of feedback presentation was the left inferior frontal gyrus, which showed larger decreases in activation as the prediction error increased. No significant effects were found for our a priori regions in the caudate (left: t20 = 0.027, P = 0.98; right: t20 = 0.344, P = 0.73) and nucleus accumbens (left: t20 = 0.185, P = 0.86; right: t20 = 0.149, P = 0.88).

DISCUSSION

Our results provide evidence that, at the time of outcome, the ventral striatum carries information about the value of the outcome, which is dependent on the subject's current motivational state. Meanwhile, the dorsal striatum (caudate and putamen) carries a signal that includes information about both the value of the stimulus and reward probability. These results build on previous work examining reward representations in the human brain. It is now well known that both the ventral and dorsal striatum show effects of valence, with increases in activation for rewards, such as monetary gains, and decreases in activation for punishments, such as monetary losses (Coricelli et al. 2005; Delgado et al. 2000, 2003; Yacubian et al. 2006). It is unclear from previous work, however, whether the brain interprets outcomes of low value similarly to how it interprets absences of potential reward or whether these processes are distinct. Our design included both the omission of rewarding outcomes and the delivery of devalued outcomes, allowing us to separately investigate neural responses after a decrease in reward probability and after a decrease in reward value.

Striatal Response to Changes in Cue-Outcome Contingency

After the training phase of this experiment, the food outcomes associated with two of the fractal cues were omitted. Although we did not find significant cue-related effects in the striatum when collapsing across the whole fMRI phase of the experiment, we found evidence that the striatum adjusted its response to the cues based on the change in cue-outcome contingency. At the time of cue presentation, activation of the putamen was parametrically modulated by the expected value of the upcoming outcome. Additionally, in the second half of the task only, cues predicting an upcoming reward produced greater activation in the striatum than cues predicting reward omission. These findings provide evidence that signals in the striatum reflect the known value of an upcoming outcome as well as the value of a received outcome. That is, information about the value of the upcoming outcome, as well as about the cue's reinforcement history, is reflected in striatal activity at the time of the cue. This fits with previous evidence indicating a role for the striatum in anticipation of upcoming rewards (Bjork and Hommer 2007; Ernst et al. 2004; Galvan et al. 2005; Gottfried et al. 2003; Haruno and Kawato 2006; Knutson et al. 2005; Yacubian et al. 2006) and in reinforcement learning processes (Daw and Doya 2006; Montague et al. 2006).

Subjective Value Coding in Ventral Striatum

We found that the nucleus accumbens showed selective activation to receipt of appealing food outcomes relative to devalued food outcomes. Many studies that have previously investigated value representations in the brain have focused on rewards of varying magnitude, such as small and large monetary rewards (Breiter et al. 2001; Delgado et al. 2003; Guitart-Masip et al. 2011; Knutson et al. 2005; Smith et al. 2009; Tobler et al. 2007; Yacubian et al. 2006). Importantly, our study extends these findings by showing that the ventral striatum responds differently to the same outcome (a particular food), depending on how pleasant the participant currently finds that outcome to be (i.e., depending on the outcome's context-dependent subjective value). This finding builds on previous work focusing on effects of satiety on neural responses to cues (e.g., Gottfried et al. 2003), and it extends previous work showing that ventral striatal activity is influenced by the subjective value of outcomes based on a host of factors, such as counterfactual comparisons (Breiter et al. 2001), delay in reward delivery (Kable and Glimcher 2007; Peters and Buchel 2009), risk and ambiguity aversion (Levy et al. 2010), and fairness considerations (Tricomi et al. 2010).

Probability Coding in Dorsal Striatum

We found that the right caudate nucleus and putamen showed a differential response to rewards and the expected absence of a reward, and that the dorsal putamen showed a differential response to rewards and omissions of reward. Additionally, the caudate and the putamen both showed a parametric increase in signal at the outcome phase as the probability of reward receipt increased. To show this pattern of results, the dorsal striatum would need to be sensitive to both the current value of the outcome and its probability. Therefore, the dorsal striatum may be an important locus for integrating value and probability information, which would be useful for action planning. Haber et al. (2000) have proposed a hierarchy of information flow from the ventromedial to the dorsolateral striatum (i.e., from nucleus accumbens to caudate to putamen), via striatonigrostriatal projections. In this way, value-related signals from the ventral striatum may influence the dorsal striatum, which may then integrate this information with information about probability.

These findings are in line with previous research showing effects of reward schedule (Tanaka et al. 2008) and expected value (i.e., reward magnitude × probability) on activation of the dorsal striatum (Hsu et al. 2005; Tobler et al. 2007). The caudate has also been shown to be sensitive to action-outcome contingency (Elliott et al. 2004; O'Doherty et al. 2004; Tanaka et al. 2008; Tricomi et al. 2004; Zink et al. 2004). In the expected omission condition there is a low probability that an action will lead to reward, and thus a low action-outcome contingency, whereas in the reward condition there is a high contingency between the button press and the outcome. Thus the dorsal striatum may play an important role in cementing the learned relationship between a given action and a desired outcome (Balleine et al. 2009; Haruno and Kawato 2006; Samejima et al. 2005).

The representation of outcome probability is necessary to make well-informed future decisions. For example, outcomes of low probability may not be worth investing effort to achieve. Alternatively, when outcome probabilities are not fixed, an increase in the allocation of effort might be necessary to increase the probability of obtaining a goal that is difficult to achieve. Indeed, individual differences in dopamine responsivity (i.e., receptor availability) in the caudate, measured with PET, have been linked to a willingness to expend effort to earn a low-probability reward (Treadway et al. 2012). When action-reward contingency is high, anticipated effort is also likely to be high; that is, participants will likely put more effort into responding when they believe that their effort will result in increased reward. Accordingly, the putamen has been found to be active when anticipated effort is high (Kurniawan et al. 2013), and also when participants choose to expend low effort for reward versus high effort (i.e., when their action is more likely to produce a positive outcome; Kurniawan et al. 2010). In addition, Croxson et al. (2009) found that the putamen plays a specific role in encoding anticipated effort, while the ventral striatum plays a specific role in encoding expected reward. In our study, we did not vary levels of effort required to gain a reward, but probability may affect neural responses in a similar way. In rats, an increase in effort has been observed after omission of expected food rewards. That is, when food rewards associated with pressing a lever cease to be delivered, rats temporarily increase their response rate on the lever, as if they believe that an increase in action vigor will elicit delivery of the omitted outcome (Amsel 1958; Dickinson 1975; Stout et al. 2003). 
This effect depends critically on outcome probability; highly predictable outcomes generate more vigorous responses after their omission compared with less predictable outcomes, demonstrating one way in which information about outcome probability is used to govern behavior (Dudley and Papini 1997).

It is of interest that this effect of reward probability occurred at the time of outcome presentation, rather than at the presentation of the cue. The caudate did not show significant effects of expected value or prediction error in this experiment. Rather than coding for the difference between the actual and expected outcomes, it instead responded more to receipt of predicted rewards than to a predicted lack of reward. Prediction error responses in the striatum tend to be found in the ventral striatum or the putamen, rather than the caudate (Abler et al. 2006; Hare et al. 2008; McClure et al. 2003; O'Doherty et al. 2003; Yacubian et al. 2006). Since prediction error incorporates information about how unexpected an outcome is, the ventral striatum must have access to information about outcome probability. It may be that the unexpectedness of an outcome contributes to how subjectively rewarding it is, which in turn influences ventral striatum activity, whereas the caudate signal reflects the strength of action-outcome contingency, which increases with increasing probability of reward.
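The contrast drawn above between a prediction error signal and a probability-tracking signal can be illustrated with a minimal sketch. It assumes, purely for illustration, a reward of unit magnitude, an expected value equal to reward magnitude × probability, and a prediction error equal to the received outcome minus that expected value. The function names and the probability values are hypothetical and are not taken from the analysis reported here.

```python
def expected_value(magnitude: float, probability: float) -> float:
    """Expected value as reward magnitude times reward probability."""
    return magnitude * probability


def prediction_error(outcome: float, expected: float) -> float:
    """Signed prediction error: actual outcome minus expected outcome."""
    return outcome - expected


# Hypothetical probabilities of reward receipt (illustrative values only).
probabilities = [0.1, 0.5, 0.9]

for p in probabilities:
    ev = expected_value(1.0, p)
    pe_reward = prediction_error(1.0, ev)    # reward delivered
    pe_omission = prediction_error(0.0, ev)  # reward omitted
    print(
        f"p={p:.1f}  EV={ev:.2f}  "
        f"PE(reward)={pe_reward:+.2f}  PE(omission)={pe_omission:+.2f}"
    )
```

Under these assumptions, the prediction error for a delivered reward shrinks as reward probability grows, whereas the caudate response described above grew with reward probability, so the two signals make opposite predictions for well-predicted rewards.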

Laterality of Effects

The results of our whole brain analyses were significant only on the right side for both the nucleus accumbens and the caudate nucleus. In the nucleus accumbens, this seems to be due to a thresholding issue, since a similar pattern of results was observed in the left nucleus accumbens region that was defined a priori. However, we note that our results were markedly different in the right and left caudate nuclei. Our a priori defined left caudate region showed a pattern more similar to that observed in the nucleus accumbens than in the right caudate, with differential activation to rewarding and devalued outcomes. If there is a continuum with the ventromedial striatum more sensitive to subjective value and the dorsolateral striatum more sensitive to probability, it is possible that since the caudate is in the middle of this continuum we happened to find more evidence for value coding in the left caudate and probability coding in the right caudate. It is also interesting to note that, as shown in Fig. 5, the differential effect of rewarding and devalued outcomes seems to be driven by a decrease in activation to devalued outcomes for both left nucleus accumbens and left caudate, whereas this effect seems to be driven by an increase in activation to rewarding outcomes for the right nucleus accumbens. Previous work on lateralization of reward-related activity in the striatum has shown mixed results, with some studies showing higher dopamine binding and synthesis and a stronger dopaminergic reward response in the right striatum than the left (Cannon et al. 2009; Martin-Soelch et al. 2011; van Dyck et al. 2002; Vernaleken et al. 2007) and other work suggesting stronger left-lateralized activity supporting approach processes (Murphy et al. 2003; Tomer et al. 2014). 
Therefore, additional research will be necessary to determine the nature of potential laterality differences in the striatum, and especially whether there are laterality differences in processing reward value versus probability.

Role of Orbitofrontal Cortex

Previous work has suggested that the prefrontal cortex, especially the medial OFC, plays an important role in representing reward value in the brain (Grabenhorst and Rolls 2011; Kringelbach et al. 2003; O'Doherty 2007; Peters and Buchel 2010; Rudenga and Small 2013). The OFC has been shown to track decision value, goal value, and outcome value (de Wit et al. 2009; Hare et al. 2008; Peters and Buchel 2010). In this experiment, we found that the medial OFC, like the striatum, adjusted its activity to the fractal cues such that in the second half of the task cues predicting reward produced greater activation than cues predicting reward omission. Although we did not observe significantly greater activation in the OFC for reward receipt relative to devalued food outcomes, it is possible that this null result may be due to a thresholding issue, since we did identify a region in the left medial prefrontal cortex that showed an effect of reward versus devalued outcome receipt that did not withstand our correction for multiple comparisons. Additionally, we observed a significant effect of reward probability in the right central OFC. Previous research has indicated that the central OFC may play a role in stimulus-outcome learning (O'Doherty 2007; Valentin et al. 2007). Its sensitivity to reward outcome probability in our experiment may thus reflect a role in learning how strongly each of the fractal stimuli was associated with a rewarding outcome.

Conclusion

To adaptively make decisions, we must be able to track the subjective value of an outcome in a way that is sensitive to changes in motivational state. In addition, we must be able to register the probability of a given outcome occurring. Our results suggest that the ventral striatum is especially sensitive to the motivational relevance of an outcome, and it does not seem to track information about the probability of an outcome occurring. Activity in the dorsal striatum, however, appears to correlate with the probability of reward receipt at the time of outcome.

By using food rewards, we were able to change the subjective value of the outcome without changing the outcome itself; the magnitude of the reward did not change, but its value did. Additionally, food outcomes allow for the delivery of an outcome of low value without taking away something of high value. For example, receipt of a low-value food is an experience different from loss of money. Thus our experiment emphasizes the subjective nature of valuation and its dependence on motivational state and shows that the brain's reward circuitry is sensitive to these subjective factors.

The reduction of an outcome's value and the omission of a previously well-predicted reward can both be thought of as “negative” outcomes, or punishments. Our results suggest, however, that these two types of negative outcomes are not isomorphic but rather that they affect brain activity in distinct ways. Thus impairments in value and probability representation might result in distinct types of behavior. For example, an overweighting of reward value relative to probability might lead to compulsive gambling in an effort to achieve a reward of high value that has a very low probability of occurrence, whereas an overweighting of probability might lead one to avoid taking risks necessary to achieve a desired goal because of a low probability of success. A successful integration of information about value and probability, however, allows us to make effective decisions and allocate an appropriate amount of effort to achieve our desired goals.

GRANTS

This work was supported by National Institute on Drug Abuse Grant R15 DA-029544 to E. Tricomi. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Drug Abuse or the National Institutes of Health.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: E.T. conception and design of research; E.T. and K.M.L. interpreted results of experiments; E.T. and K.M.L. prepared figures; E.T. and K.M.L. drafted manuscript; E.T. and K.M.L. edited and revised manuscript; E.T. and K.M.L. approved final version of manuscript; K.M.L. performed experiments; K.M.L. analyzed data.

ACKNOWLEDGMENTS

The authors thank Mauricio Delgado and Mike Shiflett for their valuable comments.

Present address of K. Lempert: Dept. of Psychology, New York University, New York, NY 10003.

REFERENCES

- Abler B, Walter H, Erk S, Kammerer H, Spitzer M. Prediction error as a linear function of reward probability is coded in human nucleus accumbens. Neuroimage 31: 790–795, 2006.

- Amsel A. The role of frustrative nonreward in noncontinuous reward situations. Psychol Bull 55: 102–119, 1958.

- Balleine BW, Dickinson A. The role of incentive learning in instrumental outcome revaluation by sensory-specific satiety. Anim Learn Behav 26: 46–59, 1998.

- Balleine BW, Liljeholm M, Ostlund SB. The integrative function of the basal ganglia in instrumental conditioning. Behav Brain Res 199: 43–52, 2009.

- Bennett CM, Wolford GL, Miller MB. The principled control of false positives in neuroimaging. Soc Cogn Affect Neurosci 4: 417–422, 2009.

- Bischoff-Grethe A, Hazeltine E, Bergren L, Ivry RB, Grafton ST. The influence of feedback valence in associative learning. Neuroimage 44: 243–251, 2009.

- Bjork JM, Hommer DW. Anticipating instrumentally obtained and passively-received rewards: a factorial fMRI investigation. Behav Brain Res 177: 165–170, 2007.

- Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron 30: 619–639, 2001.

- Cannon DM, Klaver JM, Peck SA, Rallis-Voak D, Erickson K, Drevets WC. Dopamine type-1 receptor binding in major depressive disorder assessed using positron emission tomography and [11C]NNC-112. Neuropsychopharmacology 34: 1277–1287, 2009.

- Cools R, Clark L, Owen AM, Robbins TW. Defining the neural mechanisms of probabilistic reversal learning using event-related functional magnetic resonance imaging. J Neurosci 22: 4563–4567, 2002.

- Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: a neuroimaging study of choice behavior. Nat Neurosci 8: 1255–1262, 2005.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci 29: 4531–4541, 2009.

- Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol 16: 199–204, 2006.

- de Borchgrave R, Rawlins JN, Dickinson A, Balleine BW. Effects of cytotoxic nucleus accumbens lesions on instrumental conditioning in rats. Exp Brain Res 144: 50–68, 2002.

- de Wit S, Corlett PR, Aitken MR, Dickinson A, Fletcher PC. Differential engagement of the ventromedial prefrontal cortex by goal-directed and habitual behavior toward food pictures in humans. J Neurosci 29: 11330–11338, 2009.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage 19: 430–441, 2003.

- Delgado MR, Locke HM, Stenger VA, Fiez JA. Dorsal striatum responses to reward and punishment: effects of valence and magnitude manipulations. Cogn Affect Behav Neurosci 3: 27–38, 2003.

- Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol 84: 3072–3077, 2000.

- Delgado MR, Stenger VA, Fiez JA. Motivation-dependent responses in the human caudate nucleus. Cereb Cortex 14: 1022–1030, 2004.

- Dickinson A. Transient effects of reward presentation and omission on subsequent operant responding. J Comp Physiol Psychol 88: 447–458, 1975.

- Doya K. Modulators of decision making. Nat Neurosci 11: 410–416, 2008.

- Dudley RT, Papini MR. Amsel's frustration effect: a pavlovian replication with control for frequency and distribution of rewards. Physiol Behav 61: 627–629, 1997.

- Elliott R, Newman JL, Longe OA, Deakin JF. Instrumental responding for rewards is associated with enhanced neuronal response in subcortical reward systems. Neuroimage 21: 984–990, 2004.

- Ernst M, Nelson EE, McClure EB, Monk CS, Munson S, Eshel N, Zarahn E, Leibenluft E, Zametkin A, Towbin K, Blair J, Charney D, Pine DS. Choice selection and reward anticipation: an fMRI study. Neuropsychologia 42: 1585–1597, 2004.

- Galvan A, Hare TA, Davidson M, Spicer J, Glover G, Casey BJ. The role of ventral frontostriatal circuitry in reward-based learning in humans. J Neurosci 25: 8650–8656, 2005.

- Garner DM, Olmsted MP, Bohr Y, Garfinkel PE. The eating attitudes test: psychometric features and clinical correlates. Psychol Med 12: 871–878, 1982.

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science 301: 1104–1107, 2003.

- Grabenhorst F, Rolls ET. Value, pleasure and choice in the ventral prefrontal cortex. Trends Cogn Sci 15: 56–67, 2011.

- Guitart-Masip M, Fuentemilla L, Bach DR, Huys QJ, Dayan P, Dolan RJ, Duzel E. Action dominates valence in anticipatory representations in the human striatum and dopaminergic midbrain. J Neurosci 31: 7867–7875, 2011.

- Haber SN, Fudge JL, McFarland NR. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J Neurosci 20: 2369–2382, 2000.

- Hare TA, O'Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci 28: 5623–5630, 2008.

- Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. J Neurophysiol 95: 948–959, 2006.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science 310: 1680–1683, 2005.

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci 10: 1625–1633, 2007.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci 21: 1–5, 2001.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci 25: 4806–4812, 2005.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci 12: 535–540, 2009.

- Kringelbach ML, O'Doherty J, Rolls ET, Andrews C. Activation of the human orbitofrontal cortex to a liquid food stimulus is correlated with its subjective pleasantness. Cereb Cortex 13: 1064–1071, 2003.

- Kurniawan IT, Guitart-Masip M, Dayan P, Dolan RJ. Effort and valuation in the brain: the effects of anticipation and execution. J Neurosci 33: 6160–6169, 2013.

- Kurniawan IT, Seymour B, Talmi D, Yoshida W, Chater N, Dolan RJ. Choosing to make an effort: the role of striatum in signaling physical effort of a chosen action. J Neurophysiol 104: 313–321, 2010.

- Levy I, Snell J, Nelson AJ, Rustichini A, Glimcher PW. Neural representation of subjective value under risk and ambiguity. J Neurophysiol 103: 1036–1047, 2010.

- Martin-Soelch C, Szczepanik J, Nugent A, Barhaghi K, Rallis D, Herscovitch P, Carson RE, Drevets WC. Lateralization and gender differences in the dopaminergic response to unpredictable reward in the human ventral striatum. Eur J Neurosci 33: 1706–1715, 2011.

- McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron 38: 339–346, 2003.

- Montague PR, King-Casas B, Cohen JD. Imaging valuation models in human choice. Annu Rev Neurosci 29: 417–448, 2006.

- Murphy FC, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotions: a meta-analysis. Cogn Affect Behav Neurosci 3: 207–233, 2003.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science 304: 452–454, 2004.

- O'Doherty JP. Lights, Camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards and choices. Ann NY Acad Sci 1121: 254–272, 2007.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron 38: 329–337, 2003.

- Orbitello B, Ciano R, Corsaro M, Rocco PL, Taboga C, Tonutti L, Armellini M, Balestrieri M. The EAT-26 as screening instrument for clinical nutrition unit attenders. Int J Obes (Lond) 30: 977–981, 2006.

- Peters J, Buchel C. Overlapping and distinct neural systems code for subjective value during intertemporal and risky decision making. J Neurosci 29: 15727–15734, 2009.

- Peters J, Buchel C. Neural representations of subjective reward value. Behav Brain Res 213: 135–141, 2010.

- Rudenga KJ, Small DM. Ventromedial prefrontal cortex response to concentrated sucrose reflects liking rather than sweet quality coding. Chem Senses 38: 585–594, 2013.

- Rushworth MF, Behrens TE. Choice, uncertainty and value in prefrontal and cingulate cortex. Nat Neurosci 11: 389–397, 2008.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science 310: 1337–1340, 2005.

- Smith BW, Mitchell DG, Hardin MG, Jazbec S, Fridberg D, Blair RJ, Ernst M. Neural substrates of reward magnitude, probability, and risk during a wheel of fortune decision-making task. Neuroimage 44: 600–609, 2009.

- Stout SC, Boughner RL, Papini MR. Reexamining the frustration effect in rats: aftereffects of surprising reinforcement and nonreinforcement. Learn Motiv 34: 437–456, 2003.

- Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain: an Approach to Medical Cerebral Imaging. Stuttgart, Germany: Thieme Medical, 1988.

- Tanaka SC, Balleine BW, O'Doherty JP. Calculating consequences: brain systems that encode the causal effects of actions. J Neurosci 28: 6750–6755, 2008.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol 97: 1621–1632, 2007.

- Tomer R, Slagter HA, Christian BT, Fox AS, King CR, Murali D, Gluck MA, Davidson RJ. Love to win or hate to lose? Asymmetry of dopamine D2 receptor binding predicts sensitivity to reward versus punishment. J Cogn Neurosci 26: 1039–1048, 2014.

- Treadway MT, Buckholtz JW, Cowan RL, Woodward ND, Li R, Ansari MS, Baldwin RM, Schwartzman AN, Kessler RM, Zald DH. Dopaminergic mechanisms of individual differences in human effort-based decision-making. J Neurosci 32: 6170–6176, 2012.

- Tricomi E, Balleine BW, O'Doherty JP. A specific role for posterior dorsolateral striatum in human habit learning. Eur J Neurosci 29: 2225–2232, 2009.

- Tricomi E, Rangel A, Camerer CF, O'Doherty JP. Neural evidence for inequality-averse social preferences. Nature 463: 1089–1091, 2010.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron 41: 281–292, 2004.

- Valentin VV, Dickinson A, O'Doherty JP. Determining the neural substrates of goal-directed learning in the human brain. J Neurosci 27: 4019–4026, 2007.

- van Dyck CH, Seibyl JP, Malison RT, Laruelle M, Zoghbi SS, Baldwin RM, Innis RB. Age-related decline in dopamine transporters: analysis of striatal subregions, nonlinear effects, and hemispheric asymmetries. Am J Geriatr Psychiatry 10: 36–43, 2002.

- Vernaleken I, Weibrich C, Siessmeier T, Buchholz HG, Rosch F, Heinz A, Cumming P, Stoeter P, Bartenstein P, Grunder G. Asymmetry in dopamine D2/3 receptors of caudate nucleus is lost with age. Neuroimage 34: 870–878, 2007.

- Yacubian J, Glascher J, Schroeder K, Sommer T, Braus DF, Buchel C. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci 26: 9530–9537, 2006.

- Yin HH, Knowlton BJ, Balleine BW. Blockade of NMDA receptors in the dorsomedial striatum prevents action-outcome learning in instrumental conditioning. Eur J Neurosci 22: 505–512, 2005.

- Zink CF, Pagnoni G, Martin-Skurski ME, Chappelow JC, Berns GS. Human striatal responses to monetary reward depend on saliency. Neuron 42: 509–517, 2004.