Abstract

Background

Publication of a manuscript does not end an author’s responsibilities. Reasons to contact an author after publication include clarification, access to raw data, and collaboration. However, legitimate questions have been raised about whether corresponding authors of biomedical publications are generally meeting these responsibilities.

Questions/purposes

This study aims to (1) establish what proportion of corresponding authors accept the responsibility of correspondence; (2) identify characteristics of responders; and (3) assess email address decay over time. We hypothesize that the response rate is unrelated to journal impact factor.

Methods

We contacted 450 corresponding authors across various fields of biomedical research about the availability of additional data from their study, under the pretense of needing these data for a related review article. Authors were randomly selected from 45 journals with impact factors ranging from 52 to 0.29; the source articles were published between May 2003 and May 2013. We recorded the proportion of corresponding authors who replied, their characteristics, and the proportion of emails returned because of inactive addresses; 446 authors were available for final analysis.

Results

Fifty-three percent (190/357) of the authors with working email addresses responded to our request. Clinical researchers were more likely to reply than basic/translational scientists (51% [114/225] versus 34% [76/221]; p < 0.001). Impact factor and other author characteristics did not differ between responders and nonresponders. Logistic regression analysis showed that the odds of replying decreased by 15% per year since publication (odds ratio [OR], 0.85; 95% CI, 0.79–0.91; p < 0.001) and that clinical research was positively associated with response (OR, 2.0; 95% CI, 1.3–2.9; p = 0.001). All email addresses (45/45) from papers published in 2013 were reachable, but 49% (21/43) of those from papers published 10 years earlier had become invalid.

Conclusions

Our results suggest that contacting corresponding authors is problematic throughout biomedical research. If journals defined the responsibilities of corresponding authors more explicitly, particularly after publication, the response rate to data requests might increase. Other possible ways to improve communication after publication are: (1) listing more than one email address per corresponding author, eg, an institutional and a personal address; (2) listing all authors’ email addresses; (3) sending an automated reply offering alternative ways to get in touch when an author leaves an institution; and (4) linking published manuscripts to research platforms.

Introduction

Publication of a manuscript does not end an author’s responsibilities. Collaboration, access to individual participant data (such as for meta-analysis [8, 11]), and clarification of the points made in a paper are important reasons for researchers to keep open lines of communication with the users of their work. This is at least as important in laboratory science as in clinical research: some investigators have reported low reproducibility of basic science results [1, 7], and one report found that only about half of experimental resources such as cell lines and antibodies were uniquely identifiable after publication [13]. Another report indicated that only 60% of clinical trials provided enough information to implement the described intervention [4]. It is therefore unsurprising that unusable research reports have been identified as one of the main sources of avoidable waste in biomedical science, estimated to affect approximately 50% of all publications [3].

The corresponding author holds a special position on any published work. This author is designated to answer inquiries (or to find the coauthor who can) and should be responsive to such queries.

Aware of the importance of adequate communication after publication, we aimed to (1) establish what proportion of corresponding authors accept the responsibility of correspondence; (2) identify characteristics of responders; and (3) assess email address decay over time. Our null hypothesis was that the response rate is independent of journal impact factor. We further hypothesized that the response rate is not influenced by date of publication, author characteristics, type of email address, or possible conflict of interest.

Materials and Methods

A previous survey found that more researchers reported being denied data than admitted to withholding information; the investigators suspected that participants may have underreported behaviors they viewed as contrary to accepted norms of practice [2]. To avoid this bias, we did not send a questionnaire. Instead, we mimicked an actual request for additional data from each study, under the pretense of needing these data for a related review article. After our institutional review board approved the study, which involved some deception but no physical risk, we selected 45 journals, chose one paper per year from each journal during a 10-year period (May 2003 to May 2013), and contacted the corresponding author of each paper. We drafted an email that mentioned the contacted author’s name and the manuscript title and asked whether the author was willing to cooperate, without requesting any specific data at that point.

Selection of Journals

In selecting the 45 biomedical journals, we aimed to cover the full range of impact factors. First, we chose the 15 highest-ranked medical journals and the 15 highest-ranked basic science/translational research journals; their impact factors ranged from 52 to 6. Second, we added 15 journals covering the remaining range from 6 to 0 in five steps: for each step, we selected the basic science journal, the general/internal medicine journal, and the surgery journal with the impact factor closest to that step (no orthopaedic journals were included; Appendix 1).
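The step-wise selection amounts to a nearest-value lookup. The Python sketch below is a hypothetical illustration, not the authors' procedure: the function `pick_closest` and the candidate pool are invented, and the step values 5.2, 3.9, 2.6, 1.3, and 0 are an assumption inferred from the impact factors listed in Appendix 1 rather than stated in the Methods.

```python
# Hypothetical sketch: fill each impact-factor step with the closest journal per category.
# ASSUMPTION: step values inferred from Appendix 1, not stated in the Methods.
TARGETS = [5.2, 3.9, 2.6, 1.3, 0.0]


def pick_closest(candidates, targets):
    """candidates maps a category name to a list of (journal, impact_factor) pairs.
    Returns (target, category, journal, impact_factor) tuples, one per target and category."""
    selected, used = [], set()
    for target in targets:
        for category, journals in candidates.items():
            pool = [j for j in journals if j[0] not in used]
            if not pool:
                continue  # no unused journal left in this category
            # Closest impact factor wins; ties go to the journal listed first
            name, impact = min(pool, key=lambda j: abs(j[1] - target))
            used.add(name)
            selected.append((target, category, name, impact))
    return selected


# Hypothetical pool: two surgery journals from Appendix 1 plus an invented one
pool = {"surgery": [("Journal of Investigative Surgery", 1.3),
                    ("International Surgery", 0.31),
                    ("Hypothetical Surgery Journal", 2.0)]}
print(pick_closest(pool, [1.3, 0.0]))
```

Journals that have already been chosen are skipped so that no journal fills two steps; whether the original selection had to handle that case is not reported.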

To determine impact factors and the basic science and medical categories, we used the classification in the most recent edition of the ISI Web of Knowledge Journal Citation Reports [10]. All basic science/translational research journals were selected from the categories biochemical research methods, biochemistry/molecular biology, cell/tissue engineering, and cell biology. The 15 highest-ranked clinical journals were selected from the oncology, general/internal medicine, and surgery categories; the clinical journals for the lower impact factor steps were also drawn from general/internal medicine and surgery. Journals had to be available on PubMed during the 10-year period, and non-English journals were excluded. Because we planned to request additional information from all corresponding authors for a review article, we excluded journals that predominantly publish reviews: a review article would preferably include original research rather than content from other reviews.

Selection of Articles and Authors

MEDLINE-format text records of the selected journals’ articles were exported from PubMed. We imported the text files into Stata® (Version 13; StataCorp LP, College Station, TX, USA) and removed articles tagged as reviews, editorials, letters to the editor, biographies, comments, case reports, or historical articles.
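The authors performed this filtering in Stata. As a hypothetical illustration only, the Python sketch below parses PubMed's MEDLINE text format, in which each field starts with a padded tag such as `PMID-` or `PT  -` and continuation lines are indented, and drops records whose publication type (`PT`) matches an excluded category. The labels in `EXCLUDED_TYPES` are assumed to be the corresponding PubMed publication types, and the function names are invented.

```python
# Hypothetical sketch: filter PubMed MEDLINE-format records by publication type.
EXCLUDED_TYPES = {
    "Review", "Editorial", "Letter", "Biography",
    "Comment", "Case Reports", "Historical Article",
}


def parse_medline(text):
    """Parse MEDLINE-format text into a list of records (tag -> list of values)."""
    records, current, last_tag = [], {}, None
    for line in text.splitlines():
        if not line.strip():
            # A blank line separates records
            if current:
                records.append(current)
            current, last_tag = {}, None
            continue
        if line[:4].strip() and line[4:6] == "- ":
            # New field: 4-character padded tag, then "- ", then the value
            last_tag = line[:4].strip()
            current.setdefault(last_tag, []).append(line[6:].strip())
        elif last_tag is not None:
            # Indented continuation line: extend the previous value of the same tag
            current[last_tag][-1] += " " + line.strip()
    if current:
        records.append(current)
    return records


def keep_original_articles(records):
    """Drop records tagged with any excluded publication type (PT field)."""
    return [r for r in records if not EXCLUDED_TYPES & set(r.get("PT", []))]
```

Because an article can carry several `PT` tags, the check uses a set intersection, so a record tagged both "Journal Article" and "Review" is still removed.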

We assigned each article a random number between 0 and 1 (the random number generator seed was set to 19083457) and, for each journal and year, selected the three articles with the numbers closest to 0.
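A minimal Python sketch of this seeded random selection follows. It reuses the reported seed, but Python's Mersenne Twister generator differs from Stata's, so it will not reproduce the authors' actual selection; the function name `select_per_stratum` and the `journal`, `year`, and `pmid` fields are hypothetical.

```python
import random
from collections import defaultdict


def select_per_stratum(articles, seed=19083457, k=3):
    """articles: list of dicts with hypothetical 'journal', 'year', and 'pmid' keys.
    Returns {(journal, year): the k articles with the lowest random numbers}."""
    rng = random.Random(seed)  # Mersenne Twister; NOT Stata's generator
    strata = defaultdict(list)
    for article in articles:
        # Draw a uniform number in [0, 1) for each article, in input order
        strata[(article["journal"], article["year"])].append((rng.random(), article))
    chosen = {}
    for key, scored in strata.items():
        scored.sort(key=lambda pair: pair[0])  # lowest (closest to 0) first
        chosen[key] = [article for _, article in scored[:k]]
    return chosen
```

Because the numbers are drawn in input order, the same seed yields the same selection only if the article list is in the same order.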

Our previous experience with contacting authors showed that few corresponding authors had personal email addresses or were senior authors [12]. To ensure sufficient numbers of personal email addresses and senior corresponding authors, we chose one of the three selected articles, giving preference to papers with a personal email address or a senior author as corresponding author.
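One plausible reading of this preference rule is sketched below in Python. The tie-breaking order (the first qualifying article in random order, otherwise the first article) is an assumption because the Methods do not specify it, and the function name and field names are hypothetical.

```python
def choose_one(candidates):
    """candidates: the three randomly selected articles for one journal-year,
    ordered by their random number (lowest first). Each is a dict with
    'email_type' ('personal' or 'institutional') and
    'author_position' ('first', 'senior', or 'other').
    ASSUMPTION: take the first candidate with a personal address or a senior
    corresponding author; if none qualifies, take the first candidate."""
    for article in candidates:
        if article["email_type"] == "personal" or article["author_position"] == "senior":
            return article
    return candidates[0]
```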

Four of the 450 initially included corresponding authors were excluded from the final analysis: one journal had no email address available from May 2003 to April 2004, one paper listed a wrong email address on PubMed, and one person, who had been included twice under different names, asked to be removed from the study.

Correspondence

On October 22, we sent our covert survey as an automated email message. The email was signed by one of the authors of this study (TT) and included his institution, contact details, and position as a research fellow without indicating a specific specialty (Appendix 2). After 3 weeks, replies were collected, and all participants received a followup email signed by the principal investigator (JHS) and the initial sender (TT) explaining the nature of our project (Appendix 3). From that moment on, all data were stored anonymously. Although most reactions were positive, two formal complaints about the deception were filed with our institutional review board.

Measured Outcome Variables

We recorded the following information on the corresponding authors: response; position on the paper; reported degree; personal or institutional email address; date of publication; basic science or clinical research; specialty (surgery or general/internal medicine); and any conflict of interest or industry involvement mentioned in the manuscript.

Response was defined as any nonautomatic reply to our email. Position on the paper was classified as “first,” “senior,” or “other”; the sole author of a single-author paper was classified as first author. We categorized degree as PhD (with or without other degrees), MD (without a PhD, with or without other degrees), or other. The degree was taken from the original manuscript or the response email, or established through a Google search.
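These coding rules can be expressed as two small functions. In this hypothetical Python sketch, a PhD takes precedence over an MD, as in the categories above; treating the last listed author as the senior author is an assumption, because the Methods do not define "senior".

```python
def degree_category(degrees):
    """Map a list of degree abbreviations to 'PhD', 'MD', or 'Other'.
    A PhD takes precedence; 'MD' applies only to authors without a PhD."""
    normalized = {d.replace(".", "").strip().upper() for d in degrees}
    if "PHD" in normalized:
        return "PhD"
    if "MD" in normalized:
        return "MD"
    return "Other"


def position_category(author_index, n_authors):
    """author_index is 0-based. A sole author counts as first author.
    ASSUMPTION: the senior author is the last listed author."""
    if author_index == 0:
        return "first"
    if author_index == n_authors - 1:
        return "senior"
    return "other"
```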

We distinguished personal from institutional email addresses by domain suffixes such as “.edu”, “.gov”, “.org”, and “.ac” and by the major personal email providers: Gmail, Hotmail, Yahoo, 163, and AOL. Articles were classified as basic science or clinical research, and as surgery or general/internal medicine, by reading the abstract.
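The email classification rule above can be sketched as a domain-label check. This Python version is a hypothetical illustration: matching on whole domain labels (so that ".ac.uk" counts as ".ac") is an assumption, and addresses matching neither rule are flagged as "unclassified" because the Methods do not say how such cases were resolved.

```python
# Hypothetical sketch of the email-type rule described in the Methods.
PERSONAL_PROVIDERS = {"gmail", "hotmail", "yahoo", "163", "aol"}
INSTITUTIONAL_LABELS = {"edu", "gov", "org", "ac"}


def classify_email(address):
    """Return 'personal', 'institutional', or 'unclassified' for an address."""
    domain = address.rsplit("@", 1)[-1].lower()
    labels = set(domain.split("."))
    # Personal: a major provider name appears as a domain label (e.g. yahoo.co.uk)
    if labels & PERSONAL_PROVIDERS:
        return "personal"
    # Institutional: an academic, government, or organization label (e.g. .edu, .ac.uk)
    if labels & INSTITUTIONAL_LABELS:
        return "institutional"
    # Neither rule applies; how the study handled such cases is not reported
    return "unclassified"
```

Checking providers first means an address such as one at a personal provider's country domain is never mistaken for an institutional one.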

Statistical Analysis

Data were analyzed using Stata® (Version 13, StataCorp LP). Because the data were predominantly non-normally distributed according to the Shapiro-Wilk test, continuous data were compared using the Kruskal-Wallis rank test. Categorical data were compared using Fisher’s exact test. We performed a backward stepwise logistic regression analysis that included all variables with p < 0.10 in bivariate analysis to test for confounding influences on the reply rate. A bivariate power analysis for our primary null hypothesis showed that 432 authors needed to be contacted (G*Power 3.1.7; α = 0.05; power = 0.80).
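Fisher's exact test for a 2 × 2 table can be computed directly from the hypergeometric distribution. The Python sketch below is an illustration, not the Stata routine the authors used; it sums the probabilities of all tables with the observed margins that are no more likely than the observed one. Applied to the research-field counts in Table 2, treating undeliverable requests as nonresponses as the Table 2 denominators do, it should give a p value well below 0.01 and an unadjusted odds ratio of about 1.96.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].
    Returns (sample odds ratio, p value)."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x,
        # given the fixed row and column totals
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum over all tables no more likely than the observed one;
    # the small tolerance guards against floating-point ties
    p_value = sum(
        prob(x) for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-7)
    )
    odds_ratio = (a * d) / (b * c) if b * c else float("inf")
    return odds_ratio, min(p_value, 1.0)


# Research field (Table 2): replied versus did not reply (including undeliverable)
clinical_replied, clinical_total = 114, 225
basic_replied, basic_total = 76, 221
odds_ratio, p = fisher_exact_two_sided(
    clinical_replied, clinical_total - clinical_replied,
    basic_replied, basic_total - basic_replied,
)
print(f"OR = {odds_ratio:.2f}, p = {p:.4f}")
```

This odds ratio is unadjusted; the regression estimate of 2.0 reported in the Results additionally accounts for date of publication.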

Results

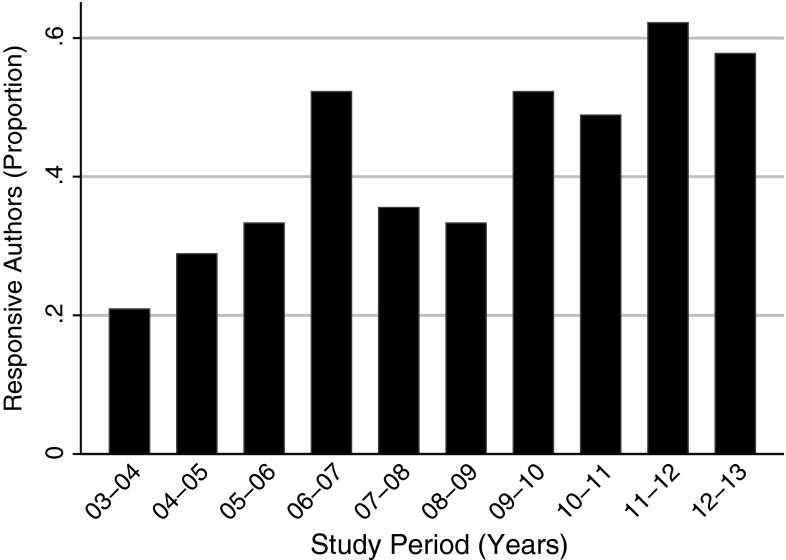

Of all deliverable inquiries, 53% (190/357) were answered by the corresponding author. Owing to the increase in the proportion of undeliverable emails with article age, response proportions were higher for more recently published papers, ranging from 21% (nine of 43) for papers published in 2003 to 58% (26/45) for those published in 2013 (p < 0.001; Fig. 1). With the numbers available, median impact factor did not differ among respondents, nonrespondents, and authors with undeliverable email addresses (7, 8, and 8, respectively; p = 0.44; Table 1).

Fig. 1.

The histogram shows the proportion of corresponding authors who responded to our request for additional data, by year of publication, from 2003 to 2013.

Table 1.

Proportion of response, no reply, and undeliverable emailed requests

| Dependent variable | Percentage (n = 446) | From 2003 to 2013 | p value | Impact factor* | p value |
|---|---|---|---|---|---|
| Response | 43% (190) | 21% (9/43)–58% (26/45) | < 0.001 | 7 (4–15) | 0.44 |
| No reply | 37% (167) | 30% (13/43)–42% (19/45) | 0.50 | 8 (3–14) | |
| Undeliverable | 20% (89) | 49% (21/43)–0% (0/45) | < 0.001 | 8 (5–12) | |

* Median (interquartile range).
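The 53% reported in the text and the 43% in Table 1 describe the same 190 responses over different denominators: the first excludes the 89 undeliverable requests, and the second includes them.

$$
\frac{190}{190 + 167} = \frac{190}{357} \approx 0.53,
\qquad
\frac{190}{190 + 167 + 89} = \frac{190}{446} \approx 0.43
$$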

Clinical researchers were 1.5 times more likely than basic/translational researchers to reply to our email request (response proportion 51% [114/225] versus 34% [76/221]; p < 0.001). This difference appears to depend on a higher proportion of undeliverable emails among basic/translational researchers (26% [58/221] versus 14% [31/225] clinical; p = 0.001) rather than on actual unresponsiveness (no reply, 39% [87/221] basic/translational versus 36% [80/225] clinical; p = 0.41). Other characteristics, such as type of email address or possible conflict of interest, did not differ between responders and nonresponders (Table 2). The final logistic regression model included date of publication and field of research; these factors explained 5.7% of the variability in response. Clinical research was positively associated with response (odds ratio [OR], 2.0; 95% CI, 1.3–2.9; standard error [SE], 0.40; p = 0.001), and the odds of replying decreased by 15% per year since publication (OR, 0.85; 95% CI, 0.79–0.91; SE, 0.030; p < 0.001).
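Because the model reports a single odds ratio per year, the effect over the full study period follows by raising it to the number of years. This assumes, as a per-year estimate implies, that the log odds of replying change linearly with publication date; because the inputs are rounded, the results are approximate.

$$
\mathrm{OR}_{10\ \text{years}} = 0.85^{10} \approx 0.20,
\qquad
95\%\ \text{CI} \approx \left(0.79^{10},\ 0.91^{10}\right) \approx (0.09,\ 0.39)
$$

Under this model, the odds of a reply for a paper published 10 years earlier were roughly one-fifth of those for a recent paper, holding field of research constant.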

Table 2.

Characteristics of the contacted corresponding authors

| Independent variable | Proportion of response | p value |
|---|---|---|
| Degree | | |
| PhD | 0.42 (89/212) | 0.79 |
| MD | 0.45 (81/189) | |
| Other | 0.39 (15/38) | |
| Research field | | |
| Basic/translational | 0.34 (76/221) | 0.001 |
| Clinical | 0.51 (114/225) | |
| Specialty | | |
| General/internal | 0.44 (123/280) | 0.76 |
| Surgery | 0.47 (24/51) | |
| Email address | | |
| Personal | 0.44 (47/108) | 0.82 |
| Institutional | 0.42 (143/338) | |
| Position on paper | | |
| First | 0.43 (181/423) | 1.0 |
| Senior | 0.40 (8/20) | |
| Other | 0.33 (1/3) | |
| Industry involvement | | |
| Yes | 0.48 (25/52) | 0.88 |
| No | 0.46 (88/192) | |

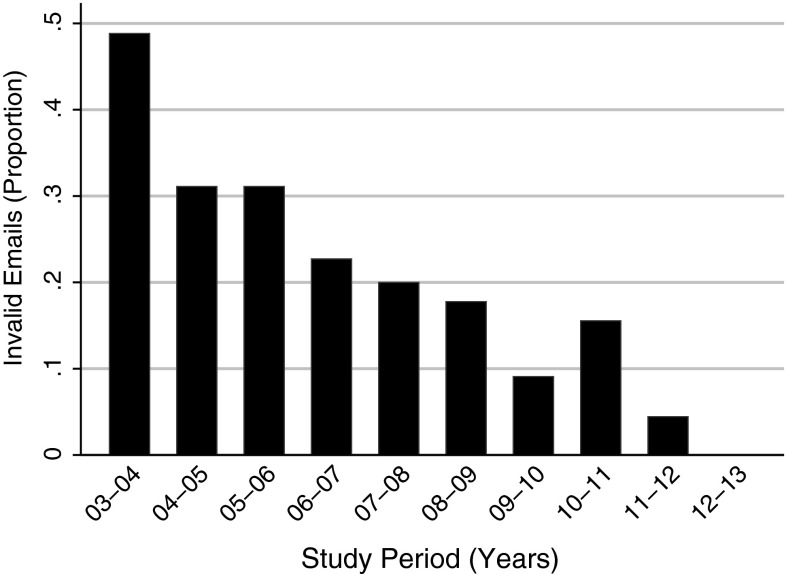

For papers published in 2013, there was no email decay: all email addresses (45/45) were reachable. For papers published 10 years earlier, however, almost half of the email addresses had become invalid (49% [21/43]; p < 0.001; Fig. 2).

Fig. 2.

This histogram shows the proportion of corresponding authors whose email address was invalid, by year of publication, from 2003 to 2013.

Discussion

After publication of a manuscript, authors should remain available to provide access to raw data and to clarify their data, especially given the importance of individual participant data for meta-analyses [8, 11], the low reproducibility of basic science results [1, 7, 13], and the difficulties encountered when implementing published clinical interventions [4]. We aimed to establish how many authors accept the responsibility of correspondence and to identify characteristics of responders. Because authors who know they are completing a survey are susceptible to giving socially desirable answers, we mimicked an actual data request. Our study confirms that contacting corresponding authors is problematic throughout biomedical research: nearly half of all authors with a working email address did not respond to our inquiry. We hope that these findings will raise awareness of author responsibilities after publication.

This study has some limitations. Because we did not immediately request specific data, some recipients may have found the email ambiguous and therefore not responded. After our survey was explained, four authors confirmed that this ambiguity was their reason for not responding. However, a request for specific data would have demanded far more of the recipients’ time. To minimize the time demanded, we also contacted corresponding authors only once; several attempts would have increased the response rate but also the email burden. In addition, we contacted corresponding authors only at the email address listed on PubMed. When an author’s name is unique and the institution publishes contact information openly on the web, an Internet search might yield more current contact information. Because Internet service providers increasingly disable nondelivery reports deliberately, the number of expired email addresses may be underestimated; our study could not assess this. After careful consideration together with our institutional review board, we decided that the deception in this study was of minimal risk, justifiable, and unlikely to cause harm. We judged that medical researchers are not a vulnerable or special population and are more likely than most to understand and see the value in what we regarded as a small but justified deception. We anticipated that there could be complaints about this project; those we received were reported to our review board.

To our knowledge, the current work is the largest study of author responsiveness in the medical field; only two other, small studies have addressed this issue [9, 12]. In one study that requested data sets from 10 authors, three did not reply and two email addresses were no longer working [9]. In a review requiring additional data, 32% (six of 19) of the contacted authors did not reply [12]. Similar problems have been encountered in other fields. Twenty-seven percent (38/141) of psychologists contacted for their datasets left the inquiry unanswered [16, 17]. When biologists requested raw data from morphologic studies of plants and animals, the response rate was 37% (191/516) [14]. In two studies contacting evolutionary biologists, 29% (51/176) [15] and 17% (five of 30) replied, the latter in response to a written request [5]. Journals might consider helping researchers who receive no response from a corresponding author by contacting the author themselves or by providing contact information for other researchers involved. If the matter remains unresolved, the corresponding author could be barred from future publication in that journal until it is resolved, similar to a hold on a library card for not returning a book.

To our knowledge, this is the first study to identify characteristics of responders. We found a higher proportion of undeliverable emails among basic/translational researchers, suggesting that researchers in these fields move or change their email addresses more often.

Although still substantial, email decay appears to have declined: in the previous decade (1994–2004), it ranged from 100% (nine of nine) to 24% (3915/16,340) [18], versus 49% (21/43) to 0% (0/45) in our study. Undeliverable messages mainly result from expired email addresses, most likely because an author leaves an institution or changes his or her email address, a problem more pronounced in basic and translational research. One possible way to reduce the impact of email decay is to list more email addresses on published papers [14]. In theory, personal email addresses might be more robust than institutional ones, because people may change institutions more often than they change their personal email; our results do not support this hypothesis. However, requiring both an institutional and a personal email address increases the chance that at least one remains valid. A more rigorous step recently taken by some journals is listing the email addresses of all authors on the published paper and in PubMed [6]. Another remedy, encountered only twice in our study, would be to send an automated reply offering alternative ways to get in touch after the corresponding author has left the institution, rather than deactivating the address. By linking published manuscripts to research platforms that post up-to-date contact information (similar to social media websites), email decay might become less relevant.
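The benefit of listing two addresses can be illustrated with a simple calculation. Assume, hypothetically, that the institutional and personal addresses independently become invalid with probabilities $q_1$ and $q_2$, both set to 0.49, the proportion observed for papers published in 2003. Independence is an optimistic assumption, because the two addresses may fail for related reasons.

$$
P(\text{at least one valid}) = 1 - q_1 q_2 = 1 - 0.49^2 \approx 0.76
\quad\text{versus}\quad
1 - q_1 = 0.51 \ \text{for a single address}
$$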

Our results show that only about half of authors with a working email address actively accept the responsibilities of corresponding authorship. In addition, email decay substantially hinders correspondence with authors. Although email overload is a fact of everyday life, it does not diminish the ethical responsibility to share data. If journals defined the responsibilities of corresponding authors more explicitly, particularly after publication, the response rate to data requests might increase. The effects of email decay might be reduced by (1) listing two email addresses; (2) listing the email addresses of all authors; (3) offering alternative ways to get in touch when the corresponding author leaves his or her institution; and (4) linking published manuscripts to research platforms.

Acknowledgments

We thank Jos de Bruin, Drs, and David Ring, MD, PhD (Orthopaedic Hand and Upper Extremity Service, Massachusetts General Hospital, Harvard Medical School, Boston, MA) for their thorough reading and helpful comments.

Appendix 1. Selected journals

| Ranking | Journal | Impact factor | General focus* |
|---|---|---|---|
| 1 | New England Journal of Medicine | 52 | Clinical research |
| 2 | Lancet | 39 | Clinical research |
| 3 | Journal of the American Medical Association | 30 | Clinical research |
| 4 | Lancet Oncology | 25 | Clinical research |
| 5 | Cancer Cell | 25 | Basic science |
| 6 | Journal of Clinical Oncology | 18 | Clinical research |
| 7 | British Medical Journal | 17 | Clinical research |
| 8 | Molecular Cell | 15 | Basic science |
| 9 | Molecular Psychiatry | 15 | Basic science |
| 10 | Genome Research | 14 | Basic science |
| 11 | Journal of the National Cancer Institute | 14 | Clinical research |
| 12 | Acta Crystallographica Section D | 14 | Basic science |
| 13 | Annals of Internal Medicine | 14 | Internal/general medicine |
| 14 | Developmental Cell | 13 | Basic science |
| 15 | Genes & Development | 12 | Basic science |
| 16 | Journal of Cell Biology | 11 | Basic science |
| 17 | Cell Research | 11 | Basic science |
| 18 | Molecular Biology and Evolution | 10 | Basic science |
| 19 | Leukemia | 10 | Basic science |
| 20 | Cancer Research | 8.7 | Basic science |
| 21 | Cell Death & Differentiation | 8.4 | Basic science |
| 22 | Nucleic Acids Research | 8.3 | Basic science |
| 23 | Clinical Cancer Research | 7.8 | Clinical research |
| 24 | Stem Cells | 7.7 | Basic science |
| 25 | Annals of Oncology | 7.4 | Clinical research |
| 26 | Canadian Medical Association Journal | 6.5 | Clinical research |
| 27 | Journal of Internal Medicine | 6.5 | Internal/general medicine |
| 28 | Annals of Surgery | 6.3 | Surgery |
| 29 | American Journal of Transplantation | 6.2 | Clinical research |
| 30 | Journal of Neuro-Oncology | 6.2 | Clinical research |
| 31 | Cell Cycle | 5.2 | Basic science |
| 32 | Annals of Medicine | 5.1 | Internal/general medicine |
| 33 | Journal of Neurology, Neurosurgery and Psychiatry | 4.9 | Surgery |
| 34 | American Journal of Preventive Medicine | 3.9 | Internal/general medicine |
| 35 | Liver Transplantation | 3.9 | Surgery |
| 36 | Journal of Molecular Biology | 3.9 | Basic science |
| 37 | Shock Journal | 2.6 | Surgery |
| 38 | Journal of Pain and Symptom Management | 2.6 | Internal/general medicine |
| 39 | International Journal of Biological Macromolecules | 2.6 | Basic science |
| 40 | Journal of Investigative Surgery | 1.3 | Surgery |
| 41 | Biological Trace Element Research | 1.3 | Basic science |
| 42 | Yonsei Medical Journal | 1.3 | Surgery |
| 43 | Preparative Biochemistry & Biotechnology | 0.41 | Basic science |
| 44 | International Surgery | 0.31 | Surgery |
| 45 | Scottish Medical Journal | 0.29 | Internal/general medicine |

* As indicated by the ISI Web of Knowledge.

Appendix 2. Sent email request

Dear Dr [author’s initials and last name]:

With great interest we read your article: [manuscript title]. We would like to include your article in our systematic review; however, we need some additional data not mentioned in your manuscript. Please let us know if you would be so kind to provide us with this data.

Kind regards,

T. Teunis MD

Research Fellow

Massachusetts General Hospital

Harvard Medical School

phone: (+1) 617-724-9563

email: tteunis@partners.org

fax: (+1) 617-643-1274

Appendix 3. Explanatory email

Dear Dr [author’s initials and last name]:

Thank you for your response. We want to inform you that our previous email was intentionally misleading: we are conducting a survey on the response rate of corresponding authors. To minimize bias, we were not able to tell you this in our previous correspondence. Your paper was randomly selected. Our intent is to see how many corresponding authors actually accept the role of “corresponding author” and are willing to share data on email request. We are not actually asking for any additional data. All results are handled confidentially and are from this point on anonymized. Your name will not be linked to any response provided. If you wish to have a copy of our study results/report, let us know by email and we will send you a copy electronically. If you have any further questions, please do not hesitate to contact us. We apologize for any distress or inconvenience, and thank you for your response.

Sincerely,

Teun Teunis MD

Research fellow

&

Joseph H. Schwab MD, MA

Principal Investigator, Chief of Spine Surgery

Department of Orthopaedic Surgery

Massachusetts General Hospital

Harvard Medical School

Address: Room 3.946, Yawkey Building

Massachusetts General Hospital

55 Fruit Street

Boston, MA 02114, USA

phone: 617-724-9563

email1: tteunis@mgh.harvard.edu

email2: jhschwab@mgh.harvard.edu

fax: 617-643-1274

Footnotes

One of the authors (TT) received research grants from the Prince Bernhard Culture Fund & Kuitse Fung (Amsterdam, The Netherlands) (less than USD 10,000) and the Fundatie van de Vrijvrouwe van Renswoude te ’s-Gravenhage (The Hague, The Netherlands) (less than USD 10,000).

One of the authors (JS) certifies that he, or a member of his immediate family, has or may receive payments or benefits, during the study period from Stryker (Kalamazoo, MI, USA) (less than USD 10,000), and Biom’up (Saint-Priest, France) (less than USD 10,000).

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research ® editors and board members are on file with the publication and can be viewed on request.

Clinical Orthopaedics and Related Research ® neither advocates nor endorses the use of any treatment, drug, or device. Readers are encouraged to always seek additional information, including FDA-approval status, of any drug or device prior to clinical use.

Each author certifies that his or her institution waived approval for the human protocol for this investigation and that all investigations were conducted in conformity with ethical principles of research.

References

1. Begley CG, Ellis LM. Drug development: raise standards for preclinical cancer research. Nature. 2012;483:531–533. doi: 10.1038/483531a.
2. Blumenthal D, Campbell EG, Anderson MS, Causino N, Louis KS. Withholding research results in academic life science: evidence from a national survey of faculty. JAMA. 1997;277:1224–1228. doi: 10.1001/jama.1997.03540390054035.
3. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374:86–89. doi: 10.1016/S0140-6736(09)60329-9.
4. Glasziou P, Meats E, Heneghan C, Shepperd S. What is missing from descriptions of treatment in trials and reviews? BMJ. 2008;336:1472–1474. doi: 10.1136/bmj.39590.732037.47.
5. Leberg PL, Neigel JE. Enhancing the retrievability of population genetic survey data? An assessment of animal mitochondrial DNA studies. Evolution. 1999;53:1961–1965. doi: 10.2307/2640454.
6. Menendez ME, Bot AG, Hageman MG, Neuhaus V, Mudgal CS, Ring D. Computerized adaptive testing of psychological factors: relation to upper-extremity disability. J Bone Joint Surg Am. 2013;95:e149. doi: 10.2106/JBJS.L.01614.
7. Prinz F, Schlange T, Asadullah K. Believe it or not: how much can we rely on published data on potential drug targets? Nat Rev Drug Discov. 2011;10:712. doi: 10.1038/nrd3439-c1.
8. Riley RD, Lambert PC, Abo-Zaid G. Meta-analysis of individual participant data: rationale, conduct, and reporting. BMJ. 2010;340:c221. doi: 10.1136/bmj.c221.
9. Savage CJ, Vickers AJ. Empirical study of data sharing by authors publishing in PLoS journals. PLoS One. 2009;4:e7078. doi: 10.1371/journal.pone.0007078.
10. Science and Scholarly Research Division. Journal Citation Reports®. Available at: http://wokinfo.com/products_tools/analytical/jcr/. Accessed November 3, 2013.
11. Simmonds MC, Higgins JP, Stewart LA, Tierney JF, Clarke MJ, Thompson SG. Meta-analysis of individual patient data from randomized trials: a review of methods used in practice. Clin Trials. 2005;2:209–217. doi: 10.1191/1740774505cn087oa.
12. Teunis T, Nota SP, Hornicek FJ, Schwab JH, Lozano-Calderon SA. Outcome after reconstruction of the proximal humerus for tumor resection: a systematic review. Clin Orthop Relat Res. 2014;472:2245–2253. doi: 10.1007/s11999-014-3474-4.
13. Vasilevsky NA, Brush MH, Paddock H, Ponting L, Tripathy SJ, Larocca GM, Haendel MA. On the reproducibility of science: unique identification of research resources in the biomedical literature. PeerJ. 2013;1:e148. doi: 10.7717/peerj.148.
14. Vines TH, Albert AY, Andrew RL, Debarre F, Bock DG, Franklin MT, Gilbert KJ, Moore JS, Renaut S, Rennison DJ. The availability of research data declines rapidly with article age. Curr Biol. 2014;24:94–97. doi: 10.1016/j.cub.2013.11.014.
15. Vines TH, Andrew RL, Bock DG, Franklin MT, Gilbert KJ, Kane NC, Moore JS, Moyers BT, Renaut S, Rennison DJ, Veen T, Yeaman S. Mandated data archiving greatly improves access to research data. FASEB J. 2013;27:1304–1308. doi: 10.1096/fj.12-218164.
16. Wicherts JM, Bakker M, Molenaar D. Willingness to share research data is related to the strength of the evidence and the quality of reporting of statistical results. PLoS One. 2011;6:e26828. doi: 10.1371/journal.pone.0026828.
17. Wicherts JM, Borsboom D, Kats J, Molenaar D. The poor availability of psychological research data for reanalysis. Am Psychol. 2006;61:726–728. doi: 10.1037/0003-066X.61.7.726.
18. Wren JD, Grissom JE, Conway T. E-mail decay rates among corresponding authors in MEDLINE: the ability to communicate with and request materials from authors is being eroded by the expiration of e-mail addresses. EMBO Rep. 2006;7:122–127. doi: 10.1038/sj.embor.7400631.