Abstract

Traditionally, epidemiologic methodologies have focused on measurement of exposures, outcomes, and program impact through reductionistic, yet complex, statistical modeling. Although not new to the field of epidemiology, two frameworks that provide epidemiologists with a foundation for understanding the complex contexts in which programs and policies are implemented were presented to maternal and child health (MCH) professionals at the 2012 co-hosted 18th Annual MCH Epidemiology Conference and 22nd CityMatCH Urban Leadership Conference. The complex systems approach offers researchers in MCH the opportunity to understand the functioning of social, medical, environmental, and behavioral factors within the context of implemented public health programs. Implementation science provides researchers with a framework to translate evidence-based program interventions into practices and policies that impact health outcomes. Both approaches offer MCH epidemiologists conceptual frameworks with which to re-envision how programs are implemented, monitored, evaluated, and reported to the larger public health audience. By using these approaches, researchers can begin to understand and measure the broader public health context, account for the dynamic interplay of the social environment, and, ultimately, develop more effective MCH programs and policies.

Keywords: Implementation science, Complex systems thinking, Quality assurance, Public health, Theory

Introduction

During the 2012 co-hosted 18th Annual Maternal and Child Health Epidemiology Conference and 22nd CityMatCH Urban Leadership Conference, the opening plenary session highlighted the potential application of two innovative areas of research in public health and the field of maternal and child health (MCH): complex systems thinking and implementation science. Although these approaches were not new to the field of epidemiology, they provided alternative frameworks for MCH professionals to consider when implementing new programs or modifying existing programs for diverse settings.

The following article summarizes and supplements the plenary session from the Conference by briefly describing each approach, discussing how both are innovative in the field of MCH, and suggesting that the two approaches are complementary tools which could be used to inform programmatic and policy work. The authors challenge MCH professionals to consider these tools in the design, planning, implementation, and evaluation of population-based programs to effectively meet the needs of MCH populations.

Complex Systems Thinking

Traditionally, epidemiologic methods have focused on isolating the “causal” health effect of a single factor or “exposure,” necessitating holding all other factors constant, so that the causal effect can be properly identified. By contrast, complex systems approaches focus on understanding the functioning of the system as a whole. The underlying rationale is that identifying the effect of a specific intervention on the system often requires understanding how the system as a whole functions. The effect of a given factor may often depend on the state of other factors in the system and be affected by feedback loops and dependencies. Understanding the fundamental relationships in the system, including interactions between individuals, between individuals and environments over time, and between social and biologic processes, is essential for distinguishing the factors, within their contexts, that adversely affect health. Understanding fundamental relationships is also essential for identifying appropriate intervention points to address these factors, and for anticipating the potential impact a new program will have when introduced into a specific community or “system.” Successful implementation of public health interventions requires an understanding of the environment (e.g. families, communities, schools, health care system) in which the target population lives and functions.

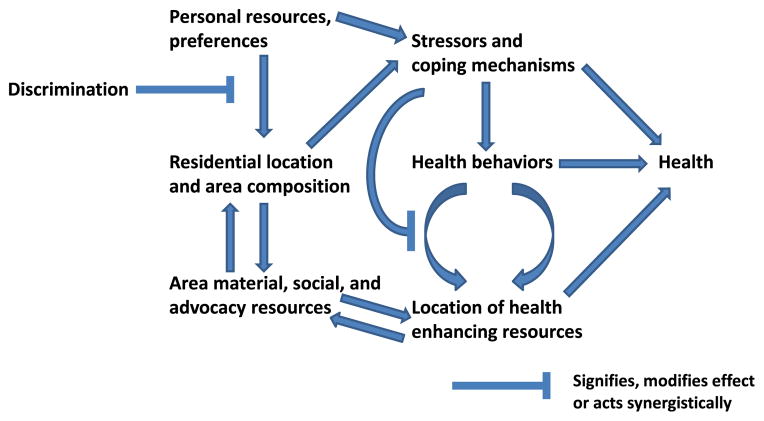

Programs and interventions have increased in complexity over time, and evaluating the impact of these multidimensional programs requires a broader framework than has traditionally been used [1, 2]. Complex systems thinking explicitly acknowledges the presence of multiple levels, feedback loops, and dependencies (such as individuals influencing and affecting each other). Multiple levels of influence, feedback loops, and dependencies result in the emergence of macro-level patterns [3]. A more thorough understanding of the dynamic relationships in the system [3] may help anticipate and monitor the effectiveness of a program or intervention over time. For example, estimating the plausible impact of a neighborhood-level intervention to increase physical activity may require consideration of feedback loops and dependencies. The physical environment (e.g. the availability of safe places to walk or exercise) may affect the physical activity levels of individuals. Additionally, individuals who are physically active may elect to reside in a neighborhood with walking or biking paths. The presence of many active residents in the neighborhood may encourage others to become active and may in turn trigger further increases in the number of sidewalks and walkable space to accommodate residents. In this example, initiatives to improve the physical environment could result in a reinforcing feedback loop that triggers further beneficial changes in the environment. Feedback loops may be reinforcing or buffering and may result in impacts on health outcomes that are smaller or larger than anticipated, as well as effects on other factors that could potentially influence health outcomes (Fig. 1) [4].

Fig. 1.

Dynamic relationships between area factors, personal factors, health behaviors, and health outcomes adapted from [4]

In addition to feedback loops, dependencies between individuals (another key component of systems) may also influence the potential impact of a program to improve health. Since MCH programs are necessarily implemented in social settings, the outcomes experienced by one individual may be dependent on or affected by the outcomes of another individual given preexisting behaviors, interactions, and relationships [3]. The impact of social media, or how behaviors or practices are adopted by groups of individuals using multiple modes of communication, is an example of a mechanism which generates dependence [2].

Current evaluation plans for MCH programs and interventions may lack appropriate consideration of the system in which these interventions are embedded. Identifying the key components of the system and the relationships, feedback loops, and dependencies fundamental to the system is an important first step in a systems approach. Various systems simulation tools can then be used to explore the functioning of the system, evaluate the plausible impact of different interventions, and assess how that impact may be modified by other factors or features of the system.
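To make the idea of exploring a system by simulation concrete, the following is a minimal sketch of the neighborhood physical activity example described above. It is a toy illustration, not a model from the plenary session or from the cited literature: the function name `simulate_feedback`, the update rules, and every parameter value (`env_effect`, `peer_effect`, `build_rate`, and the starting proportions) are hypothetical and are not estimated from data. The sketch assumes that walkable space and active neighbors both encourage inactive residents to become active, and that active residents in turn prompt the addition of walkable space.

```python
def simulate_feedback(steps=20, active=0.20, walkable=0.30,
                      env_effect=0.10, peer_effect=0.05, build_rate=0.05):
    """Iterate a toy two-variable neighborhood system.

    active   -- proportion of residents who are physically active (0 to 1)
    walkable -- proportion of the neighborhood that is walkable (0 to 1)
    All parameter values are hypothetical and chosen only for illustration.
    """
    history = [(active, walkable)]
    for _ in range(steps):
        # Walkable space and active peers both move inactive residents
        # toward activity (environment effect plus dependence between people).
        uptake = (env_effect * walkable + peer_effect * active) * (1.0 - active)
        # Active residents create demand for more walkable space,
        # which closes the reinforcing feedback loop.
        growth = build_rate * active * (1.0 - walkable)
        active = active + uptake
        walkable = walkable + growth
        history.append((active, walkable))
    return history


if __name__ == "__main__":
    # Compare the same starting point with and without the feedback
    # from active residents to the physical environment.
    no_loop = simulate_feedback(build_rate=0.0)
    with_loop = simulate_feedback(build_rate=0.05)
    print("Final proportion active, no feedback:   %.3f" % no_loop[-1][0])
    print("Final proportion active, with feedback: %.3f" % with_loop[-1][0])
```

Setting `build_rate` to zero switches off the loop from residents back to the environment, so comparing the two runs gives a rough sense of how much of the projected change in activity depends on the feedback rather than on the initial environment alone. A realistic analysis would require empirically grounded parameters and, most likely, an agent-based or system dynamics tool of the kind discussed in [1].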

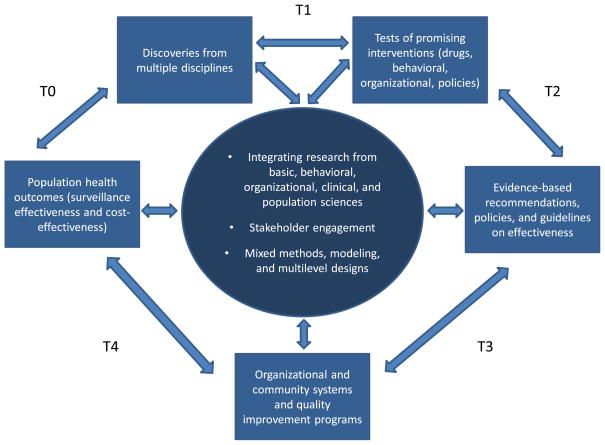

Implementation Science

The term ‘implementation science’ is defined as the communication of evidence-based, effective approaches to effect change in clinical or programmatic practice, to ultimately impact health outcomes [5]. Implementation science supports researchers and scientists in understanding the processes that can successfully integrate interventions and health care information within community or clinical settings [6]. Implementation science can be contextualized as part of a broader translational research pathway, which extends from the discovery of new scientific information to the testing of interventions that can arise from that discovery, and then to movement through systems, policy, and improvement in population health [7]. Implementation science largely focuses on the translation of interventions into practice and policy (represented by T3 and T4 in Fig. 2), reflecting interpretation of both positive and negative results, and identifying successful and unsuccessful approaches that contribute to development of recommendations and guidelines. Furthermore, translation and interpretation in implementation science are expected to provide evidence to guide future advances at each phase. In doing so, implementation science as a discipline supports identification of attributes that allow for iterative changes and adaptation to impact health outcomes.

Fig. 2.

The process of moving scientific knowledge to practice and policy (adapted from [7] and [16])

Understanding the implementation of evidence-based approaches includes assessing the feasibility, acceptability, uptake, cost, and sustainability of health programs, including the influence on patients, practitioners, and systems. Research on implementation often involves integrating multiple sciences, including behavioral, organizational, clinical, and public health; fully engaging participating stakeholders in the process; and applying mixed methodologies, models, and multilevel designs in analyses.

The ultimate success of implementation science is strongly determined in the initial phases of scientific discovery. Useful evidence can first be generated by research in multiple health-related disciplines including medicine, public health, biology, chemistry, and other natural sciences (T0). The first phase of translation includes testing the new evidence in the form of clinical, therapeutic, behavioral, social, or policy interventions aimed at reducing a poor health outcome or promoting healthier choices (T1). In the second phase, researchers assess the impact of the interventions on outcomes to determine whether significant change is produced (T2). If so, recommendations or guidelines may be developed by national organizations, agencies, or panels. In the field of MCH, examples of these groups include the Secretary’s Advisory Committee on Infant Mortality (SACIM), the American Academy of Pediatrics (AAP) Committee on the Fetus and Newborn, and the American College of Obstetricians and Gynecologists (ACOG) Committee on Obstetric Practice [8–10].

Implementation science, recently referred to as “T3” or “T4” translational research, is concerned with contextualizing advances and measuring the uptake, adoption, and adaptation of guidelines and recommendations into practice and policy. Specifically, the planned sustainability of evidence-based guidelines and recommendations is of particular focus, beyond understanding costs, barriers encountered, and progress made during implementation. The third phase includes research to increase the adoption of evidence-based approaches into practice and policy (T3). The last phase of the process is evaluation of the approaches in real-world settings (T4). The evaluation may include testing differences between interventions when applied to divergent populations, the impact of cost on implementation and adherence to the guidelines or recommendations, and comparisons of outcomes between multiple settings using the same approach. One example of an ongoing implementation research project is an iterative, participatory study, ‘My Own Health Report’ (MOHR), which recruited nine primary care practices, including stakeholder groups, partners, and patients, to test a process for implementing behavioral health screening instruments within typical practice. The study focused on determining the extent to which these instruments could be used to provide valuable information to shape provider and patient decision-making. The MOHR trial participants have continued to provide input on the study design, content, measures, goals, factors, cost, and the impact of behavioral health assessments on their practice [11]. The goal of early engagement of all participants is to ensure long-term sustainability of the project and active participation by patients over time. Such continued engagement of participants for the duration of the trial has allowed the program implementers to modify or re-direct program services while maintaining fidelity to the core model.

Implementation science allows researchers to question traditional assumptions often reinforced in the published literature. For example, health professionals and practitioners may interpret the application of guidelines and recommendations as a static process, or assume that the evidence base, once published, is set and therefore resistant to new findings or external effects. These assumptions are exemplified by the view that, among programs or intervention sites, fidelity to the program structure and model is more important than the adaptability of the program framework to different populations in different settings. Others may assume in the context of research studies that health systems are unchanging over time or that implementing a new program within a given setting will result in immediate change. When a new program or intervention is initially implemented, researchers may expect the effect in the target population to be greatest at the outset and then to diminish over time rather than stabilize or gain momentum. As a result, researchers may miss opportunities to improve, redirect, or modify the program model over time, instead assuming that all changes will have negative consequences [12]. Finally, often unquestioned are the assumptions that intervention or target populations are homogeneous, and that ending or choosing not to implement an intervention as originally designed is a poor choice. Implementation science provides the framework to question these assumptions, and findings can lead to better integration of evidence-based interventions, better organization of care, and ultimately better health outcomes.

Complex Systems Thinking and Implementation Science: Two Complementary Approaches with the Potential to Impact the Field of MCH

Both complex systems thinking and implementation science are innovative and complementary approaches that, when adopted by the field of MCH, may improve the development and evaluation of current and/or newly developed programs. Complex systems thinking offers the opportunity for MCH professionals to better account for the dynamic processes through which exposures, interventions, and policies influence health, by considering multiple levels of influence, interdependencies, feedback loops, and nonlinearities when planning, implementing, and evaluating programs. Additionally, complex systems thinking and tools can provide insight into how factors functioning at various levels (e.g. discrimination, access to care, neighborhood stressors, and policies regarding maternity leave) jointly operate to generate macro-level patterns such as disparities in maternal and birth outcomes. By comparison, implementation science provides a conceptual framework for translating evidence-based approaches to be applied in public health programs, and the translation of evidence-based research to address macro-level issues such as disparity is paramount to moving the field of MCH forward. For example, understanding how the AAP standard terminology for fetal, infant, and perinatal deaths is operationalized and implemented by hospital and state regulatory systems will impact clinical care and may affect disparate rates of neonatal complications and death [13].

In order to introduce, refine, and sustain evidence-based programs successfully for improving the health of MCH populations, the MCH community must have a thorough understanding of both the systems in which MCH populations live and the complicated delivery systems within which programs are implemented. If researchers or scientists utilize this understanding, the effects of new programs in the population can be better anticipated, the processes used to deliver the program can be continuously monitored and improved, and when a program has unanticipated effects, appropriate changes can be implemented. Examples of programmatic areas in MCH that currently use or are poised to use these approaches include Perinatal Quality Collaboratives, State Infant Mortality Initiatives, State Fetal and Infant Mortality Reviews, State Maternal Mortality Reviews, and other MCH systems-based collaborations including the Collaborative Improvement and Innovation Network (CoIIN) to Reduce Infant Mortality and the Maternal, Infant, and Early Childhood Home Visiting (MIECHV) Program [14, 15]. Though emphasizing different levels of measurement, each approach adds value to the methodological development, implementation, and evaluation of MCH programs.

Challenging the MCH Field to Think Broadly

With both the complex systems thinking and implementation science approaches, MCH professionals have additional tools with which to consider broadening program impact. While MCH professionals may not utilize all aspects of these approaches, awareness can help these professionals in developing conceptual models of MCH outcomes that consider the complexities of the environments in which MCH populations live, and the complicated delivery systems within which MCH programs are implemented. For example, the data, or the capacity to work with those data, needed to perform agent-based modeling to test hypotheses about systems [1] may not be available to MCH epidemiologists in public health agencies, but such modeling could provide critical information to program directors during the planning, implementation, and evaluation of MCH programs. In an era of ‘return on investment,’ re-prioritization of resources, and justification of the cost to benefit ratio, understanding the dynamic interplay between individuals and systems that MCH programs target is vital for making programs as effective and efficient as possible. Additionally, it is important to understand that fidelity to a model does not necessarily mean implementing the MCH program in the same way in every place, at every time, and that adaptation to best fit a population or geographic region improves the chances of program sustainability and success. With these approaches, the uncertainty involved in assessing program effectiveness in ‘real world’ settings can be reduced, and the comparability of outcomes between programs implemented in population-based settings and rigorously controlled trials can be addressed. Using these two complementary approaches, MCH professionals can maximize program benefit and reach.

By considering such innovative approaches during program planning, implementation, and evaluation, the way in which MCH-related issues are addressed by professionals may change, and the MCH field will benefit from continuing to build its evidence base to support well-defined programs and interventions aimed at impacting population health.

Footnotes

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention, Department of Health and Human Services, or the National Institute of Mental Health, National Institutes of Health.

Contributor Information

Charlan D. Kroelinger, Email: ckroelinger@cdc.gov, Division of Reproductive Health, National Center for Chronic Disease Prevention and Health Promotion, Centers for Disease Control and Prevention, 4770 Buford Hwy NE, MS F-74, Chamblee, GA 30341, USA

Kristin M. Rankin, Division of Epidemiology and Biostatistics, School of Public Health, University of Illinois at Chicago, Chicago, IL, USA

David A. Chambers, Division of Services and Intervention Research, National Institute of Mental Health, National Institutes of Health, Bethesda, MD, USA

Ana V. Diez Roux, Department of Epidemiology, School of Public Health, University of Michigan, Ann Arbor, MI, USA

Karen Hughes, Division of Family and Community Health Services, Ohio Department of Health, Columbus, OH, USA

Violanda Grigorescu, Division of Reproductive Health, National Center for Chronic Disease Prevention and Health Promotion, Centers for Disease Control and Prevention, 4770 Buford Hwy NE, MS F-74, Chamblee, GA 30341, USA

References

- 1. Auchincloss AH, Diez Roux AV. A new tool for epidemiology: The usefulness of dynamic-agent models in understanding place effects on health. American Journal of Epidemiology. 2008;168:1–8. doi: 10.1093/aje/kwn118.

- 2. Diez Roux AV. Next steps in understanding the multilevel determinants of health. Journal of Epidemiology and Community Health. 2008;62:957–959. doi: 10.1136/jech.2007.064311.

- 3. Diez Roux AV. Complex systems thinking and current impasses in health disparities research. American Journal of Public Health. 2011;101(9):1627–1634. doi: 10.2105/AJPH.2011.300149.

- 4. 18th Annual Maternal and Child Health Epidemiology (MCH Epi) Conference–Advancing partnerships: Data, practice, and policy. Plenary sessions: Plenary I–Using the principles of complex systems thinking and implementation science to enhance MCH program planning and delivery [cited 21 October 2013]. http://learning.mchb.hrsa.gov/conferencearchives/epi2012/

- 5. Proctor EK, Landsverk J, Aarons G, et al. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- 6. Rabin BA, Brownson RC, Haire-Joshu D, et al. A glossary for dissemination and implementation research in health. Journal of Public Health Management and Practice. 2008;14(2):117–123. doi: 10.1097/01.PHH.0000311888.06252.bb.

- 7. Glasgow RE, Vinson CA, Chambers DA, et al. National Institutes of Health approaches to dissemination and implementation science: Current and future directions. American Journal of Public Health. 2012;102(7):1274–1281. doi: 10.2105/AJPH.2012.300755.

- 8. Secretary’s Advisory Committee on Infant Mortality [cited 4 September 2013]. http://www.hrsa.gov/advisorycommittees/mchbadvisory/InfantMortality/index.html.

- 9. Committee on Fetus and Newborn [cited 4 September 2013]. http://www.aap.org/en-us/about-the-aap/Committees-Councils-Sections/Pages/Committee-on-Fetus-and-Newborn.aspx.

- 10. Committees and Councils [cited 4 September 2013]. http://www.acog.org/About_ACOG/ACOG_Departments/Committees_and_Councils.

- 11. Krist AH, Glenn BA, Glasgow RE, et al. Designing a valid randomized pragmatic primary care implementation trial: The My Own Health Report (MOHR) project. Implementation Science. 2013;8:73–88. doi: 10.1186/1748-5908-8-73.

- 12. Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science. 2013;8(1):117. doi: 10.1186/1748-5908-8-117.

- 13. Barfield WD. Standard terminology for fetal, infant, and perinatal deaths. Pediatrics. 2011;128(1):177–181. doi: 10.1542/peds.2011-1037.

- 14. Collaborative Improvement and Innovation Network to Reduce Infant Mortality [cited 4 September 2013]. http://mchb.hrsa.gov/infantmortality/coiin/

- 15. Maternal, Infant, and Early Childhood Home Visiting Program [cited 4 September 2013]. http://mchb.hrsa.gov/programs/homevisiting/

- 16. Khoury MJ, Gwinn M, Ioannidis JPA. The emergence of translational epidemiology: From scientific discovery to population health impact. American Journal of Epidemiology. 2010;172:517–524. doi: 10.1093/aje/kwq211.