Abstract

Human faces are fundamentally dynamic, but experimental investigations of face perception traditionally rely on static images of faces. While naturalistic videos of actors have been used with success in some contexts, much research in neuroscience and psychophysics demands carefully controlled stimuli. In this paper, we describe a novel set of computer-generated dynamic face stimuli. These grayscale faces are tightly controlled for low- and high-level visual properties. All faces are standardized in terms of size, luminance, and the location and size of facial features. Each face begins with a neutral pose and transitions to an expression over the course of 30 frames. Altogether there are 222 stimuli spanning 3 categories of movement: (1) an affective movement (fearful face); (2) a neutral movement (close-lipped, puffed cheeks with open eyes); and (3) a biologically impossible movement (upward dislocation of eyes and mouth). To determine whether early brain responses sensitive to low-level visual features differed between expressions, we measured the occipital P100 event-related potential (ERP), which is known to reflect differences in early stages of visual processing, and the N170, which reflects structural encoding of faces. We found no differences between faces at the P100, indicating that the face categories were well matched on low-level image properties. This database provides researchers with a well-controlled set of dynamic faces, matched on low-level image characteristics, that is applicable to a range of research questions in social perception.

Faces are the primary source of visual social information, and they garner selective attention from very early in human life (Frank, Vul, & Johnson, 2009). Much cognitive and neuroscience research has established that faces are processed differently from other objects (Tsao & Livingstone, 2008). Humans are more attentive to faces than to other visual stimuli (Bindemann, Burton, Hooge, Jenkins, & De Haan, 2005), as evidenced by overt measures of visual attention, such as looking time (Birmingham, Bischof, & Kingstone, 2008), and covert measures of processing and perception, such as electroencephalography (EEG) (Bentin, Allison, Puce, Perez, & McCarthy, 1996). Moreover, because face perception has a prolonged maturational course, research into face perception offers insights into cognitive development. Since face expertise emerges gradually, it is vulnerable to developmental disruption, and its study can offer insights into atypical brain development even in children as young as six months. Finally, multiple clinical disorders (e.g., autism, schizophrenia, and depression) are associated with varying levels of difficulty in social function (Horley, Williams, Gonsalvez, & Gordon, 2004; Klin, Jones, Schultz, Volkmar, & Cohen, 2002; Sasson et al., 2007), and these difficulties are associated with problems in face perception (Edwards, Jackson, & Pattison, 2002; J. C. McPartland et al., 2011). Thus, from a clinical and developmental perspective, face perception is a valuable domain of research.

Faces are fundamentally dynamic. While it is possible to determine gender, emotion, or identity from a static image of a face, faces are continually animate in everyday experience. However, prior research in face perception has been almost entirely reliant on static images of faces. In this paper, we introduce a novel stimulus set of animated photorealistic computer-generated faces. These stimuli were developed for neuroscience research in dynamic and interactive face processing, and they are stringently controlled for low- and high-level visual characteristics, e.g., luminance and speed of movement. These animations are consistent with human musculoskeletal characteristics and exhibit three movements: fear, puffed cheeks, and a biologically impossible motion¹. These stimuli will allow for more ecologically valid assays of face perception while maintaining the careful control of visual characteristics necessary for perceptual and psychophysiological research.

This stimulus set addresses several challenges associated with stimuli used in face perception research. It is difficult to obtain realistic emotional expressions from untrained individuals (Gosselin, Perron, & Beaupre, 2010). In addition, obtaining large stimulus sets while controlling for age, sex, and ethnicity is resource intensive. Photographs must also be standardized for both low-level visual properties, such as luminance and size, and high-level properties, such as pose and expression. Computer-generated faces allow finer control of both low-level perceptual and high-level expressive aspects of the stimuli.

Variability in low-level image properties (e.g., luminance and size) can introduce a confound in face perception research (Rousselet & Pernet, 2011a). Properties such as contrast, texture, and luminance affect face-selective indices of neural processing in both EEG and fMRI investigations (Rousselet & Pernet, 2011b; Yue, Cassidy, Devaney, Holt, & Tootell, 2011), obscuring face-sensitive brain activity. This sensitivity of the visual system to low-level features, both in general and in face-specific processing, highlights the need for careful stimulus control to avoid spurious effects driven by irrelevant aspects of the stimuli. Controlling these low-level features allows for more accurate appraisal of experimental effects of higher-level differences such as expression.

Controlling for high-level image features imposes an additional set of constraints. Despite the universality of face structure (i.e., two eyes, a nose, and a mouth), there is substantial variability among photographs of faces. Faces themselves differ in size and shape, and these differences can persist even after careful manipulation of images. While this variability in size and shape is characteristic of natural faces, it can introduce a confounding dimension in an experimental context. In addition to controlling the composition of the image, photographs must be taken under identical conditions (e.g., lighting and pose), and a model posing multiple expressions must keep the head still, lest the entire image change between expressions (Tolles, 2008).

Because of the difficulties inherent in finding photographic stimuli that meet the aforementioned constraints, an increasing number of face processing studies have employed computer-generated faces. Computer-generated faces show comparable results to real faces in behavioral and EEG research (Balas & Nelson, 2010; Freeman, Pauker, Apfelbaum, & Ambady, 2010; Lindsen, Jones, Shimojo, & Bhattacharya, 2010), and a recently released database of static computer-generated faces was used to replicate experimental findings of other-race and face inversion effects (Matheson & McMullen, 2011). The stimulus set we present here is a novel contribution to the field, addressing two currently unmet needs by (1) introducing movement and (2) contributing both emotional and non-emotional facial movements and poses while controlling for luminance, movement speed, size, and quality of expression.

Stimuli Description

The set is composed of 222 image sequences in the Portable Network Graphics (PNG) format, representing 177 unique identities. Each identity shows one to three expressions (fear, puffed cheeks, and impossible movement); not all faces show all three expressions. Because our processing pipeline involved specific modifications to the structure of individual faces, the unique properties of some faces produced an unusual appearance during certain expressions (e.g., some facial features were stretched or crimped in odd-looking ways); these faces were removed from the dataset. For each expression there are 2 sets of luminance-matched image sequences: in the first, the first frame of each sequence is luminance matched, and in the second, the final frame of each sequence is luminance matched. This allows the images to be presented forward or backward with equivalent luminance, a process described in detail below. These images are designed to be presented as 500 ms clips in which each image is shown for approximately 16.7 ms (one frame at 60 Hz), a rate commensurate with the refresh rate of most LCD displays. Appendix 1 contains instructions for generating animations from the stimulus image files.
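As a quick check on the timing stated above, the arithmetic below confirms that 30 frames at a 60 Hz refresh rate give a 500 ms clip. It is a minimal sketch; the helper `playback_order` is hypothetical and simply lists frame indices for forward playback (neutral to expression) or reverse playback, which should use the set matched on the final frame.

```python
REFRESH_HZ = 60   # refresh rate of a typical LCD display
N_FRAMES = 30     # frames per stimulus sequence

frame_ms = 1000 / REFRESH_HZ    # duration of one frame (~16.7 ms)
clip_ms = N_FRAMES * frame_ms   # total clip duration


def playback_order(n_frames=N_FRAMES, reverse=False):
    """1-based frame indices for forward (neutral -> expression) or reverse playback."""
    order = list(range(1, n_frames + 1))
    return order[::-1] if reverse else order


print(f"{frame_ms:.2f} ms per frame, {clip_ms:.0f} ms per clip")
print(playback_order(reverse=True)[:3])  # reverse playback starts at frame 30
```

On a display running at a different refresh rate, the same 30 frames would no longer last 500 ms, so the refresh rate is worth confirming before presentation.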

Standardization of Stimuli

Standardization of luminance

Luminance was defined as a pixel value between 0 and 255, with 0 being completely black and 255 being completely white. Luminance was controlled over the entire animation using the following steps: (1) A baseline average luminance corresponding to a pixel value of 145 was chosen. (2) For every sequence, the difference between the average luminance of the first frame and this baseline was calculated; average luminance was computed over the oval region containing the internal features of the face. (3) This difference was subtracted from the first frame, so that every first frame had the same average luminance. (4) The same difference (calculated in step 2) was then subtracted from frames 2 through 30 of that sequence. Thus, the remaining frames were not individually matched to the baseline; instead, they received the same offset as the initial frame, so the resulting animation maintains the impression of constant lighting across all frames. (5) This entire procedure was repeated for sequences in reverse, i.e., the luminance difference was calculated from the final frame in the sequence and applied to frames 29 through 1. This supports presentation of matched sequences in reverse, i.e., with faces transitioning from expression to neutral. Because only the starting frame (frame 1 for forward sequences, frame 30 for reverse sequences) is matched, average luminance changes slightly over the course of a sequence, and this change differs slightly between forward and reverse sequences. Matching on a single frame, however, preserves the appearance of consistent lighting on the face.
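The five steps amount to a single additive offset per sequence: if m is the mean pixel value of the anchor frame inside the oval, every frame F_t becomes F_t - (m - 145). The sketch below illustrates this under stated assumptions. The ellipse geometry, the function names, and the clipping to 0-255 are hypothetical choices for illustration, not the authors' pipeline, and the usage runs on random synthetic frames rather than the real stimuli.

```python
import numpy as np

BASELINE = 145.0  # target mean pixel value (0 = black, 255 = white), from the text


def oval_mask(shape, center, radii):
    """True inside an axis-aligned ellipse; center and radii (in pixels) are hypothetical."""
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    return ((rows - center[0]) / radii[0]) ** 2 + ((cols - center[1]) / radii[1]) ** 2 <= 1.0


def match_sequence(frames, mask, baseline=BASELINE, reverse=False):
    """Apply one luminance offset to a whole grayscale sequence.

    frames: array of shape (n_frames, height, width) with values in 0-255.
    The anchor frame is the first frame (forward set) or the last frame (reverse set).
    """
    frames = np.asarray(frames, dtype=float)
    anchor = frames[-1] if reverse else frames[0]
    offset = anchor[mask].mean() - baseline  # step (2): anchor mean minus baseline
    shifted = frames - offset                # steps (3)-(4): same offset for every frame
    # Assumption: clip to the 8-bit range. The text does not say how out-of-range values
    # were handled; if clipping occurs, the anchor mean will no longer equal the baseline exactly.
    return np.clip(shifted, 0, 255)


# Usage on synthetic data (not real stimuli): 30 random 64 x 48 frames.
rng = np.random.default_rng(0)
frames = rng.uniform(80, 200, size=(30, 64, 48))
mask = oval_mask((64, 48), center=(32, 24), radii=(28, 20))
forward = match_sequence(frames, mask)
backward = match_sequence(frames, mask, reverse=True)
```

Because one constant is subtracted from every frame, frame-to-frame luminance changes inside a sequence are preserved exactly, which is why the animation keeps the appearance of constant lighting.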

Standardization of movement speed

To avoid confounds among conditions in the pacing of movement and to maintain consistency with patterns of human movement, we constrained the movement in three ways: (1) The movements of all regions of the face are simultaneous, resulting in uniform movement timing across the entire face. (2) The movements follow a smooth (sigmoidal) pattern of acceleration: movement speed is maximal at the midpoint of the movement and minimal at the start and finish. This pattern is consistent with biological motion in that there are no abrupt changes in velocity (Wann, Nimmo-Smith, & Wing, 1988). (3) The duration of each movement condition is identical: 500 ms across 30 frames at 60 Hz. The simultaneous movement of all facial features was a deliberate choice to ensure standardization across expressions. Recent research suggests that the temporal dynamics of different facial features differ across emotions (Jack, Garrod, & Schyns, 2014); because this set contains only one affective expression, temporal dynamics were constrained to ensure comparability across expressions.
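The sigmoidal timing constraint can be illustrated with an easing function. This sketch uses the cubic "smoothstep" curve 3t^2 - 2t^3, whose velocity 6t(1 - t) is zero at both ends and largest at the midpoint. That curve is an assumption chosen for illustration; the exact easing used in the original animation software is not reported. The controller endpoint of 0.8 is likewise hypothetical.

```python
import numpy as np

N_FRAMES = 30


def smoothstep_weights(n_frames=N_FRAMES):
    """Progress values from 0 (neutral, frame 1) to 1 (full expression, frame 30).

    Illustrative choice: cubic smoothstep, one of many sigmoid-shaped easing curves.
    """
    t = np.linspace(0.0, 1.0, n_frames)
    return 3 * t**2 - 2 * t**3


def interpolate(neutral, target, weights):
    """Per-frame values for one controller, easing from its neutral to its target value."""
    return neutral + weights * (target - neutral)


w = smoothstep_weights()
step = np.diff(w)                      # per-frame displacement, proportional to speed
print(int(np.argmax(step)))            # → 14 (the interval centred on the midpoint)
eyelid = interpolate(0.0, 0.8, w)      # hypothetical controller endpoint of 0.8
```

Applying the same weights to every facial controller also satisfies constraint (1): all regions start, peak in speed, and stop on the same frames.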

Standardization of expressions

The degree of movement within conditions was further controlled to reduce unwanted variability. A series of poses was developed for each facial movement. Each pose specified the endpoint of the movement for each facial region, e.g., the height of the eyelid at the end of the fear expression. These poses were applied to each face to generate the movements. This allowed movements to be uniform across faces while maintaining the unique shape and texture of each face, and it enabled us to control movement in two key ways: (1) The expressions were matched in terms of variability within expressions, i.e., there was no more variability in one set of expressions than in another. (2) Each sequence entailed movement across the upper and lower regions of the face. This ensured that participants would see movement regardless of their point of gaze on the face during the animation.
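The logic of applying one endpoint pose to every identity can be sketched as follows. All controller names, region labels, endpoint values, and identity labels here are hypothetical placeholders, not actual FaceRobot controls or the values used for this set. The sketch shows two properties described above: every identity receives an identical controller trajectory, and a pose can be checked for movement in both the upper and lower face.

```python
import numpy as np

N_FRAMES = 30

# Hypothetical controller names, regions, and endpoint values for illustration only.
REGION = {"brow_raise": "upper", "upper_lid_raise": "upper", "lip_stretch": "lower"}
FEAR_POSE = {"brow_raise": 1.0, "upper_lid_raise": 0.9, "lip_stretch": 0.7}


def progress(n_frames=N_FRAMES):
    """Sigmoid-shaped progress from 0 (neutral) to 1 (endpoint pose)."""
    t = np.linspace(0.0, 1.0, n_frames)
    return 3 * t**2 - 2 * t**3


def animate(pose, n_frames=N_FRAMES):
    """Per-frame controller values: each controller eases from 0 to its endpoint."""
    w = progress(n_frames)
    return {name: w * end for name, end in pose.items()}


def moves_upper_and_lower(pose):
    """Check constraint (2): the pose moves both the upper and the lower face."""
    regions = {REGION[name] for name, end in pose.items() if end != 0}
    return {"upper", "lower"} <= regions


# The same endpoint pose drives every identity's rig, so controller trajectories
# are identical across faces; only each face's own shape and texture differ.
identities = ["face_001", "face_002", "face_003"]  # hypothetical identity labels
tracks = {face: animate(FEAR_POSE) for face in identities}
```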

Standardization of size

The use of a single three-dimensional head model ensured consistency in the size of the faces and in the location and size of facial regions. This uniform size allowed the same mask to be applied to every face so that only the inner region of the face is shown (Gronenschild, Smeets, Vuurman, van Boxtel, & Jolles, 2009). For the current set, the mask hides the fact that these faces have no hair, renders them consistent with common photographic stimulus sets, and ensures that movements such as puffed cheeks do not change the size of the face on the screen.
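Because every face shares one head model, a single mask can be reused across the whole set. A minimal sketch with NumPy follows, assuming an axis-aligned elliptical mask. The ellipse center and radii and the background gray level of 128 are hypothetical; the article specifies only a uniform gray background.

```python
import numpy as np


def apply_oval_mask(image, center, radii, background=128):
    """Keep pixels inside an axis-aligned ellipse and replace the rest with a uniform gray.

    center=(row, col) and radii=(row_radius, col_radius) are hypothetical pixel values;
    with a shared head model, one set of values could be reused for every image.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = np.ogrid[:img.shape[0], :img.shape[1]]
    inside = ((rows - center[0]) / radii[0]) ** 2 + ((cols - center[1]) / radii[1]) ** 2 <= 1.0
    return np.where(inside, img, background)


# Usage on a synthetic 100 x 80 grayscale image (not a real stimulus).
face = np.full((100, 80), 200.0)
masked = apply_oval_mask(face, center=(50, 40), radii=(45, 35))
```

Masking after luminance matching, rather than before, would change which pixels contribute to any whole-image mean, so the order of these steps matters if luminance is later re-checked on the final images.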

Generating individual faces

Each unique identity in the stimulus set was generated using FaceGen software (Singular Inversions, 2010). FaceGen uses the approach described in Blanz & Vetter (1999) to parameterize and generate three-dimensional faces of varying age, race, and gender; similar faces are currently used in another recently published stimulus set of faces (Matheson & McMullen, 2011). These faces were then exported as polygon meshes, collections of connected points in a three-dimensional space that represent the shape of the face and head. This mesh formed the basic shape of each individual face and the neutral pose for this face. Polygon meshes were then imported into the Softimage software (Autodesk, 2011) and rigged using the FaceRobot tool. Rigging is the process of assigning a set of parameterized controllers for individual regions of the face while ensuring that deformations on one part of the face are congruent with other regions of the face (e.g., when the right eyebrow is raised the skin around the brows deforms differently depending on whether the eye is closed or the other eyebrow is raised). Additionally, FaceRobot was used to incorporate realistic eyes and teeth into the model. The facial rigs were then used to generate the three expressions used in this data set: fear, puffed cheeks, and impossible movement.

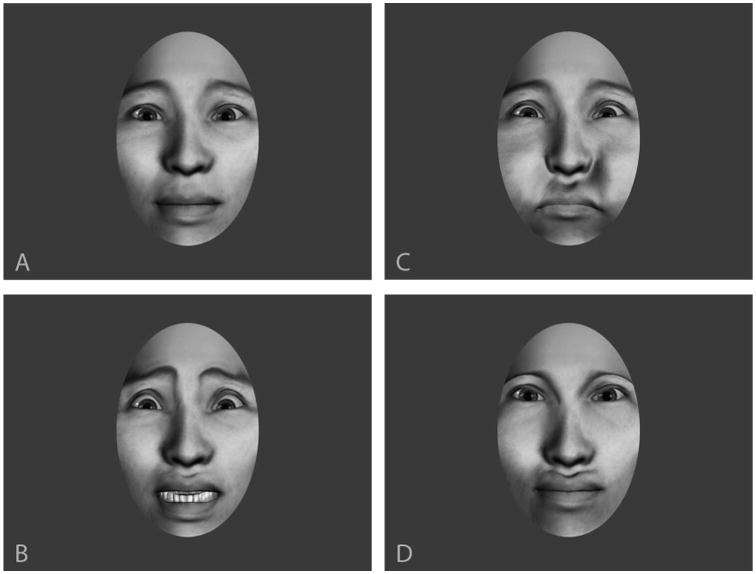

The expressions in this stimulus set were chosen to maximize conceptual versatility across a finite set of conditions. The three movements (a fear expression, a neutral movement, and a disarrangement of facial features) are intended to span movement patterns that are affective versus neutral and biologically possible versus impossible, factors of focal interest in social neuroscience research. All expressions are shown in Figure 1.

Figure 1.

Facial expressions: A, neutral; B, fear; C, puffed cheeks; D, impossible dislocation of eyes and mouth. Note that, although the final pose appears to be an unusual but possible face configuration, viewers clearly perceive the movements themselves as impossible.

Affective movement

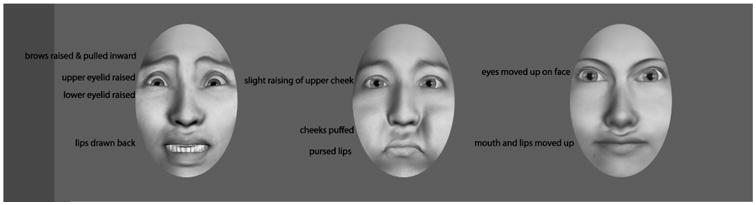

Fear was chosen because it is a universally identifiable emotion (P. Ekman & Friesen, 1971), is expressed in both the upper and lower regions of the face, and is the focus of considerable neuroscience research. Emotions indicating a threat, such as fear, are detected more rapidly (Belinda et al., 2005), engage face-specific neural circuitry earlier than other emotions (Blau, Maurer, Tottenham, & McCandliss, 2007), and are thought to also activate a subcortical route for face and emotion recognition (Vuilleumier, Armony, Driver, & Dolan, 2003). Fear expressions were based on the Ekman and Friesen descriptions of emotions (Paul Ekman & Friesen, 1975). Specifically, the lips are drawn and pulled back, the brows are raised and pulled outward, the lower eyelid is slightly pulled up, and the upper eyelid is raised, exposing significantly more sclera above the iris (see Figure 2).

Figure 2.

Fear, puffed cheek, and impossible expressions.

Neutral movement

For the comparison condition to fear, we chose puffed cheeks because they are not emotional but still represent a familiar facial movement. To generate the expression, we extended the cheeks beyond the boundaries of the face, pursed the lips, and slightly closed the jaw. Additionally, the eyes opened slightly, resulting in constriction of the skin around the eyes. The eye manipulations were incorporated to make the motion more realistic and to ensure that facial motion occurred in both the lower and upper regions of the face.

Impossible movement

Impossible movement was included as a contrast for both fear and puffed cheeks. This expression offers a control condition for both the dynamics of facial movement and familiarity with facial expressions. The resulting “impossible” faces involve an upward dislocation of the eyes and mouth while maintaining the integrity of the face structure. The tissue around the mouth and eyes deforms upward and the bridge of the nose is stretched to accommodate the change. The impossible motion was designed to mimic a change of the face that incorporated the same soft tissue deformations as possible motions but with changes that are impossible given the structure of the human face.

ERP Validation

As these stimuli were developed for electrophysiological research in face perception, we ran a validation sample to verify that the expressions did not differ on low-level image properties that might confound findings. In line with recent research addressing the contribution of low-level image properties to face perception (Rossion & Caharel, 2011; Rousselet & Pernet, 2011b), we compared P1 amplitude and latency to static faces in each condition (neutral, fear, puff, and impossible). Differences among stimulus classes at the occipital P1 have been interpreted as evidence of low-level visual differences (e.g., contrast and luminance) rather than high-level differences (e.g., expression and identity). We also analyzed the face-sensitive N170, a negative-going deflection in the EEG following the P1. There is some evidence that the N170 is modulated by fearful expressions (Blau et al., 2007), but these findings are not universal (Eimer & Holmes, 2002; Holmes, Vuilleumier, & Eimer, 2003).

Twenty-nine participants (15 male, mean age 22.4; one participant did not report age) were recruited from the University of Washington and Yale University communities. All procedures were conducted with the understanding and written consent of participants and with approval of the Human Investigation Committee at the Yale School of Medicine, consistent with the 1964 Declaration of Helsinki. Stimuli consisted of 210 distinct grayscale faces from the current stimulus set (70 fear, 70 puffed, 70 impossible movement). All stimuli were presented in frontal view and at a standardized viewing size (10.2° by 6.4°) on a uniform gray background. Stimuli were presented on an 18-inch color monitor (60 Hz, 1024 × 768 resolution) using E-Prime 2 software (Schneider, Eschman, & Zuccolotto, 2002) at a viewing distance of 72 cm in a sound-attenuated room with low ambient illumination. EEG was recorded continuously at 250 Hz using NetStation 4.3. A 128-electrode net was fitted on the participant's head according to the manufacturer's specifications (Tucker, 1993). Data were filtered online with a 0.1 to 200 Hz band-pass filter. Impedances were kept below 40 kΩ. Participants at Yale University wore a 128-electrode Hydrocel Geodesic Sensor Net, and participants at the University of Washington wore a 128-electrode Geodesic Sensor Net. Although the nets differ slightly in layout, the electrodes of interest were comparable across both nets in terms of standard 10-20 system positions. Specific electrode numbers were 89, 90, 91, 94, 95, 96 (right hemisphere) and 58, 59, 64, 65, 68, 69 (left hemisphere) for the Hydrocel net and 90, 91, 92, 95, 96, 97 (right hemisphere) and 58, 59, 64, 65, 69, 70 (left hemisphere) for the Geodesic net.
The regions subtended by these electrodes correspond to the occipitotemporal recording sites that are measured using electrodes T5 and T6 in the 10-20 system and have been used successfully in prior studies of face processing (McPartland, Cheung, Perszyk, & Mayes, 2010; McPartland, Dawson, Webb, Panagiotides, & Carver, 2004).
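The reported viewing size can be converted into physical and on-screen dimensions with the standard visual-angle relation size = 2 · d · tan(θ / 2). The sketch below applies it to the 10.2° and 6.4° extents at 72 cm. The pixel estimate additionally assumes that the full 18-inch diagonal is the visible image area, that pixels are square, and that the 1024 × 768 image fills the screen; these are assumptions, not details reported in the text.

```python
import math

VIEWING_DISTANCE_CM = 72.0


def size_from_visual_angle(deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends `deg` degrees of visual angle at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(deg) / 2)


def pixels_per_cm(diag_in, res_w, res_h):
    """Pixel density, assuming square pixels and a visible diagonal of `diag_in` inches."""
    return math.hypot(res_w, res_h) / (diag_in * 2.54)


extent_a = size_from_visual_angle(10.2)   # ~12.85 cm
extent_b = size_from_visual_angle(6.4)    # ~8.05 cm
density = pixels_per_cm(18, 1024, 768)
print(f"{extent_a:.2f} x {extent_b:.2f} cm, about "
      f"{extent_a * density:.0f} x {extent_b * density:.0f} px")
```

Measuring the actual visible screen width is more reliable than relying on the nominal diagonal, since monitor bezels and scaling settings can change the true pixel density.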

Trial structure was as follows: a central fixation crosshair was presented for a duration randomly varying between 200 and 300 ms, followed by a centrally presented static face, either neutral or displaying an expression. After 500 ms, the face moved, displaying one of three expressions (fear, puffed cheeks, or impossible movement) or, for faces with an initial expression, returning to neutral; this was followed by 500 ms of the final frame of the animation. This design allowed us to segment ERPs to the initial static face for both neutral and expressive faces; these are the data presented here. To monitor attention, participants were asked to count the number of randomly interspersed target stimuli (white balls). The 30-minute experiment included 420 face trials and 45 target trials in random sequence (70 faces presented from puffed to neutral, 70 from neutral to puffed, 70 from impossible to neutral, 70 from neutral to impossible, 70 from fear to neutral, and 70 from neutral to fear).
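The design above can be summarized as a shuffled trial list. This is a minimal sketch, not the E-Prime script used in the study: it builds the six face conditions with 70 trials each plus 45 targets, assigns a jittered 200 to 300 ms fixation to every trial, and attaches the three 500 ms face phases. The dictionary fields are hypothetical, the continuous uniform jitter is an assumption, and target timing is left unspecified because the text does not report it.

```python
import random

# Six face conditions (initial expression -> final expression), 70 trials each.
CONDITIONS = [
    ("puffed", "neutral"), ("neutral", "puffed"),
    ("impossible", "neutral"), ("neutral", "impossible"),
    ("fear", "neutral"), ("neutral", "fear"),
]
TRIALS_PER_CONDITION = 70
N_TARGETS = 45

# Phase durations (ms) for face trials: static face, animation (30 frames at 60 Hz), final frame.
FACE_PHASES_MS = [("static", 500), ("animation", 500), ("final_frame", 500)]


def build_trials(seed=None):
    """Return a shuffled trial list with a jittered fixation duration on every trial."""
    rng = random.Random(seed)
    trials = [
        {"kind": "face", "start": start, "end": end}
        for start, end in CONDITIONS
        for _ in range(TRIALS_PER_CONDITION)
    ]
    # Target timing is not specified in the text; only the fixation jitter is assigned.
    trials += [{"kind": "target"} for _ in range(N_TARGETS)]
    rng.shuffle(trials)
    for trial in trials:
        # Assumption: continuous uniform jitter; a 60 Hz display quantizes it to ~16.7 ms frames.
        trial["fixation_ms"] = rng.uniform(200, 300)
        if trial["kind"] == "face":
            trial["phases_ms"] = list(FACE_PHASES_MS)
    return trials


trials = build_trials(seed=1)
```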

EEG data were processed using NetStation 4.5 software. Data were low-pass filtered offline at 30 Hz prior to segmentation and then segmented into epochs lasting from 100 ms before to 500 ms after the onset of the initial static face. Artifact detection thresholds were set to 200 μV for bad channels, 140 μV for eye blinks, and 100 μV for eye movements. Channels with artifacts on more than 40% of trials were marked as bad and replaced through spline interpolation (Picton et al., 2000). Segments that contained eye blinks, eye movements, or more than 10 bad channels were marked as bad and excluded. Automated artifact detection was confirmed by manual review of every trial for each participant. Data were re-referenced to an average reference and baseline corrected to the 100 ms pre-stimulus epoch. Trial-by-trial data were then averaged at each electrode for each condition (e.g., “fear” and “neutral,” separately for every individual). P1 and N170 amplitudes were measured as the first positive and first negative peaks following stimulus onset, respectively, and were identified in individual averages. Latency was measured at peak amplitude.
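The baseline correction and peak measurement steps can be sketched on a single averaged waveform. This is an illustration under assumptions, not the NetStation procedure: the P1 and N170 search windows (60 to 140 ms and 140 to 220 ms) are hypothetical, since the text does not report windows, and the usage runs on a synthetic waveform with a 2 μV offset, a positive bump at 100 ms, and a negative bump at 168 ms rather than on real data.

```python
import numpy as np

FS = 250                                  # sampling rate (Hz), as recorded
times = np.arange(-100, 500, 1000 / FS)   # epoch: 100 ms before to 500 ms after face onset


def baseline_correct(erp, times):
    """Subtract the mean of the 100 ms pre-stimulus interval (t < 0)."""
    return erp - erp[times < 0].mean()


def peak(erp, times, window, polarity):
    """Amplitude and latency of the most positive (+1) or most negative (-1) point in a window."""
    sel = (times >= window[0]) & (times <= window[1])
    idx = np.argmax(polarity * erp[sel])
    return erp[sel][idx], times[sel][idx]


# Synthetic waveform (microvolts): constant offset + P1-like bump + N170-like bump.
wave = (2.0
        + 5 * np.exp(-((times - 100) ** 2) / (2 * 15**2))
        - 8 * np.exp(-((times - 168) ** 2) / (2 * 15**2)))
erp = baseline_correct(wave, times)
p1_amp, p1_lat = peak(erp, times, (60, 140), +1)         # hypothetical P1 window
n170_amp, n170_lat = peak(erp, times, (140, 220), -1)    # hypothetical N170 window
```

At 250 Hz, latencies are resolved only to 4 ms steps, so peak latencies are quantized to the sampling grid.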

Results

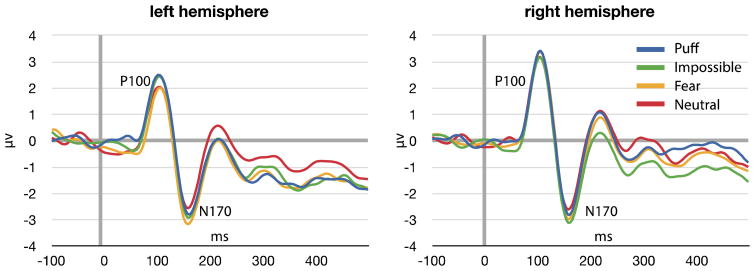

P1

Amplitude and latency were analyzed using two separate repeated-measures ANOVAs with expression (neutral, puff, fear, impossible) and hemisphere (left, right) as within-subjects factors. There were no significant main effects of expression on amplitude [F(3,26) = 1.05, p = .386] or latency [F(3,26) = .61, p = .614], and no significant expression × hemisphere interactions for amplitude [F(3,26) = 1.41, p = .261] or latency [F(3,26) = 1.41, p = .614]. There was a main effect of hemisphere on P1 amplitude [F(1,28) = 4.49, p = .043], but it did not interact with expression. There was no main effect of hemisphere on latency [F(1,28) = 1.18, p = .286].

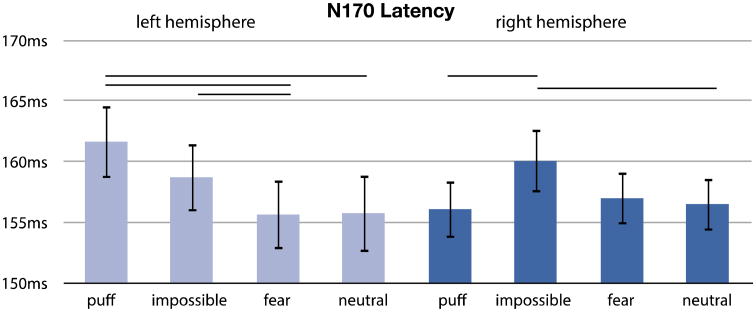

N170

We additionally analyzed the face-sensitive N170 with similar models, i.e., two separate repeated-measures ANOVAs with expression (neutral, puff, fear, impossible) and hemisphere (left, right) as within-subjects factors. For amplitude, there were no significant main effects or interactions: expression [F(3,26) = 1.72, p = .187]; hemisphere [F(1,28) = .004, p = .952]; hemisphere × expression [F(3,26) = .438, p = .728]. For latency, there was a main effect of expression and a marginal interaction between hemisphere and expression: expression [F(3,26) = 3.24, p = .038]; hemisphere [F(1,28) = .083, p = .776]; hemisphere × expression [F(3,26) = 2.63, p = .071]. Neutral and fear faces elicited shorter N170 latencies across both hemispheres; this effect was driven by a significant difference between neutral and impossible facial expressions. The marginal interaction reflected longer latencies to impossible facial expressions and puffed cheeks in the left hemisphere and longer latencies to impossible facial expressions in the right hemisphere. Figure 3 depicts grand-averaged waveforms from right and left hemisphere electrodes, and Figure 4 depicts variability in latency across expressions.

Figure 3.

ERP waveforms depicting activity over left and right occipitotemporal electrodes in response to face presentation. Note that at the early component (P1) there is little variability among expressions. EEG data are filtered from 0.1 to 30 Hz.

Figure 4.

N170 peak latency by expression and hemisphere. Horizontal lines above bars indicate conditions that were significantly different.

Discussion

In this paper we have introduced and described a novel photorealistic set of facial stimuli, including dynamic facial expressions. The use of photorealistic computer-generated faces allowed for precise control of both movement and low-level image characteristics such as luminance and size. Furthermore, these faces exhibit uniform dynamics of movement among expressions and across individual faces. This stimulus set includes 222 stimuli of varying race and gender spanning three expressions, with individual images representing each step of the animation. Individual frames can be presented separately, in sequence, or merged into an animation depending on the needs of the experimenter.

Our validation study revealed the expected electrophysiological responses to the computer-generated faces. Waveform morphology was consistent with prior research, reflecting a characteristic P1-N170 complex over occipitotemporal scalp. Consistent with the design of our stimuli, the uniform response at an early sensory component (P1) indicated that the stimuli were well matched on low-level visual features. While differences in brain activity in response to low-level visual features persist beyond the P1 (Bieniek, Frei, & Rousselet, 2013) and are evident in the hemodynamic response (Yue et al., 2011), our results suggest that low-level differences indexed by the P1 (Rossion & Caharel, 2011) do not account for differences observed at subsequent components in these data. In addition, a robust face-sensitive response (N170) followed this initial component and showed limited variability with the familiarity of the expression. While consistent response patterns across conditions at the N170 have been observed in prior research (Holmes et al., 2003), other studies have detected variability by emotional expression at this component (Blau et al., 2007). In the present data, the less familiar nature of the puffed cheek and impossible expressions may have led to the delayed N170 latencies observed here. Future work exploring differences between expressions will include other imaging modalities, such as fMRI, and experimental paradigms that more directly probe the sources of visual information used in perceptual tasks, such as reverse correlation techniques. The latter approach, exemplified by the “bubbles” paradigm, collects more experimental trials and can more fully elaborate the neural time course of face processing (Schyns, Bonnar, & Gosselin, 2002; Schyns, Petro, & Smith, 2007).

In the context of neurophysiological research, this stimulus set has several unique strengths. First, it tightly controls low-level visual features, such as luminance, that are known to affect the brain's response to visual stimuli. Our results show that these faces do not elicit differences in brain indices of visual perception that are sensitive to low-level image characteristics. Second, the faces are animated, which allows the study of dynamic facial stimuli. The inclusion of animation addresses an emerging need in face perception research: there is much less research examining the perception of dynamic than of static faces (Curio, Bülthoff, & Giese, 2011), although perception of facial movement dynamics is a crucial component of social cognition (Bassili, 1979; Pike, Kemp, Towell, & Phillips, 1997; Pitcher, Dilks, Saxe, Triantafyllou, & Kanwisher, 2011).

This stimulus set offers animated facial expressions standardized for psychophysical research. Researchers interested in incorporating these stimuli into experimental paradigms should be aware of several limitations, including a restricted range of expressions and a tightly confined age range of depicted individuals. Therefore, this stimulus set would not be appropriate for studies of positive emotional expressions or studies of the perception of children's faces. Also, because these faces are distributed as rendered images, they cannot be modified arbitrarily in accordance with the Facial Action Coding System (FACS), as other computer-generated systems allow (Krumhuber, Tamarit, Roesch, & Scherer, 2012; Roesch et al., 2011; Yu, Garrod, & Schyns, 2012). Most importantly, these faces are not real; while their computer-generated nature poses a challenge to ecological validity, this weakness is offset by the improved control over the images that psychophysiological research requires. There is some concern that artificial faces are ill-suited for cognitive neuroscience research, specifically that later face-sensitive components differ for photographs of real faces as compared to illustrated faces (Wheatley, Weinberg, Looser, Moran, & Hajcak, 2011). However, these concerns are balanced by several facts: (1) Different experimental findings may be attributable to the use of different photographic stimulus sets (Machado-de-Sousa et al., 2010). (2) Computer-generated faces have been used successfully in neuroscience research and show effects beyond very early electrophysiological components in the EEG (Recio, Sommer, & Schacht, 2011) and in hemodynamic research (Bolling et al., 2011; Mosconi, Mack, McCarthy, & Pelphrey, 2005). (3) There is a history of successfully using artificially generated faces, e.g., computer-generated and drawn faces, in psychophysics research (Bentin, Sagiv, Mecklinger, Friederici, & von Cramon, 2002; Cheung, Rutherford, Mayes, & McPartland, 2010), suggesting that artificial faces can be of substantial experimental utility. The success of these approaches indicates that artificial, photorealistic facial stimuli are an effective addition to the constellation of stimuli used in face perception research.

The goal of this dataset was to present multiple identities exhibiting affective and neutral movements, as well as biologically impossible movements rendered with plausible soft-tissue deformation, which would have been very difficult to obtain with photographs of real individuals. Furthermore, the electrophysiological data showing that these faces do not differ on low-level visual properties suggest that these stimuli are well suited to the demands of neuroscience and psychophysiological research. While these stimuli were developed for electrophysiological experiments, they are not limited to this domain. These artificially generated faces can be freely distributed to the research community without concern for participant confidentiality. Freely available stimuli provide a point of reference for comparing findings across studies and reduce variability attributable to idiosyncrasies of study-specific stimulus sets.

How to obtain the stimulus set

The stimulus set is free for research use and is available with limited restrictions. Specifically: (1) the images are not redistributed; (2) credit is given to both this publication (Naples et al.) and the following funding sources: NIMH R01 MH100173 (McPartland), NIMH K23 MH086785 (McPartland), NIMH R21 MH091309 (McPartland, Bernier), Autism Speaks Translational Postdoctoral Fellowship (Naples), CTSA Grant Number UL1 RR024139 (McPartland), Waterloo Foundation 1167-1684 (McPartland), and Patterson Trust 13-002909 (McPartland); and (3) the images are not used for any commercial purposes.

To request access to this stimulus set, please email Mcp.lab@yale.edu.

Supplementary Material

Appendix 1: Converting image sequences to movie files

There are several free and commercial software packages that can convert numbered image sequences into movies. We recommend VirtualDub (http://www.virtualDub.org), which is free and open source. Instructions for opening an image sequence as a movie are available at http://www.virtualdub.org/blog/pivot/entry.php?id=34
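The conversion can also be scripted. The following is a minimal Python sketch, not the authors' procedure. It assumes a hypothetical file-naming scheme: a per-stimulus prefix followed by a frame number, such as `fear_01_001.png`. It also uses the free ffmpeg command-line tool in place of VirtualDub. `order_frames` sorts frames numerically and checks that a sequence contains the 30 frames described for each stimulus. `ffmpeg_command` only builds an argument list, so nothing runs until that list is passed to `subprocess.run`. Both function names are illustrative.

```python
import re
from pathlib import Path

# Hypothetical naming scheme (an assumption, not the set's documented layout):
# a per-stimulus prefix, a frame number, and an image extension,
# e.g. "fear_01_001.png". Edit the pattern to match the files you receive.
FRAME_RE = re.compile(
    r"^(?P<prefix>.*?)(?P<num>\d+)\.(?P<ext>png|bmp|jpe?g|tiff?)$", re.IGNORECASE
)


def order_frames(filenames, expected=30):
    """Return frame files in numeric order after checking the sequence is complete."""
    parsed, prefixes = [], set()
    for name in filenames:
        m = FRAME_RE.match(Path(name).name)
        if m is None:
            raise ValueError(f"not a numbered frame: {name}")
        prefixes.add(m.group("prefix"))
        parsed.append((int(m.group("num")), name))
    if len(parsed) != expected:
        raise ValueError(f"expected {expected} frames, found {len(parsed)}")
    if len(prefixes) != 1:
        raise ValueError("frames come from more than one sequence")
    # Sort numerically so frame 10 follows frame 9 rather than frame 1.
    parsed.sort()
    numbers = [n for n, _ in parsed]
    first = numbers[0]
    if numbers != list(range(first, first + expected)):
        raise ValueError("frame numbers are not contiguous")
    return [name for _, name in parsed]


def ffmpeg_command(first_frame, fps, output):
    """Build (but do not run) an ffmpeg argument list for a numbered sequence."""
    m = FRAME_RE.match(Path(first_frame).name)
    if m is None:
        raise ValueError(f"not a numbered frame: {first_frame}")
    digits = m.group("num")
    # printf-style pattern with the same zero padding as the file names.
    pattern = Path(first_frame).with_name(
        f"{m.group('prefix')}%0{len(digits)}d.{m.group('ext')}"
    )
    return [
        "ffmpeg",
        "-framerate", str(fps),            # playback rate of the input frames
        "-start_number", str(int(digits)),  # first frame number in the sequence
        "-i", str(pattern),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        output,
    ]
```

For example, `frames = order_frames(files)` followed by `subprocess.run(ffmpeg_command(frames[0], 30, "fear_01.mp4"), check=True)` would encode one stimulus, provided ffmpeg is installed. The frame rate of 30 is a placeholder rather than a value from this paper. At 30 frames per second, the 30-frame transition would last 1 s. The faces are matched on luminance, so it may be worth checking pixel values after conversion. Lossy codecs such as libx264 and the conversion to YUV color can alter those values.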

Footnotes

The impossible movement is not consistent with human face structure.

Supplementary material is available at the following link: https://yale.app.box.com/s/s3ozillc7gmmexw15wtb

References

- Autodesk. Softimage. San Rafael, CA: 2011.

- Balas B, Nelson CA. The role of face shape and pigmentation in other-race face perception: An electrophysiological study. Neuropsychologia. 2010;48(2):498–506. doi: 10.1016/j.neuropsychologia.2009.10.007.

- Bassili JN. Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. J Pers Soc Psychol. 1979;37(11):2049–2058. doi: 10.1037//0022-3514.37.11.2049.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological Studies of Face Perception in Humans. J Cogn Neurosci. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bentin S, Sagiv N, Mecklinger A, Friederici A, von Cramon YD. Priming visual face-processing mechanisms: electrophysiological evidence. Psychol Sci. 2002;13(2):190–193. doi: 10.1111/1467-9280.00435.

- Bieniek MM, Frei LS, Rousselet GA. Early ERPs to faces: aging, luminance, and individual differences. Front Psychol. 2013;4:268. doi: 10.3389/fpsyg.2013.00268.

- Bindemann M, Burton AM, Hooge ITC, Jenkins R, De Haan EHF. Faces retain attention. Psychonomic Bulletin & Review. 2005;12(6):1048–1053. doi: 10.3758/bf03206442.

- Birmingham E, Bischof WF, Kingstone A. Gaze selection in complex social scenes. Visual Cognition. 2008;16(2-3):341–355. doi: 10.1080/13506280701434532.

- Blanz V, Vetter T. A morphable model for the synthesis of 3D faces. SIGGRAPH 99 Conference Proceedings. 1999:187–194.

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face-specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions. 2007;3:7. doi: 10.1186/1744-9081-3-7.

- Bolling DZ, Pitskel NB, Deen B, Crowley MJ, McPartland JC, Kaiser MD, et al. Pelphrey KA. Enhanced neural responses to rule violation in children with autism: A comparison to social exclusion. Developmental Cognitive Neuroscience. 2011;1(3):280–294. doi: 10.1016/j.dcn.2011.02.002.

- Cheung CH, Rutherford HJ, Mayes LC, McPartland JC. Neural responses to faces reflect social personality traits. Soc Neurosci. 2010;5(4):351–359. doi: 10.1080/17470911003597377.

- Curio C, Bülthoff HH, Giese MA. Dynamic faces: insights from experiments and computation. Cambridge, Mass: MIT Press; 2011.

- Edwards J, Jackson HJ, Pattison PE. Emotion recognition via facial expression and affective prosody in schizophrenia: A methodological review (vol 22, pg 789, 2002). Clinical Psychology Review. 2002;22(8):1267–1285. doi: 10.1016/s0272-7358(02)00130-7; doi: 10.1016/S0272-7358(02)00162-9.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13(4):427–431. doi: 10.1097/00001756-200203250-00013.

- Ekman P, Friesen WV. Constants across cultures in the face and emotion. J Pers Soc Psychol. 1971;17(2):124–129. doi: 10.1037/h0030377.

- Ekman P, Friesen WV. Unmasking the face: A guide to recognizing emotions from facial clues. Englewood Cliffs, N.J.: Prentice-Hall; 1975.

- Frank MC, Vul E, Johnson SP. Development of infants' attention to faces during the first year. Cognition. 2009;110(2):160–170. doi: 10.1016/j.cognition.2008.11.010.

- Freeman JB, Pauker K, Apfelbaum EP, Ambady N. Continuous dynamics in the realtime perception of race. Journal of Experimental Social Psychology. 2010;46(1):179–185. doi: 10.1016/j.jesp.2009.10.002.

- Gosselin P, Perron M, Beaupre M. The Voluntary Control of Facial Action Units in Adults. Emotion. 2010;10(2):266–271. doi: 10.1037/a0017748.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Brain Res Cogn Brain Res. 2003;16(2):174–184. doi: 10.1016/s0926-6410(02)00268-9.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Face to face: visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Research. 2004;127(1-2):43–53. doi: 10.1016/j.psychres.2004.02.016.

- Jack RE, Garrod OG, Schyns PG. Dynamic Facial Expressions of Emotion Transmit an Evolving Hierarchy of Signals over Time. Curr Biol. 2014;24(2):187–192. doi: 10.1016/j.cub.2013.11.064.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809.

- Krumhuber EG, Tamarit L, Roesch EB, Scherer KR. FACSGen 2.0 Animation Software: Generating Three-Dimensional FACS-Valid Facial Expressions for Emotion Research. Emotion. 2012;12(2):351–363. doi: 10.1037/a0026632.

- Lindsen JP, Jones R, Shimojo S, Bhattacharya J. Neural components underlying subjective preferential decision making. Neuroimage. 2010;50(4):1626–1632. doi: 10.1016/j.neuroimage.2010.01.079.

- Machado-de-Sousa JP, Arrais KC, Alves NT, Chagas MH, de Meneses-Gaya C, Crippa JA, Hallak JE. Facial affect processing in social anxiety: tasks and stimuli. Journal of Neuroscience Methods. 2010;193(1):1–6. doi: 10.1016/j.jneumeth.2010.08.013.

- Matheson HE, McMullen PA. A computer-generated face database with ratings on realism, masculinity, race, and stereotypy. Behavior Research Methods. 2011;43(1):224–228. doi: 10.3758/s13428-010-0029-9.

- McPartland J, Cheung CHM, Perszyk D, Mayes LC. Face-related ERPs are modulated by point of gaze. Neuropsychologia. 2010;48(12):3657–3660. doi: 10.1016/j.neuropsychologia.2010.07.020.

- McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45(7):1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- McPartland JC, Wu J, Bailey CA, Mayes LC, Schultz RT, Klin A. Atypical neural specialization for social percepts in autism spectrum disorder. Soc Neurosci. 2011;6(5-6):436–451. doi: 10.1080/17470919.2011.586880.

- Mosconi MW, Mack PB, McCarthy G, Pelphrey KA. Taking an “intentional stance” on eye-gaze shifts: a functional neuroimaging study of social perception in children. Neuroimage. 2005;27(1):247–252. doi: 10.1016/j.neuroimage.2005.03.027.

- Picton TW, Bentin S, Berg P, Donchin E, Hillyard SA, Johnson R, et al. Taylor MJ. Guidelines for using human event-related potentials to study cognition: Recording standards and publication criteria. Psychophysiology. 2000;37(2):127–152. doi: 10.1017/S0048577200000305.

- Pike GE, Kemp RI, Towell NA, Phillips KC. Recognizing moving faces: The relative contribution of motion and perspective view information. Visual Cognition. 1997;4(4):409–438.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage. 2011;56(4):2356–2363. doi: 10.1016/j.neuroimage.2011.03.067.

- Recio G, Sommer W, Schacht A. Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Res. 2011;1376:66–75. doi: 10.1016/j.brainres.2010.12.041.

- Roesch EB, Tamarit L, Reveret L, Grandjean D, Sander D, Scherer KR. FACSGen: A Tool to Synthesize Emotional Facial Expressions Through Systematic Manipulation of Facial Action Units. Journal of Nonverbal Behavior. 2011;35(1):1–16. doi: 10.1007/s10919-010-0095-9.

- Rossion B, Caharel S. ERP evidence for the speed of face categorization in the human brain: Disentangling the contribution of low-level visual cues from face perception. Vision Res. 2011;51(12):1297–1311. doi: 10.1016/j.visres.2011.04.003.

- Rousselet GA, Pernet CR. Quantifying the Time Course of Visual Object Processing Using ERPs: It's Time to Up the Game. Front Psychol. 2011a;2:107. doi: 10.3389/fpsyg.2011.00107.

- Rousselet GA, Pernet CR. Quantifying the Time Course of Visual Object Processing Using ERPs: It's Time to Up the Game. Front Psychol. 2011b;2:107. doi: 10.3389/fpsyg.2011.00107.

- Sasson N, Tsuchiya N, Hurley R, Couture SM, Penn DL, Adolphs R, Piven J. Orienting to social stimuli differentiates social cognitive impairment in autism and schizophrenia. Neuropsychologia. 2007;45(11):2580–2588. doi: 10.1016/j.neuropsychologia.2007.03.009.

- Schneider W, Eschman A, Zuccolotto A. E-Prime: User's guide. Psychology Software Tools Inc.; 2002.

- Schyns PG, Bonnar L, Gosselin F. Show me the features! Understanding recognition from the use of visual information. Psychol Sci. 2002;13(5):402–409. doi: 10.1111/1467-9280.00472.

- Schyns PG, Petro LS, Smith ML. Dynamics of visual information integration in the brain for categorizing facial expressions. Curr Biol. 2007;17(18):1580–1585. doi: 10.1016/j.cub.2007.08.048.

- Singular Inversions. FaceGen. Toronto ON: 2010.

- Tolles T. Practical Considerations for Facial Motion Capture. In: Deng Z, Neumann U, editors. Data-driven 3D facial animation. London: Springer; 2008.

- Tsao DY, Livingstone MS. Mechanisms of face perception. Annual Review of Neuroscience. 2008;31:411–437. doi: 10.1146/annurev.neuro.30.051606.094238.

- Tucker DM. Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr Clin Neurophysiol. 1993;87(3):154–163. doi: 10.1016/0013-4694(93)90121-b.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nature Neuroscience. 2003;6(6):624–631. doi: 10.1038/nn1057.

- Wheatley T, Weinberg A, Looser C, Moran T, Hajcak G. Mind perception: real but not artificial faces sustain neural activity beyond the N170/VPP. PLoS One. 2011;6(3):e17960. doi: 10.1371/journal.pone.0017960.

- Yu H, Garrod OGB, Schyns PG. Perception-driven facial expression synthesis. Computers & Graphics. 2012;36(3):152–162. doi: 10.1016/j.cag.2011.12.002.

- Yue XM, Cassidy BS, Devaney KJ, Holt DJ, Tootell RBH. Lower-Level Stimulus Features Strongly Influence Responses in the Fusiform Face Area. Cerebral Cortex. 2011;21(1):35–47. doi: 10.1093/cercor/bhq050.
