Abstract

μManager is an open-source, cross-platform desktop application for controlling a wide variety of motorized microscopes, scientific cameras, stages, illuminators, and other microscope accessories. Since its inception in 2005, μManager has grown to support a wide range of microscopy hardware and is now used by thousands of researchers around the world. The application provides a mature graphical user interface and offers open programming interfaces to facilitate plugins and scripts. Here, we present a guide to using some of the recently added advanced μManager features, including hardware synchronization, simultaneous use of multiple cameras, projection of patterned light onto a specimen, live slide mapping, imaging with multi-well plates, particle localization and tracking, and high-speed imaging.

Keywords: high-speed imaging, high-content screening, localization microscopy, Micro-Manager, open-source microscopy

BACKGROUND

μManager (“Micro-Manager”) is an open-source, cross-platform (Windows, Mac, and Linux) desktop application that facilitates computer control of light microscopes for researchers. The application provides an intuitive graphical user interface and a documented programming interface for controlling microscope hardware. μManager runs as an ImageJ plugin (ImageJ is a widely used, freely available image analysis software [1]), and can be downloaded at no cost from the website [2]. μManager is currently used in thousands of laboratories worldwide and is mentioned in more than 500 publications. The routine use of μManager is extensively documented, both on the μManager website and in a previous publication [3].

μManager’s design facilitates both routine applications and the development of advanced microscopy methods. The application’s programming interface for hardware control provides a common set of commands that can be used to control a supported camera from any manufacturer. Likewise, vendor-neutral command sets are provided for shutters, stages, and multi-state devices such as objective turrets and filter wheels. Furthermore, μManager’s plugin capabilities allow new application-level functionality to be added for specialized techniques.

Here, we present step-by-step instructions on how to use a number of advanced features and plugins that have been added to μManager in recent years. We assume that the reader has a basic understanding of the use of μManager, as described previously [3].

MATERIALS

To use the μManager software one needs at least a digital camera supported by the software. A complete and current list of supported hardware (including cameras) is available on the μManager website [4]. Additional hardware and supplies are listed in the individual Procedures below. The protocols were written for μManager version 1.4.16. They should still be usable in later versions, but some details may have changed.

PROCEDURES AND ANTICIPATED RESULTS

High-content screening plugin

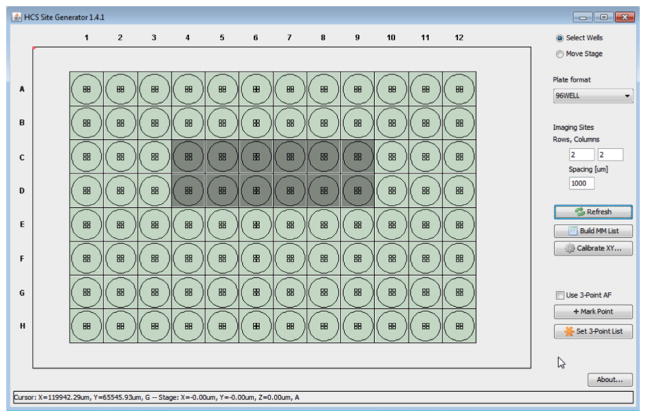

The high-content screening plugin “HCS Site Generator” (available since μManager version 1.4.15) enables the use of multi-well plates for automated acquisition of large datasets. The plugin provides an efficient way to prepare a list of XY-positions for running a Multi-Dimensional Acquisition sequence with any settings, across multiple wells (Fig. 1).

Figure 1. The HCS Site Generator plugin provides a simple user interface to generate XY positions for a multi-well plate for imaging with μManager’s Multi-Dimensional Acquisition.

Place a multi-well plate with samples on a microscope. After you activate HCS Site Generator from the μManager plugins menu, a window will be displayed containing a grid representing a multi-well plate. On the right are a series of controls. Choose a Plate Format corresponding to the type of multi-well plate to be used (available options are 24-, 96-, and 384-well plates of standard dimensions, as well as a layout with four adjacent slides).

Calibrate the grid so that it matches the stage positions corresponding to different wells. Press the “Calibrate XY” button and move the XY stage to the center of well A1. If a joystick is not available for controlling the XY stage, use μManager’s “Stage Control” plugin.

Click “Select wells” and use the mouse to choose the wells that should be visited for imaging. Dragging or clicking allows wells to be selected; holding down the control key allows multiple wells to be included.

Specify a grid of “Imaging Sites” by entering the number of rows and columns and the spacing between images in microns. Images in this grid pattern will be acquired in each well during multi-dimensional acquisition.

Finally, press “Build MM List” to send the calculated positions to μManager’s XY Position List. Open the Multi-Dimensional Acquisition window, make sure “Multi Positions (XY)” is checked, and press the “Edit Position List” button to make sure the well plate positions are present in the list. Then apply all other desired acquisition settings and press “Acquire!”
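The position list built in the steps above can be pictured with a short, stand-alone Python sketch. It is not the plugin's code: the well pitches (9 mm for 96-well, 4.5 mm for 384-well, 19.3 mm for 24-well plates), the stage axis directions, the `Well-Site_n` labels, and all function names are assumptions made for illustration, and well A1 is taken as the calibrated origin.

```python
from string import ascii_uppercase

# Assumed well-to-well pitch in mm and (rows, columns) per plate format.
# These are typical values, not read from the plugin; check your plate's datasheet.
WELL_PITCH_MM = {24: 19.3, 96: 9.0, 384: 4.5}
PLATE_SHAPE = {24: (4, 6), 96: (8, 12), 384: (16, 24)}


def well_center_um(well, plate=96, a1_xy_um=(0.0, 0.0)):
    """Return the (x, y) stage position in microns of a well such as 'B3'.

    a1_xy_um is the calibrated stage position of the center of well A1.
    Assumes stage X increases with column number and stage Y with row letter.
    """
    rows, cols = PLATE_SHAPE[plate]
    row = ascii_uppercase.index(well[0].upper())
    col = int(well[1:]) - 1
    if not (0 <= row < rows and 0 <= col < cols):
        raise ValueError(f"well {well} does not exist on a {plate}-well plate")
    pitch_um = WELL_PITCH_MM[plate] * 1000.0
    return (a1_xy_um[0] + col * pitch_um, a1_xy_um[1] + row * pitch_um)


def imaging_sites(center_xy_um, n_rows, n_cols, spacing_um):
    """Return a grid of imaging sites centered on the given well center."""
    cx, cy = center_xy_um
    sites = []
    for r in range(n_rows):
        for c in range(n_cols):
            x = cx + (c - (n_cols - 1) / 2.0) * spacing_um
            y = cy + (r - (n_rows - 1) / 2.0) * spacing_um
            sites.append((x, y))
    return sites


def build_position_list(wells, plate, n_rows, n_cols, spacing_um,
                        a1_xy_um=(0.0, 0.0)):
    """Return (label, x, y) tuples for every imaging site in every selected well."""
    positions = []
    for well in wells:
        center = well_center_um(well, plate, a1_xy_um)
        sites = imaging_sites(center, n_rows, n_cols, spacing_um)
        for i, (x, y) in enumerate(sites):
            positions.append((f"{well}-Site_{i}", x, y))
    return positions


if __name__ == "__main__":
    for entry in build_position_list(["A1", "B2"], 96, 2, 2, 100.0):
        print(entry)
```

Two wells with a 2 × 2 grid of sites give eight positions, which is the number of entries you would expect to see in the XY Position List after pressing “Build MM List” for the same selection.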

Auto-focus settings for HCS acquisition are specified in the Multi-Dimensional Acquisition window in the same way as with any other multi-position protocol. In addition, the HCS plugin provides a “3-point” interpolative focus calculation that can be used instead of auto-focusing as a much faster alternative. 3-point focus can also be used together with the regular image-based focus to make the latter’s operation faster and more reliable. To set up 3-point focus:

6.1 Move the XY stage to a particular well and, using live mode, find the Z drive position for best focus.

6.2 Press the “+ Mark Point” button at lower right. Repeat this procedure at two more positions, as far away as possible from the first position and from each other.

6.3 Press “Set 3-Point List” to provide μManager with a “focal plane” that will then be adhered to during multi-well imaging. The focal plane is simply a plane fitted through the three points selected in the previous steps. It is important to enable all three axes (XY and Z) in the Position List window in order for 3-point focus to work. The HCS plugin will then use the focal plane to calculate an estimated Z position for each selected imaging site and include it in the position list. Therefore, whenever the XY stage moves to a new imaging site, the estimated Z position will be applied as well. Typically, 3-point focus keeps images in focus at magnifications of 10× or less, thus eliminating the need for a much slower autofocus procedure. At higher magnifications, 3-point focus is most likely not accurate enough on its own, but it can bring the Z stage close to the actual focus position for subsequent refinement by automated autofocus.
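The 3-point focus calculation amounts to fitting a plane through three marked (x, y, z) points and evaluating it at each imaging site. The following Python sketch shows that geometry only; it is not taken from the plugin source, and the function names and the sample coordinates are hypothetical.

```python
def fit_focal_plane(p1, p2, p3):
    """Return (a, b, c) such that z = a*x + b*y + c passes through three points.

    Each point is an (x, y, z) tuple in microns. The plane normal is the cross
    product of two edge vectors of the triangle formed by the points.
    """
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = p1, p2, p3
    ux, uy, uz = x2 - x1, y2 - y1, z2 - z1
    vx, vy, vz = x3 - x1, y3 - y1, z3 - z1
    nx = uy * vz - uz * vy
    ny = uz * vx - ux * vz
    nz = ux * vy - uy * vx
    if abs(nz) < 1e-9:
        # The XY projections of the points are collinear: no unique plane z(x, y).
        raise ValueError("marked points must not lie on one line in XY")
    a = -nx / nz
    b = -ny / nz
    c = z1 - a * x1 - b * y1
    return a, b, c


def estimated_z(plane, x, y):
    """Evaluate the focal plane at stage position (x, y)."""
    a, b, c = plane
    return a * x + b * y + c


if __name__ == "__main__":
    # Hypothetical marked points: best-focus Z found in three distant wells.
    plane = fit_focal_plane((0, 0, 10.0), (1000, 0, 12.0), (0, 1000, 9.0))
    print(estimated_z(plane, 500, 500))
```

The collinearity check reflects the advice in step 6.2: points that are far apart and not in a line define the plane most reliably.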

High Speed Imaging

The newest scientific CMOS (sCMOS) cameras, including the Andor Zyla, Hamamatsu Orca Flash 4.0, and PCO Edge, offer extremely high data rates due to their large-format sensors and high frame rates. The fastest sCMOS cameras can produce 100 frames per second of 2560×2160 pixels each, or 1.1 GB/s at 2 bytes per pixel. High-speed sCMOS cameras have proven useful for imaging three-dimensional evolving specimens [5] and advanced applications such as super-resolution microscopy [6]. As of release 1.4.15, μManager is optimized to continuously acquire images from these cameras at full speed, and stream the images to disk or store them in RAM.
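The quoted data rate can be reproduced with a few lines of arithmetic. The sketch below assumes that each pixel is stored in 2 bytes (a 16-bit container), which is what makes 2560×2160 pixels at 100 frames per second come to about 1.1 GB/s; the function names and the 100 GB storage figure used in the example are illustrative only.

```python
def data_rate_bytes_per_s(width, height, fps, bytes_per_pixel=2):
    """Sustained data rate of a camera streaming full frames."""
    return width * height * bytes_per_pixel * fps


def seconds_of_recording(capacity_bytes, rate_bytes_per_s):
    """How long a storage volume of the given capacity lasts at that rate."""
    return capacity_bytes / rate_bytes_per_s


if __name__ == "__main__":
    rate = data_rate_bytes_per_s(2560, 2160, 100)
    print(f"{rate / 1e9:.2f} GB/s")
    print(f"{seconds_of_recording(100e9, rate):.0f} s of recording in 100 GB")
```

At full frame and full speed, 100 GB holds roughly a minute and a half of images, so reducing the region of interest or the frame count is often what decides between RAM and disk storage.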

To store the images continuously as they arrive at full speed from these high-speed cameras, you need a suitable computer. Following is a guide to making high-speed acquisition work:

Choose a computer. First, determine the necessary data size. If the maximum size of image sequences (taking into account the number of frames and region of interest size) is less than 100 GB, it may be practical to store the images in RAM and save to disk after the acquisition. For larger sizes, a RAID0 array of solid-state drives (SSDs) can be used. Ideally, the RAID controller should support the SATA ‘TRIM’ command, which is necessary to maintain optimal SSD performance without reformatting. For acquisition at 1.1 GB/s data rates, we used three or four SSDs (Samsung 840 Series 256 GB) in a RAID0 configuration, using a PCIe RAID controller (LSI 9240-4i) on a Windows 7 computer with 128 GB of PC1600 RAM on eight memory channels and two quad-core Intel Xeon CPUs. For maximum performance, set the RAID0-based virtual disk strip size to 1 MB, the write policy to “Always Write Back,” and the allocation unit size to 64 KB.

Ensure that the SSD RAID0 array can handle high data speeds (more than 1.1 GB/s) by using a disk speed-benchmarking program, such as HDTune. To accurately measure writing speed, it may help to temporarily disable the disk’s write caching and to select the longest write length possible in the benchmarking application.

Install the desired sCMOS camera using the Hardware Configuration Wizard and select device settings for high-speed imaging. For example, the 10-tap Andor Zyla can run at 100 frames per second when 11-bit images are selected.

If using μManager version 1.4.15, make sure that the option “Fast storage” is activated (Tools menu > Options). This option is the default since μManager release 1.4.16 and has been removed from the Options.

For a test acquisition, open the Multi-Dimensional Acquisition window. Select “Time Points” and choose a number of images to acquire (the required storage size is displayed). Set the time interval to zero.

De-select Positions, Slices, Channels, and Autofocus. For storage in RAM, deselect “Save images.” For storage on disk, select “Save images”, choose “Image stack file”, and select a directory on the storage drive. Press the “Acquire!” button to start the acquisition.

High-speed acquisition can be combined with other dimensions. For example, a fast movie can be acquired at several positions consecutively. Additionally, multiple channels and slices can be acquired during a high-speed acquisition with the use of hardware triggering (see the section on hardware synchronization below).

Slide Explorer

The Slide Explorer (available since version 1.3.47) is a plugin designed to provide an intuitive user interface for acquiring areas of the microscope slide larger than the camera’s field of view. When the user zooms or pans the Slide Explorer map view to a region not yet imaged, the plugin immediately starts acquiring images from the microscope to synthesize a mosaic image of the new region, similar to the Google Maps interface (Fig. 2).

Figure 2. The Slide Explorer plugin allows a large image to be synthesized by a grid of tiled images.

On the left is a mosaic image of a mouse kidney section imaged in three fluorescent channels. On the right is a zoomed-in portion of the mosaic image.

To generate a tiled image of a region of the slide, the Slide Explorer requires a coordinate mapping between the camera’s pixels and the XY stage’s coordinate system. When necessary, the Slide Explorer launches μManager’s Pixel Calibrator plugin to carry out the measurements necessary to create this mapping. A protocol for using the Pixel Calibrator plugin follows.

Pixel Calibrator Plugin usage

We assume here that distances on the motorized XY stage have been calibrated by the manufacturer. The many XY stages equipped with hardware encoders can usually be relied upon to produce distance measurements accurate to 10–100 nm. The Pixel Calibrator measures the size and orientation of pixels on the camera relative to the size and orientation of axes on the XY stage. To use it:

Focus on a sample that has high contrast and has non-repetitive features.

Open the Pixel Calibrator plugin from the Plugins menu, if it hasn’t already been launched by the Slide Explorer.

Choose a “Safe travel radius” in microns to ensure that the Pixel Calibrator does not move the XY stage so far as to crash the objective lens into the side of the stage. On most specimens, 1 mm of travel radius is enough to calibrate even low-magnification objectives.

Press the “Start” button to initiate pixel calibration. The Pixel Calibrator plugin acquires a first “home” image, and then commands the XY stage to move along the X axis by a very small initial displacement (1 micron). Then it doubles the displacement for each subsequent move. An image is acquired with the current hardware settings at each new position until the stage has moved by nearly a whole field of view. A cross correlation between the last displaced image and the initial home image is used to measure the size of the displacement between them in pixels. A similar image series is acquired and analyzed along the Y axis. Then, the XY stage is commanded to move the center of the home image so that it can be imaged at each of four corners of the display. Pixel displacements $(j,k)_i$ are measured at each of these four corners, and the corresponding requested XY stage coordinates $(x,y)_i$ are automatically used to solve the linear least-squares problem that yields the affine transformation matrix $A$ between the camera pixel coordinates and the stage coordinates. The affine relation is stored in μManager and used by the Slide Explorer to synthesize a large image made of a mosaic of individual images from the camera.
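The least-squares step described above can be sketched in plain Python. This is an illustration of the mathematics, not the plugin's implementation: it assumes the affine map is written as (x, y) = A·(j, k, 1), solves the normal equations with a small Gauss-Jordan routine, and uses made-up corner measurements. All function names are hypothetical.

```python
def solve3(m, rhs):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    a = [row[:] + [r] for row, r in zip(m, rhs)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. all points on one line)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(n):
            if r != col:
                f = a[r][col] / a[col][col]
                for c in range(col, n + 1):
                    a[r][c] -= f * a[col][c]
    return [a[i][n] / a[i][i] for i in range(n)]


def fit_affine(pixel_pts, stage_pts):
    """Least-squares 2x3 affine matrix A mapping pixel (j, k) to stage (x, y)."""
    m = [[0.0] * 3 for _ in range(3)]
    bx = [0.0] * 3
    by = [0.0] * 3
    for (j, k), (x, y) in zip(pixel_pts, stage_pts):
        p = (j, k, 1.0)
        for r in range(3):
            for c in range(3):
                m[r][c] += p[r] * p[c]   # accumulate P^T P
            bx[r] += p[r] * x            # accumulate P^T x
            by[r] += p[r] * y            # accumulate P^T y
    return [solve3(m, bx), solve3(m, by)]


def pixel_to_stage(a, j, k):
    """Apply the fitted affine transform to one pixel coordinate."""
    return (a[0][0] * j + a[0][1] * k + a[0][2],
            a[1][0] * j + a[1][1] * k + a[1][2])


if __name__ == "__main__":
    # Hypothetical measurements at the four corners of the field of view.
    pixels = [(-500, -400), (500, -400), (500, 400), (-500, 400)]
    stage = [(0.5 * j - 0.1 * k + 100.0, 0.1 * j + 0.5 * k - 50.0)
             for j, k in pixels]
    A = fit_affine(pixels, stage)
    print(A)
    print(pixel_to_stage(A, 0, 0))
```

Four corner measurements give eight equations for six unknowns, so the fit is overdetermined and averages out small errors in the individual cross-correlation measurements.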

Using Slide Explorer 2

Once pixels have been calibrated, you are ready to acquire a large area using Slide Explorer 2. The plugin borrows channel settings and the Z slice distance from the multi-dimensional acquisition window.

Press “New” to start a new Slide Explorer session, and choose a location to save the new data set. A window will open and begin by tiling a very small region (2 × 2 tiles) in a single slice, with all channels requested.

Expand the Slide Explorer window by dragging the bottom-right corner, and press the “-” button once to zoom out. The empty area around the center tiles will begin to fill in with new tiles as the hardware acquires new images at unexplored locations.

Use the mouse to drag the Slide Explorer map to a new position. Tiles are acquired, starting from the center, and then radially outward in a rectangular spiral. Only tiles in empty areas of the visible window will be acquired, to let the user control the acquisition area.

Press the “<” and “>” buttons to move to different Z slice levels. New tiles will be acquired at the current Z slice.

Press the spacebar to pause or resume acquisition.

When acquisition of the map view is paused, double click anywhere in the displayed map to navigate the stage back to that position. A yellow box indicates your current location on the map. Use buttons on μManager’s main window to acquire a Snap or run Live mode, or run a multi-dimensional acquisition at that location.

Press the control key to use mouse clicks to add positions to μManager’s position list. These positions can be used in multi-dimensional acquisition sets. For example, the user might wish to acquire a large map on a field of cells, mark several cells of interest as positions in the position list, and then acquire a time lapse across these positions to record their behavior in parallel.
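The tile ordering mentioned above (center first, then outward in a rectangular spiral, skipping tiles that already exist) can be illustrated with a short Python sketch. The spiral direction, the tile index convention, and the function names are assumptions; the plugin's actual ordering may differ in detail.

```python
def spiral_tiles(n):
    """Return the first n (col, row) tile offsets of a square spiral from (0, 0)."""
    if n <= 0:
        return []
    x = y = 0
    dx, dy = 1, 0
    out = [(0, 0)]
    step_len = 1
    while len(out) < n:
        # Each run length is used for two consecutive sides of the spiral.
        for _ in range(2):
            for _ in range(step_len):
                x += dx
                y += dy
                out.append((x, y))
                if len(out) == n:
                    return out
            dx, dy = -dy, dx  # turn by 90 degrees
        step_len += 1
    return out


def tiles_to_acquire(half_width, half_height, acquired):
    """Visible tiles not yet acquired, ordered from the center outward.

    The visible window spans tile offsets -half_width..half_width in X and
    -half_height..half_height in Y around the current view center.
    """
    side = 2 * max(half_width, half_height) + 1
    return [t for t in spiral_tiles(side * side)
            if abs(t[0]) <= half_width and abs(t[1]) <= half_height
            and t not in acquired]


if __name__ == "__main__":
    print(spiral_tiles(9))
    print(tiles_to_acquire(1, 0, {(0, 0)}))
```

Filtering against the set of already acquired tiles is what lets the user steer the acquisition simply by panning and zooming the map.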

Localization Microscopy Plugin

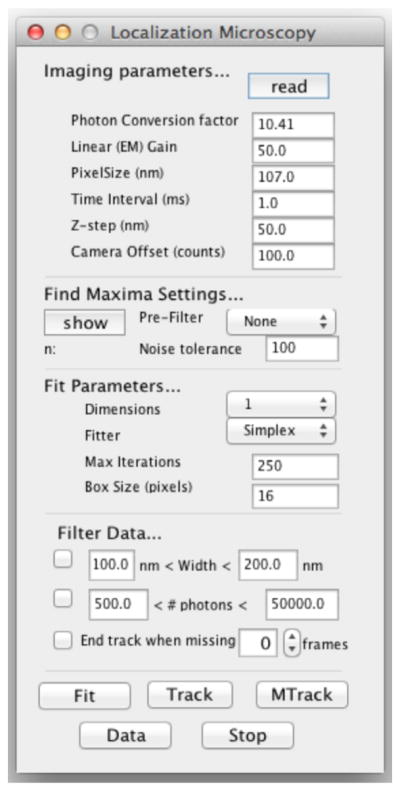

μManager’s Localization Microscopy plugin (available since version 1.4.10) automates the analysis of images of large numbers of single molecule fluorophores, quantum dots, or other localizable nano-scale particles. The plugin uses very rapid algorithms to automatically discover diffraction-limited spots in an image, to carry out PSF (point spread function) fitting to find the position of spots with nanometer precision in 2 or 3 dimensions, track those spots across multiple time points, use those spots to align data between channels, and use the resulting data to reconstruct PALM/STORM-style super-resolution images. It also provides an advanced user interface for filtering, inspecting, and manipulating localization data sets (Fig. 3). This plugin is purely for image analysis, and a multitude of other software packages [7] provide similar capabilities. Here’s how to analyze an image series:

Figure 3. The main window for the Localization Microscopy plugin.

Edit settings in this window to control the algorithms for localization and tracking of particles in an image series.

Imaging parameters provide the plugin with the necessary information to express the results in numbers of photons rather than arbitrary units. The “Photon Conversion factor” (number of photons per digital unit in the image) depends on various camera settings including analog gain and readout mode and can be found in the documentation for your camera. It can also be measured, as described on the μManager website [8]. Use the “read” button to deduce some imaging parameters from the selected image. Do not correct the Photon Conversion factor for the (linear) EM gain used, as the software will apply this correction automatically.

Find Maxima settings: The software finds local maxima by searching in a square with a size defined in “Box Size”. It only accepts local maxima whose intensity is “Noise tolerance” higher than the average of the four corners. Pre-filtering is rarely needed. The maxima finder is very fast and can even be used on live images.
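As a rough illustration of the acceptance rule just described, the following Python sketch scans an image (a list of rows) for pixels that are the maximum of their surrounding box and that exceed the mean of the four box corners by more than the noise tolerance. It is a simplified stand-in written for clarity rather than speed, not the plugin's algorithm; the handling of image borders and of ties between equal pixels is an assumption.

```python
def find_maxima(image, box_size=6, noise_tolerance=100):
    """Return (x, y, intensity) for each accepted local maximum.

    A pixel is accepted if no pixel in the surrounding square box is brighter
    and its intensity exceeds the average of the four box corners by more
    than noise_tolerance. Pixels closer than half a box to the border are
    skipped, and tied pixels on a plateau are all reported.
    """
    half = box_size // 2
    height, width = len(image), len(image[0])
    maxima = []
    for y in range(half, height - half):
        for x in range(half, width - half):
            value = image[y][x]
            box_max = max(image[yy][xx]
                          for yy in range(y - half, y + half + 1)
                          for xx in range(x - half, x + half + 1))
            if value < box_max:
                continue
            corners = (image[y - half][x - half] + image[y - half][x + half] +
                       image[y + half][x - half] + image[y + half][x + half]) / 4.0
            if value - corners > noise_tolerance:
                maxima.append((x, y, value))
    return maxima


if __name__ == "__main__":
    img = [[0] * 9 for _ in range(9)]
    img[4][4] = 500   # bright spot, should be found
    img[2][6] = 50    # dim spot, below the noise tolerance
    print(find_maxima(img, box_size=4, noise_tolerance=100))
```

Comparing against the box corners rather than a global threshold makes the test insensitive to slowly varying background across the field of view.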

The “Fit parameters” let you specify the kind of multi-dimensional Gaussian that will serve as a model for the PSF.

3.1 “Dimensions”: choose whether the X- and Y-width of the Gaussian vary together during fitting (1D) or can change independently (2D), and whether the resulting ellipse can rotate (3D).

3.2 “Fitter”: choose among fitting algorithms, including Simplex (Nelder-Mead), Maximum Likelihood Estimator (MLE), and Levenberg-Marquardt algorithms. Levenberg-Marquardt uses the first derivative of the fitting function with respect to the fitting parameters to pursue a best-fit result more quickly.

3.3 “Max iterations”: specify how long fitting should run for a given particle before the algorithm decides a particle is unfittable.

3.4 “Box size”: specify the width (in pixels) of the square sub-image over which the Gaussian function is to be fit to pixel intensities (usually, 6 pixels is sufficient).

The “Filter Data…” section allows outliers to be removed from point-fitting results. Spot width and intensities can be restricted to a range of physically reasonable values. Because single fluorescent particles can blink during an imaging sequence, you can specify the number of frames during which a particle can be missing before a track is declared “ended.”

Press the Fit button to run the spot finding and fitting algorithms. On machines with multiple cores, algorithms run in parallel to maximize efficiency (set the ImageJ setting “Parallel threads”, available through the ImageJ menu Edit > Options > Memory & Threads, to the number of available cores). Speeds of 20,000 spots per second are easily obtained on recent hardware.

To track motion of a single particle, draw an ROI around the particle with the mouse, and then click the Track button. The plugin will compute a time series for that particle and add it to the list of fitting series in the Data window (described below). Pressing the MTrack button will result in a tracking series for each particle detected in the first image of the time series. At present, tracking is not very sophisticated; it merely attempts a Gaussian fit on successive frames and can get confused by nearby particles.

The Data button opens a secondary Data window. This window contains a list of fitting series resulting from image sets, and a set of powerful tools for manipulating these data sets:

7.1 The General panel provides for saving and loading spot tracking data sets from files. Binary saving saves spots to a Tagged Spot Format (tsf) [9], which offers high-performance storage.

7.2 The two-color section carries out matching of spots between two overlaid channels (as from a DualView imager, or two cameras), and provides algorithms for computing a coordinate mapping between the two channels: Affine mapping provides a strictly linear mapping between the two data sets; LWM (local weighted mean [10]) allows for local warping due to chromatic aberrations. The “2C Correct” button allows data sets from one channel to be re-mapped to the coordinate system of the other channel. The resulting re-mapped pair of images can be used for directly measuring the distance between two particles of different colors.

7.3 The Tracks section allows plotting of positions and intensities of particles across time points. The “Math” button provides a way to do arithmetic operations between track series, and the “Straighten” button uses Principal Component Analysis to rotate a track (as from the movement of a molecular motor along an extended filament) along the X axis for further analysis.

7.4 The Localization Microscopy Panel provides for the rendering of PALM/STORM-style images. The “Z Calibration” button assumes the use of an astigmatic lens introduced in the imaging path to measure Z position by the shape of the fit Gaussian [11]. The Drift Correction button removes an overall displacement of the particles over time, to correct for drift in the XY Stage. Spots in the image can be rendered as individual pixels or simulated Gaussian probability density. Displayed spots can be filtered by width and intensity.

7.5 The computed results from spots and tracks can be displayed in ImageJ’s spreadsheet window. Selecting individual rows from particle-fitting results will highlight the corresponding spot in the image data set, allowing easy inspection and debugging of fitting results.
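To make the “Straighten” operation from the Tracks section more concrete, here is a minimal Python sketch of rotating a 2D track onto the X axis with Principal Component Analysis. It is an assumption about how such a step can be done, not the plugin's code: for two dimensions the first principal axis has the closed-form angle θ = ½·atan2(2·s_xy, s_xx − s_yy), and the track is centered on its mean and rotated by −θ.

```python
import math


def straighten(track):
    """Rotate a list of (x, y) positions so its main axis lies along X.

    Returns new (along, across) coordinates relative to the track centroid.
    """
    n = len(track)
    mean_x = sum(x for x, _ in track) / n
    mean_y = sum(y for _, y in track) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in track) / n
    syy = sum((y - mean_y) ** 2 for _, y in track) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in track) / n
    # Orientation of the first principal component of the 2x2 covariance matrix.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    c, s = math.cos(theta), math.sin(theta)
    return [((x - mean_x) * c + (y - mean_y) * s,
             -(x - mean_x) * s + (y - mean_y) * c)
            for x, y in track]


if __name__ == "__main__":
    # Hypothetical track of a motor moving along a diagonal filament.
    diagonal = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
    for along, across in straighten(diagonal):
        print(f"{along:+.3f} {across:+.3f}")
```

After straightening, the first coordinate measures displacement along the filament and the second measures sideways excursions, which is usually the decomposition of interest for motor tracks.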

Hardware Synchronization

A central capability of μManager is Multi-Dimensional Acquisition (MDA), which allows image sets to be acquired at multiple XY positions, Z slices, time points, and channels. Conventionally, MDA is accomplished by sending commands from the computer to the devices each time a change (in, e.g., stage position or illumination) is required. This communication can add unnecessary latency (up to 100 ms) between image frames. In the case of a time series, the timing to issue commands to the devices and camera is controlled by the application software, a method that cannot consistently produce accurate timings on a standard desktop operating system. The resulting timing jitter can be on the order of tens of milliseconds, unacceptable for fast time series.

Much faster and accurately timed operation is possible with most cameras (when acquiring a preset sequence of frames) as well as many other devices (when executing a pre-programmed sequence of commands). μManager’s built-in hardware synchronization support can take advantage of these capabilities to perform fast MDA, such as a fast Z stack with a piezo stage or fast multi-channel imaging with lasers shuttered by acousto-optical tunable filters (AOTFs). From the microscope user’s perspective, this is done seamlessly, such that μManager’s MDA engine automatically delegates control to hardware when possible.

Synchronization between the camera and the other devices is achieved by routing TTL (Transistor-Transistor Logic) pulses over signal cables. In the configuration currently supported by μManager, the camera, operated in sequence acquisition mode, acts as the timing-generating device, sending out TTL pulses at each exposure. The pulses are sent (typically via BNC cable) to a sequencing device, which may be standalone or built into a stage or illumination controller. Upon each pulse, the sequencing device advances the hardware to the correct state for the next exposure, based on a sequence of positions or illumination settings uploaded ahead of time.

Setup

Because synchronization is driven by the camera’s clock, devices must respond rapidly compared to the exposure time. Devices currently supporting sequencing with μManager include:

Standalone micro-controllers (can be attached to other equipment):

Arduino microcontroller boards with μManager’s custom firmware, for controlling devices via TTL and Z stages by analog voltages.

ES Imaging microcontrollers, built for μManager compatibility, that can control AOTF-based laser combiners, TTL-controllable illuminators, and piezo Z stages whose position can be controlled by an external analog voltage signal.

Micro-controllers built into other equipment:

Agilent’s MLC400 series Monolithic Laser Combiner, for laser channel switching

ASI’s MS-2000 series multi-axis stage controllers, for sequencing piezo Z stage positions

Märzhäuser TANGO series controllers for positioning Märzhäuser’s piezo Z stages

Specific instructions for setting up each of these devices for hardware triggering can be found on the μManager website.

Many scientific cameras provide a TTL output signal synchronized with individual frame exposures. In one typical configuration, the camera trigger output provides a TTL HIGH signal while a frame is exposing, and TTL LOW between exposures (during the dead time between frames).

The hardware triggering setup is as follows:

Connect the camera and the sequencing controller to the computer and use μManager’s Hardware Configuration Wizard to generate a configuration setup that includes both devices. If the sequencer controls a third device (such as a piezo Z stage or laser combiner), ensure that this device is also connected with the appropriate cables.

Use μManager’s Snap and Live modes to confirm that the camera can acquire images. Open the Device/Property browser and confirm that the controller’s properties are available and can be set to different values. For example, the Arduino microcontroller device adapter exposes a set of “TTL output” properties that can be set “On” and “Off”. If the Arduino is controlling an illuminator, then changing these properties should result in turning the different illumination channels on and off.

Enable “Sequencing” in the sequencing device. All sequencing device adapters have a property (usually called “Sequencing” or something similar) that, when “On,” allows μManager to upload command sequences to the sequencing device. To ensure that Sequencing is “On” by default, edit (or create) the “System” configuration group so that it includes the “Sequencing” property and contains a preset named “Startup”, in which “Sequencing” is set to “On”.

μManager is now ready to run a hardware-triggered MDA sequence. μManager’s MDA engine creates a triggered or partially triggered acquisition sequence by looking for channel switching and slice switching events that can be triggered by hardware and then generates trigger sequences. For example, an acquisition could require two channels (call them A and B), and a 3-slice Z stack (1, 2, and 3): in this case, the acquisition engine will produce a 6-event trigger sequence (A1, B1, A2, B2, A3, B3). If non-triggerable changes are required, the MDA engine falls back to software-controlled operation. Hardware triggering is enabled when these conditions are satisfied:

4.1 For multiple channels, check “Channels”, select a “Channel group”, and add two or more channels to the list of channels. For hardware triggering, the property changes necessary to switch between channels must all be triggerable properties, and the exposure time for each channel must be the same.

4.2 To include a Z stack, check the “Z-stacks (slices)” box and enter a Start, End, and Step Size in microns. For hardware triggering, μManager’s current default Z stage must be a triggerable piezo stage.

4.3 Activate Time Points, Multiple Positions, and Autofocus as needed. Choose an Acquisition Order “Slices, Channels” for channels to be switched at each Z slice, or “Channels, Slices” for a Z stack to be obtained for each channel. Multiple Positions and Autofocus are not hardware-triggered (but a sub-sequence of Z slices or channels at each position can be hardware-triggered).

Click “Acquire!” We expect one image plane (a monochrome image for a particular channel and slice) acquired at every camera exposure. As each image arrives from the camera, the acquisition engine records metadata with the appropriate Channel, Slice, and Frame tags. The multi-dimensional image viewer then uses those tags to display the image planes correctly.
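The ordering of events in a triggered sequence, as in the A1, B1, A2, B2, A3, B3 example from this section, can be reproduced with a small Python sketch. It mimics only the ordering and the equal-exposure condition stated in the text; it does not reflect the acquisition engine's internals, and the function names are hypothetical.

```python
def trigger_sequence(channels, slices, order="Slices, Channels"):
    """Return the list of channel/slice events in acquisition order.

    "Slices, Channels": channels are switched at each Z slice.
    "Channels, Slices": a full Z stack is taken for each channel.
    """
    if order == "Slices, Channels":
        return [f"{ch}{sl}" for sl in slices for ch in channels]
    if order == "Channels, Slices":
        return [f"{ch}{sl}" for ch in channels for sl in slices]
    raise ValueError(f"unknown acquisition order: {order}")


def channels_can_be_triggered(exposures_ms):
    """Hardware-triggered channel switching needs equal exposure times."""
    return len(set(exposures_ms)) <= 1


if __name__ == "__main__":
    print(trigger_sequence(["A", "B"], [1, 2, 3]))
    print(trigger_sequence(["A", "B"], [1, 2, 3], order="Channels, Slices"))
    print(channels_can_be_triggered([10.0, 10.0]))
```

Because the camera emits one TTL pulse per exposure, the length of this list is also the number of frames the camera is asked to acquire in one uninterrupted sequence.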

Multiple Cameras

Imaging multiple fluorescence channels simultaneously is advantageous for studying fast dynamic processes. The use of filter changers introduces a delay between images from different channels. To overcome this limitation, dichroic mirrors can be used to project two images side-by-side onto the same camera sensor (commercial devices using this approach are the Dual-View from Photometrics and the Optosplit from Cairn Research), which are then separated into individual channels in software using μManager’s SplitView plugin. Alternatively, μManager can acquire different channels from separate, synchronized cameras, through the Multi Camera virtual camera device, providing a larger field of view. To use this functionality, the cameras need to have the same number (rows and columns) of pixels and the same number of bytes per pixel (i.e. the size of the images in the computer’s memory needs to be identical). Multi-camera setup is as follows:

After addition of two or more physical cameras to your configuration (or two virtual “Demo Cameras” for testing), add the Multi Camera virtual camera device (from the “Utilities” device group) to your current configuration using μManager’s Hardware Configuration Wizard. The Multi Camera virtual device’s purpose is to broadcast the commands it receives to multiple cameras, and to pass any acquired images from its child cameras back to the application.

In the main μManager window, edit the System configuration group, and add the Core-Camera property and the Camera1/Camera2/Camera3 properties from the Multi Camera device. Then edit the System-Startup configuration preset, and set Core-Camera to “Multi Camera” and the Camera properties to the appropriate physical cameras. It is also convenient to add an extra “Camera” configuration group, with the sole property “Core”-“Camera”. This will produce a drop-down menu on the main window that allows you to choose between imaging from either of the individual physical cameras or Multi Camera mode.

Now the exposure and binning settings will be propagated from Multi Camera to the physical cameras whenever they are set from the user interface. Focus on a sample with particles that can be imaged in two channels (for example, TetraSpeck beads from Invitrogen or “Full Spectrum” from Bangs Labs). Turn on live mode to observe how the two cameras are overlaid as a pair of channels.

It may be that the image from one camera is mirrored, or physical constraints prevent a camera from being rotated by 90 degrees. In this case, open the “Image Flipper” Plugin, turn on Live mode, and experiment with rotations and flipping until the images are properly aligned. Once the orientation is correct, perform fine adjustments to the two cameras to get their images precisely registered.

Open the Multi-Dimensional Acquisition window, and set up a two-channel acquisition (for example, Cy3 and Cy5). Create a 10-frame sequence with no time interval between frames, and press the “Acquire!” button. Multi-channel, multi-camera acquisitions produce an image set with several synthetic channels. These synthetic channels will have names combining the name of the camera with the name of the physical channel, e.g.: “Camera1-Cy3”, “Camera2-Cy3”, “Camera1-Cy5”, “Camera2-Cy5”.

To get perfect (sub-pixel) registration between the images on the two cameras, image registration software will almost invariably be necessary. To facilitate such registration, download and install the ImageJ plugin “GridAligner” [12], which uses a calibration image to automatically deduce the optimal affine transform function and applies the transform to other experimental image sets.
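The naming of synthetic channels in a multi-camera acquisition, and the requirement that all cameras deliver images of identical size, can be summarized in a few lines of Python. This sketch only reproduces the naming pattern and the size rule given in this section; the function names and the tuple layout for image formats are assumptions.

```python
def synthetic_channel_names(cameras, channels):
    """Combine camera and physical channel names, e.g. 'Camera1-Cy3'."""
    return [f"{camera}-{channel}" for channel in channels for camera in cameras]


def cameras_are_compatible(formats):
    """Check that all cameras share one (width, height, bytes_per_pixel) format."""
    return len(set(formats)) == 1


if __name__ == "__main__":
    print(synthetic_channel_names(["Camera1", "Camera2"], ["Cy3", "Cy5"]))
    print(cameras_are_compatible([(512, 512, 2), (512, 512, 2)]))
```

With two cameras and two physical channels the data set therefore contains four synthetic channels per time point.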

Phototargeting

The Projector plugin enables the use of light projection devices that beam light through the microscope objective to phototarget specimens. Phototargeting can be used for photobleaching (e.g., for Fluorescence Recovery After Photobleaching (FRAP) observations), photoactivation and photoswitching of fluorescent proteins and inorganic dyes, and photoablation. Devices intended for use with the Projector plugin include (1) spatial light modulators (SLMs) including digital mirror devices (DMDs) and liquid crystal on silicon (LCoS) chips, and (2) galvanometric mirror-based laser targeters. In the past year two galvo mirror devices, the Andor MicroPoint and the Rapp UGA-40, were integrated into μManager.

The following protocol describes the setup and use of μManager’s phototargeting functionality.

NOTE: As with any microscope illumination hardware, eye safety is a concern, and eye protection appropriate for the brightness and color of the illumination light should be worn, particularly during initial installation and setup.

After the hardware device has been installed, use the Hardware Configuration Wizard to add the phototargeting device to μManager’s configuration settings. A list of supported devices can be found on the μManager website.

Place a slide for testing and calibrating the phototargeting device on the microscope stage. Lower-power devices intended for photobleaching or photoswitching fluorescent dyes can be calibrated using a uniformly autofluorescent plastic slide such as those from Chroma [13]. High-power devices used for photoablation can be calibrated using a metal-coated mirror slide, which is ablated at each point visited by the illumination, producing a hole visible under brightfield illumination.

Start the Projector plugin. The plugin window contains three tabs for phototargeting control: “Point and Shoot”, “ROIs”, and “Setup.” Choose the Setup tab first, and click the “On” and “Off” buttons. The illumination should turn on and off, and may be visibly emitted at the objective. Galvo mirror-based devices will exhibit a single illumination spot, while SLMs will illuminate a large region. Once the illumination is on, enable μManager’s live mode and ensure that the illumination is visible on the camera. Press the “Center” button to illuminate a small region at the center of the phototargeter’s two-dimensional range. Adjust the mounting of the phototargeting hardware to roughly center the illumination pattern on the camera image. To avoid over-exposing the camera with fluorescence from a test slide, it may be necessary to reduce illumination power, reduce camera exposure time, or switch to a dichroic that transmits only a small part of the emitted light.

Adjust the optics to tighten the focus of the spot on the test slide. Plastic fluorescent slides, due to their thickness, will exhibit a “halo” around the intended pattern, even when it is in focus. Focusing the spot just beyond the surface of the test slide usually minimizes the halo pattern.

Once the illumination pattern has been properly aligned, the camera’s pixel coordinates need to be mapped to the phototargeter’s coordinate system, which consists of galvo mirror angles or SLM pixel coordinates. Press the “Calibrate” button in the Projector plugin’s “Setup” tab. The calibration procedure starts by illuminating a few test spots sequentially near the center of the phototargeting range. Each spot is imaged, and its position is automatically measured by the plugin. A successful measurement will be indicated by a small “+” overlaid on the spot. This initial test generates a rough linear estimate of the relation between camera pixels and phototargeting coordinates. Next, a series of spots in a square lattice is illuminated one by one across the camera field of view and automatically located. Following these measurements, an affine transformation matrix is computed for each cell in the lattice, and the set of matrices is stored as a detailed non-linear coordinate map between the camera and the phototargeting device.
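The idea of a piecewise coordinate map built from per-cell affine transforms can be sketched as follows. This is an illustrative simplification in plain Python, not the plugin’s actual implementation: each cell’s transform is derived exactly from three of its four corners, the cell for a given camera pixel is chosen by nearest centroid, and all function names and grid values are hypothetical.

```python
def affine_from_three(cam, dev):
    """Exact affine map taking three camera points onto three device points.

    Returns (m, cam0, dev0) with dev = dev0 + m * (cam - cam0).
    """
    (x0, y0), (x1, y1), (x2, y2) = cam
    (u0, v0), (u1, v1), (u2, v2) = dev
    # Edge vectors of the corner triangle in camera and device space.
    ax, ay, bx, by = x1 - x0, y1 - y0, x2 - x0, y2 - y0
    pu, pv, qu, qv = u1 - u0, v1 - v0, u2 - u0, v2 - v0
    det = ax * by - bx * ay
    if abs(det) < 1e-12:
        raise ValueError("camera points are collinear")
    # m = N * inverse(M), where M = [a b] and N = [p q] as column matrices.
    m = (((pu * by - qu * ay) / det, (qu * ax - pu * bx) / det),
         ((pv * by - qv * ay) / det, (qv * ax - pv * bx) / det))
    return m, (x0, y0), (u0, v0)


def build_map(camera_grid, device_grid):
    """One (centroid, transform) entry per lattice cell."""
    cells = []
    for i in range(len(camera_grid) - 1):
        for j in range(len(camera_grid[0]) - 1):
            corners = [(i, j), (i + 1, j), (i, j + 1), (i + 1, j + 1)]
            cam = [camera_grid[r][c] for r, c in corners]
            dev = [device_grid[r][c] for r, c in corners]
            centroid = (sum(p[0] for p in cam) / 4, sum(p[1] for p in cam) / 4)
            cells.append((centroid, affine_from_three(cam[:3], dev[:3])))
    return cells


def camera_to_device(cells, point):
    """Map a camera pixel using the transform of the nearest cell."""
    x, y = point
    _, (m, (x0, y0), (u0, v0)) = min(
        cells, key=lambda c: (c[0][0] - x) ** 2 + (c[0][1] - y) ** 2)
    dx, dy = x - x0, y - y0
    return (u0 + m[0][0] * dx + m[0][1] * dy,
            v0 + m[1][0] * dx + m[1][1] * dy)


# Hypothetical 3x3 lattice: device coordinates of the illuminated spots
# and the camera pixel positions at which they were located.
device_grid = [[(100.0 * j, 100.0 * i) for j in range(3)] for i in range(3)]
camera_grid = [[(10.0 + 50.0 * j, 20.0 + 50.0 * i) for j in range(3)]
               for i in range(3)]
cells = build_map(camera_grid, device_grid)
print(camera_to_device(cells, (35.0, 70.0)))
```

Because each cell carries its own transform, the combined map can follow optical distortions that a single global affine transform cannot, at the cost of small discontinuities at cell borders.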

We can now test the results of the calibration procedure. Enable μManager’s live mode, switch to the “Point and Shoot” tab, and press the Point and Shoot “On” button. If prompted, enter an “illumination interval” for the spot. While holding down the Control key, click somewhere on the live image. An illumination spot should appear at the position of the mouse click for the requested interval. Try clicking in a number of places to confirm that the calibration is correct; if there appear to be errors, try improving the illumination and repeat the previous calibration step.

Point and Shoot mode can be very useful for many experiments, such as FRAP. On a live specimen, switch to a dichroic cube that allows simultaneous imaging illumination and phototargeting illumination. Using the Multi-Dimensional Acquisition dialog, start a fast acquisition (zero interval between frames). Now “Point and Shoot” can be triggered simultaneously with imaging, and the immediate response to phototargeting can be observed and recorded.

Using the calibration slide, switch to the “ROIs” tab. Choose the “polygon ROI” tool in the ImageJ toolbar window, and then draw an ROI on the live image. Next, in the Projector Controls, click the “Set ROIs” button to send the ROI to the phototargeting device. When transmission succeeds, the message “One ROI submitted” should appear. Set “Loop” to 1 “times” and “Spot dwell time” to 500 ms. Pressing “Run ROIs now!” will illuminate the ROI (galvo-based devices will raster across the ROI, while SLMs will turn on all pixels inside the ROI). For multiple ROIs, use ImageJ’s “ROI Manager.”
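The difference between the two device types can be made concrete with a small sketch: a polygon ROI is converted either into a binary mask (all pixels inside the ROI switched on, as for an SLM) or into an ordered list of positions to visit (as for a galvo-based device). This is a generic Python illustration under our own assumptions; the serpentine scan order and the function names are hypothetical and do not describe the plugin’s actual raster pattern.

```python
def point_in_polygon(x, y, vertices):
    """Even-odd ray casting test for a point against a closed polygon."""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can cross.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def roi_mask(vertices, width, height):
    """SLM-style mask: 1 for every pixel whose center lies inside the ROI."""
    return [[1 if point_in_polygon(col + 0.5, row + 0.5, vertices) else 0
             for col in range(width)]
            for row in range(height)]


def raster_path(mask):
    """Galvo-style serpentine path over the masked pixels, as (col, row)."""
    path = []
    for row, line in enumerate(mask):
        cols = [c for c, value in enumerate(line) if value]
        if row % 2:
            cols.reverse()  # alternate direction on every other row
        path.extend((c, row) for c in cols)
    return path


# Hypothetical square ROI drawn on a 6 x 6 pixel image.
roi = [(1, 1), (4, 1), (4, 4), (1, 4)]
mask = roi_mask(roi, 6, 6)
path = raster_path(mask)
for line in mask:
    print(line)
print(path)
```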

After ROIs have been submitted, you can attach phototargeting events to a multi-dimensional acquisition. Check the “Run ROIs in Multi-Dimensional Acquisition” checkbox, and specify the frame (time point) at which phototargeting should occur. You can also require that ROIs are repeatedly illuminated in later frames.

Troubleshooting

Since these protocols can be executed with many different hardware components, individual problems are hard to predict. However, support for problems encountered while using the software is available in two forms:

μManager has a built-in problem reporting mechanism (μManager menu Help > Report a problem…). Use this to send complete documentation of the issue to the μManager developers. They will respond by email and help to figure out the problem.

The μManager mailing list [14] has about 1000 subscribers, some of whom may have experienced the same problem and are willing to help. The mailing list can be found at: https://micro-manager.org/wiki/Micro-Manager%20Community

Acknowledgments

Thanks to Pariksheet Nanda of Andor Corporation and Andre Ratz, Simona Stelea, and Gert Rapp (Rapp Optical) for extensive help with debugging the Projector plugin; to Max Krummel of UC San Francisco for help with debugging the Slide Explorer; and to Kurt Thorn for extensive help with hardware triggering and general debugging of μManager and the Slide Explorer. Norman Glasgow (Andor), Patrick Gregorio (Hamamatsu), James Butler (Hamamatsu), George Peeters (Solamere), and Kurt Thorn (UCSF) helped with debugging high-speed acquisition. This work was supported by grant R01EB007187 from the NIH (NIBIB).

Footnotes

Competing interests: A.D.E. is now self-employed and provides Micro-Manager related programming services.

References

- 1. ImageJ website. NIH; Wayne Rasband. Cited on August 18, 2014. http://imagej.nih.gov/ij

- 2. Micro-Manager website. Micro-Manager team in Ron Vale lab, Dept. of Cellular and Mol. Pharm. University of California; San Francisco: Cited on August 18, 2014. http://micro-manager.org

- 3. Edelstein A, Amodaj N, Hoover K, Vale R, Stuurman N. Computer control of microscopes using μManager. Curr Protoc Mol Biol. 2010;14. doi: 10.1002/0471142727.mb1420s92.

- 4. Devices supported in μManager. Micro-Manager team in Ron Vale lab, Dept. of Cellular and Mol. Pharm. University of California; San Francisco: Cited on August 18, 2014. http://micro-manager.org/wiki/DeviceSupport

- 5. Tomer R, Khairy K, Amat F, Keller PJ. Quantitative high-speed imaging of entire developing embryos with simultaneous multiview light-sheet microscopy. Nature Methods. 2012;9:755–763. doi: 10.1038/nmeth.2062.

- 6. Huang ZL, Zhu H, Long F, Ma H, Qin L, et al. Localization-based super-resolution microscopy with an sCMOS camera. Opt Express. 2011;19:19156–19168. doi: 10.1364/OE.19.019156.

- 7. Overview of Localization Microscopy Software. Cited on October 24, 2014. http://bigwww.epfl.ch/smlm/software/

- 8. Instructions and scripts to measure camera specifications. Nico Stuurman on the Micro-Manager website, Ron Vale Lab, Dept. of Cellular and Mol. Pharm. University of California; San Francisco: Cited on August 18, 2014. http://micro-manager.org/wiki/Measuring_camera_specifications

- 9. Description of the Tagged Spot Format. Nico Stuurman on the Micro-Manager website, Ron Vale Lab, Dept. of Cellular and Mol. Pharm. University of California; San Francisco: Cited on August 18, 2014. http://micro-manager.org/wiki/Tagged_Spot_File_%28tsf%29_format

- 10. Goshtasby A. Image registration by local approximation methods. Image and Vision Computing. 1988;6:255–261. doi: 10.1016/0262-8856(88)90016-9.

- 11. Huang B, Wang W, Bates M, Zhuang X. Three-dimensional super-resolution imaging by stochastic optical reconstruction microscopy. Science. 2008;319:810–813. doi: 10.1126/science.1153529.

- 12. ImageJ plugin GridAligner. Nico Stuurman in Ron Vale Lab, Dept. of Cellular and Mol. Pharm. University of California; San Francisco: Cited on August 18, 2014. http://valelab.ucsf.edu/~nstuurman/IJplugins/GridAligner.html

- 13. Diagnostic slides from Chroma. Chroma Technology Corp; Cited on August 18, 2014. http://www.chroma.com/products/filter-accessories/diagnostic-slides

- 14. Micro-Manager mailing list. Sourceforge. Cited on August 18, 2014. https://lists.sourceforge.net/lists/listinfo/micro-manager-general