Abstract

Performance is often improved when targets are presented in space near the hands rather than far from the hands. Performance in hand-near space may be improved because participants can use proprioception from the nearby limb and hand to provide a narrower and more resolute frame of reference. An equally compelling alternative is that targets appearing near the hand fall within the receptive fields of visual-tactile bimodal cells, recruiting them to assist in the visual representation of targets that appear near but not far from the hand. We distinguished between these two alternatives by capitalizing on research showing that vision and proprioception have differential effects on the precision of target representation (van Beers RJ, Sittig AC, Denier van der Gon JJ. Exp Brain Res 122: 367–377, 1998). Participants performed an in-to-center reaching task to an array of central target locations with their right hand, while their left hand rested near (beneath) or far from the target array. Reaching end-point accuracy, variability, time, and speed were assessed. We predicted that if proprioception contributes to the representation of hand-near targets, then error variability in depth will be smaller in the hand-near condition than in the hand-far condition. By contrast, if vision contributes to the representation of hand-near targets, then error variability along the lateral dimension will be smaller in the hand-near than in the hand-far condition. Our results showed that the placement of the hand near the targets reduced end-point error variability along the lateral dimension only. The results suggest that hand-near targets are represented with greater visual resolution than far targets.

Keywords: peripersonal space, sensory resolution, multisensory integration, frames of reference, visual processing, motor control, attention

A growing body of evidence suggests that people treat visual stimuli that appear near their hands, in hand-near space, differently than stimuli appearing away from their hands. Neuropsychological studies report that patients with impaired attention can indicate the presence of a target in their weak, contralesional visual field if their hand is placed near the target (di Pellegrino and Frassinetti 2000; Làdavas et al. 1998). Participants with cortical blindness are able to detect (Schendel and Robertson 2004) and determine the size (Brown et al. 2008) of visual items presented in the blind field when a hand is placed near (but not touching) the location where these items will appear. Studies of healthy participants suggest that people can detect hand-near visual targets more quickly (Reed et al. 2006, 2010), shift their attention (Abrams et al. 2008) and eyes (Thura et al. 2008) away from hand-near targets more slowly, and represent hand-near targets more reliably for action (Brown et al. 2009) compared with targets appearing away from the hand. Although some demonstrations of hand-near effects suggest that people need to be able to see their nearby hand together with the target (di Pellegrino and Frassinetti 2000; Farne et al. 2000), especially those in patient populations with acquired attention disorders, such as extinction and neglect, other demonstrations suggest that vision of the hand is not a requirement (Brown et al. 2008, 2009; Reed et al. 2006). Finally, there is no consensus on the possibility that hand-near effects in humans can be driven consistently by vision of a fake hand (Brown et al. 2009; Farne et al. 2000; Pavani et al. 2000). Nonetheless, these studies have inspired questions and hypotheses concerning the source of hand-near effects and the role that vision and proprioception may play in their appearance.

What is the source of visual-processing advantages in hand-near space? One candidate hypothesis is that performance in hand-near space may be improved because participants can combine proprioceptive information from the nearby hand with visual information about the target to provide a narrower and more resolute frame of reference within which to map target location proprioceptively. Participants may be better able to localize the target when it appears near a hand because it provides an opportunity to represent its location as both a visual location and as a potential posture. Research suggests that whereas vision and proprioception each make unique contributions to target localization, target representations resulting from the availability of both visual and proprioceptive information have better resolution than what would be predicted by simply overlapping or summing the contributions of vision and proprioception alone (Van Beers et al. 1999). Therefore, when a target is presented near the hand, the possible addition of computed proprioceptive information may allow for a more robust and precise representation of target location, supporting the performance benefits associated with hand-near space.

An equally compelling alternative, however, is that presenting targets in hand-near space may improve the resolution of the visual representation of the target by recruiting neurons responsible for integrating visual and tactile information. Neurophysiological evidence in monkeys describes visual-tactile (bimodal) neurons that respond to touch stimuli on the hand or visual stimuli on or near the hand (Graziano 1999; Graziano and Gandhi 2000; Graziano et al. 1994). Bimodal neurons have tactile receptive fields (tRFs) on the skin of the hand and visual receptive fields (vRFs) that overlap and extend beyond the tRF into the space that surrounds the hand. The vRF is anchored to the hand such that it follows the hand as it moves (even when the monkey cannot see its arm) (Graziano 1999). Finally, bimodal neuron firing rates reflect the distance between the visual stimulus and hand (Graziano 1999; Graziano and Gandhi 2000; Graziano et al. 1994). Functional imaging studies in humans show that targets appearing near a hand selectively activate regions of the intraparietal sulcus (IPS) (Makin et al. 2007), supramarginal gyrus (SMG), and both the dorsal and ventral premotor cortex (PMd and PMv, respectively) compared with targets appearing far from the hand (Brozzoli et al. 2011; Gentile et al. 2011). These features suggest that bimodal neurons can be recruited by and code the presence of visual stimuli that appear near the hand.

van Beers et al. (1998, 1999) described the unique contributions that vision and proprioception make to target localization. van Beers et al. (1998) asked participants to localize targets that were defined by vision (participants matched the position of a visually presented target with their unseen right hand or their unseen left hand) or proprioception (participants matched the position of their unseen right fingertip with their unseen left hand). When target location was defined by vision, localization was more precise along the lateral dimension than in depth. This finding is in keeping with our general understanding that lateral position is vision's strongest dimension. Whereas lateral and vertical dimensions are represented directly on the retina, depth must be computed from oculomotor, binocular, and pictorial cues. By contrast, when target location was defined by proprioception, localization was more precise in depth than along the lateral dimension. In a follow-up study, van Beers et al. (1999) developed and supported a model of sensory integration, based on the finding that vision and proprioception make differential contributions to the precision of target representations. Thus these dimension-dependent reductions in target-localization variability reflect the relative strengths and weaknesses of the visual and upper-limb proprioceptive signals, and they combine in a way that optimizes the precision of target representation (van Beers et al. 1999).
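The logic of dimension-dependent precision can be illustrated with a small numerical sketch. It assumes, as a simplification that may not match the exact model of van Beers et al. (1999), that vision and proprioception provide independent Gaussian estimates of target position and that they are combined with minimum variance, so that the combined covariance is the inverse of the summed inverse covariances. The covariance values and all function names are hypothetical and chosen only for illustration.

```python
# Illustrative sketch only: the variances below are invented, not study data.

def inv2(m):
    """Invert a 2x2 matrix given as ((a, b), (c, d))."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((d / det, -b / det), (-c / det, a / det))

def add2(m, n):
    """Element-wise sum of two 2x2 matrices."""
    return tuple(tuple(x + y for x, y in zip(r, s)) for r, s in zip(m, n))

def combine(cov_a, cov_b):
    """Covariance of the minimum-variance combination of two independent
    Gaussian estimates: (cov_a^-1 + cov_b^-1)^-1."""
    return inv2(add2(inv2(cov_a), inv2(cov_b)))

# Axis 0 = lateral, axis 1 = depth; variances in cm^2 (hypothetical).
cov_vision = ((0.5, 0.0), (0.0, 2.0))   # precise laterally, imprecise in depth
cov_proprio = ((2.0, 0.0), (0.0, 0.5))  # imprecise laterally, precise in depth

cov_both = combine(cov_vision, cov_proprio)
lateral_var, depth_var = cov_both[0][0], cov_both[1][1]
print(f"vision only:      lateral={cov_vision[0][0]:.2f}, depth={cov_vision[1][1]:.2f}")
print(f"vision + proprio: lateral={lateral_var:.2f}, depth={depth_var:.2f}")
```

With these invented values, adding the proprioceptive estimate to a visually defined target lowers the depth variance from 2.0 to 0.4 cm² but the lateral variance only from 0.5 to 0.4 cm². In other words, the added signal helps most along the dimension in which it is most precise.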

We capitalized on the results of van Beers et al. (1998, 1999) to design a test that may determine whether placing a hand near the target improved the resolution of the visual representation of the target (perhaps due to the recruitment of bimodal neurons) or if placing a hand near the target allowed participants to represent the target within an additional proprioceptive frame of reference (perhaps by computing a posture congruent with the target location). We reasoned that if presenting targets near a hand improves the visual representation of the target by recruiting visual-tactile bimodal cells to represent the target, then pointing precision should be improved along the lateral dimension more than in depth when targets are presented near the hand. By contrast, if proprioception contributes to hand-near target representations, because hand-near targets can be represented proprioceptively, then pointing precision should be improved in depth more than along the lateral dimension when targets are presented near the hand.

Participants used their right hand to make pointing movements from start positions arranged around the circumference of a circle to a central target location. The central target location was jittered trial to trial to prevent participants from learning its exact coordinates. We manipulated whether participants' own left hand was placed near (beneath the target array) or far (on their lap) from the target display, and we measured end-point variability along the lateral dimension and in depth. The in-to-center target arrangement controlled for movement direction-dependent biases in end-point variability (Gordon et al. 1994a, b) and reduced the likelihood that hand-presence effects are attributable to the hand attracting attention [e.g., Jackson et al. (2010)], since the target appeared predictably in the center of the workspace (although not in the exact same location) on every trial regardless of hand position. Given our hypotheses above, we predicted that if the presentation of targets near the hand influences the visual representation of the target, then end-point variability along the lateral dimension will be reduced to a greater extent than variability in depth in the hand-near condition compared with the hand-far condition. We predicted that if proprioception contributes to hand-near target representations, then end-point variability in depth will be reduced to a greater extent than lateral variability in the hand-near condition. The two experiments presented below differ only in the extent to which participants see their hand present in the display. In Experiment 1, participants see their hand being placed in the display at the beginning of every block of hand-present trials. To control for a potential role for visual memory, in Experiment 2, participants viewed their hand in the display at the beginning of the experiment only.

EXPERIMENT 1

Methods

Participants.

Fifty-six right-handed undergraduate students (mean age 19.0 ± 1.2 yr; range 18–26 yr) participated in this research for extra credit or remuneration. All participants reported being right handed (Van Strien 1992), with normal or corrected-to-normal vision and no history of any neurological or musculoskeletal disorder. All were naïve to the purpose of the study. Twenty-eight (24 women) and 28 (21 women) people participated in the first and second experiments, respectively. No one participated in both experiments. The Trent University Research Ethics Board approved all procedures, and each participant gave written, informed consent before participation.

Apparatus.

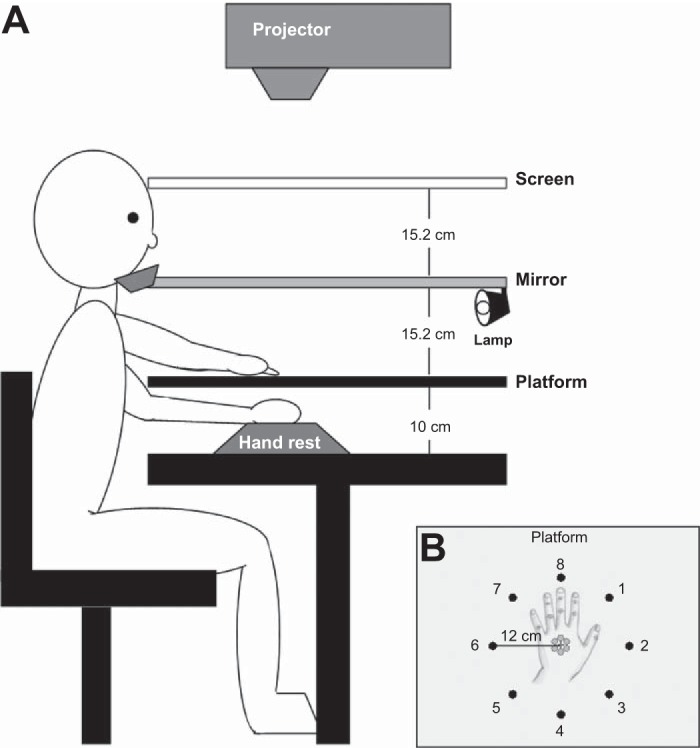

Participants sat at a table whose working surface (71 × 51 cm) was 85 cm from the floor (see Fig. 1A). A projector (Optoma DLP EP739; Optoma Technology, Mississauga, ON, Canada), a white-cloth projection screen, a semisilvered mirror, and a reaching platform were arranged, such that visual targets presented on the screen above the mirror appeared to be presented below the mirror on the platform. The platform was suspended 10 cm above the table surface. A formed foam handrest was placed below the platform, such that when the left hand was placed on the handrest, the dorsal surface of the hand rested 2 cm below the surface of the platform. A second function of the handrest was to standardize the location of the left hand when it was placed beneath the display.

Fig. 1.

Experimental setup. A: the arrangement of the screen, mirror, and platform surfaces relative to the participant, projector, and handrest. B: the arrangement of the potential start (black) and target positions (gray). Start and target images were projected onto the screen but appeared to be on the platform. The platform was opaque and appears to be transparent here for illustration purposes only. The hand was placed beneath the platform and was not visible to the participants. An outline of its position relative to the targets and start positions is shown here.

The display system was used to present reaching start and target locations to participants. Start locations and targets were viewed from an average viewing distance of 27 cm and subtended 1.9° (0.9 cm diameter) on average. All were gray circles presented on a black background. The centers of the eight start positions were arranged around the circumference of a virtual circle with a 12-cm radius. The centers of the six target locations were arranged around a virtual circle with a 1-cm radius. Multiple target locations were used to prevent participants from learning the coordinates of a single target location. This start-to-target arrangement controlled for movement direction-dependent biases in end-point variability (Gordon et al. 1994a, b). All displays were created in MATLAB (MathWorks, Natick, MA) using the Psychophysics Toolbox (version 3.0.8) (Brainard 1997; Pelli 1997).
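The display geometry described above can be checked with a few lines of code. The sketch below is not the authors' MATLAB display code. It assumes that start and target positions were equally spaced around their circles and that the first position lies at an arbitrary angular origin, neither of which is stated in the text. The function names are hypothetical.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by an object of the given size."""
    return math.degrees(2 * math.atan((size_cm / 2) / distance_cm))

def ring_positions(n, radius_cm, offset_deg=0.0):
    """n points equally spaced on a circle centred on the display origin.

    Returns (lateral, depth) coordinates in cm. Equal spacing and the
    angular origin are assumptions made for this illustration.
    """
    points = []
    for k in range(n):
        theta = math.radians(offset_deg + 360.0 * k / n)
        points.append((radius_cm * math.cos(theta), radius_cm * math.sin(theta)))
    return points

angle = visual_angle_deg(0.9, 27.0)   # 0.9-cm circle viewed from 27 cm
starts = ring_positions(8, 12.0)      # eight start positions, 12-cm radius
targets = ring_positions(6, 1.0)      # six target positions, 1-cm radius
print(f"visual angle: {angle:.2f} deg")
```

The computed angle is close to the 1.9° reported for a 0.9-cm circle at a 27-cm viewing distance.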

A motion-tracking system (Liberty; Polhemus, Burlington, VT) was used to track the location of the pointing finger over time during reaching. A single marker was taped to the nail of the participant's right index finger. All motion-tracking data were collected (100 Hz) using the prok-liberty toolbox for MATLAB (http://www.prokopenko.org/liberty.html) on a personal computer. All data were stored for later analysis offline.

Design.

The experiment used an 8 (start position) × 6 (target position) × 2 (left-hand presence: left hand near or far from the display) within-subject design. Each participant completed six blocks of 48 trials. This design resulted in 18 observations for each start position and hand condition. Start and target positions were varied randomly trial to trial, whereas left-hand position was blocked. Left-hand presence alternated between blocks, and the order was counterbalanced across subjects. On hand-present blocks, the left hand rested just below the target array, and on hand-absent blocks, the left hand rested in the participant's lap. Once testing commenced, the left hand remained unseen for the remainder of the block. All reaching movements were performed with the right hand.
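The trial counts follow directly from the design and can be verified with a short sketch. It assumes that every block contained each start-by-target combination exactly once (8 × 6 = 48 trials), which the trial counts imply but the text does not state outright. The function name, the seed, and the "near"/"far" labels are hypothetical.

```python
import itertools
import random
from collections import Counter

N_STARTS, N_TARGETS, N_BLOCKS = 8, 6, 6

def build_schedule(first_hand="near", seed=0):
    """Return a list of (block, hand, start, target) trials.

    Hand condition alternates between blocks; start and target
    positions are shuffled within each block.
    """
    rng = random.Random(seed)
    other = "far" if first_hand == "near" else "near"
    schedule = []
    for block in range(N_BLOCKS):
        hand = first_hand if block % 2 == 0 else other
        trials = list(itertools.product(range(N_STARTS), range(N_TARGETS)))
        rng.shuffle(trials)
        schedule.extend((block, hand, s, t) for s, t in trials)
    return schedule

schedule = build_schedule()
# Observations per start position within each hand condition.
per_cell = Counter((hand, s) for _, hand, s, _ in schedule)
print(len(schedule), set(per_cell.values()))
```

Under this assumption, each participant completes 288 trials, and every start position contributes 18 reaches per hand condition (6 targets × 3 blocks). Counterbalancing across participants would correspond to alternating `first_hand` between "near" and "far".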

Procedure.

ALIGNMENT.

At the beginning of each block, the platform was removed, and a small lamp was turned on under the mirror so that the participant could see the position of his or her left hand relative to the position of a projected dot representing the display center. The handrest was positioned so that the dot appeared in the center of the back of the hand. The handrest position was fixed so that on subsequent blocks the participant could remove and reposition the left hand into the same centered location without looking below the platform. This alignment procedure was repeated at the beginning of each hand-present block to allow participants to see regularly the position of their left hand in the workspace. Otherwise, the small lamp was turned off, and the left hand was unseen once reaching trials commenced and remained unseen for the duration of each block.

REACHING TASK.

The experiment was conducted in a dimly lit room. Each participant was seated at the display table (Fig. 1). Seat and chin-rest heights were adjusted so that the participant could see the entire display comfortably. The reaching task began with the presentation of the first start circle and a smaller red circle (0.95° visual angle) that acted as a cursor in that it showed the location of the right index finger in the display in real time. The participant was instructed to move the index finger so that the cursor was placed in the center of the start circle. Once the experimenter was satisfied that the participant had acquired the start circle, the participant initiated the presentation of the target by pressing a key. The start circle disappeared, and a target circle appeared simultaneously. The participant was instructed to reach to the target as quickly and as accurately as possible, without making corrections. Both the target and the cursor representing fingertip location disappeared at movement onset; the movements were performed open loop. Two seconds after target presentation, the next start position appeared, and the participant moved toward the next start position. When the finger was within 1.2 cm of the start circle (10% of the movement distance), the red cursor reappeared, allowing the participant to bring the index finger accurately into the center of the next start circle. As such, the participant did not receive nor could he or she derive visual information about movement-path error or end-point error.

Each participant completed a short practice block (10 trials) before beginning the first block. Practice was followed by six experimental blocks of 48 trials each. Participants were provided with short breaks of self-determined duration between blocks.

Data analysis.

All data analyses were conducted using MATLAB (MathWorks) and SPSS software (SPSS, IBM, Armonk, NY). Movement trajectories were selected using an algorithm in which movement initiation was defined as the time at which tangential velocity first exceeded 0.05 m/s, and movement end was defined as the first time after peak velocity (PV) that tangential velocity fell below 0.05 m/s. Reaction time (RT), movement time (MT), and PV were assessed. Movement end-point error along the lateral dimension and in depth was determined by calculating the signed difference between the target location and movement end-point along the lateral or depth dimensions, respectively. Movement end-point variability was calculated separately along the lateral dimension and in depth for each participant. It was defined as the mean absolute distance between the mean end-point to any one target and all reaches to that target within each cell of the start position by target location by hand-presence design. Each of the six dependent variables—mean signed error and end-point variability along the lateral dimension, mean signed error and end-point variability in depth, mean movement time, and mean PV—was submitted to an eight-start position by two-left-hand presence repeated-measures ANOVA (α = 0.05). Main effects of start position were decomposed using Tukey's honest significant difference post hoc tests.
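A minimal sketch of the movement-selection criteria and the variability measure described above follows. It is not the authors' MATLAB code. The 0.05 m/s criterion and the 100-Hz sampling rate come from the text, whereas the function names, the synthetic trajectory, and the example end-points are hypothetical.

```python
import math

FS = 100.0        # sampling rate in Hz (from the text)
THRESHOLD = 0.05  # tangential-velocity criterion in m/s (from the text)

def tangential_speed(xy, fs=FS):
    """Speed (m/s) between successive samples; xy holds (x, y) positions in metres."""
    return [math.dist(xy[i + 1], xy[i]) * fs for i in range(len(xy) - 1)]

def segment(speed, threshold=THRESHOLD):
    """Return (onset, peak, end) sample indices, or None if a criterion is never met."""
    onset = next((i for i, v in enumerate(speed) if v > threshold), None)
    if onset is None:
        return None
    peak = max(range(onset, len(speed)), key=lambda i: speed[i])
    end = next((i for i in range(peak + 1, len(speed)) if speed[i] < threshold), None)
    if end is None:
        return None
    return onset, peak, end

def endpoint_variability(endpoints):
    """Mean absolute deviation of end-points from their mean, along one dimension."""
    mean = sum(endpoints) / len(endpoints)
    return sum(abs(e - mean) for e in endpoints) / len(endpoints)

# Hypothetical trial: 0.2 s at rest, a smooth 12-cm reach lasting 0.5 s, 0.2 s at rest.
def smooth_step(tau):
    return 10 * tau**3 - 15 * tau**4 + 6 * tau**5

xs = [0.0] * 20 + [0.12 * smooth_step(k / 50) for k in range(51)] + [0.12] * 20
speed = tangential_speed([(x, 0.0) for x in xs])
onset, peak, end = segment(speed)
movement_time = (end - onset) / FS   # seconds
peak_velocity = speed[peak]          # m/s

# Hypothetical lateral end-points (cm) for one cell of the design.
lateral_variability = endpoint_variability([-0.4, 0.1, 0.6, -0.2, 0.3])
print(movement_time, peak_velocity, lateral_variability)
```

In a full analysis, `endpoint_variability` would be applied separately to the lateral and depth coordinates within each cell of the design, and signed error would be the difference between each end-point and its target along the same dimension.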

Results

Figure 2, A and B, shows end-point error in all conditions for all participants in the left-hand-absent and -present conditions, respectively. The ellipses represent the range containing 90% of the data in each condition and whose cardinal axes span from the fifth to the 95th percentiles for the error data along the lateral dimension and in depth. The figure also shows the target location translated to the center of error space (the 0, 0 position along the lateral dimension and in depth). Finally, the mean signed error, averaged across all start positions, is represented.

Fig. 2.

Results from Experiment 1. A and B: the end position with respect to the target location (i.e., the end-point error) for every reaching movement for all participants (∘) in the hand-far and hand-near conditions, respectively. The black crosses represent the target location, and the gray crosses show the average signed reaching error. The ellipses represent the range containing 90% of the data in each condition and whose cardinal axes span from the fifth to the 95th percentiles for the error data along the lateral dimension and in depth. C and D: mean variability along the horizon and in depth, respectively, as a function of hand presence. E and F: mean movement time (MT), and peak velocity (PV), respectively, as a function of hand presence. In all panels, error bars represent the SE.
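One way to construct ellipses like those described in the caption is sketched below. It assumes axis-aligned ellipses whose axes run from the 5th to the 95th percentile of the error data in each dimension and whose centre is the midpoint of those percentiles. The centring rule is an assumption, the simulated errors are invented, and the function names are hypothetical.

```python
import random
import statistics

def percentile_span(values, lo=5, hi=95):
    """Return the lo-th and hi-th percentiles of values."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    return cuts[lo - 1], cuts[hi - 1]

def error_ellipse(lateral_err, depth_err):
    """Centre and semi-axes of an axis-aligned ellipse spanning the
    5th to 95th percentiles along each dimension."""
    x_lo, x_hi = percentile_span(lateral_err)
    y_lo, y_hi = percentile_span(depth_err)
    centre = ((x_lo + x_hi) / 2, (y_lo + y_hi) / 2)
    semi_axes = ((x_hi - x_lo) / 2, (y_hi - y_lo) / 2)
    return centre, semi_axes

# Invented end-point errors (cm): depth errors twice as variable as lateral errors.
rng = random.Random(1)
lateral = [rng.gauss(0.0, 1.0) for _ in range(2000)]
depth = [rng.gauss(0.0, 2.0) for _ in range(2000)]
centre, semi_axes = error_ellipse(lateral, depth)
print(centre, semi_axes)
```

An ellipse built this way spans 90% of the data along each axis taken separately. It does not necessarily enclose exactly 90% of the two-dimensional points.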

Mean end-point variability along the lateral dimension was submitted to an eight-start position by two-left-hand presence repeated-measures ANOVA. This analysis revealed a significant main effect for hand presence [F(1, 27) = 4.27, P = 0.048], such that mean variability along the lateral dimension was lower when the left hand was present (1.21 ± 0.01 cm; mean ± SE) than when it was absent (1.26 ± 0.01 cm). There was also a main effect of start position [F(7, 27) = 3.37, P = 0.01], such that lateral variability was lowest when participants started at the bottom-most (6 o'clock) start position (0.95 ± 0.02 cm) and greatest when they started at the left-most (9 o'clock) start position (1.44 ± 0.02 cm). Start position did not interact with hand presence (P = 0.883). A separate analysis revealed no significant influence of target location (P = 0.105) and no interaction of target location with hand (P = 0.235).

By contrast, when mean end-point variability in depth was submitted to the same ANOVA, there was no main effect for hand presence (P = 0.858) and no interaction of hand presence and start position (P = 0.853). There was, however, a significant effect of start position [F(7, 27) = 16.03, P < 0.01], such that variability in depth was lowest when participants started at the left-most (9 o'clock) start position (0.98 ± 0.02 cm) and greatest when they started at the top- and bottom-most start positions (1.89 ± 0.02 cm and 1.80 ± 0.02 cm, respectively). A separate analysis revealed no significant influence of target location (P = 0.409) and no interaction of target location with hand (P = 0.785).

The change in variability, both along the lateral and depth dimensions, in response to the addition of the hand to the display for each participant is shown in Fig. 3. Data points with negative values (to the left of the vertical 0 line) indicate that the participant showed reduced lateral variability with the addition of the hand to the display. Data points with negative values (below the horizontal 0 line) reflect participants who showed reduced variability in depth with the addition of the hand to the display. Whereas there is an even distribution above (n = 12) and below (n = 16) the horizontal line, there are a greater number of participants to the left (n = 19) of the vertical line than to the right (n = 9).

Fig. 3.

Scatterplot depicting the mean change in variability as a function of hand condition (hand present − hand absent) along the lateral and depth dimensions for each participant. Participants from Experiment 1 are shown as black dots, and participants from Experiment 2 are shown as gray dots. Participants to the left of the vertical 0 line showed reduced lateral variability with the addition of the hand to the display. Participants below the horizontal 0 line showed reduced variability in depth with the addition of the hand to the display. Whereas there is a fairly even distribution around the horizontal line, there are a greater number of participants to the left of the vertical line than to the right in both experiments.
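The coordinates plotted in Fig. 3 are simple difference scores, and the participant counts are tallies of their signs. A minimal sketch with invented values for five hypothetical participants is given below. The function names are not from the source.

```python
def change_scores(present, absent):
    """Per-participant change in variability (hand present minus hand absent)."""
    return [p - a for p, a in zip(present, absent)]

def count_reduced(changes):
    """Number of participants whose variability fell with the hand present."""
    return sum(c < 0 for c in changes)

# Invented lateral variability (cm) for five hypothetical participants.
present_lateral = [1.10, 0.95, 1.30, 1.00, 1.20]
absent_lateral = [1.20, 1.00, 1.25, 1.10, 1.15]

lateral_change = change_scores(present_lateral, absent_lateral)
n_reduced = count_reduced(lateral_change)
print(n_reduced, len(lateral_change) - n_reduced)
```

Negative scores correspond to points to the left of the vertical zero line when computed for the lateral dimension and to points below the horizontal zero line when computed for depth.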

The analysis of mean signed lateral error revealed a significant main effect of start position [F(7, 27) = 15.53, P < 0.001]. As shown in Fig. 2, A and B, participants tended to reach to the left of the target. Mean signed error along the lateral dimension varied with start position in a systematic way, such that participants' leftward error was significantly lower if they were reaching toward their body compared with all other reaching directions (P < 0.05), as shown in Fig. 4. There was no main effect of hand presence (P = 0.535) and no interaction between hand presence and start position (P = 0.473).

Fig. 4.

Signed lateral error (cm) as a function of reaching start location. In general, participants consistently reached to the left of the target, and in this graph, more negative values represent a larger error to the left of the target. These errors were larger when participants were reaching away from their body (start positions 3–5) and smaller when participants reached toward their body (start positions 1, 7, and 8). This pattern was consistent across the 2 experiments. Error bars represent the SE.

In general, the analysis of mean signed error in depth revealed that participants tended to reach to a location that was nearer to the body than the target, as shown in Fig. 2, A and B. This performance, however, was not sensitive to the presence of the left hand in the display or to start position. The analysis of mean signed error in depth revealed no main effect for hand presence (P = 0.550), no main effect for start position (P = 0.377), and no interaction (P = 0.382).

Measures of response timing.

Finally, comparisons of variability associated with left-hand presence would not be valid if participants systematically changed the timing and/or speed of their movements with hand presence. To check whether this was the case, we submitted RT, MT, and tangential PV to ANOVA (see Fig. 5A). For all of the timing measures (RT, MT, and PV), there were neither main effects (RT: P = 0.650; MT: P = 0.456; PV: P = 0.646) nor interactions (RT: P = 0.158; MT: P = 0.211; PV: P = 0.548) involving left-hand presence. There were, however, significant main effects of start position for RT [F(7, 27) = 2.10, P = 0.045], MT [F(7, 27) = 25.64, P < 0.001], and PV [F(7, 27) = 37.19, P < 0.001].

Fig. 5.

Mean reaction time (ms), mean MT (ms), and mean tangential PV (mm/s) as a function of reaching start location. A and B: results from Experiments 1 and 2, respectively.

Post hoc comparisons of RT means show that participants responded significantly more quickly to the presentation of the target when they were poised to make a movement from the left (9 o'clock)- and right (3 o'clock)-most start positions (268 ± 4 ms and 269 ± 4 ms, respectively) and were slowest to react when they started from the bottom-most start position (6 o'clock; 300 ± 4 ms). Movements initiated from start positions in the upper right and lower left, requiring rotation about the elbow, were performed more quickly (420 ± 4 ms and 427 ± 4 ms, respectively) than movements initiated from start positions in the upper left or lower right (515 ± 4 ms and 493 ± 4 ms, respectively), requiring rotations about both the elbow and shoulder. The variation of movement end-point variability, MT, and PV with start position noted here is consistent with the known anisotropy associated with right-arm reaching movements (Gordon et al. 1994a, b). In general, the findings here do not preclude the comparison of variability in left-hand near and far conditions.

Whereas the ANOVA results show that hand presence does not influence reach preparation time, to determine whether reach preparation time is related to reach variability, we correlated RT and MT with end-point variability and present these results in Table 1. In Experiment 1, this analysis showed that there is a weak but significant positive relationship between end-point variability and RT: more time allocated to reach planning is associated with a greater end-point variability. This relationship was present in both spatial dimensions and in both the hand-absent and hand-present conditions. MT was correlated negatively with end-point variability in depth in the hand-absent case only.

Table 1.

Pearson correlation coefficients representing the relationship among reaction time (RT), movement time (MT), and end-point variability in the lateral and depth dimensions from Experiment 1

| | Hand Absent: RT, ms | Hand Absent: MT, ms | Hand Present: RT, ms | Hand Present: MT, ms |
|---|---|---|---|---|
| Lateral variability, cm | 0.146 (P = 0.041) | −0.028 (P = 0.697) | 0.239 (P = 0.001) | 0.052 (P = 0.465) |
| Variability in depth, cm | 0.161 (P = 0.024) | −0.251 (P < 0.01) | 0.236 (P = 0.001) | −0.132 (P = 0.065) |
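For reference, each coefficient in Table 1 is a Pearson product-moment correlation. The sketch below computes one from paired observations. The unit of observation used for the correlations is not stated in the text, so the example values are invented and the function name is hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Invented paired observations: reaction time (ms) and end-point variability (cm).
rt_ms = [250, 265, 270, 290, 300, 310]
variability_cm = [1.10, 1.05, 1.20, 1.15, 1.30, 1.25]
r = pearson_r(rt_ms, variability_cm)
print(f"r = {r:.3f}")
```

A positive coefficient, as in the RT columns of Table 1, indicates that longer reaction times accompany greater end-point variability.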

Discussion

By capitalizing on past results showing that 1) adding visual information to the target representation reduces reaching end-point variability along the lateral dimension more than in depth, and 2) adding proprioceptive information to the target representation reduces end-point variability in depth more than along the lateral dimension (van Beers et al. 1998, 1999), we designed an experiment to determine whether hand-near targets improve the visual or proprioceptive representation of a visually presented target. The results showed that placing the nonreaching hand beneath the target array reduced reaching end-point variability along the lateral dimension only and induced no reliable change to variability in depth. This result is consistent with the nearby hand invoking an improved visual representation of the target.

One potential explanation for this reduction in variability along the lateral dimension only is that when the target appears in the space near the hand, it falls within the vRF of visual-tactile bimodal cells, thereby recruiting them to contribute to the visual representation of the target. An alternative account is centered on the possibility that participants may be able to use visual memory for hand position in the hand-near but not the hand-far conditions. On this account, the placement of the hand in the display may have improved the visual representation of the target because at the beginning of each hand-near block, participants saw the location of their hand relative to the display and were perhaps able to invoke a visual memory of the hand that could act as a reference point for their representation of each target. To address this possibility, we conducted a second experiment in which participants viewed their hand with respect to the display only once, at the very beginning of the experiment. Whereas the second experiment does not completely eliminate visual memory as a possible explanation, if visual memory for hand position in the display is acting as a reference, then the established visual memory should be considerably weaker in this experiment, and this relative weakness could eliminate the reduction in variability observed along the lateral dimension in the first experiment.

EXPERIMENT 2

Method

Participants.

Twenty-eight right-handed undergraduate students participated in this research for course credit. All participants gave informed consent before participating. Participants in Experiment 1 were not permitted to participate in Experiment 2.

Procedure.

The apparatus and design of Experiment 2 were identical to those of Experiment 1. Experiments 1 and 2 differed in the number and frequency of opportunities for participants to view their left hand in the display. In Experiment 1, participants viewed their left hand as it aligned with the target array at the beginning of each hand-present block. Experiment 2 differed in that the alignment procedure was performed only once at the beginning of the experiment. At the beginning of subsequent hand-present blocks, participants used the fixed position of the foam handrest to reposition their left hand in the same location each time. Therefore, in Experiment 2, after the initial alignment, participants did not see the position of their left hand relative to the display space for the remainder of the experiment.

Results

Figure 6, A and B, shows end-point error in all conditions for all participants in the left-hand-absent and -present conditions, respectively. The ellipses represent the range containing 90% of the data in each condition. The figure also shows the target location translated to the center of error space (the 0, 0 position along the lateral dimension and in depth), and the mean signed error, averaged across all start positions and participants, is represented.

Fig. 6.

Results from Experiment 2. A and B: the end position with respect to the target location (i.e., the end-point error) for every reaching movement for all participants (∘) in the hand-far and hand-near conditions, respectively. The black crosses represent the target location, and the gray crosses show the average signed reaching error. The ellipses represent the range containing 90% of the data in each condition and whose cardinal axes span from the 5th to the 95th percentiles for the error data along the lateral dimension and in depth. C and D: mean variability along the horizon and in depth, respectively, as a function of hand presence. E and F: mean MT and PV, respectively, as a function of hand presence. In all panels, error bars represent the SE.
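The quantities plotted in Fig. 6 can be illustrated with a minimal sketch. It assumes that each end point is stored as a (lateral, depth) pair in centimeters and that variability is the SD of signed error; the function name `summarize_endpoints`, the data layout, and the percentile interpolation method are illustrative assumptions, not the analysis code used in the study.

```python
import statistics

def summarize_endpoints(endpoints, target):
    """Summarize reach end points (lateral, depth; cm) relative to a target."""
    # Translate so the target sits at (0, 0) in error space.
    err_lateral = [x - target[0] for x, _ in endpoints]
    err_depth = [y - target[1] for _, y in endpoints]
    summary = {}
    for name, err in (("lateral", err_lateral), ("depth", err_depth)):
        # 19 cut points at 5%, 10%, ..., 95% (interpolation method is an assumption).
        cuts = statistics.quantiles(err, n=20, method="inclusive")
        summary[name] = {
            "mean_signed_error": statistics.fmean(err),
            "variability": statistics.stdev(err),  # assumed: SD of signed error
            "p05": cuts[0],    # 5th percentile: one end of the ellipse axis
            "p95": cuts[-1],   # 95th percentile: other end of the ellipse axis
        }
    return summary
```

The 5th and 95th percentiles along each dimension give the end points of the cardinal axes of the 90% ellipses described in the caption.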

Mean end-point variability along the lateral dimension was submitted to an eight-start position by two-left-hand presence repeated-measures ANOVA. This analysis revealed a significant main effect for hand presence [F(1, 27) = 4.30, P = 0.048], such that mean variability along the lateral dimension was lower when the left hand was present (0.94 ± 0.01 cm) than when it was absent (0.98 ± 0.01 cm). There was also a main effect of start position [F(7, 27) = 17.21, P < 0.001], such that lateral variability was lowest when participants started at the top-most (12 o'clock) start position (0.65 ± 0.02 cm) and greatest when they started at the right (3 o'clock)- and left (9 o'clock)-most start positions (1.29 ± 0.02 cm and 1.18 ± 0.02 cm, respectively). There was no interaction between left-hand presence and start position (P = 0.151). A separate analysis revealed no significant influence of target location (P = 0.740) and no interaction of target location with hand (P = 0.425).
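Because hand presence has only two levels, its main effect in a balanced repeated-measures design is equivalent to a paired t-test on each participant's mean variability averaged across start positions, with F(1, n − 1) = t². The sketch below illustrates that relationship; the function name `two_level_rm_f` and the input layout are hypothetical, and the code is not the authors' analysis.

```python
import math
import statistics

def two_level_rm_f(absent_means, present_means):
    """F ratio and degrees of freedom for a two-level within-subject factor.

    Each list holds one value per participant: that participant's mean
    variability (cm) averaged over all start positions in one condition.
    """
    diffs = [p - a for a, p in zip(absent_means, present_means)]
    n = len(diffs)
    # Paired t statistic on the per-participant differences.
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    # For a two-level factor, F(1, n - 1) equals t squared.
    return t * t, (1, n - 1)
```

With 28 participants, this yields the 1 and 27 degrees of freedom reported for the hand-presence effect.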

By contrast, when mean end-point variability in depth was submitted to the same ANOVA, there was no main effect for hand presence (P = 0.844) and no interaction of hand presence and start position (P = 0.648). There was, however, a significant main effect for start position [F(7,27) = 17.14, P < 0.001]. Variability in depth was lowest when participants started at the right-most (3 o'clock) start position (0.93 ± 0.02 cm) and greatest when they started at the top-most (12 o'clock) and bottom-right (5 o'clock) start positions (1.49 ± 0.02 cm and 1.56 ± 0.02 cm, respectively). A separate analysis revealed no significant influence of target location (P = 0.252) and no interaction of target location with hand (P = 0.665).

The change in variability for each participant, along both the lateral and depth dimensions, in response to the addition of the hand to the display is shown in Fig. 3. Data points with negative values (to the left of the vertical 0 line) indicate that the participant showed reduced lateral variability with the addition of the hand to the display. Data points with negative values (below the horizontal 0 line) reflect participants who showed reduced variability in depth with the addition of the hand to the display. Whereas there is an almost even distribution above (n = 13) and below (n = 15) the horizontal line, there are more participants to the left (n = 17) of the vertical line than to the right (n = 11).
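The participant counts described above amount to tallying the sign of each participant's change in variability (hand present minus hand absent). A minimal sketch follows, assuming one change score per participant per dimension; the function name `count_reductions` is hypothetical, and a change of exactly zero is counted here as "not reduced," which is an arbitrary choice.

```python
def count_reductions(delta_lateral, delta_depth):
    """Count participants whose variability fell when the hand was added.

    Each input holds one change score per participant (hand present minus
    hand absent, cm). Negative scores indicate reduced variability.
    Returns (reduced, not_reduced) counts for each dimension.
    """
    counts = {}
    for name, deltas in (("lateral", delta_lateral), ("depth", delta_depth)):
        reduced = sum(1 for d in deltas if d < 0)
        counts[name] = (reduced, len(deltas) - reduced)
    return counts
```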

The analysis of mean signed error along the lateral dimension revealed a significant main effect of hand presence [F(1, 27) = 8.02, P = 0.009]. As seen in Fig. 6, A and B, participants tended to reach slightly to the left of the target, but this error was significantly smaller when the hand was present in the display (−0.70 ± 0.02 cm) than when the hand was absent (−0.80 ± 0.02 cm).

Mean signed error along the lateral dimension varied with start position in a systematic way [F(7, 27) = 11.06, P < 0.001], as shown in Fig. 4. In general, reaches away from the body resulted in larger errors to the left than reaches made toward the body. There was no interaction between hand presence and start position (P = 0.473). In general, this finding mirrors the leftward bias that healthy participants often show when they are attempting to estimate the center of space or bisect a line (Jewell and McCourt 2000).

In general, participants tended to reach to a mean location that was nearer to the body than was the target, as can be seen in Fig. 6, A and B. This performance, however, was not sensitive to the presence of the left hand in the display or to start position. The analysis of mean signed error in depth revealed no main effect for hand presence (P = 0.550), no main effect for start position (P = 0.377), and no interaction (P = 0.382).

Measures of response timing.

Finally, to ensure that performance timing did not vary systematically with hand presence, we submitted RT, MT, and PV to ANOVA. For all measures (see Fig. 5B), there were neither main effects (RT: P = 0.713; MT: P = 0.667; PV: P = 0.710) nor interactions (RT: P = 0.127; MT: P = 0.116; PV: P = 0.739) involving left-hand presence. There were, however, main effects of start position for RT [F(7, 27) = 2.12, P = 0.043], MT [F(7, 27) = 14.98, P < 0.001], and PV [F(7, 27) = 32.32, P < 0.001]. Consistent with the results of Experiment 1, participants responded significantly more quickly when they started from the bottom-most start position (232 ± 2 ms) and were slowest when they started from the upper-right corner of the workspace (270 ± 2 ms). Movements initiated from start positions in the upper right and lower left, requiring rotation about the elbow, were performed more quickly (454 ± 4 ms and 453 ± 4 ms, respectively) than movements initiated from start positions in the upper left or at the bottom (534 ± 4 ms and 544 ± 4 ms, respectively), requiring rotations about both the elbow and shoulder. The variation of MT and PV noted here is consistent with the known anisotropy associated with limb movements (Gordon et al. 1994a, b).

In Experiment 1, we found that RT was positively correlated with both lateral and depth end-point variability. These results were not replicated, however, in Experiment 2, in which we restricted participants' access to vision of their hand. In Experiment 2, this analysis revealed a significant positive relationship only between MT and lateral variability, and only in the hand-present condition (see Table 2).

Table 2.

Pearson correlation coefficients representing the relationship among RT, MT, and end-point variability in the lateral and depth dimensions from Experiment 2

| | Hand Absent | | Hand Present | |
|---|---|---|---|---|
| | RT, ms | MT, ms | RT, ms | MT, ms |
| Lateral variability, cm | −0.046 | 0.112 | −0.032 | 0.160 |
| | P = 0.469 | P = 0.095 | P = 0.633 | P = 0.017 |
| Variability in depth, cm | 0.123 | 0.039 | −0.016 | 0.112 |
| | P = 0.065 | P = 0.563 | P = 0.811 | P = 0.095 |
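The significance of a Pearson coefficient such as those in Table 2 depends on the number of observations, which the table does not state. As a hedged illustration, the sketch below converts r to a t statistic; the function name `t_from_r` is hypothetical, and the value n = 224 used in the usage line (28 participants × 8 start positions) is an assumption rather than a reported figure.

```python
import math

def t_from_r(r, n):
    """Convert a Pearson r based on n paired observations to a t statistic.

    Returns the t value and its degrees of freedom (n - 2).
    """
    t = r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)
    return t, n - 2

# Usage with the MT-lateral variability coefficient from the hand-present
# condition; n = 224 is an assumed number of observations.
t_value, dof = t_from_r(0.160, 224)
```

The resulting t value would be evaluated against a t distribution with n − 2 degrees of freedom to obtain a two-tailed P value.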

GENERAL DISCUSSION

Research shows that people process visual stimuli that appear near their hands—in hand-near or pericutaneous space—differently than stimuli appearing away from their hands. What is the nature of the additional information provided when a visual target appears near a hand? Here, we consider two possible alternatives. One possibility is that the representation of targets (and therefore, perception and performance) in hand-near space is improved because participants can use proprioception from the nearby limb and hand to provide a narrower and more resolute frame of reference within which to place the target. Put differently, because the target is near the hand, it may be possible to compute a potential posture that would allow the target to be reached. This proprioceptive representation may then be used to augment the visual representation of the target and thereby, improve reaching performance.

An equally compelling alternative, however, is that placement of the hand near the target array augments the visual representation of the target. A target appearing near the hand may fall within the receptive fields of visual-tactile neurons, and this may recruit them to assist in the visual representation of the target. This recruitment has been cited as a possible explanation for improved visual and motor performance involving targets that appear in the space near the hands (Brown et al. 2008, 2009; di Pellegrino and Frassinetti 2000; Làdavas et al. 1998; Reed et al. 2010).

We distinguished between these two alternatives by capitalizing on research showing that vision and proprioception have differential effects on the precision of target representation (van Beers et al. 1998, 1999): when vision contributes to the target representation, localization is more precise along the lateral dimension. By contrast, when proprioception contributes to the target representation, localization is more precise in depth. In our experiment, participants performed an in-to-center reaching task to an array of central target locations with their right hand, while their left hand rested near (beneath) or far from the target array, and we measured movement end-point variability along the lateral and depth dimensions. If the representation of hand-near targets is augmented by additional visual information, perhaps by the recruitment of visual-tactile bimodal cells, then we predicted that variability would be reduced more along the lateral dimension than in depth in the hand-near condition. By contrast, if the representation of hand-near targets is augmented by additional proprioceptive information, then variability would be reduced more in depth than along the lateral dimension in the hand-near condition. The results of two experiments showed that the placement of the hand near the target array significantly reduced end-point variability along the lateral dimension, suggesting that the nature of information provided when a visual target appears near a hand is more likely visual than proprioceptive.

The finding that hand presence did not interact with start position indicates that these hand-presence effects cannot be attributed to movement direction-dependent effects on reaching variability [see Gordon et al. (1994a, b)]. Moreover, the finding that performance timing measures (RT, MT, and PV) did not vary with hand presence indicates that the hand-presence effect on movement precision cannot be explained by speed-accuracy or speed-precision trade-offs.

Bimodal visual-tactile neurons, discovered in the monkey IPS and PMv, have overlapping vRFs and tRFs, typically on the hand or face (Fogassi et al. 1996; Graziano 1999; Graziano and Gandhi 2000; Graziano et al. 1994; Rizzolatti et al. 1981a, b). An interesting feature of visual-tactile bimodal cells is that their vRF surrounds and extends beyond the tRF, such that visual stimuli appearing near but not touching the skin (within the vRF alone) can also recruit these neurons. Space near the hands and face is represented more densely than space far from the hands and face, and bimodal-cell firing rates gradually decay as the distance between the stimulus and the edge of the tRF increases (Graziano 1999; Graziano et al. 1994). In sum, visual information presented near the hands, i.e., in pericutaneous space, may recruit bimodal neurons, allowing them to contribute to visual processing and thereby, increasing the resolution with which a hand-near target is represented.

The vRFs of bimodal neurons are tied to hand position in space, and therefore, they must receive or make use of proprioceptive information (Graziano 1999; Graziano et al. 1994; Rizzolatti et al. 1981a, b). Interestingly, single-cell recording studies show that whereas visual-tactile bimodal cells must receive and use limb-position information—because the vRF is modulated by limb position—visual-tactile neurons in the premotor cortex fire in response to the presentation of a visual or tactile (or both) stimulus (Graziano 1999; Graziano et al. 1994). As such, our claim is that whereas proprioception represents hand location (and therefore, informs the location of the bimodal cell vRF), it may not play a direct role in the representation of hand-near visual targets and therefore, may not account for the performance benefits associated with hand-near targets. Although bimodal cells in premotor cortex do not appear to code limb position directly, investigations of the response properties of lateral parietal area 7B revealed cells that responded to either visual or tactile stimulation, and amongst those cells, there was a small proportion that responded to active and passive limb movements (Leinonen et al. 1979). Moreover, ongoing investigations into the multisensory processing capabilities of cells in primary somatosensory cortex [e.g., Iwamura et al. (1993)] will continue to shed light on how hand-near targets are represented.

It is not surprising that targets for reaching are candidates for hand-near benefits. The posterior parietal cortex, together with the premotor cortex, is involved in the visual representation of targets and in the transformation of this visual information into eye- and body-centered coordinates for both reaching and grasping (Buneo and Andersen 2012; Buneo et al. 2002; Chang and Snyder 2010; Luppino et al. 1999; McGuire and Sabes 2011; Murata et al. 1997; Rizzolatti and Matelli 2003). The nature of these representations and how they combine to code target location relative to the eye, shoulder, and hand are not always straightforward, due to the contributions of multiple sensory modalities to reach planning and control and possibly due to motor and posture redundancy in the upper-limb system (Bernier and Grafton 2010; Chang and Snyder 2010; McGuire and Sabes 2011). Regardless, the presence of bimodal cells in brain regions typically associated with target representation for reaching (area 5, IPS, and PMv) is consistent with the notion that they could contribute to the visual representation of target information for action. Although difficult to test conclusively in humans, functional-imaging research shows that activation in the anterior IPS (Makin et al. 2007), SMG, and both the PMd and PMv, respectively, was greater when a visual target was presented near, compared with far from, a passive hand (Brozzoli et al. 2011), regardless of the participants' ability to see the hand (Makin et al. 2007). Heightened activation in these regions in the hand-near condition may be linked to the recruitment of visual-tactile bimodal cells located there. Further research could determine whether this finding extends to the context of reaching.

The alternative hypothesis considered was that targets appearing near a passive hand are processed more quickly and more accurately because participants can combine proprioceptive information from the nearby hand with visual information about the target to provide a narrower and more resolute frame of reference within which to map target location using proprioception. Participants may be better able to localize the target when it appears near a hand because it provides a better opportunity to represent the target location as both a visual location and as a posture. Although participants did not consistently reduce variability in depth when a hand was present, when we inspect Fig. 5 closely, it is clear that the placement of the left hand in the reach space does induce changes in variability in depth, as about one-half of the participants showed reductions in variability, whereas the others showed increases in variability. It is possible that proprioception contributes to the representation of the target, but that participants show individual differences in their ability to use this information or require some training before this information can be used consistently. In short, whereas this hypothesis was not supported by these results, there are several other methods that could be used to test this hypothesis, and it deserves further consideration.

Finally, this study does not address the possibility that hand-near benefits arise because hand presence draws attention to its nearby surround. Indeed, Jackson et al. (2010) demonstrated that hand position-related proprioception can act as an effective cue to draw attention to a visual target when the location of the target was varied and unpredictable. By contrast, in the current experiment, the target was presented predictably in the center of the workspace on every trial, reducing the need to engage attention to locate the target. To explain our findings, attention must be drawn, perhaps endogenously, from the current start position to the central target region by a hand that is stationary and not visible. Complicating this possibility is the observation that when a hand is unseen for extended periods of time, judgment of its location relative to a visual target is subject to drift (Brown et al. 2003; Paillard and Brouchon 1968; Smeets et al. 2006; Wann and Ibrahim 1992). Drift could introduce variability into the assignment of attention to a particular location, reducing its usefulness. Whereas we believe that attention does not provide the most parsimonious explanation for the results presented here, further tests of the attention hypothesis are needed.

Conclusion

A growing body of evidence suggests that people treat visual stimuli that appear in hand-near space differently than stimuli appearing away from their hands. The evidence provided here suggests that hand-near targets enjoy a more robust visual representation than far targets and may be consistent with the notion that hand-near targets recruit visual-tactile bimodal cells.

GRANTS

This research was supported by grants from the Natural Sciences and Engineering Research Council (NSERC; Canada) and Trent University (to L. E. Brown).

DISCLOSURES

The authors declare that there are no conflicts of interest.

AUTHOR CONTRIBUTIONS

Author contributions: L.E.B. conception and design of research; M.C.M. and S.M. performed experiments; L.E.B. analyzed data; L.E.B. interpreted results of experiments; L.E.B. and M.C.M. prepared figures; L.E.B., M.C.M., and S.M. drafted manuscript; L.E.B. edited and revised manuscript; L.E.B. approved final version of manuscript.

ACKNOWLEDGMENTS

The authors thank Janette Bramley for help with data collection.

REFERENCES

- Abrams R, Davoli C, Du F, Knapp W, Paull D. Altered vision near the hands. Cognition 107: 1035–1047, 2008.

- Bernier PM, Grafton ST. Human posterior parietal cortex flexibly determines reference frames for reaching based on sensory context. Neuron 68: 776–778, 2010.

- Brainard DH. The psychophysics toolbox. Spat Vis 10: 433–436, 1997.

- Brown LE, Kroliczak G, Demonet J, Goodale MA. A hand in blindsight: hand placement near target improves size perception in blind visual field. Neuropsychologia 46: 786–802, 2008.

- Brown LE, Morrissey B, Goodale MA. Vision in the palm of your hand. Neuropsychologia 47: 1621–1626, 2009.

- Brown LE, Rosenbaum DA, Sainburg RL. Limb position drift: implications for control of posture and movements. J Neurophysiol 90: 3105–3118, 2003.

- Brozzoli C, Gentile G, Petkova VI, Ehrsson HH. fMRI adaptation reveals a cortical mechanism for the coding of space near the hand. J Neurosci 31: 9023–9032, 2011.

- Buneo CA, Andersen RA. Integration of target and hand position signals in the posterior parietal cortex: effects of workspace and hand vision. J Neurophysiol 108: 187–199, 2012.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuomotor transformations for reaching. Nature 416: 632–636, 2002.

- Chang SW, Snyder LH. Idiosyncratic and systematic aspects of spatial representations in the macaque parietal cortex. Proc Natl Acad Sci USA 107: 7951–7956, 2010.

- di Pellegrino G, Frassinetti F. Direct evidence from parietal extinction of enhancement of visual attention near a visible hand. Curr Biol 10: 1475–1477, 2000.

- Farne A, Pavani F, Meneghello F, Ladavas E. Left tactile extinction following visual stimulation of a rubber hand. Brain 123: 2350–2360, 2000.

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G. Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol 76: 141–157, 1996.

- Gentile G, Petkova VI, Ehrsson HH. Integration of visual and tactile signals from the hand in the human brain: an fMRI study. J Neurophysiol 105: 910–922, 2011.

- Gordon J, Ghilardi MF, Cooper SE, Ghez C. Accuracy of planar reaching movements. II. Systematic extent errors resulting from inertial anisotropy. Exp Brain Res 99: 112–130, 1994a.

- Gordon J, Ghilardi MF, Ghez C. Accuracy of planar reaching movements. I. Independence of direction and extent variability. Exp Brain Res 99: 97–111, 1994b.

- Graziano MS. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc Natl Acad Sci USA 96: 10418–10421, 1999.

- Graziano MS, Gandhi S. Location of the polysensory zone in the precentral gyrus of anesthetized monkeys. Exp Brain Res 135: 259–266, 2000.

- Graziano MS, Yap GS, Gross CG. Coding of visual space by premotor neurons. Science 266: 1054–1057, 1994.

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Rostrocaudal gradients in receptive field complexity in the finger region of alert monkey's postcentral gyrus. Exp Brain Res 92: 360–369, 1993.

- Jackson CS, Miall RC, Balslev D. Spatially valid proprioceptive cues improve the detection of a visual stimulus. Exp Brain Res 205: 31–40, 2010.

- Jewell G, McCourt ME. Pseudoneglect: a review and meta-analysis of performance factors in line bisection tasks. Neuropsychologia 38: 93–110, 2000.

- Làdavas E, di Pellegrino G, Farnè A, Zeloni G. Neuropsychological evidence of an integrated visuotactile representation of peripersonal space in humans. J Cogn Neurosci 10: 581–589, 1998.

- Leinonen L, Hyvärinen K, Nyman G, Linnankoski I. Functional properties of neurons in the lateral part of associative area 7 in awake monkeys. Exp Brain Res 34: 299–320, 1979.

- Luppino G, Murata A, Govoni P, Matelli M. Largely segregated parietofrontal connections linking rostral intraparietal cortex (areas AIP and VIP) and the ventral premotor cortex (areas F5 and F4). Exp Brain Res 128: 181–187, 1999.

- Makin T, Holmes NP, Zohary E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J Neurosci 27: 731–740, 2007.

- McGuire LM, Sabes PN. Heterogeneous representations in the superior parietal lobule are common across reaches to visual and proprioceptive targets. J Neurosci 31: 6661–6673, 2011.

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G. Object representation in the ventral premotor cortex (area F5) of the monkey. J Neurophysiol 78: 2226–2230, 1997.

- Paillard J, Brouchon M. Active and passive movements in the calibration of position sense. In: The Neuropsychology of Spatially Oriented Behavior, edited by Freedman SJ. Homewood, IL: Dorsey, 1968.

- Pavani F, Spence C, Driver J. Visual capture of touch: out-of-the-body experiences with rubber gloves. Psychol Sci 11: 353–359, 2000.

- Pelli DG. The Video Toolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997.

- Reed CL, Betz R, Garza JP, Roberts RJ Jr. Grab it! Biased attention in functional hand and tool space. Atten Percept Psychophys 72: 236–245, 2010.

- Reed CL, Grubb JD, Steele C. Hands up: attentional prioritization of space near the hand. J Exp Psychol Hum Percept Perform 32: 166–177, 2006.

- Rizzolatti G, Matelli M. Two different streams form the dorsal visual system: anatomy and function. Exp Brain Res 153: 146–157, 2003.

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M. Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behav Brain Res 2: 126–146, 1981a.

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M. Afferent properties of periarcuate neurons in macaque monkeys. II. Visual responses. Behav Brain Res 2: 147–163, 1981b.

- Schendel K, Robertson LC. Reaching out to see: arm position can attenuate human visual loss. J Cogn Neurosci 16: 935–943, 2004.

- Smeets JB, van den Dobbelsteen JJ, de Grave DD, van Beers RJ, Brenner E. Sensory integration does not lead to sensory calibration. Proc Natl Acad Sci USA 103: 18781–18786, 2006.

- Thura D, Boussaoud D, Meunier M. Hand position affects saccadic reaction times in monkeys and humans. J Neurophysiol 99: 2194–2202, 2008.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. The precision of proprioceptive position sense. Exp Brain Res 122: 367–377, 1998.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position information: an experimentally supported model. J Neurophysiol 81: 1355–1364, 1999.

- Van Strien JW. Classificatie van links- en rechtshandige proefpersonen. [Classification of left- and right-handed research participants.] Nederlands Tijdschr Psychol 47: 88–92, 1992.

- Wann JP, Ibrahim SF. Does limb proprioception drift? Exp Brain Res 91: 162–166, 1992.