Significance

Peer review is an institution of enormous importance for the careers of scientists and the content of published science. The decisions of gatekeepers—editors and peer reviewers—legitimize scientific findings, distribute professional rewards, and influence future research. However, appropriate data to gauge the quality of gatekeeper decision-making in science has rarely been made publicly available. Our research tracks the popularity of rejected and accepted manuscripts at three elite medical journals. We found that editors and reviewers generally made good decisions regarding which manuscripts to promote and reject. However, many highly cited articles were surprisingly rejected. Our research suggests that evaluative strategies that increase the mean quality of published science may also increase the risk of rejecting unconventional or outstanding work.

Keywords: peer review, innovation, decision making, publishing, creativity

Abstract

Peer review is the main institution responsible for the evaluation and gestation of scientific research. Although peer review is widely seen as vital to scientific evaluation, anecdotal evidence abounds of gatekeeping mistakes in leading journals, such as rejecting seminal contributions or accepting mediocre submissions. Systematic evidence regarding the effectiveness (or lack thereof) of scientific gatekeeping is scant, largely because access to rejected manuscripts from journals is rarely available. Using a dataset of 1,008 manuscripts submitted to three elite medical journals, we show differences in citation outcomes for articles that received different appraisals from editors and peer reviewers. Among rejected articles, desk-rejected manuscripts, deemed unworthy of peer review by editors, received fewer citations than those sent for peer review. Among both rejected and accepted articles, manuscripts with lower scores from peer reviewers received relatively fewer citations when they were eventually published. However, hindsight reveals numerous questionable gatekeeping decisions. Of the 808 eventually published articles in our dataset, our three focal journals rejected many highly cited manuscripts, including the 14 most popular, roughly the top 2 percent. Of those 14 articles, 12 were desk-rejected. This finding raises concerns regarding whether peer review is ill-suited to recognize and gestate the most impactful ideas and research. Despite this finding, our results show that in our case studies, on the whole, peer review added value. Editors and peer reviewers generally, but not always, made good decisions regarding the identification and promotion of quality in scientific manuscripts.

Peer review alters science via the filtering out of rejected manuscripts and the revision of eventually published articles. Publication in leading journals is linked to professional rewards in science, which influences the choices scientists make with their work (1). Although peer review is widely cited as central to academic evaluation (2, 3), numerous scholars have expressed concern about the effectiveness of peer review, particularly regarding the tendency to protect the scientific status quo and suppress innovative findings (4, 5). Others have focused on errors of omission in peer review, offering anecdotes of seminal scientific innovations that faced emphatic rejections from high-status gatekeepers and journals before eventually achieving publication and positive regard (6–8). Unfortunately, systematic study of peer review is difficult, largely because of the sensitive and confidential nature of the subject matter. Based on a dataset of 1,008 manuscripts submitted to three leading medical journals—Annals of Internal Medicine, British Medical Journal, and The Lancet—we analyzed the effectiveness of peer review. In our dataset, 946 submissions were rejected and 62 were accepted. Among the rejections, we identified 757 manuscripts eventually published in another venue. The main focus of our research is to examine the degree to which editors and peer reviewers made decisions and appraisals that promoted manuscripts that would receive the most citations over time, regardless of where they were published.

Materials and Methods

To analyze the effectiveness of peer review, we compared the fates of accepted and rejected (but eventually published) manuscripts initially submitted to three leading medical journals in 2003 and 2004, all ranked among the top 10 journals in the Institute for Scientific Information Science Citation Index. These journals are Annals of Internal Medicine, British Medical Journal, and The Lancet. In particular, we examined how many citations published articles eventually garnered, whether they were published in one of our three focal journals or rejected and eventually published in another journal. To gauge postpublication impact and scientific influence, we collected citation counts as of April 2014 from Google Scholar (see Figs. S1 and S2 and SI Appendix for citation comparisons of major scholarly databases). We also examined the logarithms of those counts because citations in academia tend to be highly skewed, with a few articles garnering a disproportionate number of citations (9, 10). In our sample, 62 of 1,008 submitted manuscripts were accepted, an overall acceptance rate of 6.2% over that time period. Among rejected manuscripts, we identified 757 articles that were eventually published elsewhere after their rejection from our three focal journals. The remaining 189 rejected manuscripts (18.8% of all submissions) were either altered beyond recognition when published or “file-drawered” by their authors. Eleven accepted manuscripts had missing or incomplete data, leaving a sample of 51 accepted manuscripts in our dataset.
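
The reason for also analyzing logged counts can be seen with a toy example. The Python sketch below uses hypothetical citation counts, not data from this study, and assumes a log(1 + c) transform so that uncited articles remain defined; the text does not say which offset, if any, was used.

```python
import math
from statistics import mean

# Hypothetical citation counts (NOT study data). Group A contains one
# extreme outlier; group B does not.
group_a = [10, 20, 30, 40, 1000]
group_b = [15, 25, 35, 45, 55]


def log_citations(counts):
    """Return log(1 + c) for each count; the +1 offset is an assumption."""
    return [math.log1p(c) for c in counts]


raw_ratio = mean(group_a) / mean(group_b)
log_gap = mean(log_citations(group_a)) - mean(log_citations(group_b))

# The single outlier makes group A's raw mean several times larger,
# while the gap between the logged means is much more modest.
print(f"raw means: {mean(group_a)} vs {mean(group_b)} (ratio {raw_ratio:.1f})")
print(f"logged means differ by {log_gap:.2f}")
```

Four of the five articles in group A are cited less than their counterparts in group B, yet the raw mean of group A is more than six times higher. Logging dampens, but does not remove, the outlier's influence.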

Our study was approved by the Committee on Human Research at the University of California, San Francisco. As it is not possible to completely remove all identifiers from the raw data and protect the confidentiality of all the participating authors, reviewers, and editors, all the data (archival and taped) are stored securely and can be accessed only by the research team at the University of California, San Francisco. One journal required that the authors give permission to be part of the study. All authors from that journal subsequently granted permission. We wish to express our gratitude to those authors, as well as to the journal editors for sharing their data with us.

Citation analysis has long been used to analyze intellectual history and social behavior in science. It is important to consider the strengths and limitations of citation data in the context of our research. Scientists cite work for a myriad of reasons (11, 12). However, the vast majority of citations are either positive or neutral in nature (13). We worked with the assumption that scientists prefer to build upon other quality research with their own work. As Latour and Woolgar (14) suggested, citation is an act of deference, as well as the means by which intellectual credit and content flow in science. Relatedly, we also assumed that most scientists want to produce quality work and will seldom attempt to garner credit and attention by blatantly doing bad work. Thus, on the whole, the attention and impact associated with citations provide a reasonable measure of quality. Citations provide an objective and quantitative measure of credit and attention flows in science.

Results

Our results suggest that gatekeepers were at least somewhat effective at recognizing and promoting quality. The main gatekeeping filters we identified were (i) editorial decisions regarding which manuscripts to desk-reject and (ii) reviewer scores for manuscripts sent for peer review. Desk-rejected manuscripts are those that an editor decides not to send for peer review after an initial evaluation. This choice means that the journal and its reviewers expend no further resources on gestating or considering the article, and because the article receives no peer review, the authors are free to submit it elsewhere relatively quickly. An article sent for peer review can, of course, still be rejected. Reviewers, usually anonymous scholars with relevant expertise, provide feedback to authors regarding their manuscripts, which journal editors use to decide whether to reject, recommend revisions (seldom with a guarantee of eventual publication), or accept an article for publication.

Merton (1) posited that science tends to reward high-status academics merely by virtue of their previously attained status, dubbing this self-fulfilling prophecy the “Matthew Effect.” Examining rejected and accepted manuscripts separately helps rule out potential Matthew Effects on citation outcomes, because citation differences within each group cannot be explained by the halo or reputational effects of being published in one of our three elite focal journals.

Rejected Manuscripts.

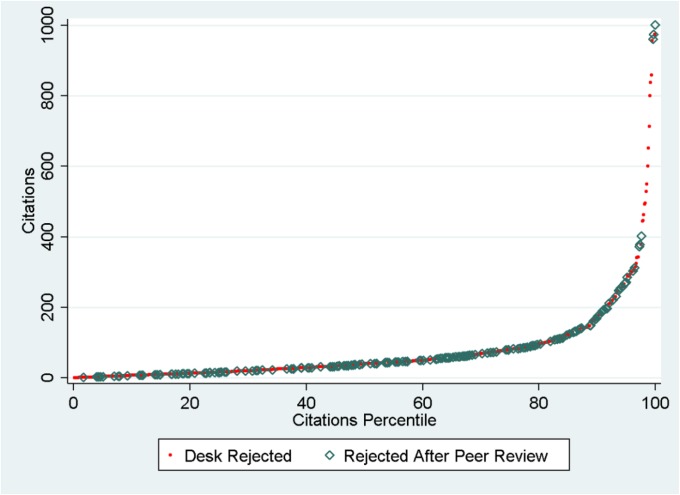

Generally, the journal editors in our study made good appraisals regarding which articles to desk-reject. Desk-rejected articles eventually published in other journals received fewer citations than those that went through at least one round of peer review before rejection. Of 1,008 articles in our dataset, 772 were desk-rejected by at least one of our focal journals. Eventually published desk-rejected articles (n = 571) received on average 69.80 citations, compared with 94.65 for articles sent for peer review before rejection (n = 187; P < 0.01). Because citation distributions are highly skewed, with a few articles garnering disproportionate attention, we also used the logarithm of citation counts as a dependent variable to diminish the potential influence of a few highly cited outlier articles. Logging citations yields similar results, with desk-rejections receiving a mean of 3.44 logged citations, compared with 3.92 for peer-reviewed rejections (P < 0.001). Fig. 1 illustrates the citation distributions of desk-rejections and peer review rejections. In general, articles chosen for peer review tended to receive significantly more citations than desk-rejected manuscripts. However, a number of highly cited articles were desk-rejected, including 12 of the 15 most-cited cases (80%). Of the other 993 initially submitted manuscripts, 760 (76.5%) were desk-rejected. This finding suggests that in our case study, articles that would eventually become highly cited were roughly as likely to be desk-rejected as a random submission. In turn, although desk-rejections were effective at identifying impactful research in general, they were not effective at identifying highly cited articles.
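
The comparison in this paragraph reduces to two proportions. The following Python sketch reproduces the arithmetic from the reported counts and adds a rough binomial standard error; that error estimate is an illustrative assumption of this sketch, not an analysis reported in the study.

```python
import math

# Counts reported in the text. The 15 "cases" are the 14 most-cited
# articles, one of which was rejected by two focal journals.
TOP_CASES = 15
TOP_DESK_REJECTED = 12
ALL_SUBMISSIONS = 1008
ALL_DESK_REJECTED = 772

# Everything outside the top cases, following the text's own arithmetic.
other_cases = ALL_SUBMISSIONS - TOP_CASES                    # 993
other_desk_rejected = ALL_DESK_REJECTED - TOP_DESK_REJECTED  # 760

top_rate = TOP_DESK_REJECTED / TOP_CASES
base_rate = other_desk_rejected / other_cases

# Rough binomial standard error for a proportion estimated from only 15
# cases, assuming the base rate applies (an illustrative approximation).
se = math.sqrt(base_rate * (1 - base_rate) / TOP_CASES)

print(f"top cases desk-rejected:   {top_rate:.1%}")   # 80.0%
print(f"other cases desk-rejected: {base_rate:.1%}")  # 76.5%
print(f"difference in standard errors: {(top_rate - base_rate) / se:.2f}")
```

The gap of about 3.5 percentage points is roughly a third of one standard error, consistent with the reading that highly cited articles were about as likely to be desk-rejected as any other submission.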

Fig. 1.

Citation distribution of rejected articles (peer reviewed vs. desk-rejected).

Articles sent for peer review may have benefited from receiving feedback from attentive reviewers. However, the magnitude of the difference between the desk-rejected and nondesk-rejected articles, as well as the fact that 12 of the 14 most highly cited articles were desk-rejected and received little feedback, suggests that the innate quality of initial submissions explains at least some of the citation gap. Further, if a future highly cited manuscript was aided by critical feedback attached to a “reject” decision from a journal, the decision not to grant at least an opportunity for revision becomes an even more egregious mistake. Despite the importance of peer review, its scope of influence with regard to changing and gestating articles may be limited. The core innovations and research content of a scientific manuscript are rarely altered substantially through peer review. Instead, peer review tends to focus on narrower, more technical details of a manuscript (15). However, there is some evidence that peer review improves scientific articles, particularly in the medical sciences (16).

Peer reviewers also appeared to add value with regard to identifying and promoting quality. We assigned reviewer scores to quantify the perceived initial quality of each submitted manuscript: three points for each “accept” recommendation, two for “minor changes,” one for “major changes,” and zero for “reject.” From these values, each manuscript received a mean score. Although some initially lauded manuscripts may have improved little over the peer review process, and some marginal initial submissions may have improved greatly, initial perceived quality was related to citation outcomes. For manuscripts with two or more reviewers, there were weak but positive correlations between initial scores and eventual citations received. Reviewer scores were correlated 0.28 (P < 0.01; n = 89) with citations and 0.21 with logged citations (P < 0.05). Although the effects of peer-reviewer scores on citation outcomes appeared weaker than those of editors making desk-rejections, it is worth noting the survivor bias among manuscripts that are not desk-rejected: reviewers assessed only submissions that editors had already judged worth reviewing, and so were judging a narrower range of quality. It is generally easier to distinguish scientific work of very low quality than it is to recognize finer gradations distinguishing the best contributions in advance of publication (17, 18).
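
The scoring scheme above can be written as a small lookup table. The Python sketch below is a minimal illustration; the dictionary keys, the function name `reviewer_score`, and the manuscripts are hypothetical, and the study's own coding procedure may differ in detail.

```python
from statistics import mean

# Points per reviewer recommendation, following the scheme in the text.
RECOMMENDATION_POINTS = {
    "accept": 3,
    "minor changes": 2,
    "major changes": 1,
    "reject": 0,
}


def reviewer_score(recommendations):
    """Mean points across all of a manuscript's reviewer recommendations."""
    if not recommendations:
        raise ValueError("manuscript has no reviewer recommendations")
    return mean(RECOMMENDATION_POINTS[r] for r in recommendations)


# Hypothetical manuscripts (NOT study data), keyed by an invented ID.
manuscripts = {
    "A": ["accept", "minor changes"],
    "B": ["major changes", "reject", "minor changes"],
    "C": ["reject"],
}

# The correlations in the text use only manuscripts with two or more reviewers.
scores = {
    ms_id: reviewer_score(recs)
    for ms_id, recs in manuscripts.items()
    if len(recs) >= 2
}
print(scores)
```

Averaging rather than summing keeps scores comparable across manuscripts with different numbers of reviewers.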

Related to the Matthew Effect (1) and underscoring the importance of social status in science, evaluators have been found to judge equivalent work from high-status sources more favorably than work from lower-status contributors (11, 19, 20). Consequently, placement in a prestigious journal can bolster the visibility and perceived quality of many scientists' work. Evaluating complex work is difficult, so scientists often rely on heuristics to judge quality; the status of scholars, institutions, and journals is a common basis for such judgments (21, 22). Unsurprisingly, citations received by manuscripts were positively correlated with the impact factor of the journal in which they were eventually published. Journal impact factor was correlated 0.54 with citations (P < 0.001; n = 757) and 0.42 with logged citations (P < 0.001). Of course, it is difficult to disentangle exactly how much these positive correlations were a result of (i) higher-quality articles being published in competitive high-impact journals and (ii) visibility or halo effects associated with publishing in more prestigious journals.

Accepted Manuscripts.

Next, we examined manuscripts that survived the peer review process and were eventually published in one of our three target journals. Among the 40 articles that were scored by at least two peer reviewers, there were weak positive correlations between reviewer scores and citations received (0.21; n = 40) and logged citations (0.26). Both of these correlations fell short of statistical significance, in part because of the small sample. As another way of gauging the ability of peer reviewers to identify quality manuscripts, we compared submissions that had at least one “reject” recommendation from a reviewer with those that never received such a recommendation. Many have bemoaned the practice at most highly competitive academic journals of rejecting an article that does not achieve a positive consensus among all reviewers (23). After all, some rejection recommendations are unwise. Among accepted articles, rejection recommendations from reviewers were associated with substantial differences in citation outcomes. Published articles that had not received a rejection recommendation from a peer reviewer (n = 30) received a mean of 162.80 citations, compared with 115.24 for articles that received at least one rejection recommendation (n = 21; P < 0.10). Similarly, manuscripts without rejection recommendations received a mean of 4.72 logged citations, compared with 4.33 logged citations for articles for which a peer reviewer had recommended rejection (P < 0.10). These differences only approached significance, suggesting that among accepted manuscripts, rejection recommendations (or their absence) were at best weakly predictive of future popularity.
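
The comparison between accepted articles with and without a rejection recommendation is a simple partition. A minimal Python sketch, using hypothetical data (not the study's) and an invented helper name, `split_by_reject`:

```python
from statistics import mean


def split_by_reject(manuscripts):
    """Split (recommendations, citations) pairs by whether any reviewer said "reject"."""
    with_reject, without_reject = [], []
    for recommendations, citations in manuscripts:
        group = with_reject if "reject" in recommendations else without_reject
        group.append(citations)
    return with_reject, without_reject


# Hypothetical accepted manuscripts (NOT study data).
accepted = [
    (["accept", "minor changes"], 180),
    (["minor changes", "major changes"], 140),
    (["reject", "minor changes"], 120),
    (["major changes", "reject"], 90),
]

with_reject, without_reject = split_by_reject(accepted)
print(f"no reject recommendation: mean {mean(without_reject)} citations")
print(f"at least one reject:      mean {mean(with_reject)} citations")
```

A single "reject" vote is enough to place a manuscript in the second group, regardless of the other reviewers' recommendations, which mirrors the comparison described in the text.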

If anything, the citation gaps between articles with more and less favorable initial peer reviews, among both rejected and accepted manuscripts, likely underestimate the effectiveness of scientific gatekeeping. If eventually published manuscripts were at least not made worse in subsequent submissions after their initial rejection, and in some cases were improved, revision should narrow the quality gap between articles that received more and less favorable initial assessments.

Fates of Rejected vs. Accepted Articles.

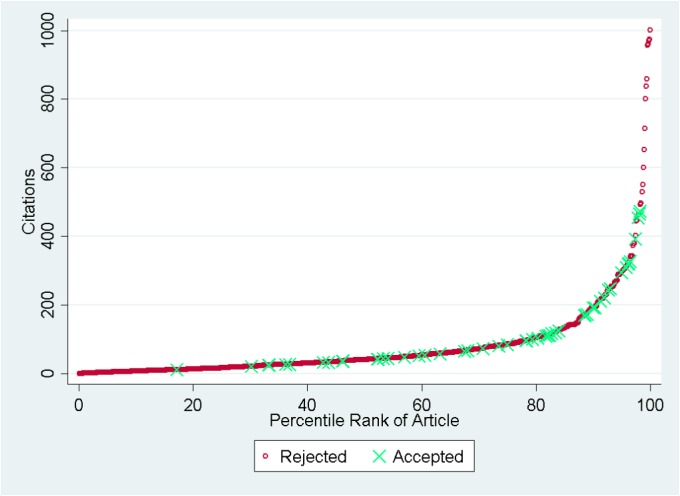

Although our results suggest that gatekeeping at our three focal journals was effective, it was far from perfect. When examining the entire population of 808 eventually published manuscripts, our three focal journals rejected the 14 most highly cited articles. This amounts to 15 rejection cases, because one article was rejected by two of our focal journals before eventual publication. Most of the 14 most-cited articles were published in lower-impact journals than the initial target journal. In some cases, the impact factor of the publishing journal was substantially lower than that of the initial target journal from which the manuscript was rejected. The “best” acceptance decision one of our focal journals made was publishing the 16th most-cited case, which placed in the 98th percentile of submitted manuscripts. Despite the 15 glaring omissions at the top, on the whole gatekeepers appeared to make good decisions. Citation percentiles for accepted articles ranged from a minimum of 17.06 to a maximum of 98.15. The median percentile was 79.36, with quartiles at 53.28 (25th percentile) and 91.22 (75th percentile). Fig. 2 illustrates the distribution of citations for accepted and rejected articles. As is generally the case in science, citations are highly skewed: near the 85th percentile, citations increase sharply. Whether this does, or should, influence the evaluative strategies of gatekeepers is an open question. These results also raise the question of to what degree these positive outcomes for accepted submissions, particularly the dearth of barely cited or never-cited articles, are due to the prestige of the journal, the innate quality of the manuscripts, or both.
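
Citation percentiles place each accepted article within the distribution of all eventually published cases. The text does not state the exact percentile definition used, so the Python sketch below assumes one common convention (the share of cases with strictly fewer citations) and uses hypothetical counts; it is not expected to reproduce the reported values exactly.

```python
from bisect import bisect_left
from statistics import median


def percentile_rank(value, population):
    """Percent of the population with strictly fewer citations than `value`.

    This is one of several common percentile-rank conventions (an assumption).
    """
    ordered = sorted(population)
    return 100 * bisect_left(ordered, value) / len(ordered)


# Hypothetical citation counts for all eventually published cases (NOT study data).
all_published = [0, 3, 8, 15, 22, 40, 55, 90, 150, 600]
# Hypothetical citation counts for the subset accepted by a focal journal.
accepted = [15, 55, 150]

ranks = [percentile_rank(c, all_published) for c in accepted]
print(ranks, median(ranks))
```

Other conventions (for example, counting ties as half, or dividing by n + 1) shift individual ranks slightly, which matters mostly at the extremes of the distribution.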

Fig. 2.

Citation distribution of accepted and rejected articles.

To ensure that these findings were not excessively influenced by the majority of articles published in less-eminent journals, we repeated the previous analysis, restricting it to articles published in journals with an impact factor of at least 8.00. The correlation between impact factor and citations received remained positive, but was roughly halved to 0.28. Interestingly, t tests comparing rejected and accepted manuscripts published in these high-impact journals showed that rejected articles received more citations. Specifically, rejected articles averaged 212.77 citations and accepted manuscripts averaged 143.22 (P < 0.05). The relationship is similar with logged citations (4.77 for rejections, 4.53 for acceptances), although it falls short of statistical significance, suggesting that a few highly cited articles underpin the significant difference. Multiple regression analysis, using impact factor as a control variable, yielded similar results (Tables S1 and S2). Controlling for impact factor, rejected manuscripts were, surprisingly, more cited than accepted manuscripts when published in prestigious journals.
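
The restricted comparison can be sketched as a filter followed by a two-sample t test. The text does not say which t-test variant was used; the Python sketch below assumes Welch's unequal-variance test, computes only the t statistic and degrees of freedom, and runs on hypothetical records rather than study data.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = variance(sample_a) / len(sample_a)  # squared standard error of mean A
    se2_b = variance(sample_b) / len(sample_b)  # squared standard error of mean B
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Hypothetical records (NOT study data):
# (citations, impact factor of publishing journal, accepted by a focal journal?)
records = [
    (100, 9.0, False), (150, 12.5, False), (200, 8.0, False), (400, 30.0, False),
    (120, 30.0, True), (130, 30.0, True), (140, 30.0, True), (150, 30.0, True),
    (5, 2.1, False), (12, 4.0, False),
]

IF_THRESHOLD = 8.00
high_if = [r for r in records if r[1] >= IF_THRESHOLD]
rejected = [cites for cites, _, was_accepted in high_if if not was_accepted]
accepted = [cites for cites, _, was_accepted in high_if if was_accepted]

t, df = welch_t(rejected, accepted)
print(f"t = {t:.2f}, df = {df:.1f}")
```

For a p-value, `scipy.stats.ttest_ind(rejected, accepted, equal_var=False)` performs the same Welch test. The regression with impact factor as a control (Tables S1 and S2) is a separate analysis not sketched here.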

By restricting analysis to journals with impact factors greater than 8.00, we are more likely to be cherry-picking gatekeeping mistakes and ignoring the vast cache of articles that were “rightfully” sent down the journal hierarchy. In addition to serving as status symbols, journal impact factors are positive, but often noisy, signals of manuscript quality. Authorial ambition may also be relevant; there was likely some self-selection in our manuscript pool. We looked solely at articles initially submitted to elite journals, as opposed to manuscripts that the authors targeted for a less eminent journal in the first place. In some cases, authors know their articles better than peer reviewers, who possess disparate sources of expertise. Further, ambition can portend success. For example, Dale and Krueger (24) found that future earnings were better predicted by the highest-status college to which a student applied than by the college the student attended. Low acceptance rates also likely create a substantial pool of high-quality articles that would fit well in other high-quality journals. Alberts et al. (25) expressed concern that very high rejection rates tend to squeeze out innovative research. High-status journals with very low acceptance rates tend to emphasize avoiding errors of commission (publishing an unworthy article) over avoiding errors of omission (rejecting a worthy article).

Explaining and Justifying Rejection.

Most articles received similar boilerplate text in their rejection letters. In the anomalous case of the third-ranked article, which received uniformly favorable feedback, the standard rejection letter was modified slightly to acknowledge that the article had received positive reviews but was being rejected anyway. The standard editorial response sent to many rejected authors emphasizes a need for “strong implications” and a need to “change practice.” These correspondences reveal the importance of what Gieryn (26) dubbed “boundary work” in demarcating worthy versus unworthy science. Some rejected submissions may have been excellent articles that did not fit with the perceived mission or image of the journal. In most cases, gatekeepers acknowledged that the article they were rejecting had at least some merit, but belonged in a different, usually specialist, journal. Normal science and replication are more likely to occur in such journals. In contrast, generalist journals tend to assume, or at least perceive, a different role in science, particularly when they are high-status. Since professional power and status tend to be linked to control over abstract knowledge (27, 28), the emphasis on general implications is not surprising. Although accruing citations is valuable for authors and journals, image is also important. High-status actors and institutions often strategically forgo valuable, but lower-status, downmarket niches to preserve exclusive reputations and identities (29).

Based on the feedback from editors and peer reviewers reported in SI Appendix, Table 1 summarizes the most commonly stated justifications for rejection.

Table 1.

Most common justifications for article rejection among the 15 most-cited cases

| Justification | Number of cases (n) |
|---|---|
| Lacking novelty | 7 |
| Methodological problems | 4 |
| Magnitude of results too small | 4 |
| No reason given | 3 |
| Insufficient data/evidence | 2 |
| Speculative results/questionable validity | 2 |

Gatekeepers prioritized novelty, at least as they perceived it, in their adjudications. High-status journals distinguish themselves by publishing cutting-edge science of theoretical importance (2, 30), so the emphasis on novelty is unsurprising. Further, because highly cited articles are expected to be novel and paradigm-shifting (5, 31), it is surprising that almost half of the top 15 cases (7 of 15) were criticized for their lack of novelty. However, perceived novelty may only be relative. George Akerlof received the 2001 Nobel Prize in economics for his analysis of markets with asymmetric information. This work is well known as the source of one of the most (in)famous rejection mistakes in contemporary scientific publishing. Akerlof’s seminal article, “The Market for ‘Lemons’,” initially faced stiff resistance, if not outright hostility, in peer review at elite economics journals. The first two journals to which the article was submitted rejected it as trivial. The third journal rejected the article because it was “too” novel, and if the article were correct, it would mean that “economics would be different” (32). Relatedly, former Administrative Science Quarterly editor William Starbuck (33) observed that gatekeepers are often territorial and dogmatic, criticizing methods and data attached to theories they dislike and lauding methods and data attached to theories they prefer.

Our results suggest that Akerlof’s experience facing rejections with an influential article may not be abnormal. Mark Granovetter’s “The Strength of Weak Ties,” the most-cited article in contemporary sociology, was emphatically rejected by the first journal where he submitted the manuscript (34). Rosalyn Yalow received the Nobel Prize in medicine for work that was initially rejected by Science and only published in a subsequent journal after substantial compromise (6). Outside of science, at the start of her career, famed author J. K. Rowling experienced 12 rejections of her first Harry Potter book before eventually finding a publisher willing to take a chance on her book for a small monetary advance (35). Highly cited articles and innovations experiencing rejection may be more the rule than the exception.

Decisions to reject or accept manuscripts can be complex. Multiple characteristics of articles and authors, not just novelty, are associated with publication. Lee et al. (22) listed numerous potential sources of bias in peer review, including social characteristics of authors, as well as the intellectual content of their scientific work. The tendency of gatekeepers to prefer work closer to their own and to the scientific status quo is a source of intellectual conservatism in science (5). Previous analysis of this dataset found that submitted manuscripts were more likely to be published if they had high methodological quality, larger sample sizes, a randomized, controlled design, and disclosed funding sources, and if the corresponding author lived in the same country as the publishing journal (36). Scientists have expressed concern that this publication bias selectively distorts the corpus of published science and contributes to the publication of dubious results (37, 38).

Impact Factors of Submitted vs. Published Journals.

Among the 14 most highly cited articles, most manuscripts were eventually published in a journal with a lower impact factor than the focal journal to which they were initially submitted. Table 2 reports, for each rejection, the ratio of the impact factor of the initially submitted journal to that of the publishing journal. Impact factor ratios are reported, as opposed to exact impact factors, as a precaution to preserve the anonymity of authors and their manuscripts. A ratio greater than 1 denotes a move down the journal status hierarchy, because the numerator (initially submitted journal) is greater than the denominator (publishing journal), whereas a ratio less than 1 denotes a move up. Most articles moved down the status hierarchy, because there are few journals in academia with impact factors higher than our three focal journals and authors tend to submit manuscripts to less-selective journals following rejection (39).
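
The direction column in Table 2 follows mechanically from the ratio. The Python sketch below encodes that rule (a ratio above 1 means the publishing journal has a lower impact factor) and checks it against the ratios reported in the table; the function names are illustrative.

```python
def if_ratio(submitted_if, published_if):
    """Impact factor of the submitted journal divided by that of the publishing journal."""
    return submitted_if / published_if


def direction(ratio):
    """Classify the publishing journal relative to the journal first submitted to."""
    if ratio > 1:
        return "Lower"   # numerator larger: article moved down the hierarchy
    if ratio < 1:
        return "Higher"  # denominator larger: article moved up
    return "Same"


# Ratios from Table 2, one per rejection case (citation rank 2 appears twice).
table2_ratios = [0.85, 0.18, 0.46, 0.85, 1.41, 1.39, 3.02, 1.11,
                 0.96, 2.92, 12.14, 1.49, 6.96, 2.57, 6.79]
outcomes = [direction(r) for r in table2_ratios]
print(outcomes.count("Lower"), outcomes.count("Higher"))  # 10 5
```

Counted by article rather than by rejection case, 4 of the 14 most-cited articles (ranks 1, 2, 3, and 8) moved up and 10 moved down.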

Table 2.

Impact factor comparisons of submitted versus published journals, 14 most highly cited articles

| Citation rank | Impact factor ratio (submitted ÷ published journal) | Publishing journal's impact factor relative to submitted journal |
|---|---|---|
| 1 | 0.85 | Higher |
| 2 (first rejection) | 0.18 | Higher |
| 2 (second rejection) | 0.46 | Higher |
| 3 | 0.85 | Higher |
| 4 | 1.41 | Lower |
| 5 | 1.39 | Lower |
| 6 | 3.02 | Lower |
| 7 | 1.11 | Lower |
| 8 | 0.96 | Higher |
| 9 | 2.92 | Lower |
| 10 | 12.14 | Lower |
| 11 | 1.49 | Lower |
| 12 | 6.96 | Lower |
| 13 | 2.57 | Lower |
| 14 | 6.79 | Lower |

Time to Publication and Received Citations.

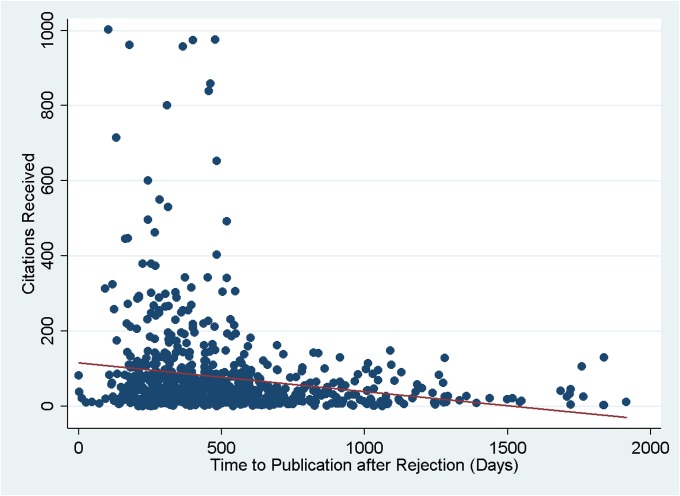

We also examined the relationship between time to publication (specifically, time since initial submission to our three focal journals) and citations received. The correlation between time to publication and citations received was only −0.18. However, Fig. 3 suggests a “wall” at about 500 d for highly cited articles: after roughly 500 d, no manuscript received more than 200 citations. In fact, this weak negative correlation is driven by a small number of highly cited articles published within the first 500 d after initial submission. This finding suggests that if editors and peer reviewers made a mistake in overlooking or rejecting a future seminal contribution, the peer review system as a whole appears generally able to rectify the mistake within 2 y. If one of our three focal journals happened to reject an excellent article, out of error or perhaps an unlucky choice of peer reviewers, such articles appear to be published elsewhere relatively quickly. Of course, it is impossible to observe counterfactuals for meritorious rejected articles that were scooped, severely revised, never published, or consigned to an obscure publication outlet. Alternatively, in a fast-moving field such as medicine, results not published quickly are prone to being scooped or becoming stale, and thus are less likely to become highly cited. Repeated, time-consuming rejections at other journals may also signal lower manuscript quality, as authors tend to send an article further down the journal status hierarchy after each rejection (39).
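
The claim that a few early, highly cited articles drive the weak negative correlation can be illustrated with toy data. The Python sketch below uses hypothetical (days to publication, citations) pairs, not study data, and a hand-written Pearson correlation; it checks the 500-day “wall” and shows how much of the correlation depends on the two earliest outliers.

```python
import math
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical (days from initial submission to publication, citations) pairs.
papers = [(200, 900), (300, 700), (250, 50), (400, 60),
          (600, 80), (700, 40), (900, 70), (1200, 55)]

days = [d for d, _ in papers]
cites = [c for _, c in papers]
r_all = pearson(days, cites)

# Drop the highly cited outliers (more than 200 citations) and recompute.
trimmed = [(d, c) for d, c in papers if c <= 200]
r_trimmed = pearson([d for d, _ in trimmed], [c for _, c in trimmed])

# "Wall": the most-cited article published after 500 days.
late_max = max(c for d, c in papers if d > 500)
print(f"r (all) = {r_all:.2f}, r (no outliers) = {r_trimmed:.2f}, "
      f"max after 500 d = {late_max}")
```

In this toy example the negative correlation essentially disappears once the two early outliers are removed, which is the pattern the text describes.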

Fig. 3.

Citation distribution of rejected articles by time to publication.

Among accepted articles that we could track from submission to publication, the number of rounds of revision a manuscript underwent before publication had no major effect on citation outcomes. Additional rounds of revision were weakly negatively correlated with citations (−0.13) and logged citations (−0.12) (n = 40). This suggests that there were diminishing returns to multiple rounds of revision. The function of numerous revisions in scientific journals may be more evaluative and bureaucratic than gestational.

Discussion

Our research suggests that in our case studies, both editors and peer reviewers made generally, but not always, good decisions with regard to identifying which articles would receive more citations in the future. These results are robust to potential Matthew Effects, because comparisons among rejected manuscripts showed that better-rated rejected articles still fared better than those that were evaluated more negatively. The rejection of the 14 most-cited articles in our dataset also suggests that scientific gatekeeping may have difficulty dealing with exceptional or unconventional submissions. The fact that 12 of the 14 most-cited articles were desk-rejected is also notable. Rapid decisions are more likely to be informed by heuristic thinking (40). In turn, research that fits into existing research frames is more likely to appeal to risk-averse gatekeepers with time and resource constraints, because people generally find uncertainty to be an aversive state (41). This may help explain why exceptional and uncommon work could be particularly vulnerable to rejection.

Many of the problems editors and reviewers identified with the rejected articles were potentially fixable. However, journals have finite time and resources, and there is no guarantee authors will be able to execute a successful revision. Some rejected manuscripts might have been casualties of a 6% acceptance rate, as opposed to inherently flawed or deficient. On the other hand, some rejected articles may not have fit well with the scope and mission of the initial focal journal, or the manuscripts may have been improved after the initial submission. A focus on elite, cutting-edge research germane to high-status scientists may also open niches for popular contributions outside of those particular missions and constituencies, particularly those dealing with practice and application.

It is also important to consider that citations are not necessarily a perfect indicator of scientific merit. Contagion processes are often decoupled from quality. Since the utility of information often depends on widespread adoption by others (42), diffusion patterns are often shaped by arbitrary influences, and suboptimal innovations can gain precedence (43). Extreme performance can be more indicative of volatility than merit (44). Further, some innovations may diffuse more widely by appealing to the lowest common denominator, while more complex or esoteric contributions can be harder for many to understand or cite. Status signals and other social factors can profoundly affect diffusion processes, as well as people’s opinions and perceptions of quality (45). This is a key mechanism underpinning the Matthew Effect in science. After a scientific paper achieves widespread adoption, it is usually cited, often vaguely or inaccurately, as an exemplar of a general idea rather than for the quality of its research (12). Impact factors, citations, intellectual credit, and visibility are the main means by which scholars and journals accrue status (14), so editors generally have an interest in accepting articles that will become highly cited. Market forces also shape scientific incentives and journal status hierarchies, as journal revenues are linked to impact factors, even though using quantitative metrics to gauge the quality of a journal is controversial (46).

Prediction is a difficult task in most complex systems (47). This is particularly true in science, where cutting-edge research is inherently uncertain (30). As with professional sports (48), financial investing (49), fine art (50), venture capital (51), and books, movies, and music (52), identifying quality and predicting future performance in science appears to be rife with noise and randomness. Former American Economic Review editor Preston McAfee (53) argued that gatekeeping errors in science are not only inevitable but can also be efficient: journals that never wrongly reject an unconventional submission will, on the whole, publish articles of lower average quality. Gans and Shepherd (7) chronicled numerous gatekeeping mistakes in economics, which fueled widespread concern among journal editors about rejecting the next classic article (53). However, changing evaluative cultures in an attempt to accommodate potentially exceptional articles may be costly. Dilemmas often exist between strategies that yield lower means but higher extreme values and those that yield higher means with less variance (54). Because most new ideas tend to be bad ideas (55), resisting unconventional contributions may be a reasonable and efficient default instinct for evaluators. This is potentially problematic, however, because unconventional work is often the source of major scientific breakthroughs (5). It is therefore worth considering whether the high mean and median citation rates of articles accepted at our three focal journals could have come at the expense of rejecting some of the most highly cited articles. More generally, this finding raises the normative and strategic question of whether editors should try to maximize the mean or median quality of publications, even if such gatekeeping strategies or evaluative cultures entail a higher risk of rejecting highly cited articles.

The medical context of our study is relevant to the question of whether, and to what degree, science should be risk-averse. Much decision-making in medicine is influenced by the Hippocratic Oath, which emphasizes the imperative to avoid harm above all else, so medical journals may be more risk-averse than journals in other fields. This underscores the need for follow-up studies of peer review in different journals and disciplines to enable replication and comparison across different epistemic and scientific cultures.
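
The dilemma between higher-mean and higher-variance strategies (54) can be made concrete with a short numerical sketch. It compares two hypothetical pools of submissions: a "conventional" pool whose quality has a higher mean and lower variance, and an "unconventional" pool with a lower mean and higher variance. The normal distributions, their parameters, and the cutoff for an "outstanding" manuscript are illustrative assumptions, not estimates from the manuscripts studied here; the sketch uses only Python's standard-library `statistics.NormalDist`.

```python
from statistics import NormalDist

# Hypothetical quality distributions for two pools of submissions.
# The parameters are illustrative assumptions, not estimates from the study.
conventional = NormalDist(mu=0.5, sigma=1.0)    # higher mean, lower variance
unconventional = NormalDist(mu=0.0, sigma=2.0)  # lower mean, higher variance

# Hypothetical quality cutoff for an "outstanding" manuscript.
OUTSTANDING = 4.0


def share_above(dist: NormalDist, cutoff: float) -> float:
    """Probability that a manuscript drawn from `dist` exceeds `cutoff`."""
    return 1.0 - dist.cdf(cutoff)


if __name__ == "__main__":
    for name, dist in (("conventional", conventional), ("unconventional", unconventional)):
        print(f"{name:>14}: mean = {dist.mean:.2f}, "
              f"P(quality > {OUTSTANDING}) = {share_above(dist, OUTSTANDING):.5f}")
```

With these assumed parameters, the conventional pool has the higher mean (0.50 versus 0.00), yet the unconventional pool is roughly 100 times more likely to exceed the cutoff (about 0.023 versus 0.0002). An evaluator who favors the higher-mean pool therefore forgoes most of the extreme outliers, which mirrors the trade-off discussed above.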

Although editors and reviewers appear able to improve predictions of which articles will receive the most citations, and possibly also to improve scientific output through selection and gestation, both errors of omission and errors of commission were prominent in our case studies. Lindsey (17) posited that among the highest-quality, most competitive manuscripts at elite, high-rejection journals, peer review is “little better than a dice roll.” Cole et al. (56) argued that luck accounted for roughly one-half of the outcome of a competitive National Science Foundation granting process. Even with effective peer review, occasional acceptances of mediocre contributions and rejections of excellent manuscripts may be inevitable (33, 57). Given the effectiveness of desk-rejections in our results, distinguishing poor scientific work appears to be easier than distinguishing excellent contributions. However, the high rate of desk-rejections among the most highly cited articles in our case studies suggests that although peer review was effective at predicting “good” articles, it had difficulty identifying outstanding or breakthrough work. More broadly, the complexity of science appears to limit the predictive abilities of even the best peer reviewers and editors.
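
A deliberately simple toy model shows how a selection process can raise the average quality of accepted work while still rejecting a sizable share of the very best manuscripts. In the hypothetical sketch below, which is an illustration and not the analysis reported in this paper, each manuscript has a latent quality drawn from a standard normal distribution, gatekeepers observe that quality plus independent normal noise, and the journal accepts the top 6% of manuscripts by the noisy signal. Only the 6% acceptance rate comes from the text; the distributions, noise levels, pool size, and the function name `simulate_screening` are assumptions.

```python
import random
from statistics import fmean


def simulate_screening(n=100_000, acceptance_rate=0.06, noise_sd=1.0,
                       top_share=0.01, seed=1):
    """Hypothetical toy model of gatekeeping with a noisy quality signal.

    Latent quality q ~ N(0, 1); gatekeepers see q + N(0, noise_sd) and accept
    the top `acceptance_rate` share by that signal. All distributions and
    parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    quality = [rng.gauss(0.0, 1.0) for _ in range(n)]
    signal = [q + rng.gauss(0.0, noise_sd) for q in quality]

    # Rank manuscripts by what gatekeepers observe and by their true quality.
    by_signal = sorted(range(n), key=signal.__getitem__, reverse=True)
    by_quality = sorted(range(n), key=quality.__getitem__, reverse=True)

    accepted = set(by_signal[:int(n * acceptance_rate)])
    top = by_quality[:int(n * top_share)]

    return {
        "mean_all": fmean(quality),
        "mean_accepted": fmean(quality[i] for i in accepted),
        # Share of the truly top manuscripts that the journal accepted.
        "top_recall": sum(i in accepted for i in top) / len(top),
    }


if __name__ == "__main__":
    for sd in (0.5, 1.0, 2.0):
        r = simulate_screening(noise_sd=sd)
        print(f"noise_sd={sd}: mean accepted={r['mean_accepted']:.2f}, "
              f"top-1% recall={r['top_recall']:.2f}")
```

Under these assumptions, the accepted set has a mean latent quality well above the pool average, yet a substantial fraction of the top 1% of manuscripts is rejected, and that fraction grows as the signal becomes noisier. The model only shows that the two observations are compatible; it says nothing about the actual noise in editorial judgments.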

Acknowledgments

We thank Aaron Panofsky for helping initiate this project. Our research was supported by Grant 5R01NS044500-02 from the Research Integrity Program, Office of Research Integrity/National Institutes of Health collaboration, as well as a Banting Postdoctoral Fellowship and Sloan Foundation Economics of Knowledge Contribution Project fellowship (to K.S.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: Our data are confidential and securely stored at the University of California, San Francisco.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1418218112/-/DCSupplemental.

References

- 1. Merton RK. The Matthew Effect in science. Science. 1968;159(3810):56–63.

- 2. Lamont M. How Professors Think: Inside the Curious World of Academic Judgment. Harvard Univ Press; Cambridge, MA: 2009.

- 3. Kassirer JP, Campion EW. Peer review. Crude and understudied, but indispensable. JAMA. 1994;272(2):96–97. doi: 10.1001/jama.272.2.96.

- 4. Mahoney MJ. Publication prejudices: An experimental study of confirmatory bias in the peer review system. Cognit Ther Res. 1977;1(2):161–175.

- 5. Horrobin DF. The philosophical basis of peer review and the suppression of innovation. JAMA. 1990;263(10):1438–1441.

- 6. Yalow RS. Radioimmunoassay: A probe for fine structure of biologic systems. Nobel Lecture, December 8, 1977, Stockholm Concert Hall, Stockholm, Sweden.

- 7. Gans JS, Shepherd GB. How are the mighty fallen: Rejected classic articles by leading economists. J Econ Perspect. 1994;8(1):165–179.

- 8. Nature. Coping with peer rejection. Nature. 2003;425(6959):645. doi: 10.1038/425645a.

- 9. Lotka AJ. The frequency distribution of scientific productivity. J Wash Acad Sci. 1926;16:317–323.

- 10. Barabási AL, Albert R. Emergence of scaling in random networks. Science. 1999;286(5439):509–512. doi: 10.1126/science.286.5439.509.

- 11. Lynn FB. Diffusing through disciplines: Insiders, outsiders and socially influenced citation behavior. Soc Forces. 2014;93(1):355–382.

- 12. Hargens LL. Using the literature: Reference networks, reference contexts, and the social structure of scholarship. Am Sociol Rev. 2000;65(6):846–865.

- 13. Bornmann L, Daniel H-D. What do citation counts measure: A review of studies on citing behavior. J Doc. 2008;64(1):45–80.

- 14. Latour B, Woolgar S. Laboratory Life: The Construction of Scientific Facts. Sage; Los Angeles: 1979.

- 15. Ellison G. Evolving standards for academic publishing: A q-r theory. J Polit Econ. 2002;110(5):994–1034.

- 16. Goodman SN, Berlin J, Fletcher SW. Manuscript quality before and after peer review and editing at Annals of Internal Medicine. Ann Intern Med. 1994;121(1):11–21.

- 17. Lindsey D. Assessing precision in the manuscript review process: A little better than a dice roll. Scientometrics. 1988;14(1-2):75–82.

- 18. Cicchetti DV. The reliability of peer review for manuscript and grant submissions: A cross-disciplinary investigation. Behav Brain Sci. 1991;14(1):119–135.

- 19. Peters DP, Ceci SJ. Peer review practices of psychological journals: The fate of published articles, submitted again. Behav Brain Sci. 1982;5(2):187–195.

- 20. Leahey E. The role of status in evaluating research: The case of data editing. Soc Sci Res. 2004;33(3):521–537.

- 21. Long JS, Fox MF. Scientific careers: Universalism and particularism. Annu Rev Sociol. 1995;21:45–71.

- 22. Lee CJ, Sugimoto CR, Zhang G, Cronin B. Bias in peer review. JASIST. 2013;64(1):2–17.

- 23. Armstrong JS, Hubbard R. Does the need for agreement among reviewers inhibit the publication of controversial findings? Behav Brain Sci. 1991;14(1):136–137.

- 24. Dale SB, Krueger AB. Estimating the payoff to attending a more selective college: An application of selection on observables and unobservables. Q J Econ. 2002;117(4):1491–1527.

- 25. Alberts B, Kirschner MW, Tilghman S, Varmus H. Rescuing US biomedical research from its systemic flaws. Proc Natl Acad Sci USA. 2014;111(16):5773–5777.

- 26. Gieryn TF. Boundary-work and the demarcation of science. Am Sociol Rev. 1983;48(6):781–795.

- 27. Abbott AD. Status and status strain in the professions. Am J Sociol. 1981;86(4):819–835.

- 28. Smith PK, Trope Y. You focus on the forest when you're in charge of the trees: Power priming and abstract information processing. J Pers Soc Psychol. 2006;90(4):578–596.

- 29. Podolny JM. Status Signals: A Sociological Study of Market Competition. Princeton Univ Press; Princeton, NJ: 2005.

- 30. Cole S. The hierarchy of the sciences? Am J Sociol. 1983;89(1):111–139.

- 31. Kuhn TS. The Structure of Scientific Revolutions. Univ of Chicago Press; Chicago: 1962.

- 32. Akerlof GA. Writing “The Market for ‘Lemons’”: A Personal and Interpretive Essay. 2003. Available at www.nobelprize.org/nobel_prizes/economic-sciences/laureates/2001/akerlof-article.html. Accessed June 2, 2014.

- 33. Starbuck WH. Turning lemons into lemonade: Where is the value in peer reviews? J Manage Inq. 2003;12(4):344–351.

- 34. Available at scatter.files.wordpress.com/2014/10/granovetter-rejection.pdf. Accessed October 23, 2014.

- 35. McGinty S. The J.K. Rowling story. The Scotsman. June 16, 2003. Available at www.scotsman.com/lifestyle/books/the-jk-rowling-story-1-652114. Accessed December 9, 2014.

- 36. Lee KP, Boyd EA, Holroyd-Leduc JM, Bacchetti P, Bero LA. Predictors of publication: Characteristics of submitted manuscripts associated with acceptance at major biomedical journals. Med J Aust. 2006;184(12):621–626. doi: 10.5694/j.1326-5377.2006.tb00418.x.

- 37. Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263(10):1385–1389.

- 38. Ioannidis JPA. Why most published research findings are false. PLoS Med. 2005;2(8):0696–0701. doi: 10.1371/journal.pmed.0020124.

- 39. Calcagno VE, et al. Flows of research manuscripts among scientific journals reveal hidden submission patterns. Science. 2012;338(6110):1065–1069. doi: 10.1126/science.1227833.

- 40. Kahneman D. Thinking, Fast and Slow. Farrar, Straus and Giroux; New York: 2011.

- 41. Mueller JS, Melwani S, Goncalo JA. The bias against creativity: Why people desire but reject creative ideas. Psychol Sci. 2012;23(1):13–17. doi: 10.1177/0956797611421018.

- 42. Granovetter M, Soong R. Threshold models of interpersonal effects in consumer demand. J Econ Behav Organ. 1986;7:83–99.

- 43. Arthur WB. Competing technologies, increasing returns and lock-in by historical events. Econ J. 1989;99:116–131.

- 44. Denrell J, Liu C. Top performers are not the most impressive when extreme performance indicates unreliability. Proc Natl Acad Sci USA. 2012;109(24):9331–9336.

- 45. Salganik MJ, Dodds PS, Watts DJ. Experimental study of inequality and unpredictability in an artificial cultural market. Science. 2006;311(5762):854–856. doi: 10.1126/science.1121066.

- 46. Seglen PO. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997;314(7079):498–502. doi: 10.1136/bmj.314.7079.497.

- 47. Silver N. The Signal and the Noise. Penguin; New York: 2012.

- 48. Massey C, Thaler RH. The loser’s curse: Decision making and market efficiency in the National Football League draft. Manage Sci. 2013;59(7):1479–1495.

- 49. Mauboussin MJ. The Success Equation. Harvard Business Review Press; Boston: 2012.

- 50. Dempster AM, editor. Risk and Uncertainty in the Art World. Bloomsbury; London: 2014.

- 51. Gompers P, Lerner J. The Venture Capital Cycle. MIT Press; Cambridge, MA: 2006.

- 52. Hirsch PM. Processing fads and fashions: An organization-set analysis of cultural industry systems. Am J Sociol. 1972;77(4):639–659.

- 53. McAfee RP. Edifying editing. In: Szenberg M, Ramrattan L, editors. Secrets of Economics Editors. MIT Press; Cambridge, MA: 2014. pp. 33–44.

- 54. Denrell J, March JG. Adaptation as information restriction: The hot stove effect. Organ Sci. 2001;12(5):523–538.

- 55. Levinthal DA, March JG. The myopia of learning. Strateg Manage J. 1993;14(S2):95–112.

- 56. Cole S, Cole JR, Simon GA. Chance and consensus in peer review. Science. 1981;214(4523):881–886. doi: 10.1126/science.7302566.

- 57. Stinchcombe AL, Ofshe R. On journal editing as a probabilistic process. Am Sociol. 1969;4(2):116–117.
