Abstract

During communication we combine auditory and visual information. Neurophysiological research in nonhuman primates has shown that single neurons in ventrolateral prefrontal cortex (VLPFC) exhibit multisensory responses to faces and vocalizations presented simultaneously. However, whether VLPFC is also involved in maintaining those communication stimuli in working memory or combining stored information across different modalities is unknown, although its human homolog, the inferior frontal gyrus, is known to be important in integrating verbal information from auditory and visual working memory. To address this question, we recorded from VLPFC while rhesus macaques (Macaca mulatta) performed an audiovisual working memory task. Unlike traditional match-to-sample/nonmatch-to-sample paradigms, which use unimodal memoranda, our nonmatch-to-sample task used dynamic movies consisting of both facial gestures and the accompanying vocalizations. For the nonmatch conditions, a change in the auditory component (vocalization), the visual component (face), or both components was detected. Our results show that VLPFC neurons are activated by stimulus and task factors: while some neurons simply responded to a particular face or a vocalization regardless of the task period, others exhibited activity patterns typically related to working memory such as sustained delay activity and match enhancement/suppression. In addition, we found neurons that detected the component change during the nonmatch period. Interestingly, some of these neurons were sensitive to the change of both components and therefore combined information from auditory and visual working memory. These results suggest that VLPFC is not only involved in the perceptual processing of faces and vocalizations but also in their mnemonic processing.

Keywords: faces, macaque, monkey, multisensory, vocalization, working memory

Introduction

Communication is a multisensory phenomenon (McGurk and MacDonald, 1976; Campanella and Belin, 2007; Ghazanfar et al., 2010). We employ vocal sounds, mouth movements, facial motions, and hand/body gestures when speaking to one another. To comprehend these communication signals, we need to retain many auditory and visual cues in memory and integrate them while we retrieve their referents from knowledge stores. Thus, a communication circuit must include brain regions that receive auditory and visual inputs and are capable of processing, remembering, and integrating complex audiovisual information.

The joint processing of auditory and visual information has been shown to take place in a number of brain regions including the superior colliculus, temporal cortex, and the frontal lobes (Stein and Stanford, 2008; Murray and Wallace, 2012). Neuroimaging studies have especially noted activations in the inferior frontal gyrus (IFG) during the processing of audiovisual speech stimuli. The human IFG, including Broca's area, is activated not only when auditory and visual verbal materials are processed (Calvert et al., 2001; Homae et al., 2002; Jones and Callan, 2003; Miller and D'Esposito, 2005; Ojanen et al., 2005; Noppeney et al., 2010; Lee and Noppeney, 2011), but also when they are stored in memory for further manipulation, which suggests IFG plays a role in working memory (WM) of communication stimuli (Paulesu et al., 1993; Schumacher et al., 1996; Crottaz-Herbette et al., 2004; Rämä and Courtney, 2005).

More specific investigations of neuronal activity during the processing of communication stimuli have been performed in nonhuman primates, which also use face and vocal stimuli in their social interactions. One region involved in audiovisual integration in nonhuman primates is the ventrolateral prefrontal cortex (VLPFC), which includes areas 12/47 and 45 and is homologous with the human IFG (Petrides and Pandya, 1988). Single-unit recordings have identified “face cells” in VLPFC (Wilson et al., 1993; O Scalaidhe et al., 1997, 1999) and neurons that are responsive to species-specific vocalizations (Romanski and Goldman-Rakic, 2002; Romanski et al., 2005). Many VLPFC neurons are multisensory and are responsive to vocalizations and the corresponding facial gesture presented simultaneously (Sugihara et al., 2006; Romanski and Hwang, 2012; Diehl and Romanski, 2014). However, further studies are needed to elucidate the individual and ensemble activity that occurs in VLPFC when face and vocal stimuli are remembered and integrated during WM processing.

Previous studies have illustrated the importance of the prefrontal cortex in WM and have postulated a role for VLPFC in nonspatial WM (Wilson et al., 1993; Nee et al., 2013; Plakke et al., 2013a; Plakke and Romanski, 2014), but few studies have addressed how neurons retain combined stimuli, such as a vocalization and a facial expression. In the current study, we therefore examined neuronal activity in the primate VLPFC during a WM task where both auditory and visual stimuli are the memoranda. Our neurophysiological results indicate that VLPFC neurons are recruited during WM processing of faces and vocalizations, with some cells activated by changes in either face or vocal information while others are multisensory and attend to both stimuli.

Materials and Methods

Subjects and apparatus.

Two female rhesus monkeys (Macaca mulatta) were used (Monkey P and T; 6.7 and 6.2 kg). All procedures conformed to the guidelines of the National Institutes of Health and were approved by the University of Rochester Care and Use of Animals in Research Committee. Before training, a titanium head post was surgically implanted on the skull of each subject for head fixation. During training, the subject sat in a primate chair with the head fixed in a sound-attenuated room. Visual stimuli were presented on a computer monitor (NEC MultiSync LCD1830, 1280 × 1024, 60 Hz), which was placed 75 cm from the eyes. Auditory stimuli were presented via two speakers (Yamaha MSP5; frequency response 50 Hz–40 kHz) placed on either side of the monitor at the height of the subject's head. Eye position was continuously monitored with an infrared pupil monitoring system (ISCAN). Behavioral data (eye position and button press) were collected on a PC via PCI interface boards (NI PCI-6220 and NI PCI-6509; National Instruments). The timing of stimulus presentation and reward delivery was controlled with in-house C++ software built on Microsoft DirectX technologies.

Stimuli.

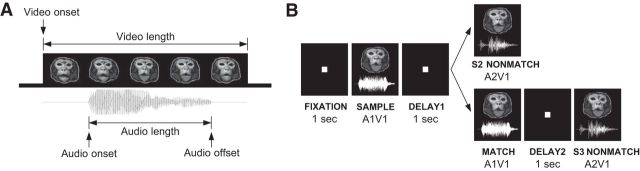

Stimuli were short movie clips of vocalizing monkeys filmed in our own colony. The video was captured at 30 fps with a size of 320 × 240 pixels (6.8 × 5.1° in visual angle), and the audio was recorded with a 48 kHz sampling rate and 16-bit resolution. Once the video and audio tracks of the movie clips were digitized, they were processed with VirtualDub (virtualdub.org) and GoldWave software (GoldWave). Each movie clip was shortened so that only the relevant vocalization was presented with the accompanying facial gesture (Fig. 1A). The length of the video tracks was 467–1367 ms (892 ms on average) and that of the audio ranged from 145 to 672 ms (308 ms on average). Sound pressure level of auditory stimuli was adjusted to 65–75 dB (35–112 mPa) at the level of the subject's ear.
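As a quick check on the stated sound levels, the decibel values can be converted to pressure. This worked conversion assumes the levels are dB SPL referenced to the conventional $p_0 = 20\ \mu\text{Pa}$; the reference is not stated in the text, but it reproduces the reported pressure range.

$$
p = p_0 \cdot 10^{L/20}, \qquad
p_{65\,\text{dB}} = 20\ \mu\text{Pa} \times 10^{3.25} \approx 35.6\ \text{mPa}, \qquad
p_{75\,\text{dB}} = 20\ \mu\text{Pa} \times 10^{3.75} \approx 112.5\ \text{mPa}
$$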

Figure 1.

Audiovisual nonmatch-to-sample task. A, A face-vocalization movie stimulus used in the audiovisual nonmatch-to-sample task is shown in this schematic representation. Note that in these movie stimuli, the auditory component (vocalization) was always preceded by the visual component (face movie). The length of the video tracks ranged from 467 to 1367 ms (892 ms on average) and that of the audio tracks from 145 to 672 ms (308 ms on average). B, Schematic of the audiovisual nonmatch-to-sample task. A face-vocalization movie was presented as the sample stimulus and the subject was required to remember the auditory and visual components (vocalization and accompanying facial gesture) across the following delays and to detect the change of any component in subsequent stimulus presentations with a button press. In half the trials, the nonmatching stimulus was presented as the second stimulus (S2 nonmatch) and, in the other half of the trials, a matching stimulus intervened and the nonmatch occurred as the third stimulus (S3 nonmatch). The example shown here depicts an audio nonmatch trial where only the vocalization changed in the nonmatch stimulus (A1V1→A2V1) but the face component remained the same.

Vocalization stimuli were also imported into MATLAB (The MathWorks) and processed to create noise sound stimuli, which were used to test the auditory discrimination of our subjects (see below). To create them, we extracted the envelope of the vocalization with the Hilbert transform and applied it to white Gaussian noise whose frequency band was limited to be similar to that of the vocalization. The resulting noise sound was normalized in root mean square amplitude to the original vocalization. These noise stimuli therefore had power spectra different from those of the original vocalizations but similar temporal features.
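The noise-stimulus procedure can be sketched as follows. This is a minimal illustration in Python with NumPy, not the MATLAB code used in the study. The function names, the FFT-based band limiting, the band edges (500–8000 Hz), and the synthetic stand-in for a vocalization are all assumptions made for the example.

```python
import numpy as np

def hilbert_envelope(x):
    """Amplitude envelope: magnitude of the analytic signal (FFT-based Hilbert transform)."""
    n = len(x)
    weights = np.zeros(n)
    weights[0] = 1.0                     # keep the DC bin once
    if n % 2 == 0:
        weights[n // 2] = 1.0            # keep the Nyquist bin once
        weights[1:n // 2] = 2.0          # double the positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))

def band_limited_noise(n, fs, f_lo, f_hi, rng):
    """White Gaussian noise with all energy outside [f_lo, f_hi] Hz removed."""
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum[(freqs < f_lo) | (freqs > f_hi)] = 0.0
    return np.fft.irfft(spectrum, n=n)

def rms(x):
    """Root mean square amplitude."""
    return np.sqrt(np.mean(x ** 2))

def make_noise_stimulus(vocalization, fs, f_lo, f_hi, seed=0):
    """Impose the vocalization's envelope on band-limited noise, then match RMS."""
    rng = np.random.default_rng(seed)
    envelope = hilbert_envelope(vocalization)
    carrier = band_limited_noise(len(vocalization), fs, f_lo, f_hi, rng)
    shaped = envelope * carrier
    return shaped * (rms(vocalization) / rms(shaped))

# Synthetic stand-in for a 300 ms vocalization sampled at 48 kHz (hypothetical signal).
fs = 48_000
n = 14_400
t = np.arange(n) / fs
vocalization = np.hanning(n) * np.sin(2 * np.pi * 2000 * t)

noise_stimulus = make_noise_stimulus(vocalization, fs, f_lo=500.0, f_hi=8000.0)
```

The result has the same length and RMS amplitude as the input and follows its temporal envelope, while its fine spectral structure comes from the noise carrier.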

Task.

The subjects were trained to perform an audiovisual nonmatch-to-sample task (Fig. 1B). They were required to remember a movie clip presented during the sample period and detect a nonmatching movie clip in subsequent stimulus presentations. When they successfully detected the nonmatch and indicated it by pressing a button located on the front panel of the chair, they were rewarded with juice after 0.5 s. The subject initiated a trial by fixating for 1 s on a red square presented at the center of the screen. Then, the sample stimulus (i.e., an audiovisual face-vocalization movie) was presented, followed by a 1 s delay period. In half the trials, the second stimulus presented after the delay was the nonmatch (“S2 nonmatch”). In the other half of the trials, the sample stimulus and the delay period were repeated before the nonmatch, making the occurrence of the nonmatch unpredictable. The subjects were required to withhold the button press for this repeated sample stimulus (i.e., match) until the nonmatch stimulus was finally presented (“S3 nonmatch”).

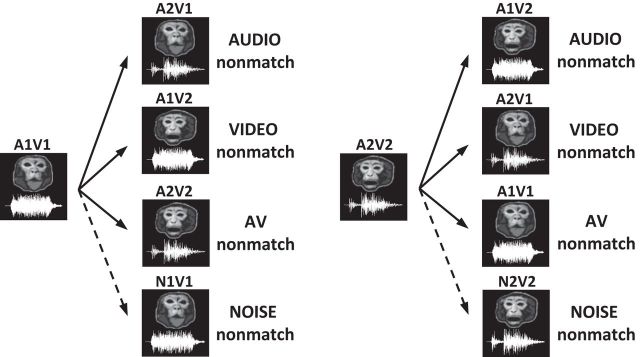

In each session of training, we selected a pair of audiovisual movies for the sample stimuli (A1V1 and A2V2) and created their nonmatches by interchanging the audio and video tracks of these two movies (Fig. 2). Since each movie clip had an auditory component (An) and a visual component (Vn), the exchange could occur between the audio tracks (audio nonmatch), the video tracks (video nonmatch) or both audio and video tracks (AV nonmatch). For example, when the sample stimulus was A1V1, its audio, video, and AV nonmatches were A2V1, A1V2, and A2V2, respectively. Thus, the nonmatching audiovisual stimuli consisted of incongruent (A2V1, A1V2) or congruent (A2V2) face-vocalization movies. To create these audiovisual nonmatch stimuli, we carefully chose two movies of different vocalization call types in which vocalizations and the mouth motion were similar in length. For the incongruent nonmatch stimuli, we aligned the onset time of the nonmatching vocalization to that of the original vocalization so that the subjects could not use temporal asynchrony as a nonmatch cue.

Figure 2.

Nonmatch types. The types of nonmatch conditions that occurred in the audiovisual nonmatch-to-sample task are illustrated with the sample vocalization movie (A1V1 or A2V2). In the neurophysiology recording experiments, there were three types of nonmatch stimuli (audio, video, and AV nonmatches), which were created by interchanging the auditory (An) and visual (Vn) components between the two sample vocalization movies (A1V1 and A2V2). A fourth nonmatch stimulus (noise nonmatch) was used in the behavioral studies and was created by replacing the vocalization with a noise sound stimulus that had the same temporal envelope as the sample.

These three types of nonmatch stimuli were used for the neurophysiological recordings, which constitute the main study of this paper. In addition, we performed a series of behavioral experiments to test the auditory discrimination ability of our subjects. In these behavioral experiments, we used another nonmatch type in which the vocalizations of the sample movies were replaced with the band-limited white noise stimuli described above (noise nonmatch; Fig. 2). These noise stimuli were easy to discriminate from vocalizations due to their distinct power spectra and therefore helped us to determine whether our subjects had any difficulties in general auditory processing.

Throughout the experiments, we used four pairs, or eight movie clips, and alternated them from session to session. For each testing or recording session, the two vocalization movies that were paired differed in call type and gender (e.g., female affiliative call vs male agonistic call) to make vocalization discrimination easier. Subjects were allowed a total of 900 ms plus the duration of the movie stimulus to press the button during the nonmatch period. If they did not respond during this period, the trial was aborted without reward and the next trial began. If the subject broke eye fixation during stimulus presentation or pressed the button before the nonmatch period, that trial was aborted immediately. Unrewarded trials were repeated but were randomized again with the remaining trials so that the subject could not guess the conditions of the next trial. All trial conditions were presented in a pseudorandom fashion and counterbalanced across trials.

Single-unit recording.

After training in the task was complete, a titanium recording cylinder (19 mm inner diameter) was implanted over VLPFC (centered 29–30 mm anterior to the interaural line and 20–21 mm lateral to midline on skull). The recording cylinder was angled 30° to the vertical to maximize an orthogonal approach to VLPFC, areas 12/47 and 45 (Preuss and Goldman-Rakic, 1991). Recordings were made in both hemispheres of Monkey P and the right hemisphere of Monkey T while they performed the task. During recordings, one or two glass-coated tungsten electrodes (impedance 1–2 MΩ; Alpha Omega) were lowered by motorized microdrives (Nan Instruments) to the target areas. Single-unit activity was discriminated and collected with a signal processing system (RX5-2; Tucker-Davis Technologies). Electrode trajectories were confirmed with MRI for both subjects and later with histology for Monkey P. The MRI image slices were traced with NIH MIPAV software (mipav.cit.nih.gov) and were reconstructed to a 3D model with MATLAB to plot the recording sites (Fig. 5).

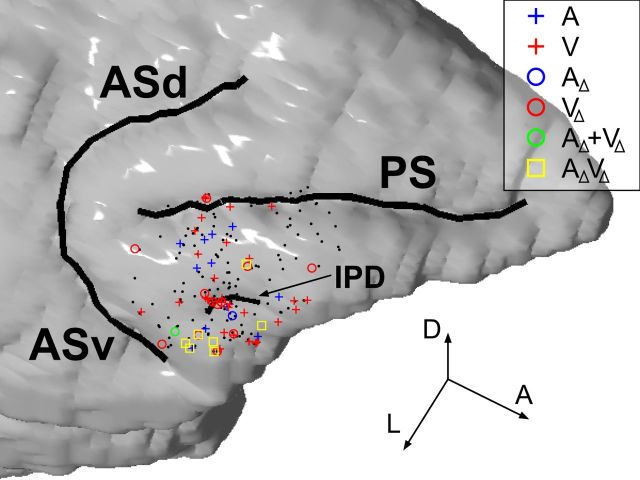

Figure 5.

The locations of neurons that had significant activity in the audiovisual task are shown on a reconstruction of Monkey T's brain based on MRI. The neurons recorded from Monkey P were overlaid onto the reconstruction based on histologically confirmed anatomical landmarks. Color-coded dots are shown where activity was significantly modulated by the auditory component (A; A1 or A2) or the visual component (V; V1 or V2) of the vocalization movies (Eq. 1) and the auditory component change (AΔ; A1→A2 or A2→A1) or the visual component change (VΔ; V1→V2 or V2→V1) in Equation 4. AΔ + VΔ indicates significant main effects of both AΔ and VΔ, and AΔVΔ, a significant interaction. Black dots are neurons with no such effects. The three arrow lines indicate the directions of the anteroposterior (A), dorsoventral (D), and mediolateral (L) axes, respectively, and their lengths correspond to 5 mm each. PS, principal sulcus; ASd, dorsal arcuate sulcus; ASv, ventral arcuate sulcus; IPD, inferior prefrontal dimple.

Analysis of behavioral data.

The percentage of rewarded trials (i.e., the success rate) was calculated as follows. In this task, the subjects typically made two types of errors: (1) not detecting the S2 nonmatch stimulus (missed-press error) and (2) pressing the button during the match stimulus (wrong-press error). The other types of errors (pressing the button during the sample or delay period and missing the S3 nonmatch stimulus) accounted for only 0.39% of the total number of trials and were not considered in the analysis. When subjects made a wrong-press error, the trial was aborted before the nonmatch stimulus was presented, so the error was not attributed to a particular nonmatch condition. Therefore, we divided the number of wrong-press errors that occurred in the trials of the same sample stimulus by the number of the nonmatch conditions and assigned each portion evenly to compute the success rate for each nonmatch condition. The trials aborted due to breaking eye fixation (1.61% of total trials) were not included in the calculation of the success rates.
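The even assignment of wrong-press errors can be illustrated with a short sketch. It assumes one reading of the procedure: for a given sample stimulus, each nonmatch condition's denominator is its correct trials plus its missed-press errors plus an equal share of the wrong-press errors. The function name and all counts are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def success_rates(correct, missed, wrong_press):
    """Percentage correct per nonmatch condition for one sample stimulus.

    correct, missed: dicts mapping condition name -> trial count.
    wrong_press: total wrong-press errors for this sample stimulus, which
    cannot be attributed to a condition and are therefore split evenly.
    """
    share = wrong_press / len(correct)
    return {
        condition: 100.0 * correct[condition]
        / (correct[condition] + missed[condition] + share)
        for condition in correct
    }

# Hypothetical counts for one sample stimulus (not data from the study).
correct = {"audio": 70, "video": 90, "AV": 92}
missed = {"audio": 24, "video": 4, "AV": 2}
rates = success_rates(correct, missed, wrong_press=18)
print(rates)  # audio 70%, video 90%, AV 92% (each denominator is 100 trials)
```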

The reaction time (RT) was defined as time from the video onset to the button-press response. However, for the audio nonmatch conditions, the RT was also calculated from the audio onset to estimate the processing time of auditory mismatch information.

Analysis of neural data.

To separate activity related to the button response from stimulus-related activity during the nonmatch period, we used a 700 ms window during the nonmatch stimulus, which was long enough to capture the neural response to the late audio components, but shorter than the fastest mean RT of our subjects (Fig. 3B). In addition, we tested for button press-related activation by comparing activity in a ±50 ms window around the button press with activity in the 100 ms window preceding it. For the neurons that had significant activity related to the button press (17 neurons; t test, p < 0.05), we instead used a window 150 ms shorter than the fastest mean RT among the nonmatch conditions.

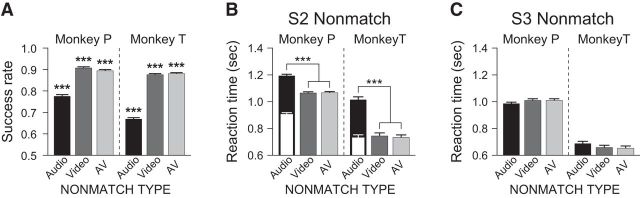

Figure 3.

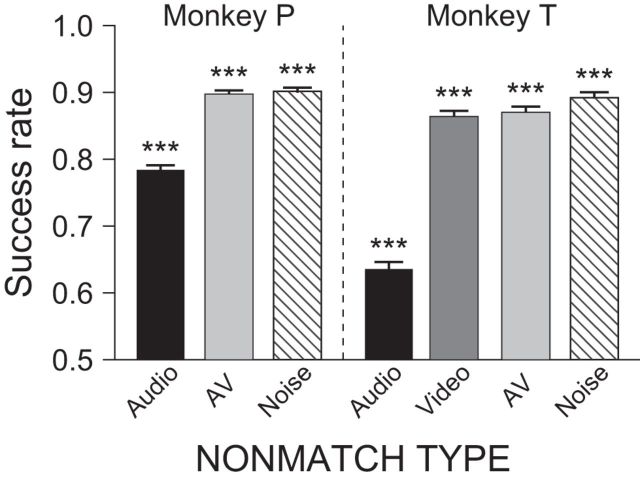

Behavioral performance. A, The performance of each subject is shown as percentage correct by each nonmatch trial type (audio, black; video, dark gray; audiovisual or AV, light gray) during the neurophysiological recordings. B, C, The reaction times (RTs) are shown for trials when the nonmatch occurred as the second stimulus (S2 nonmatch) and as the third stimulus after a match stimulus (S3 nonmatch), respectively. The white bars in B are the RTs recalculated from the audio onset. Error bars indicate SEM; ***p < 0.001.

To analyze neuronal responses during the nonmatch period, we applied the following regression model. This model included a set of dummy variables corresponding to the auditory and visual components of the nonmatch stimulus, as well as the nonmatch type (i.e., audio, video, and AV nonmatches).

$$
SR = a_0 + a_1 S + a_2 A + a_3 V + a_4 NM_{A\text{-}AV} + a_5 NM_{V\text{-}AV} \tag{1}
$$

where SR denotes the spike rate during the nonmatch period, and a0–a5, the regression coefficients. S indicates the sample stimulus type (0, A1V1; 1, A2V2), and A and V indicate the auditory component (0, A1; 1, A2) and the visual component (0, V1; 1, V2) of the nonmatch stimulus, respectively. Since the nonmatch type was a categorical variable that had three classes (audio, video, and AV nonmatches), two dummy variables, NMA-AV and NMV-AV, were used to represent it. NMA-AV measured the differential effect between the audio and AV nonmatches (0, AV nonmatch; 1, audio nonmatch) and NMV-AV, between the video and AV nonmatches (0, AV nonmatch; 1, video nonmatch).

The significance of the nonmatch type (i.e., the combined effect of NMA-AV and NMV-AV) was tested with a partial F test. To compute the marginal variance explained by NMA-AV and NMV-AV, we also applied the following reduced model, which did not include NMA-AV and NMV-AV.

$$
SR = a_0 + a_1 S + a_2 A + a_3 V \tag{2}
$$

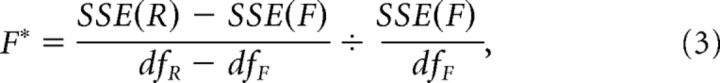

Then, the F statistic was computed as follows:

$$
F = \frac{\left[ SSE(R) - SSE(F) \right] / \left( df_R - df_F \right)}{SSE(F) / df_F} \tag{3}
$$

where SSE(R) and SSE(F) refer to the sum of squared errors for the reduced model (Eq. 2) and the full model (Eq. 1), respectively, and dfR and dfF indicate the degrees of freedom associated with the reduced model and the full model.
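The partial F test can be sketched in a few lines of NumPy. This is an illustration on simulated spike rates, not the analysis code used in the study. The column order of the design matrix, the helper names, the trial counts, and the simulated effect (a 5 spikes/s increase for audio nonmatches) are assumptions. The degrees of freedom are taken as residual degrees of freedom (number of trials minus number of fitted coefficients).

```python
import numpy as np

def design_row(sample, nm_type):
    """Dummy variables [1, S, A, V, NM_A-AV, NM_V-AV] for one nonmatch trial.

    sample: 0 for A1V1, 1 for A2V2. nm_type: "audio", "video", or "AV".
    A and V code the components of the nonmatch stimulus (0 or 1), so a
    changed component is the sample's component flipped.
    """
    a = sample ^ int(nm_type in ("audio", "AV"))
    v = sample ^ int(nm_type in ("video", "AV"))
    return [1, sample, a, v, int(nm_type == "audio"), int(nm_type == "video")]

def sse(X, y):
    """Sum of squared errors of the ordinary least-squares fit of y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    return float(residuals @ residuals)

def partial_f(X_full, X_reduced, y):
    """F statistic comparing a reduced model with the full model."""
    df_full = len(y) - X_full.shape[1]
    df_reduced = len(y) - X_reduced.shape[1]
    sse_full, sse_reduced = sse(X_full, y), sse(X_reduced, y)
    f_stat = ((sse_reduced - sse_full) / (df_reduced - df_full)) / (sse_full / df_full)
    return f_stat, df_reduced - df_full, df_full

# Simulated neuron: 20 trials per condition, higher rate on audio nonmatches.
rng = np.random.default_rng(1)
rows, rates = [], []
for sample in (0, 1):
    for nm_type in ("audio", "video", "AV"):
        for _ in range(20):
            row = design_row(sample, nm_type)
            rows.append(row)
            rates.append(10.0 + 5.0 * row[4] + rng.normal(0.0, 2.0))

X_full = np.array(rows, dtype=float)
X_reduced = X_full[:, :4]            # drop the two nonmatch-type dummies
y = np.array(rates)

f_stat, df_num, df_den = partial_f(X_full, X_reduced, y)
print(f"F({df_num}, {df_den}) = {f_stat:.1f}")
```

A p value would follow from the upper tail of the F distribution with the printed degrees of freedom.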

For those neurons that showed a significant effect of the nonmatch type, the effects of the auditory and visual component changes were tested further with another regression model:

$$
SR = a_0 + a_1 A\Delta + a_2 V\Delta + a_3 A\Delta V\Delta \tag{4}
$$

where SR denotes the spike rate during the match or the nonmatch period, and a0–a3, the regression coefficients. AΔ and VΔ indicate whether the auditory and visual components of the nonmatch, respectively, were changed from the sample movie (0, unchanged; 1, changed). AΔVΔ is an interaction term. This model is equivalent to a two-way ANOVA model with AΔ and VΔ as factors. Note that the auditory component change (A1→A2 or A2→A1) occurred during the nonmatch period in both the audio and AV nonmatch conditions, and the visual component change (V1→V2 or V2→V1), in the video and AV nonmatch conditions. Neither of them occurred during the match period.

After the models were fit, t tests were performed to determine the statistical significance of each regression coefficient. The effects of some independent variables in the regression models were compared based on the standardized regression coefficients (SRCs). The SRC of an independent variable is defined as ai (si/sd), in which ai denotes the raw regression coefficient of the independent variable and si and sd the SDs of the independent variable and the dependent variable, respectively.
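The SRC definition can be made concrete with a small sketch that fits the component-change model (intercept, AΔ, VΔ, and their interaction) and rescales each coefficient by the ratio of SDs. The function names and simulated data are hypothetical: 30 trials per condition, with a stronger effect of the auditory change than of the visual change.

```python
import numpy as np

def fit_ols(X, y):
    """Ordinary least-squares regression coefficients."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def standardized_coefficients(X, y):
    """Return raw coefficients and SRC_i = a_i * (s_i / s_d).

    Column 0 of X is the intercept and has no SRC. s_i is the SD of
    predictor i and s_d is the SD of the dependent variable.
    """
    coef = fit_ols(X, y)
    s_x = X[:, 1:].std(axis=0, ddof=1)
    s_y = y.std(ddof=1)
    return coef, coef[1:] * s_x / s_y

# Simulated spike rates for match (0,0), audio (1,0), video (0,1), AV (1,1).
rng = np.random.default_rng(2)
rows, rates = [], []
for a_delta, v_delta in [(0, 0), (1, 0), (0, 1), (1, 1)]:
    for _ in range(30):
        rows.append([1, a_delta, v_delta, a_delta * v_delta])
        rates.append(8.0 + 4.0 * a_delta + 1.0 * v_delta + rng.normal(0.0, 1.0))

X = np.array(rows, dtype=float)
y = np.array(rates)

coef, src = standardized_coefficients(X, y)
print("raw coefficients a0..a3:", np.round(coef, 2))
print("SRCs for AΔ, VΔ, AΔVΔ:", np.round(src, 2))
```

Because the SRC is unit free, it allows the effects of predictors with different scales or variances to be compared within one model. In a regression with a single predictor it reduces to the Pearson correlation.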

Results

Behavioral performance in the audiovisual nonmatch-to-sample task

The subjects were trained with an audiovisual nonmatch-to-sample task in which an audiovisual face-vocalization movie was presented as the sample and the subjects detected a nonmatching stimulus (Fig. 1). The nonmatch conditions included audiovisual stimuli in which the vocalization component of the face-vocalization track had been replaced (audio nonmatch), the facial gesture video track had been replaced (video nonmatch), or both the face and vocalization components of the sample movie had been replaced (AV nonmatch; Fig. 2). We ran 168 sessions of this task (83 sessions for Monkey P and 85 for Monkey T) and analyzed behavioral performance by examining the success rate and the reaction time.

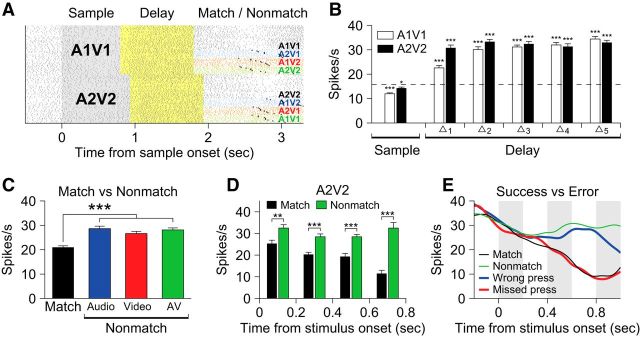

Both subjects performed the task above chance level in all conditions (χ2 test, p < 0.001; Fig. 3A). Comparing between conditions, we found that their success rates in the audio nonmatch condition were significantly lower than in the other nonmatch conditions (χ2 test, p < 0.001). Although previous studies have shown that performance of auditory discrimination by nonhuman primates is reduced compared with visual discrimination (Goldman and Rosvold, 1970; Fritz et al., 2005), it was important for us to confirm that this was not due to a general deficiency in acoustic processing or insufficient training for auditory discrimination. Therefore, we tested the auditory discrimination ability of our subjects with a second discrimination task in which the audio component of the face-vocalization stimuli was replaced with not only a different vocalization but also a noise sound that is easier to distinguish (noise nonmatch; see Materials and Methods). This discrimination test was performed in the same manner as the main study. Monkey P was tested with audio, AV, and noise nonmatch conditions for 86 sessions, and Monkey T, with audio, video, AV, and noise nonmatch conditions for 31 sessions. The results were comparable to those of the main study (Fig. 4). Both subjects performed the task well above chance level in all nonmatch conditions (χ2 test, p < 0.001). As in the main study, the performance in the audio nonmatch condition (i.e., vocalization change) was lower (χ2 test, p < 0.001), but the success rate in the noise nonmatch condition was higher than that in the audio nonmatch (χ2 test, p < 0.001) and as good as the performance in the video and AV nonmatch conditions in both subjects. Both the audio and noise nonmatch conditions require detection of a change in the auditory component of the audiovisual stimulus. If our subjects were impaired in general auditory processing or did not know how to respond to the auditory component change, the same low success rate should have been observed in both noise nonmatch and audio nonmatch conditions. Since this was not the case, we concluded that the low performance in the audio nonmatch condition might simply reflect the difficulty in discriminating between the vocalization stimuli. In fact, these results are similar to those of a recent study that examined auditory discrimination in monkeys with a variety of sound types and found that noise or pure tones were discriminated from monkey vocalizations better than other sound types (Scott et al., 2013).

Figure 4.

Performance in the behavioral task with the noise nonmatch stimulus. The auditory discrimination performance of both subjects was better with stimuli that were acoustically dissimilar to the vocalization (i.e., noise nonmatch, striped bars), compared with the vocalization–vocalization discrimination in the audio nonmatch (black bars). Error bars indicate SEM; ***p < 0.001.

We also analyzed the RT of the subjects. During the S2 nonmatch period, the RTs were significantly different between the subjects and across the nonmatch conditions (two-way ANOVA; Fig. 3B), which was unexpected. First, the RTs of Monkey P were longer than those of Monkey T in all nonmatch conditions (F test, p < 0.001). In our task, the subjects were not required to position their responding hands at a particular location at the beginning of a trial. We observed that Monkey P retracted its hand from the button after making a response and reached out again in the next trial, whereas Monkey T held the hand near the button at all times. Therefore, Monkey P's longer RTs were partly due to longer arm travel distance. Second, the RT in the audio nonmatch condition was significantly slower than those in the other two nonmatch conditions (F test, p < 0.001). This could be due to the fact that, in macaque vocalizations, the auditory component (vocalization) naturally lags behind the onset of the visual component (mouth movement) by hundreds of milliseconds (160–404 ms, 283 ms on average in our stimuli; Fig. 1A), as discussed in previous studies (Ghazanfar et al., 2005; Chandrasekaran and Ghazanfar, 2009). In other words, subjects might be slower in detecting the audio change in the audio nonmatch condition simply because the vocalization occurred later than the video. To fairly compare the time taken from stimulus changes to button responses, we recalculated the RT from audio onset for the audio nonmatch condition (Fig. 3B, white bars). Compared with this recalculated RT, Monkey P actually took longer to respond in the video and AV nonmatch conditions than it did to the audio change in the audio nonmatch condition (t test, p < 0.001) and Monkey T showed no difference (t test, p > 0.69). This suggests that the slow RT in the audio nonmatch condition could be due to the relatively late audio onset rather than the longer processing of the audio mismatch.

The RTs in the S3 nonmatch period were shorter than those in the S2 nonmatch period overall (t test, p < 0.001; Fig. 3C). Furthermore, unlike in the S2 nonmatch period, the RT did not differ significantly across the nonmatch conditions (F test, p = 0.92). This is not surprising since the third stimulus was always a nonmatch and thus required a button press. In fact, in our task, the match/nonmatch decision was required only for the second stimulus that was presented after the sample. The second delay and S3 nonmatch were used so that a behavioral response would be required on every trial. Because a decision was only required for the second stimulus and the forthcoming behavioral response was predictable from the second delay, the neural activity during the S3 nonmatch period will not be considered in the current paper but will be discussed in a separate manuscript.

Neurophysiological responses of VLPFC neurons to face-vocalization stimuli during the sample and delay period

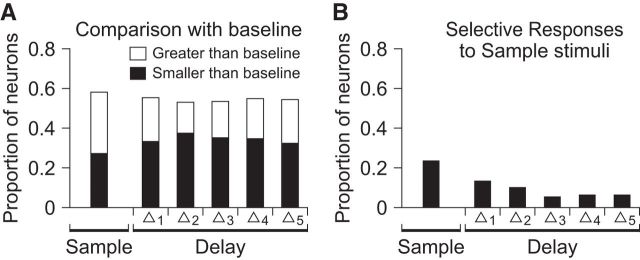

We recorded the activity of 215 neurons from VLPFC (114 from Monkey P, 101 from Monkey T; Fig. 5), while the subjects performed the task with audio, video, and AV nonmatch conditions (Fig. 6A). First, we assessed the responsiveness of VLPFC neurons during our audiovisual task by comparing the activity during the sample and first delay periods with the spontaneous baseline firing rate. The baseline firing rate was estimated from a 500 ms period before the sample stimulus onset. Some neurons not only responded differently from baseline during the sample period, but also maintained differential activity over the first delay period (Fig. 6B). This delay period activity is likely related to the maintenance of the sample stimulus in memory, as previously suggested by sustained activity during the delay period in other working memory tasks (Fuster, 1973; Rosenkilde et al., 1981; Miller et al., 1996; Fuster et al., 2000; Freedman et al., 2001; Plakke et al., 2013b). In total, 58.1% (= 125/215) of the recorded neurons showed significantly different activity during the sample period compared with baseline as did the same proportion of neurons (58.1%) during the first delay period (t test, p < 0.05; Fig. 7A). The proportions of the neurons that had greater or smaller activity than baseline were similar to each other during the sample period [52.8% (= 66/125) and 47.2% (= 59/125), respectively]. During the first delay period, however, 37.6% (= 47/125) showed elevated activity and 62.4% (= 78/125) showed reduced activity (χ2 test, p < 0.05). We also compared the sample and delay activity between the two sample audiovisual movies to determine whether selectivity played a role in the responses. Such differential responses were found in 23.7% (= 51/215) of neurons during the sample period and 12.1% (= 26/215) during the first delay period, indicating some selectivity even with a small stimulus set (t test, p < 0.05; Fig. 7B).

Figure 6.

An example neuron with sustained delay activity and match suppression. A, A raster plot of the activity of a single neuron during the audiovisual nonmatch-to-sample task. Each raster line corresponds to a single trial and each tick represents a single spike. Trials are regrouped according to match/nonmatch conditions and color coded as follows: black, sample/match; blue, audio nonmatch; red, video nonmatch; green, AV nonmatch. The asterisks on the rasters of nonmatch trials indicate the time of button presses. B, Sustained delay activity. The activity of the same neuron during the sample and delay periods is compared with the baseline activity (the dotted line). The size of each bin in the delay period (Δn) is 200 ms. C, The response of this same neuron is significantly decreased to the match stimulus compared with the nonmatch stimuli, while there is no difference among the nonmatch stimuli. The bars are color coded with the same scheme as in A. D, The same neuron's response to stimulus A2V2 when it appeared as a match (black bars) and elicited suppression compared with the response as a nonmatch stimulus (green bars). The result for A1V1 was similar except that the match activity decreased after 200 ms. E, Comparison of neural activity between success and error trials in this single neuron. Neural activity during missed nonmatch responses (missed-press errors) is similar to correct match responses where the button press is withheld. Conversely, wrong presses during the match period resemble correct button presses during the nonmatch stimulus. Error bars indicate SEM; ***p < 0.001; **p < 0.01; *p < 0.05.

Figure 7.

Neurons significantly active during the sample and delay period. A, The proportions of neurons in which activity was significantly different from spontaneous activity during the sample and the delay period (t test, p < 0.05). The size of each bin in the delay period (Δn) is 200 ms. B, The proportions of neurons that showed selective activity in either the sample or the delay period for one of the two sample movies tested (t test, p < 0.05).

We hypothesized that activity related to working memory would be diminished during the second delay period, since the next stimulus was always a nonmatch and there was no need for the subjects to keep the sample stimulus in memory to achieve a correct response. As expected, the proportion of neurons with elevated/suppressed activity decreased from 58.1% (= 125/215; first delay period) to 43.7% (= 94/215; second delay period). In addition, only 40.4% (= 19/47) of the neurons with elevated activity and 65.4% (= 51/78) with suppressed activity during the first delay showed the same behavior during the second delay. The percentage of neurons with stimulus-selective activity during the second delay period was 14.0% (= 30/215), which was not significantly different from that during the first delay period.

VLPFC activity related to working memory during stimulus comparison

Previous working memory studies that were performed with visual or auditory stimuli found that neurons in the lateral prefrontal cortex responded differently to the test stimulus depending on whether it was a match or a nonmatch (Miller et al., 1991, 1996; Plakke et al., 2013b). Some neurons responded more strongly to stimuli that matched the sample than when the same stimuli were nonmatching, referred to as match enhancement, and some responded less to matching than nonmatching stimuli, referred to as match suppression. These match/nonmatch-related modulations indicate that the neurons are involved in comparing the match and nonmatch stimuli with the remembered sample. To see how many of our VLPFC neurons responded differently to the match and nonmatch stimuli, we first compared the activity of the neurons between the match period and the AV nonmatch period in which the identical stimulus was presented. We tested all recorded neurons with a two-way ANOVA, using the stimulus type (A1V1 or A2V2) and the task epochs (match or nonmatch) as the factors. Thirty-six (16.7%) neurons were stimulus selective, 34 (15.8%) neurons distinguished between match and nonmatch stimuli, and 10 (4.7%) neurons showed a significant interaction between the factors (F test, p < 0.05). Among those 34 neurons that responded differently to match and nonmatch stimuli, most (29/34) showed a reduction in the neuronal response to match stimuli while a few (5/34) showed enhancement.
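The two-way ANOVA described above can be illustrated with a minimal sketch. It assumes a balanced design (equal trial counts per cell), which the recorded data may not satisfy, and the firing rates are hypothetical values chosen to mimic a match-suppression neuron, not recorded data. The function name `two_way_anova_f` is ours. Only the F statistics are computed; p values would come from the F distribution with the stated degrees of freedom.

```python
from itertools import product
from statistics import mean

def two_way_anova_f(cells, levels_a, levels_b):
    """F statistics for a balanced two-way ANOVA.

    cells maps (level_a, level_b) -> list of observations, same length per cell.
    Returns (F_a, F_b, F_interaction, df_error).
    """
    n = len(next(iter(cells.values())))  # trials per cell (assumed equal)
    a, b = len(levels_a), len(levels_b)
    grand = mean(x for obs in cells.values() for x in obs)
    mean_a = {i: mean(x for j in levels_b for x in cells[(i, j)]) for i in levels_a}
    mean_b = {j: mean(x for i in levels_a for x in cells[(i, j)]) for j in levels_b}
    mean_cell = {k: mean(v) for k, v in cells.items()}

    # Sums of squares for the two main effects, the interaction, and the error term.
    ss_a = n * b * sum((mean_a[i] - grand) ** 2 for i in levels_a)
    ss_b = n * a * sum((mean_b[j] - grand) ** 2 for j in levels_b)
    ss_ab = n * sum((mean_cell[(i, j)] - mean_a[i] - mean_b[j] + grand) ** 2
                    for i, j in product(levels_a, levels_b))
    ss_err = sum((x - mean_cell[k]) ** 2 for k, obs in cells.items() for x in obs)

    df_err = a * b * (n - 1)
    ms_err = ss_err / df_err
    return (ss_a / (a - 1) / ms_err,
            ss_b / (b - 1) / ms_err,
            ss_ab / ((a - 1) * (b - 1)) / ms_err,
            df_err)

# Hypothetical firing rates (spikes/s), five trials per cell.
stimuli = ["A1V1", "A2V2"]
epochs = ["match", "nonmatch"]
cells = {
    ("A1V1", "match"): [8, 10, 9, 11, 12],
    ("A1V1", "nonmatch"): [18, 20, 19, 21, 22],
    ("A2V2", "match"): [9, 11, 10, 12, 13],
    ("A2V2", "nonmatch"): [19, 21, 20, 22, 23],
}
f_stim, f_epoch, f_inter, df_err = two_way_anova_f(cells, stimuli, epochs)
print(f"stimulus F = {f_stim:.1f}, epoch F = {f_epoch:.1f}, "
      f"interaction F = {f_inter:.1f}, error df = {df_err}")
```

In this toy case only the epoch factor exceeds the critical value of about 4.49 for F(1, 16) at α = 0.05, and the lower rates in the match cells correspond to match suppression.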

In addition to the AV nonmatch stimuli (A1V1 or A2V2), however, our task also includes the audio and video nonmatch stimuli that have one component in common with the sample but are still considered nonmatching. Therefore, we must consider whether a neuron responded to the audio and video nonmatches in the same way as it did to the AV nonmatch, to determine whether the neuron differentiated matches and nonmatches. For that reason, we excluded neurons from this analysis that showed a significantly different response in the audio or video nonmatch condition compared with the AV nonmatch condition. This left 21 neurons that distinguished between matching and nonmatching stimuli. Among those 21 neurons, 4 showed match enhancement and 17 showed match suppression. An example neuron with match suppression is shown in Figure 6. This neuron responded strongly to all three nonmatch types, regardless of the modality of the changed component (Fig. 6C). This effect was not related to the button-press response, since activity related to the button response was excluded from the analysis window (see Materials and Methods) and the suppression in the match period occurred much earlier than the time of button press in this neuron (Fig. 6D).

We further examined how the activity of these VLPFC neurons is correlated with the match and nonmatch decisions of our subjects by comparing the neuronal response on success and error trials. Our subjects sometimes mistook a match stimulus for a nonmatch and pressed the button (wrong-press error), or they did not press the button because they incorrectly judged a nonmatching test stimulus to be a match (missed-press error). In those error trials, the activity of some neurons was modulated not by the actual matching/nonmatching status of the incoming stimuli but by the subjective judgment, or decision, of the monkeys. Therefore, even though the presented stimuli were identical, the neuronal activity differed between wrong-press errors and match responses, as did the activity between missed-press errors and nonmatch responses (Fig. 6E). Among the 21 neurons with match enhancement or match suppression, 6 were excluded due to an insufficient number of error trials, and 9 of the remaining 15 showed significantly different responses between success and error trials during the match and nonmatch periods (t test; p < 0.05).

Response of VLPFC neurons to the different nonmatch types

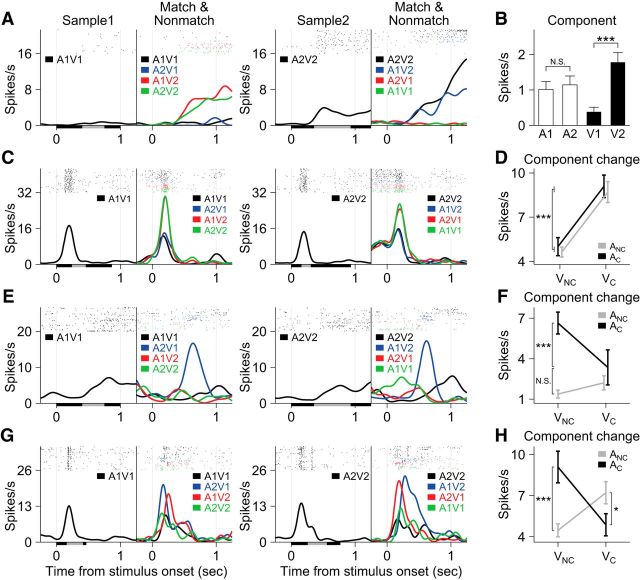

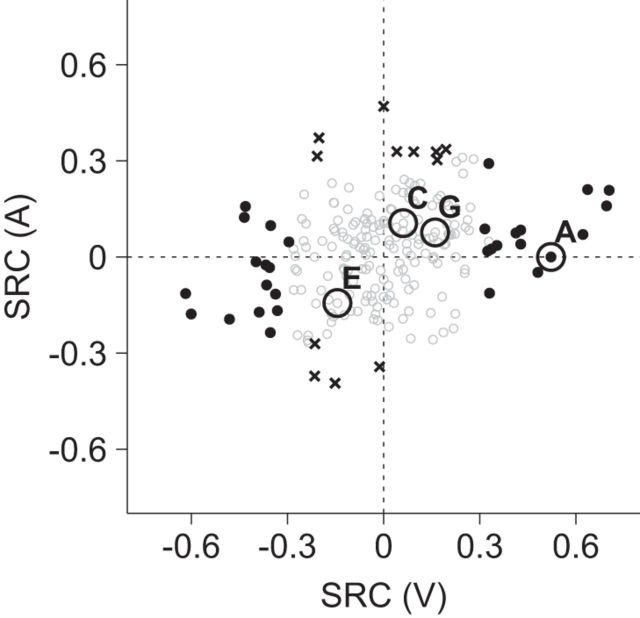

We were most interested in whether and how VLPFC neurons responded to the different nonmatch conditions. Many neurons modulated their activity according to one or more variables in our regression model, which allowed us to differentiate the effects of particular stimuli, or of their auditory and visual components, as well as the effects of task variables such as the nonmatch type (Eq. 1; see Materials and Methods). In some neurons, the activity during the nonmatch period was simply modulated by a particular sensory component of the nonmatch stimuli. For example, the neuron shown in Figure 8A increased its activity whenever the presented stimulus included the face movie from the second sample movie, V2. The fact that the neuron is responsive to a visual component is confirmed by grouping and comparing trials according to the component included in the match/nonmatch stimuli (Fig. 8B). There was no difference in response between conditions that included A1 and those that included A2, but there was a significant increase for conditions that included V2 compared with those that included V1. From the regression analysis, 30 (14.0%) neurons showed a significant modulation by visual components (Vn) and 12 (5.6%) by auditory components (An; t test, p < 0.05; Fig. 9). The proportions of these two neuron groups were significantly different (χ² test, p < 0.01), indicating that more neurons were responsive to the visual than to the auditory components of the vocalization movies.
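As a rough check on this comparison, the two proportions (30/215 and 12/215) can be entered into a 2 × 2 Pearson χ² test. The sketch below is an illustration only: it assumes the two counts are treated as independent samples of 215 neurons each, and the exact table layout and any continuity correction used in the original analysis are not stated here. The function name `chi_square_2x2` is ours.

```python
import math

def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p value) with 1 degree of freedom.
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Optional Yates continuity correction.
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom the chi-square survival function is erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p

n_neurons = 215
visual, auditory = 30, 12  # neurons with a significant Vn or An effect

stat, p = chi_square_2x2(visual, n_neurons - visual,
                         auditory, n_neurons - auditory)
stat_yates, p_yates = chi_square_2x2(visual, n_neurons - visual,
                                     auditory, n_neurons - auditory, yates=True)
print(f"uncorrected: chi2 = {stat:.2f}, p = {p:.4f}")
print(f"Yates:       chi2 = {stat_yates:.2f}, p = {p_yates:.4f}")
```

Under these assumptions both the uncorrected and the corrected statistic give p < 0.01, in line with the reported result.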

Figure 8.

Four example neurons that are responsive to stimulus or component change(s) during the nonmatch periods. A, C, E, G, Spike-density functions and rasters are colored as follows: black, sample/match; blue, audio nonmatch; red, video nonmatch; green, AV nonmatch. Black and gray bars on the abscissae indicate the duration of the video and audio components of the sample stimuli, respectively. A, B, A neuron that responds to a particular visual component (V2) of the audiovisual stimuli. The activity during the match and nonmatch periods is sorted according to the auditory or visual component included in the stimuli and presented in B, which shows the spike rate was greater for all stimuli containing V2. C, D, A neuron that had a significant change in firing for a unisensory (visual) component change between the samples and the nonmatches (V1→V2 or V2→V1). D, The activity during the match and nonmatch periods is regrouped according to whether the auditory and visual components of the stimuli are repeated or changed. E–H, Two neurons are shown which have responses that are dependent on the change status of both sensory components (multisensory component change neurons). E and F show a neuron with an increase in activity during the audio nonmatch where the auditory component changes but the visual component does not and G and H depict a neuron that responded significantly to both auditory and visual component changes but only when one component changes at a time. F and H are in the same format as D. Error bars indicate SEM; ***p < 0.001; *p < 0.05. ANC, audio no-change; AC, audio change; VNC, video no-change; VC, video change.

Figure 9.

Effects of auditory and visual components. The standardized regression coefficients (SRCs) for the auditory components (A) in Equation 1 are plotted against the SRCs for the visual components (V). A positive SRC indicates that the neuron showed a greater response to A2 (or V2) than to A1 (or V1); a negative SRC indicates the opposite. Filled circles (black) and black crosses correspond to the neurons with a significant effect of the visual components or the auditory components, respectively. Open circles (gray) are the neurons with no significant effect of the auditory or visual components. A, C, E, and G indicate data points from the example neurons shown in Figure 8A, C, E, and G. Note that the C, E, and G neurons did not show a significant effect of the auditory or visual components.

The activity of some neurons was related to the nonmatch type. For example, the neuron shown in Figure 8C increased its activity during the nonmatch period for the video nonmatch (red) and AV nonmatch (green) conditions. Another example neuron shown in Figure 8E exhibited an increase for the audio nonmatch (blue) conditions only. Note that this nonmatch type effect was not stimulus specific: it was not dependent on either a particular sample stimulus or a particular auditory or visual component (Fig. 9) but upon the rule of which component of the nonmatch stimulus had changed from the sample. We identified 32 neurons with a significant effect of the nonmatch type (F test, p < 0.05; Eq. 3).

We tested these nonmatch-type neurons further with another regression model to investigate how their activity was modulated by the change of each sensory component in the nonmatch stimuli (Eq. 4; see Materials and Methods). This analysis revealed that some neurons responded to the change of one sensory component while others were modulated by particular combinations of the auditory and visual changes. For example, the activity of the neuron in Figure 8C was enhanced when the visual component changed from the sample stimulus in the video and AV nonmatch conditions (V1→V2 or V2→V1). However, this activity modulation was unrelated to the auditory component change, since the neuron's response did not differ between the two nonmatch conditions, whether the auditory component was changed (AV nonmatch) or not (video nonmatch; Fig. 8D). On the other hand, the neuron in Figure 8E increased its activity when the auditory component switched but the visual component remained unchanged (A1V1→A2V1 or A2V2→A1V2; Fig. 8F), which cannot be accounted for by either auditory component change or visual component change alone. These results indicate that some VLPFC neurons are sensitive to the change of one sensory component from the sample stimulus (unisensory component change neurons), while other neurons are sensitive to the changes of both auditory and visual components (multisensory component change neurons). The neuron shown in Figure 8, G and H, is another multisensory component change neuron, which increased its activity when either the auditory or the visual component changed alone (i.e., in the audio and video nonmatch conditions), but not when both or neither of the components changed (i.e., in the AV nonmatch and match conditions).

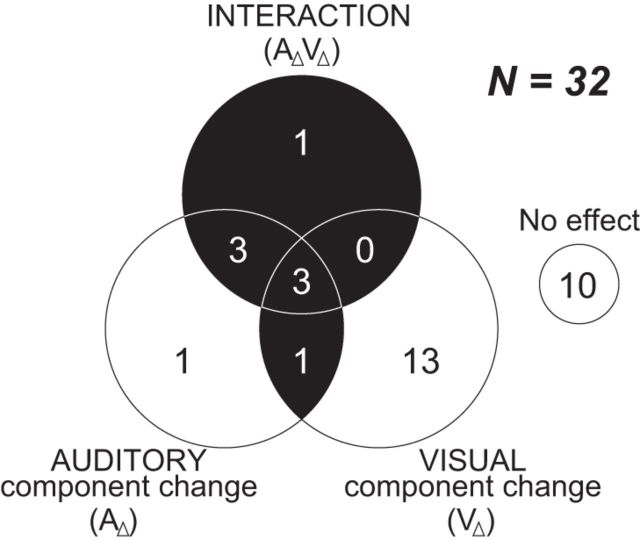

The effect of the multiple component changes can be represented by either the main effects of both auditory and visual component changes (AΔ and VΔ, respectively) or their interaction (AΔVΔ) in our model (Eq. 4). Of 32 nonmatch-type neurons, we found 8 (= 25.0%) multisensory component change neurons (1 neuron with significant main effects of both AΔ and VΔ; 7 neurons with the interaction effect; Fig. 10). In addition, 14 (= 43.8%) were unisensory component change neurons and 10 neurons (= 31.3%) showed neither the effect of AΔ nor VΔ. Note that most of the unisensory component change neurons were sensitive to the visual component change (1 with the main effect of AΔ alone; 13 with the main effect of VΔ alone). We also noted that the neurons that were responsive to the component change(s), especially the multisensory component change neurons, were mostly located near the inferior prefrontal dimple (IPD) or in areas lateral to it (Fig. 5), a location where previous studies have noted overlapping representation of face-, vocalization-, and multisensory-responsive neurons (Sugihara et al., 2006; Romanski and Averbeck, 2009).
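To illustrate how main effects and an interaction can capture these response patterns, the sketch below solves a saturated 2 × 2 model from four condition means. It is not a reproduction of Equation 4: the 0/1 dummy coding of AΔ and VΔ, the function name `change_effects`, and the firing rates are assumptions made for illustration, and no significance testing is included. With a different coding scheme (e.g., ±1 effect coding) the main-effect values would differ.

```python
def change_effects(rates):
    """Coefficients of rate = b0 + bA*dA + bV*dV + bAV*dA*dV.

    dA (dV) is 1 when the auditory (visual) component changed from the sample
    and 0 otherwise. With four condition means the model is saturated, so the
    coefficients follow directly from differences of the means.
    """
    m00 = rates["match"]           # dA = 0, dV = 0
    m10 = rates["audio_nonmatch"]  # dA = 1, dV = 0
    m01 = rates["video_nonmatch"]  # dA = 0, dV = 1
    m11 = rates["av_nonmatch"]     # dA = 1, dV = 1
    return {
        "b0": m00,
        "bA": m10 - m00,
        "bV": m01 - m00,
        "bAV": m11 - m10 - m01 + m00,
    }

# Hypothetical mean rates (spikes/s) mimicking the three response patterns.
patterns = {
    # Responds whenever the visual component changes (cf. Fig. 8C).
    "visual change only": {"match": 10, "audio_nonmatch": 10,
                           "video_nonmatch": 20, "av_nonmatch": 20},
    # Responds only when audio changes and video is unchanged (cf. Fig. 8E).
    "audio change, video same": {"match": 10, "audio_nonmatch": 20,
                                 "video_nonmatch": 10, "av_nonmatch": 10},
    # Responds when exactly one component changes (cf. Fig. 8G).
    "one change at a time": {"match": 10, "audio_nonmatch": 20,
                             "video_nonmatch": 20, "av_nonmatch": 10},
}
effects = {name: change_effects(r) for name, r in patterns.items()}
for name, coef in effects.items():
    print(name, coef)
```

In this coding, the first pattern yields only a VΔ term, whereas the other two require a nonzero interaction term, which is the signature used above to identify multisensory component change neurons.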

Figure 10.

Summary of neuronal responses to the component change(s) during the nonmatch period. The neurons with an effect of the nonmatch type are grouped by the result of the regression analysis (Eq. 4). AΔ indicates the change of the auditory component between the sample and the test stimulus (A1→A2 or A2→A1) and VΔ indicates the change of the visual component (V1→V2 or V2→V1). AΔVΔ is the interaction. The black area indicates multisensory component change neurons that had significant main effects of both AΔ and VΔ, or a significant interaction effect.

Discussion

In this study, we investigated the activity of VLPFC neurons while nonhuman primates remembered and discriminated complex, natural audiovisual stimuli. Our task is a novel nonmatch-to-sample paradigm, which employs vocalizations and their accompanying facial gestures and requires using information from both auditory and visual stimuli simultaneously, as we do during face-to-face communication. VLPFC neurons were active during several phases of the audiovisual task, and more than half of the neurons were responsive during the sample period and often maintained this activity during the delay period. In the nonmatch period, VLPFC neurons exhibited both stimulus and task-related activity with some neurons responding to the particular face or the vocalization presented, while other neurons showed evidence of WM for the nonmatch target. We also found that responses to the nonmatch stimuli were complex and multisensory, in the sense that the response to one auditory or visual component change was often dependent on a change in the other component. This suggests that information about auditory and visual stimuli maintained in WM is integrated across modalities in VLPFC and that VLPFC is an important site for audiovisual integration and memory.

Working memory and cross-modal information integration in VLPFC

Previous investigations have shown that the lateral prefrontal cortex is important for WM functions and that there may be areal segregation for spatial and nonspatial information processing (Wilson et al., 1993; Belger et al., 1998; Courtney et al., 1998; Hoshi et al., 2000; Rämä et al., 2004; Romanski, 2004; Nee et al., 2013). Evidence suggests that dorsolateral prefrontal cortex (DLPFC) may be specialized for processing visual and auditory spatial cues (Watanabe, 1981; Funahashi et al., 1989; Quintana and Fuster, 1992; Kikuchi-Yorioka and Sawaguchi, 2000; Constantinidis et al., 2001), whereas VLPFC is specialized for processing nonspatial features of stimuli (e.g., color and shape) and the identity of faces and vocalizations (Rosenkilde et al., 1981; Wilson et al., 1993; O Scalaidhe et al., 1997; Rämä and Courtney, 2005). Our results are in accordance with these findings in that VLPFC neurons showed task-related activity such as sustained delay activity and match/nonmatch preference in our WM task using face and vocalization stimuli and therefore support the idea that VLPFC has a role in object WM.

In addition, our findings indicate that VLPFC is involved in integrating information from different modalities during WM. During nonmatch detection, the activation of some VLPFC neurons was not dependent on whether the changed component was auditory or visual but whether the test stimulus was matching or nonmatching. This suggests that modality-specific information about the stimulus is combined and used to guide a decision based on the task rule. Moreover, the discovery of multisensory component change neurons, which attend to information from both auditory and visual channels and can encode whether the stimulus change occurs in both channels or in only one of them, provides direct evidence of cross-modal integration in VLPFC. While prefrontal neurons have previously been reported to integrate within-modal information in WM (Quintana and Fuster, 1993; Baker et al., 1996; Rao et al., 1997; D'Esposito et al., 1998; Rainer et al., 1998; Prabhakaran et al., 2000; Sala and Courtney, 2007), the role of the prefrontal cortex in cross-modal integration during WM has been investigated in only a few studies. Some showed that single prefrontal neurons represented behaviorally meaningful auditory and visual associations (Fuster et al., 2000; Zhang et al., 2014) and others demonstrated that neurons in DLPFC are engaged in parallel processing of auditory and visual spatial information during WM maintenance (Kikuchi-Yorioka and Sawaguchi, 2000; Artchakov et al., 2007). Our study is the first to demonstrate that VLPFC neurons perform nonspatial mnemonic functions across modalities.

The audiovisual task used in the current study to examine audiovisual WM is novel in its reliance on dynamic, naturalistic, and species-specific face-vocalization stimuli across all task epochs. Previous experiments on WM for communication stimuli presented face and vocal stimuli separately (Schumacher et al., 1996; Kamachi et al., 2003; Crottaz-Herbette et al., 2004) or let subjects choose which modality to remember based on saliency or by instruction (Parr, 2004; Rämä and Courtney, 2005). Our audiovisual paradigm allows us to examine how cross-modal integration of WM occurs using the same stimuli that comprise face-to-face communication and which VLPFC neurons have been shown to prefer (O Scalaidhe et al., 1997; Romanski and Goldman-Rakic, 2002; Tsao et al., 2008).

Auditory discrimination in nonhuman primates

Some recent studies, which showed that macaques had relatively poor performance in an auditory match-to-sample paradigm (Scott et al., 2012, 2013), concluded that macaques have a “limited form” of auditory WM. This conflicts with our current results where we have noted sustained delay activity and match suppression/enhancement in VLPFC neurons when audiovisual comparisons including auditory discrimination were required. These are common neuronal correlates of WM (Miller et al., 1991, 1996; Plakke et al., 2013b) and we have shown for the first time that they are present with compound audiovisual stimuli. One difference from previous tasks is that we used a nonmatch-to-sample paradigm in which the intervening, repeated stimulus was identical to the sample and was therefore less susceptible to retroactive interference than memoranda in the match-to-sample task (Scott et al., 2012, 2013). This modification may account for some of the differences in neuronal activity and behavior.

We found that vocalization discrimination performance was inferior to face discrimination performance. Previous studies have shown that macaques are slow in learning auditory delayed match- or nonmatch-to-sample tasks and performance remains poor even after prolonged training (Wright et al., 1990; Fritz et al., 2005; Ng et al., 2009; Scott et al., 2012), compared with visual discrimination performance. This difference between auditory and visual discrimination may be related to behavior in their natural habitat, where most communication exchanges occur in close proximity, or face-to-face, since macaques are not arboreal like New World monkeys. Some researchers report that rhesus monkeys rely on vocalizations in social interaction only 5–32% of the time (Altmann, 1967; Partan, 2002), suggesting that facial cues may be more prominent in social interactions. Our analysis revealed that there are fewer VLPFC neurons responsive to the vocalization component of the audiovisual stimulus, or its change, compared with the number of neurons responsive to the face or the change of the face. These results parallel findings of greater numbers of visual unimodal responses compared with auditory unimodal responses in VLPFC (Sugihara et al., 2006; Diehl and Romanski, 2014), and in the monkey amygdala, another face-vocalization integration area, where many neurons are robustly responsive to faces (Gothard et al., 2007; Kuraoka and Nakamura, 2007; Mosher et al., 2010), but fewer neurons respond to corresponding vocalizations (Kuraoka and Nakamura, 2007). Moreover, even human subjects fare better at face recognition than voice recognition in many situations (Colavita, 1974; Hanley et al., 1998; Joassin et al., 2004; Calder and Young, 2005; Sinnett et al., 2007). Nonetheless, when we substituted an acoustically dissimilar noise stimulus as the nonmatch instead of a mismatching vocalization, discrimination performance was greatly improved, suggesting that vocal discrimination difficulties may be due to feature similarity rather than mnemonic capacity (Scott et al., 2013).

Comparative similarities between VLPFC and human IFG

It has been suggested that VLPFC and the human IFG are homologous because they share similar cytoarchitectonic features (Petrides and Pandya, 2002) and may have some functional similarities as well (Romanski, 2012). The human IFG, including Broca's area, has long been linked with speech and language processes (Geschwind, 1970; Dronkers et al., 2007; Grodzinsky and Santi, 2008). Similarly, VLPFC has a discrete auditory region where neurons respond to complex stimuli including species-specific vocalizations (Romanski and Goldman-Rakic, 2002). These neurons tend to respond to multiple vocalizations on the basis of acoustic features (Romanski et al., 2005), which is consistent with a notion that VLPFC is part of the ventral auditory processing stream that analyzes the features of auditory objects (Belin et al., 2000; Binder et al., 2000; Scott et al., 2000; Zatorre et al., 2004). Moreover, macaque VLPFC is adjacent to vocal motor control regions (Petrides et al., 2005) and receives afferents from temporal lobe auditory regions (Petrides and Pandya, 1988; Barbas, 1992; Hackett et al., 1999; Romanski et al., 1999a, b; Saleem et al., 2014).

The human IFG is also involved in maintaining and integrating auditory and visual WM, which is necessary during communication. Human imaging studies have revealed that IFG is coactivated during auditory and visual verbal WM tasks or during delayed recognition tasks for unfamiliar faces and voices (Schumacher et al., 1996; Crottaz-Herbette et al., 2004; Rämä and Courtney, 2005), suggesting a role in nonspatial information processing independent of the stimulus modality, similar to the role of the neurons localized to the macaque VLPFC here. Therefore, our study provides support for the hypothesis that VLPFC and the human IFG share not only similar cytoarchitecture but also a similar function in the integration and mnemonic processing of cross-modal communication information.

Footnotes

This work was supported by National Institutes of Health Grant DC04845 (L.M.R.) and the Schmitt Program on Integrative Brain Research.

The authors declare no competing financial interests.

References

- Altmann SA. Social communication among primates. Chicago: University of Chicago Press; 1967. The structure of primate social communication; pp. 325–362. [Google Scholar]

- Artchakov D, Tikhonravov D, Vuontela V, Linnankoski I, Korvenoja A, Carlson S. Processing of auditory and visual location information in the monkey prefrontal cortex. Exp Brain Res. 2007;180:469–479. doi: 10.1007/s00221-007-0873-8. [DOI] [PubMed] [Google Scholar]

- Baker SC, Frith CD, Frackowiak RS, Dolan RJ. Active representation of shape and spatial location in man. Cereb Cortex. 1996;6:612–619. doi: 10.1093/cercor/6.4.612. [DOI] [PubMed] [Google Scholar]

- Barbas H. Architecture and cortical connections of the prefrontal cortex in the rhesus monkey. Adv Neurol. 1992;57:91–115. [PubMed] [Google Scholar]

- Belger A, Puce A, Krystal JH, Gore JC, Goldman-Rakic P, McCarthy G. Dissociation of mnemonic and perceptual processes during spatial and nonspatial working memory using fMRI. Hum Brain Mapp. 1998;6:14–32. doi: 10.1002/(SICI)1097-0193(1998)6:1<14::AID-HBM2>3.0.CO%3B2-O. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078. [DOI] [PubMed] [Google Scholar]

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512. [DOI] [PubMed] [Google Scholar]

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724. [DOI] [PubMed] [Google Scholar]

- Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14:427–438. doi: 10.1006/nimg.2001.0812. [DOI] [PubMed] [Google Scholar]

- Campanella S, Belin P. Integrating face and voice in person perception. Trends Cogn Sci. 2007;11:535–543. doi: 10.1016/j.tics.2007.10.001. [DOI] [PubMed] [Google Scholar]

- Chandrasekaran C, Ghazanfar AA. Different neural frequency bands integrate faces and voices differently in the superior temporal sulcus. J Neurophysiol. 2009;101:773–788. doi: 10.1152/jn.90843.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Colavita FB. Human sensory dominance. Percept Psychophys. 1974;16:409–412. doi: 10.3758/BF03203962. [DOI] [Google Scholar]

- Constantinidis C, Franowicz MN, Goldman-Rakic PS. The sensory nature of mnemonic representation in the primate prefrontal cortex. Nat Neurosci. 2001;4:311–316. doi: 10.1038/85179. [DOI] [PubMed] [Google Scholar]

- Courtney SM, Petit L, Maisog JM, Ungerleider LG, Haxby JV. An area specialized for spatial working memory in human frontal cortex. Science. 1998;279:1347–1351. doi: 10.1126/science.279.5355.1347. [DOI] [PubMed] [Google Scholar]

- Crottaz-Herbette S, Anagnoson RT, Menon V. Modality effects in verbal working memory: differential prefrontal and parietal responses to auditory and visual stimuli. Neuroimage. 2004;21:340–351. doi: 10.1016/j.neuroimage.2003.09.019. [DOI] [PubMed] [Google Scholar]

- D'Esposito M, Aguirre GK, Zarahn E, Ballard D, Shin RK, Lease J. Functional MRI studies of spatial and nonspatial working memory. Brain Res Cogn Brain Res. 1998;7:1–13. doi: 10.1016/S0926-6410(98)00004-4. [DOI] [PubMed] [Google Scholar]

- Diehl MM, Romanski LM. Responses of prefrontal multisensory neurons to mismatching faces and vocalizations. J Neurosci. 2014;34:11233–11243. doi: 10.1523/JNEUROSCI.5168-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dronkers NF, Plaisant O, Iba-Zizen MT, Cabanis EA. Paul Broca's historic cases: high resolution MR imaging of the brains of Leborgne and Lelong. Brain. 2007;130:1432–1441. doi: 10.1093/brain/awm042. [DOI] [PubMed] [Google Scholar]

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Categorical representation of visual stimuli in the primate prefrontal cortex. Science. 2001;291:312–316. doi: 10.1126/science.291.5502.312. [DOI] [PubMed] [Google Scholar]

- Fritz J, Mishkin M, Saunders RC. In search of an auditory engram. Proc Natl Acad Sci U S A. 2005;102:9359–9364. doi: 10.1073/pnas.0503998102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331. [DOI] [PubMed] [Google Scholar]

- Fuster JM. Unit activity in prefrontal cortex during delayed-response performance: neuronal correlates of transient memory. J Neurophysiol. 1973;36:61–78. doi: 10.1152/jn.1973.36.1.61. [DOI] [PubMed] [Google Scholar]

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613. [DOI] [PubMed] [Google Scholar]

- Geschwind N. The organization of language and the brain. Science. 1970;170:940–944. doi: 10.1126/science.170.3961.940. [DOI] [PubMed] [Google Scholar]

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25:5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghazanfar AA, Chandrasekaran C, Morrill RJ. Dynamic, rhythmic facial expressions and the superior temporal sulcus of macaque monkeys: implications for the evolution of audiovisual speech. Eur J Neurosci. 2010;31:1807–1817. doi: 10.1111/j.1460-9568.2010.07209.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldman PS, Rosvold HE. Localization of function within the dorsolateral prefrontal cortex of the rhesus monkey. Exp Neurol. 1970;27:291–304. doi: 10.1016/0014-4886(70)90222-0. [DOI] [PubMed] [Google Scholar]

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol. 2007;97:1671–1683. doi: 10.1152/jn.00714.2006. [DOI] [PubMed] [Google Scholar]

- Grodzinsky Y, Santi A. The battle for Broca's region. Trends Cogn Sci. 2008;12:474–480. doi: 10.1016/j.tics.2008.09.001. [DOI] [PubMed] [Google Scholar]

- Hackett TA, Stepniewska I, Kaas JH. Prefrontal connections of the parabelt auditory cortex in macaque monkeys. Brain Res. 1999;817:45–58. doi: 10.1016/S0006-8993(98)01182-2. [DOI] [PubMed] [Google Scholar]

- Hanley JR, Smith ST, Hadfield J. I recognise you but I can't place you: an investigation of familiar-only experiences during tests of voice and face recognition. Q J Exp Psychol. 1998;51:179–195. doi: 10.1080/027249898391819. [DOI] [Google Scholar]

- Homae F, Hashimoto R, Nakajima K, Miyashita Y, Sakai KL. From perception to sentence comprehension: the convergence of auditory and visual information of language in the left inferior frontal cortex. Neuroimage. 2002;16:883–900. doi: 10.1006/nimg.2002.1138. [DOI] [PubMed] [Google Scholar]

- Hoshi E, Shima K, Tanji J. Neuronal activity in the primate prefrontal cortex in the process of motor selection based on two behavioral rules. J Neurophysiol. 2000;83:2355–2373. doi: 10.1152/jn.2000.83.4.2355. [DOI] [PubMed] [Google Scholar]

- Joassin F, Maurage P, Bruyer R, Crommelinck M, Campanella S. When audition alters vision: an event-related potential study of the cross-modal interactions between faces and voices. Neurosci Lett. 2004;369:132–137. doi: 10.1016/j.neulet.2004.07.067. [DOI] [PubMed] [Google Scholar]

- Jones JA, Callan DE. Brain activity during audiovisual speech perception: an fMRI study of the McGurk effect. Neuroreport. 2003;14:1129–1133. doi: 10.1097/00001756-200306110-00006. [DOI] [PubMed] [Google Scholar]

- Kamachi M, Hill H, Lander K, Vatikiotis-Bateson E. “Putting the face to the voice”: matching identity across modality. Curr Biol. 2003;13:1709–1714. doi: 10.1016/j.cub.2003.09.005. [DOI] [PubMed] [Google Scholar]

- Kikuchi-Yorioka Y, Sawaguchi T. Parallel visuospatial and audiospatial working memory processes in the monkey dorsolateral prefrontal cortex. Nat Neurosci. 2000;3:1075–1076. doi: 10.1038/80581. [DOI] [PubMed] [Google Scholar]

- Kuraoka K, Nakamura K. Responses of single neurons in monkey amygdala to facial and vocal emotions. J Neurophysiol. 2007;97:1379–1387. doi: 10.1152/jn.00464.2006. [DOI] [PubMed] [Google Scholar]

- Lee H, Noppeney U. Physical and perceptual factors shape the neural mechanisms that integrate audiovisual signals in speech comprehension. J Neurosci. 2011;31:11338–11350. doi: 10.1523/JNEUROSCI.6510-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0. [DOI] [PubMed] [Google Scholar]

- Miller EK, Li L, Desimone R. A neural mechanism for working and recognition memory in inferior temporal cortex. Science. 1991;254:1377–1379. doi: 10.1126/science.1962197.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Miller LM, D'Esposito M. Perceptual fusion and stimulus coincidence in the cross-modal integration of speech. J Neurosci. 2005;25:5884–5893. doi: 10.1523/JNEUROSCI.0896-05.2005.

- Mosher CP, Zimmerman PE, Gothard KM. Response characteristics of basolateral and centromedial neurons in the primate amygdala. J Neurosci. 2010;30:16197–16207. doi: 10.1523/JNEUROSCI.3225-10.2010.

- Murray MM, Wallace MT. The neural bases of multisensory processes. Boca Raton, FL: CRC; 2012.

- Nee DE, Brown JW, Askren MK, Berman MG, Demiralp E, Krawitz A, Jonides J. A meta-analysis of executive components of working memory. Cereb Cortex. 2013;23:264–282. doi: 10.1093/cercor/bhs007.

- Ng CW, Plakke B, Poremba A. Primate auditory recognition memory performance varies with sound type. Hear Res. 2009;256:64–74. doi: 10.1016/j.heares.2009.06.014.

- Noppeney U, Ostwald D, Werner S. Perceptual decisions formed by accumulation of audiovisual evidence in prefrontal cortex. J Neurosci. 2010;30:7434–7446. doi: 10.1523/JNEUROSCI.0455-10.2010.

- Ojanen V, Möttönen R, Pekkola J, Jääskeläinen IP, Joensuu R, Autti T, Sams M. Processing of audiovisual speech in Broca's area. Neuroimage. 2005;25:333–338. doi: 10.1016/j.neuroimage.2004.12.001.

- O Scalaidhe SP, Wilson FA, Goldman-Rakic PS. Areal segregation of face-processing neurons in prefrontal cortex. Science. 1997;278:1135–1138. doi: 10.1126/science.278.5340.1135.

- Parr LA. Perceptual biases for multimodal cues in chimpanzee (Pan troglodytes) affect recognition. Anim Cogn. 2004;7:171–178. doi: 10.1007/s10071-004-0207-1.

- Partan SR. Single and multichannel signal composition: facial expressions and vocalizations of rhesus macaques (Macaca mulatta). Behaviour. 2002;139:993–1027. doi: 10.1163/15685390260337877.

- Paulesu E, Frith CD, Frackowiak RS. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- Petrides M, Pandya DN. Association fiber pathways to the frontal cortex from the superior temporal region in the rhesus monkey. J Comp Neurol. 1988;273:52–66. doi: 10.1002/cne.902730106.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Petrides M, Cadoret G, Mackey S. Orofacial somatomotor responses in the macaque monkey homologue of Broca's area. Nature. 2005;435:1235–1238. doi: 10.1038/nature03628.

- Plakke B, Romanski LM. Auditory connections and functions of prefrontal cortex. Front Neurosci. 2014;8:199. doi: 10.3389/fnins.2014.00199.

- Plakke B, Diltz MD, Romanski LM. Coding of vocalizations by single neurons in ventrolateral prefrontal cortex. Hear Res. 2013a;305:135–143. doi: 10.1016/j.heares.2013.07.011.

- Plakke B, Ng CW, Poremba A. Neural correlates of auditory recognition memory in primate lateral prefrontal cortex. Neuroscience. 2013b;244:62–76. doi: 10.1016/j.neuroscience.2013.04.002.

- Prabhakaran V, Narayanan K, Zhao Z, Gabrieli JD. Integration of diverse information in working memory within the frontal lobe. Nat Neurosci. 2000;3:85–90. doi: 10.1038/71156.

- Preuss TM, Goldman-Rakic PS. Myelo- and cytoarchitecture of the granular frontal cortex and surrounding regions in the strepsirhine primate Galago and the anthropoid primate Macaca. J Comp Neurol. 1991;310:429–474. doi: 10.1002/cne.903100402.

- Quintana J, Fuster JM. Mnemonic and predictive functions of cortical neurons in a memory task. Neuroreport. 1992;3:721–724. doi: 10.1097/00001756-199208000-00018.

- Quintana J, Fuster JM. Spatial and temporal factors in the role of prefrontal and parietal cortex in visuomotor integration. Cereb Cortex. 1993;3:122–132. doi: 10.1093/cercor/3.2.122.

- Rainer G, Asaad WF, Miller EK. Memory fields of neurons in the primate prefrontal cortex. Proc Natl Acad Sci U S A. 1998;95:15008–15013. doi: 10.1073/pnas.95.25.15008.

- Rämä P, Courtney SM. Functional topography of working memory for face or voice identity. Neuroimage. 2005;24:224–234. doi: 10.1016/j.neuroimage.2004.08.024.

- Rämä P, Poremba A, Sala JB, Yee L, Malloy M, Mishkin M, Courtney SM. Dissociable functional cortical topographies for working memory maintenance of voice identity and location. Cereb Cortex. 2004;14:768–780. doi: 10.1093/cercor/bhh037.

- Rao SC, Rainer G, Miller EK. Integration of what and where in the primate prefrontal cortex. Science. 1997;276:821–824. doi: 10.1126/science.276.5313.821.

- Romanski LM. Domain specificity in the primate prefrontal cortex. Cogn Affect Behav Neurosci. 2004;4:421–429. doi: 10.3758/CABN.4.4.421.

- Romanski LM. Integration of faces and vocalizations in ventral prefrontal cortex: implications for the evolution of audiovisual speech. Proc Natl Acad Sci U S A. 2012;109(Suppl):10717–10724. doi: 10.1073/pnas.1204335109.

- Romanski LM, Averbeck BB. The primate cortical auditory system and neural representation of conspecific vocalizations. Annu Rev Neurosci. 2009;32:315–346. doi: 10.1146/annurev.neuro.051508.135431.

- Romanski LM, Goldman-Rakic PS. An auditory domain in primate prefrontal cortex. Nat Neurosci. 2002;5:15–16. doi: 10.1038/nn781.

- Romanski LM, Hwang J. Timing of audiovisual inputs to the prefrontal cortex and multisensory integration. Neuroscience. 2012;214:36–48. doi: 10.1016/j.neuroscience.2012.03.025.

- Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999a;403:141–157. doi: 10.1002/(SICI)1096-9861(19990111)403:2<141::AID-CNE1>3.0.CO;2-V.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999b;2:1131–1136. doi: 10.1038/16056.

- Romanski LM, Averbeck BB, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J Neurophysiol. 2005;93:734–747. doi: 10.1152/jn.00675.2004.

- Rosenkilde CE, Bauer RH, Fuster JM. Single cell activity in ventral prefrontal cortex of behaving monkeys. Brain Res. 1981;209:375–394. doi: 10.1016/0006-8993(81)90160-8.

- Sala JB, Courtney SM. Binding of what and where during working memory maintenance. Cortex. 2007;43:5–21. doi: 10.1016/S0010-9452(08)70442-8.

- Saleem KS, Miller B, Price JL. Subdivisions and connectional networks of the lateral prefrontal cortex in the macaque monkey. J Comp Neurol. 2014;522:1641–1690. doi: 10.1002/cne.23498.

- Scalaidhe SP, Wilson FA, Goldman-Rakic PS. Face-selective neurons during passive viewing and working memory performance of rhesus monkeys: evidence for intrinsic specialization of neuronal coding. Cereb Cortex. 1999;9:459–475. doi: 10.1093/cercor/9.5.459.

- Schumacher EH, Lauber E, Awh E, Jonides J, Smith EE, Koeppe RA. PET evidence for an amodal verbal working memory system. Neuroimage. 1996;3:79–88. doi: 10.1006/nimg.1996.0009.

- Scott BH, Mishkin M, Yin P. Monkeys have a limited form of short-term memory in audition. Proc Natl Acad Sci U S A. 2012;109:12237–12241. doi: 10.1073/pnas.1209685109.

- Scott BH, Mishkin M, Yin P. Effect of acoustic similarity on short-term auditory memory in the monkey. Hear Res. 2013;298:36–48. doi: 10.1016/j.heares.2013.01.011.

- Scott SK, Blank CC, Rosen S, Wise RJ. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Sinnett S, Spence C, Soto-Faraco S. Visual dominance and attention: the Colavita effect revisited. Percept Psychophys. 2007;69:673–686. doi: 10.3758/BF03193770.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26:11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006.

- Tsao DY, Schweers N, Moeller S, Freiwald WA. Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci. 2008;11:877–879. doi: 10.1038/nn.2158.

- Watanabe M. Prefrontal unit activity during delayed conditional discriminations in the monkey. Brain Res. 1981;225:51–65. doi: 10.1016/0006-8993(81)90317-6.

- Wilson FA, Scalaidhe SP, Goldman-Rakic PS. Dissociation of object and spatial processing domains in primate prefrontal cortex. Science. 1993;260:1955–1958. doi: 10.1126/science.8316836.

- Wright AA, Shyan MR, Jitsumori M. Auditory same/different concept learning by monkeys. Anim Learn Behav. 1990;18:287–294. doi: 10.3758/BF03205288.

- Zatorre RJ, Bouffard M, Belin P. Sensitivity to auditory object features in human temporal neocortex. J Neurosci. 2004;24:3637–3642. doi: 10.1523/JNEUROSCI.5458-03.2004.

- Zhang Y, Hu Y, Guan S, Hong X, Wang Z, Li X. Neural substrate of initiation of cross-modal working memory retrieval. PLoS One. 2014;9:e103991. doi: 10.1371/journal.pone.0103991.