SUMMARY

Facial motion transmits rich and ethologically vital information [1, 2], but how the brain interprets this complex signal is poorly understood. Facial form is analyzed by anatomically distinct face patches in the macaque brain [3, 4], and facial motion activates these patches and surrounding areas [5, 6]. Yet it is not known whether facial motion is processed by its own distinct and specialized neural machinery, and if so, what that machinery’s organization might be. To address these questions, we used functional magnetic resonance imaging (fMRI) to monitor the brain activity of macaque monkeys while they viewed low- and high-level motion and form stimuli. We found that, beyond classical motion areas and the known face patch system, moving faces recruited a heretofore-unrecognized face patch. Although all face patches displayed distinctive selectivity for face motion over object motion, only two face patches preferred naturally moving faces, while three others preferred randomized, rapidly varying sequences of facial form. This functional divide was anatomically specific, segregating dorsal from ventral face patches, thereby revealing a new organizational principle of the macaque face-processing system.

RESULTS

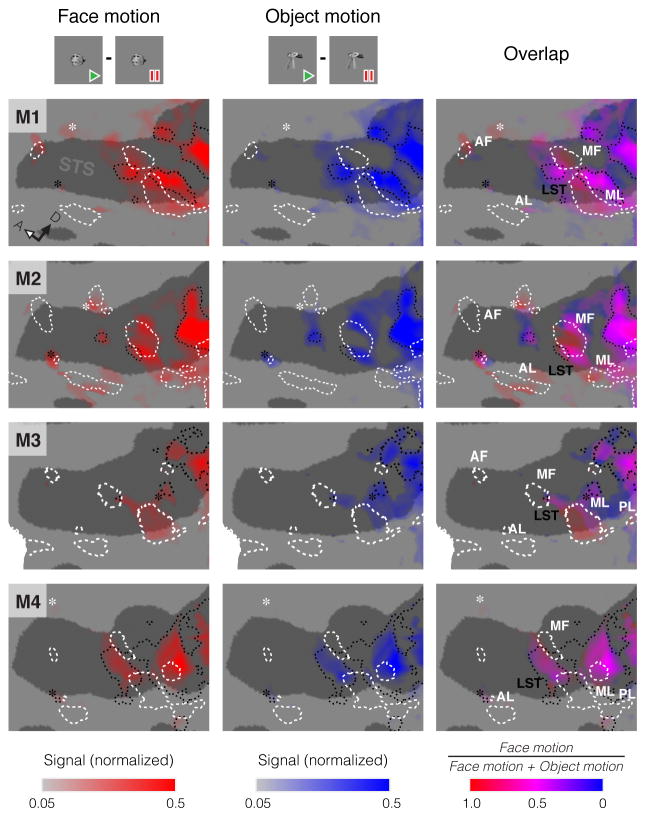

Face motion activates a diverse set of functionally specific areas

Face motion activates a large expanse of cortex in and around the superior temporal sulcus (STS) [5, 6]. The degree to which this merely reflects underlying sensitivity to general motion or face form remains unclear. We examined the functional basis of this activation by mapping it alongside regions specialized for general low-level motion and face form, using high-resolution, contrast-enhanced fMRI to monitor brain activity in four alert rhesus macaque monkeys (M1–M4) during visual stimulus presentation (Figures S1A, S2; Supplemental Experimental Methods). We used this same basic technique throughout this study. The resulting functional maps (Figure S2A–B) revealed motion areas including MT, MSTv, FST and LST [7, 8], and face patches PL, ML, MF, AL and AF [3]. Motion areas and face patches in the STS fundus, despite their proximity, remained spatially disjunct (Figure S2C–D). Face motion activated some face patches, all identified motion areas, and further outlying areas (Figure 1, left column). Non-face object motion (Figure 1, middle column) did not activate any face patches, but activated all identified motion areas and a subset of the aforementioned outlying areas (Figure 1, right column). Outlying areas responsive to both face and non-face motion likely represent specializations for forms in motion [9] that lack specificity for faces. Importantly, we also found outlying areas that were recruited by face motion, but by neither object motion nor general motion (Figure 1, white asterisks). These maps show that responses to face motion extend throughout the motion-sensitive STS, into at least a subset of face patches, and, intriguingly, beyond the classical face patch system and motion areas.

Figure 1. Selectivities for Motion Carried by Faces or Non-Face Objects along the Macaque STS.

Regions responding to face motion (left column, red; natural face movies - face pictures) or non-face object motion (middle column, blue; natural object movies - object pictures), and the relative strength of these contrasts (right column), in the left hemisphere of each subject. Opacity reflects the contrast strength (normalized signal change). This data is presented on a flattened cortical model of the area surrounding the STS, with dark gray regions representing sulci and light gray regions representing gyri (as in Figure S2B). Dashed white lines outline areas of static face selectivity and dotted black lines outline areas of low-level motion selectivity, both measured in independent experiments (Figure S2). Similarly, white labels indicate face patches and black labels indicate motion areas. Black asterisks highlight areas responding to face and object motion outside of recognized motion processing areas. White asterisks highlight areas more activated by face motion than object motion outside of known face patches. For orientation, the white-filled arrow points anteriorly and the black arrow points dorsally.

Signal change in maps is normalized per-subject and thresholded at a false discovery rate (FDR) of q < 0.01. See also Figure S2.
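The FDR thresholding applied to the maps can be illustrated with a minimal sketch. The text specifies only the level (q < 0.01), not the procedure; the Benjamini-Hochberg step-up rule used here is an assumption, and the function names and toy values are hypothetical.

```python
def bh_cutoff(p_values, q=0.01):
    """Largest p-value that survives Benjamini-Hochberg at level q.

    Returns None when no test survives. The choice of the
    Benjamini-Hochberg procedure is an assumption of this sketch.
    """
    ranked = sorted(p_values)
    m = len(ranked)
    cutoff = None
    for rank, p in enumerate(ranked, start=1):
        # Step-up rule: keep the largest p with p <= q * rank / m.
        if p <= q * rank / m:
            cutoff = p
    return cutoff


def threshold_map(signal, p_values, q=0.01):
    """Mask (set to None) every voxel whose p-value exceeds the cutoff."""
    cutoff = bh_cutoff(p_values, q)
    return [
        s if cutoff is not None and p <= cutoff else None
        for s, p in zip(signal, p_values)
    ]


# Toy example with four hypothetical voxels.
masked = threshold_map([1.0, 2.0, 3.0, 4.0], [0.0001, 0.004, 0.02, 0.5])
```

In this toy case only the first two voxels remain in the thresholded map.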

A novel face patch responds to moving faces

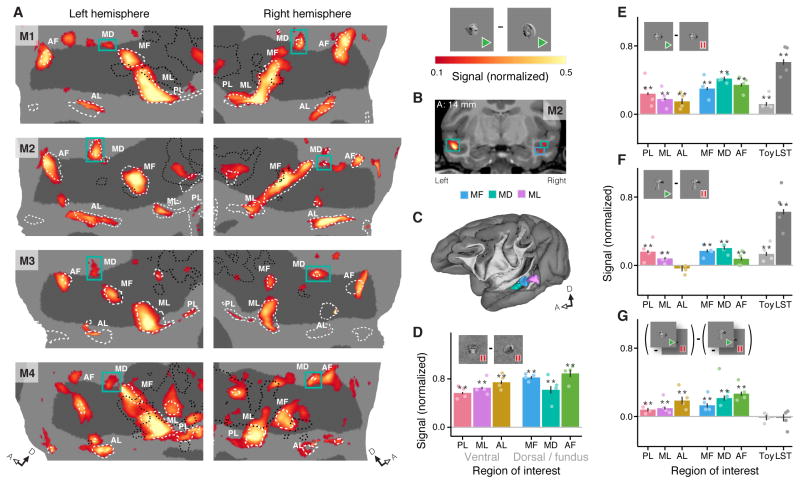

To extend beyond the classical face patch system and map areas that may be attuned to the motion of faces [10, 11], we contrasted fMRI responses to movies of faces with responses to movies of articulated toys (Figure S1C). This dynamic localizer (Figure 2A) activated all of the earlier-identified face patches (Figure S2B) and additional parts of the STS’s dorsal bank, including many of the areas that had been selectively recruited by face motion (Figure 1, white asterisks). These new dorsal activations included scattered points of face selectivity that varied from individual to individual and, importantly, one area of selectivity at a consistent location in every subject and hemisphere. This area was located anterodorsal to face patches ML and MF (Figure 2A), spatially distinct from both (Figures 2B–C, S3). We call this new area the Middle Dorsal face patch (MD). Thus the pairing of face form and motion reliably recruits six face-specific patches around the STS: PL, ML and AL along its ventral lip, and MF, AF, and the just-recognized MD in its fundus and dorsal bank.

Figure 2. Responses to Complex Motion within an Extended Face Patch System.

(A) Left: Dynamic face selectivity (localizer face movies – localizer object movies, Figure S1C) on flattened maps of the STS of each hemisphere in 4 subjects. Green boxes highlight the newly described middle dorsal face patch MD, so-called because it is in the dorsal bank of the STS and neighbors other middle face patches ML and MF. Dashed white lines outline static face selectivity and dotted black lines outline low-level motion selectivity (as in Figure 1). Right: signal strength color map and schematic of contrast. (B) Coronal slice from M2, showing position of MD and its separation from MF. The right side of the brain is on the right side of the page. The anterior-posterior stereotaxic coordinate is taken relative to the interaural line. (C) Volumetric model of M2’s left hemisphere showing the relative locations of ML (purple), MF (blue), and MD (green). (D) Plot of static face selectivity (faces - fruits and vegetables) within the six face patch ROIs that were defined with the dynamic face selectivity localizer (panel A). (E) Responses to face motion (natural face movies - face pictures) and (F) object motion (natural object movies - object pictures) in the face patches, ‘toy patch’, and LST. (G) Strength of interaction between responses to form and motion: (natural face movies - face pictures) - (natural object movies - object pictures).

* = p < 0.05 and ** = p < 0.01, corrected using Holm–Bonferroni method for 30 tests (6 ROIs × 1 measure + 8 ROIs × 3 measures). Dots on bar plots represent the values for individual subjects. Error bars represent standard error. Signal change in bar plots is normalized per ROI. Signal change in maps is normalized per-subject and thresholded at an FDR of q < 0.01. The raw data analyzed in panels E–G is the same data plotted in Figure 1. See also Figures S3 and S4.
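For readers unfamiliar with the Holm–Bonferroni correction named above, the following is a minimal sketch of the standard step-down procedure. The function names are hypothetical and the p-values are illustrative only, not values from this study.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted p-values in the original order."""
    m = len(p_values)
    # Visit the tests from smallest to largest raw p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is scaled by (m - k + 1).
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


def stars(p_adjusted):
    """Map an adjusted p-value to the asterisk notation of the figures."""
    if p_adjusted < 0.01:
        return "**"
    if p_adjusted < 0.05:
        return "*"
    return ""


# Illustrative family of three tests (the figure uses a family of 30).
adjusted = holm_bonferroni([0.01, 0.04, 0.03])
```

The family size matters: with 30 tests, the smallest raw p-value is multiplied by 30 before it is compared with the 0.05 and 0.01 criteria.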

All STS face patches possess a distinctive selectivity for face motion

The preference for moving faces over moving objects in these six face patches could result from two different specializations: selectivity either for face form or for face motion. In fact, all face patches demonstrated similar degrees of selectivity for facial form (Figure 2D) and a preference for facial motion (Figure 2E). The facial motion preference was more pronounced in the patches along the fundus and dorsal bank of the STS. Responses to non-face object motion (Figure 2F) were smaller than responses to facial motion throughout. Consistent with this, the interaction between shape category (face vs. object) and motion (moving vs. static) was significant in all STS face patches (Figure 2G). Thus all face patches exhibit a response to motion that is face-specific. Two neighboring control areas, an object-selective STS region that responded more to moving toys than moving faces (referred to as the ‘toy patch’) and motion area LST [7], were sensitive to both face and object motion to a similar extent (Figure 2E–G). The observed form-specific motion-selectivity of the face patches is, therefore, not due to an imbalance of low-level motion energy across stimuli, a conclusion further supported by balanced activation of general motion areas (Figure S4). Thus selectivity for both the form and motion of faces characterizes all STS face patches, but not the STS at large.
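The interaction plotted in Figure 2G is a difference of differences, which can be written out as a short sketch. The dictionary keys and the response values below are hypothetical placeholders chosen for illustration; they are not measurements from this study.

```python
def form_by_motion_interaction(resp):
    """(face movies - face pictures) - (object movies - object pictures)."""
    face_motion = resp["face_movie"] - resp["face_picture"]
    object_motion = resp["object_movie"] - resp["object_picture"]
    return face_motion - object_motion


# Hypothetical ROI with a face-specific motion response.
face_patch_like = {
    "face_movie": 3.0, "face_picture": 1.0,
    "object_movie": 1.5, "object_picture": 1.0,
}
# Hypothetical ROI that responds equally to face and object motion.
general_motion_like = {
    "face_movie": 2.0, "face_picture": 1.0,
    "object_movie": 2.0, "object_picture": 1.0,
}

face_patch_score = form_by_motion_interaction(face_patch_like)
general_motion_score = form_by_motion_interaction(general_motion_like)
```

A positive value indicates a motion response that is larger for faces than for objects; a value near zero is the pattern expected of a broadly motion-sensitive region.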

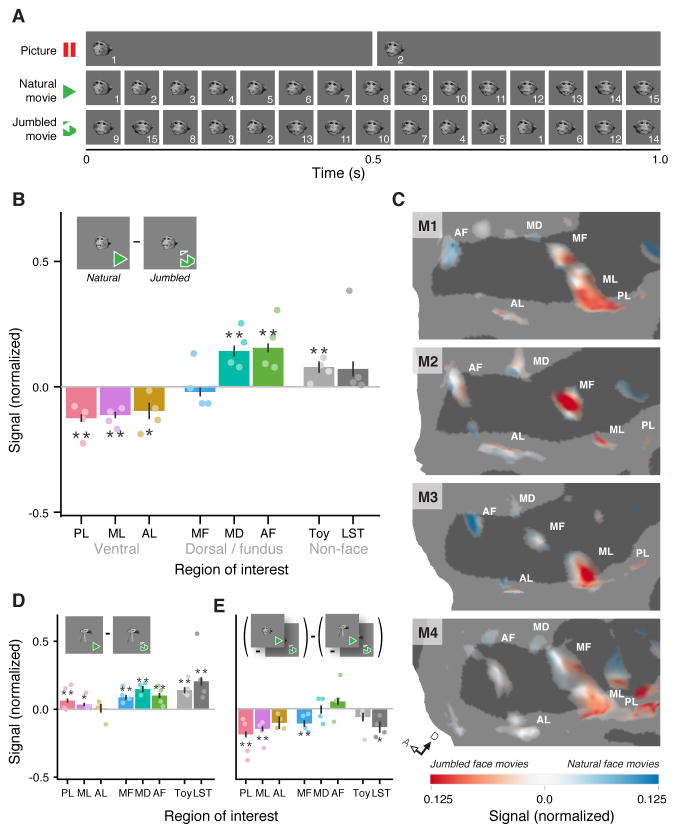

Natural face motion selectivity divides the STS face patch system

We now know that all STS face patches are selective for facial motion (Figure 2G). But does activity within these areas represent natural facial motion, or is it simply a response to all updates in face pose, natural or unnatural? We addressed this question by challenging the face-processing system with two stimulus sets that were identical in static content and frame rate, but differed in motion quality: the normal, ‘natural’ movies used earlier in the study (Figure 1), and ‘jumbled’ versions of the same movies, where frames were presented in a random order (Figures 3A, S1C). We found that dorsal face patches MD and AF showed a significantly greater response to natural movies of faces (Figure 3B). In contrast, ventral face patches PL, ML and AL not only failed to respond more to natural movies, but, surprisingly, their responses were significantly stronger for jumbled movies. MF, positioned between MD and ML, showed no significant preference for either movie type. Thus the face patch system is fundamentally differentiated along a ventrodorsal axis (Figure 3C): the dorsal portion responds preferentially to natural face movements and the ventral portion responds preferentially to facial shapes undergoing rapid, even random, transitions.

Figure 3. Preferential Responses to Natural or Disordered Face Motion within the Face Patch System.

(A) Schematics of stimuli used for analyses of natural motion selectivity. For faces and non-face objects, picture, natural movie, and jumbled movie stimuli were derived from the same 60 fps (frames per second) source videos. Each video was downsampled to 2 fps in the picture condition and 15 fps in the movie conditions. By randomizing the order of each natural movie’s frames, a matched jumbled movie was created. Each exemplar stimulus lasted 3 s; a 1 s period is shown here for demonstration. (B) Preference for natural face motion over jumbled face motion across six face patches and two control regions. Dorsal patches MD and AF show a significant preference for natural face motion, while, conversely, ventral patches PL, ML, and AL significantly prefer the rapidly changing jumbled face movies. (C) Preference for either natural face motion (red) or jumbled face motion (blue), as calculated in panel B, across face-selective cortex. Opacity reflects strength of face selectivity (Figure 2A). (D) Preference for natural object movies over jumbled object movies. (E) Strength of interaction between form (face or object) and frame ordering (natural or jumbled): (natural face movies - jumbled face movies) - (natural object movies - jumbled object movies).

* = p < 0.05 and ** = p < 0.01, corrected using Holm–Bonferroni method for 24 tests (8 ROIs × 3 measures). Dots on bar plots represent the values for individual subjects. Error bars represent standard error. Signal change in bar plots is normalized per ROI. Signal change in maps is normalized per subject.
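The stimulus construction described in panel A can be sketched as follows. Frames are represented by their indices; downsampling by keeping every n-th frame and the use of a seeded shuffle are assumptions of this sketch, since the text states only the frame rates and that frame order was randomized. All names are hypothetical.

```python
import random

SOURCE_FPS = 60  # frame rate of the source videos, as stated in the caption


def downsample(frames, target_fps, source_fps=SOURCE_FPS):
    """Keep every n-th frame (assumed method) to reach the target frame rate."""
    step = source_fps // target_fps
    return frames[::step]


def make_jumbled(natural_frames, seed=0):
    """Return the same frames in a random order, leaving the input untouched."""
    rng = random.Random(seed)
    jumbled = list(natural_frames)
    rng.shuffle(jumbled)
    return jumbled


# One 3 s exemplar at 60 fps has 180 source frames.
source = list(range(3 * SOURCE_FPS))
picture_frames = downsample(source, target_fps=2)    # picture condition
natural_movie = downsample(source, target_fps=15)    # natural movie condition
jumbled_movie = make_jumbled(natural_movie, seed=1)  # matched jumbled movie
```

Because the jumbled movie is a permutation of the natural movie, the two conditions contain identical frames at an identical frame rate and differ only in frame order.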

The divergent responses of face patches to natural versus jumbled motion did not extend to non-face objects: no patch preferred jumbled object movies to natural ones (Figure 3D). Furthermore, the two control regions responded more to natural object motion (compared to jumbled object motion) than to natural facial motion (compared to jumbled facial motion). As a result, face patches PL, ML, MF and control area LST showed significant interactions between motion quality (natural or jumbled) and form (face or object; Figure 3E). Thus, while natural motion improved localization of an extended face processing system (Figure 2A), and all constituent areas of this system were selective for an interaction of face form and motion (Figure 2G), this shared selectivity arose from two different specializations: the dorsal face patches (MD and AF) genuinely represent natural facial motion, while the ventral face patches (PL, ML and AL) appear to prefer rapidly changing facial pose, regardless of kinematic meaning.

DISCUSSION

From just a glance at a face, we gather an abundance of social information [12]. Set in motion, the face comes alive, augmenting this knowledge [13, 14], but also posing a challenge for the neural systems that must now interpret an evolving subject [15]. The current study aimed to identify the neural machinery that navigates these intertwined opportunities and challenges of facial motion, leveraging a model system that is similar to the human face-processing system [3, 16], remains highly reproducible across subjects [3], and enables mechanistic exploration of the computations underlying face recognition [4]. The specialized areas that we recruited with naturally moving faces likely mark a key component of the machinery for dynamic face recognition.

The architecture of face motion processing revealed here includes areas selective for low- and high-level motion [5], face form [6], and natural facial motion. These areas all neighbor each other but remain spatially distinct. This picture of a functionally heterogeneous mosaic represents a fundamental departure from earlier fMRI studies [5, 6] which suggested that any motion responsiveness found in dorsal face patches [6] was a byproduct of these areas overlapping a generally motion responsive region. Our results reflect a different reality: while some STS regions are broadly motion-sensitive – responding similarly to face motion and non-face motion – neighboring areas specifically process face motion.

One such area is MD, a newly described face patch in the upper bank of the STS (Figures 2A–C, S3). While MD is occasionally evident when static stimuli are used for mapping (similar to aMF [17]), dynamic stimuli allowed us to locate this area in all eight hemispheres that we studied. This pattern is reminiscent of the human pSTS (sometimes called STS [16]) face area, a region critical for processing moving faces [18] which is likewise identified sporadically with static stimuli but reliably with dynamic ones [6, 10] and shows selectivity for natural face motion [19]. Interestingly, the human pSTS face area does not appear to be strongly connected to the ventrally located fusiform and occipital face areas [20]. Similarly in macaque monkeys, when connectivity of face patches was mapped, no strong projections to the location of MD were reported [21]. Furthermore, anatomically variable activations by faces are found anterior to both MD (this study, [17]) and human pSTS [11]. One plausible scenario for this variability is that these anterior regions represent a variety of social signals of diverse complexity [22, 23], and are only partially and erratically activated by faces. Thus functional specialization, connectivity, and relative location indicate that MD might be the macaque homolog of the human pSTS face area, and could therefore be critical for establishing general homology between face processing systems of humans and macaques [3, 16].

We found a new functional differentiation within the macaque face-processing system wherein dorsal patches preferred naturally moving faces, while ventral patches (to our surprise) preferred random transitions in face pose (Figure 3B–C). This reveals a novel dimension of the cortical representation of faces and marks, to our knowledge, the first time that fMRI has revealed an overt functional dissociation – where different areas have significant and opposing selectivities – within the macaque face-processing system.

This preference for natural facial motion suggests that cells in MD and AF, beyond selectivity for static facial form (Figure 2D; see [24]), also exhibit selectivity for the kinematics of naturally moving faces. Some neurons in these patches may fire only in response to a specific sequence of poses, a mechanism that has been proposed for the neural coding of biological motion [25, 26]. On the other hand, the apparent selectivity of ventral face patches PL, ML and AL for randomized face motion is unlikely to reflect a genuine selectivity for specific sequences of facial pose. Rather, this preference may reflect purely shape-selective face neurons that adapt quickly [27], respond less to expected stimuli [28, 29], or show a combination of these effects [30, 31]. Thus a predictive coding scheme, where deviations from expectation drive neural activity [32, 33], could underlie processing in the ventral patches. While predictive coding models generally assume predictions from later processing levels inform earlier processing levels (e.g. [32]), our discovery of qualitatively distinct representations of facial motion within the face patch system allows an alternative hypothesis to be explored: dynamic face representations in dorsal face patches might generate predictions of momentary features which are communicated to ventral patches through lateral connections [21].

While our use of jumbled frames as a control revealed a functional divide within the face-patch system, jumbling is a coarse manipulation that introduces discontinuities into continuous motion and interrupts the possible expectation of preserved stimulus identity. This study, therefore, speaks specifically to functional specializations for continuous face motion. A recent experiment demonstrated that certain human face areas respond differentially to movies of facial expressions played either forwards in time (a continuous, biologically plausible motion) or backwards (a continuous but implausible motion) [34]. A similar comparison in monkeys might deepen our understanding, showing further motion specialization within the face patches or refining the mechanistic understanding of the division we describe.

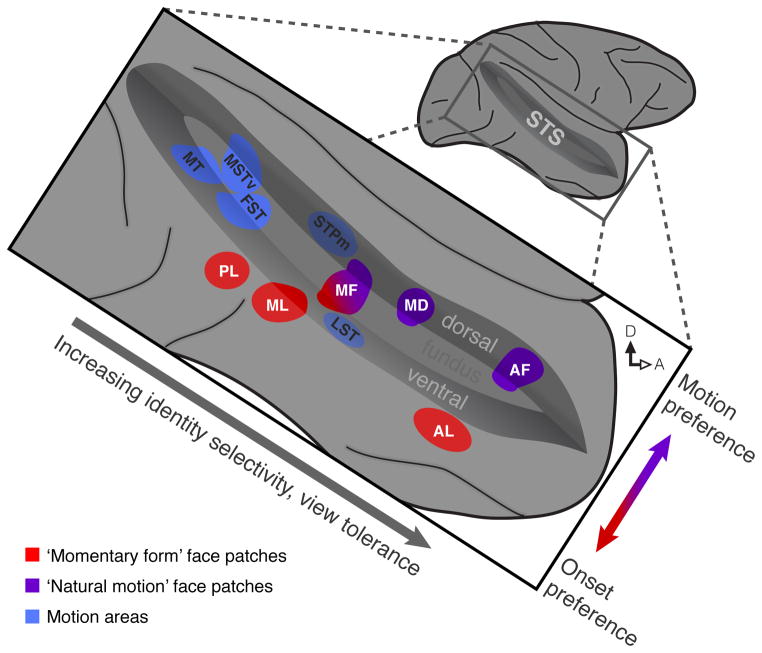

By integrating our results with the findings of earlier studies, we can develop a picture of how face form and motion processing are arranged in the macaque temporal lobe (Figure 4). Within and around the STS, face patches and general motion areas adjoin each other, but are anatomically distinct. The face patches are differentiated along two axes. As information flows from posterior to anterior, face patches show increased form specificity and view tolerance [4], consistent with general trends in the temporal lobe [35]. Along the ventral to dorsal axis, there is a functional transition that reflects a likely selectivity for momentary facial form in the ventral patches and for continuous facial motion in the dorsal ones. This picture is compatible with influential “division of labor” face recognition models (e.g. [36]), particularly those that posit a separation of static features (such as identity) from dynamic ones (such as expression) [37]. In fact, our findings present the best evidence yet of such a division of labor between identifiable nodes in the macaque brain, opening the door to further characterization of putative static and dynamic streams by electrophysiological and causal approaches. This could ultimately elucidate how the myriad signals conveyed by faces are given meaning by the brain [38, 39] at neuron and network levels. In this way, the specializations for facial motion within the areas described here provide a concrete anatomical framework for investigating both the computations that extract and abstract from facial dynamics and, more generally, the interrelated neural representations of form and motion.

Figure 4. Model of Face Motion and Face Form Processing along the Macaque Temporal Lobe.

Functional specificity of face patches is organized along two main anatomical axes. From posterior to anterior, face patches show increasing identity selectivity and increasing tolerance to viewing condition [4]. Along the dorso-ventral axis, face patches show differential selectivity for natural motion, with ‘dynamic’ dorsal patches (purple) responding to natural motion and ‘static’ ventral patches (red) responding more to rapidly varying face stimuli. Face motion activates all of these patches as well as motion processing areas (blue), which are selective for neither momentary face form nor natural face motion.

Supplementary Material

Figure S1. Example Stimuli, Related to Experimental Methods

(A) Sample frames from the low-level motion stimulus set and definition of contrast (blue arrow) for measuring low-level motion selectivity. (B) Sample stimuli from the object category stimulus set and definition of the static face selectivity contrast. (C) Sample frames from the object motion stimulus set and definition of related contrasts. Note that the source movies used to create the stimuli for the dynamic face selectivity contrast were different from the source movies used for the other blocks of this stimulus set; they featured the same monkeys and cage toys demonstrating different actions. We used data collected in response to the localizer movies solely to localize the face patch ROIs, and based all analyses within these ROIs on independent data collected during the presentation of other stimuli.

Figure S2. Spatially Dissociated Selectivities for Static Faces and Low-Level Motion in the STS Fundus, Related to Figure 1

(A) Left: face form selectivity (red; faces - fruits & vegetables) and low-level motion selectivity (blue; plaid movies - plaid pictures) plotted on an inflated model of M1’s right cortical hemisphere. The gray box highlights the region depicted below in B. Right: the hue at each point reflects the relative strength (normalized signal change) of these two contrasts, and opacity reflects the strength of the strongest contrast. (B) Flattened maps, as in panel A, of the STS of each hemisphere in 4 subjects. Face patches (white text) and motion areas (black text) are labeled according to the criteria described in Experimental Methods. Dotted lines represent retinotopic meridians used to identify motion areas and solid lines represent retinotopic meridians at the anterior edge of area V4. Note the widespread separation of face selectivity and low-level motion selectivity. We found just one exception to this rule of spatial segregation of function: in M2 and M4 the ventral, foveal aspect of the MT/MSTv/FST motion complex exhibited face selectivity (similar to area pPL of [S1]). (C) Scatter plot of joint face and motion selectivity of voxels in the fundus of the STS (as defined by sulcal depth). Static face selectivity and low-level motion selectivity (signal change, normalized per subject) were determined for voxels in the fundus of the STS (excluding the MT/MSTv/FST complex, see Supplemental Experimental Methods) most responsive to movies of faces or objects. Dot color represents significant (q < 0.01) stimulus selectivities of each voxel: red for static face selectivity (faces - fruits and vegetables), blue for low-level motion selectivity (plaid movies - plaid pictures), magenta for both. These selectivity distributions had a voxel-wise distribution overlap value (DOV) of 0.34 (see Supplemental Experimental Methods). (D) Determination of statistical significance of measured DOV. The gray plot shows the null distribution of DOV, under the assumption of a random association between static face selectivity and low-level motion selectivity across the considered voxels. The dashed red line represents the measured DOV of 0.34 (from panel C). This value is significantly smaller (p < 0.00001) than expected from chance associations. Thus face and motion selectivity are neither co-localized nor independently distributed in the fundus of the STS, but are spatially segregated. Alternative quantifications of similarity of these stimulus selectivity distributions using cosine distance and Spearman correlation confirmed that they were less similar than would be expected by chance (both p < 0.0005). These p-values reflect upper (less significant) limits of 99% confidence intervals (see Supplemental Experimental Methods).
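The logic of testing against a null of random association can be sketched with a permutation test. The DOV itself is defined in the Supplemental Experimental Methods and is not reproduced here, so this sketch substitutes cosine similarity, one of the alternative measures mentioned above. The shuffling scheme, the function names, and the toy data are all assumptions.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length selectivity vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def permutation_p_value(face_sel, motion_sel, n_perm=2000, seed=0):
    """One-sided test: is the observed similarity lower than under shuffling?"""
    rng = random.Random(seed)
    observed = cosine_similarity(face_sel, motion_sel)
    shuffled = list(motion_sel)
    at_or_below = 0
    for _ in range(n_perm):
        # Shuffling breaks any voxel-wise association between the two maps.
        rng.shuffle(shuffled)
        if cosine_similarity(face_sel, shuffled) <= observed:
            at_or_below += 1
    # Add-one correction keeps the estimated p-value above zero.
    return observed, (at_or_below + 1) / (n_perm + 1)


# Toy voxels: perfectly segregated face and motion selectivity.
face = [1.0] * 10 + [0.0] * 10
motion = [0.0] * 10 + [1.0] * 10
observed, p_value = permutation_p_value(face, motion)
```

With a finite number of permutations, the smallest p-value this estimator can report is 1 / (n_perm + 1), so resolving very small p-values requires correspondingly many permutations.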

Figure S3. Identification and Location of the Middle Dorsal Face Patch (MD), Related to Figure 2

(A) Inflated cortical surface model of M2’s left hemisphere showing the relative locations of ML (purple), MF (blue), and MD (green). (B) Slice representations of the middle face patches (ML, MF, and MD) in all subjects (top to bottom). Parasagittal slices (left column) show the left hemispheres. Coronal and horizontal slices (center and right column, respectively) are presented following neurological convention, with the right side of the brain on the right side of the page. Slice coordinates assume standard stereotaxic positioning and are measured from the midpoint of the interaural line. The coronal slice of M2 is the one shown in Figure 2B and is reproduced here for completeness. Functional maps show the dynamic face selectivity localizer thresholded at an FDR of q < 0.01.

Figure S4. Analysis of Motion Content in Face and Object Movies, Related to Figure 2

(A) Responses of two ROIs, MT/MSTv/FST complex and LST, to two stimulus contrasts, face movie motion (natural face movies - face pictures; “face motion selectivity” in Figure S1C) and object movie motion (natural object movies - object pictures; “object motion selectivity” in Figure S1C). Both motion-selective regions responded at least as strongly to the motion in the object movies as to the motion in the face movies: equally strongly in LST and more strongly in the MT/MSTv/FST complex. * = p < 0.05 and ** = p < 0.01, corrected using Holm–Bonferroni method for 6 tests (2 ROIs × (2 measures + comparison)). Dots on bar plots represent the values for individual subjects. Signal change is normalized per ROI. Error bars represent standard error. The data from LST are the same as those presented in Figure 2E–G. (B) Response of the 250 voxels in each subject’s temporal lobe most responsive to low-level motion (as measured by the low-level motion selectivity localizer) to face movie and object movie motion. Responses to the two types of motion are highly correlated. Linear regression suggests that the voxels in the temporal lobes most sensitive to low-level motion responded ~89% as strongly to motion in the face movies as they responded to motion in the object movies. Signal change is normalized per subject. Both analyses suggest that motion content in face and object movies was well matched and, if not entirely equal, was slightly larger in object movies than in face movies.
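The regression in panel B can be read as the slope of face-motion responses against object-motion responses across voxels. The sketch below uses ordinary least squares with an intercept; whether the original fit included an intercept is not stated here, so that choice is an assumption, as are the function name and the toy values.

```python
def ols_slope_intercept(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Toy voxels whose face-motion response is 90% of their object-motion response.
object_motion = [1.0, 2.0, 3.0, 4.0]
face_motion = [0.9, 1.8, 2.7, 3.6]
slope, intercept = ols_slope_intercept(object_motion, face_motion)
```

A slope below 1 with a negligible intercept corresponds to the reading given in the caption: the voxels respond somewhat less to motion in the face movies than to motion in the object movies.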

Acknowledgments

The authors thank Akinori Ebihara, Rizwan Huq, Sofia Landi, Srivatsun Sadagopan, Caspar Schwiedrzik, Stephen Shepherd, Julia Sliwa, and Wilbert Zarco for help with animal training, data collection, and discussion of methods, veterinary services and animal husbandry staff for care of the subjects, Kalanit Grill-Spector, Douglas Greve, A. James Hudspeth, Hauke Kolster, Gaby Maimon, Sebastian Moeller, Keith Purpura, Jonathan Victor, Jonathan Winawer, and Galit Yovel for experimental advice, and Margaret Fabiszak, Marya Fisher, and Caspar Schwiedrzik for comments on the manuscript.

This work was supported by a Pew Biomedical Scholar Award, a McKnight Scholar Award, a New York Stem Cell Foundation’s Robertson Neuroscience Investigator Award, the NSF Science and Technology Center for Brains, Minds and Machines, and a National Eye Institute (R01 EY021594-01A1) grant to W.A.F. C.F. was supported by a Medical Scientist Training Program grant (NIGMS T32GM007739) to the Weill Cornell/Rockefeller/Sloan-Kettering Tri-Institutional MD-PhD Program.

Footnotes

AUTHOR CONTRIBUTIONS

C.F. and W.A.F. designed the experiment. C.F. performed the experiment. C.F. analyzed the data. C.F. and W.A.F. wrote the manuscript.

This paper’s content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Mosher CP, Zimmerman PE, Gothard KM. Videos of conspecifics elicit interactive looking patterns and facial expressions in monkeys. Behav Neurosci. 2011;125:639–652. doi: 10.1037/a0024264.

- 2. Shepherd SV, Ghazanfar AA. Engaging neocortical networks with dynamic faces. In: Giese M, Curio C, Bülthoff HH, editors. Dynamic Faces. The MIT Press; 2012. pp. 105–122.

- 3. Tsao DY, Moeller S, Freiwald WA. Comparing face patch systems in macaques and humans. P Natl Acad Sci USA. 2008;105:19514–19519. doi: 10.1073/pnas.0809662105.

- 4. Freiwald WA, Tsao DY. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science. 2010;330:845–851. doi: 10.1126/science.1194908.

- 5. Furl N, Hadj-Bouziane F, Liu N, Averbeck BB, Ungerleider LG. Dynamic and static facial expressions decoded from motion-sensitive areas in the macaque monkey. J Neurosci. 2012;32:15952–15962. doi: 10.1523/JNEUROSCI.1992-12.2012.

- 6. Polosecki P, Moeller S, Schweers N, Romanski LM, Tsao DY, Freiwald WA. Faces in motion: selectivity of macaque and human face processing areas for dynamic stimuli. J Neurosci. 2013;33:11768–11773. doi: 10.1523/JNEUROSCI.5402-11.2013.

- 7. Nelissen K, Vanduffel W, Orban GA. Charting the lower superior temporal region, a new motion-sensitive region in monkey superior temporal sulcus. J Neurosci. 2006;26:5929–5947. doi: 10.1523/JNEUROSCI.0824-06.2006.

- 8. Kolster H, Mandeville JB, Arsenault JT, Ekstrom LB, Wald LL, Vanduffel W. Visual field map clusters in macaque extrastriate visual cortex. J Neurosci. 2009;29:7031–7039. doi: 10.1523/JNEUROSCI.0518-09.2009.

- 9. Nelissen K, Borra E, Gerbella M, Rozzi S, Luppino G, Vanduffel W, Rizzolatti G, Orban GA. Action observation circuits in the macaque monkey cortex. J Neurosci. 2011;31:3743–3756. doi: 10.1523/JNEUROSCI.4803-10.2011.

- 10. Fox CJ, Iaria G, Barton JJS. Defining the face processing network: optimization of the functional localizer in fMRI. Hum Brain Mapp. 2009;30:1637–1651. doi: 10.1002/hbm.20630.

- 11. Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. Differential selectivity for dynamic versus static information in face-selective cortical regions. NeuroImage. 2011;56:2356–2363. doi: 10.1016/j.neuroimage.2011.03.067.

- 12. Willis J, Todorov A. First impressions: making up your mind after a 100-ms exposure to a face. Psychol Sci. 2006;17:592–598. doi: 10.1111/j.1467-9280.2006.01750.x.

- 13. Knight B, Johnston A. The role of movement in face recognition. Vis Cogn. 1997;4:265–273.

- 14. Lander K, Christie F, Bruce V. The role of movement in the recognition of famous faces. Mem Cognit. 1999;27:974–985. doi: 10.3758/bf03201228.

- 15. Sinha P. Analyzing dynamic faces: key computational challenges. In: Curio C, Bülthoff HH, Giese MA, editors. Dynamic Faces. MIT Press; MA: 2011.

- 16. Yovel G, Freiwald WA. Face recognition systems in monkey and human: are they the same thing? F1000Prime Rep. 2013;5 doi: 10.12703/P5-10.

- 17. Janssens T, Zhu Q, Popivanov ID, Vanduffel W. Probabilistic and single-subject retinotopic maps reveal the topographic organization of face patches in the macaque cortex. J Neurosci. 2014;34:10156–10167. doi: 10.1523/JNEUROSCI.2914-13.2013.

- 18. Pitcher D, Duchaine B, Walsh V. Combined TMS and fMRI reveal dissociable cortical pathways for dynamic and static face perception. Curr Biol. 2014;24:2066–2070. doi: 10.1016/j.cub.2014.07.060.

- 19. Schultz J, Brockhaus M, Bülthoff HH, Pilz KS. What the human brain likes about facial motion. Cereb Cortex. 2013;23:1167–1178. doi: 10.1093/cercor/bhs106.

- 20. Gschwind M, Pourtois G, Schwartz S, Van De Ville D, Vuilleumier P. White-matter connectivity between face-responsive regions in the human brain. Cereb Cortex. 2012;22:1564–1576. doi: 10.1093/cercor/bhr226.

- 21. Moeller S, Freiwald WA, Tsao DY. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 2008;320:1355–1359. doi: 10.1126/science.1157436.

- 22. Perrett DI, Hietanen JK, Oram MW, Benson PJ. Organization and functions of cells responsive to faces in the temporal cortex. Philos Trans R Soc Lond, B, Biol Sci. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- 23.Hein G, Knight RT. Superior temporal sulcus—it’s my area: or is it? J Cogn Neurosci. 2008;20:2125–2136. doi: 10.1162/jocn.2008.20148. [DOI] [PubMed] [Google Scholar]

- 24.McMahon DBT, Jones AP, Bondar IV, Leopold DA. Face-selective neurons maintain consistent visual responses across months. P Natl Acad Sci USA. 2014;111:8251–8256. doi: 10.1073/pnas.1318331111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Giese MA, Poggio T. Neural mechanisms for the recognition of biological movements. Nat Rev Neurosci. 2003;4:179–192. doi: 10.1038/nrn1057. [DOI] [PubMed] [Google Scholar]

- 26.Vangeneugden J, De Mazière PA, Van Hulle MM, Jaeggli T, Van Gool L, Vogels R. Distinct mechanisms for coding of visual actions in macaque temporal cortex. J Neurosci. 2011;31:385–401. doi: 10.1523/JNEUROSCI.2703-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol. 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1. [DOI] [PubMed] [Google Scholar]

- 28.Summerfield C, Trittschuh EH, Monti JM, Mesulam MM, Egner T. Neural repetition suppression reflects fulfilled perceptual expectations. Nat Neurosci. 2008;11:1004–1006. doi: 10.1038/nn.2163. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Meyer T, Olson CR. Statistical learning of visual transitions in monkey inferotemporal cortex. P Natl Acad Sci USA. 2011;108:19401–19406. doi: 10.1073/pnas.1112895108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Larsson J, Smith AT. fMRI repetition suppression: neuronal adaptation or stimulus expectation? Cereb Cortex. 2012;22:567–576. doi: 10.1093/cercor/bhr119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Perrett DI, Xiao D, Barraclough NE, Keysers C, Oram MW. Seeing the future: Natural image sequences produce “anticipatory” neuronal activity and bias perceptual report. Q J Exp Psychol. 2009;62:2081–2104. doi: 10.1080/17470210902959279. [DOI] [PubMed] [Google Scholar]

- 32.Friston K. A theory of cortical responses. Philos Trans R Soc Lond, B, Biol Sci. 2005;360:815–836. doi: 10.1098/rstb.2005.1622. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav Brain Sci. 2013;36:181–204. doi: 10.1017/S0140525X12000477. [DOI] [PubMed] [Google Scholar]

- 34.Reinl M, Bartels A. Face processing regions are sensitive to distinct aspects of temporal sequence in facial dynamics. NeuroImage. 2014;102:407–415. doi: 10.1016/j.neuroimage.2014.08.011. [DOI] [PubMed] [Google Scholar]

- 35.Rust NC, DiCarlo JJ. Selectivity and tolerance (“invariance”) both increase as visual information propagates from cortical area V4 to IT. J Neurosci. 2010;30:12978–12995. doi: 10.1523/JNEUROSCI.0179-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Bruce V, Young A. Understanding face recognition. Br J Psychol. 1986;77(Pt 3):305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x. [DOI] [PubMed] [Google Scholar]

- 37.Haxby JV, Hoffman E, Gobbini M. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0. [DOI] [PubMed] [Google Scholar]

- 38.Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724. [DOI] [PubMed] [Google Scholar]

- 39.Barraclough NE, Perrett DI. From single cells to social perception. Philos Trans R Soc Lond, B, Biol Sci. 2011;366:1739–1752. doi: 10.1098/rstb.2010.0352. [DOI] [PMC free article] [PubMed] [Google Scholar]

Supplementary Materials

Figure S1. Example Stimuli, Related to Experimental Methods

(A) Sample frames from the low-level motion stimulus set and definition of the contrast (blue arrow) used to measure low-level motion selectivity. (B) Sample stimuli from the object category stimulus set and definition of the static face selectivity contrast. (C) Sample frames from the object motion stimulus set and definition of related contrasts. Note that the source movies used to create the stimuli for the dynamic face selectivity contrast were different from the source movies used for the other blocks of this stimulus set; they featured the same monkeys and cage toys demonstrating different actions. We used data collected in response to the localizer movies solely to localize the face patch ROIs, and based all analyses within these ROIs on independent data collected during the presentation of other stimuli.

Figure S2. Spatially Dissociated Selectivities for Static Faces and Low-Level Motion in the STS Fundus, Related to Figure 1

(A) Left: face form selectivity (red; faces - fruits & vegetables) and low-level motion selectivity (blue; plaid movies - plaid pictures) plotted on an inflated model of M1’s right cortical hemisphere. The gray box highlights the region depicted below in B. Right: the hue at each point reflects the relative strength (normalized signal change) of these two contrasts, and opacity reflects the strength of the stronger contrast. (B) Flattened maps, as in panel A, of the STS of each hemisphere in four subjects. Face patches (white text) and motion areas (black text) are labeled according to the criteria described in Experimental Methods. Dotted lines represent retinotopic meridians used to identify motion areas and solid lines represent retinotopic meridians at the anterior edge of area V4. Note the widespread separation of face selectivity and low-level motion selectivity. We found just one exception to this rule of spatial segregation of function: in M2 and M4 the ventral, foveal aspect of the MT/MSTv/FST motion complex exhibited face selectivity (similar to area pPL of [S1]). (C) Scatter plot of joint face and motion selectivity of voxels in the fundus of the STS (as defined by sulcal depth). Static face selectivity and low-level motion selectivity (signal change, normalized per subject) were determined for voxels in the fundus of the STS (excluding the MT/MSTv/FST complex, see Supplemental Experimental Methods) most responsive to movies of faces or objects. Dot color represents significant (q < 0.01) stimulus selectivities of each voxel: red for static face selectivity (faces - fruits and vegetables), blue for low-level motion selectivity (plaid movies - plaid pictures), magenta for both. These selectivity distributions had a voxel-wise distribution overlap value (DOV) of 0.34 (see Supplemental Experimental Methods). (D) Determination of statistical significance of measured DOV. The gray plot shows the null distribution of DOV, under the assumption of a random association between static face selectivity and low-level motion selectivity across the considered voxels. The dashed red line represents the measured DOV of 0.34 (from panel C). This value is significantly (p < 0.00001) smaller than expected from chance associations. Thus face and motion selectivity are neither co-localized nor independently distributed in the fundus of the STS, but are spatially segregated. Alternative quantifications of similarity of these stimulus selectivity distributions using cosine distance and Spearman correlation confirmed that they were less similar than would be expected by chance (both p < 0.0005). These p-values reflect upper (less significant) limits of 99% confidence intervals (see Supplemental Experimental Methods).
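
The null-distribution logic of panel D can be illustrated with a small permutation test. The sketch below is not the authors' analysis: the DOV measure is defined in the Supplemental Experimental Methods and is not reproduced here, so cosine similarity (related to one of the alternative measures mentioned in the legend) stands in for it. The function names, the toy selectivity values, and the number of permutations are all hypothetical.

```python
import math
import random


def cosine_similarity(x, y):
    """Cosine similarity between two equal-length lists of numbers."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)


def permutation_test_lower(face_sel, motion_sel, n_perm=2000, seed=0):
    """One-sided permutation test for spatial segregation.

    Shuffling the voxel order of one map simulates a random association
    between the two selectivities. The returned p-value is the fraction of
    shuffles whose similarity is at least as low as the observed one
    (with the usual +1 correction so that p is never exactly zero).
    """
    rng = random.Random(seed)
    observed = cosine_similarity(face_sel, motion_sel)
    shuffled = list(motion_sel)
    n_as_low = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if cosine_similarity(face_sel, shuffled) <= observed:
            n_as_low += 1
    p_value = (n_as_low + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical toy data: ten voxels, face-selective ones first,
# motion-selective ones last (i.e. spatially segregated).
face = [1.0, 0.9, 0.8, 0.7, 0.6, 0.0, 0.1, 0.0, 0.1, 0.0]
motion = [0.0, 0.1, 0.0, 0.1, 0.0, 0.9, 1.0, 0.8, 0.7, 0.6]
observed, p_value = permutation_test_lower(face, motion)
print(f"observed similarity = {observed:.3f}, p = {p_value:.4f}")
```

A small p-value in this one-sided test indicates that the two maps overlap less than a random pairing of voxels would, which is the sense in which the legend describes the selectivities as segregated rather than independently distributed.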

Figure S3. Identification and Location of the Middle Dorsal Face Patch (MD), Related to Figure 2

(A) Inflated cortical surface model of M2’s left hemisphere showing the relative locations of ML (purple), MF (blue), and MD (green). (B) Slice representations of the middle face patches (ML, MF, and MD) in all subjects (top to bottom). Parasagittal slices (left column) show the left hemispheres. Coronal and horizontal slices (center and right column, respectively) are presented following neurological convention, with the right side of the brain on the right side of the page. Slice coordinates assume standard stereotaxic positioning and are measured from the midpoint of the interaural line. The coronal slice of M2 is the one shown in Figure 2B and is reproduced here for completeness. Functional maps show the dynamic face selectivity localizer thresholded at an FDR of q < 0.01.

Figure S4. Analysis of Motion Content in Face and Object Movies, Related to Figure 2

(A) Responses of two ROIs, MT/MSTv/FST complex and LST, to two stimulus contrasts, face movie motion (natural face movies - face pictures; “face motion selectivity” in Figure S1C) and object movie motion (natural object movies - object pictures; “object motion selectivity” in Figure S1C). Both motion-selective regions responded at least as strongly to the motion in the object movies as to the motion in the face movies: equally strongly in LST and more strongly in the MT/MSTv/FST complex. * = p < 0.05 and ** = p < 0.01, corrected using the Holm–Bonferroni method for 6 tests (2 ROIs × (2 measures + comparison)). Dots on bar plots represent the values for individual subjects. Signal change is normalized per ROI. Error bars represent standard error. The data from LST are the same as those presented in Figure 2E–G. (B) Response of the 250 voxels in each subject’s temporal lobe most responsive to low-level motion (as measured by the low-level motion selectivity localizer) to face movie and object movie motion. Responses to the two types of motion are highly correlated. Linear regression suggests that the voxels in the temporal lobes most sensitive to low-level motion responded ~89% as strongly to motion in the face movies as to motion in the object movies. Signal change is normalized per subject. Both analyses suggest that motion content in face and object movies was well matched and, if not entirely equal, slightly larger in object movies than in face movies.
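
For readers unfamiliar with the Holm–Bonferroni correction mentioned in panel A, the following is a minimal sketch of the standard step-down procedure applied to a family of six tests. The function name and the raw p-values are hypothetical and are not taken from the study.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted p-values in the original order.

    The smallest raw p-value is multiplied by m, the next by m - 1, and so
    on; a running maximum keeps the adjusted values monotone, and each
    value is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p-values for 6 tests: 2 ROIs x (2 measures + comparison).
raw = [0.001, 0.004, 0.03, 0.2, 0.008, 0.6]
adjusted = holm_bonferroni(raw)
for p_raw, p_adj in zip(raw, adjusted):
    stars = "**" if p_adj < 0.01 else "*" if p_adj < 0.05 else "n.s."
    print(f"raw p = {p_raw:.3f} -> adjusted p = {p_adj:.3f} ({stars})")
```

Comparing the adjusted p-values against 0.05 and 0.01 is one way to arrive at the kind of significance markers used in the figure.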