Abstract

We propose a semiparametric method for estimating the precision matrix of high-dimensional elliptical distributions. Unlike most existing methods, our method naturally handles heavy-tailedness and conducts parameter estimation under a calibration framework, and thus achieves improved theoretical rates of convergence and better finite-sample performance in heavy-tailed applications. We further demonstrate the performance of the proposed method through thorough numerical experiments.

Keywords: Precision matrix, calibrated estimation, elliptical distribution, heavy-tailedness, semiparametric model

I. Introduction

We consider the problem of precision matrix estimation. Let $X = (X_1, \dots, X_d)^T$ be a $d$-dimensional random vector with mean $\mu \in \mathbb{R}^d$ and covariance matrix $\Sigma \in \mathbb{R}^{d \times d}$, where $\Sigma_{kj} = \mathbb{E}X_kX_j - \mathbb{E}X_k\,\mathbb{E}X_j$. We want to estimate the precision matrix $\Omega = \Sigma^{-1}$ based on $n$ independent observations. In this paper we focus on high-dimensional settings where $d/n \to \infty$. To handle the curse of dimensionality, we assume that $\Omega$ is sparse (i.e., many off-diagonal entries of $\Omega$ are zero).

A popular statistical model for precision matrix estimation is the multivariate Gaussian, i.e., X ~ N(μ, Σ). Under Gaussian models, a sparse precision matrix encodes the conditional independence relationships of the random variables [8], [21], which has motivated numerous applications in different research areas [3], [15], [36]. In the past decade, many precision matrix estimation methods have been proposed for Gaussian distributions. More specifically, let $x_1, \dots, x_n \in \mathbb{R}^d$ be $n$ independent observations of $X$. We define the sample covariance matrix as

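$$S = \frac{1}{n}\sum_{i=1}^{n} (x_i - \bar{x})(x_i - \bar{x})^T, \tag{1}$$

where $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$. [1], [11], [38] propose the penalized Gaussian log-likelihood method named graphical lasso (GLASSO), which solves

$$\widehat{\Omega} = \mathop{\mathrm{argmin}}_{\Omega \succ 0}\; -\log|\Omega| + \mathrm{tr}(S\Omega) + \lambda \sum_{j,k} |\Omega_{kj}|, \tag{2}$$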

where λ > 0 is a regularization parameter for controlling the bias-variance tradeoff. In another line of research, [5], [37] propose pseudo-likelihood methods to estimate the precision matrix. Their methods adopt a column-by-column estimation scheme and are more amenable to theoretical analysis. More specifically, given a matrix $A \in \mathbb{R}^{d \times d}$, let $A_{*j} = (A_{1j}, \dots, A_{dj})^T$ denote the $j$th column of $A$; we define $\|A_{*j}\|_1 = \sum_k |A_{kj}|$ and $\|A_{*j}\|_\infty = \max_k |A_{kj}|$. [5] propose the CLIME estimator, which solves

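$$\widehat{\Omega}_{*j} = \mathop{\mathrm{argmin}}_{\Omega_{*j}} \|\Omega_{*j}\|_1 \quad \text{subject to} \quad \|S\Omega_{*j} - I_{*j}\|_\infty \le \lambda \tag{3}$$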

to estimate the jth column of the precision matrix. Moreover, let ||A||1 = maxj ||A*j||1 be the matrix ℓ1 norm of A, and ||A||2 be the largest singular value of A, (i.e., the spectral norm of A), [5] show that if we choose

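$$\lambda \asymp \|\Omega\|_1 \sqrt{\frac{\log d}{n}}, \tag{4}$$

the CLIME estimator in (3) attains the rates of convergence

$$\|\widehat{\Omega} - \Omega\|_p = O_P\left(\|\Omega\|_1^2\, s \sqrt{\frac{\log d}{n}}\right), \tag{5}$$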

where $s = \max_j \sum_k I(\Omega_{kj} \neq 0)$, and p = 1, 2. Scalable software packages for GLASSO and CLIME have been developed, which scale to thousands of dimensions [16], [22], [40].

Though significant progress has been made in estimating Gaussian graphical models, most existing methods have two drawbacks: (i) They generally require the underlying distribution to be light-tailed [5], [7]. When this assumption is violated, these sample covariance matrix-based methods may perform poorly. (ii) They generally use the same tuning parameter to regularize the estimation of every column, which is not adaptive to the individual sparseness of each column (more details are provided in §III.B) and may lead to inferior finite sample performance. In other words, the regularization for estimating different columns of the precision matrix is not calibrated.

To overcome the above drawbacks, we propose a new sparse precision matrix estimation method, named EPIC (Estimating Precision matrIx with Calibration), which simultaneously handles heavy-tailed data and conducts calibrated estimation. To relax the tail conditions, we adopt a combination of the rank-based transformed Kendall’s tau estimator and Catoni’s M-estimator [7], [18]. Such a semiparametric combination has shown better statistical properties than the sample covariance matrix for heavy-tailed elliptical distributions [6], [7], [10], [17]. We explain more details in §II and §IV. To calibrate the parameter estimation, we exploit a new framework proposed by [12]. Under this framework, the optimal tuning parameter does not depend on any unknown quantity of the data distribution, and thus the EPIC estimator is tuning insensitive [25]. Computationally, the EPIC estimator is formulated as a convex program, which can be efficiently solved by the parametric simplex method [34]. Theoretically, we show that the EPIC estimator attains faster rates of convergence than those in (5) under mild conditions. Numerical experiments on both simulated and real datasets show that the EPIC method outperforms existing precision matrix estimation methods.

The rest of this paper is organized as follows: In §II, we briefly review the elliptical family; In §III, we describe the proposed method and derive the computational algorithm; In §IV, we analyze the statistical properties of the EPIC estimator; In §V and §VI, we conduct numerical experiments on both simulated and real datasets to illustrate the effectiveness of the proposed method; In §VII, we discuss other related precision matrix estimation methods and compare them with our method [23]-[25].

II. Background

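We start with some notation. Let $v = (v_1, \dots, v_d)^T \in \mathbb{R}^d$ be a vector; we define the vector norms $\|v\|_1 = \sum_j |v_j|$, $\|v\|_2^2 = \sum_j v_j^2$, and $\|v\|_\infty = \max_j |v_j|$. Let $\mathcal{S}$ be a subspace of $\mathbb{R}^d$; we use $v_{\mathcal{S}}$ to denote the projection of $v$ onto $\mathcal{S}$. We also define the orthogonal complement of $\mathcal{S}$ as $\mathcal{S}^\perp = \{u \in \mathbb{R}^d \mid u^Tv = 0 \text{ for all } v \in \mathcal{S}\}$. Given a matrix $A \in \mathbb{R}^{d \times d}$, let $A_{*j} = (A_{1j}, \dots, A_{dj})^T$ and $A_{k*} = (A_{k1}, \dots, A_{kd})^T$ denote the $j$th column and $k$th row of $A$ in vector form; we define the matrix norms $\|A\|_1 = \max_j \|A_{*j}\|_1$, $\|A\|_2 = \psi_{\max}(A)$, $\|A\|_\infty = \max_k \|A_{k*}\|_1$, $\|A\|_F^2 = \sum_j \|A_{*j}\|_2^2$, and $\|A\|_{\max} = \max_j \|A_{*j}\|_\infty$, where $\psi_{\max}(A)$ is the largest singular value of $A$. We use $\Lambda_{\max}(A)$ and $\Lambda_{\min}(A)$ to denote the largest and smallest eigenvalues of $A$. Moreover, we define the projection of $A_{*j}$ onto $\mathcal{S}$ as $A_{\mathcal{S}j}$.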

We then briefly review the elliptical family, which has the following definition.

Definition 2.1 ([10]): Given $\mu \in \mathbb{R}^d$ and a symmetric positive semidefinite matrix $\Sigma$ with $\mathrm{rank}(\Sigma) = r \le d$, we say that a $d$-dimensional random vector $X = (X_1, \dots, X_d)^T$ follows an elliptical distribution with parameters $\mu$, $\xi$, and $\Sigma$, denoted by

$$X \sim EC(\mu, \xi, \Sigma), \tag{6}$$

if $X$ has a stochastic representation

$$X \overset{d}{=} \mu + \xi A U, \tag{7}$$

where $\xi \ge 0$ is a continuous random variable independent of $U$. Here $U$ is uniformly distributed on the unit sphere in $\mathbb{R}^r$, $A \in \mathbb{R}^{d \times r}$, and $\Sigma = AA^T$.

Note that A and ξ in (7) can be properly rescaled without changing the distribution. Thus the existing literature usually imposes an additional constraint $\|\Sigma\|_{\max} = 1$ to make the distribution identifiable [10]. However, such a constraint does not necessarily make Σ the covariance matrix of X. Since we are interested in estimating the precision matrix in this paper, we require $\mathbb{E}(\xi^2) < \infty$ and rank(Σ) = d such that the precision matrix of the elliptical distribution exists. Under this assumption, we use an alternative constraint $\mathbb{E}(\xi^2) = d$, which not only makes the distribution identifiable but also makes Σ the conventional covariance matrix (e.g., as in the Gaussian distribution).
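To see why a second-moment normalization of ξ ties Σ to the covariance of X, a short calculation helps. The following is a sketch under the assumptions that $\mathbb{E}(\xi^2) < \infty$, that ξ is independent of U, and that U is uniform on the unit sphere in $\mathbb{R}^r$, so that $\mathbb{E}(U) = 0$ and $\mathbb{E}(UU^T) = I_r / r$:

```latex
\operatorname{Cov}(X)
  = \mathbb{E}\big[\xi^2\big]\, A\, \mathbb{E}\big[UU^T\big]\, A^T
  = \frac{\mathbb{E}(\xi^2)}{r}\, AA^T
  = \frac{\mathbb{E}(\xi^2)}{r}\, \Sigma .
```

In particular, when rank(Σ) = r = d, the normalization $\mathbb{E}(\xi^2) = d$ yields Cov(X) = Σ.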

Remark 1: Σ can be factorized as Σ = ΘZΘ, where Z is the Pearson correlation matrix, and Θ = diag(θ1, …, θd) with θj as the standard deviation of Xj. Since Θ is a diagonal matrix, we can rewrite the precision matrix Ω as $\Omega = \Theta^{-1}\Gamma\Theta^{-1}$, where $\Gamma = Z^{-1}$ is the inverse correlation matrix.

Remark 2: As a generalization of the Gaussian family, the elliptical family has been widely applied to many research areas such as dimensionality reduction [19], portfolio theory [14], and data visualization [33]. Many of these applications rely on an effective estimator of the precision matrix for elliptical distributions.

III. Method

Motivated by the above discussion, the EPIC method has three steps: We first use the transformed Kendall’s tau estimator and Catoni’s M-estimator to obtain $\widehat{Z}$ and $\widehat{\Theta}$ respectively; we then plug $\widehat{Z}$ into a calibrated inverse correlation matrix estimation procedure to obtain $\widehat{\Gamma}$; at last we assemble $\widehat{\Gamma}$ and $\widehat{\Theta}$ to obtain $\widehat{\Omega}$. We explain these three steps in more detail in the following subsections.

A. Correlation Matrix and Standard Deviation Estimation

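To estimate Z, we adopt the transformed Kendall’s tau estimator proposed in [10] and [23]. More specifically, we define a population version of the Kendall’s tau statistic between $X_j$ and $X_k$ as follows,

$$\tau_{kj} = \mathbb{E}\left[\mathrm{sign}\left((X_j - \widetilde{X}_j)(X_k - \widetilde{X}_k)\right)\right],$$

where $\widetilde{X}_j$ and $\widetilde{X}_k$ are independent copies of $X_j$ and $X_k$ respectively. For elliptical distributions, [10], [23] show that the $Z_{kj}$’s and $\tau_{kj}$’s have the following relationship

$$Z_{kj} = \sin\left(\frac{\pi}{2}\tau_{kj}\right). \tag{8}$$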

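Therefore, given n independent observations $x_1, \dots, x_n$ of $X$, where $x_i = (x_{i1}, \dots, x_{id})^T$, we first calculate a sample version of the Kendall’s tau statistic between $X_j$ and $X_k$ by

$$\widehat{\tau}_{kj} = \frac{2}{n(n-1)} \sum_{i < i'} \mathrm{sign}\left((x_{ij} - x_{i'j})(x_{ik} - x_{i'k})\right)$$

for all k ≠ j, and 1 otherwise. We then obtain a correlation matrix estimator by the same entrywise transformation as (8),

$$\widehat{Z} = [\widehat{Z}_{kj}] = \left[\sin\left(\frac{\pi}{2}\widehat{\tau}_{kj}\right)\right]. \tag{9}$$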
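The rank-based correlation estimate can be illustrated with a few lines of code. The following is a minimal sketch, assuming the pairwise sample Kendall's tau is the average sign concordance over all pairs of observations and that the transformation is sin(π/2 · τ); the function names `kendall_tau` and `transformed_kendall` are illustrative and not taken from the authors' software.

```python
import math
from itertools import combinations


def sign(t):
    """Return -1, 0, or 1 according to the sign of t."""
    return (t > 0) - (t < 0)


def kendall_tau(xs, ys):
    """Sample Kendall's tau: average sign concordance over all pairs i < i'."""
    n = len(xs)
    total = sum(
        sign((xs[a] - xs[b]) * (ys[a] - ys[b]))
        for a, b in combinations(range(n), 2)
    )
    return 2.0 * total / (n * (n - 1))


def transformed_kendall(data):
    """Map each pairwise tau to sin(pi/2 * tau); the diagonal is set to 1.

    `data` is a list of n observations, each a list of d coordinates.
    """
    d = len(data[0])
    cols = [[row[j] for row in data] for j in range(d)]
    Z = [[1.0] * d for _ in range(d)]
    for j, k in combinations(range(d), 2):
        z = math.sin(0.5 * math.pi * kendall_tau(cols[j], cols[k]))
        Z[j][k] = Z[k][j] = z
    return Z
```

Because only the signs of pairwise differences enter the statistic, replacing one observation by an extreme value of the same rank leaves the estimate unchanged, which is the source of its robustness to heavy tails.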

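To estimate Θ, we exploit Catoni’s M-estimator proposed in [7]. For heavy-tailed distributions, [7] show that Catoni’s M-estimator has better theoretical and empirical performance than the sample moment-based estimator. In particular, let $\psi(t) = \mathrm{sign}(t) \cdot \log(1 + |t| + t^2/2)$ be a univariate function where sign(0) = 0. Let $\widehat{\mu}_j$ and $\widehat{m}_j$ be the estimators of $\mathbb{E}X_j$ and $\mathbb{E}X_j^2$ respectively, which solve the following two equations:

$$\sum_{i=1}^{n} \psi\left((x_{ij} - \mu_j)\sqrt{\frac{2}{nK_{\max}}}\right) = 0, \tag{10}$$

$$\sum_{i=1}^{n} \psi\left((x_{ij}^2 - m_j)\sqrt{\frac{2}{nK_{\max}}}\right) = 0. \tag{11}$$

Here $K_{\max}$ is a preset upper bound of $\max_j \mathrm{Var}(X_j)$ and $\max_j \mathrm{Var}(X_j^2)$. [7] shows that the solutions to (10) and (11) must exist and can be efficiently computed by the Newton-Raphson algorithm [31]. Once we obtain $\widehat{\mu}_j$ and $\widehat{m}_j$, we estimate the marginal standard deviation $\theta_j$ by

$$\widehat{\theta}_j = \sqrt{\max\left\{\widehat{m}_j - \widehat{\mu}_j^2,\; K_{\min}\right\}}, \tag{12}$$

where $K_{\min}$ is a preset lower bound of $\min_j \theta_j^2$.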
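A Catoni-type mean estimate is straightforward to compute numerically. The sketch below assumes the estimating equation Σᵢ ψ(α(xᵢ − μ)) = 0 with scale α = sqrt(2/(n·K_max)), and it uses bisection rather than the Newton-Raphson iteration cited in the text, since the left-hand side is monotone in μ. The names `psi`, `catoni_mean`, and `k_max` are illustrative assumptions, not the authors' implementation.

```python
import math


def psi(t):
    """Catoni's influence function: sign(t) * log(1 + |t| + t^2 / 2)."""
    if t == 0:
        return 0.0
    return math.copysign(math.log(1.0 + abs(t) + 0.5 * t * t), t)


def catoni_mean(xs, k_max, iters=200):
    """Solve sum_i psi(alpha * (x_i - mu)) = 0 for mu by bisection.

    alpha = sqrt(2 / (n * k_max)) is an assumed scale; k_max is a
    user-supplied upper bound on the variance of the data.
    """
    n = len(xs)
    alpha = math.sqrt(2.0 / (n * k_max))

    def score(mu):
        return sum(psi(alpha * (x - mu)) for x in xs)

    # score is decreasing in mu, non-negative at min(xs) and
    # non-positive at max(xs), so a root lies in between.
    lo, hi = min(xs), max(xs)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Applying the same routine to the squared observations gives a second-moment estimate, from which a truncated standard deviation estimate can be formed. On a sample with a single gross outlier, the estimate stays close to the bulk of the data, whereas the sample mean is pulled toward the outlier.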

Remark 3: We choose the combination of the transformed Kendall’s tau estimator and Catoni’s M-estimator instead of the sample covariance matrix because we are handling heavy-tailed elliptical distributions. For light-tailed distributions (e.g., the Gaussian distribution), we can still use the sample correlation matrix and sample standard deviations to estimate Z and Θ. The extension of our proposed methodology and theory is straightforward. See more details in §IV.

B. Calibrated Inverse Correlation Matrix Estimation

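We then plug the transformed Kendall’s tau estimator $\widehat{Z}$ into the following convex program,

$$(\widehat{\Gamma}_{*j}, \widehat{\tau}_j) = \mathop{\mathrm{argmin}}_{\Gamma_{*j},\, \tau_j} \|\Gamma_{*j}\|_1 + c\,\tau_j \quad \text{subject to} \quad \|\widehat{Z}\Gamma_{*j} - I_{*j}\|_\infty \le \lambda\tau_j,\;\; \|\Gamma_{*j}\|_1 \le \tau_j, \tag{13}$$

for all j = 1, …, d, where c can be any constant between 0 and 1 (e.g., c = 0.5). Here τj serves as an auxiliary variable to calibrate the regularization [12], [32]. Both the objective function and the constraints in (13) contain τj, which prevents τj from being chosen either too large or too small.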

To gain more intuition of the formulation of (13), we first consider estimating the jth column of the inverse correlation matrix using the CLIME method in a regularization form as follows,

| (14) |

where ν > 0 is the regularization parameter. The next proposition presents an alternative formulation of (14).

Proposition III.1: The following optimization problem

| (15) |

has the same solution as (14).

The proof of Proposition III.1 is provided in Appendix A. If we set ν/c = λ, then the only difference between (13) and (15) is that (13) contains a constraint ||Γ*j||1 ≤ τj. Due to the complementary slackness, this additional constraint encourages the regularization λτj to be proportional to the ℓ1 norm of the jth column (weak sparseness). From the theoretical analysis in §IV, we see that the regularization is calibrated in this way.

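In the rest of this subsection, we omit the index j in (13) for notational simplicity. We denote $\Gamma_{*j}$, $I_{*j}$, and $\tau_j$ by γ, e, and τ respectively. By reparametrizing $\gamma = \gamma^+ - \gamma^-$ with $\gamma^+, \gamma^- \ge 0$, we can rewrite (13) as the following linear program,

$$\min_{\gamma^+,\,\gamma^-,\,\tau}\; \mathbf{1}^T\gamma^+ + \mathbf{1}^T\gamma^- + c\tau \quad \text{subject to} \quad \begin{bmatrix} \widehat{Z} & -\widehat{Z} & -\boldsymbol{\lambda} \\ -\widehat{Z} & \widehat{Z} & -\boldsymbol{\lambda} \\ \mathbf{1}^T & \mathbf{1}^T & -1 \end{bmatrix} \begin{bmatrix} \gamma^+ \\ \gamma^- \\ \tau \end{bmatrix} \le \begin{bmatrix} e \\ -e \\ 0 \end{bmatrix}, \quad \gamma^+ \ge 0,\; \gamma^- \ge 0,\; \tau \ge 0, \tag{16}$$

where $\boldsymbol{\lambda} = \lambda\mathbf{1}$. Though (16) can be solved by general linear program solvers (e.g., the simplex method as suggested in [5]), these general solvers do not scale well to large problems. In Appendix B, we provide a more efficient parametric simplex method [34], which naturally exploits the underlying sparsity structure and attains better empirical performance than the simplex method.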
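To make the linear-program reformulation concrete, the sketch below assembles the cost vector, constraint matrix, and right-hand side for one column. It assumes the calibrated program minimizes ‖γ‖₁ + cτ subject to ‖Zγ − e‖∞ ≤ λτ and ‖γ‖₁ ≤ τ, with γ split into nonnegative parts; `build_epic_lp` and `is_feasible` are hypothetical helper names, and no solver is included.

```python
def build_epic_lp(Z, j, lam, c=0.5):
    """Return (cost, A, b) for: minimize cost^T x subject to A x <= b, x >= 0.

    The variable is x = (gamma_plus, gamma_minus, tau), of length 2d + 1.
    """
    d = len(Z)
    e = [1.0 if k == j else 0.0 for k in range(d)]
    cost = [1.0] * (2 * d) + [c]
    A, b = [], []
    for k in range(d):  # row k of  Z gamma - lam * tau <= e
        A.append(list(Z[k]) + [-z for z in Z[k]] + [-lam])
        b.append(e[k])
    for k in range(d):  # row k of -Z gamma - lam * tau <= -e
        A.append([-z for z in Z[k]] + list(Z[k]) + [-lam])
        b.append(-e[k])
    A.append([1.0] * (2 * d) + [-1.0])  # sum(gamma_plus + gamma_minus) <= tau
    b.append(0.0)
    return cost, A, b


def is_feasible(A, b, x, tol=1e-12):
    """Check A x <= b and x >= 0 up to a small tolerance."""
    rows_ok = all(
        sum(a * v for a, v in zip(row, x)) <= rhs + tol
        for row, rhs in zip(A, b)
    )
    return rows_ok and all(v >= -tol for v in x)
```

The returned triple can be handed to any linear-program solver that accepts inequality-form problems with nonnegative variables. The all-zero vector is not feasible here because the right-hand side contains negative entries, which is the situation the perturbation in the parametric simplex method is designed to handle.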

C. Symmetric Precision Matrix Estimation

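Once we get the inverse correlation matrix estimate $\widehat{\Gamma}$, we estimate the precision matrix by

$$\widehat{\Omega} = \widehat{\Theta}^{-1}\widehat{\Gamma}\,\widehat{\Theta}^{-1}.$$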

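Remark 4: A possible alternative is that we first assemble a covariance matrix estimator

$$\widehat{S} = \widehat{\Theta}\widehat{Z}\widehat{\Theta}, \tag{17}$$

then directly estimate Ω by solving

$$(\widehat{\Omega}_{*j}, \widehat{\tau}_j) = \mathop{\mathrm{argmin}}_{\Omega_{*j},\, \tau_j} \|\Omega_{*j}\|_1 + c\,\tau_j \quad \text{subject to} \quad \|\widehat{S}\Omega_{*j} - I_{*j}\|_\infty \le \lambda\tau_j,\;\; \|\Omega_{*j}\|_1 \le \tau_j,$$

for all j = 1, …, d. However, such a direct estimation procedure makes the regularization parameter selection sensitive to marginal variability. See [20], [26], [29] for more discussion of the ensemble rule.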

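The EPIC method does not guarantee the symmetry of $\widehat{\Omega}$. To get a symmetric estimate, we take an additional projection step to obtain a symmetric estimator

$$\widetilde{\Omega} = \mathop{\mathrm{argmin}}_{\Omega = \Omega^T} \|\Omega - \widehat{\Omega}\|_*, \tag{18}$$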

where ||·||∗ can be the matrix ℓ1, Frobenius, or max norm. More details about how to choose a suitable norm will be explained in the next section.

Remark 5: For the Frobenius and max norms, (18) has a closed form solution as follows,

$$\widetilde{\Omega} = \frac{1}{2}\left(\widehat{\Omega} + \widehat{\Omega}^T\right).$$

For the matrix ℓ1 norm, see our proposed smoothed proximal gradient algorithm in Appendix C.
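For the Frobenius norm, the symmetric matrix nearest to a square matrix M is the entrywise average (M + Mᵀ)/2, and the same average also attains the minimum under the elementwise max norm. A minimal sketch of that step follows; the function name `symmetrize` is illustrative.

```python
def symmetrize(M):
    """Return (M + M^T) / 2 for a square matrix given as a list of rows."""
    d = len(M)
    return [[0.5 * (M[k][j] + M[j][k]) for j in range(d)] for k in range(d)]
```

For example, `symmetrize([[1, 2], [4, 3]])` averages the two off-diagonal entries 2 and 4 and leaves the diagonal untouched.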

IV. Statistical Properties

To analyze the statistical properties of the EPIC estimator, we define the following class of sparse symmetric matrices,

where κu is a constant, and (s, d, M) may scale with the sample size n. We assume that the following conditions hold:

(A.1) Γ belongs to the class of sparse symmetric matrices defined above,

(A.2) θmin ≤ minj θj ≤ maxj θj ≤ θmax,

(A.3) maxj |μj| ≤ μmax, $\max_j \mathbb{E}X_j^4 \le K$,

(A.4) s² log d/n → 0,

where θmax, θmin, μmax, and K are constants.

Remark 6: Condition (A.3) only requires the fourth moment of the distribution to be finite. In contrast, sample covariance-based estimation methods cannot achieve such theoretical results under this condition. See more details in [5] and [7].

Remark 7: The bounded mean in Condition (A.3) is actually a mild assumption. Existing high dimensional theories (Cai et al. 2011; Yuan, 2010; Rothman et al. 2008) on sparse precision matrix estimation all require the distribution to be light-tailed. For example, there exists some constant K such that $\max_j \mathbb{E}|X_j|^r \le K$ for some r > 4. By Jensen’s inequality, we have $|\mathbb{E}X_j|^r \le \mathbb{E}|X_j|^r \le K$, which implies that $\max_j |\mu_j| \le K^{1/r}$. In other words, they also require maxj |μj| to be bounded.

Before we proceed with main results, we first present the following important lemma.

Lemma 1: We assume that X ~ EC(μ, ξ, Σ) and (A.2)-(A.4) hold. Let $\widehat{Z}$ and $\widehat{\Theta}$ be defined in (9) and (12). There exist universal constants κ1 and κ2 such that for large enough n,

| (19) |

| (20) |

The proof of Lemma 1 is provided in Appendix D.

Remark 8: Lemma 1 shows that the transformed Kendall’s tau estimator and Catoni’s M-estimator possess good concentration properties for heavy-tailed elliptical distributions. That enables us to obtain a consistent precision matrix estimator in high dimensions.

A. Parameter Estimation Consistency

Theorem IV.1 provides the rates of convergence for precision matrix estimation under the matrix ℓ1, spectral, and Frobenius norms.

Theorem IV.1: Suppose that X ~ EC(μ, ξ, Σ) and (A.1)-(A.4) hold. If we take $\lambda = \kappa_1\sqrt{\log d/n}$ and choose the matrix ℓ1 norm as ||·||* in (18), then for large enough n and p = 1, 2, there exists a universal constant C1 such that

| (21) |

Moreover, if we choose the Frobenius norm as ||·||* in (18), then for large enough n, there exists a universal constant C2 such that

| (22) |

The proof of Theorem IV.1 is provided in Appendix E. Note that the rates of convergence obtained in the above theorem are faster than those in [5].

B. Model Selection Consistency

Theorem IV.2 provides the rate of convergence under the elementwise max norm.

Theorem IV.2: Suppose that X ~ EC(μ, ξ, Σ) and (A.1)-(A.4) hold. If we take $\lambda = \kappa_1\sqrt{\log d/n}$ and choose the max norm for (18), then for large enough n, there exists a universal constant C3 such that

| (23) |

Moreover, let E = {(k, j)|Ωkj ≠ 0}, and , if there exists large enough constant C4 such that

then we have .

The proof of Theorem IV.2 is provided in Appendix G. The obtained rate of convergence in Theorem IV.2 is comparable to that of [5].

Remark 9: Our selected regularization parameter in Theorems IV.1 and IV.2 does not contain any unknown parameter of the underlying distribution (e.g., $\|\Gamma\|_1$). Note that κ1 comes from (19) in Lemma 1. Theoretically, we can choose κ1 as a reasonably large constant without any additional tuning (see more details in [23]). In practice, we found that fine-tuning κ1 delivers better finite sample performance.

V. Numerical Results

In this section, we compare the EPIC estimator with several competing estimators including:

CLIME.RC: We obtain the sparse precision matrix estimator by plugging the covariance matrix estimator defined in (17) into (3).

CLIME.SC: We obtain the sparse precision matrix estimator by plugging the sample covariance matrix estimator S defined in (1) into (3).

GLASSO.RC: We obtain the sparse precision matrix estimator by plugging the covariance matrix estimator defined in (17) into (2).

Moreover, (3) is also solved by the parametric simplex method, as in our proposed EPIC method, and (2) is solved by the block coordinate descent algorithm. All experiments are conducted on a PC with a Core i5 3.3GHz CPU and 16GB memory. All programs are coded in C using double precision and are called from R.

A. Data Generation

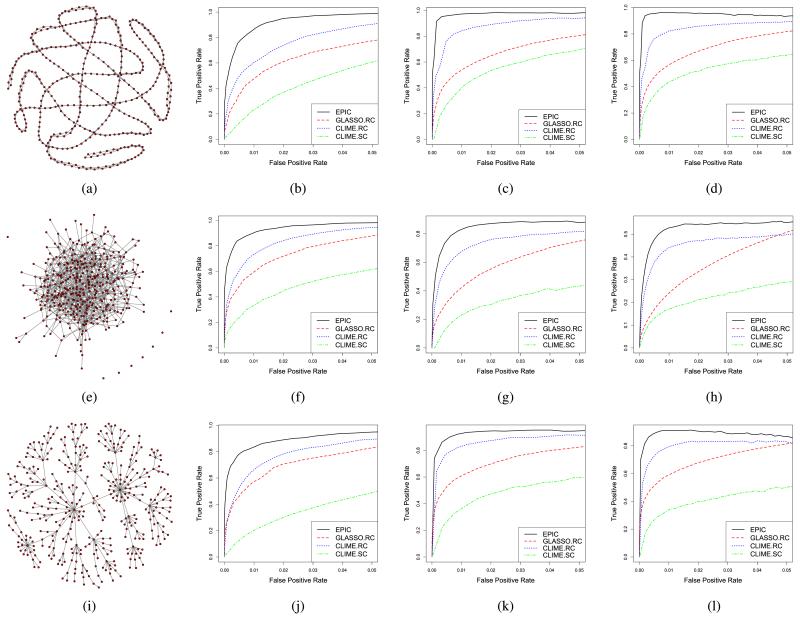

We consider three different settings for comparison: (1) d = 101; (2) d = 201; (3) d = 401. We adopt the following three graph generation schemes, as illustrated in Figure 1, to obtain precision matrices:

Band. Each node is assigned an index j with j = 1, …, d. Two nodes are connected by an edge if the difference between their indices is no larger than 2.

Erdös-Rényi. We set an edge between each pair of nodes with probability 4/d, independently of the other edges.

Scale-free. The degree distribution of the graph follows a power law. The graph is generated by the preferential attachment mechanism. The graph begins with an initial chain graph of 10 nodes. New nodes are added to the graph one at a time. Each new node is connected to an existing node with a probability that is proportional to the degree of that existing node. Formally, the probability $p_i$ that the new node is connected to the $i$th existing node is $p_i = k_i / \sum_j k_j$, where $k_i$ is the degree of node $i$.

Fig. 1. Three different graph patterns and corresponding average ROC curves. EPIC outperforms the competitors throughout all settings. (a) Band (d = 401). (b) Band (d = 101). (c) Band (d = 201). (d) Band (d = 401). (e) Erdös-Rényi (d = 401). (f) Erdös-Rényi (d = 101). (g) Erdös-Rényi (d = 201). (h) Erdös-Rényi (d = 401). (i) Scale-free (d = 401). (j) Scale-free (d = 101). (k) Scale-free (d = 201). (l) Scale-free (d = 401).
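The three graph generation schemes can be sketched in a few lines. The code below is an illustration under stated assumptions: nodes are indexed from 0, edges are stored as pairs (j, k) with j < k, each new node in the scale-free scheme attaches to exactly one existing node chosen with probability proportional to its degree, and d is at least the size of the initial chain. The function names are hypothetical and the random number generator is passed in explicitly.

```python
import random


def band_graph(d, width=2):
    """Connect nodes whose indices differ by at most `width`."""
    return {(j, k) for j in range(d) for k in range(j + 1, d) if k - j <= width}


def erdos_renyi_graph(d, rng):
    """Include each possible edge independently with probability 4 / d."""
    p = 4.0 / d
    return {(j, k) for j in range(d) for k in range(j + 1, d) if rng.random() < p}


def scale_free_graph(d, rng, n_init=10):
    """Preferential attachment starting from a chain of `n_init` nodes."""
    edges = {(i, i + 1) for i in range(n_init - 1)}
    degree = [0] * d
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    for new in range(n_init, d):
        # Pick one existing node with probability k_i / sum_j k_j.
        target = rng.choices(range(new), weights=degree[:new])[0]
        edges.add((target, new))
        degree[target] += 1
        degree[new] += 1
    return edges
```

A call such as `scale_free_graph(101, random.Random(0))` returns a tree with 100 edges, since the initial chain contributes 9 edges and each of the remaining nodes adds one.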

Let G be the adjacency matrix of the generated graph, we calculate as

where all Ukj’s are independently sampled from the uniform distribution Uniform (−1, +1). Let be the rescaling operator that converts a symmetric positive definite matrix to the corresponding correlation matrix, we further calculate

where Θ is the diagonal standard deviation matrix with for j = 1,…, d.

We then generate independent samples from the t-distribution with 6 degrees of freedom, mean 0, and covariance Σ. For the EPIC estimator, we set c = 0.5 in (13). For the Catoni’s M-estimator, we set Kmax = 10 and Kmin = 0.1.
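Sampling from a multivariate t-distribution with a prescribed covariance requires a rescaling, because a standard t vector with ν degrees of freedom has covariance ν/(ν − 2) times its scale matrix. The sketch below assumes mean zero and ν > 2, draws a Gaussian vector with covariance Σ through a Cholesky factor, and divides by an independent chi-square variable scaled so that the result has covariance exactly Σ. The helper names `cholesky` and `sample_mvt` are illustrative.

```python
import math
import random


def cholesky(S):
    """Lower-triangular L with L L^T = S for a symmetric positive definite S."""
    d = len(S)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def sample_mvt(n, Sigma, df, rng):
    """Draw n mean-zero multivariate-t vectors whose covariance is Sigma (df > 2)."""
    d = len(Sigma)
    L = cholesky(Sigma)
    out = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        g = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
        scale = math.sqrt((df - 2.0) / g)    # E[(df - 2) / g] = 1, so Cov(X) = Sigma
        out.append([scale * sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(d)])
    return out
```

With `df = 6`, as in the experiments described above, the fourth moment is finite but higher moments grow quickly, so sample covariance estimates converge noticeably more slowly than in the Gaussian case.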

B. Timing Performance

We first evaluate the computational performance of the parametric simplex method. For each model, we choose a regularization parameter that yields approximately 0.05 · d(d − 1) nonzero off-diagonal entries. The EPIC and CLIME methods are solved by the parametric simplex method, which is described in Appendix B. The GLASSO is solved by the dual block coordinate descent algorithm, which is described in [11]. Table I summarizes the timing performance averaged over 100 replications. To obtain the baseline performance, we solve the CLIME.SC method using the simplex method as suggested in [5]. We see that all four methods greatly outperform the baseline. The EPIC, CLIME.RC, and CLIME.SC methods attain similar timing performance for all settings, and the GLASSO.RC method is more efficient than the others for d = 201 and d = 401.

TABLE I. Timing Performance of Different Estimators on the Band, Erdös-Rényi, and Scale-Free Models (in Seconds). The Baseline Performance Is Obtained by Solving the CLIME.SC Method Using the Simplex Method.

| Model | d | EPIC | GLASSO.RC | CLIME.RC | CLIME.SC | BASELINE |
|---|---|---|---|---|---|---|
| Band | 101 | 0.1561(0.0248) | 0.3633(0.0070) | 0.1233(0.0057) | 0.1701(0.0119) | 49.467(1.7862) |
| Band | 201 | 1.6622(0.1253) | 0.4417(0.0122) | 1.5897(0.1249) | 1.6085(0.0518) | 687.57(23.720) |
| Band | 401 | 23.061(0.5777) | 1.0864(0.1403) | 24.441(1.5344) | 25.445(3.8066) | 4756.4(170.25) |
| Erdös-Rényi | 101 | 0.1414(0.0079) | 0.3703(0.0072) | 0.1309(0.0331) | 0.2073(0.0925) | 59.775(2.0521) |
| Erdös-Rényi | 201 | 1.6214(0.5175) | 0.4448(0.0164) | 1.5992(0.1840) | 1.6155(0.2957) | 803.51(29.835) |
| Erdös-Rényi | 401 | 21.722(0.5470) | 1.1517(0.0959) | 22.795(0.6999) | 24.230(3.1871) | 4531.7(151.46) |
| Scale-free | 101 | 0.2245(0.0514) | 0.4398(0.0843) | 0.1509(0.0054) | 0.1871(0.0149) | 55.112(1.7109) |
| Scale-free | 201 | 1.8682(0.1078) | 0.4632(0.0067) | 1.5472(0.1350) | 1.7235(0.1778) | 865.98(31.399) |
| Scale-free | 401 | 21.926(0.7112) | 1.0093(0.1140) | 23.135(1.4318) | 25.596(3.3401) | 4991.2(202.44) |

C. Parameter Estimation

To select the regularization parameter, we independently generate a validation set of n samples from the same distribution. We tune λ over a refined grid, and the selected optimal regularization parameter is $\widehat{\lambda} = \mathop{\mathrm{argmin}}_{\lambda} \|\widehat{\Omega}^{\lambda}\widetilde{\Sigma} - I\|_{\max}$, where $\widehat{\Omega}^{\lambda}$ denotes the estimated precision matrix of the training set using the regularization parameter λ, and $\widetilde{\Sigma}$ denotes the estimated covariance matrix of the validation set using either (1) or (17). Tables II and III summarize the numerical results averaged over 100 replications. We see that the EPIC estimator outperforms the GLASSO.RC and CLIME.RC estimators in all settings except one: for the scale-free model with d = 101, CLIME.RC attains a slightly smaller spectral norm error.

TABLE II. Quantitative Comparison of Different Estimators on the Band, Erdös-Rényi, and Scale-Free Models. Errors Are Measured in the Spectral Norm. The EPIC Estimator Outperforms the Competitors in All Settings Except the Scale-Free Model With d = 101

| Model | d | EPIC | GLASSO.RC | CLIME.RC | CLIME.SC |
|---|---|---|---|---|---|
| Band | 101 | 3.3748(0.2081) | 4.4360(0.1445) | 3.3961(0.4403) | 3.6885(0.5850) |
| Band | 201 | 3.3283(0.1114) | 4.8616(0.0644) | 3.4559(0.0979) | 4.4789(0.3399) |
| Band | 401 | 3.5933(0.5192) | 5.1667(0.0354) | 4.0623(0.2397) | 5.7164(0.9666) |
| Erdös-Rényi | 101 | 2.1849(0.2281) | 2.6681(0.1293) | 2.6787(0.8414) | 2.3391(0.2976) |
| Erdös-Rényi | 201 | 1.8322(0.0769) | 2.3753(0.0949) | 2.0106(0.3943) | 2.0528(0.1548) |
| Erdös-Rényi | 401 | 1.3322(0.1294) | 2.4265(0.0564) | 2.0051(0.4144) | 4.0667(1.1174) |
| Scale-free | 101 | 2.1113(0.3081) | 2.9979(0.1654) | 2.0401(0.3703) | 2.6541(0.5882) |
| Scale-free | 201 | 2.3519(0.1779) | 3.2394(0.1078) | 2.3785(0.4186) | 2.5789(0.5139) |
| Scale-free | 401 | 3.2273(0.1201) | 4.0105(0.5812) | 3.3139(0.5812) | 3.9287(1.1750) |

TABLE III. Quantitative Comparison of Different Estimators on the Band, Erdös-Rényi, and Scale-Free Models. Errors Are Measured in the Frobenius Norm. The EPIC Estimator Outperforms the Competitors in All Settings

| Model | d | EPIC | GLASSO.RC | CLIME.RC | CLIME.SC |
|---|---|---|---|---|---|
| Band | 101 | 9.4307(0.3245) | 11.069(0.2618) | 9.7538(0.3949) | 11.392(0.8319) |
| Band | 201 | 12.720(0.2282) | 16.135(0.1399) | 13.533(0.1898) | 14.850(0.6167) |
| Band | 401 | 18.298(1.0537) | 23.177(0.1957) | 20.412(0.2366) | 25.254(1.0002) |
| Erdös-Rényi | 101 | 6.0660(0.1552) | 6.8777(0.2115) | 6.7097(0.3672) | 7.3789(0.4390) |
| Erdös-Rényi | 201 | 6.7794(0.1632) | 8.1531(0.1828) | 7.6175(0.2616) | 8.3555(0.2844) |
| Erdös-Rényi | 401 | 7.3497(0.1743) | 10.795(0.1323) | 8.3869(0.4755) | 11.104(0.6069) |
| Scale-free | 101 | 4.6695(0.2435) | 5.6689(0.2344) | 4.9658(0.1762) | 6.2264(0.3841) |
| Scale-free | 201 | 5.6732(0.1782) | 7.2768(0.0940) | 6.2343(0.2401) | 7.2842(0.3310) |
| Scale-free | 401 | 7.2979(0.1094) | 9.0940(0.0935) | 7.3765(0.2328) | 9.5396(0.5636) |

D. Model Selection

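To evaluate the model selection performance, we calculate the ROC curve of each obtained regularization path using the false positive rate (FPR) and true positive rate (TPR) defined as follows,

$$\mathrm{FPR} = \frac{\sum_{k \neq j} I(\widehat{\Omega}_{kj} \neq 0,\; \Omega_{kj} = 0)}{\sum_{k \neq j} I(\Omega_{kj} = 0)}, \qquad \mathrm{TPR} = \frac{\sum_{k \neq j} I(\widehat{\Omega}_{kj} \neq 0,\; \Omega_{kj} \neq 0)}{\sum_{k \neq j} I(\Omega_{kj} \neq 0)}.$$

Figure 1 summarizes ROC curves of all methods averaged over 100 replications. We see that the EPIC estimator outperforms the competing estimators throughout all settings. Similarly, our method outperforms the sample covariance matrix-based CLIME estimator.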
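One point on such an ROC curve can be computed by comparing the support of an estimate with the support of the true precision matrix. The sketch below assumes that only off-diagonal entries are counted and that an entry is declared nonzero when its magnitude exceeds a tolerance `tol`; both choices, and the name `fpr_tpr`, are assumptions made for illustration.

```python
def fpr_tpr(omega_hat, omega, tol=0.0):
    """False and true positive rates over the off-diagonal support."""
    d = len(omega)
    fp = tp = neg = pos = 0
    for k in range(d):
        for j in range(d):
            if k == j:
                continue
            selected = abs(omega_hat[k][j]) > tol
            if omega[k][j] != 0:
                pos += 1
                tp += selected
            else:
                neg += 1
                fp += selected
    return fp / neg, tp / pos
```

Evaluating this pair for every estimate along a regularization path and plotting TPR against FPR traces out one ROC curve.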

VI. Real Data Example

To illustrate the effectiveness of the proposed EPIC method, we adopt the sonar dataset from the UCI Machine Learning Repository [13]. The dataset contains 101 patterns obtained by bouncing sonar signals off a metal cylinder at various angles and under various conditions, and 97 patterns obtained from rocks under similar conditions. Each pattern is a set of 60 features. Each feature represents the logarithm of the energy integrated over a certain period of time within a particular frequency band. Our goal is to discriminate between sonar signals bounced off a metal cylinder and those bounced off a roughly cylindrical rock.

We randomly split the data into two sets. The training set contains 80 metal and 77 rock patterns. The testing set contains 21 metal and 20 rock patterns. Let $\mu^{(k)}$ be the class conditional means of the data, where k = 1 represents the metal category and k = 0 represents the rock category. [5] assume that the two classes share the same covariance matrix, and then adopt the sample mean for estimating the $\mu^{(k)}$’s and the sample covariance matrix-based CLIME estimator for estimating Ω. In contrast, we adopt Catoni’s M-estimator for estimating the $\mu^{(k)}$’s and the EPIC estimator for estimating Ω. We classify a sample x to the metal category if

$$\left(x - \frac{\widehat{\mu}^{(1)} + \widehat{\mu}^{(0)}}{2}\right)^T \widehat{\Omega}\left(\widehat{\mu}^{(1)} - \widehat{\mu}^{(0)}\right) \ge 0,$$

and to the rock category otherwise. We use the testing set to evaluate the performance of the EPIC estimator. For tuning parameter selection, we use 5-fold cross validation on the training set to pick the regularization parameter λ.
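A linear discriminant rule driven by an estimated precision matrix can be sketched as follows. The code assumes the common two-class rule that assigns x to class 1 when (x − (μ₁ + μ₀)/2)ᵀ Ω (μ₁ − μ₀) ≥ 0, with equal class priors; whether the authors use exactly this form is an assumption, and `lda_predict` is a hypothetical name.

```python
def lda_predict(x, mu1, mu0, omega):
    """Return 1 if (x - (mu1 + mu0)/2)^T Omega (mu1 - mu0) >= 0, else 0."""
    d = len(x)
    diff = [mu1[k] - mu0[k] for k in range(d)]
    centered = [x[k] - 0.5 * (mu1[k] + mu0[k]) for k in range(d)]
    # w = Omega (mu1 - mu0) is the discriminant direction.
    w = [sum(omega[k][j] * diff[j] for j in range(d)) for k in range(d)]
    score = sum(centered[k] * w[k] for k in range(d))
    return 1 if score >= 0 else 0
```

The rule only needs the product of the precision matrix with the mean difference, so a sparse estimate of Ω yields a discriminant direction that is cheap to compute.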

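To evaluate the classification performance, we use the criteria of misclassification rate, specificity, sensitivity, and Matthews Correlation Coefficient (MCC). More specifically, let the $y_i$’s and $\widehat{y}_i$’s be the true labels and predicted labels of the testing samples; we define

$$\text{Misclassification rate} = \frac{\mathrm{FP} + \mathrm{FN}}{\mathrm{TP} + \mathrm{TN} + \mathrm{FP} + \mathrm{FN}}, \quad \text{Specificity} = \frac{\mathrm{TN}}{\mathrm{TN} + \mathrm{FP}}, \quad \text{Sensitivity} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}},$$

$$\mathrm{MCC} = \frac{\mathrm{TP} \cdot \mathrm{TN} - \mathrm{FP} \cdot \mathrm{FN}}{\sqrt{(\mathrm{TP} + \mathrm{FP})(\mathrm{TP} + \mathrm{FN})(\mathrm{TN} + \mathrm{FP})(\mathrm{TN} + \mathrm{FN})}},$$

where

$$\mathrm{TP} = \sum_i I(\widehat{y}_i = 1,\, y_i = 1), \quad \mathrm{FP} = \sum_i I(\widehat{y}_i = 1,\, y_i = 0), \quad \mathrm{TN} = \sum_i I(\widehat{y}_i = 0,\, y_i = 0), \quad \mathrm{FN} = \sum_i I(\widehat{y}_i = 0,\, y_i = 1).$$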

Table IV summarizes the performance of both methods averaged over 100 replications (with standard errors in parentheses). We see that the EPIC estimator clearly outperforms the competitor on sensitivity and misclassification rate, but is slightly worse on specificity. The overall classification performance measured by MCC shows that the EPIC estimator improves on the competitor by about 0.07 (0.6023 versus 0.5288).
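The four criteria can be computed from the confusion counts of a binary classifier. The following sketch uses the standard definitions with label 1 as the positive class; the function name `classification_metrics` and the dictionary keys are illustrative, and the MCC is set to 0 when its denominator vanishes, which is a convention assumed here.

```python
import math


def classification_metrics(y_true, y_pred):
    """Misclassification rate, specificity, sensitivity, and MCC for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "misclassification": (fp + fn) / len(pairs),
        "specificity": tn / (tn + fp),
        "sensitivity": tp / (tp + fn),
        "mcc": (tp * tn - fp * fn) / denom if denom > 0 else 0.0,
    }
```

Unlike the misclassification rate, the MCC accounts for all four confusion counts at once, which makes it a convenient single summary when sensitivity and specificity move in opposite directions.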

TABLE IV. Quantitative Comparison of the EPIC and Sample Covariance Matrix-Based CLIME Estimators in the Sonar Data Classification

| Method | Misclassification Rate | Specificity | Sensitivity | MCC |
|---|---|---|---|---|
| EPIC | 0.1990(0.0285) | 0.7288(0.0499) | 0.8579(0.0301) | 0.6023(0.0665) |
| CLIME.SC | 0.2362(0.0317) | 0.7460(0.0403) | 0.7791(0.0429) | 0.5288(0.0631) |

VII. Discussion and Conclusion

In this paper, we propose a new sparse precision matrix estimation method for the elliptical family. Our method handles heavy-tailedness and conducts parameter estimation under a calibration framework. We show that the proposed method achieves improved rates of convergence and better finite sample performance than existing methods. The effectiveness of the proposed method is further illustrated by numerical experiments on both simulated and real datasets.

[25] proposed another calibrated graph estimation method named TIGER for the Gaussian family. However, unlike the EPIC estimator, the TIGER method cannot handle the elliptical family for two reasons: (1) The transformed Kendall’s tau estimator is not guaranteed to be positive semidefinite. If we directly plug it into the TIGER method, the TIGER formulation becomes nonconvex, and existing algorithms may not obtain a global solution in polynomial time. (2) The theoretical analysis in [25] is only applicable to the Gaussian family. The theoretical properties of the TIGER method for the elliptical family are unclear.

Another closely related method is the rank-based CLIME method for estimating the inverse correlation matrix of the elliptical family [24]. The rank-based CLIME method is based on the formulation in (3) and cannot calibrate the regularization. Furthermore, the rank-based CLIME method can only estimate the inverse correlation matrix. Thus, for applications such as linear discriminant analysis (as demonstrated in §VI), which require the input to be a precision matrix [2], [30], [35], the rank-based CLIME method is not applicable.

Acknowledgments

This work was supported in part by the National Science Foundation under Grant IIS1408910 and Grant IIS1332109 and in part by the National Institutes of Health under Grant R01MH102339, Grant R01GM083084, and Grant R01HG06841.

Biographies

Tuo Zhao received his B.S. and M.S. degrees in Computer Science from Harbin Institute of Technology, and his second M.S. degree in Applied Math from University of Minnesota.

He is currently a Ph.D. Candidate in Department of Computer Science at Johns Hopkins University. He is also a visiting student in Department of Operations Research and Financial Engineering at Princeton University. His research focuses on large-scale semiparametric and nonparametric learning and applications to high throughput genomics and neuroimaging.

Han Liu received a joint Ph.D. degree in Machine Learning and Statistics from the Carnegie Mellon University, Pittsburgh, PA, USA in 2011.

He is currently an Assistant Professor of Statistical Machine Learning in the Department of Operations Research and Financial Engineering at Princeton University, Princeton, NJ. He is also an adjunct Professor in the Department of Biostatistics and Department of Computer Science at Johns Hopkins University. He built and is serving as the principal investigator of the Statistical Machine Learning (SMiLe) lab at Princeton University. His research interests include high dimensional semiparametric inference, statistical optimization, Big Data inferential analysis.

Appendix A Proof of Proposition III.1

Proof: To show the equivalence between (14) and (15), we only need to verify that the optimal solution to (15) satisfies

| (A.1) |

We then prove (A.1) by contradiction. Assuming that there exists some such that

| (A.2) |

(A.2) implies that is also a feasible solution to (15) and

| (A.3) |

(A.3) contradicts with the fact that minimizes (15). Thus (A.1) must hold, and (15) is equivalent to (14).

Appendix B Parametric Simplex Method

We provide a brief description of the parametric simplex method only for self-containedness. More details of the derivation can be found in [34]. We consider the following generic form of linear program,

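$$\max_{x}\; c^Tx \quad \text{subject to} \quad Ax \le b,\;\; x \ge 0, \tag{B.1}$$

where c ∈ ℝm, A ∈ ℝn×m, and b ∈ ℝn. It is well known that (B.1) has a dual formulation as follows,

$$\min_{y}\; b^Ty \quad \text{subject to} \quad A^Ty \ge c,\;\; y \ge 0, \tag{B.2}$$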

where y = (y1, …, yn)T ∈ ℝn are dual variables. The simplex method usually solves either (B.1) or (B.2). It contains two phases: Phase I is to find a feasible initial solution for Phase II; Phase II is an iterative procedure to recover the optimal solution based on the given initial solution.

Different from the simplex method, the parametric simplex method adds some perturbation to (B.1) and (B.2) such that the optimal solutions can be trivially obtained. More specifically, the parametric simplex method solves the following pair of linear programs

$$\max_{x}\; (c + \beta q)^Tx \quad \text{subject to} \quad Ax \le b + \beta p,\;\; x \ge 0, \tag{B.3}$$

$$\min_{y}\; (b + \beta p)^Ty \quad \text{subject to} \quad A^Ty \ge c + \beta q,\;\; y \ge 0, \tag{B.4}$$

where β ≥ 0 is a perturbation parameter, and p ∈ ℝn and q ∈ ℝm are perturbation vectors. When β, p, and q are suitably chosen such that b + βp ≥ 0 and c + βq ≤ 0, x = 0 and y = 0 are the optimal solutions to (B.3) and (B.4) respectively. The parametric simplex method is an iterative procedure, which gradually reduces β to 0 (corresponding to no perturbation) and eventually recovers the optimal solution to (B.1).
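The claim that the perturbed problems are trivially solved can be checked directly. The following is a sketch, assuming the perturbed primal maximizes $(c + \beta q)^Tx$ subject to $Ax \le b + \beta p$, $x \ge 0$, and the perturbed dual minimizes $(b + \beta p)^Ty$ subject to $A^Ty \ge c + \beta q$, $y \ge 0$:

```latex
\begin{aligned}
x = 0 &: \quad A \cdot 0 = 0 \le b + \beta p
      && \text{(primal feasible)}, \\
y = 0 &: \quad A^T \cdot 0 = 0 \ge c + \beta q
      && \text{(dual feasible)}, \\
(c + \beta q)^T 0 &= 0 = (b + \beta p)^T 0
      && \text{(equal objective values)}.
\end{aligned}
```

Since a primal feasible point and a dual feasible point with equal objective values are both optimal by weak duality, no Phase I search for a starting point is needed under this perturbation.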

To derive the iterative procedure, we first add slack variables w = (w1, …, wn)T ∈ ℝn, and rewrite (B.3) as

| (B.5) |

where H = [AI], , and

Since b + βp ≥ 0 and c + βq ≤ 0, is the optimal solution to (B.5). We then divide all variables in into a nonbasic group and a basic group . In particular, belong to the nonbasic group denoted by , and belong to the basic group denoted by . We also divide H into two submatrices and , where contains all columns of H corresponding to , and contains all columns of H corresponding to . We then rewrite the constraint in (B.5) as . Consequently, we obtain the primal dictionary associated with the basic group by

| (B.6) |

| (B.7) |

where , , , , and φP is the objective value of (B.5) at current iteration.

We then add slack variables z = (z1, …, zm)T, and rewrite (B.4) as

| (B.8) |

To make the notation consistent with the primal problem, we define

Similarly we can obtain the dual dictionary associated with the nonbasic variable by

| (B.9) |

| (B.10) |

where , and φD is the objective value of the dual problem at current iteration.

Once we obtain (B.6), (B.7), (B.9), and (B.10), we start to decrease β, and the smallest value of β at current iteration is obtained by

We then swap a pair of basic and nonbasic variables in B and N and update the primal and dual dictionaries such that β can be decreased to β*. See more details on updating the dictionaries in [34]. By repeating the above procedure, we eventually decrease β to 0. The parametric simplex method guarantees the feasibility and optimality for both (B.3) and (B.4) in each iteration, and eventually obtains the optimal solution to the original problem (B.1).
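The step that determines how far β can be decreased is a ratio test. The following Python sketch assumes, as in the presentation of [34], that every basic primal value and every nonbasic dual slack in the current dictionaries is an affine function of β, written here as const[i] + β · slope[i], and that all of them must stay nonnegative. The function name and the toy numbers are hypothetical and are not taken from the paper.

```python
def smallest_beta(const, slope):
    """Smallest beta >= 0 such that const[i] + beta * slope[i] >= 0 for all i.

    Only entries with slope[i] > 0 impose a lower bound on beta. Entries
    with slope[i] <= 0 are assumed to be satisfied already, because the
    current (larger) beta is feasible.

    Returns (beta_star, index of the binding entry, or None if beta_star = 0).
    """
    beta_star, binding = 0.0, None
    for i, (a, d) in enumerate(zip(const, slope)):
        if d > 0:
            bound = -a / d          # a + beta * d >= 0  <=>  beta >= -a / d
            if bound > beta_star:
                beta_star, binding = bound, i
    return beta_star, binding


# Toy dictionary values: entry 2 is the first to hit zero as beta decreases.
beta_star, binding = smallest_beta([-1.0, 2.0, -0.75], [2.0, -1.0, 1.0])
print(beta_star, binding)
```

The binding index identifies the variable that leaves (or enters) the basis in the subsequent pivot. The pivot rule itself is described in [34] and is not sketched here.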

Since the parametric simplex method starts with all-zero solutions, it can recover the optimal solution in only a few iterations when the optimal solution is very sparse. This naturally fits sparse estimation problems such as the EPIC method. Moreover, if we rewrite (16) in the same form as (B.3), we need to set p = (0T, eT, 0)T and start with β = 1. Since c = (−1T, −c)T, we can set q = 0, i.e., we do not need perturbation on c. Thus the computation in each iteration can be further simplified due to the sparsity of p and q.

Remark B.1: For sparse estimation problems, Phase I of the simplex method does not guarantee the sparseness of the initial solution. As a result, Phase II may start with a dense initial solution and only gradually reduce the number of nonzero entries. Thus the simplex method often requires a large number of iterations to converge when the optimal solution is very sparse.

APPENDIX C Smoothed Proximal Gradient Algorithm

We first apply the smoothing approach in [28] to obtain a smooth surrogate of the matrix ℓ1 norm based on the Fenchel dual representation,

| (C.1) |

where η > 0 is a smoothing parameter. (C.1) has a closed form solution as follows,

| (C.2) |

where , and γk is the minimum positive value such that . See [9] for an efficient algorithm to find γk with the average computational complexity of O(d²). As is shown in [28], the surrogate is smooth and convex, and has a gradient of the simple form

Since is obtained by the soft-thresholding in (C.2), G(Ω) is continuous in Ω with Lipschitz constant 1/η. Motivated by these good computational properties, we consider the following optimization problem instead of (18),

| (C.3) |
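The soft-thresholding step behind (C.2) can be illustrated with a small sketch. It assumes that the constraint defining γk is an ℓ1-ball constraint of radius 1, as the citation of [9] suggests, and it uses the simple sort-based projection (O(d log d) per vector) rather than the expected-linear-time variant of [9]. The function name and the test vector are hypothetical.

```python
import math


def project_onto_l1_ball(v, radius=1.0):
    """Euclidean projection of v onto {u : ||u||_1 <= radius}.

    Returns (projection, gamma), where the projection is obtained by
    soft-thresholding v at level gamma. gamma is 0 when v already lies
    in the ball.
    """
    if sum(abs(x) for x in v) <= radius:
        return list(v), 0.0
    u = sorted((abs(x) for x in v), reverse=True)
    cumsum, gamma = 0.0, 0.0
    for k, u_k in enumerate(u, start=1):
        cumsum += u_k
        candidate = (cumsum - radius) / k
        # The condition holds for a prefix of k values; keep the last one.
        if u_k - candidate > 0:
            gamma = candidate
    projected = [math.copysign(max(abs(x) - gamma, 0.0), x) for x in v]
    return projected, gamma


projected, gamma = project_onto_l1_ball([3.0, 1.0, 0.5])
print(projected, gamma)
```

In this toy call only the largest entry survives the thresholding, and the projected vector has ℓ1 norm exactly equal to the radius.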

To solve (C.3), we adopt the accelerated projected gradient algorithm proposed in [27]. More specifically, we define two sequences of auxiliary variables {M(t)} and {W(t)} with M(0) = W(0) = Ω(0), and a sequence of weights {θt = 2/(1+t)}. For the tth iteration, we first calculate the auxiliary variable M(t) as

We then calculate the auxiliary variable W(t) as

where ηt is the step size. We can either choose ηt = η in all iterations or estimate the ηt’s by backtracking line search for better empirical performance [4]. Finally, we calculate Ω(t) as

The next theorem provides the convergence rate of the algorithm with respect to minimizing (18).

Theorem C.1: Given the desired accuracy ε such that , let η = ε/(2d). Then the required number of iterations is at most

Proof: Due to the fact that ||A||F ≤ d||A||∞, a direct consequence of (C.1) is the following uniform bound

Then we consider the following decomposition

where the last inequality comes from the result established in [27],

Thus given dη = ε/2, we only need

| (C.4) |

By solving (C.4), we obtain

Theorem C.1 guarantees that the above algorithm achieves the optimal rate of convergence for minimizing (18) over the class of all first-order computational algorithms.
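The three-sequence update described above can be sketched in a few lines. The code assumes the standard accelerated projected gradient form: M(t) is a convex combination of Ω(t−1) and W(t−1), W(t) is a projected gradient step of length ηt/θt taken at M(t), and Ω(t) is a convex combination of Ω(t−1) and W(t). The exact update equations of the paper are not reproduced here, so this form is an assumption. The toy objective, the box constraint standing in for the feasible set, and all names are hypothetical, and matrices are flattened to plain lists for brevity.

```python
def accelerated_projected_gradient(grad, project, x0, step, num_iters):
    """Accelerated projected gradient with weights theta_t = 2 / (1 + t).

    grad:    gradient of the smooth objective
    project: Euclidean projection onto the feasible set
    step:    step size, at most 1 / (Lipschitz constant of grad)
    """
    x = list(x0)   # plays the role of Omega^(t)
    w = list(x0)   # plays the role of W^(t)
    for t in range(1, num_iters + 1):
        theta = 2.0 / (1.0 + t)
        # Auxiliary point M^(t): convex combination of Omega^(t-1), W^(t-1).
        m = [(1.0 - theta) * xi + theta * wi for xi, wi in zip(x, w)]
        g = grad(m)
        # Projected step on W with the enlarged step size step / theta.
        w = project([wi - (step / theta) * gi for wi, gi in zip(w, g)])
        # New iterate Omega^(t).
        x = [(1.0 - theta) * xi + theta * wi for xi, wi in zip(x, w)]
    return x


# Toy problem: minimize 0.5*(x0^2 + 10*x1^2) - 0.5*x0 - 20*x1 over [0, 1]^2.
# The gradient is Lipschitz with constant 10, so step = 0.1.
def toy_grad(x):
    return [x[0] - 0.5, 10.0 * x[1] - 20.0]


def toy_project(x):
    return [min(max(xi, 0.0), 1.0) for xi in x]


solution = accelerated_projected_gradient(
    toy_grad, toy_project, [0.0, 0.0], step=0.1, num_iters=500
)
print(solution)
```

For this separable toy problem the constrained minimizer is (0.5, 1), so the printed iterate should be close to that point. A constant step corresponds to the choice ηt = η mentioned above, and backtracking line search would replace the fixed `step` argument.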

APPENDIX D Proof of Lemma 1

Proof: [7] shows that there exist universal constants κ3 and κ4 such that

| (D.1) |

| (D.2) |

We then define the following events

Conditioning on , we have

| (D.3) |

Conditioning on and , (D.3) implies

| (D.4) |

(D.4) further implies

| (D.5) |

Conditioning on , (D.5) implies

| (D.6) |

Combining (D.1), (D.2), and (D.6), for small enough ε such that

| (D.7) |

we have

| (D.8) |

By taking the union bound of (D.8), we have

If we take , then (D.7) implies that we need n large enough such that

Taking , we then have

(19) is a direct result in [24]; its proof is therefore omitted.

APPENDIX E Proof of Theorem IV.1

Proof: We first define the following pair of orthogonal subspaces ,

We will use to exploit the sparseness of Γ*j. We then define the following event

Conditioning on , we have

| (E.1) |

Now let τj = ||Γ*j||1, (E.1) implies that (Γ*j, τj) is a feasible solution to (13). Since is the empirical minimizer, we have

| (E.2) |

where the last equality comes from the fact that .

Let be the estimation error, (E.2) implies

| (E.3) |

where (i) comes from the constraint in (13): and (ii) comes from the fact . Combining the fact with (E.3), we have

| (E.4) |

where . (E.4) implies that belongs to the following cone-shaped set

The following lemma characterizes an important property of when holds.

Lemma E.1: Suppose that X ~ EC(μ, ξ, Σ), and (A.1) and hold. Given any , for small enough λ such that , we have

| (E.5) |

The proof of Lemma E.1 is provided in Appendix E.1. Since Δ*j exactly belongs to , we have a simple variant of (E.5) as

| (E.6) |

where the last inequality comes from the fact that has at most s nonzero entries. Since

| (E.7) |

where the last inequality comes from (E.4). Combining (E.6) and (E.7), we have

| (E.8) |

Assuming that , (E.8) implies

| (E.9) |

Combining (E.6) and (E.9), we have

| (E.10) |

Assuming that , (E.10) implies

| (E.11) |

Recall , in order to secure

we need large enough n such that

Combining (E.9) and (E.11), we have

| (E.12) |

Combining (E.4), (E.6), and (E.12), we obtain

| (E.13) |

Combining (E.12) and (E.13), we have

| (E.14) |

By Lemma E.1 again, (E.14) implies

| (E.15) |

Let and . Recall , by definition of the matrix ℓ1 and Frobenius norms, (E.13) and (E.15) imply

| (E.16) |

and

| (E.17) |

Now we start to derive the error bound of obtained by the ensemble rule. We have the following decomposition

| (E.18) |

Moreover, for any A, B, C ∈ ℝd×d, where A and C are diagonal matrices, we have

| (E.19) |

| (E.20) |

Here we define the following event

Thus conditioning on , (E.16), (E.18), and (E.19) imply

| (E.21) |

If (A.4): s² log d/n → 0 holds, then (E.21) is determined by the slowest rate . Thus for large enough n, there exists a universal constant C4 such that

| (E.22) |

Similarly, conditioning on , (E.17), (E.18), (E.20) and the fact imply

| (E.23) |

Again if (A.4) holds, then (E.23) is determined by the slowest rate . Thus for large enough n, there exists a universal constant C2 such that

| (E.24) |

We then proceed to prove the error bound of obtained by the symmetrization procedure (18). Let C1 = 2C4. If we choose the matrix ℓ1 norm as ||·||* in (18), we have

| (E.25) |

where the second inequality comes from the fact that Ω is a feasible solution to (18), and is the empirical minimizer. If we choose the Frobenius norm as ||·||* in (18), using the fact that the Frobenius norm projection is contractive, we have

| (E.26) |

All of the above analysis is conditioned on and . Thus combining Lemma 1 with (E.25) and (E.26), we have

| (E.27) |

| (E.28) |

where p = 1, 2, and (E.27) comes from the fact that ||A||2 ≤ ||A||1 for any symmetric matrix A.
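For completeness, the norm inequality used for (E.27) follows from a standard argument. The derivation below assumes that ||·||1 and ||·||∞ denote the induced matrix norms (maximum absolute column sum and maximum absolute row sum, respectively), and uses the fact that the spectral radius of a matrix is bounded by any induced norm:

```latex
\|A\|_2^2 \;=\; \lambda_{\max}(A^{T}A)
\;\le\; \|A^{T}A\|_1
\;\le\; \|A^{T}\|_1\,\|A\|_1
\;=\; \|A\|_\infty\,\|A\|_1 ,
\qquad
A = A^{T} \;\Longrightarrow\; \|A\|_\infty = \|A\|_1
\;\Longrightarrow\; \|A\|_2 \le \|A\|_1 .
```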

APPENDIX F Proof of Lemma E.1

Proof: Since , we have

| (F.1) |

Since , we have , which implies

| (F.2) |

where the last inequality comes from the fact that there are at most s nonzero entries in . Then combining (F.1) and (F.2), we have

| (F.3) |

Since we have , (F.3) implies

APPENDIX G Proof of Theorem IV.2

Proof: Our following analysis also assumes that holds. Since implies (E.1),

where τj = ||Γ*j||1. Then (Γ*j, τj) is a feasible solution to (13), which implies

| (G.1) |

Moreover, we have

which further implies

| (G.2) |

Combining (G.1) and (G.2), we have

| (G.3) |

where the last inequality comes from

By (G.3), we have

| (G.4) |

Recall , by the definition of the max norm and (G.4), we have

| (G.5) |

where κ7 = κ1(1 + 4c)/c. Since for any A, B, C ∈ ℝd×d, where A and C are diagonal matrices, we have

| (G.6) |

Conditioning on

| (G.7) |

(G.6), (E.18) and the fact ||Γ||max ≤ M imply

| (G.8) |

Again if (A.4): s² log d/n → 0 holds, then (G.8) is determined by the slowest rate . Thus for large enough n, if we choose the max norm as ||·||* in (18), we have

| (G.9) |

where the second inequality comes from the fact that Ω is a feasible solution to (18), and is the empirical minimizer.

Note that the results obtained here only depend on and . Thus by Lemma 1 and (G.9), let C3 = 2κ7, we have

To show the partial consistency in graph estimation , we follow a similar argument to Theorem 4 in [25]. Therefore the proof is omitted.

Footnotes

The implementation of the simplex method is based on the R packages linprog and lpSolve.

The ROC curves from different replications are first aligned by regularization parameters. The averaged ROC curve shows the false positive and true positive rates averaged over all replications with respect to each regularization parameter.

Available at http://archive.ics.uci.edu/ml/datasets.html.

This paper was presented at the 27th Annual Conference on Neural Information Processing Systems in 2013.

Contributor Information

Tuo Zhao, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544 USA, and also with the Department of Computer Science, Johns Hopkins University, Baltimore, MD 21218 USA (tour@cs.jhu.edu).

Han Liu, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544 USA (hanliu@princeton.edu).

REFERENCES

- [1] Banerjee O, El Ghaoui L, d’Aspremont A. Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data. J. Mach. Learn. Res. 2008 Jun;9:485–516.

- [2] Bickel PJ, Levina E. Some theory for Fisher’s linear discriminant function, ‘naive Bayes’, and some alternatives when there are many more variables than observations. Bernoulli. 2004;10(6):989–1010.

- [3] Blei DM, Lafferty JD. A correlated topic model of science. Ann. Appl. Statist. 2007;1(1):17–35.

- [4] Boyd S, Vandenberghe L. Convex Optimization. 2nd ed. Cambridge Univ. Press; Cambridge, U.K.: 2009.

- [5] Cai T, Liu W, Luo X. A constrained ℓ1 minimization approach to sparse precision matrix estimation. J. Amer. Statist. Assoc. 2011;106(494):594–607.

- [6] Cambanis S, Huang S, Simons G. On the theory of elliptically contoured distributions. J. Multivariate Anal. 1981;11(3):368–385.

- [7] Catoni O. Challenging the empirical mean and empirical variance: A deviation study. Ann. Inst. Henri Poincaré Probab. Statist. 2012;48(4):1148–1185.

- [8] Dempster AP. Covariance selection. Biometrics. 1972;28(1):157–175.

- [9] Duchi J, Shalev-Shwartz S, Singer Y, Chandra T. Efficient projections onto the ℓ1-ball for learning in high dimensions. Proc. 25th Int. Conf. Mach. Learn. 2008:272–279.

- [10] Fang K-T, Kotz S, Ng KW. Symmetric Multivariate and Related Distributions. Monographs on Statistics and Applied Probability. Vol. 36. Chapman & Hall; London, U.K.: 1990.

- [11] Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9(3):432–441. doi: 10.1093/biostatistics/kxm045.

- [12] Gautier E, Tsybakov AB. High-dimensional instrumental variables regression and confidence sets. ENSAE ParisTech, Malakoff, France. Tech. Rep. 2011. arxiv.org.

- [13] Gorman RP, Sejnowski TJ. Analysis of hidden units in a layered network trained to classify sonar targets. Neural Netw. 1988;1(1):75–89.

- [14] Gupta AK, Varga T, Bodnar T. Elliptically Contoured Models in Statistics and Portfolio Theory. Springer-Verlag; New York, NY, USA: 2013.

- [15] Honorio J, Ortiz L, Samaras D, Paragios N, Goldstein R. Sparse and locally constant Gaussian graphical models. Advances in Neural Information Processing Systems. Vol. 22. Curran Associates; Red Hook, NY, USA: 2009.

- [16] Hsieh C-J, Dhillon IS, Ravikumar PK, Sustik MA. Sparse inverse covariance matrix estimation using quadratic approximation. Advances in Neural Information Processing Systems. Vol. 24. Curran Associates; Red Hook, NY, USA: 2011. pp. 2330–2338.

- [17] Hult H, Lindskog F. Multivariate extremes, aggregation and dependence in elliptical distributions. Adv. Appl. Probab. 2002;34(3):587–608.

- [18] Kruskal WH. Ordinal measures of association. J. Amer. Statist. Assoc. 1958;53(284):814–861.

- [19] Krzanowski WJ. Principles of Multivariate Analysis. Clarendon; Oxford, U.K.: 2000.

- [20] Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrix estimation. Ann. Statist. 2009;37(6B):4254–4278. doi: 10.1214/09-AOS720.

- [21] Lauritzen SL. Graphical Models. Vol. 17. Oxford Univ. Press; London, U.K.: 1996.

- [22] Li X, Zhao T, Yuan X, Liu H. The flare Package for High-dimensional Sparse Linear Regression in R. J. Mach. Learn. Res. 2014.

- [23] Liu H, Han F, Yuan M, Lafferty J, Wasserman L. High-dimensional semiparametric Gaussian copula graphical models. Ann. Statist. 2012;40(4):2293–2326.

- [24] Liu H, Han F, Zhang C-H. Transelliptical graphical models. Advances in Neural Information Processing Systems. Vol. 25. Curran Associates; Red Hook, NY, USA: 2012.

- [25] Liu H, Wang L. TIGER: A tuning-insensitive approach for optimally estimating Gaussian graphical models. Tech. Rep. Massachusetts Inst. Technol.; Cambridge, MA, USA: 2012.

- [26] Liu H, Wang L, Zhao T. Sparse covariance matrix estimation with eigenvalue constraints. J. Comput. Graph. Statist. 2014;23(2):439–459. doi: 10.1080/10618600.2013.782818.

- [27] Nesterov YE. An approach to constructing optimal methods for minimization of smooth convex functions. Èkonomika i Matematicheskie Metody. 1988;24(3):509–517.

- [28] Nesterov Y. Smooth minimization of non-smooth functions. Math. Program. 2005;103(1):127–152.

- [29] Rothman AJ, Bickel PJ, Levina E, Zhu J. Sparse permutation invariant covariance estimation. Electron. J. Statist. 2008;2:494–515.

- [30] Shao J, Wang Y, Deng X, Wang S. Sparse linear discriminant analysis by thresholding for high dimensional data. Ann. Statist. 2011;39(2):1241–1265.

- [31] Stoer J, Bulirsch R, Bartels R, Gautschi W, Witzgall C. Introduction to Numerical Analysis. Vol. 2. Springer-Verlag; New York, NY, USA: 1993.

- [32] Sun T, Zhang C. Scaled sparse linear regression. Biometrika. 2012;99(4):879.

- [33] Tokuda T, Goodrich B, Van Mechelen I, Gelman A, Tuerlinckx F. Visualizing distributions of covariance matrices. Tech. Rep. Columbia Univ.; New York, NY, USA: 2011.

- [34] Vanderbei RJ. Linear Programming: Foundations and Extensions. Springer-Verlag; New York, NY, USA: 2008.

- [35] Wakaki H. Discriminant analysis under elliptical populations. Hiroshima Math. J. 1994;24(2):257–298.

- [36] Wille A, et al. Sparse graphical Gaussian modeling of the isoprenoid gene network in Arabidopsis thaliana. Genome Biol. 2004;5(11):R92. doi: 10.1186/gb-2004-5-11-r92.

- [37] Yuan M. High dimensional inverse covariance matrix estimation via linear programming. J. Mach. Learn. Res. 2010 Mar;11:2261–2286.

- [38] Yuan M, Lin Y. Model selection and estimation in the Gaussian graphical model. Biometrika. 2007;94(1):19–35.

- [39] Zhao T, Liu H. Sparse inverse covariance estimation with calibration. Advances in Neural Information Processing Systems. Vol. 26. Curran Associates; Red Hook, NY, USA: 2013.

- [40] Zhao T, Liu H, Roeder K, Lafferty J, Wasserman L. The huge package for high-dimensional undirected graph estimation in R. J. Mach. Learn. Res. 2012;13(1):1059–1062.