Abstract

Introduction

Tablet computer-based screening may help detect patients at risk for opioid abuse in the emergency department (ED). Study objectives were a) to determine if the revised Screener and Opioid Assessment for Patients with Pain (SOAPP®-R), a 24-question screening tool for opioid abuse potential previously administered on paper, could be administered on a tablet computer to an ED patient population; b) to demonstrate that >90% of patients can complete the electronic screener without assistance in <5 minutes; and c) to determine patient ease of use with screening on a tablet computer.

Methods

This was a cross-sectional convenience sample study of patients seen in an urban academic ED. SOAPP®-R was programmed on a tablet computer by study investigators. Inclusion criteria were patients ages ≥18 years who were being considered for discharge with a prescription for an opioid analgesic. Exclusion criteria included inability to understand English or physical disability preventing use of the tablet.

Results

Ninety-three patients were approached for inclusion and 82 (88%) provided consent. Fifty-two percent (n=43) of subjects were male; 46% (n=38) of subjects were aged 18–35 years, and 54% (n=44) were >35 years. One hundred percent of subjects completed the screener. Median time to completion was 148 (interquartile range 117.5–184.3) seconds, and 95% (n=78) completed in <5 minutes. Ninety-three percent (n=76) rated ease of completion as very easy.

Conclusions

It is feasible to administer a screening tool to a cohort of ED patients on a tablet computer. The screener administration time is minimal and patient ease of use with this modality is high.

INTRODUCTION

Screening tools to detect undiagnosed mental health and substance use problems have been developed to enable earlier detection of disorders and thus earlier care.1 Multiple tools exist for this purpose, including the Patient Health Questionnaire (PHQ-9) for depression, the Alcohol Use Disorders Identification Test (AUDIT) and the Drug Abuse Screening Test (DAST).2–4 These tools are an important first step in the process of SBIRT (screening, brief intervention and referral to treatment).5 Using such screening tools in the emergency department (ED) can be powerful, particularly at the time of exacerbation of disease.6,7

Screening patients may be time consuming and costly, and can require staff resources that do not exist.8 Computerized screening may be a solution to this dilemma.9 It requires minimal staff time, scores are calculated without error and, with the growing number of available products and the expanded use of tablet computers over the past several years, patients are becoming comfortable interacting with the technology. Given these factors and the evolution of tablet computers, which are now lighter and less expensive and have longer battery life,10 screening ED patients with tablet computers may be an attractive option.

In this study, we used an electronic tablet version of a screener for opioid prescription abuse potential. Opioid prescription abuse in the United States has increased exponentially over the past decade.11 Deaths from drug overdose have surpassed deaths from motor vehicle accidents, and the problem has been described as an epidemic,12,13 elevating screening for opioid abuse potential to great importance.

The screening tool we chose for our ED population is the Revised Screener and Opioid Assessment for Patients with Pain (SOAPP®-R).14 This proprietary screening measure, developed by Inflexxion, Inc. as part of a NIDA-funded Small Business Innovation Research (SBIR) grant, was validated in pain clinic patients and is also commonly used in primary care practices. The Centers for Disease Control and Prevention has concluded: “Health-care providers should only use opioid pain relievers in carefully screened and monitored patients when non-opioid pain reliever treatments are insufficient to manage pain.”11 Despite the fact that up to 42% of ED visits are for painful conditions15 and that emergency physicians commonly prescribe opioids, screening tools like this are not commonly used in the ED setting.

Our study had the following objectives: a) to determine if this screening tool could be administered on a tablet computer in an ED patient population; b) to demonstrate that >90% of patients can complete the screener without assistance in <5 minutes; and c) to determine patient perception of ease of use with screening on a tablet computer.

MATERIAL AND METHODS

Study Location

This was a cross-sectional, prospective, convenience sample study of patients seen at a single urban academic Level I trauma center with approximately 42,000 annual visits. The protocol was approved as exempt by our hospital’s institutional review board. Patient consent was determined by the patient indicating willingness to continue on the welcome screen of the tablet computer program.

Programming the Tablet Screener

SOAPP®-R was administered on a generic seven-inch tablet running the Android operating system (PC709 Android 4.0 Tablet, dimensions 7×5×0.25 inches). Permission to use the SOAPP®-R instrument in electronic format for this study was granted by its copyright holder (Inflexxion, Inc., Newton, MA). The tablet was programmed using the “App Inventor” programming language.16 In addition to the screening tool, basic demographic questions and a final question asking satisfaction/ease of use with the tablet screener were included.

Patients

Included individuals were patients ages ≥18 years who were being considered for discharge with an opioid analgesic by the attending emergency physician. Exclusion criteria were the following: inability to understand English; physical disability preventing use of the tablet; an opioid prescribed for an indication other than acute or chronic pain (e.g., codeine given for cough suppression, or buprenorphine or methadone given as maintenance therapy in a drug treatment program); dementia or other mental impairment; or status as a prisoner.

Intervention

Patients were identified either when physicians informed the research assistant that a patient was being discharged with an opioid analgesic, or when the research assistant saw on the electronic charting system (Medhost EDIS, Medhost, Inc., Plano TX) that the patient was being discharged with such a prescription. The trained research assistant approached the patient, briefly described the study, and handed the patient the tablet with the survey program open. Consent was acknowledged on the tablet, and a welcome screen informed patients that their responses would not be shared with their treating clinicians and thus would not affect the medications prescribed to them. Although the researcher was present at all times, patients were required to complete the screener without assistance. The researcher was also unaware of the patients’ screening results, which were stored only on the tablet for later analysis and not reported at the time of screening. The internet functionality of the tablet was disabled to prevent possible breach of data, and the tablet was stored in a locked safe at the clinical site when not in use. Data were exported to a computer in a locked office on a weekly basis during the study.

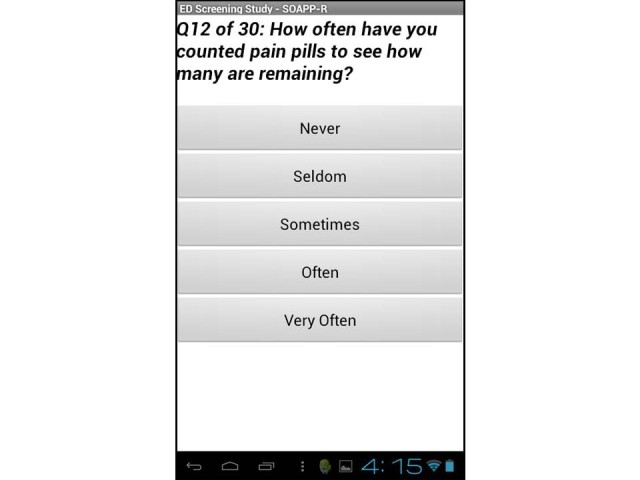

The SOAPP®-R is a 24-question screening tool. Each question consists of a stem followed by five response options, each with an associated number of points: never (0 points), seldom (1 point), sometimes (2 points), often (3 points), and very often (4 points). The range of total points possible is therefore 0–96. A positive score on the screener, which has been identified as predicting aberrant medication-related behavior within six months after initial testing, is 18 points or higher. This cutoff was determined to have a sensitivity of 81% for detecting high-risk patients.14 The tool was originally designed to be administered on paper and completed in less than 10 minutes (600 seconds). A screenshot of the tablet version is shown in the Figure.

Figure.

Sample screenshot of the electronic screening tool.
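The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's App Inventor program: the function name `score_soapp_r` and the use of response labels as input are hypothetical, and only the point values, the 24-item length, and the cutoff of 18 points are taken from the text.

```python
# Point values, item count, and cutoff as described in the text.
# All identifiers here are illustrative, not part of the study software.
POINTS = {"never": 0, "seldom": 1, "sometimes": 2, "often": 3, "very often": 4}
NUM_ITEMS = 24
AT_RISK_CUTOFF = 18


def score_soapp_r(responses):
    """Return (total_points, at_risk) for a list of 24 response labels."""
    if len(responses) != NUM_ITEMS:
        raise ValueError(f"expected {NUM_ITEMS} responses, got {len(responses)}")
    # An unrecognized label raises KeyError rather than being scored silently.
    total = sum(POINTS[label] for label in responses)
    return total, total >= AT_RISK_CUTOFF
```

For example, a patient answering "seldom" to 18 questions and "never" to the remaining six would total 18 points and be classified as at risk, whereas 17 "seldom" responses would fall just below the cutoff.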

Outcome Measures

The three outcome measures were a) whether the SOAPP®-R could be administered on a tablet computer to an ED patient population, as determined by survey completion rate; b) whether the vast majority of patients could complete the electronic screener without assistance in <5 minutes (an arbitrary cutoff we thought would be most reasonable for patients and clinicians); and c) patient ease of use with screening on a tablet computer, as determined by a survey question built into the tablet application asking patients to describe their experience as one of five choices: very easy, somewhat easy, neutral, somewhat difficult, or very difficult.

THEORY/CALCULATION

Power Calculation

Our sample size was based on calculations for a companion study comparing SOAPP®-R scores with prescription drug monitoring data. We estimated that 30% (±10%) of patients who completed SOAPP®-R would score as “at-risk” (score ≥18). The necessary sample size to obtain that margin of error with a 95% confidence interval (CI) was determined to be 81 patients. This estimate was based on a prior study at our site showing that 33.1% of patients had evidence of aberrant drug-related behavior (≥4 opioid prescriptions and ≥4 providers in a 12-month period) on the state prescription drug monitoring program database.17 We consider this number of patients also sufficient for gathering adequate pilot data for this study.
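The figure of 81 patients can be reproduced with a short calculation. The sketch below assumes the usual normal-approximation formula for estimating a single proportion, n = z²·p(1−p)/e²; the text does not name the formula that was used, so this is an assumption, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(p, margin, confidence=0.95):
    """Smallest n giving the requested margin of error for a proportion.

    Assumes the normal approximation n = z^2 * p * (1 - p) / margin^2.
    """
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# Expected at-risk proportion of 30% with a margin of error of 10 points.
n_required = sample_size_for_proportion(0.30, 0.10)
print(n_required)  # 81
```

Under this assumption the unrounded result is about 80.7, which rounds up to the 81 patients reported.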

Statistical Analysis

We exported data from the tablet to a desktop computer and imported the data into statistical analysis software. There was no manual transfer of data required, so risk of data loss was negligible. Descriptive statistics were generated. We calculated mean, standard deviation, median, and minimum and maximum values for all continuous variables. Frequencies and percentages were calculated for all categorical variables. We analyzed all data with JMP v8.0 (SAS Institute, Inc., Cary, NC).

RESULTS

Patients

Between May and August 2013, 93 patients were approached for inclusion, and 82 (88%) provided consent. Patient characteristics are shown in the Table.

Table.

Characteristics of included patients in tablet computer-based screening for possible risk for opioid abuse.

| Characteristics | n (%) |
|---|---|
| Age (years) | |
| 18–25 | 19 (23.2%) |
| 26–35 | 16 (19.5%) |
| 36–45 | 19 (23.2%) |
| 46–55 | 23 (28.0%) |
| ≥56 | 5 (6.1%) |
| Race | |
| White | 51 (62.2%) |
| Black | 21 (25.6%) |
| Asian | 2 (2.4%) |
| Other/declined to answer | 8 (9.8%) |
| Ethnicity | |
| Latino | 10 (12.2%) |
| Not Latino | 72 (87.8%) |

Outcome Measures

All subjects (100%) completed the tablet screener without assistance, answering all of the questions. Time to completion was not normally distributed. The median time to completion of the 24 questions on the SOAPP®-R was 148.0 seconds (interquartile range 117.5–185.3). Seventy-eight of 82 patients (95.1%) were able to complete the screener in <300 seconds (5 minutes). The mean SOAPP®-R score was 16.0 (95% CI 13.2–18.8). Approximately one third (32.9%, n=27) of patients had a SOAPP®-R score ≥18, indicating that they were “at risk” for aberrant behavior.

Patients rated ease of completion as very easy (93%, n=76), somewhat easy (1%, n=1), neutral (5%, n=4), or somewhat difficult (1%, n=1). The tablet operated without malfunction throughout the study.
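The percentages reported in this section follow directly from the counts and the 82 enrolled patients. As a brief arithmetic check, the sketch below recomputes them; the variable and function names are illustrative only, and the counts are those stated in the text.

```python
N_ENROLLED = 82  # patients who provided consent and completed the screener


def pct(count, total=N_ENROLLED, digits=1):
    """Percentage of `total` represented by `count`, rounded to `digits`."""
    return round(100 * count / total, digits)


completed_under_5_min = pct(78)  # 95.1
at_risk = pct(27)                # 32.9

# Ease-of-use counts as reported in the text.
ease_counts = {
    "very easy": 76,
    "somewhat easy": 1,
    "neutral": 4,
    "somewhat difficult": 1,
}
ease_pct = {label: pct(n, digits=0) for label, n in ease_counts.items()}

# Objective b) asked for more than 90% completing in under 5 minutes.
meets_objective_b = completed_under_5_min > 90
```

The four ease-of-use counts sum to 82, so every enrolled patient is accounted for by the categories listed.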

DISCUSSION

This study demonstrated that a screening tool for opioid abuse potential can be administered electronically to an ED patient population. Our research joins multiple prior studies in various clinical settings demonstrating the applicability and feasibility of electronic screening. Early studies of computerized screening in healthcare settings were performed before the introduction of tablet computers, and focused mainly on the fidelity between paper and electronic versions of the screener. For example, Olajos-Clow et al. studied patients completing the Mini Asthma Quality of Life questionnaire.18 Patients were randomized to either a paper or a computerized version. The researchers found that there was good agreement between the two methods and that the electronic version was preferred by most participants. Similar findings were present in other crossover comparison studies of electronic versus original paper versions.19–22

Other studies have looked at technology-based screening specifically in the ED patient population. Cotter et al. used a tablet computer to survey adolescents and young adults about their energy drink and caffeinated beverage use.23 Ewing et al. used the computerized alcohol screening and intervention (CASI) system to screen over 1,000 trauma patients for alcohol use with the aforementioned AUDIT tool in electronic format.24 Although not for screening purposes, an interactive computerized history-taking program has also been successfully used to augment history information at triage without delaying patient care.25

In a large study, Ranney et al. interviewed 664 ED patients about their use of technology.26 The study found that baseline use of computers and mobile phones was high (>90%) in their patient population, although the methodology oversampled adolescents/young adults, and mean patient age was 31 years. Patients were concerned about their confidentiality with regard to the internet and social media, but were interested in technology-based behavioral health interventions.

All of these studies confirm that patients can interact with the technology. That said, one of our concerns at the outset of this research was the truthfulness of patients. It would be easy to simply select the same answer for each question or not answer honestly. One of the earliest studies to evaluate this problem was by Lucas et al. in 1977.27 Using a primitive computer system, the investigators determined that patients being screened for alcohol consumption reported significantly greater amounts of alcohol use to the computer than they reported to psychiatrists asking the same question. Our results, demonstrating that 32.9% of patients had a score of 18 points or higher (“at-risk”) on the SOAPP®-R screener, suggest that patients were most likely being truthful and are remarkably consistent with our prior research indicating that 33.1% of patients with back pain, headache or dental pain exhibited aberrant medication use behavior.17 It must be emphasized that patients were told that the results were not going to be shared with their treating clinician. Had the results been shared, responses might have differed. Future dedicated research on the accuracy of the screener must be done before any conclusions can be made about this aspect of the screening tool. Furthermore, it is not known what steps emergency clinicians would take after they learn about a positive screening result for one of their patients.

There are also studies describing the downsides of such technology. For example, while initial reports of diagnostic computer kiosks were positive, Ackerman and colleagues described the failure of kiosks in their EDs and concluded that there are context-related factors involved in implementation of information technology projects into complex medical settings.28 The study serves as a warning that what is feasible in one hospital may not work in others.

There are important factors to consider with self-programming of a tablet screener, such as possible copyright infringement if permission to use a commercial screener is not obtained, issues of collection and protection of protected health information (especially when dealing with sensitive issues such as substance abuse histories and other highly confidential patient data), and eventual integration into an electronic medical record. The developers of the SOAPP®-R at Inflexxion do offer a commercially available tablet version (the Pain Assessment Interview Network–Clinical Advisory System, or “PainCAS”).

This study supports three concepts. The first is that, with graphics-based programming languages like App Inventor, it is now possible for clinicians with minimal prior programming experience to create programs that can be used in the clinical setting, rendering development and implementation costs minimal. The second is that patients are able to interact with the technology of tablet computers in the ED setting, find them easy to use and appear to respond truthfully to the questions asked on a screener. The third concept is that, because it is electronic, there is little chance of data loss and exact times to completion of the survey can be recorded. Our app recorded the exact time taken from the first question of SOAPP®-R appearing on the screen to answering the last question, allowing for a precise measurement of time that did not rely on a researcher.

LIMITATIONS

As this was a convenience sample, selection bias may have been present. The study was conducted only when research staff were available to enroll, so only a small percentage of potentially eligible subjects was enrolled. We only included patients who were fluent in English and might have therefore excluded at-risk minority populations. Furthermore, because this is a single-center study in an urban environment, the results may not be externally applicable to other patient populations. Specifically, we do not know if our patient population has more experience using tablet computers than others. Only 6.1% of our patients were aged 56 or older, so it is not possible to comment on the use of the tablet computer in the elderly population. Although about one-third of patients had an “at-risk” SOAPP®-R score, it is possible that patients were not truthful in their responses. Alternatively, because patients knew that the results would not be reported to their treating clinician, they may have been honest when they would not have been if they feared that their answers would prevent them from receiving an opioid pain reliever.

The configuration of the tablet response buttons (vertical layout) differs from that of the paper version (horizontal layout) and may have predisposed patients toward simply selecting the top answers (i.e., never or seldom), which could result in our study underestimating the true prevalence of “at-risk” SOAPP®-R scores. We did not compare paper and computerized versions of the screener, which may have indicated advantages of one modality over the other.

CONCLUSION

Our study demonstrates that it is feasible to program a tablet-based screening tool for opioid abuse potential and administer it in a time-efficient fashion to a cohort of ED patients. Patients rated the screening tool as easy to use. All enrolled patients were able to complete the tool without assistance, and required no additional staff resources for screening. The efficient completion time and patient-reported ease of completion support the conclusion that tablet computers may be used to screen ED patients.

Footnotes

Supervising Section Editor: Sanjay Arora, MD

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources and financial or management relationships that could be perceived as potential sources of bias. Dr. Stephen Butler of Inflexxion, Inc., who is a co-creator of SOAPP-R, and Dr. Traci Green, who works for this company, provided input on study design and helped edit the manuscript prior to submission. However, they did not contribute to the running of the study or data analysis. Dr. Scott Weiner, the principal investigator of the study, purchased the tablet and programmed the survey. The company did not provide funding for the study, tablet or programming.

REFERENCES

- 1. Substance Abuse and Mental Health Services Administration. Screening tools. [Accessed Oct 15, 2014]. Available at: http://www.integration.samhsa.gov/clinical-practice/screening-tools.

- 2. Spitzer RL, Kroenke K, Williams JB. Validation and utility of a self-report version of the PRIME-MD: the PHQ primary care study. Primary care evaluation of mental disorders. Patient health questionnaire. JAMA. 1999;282(18):1737–1744. doi: 10.1001/jama.282.18.1737.

- 3. Saunders JB, Aasland OG, Babor TF, et al. Development of the alcohol use disorders identification test (AUDIT): WHO collaborative project on early detection of persons with harmful alcohol consumption--II. Addiction. 1993;88(6):791–804. doi: 10.1111/j.1360-0443.1993.tb02093.x.

- 4. Gavin DR, Ross HE, Skinner HA. Diagnostic validity of the drug abuse screening test in the assessment of DSM-III drug disorders. Br J Addict. 1989;84(3):301–7. doi: 10.1111/j.1360-0443.1989.tb03463.x.

- 5. Bernstein SL, D’Onofrio G. A promising approach for emergency departments to care for patients with substance use and behavioral disorders. Health Aff (Millwood). 2013;32(12):2122–8. doi: 10.1377/hlthaff.2013.0664.

- 6. Bernstein SL. The clinical impact of health behaviors on emergency department visits. Acad Emerg Med. 2009;16(11):1054–9. doi: 10.1111/j.1553-2712.2009.00564.x.

- 7. Babcock Irvin C, Wyer PC, Gerson LW. Preventive care in the emergency department, Part II: Clinical preventive services--an emergency medicine evidence-based review. Society for Academic Emergency Medicine Public Health and Education Task Force Preventive Services Work Group. Acad Emerg Med. 2000;7(9):1042–54. doi: 10.1111/j.1553-2712.2000.tb02098.x.

- 8. Delgado MK, Acosta CD, Ginde AA, et al. National survey of preventive health services in US emergency departments. Ann Emerg Med. 2011;57(2):104–108. doi: 10.1016/j.annemergmed.2010.07.015.

- 9. Revere D, Dunbar PJ. Review of computer-generated outpatient health behavior interventions: clinical encounters “in absentia”. J Am Med Inform Assoc. 2001;8(1):62–79. doi: 10.1136/jamia.2001.0080062.

- 10. Berger E. The iPad: gadget or medical godsend? Ann Emerg Med. 2010;56(1):A21–2. doi: 10.1016/j.annemergmed.2010.05.010.

- 11. Centers for Disease Control and Prevention (CDC). Vital signs: overdoses of prescription opioid pain relievers---United States, 1999--2008. MMWR Morb Mortal Wkly Rep. 2011;60(43):1487–92.

- 12. Centers for Disease Control and Prevention (CDC). CDC grand rounds: prescription drug overdoses - a U.S. epidemic. MMWR Morb Mortal Wkly Rep. 2012;61(1):10–3.

- 13. Manchikanti L, Helm S 2nd, Fellows B, et al. Opioid epidemic in the United States. Pain Physician. 2012;15(3 Suppl):ES9–38.

- 14. Butler SF, Fernandez K, Benoit C, et al. Validation of the revised Screener and Opioid Assessment for Patients with Pain (SOAPP®-R). J Pain. 2008;9(4):360–72. doi: 10.1016/j.jpain.2007.11.014.

- 15. Pletcher MJ, Kertesz SG, Kohn MA, et al. Trends in opioid prescribing by race/ethnicity for patients seeking care in US emergency departments. JAMA. 2008;299(1):70–8. doi: 10.1001/jama.2007.64.

- 16. Wikipedia. App Inventor for Android. [Accessed Oct 15, 2014]. Available at: http://en.wikipedia.org/wiki/App_Inventor_for_Android.

- 17. Weiner SG, Griggs CA, Mitchell PM, et al. Clinician impression versus prescription drug monitoring program criteria in the assessment of drug-seeking behavior in the emergency department. Ann Emerg Med. 2013;62(4):281–9. doi: 10.1016/j.annemergmed.2013.05.025.

- 18. Olajos-Clow J, Minard J, Szpiro K, et al. Validation of an electronic version of the Mini Asthma Quality of Life Questionnaire. Respir Med. 2010;104(5):658–67. doi: 10.1016/j.rmed.2009.11.017.

- 19. Cook AJ, Roberts DA, Henderson MD, et al. Electronic pain questionnaires: a randomized, crossover comparison with paper questionnaires for chronic pain assessment. Pain. 2004;110(1–2):310–7. doi: 10.1016/j.pain.2004.04.012.

- 20. Larsson BW. Touch-screen versus paper-and-pen questionnaires: effects on patients’ evaluations of quality of care. Int J Health Care Qual Assur Inc Leadersh Health Serv. 2006;19(4–5):328–38. doi: 10.1108/09526860610671382.

- 21. VanDenKerkhof EG, Goldstein DH, Blaine WC, et al. A comparison of paper with electronic patient-completed questionnaires in a preoperative clinic. Anesth Analg. 2005;101(4):1075–80. doi: 10.1213/01.ane.0000168449.32159.7b.

- 22. Frennered K, Hägg O, Wessberg P. Validity of a computer touch-screen questionnaire system in back patients. Spine (Phila Pa 1976). 2010;35(6):697–703. doi: 10.1097/BRS.0b013e3181b43a20.

- 23. Cotter BV, Jackson DA, Merchant RC, et al. Energy drink and other substance use among adolescent and young adult emergency department patients. Pediatr Emerg Care. 2013;29(10):1091–7. doi: 10.1097/PEC.0b013e3182a6403d.

- 24. Ewing T, Barrios C, Lau C, et al. Predictors of hazardous drinking behavior in 1,340 adult trauma patients: a computerized alcohol screening and intervention study. J Am Coll Surg. 2012;215(4):489–95. doi: 10.1016/j.jamcollsurg.2012.05.010.

- 25. Benaroia M, Elinson R, Zarnke K. Patient-directed intelligent and interactive computer medical history-gathering systems: a utility and feasibility study in the emergency department. Int J Med Inform. 2007;76(4):283–8. doi: 10.1016/j.ijmedinf.2006.01.006.

- 26. Ranney ML, Choo EK, Wang Y, et al. Emergency department patients’ preferences for technology-based behavioral interventions. Ann Emerg Med. 2012;60(2):218–27. doi: 10.1016/j.annemergmed.2012.02.026.

- 27. Lucas RW, Mullin PJ, Luna CB, et al. Psychiatrists and a computer as interrogators of patients with alcohol-related illnesses: a comparison. Br J Psychiatry. 1977;131:160–7. doi: 10.1192/bjp.131.2.160.

- 28. Ackerman SL, Tebb K, Stein JC, et al. Benefit or burden? A sociotechnical analysis of diagnostic computer kiosks in four California hospital emergency departments. Soc Sci Med. 2012;75(12):2378–85. doi: 10.1016/j.socscimed.2012.09.013.