Abstract

Observing touch has been reported to elicit activation in human primary and secondary somatosensory cortices and is suggested to underlie our ability to interpret others' behavior and potentially empathy. However, despite these reports, there are a large number of inconsistencies in terms of the precise topography of activation, the extent of hemispheric lateralization, and what aspects of the stimulus are necessary to drive responses. To address these issues, we investigated the localization and functional properties of regions responsive to observed touch in a large group of participants (n = 40). Surprisingly, even with a lenient contrast of hand brushing versus brushing alone, we did not find any selective activation for observed touch in the hand regions of somatosensory cortex but rather in superior and inferior portions of neighboring posterior parietal cortex, predominantly in the left hemisphere. These regions in the posterior parietal cortex required the presence of both brush and hand to elicit strong responses and showed some selectivity for the form of the object or agent of touch. Furthermore, the inferior parietal region showed nonspecific tactile and motor responses, suggesting some similarity to area PFG in the monkey. Collectively, our findings challenge the automatic engagement of somatosensory cortex when observing touch, suggest mislocalization in previous studies, and instead highlight the role of posterior parietal cortex.

Keywords: action, empathy, hands, human fMRI, touch, vision

Introduction

It has been reported frequently that human somatosensory cortices are automatically engaged during observation of touch. For example, viewing a leg being brushed was reported to elicit activation in the secondary somatosensory cortex (SII; Keysers et al., 2004). Similarly, seeing a face being touched was reported to elicit activation in the primary somatosensory cortex (SI; Blakemore et al., 2005). Recent studies focusing on hands report that observing a hand being touched elicits activation in the hand representation of SI (Schaefer et al., 2009, 2013; Kuehn et al., 2013, 2014) but only for intentional, not accidental, touch (Ebisch et al., 2008, 2011). That the neural systems involved in processing somatosensory signals originating in our own bodies might also be recruited when observing others has been taken to suggest a key role for the somatosensory cortex in empathy and social perception (Keysers and Gazzola, 2009, 2014).

Despite the apparent consensus that the somatosensory cortex is engaged during observed touch, many inconsistencies and open questions remain. First, there is inconsistency over whether responses are primarily observed in SI (Blakemore et al., 2005; Schaefer et al., 2009, 2012, 2013; Kuehn et al., 2013, 2014), SII (Keysers et al., 2004; Keysers and Gazzola, 2009), or both (Ebisch et al., 2008), and when responses are observed in SI, there are conflicting reports over whether those activations include the first cortical somatosensory area, BA3 (Kuehn et al., 2013, 2014 vs Schaefer et al., 2009). Furthermore, there is confusion over whether there is any hemispheric lateralization of responses, with some studies reporting primarily left hemisphere (Keysers et al., 2004), some right hemisphere (Blakemore et al., 2005), some bilateral (Meyer et al., 2011), and some in which task responses make it difficult to reliably assess any lateralization (Schaefer et al., 2009, 2012; Kuehn et al., 2013, 2014). Second, what aspects of the visual stimulus are necessary and sufficient to drive responses is unclear. For example, it has been reported that observing touch of an object is as effective as touch of a person (Ebisch et al., 2008, 2011; Keysers and Gazzola, 2014). Finally, beyond the somatosensory cortex, the broader topography of activation across the parietal cortex is also unclear. Specifically, it remains unknown how the selective activations for observed touch compare with those for observed action and with visual–tactile responses in the posterior parietal cortex. Answers to these questions are critical to understanding the broader circuits engaged during observation of touch and action and the potential role of the somatosensory cortex in social cognition.

To reconcile these inconsistencies, we conducted a detailed mapping of the topography and functional selectivity to observed touch in 40 participants. Despite the power of our design, we were unable to find selective activation for observed touch in the hand regions of either SI or SII. Instead, we observed two distinct peaks of response in the posterior parietal cortex with a strong hemispheric bias to the left. Collectively, our results argue against the automatic engagement of the somatosensory cortex during the observation of touch and question the putative role of this cortex in empathy and social cognition.

Materials and Methods

We investigated responses to observed touch in four main experiments. The first experiment focused on identifying the topography of activation, whereas the three subsequent experiments focused on characterizing the selectivity of the regions identified.

Participants

Forty-two healthy right-handed volunteers participated in this fMRI study (aged 22–41 years, 23 females); two participants were excluded because of excessive head motion. All participants completed Experiment 1, and 14 participants were recruited in each of Experiments 2–4. All participants had normal or corrected-to-normal vision and gave written informed consent. Both consent and protocol were approved by the National Institutes of Health Institutional Review Board.

Experiment 1

In this experiment, we used four different localizers to examine the topography of activation to observed touch (Table 1).

Table 1.

A list of localizers performed in Experiment 1 and a summary of conditions in each localizer

| Localizer | Conditions |
|---|---|
| Observed touch | Brush right hand |
| | Brush left hand |
| | Brush only (brush on left side) |
| | Brush only (brush on right side) |
| Motor | Lip pursing |
| | Wiggle left index finger |
| | Wiggle right index finger |
| Tactile | Brush left index finger |
| | Brush right index finger |
| Observed reach | Hand reaching to object, left visual field |
| | Hand reaching to object, right visual field |
| | Scrambled video, left visual field |
| | Scrambled video, right visual field |

Localizing selectivity for observed touch.

Our blocked-design localizer for observed touch comprised four conditions: (1) brush hand (left); (2) brush hand (right); (3) brush only (left side of fixation); and (4) brush only (right side of fixation). Each run contained 16 blocks, and each block comprised five different clips selected randomly from four hand identities, lasting for a total of 14 s. Each block was followed by a fixation period (6 s), and an additional 16 s fixation block was presented at the beginning and end of the run. In total, each run lasted for 6 min 16 s, and there were two separate runs. A fixation cross was presented in the center of the screen throughout the run. Importantly, participants were required to maintain fixation during the scan and to keep their hands still on their laps, so that responses to the movie clips were not confounded by responses from any hand movements.

Motor localizer.

A blocked-design localizer was used to identify somatomotor regions (n = 40). Participants were prompted by simple instructions in the center of the screen (“left finger,” “right finger,” “lips”) that flashed at a rate of 1 Hz. Participants were asked to either gently wiggle their left or right index finger or purse their lips in time with the visual cue. There were 18 blocks within each run, with each condition occurring six times in a counterbalanced order. Each block lasted for 10 s, followed by a 6 s fixation period. Furthermore, every three blocks, this fixation period was extended to 16 s to help minimize physical fatigue. In addition, a 16 s fixation block was added to the beginning and end of the run. One run of the motor localizer was presented, lasting 5 min 42 s. Participants were instructed to maintain fixation and keep their body still during the rest period.

Tactile localizer.

A blocked-design localizer with two conditions was used to identify cortical regions selective during light tactile stimulation. Participants were instructed to keep their eyes closed during this scan, so that somatosensory responses were not confounded by any potential vicarious visual activation. An experimenter present in the scan room delivered brush strokes on the participant's left or right index finger at 2 Hz, in time with visual cues projected on the screen. Thus, we stimulated the same fingers as seen in the observed touch localizer. A single run of the tactile localizer was presented, lasting 4 min 42 s. This run was always presented at the very end of the scan session so that activations in the observed touch runs would not be primed by the tactile responses.

Reach-selective localizer.

Movie stimuli from Shmuelof and Zohary (2006) were used here (kindly provided by Dr. Lior Shmuelof, Ben-Gurion University of the Negev, Zlotowski Center for Neuroscience, Beer-Sheva, Israel). One run of the reach-selective localizer was presented, comprising six conditions showing a left or right hand (horizontally flipped images of the left hand) reaching to one of 15 man-made graspable objects and scrambled versions of these same clips, all presented peripherally on the left or right side of the screen (6.5° from the central fixation cross). Each run contained 16 blocks, each block lasting 12 s interleaved with an 8 s fixation period for a total run time of 8 min 50 s. A fixation cross was present in the center of the screen throughout the scan, and participants were instructed to maintain fixation throughout.

Experiments 2–4.

In all three experiments, nine types of movie clips were presented in an event-related manner. Each event comprised a 2 s movie clip plus a 2 s blank screen with a central fixation cross, such that each event lasted for 4 s. All movie clips subtended 5° of visual angle and showed stroking or motion at the rate of 2 Hz. Within each run, there were 126 movie events in total, with 36 jittered fixations varying between 2 and 10 s. All events were counterbalanced using optseq2 from the Freesurfer software (http://surfer.nmr.mgh.harvard.edu/). There were four repetitions per condition. Each run lasted for 7 min and 12 s. In each experiment, there were six event-related runs for each participant, and each run contained a different sequence of events. Participants performed an oddball task in which they were asked to detect a flower (2 s plus 2 s fixation period) and to respond with their left foot using an in-house designed MRI-safe foot paddle. A foot response was used instead of a hand response because hand responses can potentially lead to confounding activation in the hand tactile/motor regions (SI and SII; Schaefer et al., 2012; Kuehn et al., 2013). Responses during the oddball task were later regressed out by the GLM procedure.

Experiment 2.

To investigate the effect of hand (left vs right) and whether seeing brushing on hand is necessary, nine types of movie clip were presented in this event-related experiment. The conditions include brush only, hand only, brush near, and brush hand, either from a left or right hand set, as well as the response condition, a flower. All movie clips contain either a left or a right hand from four hand identities (two males and two females), except the brush-only conditions, which showed a brush stroking either the left side or the right side of the background, matching the location in the hand conditions.

Experiment 3.

To examine the effect of viewpoint, all movie clips (brush only, hand only, brush near, and brush hand) were presented in an allocentric view or an egocentric view (flipped images of the allocentric clips), again from the same four hand identities as Experiment 2.

Experiment 4.

To investigate form and motion specificity, nine types of movie clips were presented. For form specificity, five conditions were presented showing a brush stroking a hand or one of a variety of hand-like stimuli: (1) a real hand; (2) a rubber hand; (3) a paper hand; (4) a foot; and (5) a pair of scissors. For motion specificity, three conditions were used: (1) laser beam “stroking” on hand; (2) index finger tapping; and (3) brush only. All body-like stimuli were from the right side of the body, and the brushing location was always constant across all conditions.

Imaging acquisition

Participants were scanned on a research dedicated GE 3 Tesla Signa scanner (GE Healthcare) located in the Clinical Research Center on the National Institutes of Health campus in Bethesda, Maryland. Whole-brain volumes were acquired using an eight-channel head coil (30 slices; 64 × 64 matrix; FOV, 200 × 200 mm; in-plane resolution, 3.125 × 3.125 mm; slice thickness, 4 mm; 0.4 mm interslice gap; TR, 2 s; TE, 30 ms). For each participant, a high-resolution anatomical scan was also acquired. All functional localizer and event-related runs were interleaved.
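As a quick consistency check on the acquisition parameters above, the in-plane voxel size follows from the field of view divided by the matrix size. The short sketch below reproduces that arithmetic. The slice-direction coverage it also computes is an assumption for illustration (it supposes the 0.4 mm gap sits between each pair of adjacent slices) and is not a figure reported in the text.

```python
# Acquisition parameters as reported in the text.
fov_mm = 200.0            # field of view along one in-plane axis
matrix_size = 64          # number of voxels along that axis
slice_thickness_mm = 4.0
slice_gap_mm = 0.4
n_slices = 30

# In-plane voxel size is the field of view divided by the matrix size.
in_plane_mm = fov_mm / matrix_size

# Assumption (not stated in the text): one gap between each pair of
# adjacent slices, so there are n_slices - 1 gaps in total.
coverage_mm = n_slices * slice_thickness_mm + (n_slices - 1) * slice_gap_mm

print(in_plane_mm)              # 3.125
print(round(coverage_mm, 1))    # 131.6
```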

fMRI preprocessing

Data were analyzed with the AFNI software package (http://afni.nimh.nih.gov/). Before statistical analysis, the first and last eight volumes of each run were removed, and all images were motion corrected to the eighth volume of the first run. After motion correction, images from the localizer runs were smoothed with a 5 mm FWHM Gaussian kernel. Data from the event-related runs were unsmoothed. Cortical surfaces were created using FreeSurfer (http://surfer.nmr.mgh.harvard.edu/), and functional data were then displayed on cortical surfaces using SUMA (http://afni.nimh.nih.gov/afni/suma). Data were sampled to individual participant surfaces before averaging in the group analysis.

fMRI statistical analysis

To examine the topography of selectivity for observed touch, we conducted two separate contrasts on data generated by the observed touch localizer (n = 40, random effect, p < 10⁻³): (1) to identify any selective activation for observing each hand, separate contrasts of brush hand > brush only for the left and right hand were conducted; and (2) to increase power, data for both hands were combined, and we contrasted brush hand (left) + brush hand (right) > brush only (left) + brush only (right).
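The combined contrast described above can be written as a set of weights over the four localizer conditions. The sketch below is only an illustration of that idea, not the AFNI pipeline used in the study: the function names and toy β values are hypothetical, and the one-sample t statistic across participants is one common way to run a random-effects test, assumed here for concreteness.

```python
import math
import statistics

# Conditions of the observed touch localizer, in a fixed order.
CONDITIONS = ["brush_hand_left", "brush_hand_right",
              "brush_only_left", "brush_only_right"]

# Weights for (brush hand L + brush hand R) > (brush only L + brush only R).
COMBINED_CONTRAST = [1, 1, -1, -1]


def contrast_value(betas, weights):
    """Weighted sum of one participant's condition betas."""
    return sum(w * b for w, b in zip(weights, betas))


def one_sample_t(values):
    """t statistic testing whether the mean of `values` differs from zero."""
    n = len(values)
    return statistics.mean(values) / (statistics.stdev(values) / math.sqrt(n))


# Made-up betas for three hypothetical participants at one surface node.
toy_betas = [
    [1.2, 1.0, 0.4, 0.5],
    [0.9, 1.1, 0.6, 0.2],
    [1.5, 1.3, 0.7, 0.9],
]

per_participant = [contrast_value(b, COMBINED_CONTRAST) for b in toy_betas]
t_stat = one_sample_t(per_participant)
print(per_participant, t_stat)
```

Because the weights sum to zero, a node that responds equally to all four conditions yields a contrast value of zero; only a difference between the brush hand and brush only conditions moves it away from zero.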

To further examine the topographic relationship between visual activations for observed touch and motor and somatosensory regions, group analyses using motor, tactile, and visual localizers were conducted (random effect, p < 10⁻³): (1) for motor localizers (n = 40), to identify somatomotor regions in each hemisphere, we contrasted contralateral hand movement > lip movement; and (2) for tactile localizers (n = 14), to identify the somatosensory cortex in each hemisphere, we contrasted brush contralateral index finger > brush ipsilateral index finger.

To quantify the responses in the tactile SI and SII regions for observed touch, SI and SII ROIs were generated within each participant from the independent functional tactile localizer, contrasting contralateral index finger > ipsilateral index finger (random effect, p < 10⁻³), and β values in SI and SII were extracted from the observed touch localizer.

To characterize the functional properties of regions identified as selective for observed touch, we examined responses in each of these regions during Experiments 2–4. Specifically, we focused on two observed touch-selective posterior parietal ROIs in the left hemisphere (superior parietal and inferior parietal) and extracted β values for the event-related Experiments 2–4 from these ROIs.

In the event-related experiments, we first examined the magnitude of response within each ROI to all nine conditions using a standard GLM procedure. Data for the flower stimulus were then discarded. We then investigated the pattern of response within each ROI. Data from the six event-related runs were analyzed using a split-half analysis, as in our previous study (Chan et al., 2010). Instead of using the conventional method of splitting the data into just odd and even runs, we iterated through multiple splits of the data (similar to the standard iterative leave-one-out procedure for classifiers). In total, there were 10 splits of the six runs. For each half of the data in each split, a standard GLM procedure was used to create significance maps by performing t tests between each condition and baseline. The t values for each condition were then extracted from the voxels within each ROI and cross-correlated across the halves of each split. Correlation values were averaged across the 10 splits. Discrimination indices were calculated by subtracting the averaged off-diagonal values from the averaged diagonal values for the relevant conditions.
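The split-half procedure above can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the code used in the study: the function names and toy t values are hypothetical, Pearson correlation is assumed as the similarity measure, and for brevity the index is computed for a single split rather than on correlations averaged over all 10. It shows why six runs give 10 unique halves (three of six runs, ignoring which half is labeled first) and how a discrimination index is formed from diagonal (same condition) and off-diagonal (different condition) correlations.

```python
from itertools import combinations


def unique_half_splits(run_ids):
    """All ways to divide the runs into two equal halves, ignoring half order."""
    runs = sorted(run_ids)
    first = runs[0]
    splits = []
    # Fixing the first run in half A avoids counting each split twice.
    for rest in combinations(runs[1:], len(runs) // 2 - 1):
        half_a = (first,) + rest
        half_b = tuple(r for r in runs if r not in half_a)
        splits.append((half_a, half_b))
    return splits


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def discrimination_index(half_a, half_b):
    """Mean diagonal correlation minus mean off-diagonal correlation.

    half_a and half_b map condition name -> list of voxel t values.
    """
    conditions = sorted(half_a)
    within, between = [], []
    for c1 in conditions:
        for c2 in conditions:
            r = pearson(half_a[c1], half_b[c2])
            (within if c1 == c2 else between).append(r)
    return sum(within) / len(within) - sum(between) / len(between)


splits = unique_half_splits(range(1, 7))
print(len(splits))  # 10

# Made-up voxel t values for two conditions in a four-voxel ROI.
toy_a = {"brush_hand": [1.0, 2.0, 3.0, 4.0], "brush_near": [4.0, 3.0, 2.0, 1.0]}
toy_b = {"brush_hand": [1.1, 2.0, 2.9, 4.2], "brush_near": [3.9, 3.1, 2.2, 0.8]}
index = discrimination_index(toy_a, toy_b)
print(index > 0)  # True
```

A positive index means that patterns for the same condition are more similar across halves than patterns for different conditions, which is the sense in which two conditions can be "distinguished".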

Results

Topography of selectivity for observed touch

For Experiment 1, we aimed to identify the regions selectively responsive during touch observation. Given previous reports, we expected to elicit activation in SI and SII. Participants viewed movies of four different conditions showing a brush and/or a hand: (1) brush hand (left); (2) brush hand (right); (3) brush only (right side of the screen, corresponding to the location during left hand brushing); and (4) brush only (left side of the screen, corresponding to the location during right hand brushing). Importantly, and in contrast to some previous studies (Schaefer et al., 2009, 2013; Kuehn et al., 2013), participants passively viewed the movies and were not required to make any motor responses that could potentially affect both contralateral and ipsilateral hemispheres, making it possible to investigate hemispheric laterality. Across 40 participants, we identified regions selectively responsive to observed touch by contrasting brush hand with brush only, separately for left hand and right hand conditions (Fig. 1). This contrast represents a necessary but not stringent criterion for observed touch-selective activation because the two conditions differ in many respects. At a group level, results were strikingly similar for both the left hand and right hand contrasts, with two foci of activation, in superior and inferior parietal cortices (Fig. 2). In both cases, selective responses were observed predominantly in the left hemisphere, with almost no selectivity in the right hemisphere. Furthermore, the direct contrast of brush hand (left) and brush hand (right) revealed no significant differences anywhere in the parietal cortex. At first glance, it could be thought that these two foci of activation correspond to SI and SII. However, the superior activation is slightly posterior of the postcentral sulcus and does not correspond to the expected anatomical location of the hand representation in the somatosensory cortex. 
Similarly, although the inferior activation extends into the postcentral sulcus, in which BA2 is located, it is more inferior than the typical location of the hand representation, posterior to the “hand knob” on the precentral gyrus (Yousry et al., 1997). Furthermore, although it is also close to SII, the inferior parietal activation does not extend into the parietal operculum between the lip of the lateral sulcus and its fundus, in which the finger representation has been localized (Ruben et al., 2001).

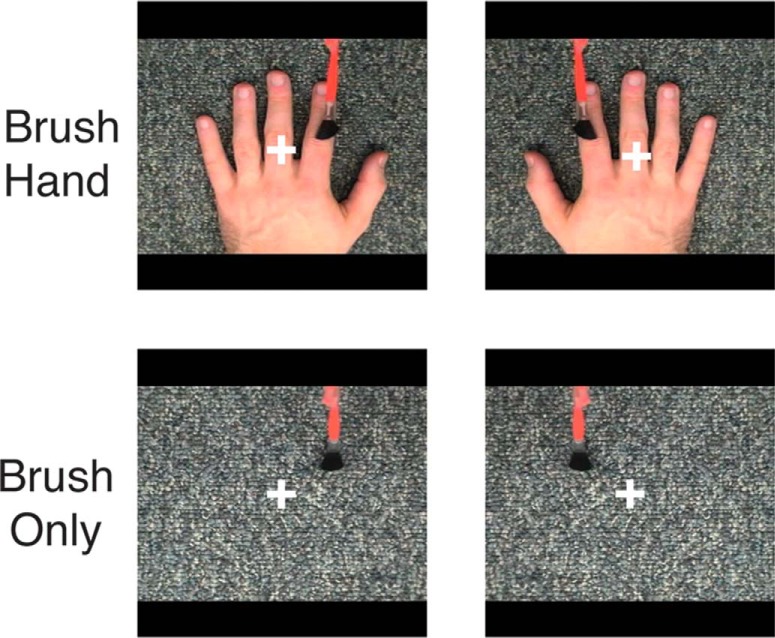

Figure 1.

Stimuli for localizing observed touch-selective regions (Experiment 1). Each movie clip lasted for 2 s, presented in a blocked paradigm. Across 40 participants, we defined regions responsive to observed touch by contrasting brush hand to brush only.

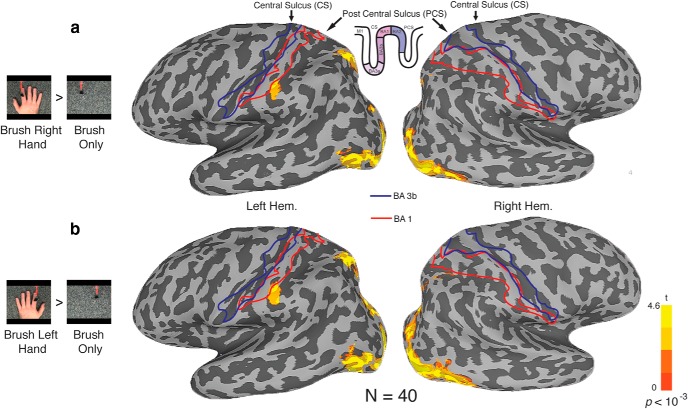

Figure 2.

Selectivity for observed touch visualized on inflated surfaces (n = 40). Blue and red outlines indicate the location of BA3b and BA1, respectively, based on cytoarchitectural probability maps (Roland et al., 1997; Geyer et al., 1999). a, Contrasting brush hand (right) to brush only (p < 10⁻³) elicited activation in the left ventral cortex, as well as the superior and inferior regions in the posterior parietal cortex. b, Similarly, contrasting brush hand (left) to brush only elicited an almost identical set of activations.

To examine the topography of responses in more detail and given the lack of difference between brushing the left and right hand, we collapsed the data across both hand conditions (Fig. 3a). Although the size of the superior and inferior activations increased, they remained distinct from the expected anatomical locations of the hand representation in SI and SII. To compare the location of brush-selective activations with functionally defined somatomotor regions, all subjects were also tested in a simple blocked-design scan in which they wiggled either their left or right index finger (corresponding to the identity of the fingers brushed in the videos) or moved their lips in a pursing action. Contrasting responses during contralateral finger movement with lip movement revealed the expected somatomotor regions selective for each body part (Fig. 3a). Specifically, finger movement selectively elicited responses extending posteriorly from the precentral sulcus through the hand knob (Yousry et al., 1997) and into the postcentral sulcus. In contrast, lip movement selectively elicited responses in the inferior part of the precentral gyrus (immediately below the hand knob) that extended into the inferior part of the postcentral gyrus. Thus, the parietal activations we observed do not appear to correspond to the distinctive and classically defined hand representation in SI.

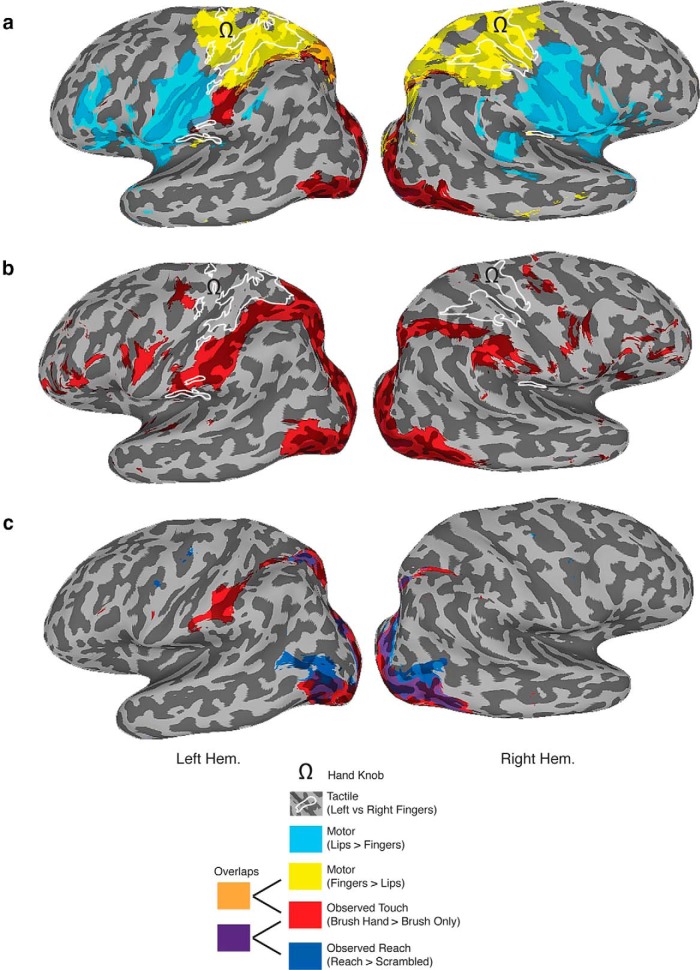

Figure 3.

Topography of selective responses for observed touch. Inflated left and right hemispheres showing the relationship between observed touch-selective activation (combined for the left and right hands, red), somatomotor regions (lips, light blue; fingers, yellow), tactile finger-selective responses (white outlines), and reach-selective activation (dark blue). a, The observed touch-selective regions were mostly distinct from both the lip and finger regions identified with the somatomotor localizer and the tactile finger-selective regions identified with the tactile localizer. The small overlap between the superior parietal observed touch selectivity and the somatomotor finger activations is too far posterior to be considered SI. Note that, although the inferior parietal observed touch-selective activation appeared to extend onto the postcentral gyrus and thus BA2, it does not correspond to the location of the hand representation in somatosensory cortex either anatomically or functionally. All contrasts were defined at p < 10⁻³. b, Intriguingly, even at a very lenient threshold (p < 0.1 uncorrected) for observed touch selectivity, the activation simply skirts around the tactile representations of the finger in both SI and SII. c, Topography for observed touch and observed reach (Shmuelof and Zohary, 2006). Both localizers elicit activations in the superior parietal cortex, but only for observed touch was there selective activation in the inferior parietal cortex.

To further verify the location of the hand representations in SI and SII, we used a functional localizer with light tactile stimulation on the left and right index fingers in a subset of participants (n = 14). Comparing activation for the two fingers revealed that selective tactile responses were mostly contained within the regions identified by contrasting finger and lip movement but also included the parietal operculum, corresponding to SII. Importantly, the superior and inferior parietal regions selectively responsive to observed touch did not overlap with either of these finger-selective tactile activations. Thus, on the basis of both anatomical and functional criteria, the superior and inferior parietal regions showing selective activation for observed touch do not appear to correspond to either SI or SII but instead are in the posterior parietal cortex.

To confirm that the lack of overlap between the observed touch-selective and tactile finger-selective regions did not simply reflect the choice of threshold, we reexamined the topographic relationship between these activations using a very lenient threshold for observed touch (p < 0.1, uncorrected; Fig. 3b). Although the extent of the selective activations for observed touch increased substantially, revealing activations in the dorsal and ventral premotor cortices, the selective responses in the parietal cortex remained distinct from the finger-selective activations, with the observed touch selectivity simply skirting around the functionally defined SI hand representation and failing to extend into the lateral sulcus and SII.

Even when we extract the responses from individually defined tactile finger-selective regions in SI and SII (n = 14), there is no significant response to either brush hand or brush only and no difference in response between the two conditions (Fig. 4a).

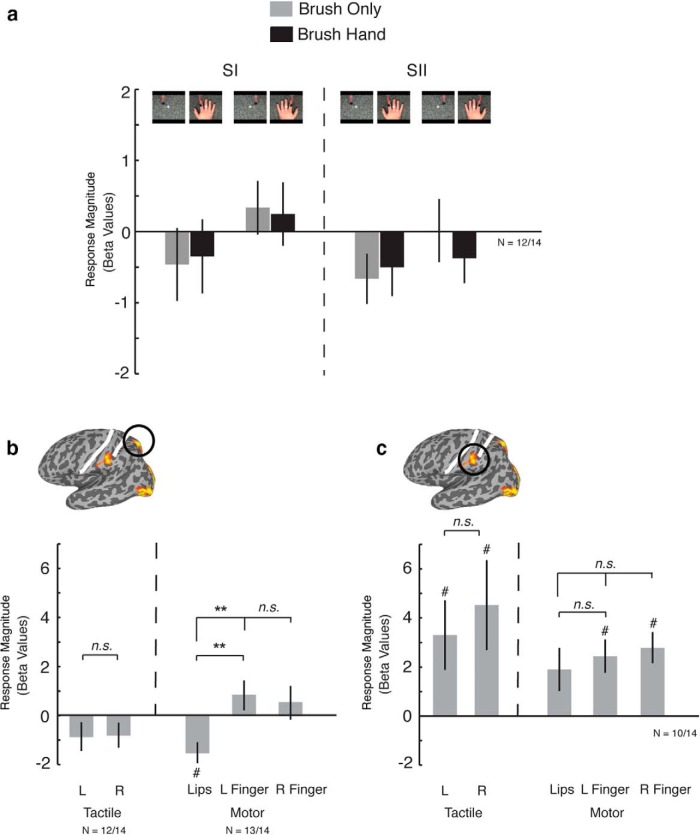

Figure 4.

a, Responses extracted from independently localized left SI and SII within each participant (n = 12 of 14). There were no significant responses and no difference between conditions in either SI or SII. b, Responses extracted from independently localized superior parietal region within each participant (n = 12 of 14 for tactile responses, n = 13 of 14 for motor responses). Although there were no significant responses in any condition, there was a difference in responses between the lips and fingers in the somatomotor localizer. c, Responses extracted from independently localized inferior parietal region within each participant (n = 10 of 14). In contrast to the superior region, the inferior parietal cortex showed moderate tactile responses but no finger selectivity. This region also demonstrated some nonspecific activation to lips, left finger, and right finger motor movements. **p < 0.001; #p < 0.05, significant difference from 0; n.s., no significant difference. L, Left; R, Right.

So far, we have considered selective responses to observed touch and their relationship to the somatosensory cortex. We also wanted to establish how these regions compare with those parietal areas identified previously as selective for the observation of hand reaching (Culham and Kanwisher, 2001; Culham and Valyear, 2006; Shmuelof and Zohary, 2006, 2008). Using the exact same stimuli used in a previous study (Shmuelof and Zohary, 2006), we compared responses to videos depicting reaching with scrambled versions of those same movie clips (n = 14; Fig. 3c). This analysis revealed selective responses in the superior parietal cortex, just posterior to the postcentral sulcus, that overlapped substantially with the selective responses to observed touch. However, no selectivity for observed reach was observed in the inferior parietal cortex.

In summary, by adopting multiple analysis strategies—(1) a generous contrast and statistical threshold, (2) both anatomical and functional definitions of the somatosensory cortex, and (3) analysis at both the group and individual levels—we have demonstrated that observing touch does not elicit activation within the hand representation of SI or SII. Instead, our data highlight the contribution of the posterior parietal cortex to touch observation with two distinct foci in the superior and inferior parietal cortices. To further characterize the response properties in these parietal regions, we conducted additional investigations of functional properties and selectivity to determine the potential functional role of the superior and inferior parietal regions.

Functional properties of regions selective for observed touch

We first examined the tactile and motor responses in the observed touch-selective regions in the superior and inferior parietal cortices. Focusing on those participants in whom we collected both tactile and motor mapping data (n = 14), we localized the observed touch-selective regions in individual participants and extracted the responses from the tactile and motor runs. In the superior parietal region (Fig. 4b), there were no significant responses above baseline for any of the motor (lips, left, or right finger movements) or tactile (left or right finger) conditions. However, there was a significant difference in the response between lip and finger movements because of the negative response for the lips (lips vs left finger movement, t(1,13) = 6.16, p < 0.01; lips vs right finger movement, t(1,13) = 4.55, p < 0.001) but no significant difference in the responses between left and right finger movement (t(1,13) = 0.512, p > 0.1). The absence of any clear tactile or motor response further argues against this region corresponding to SI.

In contrast, in the inferior parietal region (Fig. 4c), there were significant responses to all motor and tactile conditions. However, there were no significant differences between tactile stimulation of the left index finger and right index finger (t(1,8) = 1.87, p > 0.05) or between movement of left index finger, right index finger, or lips (left vs right finger movement, t(1,8) = 0.72, p > 0.5; lip vs left finger movement, t(1,8) = 0.83, p > 0.1; lip vs right finger movement, t(1,8) = 1.4, p > 0.1). The lack of specificity of these responses is consistent with this area not overlapping with either SI or SII, which typically show specificity for both the body part and the side of the body.

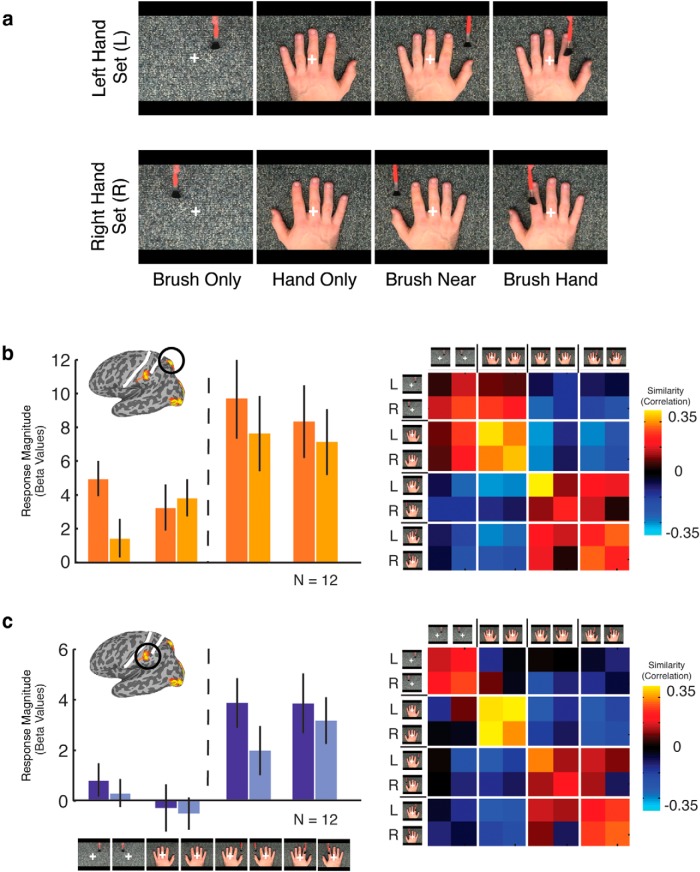

Experiment 2: selectivity for hand laterality and observed touch

To investigate the effect of hand laterality and the importance of observed touch compared with brushing near the hand, a subgroup of participants (n = 12) were presented with two sets of conditions, one for the left and one for the right hand, each comprising four conditions: (1) brush only; (2) hand only; (3) brush near; and (4) brush hand (Fig. 5). For magnitude of response, a three-way ANOVA with ROI (superior, inferior), laterality (left or right set), and condition (brush only, hand only, brush near, and brush hand) as factors revealed main effects of ROI (F(1,11) = 11, p < 0.01), reflecting stronger responses in the superior than inferior region, condition (F(3,33) = 14.2, p < 0.0001), reflecting stronger responses to brush near and brush hand compared with the other two conditions, and laterality (F(1,11) = 12.5, p < 0.005), reflecting stronger responses for the left compared with the right set of conditions. These main effects were qualified by significant interactions between ROI and laterality (F(1,11) = 6.3, p < 0.05), reflecting a stronger effect of laterality in the superior compared with inferior region, and also between laterality and condition (F(3,33) = 7.2, p < 0.001), reflecting a stronger effect of laterality for brush only and brush near compared with hand only and brush hand.
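The degrees of freedom reported for these F tests follow from the number of factor levels and the number of participants. The sketch below assumes a fully within-subject design in which each effect is tested against its own effect-by-participant error term; the analysis software and error structure are not specified in the text, so this is an illustration rather than a description of the actual analysis, and the function name is hypothetical.

```python
def within_subject_df(factor_levels, n_participants):
    """Return (effect df, error df) for a main effect or an interaction.

    factor_levels lists the number of levels of each factor in the term,
    e.g. [4] for condition or [2, 4] for laterality x condition.
    """
    df_effect = 1
    for levels in factor_levels:
        df_effect *= levels - 1
    # Assumed error term: effect x participant interaction.
    df_error = df_effect * (n_participants - 1)
    return df_effect, df_error


print(within_subject_df([2], 12))     # (1, 11)  ROI or laterality
print(within_subject_df([4], 12))     # (3, 33)  condition
print(within_subject_df([2, 4], 12))  # (3, 33)  laterality x condition
```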

Figure 5.

a, Stimuli for Experiment 2. Movie clips were presented in an event-related manner, and eight conditions were divided into left and right hand sets. Each set contained four conditions: (1) brush only; (2) hand only; (3) brush near; and (4) brush hand. b, c, Response magnitude (left) and similarity correlation matrix (right) for the superior parietal and inferior parietal regions, respectively.

Importantly, there was no difference in response to brush hand and brush near, suggesting that, while the presence of both the brush and the hand is necessary, seeing actual touching of the finger is not needed. Furthermore, although the effects of laterality could be taken to indicate specificity for the hand being touched, the fact that this effect is present even for the brush-only condition and absent for the hand-only condition strongly suggests that it may be driven by the presence of the brush and motion in the contralateral visual field and may reflect underlying retinotopy in these parietal regions.

To determine whether information about observed touch is present in the pattern of responses across the two parietal regions, we also conducted multivoxel pattern analysis. Similarity matrices for each region (Fig. 5) show that the main distinction is between the conditions containing both a brush and a hand (brush hand, brush near) and those containing only one stimulus (brush only, hand only). For each condition, we tested the effect of laterality by computing discrimination indices comparing the within-set correlations with the between-set correlations (see Materials and Methods). Only for brush near could the left versus right conditions be distinguished (t(1,11) = 3.4, p < 0.005). Similarly, we computed discrimination indices for brush hand versus brush near and found significant discrimination in both regions (superior parietal, t(1,11) = 3.0, p < 0.01, one-tailed; inferior parietal, t(1,11) = 3.2, p < 0.005, one-tailed).

Thus, both magnitude and response pattern data suggest the importance of the presence of both the brush and the hand for responses in these posterior parietal regions. However, effects of hand laterality and touching differ, with effects of laterality primarily in magnitude and effects of touching in the pattern of response. Importantly, the effect of laterality on magnitude could simply reflect a contralateral retinotopic bias for visual motion.

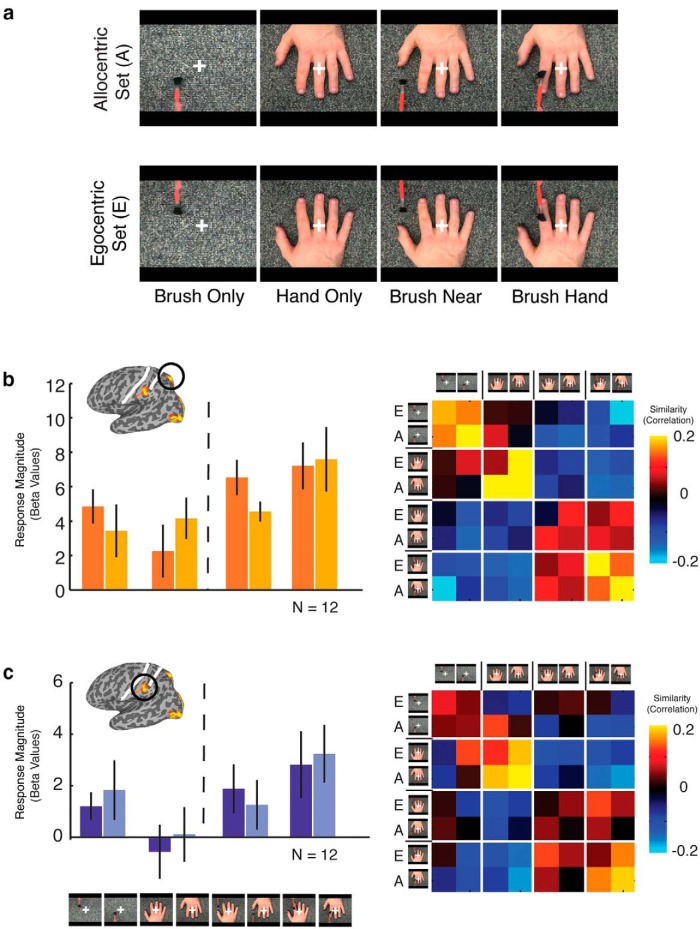

Experiment 3: selectivity for viewpoint and touch

To investigate the effect of viewpoint and to further test the importance of observed touch compared with brushing near the hand, a second subgroup of participants (n = 12) were tested with two sets of conditions, an allocentric and an egocentric set, each comprising four conditions: (1) brush only; (2) hand only; (3) brush near; and (4) brush hand (Fig. 6). For magnitude of response, a three-way ANOVA with region (superior, inferior), viewpoint (allocentric, egocentric), and condition (brush only, hand only, brush near, brush hand) as factors revealed significant main effects of both region (F(1,11) = 20.6, p < 0.001), reflecting stronger responses in the superior than inferior region, and condition (F(3,33) = 6.8, p < 0.001), reflecting stronger responses for brush near and brush hand compared with the brush-only and hand-only conditions but no other main effects or interactions. Thus, although the results confirm the importance of the presence of both brush and hand, there is no evidence for an effect of viewpoint.

Figure 6.

a, Stimuli for Experiment 3. Movie clips were presented in an event-related manner, and eight conditions were divided into allocentric and egocentric hand sets. Each set contained four conditions: (1) brush only; (2) hand only; (3) brush near; and (4) brush hand. b, c, Response magnitude (left) and similarity correlation matrices (right) for the superior parietal and inferior parietal regions, respectively.

Similarity matrices based on the patterns of response again showed a distinction between those conditions containing both a brush and a hand (brush hand, brush near) and those containing only one stimulus (brush only, hand only; Fig. 6), with some additional separation between the brush-only and hand-only conditions. Discrimination indices for both viewpoint (allocentric vs egocentric) and touch (brush near vs brush hand) were not significant in either region. Thus, in contrast to the previous experiment, there was no evidence for any effect of actual touch compared with brushing near the hand.

Given the inconsistency between Experiments 2 and 3 in the effect of actual touch, we pooled the egocentric right-hand conditions, which were tested in both experiments, across the two subgroups of participants (n = 24). For magnitude, there was no difference in response to brush near and brush hand in either the superior or inferior parietal region. However, discrimination indices based on the patterns of response for these two conditions were consistent with the results from Experiment 2, showing some discrimination between brush hand and brush near, but in the superior parietal region only (t(11) = 4.9, p < 0.001).

Collectively, these results confirm that the presence of both a brush and a hand is necessary to drive responses but demonstrate no modulation by viewpoint. Furthermore, although there was no significant effect of brush hand versus brush near in the egocentric/allocentric conditions, collapsing across the two subgroups of participants in Experiments 2 and 3 revealed significant differences between these conditions in the pattern of responses, but not magnitude, in the superior parietal region.

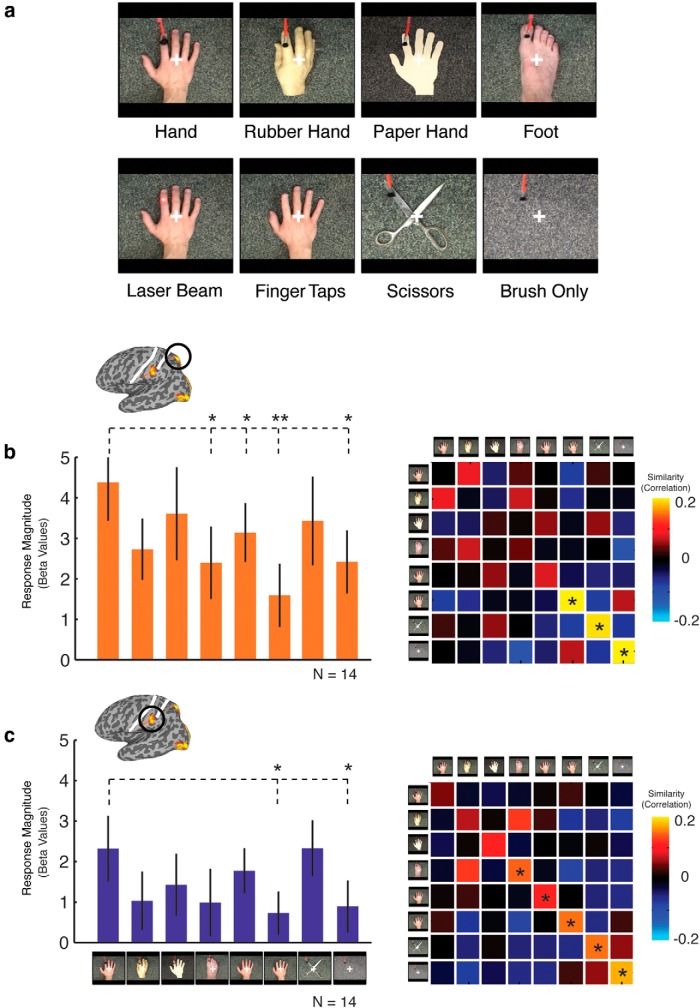

Experiment 4: selectivity for agent and object of touch

To investigate the selectivity of responses and their potential functional role, a final subgroup of participants (n = 14) were presented with video clips in which we manipulated the nature of the object being touched (hand, rubber hand, paper hand, foot), the form of the agent of touch (brush, laser beam), or the nature of the action (brushing, finger tapping; Fig. 7). For magnitude of response, a two-way ANOVA with ROI (superior, inferior) and condition as factors revealed a main effect of ROI only (F(1,13) = 15.7, p < 0.005), reflecting stronger responses in the superior compared with inferior parietal region and suggesting little overall selectivity in either region. However, planned one-tailed contrasts between brush hand and each of the other conditions revealed significantly weaker responses in both parietal regions for brush only, as expected (both t(1,13) > 2.1, p < 0.05), and finger tapping (both t(1,13) > 2.2, p < 0.05), again highlighting the importance of the presence of an agent of touch. In addition, in the superior, but not inferior, region, significantly weaker responses were also observed for laser beam and foot (both t(1,13) > 1.8, p < 0.05). Overall, these results suggest limited selectivity in response magnitude for the form of the object and agent of touch beyond the necessity of both an agent and object of touch.

Figure 7.

a, Stimuli for Experiment 4. Movie clips were presented in an event-related manner; conditions were designed to test selectivity profiles of the parietal regions with stimuli varying across form of the object of touch (hand, rubber hand, paper hand, foot, scissors) and form of the motion or agent of touch (finger taps, laser beam), plus a control condition (brush only). b, c, Response magnitude (left) and similarity correlation matrices (right) for the superior parietal and inferior parietal regions, respectively. * indicates significant discrimination between the within-correlation values and the average of between-correlation values.

Similarity matrices for both superior and inferior regions reveal no distinct clustering of conditions (Fig. 7b,c). However, a distinct band of high correlations is visible down the main diagonal of the matrices, stronger in the inferior than superior region, corresponding to higher within-condition than between-condition correlations. Planned one-tailed contrasts of discrimination indices revealed significant discrimination for finger tapping, scissors, and brush only (all t(1,13) > 2.6, p < 0.05) in the superior region. However, discrimination was stronger in the inferior region, with significant discrimination for foot, laser beam, finger tapping, scissors, and brush only (all t(1,13) > 1.6, p < 0.05), with a trend for paper hand (t(1,13) = 1.74, p < 0.055).
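The planned contrasts on discrimination indices amount to asking whether the per-participant indices are reliably greater than zero. As a minimal sketch, assuming a one-sample t test against zero (an assumption about the exact test used), the statistic and its degrees of freedom can be computed as below; the function name `one_sample_t` and the example values are hypothetical and are not data from the study.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, popmean=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t test.

    values: one discrimination index per participant.
    A one-tailed test of "index > 0" compares t with the upper-tail
    critical value of the t distribution with n - 1 degrees of freedom.
    """
    n = len(values)
    standard_error = stdev(values) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(values) - popmean) / standard_error, n - 1


# Hypothetical indices for 14 participants (illustrative values only).
indices = [0.12, 0.05, 0.20, -0.03, 0.08, 0.15, 0.02,
           0.10, 0.07, -0.01, 0.18, 0.04, 0.09, 0.11]
t_value, df = one_sample_t(indices)
print(df)  # 13
```

With 14 participants the test has 13 degrees of freedom, which is the second number in the t(1,13) notation used above.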

In summary, response magnitude and discrimination indices reveal that both superior and inferior parietal regions show some degree of selectivity between observing a hand and other non-hand stimuli. Such selectivity was observed primarily in the patterns of response and not in response magnitude. Importantly, however, none of the conditions that contained a hand-like stimulus and a second moving stimulus could be discriminated easily, suggesting that, in the context of observing actual touch, there is little selectivity.

Comparison with ventral brain areas

So far, we have focused on regions in the parietal cortex, but the contrast of brush hand versus brush only also produced selective responses in two ventral brain regions on the lateral occipital and ventral temporal surfaces, likely including the body-selective extrastriate body area (Downing et al., 2001, 2006; Chan et al., 2004, 2010) and fusiform body area (Peelen and Downing, 2005; Schwarzlose et al., 2005; Downing et al., 2006; Taylor et al., 2007), respectively. In contrast to the parietal regions, activation in ventral areas was bilateral without any clear hemispheric bias. The lateral region was coextensive with the early visual cortex, presumably because of the large retinotopic differences between brush hand and brush only, and we focused primarily on the region in the ventral temporal cortex, in the left hemisphere only, for comparison with the parietal regions.

In Experiments 2 and 3, response magnitude across conditions in the ventral temporal region was similar to that observed in the parietal regions, except that there was a much stronger response to the static hand. Similarly, the patterns of response to hand only were more similar to brush hand and brush near, and more distinct from brush only, than in the parietal regions. As with the parietal regions, there was an effect of laterality on magnitude of response in Experiment 2, again reflecting a bias for visual motion in the contralateral visual field (this effect of laterality was reversed in the right hemisphere). Finally, in Experiment 4, there was a significant difference in the magnitude of response only between brush hand and brush only and, in the pattern of response, very strong discrimination for brush only, with smaller but significant discrimination for scissors and finger tapping.

Discussion

No evidence of vicarious activation in the somatosensory cortex

We find no evidence for automatic and selective activation of SI and SII to observed touch, challenging the putative role for these regions in understanding others' behavior. In both whole-brain analyses across 40 participants and analysis of responses in individually defined somatosensory regions, there was no difference in response to observing a hand being brushed and the brush presented alone. Instead, we found that observing touch elicited robust activation in the left hemisphere posterior parietal cortex close to, but not overlapping, the hand representations in the somatosensory cortex. Responses in the posterior parietal cortex were dependent on the presence of both an agent and object of touch (brush plus hand) but showed only limited selectivity beyond that and were not consistently selective for hand brushing compared with brushing near the hand. These parietal regions may be similar to those identified previously as responsive to observed reaching and observed grasping, but our current results demonstrate that neither reaching nor grasping is necessary to drive these regions.

Relationship to previous studies

In contrast to our findings, previous studies have reported that SI (Blakemore et al., 2005; Schaefer et al., 2009, 2012, 2013; Kuehn et al., 2013, 2014) and SII (Keysers et al., 2004; Ebisch et al., 2008, 2011; Keysers and Gazzola, 2009) are selectively responsive to observed touch. However, it is important to note that the regions we identified are close to somatosensory cortices, and previous studies may have misattributed their activations to SI or SII. Furthermore, although the inferior parietal region in our study appears to extend onto the anterior bank of the postcentral sulcus (BA2), the location of this activation is inconsistent with the location of the hand representation identified with tactile stimulation.

Attribution to SI and SII in previous studies was determined on the basis of tactile responsiveness and/or anatomy (often atlas based). However, these criteria alone may not be sufficient to claim reliably that observing touch also recruits the same somatosensory cortical areas responsible for feeling touch. First, tactile responses in the parietal cortex are not limited to anterior regions but can be found posterior to the postcentral sulcus (Huang et al., 2012). Our inferior parietal region did exhibit some tactile responses, but there was no differential response for touch of the left or right finger. However, both SI and SII would be expected to show stronger contralateral than ipsilateral responses (Disbrow et al., 2000; Ruben et al., 2001; Martuzzi et al., 2014). In one of the early studies on touch observation, SII was identified as the region showing responses to brushing of either leg relative to rest (Keysers et al., 2004), which would likely include areas outside SII, including our inferior parietal region. Indeed, although the tactile-responsive region in that study did extend into the lateral sulcus, consistent with the location of SII, the overlap with the observed touch responses was outside the lateral sulcus, and surprisingly, given the attribution of SII, responses in the overlap were weaker for actual compared with observed touch. Although we did not observe tactile responses in our superior parietal region, there was a differential response to motor movement of the lips compared with the fingers, suggesting some somatotopic properties. Together, these considerations highlight that the presence of tactile responsiveness alone is not sufficient to claim SI or SII.

Second, even if anatomy suggests that activation extends into the postcentral sulcus, and thus into BA2, it is important to consider the underlying somatotopy. In our data, the activation that extended into the postcentral sulcus was far inferior to the representation of the hand in SI, identified with tactile and motor stimuli, and was also superior to the SII region in the parietal operculum. Instead, the inferior parietal region abuts the face representation in BA2.

Based on our findings, we suggest that previous studies may have misattributed activations in the posterior parietal cortex to SI and SII. A close look at the peak activation coordinates reported in previous studies is consistent with this account, with activations that are often much more inferior (Ebisch et al., 2008; Kuehn et al., 2013, 2014) or posterior (Kuehn et al., 2013, 2014) to the typical coordinates for the SI hand representation (Martuzzi et al., 2014). In many cases, the coordinates reported either overlap or are very close to the superior and inferior parietal regions we describe.

Support for the role of SI in touch observation has also been provided by transcranial magnetic stimulation (TMS) studies showing that stimulation of the parietal cortex produces a behavioral deficit for judgments of visual hand stimuli (Bolognini et al., 2011). The TMS sites were localized to SI on the basis of induction of extinction/paresthesia after a tactile stimulus, but, given the likely spread of the TMS effect and uncertainties over the precise stimulation site in the absence of any fMRI guidance or online navigation, the results could reflect effects on the posterior parietal cortex rather than the somatosensory cortex.

In summary, our findings emphasize the need for careful anatomical and functional mapping of tactile, motor, and visual responsiveness to convincingly demonstrate responses to observed touch in SI or SII.

Action observation in the posterior parietal cortex

Previous studies have demonstrated selective responses in the parietal cortex for observed action (Caspers et al., 2010), such as reaching (Shmuelof and Zohary, 2005, 2006, 2008; Filimon et al., 2007, 2014), grasping (Grafton et al., 1996; Peeters et al., 2009; Turella et al., 2009; Oosterhof et al., 2010; Nelissen et al., 2011), tool use (Culham et al., 2003, 2006; Shmuelof and Zohary, 2005, 2006, 2008; Culham and Valyear, 2006; Filimon et al., 2007), or general action observation (Buccino et al., 2001; Abdollahi et al., 2013), and the posterior parietal cortex has been described as part of the so-called action observation network (AON; Rizzolatti and Craighero, 2004; Turella and Lingnau, 2014). We found that our superior parietal region overlapped with responses selective for observed reaching (Shmuelof and Zohary, 2005, 2006, 2008), and the two parietal regions we identify may be similar to those identified as part of the AON. Importantly, however, our stimuli involved no reaching or grasping, suggesting that a characterization solely in terms of these specific hand actions may be misleading. Instead, it may be more appropriate to characterize them in terms of general interactions between objects and body parts.

Functional properties of the observed touch-selective regions

We characterized the functional properties of the superior and inferior regions in Experiments 2–4, revealing effects of laterality, but not viewpoint, inconsistent modulation by observing actual touch, and some selectivity for the form of the object and agent of touch. However, the strongest effect we observed was the importance of both an agent and object of touch (e.g., brush plus hand). This is in contrast to the ventral temporal cortex, in which the presence of the hand alone was sufficient to drive responses.

The effect of laterality most likely reflects an effect of brush location and the presence of basic retinotopy in these regions rather than an effect of hand identity. Specifically, we found larger responses when the brush was in the contralateral visual field, and this effect was strongest for brush only but absent for hand only. Although we focused on the left hemisphere, the same effect was observed (but for the opposite visual field) for the right superior parietal region. This result is consistent with the presence of multiple retinotopic maps in the human superior parietal cortex (Swisher et al., 2007; Saygin and Sereno, 2008; Huang et al., 2012) and sensitivity to visual motion (Konen and Kastner, 2008). This apparent retinotopic effect was weaker but still present in the inferior parietal region. More generally, the potential influence of retinotopy emphasizes the need for caution in interpreting effects as the result of high-level differences between conditions that differ in their low-level visual properties.

Recent studies have emphasized the multimodal properties of the posterior parietal cortex (Sereno and Huang, 2014), and, consistent with this view, we observed some motor and tactile responsiveness, predominantly in our inferior parietal region. In particular, we observed nonselective responses to movement of the lips and fingers and to tactile stimulation of the left and right fingers. The presence of tactile, motor, and visual responsiveness in the inferior parietal cortex is reminiscent of the properties of area PFG in monkeys (Rozzi et al., 2008), which has been implicated in action observation (Nelissen et al., 2011) and contains mirror neurons (Ferrari et al., 2005; Rizzolatti and Sinigaglia, 2010). Thus, we tentatively suggest that the inferior parietal region we identify may correspond to area PFG in nonhuman primates.

Conclusions

Overall, our results question the role of the somatosensory cortex in understanding others' experiences. We find that observing touch activates regions primarily in the left posterior parietal cortex and not the hand representations in SI or SII.

Footnotes

This work was supported by the National Institutes of Health Intramural Research Program of the National Institute of Mental Health Protocol 93-M-0170, NCT00001360. We thank Dr. Ziad Saad for helpful discussions on implementing SUMA and Dr. Lior Shmuelof for providing the “reach-selective movie localizer.” We also thank Sandra Truong, Victoria Elkis, Emily Bilger, Beth Aguila, and Marcie King for assistance with fMRI data collection.

References

- Abdollahi RO, Jastorff J, Orban GA. Common and segregated processing of observed actions in human SPL. Cereb Cortex. 2013;23:2734–2753. doi: 10.1093/cercor/bhs264.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J. Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain. 2005;128:1571–1583. doi: 10.1093/brain/awh500.

- Bolognini N, Rossetti A, Maravita A, Miniussi C. Seeing touch in the somatosensory cortex: a TMS study of the visual perception of touch. Hum Brain Mapp. 2011;32:2104–2114. doi: 10.1002/hbm.21172.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404. doi: 10.1111/j.1460-9568.2001.01385.x.

- Caspers S, Zilles K, Laird AR, Eickhoff SB. ALE meta-analysis of action observation and imitation in the human brain. Neuroimage. 2010;50:1148–1167. doi: 10.1016/j.neuroimage.2009.12.112.

- Chan AW, Peelen MV, Downing PE. The effect of viewpoint on body representation in the extrastriate body area. Neuroreport. 2004;15:2407–2410. doi: 10.1097/00001756-200410250-00021.

- Chan AW, Kravitz DJ, Truong S, Arizpe J, Baker CI. Cortical representations of bodies and faces are strongest in commonly experienced configurations. Nat Neurosci. 2010;13:417–418. doi: 10.1038/nn.2502.

- Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex. Curr Opin Neurobiol. 2001;11:157–163. doi: 10.1016/S0959-4388(00)00191-4.

- Culham JC, Valyear KF. Human parietal cortex in action. Curr Opin Neurobiol. 2006;16:205–212. doi: 10.1016/j.conb.2006.03.005.

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Culham JC, Cavina-Pratesi C, Singhal A. The role of parietal cortex in visuomotor control: what have we learned from neuroimaging? Neuropsychologia. 2006;44:2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003.

- Disbrow E, Roberts T, Krubitzer L. Somatotopic organization of cortical fields in the lateral sulcus of Homo sapiens: evidence for SII and PV. J Comp Neurol. 2000;418:1–21. doi: 10.1002/(SICI)1096-9861(20000228)418:1<1::AID-CNE1>3.0.CO;2-P.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Downing PE, Chan AW, Peelen MV, Dodds CM, Kanwisher N. Domain specificity in visual cortex. Cereb Cortex. 2006;16:1453–1461. doi: 10.1093/cercor/bhj086.

- Ebisch SJ, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V. The sense of touch: embodied simulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. J Cogn Neurosci. 2008;20:1611–1623. doi: 10.1162/jocn.2008.20111.

- Ebisch SJ, Ferri F, Salone A, Perrucci MG, D'Amico L, Ferro FM, Romani GL, Gallese V. Differential involvement of somatosensory and interoceptive cortices during the observation of affective touch. J Cogn Neurosci. 2011;23:1808–1822. doi: 10.1162/jocn.2010.21551.

- Ferrari PF, Rozzi S, Fogassi L. Mirror neurons responding to observation of actions made with tools in monkey ventral premotor cortex. J Cogn Neurosci. 2005;17:212–226. doi: 10.1162/0898929053124910.

- Filimon F, Nelson JD, Hagler DJ, Sereno MI. Human cortical representations for reaching: mirror neurons for execution, observation, and imagery. Neuroimage. 2007;37:1315–1328. doi: 10.1016/j.neuroimage.2007.06.008.

- Filimon F, Rieth CA, Sereno MI, Cottrell GW. Observed, executed, and imagined action representations can be decoded from ventral and dorsal areas. Cereb Cortex. 2014. Advance online publication. doi: 10.1093/cercor/bhu110.

- Geyer S, Schleicher A, Zilles K. Areas 3a, 3b, and 1 of human primary somatosensory cortex: 1. Microstructural organization and interindividual variability. Neuroimage. 1999;10:63–83. doi: 10.1006/nimg.1999.0440.

- Grafton ST, Fagg AH, Woods RP, Arbib MA. Functional anatomy of pointing and grasping in humans. Cereb Cortex. 1996;6:226–237. doi: 10.1093/cercor/6.2.226.

- Huang RS, Chen CF, Tran AT, Holstein KL, Sereno MI. Mapping multisensory parietal face and body areas in humans. Proc Natl Acad Sci U S A. 2012;109:18114–18119. doi: 10.1073/pnas.1207946109.

- Keysers C, Gazzola V. Expanding the mirror: vicarious activity for actions, emotions, and sensations. Curr Opin Neurobiol. 2009;19:666–671. doi: 10.1016/j.conb.2009.10.006.

- Keysers C, Gazzola V. Dissociating the ability and propensity for empathy. Trends Cogn Sci. 2014;18:163–166. doi: 10.1016/j.tics.2013.12.011.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V. A touching sight: SII/PV activation during the observation and experience of touch. Neuron. 2004;42:335–346. doi: 10.1016/S0896-6273(04)00156-4.

- Konen CS, Kastner S. Representation of eye movements and stimulus motion in topographically organized areas of human posterior parietal cortex. J Neurosci. 2008;28:8361–8375. doi: 10.1523/JNEUROSCI.1930-08.2008.

- Kuehn E, Trampel R, Mueller K, Turner R, Schütz-Bosbach S. Judging roughness by sight—a 7-Tesla fMRI study on responsivity of the primary somatosensory cortex during observed touch of self and others. Hum Brain Mapp. 2013;34:1882–1895. doi: 10.1002/hbm.22031.

- Kuehn E, Mueller K, Turner R, Schütz-Bosbach S. The functional architecture of S1 during touch observation described with 7 T fMRI. Brain Struct Funct. 2014;219:119–140. doi: 10.1007/s00429-012-0489-z.

- Martuzzi R, van der Zwaag W, Farthouat J, Gruetter R, Blanke O. Human finger somatotopy in areas 3b, 1, and 2: a 7T fMRI study using a natural stimulus. Hum Brain Mapp. 2014;35:213–226. doi: 10.1002/hbm.22172.

- Meyer K, Kaplan JT, Essex R, Damasio H, Damasio A. Seeing touch is correlated with content-specific activity in primary somatosensory cortex. Cereb Cortex. 2011;21:2113–2121. doi: 10.1093/cercor/bhq289.

- Nelissen K, Borra E, Gerbella M, Rozzi S, Luppino G, Vanduffel W, Rizzolatti G, Orban GA. Action observation circuits in the macaque monkey cortex. J Neurosci. 2011;31:3743–3756. doi: 10.1523/JNEUROSCI.4803-10.2011.

- Oosterhof NN, Wiggett AJ, Diedrichsen J, Tipper SP, Downing PE. Surface-based information mapping reveals crossmodal vision-action representations in human parietal and occipitotemporal cortex. J Neurophysiol. 2010;104:1077–1089. doi: 10.1152/jn.00326.2010.

- Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. J Neurophysiol. 2005;93:603–608. doi: 10.1152/jn.00513.2004.

- Peeters R, Simone L, Nelissen K, Fabbri-Destro M, Vanduffel W, Rizzolatti G, Orban GA. The representation of tool use in humans and monkeys: common and uniquely human features. J Neurosci. 2009;29:11523–11539. doi: 10.1523/JNEUROSCI.2040-09.2009.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Sinigaglia C. The functional role of the parieto-frontal mirror circuit: interpretations and misinterpretations. Nat Rev Neurosci. 2010;11:264–274. doi: 10.1038/nrn2805.

- Roland PE, Geyer S, Amunts K, Schormann T, Schleicher A, Malikovic A, Zilles K. Cytoarchitectural maps of the human brain in standard anatomical space. Hum Brain Mapp. 1997;5:222–227. doi: 10.1002/(SICI)1097-0193(1997)5:4<222::AID-HBM3>3.0.CO;2-5.

- Rozzi S, Ferrari PF, Bonini L, Rizzolatti G, Fogassi L. Functional organization of inferior parietal lobule convexity in the macaque monkey: electrophysiological characterization of motor, sensory and mirror responses and their correlation with cytoarchitectonic areas. Eur J Neurosci. 2008;28:1569–1588. doi: 10.1111/j.1460-9568.2008.06395.x.

- Ruben J, Schwiemann J, Deuchert M, Meyer R, Krause T, Curio G, Villringer K, Kurth R, Villringer A. Somatotopic organization of human secondary somatosensory cortex. Cereb Cortex. 2001;11:463–473. doi: 10.1093/cercor/11.5.463.

- Saygin AP, Sereno MI. Retinotopy and attention in human occipital, temporal, parietal, and frontal cortex. Cereb Cortex. 2008;18:2158–2168. doi: 10.1093/cercor/bhm242.

- Schaefer M, Xu B, Flor H, Cohen LG. Effects of different viewing perspectives on somatosensory activations during observation of touch. Hum Brain Mapp. 2009;30:2722–2730. doi: 10.1002/hbm.20701.

- Schaefer M, Heinze HJ, Rotte M. Embodied empathy for tactile events: Interindividual differences and vicarious somatosensory responses during touch observation. Neuroimage. 2012;60:952–957. doi: 10.1016/j.neuroimage.2012.01.112.

- Schaefer M, Rotte M, Heinze HJ, Denke C. Mirror-like brain responses to observed touch and personality dimensions. Front Hum Neurosci. 2013;7:227. doi: 10.3389/fnhum.2013.00227.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Sereno MI, Huang RS. Multisensory maps in parietal cortex. Curr Opin Neurobiol. 2014;24:39–46. doi: 10.1016/j.conb.2013.08.014.

- Shmuelof L, Zohary E. Dissociation between ventral and dorsal fMRI activation during object and action recognition. Neuron. 2005;47:457–470. doi: 10.1016/j.neuron.2005.06.034.

- Shmuelof L, Zohary E. A mirror representation of others' actions in the human anterior parietal cortex. J Neurosci. 2006;26:9736–9742. doi: 10.1523/JNEUROSCI.1836-06.2006.

- Shmuelof L, Zohary E. Mirror-image representation of action in the anterior parietal cortex. Nat Neurosci. 2008;11:1267–1269. doi: 10.1038/nn.2196.

- Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- Taylor JC, Wiggett AJ, Downing PE. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. J Neurophysiol. 2007;98:1626–1633. doi: 10.1152/jn.00012.2007.

- Turella L, Lingnau A. Neural correlates of grasping. Front Hum Neurosci. 2014;8:686. doi: 10.3389/fnhum.2014.00686.

- Turella L, Erb M, Grodd W, Castiello U. Visual features of an observed agent do not modulate human brain activity during action observation. Neuroimage. 2009;46:844–853. doi: 10.1016/j.neuroimage.2009.03.002.

- Yousry TA, Schmid UD, Alkadhi H, Schmidt D, Peraud A, Buettner A, Winkler P. Localization of the motor hand area to a knob on the precentral gyrus. Brain. 1997;120:141–157. doi: 10.1093/brain/120.1.141.