Abstract

The neural systems that code for location and facing direction during spatial navigation have been extensively investigated; however, the mechanisms by which these quantities are referenced to external features of the world are not well understood. To address this issue, we examined behavioral priming and fMRI activity patterns while human subjects re-instantiated spatial views from a recently learned virtual environment. Behavioral results indicated that imagined location and facing direction were represented during this task, and multi-voxel pattern analyses indicated the retrosplenial complex (RSC) was the anatomical locus of these spatial codes. Critically, in both cases, location and direction were defined based on fixed elements of the local environment and generalized across geometrically-similar local environments. These results suggest that RSC anchors internal spatial representations to local topographical features, thus allowing us to stay oriented while we navigate and to retrieve from memory the experience of being in a particular place.

Introduction

To be oriented in the world, an organism must know where it is and which direction it is facing. In rodents, location information is encoded by place and grid cells in the hippocampal formation,1, 2 and directional information is coded by head direction (HD) cells in Papez circuit structures3; recent work has observed similar spatial codes in these regions in humans4–8. These cellular populations are coordinated with each other, such that if the directional signal coded by HD cells rotates, the firing fields of place and grid cells rotate by a corresponding amount (e.g.9). This suggests that the HD cells support an internal compass that represents the animal's heading (i.e. facing direction) and updates this quantity as the animal moves.

However, for a neural compass to be useful, it is not enough to represent direction in arbitrary coordinates – the heading must be defined relative to fixed features of the environment, just as the heading revealed by a magnetic compass is defined relative to the north-south axis of the earth. This presents a challenge: in the absence of magnetoception or a sidereal/solar compass, there is no single perceptible feature that consistently indicates direction across all terrestrial environments. A possible solution is to use one’s perceived orientation relative to local (currently-visible) landmarks to anchor one’s sense of direction, which can then be maintained during navigation through idiothetic cues10–12. To do this, however, one must have a representation of one’s heading relative to local environmental features that is at least potentially separable from the internal sense of direction supported by the HD cells. Although there is considerable evidence that both rodents and humans use allothetic information to orient themselves, a neural locus for this separate representation of locally-referenced facing direction has yet to be identified.

Based on previous neuroimaging and neuropsychological results4, 13–16, as well as theoretical models17, 18, we hypothesized that the retrosplenial/medial parietal region (referred to here as the "retrosplenial complex", or RSC) might support this locally-referenced representation of heading, thus providing a “dial” for the neural compass. Moreover, we hypothesized that recovery of this heading representation might be an essential element of spatial memory retrieval. To test these ideas, we collected behavioral and functional magnetic resonance imaging (fMRI) data while subjects re-instantiated views of a newly learned virtual environment during performance of a judgment of relative direction (JRD) task. This task requires subjects to imagine themselves in a specific location facing a specific direction, which means that they must mentally re-orient themselves (i.e. re-establish their sense of direction) on each trial. Critically, the virtual environment was divided into separate “museums” that had distinguishing visual features but identical internal geometries, which were set at different angles relative to each other within a larger “park” (Fig. 1a). This design allowed us to distinguish between three kinds of spatial representations: (i) spatial representations that used a single global reference frame that applied across the entire environment, (ii) spatial representations that used locally-anchored reference frames that were unique to each museum, (iii) spatial representations that used locally-anchored reference frames that generalized across different museums with similar geometry.

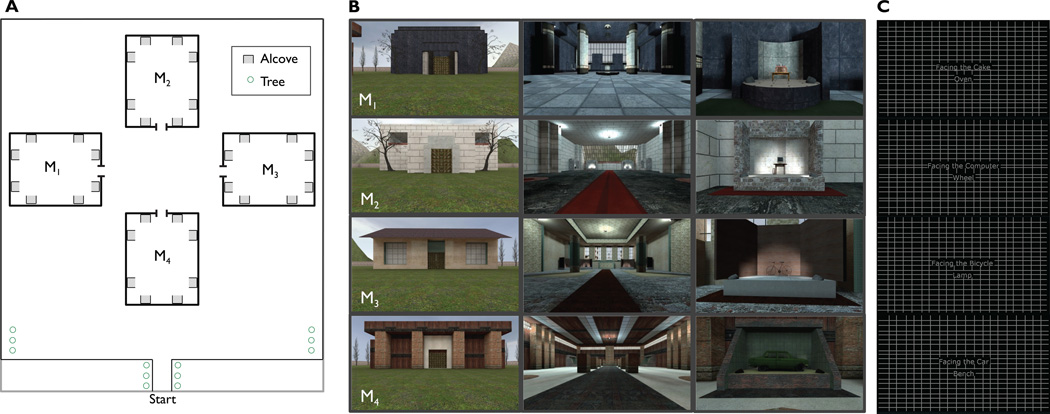

Figure 1. Map and images of the virtual environment.

A. Map of the virtual park and the four museums. Each museum was oriented at a unique direction with respect to the surrounding park. Objects were displayed within alcoves, which are indicated by grey squares. Each alcove could only be viewed from one direction.

B. Images of the exteriors, interiors and alcoves of each museum.

C. Example screen shots from the fMRI version of the judgment of relative direction (JRD) task. Participants imagined themselves facing the bicycle, and responded to indicate whether the lamp would be to their left or right from this view.

Previous behavioral work with the JRD task has found evidence for coding of locations and directions in spatial reference frames aligned to local features of the environment such as room geometry or the arrangement of objects within a room (and also in frames aligned to egocentric axes when external features are absent)19, 20. Our primary concern was to test the hypothesis that RSC (or other brain regions) might mediate these locally-referenced spatial frames. To this end, we used behavioral priming (in Experiment 1) and similarities and differences between multi-voxel patterns elicited on different retrieval trials (in Experiment 2) to identify location and direction codes, and then tested whether these spatial representations were aligned to the local features or not. To anticipate, our results indicate that RSC codes for the facing direction and location of imagined viewpoints, and that these are coded in a reference frame that is anchored to environmental features but generalizes across local environments with similar geometry.

Results

Behavioral evidence for direction coding

We first used cognitive behavioral testing to establish that directional codes are used during our version of the JRD task (location codes are considered below). In Experiment 1, participants learned a virtual environment consisting of four “museums,” which were visually distinguishable but had similar internal geometry. After reaching criterion in a training phase in which they were required to navigate from a starting point outside the museums to individual objects within (see Methods), they were tested on their knowledge of the objects’ locations. On each trial, they were verbally cued to imagine themselves facing a reference object, and they used the keyboard to indicate whether a target object would be to their left or right. Because each object was placed in such a way that it could only be viewed from a specific direction, specifying a reference object implicitly specified both a facing direction and a location (see Fig. 1b). Participants responded accurately on most trials (M = 89.8% correct, SD = 6%). Only correct trials were entered into analyses of reaction time.

We hypothesized that directional representations used for mental orientation on each trial would be revealed through behavioral priming: specifically, that reaction times would be speeded on trials that had the same implied direction as the immediately-preceding trial. For this first set of analyses, we defined direction locally. That is, “North” for each museum was defined as the direction facing the wall opposite the doorway, and repetition of this orientation (or other orientations) across museums was considered to be a repetition of the same direction. To ensure that priming effects were attributable to repetition of direction rather than repetition of location, we excluded from analysis cases for which the facing object on the immediately preceding trial was drawn from the same corner of the same museum or the geometrically equivalent corner of a different museum (see Fig. 2a). This restriction imposes a criterion of abstractness on direction coding: representations of imagined direction must generalize across different views and different locations.
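The trial-pair selection described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code used in the study: the `View` record, its field names, and the corner and direction labels are hypothetical, chosen to mirror the local (museum-anchored) definitions of corner and direction given in the text.

```python
from collections import namedtuple

# Hypothetical encoding of an imagined view. Field names and values are
# illustrative and are not taken from the study materials.
View = namedtuple("View", ["museum", "corner", "local_dir"])


def classify_direction_pair(prev, curr):
    """Label a pair of successive trials for the direction-priming analysis.

    Returns None when the pair is excluded because the two views share a
    corner (same museum) or a geometrically equivalent corner (different
    museum). Otherwise returns (direction_label, museum_label).
    """
    if prev.corner == curr.corner:
        return None  # location repeats in the local frame, so exclude
    direction = "same" if prev.local_dir == curr.local_dir else "different"
    museum = "same" if prev.museum == curr.museum else "different"
    return direction, museum


# Example: same local direction, different museum, different corner.
a = View(museum=1, corner="back-left", local_dir="N")
b = View(museum=2, corner="back-right", local_dir="N")
print(classify_direction_pair(a, b))  # ('same', 'different')
```

Because corners are labeled in the local frame, a single equality check covers both the same-corner and the equivalent-corner exclusions.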

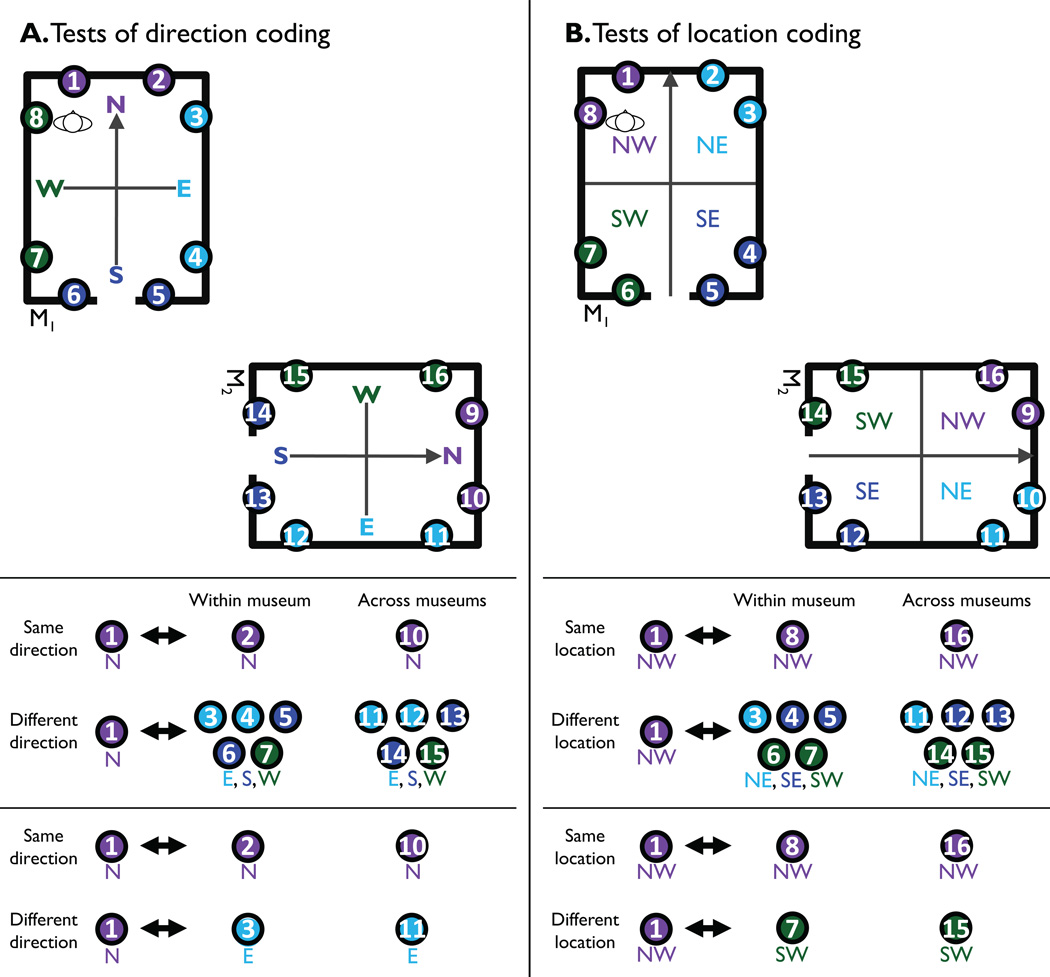

Figure 2. Summary of analysis scheme.

A. Contrasts used to test for coding of facing direction. Top panel shows all views for two of the four museums; views that face the same direction as defined by the local museum frame are colored the same. Middle panel shows comparisons between view 1 and other views that face the same or different local direction, within and across museums. To partially control for location, view 1 is never compared to views in the same corner (i.e. views 1, 8, 9 and 16 are excluded). Bottom panel shows a test for direction coding that completely controls for location: in this case, the same-direction comparison view is located in the same corner as the different-direction comparison view.

B. Contrasts used to test for coding of location. Top panel shows all views for two of the four museums; views located in the same corner of the environment (defined by the local museum frame) are colored the same. Middle panel shows comparisons between view 1 and other views located in the same or different corner, within and across museums. To partially control for direction, view 1 is never compared to views facing the same local direction (i.e. views 1, 2, 9 and 10 are excluded). Bottom panel shows a test for location coding that completely controls for direction: in this case, the same-location comparison view faces the same direction as the different-location comparison view.

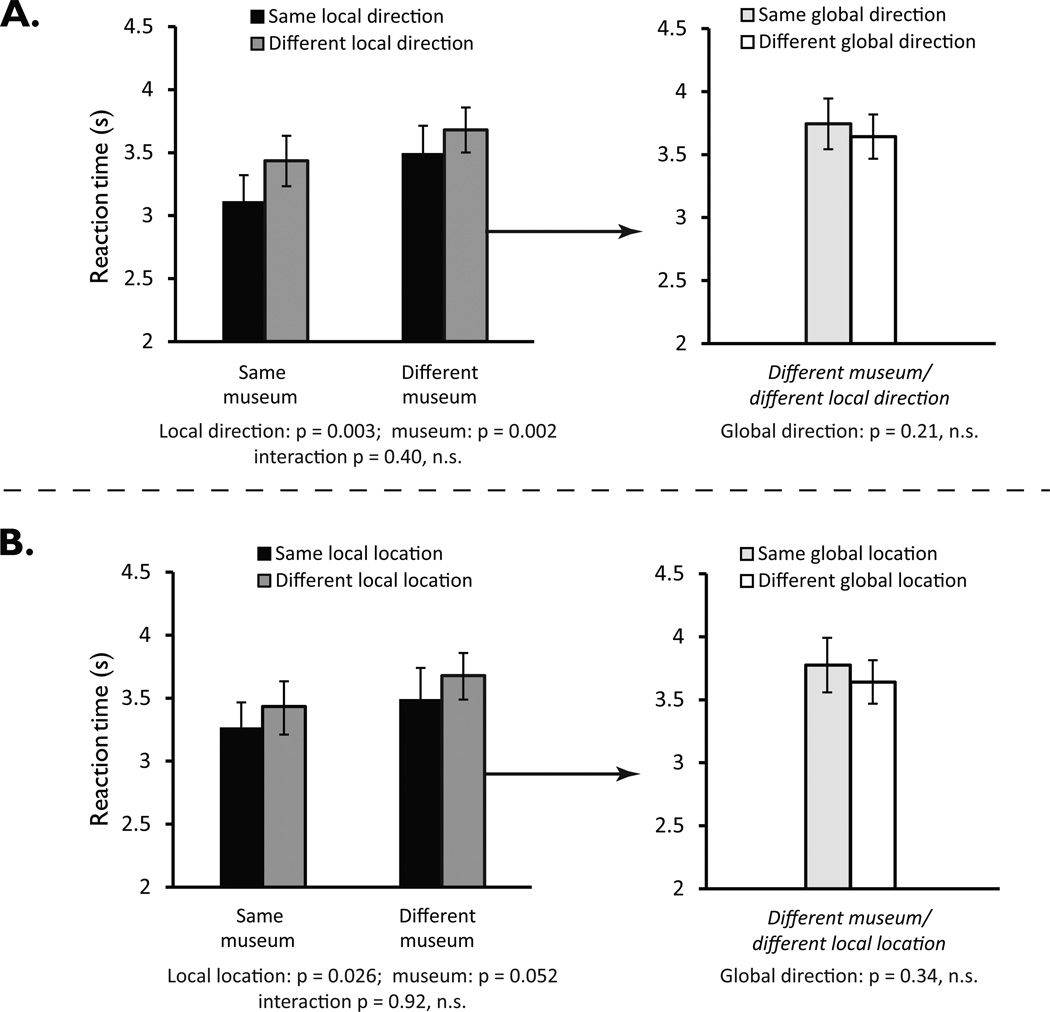

A repeated-measures ANOVA with direction (same/different as preceding trial) and museum (same/different as preceding trial) as factors revealed a main effect of direction, F(1,21) = 11.631, p = 0.003, with faster reaction times when imagined direction was repeated over successive trials (3.31 ± 0.20 s same vs. 3.56 ± 0.18 s different) (Fig. 3a, left panel). We also observed a main effect of repeating museum, F(1,21) = 11.802, p = 0.002, with faster reaction times when reference objects on successive trials were located within the same museum (3.27 ± 0.19 s same vs. 3.59 ± 0.19 s different). No significant interaction between direction and museum was observed, F(1,21) = 0.734, p = 0.401. The absence of an interaction is important because it suggests that direction was defined consistently across all four museums. That is, “local North” in one museum was equivalent to “local North” in another, even though these were different directions in the global reference frame. In contrast, if direction had been coded in a local manner that was unique to each museum, or using a global system that applied across all museums, then we would not have seen this equivalence between locally-defined directions.

Figure 3. Behavioral priming for facing direction and location in Experiment 1.

A. Priming for facing direction in the local (museum-anchored) reference frame. Left panel shows that reaction times were faster when local direction (e.g. facing the back wall) was repeated across successive trials compared to trials in which local direction was not repeated, irrespective of whether the repetition was within or across museums. Right panel shows breakdown of reaction times for different-museum/different local direction trials, based on whether global direction was repeated or not. Results indicate an absence of residual coding of direction in the global frame.

B. Priming for location defined in the local reference frame. Left panel shows that reaction times were faster when location defined locally (e.g. back right corner) was repeated across successive trials compared to trials in which location was not repeated, irrespective of whether the repetition was within or across museums. Right panel shows breakdown of reaction times for different-museum/different local direction trials, based on whether location defined globally was repeated or not. Results indicate an absence of residual coding of location in the global frame. Error bars in both panels indicate standard error of the mean.

These data indicate that participants represented their facing direction during the JRD task and updated this representation when the direction changed from trial to trial, thus leading to a reaction time cost. Moreover, this representation of facing direction generalized across different museums with similar local geometry. Further analyses indicated that reaction times were faster for imagined views facing the wall opposite the doorway (Supplementary Fig. 1), suggesting that this direction served as a “conceptual north” within each environment19 and providing additional evidence for spatial codes that vary with direction.

Behavioral evidence for location coding

When reorienting themselves on each trial of the experiment, participants established an imagined location in front of the reference object in addition to establishing an imagined direction. We hypothesized that, as with facing direction, this location code would reveal itself through priming. For this analysis, objects in the same corner of a museum were considered to be in the same location, as were objects in geometrically-equivalent corners in different museums (where geometrical equivalence was defined in terms of the local reference frame—thus, for example, the corner opposite the door on the left in one museum was considered equivalent to the corner opposite the door on the left in the other museums). To ensure that priming effects reflected repetition of location rather than repetition of direction, we only analyzed cases for which the implied direction differed across successive trials (see Fig. 2b).

A repeated-measures ANOVA, with location (same vs. different) and museum (same vs. different) as factors, found a significant main effect of location, F(1,21) = 5.695, p = 0.026, indicating that, as predicted, reaction times were faster when imagined location was repeated over successive trials (3.31 ± 0.20 s same vs. 3.56 ± 0.18 s different) (Fig. 3B, left panel). There was a marginal effect of repeating museum, F(1,21) = 4.227, p = 0.052. Critically, there was no interaction of location repetition and museum repetition, F(1,21) = 0.012, p = 0.915, indicating that priming occurs between geometrically-equivalent locations, not only when these locations are physically the same, but also when they are different locations in different museums. These results show that location, like direction, was represented in this task and was coded using a locally-anchored reference frame that generalizes across geometrically-equivalent environments.

Comparing local and global reference frames

Although our results strongly implicate coding with respect to local reference frames, we also tested whether there might also be residual coding of location and direction within the global reference frame. Because museums were oriented orthogonally or oppositely within the park, it was possible to dissociate local and global spatial quantities by examining response priming across trials from different museums.

To test for global coding of direction, we divided different-museum/different-direction trials into two groups: (i) trials for which local direction differed from the immediately-preceding trial but global direction was the same (e.g. in Fig. 2a, Views 1 and 15 face different local directions but both face global North), and (ii) trials for which local and global direction both differed. There was no difference in reaction time between these two trial types, t(21) = 1.283, p = 0.214; hence, there was no evidence of global direction priming (Fig. 3a, right panel). Indeed, the trend was in the opposite direction: reaction time was slower when global direction was repeated (3.74 ± 0.20 s) than when it changed (3.64 ± 0.18 s). To test for global coding of location, we performed an analogous analysis. That is, we divided different-museum/different-location trials into (i) trials for which the location was in a different corner of the museum as defined by the local frame but the same corner as defined by the global frame, and (ii) trials for which there was no spatial equivalence in either the local or global frame. There was no significant difference in reaction times between these two kinds of trials, t(21) = 0.976, p = 0.340, hence no evidence for location priming in the global frame (Fig. 3B, right panel).
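The dissociation between local and global direction rests on a simple rotation: a heading defined relative to a museum becomes a park-relative heading once the museum's own orientation is added. The following Python sketch illustrates that logic under stated assumptions. The museum labels and the specific offsets (0, 90, 180 and 270 degrees) are hypothetical; the text states only that museums were oriented orthogonally or oppositely within the park.

```python
# Assumed museum orientations in degrees clockwise from global North.
# These values are hypothetical; the text only states that museums were
# oriented orthogonally or oppositely to one another within the park.
MUSEUM_OFFSET_DEG = {"A": 0, "B": 90, "C": 180, "D": 270}


def global_direction(museum, local_dir_deg):
    """Convert a museum-relative heading to a park-relative heading."""
    return (MUSEUM_OFFSET_DEG[museum] + local_dir_deg) % 360


def global_priming_group(prev, curr):
    """Sort a trial pair into the two groups used to test global direction priming.

    prev and curr are (museum, local_dir_deg) tuples. Only pairs from
    different museums with different local directions are eligible; all
    other pairs return None.
    """
    (m0, d0), (m1, d1) = prev, curr
    if m0 == m1 or d0 == d1:
        return None
    same_global = global_direction(m0, d0) == global_direction(m1, d1)
    return "global same" if same_global else "global different"


# Local North in museum A and local West (270) in museum B both face global North.
print(global_priming_group(("A", 0), ("B", 270)))  # global same
```

Comparing mean reaction times between the two returned groups corresponds to the t-test reported above.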

Finally, we considered the strongest case for priming in the global reference frame by examining trials for which both location and direction, defined in this frame, were repeated across museums. These were compared to trials for which both location and direction defined locally were repeated across museums. For example, we compared reaction times when view 1 was followed by view 15 (repetition of global location and direction) to reaction times when view 1 was followed by view 9 (repetition of local location and direction). Reaction times were significantly faster for repetitions of the locally analogous view than for repetitions of the globally analogous view (t(21) = 4.242, p = 0.00036), once again suggesting that location and direction were coded in the local rather than the global reference frame.

Directional coding in RSC

We next turned to fMRI to understand the neural basis of the spatial codes revealed in the first experiment. Participants in Experiment 2 underwent a modified version of the training procedure (see Methods), and then performed the JRD task in the scanner while fMRI data were obtained. To keep the scan sessions to a manageable length, locations at test were drawn from only two of the four museums shown at training; in addition, the timing and sequence of trials was adjusted to maximize fMRI signal detection. Most other aspects of the testing procedure were identical between the two experiments (see Methods).

Participants achieved a high level of accuracy on the JRD task in Experiment 2 (M = 94.4% correct, SD = 6%). Reaction times were significantly faster than in Experiment 1, likely because fMRI participants received an additional training session on the day of the scan (2.60 s vs. 3.39 s, unequal ns t-test: t(44) = 4.599, p = 0.00008). Directional and location priming effects were not significant in Experiment 2 (direction: F(1,23) = 1.501, p = 0.233, n.s.; location: F(1,23) = 1.190, p = 0.287, n.s.), but other behavioral effects were consistent across the two experiments (see Supplementary Fig. 1). Although this lack of priming might suggest that qualitatively different representations were used to solve the task in the two experiments, a more plausible explanation is that priming effects were weakened in Experiment 2 by the use of a longer intertrial interval (see Methods). As fMRI data did not depend on obtaining differences in reaction times, we proceeded to look for neural signals corresponding to coding of direction.

We focused our initial analyses on RSC, as previous work suggests that this region might be critically involved in coding of spatial quantities such as facing direction4, 6, 16. This region was functionally defined in each subject based on a contrast of scenes > objects in independent localizer scans. To investigate whether RSC represented participants’ imagined direction, we calculated similarities between multivoxel activity patterns elicited by all 16 possible views (8 reference objects × 2 museums) across two independent data sets corresponding to the first and second half of the experiment. We then compared pattern similarity for views facing the same locally defined direction to pattern similarity for views facing different directions. As in the behavioral experiment, we only analyzed view pairs for which the implied location was different (Fig. 2a, middle panel). Thus, same-view pairs were excluded, as were view pairs that faced different directions from the same location (i.e. views in the same corner).
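A minimal sketch of this split-half comparison is given below. It assumes that pattern similarity is measured with Pearson correlation, which this section does not state explicitly, and it collapses across the museum factor for brevity. The function names, the dictionary layout, and the label tuples are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def direction_contrast(half1, half2, labels):
    """Mean same-direction minus mean different-direction similarity.

    half1, half2: dict mapping view id -> list of voxel values, one dict
        per independent half of the data.
    labels: dict mapping view id -> (museum, corner, local_dir).
    Pairs of views that share a corner in the local frame are skipped,
    which also removes same-view pairs.
    """
    same, diff = [], []
    for i in half1:
        for j in half2:
            if labels[i][1] == labels[j][1]:
                continue  # same or equivalent location: excluded
            r = pearson(half1[i], half2[j])
            (same if labels[i][2] == labels[j][2] else diff).append(r)
    return sum(same) / len(same) - sum(diff) / len(diff)
```

A positive contrast for a given subject indicates that views sharing a local direction evoke more similar patterns than views facing different directions; the reported ANOVA additionally separates within-museum from across-museum pairs.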

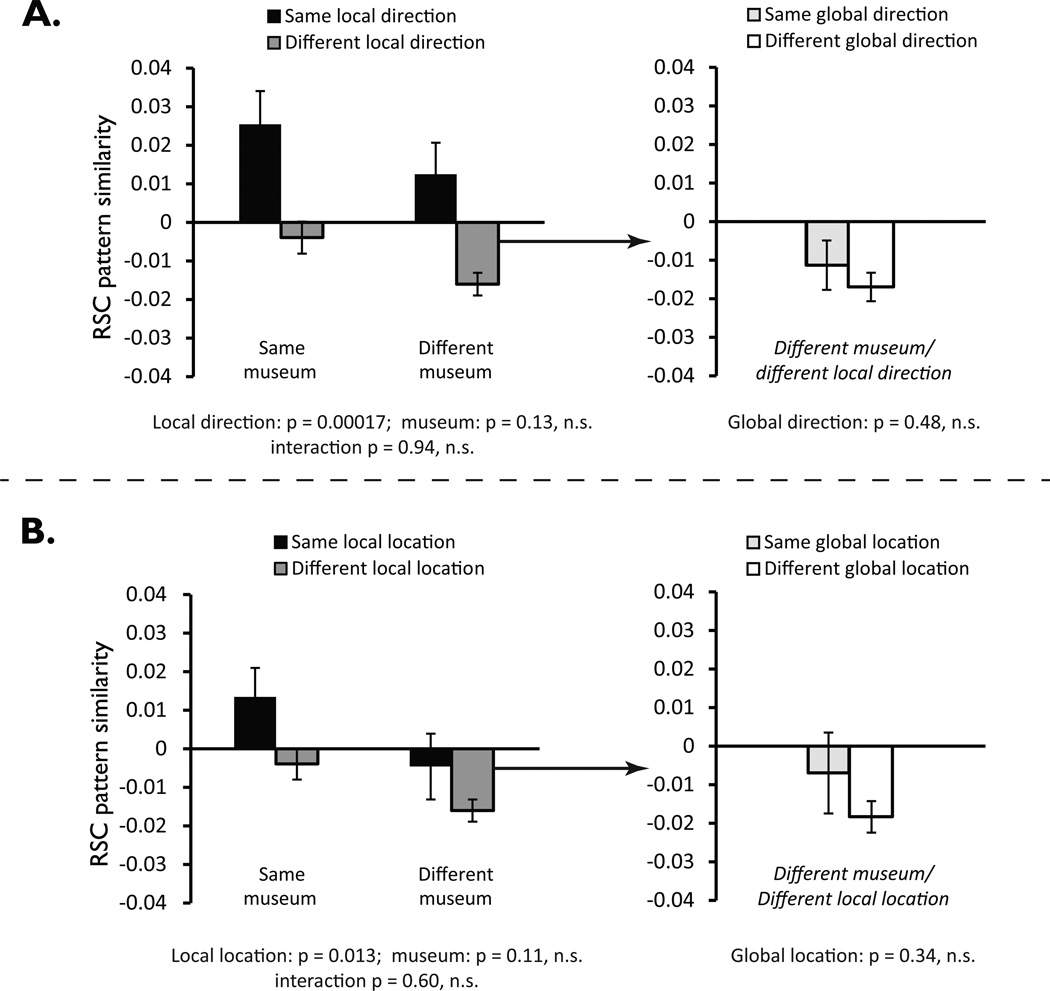

The results indicated that RSC coded imagined facing direction (Fig. 4a, left panel). A 2 × 2 repeated-measures ANOVA with factors for direction (same vs. different) and museum (same vs. different) found a significant main effect of direction, F(1,23) = 20.009, p = 0.00017. There was no effect of museum identity, F(1,23) = 2.450, p = 0.131 and no interaction between direction and museum, F(1,23) = 0.005, p = 0.942. Thus, just as in Experiment 1, direction was coded in a manner that generalized across equivalent local directions in different museums. These data suggest that RSC encodes imagined facing direction during memory retrieval and does so in a way that is referenced to the local environment and is consistent across geometrically-similar environments.

Figure 4. Coding of facing direction and location in RSC activation patterns in Experiment 2.

A. Coding of facing direction in the local (museum-referenced) frame in RSC. Left panel shows that pattern similarity between views that face the same direction in local space was greater than pattern similarity between views that face different local directions, both within and across museums. Right panel shows breakdown of pattern similarity for different-museum/different local direction trials, based on whether global direction was repeated or not. Results indicate an absence of residual coding of direction in the global frame.

B. Coding of location defined in the local reference frame in RSC. Left panel shows that pattern similarity between views in the same or geometrically-equivalent corners was greater than pattern similarity between views in different corners, both within and across museums. Right panel shows breakdown of pattern similarity for different-museum/different local direction trials, based on whether views were in the same location in the global reference frame. Results indicate an absence of residual coding of location in the global frame. Error bars in both panels indicate standard error of the mean.

The analysis above potentially confounds direction coding with location coding because implied locations were, on average, closer together for same-direction views than for different-direction views. To ensure that the results above truly reflected coding of direction, we performed an additional test of direction coding for which location was completely controlled. Specifically, we compared each view to two other views, both of which had the same implied location, one with the same implied direction as the original view, the other with a different implied direction (Fig. 2a, bottom panel). We submitted these controlled comparison pattern similarities to a 2 × 2 repeated-measures ANOVA with direction and museum as factors. Even when controlling for location in this manner, we observed greater pattern similarity for same-direction views than for different-direction views, F(1,23) = 8.279, p = 0.008, with no main effect of museum, F(1,23) = 0.850, p = 0.366, and no interaction of direction and museum, F(1,23) = 1.043, p = 0.318.

Location coding in RSC

In the above analyses, we examined direction coding while controlling for location, to ensure that the results could not be explained by location coding. However, the results of these analyses do not preclude the possibility that both location and direction might be encoded. To test this, we compared pattern similarities for views obtained in the same location (i.e. the same corner) to pattern similarities for views obtained in different locations, with geometrically-equivalent corners in different museums (as defined by the local reference frame) counting as the same location. As in Experiment 1, we restricted this analysis to pairs of views that differed in direction (Fig. 2b, middle panel). A 2 × 2 repeated-measures ANOVA with location and museum as factors found a main effect of location, F(1,23) = 7.162, p = 0.013, indicating that patterns were more similar for views in the same (or geometrically-equivalent) corner than for views in different corners (Fig. 4b, left panel). There was no main effect of museum, F(1,23) = 2.719, p = 0.113, and no interaction of location with museum, F(1,23) = 0.284, p = 0.600. Thus, location is encoded in RSC; moreover, like direction, it is encoded using a local reference frame that is anchored to each museum and generalizes across museums.

Although the comparisons above were restricted to views facing different directions, a potential confound is that views in the same corner face more similar directions than views in different corners. For example, views in different corners can differ by 180 degrees whereas views in the same corner can differ at most by 90 degrees. To control for this, we performed an additional analysis in which we only considered pattern similarities between views that differed by 90 degrees (Fig. 2b, bottom panel). A 2 × 2 ANOVA on these revealed a main effect of location, F(1,23) = 4.581, p = 0.043, and no main effect of museum, F(1,23) = 1.834, p = 0.189 and no interaction of location by museum F(1,23) = 0.768, p = 0.390. Thus, just as direction coding can be observed in RSC when location is completely controlled, so can location coding be observed when direction is completely controlled.

Comparing local and global reference frames in RSC

Our analyses suggest that RSC coded both direction and location in the local reference frame. Following the same logic as in the behavioral analyses of Experiment 1, we then tested for residual coding of global direction and global location. First, we calculated the average similarity between views in different museums that faced the same global direction but different local directions and compared this to the average similarity between views in different museums that differed in both global and local direction. This analysis revealed no difference in pattern similarity relating to global direction, t(23) = 0.724, p = 0.476 (Fig. 4A, right panel). Second, we calculated the average similarity between views in different museums that were in the same location as defined by the global reference frame, and we compared this to the average similarity between views in different museums whose locations were non-corresponding in both reference frames. We found no difference between these conditions, t(23) = 0.965, p = 0.344 (Fig. 4B, right panel), once again consistent with an absence of global coding.

Finally, we compared the pattern similarity for views that share both location and direction across museums, as defined by either the local or global reference frame. Pattern similarity was greater for locally analogous views than for the globally analogous views, t(23) = 3.495, p = 0.002. In sum, our results indicated that RSC coded spatial quantities that were referenced to the internal geometry of the museums rather than to the global geometry of the park.

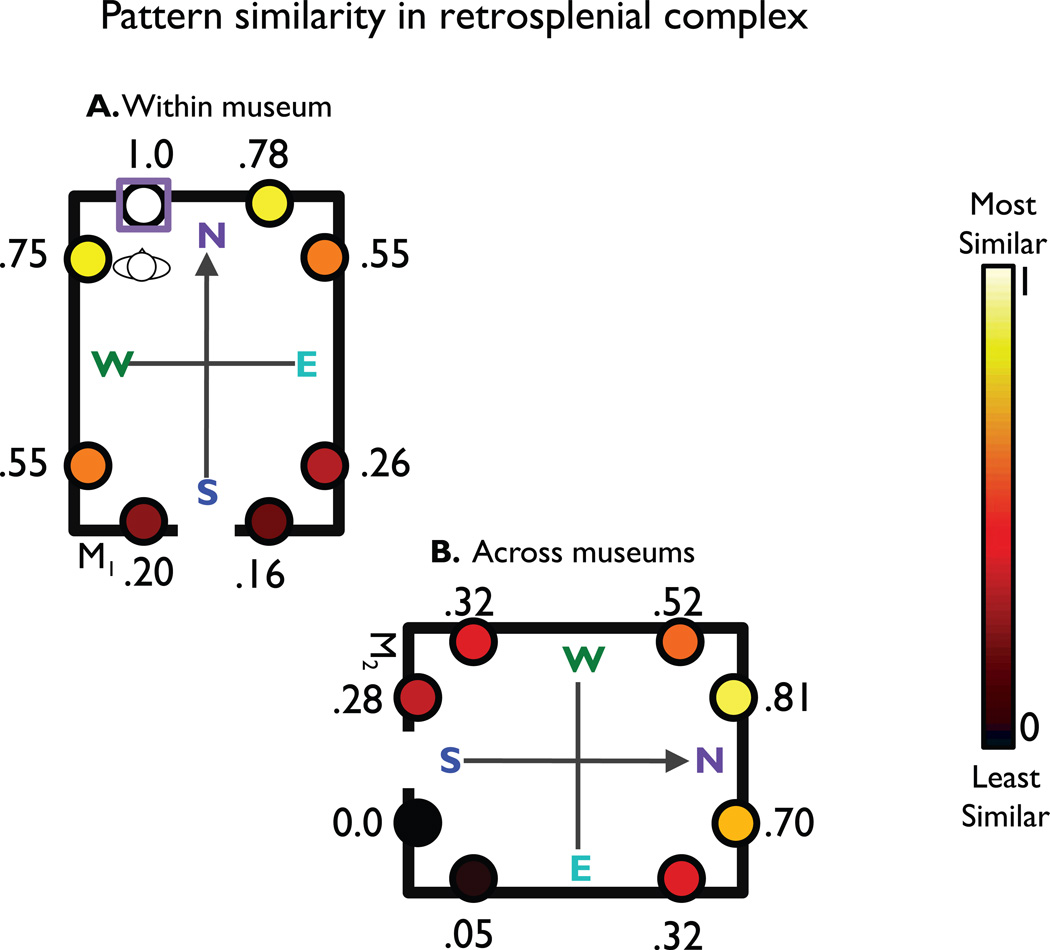

Visualization and Reconstruction of Spatial Similarities

To better understand the nature of the spatial representations revealed above and to qualitatively assess the full pattern of spatial coding in RSC, we visualized similarities between views by overlaying them on a map of the environment (Fig. 5). To create this map, we first created a pattern similarity map for each of the 16 views that indicated the similarity between that view (starting view) and the 15 other views (comparison views). We then combined these maps by aligning the starting views to one another using rotations and reflections that maintained the relative spatial relationships between the starting and comparison views. We then averaged the aligned maps to produce a composite similarity map.

Figure 5.

Visualization of pattern similarities in RSC. This diagram summarizes all 256 pairwise relationships between views in terms of 16 possible spatial relationships. To create this diagram, we first created a pattern similarity map for each of the 16 views by calculating the similarity between that view (starting view) and the 15 other views (comparison views). These maps were then averaged over spatially equivalent starting views while maintaining the spatial relationships between the starter view and the comparison views. For example, the value for the view directly to the right of the starter view (starter view indicated by the square) represents the average pattern similarity between all views that face the same local direction and are located within the same museum. Pattern similarities were converted to a range from 0 to 1 and colored according to this value. The highest level of similarity was between patterns corresponding to the same view, as indicated by the value of 1.0 for the starter view. Pattern similarities are also high for views facing the same direction and for views located in the same corner, and there is also an effect of distance between views.

Inspection of the similarity map confirms and extends the previous findings. The starting view was maximally similar to itself (scaled to 1.0), indicating consistency of the response pattern across the first and second half of the experiment. Within a museum, the most similar non-identical views were the view facing the same direction in the adjacent location and the view facing the orthogonal direction in the same location. Across museums, the most similar views were the locally analogous view and the view facing the same local direction; there was also some similarity for the view facing the orthogonal direction in the equivalent location. Finally, comparison views in adjacent corners to the starter view were more similar than views in the opposite corner, indicating the possibility that RSC may represent location in a continuous gradient corresponding to distance or may represent the discrete boundaries shared among views.

To evaluate these last two possibilities, we analyzed similarities between views in terms of number of shared boundaries (same corner: 2 shared boundaries; adjacent corner: 1 shared boundary; opposite corner: no shared boundaries), excluding view pairs that faced the same direction. Note that because the number of shared boundaries is highly correlated with Euclidean distance, these two factors are virtually indistinguishable in our current design. A 3 × 2 repeated-measures ANOVA with factors for shared boundaries and museum (same vs. different) found a significant main effect of shared boundaries, F(2,46) = 6.675, p = 0.003, a marginal main effect of museum, F(1,23) = 3.200, p = 0.087, and no interaction, F(2,46) = 0.316, p = 0.730. The main effect of shared boundaries was explained by a significant linear contrast, F(1,23) = 8.615, p = 0.007, reflecting the fact that pattern similarity increased steadily with the number of shared boundaries and/or the proximity of the views. This sensitivity to boundaries raised the question of whether RSC represents the structure of the environment near the imagined view rather than the heading and location of the imagined view relative to that structure. Whereas location coding would be predicted under both accounts, direction coding would only be predicted under the latter. To examine this, we compared the main effects of direction and location from the earlier analyses. A paired t-test confirmed reliably stronger coding of facing direction than location, t(23) = 2.652, p = 0.014, suggesting that RSC is more concerned with situating imagined views relative to local geometric structure than with representing that structure itself.
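The shared-boundary coding, and its confound with distance, can be made concrete with a short Python sketch. It assumes, purely for illustration, that the four corners of a museum sit at the vertices of a unit square; the corner names and coordinates are hypothetical and do not reflect the actual museum dimensions.

```python
from math import hypot

# Hypothetical corner coordinates on a unit square. The x value identifies
# the left/right wall and the y value the front/back wall.
CORNERS = {
    "front-left": (0, 0),
    "front-right": (1, 0),
    "back-left": (0, 1),
    "back-right": (1, 1),
}


def shared_boundaries(a, b):
    """Number of walls two corners have in common (2, 1, or 0)."""
    (ax, ay), (bx, by) = CORNERS[a], CORNERS[b]
    return int(ax == bx) + int(ay == by)


def corner_distance(a, b):
    """Euclidean distance between two corners."""
    (ax, ay), (bx, by) = CORNERS[a], CORNERS[b]
    return hypot(ax - bx, ay - by)


for other in ("front-left", "front-right", "back-right"):
    print(other, shared_boundaries("front-left", other),
          round(corner_distance("front-left", other), 3))
```

Under this layout, every decrease in shared boundaries is accompanied by an increase in distance, which is why the two accounts cannot be separated in the present design.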

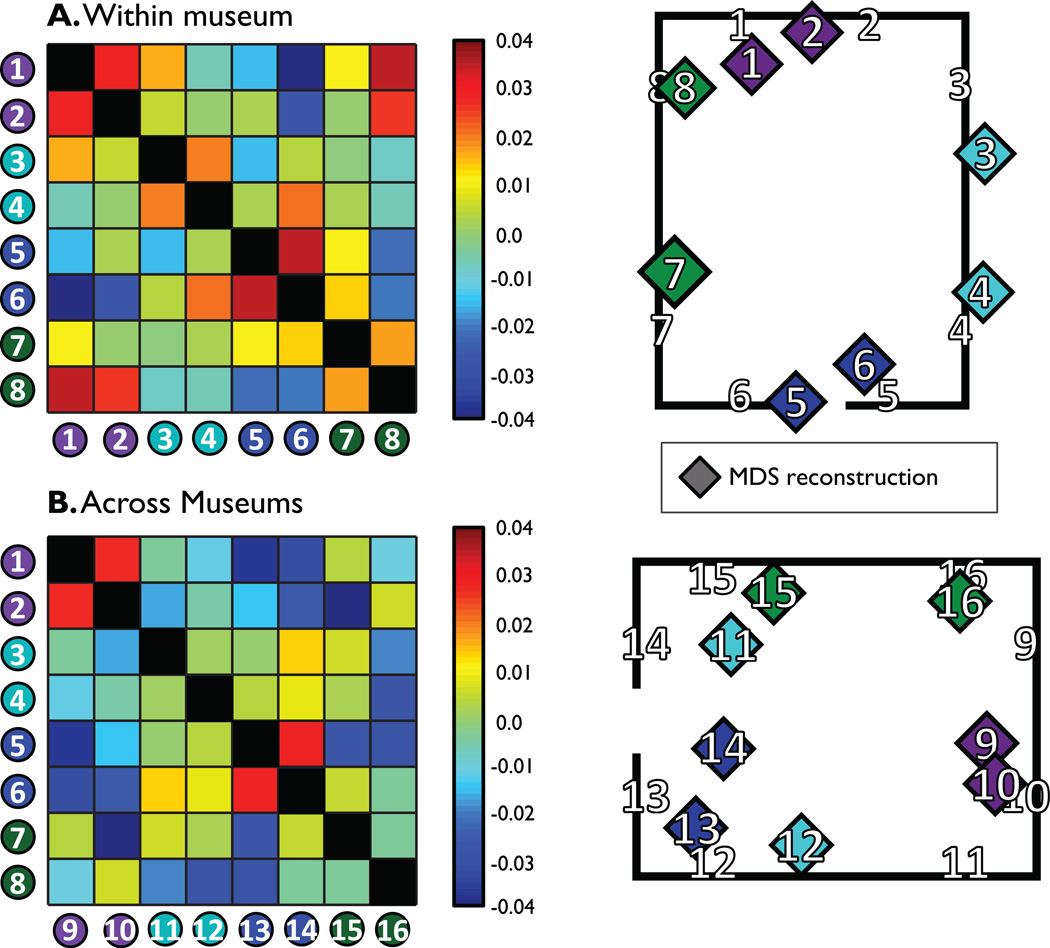

These results provide another demonstration that activity patterns in RSC contain rich information about the implied headings and locations of imagined views that could be used to link views to long-term spatial knowledge, and thus to localize and orient oneself within a mental map of local space. To further test this idea, we examined whether pattern similarities in RSC were sufficient to reconstruct the relative positions of views within a museum. We calculated each subject’s correlation matrix for the eight different views that occurred within the same museum and then averaged them to produce a grand correlation matrix (Fig. 6A, left panel). This grand correlation matrix was submitted to multidimensional scaling to produce a two-dimensional map of the views, which was then aligned with a Procrustes analysis to a schematic of our museums (Fig. 6A, right panel). Patterns of similarity in RSC created a reconstruction of the views within a museum that was significantly less distorted than chance (D: 16%, p = 0.00008). To assess the regional specificity of this finding, we repeated this procedure with the other functionally defined regions of interest (ROIs) (parahippocampal place area, occipital place area, early visual cortex); none were able to perform reliable spatial reconstruction (all Ds > 58%, ps > 0.1).
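The reconstruction procedure (multidimensional scaling followed by Procrustes alignment) can be sketched with NumPy as follows. This is a sketch under stated assumptions rather than the authors' implementation: it uses classical (Torgerson) MDS, which may differ from the MDS variant actually used, and it defines distortion as the standard normalized Procrustes residual. The function names are hypothetical, and converting a correlation matrix to dissimilarities (for example, 1 minus the correlation) is likewise an assumption.

```python
import numpy as np


def classical_mds(dissim, n_dims=2):
    """Embed items in n_dims dimensions from a square dissimilarity matrix."""
    d2 = np.asarray(dissim, dtype=float) ** 2
    n = d2.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ d2 @ centering  # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(gram)
    top = np.argsort(eigvals)[::-1][:n_dims]  # largest eigenvalues first
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0, None))


def procrustes_distortion(target, coords):
    """Residual after optimally translating, scaling, and rotating or
    reflecting coords onto target. 0 means a perfect fit, 1 means no fit."""
    x = np.asarray(target, dtype=float)
    y = np.asarray(coords, dtype=float)
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    x = x / np.linalg.norm(x)
    y = y / np.linalg.norm(y)
    singular_values = np.linalg.svd(x.T @ y, compute_uv=False)
    return 1.0 - singular_values.sum() ** 2


# Toy check: distances computed from known 2-D positions are recovered
# up to rotation and reflection, so the distortion is numerically zero.
true_xy = np.array([[0, 0], [2, 0], [2, 1], [0, 1], [1, 3]], dtype=float)
dist = np.linalg.norm(true_xy[:, None, :] - true_xy[None, :, :], axis=-1)
print(procrustes_distortion(true_xy, classical_mds(dist)) < 1e-9)  # True
```

With real data, the dissimilarity matrix would come from the grand correlation matrix and the target from the museum schematic; assessing significance against chance requires a separate procedure that is not sketched here.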

Figure 6. Multivoxel patterns in RSC contain sufficient information about the spatial relations between views to reconstruct the spatial organization of the environment.

A. Within museum view reconstruction. Left shows the average confusion matrix between views located within the same museum. Right shows reconstruction of view location from multidimensional scaling and Procrustes alignment. The estimated locations (colored diamonds) are close to the real locations (numbers in black outline).

B. Across museum view reconstruction. Left shows the average confusion matrix between views located in different museums. Right shows reconstruction of view location from multidimensional scaling and Procrustes alignment. Although somewhat noisier than the within-museum reconstruction, locations were more accurate than would be expected by chance.

We then performed the same analysis on the across-museum view similarities (Fig. 6B, left & right panels). Again, reconstruction was significantly less distorted than chance (D: 47%, p = 0.015), although it tended to be less accurate than the reconstruction based on within-museum view similarities (difference in D: 31%, p = 0.09). Successful spatial reconstruction across museums suggests that RSC’s representation of views in one museum can accurately predict the relations among views in another museum. This generalization is consistent with the idea that RSC represents views relative to the frame of the local environment and can generalize these relationships to different environments with similar geometry.

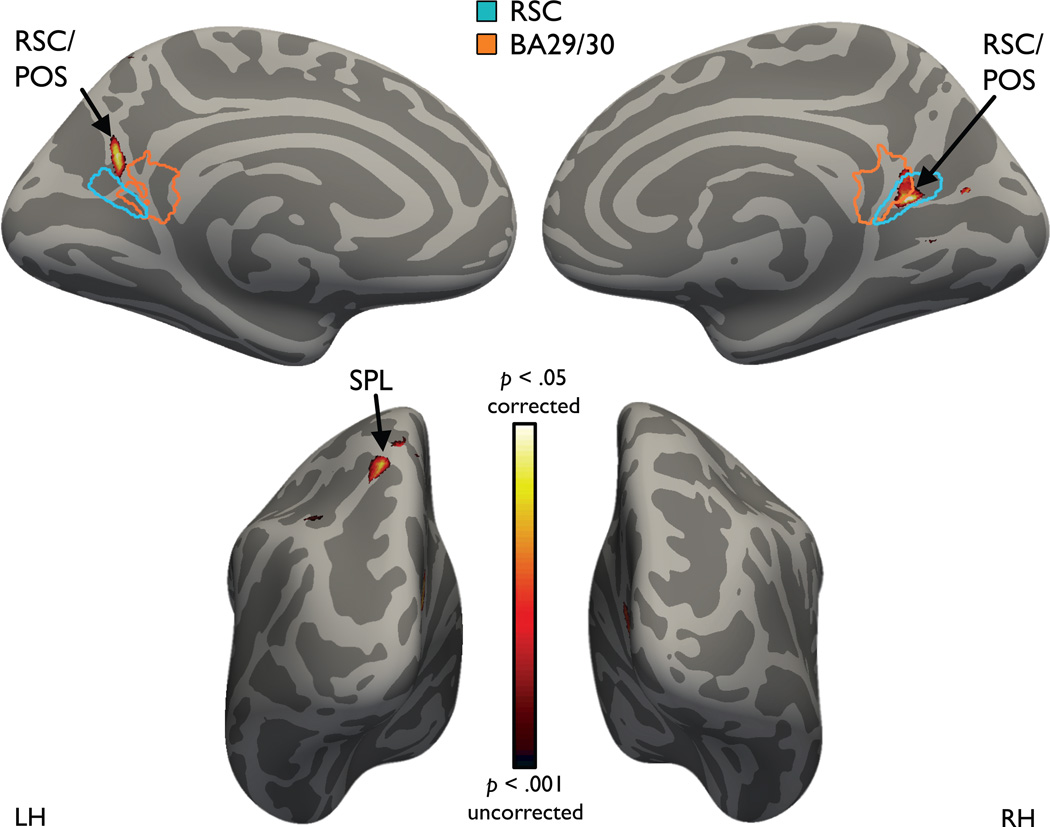

Whole-Brain Analyses

Having discovered RSC representations that coded for direction and location within the local space in our region of interest analysis, we then used a whole-brain searchlight analysis21 to discover other regions that might code these quantities (Fig. 7). Consistent with our ROI analysis, local direction could be decoded in bilateral RSC, at the juncture of the calcarine sulcus and parietal occipital sulcus and just posterior to retrosplenial cortex proper (Montreal Neurological Institute (MNI) coordinates: right 14 −58 11; left −5 −65 20). Beyond this region, we also observed significant local direction decoding in the left superior parietal lobule (SPL) (MNI coordinates: −19 −67 54). No other brain regions exhibited local direction coding at either corrected significance levels or the more liberal threshold of p < 0.001 uncorrected. An analogous searchlight analysis for local location coding found no brain regions at corrected significance levels, although coding in bilateral RSC, left SPL, and other regions of posterior parietal cortex was observed at uncorrected significance levels. We did not observe evidence for coding of global direction in any region of the brain even at relatively liberal thresholds (p < 0.005 uncorrected). Detailed analyses of the response in the left SPL are presented in Supplementary Fig. 2, and results from other ROIs are presented in Supplementary Figs. 3 and 4.

Figure 7.

Whole-brain searchlight analysis of multivoxel coding of local direction. Voxels in yellow are significant (p < 0.05) after correcting for multiple comparisons across the entire brain. Consistent with the results of the ROI analyses, imagined facing direction could be decoded in right RSC, at the juncture of the calcarine sulcus and parietal occipital sulcus and just posterior to retrosplenial cortex proper (Brodmann Area (BA) 29/30), and in a slightly more posterior locus in the left hemisphere. Direction coding was also observed in the left superior parietal lobule (SPL). The outline of RSC was created by transforming individual subjects’ ROIs to standard space and computing a group t-statistic thresholded at p < 0.001. The outline of BA 29/30 was based on templates provided in MRIcron (http://www.mricro.com/mricron/install.html).

Discussion

Our results indicate that the retrosplenial complex (RSC), a region in human medial parietal lobe, represents imagined facing direction and imagined location during spatial memory recall; moreover, it does so using a reference frame that is anchored to local environmental features and generalizes across local environments with similar geometric structures. These findings suggest that RSC is centrally involved in a critical component of spatial navigation: establishing one's position and orientation relative to the fixed elements of the external world.

Location and direction codes revealed themselves in two ways. First, through behavioral priming: reaction times on the judgment of relative direction (JRD) task were speeded when imagined facing direction or imagined location were repeated across successive experimental trials (Experiment 1). Second, through multi-voxel pattern analysis: distributed fMRI activity patterns in RSC during the JRD task were more similar for imagined views corresponding to the same facing direction or location than for imagined views corresponding to different facing directions or locations (Experiment 2). The fact that direction and location were defined relative to local environmental features was established by examining priming and decoding across views in different local environments (i.e. museums) that were geometrically similar but oriented orthogonally to each other. Views facing the same local direction (as defined by local geometry) in different museums were treated as similar, as assessed by both priming and MVPA decoding, whereas views facing the same global direction were not. Moreover, locations were treated as equivalent across museums if they were in the equivalent position within the room, but not if they were in the equivalent position as defined by the global reference frame. Thus, both behavioral priming and fMRI signals revealed spatial codes that were anchored to the local environmental features and generalized across geometrically similar local environments, rather than codes that were idiosyncratic to each local environment, or codes that were anchored to a global reference frame. To our knowledge, this is the first evidence for a heading representation that uses the same alignment principles across different environments, such that local north (i.e. “facing the back wall”) in one local environment is equivalent to local north in another. 
A geometry-dependent but environment-independent representation of this type might be critical for translating viewpoint-dependent scene information ("I am in a rectangular room, facing the short wall") into allocentric spatial codes ("I am facing to the North")—and, conversely, for re-creating viewpoint-dependent scenes from allocentric memory traces17.

The finding that RSC represents imagined direction and imagined location in a local spatial reference frame during spatial recall is anticipated by previous neuroimaging and neuropsychological results. This region has been previously implicated in spatial navigation13, 22 and spatial memory14, 23. It responds strongly during passive viewing of environmental scenes in fMRI studies, especially when the scenes depict familiar places24. Moreover, its response is increased when subjects retrieve spatial information25, 26, perform JRD tasks from memory27, or move through an environment for which they have obtained survey knowledge28. fMRI adaptation is observed in RSC when successively-presented scenes face the same direction16, and we previously reported that multivoxel patterns elicited in RSC when viewing familiar scenes contain information about location and direction that is independent of the specific view4. Damage to RSC produces a profound form of topographical disorientation, in which patients can accurately recognize landmarks but cannot use these landmarks to orient themselves in space15. The current results extend this previous work by showing that RSC codes directions and locations while subjects perform a behavioral task that elicits direction-dependent and location-dependent effects. In addition, we demonstrate for the first time that these spatial codes are anchored to environmental features in a consistent manner that applies across different local environments, a crucial element of a spatial referencing system that was not demonstrated by previous studies. The reliability of these neural codes was evidenced by the fact that we were able to recreate accurate maps of the spatial relationships between views based on activity patterns in RSC. To our knowledge, this represents the first case in which the spatial structure of an environment could be reconstructed purely from the neural correlates of human imagination.

Our findings also dovetail with previous behavioral research on spatial memory recall23, 29. When subjects perform a JRD task, they typically exhibit orientation-dependent (i.e. direction-dependent) performance. In cases where subjects have experienced only one view of an environment, accuracy is greater and reaction times are faster for imagined views that face the same direction as the learned view, irrespective of the imagined location19. In cases where subjects have learned multiple views of an environment, or have extensive experience over time, performance is typically best for a single imagined facing direction that acts as a “conceptual north,” and it is better for facing directions that are aligned or orthogonal to this primary reference direction than for directions that are misaligned19, 20. The factors that influence the alignment of the reference axes are the subject of much investigation, but previous work indicates that spatial geometry can be a dominant influence under many circumstances. Indeed, geometry has long been known to guide reorientation, often to the detriment of other cues30, 31, and geometry is a strong controller of HD cells when animals cannot rely on a pre-existing sense of direction32. Consistent with these previous results, we observed orientation-dependent priming and orientation-dependent activity patterns when orientation was defined relative to local geometry. Moreover, we also observed location-dependent priming and location-dependent activity patterns when location was defined relative to the same spatial reference frame. In sum, our results build on the classic findings from the behavioral literature by showing that priming, like overall performance, is orientation dependent, and by demonstrating that RSC encodes a spatial reference frame that may underlie this orientation-dependent behavior.

An open question is how the spatial codes in RSC are implemented neurally. HD cells have been previously identified in the retrosplenial cortex of the rodent33, 34. Thus, one possibility is that the HD code at encoding is re-instantiated at retrieval. However, there are reasons to doubt that the firing of HD cells, in and of themselves, can explain our current results. To our knowledge, there is little evidence that HD cells code direction in a manner that is consistent across different environments with similar geometries, as observed here in RSC. Indeed, the fact that HD cells maintain stable preferred directions when traveling across distinct environments11, 35, 36 suggests that these cells do not directly represent local geometric relationships. Rather, they maintain a consistent sense of direction through path integration37, only referring back to the environment periodically to recalibrate when error has accumulated10. This means that a different mechanism that codes heading relative to local features is necessary to provide the calibration. Moreover, we found that spatial codes in RSC do not solely represent facing direction but also include information about location, which is not encoded by HD cells. This is consistent with previous work indicating that cells in the retrosplenial/medial parietal region encode a variety of spatial quantities, not just heading direction33, 38.

One possibility is that the responses we observe in RSC reflect the firing of neurons that encode the egocentric bearings to specific landmarks. In principle, any landmarks could be represented by such reference vector cells, but to explain our result at least some of the landmarks must correspond reliably to Euclidean directions and have a clear analogy across the different museums. This suggests that the primary landmarks in the current environment are the walls, although it is possible that other features such as the doorway might be used, whose bearings would only approximately correspond to Euclidean direction. To account for location sensitivity, we must further posit that reference vector cells preferentially encode directions to nearby rather than distant landmarks during the JRD task, which would lead to location decoding because the identities of the nearest walls (as well as the door) would differ as a function of location. Under this account, both location and direction should be decodable in RSC, with the relative balance of these two kinds of information determined by the overlap in the identities of the landmarks used to define direction at each location4. Such a landmark referencing system could be used to anchor the HD system and thus provide a sense of direction. Alternatively, the results might be explained by the firing of orientation-modulated boundary vector cells (BVCs)39–41, which would fire when facing a given direction at a certain distance from specific walls. Finally, it is possible that RSC represents heading and location using a mixed ensemble code that is not easily interpretable at the single unit level42.

Whatever the underlying neural code, it is clear that spatial representations in RSC were tied to local environmental features in the current experiment. That said, we must caveat the use of the word local. Reference objects and target objects were always located in the same museum. If subjects had been asked to imagine themselves within a museum and report the bearing to a target outside, they might have accessed a global rather than a local spatial frame. Previous behavioral work with non-nested environments has indicated that reference frames can be observed across many different scales, from tabletops, to rooms19, to campuses43. Whether RSC represents spatial reference frames at all of these scales is an important question for future investigation—for tasks that involve memory recall, the “local” scene might potentially expand to include all landmarks that can be encompassed by the imagination, not just landmarks that are actually visible from a particular point. An additional point is that subjects learned all the museums simultaneously, which might have prompted them to encode a single spatial frame or spatial schema44 that was applicable to all of them, rather than separate spatial frames that would apply uniquely to each. We observed some anecdotal evidence in support of this idea during the pre-scan encoding phase: when subjects were asked to find an object, they sometimes traveled to the right location in the wrong museum. This suggests that they knew where the object was within the spatial frame, but did not remember the museum to which the frame should be applied. One possibility is that the absence of proprioceptive and vestibular cues during virtual navigation may have made it difficult for RSC to use path integration to distinguish between museums. 
However, similar results were observed in a recent rodent neurophysiology study which found that hippocampal place cells fired in analogous positions in geometrically identical environments that were learned in a single training session, even though these proprioceptive and vestibular cues were present45. Nevertheless, a different training regime, in which subjects learned one museum thoroughly before learning another, might have resulted in less generalizability of responses between local environments; furthermore, it is possible that the local environments might dissociate after further experience46. Finally, we should note that the present results leave it unclear whether the critical environmental factor for defining heading was the shape of the museum as defined by the walls, the shaping of egocentric experience by the single door, or the principal axis defined by both factors.

Beyond its role in providing a system of reference for defining heading and location, our results also illuminate the contribution of RSC to memory retrieval more broadly. Much recent interest has focused on the striking overlap among brain regions involved in the retrieval of autobiographical memories, the imagination of novel events, and navigation14, 47, 48. These regions, including RSC, are believed to form a network that mediates both prospective thought and episodic memory49. Our results identify a specific role for RSC in the construction and reconstruction of episodes: by situating our imagined position and heading relative to stable spatial reference elements, RSC allows us to mentally place ourselves within an imaginary world built on the foundations of our remembered experience. This conclusion is consistent with previous work indicating that RSC is strongly activated when imagining changes in one’s viewpoint50 or when retrieving spatial relationships following first-person navigation27. Moreover, the fact that RSC codes a spatial schema that can apply across different local environments suggests the intriguing possibility that it might also be involved in coding memory schemas that are not explicitly spatial.

In summary, our experiments demonstrate a neural locus for the local spatial reference frames used for mental reorientation during spatial recall. RSC represents the locations and directions of imagined views with respect to these local reference frames, and this spatial code is rich enough to allow recreation of accurate maps of the local space. Like a compass rose, RSC anchors our sense of direction to the world, thus allowing us to stay oriented while we navigate and to reconstruct from memory the experience of being in a particular place.

Methods

Participants

Forty-six healthy subjects (23 female; mean age, 21.2 ± 1.7 years) with normal or corrected-to-normal vision were recruited from the University of Pennsylvania community. Twenty-two participated in the behavioral study (Experiment 1) and twenty-four were scanned with fMRI (Experiment 2). Sample size was determined based on a pilot behavioral experiment. Subjects provided written informed consent in compliance with procedures approved by the University of Pennsylvania Institutional Review Board. Six additional participants from Experiment 2 were excluded prior to scanning for scoring less than 70% on the behavioral practice trials after the first day of training (see below). Three additional subjects were scanned in Experiment 2 but were excluded before analysis: the first for technical difficulties in fMRI acquisition, the second because she requested to terminate the scanning session early, and the third for sleeping.

MRI acquisition

Scanning was performed at the Hospital of the University of Pennsylvania using a 3T Siemens Trio scanner equipped with a 32-channel head coil. High-resolution T1-weighted images for anatomical localization were acquired using a three-dimensional magnetization-prepared rapid acquisition gradient echo pulse sequence [repetition time (TR), 1620 ms; echo time (TE), 3.09 ms; inversion time, 950 ms; voxel size, 1 × 1 × 1 mm; matrix size, 192 × 256 × 160]. T2*-weighted images sensitive to blood oxygenation level-dependent contrasts were acquired using a gradient echo echoplanar pulse sequence (TR, 3000 ms; TE, 30 ms; flip angle, 90°; voxel size, 3 × 3 × 3 mm; field of view, 192 mm; matrix size, 64 × 64 × 44).

Stimuli and procedure

Virtual Environment

A virtual environment consisting of a park containing four large rectangular buildings, or “museums” (Fig. 1A), was constructed with the Source SDK Hammer Editor (www.valvesoftware.com, Valve Software, Bellevue, WA). Distal orientational cues surrounded the park, such as a mountain range and the sun at a high azimuth to the “North”, apartment buildings and refineries to the East and West, and two high rise apartment buildings to the South. The museums had identical interior geometry, with an aspect ratio of 0.84 and an axis of elongation running parallel to the direction of entry, but they were distinguishable interiorly and exteriorly based on textures and architectural features, such as facades, columns, and plinths (Fig. 1B). Large windows were set in the back wall of three of the four museums. Museums were placed in the environment so that each one was entered from a unique direction. This had the effect of dissociating direction within a museum as defined by the axis of entry and internal geometry (e.g. facing the back wall, or local North) from direction in the exterior park (e.g. facing the mountains, or global North).

Each museum contained eight distinct, nameable objects that were arranged in alcoves along the walls (Fig. 1B). Because of the shape of the alcoves, objects could only be viewed head-on from a specific direction. In Experiment 2, we randomized the assignment of objects to alcoves in the environment across participants, to ensure that reliable decoding of direction and location could not be attributed to decoding of object identity.

Training session

All subjects underwent an initial training session in which they learned the layout of the virtual environment and the location of objects within it. This session was divided into a free exploration period (15 minutes) and a guided learning period (approximately 45 minutes). Subjects in Experiment 2 performed an additional training session two days later, immediately prior to the fMRI scan, consisting of the guided learning period only.

In the free exploration period, subjects were placed at the entrance to the park (‘start’, Fig. 1A). After receiving instruction on how to navigate using the arrow keys, they were allowed to explore the environment at will. The only guidance provided by the experimenter was that they should make sure that they visited each of the buildings during this phase, a criterion met by every subject. The environment was rendered and displayed on a laptop running the commercial game software Portal (www.valvesoftware.com, Valve Software, Bellevue, WA).

The guided learning phase of the training session was divided into 8 self-paced blocks. At the beginning of each, the name of an object was presented on the screen and the subject was asked to navigate from the entrance to the park to the object as directly as possible. Once the subject had navigated to the correct location of the object, the name of another target object was presented on the screen and they were asked to navigate directly to it. After locating eight objects (two from each museum) the subject was teleported back to the entrance of the park and the next block began. All of the objects remained visible throughout the task to afford subjects additional opportunities to learn their locations while simultaneously challenging them to apply this knowledge. Targets were drawn randomly, with the constraint that no target object was repeated until all objects had been found once. Each object was searched for twice for a total of 64 trials.
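The ordering constraints described above (eight blocks of eight targets, two targets from each museum per block, and no object repeated until all 32 have been found) can be illustrated with a short sketch. This is only one way to satisfy those constraints, not the procedure actually used in the experiment; the function name, its parameters, and the object labels are hypothetical.

```python
import random

def guided_learning_blocks(objects_by_museum, n_passes=2, per_block=2, seed=None):
    """Return a list of blocks; each block is a list of target objects.

    Assumption: every pass through the full object set is split into
    blocks that take `per_block` objects from each museum.
    """
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_passes):
        # Shuffle each museum's objects independently for this pass.
        shuffled = {m: rng.sample(objs, len(objs))
                    for m, objs in objects_by_museum.items()}
        n_blocks = len(next(iter(shuffled.values()))) // per_block
        for b in range(n_blocks):
            # Take the next `per_block` objects from every museum ...
            block = [obj for objs in shuffled.values()
                     for obj in objs[b * per_block:(b + 1) * per_block]]
            # ... and randomize the order in which they are searched for.
            rng.shuffle(block)
            blocks.append(block)
    return blocks
```

With four museums of eight objects each, this yields 8 blocks and 64 trials, with every object appearing once in blocks 1 to 4 and once in blocks 5 to 8.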

Testing session

Immediately following the initial training session, subjects in Experiment 1 completed 384 trials of a judgment of relative direction (JRD) task. On each trial, subjects used the keyboard to report whether a target object from the training environment would be located to their left or right if they were standing in front of and facing a reference object. The names of the reference object and target object were presented visually and simultaneously in gray letters on two different lines at the center of a black screen (e.g. “Facing the Bicycle”, “Lamp,” Fig. 1C). Subjects were instructed to imagine themselves looking directly at the reference object while making their judgment; this required them to imagine themselves in a specific location while facing a specific direction (Fig. 2). They were told to report left and right broadly, including anything that would be on that side of their body and not just directly to the left (e.g. in Fig. 2A, when facing View 1, View 7 would be to the left). Trials ended as soon as the participant responded and the next trial began after a 750 ms inter-trial interval. The target object was always within the same museum as the reference object. No feedback was given. One 60 s break was given halfway through the experiment to mitigate mental fatigue.

The 384 trials in Experiment 1 were ordered randomly but with the constraint that successive trials faced the same direction 50% of the time, and that direction changed at least once every six trials. The probability of repeating museum or corner across successive trials was not manipulated (25% for each based on chance). These transition probabilities were adopted to ensure we would have sufficient trials to detect priming of direction within a given museum. Because reaction times often have an asymmetric distribution, reaction times that were more than 2.5 standard deviations above the mean were removed (5% of responses in Exp 1, and 1% of responses in Exp 2), and median reaction times were calculated for each condition.
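The reaction-time trimming rule can be sketched as follows. The text does not state whether the mean and standard deviation were computed per subject, per condition, or over the pooled data, so this hypothetical helper simply applies the rule to whatever list of reaction times it is given, and it assumes the sample standard deviation.

```python
from statistics import mean, median, stdev

def trimmed_median_rt(rts, n_sd=2.5):
    """Remove slow outliers, then summarize with the median.

    Returns (median of the retained RTs, number of RTs removed).
    Only the upper tail is trimmed, following the description in the text.
    """
    cutoff = mean(rts) + n_sd * stdev(rts)  # sample SD (an assumption)
    kept = [rt for rt in rts if rt <= cutoff]
    return median(kept), len(rts) - len(kept)
```

Trimming only the upper tail and then taking the median are both ways of limiting the influence of the long right tail that reaction-time distributions typically show.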

Subjects in Experiment 2 completed only 20 trials of the JRD task after the initial training session, after which they received feedback on their performance. They then returned 2 days later for a second training session (described above), after which they completed 544 trials of the JRD task in the fMRI scanner. The experimental paradigm was the same as in Experiment 1, except that the timing was modified to fit the requirements of fMRI acquisition: rather than disappearing after the subject’s response as in Experiment 1, word cues remained on the screen for 5000 ms followed by a 1000 ms gap before the start of the next trial. Note that this meant that there was a substantially longer interval between a participant’s response and the subsequent trial, which might have reduced behavioral priming effects. In addition, a grid of jittered grey lines was superimposed behind the word cues to limit differences in visual extent. Testing was performed in 8 scan runs, each of which lasted 8 min 24 s and consisted of 68 JRD trials and 5 null trials during which the subject viewed a fixation cross and made no response for 12 seconds. To keep the scan sessions to a manageable length, only 16 of the 32 reference objects shown at training were used, drawn from 2 of the 4 museums, which were chosen to be adjacent and thus oriented orthogonally to one another (e.g. Fig. 2). The four possible museum pairs meeting this requirement (1–2; 2–3; 3–4; 4–1) were randomly counterbalanced across subjects.

JRD trials were presented within a continuous carryover sequence51, which ordered the 16 imagined views (corresponding to the 16 reference objects) in a serially balanced design for which each view preceded and followed every other view, including itself, exactly once. Two unique carryover sequences were generated for each subject, with an entire sequence shown over 4 scan runs. Each view was tested 17 times within each carryover sequence.
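The defining property of a serially balanced sequence, that every condition is followed by every condition (including itself) exactly once, can be illustrated with a small sketch. It builds such an ordering as an Eulerian circuit over the complete directed graph on the conditions, using Hierholzer's algorithm. This is not the generator cited above, and it does not attempt to reproduce the exact trial counts or the placement of null trials in the experiment; the function names are hypothetical.

```python
import random
from collections import Counter

def carryover_sequence(n_conditions, seed=None):
    """Return a sequence of length n**2 + 1 in which each ordered pair
    of conditions (a followed by b) occurs exactly once."""
    rng = random.Random(seed)
    # Unused outgoing edges per condition; edge a -> b means "b follows a".
    successors = {a: list(range(n_conditions)) for a in range(n_conditions)}
    for options in successors.values():
        rng.shuffle(options)
    # Hierholzer's algorithm, iterative form.
    stack, circuit = [0], []
    while stack:
        node = stack[-1]
        if successors[node]:
            stack.append(successors[node].pop())
        else:
            circuit.append(stack.pop())
    return circuit[::-1]

def transition_counts(sequence):
    """Count how often each ordered pair occurs on successive trials."""
    return Counter(zip(sequence, sequence[1:]))
```

Calling `transition_counts(carryover_sequence(16, seed=0))` gives 256 distinct transitions, each with a count of one, which is the first-order counterbalancing property that makes carryover (priming or adaptation) effects estimable.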

Functional Localizer

In addition to the main experiment, subjects also completed two functional localizer scans each lasting 5 min 32 s, which consisted of 16 s blocks of scenes, objects, and scrambled objects. Images were presented for 600 ms with a 400 ms inter-stimulus interval as subjects performed a one-back task on image repetition.

MRI Data Analysis

Data preprocessing

Functional images were corrected for differences in slice timing by resampling slices in time to match the first slice of each volume. Images were then realigned to the first volume of the scan run and subsequent analyses were performed within the subjects’ own space. Motion correction was performed using MCFLIRT52. Data for the functional localizer scan were smoothed with a 6 mm full-width at half-maximum Gaussian filter; data for multivoxel pattern analyses were not smoothed.

Functional regions of interest

Data from the functional localizer scan were used to identify retrosplenial complex (RSC), an area in the retrosplenial/parietal-occipital sulcus region that has been previously implicated in spatial memory and navigation. RSC was defined for each subject individually and in their own space, using a contrast of scenes>objects in the subjects’ functional localizer data and a group-based anatomical constraint of scene-selective activation derived from a large number (42) of localizer subjects in our lab53. To select a constant number of voxels in a threshold-free manner, an individual’s RSC within a hemisphere was defined as the top 100 voxels that responded more strongly to scenes than to objects and fell within the group-parcel mask warped to the subject’s own space with a linear transformation. This method ensures that RSC can be defined in both hemispheres for every subject. Scene-selective ROIs corresponding to the parahippocampal place area (PPA) and occipital place area (TOS/OPA) were defined in the same manner, and early visual cortex (EVC) was defined based on a contrast of scrambled-objects>objects.
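The threshold-free ROI definition can be sketched with NumPy as below. The function and argument names are hypothetical: `contrast_map` stands for a subject's scenes>objects statistic image and `parcel_mask` for the group parcel after warping to that subject's space, both assumed to be arrays of the same shape for one hemisphere.

```python
import numpy as np

def top_n_voxels(contrast_map, parcel_mask, n_voxels=100):
    """Boolean ROI holding the n_voxels highest contrast values
    that fall inside the parcel mask."""
    # Voxels outside the parcel can never be selected.
    stat = np.where(parcel_mask, contrast_map, -np.inf)
    # Indices of the largest values in the flattened volume.
    flat_idx = np.argsort(stat, axis=None)[::-1][:n_voxels]
    roi = np.zeros(contrast_map.shape, dtype=bool)
    roi[np.unravel_index(flat_idx, contrast_map.shape)] = True
    # Guard for parcels that contain fewer than n_voxels voxels.
    return roi & parcel_mask
```

Because the selection is by rank rather than by a statistical threshold, every subject contributes an ROI of the same size in each hemisphere, which keeps the dimensionality of the multivoxel patterns constant across subjects.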

Multivoxel pattern analysis

To test whether an ROI contained spatial information about the direction and location of views within the local environment, we calculated the correlation between multivoxel patterns associated with each of the 16 views (corresponding to the 16 reference objects) across different continuous carryover sequences54. This procedure allowed us to test whether the information carried by patterns of responses in the first half of the experiment (i.e. the first carryover sequence) generalized to patterns in the second half. First, we estimated the multivoxel activity pattern reflecting the response to each view within a carryover sequence. To do this, we applied a general linear model (GLM) with 16 regressors, one for each view, to the time course of functional activity within each voxel. Separate GLMs were performed on each of the four runs corresponding to a continuous carryover sequence, and the resulting parameter estimates were averaged to provide an estimate of the average response to a view in that half of the experiment. Multivoxel patterns were represented by a vector concatenating the responses across the voxels of an ROI. GLMs were implemented in FSL55 (http://fsl.fmrib.ox.ac.uk/fsl/fslwiki/) and included highpass filters that removed low temporal frequencies, and nuisance regressors for motion parameters and outlier volumes discovered using the Artifact Detection Toolbox (http://www.nitrc.org/projects/artifact_detect/). The first three volumes of each run were discarded to ensure data quality.

Next, we measured the similarity between activity patterns corresponding to the 16 views by calculating the Pearson correlations between patterns in the two halves of the data corresponding to the two carryover sequences. Individual patterns were normalized prior to this computation by subtracting out the grand mean pattern (i.e. the cocktail mean) for each continuous carryover sequence4. This calculation created a 16 × 16 correlation matrix for each subject (Fig. 2). To test for coding of a particular quantity, such as location or direction, we computed the average correlation for views that shared that quantity (e.g., faced the same local direction) and compared this to the average correlation for views that did not share that quantity (e.g., faced different directions), taking into account various restrictions in the other spatial quantities as described in the main text. These values were computed for each subject, and then submitted to statistical analysis of cross-subject reliability. For four subjects, the 16th view was not represented within the continuous carryover sequence due to technical errors. For these subjects, the analysis was performed as normal but adjusted to exclude this view.
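The split-half similarity computation and the same-versus-different comparison can be sketched as follows. Array shapes and function names are assumptions: each input is a (views × voxels) array of parameter estimates for one carryover sequence, and the optional `include` mask stands in for the pair restrictions described in the main text, which are not reproduced here.

```python
import numpy as np

def split_half_similarity(patterns_a, patterns_b):
    """Pearson correlations between every view in half A and every view in half B."""
    def prepare(p):
        p = p - p.mean(axis=0, keepdims=True)   # subtract the cocktail mean pattern
        p = p - p.mean(axis=1, keepdims=True)   # center each view across voxels
        return p / np.linalg.norm(p, axis=1, keepdims=True)
    # Dot products of centered, unit-norm vectors are Pearson r values.
    return prepare(patterns_a) @ prepare(patterns_b).T

def same_minus_different(sim, labels, include=None):
    """Mean similarity for view pairs sharing a label minus mean for pairs that do not."""
    labels = np.asarray(labels)
    same = labels[:, None] == labels[None, :]
    if include is None:
        include = np.ones_like(same, dtype=bool)
    return sim[same & include].mean() - sim[~same & include].mean()
```

A positive score for a given labeling (for example, local direction) indicates that patterns generalize across the two halves of the experiment for views sharing that label; the per-subject scores would then be tested against zero across subjects.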

Visualization and reconstruction of spatial similarities

To qualitatively visualize pattern similarity in RSC, we created a pattern similarity map for each of the 16 views by calculating the similarity between that view (starting view) and the 15 other views (comparison views). Each map was subjected to rotations and reflections that aligned the starting views to one another while maintaining the spatial relations between starting and comparison views. These maps were then averaged together to create a composite similarity map. Pattern similarities were converted to a range from 0 to 1 and colored according to this value.

To create reconstructions of the museums, multivoxel patterns were compared across carryover sequences to calculate a correlation matrix for the eight unique view positions in a museum for each subject. These correlation matrices were then averaged to produce a grand correlation matrix that was submitted to multidimensional scaling to produce a two-dimensional map of the views56 and then aligned with a Procrustes analysis57 to a schematic of our museums. Distortion of the reconstructed map was defined as the sum of squared errors between the reconstructed and true points after optimal linear alignment, and significance was calculated by randomly shuffling the correlation matrix and applying multidimensional scaling to the result over 100,000 iterations to produce a chance distribution for distortion. A similar reconstruction was performed based on similarities between corresponding views in different museums.
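A reconstruction of this kind can be sketched with classical multidimensional scaling followed by a Procrustes fit. Several details below are assumptions rather than the procedure used in the study: correlations are converted to distances as 1 − r, the matrix is symmetrized by averaging it with its transpose, shuffling permutes the off-diagonal entries, distortion is left unnormalized, and the eight-point layout is a hypothetical stand-in for the museum schematic. The example also uses far fewer iterations than the 100,000 reported above.

```python
import numpy as np

def classical_mds(dist, n_dims=2):
    """Classical (Torgerson) MDS of a symmetric distance matrix."""
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    evals, evecs = np.linalg.eigh(gram)
    top = np.argsort(evals)[::-1][:n_dims]
    return evecs[:, top] * np.sqrt(np.clip(evals[top], 0, None))

def procrustes_distortion(recon, truth):
    """Sum of squared errors after optimal translation, scaling and
    rotation/reflection of `recon` onto `truth`."""
    x = recon - recon.mean(axis=0)
    y = truth - truth.mean(axis=0)
    u, s, vt = np.linalg.svd(x.T @ y)
    rotation = u @ vt                      # orthogonal, reflections allowed
    scale = s.sum() / (x ** 2).sum()
    return ((scale * x @ rotation - y) ** 2).sum()

def shuffle_distances(dist, rng):
    """Permute the off-diagonal distances while keeping the matrix symmetric."""
    upper = np.triu_indices(dist.shape[0], k=1)
    shuffled = np.zeros_like(dist)
    shuffled[upper] = rng.permutation(dist[upper])
    return shuffled + shuffled.T

def reconstruction_test(corr, truth, n_perm=500, seed=0):
    """Return the observed distortion and its permutation p-value."""
    rng = np.random.default_rng(seed)
    dist = 1.0 - (corr + corr.T) / 2       # assumption: distance = 1 - r
    np.fill_diagonal(dist, 0.0)
    observed = procrustes_distortion(classical_mds(dist), truth)
    null = np.array([
        procrustes_distortion(classical_mds(shuffle_distances(dist, rng)), truth)
        for _ in range(n_perm)
    ])
    p_value = (np.sum(null <= observed) + 1) / (n_perm + 1)
    return observed, p_value

# Hypothetical layout of eight view positions and a noiseless similarity matrix.
truth = np.array([[0, 0], [1, 0], [2, 0], [3, 0],
                  [3, 1], [2, 1], [1, 1], [0, 1]], dtype=float)
true_dist = np.linalg.norm(truth[:, None, :] - truth[None, :, :], axis=-1)
corr = 1.0 - true_dist / true_dist.max()
observed, p_value = reconstruction_test(corr, truth)
```

Because low distortion indicates a good reconstruction, the p-value counts the proportion of shuffled matrices whose distortion is at least as low as the observed value.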

Searchlight analysis

To test for coding of local direction and other spatial quantities outside of our pre-defined ROIs, we implemented a whole-brain searchlight analysis21. This analysis stepped through every voxel of the brain and centered a small spherical ROI (radius 5 mm) around it. The comparisons among views for discriminating different sources of spatial information (Fig. 2) were then performed within the spherical neighborhood. The central voxel of the sphere was assigned the pattern discrimination score (e.g., the difference in pattern similarity between views that face the same or different local directions) calculated over its neighborhood. For each subject, this procedure generated a map associated with the pattern discriminability of a particular spatial quantity. These maps were then aligned to the MNI template with a linear transformation and submitted to a second-level random-effects analysis to test the reliability of discrimination for that quantity across subjects. To find the true type I error rate, we performed Monte Carlo simulations that permuted the sign of the whole-brain maps from individual subjects58. We report only the voxels that survived correction for multiple comparisons across the entire brain, significant at p < 0.05.
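The stepping procedure can be sketched as below. This is an illustrative outline only: the function names, the 3 mm isotropic voxel size, the array layout and the toy scoring function are assumptions for the example and are not taken from the study.

```python
import numpy as np

def sphere_offsets(radius_mm, voxel_size_mm):
    """Integer voxel offsets whose centres lie within radius_mm of the origin."""
    reach = int(np.floor(radius_mm / voxel_size_mm))
    grid = np.arange(-reach, reach + 1)
    dx, dy, dz = np.meshgrid(grid, grid, grid, indexing="ij")
    offsets = np.stack([dx.ravel(), dy.ravel(), dz.ravel()], axis=1)
    keep = np.linalg.norm(offsets * voxel_size_mm, axis=1) <= radius_mm
    return offsets[keep]

def searchlight(data, mask, score_fn, radius_mm=5.0, voxel_size_mm=3.0):
    """Assign each brain voxel the score computed over its spherical neighborhood.

    data:     (x, y, z, n_views) array of response estimates.
    mask:     (x, y, z) boolean brain mask.
    score_fn: maps an (n_views, n_voxels_in_sphere) array to a scalar.
    """
    offsets = sphere_offsets(radius_mm, voxel_size_mm)
    shape = np.array(mask.shape)
    out = np.full(mask.shape, np.nan)
    for centre in np.argwhere(mask):
        coords = centre + offsets
        # Drop neighbors that fall outside the volume or outside the mask.
        coords = coords[np.all((coords >= 0) & (coords < shape), axis=1)]
        coords = coords[mask[coords[:, 0], coords[:, 1], coords[:, 2]]]
        patterns = data[coords[:, 0], coords[:, 1], coords[:, 2]].T
        out[tuple(centre)] = score_fn(patterns)
    return out

# Toy example: a 6 x 6 x 6 volume with 16 views; the score here simply counts
# the voxels in each sphere as a sanity check on the neighborhood definition.
rng = np.random.default_rng(0)
data = rng.normal(size=(6, 6, 6, 16))
mask = np.ones((6, 6, 6), dtype=bool)
counts = searchlight(data, mask, score_fn=lambda patterns: patterns.shape[1])
```

In an actual analysis `score_fn` would return a pattern discrimination score, such as the difference in similarity between views that share a local direction and views that do not.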

Statistics

Data distributions were assumed to be normal, but this was not formally tested. Repeated-measures ANOVAs were used to compare reaction times (Experiment 1) and pattern similarities (Experiment 2) as a function of imagined direction and location. Where appropriate, paired-sample t-tests were used to directly compare different possible coding schemes that could explain the data (i.e. local vs. global coding). Non-parametric Monte Carlo simulations were used to determine significance for the reconstructions of spatial similarities as well as for the whole-brain searchlight analyses.
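For the searchlight maps, one common way to implement a sign-permutation Monte Carlo test is to build a null distribution of the maximum statistic across voxels. The sketch below takes that approach as an assumption; the text does not state whether a maximum-statistic or a cluster-based correction was used, and the function names, the one-sided test and the synthetic data are likewise hypothetical.

```python
import numpy as np

def one_sample_t(maps):
    """Voxelwise one-sample t statistic across subjects (rows)."""
    n = maps.shape[0]
    return maps.mean(axis=0) / (maps.std(axis=0, ddof=1) / np.sqrt(n))

def sign_flip_max_t(maps, n_perm=1000, seed=0):
    """One-sided sign-permutation test with max-statistic correction.

    maps: (n_subjects, n_voxels) discrimination scores.
    Returns the observed t-values and corrected p-values per voxel.
    """
    rng = np.random.default_rng(seed)
    observed = one_sample_t(maps)
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        # Flip the sign of each subject's whole map at random.
        signs = rng.choice([-1.0, 1.0], size=(maps.shape[0], 1))
        max_null[i] = one_sample_t(maps * signs).max()
    exceed = (max_null[None, :] >= observed[:, None]).sum(axis=1)
    return observed, (exceed + 1) / (n_perm + 1)

# Synthetic example: 14 subjects, 500 voxels, a true effect in the first 10.
rng = np.random.default_rng(1)
maps = rng.normal(size=(14, 500))
maps[:, :10] += 3.0
t_values, p_corrected = sign_flip_max_t(maps)
significant = p_corrected < 0.05
```

Flipping the sign of a subject's entire map preserves the spatial correlation between voxels, which is why the maximum over voxels gives a threshold that accounts for multiple comparisons across the brain.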

A methods checklist is available with the supplementary materials.

Supplementary Material

Acknowledgements

This research was supported by the National Institutes of Health (EY022350) and the National Science Foundation (SBE-0541957, SBE-1041707). We would like to thank Anthony Stigliani for assistance with data collection and three anonymous referees for useful comments on an earlier version of the manuscript.

Footnotes

Author Contributions

S.A.M., L.K.V., and R.A.E. designed the experiments. S.A.M. and J.R. collected the data. S.A.M. analyzed the data with input from L.K.V. and R.A.E. S.A.M. and R.A.E. wrote the manuscript.

References

- 1. O'Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Research. 1971;34:171–175. doi: 10.1016/0006-8993(71)90358-1.

- 2. Hafting T, Fyhn M, Molden S, Moser M-B, Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–806. doi: 10.1038/nature03721.

- 3. Taube JS, Muller RU, Ranck JB. Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. The Journal of Neuroscience. 1990;10:420–435. doi: 10.1523/JNEUROSCI.10-02-00420.1990.

- 4. Vass LK, Epstein RA. Abstract Representations of Location and Facing Direction in the Human Brain. The Journal of Neuroscience. 2013;33:6133–6142. doi: 10.1523/JNEUROSCI.3873-12.2013.

- 5. Hassabis D, et al. Decoding Neuronal Ensembles in the Human Hippocampus. Current Biology. 2009;19:546–554. doi: 10.1016/j.cub.2009.02.033.

- 6. Doeller CF, Barry C, Burgess N. Evidence for grid cells in a human memory network. Nature. 2010;463:657–661. doi: 10.1038/nature08704.

- 7. Ekstrom AD, et al. Cellular networks underlying human spatial navigation. Nature. 2003;425:184–188. doi: 10.1038/nature01964.

- 8. Jacobs J, et al. Direct recordings of grid-like neuronal activity in human spatial navigation. Nature Neuroscience. 2013;16:1188–1190. doi: 10.1038/nn.3466.

- 9. Knierim JJ, Kudrimoti HS, McNaughton BL. Place cells, head direction cells, and the learning of landmark stability. The Journal of Neuroscience. 1995;15:1648–1659. doi: 10.1523/JNEUROSCI.15-03-01648.1995.

- 10. Valerio S, Taube JS. Path integration: how the head direction signal maintains and corrects spatial orientation. Nature Neuroscience. 2012;15:1445–1453. doi: 10.1038/nn.3215.

- 11. Yoder RM, et al. Both visual and idiothetic cues contribute to head direction cell stability during navigation along complex routes. Journal of Neurophysiology. 2011;105:2989–3001. doi: 10.1152/jn.01041.2010.

- 12. Yoder RM, Clark BJ, Taube JS. Origins of landmark encoding in the brain. Trends in Neurosciences. 2011;34:561–571. doi: 10.1016/j.tins.2011.08.004.

- 13. Epstein RA. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends in Cognitive Sciences. 2008;12:388–396. doi: 10.1016/j.tics.2008.07.004.

- 14. Vann SD, Aggleton JP, Maguire EA. What does the retrosplenial cortex do? Nature Reviews Neuroscience. 2009;10:792–802. doi: 10.1038/nrn2733.

- 15. Aguirre GK, D'Esposito M. Topographical disorientation: a synthesis and taxonomy. Brain. 1999;122:1613–1628. doi: 10.1093/brain/122.9.1613.

- 16. Baumann O, Mattingley JB. Medial Parietal Cortex Encodes Perceived Heading Direction in Humans. The Journal of Neuroscience. 2010;30:12897–12901. doi: 10.1523/JNEUROSCI.3077-10.2010.

- 17. Byrne P, Becker S, Burgess N. Remembering the past and imagining the future: A neural model of spatial memory and imagery. Psychological Review. 2007;114:340–375. doi: 10.1037/0033-295X.114.2.340.

- 18. McNaughton BL, Knierim JJ, Wilson MA. Vector encoding and the vestibular foundations of spatial cognition: Neurophysiological and computational mechanisms. 1995

- 19. Shelton AL, McNamara TP. Systems of Spatial Reference in Human Memory. Cognitive Psychology. 2001;43:274–310. doi: 10.1006/cogp.2001.0758.

- 20. Mou W, McNamara TP. Intrinsic frames of reference in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:162–170. doi: 10.1037/0278-7393.28.1.162.

- 21. Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- 22. Maguire E. The retrosplenial contribution to human navigation: A review of lesion and neuroimaging findings. Scandinavian Journal of Psychology. 2001;42:225–238. doi: 10.1111/1467-9450.00233.

- 23. Burgess N. Spatial Cognition and the Brain. Annals of the New York Academy of Sciences. 2008;1124:77–97. doi: 10.1196/annals.1440.002.

- 24. Epstein RA, Higgins JS, Jablonski K, Feiler AM. Visual scene processing in familiar and unfamiliar environments. Journal of Neurophysiology. 2007;97:3670–3683. doi: 10.1152/jn.00003.2007.

- 25. Epstein RA, Parker WE, Feiler AM. Where Am I Now? Distinct Roles for Parahippocampal and Retrosplenial Cortices in Place Recognition. The Journal of Neuroscience. 2007;27:6141–6149. doi: 10.1523/JNEUROSCI.0799-07.2007.

- 26. Rosenbaum RS, Ziegler M, Winocur G, Grady CL, Moscovitch M. “I have often walked down this street before”: fMRI Studies on the hippocampus and other structures during mental navigation of an old environment. Hippocampus. 2004;14:826–835. doi: 10.1002/hipo.10218.

- 27. Zhang H, Copara M, Ekstrom AD. Differential Recruitment of Brain Networks following Route and Cartographic Map Learning of Spatial Environments. PLoS ONE. 2012;7:e44886. doi: 10.1371/journal.pone.0044886.

- 28. Wolbers T, Büchel C. Dissociable Retrosplenial and Hippocampal Contributions to Successful Formation of Survey Representations. The Journal of Neuroscience. 2005;25:3333–3340. doi: 10.1523/JNEUROSCI.4705-04.2005.

- 29. Burgess N, Spiers HJ, Paleologou E. Orientational manoeuvres in the dark: dissociating allocentric and egocentric influences on spatial memory. Cognition. 2004;94:149–166. doi: 10.1016/j.cognition.2004.01.001.

- 30. Cheng K. A purely geometric module in the rat's spatial representation. Cognition. 1986;23:149–178. doi: 10.1016/0010-0277(86)90041-7.

- 31. Gallistel CR. The organization of learning. Cambridge, MA, US: The MIT Press; 1990.

- 32. Knight R, Hayman R, Lin Ginzberg L, Jeffery K. Geometric Cues Influence Head Direction Cells Only Weakly in Nondisoriented Rats. The Journal of Neuroscience. 2011;31:15681–15692. doi: 10.1523/JNEUROSCI.2257-11.2011.

- 33. Cho J, Sharp PE. Head direction, place, and movement correlates for cells in the rat retrosplenial cortex. Behavioral Neuroscience. 2001;115:3–25. doi: 10.1037/0735-7044.115.1.3.

- 34. Chen L, Lin L-H, Green E, Barnes C, McNaughton B. Head-direction cells in the rat posterior cortex. Exp Brain Res. 1994;101:8–23. doi: 10.1007/BF00243212.

- 35. Taube JS, Burton HL. Head direction cell activity monitored in a novel environment and during a cue conflict situation. Journal of Neurophysiology. 1995;74:1953–1953. doi: 10.1152/jn.1995.74.5.1953.

- 36. Dudchenko PA, Zinyuk LE. The formation of cognitive maps of adjacent environments: Evidence from the head direction cell system. Behavioral Neuroscience. 2005;119:1511–1523. doi: 10.1037/0735-7044.119.6.1511.

- 37. McNaughton BL, Battaglia FP, Jensen O, Moser EI, Moser M-B. Path integration and the neural basis of the 'cognitive map'. Nature Reviews Neuroscience. 2006;7:663–678. doi: 10.1038/nrn1932.

- 38. Sato N, Sakata H, Tanaka YL, Taira M. Navigation-associated medial parietal neurons in monkeys. Proceedings of the National Academy of Sciences. 2006;103:17001–17006. doi: 10.1073/pnas.0604277103.

- 39. Lever C, Burton S, Jeewajee A, O'Keefe J, Burgess N. Boundary Vector Cells in the Subiculum of the Hippocampal Formation. The Journal of Neuroscience. 2009;29:9771–9777. doi: 10.1523/JNEUROSCI.1319-09.2009.

- 40. Solstad T, Boccara CN, Kropff E, Moser M-B, Moser EI. Representation of Geometric Borders in the Entorhinal Cortex. Science. 2008;322:1865–1868. doi: 10.1126/science.1166466.

- 41. Sharp PE. Subicular place cells generate the same “map” for different environments: Comparison with hippocampal cells. Behavioural Brain Research. 2006;174:206–214. doi: 10.1016/j.bbr.2006.05.034.

- 42. Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast Readout of Object Identity from Macaque Inferior Temporal Cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- 43. Marchette S, Yerramsetti A, Burns T, Shelton A. Spatial memory in the real world: long-term representations of everyday environments. Mem Cogn. 2011;39:1401–1408. doi: 10.3758/s13421-011-0108-x.

- 44. Tse D, et al. Schemas and Memory Consolidation. Science. 2007;316:76–82. doi: 10.1126/science.1135935.