Abstract

To behave adaptively, we must learn from the consequences of our actions. Doing so is difficult when the consequences of an action follow a delay. This introduces the problem of temporal credit assignment. When feedback follows a sequence of decisions, how should the individual assign credit to the intermediate actions that comprise the sequence? Research in reinforcement learning provides two general solutions to this problem: model-free reinforcement learning and model-based reinforcement learning. In this review, we examine connections between stimulus-response and cognitive learning theories, habitual and goal-directed control, and model-free and model-based reinforcement learning. We then consider a range of problems related to temporal credit assignment. These include second-order conditioning and secondary reinforcers, latent learning and detour behavior, partially observable Markov decision processes, actions with distributed outcomes, and hierarchical learning. We ask whether humans and animals, when faced with these problems, behave in a manner consistent with reinforcement learning techniques. Throughout, we seek to identify neural substrates of model-free and model-based reinforcement learning. The former class of techniques is understood in terms of the neurotransmitter dopamine and its effects in the basal ganglia. The latter is understood in terms of a distributed network of regions including the prefrontal cortex, medial temporal lobes cerebellum, and basal ganglia. Not only do reinforcement learning techniques have a natural interpretation in terms of human and animal behavior, but they also provide a useful framework for understanding neural reward valuation and action selection.

Keywords: Reinforcement learning, sequential choice, temporal credit assignment

Introduction

To behave adaptively, we must learn from the consequences of our actions. These consequences sometimes follow a single decision, and they sometimes follow a sequence of decisions. Although single-step choices are interesting in their own right (for a review, see Fu & Anderson, 2006), we focus here on the multi-step case. Sequential choice is significant for two reasons. First, sequential choice introduces the problem of temporal credit assignment (Minsky, 1963). When feedback follows a sequence of decisions, how should one assign credit to the intermediate actions that comprise the sequence? Second, sequential choice makes contact with everyday experience. Successful resolution of the challenges imposed by sequential choice permits fluency in domains where achievement hinges on a multitude of actions. Unsuccessful resolution leads to suboptimal performance at best (Fu & Gray, 2004; Yechiam et al., 2003) and pathological behavior at worst (Herrnstein & Prelec, 1991; Rachlin, 1995).

Parallel Learning Processes

Contemporary accounts of sequential choice build on classic theories of behavioral control. We consider these theories in brief to prepare for our discussion of sequential choice.

In describing how humans and animals select actions, psychologists have long distinguished between habitual and goal-directed behavior (James, 1950/1890). This distinction was made rigorous in stimulus-response and cognitive theories of learning during the behaviorist era. Stimulus-response theories portrayed action as arising directly from associations between stimuli and responses (Hull, 1943). These theories emphasized the role of reinforcement in augmenting habit strength. Cognitive theories, on the other hand, portrayed action as arising from prospective inference over internal models, or maps, of the environment (Tolman, 1932). These theories stressed the interplay between planning, anticipation, and outcome evaluation in goal-directed behavior.

Research in psychology and neuroscience has provided new insight into the distinction between stimulus-response and cognitive learning theories. The emerging view is that two forms of control, habitual and goal-directed, coexist as complementary mechanisms for action selection (Balleine & O’Doherty, 2010; Daw, Niv, & Dayan, 2005; Doya, 1999; Rangel, Camerer, & Montague, 2008). Although both forms of control allow the individual to attain desirable outcomes, behavior is only considered goal-directed if (1) the individual has reason to believe that an action will result in a particular outcome, and (2) the individual has reason to pursue that outcome. Evidence for this distinction comes from animal conditioning studies, which show that different factors engender habitual and goal-directed control (Balleine & O’Doherty, 2010). Further evidence comes from physiological studies, which show that different neural structures are necessary for the expression of habitual and goal-directed behavior (Balleine & O’Doherty, 2010).

The same distinction has arisen in the computational field of reinforcement learning (RL). RL addresses the question of how one should act to maximize reward. The key feature of the reinforcement learning problem is that the individual does not receive instruction, but must learn from the consequences of their actions. Two general solutions to this problem exist: model-free RL and model-based RL. Model-free techniques use stored action values to evaluate candidate behaviors, whereas model-based techniques use an internal model of the environment to prospectively calculate the values of candidate behaviors (Sutton & Barto, 1998).

Deep similarities exist between model-free RL, habitual control, and stimulus-response learning theories. All treat stimulus-response associations as the basic unit of knowledge, and all use experience to adjust the strength of these associations. Deep similarities also exist between model-based RL, goal-directed control, and cognitive learning theories. All treat action-outcome contingencies as the basic unit of knowledge, and all use this knowledge to simulate prospective outcomes. The dichotomy between model-free and model-based learning, which has been applied to decisions that require a single action, extends to decisions that involve a sequence of actions.

Scope of Review

The goal of this review is to synthesize research from the fields of cognitive psychology, neuroscience, and artificial intelligence. We focus on model-free and model-based learning. Existing reviews concentrate on the computational or neural properties of these techniques, but no review has systematically evaluated the results of studies that involve multi-step decision making. A spate of recent experiments has shed light on the behavioral and neural basis of sequential choice, however. To that end, we examine the correspondence between predictions of reinforcement learning models and the results of these experiments. The first and second sections of this review introduce the computational and neural underpinnings of model-free RL and model-based RL. The third section examines a range of problems related to temporal credit assignment that humans and animals face. The final section identifies outstanding questions and directions for future research.

Model-Free Reinforcement Learning

Reinforcement learning is not a monolithic technique, but rather a class of techniques designed for a common problem: learning through trial-and-error to act so as to maximize reward. The individual is not told what to do, but instead must select an action, observe the result of performing the action, and learn from the outcome. Because feedback pertains only to the selected action, the individual must sample alternative actions to learn about their values. Also, because the consequences of an action may be delayed, the individual must learn to select actions that maximize immediate and future reward.

Computational Instantiation of Model-Free Reinforcement Learning

Prediction

In model-free RL, differences between actual and expected outcomes, or reward prediction errors, serve as teaching signals. After the individual receives an outcome, a prediction error is computed,

| (1) |

The value rt+1 denotes immediate reward, V(st+1) denotes the estimated value of the new world state (i.e., future reward), and V(st) denotes the estimated value of the previous state. The temporal discount rate (γ) controls the weighting of future reward. Discounting ensures that when state values are equal, the individual will favor states that are immediately rewarding.

The prediction error equals the difference between the value of the outcome, [rt+1 + γ·V(st+1)], and the value of the previous state, V(st). The prediction error is used to update the estimated value of the previous state,

| (2) |

The learning rate (α) scales the size of updates. When expectations are revised in this way, the individual can learn to predict the sum of immediate and future rewards. This is called temporal difference (TD) learning.

TD learning relates to the integrator model (Bush & Mosteller, 1955) and the Rescorla-Wagner learning rule (Rescorla & Wagner, 1972), two prominent accounts of animal conditioning and human learning. Like these models, TD learning explains many conditioning phenomena in terms of the discrepancy between actual and expected rewards (e.g., blocking, overshadowing, and conditioned inhibition; for a review, see Sutton & Barto, 1990). TD learning differs, however, in that it is sensitive to immediate and future reward, whereas the integrator model and the Rescorla-Wagner learning rule are only sensitive to immediate reward. Thus, while all three models account for first-order conditioning, TD learning alone accounts for second-order conditioning. These strengths notwithstanding, TD learning fails to account for some of the same conditioning phenomena that challenge the integrator model and the Rescorla-Wagner learning rule (e.g., latent inhibition, sensory preconditioning, facilitated reacquisition after inhibition, and the partial reinforcement extinction effect), a point that we return to throughout the review and in the discussion.

Control

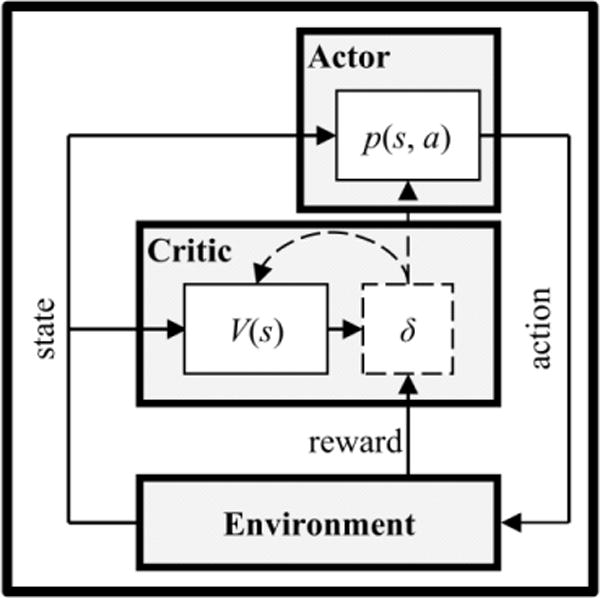

Prediction is only useful insofar as it facilitates selection. The actor/critic model (Sutton & Barto, 1998) advances a two-process account of how humans and animals deal with this control problem (Figure 1). The critic computes and uses prediction errors to learn state values (Eqs. 1 and 2). Positive prediction errors indicate things have gone better than expected, and negative prediction errors indicate things have gone worse than expected. The actor uses prediction errors to adjust preferences, p(s, a),

| (3) |

Figure 1.

Actor/critic architecture. The actor records preferences for actions in each state. The critic combines information about immediate reward and the expected value of the subsequent state to compute reward prediction errors (δ). The actor uses reward prediction errors to update action preferences, p(s, a), and the critic uses reward prediction errors to update state values, V(s).

This learning rule effectively states that the individual should repeat actions that result in greater rewards than usual, and that the individual should avoid actions that result in smaller rewards than usual. As the individual increasingly favors actions that maximize reward, the discrepancy between actual and expected outcomes decreases and learning ceases. Other model-free techniques like Q-learning and SARSA bypass state values entirely and learn action values directly (Sutton & Barto, 1998).

Preferences must still be converted to decisions. This can be done with the softmax selection rule,

| (4) |

The temperature parameter (τ) controls the degree of stochasticity in behavior. Selections become more random as τ increases, and selections become more deterministic as τ decreases. The softmax selection rule allows the individual to exploit knowledge of the best action while exploring alternatives in proportion to their utility. Aside from its computational appeal, the softmax selection rule resembles Luce’s choice axiom and Thurstonian theory, two attempts to map preference strengths to response probabilities (Luce, 1977). The softmax selection rule also approximates greedy selection among actions whose utility estimates are subject to continuously varying noise (Fu & Anderson, 2006).

The chief advantage of model-free techniques is that they learn state and action values without a model of the environment (i.e., state transitions and reward probabilities).1 Another advantage of model-free RL is that action selection is computationally simple. The individual evaluates actions based on stored preferences or utility values. This simplicity comes at the cost of inflexibility, however. Because state and action values are divorced from outcomes, the individual must experience outcomes to update these values. For example, if a rat unexpectedly discovers reward in a maze blind, the value of the blind will increase immediately. The value of the corridor leading to the blind will only increase, however, after the rat has subsequently passed through the corridor and arrived at the revalued location.

Eligibility traces

Eligibility traces mitigate this problem (Singh & Sutton, 1996). When a state is visited, an eligibility trace is initiated. The trace marks the state as eligible for update and fades according to the decay parameter (λ),

| (5) |

Prediction error is calculated in the conventional manner (Eq. 1), but the signal is used to update all states according to their eligibility,

| (6) |

Separate traces are assigned to state-action pairs, and state-action pairs are also updated according to their eligibility. Eligibility traces allow prediction errors to pass beyond immediate states and actions, and to reach other recent states and actions.

Neural Instantiation of Model-Free Reinforcement Learning

Dopamine

Researchers have extensively studied the neural basis of model-free RL. Much of this work focuses on dopamine, a neurotransmitter that plays a role in appetitive approach behavior (Berridge, 2007), and is a key component in pathologies of behavioral control such as addiction, Parkinson’s disease, and Huntington’s disease (Hyman & Malenka, 2001; Montague, Hyman, & Cohen, 2004; Schultz, 1998).2 The majority of dopamine neurons are located in two midbrain structures, the substantia nigra pars compacta (SNc) and the medially adjoining ventral tegmental area (VTA). The SNc and VTA receive highly convergent inputs and project to virtually the entire brain. The striatum and prefrontal cortex receive the greatest concentration of dopamine afferents, with the SNc selectively targeting the dorsal striatum and the VTA targeting the ventral striatum and prefrontal cortex.

In a series of studies, Schultz and colleagues demonstrated that the phasic responses of dopamine neurons mirrored reward prediction errors (Schultz, 1998). When a reward was unexpectedly presented, neurons showed enhanced activity at the time of reward delivery. When a conditioned stimulus preceded reward, however, neurons no longer responded to reward delivery. Rather, the dopamine response transferred to the earlier stimulus. Finally, when a reward was unexpectedly omitted following a conditioned stimulus, neurons showed depressed activity at the expected time of reward delivery. These observations motivated the idea that the phasic response of dopamine neurons codes for reward prediction errors (Montague, Dayan, & Sejnowski, 1996; Schultz, Dayan, & Montague, 1997).

Basal ganglia

The basal ganglia are a collection of linked subcortical structures that mediate learning and cognitive functions (Packard & Knowlton, 2002). The main input structure of the basal ganglia, the striatum, receives excitatory connections from the frontal cortex. Striatal neurons modulate activity in the thalamus via a direct pathway that passes through the internal segment of the globus pallidus (GPi), and an indirect pathway that passes through the external segment of the globus pallidus (GPe) and to the GPi (Joel, Niv, & Ruppin, 2002). At an abstract level, frontal collaterals convey information about the state of the world to the striatum. Activation from the direct and indirect pathways converges on the thalamus, resulting in facilitation or suppression of action representations.

Dopamine mediates plasticity at corticostriatal synapses (Wickens, Begg, & Arbuthnott, 1996; Reynolds, Hylan, & Wickens, 2001). This has led to the proposal that three factors govern changes in the strength of corticostriatal synapses in a multiplicative fashion: presynaptic depolarization, postsynaptic depolarization, and dopamine concentration (Reynolds & Wickens, 2002). By adjusting the strength of corticostriatal synapses according to a reward prediction error, the basal ganglia comes to facilitate actions that yield positive outcomes, and to suppress actions that do not.

Actor/critic

The actor and critic elements have been associated with the dorsal and ventral subdivisions of the striatum (Joel, Niv, & Ruppin, 2002; O’Doherty et al., 2004). Structural connectivity studies support this proposal. The dorsal striatum shares reciprocal connections with motor cortices, whereas the ventral striatum receives information about context, stimuli, and rewards through its connections with limbic and associative structures. Additionally, the ventral striatum, through its connections with the VTA and SNc, can influence activity in midbrain nuclei that project to itself and the dorsal striatum (Haber et al., 1990; 2000; Joel, Niv, & Ruppin, 2002). In a similar way, the critic affects the computation of prediction error signals that reach itself and the actor.

Physiological and lesion studies also implicate the ventral striatum in the acquisition of state values, and the dorsal striatum in the acquisition of action values (Balleine & O’Doherty, 2010; Cardinal et al., 2002; Packard & Knowlton, 2002). Human neuroimaging results further support this proposal. Instrumental conditioning tasks, which require behavioral responses, engage the dorsal and ventral striatum. Classical conditioning tasks, which do not require behavioral responses, mainly engage the ventral striatum (Elliott et al., 2004; O’Doherty et al., 2004; Tricomi, Delgado, & Fiez, 2004).

Model-Based Reinforcement Learning

Model-free techniques assign utility values directly to states and actions. To select among candidate actions, the individual compares the utility of each. Model-based techniques adopt a fundamentally different approach. From experience, the individual learns the reward function –that is, the rewards contained in each state. The individual also learns the state transition function – that is, the mapping between the current state, actions, and resulting states. Using this world model (i.e., the reward function and the transition function), the individual prospectively calculates the utility of candidate actions in order to select among them.

Computational Instantiation of Model-Based Reinforcement Learning

Learning a world model

Model-based approaches require a model of the environment. How can the individual learn about the reward function, R(st+1), and the state transition function, T(st, at, st+1), from experience? One solution is to compute something akin to the prediction errors used in model-free RL. After the individual enters a state and receives reward rt+1, a reward prediction error is calculated,

| (7) |

This differs from the prediction error in model-free RL because it does not include a term for future reward. The reward prediction error is used to update the value of R(st+1),

| (8) |

After the individual arrives at state st+1, a state prediction error is also calculated,

| (9) |

The state prediction error is used to update the value of T(st, at, st+1),

| (10) |

The likelihoods of all states not arrived at are then normalized to ensure that transition probabilities sum to one. The use of error-driven learning to acquire a causal relationship model constitutes a variation of the Rescorla-Wagner learning rule (1972).

Policy search

In model-based RL, information about the reward and transition functions is used to calculate state values,

| (11) |

This quantity equals the value of possible outcomes, {R(s′) + γ·V(s′)}, weighted according to their probability given the individual’s selection policy, π(st, a′), and the state transition function, T(st, a′, s′). After state values are calculated, actions are evaluated in terms of their immediate and future rewards,

| (12) |

Finally, the selection policy is updated by setting π(st, at) = maxaQFWD(st, a). In other words, the individual selects the most valuable action in each state.3 By iteratively applying these evaluations over all states and actions, the individual will arrive at an optimal selection policy. This is called policy iteration (Sutton & Barto, 1998).

Policy iteration is but one example of how the individual can use a world model to select actions. The individual can also use transition and reward functions to calculate expected rewards over the next n time steps for all sequences of actions to identify the one that maximizes return (i.e., forward or depth-first search; Daw, Niv, & Dayan, 2005; Johnson & Redish, 2007; Simon & Daw, 2011; Smith, Becker, & Kapur, 2006). Alternatively, working from the desired final state, the individual can reason back to the current state (i.e., backward induction; Busemeyer & Pleskac, 2009). Finally, using the transition and reward functions as a generative world model, the individual can apply Bayesian techniques to infer which policy is most likely to maximize return (Solway & Botvinick, 2012).

The chief advantage of model-based RL is that it efficiently propagates experience to antecedent states and actions. For example, if a rat unexpectedly discovers reward in a maze blind, policy iteration allows the rat to immediately revalue states and actions leading to that blind. Model-based RL has two main drawbacks, however. First, model-based RL requires a complete model of the environment. Second, in environments with many states, the costs of querying the world model become prohibitive in terms of time and computation. The finite capacity of human working memory magnifies this concern: people cannot hold an endlessly branching decision tree in memory.

Neural Instantiation of Model-Based Reinforcement Learning

Reward function

Neurons in the orbitofrontal cortex (OFC) encode stimulus incentive values. OFC responses decrease when participants are satiated on the evoking unconditioned stimulus (Rolls, Kringelbach, & de Araujo, 2003; Valentin, Dickinson, & O’Doherty, 2007), and OFC responses correlate with people’s willingness to pay for appetitive stimuli (Plassmann, O’Doherty, & Rangel, 2007). Additionally, experienced and imagined rewards activate the OFC (Bray, Shimojo, & O’Doherty, 2010). These functions have been linked with goal-directed behavior, a position further supported by the observations that OFC lesions abolish devaluation sensitivity (Izquierdo, Suda, & Murray, 2004), and impair animals’ ability to associate distinct stimuli with different types of food rewards (McDannald et al., 2011).

Transition function

Several regions provide candidate transition functions. Chief among these is the hippocampus. Rodent navigation studies show that receptive fields of hippocampal pyramidal cells form a cognitive map (O’Keefe & Nadel, 1978). Neurons preferentially fire as the animal traverses specific locations in the environment. Ensemble activity sometimes correlates with positions other than the rodent’s current location, however. For instance, when rodents rest after traversing a maze, the sequence of active hippocampal fields ‘replays’ navigation paths (Foster & Wilson, 2006). Additionally, when rodents arrive at choice points in a maze, they pause and engage in vicarious trial-and-error behavior such as looking down alternate paths (Tolman, 1932). The sequence of active hippocampal fields simultaneously ‘preplays’ alternate paths at such points, hinting at the involvement of the hippocampus in forward search (Johnson & Redish, 2007).

The acquisition and storage of relational knowledge among non-spatial stimuli also depends on the hippocampus and the surrounding medial temporal lobes (MTL; Bunsey & Eichenbaum, 1996). MTL-mediated learning occurs rapidly, MTL-based memories contain information about associations among stimuli, and MTL-based memories are accessible in and transferrable to novel contexts (Cohen & Eichenbaum, 1993). These features of the MTL coincide with core properties of model-based RL. Interestingly, the hippocampus becomes active when people remember the past and imagine the future, paralleling reports of hippocampal replay and preplay events in rodents (Schacter, Addis, & Buckner, 2007). Thus, among the many types of knowledge it stores, the hippocampus may contain state transition functions that permit forward search in humans as well.

The cerebellum contains a different type of transition function in the form of internal models of the sensorimotor apparatus (Doya, 1999; Ito, 2008). These models allow the sensorimotor system to identify motor commands that will produce target outputs. Movement is not a prerequisite for cerebellar activation, however. Cognitive tasks also engage the cerebellum (Stoodley, 2012; Strick, Dum, & Fiez, 2009). This suggests that the cerebellum contains internal models that contribute to non-motoric planning as well (Imamizu & Kawato, 2009; Ito, 2008).

Lastly, specific basal ganglia structures encode information about relationships between actions and outcomes. Electrophysiological recordings and lesion studies have identified three anatomically and functionally distinct cortico-basal-ganglia loops: a sensorimotor loop that mediates habitual control, an associative loop that mediates goal-directed control, and a limbic loop that mediates the impact of primary reward values on habitual and goal-directed control (Balleine, 2005; Balleine & O’Doherty, 2010). Model-based RL has been linked with the associative loop (Daw, Niv, & Dayan, 2005; Dayan & Niv, 2008; Niv, 2009). Lesions of the rodent prelimbic cortex and dorsomedial striatum, parts of the associative loop, abolish sensitivity to outcome devaluation and contingency degradation, two assays used to establish that behavior is goal-directed (Balleine & O’Doherty, 2010; Ostlund & Balleine, 2005; Yin, Knowlton, & Balleine, 2005).4

Researchers have identified homologous areas in the primate brain (Wunderlich, Dayan, & Dolan, 2012). Neuroimaging studies have found that the ventromedial prefrontal cortex (vmPFC) encodes expected reward attributable to chosen actions (Daw et al., 2006; Gläscher, Hampton, & O’Doherty, 2009; Hampton, Bossaerts, & O’Doherty, 2006). Further, activity in the vmPFC is modulated by outcome devaluation (Valentin, Dickinson, & O’Doherty, 2007), and the vmPFC and dorsomedial striatum (the anterior caudate) detect the strength of the contingency between actions and rewards (Liljeholm et al., 2011; Tanaka, Balleine, & O’Doherty, 2008). These findings demonstrate that the vmPFC and dorsomedial striatum are sensitive to the defining properties of goal-directed control.

Decision policy

The prefrontal cortex supports more abstract forms of responses. For example, certain neurons in the lateral prefrontal cortex (latPFC) appear to encode task sets, or “rules” that establish context-dependent mappings between stimuli and responses (Asaad, Rainer, & Miller, 2000; Mansouri, Matsumoto, & Tanaka, 2006; Muhammad, Wallis, & Miller, 2006; White & Wise, 1999). Human neuroimaging experiments underscore the involvement of the latPFC in rule retrieval and use (Bunge, 2004). The task sets evoked in these studies are akin to a literal decision policy.

The PFC also supports sequential choice behavior. For example, in a maze navigation task, neurons in the latPFC exhibited sensitivity to initial, intermediate, and final goal positions prior to movement onset (Mushiake et al., 2006; Saito et al., 2005). Additionally, human neuroimaging experiments show that the PFC is active in tasks that require planning, and that PFC activation increases with planning difficulty (Anderson, Albert, & Fincham, 2005; Owen, 1997). Moreover, latPFC damage impairs planning and rule-guided behavior while leaving other types of responses intact (Bussey, Wise, & Murray, 2001; Fuster, 1997; Hoshi, Shima, & Tanji, 2000; Owen, 1997; Shallice, 1982). Collectively, these results highlight the involvement of the PFC in facets of model-based RL. That is not to say that the PFC only performs model-based RL. Rather, model-based RL draws on a multitude of functions performed by the PFC and other regions as described above.

Hybrid Models

The computational literature contains proposals for pairing model-free and model-based approaches. There is evidence that the brain also combines model-free and model-based RL. For example, some computational algorithms augment model-free learning by replaying or simulating experiences offline (e.g., the Dyna-Q algorithm; Lin, 1992; Sutton, 1990). The model-free system treats simulated episodes as real experience to accelerate TD learning. In a similar way, hippocampal replay may facilitate model-free RL by allowing the individual to update cached values offline using simulating experiences (Gershman, Markman, & Otto, in press; Johnson & Redish, 2005). Thus, the model-based system may train a model-free controller.

Other computational algorithms use cached values to limit the depth of search in model-based RL (Samuel, 1959). This is especially pertinent in light of the limited capacity of human short-term memory. For example, although a chess player has complete knowledge of the environment, and can enumerate the full state-space to discover the optimal move in principle, chess masters consider a far smaller subspace before acting (de Groot, 1946/1978). This amounts to pruning the branches of the decision tree (Huys et al., 2012). Rather than exhaustively calculating future reward, the individual estimates future reward using heuristics (Newell & Simon, 1972) or cached values from the model-free system (Daw, Niv, & Dayan, 2005). Although neural evidence for such pruning is sparse, one study demonstrated that ventral-striatal neurons responded when rats received reward and when they engaged in vicarious trial-and-error behavior at choice points in a T-maze (van der Meer & Redish, 2009). Thus, the model-free system may contribute information about branch values to a model-based controller.

Problems and Paradigms in Sequential Choice

Model-free and model-based RL provide normative solutions to the problem of temporal credit assignment, and neuroscientific investigations have begun to map components of these frameworks onto distinct neural substrates (Table 1). We now turn to empirical work that presents variants of the temporal credit assignment problem. We ask whether humans and animals can cope with these challenges. If so, how, and if not, why not?

Table 1.

Candidate Structures Implementing Model-Free and Model-Based RL

| Component | Structure |

|---|---|

| Model-free RL | |

| Prediction error | Substantia nigra pars compacta |

| Ventral tegmental area | |

| Actor | Ventral striatum |

| Critic | Dorsolateral striatum |

|

| |

| Model-based RL | |

| Reward function | Orbitofrontal cortex |

| Transition function | Hippocampus |

| Cerebellum | |

| Dorsomedial striatum | |

| Ventromedial prefrontal cortex | |

| Decision policy | Lateral prefrontal cortex |

Second-Order Conditioning and Secondary Reinforcers

In the archetypal classical conditioning experiment, a conditioned stimulus (CS) precedes an unconditioned stimulus (US). For example, a dog views a light (the CS) before receiving food (the US). Initially, the US evokes an unconditioned response, such as salivation, but the CS does not. When the CS and US are repeatedly paired, however, the CS comes to evoke a conditioned response, salivation, as well (Pavlov, 1927). Holland and Rescorla (1975) asked whether second-order conditioning was possible – that is, can a CS be used to condition a neutral stimulus? They found that when a neutral stimulus was paired with a CS, the neutral stimulus came to evoke a conditioned response as well.

The spread of reinforcement is also seen in tasks that require behavioral responses. In the standard instrumental conditioning paradigm, an animal performs a response and receives reward. For example, a pigeon presses a lever and is given a food pellet. Skinner (1938) asked whether a neutral stimulus, once conditioned, could act as a secondary reinforcer – that is, can a CS shape instrumental responses? Indeed, when an auditory click was first associated with food pellets, pigeons learned to press a lever that simply produced the auditory click (Skinner, 1938).

In TD learning, a model-free technique, the CS inherits the value of the US that follows it. Consequently, the CS can condition neutral stimuli (i.e., second-order conditioning), and the CS can support the acquisition of instrumental responses (i.e., secondary reinforcement). By this view, the CS mediates behavior directly through its reinforcing potential. Model-based accounts can also accommodate these results. The individual may learn that a neutral stimulus or action leads to the CS, and that the CS leads to the US. By this view, the CS mediates behavior indirectly through its link with the US.

The question of model-free or model-based RL maps onto the classic question of stimulus-response or stimulus-stimulus association. Some results are consistent with the model-free/stimulus-response position. For example, in higher-order conditioning experiments, animals continue to exhibit a conditioned response to the second-order CS even after the first-order CS is extinguished (Rizley & Rescorla, 1972). This shows that once conditioning is complete, the second-order conditioned response no longer depends on the first-order CS. Other results are consistent with the model-based/stimulus-stimulus position. For example, in sensory preconditioning, the second stimulus is paired with the first stimulus, and the first stimulus is only then paired with the US (Brogden, 1939; Rizley & Rescorla, 1972). Once conditioning is complete, the second-order CS evokes a conditioned response even though it was never paired with the revalued, first-order CS. This shows that conditioning can occur even if the training schedule does not permit the backward propagation of reward to the second-order CS.

Physiological studies

Some of the strongest evidence for TD learning comes from studies of the phasic responses of dopamine neurons to rewards and reward-predicting stimuli. The dopamine response conforms to basic properties of the reward prediction error signal in classical and instrumental tasks (Pan et al., 2005; Schultz, 1998), and during self-initiated movement sequences (Wassum et al., 2012). More nuanced tests substantiate the dopamine prediction error hypothesis. First, dopamine neurons respond to the earliest predictors of reward in classical and instrumental conditioning tasks (Schultz, Apicella, & Ljungberg, 1993). Second, dopamine neurons respond more strongly to stimuli that predict probable rewards (Fiorillo, Tobler, & Schultz, 2003) and rewards with large magnitudes (Morris et al., 2006; Satoh et al., 2003; Tobler, Fiorillo, & Schultz; 2005). Additionally, when probability and magnitude are crossed, dopamine neurons respond to expected value rather than to the constituent parts (Tobler, Fiorillo, & Schultz, 2005). Third, in blocking paradigms, animals fail to learn associations between blocked stimuli and rewards. Accordingly, dopamine neurons respond more weakly to blocked stimuli than to unblocked stimuli (Waelti, Dickinson, & Schultz, 2001). Fourth and finally, animals and humans discount delayed rewards (Frederick, Loewenstein, & O’Donoghue, 2002). Dopamine neurons also respond more weakly to stimuli that predict delayed rewards (Kobayashi & Schultz, 2008; Roesch, Calu, & Schoenbaum, 2007).

FMRI studies

Researchers have attempted to identify prediction error signals in humans using fMRI. Many experiments have examined BOLD responses to parametric manipulations expected to produce prediction errors. Others have used hidden-variable analyses in conjunction with TD models to identify regions where activation correlates with a prediction error signal. Both approaches consistently show that prediction errors modulate activity throughout the striatum, a region densely innervated by dopamine neurons (Berns et al., 2001; Delgado et al., 2003; McClure, Berns, & Montague, 2003; O’Doherty et al., 2004; O’Doherty, Hampton, & Kim, 2007; Pagnoni et al., 2002; Rutledge et al., 2010).

A characteristic feature of the TD learning signal and of dopamine responses is that they propagate back to the earliest outcome predictor. This is also true of BOLD responses. In one illustrative study, participants underwent appetitive conditioning (O’Doherty et al., 2003). Activity in the ventral striatum at the time of reward delivery conformed to a prediction error signal. The BOLD response was maximal when a pleasant liquid was unexpectedly delivered, and the response was minimal when a pleasant liquid was unexpectedly withheld. Activity at the time of stimulus presentation also conformed to a prediction error signal. The BOLD response was greatest when the stimulus predicted delivery of the pleasant liquid.

Other fMRI studies have replicated the finding that conditioned stimuli evoke neural prediction errors during classical and instrumental conditioning (Abler et al., 2006; O’Doherty et al., 2004; Tobler et al., 2006). In one such study, cues predicted rewards with probabilities ranging from 0% to 100% (Abler et al., 2006). Following cue presentation, activity in the nucleus accumbens (NAc) increased as a linear function of reward probability, and following reward delivery, activity increased as a linear function of the unexpectedness of reward. NAc activity also increases with anticipated reward magnitude (Knutson et al., 2005).

In these examples, conditioning was successful. In contrast, when a blocking procedure is used, participants fail to associate blocked stimuli with rewards (Tobler et al., 2006). Paralleling this behavioral result, the ventral putamen showed weaker responses to blocked stimuli than to unblocked stimuli. Additionally, and as anticipated by TD learning, the ventral putamen showed greater responses to rewards that followed blocked stimuli than to rewards that followed unblocked stimuli.

These studies support the notion that the BOLD signal in the striatum conveys a model-free report. One recent study challenges that notion, however (Daw et al. 2011). In that study, participants made two selections before receiving feedback. The first selection led to one of two intermediate states with fixed probabilities (Figure 2). Participants memorized these probabilities in advance. The second selection, made from the intermediate state, was rewarded probabilistically. Participants learned these probabilities during the experiment. Model-based and model-free RL make opposing predictions for how outcomes will influence first-stage selections. Model-free RL credits first- and second-stage selections for outcomes, and so predicts that participants will repeat first-stage selections whenever they receive reward. Model-based RL only credits second-stage selections for outcomes, and so predicts that participants will favor the first-stage selection that leads to the most rewarding intermediate state. Consequently, model-based RL predicts that the individual will repeat first-stage selections when the expected transition occurs and the trial is rewarded, and when the unexpected transition occurs and the trial is not rewarded.

Figure 2.

Transition structure in sequential choice task (Daw et al., 2011). The first selection lead to one of two intermediate states with fixed probabilities, and the second selection was rewarded probabilistically.

Participants’ behavior reflected a blend of these predictions: they were more likely to repeat the initial selection when the trial was rewarded, and they did so most often when the initial selection led to the expected intermediate state. To account for these results, Daw et al. (2011) proposed a hybrid model that combined estimated action values from model-free and model-based controllers. Daw et al. generated prediction errors using TD learning. They also generated prediction errors based on the difference between the value of the actual outcome and the value predicted by the model-based controller. BOLD responses in the ventral striatum correlated with the difference between outcomes and model-free predictions, supporting the idea that the ventral striatum is involved in TD learning. BOLD responses further correlated with the difference between outcomes and model-based predictions, however. Daw et al. (2011) concluded that the ventral striatum did not literally implement model-based RL, as this result might suggest. Rather, other regions that implement model-based RL influenced the utility values represented in the ventral striatum.

Electrophysiological studies

Researchers have also attempted to identify neural prediction errors in humans using scalp-recorded event-related potentials (ERPs). Early studies revealed a frontocentral error-related negativity (ERN) that appeared 50 to 100 ms after error commission (Falkenstein et al., 1991; Gehring et al., 1993). Subsequent studies have revealed a frontocentral negativity that appears 200 to 300 ms after the display of aversive feedback (Miltner, Braun, & Coles, 1997). Many features of this feedback-related negativity (FRN) indicate that it relates to reward prediction error. First, the FRN amplitude depends on the difference between actual and expected reward (Holroyd & Coles, 2002; Walsh & Anderson, 2011a, 2011b). Second, the FRN amplitude correlates with post-error adjustment (Cohen & Ranganath, 2007). Third, converging methodological approaches indicate that the FRN originates from the anterior cingulate (Holroyd & Coles, 2002), a region implicated in cognitive control and behavioral selection (Kennerley et al., 2006). These ideas have been synthesized in the reinforcement learning theory of the error-related negativity (RL-ERN), which proposes that midbrain dopamine neurons transmit a prediction error signal to the anterior cingulate, and that this signal reinforces or punishes actions that preceded outcomes (Holroyd & Coles, 2002; Walsh & Anderson, 2012).

According to RL-ERN, outcomes and stimuli that predict outcomes should be able to evoke an FRN. To test this hypothesis, researchers have examined whether predictive stimuli produce an FRN. In some studies, cues perfectly predicted outcomes. In those studies, ERPs were more negative after cues that predicted losses than after cues that predicted wins (Baker & Holroyd, 2009; Dunning & Hajcak, 2007). In other studies, cues provided probabilistic information about outcomes. There too, ERPs were more negative after cues that predicted probable losses than after cues that predicted probable wins (Holroyd, Krigolson, & Lee, 2011; Liao et al., 2011; Walsh & Anderson, 2011a). In each case, the latency and topography of the cue-locked FRN coincided with the feedback-locked FRN.

RL-ERN is but one account of the FRN (Holroyd & Coles, 2002). According to another proposal, the anterior cingulate monitors response conflict (Botvinick et al., 2001; Yeung et al., 2004). Upon detecting coactive, incompatible responses, the anterior cingulate signals the need to increase control in order to resolve the conflict. Consistent with this view, ERP studies have revealed a frontocentral negativity called the N2 that appears when participants must inhibit a response (Pritchard et al., 1991). Source localization studies indicate that the N2, like the FRN, arises from the anterior cingulate (van Veen & Carter, 2002; Yeung et al., 2004). Further, fMRI studies have reported enhanced activity in the anterior cingulate following incorrect responses as well as correct responses under conditions of high response conflict (Carter et al., 1998; Kerns et al., 2004).

RL-ERN focuses on the ERN and the FRN, whereas the conflict monitoring hypothesis focuses on the N2 and the ERN. RL-ERN can be augmented to account for the N2, however, by assuming that conflict resolution incurs cognitive costs, penalizing high conflict states (Botvinick, 2007). Alternatively, high conflict states may have lower expected value because they engender greater error likelihoods (Brown & Braver, 2005).

Goal Gradient

Hull noted that reactions to stimuli followed immediately by rewards were conditioned more strongly than reactions to stimuli followed by rewards after a delay (1932). Based on this observation, he proposed that the power of primary reinforcement transferred to earlier stimuli, producing a spatially extended goal gradient that decreased in strength with distance from reward. The concept of the goal-gradient has inspired much research on maze learning. This theory predicts that errors will occur most frequently at early choice points in mazes. Because future reward is discounted, the values of states and actions most remote from reward are near zero. As the difference between the values of actions decreases, the probability that the individual will select the correct response with a softmax selection rule (Eq. 4) decreases as well.

Consistent with these predictions, rats make the most errors at choice points furthest from reward (Spence, 1932; Tolman & Honzik, 1930). Researchers have since identified other factors that affect maze navigation. For example, subjects enact incorrect responses that anticipate future choice points (Spragg, 1934), they more frequently enter blinds oriented toward the goal (Spence, 1932), and they commit a disproportionate number of errors in the direction of more probable turns (Buel, 1935). Although a multitude of factors affect maze learning, the backward elimination of blinds operates independently of these factors.

Fu and Anderson (2006) asked whether humans also show a goal gradient. In their task, participants navigated through rooms in a virtual maze (Figure 3). Each room contained one object that marked its identity, and two cues that participants chose between. After choosing a cue, participants transitioned to a room that contained a new object and cues. If participants selected the correct cues in Rooms 1, 2, and 3, they arrived at the exit. If they made an incorrect selection in any room, they ultimately arrived at a dead end. Participants only received feedback upon reaching the exit or a dead end, and upon reaching a dead end, they were returned to the last correct room. The goal-gradient hypothesis predicts that errors will be most frequent in Room 1, followed by Room 2, and then by Room 3. The results of the experiment confirmed this prediction.

Figure 3.

Experiment interface (left) and maze structure with correct path in gray (right)(Fu & Anderson, 2006). To exit the maze, participants needed to select the correct cues in Rooms 1, 2, and 3.

Fu and Anderson fit a SARSA model to their data. Like participants, the model produced a negatively accelerated goal gradient. This is a natural consequence of discounting future reward (i.e., application of γ in Eq. 1). The maximum reward that can reach a room, RD, decreases as a function of its distance (D) from the exit,

| (13) |

The discount term (γ) controls the steepness of the gradient. This function is equivalent to one derived by Spence to describe the goal gradient in rat maze learning (1932).

This interpretation of the goal gradient is in terms of model-free RL. Might a model-based controller also exhibit a goal gradient? Yes. Future reward is discounted in some instantiations of forward search (Eqs. 11 and 12). Consequently, differences among state and action values decrease with their distance from reward. In other versions of model-based RL, future reward is not discounted but error is accrued with each subsequent step in forward search (Daw, Niv, & Dayan, 2005). The compounding of error with increasing search depth would yield a goal gradient as well.

Eligibility traces

Fu and Anderson’s model predicted slower learning in Room 1 than was observed. This relates to a weakness of TD learning: when reward follows a delay, credit slowly propagates to distant states and actions. Walsh and Anderson (2011a) directly compared the behavior of TD models with and without eligibility traces in a sequential choice task. Like Fu and Anderson (2006), they found that models without eligibility traces learned the initial choice in a sequence more slowly than participants did. The addition of eligibility traces resolved this discrepancy.

In these examples, deviations between model predictions and behavior were slight. Even moderately complex problems exacerbate the challenge of assigning credit to distant states and actions, however (Janssen & Gray, 2012). For instance, Gray et al. (2006) found that Q-learning required 100,000 trials to match the proficiency of human participants after 50 trials. Eligibility traces greatly accelerate learning in such cases where rewards are delayed by multiple states.

Latent Learning and Detour Behavior

Latent learning

Work aimed at distinguishing between stimulus-response and cognitive learning theories provided early support for model-based RL. Two classic examples are latent learning and detour behavior. Latent learning experiments examine whether individuals can learn about the structure of the environment in the absence of reward. In one such experiment, rats navigated through a compound T-maze until they reached an end box (Blodgett, 1929). Following several unrewarded sessions, food was placed at the end box. After discovering the food, rats committed far fewer errors as they navigated to the end box in the next trial. This indicates that they acquired information about the structure of the maze during training and in the absence of reward.5

In a recent study of latent learning in humans, participants navigated through two intermediate states before arriving at a terminal state (Gläscher et al., 2010). Before performing the task, they learned the transition probabilities leading to terminal states, and they then learned the reward values assigned to terminal states. Gläscher et al. compared the behavior of three models to participants’ performance at test. The SARSA model used reward prediction errors to learn action values from experience during the test phase. The FORWARD model used knowledge of the transition and reward probabilities to calculate action values prospectively with policy iteration. Lastly, the HYBRID model averaged action values from the separate SARSA and FORWARD models. Gläscher et al. found that the HYBRID model best matched participants’ behavior. The FORWARD component accounted for the fact that participants immediately exercised knowledge of the transition and reward functions, and the SARSA component accounted for the fact that they continued to learn from experience during the test.

By collecting neuroimaging data as participants performed the task, Gläscher et al. could search for neural correlates of model prediction errors. Reward prediction errors (δt) generated by the SARSA model correlated with activity in the ventral striatum, corroborating other reports of this region’s involvement in model-free RL. State prediction errors (δSPE; Eq. 9) generated by the FORWARD model correlated with activity in the intraparietal sulcus and the latPFC, indicating that these regions contribute to the acquisition or storage of a state transition function. The finding that the latPFC represents transitions is consistent with the role of this region in planning (Fuster, 1997; Mushiake et al., 2006; Owen, 1997; Saito et al., 2005; Shallice, 1982).

Detour behavior

Detour behavior experiments examine how individuals adjust their behavior upon detecting environmental change. In one such study (Tolman & Honzik, 1930), rats selected from three paths, two of which shared a segment leading to the end box (Figure 4). When Path 1 was blocked at point A, rats immediately switched to Path 2, and when Path 1 was blocked at point B, rats immediately switched to Path 3. This indicates that they revised their internal model upon encountering detours, and that they used this revised model to identify the shortest remaining path.

Figure 4.

Maze used to assess detour behavior in rats (Tolman & Honzik, 1930). In different trials, detours were placed at points A and B.

Simon and Daw asked whether humans were as sensitive to detours (2011). In their task, participants navigated through a grid of rooms, some of which contained known rewards. Doors connecting the rooms changed between trials, eliminating old paths and creating new paths. Simon and Daw fit model-free and model-based controllers to each participant’s behavior, and found that the model-based controller best accounted for nearly all participants’ choices. This result indicates that humans, like rats, update their model of the environment upon encountering detours and that they use this model to infer the shortest path to the goal.

Simon and Daw used fMRI to identify neural correlates of model-free and model-based prediction errors. Activity in the dorsolateral PFC and OFC correlated with model-based values. Additionally, activity in the ventral striatum, though correlated with model-free values, was more strongly correlated with model-based values. Simon and Daw also identified regions that responded according to the expected value of the reward in the next room. This analysis revealed significant clusters of activation in the superior frontal cortex and the parahippocampal cortex.

The discovery that model-based value signals correlated with activity in the dorsolateral PFC and OFC is consistent with the purported role of these regions in planning. Additionally, the finding that future reward correlated with activity in the PFC and MTL is consistent with the idea that these regions contribute to goal-directed behavior. The discovery that activity in the ventral striatum correlated more strongly with model-based than with model-free value signals is surprising, however, because this region is typically associated with TD learning. As in Daw et al. (2011), this result need not imply that the striatum itself implements model-based RL. Rather, other regions that implement model-based RL may bias striatal representations of action values.6

Partially Observable Markov Decision Processes (POMDP)

Most studies of choice involve Markov decision processes (MDP). The key feature of MDPs is that state transitions and rewards depend on the current state and action, but not on earlier states or actions. An important challenge is to extend learning theories to partially observable Markov decision processes (POMDPs). In POMDPs, the system’s dynamics follow the Markov property, but the individual cannot directly observe the system’s underlying state. POMDPs can be dealt with in three ways. First, the individual can simply ignore hidden states (Loch & Singh, 1998). Second, the individual can maintain a record of past states and actions to disambiguate the current state (McCallum, 1995). Third, the individual can maintain a belief state vector that contains the relative likelihood of each state (Kaelbling, Littman, & Cassandra, 1998). We consider each of these alternatives in turn.

Eligibility traces

In one task that violated the Markov property, participants selected from two images (Tanaka et al., 2009). For some image pairs, monetary reward or punishment was delivered immediately. For other image pairs, reward or punishment was delivered after three trials (Figure 5). In trials with delayed outcomes, the hidden state is the correctness of the response, which affects the value of the score displayed three trials later. Tanaka et al. asked whether participants could learn correct responses for the outcome-delayed image pairs. Although one response resulted in a future loss, and the other in a future gain, the states that immediately followed both responses mapped onto the same visual percept. Because TD learning calculates future reward based on the value of the state that immediately follows an action (Eq. 1), this creates a credit assignment bottleneck. Yet participants learned the correct responses for the outcome-delayed image pairs.

Figure 5.

Delayed reward task (Tanaka et al., 2009). Some rewards were delivered immediately (trial t + 1), and some rewards were delivered after a delay (trial t + 3).

Tanaka et al. evaluated two computational models. The first used internal memory elements to store the past three decisions. The representation of states in this model included the last three decisions and the current image pair. This representation restores the Markov property, but expands the size of the state space. The second model applied TD learning with eligibility traces to observable states, and did not store past decisions. The eligibility trace model better accounted for participants’ choices, while the model with internal memory elements heavily fractionated the state space resulting in slow learning. In a related study, participants learned the initial and final selections in a sequential choice task even though the final selection violated the Markov property (Fu & Anderson, 2008a). Although Fu and Anderson interpreted their results in terms of TD learning, eligibility traces would be needed to acquire the initial response in their task as well.

How do eligibility traces allow TD learning to overcome these credit assignment bottlenecks? Because both actions lead to the same intermediate state, the intermediate outcome does not adjudicate between actions. When the final outcome is delivered, wins produce positive prediction errors and losses produce negative prediction errors. Because the initial action remains eligible for update, the TD model can assign these later, discriminative prediction errors to earlier actions.

We have considered violations of the Markov property in sequential choice tasks. Embodied agents face a more pervasive type of credit bottleneck when perceptual and motor behaviors separate choices from rewards (Anderson, 2007; Walsh & Anderson, 2009). For example, in representative instrumental conditioning studies, a rat chooses between levers, but only receives reward upon entering the magazine (Balleine et al., 1995; Killcross & Coutureau, 2003). In a strict sense, the act of entering the magazine would block assignment of credit to the lever press. Eligibility traces are applicable to these credit assignment bottlenecks as well.

Memory

The second technique for dealing with POMDPs is to remember past states and actions to disambiguate the current state (McCallum, 1995). Tanaka et al.’s (2009) memory model, described in the previous section, did just that. Cognitive architectures that include a short-term memory component also accomplish tasks in this way (Anderson, 2007; Frank & Claus, 2006; O’Reilly & Frank, 2006; Wang & Laird, 2007). For example, in the 12-AX task, the individual views a continuous stream of letters and numbers (O’Reilly & Frank, 2006; Todd, Niv, & Cohen, 2009). The correct response mappings for the current item depend on the identity of earlier items. As such, the individual must maintain and update information about context. According to the gating hypothesis, the prefrontal cortex maintains such contextual information (O’Reilly & Frank, 2006). The same mechanism that supports the acquisition of stimulus-response mappings, the dopamine predication error signal, teaches the basal ganglia when to update the contents of working memory. Memory can also be used to disambiguate states in sequential choice tasks. For example, in the absence of salient cues, a rat must remember prior moves to determine its current location in a maze. Wang and Laird (2007) showed that a cognitive model that used past actions to disambiguate the current state better accounted for the idiosyncratic navigation errors of rats than did a model without memory.

Belief states

The final technique for dealing with POMDPs is to maintain a belief state vector that contains the relative likelihood of each state (Kaelbling, Littman, & Cassandra, 1998). Calculating these likelihoods is non-trivial when the underlying state of the system is not observable; for example, when different events or actions lead probabilistically to different states with identical appearances. Upon acting and receiving a new observation, the individual updates the belief state vector. Updated beliefs depend on states’ prior probabilities, and states’ conditional probabilities given the new observation. When paired with model-based techniques, belief states allow the individual to calculate the values of actions, weighted according to the likelihood that the individual is in each state.

Belief states have been used to capture sequential sampling results (Dayan & Daw, 2008; Rao, 2010). For example, in the dot-motion detection task, the individual reports the direction in which an array of dots is moving. Some dots move coherently and some move randomly. In the dot-motion detection task, states are the possible directions of motion, observations are the array of coherent and incoherent dots at each moment, and the belief state vector contains the subject’s expectations and confidence about the direction of motion. Belief states have also been used to model navigational uncertainty (Stankiewicz et al., 2006; Yoshida & Ishii, 2006). The challenge in these navigation tasks is to move toward an occluded location while gathering information to disambiguate one’s current location. Interestingly, in sequential sampling and navigation studies, humans perform worse than ideal observer models (Doshi-Velez & Ghahramani, 2011; Stankiewicz et al., 2006). These shortcomings have been attributed to errors in updating belief states.

Distributed Outcomes

The problem of temporal credit assignment arises when feedback follows a sequence of decisions. A related problem arises when the consequences of a decision are distributed over a sequence of outcomes. This is the case in the Harvard Game, a task where participants select between an action that increases immediate reward and an action that increases future reward (Herrnstein et al., 1993). Like Aesop’s fabled grasshopper, humans and animals struggle to forgo immediate gratification to secure future rewards when confronted with such a scenario.

In one Harvard Game experiment (Tunney & Shanks, 2002), participants chose between two actions, left and right. The immediate (or local) reward associated with left exceeded the reward associated with right by a fixed amount (Figure 6), but the future (or global) reward associated with both actions grew in proportion to the percentage of responses allocated to right during the previous ten trials. Consequently, selection of left (melioration) increased immediate reward, but selection of right (maximization) increased total reward. In this and other Harvard Game experiments, responses change the probability or magnitude of reward without altering the observable state of the system. Consequently, the basic TD model can learn only to meliorate (Montague & Berns, 2002), as do participants in most Harvard Game experiments.

Figure 6.

Harvard Game payoff functions (Tunney & Shanks, 2002). Payoff for meliorating (choose left) and maximizing (choose right) as a function of the percentage of maximizing responses during the previous ten trials.

Yet participants do not always meliorate (Tunney & Shanks, 2002). Four factors increase the percentage of maximizing responses. First, displaying cues that mark the underlying state of the system increases maximization (Gureckis & Love, 2009; Herrnstein et al., 1993). Second, shortening the inter-trial interval increases maximization (Bogacz et al., 2007). Third, maximizing is negatively associated with the length of the averaging window (i.e., the number of trials over which response allocation is computed; Herrnstein et al., 1993; Yarkoni et al., 2005). Fourth, maximizing is negatively associated with the immediate difference between the payoff functions for the two responses (Heyman & Dunn, 2002; Warry, Remington, & Sonuga-Barke, 1999).

The Harvard Game constitutes a POMDP; the probability or magnitude of reward depends on the current choice and the history of actions. One could restore the Markov property by providing participants with information about their action history. Indeed, displaying cues that mark the underlying state of the system increases maximization (Gureckis & Love, 2009; Herrnstein et al., 1993). By restoring the Markov property, cues may allow participants to credit actions for future reward based on observable states. Do POMDP techniques permit maximization when state cues are absent? Yes. A model with memory elements can record the history of actions. Such an internal record can disambiguate states in the same manner as external cues. Likewise, a model with belief states can learn the rewards associated with each of the system’s underlying states. The model can then simulate the long-term utility associated with performing sequences of minimizing and maximizing responses to decide which yields greater reward.

Eligibility traces provide an especially elegant account of various Harvard Game manipulations. Bogacz et al. (2007) modeled the effect of inter-trial interval duration using real-time decaying eligibility traces. Over long inter-trial intervals, traces decayed to zero, reducing their ability to support learning. Like participants, the model exhibited maximization when inter-trial intervals were short, and melioration when inter-trial intervals were long. Additionally, as the length of the averaging window increases, and as the difference between the payoff functions increases, the individual must integrate outcomes over a larger number of trials before the value of maximizing exceeds the value of meliorating. Melioration may ensue when the decay of eligibility traces fails to permit integration of outcomes over a sufficient number of trials. In sum, a TD model with eligibility traces can account for the results of several Harvard Game manipulations.

Hierarchical Reinforcement Learning

As the field of reinforcement learning has matured, focus has shifted to factors that limit its applicability. Foremost among these is the scaling problem: the performance of standard reinforcement learning techniques declines as the number of states and actions increase. Hierarchical reinforcement learning (HRL) is one solution to the scaling problem (Barto & Mahadevan, 2003; Botvinick, Niv, & Barto, 2009; Dietterich, 2000). The HRL framework is expanded to include temporally abstract options, representations comprised of primitive, interrelated actions. For instance, the actions involved in adding sugar to coffee (grasp spoon, scope sugar, lift spoon to cup, deposit sugar) are represented by a single high-level option (‘add sugar’). Still more complex skills (‘make coffee’) are assembled from high-level options (Botvinick, Niv, & Barto, 2009).

In HRL, each option has a designated subgoal. Pseduo-reward is issued upon subgoal completion, and is used to reinforce the actions selected as the individual enacted an option. External reward, in turn, is issued upon task completion and is used to reinforce the options selected as the individual performed the task. This segmentation of the learning episode enhances scalability in two ways. First, because pseudo-rewards are issued following subgoal completion, the individual need not wait until the end of the task to receive feedback. Thus, reward is not discounted as substantially, and the actions that comprise an option are insulated against errors that occur as the individual pursues later subgoals. Second, HRL allows the individual to learn more efficiently from experience. When options can be applied to new tasks (e.g., adding sugar to coffee, and then adding sugar to tea), the individual can recombine options rather than relearn the larger number of primitive actions they entail.

One psychological prediction of HRL is that credit assignment will occur when participants make progress toward subgoals, even when subgoals do not directly relate to primary reinforcement. To test this hypothesis, Ribas-Fernandes et al. (2011) conducted an experiment where participants navigated a cursor to an intermediate target and then to a final target. The location of the intermediate target sometimes changed unexpectedly. Location shifts that decreased the distance to the intermediate target without decreasing the distance to the final target triggered activation in the anterior cingulate cortex, a region implicated in signaling primary rewards. The shift would not be expected to produce a standard reward prediction error because it did not reduce the total distance to the final goal. The shift would be expected to produce a pseudo-reward prediction error, however, because it did reduce the distance to the intermediate subgoal. Thus, the ACC may also signal pseudo-rewards, as in the HRL framework.

Disorders and Addiction

Reinforcement learning has been applied to the study of psychological disorders (Maia & Frank, 2011; Redish, Jensen, & Johnson, 2008; Redgrave, 2010). For example, the initial stage of Parkinson’s disease is characterized by the loss of dopaminergic inputs from the SNc to the striatum (Maia & Frank, 2011; Redish, Jensen, & Johnson, 2008). Given that these regions support model-free control, it is not surprising that Parkinson patients display an impaired ability to acquire and express habitual responses. Reinforcement learning has also been applied to the study of addiction. Redish et al. (2008) describe ten vulnerabilities in the decision-making system. These constitute points through which drugs of addiction can produce maladaptive responses. Some vulnerabilities relate to the goal-direct system; for example, drugs that alter memory storage and access could affect model-based, forward search. Other vulnerabilities relate to the habit system; for example, drugs that artificially increase dopamine could inflate model-free, action value estimates.

The reinforcement learning framework offers insight into deficits in sequential choice as well. For instance, ‘impulsivity’ is defined as choosing a smaller immediate reward over a larger delayed reward. This is the case in addiction: the individual selects behaviors that are immediately rewarding but ultimately harmful. Addicts discount future reward more steeply than do non-addicts. This was demonstrated in an experiment where participants chose between a large delayed reward and smaller immediate rewards (Madden et al., 1997). Opioid-dependent participants accepted smaller immediate rewards than did controls, a result that holds across other types of addiction (Reynolds, 2006).

Impulsivity has been associated with changes in the availability of the neurotransmitter serotonin (Mobini et al., 2000). Diminished serotonin levels caused by lesions and pharmacological manipulations increase impulsivity. The impact of serotonin on choice behavior has been simulated in the reinforcement learning framework by decreasing the eligibility trace decay term or by lowering the temporal discounting rate (Doya, 2000; Schweighofer et al., 2008; Tanaka et al., 2009). Decreasing the eligibility trace decay term (λ) reduces the efficiency of propagating credit from delayed outcomes to earlier states and actions. Lowering the discounting rate (γ), in turn, minimizes the contribution of future outcomes to the calculation of utility values. In both cases, the net result is impulsive behavior.

Discussion

Psychologists have long distinguished between habitual and goal-directed behavior. This distinction was made rigorous in stimulus-response and cognitive theories of learning during the behaviorist era. Although this distinction has carried forward in studies of human and animal choice, the apparent dichotomy between these perspectives has given way to a unified view of habitual and goal-directed control as complementary mechanisms for action selection. Similar ideas have emerged in the field of artificial intelligence. Model-free RL resonates with stimulus-response theories and the notion of habitual control, whereas model-based RL resonates with cognitive theories and the notion of goal-directed control.

The pursuit of such compatible ideas in the fields of psychology and artificial intelligence is not coincidental. Humans and animals regularly face the very problems that reinforcement learning methods are designed to overcome. One such problem is temporal credit assignment. When feedback follows a sequence of decisions, how should credit be assigned to the intermediate actions that comprise the sequence? Model-free RL solves this problem by learning internal value functions that store the sum of immediate and future rewards expected from each state and action. Model-based RL solves this problem by learning state transition and reward functions, and by using this internal model to identify actions that will result in goal attainment. Not only do these techniques have a natural interpretation in terms of human and animal behavior, but they also provide a useful framework for understanding neural reward valuation and action selection. Reciprocally, the manner in which humans and animals cope with temporal credit assignment provides a model for designing artificial learning systems that can solve the very same problem (Sutton & Barto, 1998).

Throughout this review, we have emphasized the utility of RL as a model of reward valuation and action selection. We now explore three remaining questions that are central to a unified theory of habitual and goal-directed control. The answers to these questions have ramifications for theories of sequential choice, and decision making more generally.

What Factors Promote Model-Based and Model-Free Control?

Under what circumstances is behavior model-based, and under what circumstances is it model-free? Animal conditioning studies are informative with respect to this question. Animals are sensitive to outcome devaluation and contingency degradation early in conditioning but not after extended training (Adams et al., 1981; Dickinson et al., 1998), a result that has been replicated with humans (Tricomi, Balleine, & O’Doherty, 2009). This suggests that with overtraining, goal-directed (i.e., model-based) control gives way to habitual (i.e., model-free) control. Extending this result to sequential choice, Gläscher et el. (2010) found that participants’ behavior was initially predicted by a model-based controller, and later by a model-free controller.

Secondary tasks that consume attention and working memory also shift the balance from model-based to model-free control. In two experiments, Fu and Anderson asked participants to make a pair of decisions before receiving feedback (2008a, 2008b). Participants in the dual-task condition performed a memory-intensive n-back task as they made selections. Although participants ultimately learned both choices in the single- and dual-task conditions, the order in which they learned the choices differed. Participants in the dual-task condition learned the second choice before the first. This is consistent with a TD model in which reward propagates from later states to earlier states. Conversely, participants in the single-task condition learned the first choice before the second. This is consistent with a model in which each of the choices and the outcome are encoded in memory, but the first choice is encoded more strongly owing to a primacy advantage (Drewnowski & Murdock, 1980). Likewise, Otto et al. (in press) found that having participants perform a demanding secondary task engendered reliance upon a model-free control strategy in the primary, sequential choice task. In the absence of the secondary task, participants reverted to a model-based control strategy.

Finally, time constraints evoke model-free control. In one study that examined this issue, participants learned the transition function for a grid navigation task (Fermin et al., 2010). At test, participants navigated to a novel location or to a well-rehearsed location. Fermin et al. manipulated start time by presenting the go signal immediately after the goal location appeared, or after a six-second delay. Prestart delay facilitated performance when participants navigated to a novel location but not when they navigated to a well-rehearsed location. This suggests that participants planned the sequence of moves to the novel location in the six-second start time condition, and that they used an automatized sequence of moves to navigate to well-rehearsed locations in both start time conditions. The finding that prestart delay facilitated performance when participants navigated to novel locations highlights the time costs associated with forward search. This echoes other findings that planning times increase with search depth and complexity (Hayes, 1965; Owen et al., 1995; Simon & Daw, 2011).

How Does the Cognitive System Arbitrate Between Model-Based and Model-Free Control?

According to several proposals, arbitration between model-free and model-based control is guided by two conflicting constraints (Daw, Niv, & Dayan, 2005; Fermin et al., 2010; Keramati, Dezfouli, & Piray, 2011). First, the computational simplicity of model-free RL permits rapid response selection whereas model-based RL requires a time consuming search. Second, model-free RL is inflexible in adapting to changing conditions whereas model-based RL can quickly adapt with experience. Consequently, model-based RL is initially favored for its greater accuracy, and model-free RL is ultimately favored for its greater efficiency. When approximation techniques such as pruning are employed, model-based estimates remain uncertain despite extended training, further favoring the transition to model-free control (Daw, Niv, & Dayan, 2005).