Summary

Gaussian graphical models are useful for analyzing and visualizing conditional dependence relationships between interacting units. Motivated by network analysis under different experimental conditions, such as gene networks for disparate cancer subtypes, we model structural changes over multiple networks with possible heterogeneities. In particular, we estimate multiple precision matrices describing dependencies among interacting units through maximum penalized likelihood. Of particular interest are homogeneous groups of similar entries across these matrices and their zero entries, referred to as clustering and sparseness structures, respectively. A non-convex method is proposed to seek a sparse representation for each matrix and identify clusters of the entries across the matrices. Computationally, we develop an efficient method on the basis of difference convex programming, the augmented Lagrangian method and the block-wise coordinate descent method. The method is scalable to hundreds of graphs with thousands of nodes through a simple necessary and sufficient partition rule, which divides nodes into smaller disjoint subproblems, excluding zero-coefficient nodes, for arbitrary graphs with convex relaxation. Theoretically, a finite-sample error bound is derived for the proposed method to reconstruct the clustering and sparseness structures. This leads to consistent reconstruction of these two structures simultaneously, permitting the number of unknown parameters to be exponential in the sample size, and yielding the optimal performance of the oracle estimator as if the true structures were given a priori. Simulation studies suggest that the method enjoys the benefit of pursuing these two disparate kinds of structures, and compares favorably against its convex counterpart in the accuracy of structure pursuit and parameter estimation.

Keywords: Simultaneous pursuit of sparseness and clustering, multiple networks, non-convex, prediction, signaling network inference

1 Introduction

Graphical models are widely used to describe relationships among interacting units. Major components of the models are nodes that represent random variables, and edges encoding conditional dependencies between the nodes. Of great current interest is the identification of certain lower-dimensional structures for undirected graphs. The central topic of this paper is maximum penalized likelihood estimation of multiple Gaussian graphical models for simultaneously pursuing two disparate kinds of structures: sparseness and clustering.

In the literature on Gaussian graphical models, current research effort has concentrated on reconstruction of a single sparse graph. Methods to exploit matrix sparsity include [1, 5, 11, 13, 14, 15, 24], among others. For multiple Gaussian graphical models, existing approaches mainly focus on either exploring temporal smoothing structure [7, 25] or encouraging common sparsity across the networks [6, 10]. In this paper, we focus on pursuing both clustering and sparseness structures over multiple graphs, including temporal clustering as a special case while allowing for abrupt changes of structures over graphs. For multiple graphs without a temporal ordering, our method makes it possible to identify element-wise heterogeneity among undirected graphs. This is motivated by heterogeneous gene regulatory networks corresponding to disparate cancer subtypes [18, 21]. In such a situation, the overall associations among genes remain similar for each network, whereas specific pathways and certain critical nodes (genes) may be differentiated under disparate conditions.

For multiple Gaussian graphical models, estimation is challenging due to the enormous number of candidate graphs, of order $2^{Lp^2}$, where p is the total number of nodes and L is the total number of graphs. To battle the curse of dimensionality, we explore two dissimilar types of structures simultaneously: (1) sparseness within each graph and (2) element-wise clustering across graphs. The benefit of this exploration is three-fold. First, it goes beyond sparseness pursuit alone for each graph, which is usually inadequate given a large number of unknown parameters relative to the sample size, as demonstrated in four numerical examples in Section 5. Second, borrowing information across graphs enables us to detect the changes of sparseness and clustering structures over the multiple graphs. Third, pursuit of these two structures at the same time is suited for our problem, which seeks both similarities and differences among the multiple graphs.

To this end, we propose a regularized/constrained maximum likelihood method for simultaneous pursuit of sparseness and clustering structures. Computationally, we develop a strategy to convert the optimization involving matrices to a sequence of much simpler quadratic problems. Most critically, we derive a necessary and sufficient partition rule to partition the nodes into disjoint subproblems, excluding zero-coefficient nodes, for multiple arbitrary graphs with convex relaxation, where the rule is applied before computation is performed. Such a rule has been used in [12] for convex estimation of a single matrix, but has not been available for multiple arbitrary graphs, to our knowledge. This makes efficient computation possible for multiple large graphical models, which otherwise is rather difficult if not impossible. Theoretically, we develop a novel theory for the proposed method, and show that it reconstructs the oracle estimator as if the true sparseness and element-wise clustering structures were given a priori, which leads to consistent reconstruction of the two types of structures. This occurs roughly when the total size of the L matrices, $p^2L$, is of order exp(An), where p is the dimension of the matrices and A is related to the Hessian matrices of the negative log-determinant of the true precision matrices and the resolution level for simultaneous pursuit of sparseness and element-wise clustering, cf. Corollary 2. Moreover, we quantify the improvement due to structural pursuit beyond that of sparsity.

The rest of this article is organized as follows. Section 2 introduces the proposed method. Section 3 is devoted to estimation of partial correlations across multiple graphical models, and develops computational tools for efficient computation. Section 4 presents a theory concerning the accuracy of structural pursuit and parameter estimation, followed by some numerical examples in Section 5 and an application to signaling network inference in Section 6. Section 7 discusses various issues in modeling. Finally, the appendix contains proofs.

2 Proposed method

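Consider the L-sample problem with the lth sample $Y^{(l)}_1, \dots, Y^{(l)}_{n_l}$ from $N(\mu_l, \Sigma_l)$; l = 1,...,L. We estimate Ω = (Ω1,..., ΩL), where $\Omega_l = \Sigma_l^{-1}$ is the p × p inverse covariance matrix, which is positive definite, denoted by $\Omega_l \succ 0$; μl and Σl are the corresponding mean vector and covariance matrix; and the total sample size is $n = \sum_{l=1}^{L} n_l$.

For maximum likelihood estimation, the profile log-likelihood for Ω, after μ1,..., μL are maximized out, is proportional to

$$\sum_{l=1}^{L} n_l \left( \log\det(\Omega_l) - \mathrm{tr}(S_l \Omega_l) \right), \qquad (1)$$

where $\bar{Y}^{(l)} = n_l^{-1} \sum_{i=1}^{n_l} Y^{(l)}_i$ and $S_l = n_l^{-1} \sum_{i=1}^{n_l} (Y^{(l)}_i - \bar{Y}^{(l)})(Y^{(l)}_i - \bar{Y}^{(l)})^T$ are the corresponding sample mean and covariance matrix, and det and tr denote the determinant and trace. In (1), the number of unknown parameters in Ω can greatly exceed the sample size n.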
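As a rough illustration of the quantity in (1), the sketch below evaluates the sum over groups of n_l (log det Ω_l − tr(S_l Ω_l)) with NumPy. The function name `profile_loglik`, the toy data, and the use of the divide-by-n_l sample covariance are assumptions made for this example, not details taken from the paper.

```python
import numpy as np

def profile_loglik(samples, precisions):
    """Sum over l of n_l * (log det(Omega_l) - tr(S_l Omega_l))."""
    total = 0.0
    for Y, Omega in zip(samples, precisions):
        n_l = Y.shape[0]
        centered = Y - Y.mean(axis=0)        # subtract the group's sample mean
        S = centered.T @ centered / n_l      # sample covariance (divides by n_l)
        sign, logdet = np.linalg.slogdet(Omega)
        if sign <= 0:
            # A positive determinant is necessary (not sufficient) for
            # positive definiteness; this is only a cheap sanity check.
            raise ValueError("precision matrix must have a positive determinant")
        total += n_l * (logdet - np.trace(S @ Omega))
    return total

# Toy data (hypothetical): L = 2 groups, p = 3 variables, 50 observations each.
rng = np.random.default_rng(0)
samples = [rng.normal(size=(50, 3)) for _ in range(2)]
identity = [np.eye(3), np.eye(3)]
scaled = [0.01 * np.eye(3), 0.01 * np.eye(3)]
```

Because the toy data are standard normal, the identity precision matrices should score higher than the badly scaled ones.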

2.1 General penalized multiple precision matrices estimation

To avoid non-identifiability in (1) and encourage low-dimensional structures, we propose a regularized maximum likelihood approach through penalty functions Jjk(·):

| (2) |

where Ω = (Ω1,... ,ΩL) and only the off-diagonals {ωjkl} of Ωl are regularized. Note that Jjk(·) could be any function that penalizes jk-th entries across Ωl's. This encompasses many existing penalty-based approaches for multiple Gaussian graphical models [6, 22] as special cases.

In general, the maximization problem (2) involving L matrices is computationally difficult. To meet the computational challenges, we develop a general block-wise coordinate descent strategy to reduce (2) to an iterative procedure involving much easier subproblems. Before proceeding, we introduce some notation. Let the jth row (or column) of Ωl be ωjl, let ω−jl = (ωj1l, ..., ωj(j−1)l, ωj(j+1)l, ..., ωjpl) be a (p − 1)-dimensional vector, excluding the jth component of ωjl, and Ω−jl be the sub-matrix without the jth row and column of Ωl, and be the inverse of Ω−jl.

Our proposed method maximizes (2) by sweeping each row (or column) of Ω across l = 1,... ,L. Using the property that with T indicating the transpose, we rewrite (2), after ignoring constant terms, as a function

| (3) |

First, for each fixed row (or column) of Ω across l = 1,... ,L, we maximize (3) over the diagonals (ωjj1,... ,ωjjL) given the corresponding off-diagonals (ω−j1,..., ω−jL). Setting the partial derivatives of (3) with respect to the diagonals to zero yields the profile maximizer of (2)

| (4) |

Second, substituting (4) into (3) yields the negative profile likelihood of (2) for (ω−j1,... ,ω−jL)

| (5) |

Third, the aforementioned process is repeated for each row (or column) of Ω until a certain stopping criterion is satisfied. As summarized in Theorem 1, profiling is equivalent to the original problem for separable convex penalty functions.

Theorem 1 Iteratively minimizing (5) over the off-diagonals (ω−j1,... ,ω−jL) and updating diagonals ωjjl by (4); j = 1,... ,p, l = 1,... ,L converges to a local maximizer of (2). Moreover, if Jjk(·) are convex, it converges to a global maximizer.

Theorem 1 reduces (2) to iteratively solving (5), which is quadratic in its argument. On this ground we design efficient methods for solving (2) with a specific choice of Jjk(·) next.
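The row-wise profiling step rests on a Schur-complement identity for the determinant, det(Ω) = det(Ω_{−j}) (ω_jj − ω_{−j}^T Ω_{−j}^{-1} ω_{−j}). The sketch below checks this identity numerically for a single matrix. It also checks that, for the unpenalized diagonal, the terms log(ω_jj − c) − s_jj ω_jj are maximized at ω_jj = c + 1/s_jj, where c denotes the quadratic form above. This closed form is what setting the derivative to zero gives for a Gaussian log-likelihood; it is assumed here to play the role of the diagonal update, and all variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
p, j = 5, 2
A = rng.normal(size=(p, p))
Omega = A @ A.T + p * np.eye(p)               # a positive definite precision matrix
S = np.linalg.inv(Omega) + 0.1 * np.eye(p)    # stand-in for a sample covariance

keep = np.arange(p) != j
w = Omega[keep, j]                            # off-diagonals of row j
Omega_mj = Omega[np.ix_(keep, keep)]          # Omega without row and column j
c = w @ np.linalg.solve(Omega_mj, w)          # w^T Omega_{-j}^{-1} w

# Schur-complement identity for the determinant.
lhs = np.linalg.det(Omega)
rhs = np.linalg.det(Omega_mj) * (Omega[j, j] - c)

def objective(d):
    """Terms of log det(Omega) - tr(S Omega) that depend on the j-th diagonal d."""
    return np.log(d - c) - S[j, j] * d

d_star = c + 1.0 / S[j, j]                    # stationary point of `objective`
grid = np.linspace(c + 1e-3, d_star + 5.0, 2001)
```

Since `objective` is concave in `d`, its value at `d_star` should dominate every value on `grid`.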

2.2 Pursuit of sparseness and clustering structures

A zero element in Ωl corresponds to conditional independence between two components of Y(l) given its other components [9]. Thus, within each precision matrix Ωl, estimating its elements reconstructs its graph structure, where a zero-element of Ωl corresponds to no edge between the two nodes, encoding conditional independence. In addition, nodes connected to many other nodes, called network hubs, are identified. On the other hand, over multiple precision matrices, estimating element-wise clustering structure can reveal the change of sparseness and clustering structures.
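To make the correspondence between zero entries and missing edges concrete, the following sketch reads an adjacency matrix off a precision matrix and picks the highest-degree node as a hub. The helper `graph_from_precision`, the tolerance `tol`, and the 4 × 4 example matrix are hypothetical and chosen only for illustration.

```python
import numpy as np

def graph_from_precision(Omega, tol=1e-8):
    """Adjacency matrix: an edge (j, k) exists when |omega_jk| exceeds tol."""
    adj = np.abs(Omega) > tol
    np.fill_diagonal(adj, False)              # no self-loops
    return adj

# Node 0 is conditionally dependent on every other node; the rest are
# conditionally independent of each other given node 0.
Omega = np.array([[2.0, 0.5, 0.5, 0.5],
                  [0.5, 2.0, 0.0, 0.0],
                  [0.5, 0.0, 2.0, 0.0],
                  [0.5, 0.0, 0.0, 2.0]])
adj = graph_from_precision(Omega)
degree = adj.sum(axis=1)                      # number of neighbors per node
hub = int(np.argmax(degree))                  # node with the most connections
```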

To detect clustering structures, consider element-wise clustering of entries of Ω1,... ,ΩL based on possible prior knowledge. The prior knowledge is specified loosely in an undirected graph $\mathcal{U}$ with each node corresponding to a triplet (j, k, l); 1 ≤ j < k ≤ p, 1 ≤ l ≤ L. That is, an edge between nodes (j, k, l) and (j, k, l′) means that the (j, k)th entry of Ωl and the (j, k)th entry of Ωl′ tend to be similar a priori and thus can be pushed to share the same value. Specifically, let $\mathcal{E}_{jk}$ denote a set of edges between two distinct nodes (j, k, l) ≠ (j, k, l′) of $\mathcal{U}$, where $(l, l') \in \mathcal{E}_{jk}$ indicates a connection between the two nodes (j, k, l), (j, k, l′). To identify homogeneous subgroups of off-diagonals {ωjkl} of Ωl across l = 1,... ,L over $\mathcal{E}_{jk}$, including the group of zero-elements, we propose a non-convex penalty of the form

$$J_{jk}(\omega_{jk1}, \dots, \omega_{jkL}) = \lambda_1 \sum_{l=1}^{L} J_\tau(|\omega_{jkl}|) + \lambda_2 \sum_{(l,l') \in \mathcal{E}_{jk}} J_\tau(|\omega_{jkl} - \omega_{jkl'}|) \qquad (6)$$

to regularize (2), where λ1 and λ2 are nonnegative tuning parameters controlling the degrees of sparseness and clustering, and $J_\tau(z) = \min(|z|, \tau)$ is the truncated L1-penalty of [17], called TLP in what follows, which, after rescaling by $\tau^{-1}$, approximates the L0-function when the tuning parameter τ tends to 0+.
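A minimal sketch of a truncated L1 penalty, assuming it takes the form J_τ(z) = min(|z|, τ), which is consistent with the remark that rescaling by 1/τ approximates the L0-function as τ tends to 0+. The function `penalty_jk` combines a sparseness term and a clustering term for one (j, k) entry across L matrices. Its signature and the 0-based edge list are assumptions of this example.

```python
import numpy as np

def tlp(z, tau):
    """Truncated L1 penalty, assumed here to be min(|z|, tau)."""
    return np.minimum(np.abs(z), tau)

def penalty_jk(omega_jk, edges, lam1, lam2, tau):
    """Sparseness plus clustering penalty for one entry across L matrices."""
    omega_jk = np.asarray(omega_jk, dtype=float)
    sparse_part = tlp(omega_jk, tau).sum()
    # Differences along the edges (l, l') of the prior graph, 0-based indices.
    diffs = np.array([omega_jk[l] - omega_jk[m] for l, m in edges])
    cluster_part = tlp(diffs, tau).sum() if len(edges) else 0.0
    return lam1 * sparse_part + lam2 * cluster_part

# With a tiny tau, tlp(z, tau) / tau behaves like the indicator of z != 0.
z = np.array([0.0, 0.3, -2.0])
l0_like = tlp(z, 1e-6) / 1e-6

# One (j, k) entry over L = 4 matrices with a single jump, on a serial graph.
value = penalty_jk([0.0, 0.0, 0.8, 0.8], [(0, 1), (1, 2), (2, 3)],
                   lam1=1.0, lam2=2.0, tau=0.5)
```

In the second example, the two nonzero entries each contribute τ to the sparseness term and the single jump contributes τ to the clustering term, so the penalty does not grow with the size of the jump.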

Note that our approach is applicable to a variety of applications by specifying this graph. For time-varying graphs, our method can be used to detect the change of clustering structure, where the graph is a serial graph as in the fused Lasso [19], and a serial temporal relation is defined only for elements in adjacent matrices. One key difference between our method and the smoothing method [7, 25] is that ours accommodates abrupt changes of structures over networks. For multiple graphs without a serial ordering, the proposed method makes it possible to identify element-wise heterogeneity among undirected graphs, such as gene regulatory networks corresponding to disparate cancer subtypes [18, 21]. Heterogeneity of this type can be dealt with by specifying a complete graph over l = 1,... ,L for each entry (j, k).
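The two prior graphs mentioned above differ only in which pairs (l, l′) of matrices are linked for a given entry. A small sketch, using 0-based matrix indices and hypothetical helper names:

```python
from itertools import combinations

def serial_edges(L):
    """Serial (fused) graph: only adjacent matrices l and l + 1 are linked."""
    return [(l, l + 1) for l in range(L - 1)]

def complete_edges(L):
    """Complete graph: every pair of matrices l < l' is linked."""
    return list(combinations(range(L), 2))
```

A serial graph has L − 1 edges per entry, whereas a complete graph has L(L − 1)/2, which is one reason the unordered case is computationally heavier.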

3 Computation

This section proposes a relaxation method to treat non-convex penalties in (6). For large-scale problems, a partition rule may be useful, which breaks large matrices into many small ones to process separately. A novel necessary and sufficient partition rule is derived for our non-convex penalization method as well as its convex counterpart, generalizing the results for single precision matrix estimation [12, 22].

3.1 Non-convex optimization

For the non-convex minimization (2) with (6), we develop a relaxation method by solving a sequence of convex problems. This method integrates difference convex (DC) programming with the block-wise coordinate descent method based on the foregoing strategy.

For DC programming, we first decompose S(Ω) into a difference of two convex functions: S(Ω) = S1(Ω) – S2(Ω), with

| (7) |

where a DC decomposition of is used. Then the trailing convex function S2(Ω) is iteratively approximated by its minorization, say at iteration m, . This is obtained through minorization of at , which is the solution at iteration m − 1, where is the gradient of at ; see [17] for more discussions about minorization of this type. At iteration m, the cost function to minimize is

| (8) |

subject to ; l = 1, ···, L, where ; .
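The idea behind the DC step can be illustrated on the scalar penalty alone, assuming the truncated L1 form min(|z|, τ). It splits as |z| − max(|z| − τ, 0), a difference of two convex functions. Linearizing the second part in |z| at a previous iterate gives a convex surrogate that equals |z| when the previous value satisfies |z_prev| ≤ τ and the constant τ otherwise. This sketch covers only the penalty, not the likelihood terms in S1 and S2, and the function names are illustrative.

```python
import numpy as np

def tlp(z, tau):
    """Truncated L1 penalty, assumed here to be min(|z|, tau)."""
    return np.minimum(np.abs(z), tau)

def dc_parts(z, tau):
    """Two convex pieces whose difference equals tlp(z, tau)."""
    convex_1 = np.abs(z)
    convex_2 = np.maximum(np.abs(z) - tau, 0.0)
    return convex_1, convex_2

def surrogate(z, z_prev, tau):
    """Convex upper bound of tlp obtained by linearizing convex_2 at z_prev."""
    active = np.abs(z_prev) <= tau           # penalty stays "on" only if small
    return np.where(active, np.abs(z), tau)

tau = 0.5
z = np.linspace(-2.0, 2.0, 401)
s1, s2 = dc_parts(z, tau)
```

The surrogate majorizes the penalty everywhere and touches it at the previous iterate, which is what makes each convex subproblem a descent step for the non-convex objective.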

To solve (8), we apply Theorem 1 to iteratively minimize:

| (9) |

and update diagonal elements using (4). This quadratic problem can then be efficiently solved using augmented Lagrangian methods as in [26].

Unlike the coordinate descent method updating one component at a time, we update one component of ζ and two components (ωjkl, ωjjl) for Ωl at the same time.

In (9), computation of by directly inverting Ω−jl has a complexity of $O(p^3)$ operations for each (j, l). For efficient computation, we exploit a special property of our sweeping operator: the $(p-1)^2$ elements of Ωl outside the swept row and column are unchanged, in addition to the rank-one property for updating the formula. In (9), we derive an analytic formula through block-wise inversion and the Neumann formula of a square matrix, to compute $(\Omega_{-jl})^{-1}$ from and from for each (j, l). That is,

| (10) |

| (11) |

This amounts to $O(p^2)$ operations.
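One standard block-inversion identity that achieves this kind of quadratic-cost update is sketched below: if W is the inverse of Ω, the inverse of Ω with row and column j deleted equals W_{−j,−j} − W_{−j,j} W_{j,−j} / W_{jj}. This is offered as an illustration of the idea and may differ in form from (10) and (11). The function name is hypothetical.

```python
import numpy as np

def inverse_without_j(W, j):
    """Given W = inv(Omega), return inv(Omega minus row/column j) in O(p^2)."""
    keep = np.arange(W.shape[0]) != j
    W_kk = W[np.ix_(keep, keep)]
    w_kj = W[keep, j]
    # Rank-one correction; uses symmetry of W, so W[j, keep] equals w_kj.
    return W_kk - np.outer(w_kj, w_kj) / W[j, j]

rng = np.random.default_rng(2)
A = rng.normal(size=(6, 6))
Omega = A @ A.T + 6 * np.eye(6)               # symmetric positive definite
W = np.linalg.inv(Omega)
j = 3
keep = np.arange(6) != j
direct = np.linalg.inv(Omega[np.ix_(keep, keep)])   # O(p^3) reference
fast = inverse_without_j(W, j)                       # O(p^2) update
```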

The foregoing discussion leads to our DC block-wise coordinate descent algorithm through sweeping operations over p(p−1) off-diagonals of (Ω1,... ,ΩL), with each operation involving the L corresponding off-diagonals.

Algorithm 1:

Step 1. (Initialization) Set ; l = 1, ···, L, E(0) = {(j,k,l) : 1 ≤ j ≠ k ≤ p, 1 ≤ l ≤ L}, , m = 0 and precision tolerance ε = $10^{-5}$ for Step 2.

Step 2. (Iteration) At current iteration m, . Then solve (8) applying the block-wise coordinate descent algorithm to update Ω to yield . And set ; . Specifically,

a) For each row (column) index j = 1,... ,p, compute using (10); l = 1,... ,L. Solve (9) to obtain and then compute through (4); l = 1,... ,L. Update with its jth row replaced by and its jth column by symmetry. Finally update using (11). Go to the next index j + 1 until all rows of have been swept.

b) Repeat a) until the decrement of the objective function is less than ε. After convergence, update Ω to yield based on a).

Step 3. (Stopping criterion) Terminate when E(m+1) = E(m) and F(m+1) = F(m), otherwise, repeat Step 2 with m = m + 1.

The overall complexity of Algorithm 1 is of order $O(p^3L^2)$. The actual computation time of our algorithm depends highly on the values of λ1 and λ2 and on the number of iterations. In Example 1, it takes about 30 seconds for one simulation run with (p, L) = (200, 4) over 100 grid points on an 8-core computer with Intel(R) Core(TM) i7-3770 processors and 16GB of RAM.

3.2 Partition rule for large-scale problems

This section establishes a necessary and sufficient partition rule for our non-convex penalization method and its convex counterpart using the sample covariances. The rule permits fast computation for large-scale problems by partitioning nodes into disjoint subsets, excluding the zero-coefficient subset, and then applying the proposed method to each nonzero subset. Such a result exists only for a single matrix or a special case of multiple matrices, cf. [12, 22].

In what follows, we only consider the case where are identical. Given this graph , with denoting the node and edge sets, we write l ~ l′ if , or two nodes are connected. First consider the convex grouping penalty over , followed by a general case, where the penalized log-likelihood is

| (12) |

where Ωl,off denotes the off-diagonal elements of Ωl and Sl = (sjkl)1≤j,k≤p are the sample covariance matrices, l = 1,... ,L.

The next theorem derives a necessary and sufficient condition for the jkth element of across l = 1,... ,L, for , where is the minimizer of (12), and is any subset. This partitions the node set into disjoint subsets of connected nodes, with no connections between these subsets.

Theorem 2 (Partition rule for (12)) for all and l = 1,... ,L, if and only if (sjk1,... ,sjkL) , for all , where with denoting the number of edges between the nodes in and the remaining nodes in .

Similar results hold for the proposed non-convex regularized estimators.

Theorem 3 (Partition rule for non-convex regularization) Denote by the solution obtained from Algorithm 1 for (2). Similarly, given any , for all ; ; l = 1,... ,L, if and only if , where .

Corollary 1 simplifies the expression of for specific graphs.

Corollary 1 In the cases of the fused graph and the complete graph, we have

The partition rule is useful for efficient computation, as it may reduce computation cost substantially. It can be used in several ways. First, the rule partitions nodes into disjoint connected subsets through the sample covariances sjkl's. This breaks the original large problem into smaller subproblems, owing to this necessary and sufficient rule. Second, Algorithm 1 can be applied to each subproblem independently, permitting parallel computation.

Algorithm 2 integrates the partition rule in Theorem 3 with Algorithm 1 to make the proposed method applicable to large-scale problems.

Algorithm 2 (A partition version of Algorithm 1):

Step 1. (Screening) Compute the sample-covariance matrix Sl; l = 1,... ,L. Construct a p × p symmetric matrix T = (tjk)1≤j,k≤p, with tjk = 0 if and tjk = 1 otherwise. Treating T as an adjacency matrix of an undirected graph, we compute its maximal connected components to form a partition of nodes using a breadth-first search or depth-first search algorithm, cf. [3].

Step 2. (Subproblems) For i = 1,... ,q, solve (2) for each subproblem consisting of nodes in , by applying Algorithm 1 to obtain the solution .

Step 3. (Combining results) The final solution .
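The screening step of Algorithm 2 can be sketched as a connected-components search on a thresholded matrix T. The exact thresholding condition from the partition rule is not reproduced in this sketch. `screening_matrix` uses a simple stand-in rule (keep an edge when the largest absolute sample covariance across the L groups exceeds a threshold), which is an assumption for illustration only. The breadth-first search itself is generic.

```python
from collections import deque
import numpy as np

def connected_components(T):
    """Breadth-first search over the undirected graph with adjacency matrix T."""
    p = T.shape[0]
    seen = np.zeros(p, dtype=bool)
    components = []
    for start in range(p):
        if seen[start]:
            continue
        seen[start] = True
        queue, block = deque([start]), []
        while queue:
            u = queue.popleft()
            block.append(u)
            for v in np.flatnonzero(T[u]):
                if not seen[v]:
                    seen[v] = True
                    queue.append(v)
        components.append(sorted(int(u) for u in block))
    return components

def screening_matrix(S_list, threshold):
    """Stand-in rule (assumption): t_jk = 1 if max_l |s_jkl| > threshold."""
    stacked = np.abs(np.stack(S_list))
    T = (stacked.max(axis=0) > threshold).astype(int)
    np.fill_diagonal(T, 0)
    return T

S1 = np.array([[1.0, 0.6, 0.0, 0.0],
               [0.6, 1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0, 0.05],
               [0.0, 0.0, 0.05, 1.0]])
S2 = S1.copy()
S2[2, 3] = S2[3, 2] = 0.4
blocks = connected_components(screening_matrix([S1, S2], threshold=0.3))
coarser = connected_components(screening_matrix([S1, S2], threshold=0.5))
```

Each resulting block can then be passed to the estimation routine separately, and the per-block solutions assembled into a block-diagonal estimate.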

4 Theory

This section investigates theoretical aspects of the proposed method. First we develop a general theory on maximum penalized likelihood estimation involving two types of L0-constraints for pursuit of sparseness and clustering. Then we specialize the theory for estimation of multiple precision matrices in Section 4.3. Now consider a constrained L0-version of (2):

| (13) |

as well as its computational surrogate

| (14) |

where θ = ( β,η) with and η representing the off-diagonals and diagonals of Ω, and three non-negative tuning parameters (). Note that Algorithm 1 yields a local minimizer of (14), relaxing it by solving a sequence of convex problems.

In what follows, we will prove that global minimizers of (13) and (14) reconstruct the ideal oracle estimator as if the true sparseness and clustering structures of the precision matrices were known in advance. As a result of the reconstruction, key properties of the oracle estimator are simultaneously achieved by the proposed method.

4.1 The oracle estimator and consistent graph

To define the oracle estimator, let denote a partition of by the parameter β, i.e. , with and satisfying , where K(β) is the number of nonzero clusters and A(β) is the support of β. Let be the true partition induced by β0, with θ0 = (β0,η0) the true parameter value and .

Definition 1 (Oracle estimator) Given , the oracle estimator is defined as: , the corresponding maximum likelihood estimator.

In (13) and (14), the edge set of is important for clustering. In order for simultaneous pursuit of sparseness and clustering structures to be possible, we may need to be consistent with the clustering structure of the true precision matrices. In other words, a consistent graph is a minimal requirement for reconstruction of the oracle estimator, where there must exist a path connecting any nodes within the same true cluster.

Definition 2 (Consistent graph ) An undirected graph is consistent with the true cluster , if the subgraph restricting nodes on is connected; j = 1,... ,K0

4.2 Non-asymptotic probability error bounds

This section derives a non-asymptotic probability error bound for simultaneous sparseness and clustering pursuit, based on which we prove that (13) and (14) reconstruct the oracle estimator. This implies consistent identification of the sparseness and clustering structures of multiple graphical models, under one simple assumption, called the degree-of-separation condition.

Before proceeding, we introduce some notations. Given a graph , let be a constrained set with , where with as defined above. Given a partition , let . Given an index set , let . Let , and . Roughly, S* quantifies complexity of the space of candidate precision matrices scaled by the number of nonzero entries.

The degree-of-separation condition will be used to ensure consistent reconstruction of the oracle estimator: For some constant c1 > 0,

| (15) |

where with | · | and denoting the size of a set and that of set difference, respectively, is the Hellinger-distance for densities with respect to a dominating measure μ.

We now define the bracketing Hellinger metric entropy of space , denoted by the function H(·, ), which is the logarithm of the cardinality of the u-bracketing (of ) of the smallest size. That is, for a bracket covering satisfying and for any , there exists a j such that , a.e. P , then H(u, ) is log(min{m: S(u, m)}), where . For more discussions about metric entropy of this type, see [8].

Assumption A: (Complexity of the parameter space) For some constant c0 > 0 and any 0 < t < ε ≤ 1, , where is a local parameter space, and be a collection of square-root densities indexed by any subset .

Next we present our non-asymptotic probability error bounds for reconstruction of the oracle estimator by global minimizers of (13) and (14) in terms of Cmin( θ0), n, d and d0, where d0 and d can depend on n. Consistency is established for reconstruction of as well as structure recovery. Note that is asymptotically optimal, hence the optimality translates into the global minimizers of (13) and (14).

Theorem 4 (Global minimizer of (13)) Under Assumption A, if is consistent with , then for a global minimizer of (13) with estimated grouping at (C1, C2) = (d0, c0) with c0 = C(β0, ε),

| (16) |

Under (15), as n, d → ∞.

For the constrained truncated L1-likelihood, one additional condition, Assumption B, is necessary. We require the Hellinger-distance to be smooth so that the approximation of the truncated L1-function to the L0-function becomes adequate by tuning τ.

Assumption B: For some constants d1-d3 > 0,

| (17) |

where θτ = ( βτ, η) with , and for ; k = 0, 1, · · · , K(β).

Theorem 5 (Global minimizer of (14)) Assume that Assumption A with replaced by and Assumption B are met. If is consistent with , then for a global minimizer of (14) with estimated grouping at (C1,C2) = (d0, c0) with c0 = C(β0, ε) and ,

| (18) |

Under (15), as n, d → ∞.

4.3 An illustrative example

We now apply the general theory in Theorems 4 and 5 to the estimation of multiple precision matrices, in which the true precision matrices in each cluster are the same, with the number of break points among these clusters. In this case, a serial graph is considered for clustering.

Denote by p and L0 the dimension of the precision matrix and the number of distinctive clusters, respectively. Let be the p2 × p2 Hessian matrix of −log det(Ωl), whose (jk, j′k′) element is tr(ΣlΔjkΣlΔj′k′), cf. [2]. Define

to be the resolution level for simultaneous sparseness and clustering pursuit.

An application of Theorems 4 and 5 with β and η being off-diagonals and diagonals of Ω leads to the following result.

Corollary 2 (Multiple precision matrices with a serial graph) When U is a serial graph, all the results in Theorems 4 and 5 for simultaneous pursuit of sparseness and clustering hold under two simple conditions:

| (19) |

for some constant c4 > 0. Sufficiently, if

| (20) |

holds for some constant c0 > 0, then and as n, d → +∞.

Corollary 2 suggests that the amount of reconstruction improvement would be of the order of 1/L if the L precision matrices are identical. In general, the amount of improvement of joint estimation over separate estimation is L/ log(L) when g0 is small, i.e. g0 max (log (d0/g0), 1) ≲ log (Lp(p – 1)/2), by contrasting the sufficient condition in (20) with that for a separate estimation approach in [17], where ≲ denotes inequality ignoring constant terms. Here g0 describes similarity among L precision matrices with a small value corresponding to a high-degree of similarity shared among precision matrices.

5 Numerical examples

This section studies operational characteristics of the proposed method via simulation in sparse and nonsparse situations with different types of graphs in both low- and high-dimensional settings. In each simulated example, we compare our method against its convex counterpart for seeking the sparseness structure for each graphical model and identifying the grouping structure among multiple graphical models, and contrast the method against its counterpart seeking the sparseness structure alone. In addition, we also compare against a kernel smoothing method for time-varying networks [7, 25] in Examples 1-3, whenever appropriate. The smoothing method defines a weighted average over sample covariance matrices at time points as with wll′(h) = K(h−1|l – l′|); l = 1, · · · , L, where K(x) = (1 – |x|)I(|x| < 1) is a triangular kernel, h is a bandwidth, and l = 1, · · · , L denotes clusters. Then within each cluster l, the precision matrix estimate is obtained by solving

| (21) |

using the glasso algorithm [5], and the final estimate is obtained through tuning over (h, λ)-grids. Two performance metrics are used to measure the accuracy of parameter estimation as well as that of correct identification of the sparseness and grouping structures.
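The kernel-smoothing competitor can be sketched as follows, using the triangular kernel K(x) = (1 − |x|) I(|x| < 1) and weights w_ll′(h) = K(|l − l′| / h). Normalizing each row of weights to sum to one is an assumption of this sketch, as are the function names. The subsequent glasso step is omitted.

```python
import numpy as np

def triangular_kernel(x):
    """K(x) = (1 - |x|) for |x| < 1 and 0 otherwise."""
    x = np.abs(x)
    return np.where(x < 1.0, 1.0 - x, 0.0)

def smoothed_covariances(S_list, h):
    """Kernel-weighted average of sample covariances for each index l."""
    S = np.stack(S_list)                          # shape (L, p, p)
    idx = np.arange(len(S_list))
    W = triangular_kernel(np.abs(idx[:, None] - idx[None, :]) / h)
    W = W / W.sum(axis=1, keepdims=True)          # row-normalize (assumption)
    return np.einsum("lm,mjk->ljk", W, S)

# Three 2 x 2 "sample covariances" that differ only in scale.
S_list = [c * np.eye(2) for c in (1.0, 2.0, 4.0)]
narrow = smoothed_covariances(S_list, h=1.0)      # only the matrix itself gets weight
wide = smoothed_covariances(S_list, h=2.0)        # neighbors get weight 0.5
```

With h = 1 no smoothing occurs, while h = 2 blends each matrix with its immediate neighbors, which blurs abrupt changes between adjacent matrices.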

In Examples 1-3, temporal clustering pursuit is performed over Ω1, · · · , ΩL through a serial graph $\mathcal{E}$ = {{(j, k, l), (j′, k′, l′)} : j = j′, k = k′, |l − l′| = 1}. That is, only adjacent matrices may be clustered. In Example 4, general clustering pursuit is conducted through a complete graph $\mathcal{E}$ = {{(j, k, l), (j′, k′, l′)} : j = j′, k = k′, l < l′}.

For the accuracy of parameter estimation, the average entropy loss (EL) and average quadratic loss (QL) are considered, defined as

For the accuracy of identification, average false positive (FPV) and false negative (FNV) rates for sparseness pursuit, as well as those (FPG) and (FNG) for grouping are used:

where Ωl,off denotes the off-diagonal elements of Ωl. Note that FPV and FPG as well as FNV and FNG are not comparable due to normalization with and without the zero-group, respectively.
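For the two estimation losses named above, a sketch under the assumption that they take their standard forms: entropy loss tr(Σ Ω̂) − log det(Σ Ω̂) − p and quadratic loss tr((Σ Ω̂ − I)²), each averaged over the L matrices. The definitions used in the paper may differ in normalization. Function names are illustrative.

```python
import numpy as np

def entropy_loss(Sigma, Omega_hat):
    """tr(Sigma Omega_hat) - log det(Sigma Omega_hat) - p (assumed form)."""
    M = Sigma @ Omega_hat
    sign, logdet = np.linalg.slogdet(M)
    return np.trace(M) - logdet - M.shape[0]

def quadratic_loss(Sigma, Omega_hat):
    """tr((Sigma Omega_hat - I)^2) (assumed form)."""
    M = Sigma @ Omega_hat - np.eye(Sigma.shape[0])
    return np.trace(M @ M)

def average_loss(loss, Sigmas, Omega_hats):
    """Average a loss over the L (true covariance, estimate) pairs."""
    return float(np.mean([loss(S, O) for S, O in zip(Sigmas, Omega_hats)]))

Sigma = np.array([[2.0, 0.5],
                  [0.5, 1.0]])
exact = np.linalg.inv(Sigma)                  # a perfect estimate
crude = np.eye(2)                             # ignores all structure
```

Both losses vanish at the true precision matrix and are positive for the crude estimate.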

For tuning, we minimize a prediction criterion with respect to the tuning parameter(s) on an independent test set with the same sample size as the training set. The prediction criterion is , where is the sample covariance matrix for the tuning data; l = 1, · · · , L. The estimated tuning parameter is then obtained as λ* = argminλ CV(λ), which is used in the estimated precision matrices. Here minimization of CV(λ) is performed through a simple grid search over the domain of the tuning parameter(s).
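The tuning procedure can be sketched as a plain grid search. The prediction criterion below is assumed to be the negative Gaussian log-likelihood evaluated on the tuning covariances, summed over l. The estimator `ridge_precision` is a deliberately simple stand-in and not the proposed method. All names and the toy data are hypothetical.

```python
import numpy as np

def prediction_criterion(Omega_hats, S_tune_list):
    """Sum over l of -log det(Omega_hat_l) + tr(S_tune_l Omega_hat_l) (assumed form)."""
    total = 0.0
    for Omega, S in zip(Omega_hats, S_tune_list):
        sign, logdet = np.linalg.slogdet(Omega)
        total += -logdet + np.trace(S @ Omega)
    return total

def ridge_precision(S, lam):
    """Stand-in estimator (assumption): inverse of a ridge-regularized covariance."""
    return np.linalg.inv(S + lam * np.eye(S.shape[0]))

def tune(S_train_list, S_tune_list, grid):
    """Return the grid value minimizing the criterion, plus all scores."""
    scores = [
        prediction_criterion([ridge_precision(S, lam) for S in S_train_list],
                             S_tune_list)
        for lam in grid
    ]
    return grid[int(np.argmin(scores))], scores

rng = np.random.default_rng(3)

def sample_cov(n, p):
    Y = rng.normal(size=(n, p))
    Y = Y - Y.mean(axis=0)
    return Y.T @ Y / n

S_train = [sample_cov(40, 5) for _ in range(2)]   # training covariances, L = 2
S_tune = [sample_cov(40, 5) for _ in range(2)]    # independent tuning covariances
grid = [0.01, 0.1, 1.0, 10.0]
best_lam, scores = tune(S_train, S_tune, grid)
```

With two or three tuning parameters, as in the proposed method, the same loop would run over a product grid.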

All simulations are performed based on 100 simulation replications. Three different types of networks are considered. Specifically, Example 1 concerns a chain network with small p and L but large n, where each Ωl is relatively sparse and a temporal change occurs at two different l values. Example 2 deals with nearest neighbor networks for each Ωl and the same temporal structure as in Example 1. Examples 3 and 4 study exponentially decaying networks in nonsparse precision matrices in high- and low-dimensional situations with large and small L, respectively. In Examples 1-3 and Example 4, Algorithms 1 and 2 are respectively applied.

Example 1: Chain networks. This example estimates tridiagonal precision matrices as in [4]. Specifically, the covariance matrix Σl is AR(1)-structured with its ij-element being σijl = exp(−|sil − sjl|/2), and s1l < s2l < · · · < spl are randomly chosen: sil − s(i−1)l ~ Unif(0.5, 1); i = 2, · · · , p, l = 1,..., L. The following situations are considered: (I) (n, p, L) = (120, 30, 4), (n, p, L) = (120, 200, 4), with Ω1 = Ω2, Ω3 = Ω4; (II) (n, p, L) = (120, 20, 30), (n, p, L) = (120, 10, 90), with Ω1 = · · · = ΩL/3, Ω1+L/3 = · · · = Ω2L/3, Ω1+2L/3 = · · · = ΩL. Then, we study the proposed method's performance as a function of the number of graphs and the number of nodes.
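A sketch of how one chain-network covariance could be generated, following the description above: increments drawn from Unif(0.5, 1) and covariance entries exp(−|s_i − s_j|/2). Setting the first location to zero is an assumption (only differences enter the formula), and the function name is hypothetical. The inverse of such a covariance is tridiagonal, which the last lines check numerically.

```python
import numpy as np

def chain_covariance(p, rng):
    """AR(1)-structured covariance with sigma_ij = exp(-|s_i - s_j| / 2)."""
    gaps = rng.uniform(0.5, 1.0, size=p - 1)          # s_i - s_{i-1}
    s = np.concatenate([[0.0], np.cumsum(gaps)])      # s_1 = 0 (assumption)
    return np.exp(-np.abs(s[:, None] - s[None, :]) / 2.0)

rng = np.random.default_rng(4)
Sigma = chain_covariance(6, rng)
Omega = np.linalg.inv(Sigma)
# Largest absolute entry strictly beyond the first off-diagonal band.
off_band = np.abs(np.triu(Omega, k=2)).max()
```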

Example 2: Nearest neighbor networks. This example concerns networks described in [11]. In particular, we generate p points randomly on a unit square, and compute the k nearest neighbors of each point based on the Euclidean distance. In the case of k = 3, three points are connected to each point. For each "edge" in the graph, the corresponding off-diagonal in a precision matrix is sampled independently according to the uniform distribution over [−1, −0.5] ∪ [0.5, 1], and the ith diagonal is set to be the sum of the absolute values of the ith row off-diagonals. Given the previous cluster, the matrices in the current cluster are obtained by randomly adding or deleting a small fraction of nonzero elements in the matrices from the previous cluster. Finally, each row of a precision matrix is divided by the square root of the product of corresponding diagonals ($\omega_{ijl}/\sqrt{\omega_{iil}\,\omega_{jjl}}$) so that diagonals of the final precision matrices are one. The following scenarios are considered: (I) (n, p, L) = (300, 30, 4) and (n, p, L) = (300, 200, 4), where Ω1 = · · · = ΩL/3, Ω1+L/3 = · · · = Ω2L/3, Ω1+2L/3 = · · · = ΩL. In (I), the first cluster of matrices (Ω1, Ω2) are generated using the above mechanism, with the second cluster of matrices (Ω3, Ω4) obtained by deleting one edge for each node in the network. In (II), the generating mechanism remains except that the third cluster of matrices (Ω1+2L/3, · · · , ΩL) are generated by adding an edge for each node in its previous adjacent network.

Example 3: Exponentially decaying networks. This example examines a nonsparse situation in which elements of precision matrices are nonzero, and decay exponentially with respect to their Euclidean distances to the corresponding diagonals. In particular, the (i, j)th entry of the lth precision matrix ωijl is exp(−al|i − j|) with al sampled uniformly over [1, 2]. In this case, it is sensible to report the results for parameter estimation as opposed to identifying nonzeros. As in Examples 1 and 2, several scenarios are considered: (I) (p, L) = (30, 4), (p, L) = (200, 4), and the sample size n = 120 or 300 with Ω1 = Ω2 = Ω3 = Ω4, and (II) (p, L) = (20, 30), (p, L) = (10, 90), and the sample size n = 120 or 300 with Ω1 = · · · = ΩL/3, Ω1+L/3 = · · · = Ω2L/3, Ω1+2L/3 = · · · = ΩL.
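A short sketch of an exponentially decaying precision matrix, assuming the decay carries a negative exponent, i.e. entries exp(−a|i − j|) with a drawn uniformly from [1, 2]. The function name and the dimension are chosen for illustration.

```python
import numpy as np

def decaying_precision(p, a):
    """Dense precision matrix with entries exp(-a * |i - j|)."""
    idx = np.arange(p)
    return np.exp(-a * np.abs(idx[:, None] - idx[None, :]))

rng = np.random.default_rng(5)
a = rng.uniform(1.0, 2.0)                     # decay rate for one matrix
Omega = decaying_precision(8, a)
smallest_eig = np.linalg.eigvalsh(Omega).min()
```

Every entry is nonzero, so sparseness pursuit alone cannot recover the exact structure, yet the matrix is still positive definite and hence a valid precision matrix.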

Example 4: Large precision matrices. This example utilizes the partition rule to treat large-scale simulations. First, we examine two cases (n, p, L) = (120, 1000, 4) and (n, p, L) = (500, 2000, 4) with Ω1 = Ω2 and Ω3 = Ω4, where four precision matrices of size 1000 × 1000 and 2000 × 2000 are considered for pairwise clustering over the complete graph. Here each precision matrix is set to be a block-diagonal matrix: Ωl = Diag(Ωl1, · · · , Ωlq); l = 1, · · · , L, where Ω1j = Ω2j, Ω3j = Ω4j are 20 × 20 matrices generated in the same fashion as that in Example 1. Finally, the complete graph is used as opposed to the fused graph. Overall, the complexity is much higher than the previous examples.

As suggested by Tables 1-4, the proposed method performs well against its competitors in parameter estimation and correct identification of the sparseness and grouping structures across all the situations. With regard to accuracy of identification of the sparseness and clustering structures, the proposed method has the smallest false positives in terms of FPV and FPG, yielding sharper parameter estimation than the competitors. This suggests that shrinkage towards common elements is advantageous for parameter estimation in both low- and high-dimensional situations. Note that the largest improvement occurs for the most difficult situation in Example 4.
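For reference, the two estimation losses reported in the tables can be computed as in the sketch below. It assumes the commonly used forms of the entropy loss, tr(ΣΩ̂) − log det(ΣΩ̂) − p, and the quadratic loss, tr((ΣΩ̂ − I)²), for a single matrix with true covariance Σ; the exact definitions and any averaging over the L matrices follow the earlier sections of the paper and are not reproduced here.

```python
import numpy as np

def entropy_loss(sigma, omega_hat):
    """tr(Sigma Omega_hat) - log det(Sigma Omega_hat) - p (assumed form of EL)."""
    m = sigma @ omega_hat
    _, logdet = np.linalg.slogdet(m)
    return np.trace(m) - logdet - m.shape[0]

def quadratic_loss(sigma, omega_hat):
    """tr((Sigma Omega_hat - I)^2) (assumed form of QL)."""
    m = sigma @ omega_hat - np.eye(sigma.shape[0])
    return np.trace(m @ m)

sigma = np.array([[1.0, 0.5], [0.5, 1.0]])
exact = np.linalg.inv(sigma)           # the true precision matrix
el0 = entropy_loss(sigma, exact)       # zero up to rounding error
ql0 = quadratic_loss(sigma, exact)     # zero up to rounding error
```

Both losses vanish when the estimate equals the true precision matrix and grow as the product ΣΩ̂ moves away from the identity.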

Table 1.

Average entropy loss (EL), average quadratic loss (QL), average false positive and false negative for sparseness pursuit (FPV and FNV), and average false positive and false negative for grouping (FPG and FNG), with SD in parentheses, based on 100 simulations, for estimating precision matrices in Example 1 with n = 120. Here “Smooth”, “Lasso”, “TLP”, “Our-con” and “Ours” denote, respectively, estimation of individual matrices with the kernel smoothing method proposed in [25], with the L1 sparseness penalty, and with the non-convex TLP penalty, the convex counterpart of our method with the L1-penalty for sparseness and clustering, and our non-convex estimates obtained by solving (2) with penalty (6). The best performer is bold-faced.

| (p, L) | Method | EL | QL | FPV | FNV | FPG | FNG |
|---|---|---|---|---|---|---|---|
| (30, 4) | Smooth | 0.570(.005) | 2.617(.266) | .312(.016) | .000(.000) | .392(.020) | .000(.000) |
| | Lasso | 1.547(.074) | 5.416(.393) | .200(.009) | .000(.000) | .377(.015) | .000(.000) |
| | TLP | 0.746(.084) | 4.688(.617) | .043(.006) | .001(.002) | .108(.008) | .000(.000) |
| | Our-con | 1.288(.064) | 3.700(.270) | .129(.016) | .000(.000) | .045(.020) | .251(.032) |
| | Ours | 0.525(.055) | 3.494(.418) | .040(.009) | .000(.000) | .009(.007) | .267(.043) |
| (200, 4) | Smooth | 7.118(.173) | 22.45(2.61) | .087(.017) | .000(.000) | .106(.018) | .000(.000) |
| | Lasso | 36.48(.426) | 69.21(1.97) | .013(.001) | .000(.000) | .027(.001) | .000(.000) |
| | TLP | 5.305(.351) | 33.67(2.22) | .004(.000) | .005(.003) | .014(.000) | .000(.000) |
| | Our-con | 36.28(.422) | 66.53(1.87) | .010(.001) | .000(.000) | .012(.001) | .122(.021) |
| | Ours | 3.500(.164) | 23.71(1.33) | .003(.000) | .000(.000) | .001(.000) | .280(.007) |
| (20, 30) | Smooth | 1.122(.023) | 1.983(.056) | .131(.006) | .000(.000) | .223(.006) | .000(.000) |
| | Lasso | 1.685(.028) | 3.770(.113) | .152(.005) | .000(.000) | .314(.008) | .000(.000) |
| | TLP | 0.507(.023) | 3.081(.180) | .077(.004) | .000(.000) | .198(.005) | .000(.000) |
| | Our-con | 1.593(.028) | 3.256(.097) | .136(.007) | .000(.000) | .055(.003) | .024(.004) |
| | Ours | 0.236(.015) | 1.812(.130) | .068(.016) | .000(.000) | .020(.002) | .032(.004) |
| (10, 90) | Smooth | 0.339(.007) | 0.603(.017) | .271(.014) | .000(.000) | .420(.012) | .000(.000) |
| | Lasso | 0.575(.010) | 1.439(.038) | .273(.007) | .000(.000) | .541(.009) | .000(.000) |
| | TLP | 0.250(.009) | 1.404(.061) | .196(.006) | .000(.000) | .434(.008) | .000(.000) |
| | Our-con | 0.519(.009) | 1.190(.032) | .284(.018) | .000(.000) | .071(.005) | .008(.002) |
| | Ours | 0.100(.005) | 0.748(.043) | .017(.020) | .000(.000) | .028(.004) | .012(.002) |

Table 4.

Average entropy loss (EL), average quadratic loss (QL), average false positive and false negative for sparseness pursuit (FPV and FNV), and average false positive and false negative for grouping (FPG and FNG), with SD in parentheses, based on 100 simulations, for estimating precision matrices in Example 4. Here “Lasso”, “TLP”, “Our-con” and “Ours” denote, respectively, estimation of individual matrices with the L1 sparseness penalty and with the non-convex TLP penalty, the convex counterpart of our method with the L1-penalty for sparseness and clustering, and our non-convex estimates obtained by solving (2) with penalty (6). The best performer is bold-faced.

| (p, L) | Method | EL | QL | FPV | FNV | FPG | FNG |
|---|---|---|---|---|---|---|---|
| (1000, 4) | Lasso | 378.5(2.09) | 829.4(13.3) | .0005(.0000) | .0270(.0030) | .0020(.0000) | .0002(.0003) |
| | TLP | 36.05(1.78) | 201.9(8.81) | .0004(.0000) | .0270(.0030) | .0020(.0000) | .0002(.0003) |
| | Our-con | 377.3(2.07) | 805.2(12.9) | .0004(.0000) | .0110(.0020) | .0017(.0000) | .0497(.0040) |
| | Ours | 26.8(1.35) | 160.9(7.266) | .0003(.0000) | .0130(.0020) | .0017(.0000) | .0267(.0027) |
| (2000, 4) | Lasso | 225.6(.413) | 358.1(1.13) | .0009(.0000) | .0000(.0000) | .0018(.0000) | .0000(.0000) |
| | TLP | 9.160(.083) | 54.17(.654) | .0007(.0000) | .0000(.0000) | .0015(.0000) | .0000(.0000) |
| | Our-con | 225.6(.413) | 358.1(1.12) | .0009(.0000) | .0000(.0000) | .0018(.0000) | .0000(.0000) |
| | Ours | 8.617(.081) | 51.79(.657) | .0006(.0000) | .0000(.0000) | .0005(.0000) | .1750(.0020) |

Compared with pursuit of sparseness alone (TLP), the improvement of our method ranges from 143% to 244% in EL and from 118% to 236% in QL when n = 120, and from 80.5% to 228% in EL and from 96.5% to 240% in QL when n = 300, as indicated in Table 3. This comparison suggests that exploring the sparseness structure alone is inadequate for multiple graphical models. Pursuit of two types of structures appears advantageous in terms of performance, especially for large matrices.

Table 3.

Average entropy loss (EL) and average quadratic loss (QL), with SD in parentheses, based on 100 simulations, for estimating multiple precision matrices in Example 3. Here “Smooth”, “Lasso”, “TLP”, “Our-con” and “Ours” denote, respectively, estimation of individual matrices with the kernel smoothing method proposed in [25], with the L1 sparseness penalty, and with the non-convex TLP penalty, the convex counterpart of our method with the L1-penalty for sparseness and clustering, and our non-convex estimates obtained by solving (2) with penalty (6). The best performer is bold-faced.

| (p, L) | Method | EL (n = 120) | QL (n = 120) | EL (n = 300) | QL (n = 300) |
|---|---|---|---|---|---|
| (30, 4) | Smooth | 0.468(.042) | 0.941(.097) | 0.231(.056) | 0.476(.034) |
| | Lasso | 1.158(.062) | 2.534(.175) | 0.736(.036) | 1.434(.086) |
| | TLP | 1.546(.100) | 3.625(.262) | 0.575(.045) | 1.301(.121) |
| | Our-con | 0.897(.066) | 1.823(.166) | 0.699(.038) | 1.317(.085) |
| | Ours | 0.501(.063) | 1.143(.160) | 0.247(.017) | 0.524(.042) |
| (200, 4) | Smooth | 6.882(.220) | 13.26(.535) | 2.578(.066) | 4.843(.130) |
| | Lasso | 10.37(.173) | 21.92(.498) | 5.449(.094) | 10.84(.211) |
| | TLP | 12.34(.202) | 25.87(.560) | 5.523(.153) | 12.08(.365) |
| | Our-con | 6.091(.199) | 12.34(.484) | 4.625(.098) | 8.625(.209) |
| | Ours | 5.079(.265) | 11.84(.658) | 1.682(.038) | 3.551(.096) |
| (20, 30) | Smooth | 0.490(.021) | 0.878(.042) | 0.278(.008) | 0.492(.015) |
| | Lasso | 0.786(.020) | 1.670(.052) | 0.564(.012) | 1.066(.027) |
| | TLP | 0.987(.036) | 2.454(.107) | 0.355(.014) | 0.819(.035) |
| | Our-con | 0.653(.023) | 1.281(.055) | 0.528(.012) | 0.959(.027) |
| | Ours | 0.317(.013) | 0.730(.036) | 0.183(.005) | 0.391(.014) |
| (10, 90) | Smooth | 0.230(.008) | 0.409(.017) | 0.115(.003) | 0.203(.005) |
| | Lasso | 0.318(.008) | 0.694(.022) | 0.205(.004) | 0.398(.008) |
| | TLP | 0.402(.012) | 1.010(.043) | 0.148(.004) | 0.346(.011) |
| | Our-con | 0.240(.008) | 0.487(.019) | 0.180(.004) | 0.335(.007) |
| | Ours | 0.158(.005) | 0.369(.014) | 0.082(.002) | 0.176(.005) |

Compared with its convex counterpart “Our-con”, our method leads to an improvement between 19.9% and 106% in EL and between 4.2% and 75.5% in QL when n = 120, and between 18.3% and 120% in EL and between 90.3% and 151% in QL when n = 300; see Table 3. This is expected because more accurate identification of structures tends to yield better parameter estimation.
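The percentages quoted for n = 120 appear to follow the relative difference 100 × (competitor − ours)/ours; this formula is an assumption inferred from the tabulated values rather than one stated in the text. A minimal sketch using the n = 120 EL entries of Table 3 for “Our-con” and “Ours”:

```python
def improvement(competitor, ours):
    """Relative improvement of `ours` over `competitor`, in percent."""
    return 100.0 * (competitor - ours) / ours

# (Our-con, Ours) EL pairs copied from Table 3, n = 120
el_pairs = {
    "(30, 4)": (0.897, 0.501),
    "(200, 4)": (6.091, 5.079),
    "(20, 30)": (0.653, 0.317),
    "(10, 90)": (0.240, 0.158),
}
el_gain = {k: round(improvement(c, o), 1) for k, (c, o) in el_pairs.items()}
print(min(el_gain.values()), max(el_gain.values()))  # prints: 19.9 106.0
```

The smallest and largest values reproduce the 19.9% and 106% figures reported for EL at n = 120.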

In contrast to the smoothing method [7, 25] for time-varying network analysis, across all cases except the low-dimensional case of L = 4 and p = 30 in Table 3, our method yields a 54.5% improvement in EL and a 20.3% improvement in QL when n = 120, and a 51.9% improvement in EL and a 25.8% improvement in QL when n = 300, provided that L is not too small; cf. Tables 1-4.

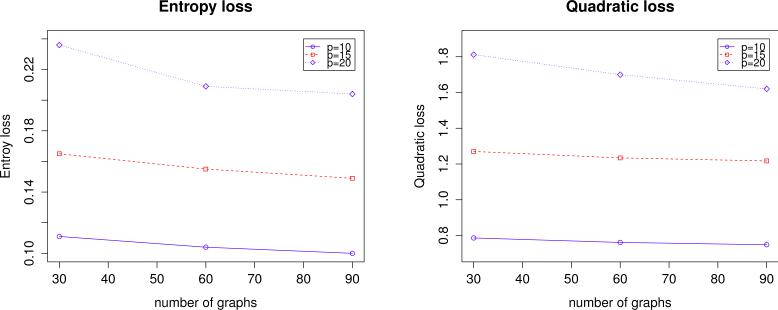

To understand how the proposed method performs relative to (n, p, L), we examine Table 3 and Figure 1 in further detail. Overall, the proposed method performs better as n and L increase and worse as p increases. In particular, as suggested by Figure 1, the improvement with increasing L agrees with our theoretical analysis.

Figure 1.

Average entropy and quadratic losses of the proposed method over different p and L values over 100 simulation replications in Example 1.

In summary, the proposed method achieves the desired objective of simultaneously pursuing both sparseness and clustering structures to battle the curse of dimensionality in a high-dimensional situation.

6 Signaling network inference

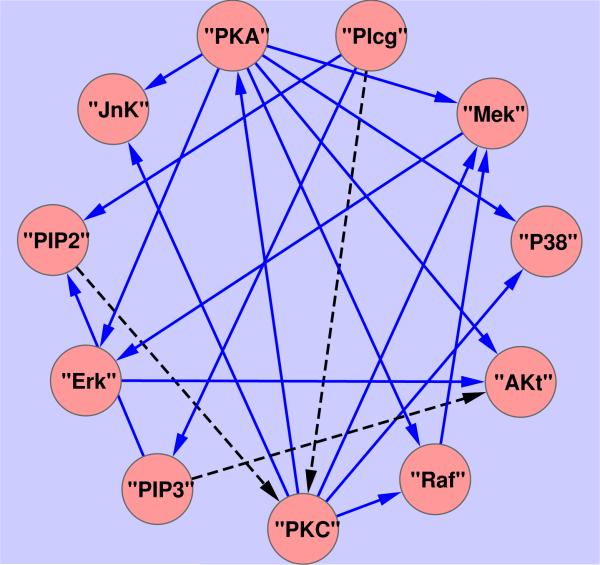

This section applies the proposed method to the multivariate single cell flow cytometry data in [16] to infer a signaling network or pathway; a consensus version of the network with eleven proteins is described in Figure 3. In this study, multiparameter flow cytometry recorded the quantitative amounts of the eleven proteins in a single cell as an observation. To infer the network, experimental perturbations on various aspects of the network were imposed before the amounts of the eleven proteins were measured under each condition. The idea was that, if a chemical was applied to stimulate or inhibit the activity of a protein, then both the abundance of the protein and those of its downstream proteins in the network would be expected to increase or decrease, while those of unrelated proteins would barely change. There were ten types of experimental perturbations on different targets: 1) activating a target (CD3) in the upstream of the network so that the whole network was expected to be perturbed; 2) activating a target (CD28) in the upstream of the network; 3) activating a target (ICAM2) in the upstream of the network; 4) activating PKC; 5) activating PKA; 6) inhibiting PKC; 7) inhibiting Akt; 8) inhibiting PIP2; 9) inhibiting Mek; 10) inhibiting a target (PI3K) in the upstream of the network. In [16], data were collected under nine experimental conditions and then used to infer a directed network; each of the nine experimental conditions was either a single type of perturbation or a combination of two or three types of perturbations. Interestingly, data were also collected under another five conditions, each of which was a combination of two of the previous nine conditions. Hence, the data offered an opportunity to infer two networks under the two sets of conditions: since the two sets of conditions largely overlapped, we would expect the two networks to be largely similar to each other; on the other hand, due to the difference between the two sets of conditions, some deviations between the two networks were also anticipated. There were n1 = 7466 and n2 = 4206 observations under the two sets of conditions, respectively.

Figure 3.

Signaling network reproduced from Figure 3(A) of [16], where the black dashed lines represent links that have been missed by the methods in [16].

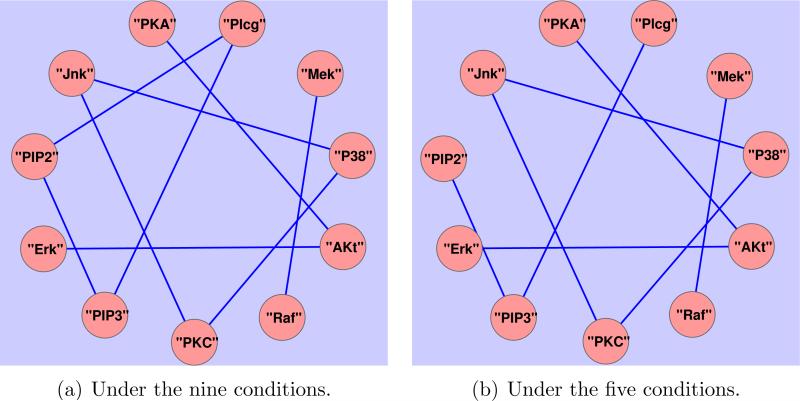

We apply the proposed method to the normalized data under the two sets of conditions, respectively. Given the expected similarities between the two networks, we consider grouping to encourage common structure defined by connecting edges between the two networks. The tuning parameters are estimated by three-fold cross-validation. The two reconstructed undirected networks are displayed in Figure 2, with 9 and 8 estimated (undirected) links for the two groups of conditions; these links are a subset of the 20 (directed) links in the gold standard signaling network displayed in Figure 3, which is a consensus network that has been verified biologically, cf. [16]. The reconstructed undirected graphs miss some edges as compared to the gold standard network, for instance, the links from protein “PKC” to “Raf” and “Mek”. The three edges missed by [16], “PIP3” to “Akt”, “Plcg” to “PKC”, and “PIP2” to “PKC”, are also missed by our method, possibly reflecting a lack of information in the data because no direct interventions were imposed on “PIP3” and “Plcg”.
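The three-fold cross-validation used for tuning can be sketched generically as below. The criterion (held-out Gaussian negative log-likelihood) and the estimator are assumptions for illustration: `ridge_precision` is a simple stand-in, not the proposed penalized estimator, and all function names are hypothetical.

```python
import numpy as np

def neg_loglik(s_test, omega):
    """Gaussian negative log-likelihood of held-out data, up to constants."""
    _, logdet = np.linalg.slogdet(omega)
    return np.trace(s_test @ omega) - logdet

def ridge_precision(s_train, lam):
    """Stand-in estimator: inverse of the ridge-regularized sample covariance."""
    return np.linalg.inv(s_train + lam * np.eye(s_train.shape[0]))

def cv_select(x, lams, n_folds=3, seed=0):
    """Return the tuning value with the smallest average held-out loss."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(x.shape[0]), n_folds)
    scores = []
    for lam in lams:
        total = 0.0
        for test_idx in folds:
            train = np.delete(x, test_idx, axis=0)       # rows not in this fold
            s_train = np.cov(train, rowvar=False)
            s_test = np.cov(x[test_idx], rowvar=False)
            total += neg_loglik(s_test, ridge_precision(s_train, lam))
        scores.append(total / n_folds)
    return lams[int(np.argmin(scores))], scores

# Synthetic stand-in data: 90 observations of 5 variables
x = np.random.default_rng(1).standard_normal((90, 5))
lams = [0.01, 0.1, 1.0]
best_lam, scores = cv_select(x, lams)
```

With several tuning parameters, as in the proposed method, the same loop would run over a grid of parameter combinations instead of a single list.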

Figure 2.

Reconstructed networks for simultaneous pursuit of clustering and sparsity.

Overall, the proposed method appears to work well in that the network inferred from the first set of conditions recovers one more dependence relationship than that from the second set of conditions, which is expected given that the second set of interventional conditions is less specific than the first one.

Here we analyze the data by contrasting the network constructed under the nine conditions with n1 = 7466 against that under the five conditions with n2 = 4206. Of particular interest is the detection of network structural changes between the two sets of conditions.
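Given two estimated precision matrices, structural changes between the networks can be read off by comparing their sets of nonzero off-diagonal entries. The sketch below uses hypothetical helper names and a toy three-node example with placeholder labels and values; it does not reproduce the estimates from the flow cytometry data.

```python
import numpy as np

def edge_set(omega, names, tol=1e-8):
    """Undirected edges {a, b} whose off-diagonal entry is nonzero."""
    p = omega.shape[0]
    return {frozenset((names[i], names[j]))
            for i in range(p) for j in range(i + 1, p)
            if abs(omega[i, j]) > tol}

def compare_networks(omega1, omega2, names):
    """Split edges into those shared and those unique to each network."""
    e1, e2 = edge_set(omega1, names), edge_set(omega2, names)
    return {"shared": e1 & e2, "only_first": e1 - e2, "only_second": e2 - e1}

names = ["A", "B", "C"]                       # placeholder node labels
omega1 = np.array([[1.0, 0.4, 0.0],
                   [0.4, 1.0, 0.3],
                   [0.0, 0.3, 1.0]])          # edges A-B and B-C
omega2 = np.array([[1.0, 0.4, 0.0],
                   [0.4, 1.0, 0.0],
                   [0.0, 0.0, 1.0]])          # edge A-B only
diff = compare_networks(omega1, omega2, names)
```

In this toy example the edge A-B is shared, and B-C appears only in the first network.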

7 Discussion

This article proposes a novel method to pursue two disparate types of structures, sparseness and clustering, for multiple Gaussian graphical models. The proposed method is equipped with an efficient algorithm for large graphs, which is integrated with a partition rule to break down a large problem into many separate small problems to solve. For data analysis, we have considered signaling network inference in a low-dimensional situation. Worthy of note is that the proposed method can be equally applied to high-dimensional data, such as reconstructing and comparing gene regulatory networks across four subtypes of glioblastoma multiforme based on gene expression data [21].

To make the proposed method useful in practice, inferential tools need to be further developed. A Monte Carlo method may be considered given the level of complexity of the underlying problems. Moreover, the general approach developed here can be expanded to other types of graphical models, for instance, dynamic network models or time-varying graphical models [7]. This enables us to build time dependency into a model through, for example, a Markov property. Further investigation is necessary.

Table 2.

Average entropy loss (EL), average quadratic loss (QL), average false positive and false negative for sparseness pursuit (FPV and FNV), and average false positive and false negative for grouping (FPG and FNG), with SD in parentheses, based on 100 simulations, for estimating precision matrices in Example 2 with n = 300. Here “Smooth”, “Lasso”, “TLP”, “Our-con” and “Ours” denote, respectively, estimation of individual matrices with the kernel smoothing method proposed in [25], with the L1 sparseness penalty, and with the non-convex TLP penalty, the convex counterpart of our method with the L1-penalty for sparseness and clustering, and our non-convex estimates obtained by solving (2) with penalty (6). The best performer is bold-faced.

| (p, L) | Method | EL | QL | FPV | FNV | FPG | FNG |
|---|---|---|---|---|---|---|---|
| (30, 4) | Smooth | 0.418(.025) | 1.081(.072) | .387(.054) | .009(.008) | .543(.060) | .002(.002) |
| | Lasso | 0.732(.041) | 1.840(.122) | .229(.011) | .061(.013) | .466(.016) | .006(.005) |
| | TLP | 0.772(.055) | 2.290(.192) | .038(.005) | .184(.020) | .162(.010) | .037(.014) |
| | Our-con | 0.591(.039) | 1.373(.104) | .081(.019) | .033(.013) | .056(.035) | .146(.021) |
| | Ours | 0.359(.036) | 1.012(.111) | .044(.009) | .031(.015) | .004(.002) | .233(.012) |
| (200, 4) | Smooth | 3.198(.069) | 7.823(.187) | .129(.010) | .030(.006) | .161(.010) | .013(.002) |
| | Lasso | 6.902(.140) | 17.91(.435) | .049(.001) | .234(.010) | .110(.002) | .039(.004) |
| | TLP | 8.350(.215) | 23.71(.710) | .003(.001) | .493(.015) | .017(.001) | .116(.010) |
| | Our-con | 6.151(.148) | 15.58(.439) | .014(.005) | .198(.013) | .030(.010) | .142(.046) |
| | Ours | 2.977(.135) | 8.108(.399) | .001(.000) | .191(.010) | .001(.000) | .284(.012) |
| (20, 30) | Smooth | 0.409(.011) | 0.839(.006) | .063(.007) | .024(.004) | .290(.007) | .005(.001) |
| | Lasso | 0.470(.011) | 1.157(.034) | .290(.006) | .034(.004) | .611(.008) | .001(.001) |
| | TLP | 0.491(.017) | 1.421(.057) | .081(.004) | .118(.007) | .341(.006) | .004(.001) |
| | Our-con | 0.303(.010) | 0.682(.025) | .071(.013) | .008(.002) | .044(.014) | .023(.002) |
| | Ours | 0.111(.006) | 0.317(.019) | .012(.005) | .006(.003) | .011(.002) | .032(.003) |
| (10, 90) | Smooth | 0.123(.003) | 0.248(.007) | .132(.013) | .008(.002) | .518(.008) | .001(.000) |
| | Lasso | 0.170(.003) | 0.419(.010) | .405(.010) | .007(.002) | .798(.008) | .000(.000) |
| | TLP | 0.155(.005) | 0.439(.014) | .175(.007) | .020(.003) | .609(.007) | .000(.000) |
| | Our-con | 0.099(.008) | 0.230(.007) | .135(.024) | .000(.000) | .043(.015) | .001(.001) |
| | Ours | 0.040(.002) | 0.117(.005) | .015(.014) | .000(.000) | .009(.001) | .001(.001) |

8 Appendix

Proof of Theorem 1: The equivalence follows directly from Theorem 4.1 in [20].

Next we present two lemmas to be used in the proof of Theorem 2.

Lemma 1 For any , (22) and (23) are equivalent:

| (22) |

| (23) |

Proof: If (22) holds, then for any , |cTx0| = |b1cTx1 + · · · + bmcTxm| ≤ |b1||cTx1| + · · · + |bm||cTxm|, which is no greater than |cTx1| + · · · + |cTxm|, implying (23). For the converse, assume that for any , |cTx0| ≤ |cTx1| + · · · + |cTxm|. Consider the following convex minimization:

| (24) |

where B(x) is an indicator function with B(x) = 0 when |x| ≤ 1 and B(x) = +∞ otherwise. First, we need to show that the constraint set in (24) is nonempty. Suppose that it is empty. Let c0 = (I − P(x1, · · · , xm))x0, where P(x1, · · · , xm) is the projection matrix onto the linear space spanned by x1, · · · , xm. Since x0, x1, · · · , xm are linearly independent, we have that ||c0||2 > 0. Therefore , contradicting the assumption that |cTx0| ≤ |cTx1| + · · · + |cTxm|. Hence the constraint set of (24) is nonempty and we denote its optimal value by p*. Next we convert (24) to its dual by introducing dual variables for the equality constraints in (24) through Lagrange multipliers:

| (25) |

By the assumption that |cTx0| ≤ |cTx1| + · · · + |cTxm| for any c, the maximum d* of (25) must satisfy d* ≤ 0. Hence d* = 0 because it is attained by ν = 0. Moreover, Slater's condition holds because the constraint set of (24) is nonempty. By the strong duality principle, the duality gap is zero, and hence p* = d* = 0. Consequently, a minimizer (b1, · · · , bm) of (24) exists with |b1| ≤ 1, · · · , |bm| ≤ 1, satisfying the constraint x0 + b1x1 + · · · + bmxm = 0. This implies (22). This completes the proof.
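The first direction of the proof of Lemma 1 is an application of the triangle inequality and can be checked numerically. The sketch below draws random vectors and coefficients (all values are synthetic and the variable names are illustrative) and verifies that |cTx0| ≤ |cTx1| + · · · + |cTxm| whenever x0 is a combination of x1, · · · , xm with coefficients bounded by one in absolute value.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m = 6, 3
xs = rng.standard_normal((m, d))        # rows are x_1, ..., x_m
b = rng.uniform(-1.0, 1.0, size=m)      # coefficients with |b_i| <= 1
x0 = b @ xs                             # x_0 = b_1 x_1 + ... + b_m x_m

cs = rng.standard_normal((10_000, d))   # random directions c
lhs = np.abs(cs @ x0)                   # |c^T x_0| for each c
rhs = np.abs(cs @ xs.T).sum(axis=1)     # |c^T x_1| + ... + |c^T x_m|
holds = bool(np.all(lhs <= rhs + 1e-12))
```

A numerical check of this kind does not replace the converse direction, which relies on the duality argument given in the proof.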

Lemma 2 For s = (s1, · · · , sL) and a connected graph , there exist |gl| ≤ 1, |gll′| ≤ 1, gll′ = −gl′l; 1 ≤ l, l′ ≤ L such that

| (26) |

is equivalent to for any with .

Proof: First, for some |gl| ≤ 1, |gll′| ≤ 1, gll′ = −gl′l; 1 ≤ l, l′ ≤ L, if (26) holds then,

which is no greater than for any . Conversely, by Lemma 1, it suffices to show that for any ,

| (27) |

provided that for any . To this end, for any permutation (k1, · · · , kL) ∈ σ(1, · · · , L) and l = 1, · · · , L, define convex region , where σ(1, · · · , L) denotes the set of all possible permutations of (1, · · · , L). It is easy to see that . Then, consider the function . Note that g(c) over each region is a convex function. By the maximum principle, its maximum (over each region) can be attained at the extreme points . It is easy to show that the extreme points must be of the form for some and t ≠ 0, that is, the non-zero components must be equal to each other. Hence, g(c) evaluated at the extreme points of reduces to for some , which, by assumption, is always nonpositive. This completes the proof.

Proof of Theorem 2: We shall use the KKT condition of (12), or local optimality, which is in the form of

| (28) |

where is the inversion of matrices and ∂||.||1 denotes the subgradient of the l1 function. If for any , , 1 ≤ l ≤ L, then for any , , 1 ≤ l ≤ L. By Lemma 2, we must have for any , . Conversely, if for any , , again by Lemma 2, the KKT condition in (28) holds at , l = 1, · · · , L for jkth components for any , , 1 ≤ l ≤ L. Hence, for any , , 1 ≤ l ≤ L. This completes the proof.

Proof of Theorem 3: Let be the DC solution at iteration m. If the diagonal matrix is initialized as in Algorithm 1, then an application of Theorem 2 on yields that for any , , implying that for any , , 1 ≤ l ≤ L. Next, we prove by induction that if for any , , then for any , , 1 ≤ l ≤ L holds for any m ≥ 1. Suppose that for any , , 1 ≤ l ≤ L holds for some m ≥ 2, then at DC iteration m, . This, together with Theorem 2, again implies that for any , , 1 ≤ l ≤ L. Using the finite convergence of the DC algorithm, cf. Theorem 1, we have for any , , implying that for any , , 1 ≤ l ≤ L. Conversely, if for some for any , , 1 ≤ l ≤ L, consider the next DC iteration; we have for any , , 1 ≤ l ≤ L. Using the same argument as above with the converse part of Theorem 2, we obtain that for any , . This completes the proof.

Proof of Corollary 1: For the fused graph, let and {1, · · · , L}, then if then . Conversely, if , then . Next, assume that , and write with i1 ≤ i1 + l1 < i2 ≤ i2 + l2 < · · · < iq ≤ iq + lq. Then

In the case of the complete graph, set , given sk1 ≥ · · · ≥ skL, then we have . Conversely, for any , . This completes the proof.

Proof of Theorem 4: The proof uses a large deviation probability inequality of [23] to treat one-sided log-likelihood ratios with constraints. This enables us to obtain sharp results without a moment condition on both tails of the log-likelihood ratios.

Recall that and . Let a class of candidate subsets be for sparseness pursuit. Note that any A ⊂ {1, · · · , d} can be partitioned into . Then we partition accordingly with , where , with . Moreover, , where . So .

To bound the error probability, note that if then then , by Definition 1. Conversely, if or , then . Thus . So . This together with implies that . Consequently is upper bounded by

where is the outer measure and the last two inequalities use the fact that and for .

For I, we apply Theorem 1 of [23] to bound each term. Towards this end, we verify their entropy condition (3.1) for the local entropy over for , and i = 0, · · · , d0. Under Assumption A satisfies there with respect to ε > 0, that is,

| (29) |

for some constant c3 > 0 and c4, say c3 = 10 and . By Assumption A, implies (29), provided that .

Now, let and . Using inequalities for binomial coefficients: and , , we have, by Theorem 1 of [23], that for a constant c2 > 0, say ,

This completes the proof.

Proof of Theorem 5: The proof is similar to that of Theorem 4 with some minor modifications. Given τ and , a partition associated with is defined to satisfy the following (i) ; (ii) |βj1 − βj2| ≤ τ for any j different groups. Let .

The rest of the proof is basically the same as that in Theorem 2 with a modification that and are replaced by and respectively. Here, , , and .

Next, we show if and only if , where . Now . By (14), , with d0 = d1, yields that ; . In addition, the second constraint of (14) implies , yielding that for any j1, j2 , , i = 1, · · · , K. By graph consistency of , is connected over , implying that for any j1, , i = 1, · · · , K. This further implies that and , meaning that . On the other hand, it is obvious that if then . Hence, from which we conclude that . This together with implies that is bounded by

where the last step uses the fact that , under Assumption B. Then, for some constant c3, is upper bounded by

provided that . This completes the proof.

Proof of Corollary 2: First we derive an upper bound of S*. Let the number of nonzero elements of the precision matrix in the sth cluster for l = 1, · · · , L. Let be the average number of nonzero elements. For any with , let and . Let ajk = #{l : xjkl ≠ 0} for . Note that q0 ≤ p0 and since |A| ≤ d0. By the definition of , we have

| (30) |

This together with log(1 + g0) ≤ g0 implies log S* ≤ 2g0 max(log(d0/g0),1). To lower bound Cmin(θ0), we proceed similarly with the proof of Proposition 2 in [17]. Specifically, note that . Thus,

| (31) |

An application of Proposition 2 of [17] yields that each term in (31) is lower bounded by . Therefore, . Now if , we have . If , then by definition of , there must exist (j, k, l) such that ωjkl = ωjk(l+1) and . Here

Combining the two cases yields that

which, after taking the infimum over , leads to . This, together with the upper bound on log S* in Theorems 4 and 5, gives a sufficient condition for simultaneous pursuit of sparseness and clustering: , for some c0 > 0. Moreover, under this condition, and as n, d → +∞. This completes the proof.

Footnotes

This research was supported in part by the National Science Foundation Grant DMS-1207771 and National Institutes of Health Grants R01GM081535, HL65462 and R01HL105397. The authors thank the editors and the reviewers for helpful comments and suggestions.

References

- 1. Banerjee O, El Ghaoui L, d'Aspremont A. Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data. Journal of Machine Learning Research. 2008;9:485–516.

- 2. Boyd SP, Vandenberghe L. Convex optimization. Cambridge Univ Pr; 2004.

- 3. Cormen TH. Introduction to algorithms. The MIT press; 2001.

- 4. Fan J, Feng Y, Wu Y. Network exploration via the adaptive lasso and SCAD penalties. The Annals of Applied Statistics. 2009;3(2):521–541. doi: 10.1214/08-AOAS215SUPP.

- 5. Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9(3):432. doi: 10.1093/biostatistics/kxm045.

- 6. Guo J, Levina E, Michailidis G, Zhu J. Joint estimation of multiple graphical models. Biometrika. 2011;98(1):1. doi: 10.1093/biomet/asq060.

- 7. Kolar M, Xing EP. On time varying undirected graphs. Proceedings of the 14th International Conference on Artificial Intelligence and Statistics. 2011.

- 8. Kolmogorov AN, Tikhomirov VM. ε-entropy and ε-capacity of sets in function spaces. Uspekhi Matematicheskikh Nauk. 1959;14(2):3–86.

- 9. Lauritzen SL. Graphical models. Oxford University Press; 1996.

- 10. Li B, Chun H, Zhao H. Sparse estimation of conditional graphical models with application to gene networks. Journal of the American Statistical Association. 2012;107:152–167. doi: 10.1080/01621459.2011.644498.

- 11. Li H, Gui J. Gradient directed regularization for sparse Gaussian concentration graphs, with applications to inference of genetic networks. Biostatistics. 2006;7(2):302. doi: 10.1093/biostatistics/kxj008.

- 12. Mazumder R, Hastie T. Exact covariance thresholding into connected components for large-scale graphical lasso. The Journal of Machine Learning Research. 2012;13:781–794.

- 13. Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the lasso. The Annals of Statistics. 2006;34(3):1436–1462.

- 14. Rocha GV, Zhao P, Yu B. A path following algorithm for sparse pseudo-likelihood inverse covariance estimation (splice). Arxiv preprint arXiv:0807.3734. 2008.

- 15. Rothman AJ, Bickel PJ, Levina E, Zhu J. Sparse permutation invariant covariance estimation. Electronic Journal of Statistics. 2008;2:494–515.

- 16. Sachs K, Perez O, Pe'er D, Lauffenburger DA, Nolan GP. Causal protein-signaling networks derived from multiparameter single-cell data. Science. 2005;308(5721):523. doi: 10.1126/science.1105809.

- 17. Shen X, Pan W, Zhu Y. Likelihood-based selection and sharp parameter estimation. Journal of the American Statistical Association. 2012;107:223–232. doi: 10.1080/01621459.2011.645783.

- 18. Shimamura T, Imoto S, Yamaguchi R, Nagasaki M, Miyano S. Inferring dynamic gene networks under varying conditions for transcriptomic network comparison. Bioinformatics. 2010;26(8):1064–1072. doi: 10.1093/bioinformatics/btq080.

- 19. Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. Journal of the Royal Statistical Society: Series B. 2005;67:91–108.

- 20. Tseng P. Convergence of a block coordinate descent method for nondifferentiable minimization. Journal of Optimization Theory and Applications. 2001;109(3):475–494.

- 21. Verhaak R, Hoadley KA, Purdom E, Wang V, Qi Y, Wilkerson MD, Miller CR, Ding L, Golub T, Mesirov JP, et al. An integrated genomic analysis identifies clinically relevant subtypes of glioblastoma characterized by abnormalities in pdgfra, idh1, egfr and nf1. Cancer Cell. 2010;17(1):98. doi: 10.1016/j.ccr.2009.12.020.

- 22. Witten DM, Friedman JH, Simon N. New insights and faster computations for the graphical lasso. Journal of Computational and Graphical Statistics. 2011;20:892–900.

- 23. Wong WH, Shen X. Probability inequalities for likelihood ratios and convergence rates of sieve mles. The Annals of Statistics. 1995:339–362.

- 24. Yuan M, Lin Y. Model selection and estimation in the Gaussian graphical model. Biometrika. 2007;94(1):19.

- 25. Zhou S, Lafferty J, Wasserman L. Time varying undirected graphs. Machine Learning. 2010;80(2):295–319.

- 26. Zhu Y, Shen X, Pan W. Simultaneous grouping pursuit and feature selection over an undirected graph. Journal of the American Statistical Association. 2013;108:713–725. doi: 10.1080/01621459.2013.770704.