Abstract

It is difficult to create spoken forms that can be understood on the spot. But the manual modality, in large part because of its iconic potential, allows us to construct forms that are immediately understood and thus require essentially no time to develop. This paper contrasts manual forms for actions produced over three time spans (by silent gesturers who are asked to invent gestures on the spot, by homesigners who have created gesture systems over their lifespans, and by signers who have learned a conventional sign language from other signers) and finds that properties of the predicate differ across these time spans. Silent gesturers use location to establish co-reference in the way established sign languages do, but show little evidence of the segmentation that sign languages display in motion forms for manner and path, and little evidence of the finger complexity pattern that sign languages display in the handshapes of predicates representing events. Homesigners, in contrast, not only use location to establish co-reference, but also display segmentation in their motion forms for manner and path and sign-language levels of finger complexity in their object handshapes, although they have not yet decreased finger complexity in their handling handshapes to the levels found in sign languages. The manual modality thus allows us to watch language as it grows, offering insight into factors that may have shaped, and may continue to shape, human language.

Keywords: Sign language, gesture, homesign, predicate classifiers, co-reference, manner, path, segmentation, Nicaraguan Sign Language

Sign languages, like spoken languages, develop over an historical timespan. As a result, the current form of a sign language is a product of generations of use by a community of signers.1 A number of competing pressures have been identified that might play a role in shaping the current forms of language, be they signed or spoken (Bloomfield, 1933; Jakobson & Halle, 1956; Sapir, 1921). For example, Slobin (1977) suggests that the form a language takes is shaped, at least in part, by the requirement that language be semantically clear, and by the often competing requirement that language be processed efficiently and quickly. Regardless of the pressures that cause languages to change, in both spoken (Sapir, 1921) and signed (Frishberg, 1975) languages, the changes that come about in response to these pressures tend to be constrained by intra-linguistic factors; that is, the changes are not free to vary indiscriminately but rather tend to conform to the internal system that defines the language.

Although it is difficult to create spoken forms that can be immediately understood, the iconicity of the manual modality -- the fact that the hand can transparently represent its referent (e.g., a twisting hand motion can refer to the act of twisting) -- makes it possible to invent signed forms that can be understood “on the spot” and thus do not need time to develop. The manual modality thus allows us to explore the impact that pressures across different timespans have on the form of language.

We can, for example, compare signs invented over three different time spans:2

(1) Conventional sign languages, used by a deaf community, are passed down from generation to generation. Sign languages are autonomous languages not based on the spoken language in the community (e.g., Sutton-Spence & Woll, 1999). Although they share many characteristics, there is also considerable diversity across the sign languages of the world (Brentari, 2010) and these differences are likely to evolve over historical time.

(2) Homesigns, invented by deaf individuals who are unable to acquire the spoken language that surrounds them and who have not been exposed to sign language, are used to communicate with hearing people and, in some instances, are developed by one deaf individual over a period of years.3 Homesign has been studied in American (Goldin-Meadow & Mylander, 1984; Goldin-Meadow, 2003a), Chinese (Goldin-Meadow & Mylander, 1998), Turkish (Goldin-Meadow, Namboodiripad, Mylander, Özyürek & Sancar, in press 2013), Brazilian (Fusellier-Souza, 2006), and Nicaraguan (Coppola & Newport, 2005) individuals, and has been found to contain many, but not all, of the properties that characterize natural language; e.g., structure within the word (morphology, Goldin-Meadow, Mylander & Butcher, 1995; Goldin-Meadow, Mylander & Franklin, 2007), structure within basic components of the sentence (markers of thematic roles, Goldin-Meadow & Feldman, 1977; nominal constituents, Hunsicker & Goldin-Meadow, 2012; recursion, Goldin-Meadow, 1982; the grammatical category of subject, Coppola & Newport, 2005), and structure in how sentences are modulated (negations and questions, Franklin, Giannakidou & Goldin-Meadow, 2011).

(3) Silent gestures, created by hearing speakers who are asked not to talk and to use only their hands to communicate, are developed in the moment. Silent gestures have been mined primarily for word order in speakers of a wide variety of languages: English, Chinese, Turkish, Spanish, Italian, Hebrew, Japanese, and Korean (Goldin-Meadow, So, Özyürek & Mylander, 2008; Langus & Nespor, 2010; Meir, Lifshitz, Ilkbasaran & Padden, 2010; Gibson, Piantadosi, Brink, Bergen, Lim & Saxe, 2013; Hall, Ferreira & Mayberry, 2013; Hall, Mayberry & Ferreira, 2013). Although the canonical word orders for simple transitive sentences differ across these languages, the gesture order that silent gesturers use to describe a prototypical event encoded in a transitive sentence (i.e., an animate acting on an inanimate) is identical across all of the language groups. The silent gestures thus do not appear to be a mere translation into gesture of the language that the gesturer routinely speaks, but instead reflect the construction of new forms on the spot.

My goal is to contrast the manual forms for actions (i.e., predicates) produced by signers, homesigners, and silent gesturers, focusing on three dimensions that were first introduced by Stokoe (1960) as phonological parameters within a structural linguistics framework. These dimensions have continued to be used more broadly in characterizing processes within sign languages, which is how they are used here: (1) variations in location that refer back to previously identified referents; (2) variations in motion that highlight different aspects of an event; and (3) variations in handshape that differ as a function of type of classifier predicate. In addition, where the data are available, I also consider the gestures hearing speakers produce as they talk (i.e., co-speech gesture), as those gestures could serve as a model for homesign and can also find their way into conventional sign languages (e.g., Nyst, 2012).

Nicaragua offers an excellent forum within which to explore these issues. In the late 1970s and early 1980s, rapidly expanding programs in special education in Nicaragua brought together in great numbers deaf children and adolescents who were, at the time, homesigners (Kegl & Iwata, 1989; Senghas, 1995). As they interacted on school buses and in the schoolyard, these students converged on a common vocabulary of signs and ways to combine those signs into sentences, and a new language, Nicaraguan Sign Language (NSL), was born. The language has continued to develop as new cohorts of children enter the community and learn to sign from older peers. At the same time, there are still individuals in Nicaragua who do not have access to NSL; in other words, there are still Nicaraguan homesigners. Moreover, homesigners in Nicaragua can continue to use their own communication systems into adulthood, unlike homesigners in the U.S., China or Turkey, who are likely to either learn a conventional sign language or receive cochlear implants and focus on spoken language beyond childhood. Adult Nicaraguan homesign systems have been studied and found to display many properties found in natural language (referential devices, Coppola & Senghas, 2010; the grammatical category of subject, Coppola & Newport, 2005; lexical quantifiers, Spaepen, Coppola, Spelke, Carey & Goldin-Meadow, 2011; plural markers, Coppola et al., 2013).

Although it would be most convincing to compare a given form type across all three groups (signers, homesigners, and silent gesturers) within Nicaragua, the relevant research has not been done in all cases. I will therefore piece together comparisons using data from other cultures to explore how three aspects of form (location, motion, handshape) vary over time-scales in signs and gestures used to represent actions (predicates).

Location: Using space for co-reference

Sign languages have evolved in a different biological medium from spoken languages. Despite striking differences in modality, many grammatical devices found in spoken languages are also found in sign languages (e.g., signaling who does what to whom by the order in which a word or sign is produced; Liddell, 1984). But sign languages employ at least one grammatical device that is not possible in spoken language—signaling differences in meaning by modulating where signs are produced in space. All sign languages studied thus far use space to indicate referents and the relations among them (Aronoff, Meir, Padden, & Sandler, 2003; Mathur & Rathmann, 2010). These uses of space lay the foundation for maintaining coherence in a discourse. As one simple example from American Sign Language (ASL), a signer can associate a spatial location with an entity and later articulate a sign with respect to that location to refer back to the entity, akin to co-reference in a spoken language (e.g., “Bert yelled at Ernie and then apologized to him” where him refers back to Ernie). After associating a location in space with Ernie, the signer can later produce a verb with respect to that space to refer back to Ernie without repeating the sign for Ernie (Padden, 1988). By using the same space for an entity, signers maintain co-reference. Co-reference is an important function in all languages (Bosch, 1983) and considered a “core” property of grammar (Jackendoff, 2002).

Using space for co-reference is found not only in well-established sign languages, but also in emerging languages, for example, in the first cohort of NSL (Senghas & Coppola, 2001). Moreover, Flaherty, Goldin-Meadow, Senghas and Coppola (2013) have recently expanded the groups within which this phenomenon has been explored to include adult homesigners in Nicaragua. They asked four homesigners, four cohort 1 signers (exposed to NSL upon school entry in the late 1970s and early 1980s), four cohort 2 signers (exposed to NSL upon school entry in the late 1980s and early 1990s), and four cohort 3 signers (exposed to NSL upon school entry in the late 1990s and early 2000s) to view video clips depicting simple events involving either two animate participants or one animate and one inanimate participant. The adults were asked to describe what they saw to a family member (homesigners) or to a peer (signers). All four homesigners were found to use gestures co-referentially: they produced verb gestures in spatial locations previously associated with a referent (the subject of the sentence, the object, or both), although they did so less often than all three of the NSL cohorts. Using space for co-reference thus appears to be a device basic to manual languages, young or old.

But will hearing speakers asked to communicate using only their hands construct this same device immediately? Borrowing a paradigm used in previous work (Casey, 2003; Dufour, 1993; Goldin-Meadow, McNeill, & Singleton, 1996), So, Coppola, Licciardello and Goldin-Meadow (2005) instructed 18 hearing adults, all naïve to sign language, to describe videotaped scenes using gesture and no speech (i.e., silent gesture). One group of adults saw events presented in an order that told a story (connected events). The other group saw the same events in random order interspersed with events from other stories (unconnected events). The adults did indeed use space coreferentially—they established a location for a character with one gesture and then re-used that location in subsequent gestures to refer back to the character. Moreover, they did so significantly more often when describing connected events than when describing unconnected events (i.e., when they could use the same spatial framework throughout the story).

To situate these findings within a gestural context, it is interesting to note that when the same adults were asked to describe the events in speech, they did not use their co-speech gestures (i.e., the gestures that they produced along with speech) co-referentially any more often for connected events than for unconnected events, suggesting that hearing individuals use space in this way only when their gestures are forced to assume the full burden of communication.4 If using space co-referentially comes so naturally to the manual modality, why then is space not used for this purpose more often in gestures that accompany speech? Co-speech gestures may work differently from gesture used on its own simply because the gestures that accompany speech do not form a system unto themselves but are instead integrated into a single system with speech (Goldin-Meadow et al., 1996); co-reference can be marked in speech, thus eliminating the need for it to be redundantly marked in gesture.

Silent gesturers and homesigners can thus both take a first step toward using location in the way it is used in conventional sign languages (see Table 1), suggesting that the seeds of a spatial system are present in individuals who are inventing their own gestures, either in the moment or over time. But one important point to note is that the mappings used by the silent gesturers (or the homesigners, for that matter) were not particularly abstract. All of the events that the adults were shown involved physical actions; what the adults did was establish a gestural stage and then use that stage to reenact the motion events they saw. A question for future research is whether silent gesturers (as well as homesigners) can take the step taken by all conventional sign languages and use their gestural stage to convey abstract relations (e.g., can they use a location established for Ernie to indicate that Bert is talking to Ernie, as opposed to physically interacting with him?). Turning a co-referential system into a spatial grammar may--or may not--require a community of signers and transmission over generations of users.

Table 1. Structural Uses of Location, Motion, and Handshape in Predicates by Signers, Homesigners, and Silent Gesturers.

| Linguistic Dimension | Conventional Sign Language (learned from other signers) | Homesign (developed over time) | Silent Gesture (created on the spot) |
|---|---|---|---|
| Location: Predicates positioned in space to establish co-reference | YES | YES | YES |
| Motion: Motion predicates segmented into manner and path components | YES | YES | NO |
| Handshape: Predicate classifiers distinguished by finger complexity (high on object, low on handling) | YES | YES, but… | NO |

Motion: Using motion to focus on different aspects of an event

Languages, both signed and spoken, often contain separate lexical items for manner (roll) and path (down) despite the fact that these two aspects of crossing-space events occur simultaneously. For example, when a ball rolls down an incline, the rolling manner occurs throughout the downward path. Senghas, Kita, and Özyürek (2004) found evidence of manner/path segmentation in the earliest cohorts of NSL. Members of cohort 1 analyzed complex motion events into basic elements and sequenced these elements into structured expressions (although they did so less often than members of cohorts 2 and 3). For example, all of the NSL signers produced separate signs for manner and path and produced them in sequence, e.g., roll-down. Interestingly, this type of segmentation was not observed in the gestures that Nicaraguan Spanish speakers produced along with their speech. The hearing speakers conflated manner and path into a single gesture; for example, a rolling movement made while moving the hand downward, roll+down. Here again we see a categorical difference between the gestures that accompany speech and gestures that are used as a primary communication system, as in the earliest cohorts of NSL.

Segmentation of manner and path has not yet been explored in homesigners in Nicaragua. However, in a recent reanalysis of the NSL data, Senghas, Özyürek, and Goldin-Meadow (2013) found that although cohort 1 signers (who are a step away from homesigners) displayed segmentation in their signs, there was evidence that they were not completely committed to segmentation and were actually experimenting with it. In addition to coding sentences that contained only sequenced (roll–down) or only conflated (roll+down) forms, Senghas and colleagues coded a third form, which they called mixed: a conflated sign that was combined with a manner and/or path sign (e.g., roll+down–roll; down-roll+down). Although all four groups (co-speech gesturers, cohort 1 signers, cohort 2 signers, cohort 3 signers) produced a few examples of the mixed form, the form was produced most often by cohort 1, for whom it was the predominant response. The mixed form is a combination of the analog and the segmented, and seems to be the favored strategy when gesture initially assumes the full burden of communication and becomes a primary language system. Segmentation thus seems to begin when language begins, although it is not yet fully developed at that point.

But are segmentation and combination in motion forms characteristic of communication systems only when those systems are shared by communities of signers, or will they arise in individuals who use the manual modality to communicate on their own? This question can best be answered by observing homesigners. Although, as mentioned earlier, no data currently available from homesigners in Nicaragua address this question, there are relevant data from Turkey not only on homesigners, but also on silent gesturers.

Özyürek, Furman, and Goldin-Meadow (2013) asked 7 Turkish child homesigners (mean age = 4 years, 2 months) to describe animated motion events, and compared their gestures to the co-speech gestures produced by the homesigners’ hearing mothers and by 18 hearing adults and 14 hearing children (mean age = 4 years, 9 months) in the same community. The most frequent response for all of the hearing speakers, adults and children alike, was a path gesture used on its own (e.g., down). The homesigners also produced path-alone gestures but, in addition, they produced many gesture strings conveying both manner and path, and these strings included not only conflated forms (e.g., roll+down) but also mixed forms (e.g., roll+down-down). Like the cohort 1 signers, the Turkish homesigners seemed to be experimenting with segmentation, pulling either manner or path out of the conflated form and expressing that component along with the conflated form.

After describing the events in speech, the 18 hearing adults were asked to describe the events again, this time using only their hands, that is, as silent gesturers. When using only gesture and no speech, the silent gesturers increased the number of gesture strings they produced containing both manner and path. They thus resembled the homesigners in what they conveyed. However, they differed from the homesigners in how they conveyed it--the silent gesturers produced more conflated forms (roll+down) than the homesigners, but fewer mixed forms (roll+down-roll). They thus appeared to be less likely to experiment with segmentation than the homesigners, relying for the most part on conflation when expressing both manner and path. Note that the conflated form is, in fact, a more transparent mapping of the actual event in that the manner of motion occurs simultaneously throughout the path.

To summarize thus far, unlike the location example discussed earlier where we found similarities in how silent gesturers and homesigners use spatial location for co-reference, we find differences between the two groups when we turn to motion (see Table 1). Homesigners take one additional step, not taken by silent gesturers, toward the segmentation found in established sign systems--they adopt the mixed motion form. Segmenting an action into its component parts allows the homesigner to highlight one component of the action without highlighting the other, a property that distinguishes language (even homesign) from pictorial or mimetic representations. When a mime depicts moving forward, he enacts the manner and that manner propels him forward along the path--manner and path are not dissociable in the actual event itself, nor in mimetically based representations of the event. The interesting result is that even though homesign could easily represent a motion event veridically (and therefore mimetically), it often does not. The fact that silent gesturers tend to exploit the fully mimetic representational format suggests that action segmentation may well require time and repeated use to emerge.

Handshape: Using finger complexity to distinguish between handling vs. object classifier predicates

The classifier system is a heavily iconic component of sign languages (Padden, 1988; Brentari & Padden, 2001; Aronoff et al., 2003; Aronoff, Meir & Sandler, 2005). Sign language classifiers are closest in function to verb classifiers in spoken languages. The handshape is an affix on the verb and can either represent properties of the object itself (object classifiers, e.g., a C-shaped hand representing a curved object) or properties of the hand as it handles the object (handling classifiers, e.g., thumb and fingers positioned as though handling a thin sheet of paper). Despite the iconicity found in the handshapes used in ASL classifier predicates, these handshapes have morphological structure: they are discrete, meaningful, productive forms that are stable across related contexts (Supalla, 1982; Emmorey & Herzig, 2003; Eccarius, 2008; although see Dudis, 2004; Liddell & Metzger, 1998; and Schembri, Jones, & Burnham, 2005, for evidence that classifiers also display analog properties reminiscent of co-speech gesture). Moreover, there are commonalities in how handshape is used in classifier predicates that cut across different sign languages. In all sign languages studied to date (Brentari & Eccarius, 2010; Eccarius, 2008), finger complexity tends to be higher in object classifier predicates than in handling classifier predicates. How did this conventionalized pattern of finger complexity in sign language arise?

One possibility is that the pattern is a natural way of gesturing about objects and how they are handled. The high finger complexity in object classifiers might reflect the fact that objects have many different, sometimes complex, shapes, which would need a range of finger configurations to be adequately represented in the hand. For example, it is plausible that a pen might be represented by one finger because it is thin and a ruler by two fingers because it is slightly wider. In contrast, the same handshape could be used to represent handling a pen or a ruler as it is moved, which could lead to a narrower range of handshapes for handling classifiers than for object classifiers.

If the conventionalized pattern of finger complexity found in sign languages reflects natural ways of gesturing about objects and how they are handled, we would expect that hearing speakers who are asked to use only their hands to communicate (i.e., silent gesturers) would display this pattern. In other words, if iconicity is the primary factor motivating the finger complexity pattern in sign languages, silent gesturers should also display the pattern. Alternatively, even if the finger complexity pattern has iconic roots, changes over historical time could have moved the system away from those roots. If so, the finger complexity pattern found in silent gesturers should look different from the pattern found in signers.

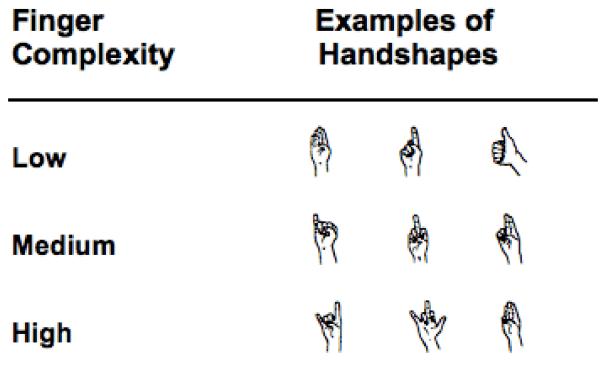

Brentari, Coppola, Mazzoni and Goldin-Meadow (2012) explored these possibilities by giving two groups of signers (3 signers of Italian Sign Language, LIS, and 3 signers of American Sign Language, ASL) and two groups of silent gesturers (3 in Italy and 3 in the United States) a set of vignettes to describe. They classified handshapes as follows: low finger complexity handshapes have either all fingers extended, or only the index finger or thumb extended; medium finger complexity handshapes have one additional elaboration (the extended finger is on the ulnar side or in the middle of the hand, or two fingers are selected); high finger complexity handshapes are all remaining handshapes (see Figure 1).

Figure 1. Examples of finger complexity in handshapes found in object and handling classifier predicates.

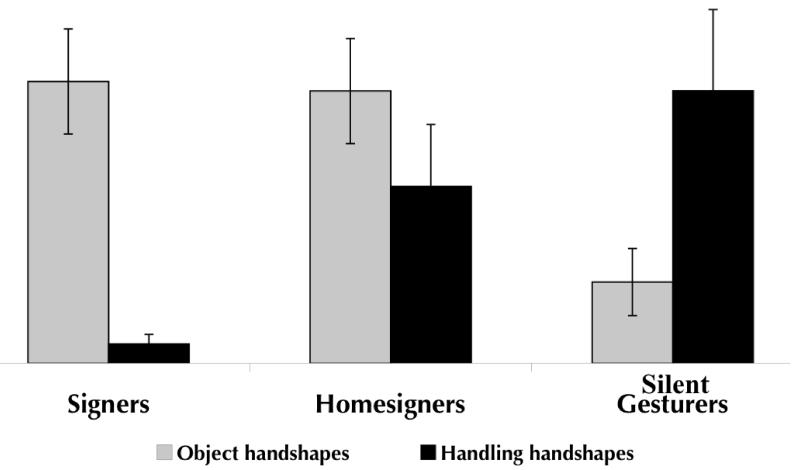

Brentari and colleagues (2012) found that the signers in both countries replicated the finger complexity pattern previously described (Brentari & Eccarius, 2010; Eccarius, 2008)—their object classifier handshapes had more finger complexity than their handling classifier handshapes. Interestingly, however, the silent gesturers in both countries showed a different pattern--the handshapes they produced to represent handling objects had more finger complexity than the handshapes they produced to represent the objects themselves (see Figure 2), perhaps because they have had extensive experience moving objects with their hands and less experience representing objects with their hands. These findings suggest that the pattern found in established sign languages is not a codified version of the pattern invented by hearing individuals on the spot.

Figure 2. Average finger complexity for signers (ASL, LIS), homesigners (Nicaraguan), and silent gesturers (American, Italian) in the handshapes of object (gray bars) and handling (black bars) classifier predicates; error bars are standard errors (reprinted from Brentari, Coppola, Mazzoni & Goldin-Meadow, 2012).

Brentari and colleagues (2012) then went on to ask whether continued use of gesture as a primary communication system results in a pattern that is more similar to the finger complexity pattern found in established sign languages or to the pattern found in silent gesturers. They asked 4 adult homesigners in Nicaragua to describe the same vignettes, and found that the homesigners pattern more like signers than like silent gesturers--their finger complexity in object classifiers is higher than that of silent gesturers (indeed as high as signers), and their finger complexity in handling classifiers is lower than that of silent gesturers (but not quite as low as signers; see Figure 2). Generally, the findings indicate two markers of the conventionalization of handshape in sign languages: (1) increasing finger complexity in object classifiers, and (2) decreasing finger complexity in handling classifiers. The homesigners achieved signer levels with respect to the first marker, but not yet with respect to the second.

As in the motion example discussed earlier where we found differences in how silent gesturers and homesigners convey manner and path, we also find differences between the two groups in how they use finger complexity in the handshapes of their predicate classifiers (see Table 1). Again, the homesigners take an additional step, not taken by silent gesturers, toward the conventional system used by signers--they display finger complexity in their object handshapes at the level found in signers. The fact that silent gesturers do not show this pattern suggests that it takes time and repeated use to emerge. There is, however, another step to go to achieve the conventional pattern found in sign languages: decreasing finger complexity in handling handshapes to the level found in signers, a step that may require a community of signers to achieve.

Other properties of language likely to differ over time-scales

We have found that three properties of predicate forms show different patterns with respect to time-scale of development; these patterns are summarized in Table 1. Silent gesturers, who are inventing their communication on the spot, are able to use location to establish co-reference, but show little evidence of segmentation in the motion forms they use to represent manner and path found in established sign languages, and show little evidence of the finger complexity pattern found in classifier predicate handshapes in established sign languages. In contrast, homesigners not only are able to use location to establish co-reference, but also have begun to experiment with segmentation when conveying manner and path, and to increase finger complexity in object handshapes in their predicate classifiers, although they have not yet decreased finger complexity in their handling handshapes. These results are, of course, tentative since the data come from a variety of sources. Future work will need to explore these patterns within the same culture in order to validate them.

In addition, future work can be designed to explore other properties of the predicate whose forms might be differentially affected by developmental time-scales. For example, Coppola, Spaepen, and Goldin-Meadow (2013) have found that adult homesigners in Nicaragua not only use lexical gestures to represent quantity (e.g., they hold up four fingers to indicate that there are four sheep in a picture), but they also use markers on their predicates that indicate more than one (akin to a plural marker on the verb, e.g., a homesigner produces a fluid movement with two “bumps” to indicate 8 frogs on a lily pad = many-be-located). These non-cardinal markers are fully integrated into the homesigners’ sign systems in that they are incorporated into their gesture sentences (e.g., the “many-be-located” gesture is part of the following sentence: frog sit many-be-located5), and those sentences follow the same structural patterns as sentences that do not contain number marking (Coppola et al., 2013). It would be interesting to know, on the one hand, if silent gesturers can invent plural markers of this sort to determine whether this linguistic property can be created on the spot; and, on the other hand, if signers use plural markers in ways that differ from the homesigners to determine whether the linguistic property changes as a function of being used by a community of signers over generations.

We can also look beyond the predicate at nominal constituents and sentence modulations. Hunsicker and Goldin-Meadow (2012) found that an American child homesigner developed complex nominal constituents in his homesign system (see also Hunsicker, 2012, who replicated this phenomenon in 9 Nicaraguan homesigners). For example, rather than use either a pointing demonstrative gesture (that) or an iconic noun gesture (bird) to refer to a bird riding a bike (in a picture book), the child produced both the demonstrative point and the iconic noun within a single sentence (that bird pedals). This multi-gesture device for referring to objects (by indicating the category of the object, bird, along with a particular member of the category, that) displays many of the criterial features of complex nominal constituents (it can be used in the same semantic and syntactic contexts as single demonstratives or single nouns and thus is a substitutable unit; see Hunsicker & Goldin-Meadow, 2012). The question is whether silent gesturers will ever combine these two types of gestures when referring to an object in a gesture sentence, and whether signers will start to use the combinations in a routine and predictable fashion.

Finally, Franklin, Giannakidou, and Goldin-Meadow (2011) found that an American homesigner was able to modulate his gesture sentences, producing a negative marker (a side-to-side headshake) at the beginnings of his sentences and a question marker (a two-handed flip gesture) at the ends of his sentences. We know that hearing speakers use both the headshake and the flip along with their speech. The question is whether silent gesturers use these forms as systematically as homesigners, and whether signers increase the syntactic complexity of the forms (e.g., do they move negation within the sentence to indicate scope?).

To summarize, we have seen that language in the manual modality may go through several steps as it develops. The first, and perhaps the biggest, step spans the gap between the manual modality when it is used along with speech (co-speech gesture) and the manual modality when it is used in place of speech (silent gesture, homesign, and sign language). Gestures produced along with speech form an integrated system with that speech (McNeill, 1992; Kendon, 1980, 2004). As part of this integrated system, co-speech gestures are frequently called on to serve multiple functions: they not only convey propositional information (Goldin-Meadow, 2003b), but also coordinate social interaction (Bavelas, Chovil, Lawrie, & Wade, 1992; Haviland, 2000) and break discourse into chunks (Kendon, 1972; McNeill, 2000). As a result, the form of a co-speech gesture reflects a variety of pressures, some of which may compete with using those gestures in the way that a silent gesturer, homesigner, or signer does.

When asked to use gesture on its own, silent gesturers do not use gesture as they typically do when they speak; rather, they transform their gestures into a system that has some linguistic properties (e.g., word order; Gershkoff-Stowe & Goldin-Meadow, 2002; Goldin-Meadow et al., 1996), but not all. This transformation may be comparable in some ways to the one homesigners perform when they take the gestures they see in the hearing world and turn them into homesign (Goldin-Meadow, 2003a,b), but it differs in other ways, likely because homesigners differ from silent gesturers on two important dimensions. First, homesigners have no accessible linguistic system that could serve as input to their homesigns; in contrast, all silent gesturers have learned, and routinely use, a spoken language (although there is no evidence that they recruit that language when fashioning their silent gestures; Goldin-Meadow et al., 2008; Hall et al., 2013a,b; Langus & Nespor, 2010; Meir et al., 2010; Gibson et al., 2013). Second, homesigners have been using their gestures for many years; in contrast, silent gesturers create their gestures on the spot. The differences we have found between the gestures generated by homesigners and those generated by silent gesturers thus point to the potential importance of these two factors (linguistic input and time) in the development of a language system.

Although homesigners introduce more linguistic properties into the gestural communication systems they develop than silent gesturers do, they too do not develop all of the properties found in natural language. Established sign languages are used by a community of signers and handed down from generation to generation. By comparing homesign to these languages, we can discover the importance of these two additional factors in the continued development of language in the manual modality. The manual modality thus gives us a unique perspective from which to observe language creation, and offers insight into factors that have shaped and continue to shape human language.

Footnotes

1. Although sign languages are the product of many centuries of use, there is weak intergenerational transmission (as a native language) because so few deaf infants are born to signing parents. There are thus additional factors that are likely to have influenced features of sign language structure (see, for example, Kyle & Woll, 1987; Rimor, Kegl, Schermer, & Lane, 1984).

2. Also relevant to this discussion but beyond the scope of this paper are secondary sign languages such as those used by aboriginal groups in Australia (e.g., Kendon, 1988), which share properties both with primary sign languages and with spoken languages.

3. The gestures hearing individuals produce when they talk to homesigners could have an impact on the resulting homesign systems. However, there is no evidence that these hearing individuals are fluent users of the homesigners’ gesture systems, even in Nicaragua (e.g., Coppola, Spaepen & Goldin-Meadow, 2013; Goldin-Meadow, Brentari, Horton, Coppola & Senghas, 2013). In this sense, homesigns differ from the shared sign languages that develop in communities with an unusually high incidence of deafness, where both hearing and deaf community members use the system (e.g., Nyst, 2012).

4. It is important to note, however, that hearing speakers do use space systematically in their co-speech gestures for some discourse functions (e.g., Levy & McNeill, 1992; Gullberg, 2006).

5. The fact that there are many frogs is conveyed only in the predicate in this sentence, and the precise number of frogs is not conveyed at all, providing further evidence that the marker is non-cardinal and, in this sense, comparable to a plural marker.

Supported by R01DC00491 from the National Institute of Deafness and Other Communicative Disorders.

References

- Aronoff M, Meir I, Padden C, Sandler W. Classifier constructions and morphology in two sign languages. In: Emmorey K, editor. Perspectives on classifier constructions in sign languages. Erlbaum Associates; Mahwah, NJ: 2003. pp. 53–84.

- Aronoff M, Meir I, Sandler W. The paradox of sign language morphology. Language. 2005;81(2):301–344. doi: 10.1353/lan.2005.0043.

- Bavelas JB, Chovil N, Lawrie DA, Wade A. Interactive gestures. Discourse Processes. 1992;15:469–489.

- Bloomfield L. Language. Holt, Rinehart and Winston; New York: 1933.

- Bosch P. Agreement and anaphora: A study of the roles of pronouns in discourse and syntax. Academic Press; London: 1983.

- Brentari D, editor. Sign languages: A Cambridge language survey. Cambridge University Press; Cambridge, UK: 2010.

- Brentari D, Coppola M, Mazzoni L, Goldin-Meadow S. When does a system become phonological? Handshape production in gesturers, signers, and homesigners. Natural Language and Linguistic Theory. 2012;30(1):1–31. doi: 10.1007/s11049-011-9145-1.

- Brentari D, Eccarius P. Handshape contrasts in sign language phonology. In: Brentari D, editor. Sign languages: A Cambridge language survey. Cambridge University Press; Cambridge, UK: 2010. pp. 284–311.

- Brentari D, Padden C. A language with multiple origins: Native and foreign vocabulary in American Sign Language. In: Brentari D, editor. Foreign vocabulary in sign language: A cross-linguistic investigation of word formation. Erlbaum Associates; Mahwah, NJ: 2001. pp. 87–119.

- Casey S. “Agreement” in gestures and signed languages: The use of directionality to indicate referents involved in actions. Unpublished doctoral dissertation. University of California; San Diego: 2003.

- Coppola M, Newport EL. Grammatical subjects in home sign: Abstract linguistic structure in adult primary gesture systems without linguistic input. Proceedings of the National Academy of Sciences. 2005;102(52):19249–19253. doi: 10.1073/pnas.0509306102.

- Coppola M, Senghas A. Deixis in an emerging sign language. In: Brentari D, editor. Sign languages: A Cambridge language survey. Cambridge University Press; Cambridge, UK: 2010. pp. 543–569.

- Coppola M, Spaepen E, Goldin-Meadow S. Communicating about number without a language model: Linguistic devices for number are robust. Cognitive Psychology. 2013 doi: 10.1016/j.cogpsych.2013.05.003. in press.

- Dudis P. Body partitioning and real-space blends. Cognitive Linguistics. 2004;15(2):223–238.

- Dufour R. The use of gestures for communicative purpose: Can gestures become grammatical? Unpublished doctoral dissertation. University of Illinois; Urbana-Champaign: 1993.

- Eccarius P. A constraint-based account of handshape contrast in sign languages. Unpublished doctoral dissertation. Purdue University; West Lafayette, IN: 2008.

- Emmorey K, Herzig M. Categorical vs. gradient properties in classifier constructions in ASL. In: Emmorey K, editor. Perspectives on Classifier Constructions in Sign Languages. Erlbaum Associates; Mahwah, NJ: 2003. pp. 221–246.

- Flaherty M, Goldin-Meadow S, Senghas A, Coppola M. Growing a spatial grammar: The emergence of verb agreement in Nicaraguan Sign Language. Poster presented at the 11th Theoretical Issues in Sign Language Research Conference; London, England. July 2013.

- Franklin A, Giannakidou A, Goldin-Meadow S. Negation, questions, and structure building in a homesign system. Cognition. 2011;118(3):398–416. doi: 10.1016/j.cognition.2010.08.017.

- Frishberg N. Arbitrariness and iconicity: Historical change in American Sign Language. Language. 1975;51:696–719.

- Fusellier-Souza I. Emergence and development of sign languages: From a semiogenetic point of view. Sign Language Studies. 2006;7(1):30–56.

- Gershkoff-Stowe L, Goldin-Meadow S. Is there a natural order for expressing semantic relations? Cognitive Psychology. 2002;45:375–412. doi: 10.1016/s0010-0285(02)00502-9.

- Gibson E, Piantadosi ST, Brink K, Bergen L, Lim E, Saxe R. A noisy-channel account of crosslinguistic word order variation. Psychological Science. 2013 doi: 10.1177/0956797612463705. in press.

- Goldin-Meadow S. The resilience of recursion: A study of a communication system developed without a conventional language model. In: Wanner E, Gleitman LR, editors. Language acquisition: The state of the art. Cambridge University Press; New York: 1982.

- Goldin-Meadow S. The resilience of language: What gesture creation in deaf children can tell us about how all children learn language. Psychology Press; New York: 2003a.

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Harvard University Press; Cambridge, MA: 2003b.

- Goldin-Meadow S, Brentari D, Horton L, Coppola M, Senghas A. Watching language grow in the manual modality: How the hand can distinguish between nouns and verbs. 2013 doi: 10.1016/j.cognition.2014.11.029. Under review.

- Goldin-Meadow S, Feldman H. The development of language-like communication without a language model. Science. 1977;197:401–403. doi: 10.1126/science.877567.

- Goldin-Meadow S, McNeill D, Singleton J. Silence is liberating: Removing the handcuffs on grammatical expression in the manual modality. Psychological Review. 1996;103:34–55. doi: 10.1037/0033-295x.103.1.34.

- Goldin-Meadow S, Mylander C. Gestural communication in deaf children: The effects and non-effects of parental input on early language development. Monographs of the Society for Research in Child Development. 1984;49(3, Serial No. 207).

- Goldin-Meadow S, Mylander C. Spontaneous sign systems created by deaf children in two cultures. Nature. 1998;391:279–281. doi: 10.1038/34646.

- Goldin-Meadow S, Mylander C, Butcher C. The resilience of combinatorial structure at the word level: Morphology in self-styled gesture systems. Cognition. 1995;56:195–262. doi: 10.1016/0010-0277(95)00662-i.

- Goldin-Meadow S, Mylander C, Franklin A. How children make language out of gesture: Morphological structure in gesture systems developed by American and Chinese deaf children. Cognitive Psychology. 2007;55:87–135. doi: 10.1016/j.cogpsych.2006.08.001.

- Goldin-Meadow S, Namboodiripad S, Mylander C, Özyürek A, Sancar B. The resilience of structure built around the predicate: Homesign gesture systems in Turkish and American deaf children. Journal of Cognition and Development. 2013 doi: 10.1080/15248372.2013.803970. in press.

- Goldin-Meadow S, So W-C, Özyürek A, Mylander C. The natural order of events: How speakers of different languages represent events nonverbally. Proceedings of the National Academy of Sciences. 2008;105(27):9163–9168. doi: 10.1073/pnas.0710060105.

- Gullberg M. Handling discourse: Gestures, reference tracking, and communication strategies in early L2. Language Learning. 2006;56:155–196.

- Hall ML, Ferreira VS, Mayberry RI. Investigating constituent order change with elicited pantomime: A functional account of SVO emergence. Cognitive Science. 2013a doi: 10.1111/cogs.12105. in press.

- Hall ML, Mayberry RI, Ferreira VS. Cognitive constraints on constituent order: Evidence from elicited pantomime. Cognition. 2013b doi: 10.1016/j.cognition.2013.05.004. in press.

- Haviland J. Pointing, gesture spaces, and mental maps. In: McNeill D, editor. Language and gesture. Cambridge University Press; New York: 2000. pp. 13–46.

- Hunsicker D. Complex nominal constituent development in Nicaraguan homesign. Unpublished doctoral dissertation. University of Chicago; 2012.

- Hunsicker D, Goldin-Meadow S. Hierarchical structure in a self-created communication system: Building nominal constituents in homesign. Language. 2012;88(4):732–763. doi: 10.1353/lan.2012.0092.

- Jackendoff R. Foundations of language: Brain, meaning, grammar, evolution. Oxford University Press; Oxford: 2002.

- Jakobson R, Halle M. Fundamentals of language. Mouton; The Hague: 1956.

- Kegl J, Iwata G. Lenguaje de Signos Nicaragüense: A pidgin sheds light on the “creole?” ASL. In: Carlson R, Delancey S, Gilden S, Payne D, Saxena A, editors. Proceedings of the Fourth Annual Meeting of the Pacific Linguistics Conference. University of Oregon, Department of Linguistics; Eugene: 1989. pp. 266–294.

- Kendon A. Some relationships between body motion and speech: An analysis of an example. In: Siegman A, Pope B, editors. Studies in dyadic communication. Pergamon Press; Elmsford, NY: 1972. pp. 177–210.

- Kendon A. Gesticulation and speech: Two aspects of the process of utterance. In: Key MR, editor. Relationship of verbal and nonverbal communication. Mouton; The Hague: 1980. pp. 207–228.

- Kendon A. Sign languages of Aboriginal Australia. Cambridge University Press; New York: 1988.

- Kendon A. Gesture: Visible action as utterance. Cambridge University Press; Cambridge: 2004.

- Kyle J, Woll B. Historical and comparative aspects in BSL. In: Kyle J, editor. Sign and School. Multilingual Matters; Clevedon, UK: 1987. pp. 12–34.

- Langus A, Nespor M. Cognitive systems struggling for word order. Cognitive Psychology. 2010;60:291–318. doi: 10.1016/j.cogpsych.2010.01.004.

- Levy ET, McNeill D. Speech, gesture, and discourse. Discourse Processes. 1992;15:277–301.

- Liddell S. THINK and BELIEVE: Sequentiality in American Sign Language. Language. 1984;60:372–392.

- Liddell SK, Metzger M. Gesture in sign language discourse. Journal of Pragmatics. 1998;30:657–697.

- Mathur G, Rathmann C. Verb agreement in sign language. In: Brentari D, editor. Sign languages: A Cambridge language survey. Cambridge University Press; Cambridge, UK: 2010. pp. 173–196.

- McNeill D. Hand and mind: What gestures reveal about thought. University of Chicago Press; Chicago: 1992.

- McNeill D. Catchments and contexts: Non-modular factors in speech and gesture production. In: McNeill D, editor. Language and gesture. Cambridge University Press; New York: 2000. pp. 312–328.

- Meir I, Lifshitz A, Ilkbasaran D, Padden C. The interaction of animacy and word order in human languages: A study of strategies in a novel communication task. In: Smith ADM, Schouwstra M, de Boer B, Smith K, editors. Proceedings of the Eighth Evolution of Language Conference. World Scientific Publishing Co.; Singapore: 2010. pp. 455–456.

- Nyst VAS. Shared sign languages. In: Pfau R, Steinbach M, Woll B, editors. Sign language: An international handbook. Mouton de Gruyter; Berlin: 2012. pp. 552–574.

- Özyürek A, Furman R, Goldin-Meadow S. Emergence of segmentation and sequencing in motion event representations without a language model: Evidence from Turkish homesign. Journal of Child Language. 2013. in press.

- Padden C. Interaction of morphology and syntax in American Sign Language. Garland Press; New York: 1988.

- Rimor M, Kegl JA, Schermer T, Lane L. Natural phonetic processes underlie historical change and register variation in American Sign Language. Sign Language Studies. 1984;43:97–119.

- Sapir E. Language. Harcourt, Brace and World, Inc.; New York: 1921.

- Schembri A, Jones C, Burnham D. Comparing action gestures and classifier verbs of motion: Evidence from Australian Sign Language, Taiwan Sign Language, and nonsigners’ gesture without speech. Journal of Deaf Studies and Deaf Education. 2005;10:272–290. doi: 10.1093/deafed/eni029.

- Senghas A. Children’s contribution to the birth of Nicaraguan Sign Language. Unpublished doctoral dissertation. MIT; Cambridge, MA: 1995.

- Senghas A, Coppola M. Children creating language: How Nicaraguan Sign Language acquired a spatial grammar. Psychological Science. 2001;12:323–328. doi: 10.1111/1467-9280.00359.

- Senghas A, Kita S, Özyürek A. Children creating core properties of language: Evidence from an emerging sign language in Nicaragua. Science. 2004;305:1779–1782. doi: 10.1126/science.1100199.

- Senghas A, Özyürek A, Goldin-Meadow S. The evolution of segmentation and sequencing: Evidence from homesign and Nicaraguan Sign Language. In: Smith ADM, Schouwstra M, de Boer B, Smith K, editors. Proceedings of the Eighth Evolution of Language Conference. World Scientific Publishing Co.; Singapore: 2013. pp. 279–289.

- Slobin DI. Language change in childhood and in history. In: Macnamara J, editor. Language learning and thought. Academic Press; New York: 1977. pp. 185–214.

- So W-C, Coppola M, Licciardello V, Goldin-Meadow S. The seeds of spatial grammar in the manual modality. Cognitive Science. 2005;29:1029–1043. doi: 10.1207/s15516709cog0000_38.

- Spaepen E, Coppola M, Spelke E, Carey S, Goldin-Meadow S. Number without a language model. Proceedings of the National Academy of Sciences of the United States of America. 2011;108(8):3163–3168. doi: 10.1073/pnas.1015975108.

- Stokoe WC. Sign language structure: An outline of the visual communication systems of the American deaf. Studies in Linguistics, Occasional Papers 8. 1960.

- Supalla T. Structure and acquisition of verbs of motion and location in American Sign Language. Unpublished doctoral dissertation. University of California; San Diego: 1982.

- Sutton-Spence R, Woll B. The Linguistics of British Sign Language: An Introduction. Cambridge University Press; Cambridge, UK: 1999.