Abstract

Technology holds promise for supporting older adults. To date, there have been few robust, systematic efforts to evaluate the psychosocial benefits of technology for older people or to identify the factors that influence the usability and uptake of technology systems. In response to these issues we developed the Personal Reminder Information and Social Management System (PRISM), a software application designed for older adults to support social connectivity, memory, knowledge about topics, leisure activities and access to resources. This trial is evaluating the impact of access to the PRISM system on outcomes such as social isolation, social support and connectivity. This paper reports on the approach used to design the PRISM system, the study design, the methodology and the baseline data for the trial. The trial is a multi-site randomized field trial. PRISM is being compared to a Binder condition in which participants received a binder that contained content similar to that found on PRISM. The sample includes 300 older adults, aged 65 to 98 years, who lived alone and were at risk for being isolated. The primary outcome measures for the trial include indices of social isolation, social support and well-being. Secondary outcome measures include indices of computer proficiency, technology uptake and attitudes towards technology. Follow-up assessments occurred at 6 and 12 months post-randomization. The results of this study will yield important information about the potential value of technology for older adults. The study also demonstrates how a user-centered iterative design approach can be incorporated into the design and evaluation of an intervention protocol.

Key Terms: Social interaction, technology, research methods and issues

Introduction

The increasing number of older people in the population, especially the “oldest old,” presents opportunities and challenges. Although the majority of older adults report good health, the likelihood of developing a disability or chronic condition, experiencing difficulties performing daily living activities, or experiencing cognitive declines increases with age, especially for those in the later decades (Federal Interagency Forum on Aging-Related Statistics, 2012). Many older adults also experience reduced social contacts and social isolation (Victor, Scambler, Bond & Bowling, 2000), which are generally associated with poorer quality of life, poorer mental and physical health status, cognitive deterioration and increased mortality (e.g., Aylaz, Artürk Ü, Erci, Ӧztürk & Aslan, 2012; Ellis & Hickie, 2001; Fratiglioni, Wang, Ericsson, Maytan, & Winblad, 2000; Steptoe, Shankar, Demakakos & Wardle, 2013). Thus, interventions aimed at improving social relationships for older adults represent an opportunity to improve quality of life and well-being.

Technology holds promise in this respect. For example, the Internet can provide access to information and services; expand educational and recreational opportunities; and support social connectivity and ties to family and friends, especially those who live far away (Czaja & Lee, 2012). Data indicate that an increasing number of older adults are using the Internet and that one of the most common reasons for use is communication and social activities (e.g., Zickuhr & Madden, 2012). In fact, numerous recent studies have examined the value of technology for older adults on outcomes related to social connectivity and other indices of well-being, and the findings have been largely positive (e.g., Sum, Matthews, Hughes, and Campell, 2008; White et al., 1999; Erickson and Johnson, 2011; Choi, Kong & Jung, 2012; Cotton, Anderson & McCullough, 2013).

However, many of these studies are limited by methodological shortcomings such as a lack of robust evaluation strategies, control groups, long-term follow-up assessments, or large, diverse samples. The goal of this multi-site trial is to gather rigorous systematic evidence about the value of technology for older adults and to identify factors that affect usability, acceptance and adoption of technology. The trial is evaluating the PRISM software application. The features of PRISM were designed to support social connectivity and engagement, memory, knowledge about topics and resources, and engagement in leisure activities. PRISM is being compared to a Binder condition in which participants received a notebook/binder that contained content similar to that found on the PRISM system. We hypothesized that the use of PRISM (e.g., email and the buddy feature; community resource feature) will result in increases in perceived social support, social connectivity and engagement, and decreases in perceived social isolation, for those in the PRISM condition. We also hypothesized that exposure to and use of the PRISM system will result in more positive attitudes towards computers, greater acceptance of technology and increased computer proficiency for those in the PRISM condition.

This paper reports on the process used to design PRISM, the trial design, recruitment activities, and characteristics of the trial participants. The trial has several unique features. PRISM was designed using an iterative user-centered design process that involved older adults. Our sample is large and diverse. We include an extensive assessment battery that will allow us to examine a wide array of issues related to the value of technology and technology uptake. The trial was conducted to adhere, as far as possible, to the CONSORT standards for randomized clinical trials (RCTs).

Methods

Overview of the Study Design

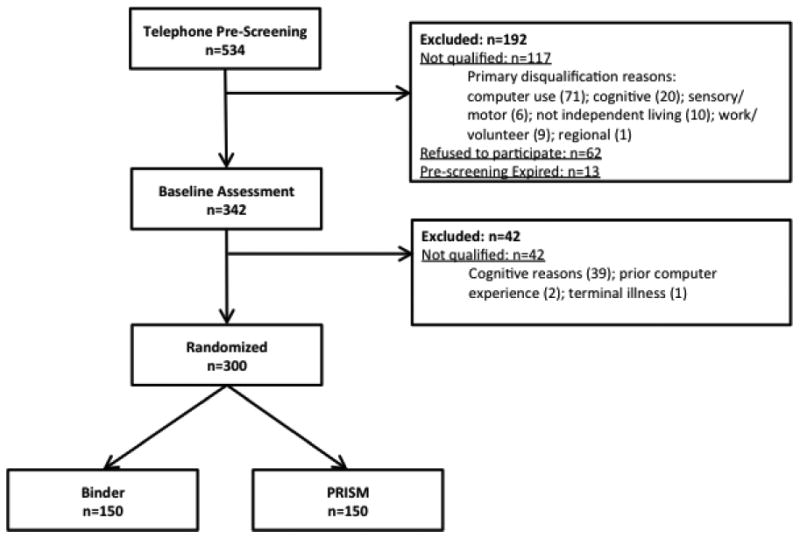

The trial is a multi-site randomized controlled trial that was conducted at three sites of the National Institute on Aging-funded Center for Research and Education on Aging and Technology Enhancement (CREATE): University of Miami Miller School of Medicine (UMMSM) (Miami), Florida State University (Tallahassee) and Georgia Institute of Technology (Atlanta). Following a telephone screening and baseline assessment, eligible participants were randomized into the PRISM condition or the Binder condition (Figure 1). Those assigned to the PRISM condition received a computer equipped with the PRISM software and those assigned to the Binder condition received a binder. The duration of the system evaluation period was 12 months. Follow-up assessments occurred at 6 and 12 months post-randomization. Participants also completed a brief telephone assessment at 18 months. The trial was highly manualized, and standardized protocols for recruitment, assessment, implementation and data transfer were followed at all three sites. The Institutional Review Boards at the site institutions approved the study protocol. The UMMSM serves as the coordinating site for the study.

Figure 1. Consort Diagram.

Eligibility Criteria

The eligibility criteria were aimed at identifying older adults who were at risk for social isolation. Participants were required to be 65 years of age or older; live alone in an independent community setting; not be employed or volunteering more than 5 hrs./week; and not spend more than 10 hrs./week at a senior center or formal organization. We chose these criteria because living alone, employment and social connections are important correlates of social isolation (Hawthorne, 2006). Participants were also required to speak English; have at least 20/60 vision with or without correction; be able to read at the 6th grade level; have had minimal computer and Internet use in the past three months; and plan to remain in the area in the same living arrangements for the trial duration. Participants were ineligible if they were blind or deaf, had a terminal illness or severe motor impairment, or were cognitively impaired (Mungas-corrected score of < 26 on the Mini-Mental State Examination (MMSE); Folstein, Folstein & McHugh, 1975). All participants provided written informed consent.
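The criteria above can be read as a simple screening rule. The following is a minimal sketch of that rule, not the screening instrument used in the trial: the `Screening` record, its field names and the wording of the returned reasons are hypothetical, and only the thresholds (age 65, 5 and 10 hrs./week, corrected MMSE of 26) come from the text.

```python
from dataclasses import dataclass, replace


@dataclass
class Screening:
    """Hypothetical record of one person's screening answers."""
    age_years: int
    lives_alone: bool
    work_or_volunteer_hours_per_week: float
    senior_center_hours_per_week: float
    speaks_english: bool
    vision_at_least_20_60: bool
    reads_at_6th_grade_level: bool
    minimal_recent_computer_use: bool
    plans_to_stay: bool
    blind_or_deaf: bool
    terminal_illness: bool
    severe_motor_impairment: bool
    corrected_mmse: int


def ineligibility_reasons(s: Screening) -> list[str]:
    """Return every criterion the person fails; an empty list means eligible."""
    checks = [
        (s.age_years < 65, "younger than 65"),
        (not s.lives_alone, "does not live alone"),
        (s.work_or_volunteer_hours_per_week > 5, "works or volunteers more than 5 hrs./week"),
        (s.senior_center_hours_per_week > 10, "more than 10 hrs./week at a senior center"),
        (not s.speaks_english, "does not speak English"),
        (not s.vision_at_least_20_60, "vision worse than 20/60"),
        (not s.reads_at_6th_grade_level, "reads below 6th grade level"),
        (not s.minimal_recent_computer_use, "more than minimal recent computer use"),
        (not s.plans_to_stay, "does not plan to stay for the trial duration"),
        (s.blind_or_deaf, "blind or deaf"),
        (s.terminal_illness, "terminal illness"),
        (s.severe_motor_impairment, "severe motor impairment"),
        (s.corrected_mmse < 26, "corrected MMSE below 26"),
    ]
    return [reason for failed, reason in checks if failed]


# Hypothetical example: an eligible person, then the same person with a lower MMSE.
candidate = Screening(
    age_years=72, lives_alone=True,
    work_or_volunteer_hours_per_week=2, senior_center_hours_per_week=4,
    speaks_english=True, vision_at_least_20_60=True,
    reads_at_6th_grade_level=True, minimal_recent_computer_use=True,
    plans_to_stay=True, blind_or_deaf=False, terminal_illness=False,
    severe_motor_impairment=False, corrected_mmse=28,
)
print(ineligibility_reasons(candidate))
print(ineligibility_reasons(replace(candidate, corrected_mmse=25)))
```

Returning the list of failed criteria, rather than a single yes/no, keeps the reason for exclusion available for reporting.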

The PRISM Condition (Computer Condition)

Overview

Participants randomized to the PRISM condition received a Lenovo “Mini Desktop” PC with a keyboard, a mouse (or a trackball for those unable to control a mouse), a 19” LCD monitor and the PRISM software application. They were also provided with a printer. The participants' computers were linked to a secure server at the University of Miami, and real-time data were collected on system usage. Participants were unable to add other applications or delete PRISM. Internet access was provided through a wireless card. Participants were able to keep the computer after the trial ended.

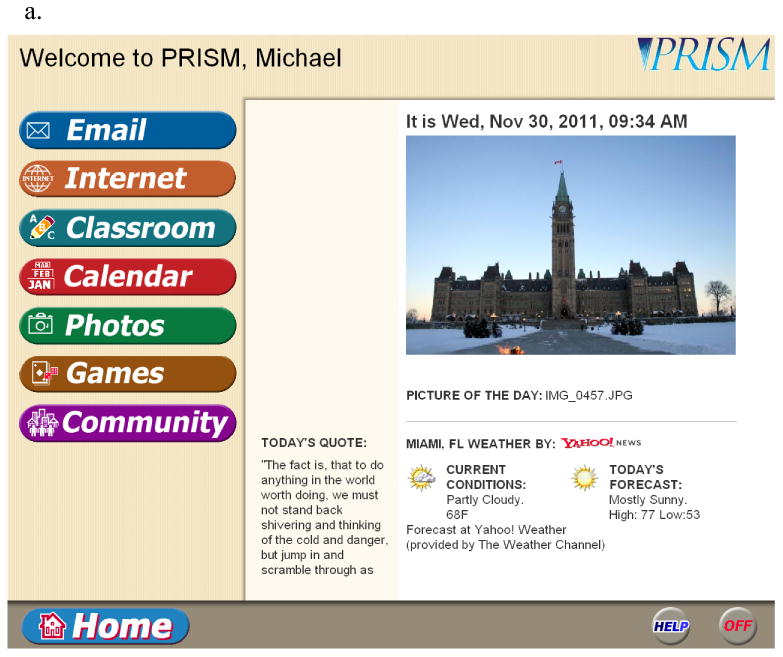

The PRISM software application was designed to support social connectivity, memory, knowledge about topics and resources, and resource access among older adults. The software included: Internet access (with a menu of vetted links to sites for older people such as NIHSeniorHealth.Gov); an annotated resource guide; a classroom feature; a calendar feature; a photo feature; email; games; and an online help feature (Figure 2a).

Figure 2.

a. PRISM Home Page

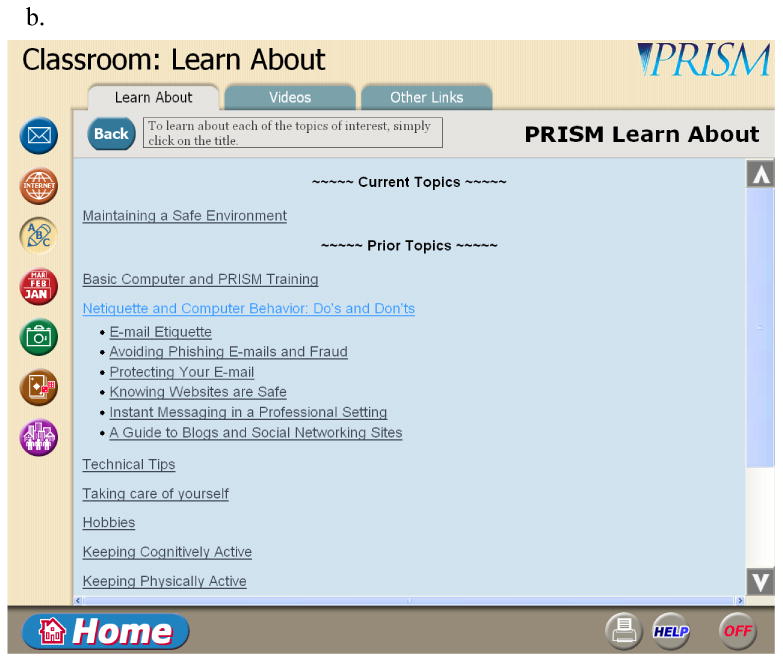

b. PRISM Classroom Feature

The classroom feature was dynamic and contained scripted information, vetted videos, and vetted links to other sites on a broad array of topics (e.g., cognitive health, traveling tips, nutrition). New material was placed in the classroom every month and remained in the “classroom library.” The classroom feature also contained links to basic computer and PRISM training, mouse practice exercises and “computer etiquette.” The email feature enabled participants to send emails to family, friends and PRISM staff, and also had a “buddy component” intended to foster social connectivity. Specifically, upon enrollment participants assigned to the PRISM condition were asked if they wished to be a “PRISM buddy.” If they agreed, their email address was placed in the “PRISM Buddy” tab of the email feature along with a few key words describing their hobbies/interests. The intent was to stimulate email exchanges and foster new relationships among the participants. The photo feature was preloaded with an album created by the research team. Participants were also able to create their own albums and share photos. We thought that the sharing of photos would also foster social connectivity. The community resource feature contained information about local and national resources of potential value to seniors (e.g., Area Agency on Aging, Transportation Services, National Institute on Aging) as well as local community events that might be of interest. The community event information was updated monthly and also placed in the calendar. The calendar feature was pre-populated with information on holidays and, as noted, community events (which were updated). Participants could also add information to their calendar. The calendar feature also had a reminder feature, and participants could choose the schedule for reminders (e.g., daily, weekly, monthly). This was intended to support prospective memory, or remembering to perform specific actions in the future (e.g., doctor's appointment, birthday). The calendar also had a notebook feature that enabled participants to make lists of items they wished to remember, such as a “to do” list. Due to software constraints the games feature was restricted to single-player games but included games such as Solitaire and checkers. An online help feature was also available on the system that provided general help and help for use of each feature.

Features were accessed with a single click on the feature name on the sidebar menu and the main categories within a feature were arranged using tabs that appear at the top of the screen (Figure 2b). The homepage contained the date and time; the weather; a picture and quote of the day. The research team preselected the pictures and quotes. Users accessed PRISM by simply turning on the computer; there were no login requirements.

A program was developed to monitor system use that parsed usage on a daily basis. Overall usage was monitored as well as use of the specific features and tabs within features (e.g., the buddy aspect of the email feature). If a participant had not used the system for more than 7 days, an automatic e-mail message was sent to a site coordinator, who then contacted the participant to determine the reason for nonuse (e.g., technical difficulties). The frequency, duration, and nature of all contacts with the technical help staff were recorded.
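The nonuse rule described above can be illustrated with a short sketch. This is not the monitoring program used in the trial: the function name, the participant IDs and the dates are hypothetical, and the only detail taken from the text is the threshold of more than 7 days without use.

```python
from datetime import date

# Threshold from the protocol: flag when nonuse exceeds 7 days.
NONUSE_THRESHOLD_DAYS = 7


def participants_to_flag(last_use_by_participant: dict[str, date], today: date) -> list[str]:
    """Return IDs of participants whose last recorded use was more than 7 days ago."""
    flagged = []
    for participant_id, last_use in sorted(last_use_by_participant.items()):
        days_since_use = (today - last_use).days
        if days_since_use > NONUSE_THRESHOLD_DAYS:
            flagged.append(participant_id)
    return flagged


# Hypothetical daily run: IDs and dates are made up for illustration.
last_use = {
    "P001": date(2012, 3, 14),  # used yesterday
    "P002": date(2012, 3, 7),   # 8 days ago, so flagged
    "P003": date(2012, 3, 8),   # exactly 7 days ago, so not yet flagged
}
for participant_id in participants_to_flag(last_use, today=date(2012, 3, 15)):
    print(f"Notify site coordinator: {participant_id} has not used the system for more than 7 days")
```

In a daily job of this kind, the flagged IDs would feed whatever notification mechanism the site uses; the e-mail step itself is omitted here.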

Design of the PRISM Software

The PRISM software application was built on the Big Screen Live platform, a software-as-a-service application designed to provide easy access to Internet services such as email, photo sharing, news, web browsing, games, and simplified online shopping (Carousel Information Management Solutions Inc.). We used an iterative user-centered design approach in which older adults were actively involved throughout the design process via a survey study, focus groups and pilot testing.

The survey study was conducted at the Atlanta and Tallahassee sites and included 321 participants (57% were female) who ranged in age from 60-93 (M = 74.62; SD = 5.98), the majority of whom (88%) were active users of computers. We chose to conduct the survey with older adults who were active computer users in order to gather information about the importance of various activities (e.g., socializing) to quality of life; the value of having access to computers and the Internet; and features and information topics that would be of potential value to older adults. The information gathered helped determine our selection of features for PRISM; topics for the classroom feature and the resource guide; and website favorites. For example, the respondents indicated that having opportunities for social interaction such as email and sharing photos was extremely useful and important. They also indicated websites that they found useful such as the websites for: Medicare, the Social Security Administration and Area Agency on Aging. When asked about topics for educational opportunities most respondents indicated the importance of learning about basic computer skills, tips on finance, investments, and home repair. These responses helped guide our selection of topics for the classroom feature.

We also conducted two initial focus groups at the Miami site; a total of 14 adults (5 males and 9 females) aged 60-85 years (M = 74.00; SD = 8.85) participated in the two groups. The participants were introduced to the concept of the PRISM system and shown an early mockup via a PowerPoint presentation. They were then asked to comment on the potential value of PRISM; the planned system features and content of the features; and the interface. Data from the focus groups were also used to guide the initial design of the system. The participants also commented on potential topics of interest for the classroom feature; important resources; the screen graphics; the choice of icons; and the functionality of the calendar feature. For example, the participants indicated they would like a notebook and a reminder feature added to the calendar feature. They also stated that it would be useful to have the date and time added to the home page. With respect to the classroom, suggested topics included exercise and nutrition, travel and health issues.

The initial design of the system and chosen features were also based on: 1) theories regarding successful aging (e.g., Activity Theory (Rowe & Kahn, 1998)); 2) the existing literature regarding age changes in abilities (e.g., prospective memory loss (Backman, Small & Wahlin, 2001)); 3) guidelines regarding interface design and training for older adults (Fisk, Rogers, Charness, Czaja & Sharit, 2009); 4) the human-computer interaction literature; 5) recent findings regarding patterns of Internet use among older adults; 6) data from our Core battery regarding technology usage patterns (e.g., Czaja et al., 2006); and 7) existing models of technology adoption (e.g., TAM (Bagozzi, 2007)) and technology diffusion (e.g., “epidemic models” (Geroski, 2000)).

Following analyses of the survey and focus group data and review of information from the other sources listed above, an initial working prototype of the system was developed and reviewed by the research team for adherence to existing usability criteria (Fisk et al., 2009). We used standard usability assessment tools such as heuristic analysis and a cognitive walkthrough to identify potential user difficulties (Nielsen, 2000). These analyses resulted in a number of changes to the system, such as: increasing the contrast ratio of the icons and labels on the screen buttons; rewording some of the onscreen instructions to make them consistent and less technical; including two types of help features (feature specific and general); changing the functionality of the buttons to increase consistency; simplifying the method for uploading photos; simplifying the page banners and the footers; and adding tabs to all of the features for consistency.

The refined prototype was then pilot tested by a sample of five older adults at each site (5 males; 10 females) who ranged in age from 66-87 (M = 77; SD = 8.14). The majority had experience with computers (n=12) and the Internet (n=10). The participants were trained on the system, asked to use the various features and asked to complete an evaluation/usability questionnaire. The majority of participants indicated that it was easy to learn how to use PRISM (n=13), that they were satisfied with PRISM as a whole (n=14) and that it was enjoyable to use (n=12). The participants also provided important feedback on needed modifications to the system, such as making the help system and the calendar easier to navigate, and on the need for more training on basic window operations and use of the mouse.

Based on the results of the pilot testing the system was further refined and pilot tested at all three sites a second time with an additional sample of 12 older adults (4 per site) (aged 67-87; M = 75.08; SD = 6.22). The training protocol was also pilot tested. Feedback from this round of pilot testing resulted in further refinements to the training protocol and the system interface. For example, further refinements were made to the online help system, and a PRISM primer and mouse practice exercises were added to the classroom feature. We also enhanced our training on use of the printer.

Binder Condition

Participants assigned to this condition received a notebook that contained content similar to that within the PRISM software. The binder contained a calendar; an annotated resource guide (basic information about the resources and contact information); games (e.g., word games, playing cards and a card game rule book); information about community groups; and information sheets on the same topics included in the “classroom feature” of the PRISM software. This material also contained references to other sources of material on the topic and was updated monthly via mail to parallel the dynamic nature of the classroom feature in the PRISM condition. Participants were also given the opportunity to be a “Buddy,” which in this case meant sharing their phone number and interests with other participants assigned to this condition. They received the same number of planned contacts as those in the PRISM condition. We chose this control condition because it allowed us to evaluate the medium of information delivery (computer vs. paper) and the potential benefits of computers, such as easier access to a wide variety of information via the Internet, the ability to communicate asynchronously, the ease of exchanging photos, and the ability to save information (e.g., communications, notes).

Recruitment

Various methods were used for participant recruitment that included: advertisement in local media and newsletters, attendance at church and community meetings, interactions with agencies serving older adults (e.g., Meals on Wheels, Elderly, Disability and Veterans Services Bureau), posting flyers in low income senior housing buildings and public libraries, mailing lists and participant registries. Analysis of the recruitment data indicated that a variety of recruitment activities were required to locate and recruit the study participants. Overall, the most fruitful recruitment activities included: community outreach activities such as speaking at churches or senior housing locations or interacting with agencies serving older adults (32%), followed by placing of flyers and brochures (29%), and referrals from family or word of mouth (19%). Media ads in newspapers and radio/TV accounted for 15% and participant registries for 3% of the recruitment of participants, respectively. As shown in Table 3, there were some differences in sources of recruitment by ethnicity/culture and by age. There were no differences in recruitment source by gender (p > .05).

Table 3. Sources of Recruitment by Ethnicity/Culture and Age.

| Source of recruitment | Race/Ethnicity***: White Caucasian | Race/Ethnicity***: African American | Race/Ethnicity***: Hispanic | Age Groups**: 65-80 yrs | Age Groups**: 80+ yrs | Total Overall |
|---|---|---|---|---|---|---|
| Community Outreach | 23% | 34% | 71% | 32% | 31% | 32% |
| Flyers/Brochures | 35% | 23% | 7% | 25% | 37% | 29% |
| Word of mouth | 17% | 25% | 13% | 22% | 13% | 19% |
| Newspaper/Paid ad | 18% | 12% | 9% | 15% | 15% | 15% |
| Participant Registry | 3% | 4% | 0% | 4% | 1% | 3% |
| Other/Unknown | 3% | 1% | 0% | 2% | 3% | 2% |

Note: *** (χ2 = 62.15, df = 15, p < .001); ** (χ2 = 15.69, df = 5, p < .01)
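For readers unfamiliar with the test reported in the table note, the following is a minimal sketch of a chi-square test of independence on a contingency table of counts. The counts below are hypothetical and are not the trial data (the table reports percentages only), so the printed statistic is not expected to match the published values. The second number reported with each statistic is presumably the degrees of freedom; for six recruitment sources and two age groups, (6 - 1) × (2 - 1) = 5, which agrees with the value reported for the age comparison.

```python
def chi_square_independence(table: list[list[float]]) -> tuple[float, int]:
    """Return the Pearson chi-square statistic and degrees of freedom for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, degrees_of_freedom


# Hypothetical counts: rows are six recruitment sources, columns are two age groups.
hypothetical_counts = [
    [60, 35],
    [47, 42],
    [41, 15],
    [28, 17],
    [8, 1],
    [4, 3],
]
statistic, df = chi_square_independence(hypothetical_counts)
print(f"chi-square = {statistic:.2f}, df = {df}")
```

The p-value would then be read from the chi-square distribution with the computed degrees of freedom, a step left out here to keep the sketch free of external dependencies.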

Protocol and Contact Schedule

Interested participants contacted the study coordinator at each site and completed a telephone screening that assessed eligibility status (e.g., age, prior computer/Internet experience, living arrangements). A home baseline assessment was then scheduled for those who were eligible and remained interested in participation. During the baseline assessment participants provided informed consent and completed the measurement battery (Table 1), which was administered using a standardized protocol by an assessor who had been trained and certified by the Miami site. Participants were then randomly assigned to study condition.

Table 1. Description of the Assessment Battery.

| Category | Name of Measure | Construct Assessed | Description |
|---|---|---|---|
| Screening Measures | Telephone Pre-screening | Telephone screening | Includes questions related to education, culture/ethnicity, vision/hearing impairments, experience with computers, living arrangements, employment status, and recruitment sources. |
| | Wechsler Memory Scale III (WMS-III) (Wechsler, 1997) | Memory screening | This is a phone screening. Participants are asked to recall elements from brief stories. A higher score reflects better memory. |
| Potential Moderating Variables/Sample Description | Demographic Information (Czaja et al., 2006) | Demographic characteristics and self-ratings of health | Includes questions related to age, education, occupational status, income, culture/ethnicity, living arrangements and perceptions of health and health functioning. |
| | Life Space Questionnaire (Stalvey, Owsley, Sloane & Ball, 1999) | Mobility and Activity Patterns | Participants answer 9 questions related to their mobility during the past 3 days. Each item is rated “yes or no” and a higher score reflects greater mobility. |
| | Personality Inventory (TIPI) (Gosling, Rentfrow & Swann, 2003) | Personality Traits | 10 items that assess personality traits. A higher score indicates higher agreement. Extraversion Cronbach's α = .259, agreeableness Cronbach's α = .195, conscientiousness Cronbach's α = .207, emotional stability Cronbach's α = .483, openness to experience Cronbach's α = .253. |
| | Technology Experience Questionnaire (Czaja et al., 2006) | Experience with Technology, Computers and the Internet | Participants answer a series of questions that assess use of technology and use/breadth of experience with computer technology and the Internet. |
| | Wide Range Achievement Test (WRAT)-R (Wilkinson, 1993) | General Reading Level | Individuals are given a list of 42 words and 15 letters and asked to read the words. If they make 5 consecutive errors they read the letters. Total score ranges from 0 to 57. |
| Potential Moderating Variables: Cognitive Ability Measures | Animal Fluency (Rosen, 1980) | Verbal Fluency | Individuals are asked to verbally name as many animals as possible in 60 seconds. A total score is computed based on the number of animals named. |
| | Choice Reaction Time (CREATE for PRISM trial) | Choice Reaction Time | A computer-based measure in which participants respond as quickly as possible to a stimulus that appears on the screen, for 40 trials. |
| | Digit Symbol Substitution (Wechsler, 1981) | Processing speed/psychomotor function | Individuals are asked to recall digit-symbol pairings for nine digit-symbol pairs. The score is the number of correct symbols within 90 sec. |
| | Letter Sets (Ekstrom, French, Harman & Derman, 1976) | Induction/Reasoning | A participant has to identify the dissimilar set of letters among five sets of letters, each set containing four letters. A higher score reflects higher reasoning abilities. |
| | Mini Mental State Exam (Folstein, Folstein & McHugh, 1975) | Cognitive Status | Participants are asked a series of questions that assess their cognitive status. |
| | Shipley Institute of Living Scale (Zachary, 1986) | Vocabulary/Crystallized Intelligence | Participants circle the word that has the same or nearly the same meaning as a referent word, for 40 words. A higher score indicates greater vocabulary/crystallized intelligence. |
| | Simple Reaction Time (CREATE for this trial) | Simple Reaction Time | Individuals are asked to use their dominant hand to press the keyboard when a stimulus appears. There are a total of 40 trials. |
| | Stroop Color Name Test (McCabe, Robertson & Smith, 2005) | Working Memory | A computer-based measure that requires individuals to name the color of a series of 2-6 congruent and incongruent color-words and recall the color order. |
| | Test of Functional Health Literacy in Adults (STOFHLA) (Baker et al., 1999) | Functional Health Literacy | Rates reading and computational skills. Scores range from 0 to 36: 0-16 inadequate health literacy; 17-22 marginal health literacy; and 23-36 adequate health literacy. |
| | Trails A & B (Reitan, 1958) | Attention and General Executive Functioning | In Part A, individuals are asked to connect consecutively numbered circles. In Part B, individuals are asked to connect alternating numbered and lettered circles. |
| | Perception of Memory Functioning (Gilewski, Zelinski & Schaie, 1990) | Everyday Memory Functioning | 64 items that assess perception of memory functioning including: frequency of forgetting (Cronbach's α = .941); seriousness of forgetting (Cronbach's α = .949); retrospective functioning (Cronbach's α = .880) and use of mnemonics (Cronbach's α = .827). A higher score indicates fewer problems. |
| Primary Outcome Measures | Quality of Life (Logsdon, Gibbons, McCurry & Teri, 2002) | Perceived Quality of Life | A 13-item scale where respondents rate aspects of their life from poor to excellent. A higher score indicates better quality of life (Cronbach's α = .855). |
| | Friendship Scale (Hawthorne, 2006) | Social Isolation | Six items that assess social isolation. Responses are rated from almost always to not at all. A higher score indicates less isolation (Cronbach's α = .747). |
| | Lubben Social Network Index (Lubben, 1988) | Social Network Size | A 12-item scale where individuals are asked to indicate the size of their social network. A higher score indicates a higher level of social support. |
| | Interpersonal Support Evaluation List (Cohen, Mermelstein, Kamarck & Hoberman, 1985) | Social Support | 12 items that assess overall social support and 3 dimensions of social support: appraisal, belonging and tangible. A higher score indicates more support (Cronbach's αs for Overall Support, Appraisal, Belonging, and Tangible Support are .847, .679, .714, and .708 respectively). |
| | Loneliness Scale (Russell, Peplau & Ferguson, 1978) | Perceived Loneliness | 20 items that assess perceived loneliness. Score ranges from 20 to 80. A higher score indicates greater loneliness (Cronbach's α = .912). |
| | Perceived Vulnerability Scale (Myall, Hine, Marks & Thorsteinsson, 2009) | Perceived Vulnerability | 22 items that assess perceived vulnerability to aging-related outcomes. A higher score indicates greater perceived vulnerability (Cronbach's α = .947). |
| Potential Mediating Variables | Center for Epidemiologic Studies-Depression Scale (Radloff, 1977) | Depressive Symptoms | 20 items that assess level of depressive symptoms. Scores range from 0 to 60, with higher scores indicating more depressive symptoms (Cronbach's α = .872). |
| | Formal Care and Services Utilization (Wisniewski et al., 2003) | Use of Support Services | 13 items that ask about the type (e.g., homemaker, home health aide) and frequency of services received during the past month. A higher score reflects greater service use. |
| | Functional Health and Well-being (SF-36) (Ware & Sherbourne, 1992) | Self-rating of functional health and well-being | A 36-item questionnaire that assesses 8 dimensions of health and well-being. Cronbach's αs for Physical Functioning, Role Limitations, Role Limitations due to Emotional Problems, Energy/Fatigue, Emotional Well-Being, Social Functioning, Pain and General Health are .906, .886, .784, .835, .805, .819, .838, and .755 respectively. |
| | Life Engagement Test (Scheier et al., 2006) | Engagement in Life Activities | 6 items that assess involvement in life activities. A higher score indicates greater involvement (Cronbach's α = .767). |
| Secondary Outcome Measures | Computer Attitudes (Jay & Willis, 1992) | Attitudes about computers (comfort, efficacy and interest) | A 15-item scale that assesses 3 dimensions of computer attitudes (comfort, efficacy, and interest). Scores range from 5 to 15. A higher score indicates higher comfort, efficacy and interest (Cronbach's αs for Comfort, Efficacy, and Interest are .814, .799, and .770 respectively). |
| | Computer Proficiency (Boot et al., 2013) | Computer Proficiency | 33 items that assess proficiency in 6 domains related to computer skills. Participants rate their ability on a 5-point scale (1 = never tried; 5 = very easily). A higher score indicates higher proficiency. Basic computer skills (Cronbach's α = .882); Printing skills (Cronbach's α = .900); Using communication tools (Cronbach's α = .923); Internet skills (Cronbach's α = .891); Calendaring (Cronbach's α = .927); and Using entertainment (Cronbach's α = .780). |
| | Technology Acceptance Questionnaire (CREATE for this trial) | Acceptance of Technology | A 12-item questionnaire that assesses the usability and utility of PRISM or the Binder. |

Note. We did not compute Cronbach's Alpha for scales or measures that had an accuracy score or a count such as use of services.

Participants in both conditions received three additional home visits for training. For those assigned to PRISM, the training consisted of instruction and practice on basic computer, mouse and windowing skills, followed by instruction and practice on each of the PRISM features. They were also provided with a user manual and a brief, easy-to-use “help” card. A usability expert initially vetted both of these documents. In addition, participants were able to contact a technical help line at the University of Miami. Participants in the Binder condition received training and engaged in practice on use of the binder materials (e.g., completing the calendar, playing a card game). All participants received a “check-in” call one week following the third home visit and at 3 months and 9 months. They then completed the follow-up battery at 6 and 12 months and a brief telephone interview at 18 months. Participants in the PRISM condition were compensated $25 for each assessment. Those in the Binder condition were compensated $25 each for the baseline and 6-month assessments and $100 for the 12-month assessment, as they did not receive a computer. They were also provided with an opportunity to receive basic computer training following the 12-month assessment.

To ensure blinding, a certified assessor blinded to treatment condition administered the primary outcome measures via a telephone interview. The same assessor who was blinded to treatment condition mailed the secondary outcome measures (e.g., computer attitudes) and other instruments that were self-administered (e.g., demographics). A certified assessor also administered those parts of the remainder of the follow-up battery (non-primary outcome measures) that needed to be completed in the home such as the cognitive ability measures. Participants were also interviewed at 6 and 12 months regarding their perceptions of PRISM or the Binder and the perceived impact on their day-to-day activities.

Measures

Table 1 provides a description of the measures and, where appropriate, Cronbach's alpha for each measure based on the participants' baseline values. As shown, participants completed a background questionnaire that assessed basic demographic information and self-ratings of health, and measures of computer attitudes, technology/computer/Internet experience, general technology acceptance and computer proficiency. In addition, they completed a life space questionnaire that assessed their mobility and activity patterns and a brief personality inventory. Also, the Short Test of Functional Health Literacy in Adults (STOFHLA; Baker, Williams, Parker, Gazmararian & Nurss, 1999) was administered as a measure of health literacy and the Wide Range Achievement Test (WRAT; Wilkinson, 1993) as a measure of general reading ability. The demographic measures will serve as potential moderating variables in our analyses.

Measures of several cognitive abilities were collected at baseline and at the 12-month follow-up. The measures were chosen based on evidence indicating that these abilities are related to adoption of technology (Czaja et al., 2006) and computer-based task performance (e.g., Czaja, Sharit, Hernandez, Nair & Loewenstein, 2010). Participants also completed a questionnaire that assessed various aspects of their everyday memory functioning (Gilewski, Zelinski & Schaie, 1990). These measures were included to examine whether cognitive abilities predicted use of PRISM or the Binder.

Primary and Secondary Outcome Measures

The primary outcome measures for the trial are changes, at 6 and 12 months, in degree of social isolation, social support and overall wellbeing (see Table 1). Our secondary outcome measures include indices of computer attitudes and computer proficiency.

Our broad array of outcome measures will also allow us to examine the influence of potential moderator (e.g., age, health status) and mediator (e.g., changes in emotional wellbeing and satisfaction with various aspects of life; see Table 1) variables on the primary outcome measures. These will include both single measures and multivariate outcomes using Principal Components Analyses and Linear Structural Equation approaches, as described in the Data Analysis section. Multivariate composites of cognitive variables will also be used as moderators in our analyses. Changes at 6 and 12 months in attitudes towards technology, technology acceptance and adoption, and computer proficiency will be examined as secondary measures.

PRISM Related Measures

Participants in both conditions completed an evaluation questionnaire at both 6 and 12 months, which assessed satisfaction with PRISM or the Binder. They also completed a brief semi-structured interview regarding their overall impressions of how PRISM or the Binder had affected their everyday activities. Real-time data on system usage patterns were collected for participants randomized to the PRISM condition. In addition, a log was maintained of technical help requests.

Eighteen-Month Interview

A brief telephone interview was conducted at 18 months that included questions related to technology adoption, attitudes towards technology and continued use of PRISM or the Binder.

Treatment Fidelity

The trial was highly manualized. A detailed manual of operations was developed for all study protocols and used at all three sites, and the training and implementation protocols were scripted. A detailed manual was also developed for all data transfer and data management activities. All sites applied equivalent, standardized protocols for screening, tracking, training, and contacting participants. In addition, all assessors and interventionists were trained and certified by the Miami site, and trial activities at all three sites were discussed at team meetings. There were monthly conference calls with the project coordinators and the data management team to address issues related to data collection, data transfer or the PRISM technology. All data are maintained on a secure server at the coordinating site.

Data and Safety Monitoring

An independent data safety monitoring board (DSMB) was convened for the trial and met twice yearly (in person and via conference call) or as needed. The DSMB provided study oversight and monitored participant safety. The DSMB included five members with expertise in gerontology, geriatrics, clinical trials, biostatistics and behavioral interventions.

Sample

As shown in Figure 1, across the three sites a total of 534 individuals received the telephone pre-screening. Of these, 192 people were excluded due to ineligibility (n = 117), lack of interest in participating (n = 62) or because they could not be reached to schedule a baseline assessment (n = 13). A total of 342 people received the baseline assessment and, of these, 42 were excluded. Across the prescreening and baseline assessment, the primary reasons for ineligibility were failure to meet the cognitive criteria (31%), significant computer/Internet experience (30%), work or volunteer activities (8%), or living arrangements (8%). A total of 300 participants were enrolled in the trial across the three sites, 150 in each of the two conditions. The sample is primarily female (78%) and ranges in age from 64 to 98 years (M = 76.15, SD = 7.4). It should be noted that one participant turned 65 in the time window established for scheduling of the baseline assessment following telephone screening. The participants are also ethnically diverse (54% White), and most are of lower socio-economic status and do not have a college degree (78%). There were no differences in age, gender, education, race/ethnicity or income level between those randomized to the PRISM and Binder conditions. There were also no differences between the groups in measures of cognitive abilities, baseline ratings of computer attitudes or self-ratings of functional health and well-being (all ps > .05).
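The participant flow described above can be checked with a few lines of arithmetic. This is only a sketch using the counts reported in the text; the variable names are illustrative.

```python
# Counts taken from the participant flow reported in the text
prescreened = 534
excluded_at_prescreen = {
    "ineligible": 117,
    "not interested": 62,
    "could not be reached": 13,
}
excluded_at_baseline = 42

baseline_assessed = prescreened - sum(excluded_at_prescreen.values())
enrolled = baseline_assessed - excluded_at_baseline
per_condition = enrolled // 2  # equal allocation to PRISM and Binder

print(baseline_assessed, enrolled, per_condition)  # prints "342 300 150"
```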

However, as shown in Table 2, those randomized to the Binder condition had, on average, higher CES-D scores [F(1,298) = -2.72, p = .007], and reported more social isolation [F(1,298) = 2.21, p < .03], lower quality of life [F(1,298) = 4.13, p < .04] and less life engagement [F(1,298) = 2.39, p < .02].

Table 2. Baseline Demographics and Measures of Support, Quality of Life and Depression for Participants in the PRISM Study.

| Measure | Total Group | PRISM | Binder | df | F-value, Mann-Whitney U or χ² |
|---|---|---|---|---|---|
| Age (range 64-98) | 76.15 (SD = 7.4); Median = 76.0 | 76.97 (SD = 7.3); Median = 76.5 | 75.34 (SD = 7.4); Median = 74.0 | 1,298 | 3.69 |
| Gender | 78.0% female | 79.3% female | 76.7% female | 1 | .18 |
| Education: HS or less | 39.0% | 43.3% | 34.7% | 3 | 5.67 |
| Education: Some College | 38.7% | 35.3% | 42.0% | | |
| Education: College Grad | 13.0% | 10.0% | 16.0% | | |
| Education: Post Graduate | 9.3% | 11.3% | 7.3% | | |
| Ethnicity: Hispanic | 9.0% | 8.0% | 10.0% | 3 | .60 |
| Ethnicity: White Non-Hispanic | 54.0% | 53.3% | 54.7% | | |
| Ethnicity: Black Non-Hispanic | 32.7% | 34.0% | 31.3% | | |
| Ethnicity: Non-Hispanic Other | 4.3% | 4.7% | 4.0% | | |
| Household Income: Less than $30,000 | 86.6% | 84.7% | 88.6% | 2 | 1.41 |
| Household Income: $30,000-$59,999 | 11.9% | 13.1% | 10.6% | | |
| Household Income: $60,000+ | 1.5% | 2.2% | .8% | | |
| +CES-D Score (range 0-52) | 11.11 (SD = 9.1); Median = 9.0 | 9.70 (SD = 8.3); Median = 8.0 | 12.52 (SD = 9.5); Median = 12.0 | 1,298 | -2.72** |
| +Social Isolation (range 4-24) | 19.24 (SD = 3.9); Median = 20.0 | 19.72 (SD = 3.7); Median = 21.0 | 18.75 (SD = 4.1); Median = 20.0 | 1,298 | 2.21* |
| +Life Engagement (range 11-30) | 24.81 (SD = 4.1); Median = 25.0 | 25.30 (SD = 4.1); Median = 26.0 | 24.29 (SD = 4.1); Median = 25.0 | 1,298 | 2.39* |
| Level of Support (range 6-36) | 25.11 (SD = 6.9); Median = 24.0 | 25.33 (SD = 6.9); Median = 25.0 | 24.90 (SD = 6.9); Median = 24.0 | 1,298 | .30 |
| Loneliness Scale (range 20-73) | 39.52 (SD = 10.0); Median = 38.0 | 38.81 (SD = 9.7); Median = 38.0 | 40.23 (SD = 10.3); Median = 39.0 | 1,297 | 1.51 |
| Quality of Life (range 21-52) | 38.35 (SD = 5.8); Median = 38.0 | 39.02 (SD = 5.6); Median = 39.0 | 37.67 (SD = 6.0); Median = 37.0 | 1,298 | 4.13* |
| Life Space (range 21-52) | 5.71 (SD = 1.4); Median = 5.0 | 5.79 (SD = 1.4); Median = 6.0 | 5.64 (SD = 1.4); Median = 5.0 | 1,296 | .91 |

Notes: 1) + Because of violations of normality assumptions, non-parametric distributional tests were performed using the Mann-Whitney U; 2) Education was not available for two subjects and these values were obtained from the screening data.

** p ≤ .01; * p ≤ .05
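For readers unfamiliar with the Mann-Whitney U test mentioned in the table notes, the following is a minimal sketch of the rank-based computation with midranks for ties and a normal approximation. It is not the software used for the trial analyses, the function name and scores are hypothetical, and the normal approximation is rough for samples as small as the ones in this example.

```python
from math import sqrt
from statistics import NormalDist

def mann_whitney_u(x, y):
    """Return (U for x, z, two-sided p) using midranks and a normal approximation."""
    pooled = sorted((v, g) for g, vals in enumerate((x, y)) for v in vals)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    rank_sum_x = 0.0
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2  # average of the 1-based ranks i+1 .. j
        t = j - i                  # size of this group of tied values
        tie_term += t ** 3 - t
        rank_sum_x += midrank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Variance of U with the usual correction for ties
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u_x - mean_u) / sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u_x, z, p

# Hypothetical scores for two small groups (not trial data)
group_a = [4, 7, 9, 12, 15, 8]
group_b = [10, 14, 16, 12, 20, 18]
u, z, p = mann_whitney_u(group_a, group_b)
print(f"U = {u}, z = {z:.2f}, p = {p:.3f}")
```

A negative z here means the first group tends to have lower ranks than the second.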

Data Analysis

All primary and secondary outcomes of the study will be tested using an intention-to-treat approach with a two-tailed significance level of alpha = .05. The analyses will involve a series of repeated measures mixed model ANOVAs (3 time points and 2 treatment groups), with adjustment for baseline values of the outcome variables, to compare the two conditions. To assess the effect of treatment, the primary effect of interest is the Group X Time interaction term. We projected a 20% attrition rate at the 12-month follow-up. Under this assumption, an effect size (f) for the Group X Time interaction as small as .15, which is in the small to medium range, would yield statistical power exceeding .85, even allowing for only a modest correlation between the repeated measures.
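To make the Group X Time interaction concrete, the sketch below computes the descriptive quantity that the interaction term tests: whether mean change over time differs between conditions. It is not the mixed model ANOVA itself, it uses complete cases only rather than an intention-to-treat model, and the function name and scores are hypothetical.

```python
def mean_change_by_condition(records, start, end):
    """Mean change from `start` to `end` for each condition.

    records: list of (condition, {month: score}) pairs, one per participant.
    """
    changes = {}
    for condition, scores in records:
        if start in scores and end in scores:  # complete cases only
            changes.setdefault(condition, []).append(scores[end] - scores[start])
    return {c: sum(v) / len(v) for c, v in changes.items()}

# Hypothetical outcome scores keyed by month (0 = baseline); not trial data
records = [
    ("PRISM", {0: 10, 6: 12, 12: 14}),
    ("PRISM", {0: 12, 6: 13, 12: 14}),
    ("Binder", {0: 11, 6: 11, 12: 12}),
    ("Binder", {0: 9, 6: 10, 12: 10}),
]
change = mean_change_by_condition(records, start=0, end=12)
# A nonzero difference in change corresponds to a Group X Time interaction
contrast = change["PRISM"] - change["Binder"]
print(change, contrast)
```

In the planned analyses this contrast would be estimated and tested within the mixed model, which also uses the 6-month assessment and participants with incomplete follow-up.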

We recognize that the study is being conducted at three sites and that, by definition, participants are nested within regions. This may influence the standard errors of the estimates in longitudinal analytic models. Although low intra-class correlation coefficients would support the assumption of independence, we will still account for any possible contribution of site to the model using random effects approaches. We will also consider latent growth curve approaches (McArdle & Prindle, 2008; Roesch et al., 2010) that explicitly segment time into two epochs to evaluate intervention group differences in change from pretest to posttest and from posttest to follow-up. We will use full-information maximum likelihood or Expectation Maximization to allow inclusion of all available data from each case. Both latent mediator and moderator analyses can be performed using bias-corrected bootstrap confidence intervals on the product terms, the most powerful test of mediation (MacKinnon, Lockwood, & Williams, 2004).

Any baseline differences between the two conditions will be used to equate the groups at baseline and/or will be employed as covariates in multi-level modeling.
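The bias-corrected bootstrap on a product term can be sketched as follows. This is a simplified illustration with one observed mediator and ordinary least squares, not the latent variable models planned for the trial; the function names, simulated data and default settings are all assumptions made for the example.

```python
import random
from statistics import NormalDist

def indirect_effect(x, m, y):
    """a*b for X -> M -> Y: a from M ~ X, b from Y ~ X + M (least squares)."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a * b

def bc_bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate and bias-corrected bootstrap CI for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimate = indirect_effect(x, m, y)
    boots = []
    while len(boots) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            boots.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # degenerate resample, e.g. only one condition drawn
    boots.sort()
    nd = NormalDist()
    # Bias-correction constant: share of bootstrap estimates below the estimate
    prop = sum(value < estimate for value in boots) / n_boot
    prop = min(max(prop, 1 / n_boot), 1 - 1 / n_boot)
    z0 = nd.inv_cdf(prop)

    def quantile(p):
        return boots[min(n_boot - 1, max(0, int(p * n_boot)))]

    lower = quantile(nd.cdf(2 * z0 + nd.inv_cdf(alpha / 2)))
    upper = quantile(nd.cdf(2 * z0 + nd.inv_cdf(1 - alpha / 2)))
    return estimate, lower, upper

# Simulated (not trial) data: x = condition (0/1), m = mediator, y = outcome
sim = random.Random(42)
x = [0] * 50 + [1] * 50
m = [1.0 * xi + sim.gauss(0, 1) for xi in x]
y = [0.8 * mi + sim.gauss(0, 1) for mi in m]
estimate, lower, upper = bc_bootstrap_ci(x, m, y)
print(f"indirect effect = {estimate:.2f}, 95% BC CI = [{lower:.2f}, {upper:.2f}]")
```

Mediation is supported when the interval excludes zero. The bias correction shifts the percentile cut points to account for the skew that a product of two coefficients typically shows.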

Discussion and Project Challenges

The PRISM trial is designed to examine the potential value of a simple-to-use software application for older adults at risk for social isolation. The application is designed to support social connectivity, memory, knowledge about topics and resources, and resource access, and the trial examines its effect on outcomes related to well-being and social connectivity. We are also gathering data on factors that influence usability, technology acceptance and use. Findings regarding the benefits of technology for social connectivity outcomes among older adults have been largely positive and suggest that access to computers and the Internet may improve opportunities for social interaction and social connectivity. However, many of the studies that have been done have involved small samples or have lacked control groups or long-term follow-up evaluations. This is one of the first studies to examine these issues with a diverse older adult population that includes people in the older cohorts and those of lower socio-economic status. Further, the technology system was designed using a user-centered design approach that included input from older adults.

During the design and implementation of this trial the study team encountered a few issues that reinforced the challenges associated with conducting randomized clinical trials, especially with older adults, and especially with technology-based interventions. As in every trial a number of decisions must be made that influence participant recruitment, feasibility of protocol implementation, the project timeline, and ultimately the validity and reliability of the outcomes.

One major challenge was participant recruitment. Because we were targeting participants “at risk for social isolation,” venues such as senior centers, which are frequent sources of participants in other trials involving older adults, were not fruitful sources of recruitment in this trial. Instead, strategies that involved purchasing mailing lists and forming relationships with programs and agencies that serve vulnerable populations, such as Meals on Wheels, were more successful. We also faced recruitment challenges because our criteria required limited computer and Internet experience, and technology use among older adults is increasing. We also learned that the success of our recruitment strategies varied across the three sites, which reinforces the notion that recruitment strategies have to be innovative, adaptive and tailored to the study context and target populations. In addition, having strong relationships with community partners and leaders was critical to our recruitment success. Because our consent form explained both conditions, including the fact that those randomized to the Binder condition would not receive a computer, we offered basic computer training to those randomized to the Binder condition at the end of the trial to support retention in that condition.

Our cognitive criteria also posed challenges with respect to participant eligibility given the targeted age range of our sample, because the likelihood of cognitive decline and the incidence of cognitive impairments increase with advancing age (Craik & Salthouse, 2011). However, although many surveys on technology use aggregate individuals 65 and above into one group, there is reason to believe that older members of this group are more likely to have the low levels of computer experience desired for the intervention. For this reason, we did not set an upper age limit on participation (our oldest participant was 98 years old).

Other challenges to the project included limitations in Internet access speed in the neighborhoods of some of our participants and variability in home environments, which posed challenges for implementation of the PRISM system. For example, we encountered crowded living conditions, pets, and clutter, which sometimes made it difficult to set up the system within the participants' homes. Cellular signal strength was inconsistent in some areas, meaning that in some cases we had to provide an alternative Internet connection (e.g., DSL). We also encountered variability in basic skills among our participants, so although we used a standardized training protocol, we needed to build adaptability into our training. For example, some of our participants had never used a keyboard and thus needed more training on the fundamentals. We also had to adapt our training protocol early on to include more practice on use of the mouse as an input device. Finally, there are always challenges associated with ensuring standardization across research sites.

We also encountered a number of challenges related to the technical design of the software and learned some valuable lessons for future trials. One important lesson was the value of a user-centered design approach. We received extremely valuable feedback from our focus groups and pilot testing of the system, which informed our system design decisions and clearly enhanced the usability of the PRISM system. The importance of adhering to existing guidelines for training older adults (Czaja & Sharit, 2012) was also reinforced. As noted, we had to expand our training on basic computer, window and mouse skills before advancing to training on actual use of the PRISM system. We also learned the importance of tracking reasons for participant non-eligibility and analyzing these data throughout the course of the trial. For example, we learned early on that some people were not interested in participating in the trial because they were not guaranteed receipt of a computer and the PRISM system. Thus, we decided to offer participants randomized to the Binder condition training on basic computer and Internet skills following trial completion.

The coordination between the technical support staff and study interventionists/assessors was also critical to the successful implementation of the trial. We had to clearly allocate functions among these team members and establish clear procedural and communication protocols. In general, the development of a Manual of Operations and standardized protocols for data management was also essential as was providing centralized training for our study personnel.

Despite these challenges, the outcomes of the trial will yield important information on the benefits of technology for vulnerable older adult populations. It will also yield important data on features of technology that are useful for seniors as well as factors influencing technology acceptance and use. Further, given our extensive battery we will be able to examine how these outcomes vary according to participant characteristics such as gender, age, and cognitive abilities. The trial is also yielding information on challenges associated with conducting these types of trials with older adults, which can help inform the design of future studies in this area. Finally, the trial provides an illustrative example of how a user-centered design approach can be incorporated into the design of intervention protocols. This approach can be generalized to a broad range of interventions especially those that involve a technology application.

As with many intervention trials, the study also has some limitations. For example, our criterion for cognitive status was rather stringent, which may limit the generalizability of the findings, as social isolation is linked to cognitive impairments. In fact, failing to meet the cognitive criterion was one of the primary reasons participants were excluded from the trial. In addition, a percentage of older adults still do not have Internet access because of factors such as cost or limited knowledge about the benefits of the Internet. Given that many services and resources important to older adults are available online, it would seem that providing Internet access at reduced or no cost would be important for vulnerable populations. Finally, even though our sample was large for technology-based intervention studies aimed at older adults, PRISM needs to be evaluated with larger samples across more geographic regions and with adults of other cultural/ethnic groups in different living contexts.

Acknowledgments

The authors would like to thank David L. Loewenstein and Shih Hua Fu for their help with the analyses of the baseline data. We would also like to thank Mario Hernandez, Tracy Mitzner, Kelly Arredondo and Jaci Bartley for their help with recruitment, and the members of the CREATE Scientific Advisory Board and our Data Safety Monitoring Board for their assistance with the design of the trial. We would also like to thank Carousel Information Management Solutions Inc. for providing us with access to the Big Screen Live system and for technical support.

Funding: The National Institute on Aging/National Institutes of Health supported this work (NIA 3 PO1 AG017211, Project CREATE III – Center for Research and Education on Aging and Technology Enhancement).

Footnotes

Conflicts of Interest: The authors report no conflicts of interest.

Trial Registration Number: NCT01497613


References

- Administration on Aging. A profile of older Americans 2012. 2012. Retrieved from http://www.aoa.gov/AoAroot/Aging_Statistics/Profile/2012/docs/2012profile.pdf

- Aylaz R, Artürk Ü, Erci B, Öztürk H, Aslan H. Relationship between depression and loneliness in elderly and examination of influential factors. Archives of Gerontology and Geriatrics. 2012;55:548–554. doi: 10.1016/j.archger.2012.03.006.

- Backman L, Small BJ, Wahlin A. Aging and memory: Cognitive and biological perspective. In: Birren JE, Schaie KW, editors. Handbook of the psychology of aging. 5th ed. San Diego, CA: Academic Press; 2001. pp. 349–377.

- Bagozzi RP. The legacy of the technology acceptance model and a proposal for a paradigm shift. Journal of the Association of Information Systems. 2007;8:244–254.

- Baker DW, Williams MV, Parker RM, Gazmararian JA, Nurss J. Development of a brief test to measure functional health literacy. Patient Education and Counseling. 1999;38:33–42. doi: 10.1016/s0738-3991(98)00116-5.

- Boot WR, Charness N, Czaja SJ, Sharit J, Rogers WA, Mitzner T, Nair S. The Computer Proficiency Questionnaire (CPQ): Assessing low and high computer proficient seniors. The Gerontologist. 2013. doi: 10.1093/geron/gnt117. Epub ahead of print.

- Choi M, Kong S, Jung D. Computer and Internet interventions for loneliness and depression in older adults: A meta-analysis. Healthcare Informatics Research. 2012;18:191–198. doi: 10.4258/hir.2012.18.3.191.

- Cohen S, Mermelstein R, Kamarack T, Hoberman H. Measuring the functional components of social support. In: Sarason, editor. Social Support: Theory, Research, and Application. Hague, Netherlands: Martinus Nijhoff; 1985. pp. 73–94.

- Cotton SR, Anderson WA, McCullough BM. Impact of Internet use on loneliness and contact with others among older adults: Cross-sectional analysis. Journal of Medical Internet Research. 2013;15. doi: 10.2196/jmir.2306.

- Craik FIM, Salthouse TA. The Handbook of Aging and Cognition. 3rd ed. New York: Psychology Press; 2011.

- Curran GM, Mukherjee S, Allee E, Owen RR. A process for developing an implementation intervention: QUERI Series. Implementation Science. 2008;3:17. doi: 10.1186/1748-5908-3-17.

- Czaja SJ, Boot WR, Charness N, Rogers WA, Sharit J, Fisk AD, Nair SN. The Personalized Reminder Information and Social Management system (PRISM) Trial: Challenges and Lessons Learned. Clinical Trials. doi: 10.1016/j.cct.2014.11.004. submitted.

- Czaja SJ, Charness N, Fisk AD, Hertzog C, Nair SN, Rogers WA, Sharit J. Factors Predicting the Use of Technology: Findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psychology and Aging. 2006;21:333–352. doi: 10.1037/0882-7974.21.2.333.

- Czaja SJ, Lee CC. Older Adults and Information Technology: Opportunities and Challenges. In: Jacko JA, editor. The Human-Computer Interaction Handbook. 3rd ed. Boca Raton: CRC Press; 2012. pp. 825–840.

- Czaja SJ, Sharit J. Aging and skill acquisition: Designing training programs for older adults. Boca Raton, FL: CRC Press; 2012.

- Czaja SJ, Sharit J, Hernandez M, Nair N, Loewenstein D. Variability among older adults in Internet information seeking performance. Gerontechnology. 2010;9:46–55.

- Dickinson A, Gregor P. Computer use has no demonstrated impact on the well-being of older adults. International Journal of Human-Computer Studies. 2006;64(8):744–753.

- Ekstrom RB, French JW, Harman HH, Derman D. Manual for kit of factor-referenced cognitive tests. Princeton, NJ: Educational Testing Service; 1976.

- Ellis P, Hickie I. What causes mental illness. In: Bloch S, Singh B, editors. Foundations of Clinical Psychiatry. Melbourne: Melbourne University Press; 2001. pp. 43–62.

- Erickson J, Johnson GM. Internet use and psychological wellness during late adulthood. Canadian Journal on Aging. 2011;30(2):197–209. doi: 10.1353/cja.2011.0029.

- Fisk AD, Rogers WA, Charness N, Czaja SJ, Sharit J. Designing for Older Adults: Principles and Creative Human Factors Approach. 2nd ed. Boca Raton, FL: CRC Press; 2009.

- Federal Interagency Forum on Aging-Related Statistics. Older Americans 2012: Key Indicators of Well-Being. Washington, DC: U.S. Government Printing Office; 2012.

- Folstein MF, Folstein SE, McHugh PR. Mini-mental state: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Fratiglioni L, Wang H, Ericsson K, Maytan M, Winblad B. Influence of social network on occurrence of dementia: A community-based longitudinal study. Lancet. 2000;355:1315–1319. doi: 10.1016/S0140-6736(00)02113-9.

- Geroski PA. Models of technology diffusion. Research Policy. 2000;29:603–625.

- Gilewski MJ, Zelinski EM, Schaie KW. The memory functioning questionnaire for assessment of memory complaints in adulthood and old age. Psychology and Aging. 1990;5(4):482–490. doi: 10.1037//0882-7974.5.4.482.

- Gosling SD, Rentfrow PJ, Swann WB Jr. A very brief measure of the Big-Five personality domains. Journal of Research in Personality. 2003;37:504–528.

- Hawthorne G. Measuring social isolation in older adults: Development and initial validation of the friendship scale. Social Indicators Research. 2006;77:521–548.

- Holt-Lunstad J, Smith TB, Layton JB. Social Relationships and Mortality Risk: A Meta-analytic Review. PLoS Med. 2012;7(7):e1000316. doi: 10.1371/journal.pmed.1000316.

- Jay GM, Willis SL. Influence of direct computer experience on older adults' attitude toward computers. Journal of Gerontology: Psychological Sciences. 1992;47:P250–P257. doi: 10.1093/geronj/47.4.p250.

- Logsdon RG, Gibbons LE, McCurry SM, Teri L. Assessing quality of life in older adults with cognitive impairment. Psychosomatic Medicine. 2002;64:510–519. doi: 10.1097/00006842-200205000-00016.

- Lubben JE. Assessing social networks among elderly populations. Family and Community Health. 1988;11:42–52.

- MacKinnon DP, Lockwood CM, Williams J. Confidence limits for the indirect effect: Distribution of the product and resampling methods. Multivariate Behavioral Research. 2004;39:99–128. doi: 10.1207/s15327906mbr3901_4.

- McArdle JJ, Prindle JJ. A latent change score analysis of a randomized clinical trial in reasoning training. Psychology and Aging. 2008;23(4):702–719. doi: 10.1037/a0014349.

- McCabe DP, Robertson CL, Smith AD. Age differences in stroop interference in working memory. Journal of Clinical and Experimental Neuropsychology. 2005;27(5):633–644. doi: 10.1080/13803390490919218.

- Myall BR, Hine DW, Marks ADG, Thorsteinsson EB. Assessing individual differences in perceived vulnerability in older adults. Personality and Individual Differences. 2009;46:8–13.

- Nielsen J. Designing web usability: The practice of simplicity. Indianapolis: New Riders Publishing; 2000.

- Radloff L. The CES-D scale: a self-report depression scale for research in the general population. Applied Psychological Measurement. 1977;1:385–401.

- Reitan RM. Validity of the trail making test as an indicator of organic brain damage. Perceptual and Motor Skills. 1958;8:271–276.

- Roesch SC, Aldridge AA, Stocking SN, Villodas F, Leung Q, Bartley CE, et al. Multilevel factor analysis and structural equation modeling of daily diary coping data: Modeling trait and state variation. Multivariate Behavioral Research. 2010;45(5):767–789. doi: 10.1080/00273171.2010.519276.

- Rosen WG. Verbal fluency in aging and dementia. Journal of Clinical Neurology. 1980;2:135–146.

- Rowe JW, Kahn RL. Successful aging. New York: Random House; 1998.

- Russell D, Peplau LA, Ferguson ML. Developing a measure of loneliness. Journal of Personality Assessment. 1978:290–294. doi: 10.1207/s15327752jpa4203_11.

- Salthouse TA. Major issues in cognitive aging. Oxford: Oxford Univ Press; 2010.

- Scheier MF, Wrosch C, Baum A, Cohen S, Matire LM, Matthews KA, Zdaniuk B. The life engagement test: assessing purpose in life. Journal of Behavioral Medicine. 2006;29:291–298. doi: 10.1007/s10865-005-9044-1.

- Shea S, Weinstock RS, Starren J, Teresi J, Palmas W, Field L, Latigua RA. A randomized trial comparing telemedicine case management with usual care in older, ethnically diverse, medically underserved patients with diabetes mellitus. Journal of the American Medical Informatics Association. 13:40–51. doi: 10.1197/jamia.M1917.

- Sleger K, van Boxtel MPJ, Jolles J. Effects of computer training and internet usage on the well-being and quality of life of older adults: A randomized, controlled study. Journal of Gerontology: Psychological Sciences. 2008;63B:P176–P184. doi: 10.1093/geronb/63.3.p176.

- Stalvey BT, Owsley C, Sloane ME, Ball K. The life space questionnaire: a measure of the extent of the mobility of older adults. Journal of Applied Gerontology. 1999;18(4):460–478.

- Steptoe A, Shankar A, Demakakos P, Wardle J. Social isolation, loneliness, and all-cause mortality in older men and women. PNAS Early Edition. 2013. doi: 10.1073/pnas.1219686110.

- Sum S, Mathews RM, Hughes I, Campbell A. Internet use and loneliness in older adults. CyberPsychology & Behavior. 2008;11(2):208–211. doi: 10.1089/cpb.2007.0010.

- Victor C, Scambler S, Bond J, Bowling A. Being alone in later life: loneliness, social isolation and living alone. Reviews in Clinical Gerontology. 2000;10:407–41.

- Vimarlund V, Olve N. Economic analyses for ICT in elderly healthcare: questions and challenges. Health Informatics Journal. 2005;11(4):309–321.

- Ware JE, Sherbourne CD. The MOS 36-item short-form health survey (SF-36). Conceptual framework and item selection. Medical Care. 1992;30(6):473–483.

- White H, McConnell E, Clipp E, Bynum L, Teague C, Navas L, Craven S, Halbrecht H. Surfing the net in later life: A review of the literature and pilot study of computer use and quality of life. Journal of Applied Gerontology. 1999;18:358–378.

- Wilkinson G. Wide Range Achievement Test - Third Revision. Wilmington, DE: Jastak Associates; 1993.

- Wechsler D. Wechsler Memory Scale 3rd Edition (WMS-III). New York: The Psychological Corporation; 1997.

- Wechsler D. Manual for Wechsler Memory Scale-Revised. New York: The Psychological Corp; 1981.

- Wisniewski S, Belle SH, Coon D, Marcus SM, Ory M, Burgio L, Schulz R. The Resources for Enhancing Alzheimer's Caregiver Health (REACH): Project design and baseline characteristics. Psychology and Aging. 2003;18:375–384. doi: 10.1037/0882-7974.18.3.375.

- Zachary RA. Shipley Institute of Living Scale: Revised Manual. Los Angeles: Western Psychological Services; 1986.

- Zickuhr K, Madden M. Older adults and Internet use. 2012. Retrieved from http://www.pewinternet.org/~/media//Files/Reports/2012/PIP_Older_adults_and_internet_use.pdf