Abstract

Although infants’ cognitions about the world must be influenced by experience, little research has directly assessed the relation between everyday experience and infants’ visual cognition in the laboratory. Eye-tracking procedures were used to measure 4-month-old infants’ eye-movements as they visually investigated a series of images. Infants with pet experience (N = 27) directed a greater proportion of their looking at the most informative region of animal stimuli—the head—than did infants without such experience (N = 21); the two groups of infants did not differ in their scanning of images of human faces or vehicles. Thus, infants’ visual cognitions are influenced by everyday experience, and theories of cognitive development in infancy must account for the effect of experience on development.

Very young infants can remember human faces (Quinn, Yahr, Kuhn, Slater, & Pascalis, 2002) and recognize adult-defined categories such as dog versus cat (Quinn, Eimas, & Rosenkrantz, 1993). A critical question is how much such responding reflects on-line processing versus existing knowledge (Bornstein & Mash, 2010; Haith, 1998; Madole & Oakes, 1999; Mandler, 1999). Clearly, responding to abstract or novel objects and pictures (Younger, 1985; Younger & Cohen, 1986) reflects knowledge infants acquired in the task. When infants are presented with realistic representations of “real” categories such as dog (Eimas & Quinn, 1994; Oakes, Coppage, & Dingel, 1997; Quinn et al., 1993), however, their responding is ambiguous; it might reflect solely features and commonalities discovered during the course of the task, memory of past encounters with similar items, or some combination.

Despite this inherent ambiguity, strong claims about infants’ existing knowledge have been made from patterns of their looking (Carey, 2000; Spelke, 1998). Claims that infants’ performance reflects knowledge they possessed before coming to the lab are problematic given that the familiarization and habituation tasks used to assess such knowledge and abilities are learning tasks (Colombo & Mitchell, 2009). Moreover, it is becoming increasingly clear that even when infants respond to real, familiar categories and concepts in such tasks, their responding is influenced by the statistical information presented during learning (Oakes et al., 1997; Oakes & Spalding, 1997; Quinn et al., 1993). However, the familiarity of the stimuli or type of stimuli does influence infants’ performance in such tasks (Kovack-Lesh, Horst, & Oakes, 2008; Quinn et al., 2008; Quinn et al., 2002). Therefore, a complex picture is emerging in which infants’ performance in such tasks is understood as on-line learning that is shaped by learning that occurs over longer time scales, such as over days, weeks, or months.

For example, Kovack-Lesh et al. (2008; 2012) observed that 4-month-old infants’ learning about images of cats in the laboratory was influenced both by their previous experience with pets and by the on-line strategy they adopted for looking at the items during familiarization (i.e., how much they looked back-and-forth between two simultaneously presented images). Because infants’ strategies for deploying visual attention (in terms of how they glanced back and forth between the two available images) and their past experience together were related to how infants categorized and remembered individual images of cats, it is possible that experience actually induces changes in strategies for how infants visually inspect images related to that experience. For example, Hurley et al. (2010) found that 6-month-old infants with and without pets distributed their looking differently on a series of trials with images of cats or dogs. Specifically, they differed in the duration of their looking to the images and the number of glances between two simultaneously presented stimuli. Together, this previous work shows relations between infants’ animal experience and their categorization of and memory for animal images as well as relations between infants’ animal experience and the global strategies they use when deploying visual attention. The present investigation took the important next step to identify whether and how experience shapes the strategies infants use when visually investigating new items by comparing how infants with and without pets directed their attention while scanning cat and dog images.

We chose this domain because research has revealed that infants have a somewhat precocious ability to learn about, remember, and categorize cat and dog images. For example, 3- to 4-month-old infants are sensitive to the categorical distinction between dogs and cats (Oakes & Ribar, 2005; Quinn et al., 1993). They recognize this distinction even when they are shown only silhouettes of the stimuli (Quinn & Eimas, 1996). Moreover, there is a body of literature indicating the most informative regions of such stimuli for 3- to 4-month-old infants. Specifically at this age infants use information about the head, but not the rest of the body, for categorizing cats and dogs (Quinn & Eimas, 1996; Spencer, Quinn, Johnson, & Karmiloff-Smith, 1997). By 6 months infants (even without pet experience) exhibit a bias to look at the head regions of cats and dogs (Quinn, Doran, Reiss, & Hoffman, 2009). Systematic work by Mareschal, French, and Quinn (French, Mareschal, Mermillod, & Quinn, 2004; Mareschal, French, & Quinn, 2000; Mareschal & Quinn, 2001) examining the distribution of features between dogs and cats revealed that most of the differences in how features were distributed between dogs and cats were in the face and head regions (e.g., spacing of ears). This observation confirmed that features in this region were more diagnostic than other features in differentiating dogs from cats. Thus, we can ask whether experience with pets is related to how infants’ visual inspection of animals is distributed to these diagnostic regions.

There is evidence that infants’ experience shapes some aspects of processing of visual images in infancy. By 3 to 4 months infants’ preferences for particular faces are influenced by the gender of their primary caregiver (Quinn et al., 2002) and the race of the faces around them (Bar-Haim, Ziv, Lamy, & Hodes, 2006; Kelly et al., 2005). By 9 months infants’ face discrimination is selective for human faces from familiar races (Kelly, Liu, et al., 2007; Pascalis, de Haan, & Nelson, 2002; Pascalis & Kelly, 2009; Scott, Shannon, & Nelson, 2006). Nelson (2001) suggested that infants’ daily experience with particular types of faces tunes their face processing to those familiar face types. In support of this general notion, enhancing infants’ experience with monkey faces can induce them to maintain superior abilities to recognize individual monkey faces (Pascalis et al., 2005; Scott & Monesson, 2009).

A key question is whether such effects are face-specific. If such effects reflect mechanisms that are specific to face processing, we may not see the effects of daily experience on infants’ learning of and preferences for other types of stimuli. Moreover, given that faces are extremely common in infants’ everyday experience, it would not be surprising if more casual daily encounters with objects other than faces did not have the same effect on infants’ learning. Remarkably, however, Bornstein and Mash (2010) found that infants’ attention to completely novel objects in a laboratory task was influenced by a few minutes of daily exposure to pictures of those objects over a period of a few weeks. In addition, Kovack-Lesh et al. (2008; 2012) found a connection between exposure to pets at home and 4-month-old infants’ memory for and categorization of images of cats in the laboratory. Thus, daily home exposure to objects other than human faces does appear to contribute to infants’ visual cognition in the laboratory.

We examined eye-movements during infants’ visual inspection of images to understand how experience is related to differences in the strategies they adopt to process stimuli. Because eye-movement measures reveal how people look at informative regions of images, they can be thought of as reflecting active, on-line processing (Aslin & McMurray, 2004; Karatekin, 2007). Our goal was to assess infants’ visual inspection of the stimuli under study. Differences in how infants visually inspect stimuli may result in differences in learning. For example, Johnson, Slemmer, and Amso (2004) found that young infants’ scanning of the rod portions of a classic rod-and-box display predicted their learning that the rod was connected behind the occluding box. Amso and colleagues (Amso, Fitzgerald, Davidow, Gilhooly, & Tottenham, 2010) found that infants’ scanning of facial features during habituation predicted their ability to differentiate different types of faces. The point is that measuring whether and how long infants look at different regions of stimuli provides insight into how they sample information in displays. Infants who sample more from highly relevant or meaningful regions of a stimulus (such as the infants observed by Johnson et al. who spent more time looking at the moving rod pieces) may learn more about the most meaningful or significant features of a stimulus than other infants who sample those regions less. Thus, evaluating differences in eye-movements as infants visually investigate stimuli can provide insight into differences, or lack of differences, in the ways in which infants are sampling, and therefore learning about, stimuli. Conceptually, this approach is similar to work identifying individual differences in speed of processing, and relating those individual differences to subsequent learning (Colombo, Mitchell, Coldren, & Freeseman, 1991; Jankowski, Rose, & Feldman, 2001).
In the present investigation, we probe individual differences in processing strategies. This work can provide a foundation for future studies more directly examining how such differences are related to learning.

We measured visual inspection of images in a sample of 4-month-old infants, some with pets at home and others with no pet experience. Some stimuli were relevant to this difference in their experience (dogs and cats) and some stimuli were neutral with respect to differences in their experience (human faces and vehicles). We hypothesized that increased knowledge about a domain, in this case from daily exposure to a pet in the home, would alter infants’ distribution of attention when encountering new items from that domain. This hypothesis is similar to that of Smith and colleagues (Smith, Jones, Landau, Gershkoff-Stowe, & Samuelson, 2002) who argued that past learning modulates present attention. They demonstrated that experience learning the names of shape-based categories induced children to focus attention to shape when subsequently learning new category names.

We reasoned that infants with extensive experience with pets might direct more of their attention to the most informationally rich or diagnostic regions of animals (that is, the head and face region) than would infants without pet experience. By examining infants’ eye-movements as they inspected images of cats and dogs, we could determine both whether the head bias documented by Quinn et al. (2009) emerges earlier than 6 months and whether the bias differs as a function of pet experience. Because we anticipated that differences in scanning of dogs and cats would reflect infants’ specific knowledge of animals, and not a general difference in visual inspection, we did not expect these groups of infants to differ in their inspection of human faces or vehicles.

Note that here we use experience as a proxy for development. Often, in cross-sectional studies, age is used as a proxy for experience; differences in older and younger infants’ responding are thought to reflect, in part, differences in experience (e.g., Kelly, Quinn, et al., 2007; Liu et al., 2010a). Of course, older and younger infants differ in a number of ways, and differences in experience co-vary with differences in maturation, neuroanatomical change, motor, perceptual, and cognitive development, and so on. Therefore, here we take the complementary approach of holding age constant and examining differences in infants’ responding as a function of differences in one type of experience. This allows us to understand how developmental change may be induced by differences in experience, while holding constant other aspects of development that vary with age.

Method

Participants

The final sample included 48 healthy, full-term 4-month-old infants (M = 123.13 days, SD = 8.02 days; 23 girls and 25 boys) with no history of vision problems; 34 infants were reported to be White (13 of whom were Hispanic), 3 infants were Asian (1 of whom was Hispanic), 6 were mixed race (4 of whom were Hispanic), and race was not reported for 5 infants (4 of whom were Hispanic). All mothers had completed high school and 25 had earned at least a bachelor’s degree. Additional infants were tested but excluded from the analyses due to general inattention (e.g., never looking at the top half of the stimuli, n = 2), inability to calibrate (n = 5), equipment or experimenter error (n = 10), or failure to attend on the minimum number of trials for inclusion (n = 3, see the Results section).

Those infants who lived with an indoor pet or spent more than 20 hours per week (e.g., at daycare) with at least one indoor pet were assigned to the Pet Group (n = 27; M = 123.19 days, SD = 8.00, 16 boys); 10 infants had only cats, 12 infants had only dogs, and 5 infants had both. Infants without regular contact with dogs or cats were assigned to the No Pet Group (n = 21; M = 123.05 days, SD = 8.24, 9 boys). The two groups did not differ in age, t(46) = .06, p = .95.

Stimuli

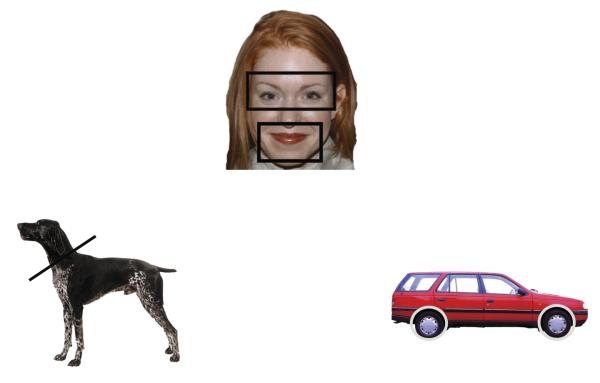

The experimental stimuli were 24 digitized photographs of items from each of four categories: dogs, cats, human faces, and vehicles (see examples in Figure 1). All images subtended approximately 21.5° × 14.25° visual angle at 100 cm viewing distance. Dogs and cats all had visible faces, varied in breed, coloring, marking, and stance (in each category, 15 animals were standing, 4 were sitting, and 5 were lying down), and half of the animals were facing left.

Figure 1.

Examples of the stimuli used and the AOIs

The 24 vehicles (e.g., a Porsche, a moving van, and a cement mixer) varied in shape and color, and half were facing left. The 24 human faces (12 female) were taken from the MacBrain Face Stimulus Set (Tottenham et al., 2009). The individuals were approximately in their mid-20s, of varied races, facing forward, smiling with lips closed, with no extra apparel such as hats or glasses (4 men had some facial hair). According to the documentation provided, 4 faces were Asian-American, 6 were African-American, 3 were Latino-American, and 11 were European-American.

The calibration stimuli were multi-colored circles that loomed to approximately 6° × 6° visual angle. The attention-getters were differently colored geometric shapes (e.g., purple diamond, green triangle) that continuously loomed for 800 ms (to approximately 16° × 16° visual angle) accompanied by randomly selected buzzing and beeping sounds. The reorienting stimuli were randomly chosen brief clips of children’s television shows (Teletubbies, Blue’s Clues, Sesame Street), animated animals singing, and a series of pictures of babies accompanied by classical music. These reorienting stimuli were presented only when an infant looked away from the monitor for a prolonged period of time, and only until the eye-tracking system had once again focused on the infant’s right eye with a good track.
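The visual angles reported for the stimuli can be related to physical on-screen size with standard trigonometry. The following is a minimal sketch, assuming a flat display viewed straight on at the stated 100 cm distance; the function names are illustrative and are not taken from the stimulus-presentation program.

```python
import math


def extent_cm(visual_angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends a given visual angle at a viewing distance."""
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)


def visual_angle_deg(extent: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by a physical extent at a viewing distance."""
    return math.degrees(2.0 * math.atan(extent / (2.0 * distance_cm)))


# Experimental images: approximately 21.5 x 14.25 degrees at 100 cm.
width_cm = extent_cm(21.5, 100.0)
height_cm = extent_cm(14.25, 100.0)
print(f"Image size: {width_cm:.1f} cm x {height_cm:.1f} cm")
```

Under these assumptions the experimental images would measure roughly 38 cm by 25 cm on the monitor.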

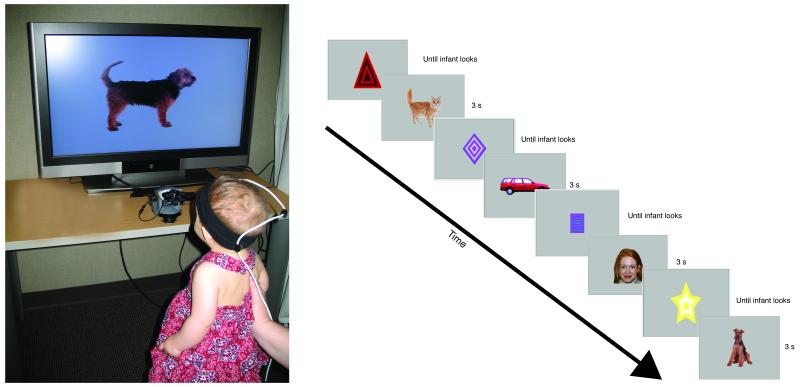

Apparatus

We used an Applied Science Laboratory (ASL) pan/tilt R6 eye tracker to collect eye-gaze information. The eye-tracker was centered at the bottom and approximately 25 cm in front of a 37″ LCD monitor (19:9 aspect ratio). It emitted an infrared light source and detected the corneal and pupil reflection of that light source; this information was used to determine the point-of-gaze (POG) at a rate of 60 Hz. The eye-tracker interfaced with an Ascension Flock of Birds magnetic head tracking system (mini-bird) that was used to track changes in the infant’s head position and to help the eye-tracker maintain focus on the infant’s eye if needed. A sensor, attached to an infant-sized headband, was positioned above the infant’s right eye (see Figure 2); the sensor transmitted the location and orientation of the infant’s head in multi-dimensional space to the eye-tracking system, which used this information to refocus on the infant’s right eye, if needed.

Figure 2.

Infant in the eye-tracking set-up (left) and an example of the sequence of trials in the experimental design.

A Dell computer was used to control the eye-tracker and to allow an experimenter to move the camera to focus on the infant’s eye, indicate when the infant looked at calibration points, adjust the settings for the sensitivity of the system to detect the reflection of the infrared light source, and save the data. From the ASL system we recorded an image of the stimulus presented on the monitor, with cross-hairs superimposed indicating the infant’s POG, and a close-up of the infant’s right eye, with cross-hairs superimposed indicating the corneal and pupil reflection of the infrared light source. Experimenters used these images to judge on-line calibration and the quality of the track. A second Dell computer, connected to the LCD monitor, was used to interactively present stimuli, using a program we created using Adobe Director.

Procedure

Each infant sat on his or her parent’s lap in a dimly lit room, approximately 100 cm from the large LCD monitor and 75 cm from the eye-tracker (see Figure 2). Parents wore occluding glasses to obstruct their view of the stimuli. An eye-tracking experimenter and a stimulus-presentation experimenter sat out of sight behind a white curtained divider.

First, we calibrated the system to the infant. The stimulus-presentation experimenter presented the looming shapes, short videos, and so on, and the eye-tracking experimenter focused the eye-tracker on the infant’s right eye, indicating the off-set from the eye to the head-tracking sensor. Once the eye-tracker was clearly focused on the eye, the automatic tracking systems were engaged and the eye-tracker maintained a track using the pupil and corneal reflection, only adjusting the camera position based on data from the mini-bird when the system lost the image of the eye.

Next, we calibrated the infant’s POG. The stimulus-presentation experimenter presented looming circles at points 11.5° above and to the left and 11.5° below and to the right of the monitor’s center by pressing keys on the stimulus-presentation computer. The eye-tracking experimenter indicated when the infant fixated these stimuli by pressing a key on the eye-tracking computer. The eye-tracker used the information about the pupil and corneal reflections when the infant was fixating these known locations to determine the location of each subsequent fixation. The calibration procedure took only a few minutes to complete.

Immediately after calibration, the stimulus-presentation experimenter pressed a computer key to initiate the series of experimental trials. The sequence of events for the experimental trials is presented in Figure 2. Before each trial, attention-getting stimuli were presented at the center of the monitor. To ensure that trials started when the infants were fixating the monitor, the stimulus-presentation experimenter initiated each trial only when the infant was looking at these stimuli (as indicated by the cross-hairs centered on the looming shape). If the infant became very inattentive, the stimulus-presentation experimenter could present the reorienting stimuli to redirect the infant to the monitor, and if necessary the eye-tracking experimenter could manually adjust the camera.

The main experiment consisted of a sequence of 3-s trials. These very short trial durations allowed us to examine differences in infants’ initial responses to the stimuli. We know that infants respond differently to stimuli as a function of previous experience on trials of longer durations (Kelly, Liu, et al., 2007; Liu et al., 2010b; Quinn et al., 2009); it is unknown whether they initially respond differently to those stimuli, or if the differences emerge over time. Eye-tracking provides us with the unique ability to establish whether differences exist in very short trials. Because our main measure is the pattern of scanning, it is possible to determine whether infants’ sampling of stimuli differs from their initial inspection of those stimuli.

The program we created in Adobe Director to run this experiment generated for each infant a list of 96 trials with stimuli assigned with the following constraints: (1) trials were in randomly ordered blocks of 4 trials, each including a dog trial, a cat trial, a human face trial, and a vehicle trial, and (2) stimuli were selected (without replacement) so that each stimulus was presented once to each infant. As the result of a programming error, 2 infants with pets and 2 infants without pets were presented with one cat twice over the course of the experiment; analyses conducted excluding these infants revealed the same basic patterns as those reported here for the full sample. To increase our power, we opted to include these infants in the final sample. Trials continued until the infant showed signs of disinterest (e.g., refusing to look at the screen, looking at the parent, trying to turn away from the monitor), or until all 96 trials were presented.
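The two constraints on the trial list can be illustrated with a short sketch. This is not the Adobe Director program used in the study; it is a hypothetical Python reconstruction that assumes the order of the four trial types was randomized within each block and that stimuli are identified by an index from 1 to 24 within each category.

```python
import random

CATEGORIES = ("dog", "cat", "face", "vehicle")
N_PER_CATEGORY = 24  # 24 images per category, 96 trials in total


def build_trial_list(seed=None):
    """Return 96 (category, stimulus_index) trials in 24 blocks of 4.

    Each block contains one trial per category in random order, and each
    stimulus is drawn without replacement so that it appears exactly once.
    """
    rng = random.Random(seed)
    # A shuffled copy of each category's indices implements sampling
    # without replacement.
    pools = {
        category: rng.sample(range(1, N_PER_CATEGORY + 1), N_PER_CATEGORY)
        for category in CATEGORIES
    }
    trials = []
    for block in range(N_PER_CATEGORY):
        order = list(CATEGORIES)
        rng.shuffle(order)  # random order of the four trial types in this block
        for category in order:
            trials.append((category, pools[category][block]))
    return trials


trials = build_trial_list(seed=1)
print(trials[:4])  # the first block: one trial of each type
```

Because an infant could stop at any point, building the list in complete blocks keeps the number of trials of each type approximately equal regardless of when the session ends.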

We recorded from each session the dynamic image of the sequence of attention-getters and stimuli on each trial (and reorienting stimuli) with cross-hairs superimposed indicating the infants’ POG and the output from the eye-camera (a close-up view of the infants’ right eye). These two images were fed through a mixer into a DVR recording device, creating a digital recording (.AVI) of the stimulus with the cross-hairs superimposed, and the eye in the bottom right-hand corner, at a rate of 30 frames per second. To import these digital recordings into Noldus Observer for coding (see next section), we converted them to MPEG files using the free software FFmpeg (http://ffmpeg.org/). The resulting MPEG files had a frame rate of 29.97 frames per second, and were converted without any additional compression.

Coding

Eye-tracking procedures provide an enormous amount of data, and a first step in analyzing such data is determining the best way to reduce it. To address our main hypotheses, we elected to determine the proportion of time infants spent looking at the most informationally relevant region of each stimulus type. Because our goal was to determine whether infants’ visual investigation of dog and cat stimuli varied as a function of pet experience, we reasoned that differences would be observed in the proportion of time they spent looking at those regions.

For each stimulus type we identified the most informationally rich regions, based on the relevant literatures. For the dog and cat stimuli, we identified the head region as the most informationally relevant. Note that our animal stimuli were highly variable in pose, and as a result the images varied in the visibility of all four legs, the tail, etc. Thus, it was not possible to create areas of interest (AOIs) that captured each of these features. Moreover, previous work has shown that infants rely more on the head regions than the non-head regions to differentiate images of cats and dogs (Quinn & Eimas, 1996). Each of our stimulus images had a well-defined head region, allowing us to identify a clear AOI for the head region and a second AOI for the remaining body parts (i.e., head and non-head AOIs).

For the human face stimuli, we identified the eyes and mouth regions as the most informationally relevant. A number of studies using a variety of methods and subject populations have shown the importance of the mouth and eye regions for processing images of human faces by observers across the lifespan (Allison, Puce, & McCarthy, 2000; Amso et al., 2010; Heisz & Shore, 2008). We therefore created separate AOIs for the eye and mouth regions, and used them to create a mouth-and-eyes AOI. Finally, studies of object recognition and categorization indicate that the physical features that determine object function are the most informationally important (Rakison & Butterworth, 1998; Stark & Bowyer, 1994). Thus, for the vehicles, we identified the wheels as the most informative AOIs. See Figure 1 for examples of the AOIs for each type of stimuli.

We used ImageJ (Rasband, 1997-2001) to calculate the area of each AOI for each stimulus. On average, the head region comprised 23% of the dog images, the head region comprised 19% of the cat images, the wheel region comprised 16% of the vehicle images, and the eye and mouth regions combined comprised 35% of the face images.
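The proportion of an image occupied by an AOI can be computed from the AOI outline. The sketch below is an illustration only: it assumes each AOI is a polygon with pixel-coordinate vertices and that the proportion is taken relative to the full image rectangle; the vertex coordinates and image dimensions are hypothetical and are not taken from the stimuli, and the study itself used ImageJ for this step.

```python
def polygon_area(vertices):
    """Area of a simple polygon from its (x, y) vertices (shoelace formula)."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def aoi_proportion(aoi_vertices, image_width, image_height):
    """Proportion of the full image rectangle covered by the AOI polygon."""
    return polygon_area(aoi_vertices) / (image_width * image_height)


# Hypothetical head AOI on a hypothetical 800 x 530 pixel image.
head_aoi = [(100, 100), (300, 100), (300, 300), (100, 300)]
print(f"Head AOI covers {aoi_proportion(head_aoi, 800, 530):.1%} of the image")
```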

Highly trained coders, unaware of infants’ pet experience, coded infants’ POG off-line from the recording of the session. We used Noldus Observer to record frame-by-frame whether the POG, as defined by the center of the cross-hairs generated by the eye-tracking system, was in the specified AOIs for each stimulus. This approach to summarizing infants’ looking behavior allowed us to optimize the AOIs for each of our stimuli, which were highly varied (i.e., the animals were in different positions, so the heads were located in different places in space, and the vehicle wheels varied in size and shape as well as location). To ensure that coders used consistent AOI boundaries, we provided them with an image of each stimulus indicating the relevant AOIs. The software for processing eye-tracking gaze coordinates provided by ASL defines regions in rectangular spaces; thus, our hand-drawn AOIs and human judgments allowed more precision in determining whether infants’ POG was in the specified regions than would be possible with automatic coding. Because our coders were unaware of the infants’ pet status, coder biases will not contribute to any differences or lack of differences we observe as a function of pet experience.

Coders judged frame-by-frame the location of the POG on each frame in which the following conditions were met: the cross-hairs were superimposed on the stimuli and the right eye (recorded in the lower right-hand corner) was open and visible (occasionally, the eye camera would focus on the left eye; because we calibrated the POG using the corneal reflection and pupil of the right eye, the POG recorded from the left eye is not valid). For each frame, coders indicated whether the infants’ POG was in an AOI specified for each stimulus as described earlier. The POG was coded as being in a region only when the cross-hairs had remained in that region for 3 consecutive frames, or about 100 ms (we used 3 consecutive frames so coders would not indicate that the POG was in a region when the eye was moving from one region to the next). Routines in Noldus calculated the duration of continued looking to that region until the coder indicated that the POG was directed to a different region.
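The 3-consecutive-frame criterion can be expressed as a simple run-length rule over frame-by-frame codes. The sketch below is not the Noldus Observer routine; it is a hypothetical illustration that assumes one AOI label per video frame (with `None` for frames that could not be coded), a frame rate of 29.97 frames per second, and that runs shorter than 3 frames are treated as transitions and contribute no looking time.

```python
from itertools import groupby

FPS = 29.97   # frame rate of the converted recordings
MIN_RUN = 3   # consecutive frames required, about 100 ms


def looking_time_by_aoi(frame_labels, fps=FPS, min_run=MIN_RUN):
    """Total looking time (s) per AOI, counting only runs of >= min_run frames."""
    totals = {}
    for label, run in groupby(frame_labels):
        n_frames = sum(1 for _ in run)
        if label is None or n_frames < min_run:
            continue  # uncodable frames or a brief pass between regions
        totals[label] = totals.get(label, 0.0) + n_frames / fps
    return totals


def proportion_to(aoi, totals):
    """Proportion of total coded looking time directed at one AOI."""
    total = sum(totals.values())
    return totals.get(aoi, 0.0) / total if total > 0 else float("nan")


# Hypothetical codes for part of one trial.
labels = ["head"] * 6 + ["body"] * 2 + ["head"] * 3 + [None] * 4 + ["body"] * 9
totals = looking_time_by_aoi(labels)
print(totals, proportion_to("head", totals))
```

In this example the 2-frame visit to the body is discarded, leaving 9 frames on the head and 9 on the body, so the proportion of looking to the head is 0.5.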

Our highly trained coders were extremely consistent in their coding. Two independent coders recorded POG on each frame for the animal trials for 14 infants, the face trials for 13 infants, and the vehicle trials for 15 infants. Agreement for the particular AOI fixated on each frame was high; for animal trials it was 93.10% (r = .99, Kappa = .99), for face trials it was 89.88% (r = .95, Kappa = .89), and for vehicle trials it was 91.13% (r = .99, Kappa = .90).
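Frame-level agreement statistics of this kind can be computed directly from two coders’ label sequences. The following is a minimal sketch of percent agreement and Cohen’s kappa; the function names and the example codes are hypothetical, and the sketch does not reproduce the reliability values reported above.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Percentage of frames on which the two coders assigned the same AOI."""
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement corrected for agreement expected by chance."""
    n = len(coder_a)
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement from each coder's marginal label frequencies.
    p_expected = sum(counts_a[k] * counts_b[k] for k in labels) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical codes for 10 frames; the coders disagree on one frame.
a = ["head", "head", "body", "body", "off", "head", "body", "body", "head", "off"]
b = ["head", "head", "body", "head", "off", "head", "body", "body", "head", "off"]
print(percent_agreement(a, b), round(cohens_kappa(a, b), 3))
```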

Results

We conducted several analyses on these data. We included in our sample all infants who completed at least two trials of each type, and who accumulated more than 500 ms of looking on most trials. Only 3 infants were excluded for not meeting these criteria. The 48 infants who comprised the final sample contributed, on average, 50.17 trials to the analyses (SD = 20.11, range 18 to 96 trials). An Analysis of Variance (ANOVA) conducted on the number of each trial type that infants contributed resulted in no significant effects or interactions. In general, infants had approximately the same number of the four types of trials. Importantly, the number of trials completed did not differ by pet experience: infants with pets contributed approximately the same number of trials (M = 50.56, SD = 18.78, range 18 to 90 trials) as did infants who did not have pets (M = 49.67, SD = 22.16, range 18 to 96), t(46) = .15, p = .88, d = .04.
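The group comparison of trial counts can be checked from the reported summary statistics. The sketch below assumes an equal-variance (pooled) independent-samples t-test, which is consistent with the reported 46 degrees of freedom but is not stated explicitly in the text; the function name is illustrative.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's t, Cohen's d, and df from two groups' summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    standard_error = pooled_sd * math.sqrt(1.0 / n1 + 1.0 / n2)
    t = (m1 - m2) / standard_error
    d = (m1 - m2) / pooled_sd
    return t, d, df


# Trials contributed: Pet group (n = 27) versus No Pet group (n = 21).
t, d, df = pooled_t_and_d(50.56, 18.78, 27, 49.67, 22.16, 21)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

With these inputs the sketch yields values that round to the reported t(46) = .15 and d = .04.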

Infants’ overall interest in the stimuli

First we evaluated infants’ overall level of interest in the stimuli to establish whether there are general differences in the level of attention by infants with and without pets, and whether infants preferred one or more of our stimulus types to others. The mean total looking time (i.e., looking to all AOIs combined) for each stimulus type is presented in Table 1. Infants looked for similar times to all four trial types, with slightly longer looking at human faces than at any of the other types of stimuli. We entered infants’ average total looking times to each type of trial into an ANOVA with trial type (dog, cat, vehicle, human face) as the within-subjects variable and pet group as the between-subjects variable.

Table 1.

Means (in s) for infants’ looking to each stimulus type by pet group (SD in parentheses)

| Group | N | Cat trials | Dog trials | Face trials | Vehicle trials |
|---|---|---|---|---|---|
| Overall | 48 | 2.06 a (.54) | 2.16 a (.43) | 2.46 b (.43) | 2.03 a (.47) |
| Pet | 21 | 2.14 (.46) | 2.17 (.40) | 2.47 (.36) | 1.97 (.39) |
| No Pet | 17 | 1.96 (.62) | 2.15 (.48) | 2.44 (.51) | 2.12 (.55) |

* For the group as a whole, means with different subscripts differed significantly, p < .01. In other words, infants’ looking during the face trials was significantly greater than their looking during the other three trial types (comparisons were not conducted on the individual group means; the means are provided here for illustration purposes only).

The main effect of trial type was significant, F(3, 138) = 19.93, p < .001, ηp2 = .30. To determine which stimuli infants preferred, we conducted follow-up comparisons of these four means, using Benjamini and Hochberg’s (1995) False Discovery Rate (FDR) to adjust for multiple comparisons. According to this method, we first determined how many comparisons we planned to conduct (in this case 6) and how many of those had p-values less than or equal to .05 (in this case 3). Based on these values, our adjusted criterion for significance was p ≤ .03. Our comparisons using this criterion revealed that infants looked significantly longer at images of human faces than at images of vehicles, t(47) = 6.61, p < .001, d = .95, dogs, t(47) = 5.23, p < .001, d = .76, or cats, t(47) = 5.72, p < .001, d = .83, consistent with other findings that infants prefer human faces to other kinds of stimuli (Fagan, 1972; Fantz, 1961; Gliga, Elsabbagh, Andravizou, & Johnson, 2009).
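The adjusted criterion described above can be reproduced with simple arithmetic. The sketch below assumes that the criterion equals the proportion of planned comparisons with p ≤ .05 multiplied by .05, a reading that matches the reported value (3 of 6 gives .025, reported as .03) but is an inference rather than a formula given in the text. The function names and the example p-values are hypothetical, and the standard step-up form of the Benjamini-Hochberg procedure is included only for comparison.

```python
def adjusted_criterion(p_values, alpha=0.05):
    """Assumed criterion: (number of p <= alpha / number of comparisons) * alpha."""
    m = len(p_values)
    k = sum(p <= alpha for p in p_values)
    return (k / m) * alpha


def benjamini_hochberg(p_values, alpha=0.05):
    """Standard BH step-up procedure; returns reject decisions in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            max_rank = rank  # largest rank whose p-value meets its threshold
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= max_rank:
            reject[i] = True
    return reject


# Hypothetical p-values: three small (the face comparisons) and three large.
p_values = [0.0004, 0.0003, 0.0002, 0.20, 0.35, 0.60]
print(adjusted_criterion(p_values))   # criterion under the assumed reading
print(benjamini_hochberg(p_values))   # decisions under the standard procedure
```

For these illustrative values both approaches flag the same three comparisons as significant.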

The ANOVA also revealed a marginally significant interaction between trial type and pet group, F(3, 138) = 2.55, p = .06, ηp2 = .05. The means are provided in Table 1. Both groups of infants showed the same general pattern, and the magnitudes of the differences between the groups of infants were modest. Because in our main analyses we do not directly compare the different trial types, except for comparing infants’ looking at cats and dogs, these effects will have little impact on our main conclusions.

Effect of pet experience on infants’ visual inspection of dogs and cats

Our main hypotheses were addressed in analyses that evaluated differences in the visual inspection patterns of infants with and without pets as they viewed images of dogs and cats. We were particularly interested in whether distribution of looking to the head regions versus the non-head regions differed for infants with and without pet experience. In the following analyses we directly compared infants’ distribution of looking to cats and dogs. Note we did not have a priori predictions that infants with and without pets would treat dogs and cats differently. Rather, we entered infants’ inspection of dogs and cats separately in the analyses because (1) the means in Table 1 suggest a slight preference for dogs compared to cats, and (2) the head regions comprise different proportions of the overall images of dogs and cats (see below).

We first entered the average duration of infants’ looking to the head and non-head regions of images of cats and dogs into an ANOVA with region and animal type as the within-subjects factors and pet group as the between-subjects factor. There was a main effect of animal, F(1, 46) = 4.67, p = .04, ηp2 = .09; looking (averaged across the head and non-head regions) was greater for dogs (M = 1.08 s, SD = .22) than cats (M = 1.03 s, SD = .27). The main effect of region also was significant, F(1, 46) = 68.11, p < .001, ηp2 = .60, due to infants looking longer at the proportionately larger non-head region (M = 1.35 s, SD = .37) than at the proportionately smaller head region (M = .76 s, SD = .33).
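The reported partial eta squared values can be recovered from the F statistics and their degrees of freedom. The sketch below assumes the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error); the text does not state how the effect sizes were computed, and the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom.

    Assumption: the standard identity is used; the source does not
    describe the computation explicitly.
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and dfs positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported in the preceding paragraph.
print(f"animal: {partial_eta_squared(4.67, 1, 46):.2f}")   # prints animal: 0.09
print(f"region: {partial_eta_squared(68.11, 1, 46):.2f}")  # prints region: 0.60
```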

The ANOVA also revealed a significant region by animal interaction, F(1, 46) = 6.95, p = .01, ηp2 = .13, indicating that infants distributed their looking differently to dogs and cats (see Table 2). We compared the means for this interaction, adjusting our significance level using Benjamini and Hochberg’s (1995) FDR. Three of the 4 comparisons (head versus non-head for each animal, and comparison of the two animals for each region) had significance levels less than or equal to .05, resulting in a modified criterion for significance of p ≤ .04. These comparisons revealed that the interaction was due to infants looking longer at the head regions of the dogs than at the head regions of the cats, t(47) = 3.38, p = .001, d = .49; their levels of looking to the non-head regions of dogs and cats did not differ, t(47) = 1.30, p = .20, d = .19, and they looked significantly longer at the non-head regions than at the head regions for cats, t(47) = 8.98, p < .001, d = 1.30, and dogs, t(47) = 5.07, p < .001, d = .73. This difference in infants’ looking to the head regions of dogs and cats was not surprising given that the dog heads were proportionately bigger than the cat heads.

Table 2.

Means (in s) for infants’ looking to head and non-head regions of dog and cat images (SD in parentheses)

| | Cat | Dog |
|---|---|---|
| Head | .67a (.34) | .84b (.39) |
| Non-head | 1.38c (.42) | 1.32 a,b,c (.40) |

Note: Means with different subscripts differ significantly (we did not compare cat head to dog non-head or dog head to cat non-head).

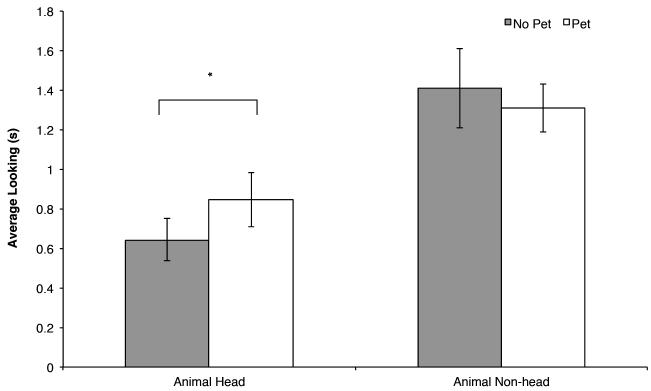

More importantly for our hypotheses, the predicted pet group by region interaction was significant, F(1, 46) = 4.17, p = .05, ηp2 = .08 (see Figure 3). We probed this interaction by comparing the duration of looking by infants with and without pets for each region. Only one of these two comparisons had a p value less than or equal to .05; thus, applying Benjamini and Hochberg’s (1995) FDR correction, our criterion for significance was p ≤ .03. Infants with and without pets showed similar levels of interest in the non-head regions of animals, t(46) = .94, p = .35, d = .27, but infants with pets looked longer at the head regions of images of dogs and cats than did infants without pets, t(46) = 2.21, p = .03, d = .64. Thus, infants’ distribution of looking to the regions of these animals varied as a function of their experience with pets.
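The effect sizes for these between-group comparisons are consistent with the usual conversion from an independent-samples t to Cohen's d, d = t × sqrt(1/n1 + 1/n2), using the group sizes given in the abstract (27 infants with pets, 21 without). The sketch below is an illustration under that assumption; the text does not say which formula for d was used.

```python
from math import sqrt


def cohens_d_from_independent_t(t_value: float, n1: int, n2: int) -> float:
    """Cohen's d implied by an independent-samples t statistic.

    Assumption: pooled-variance t test, so d = t * sqrt(1/n1 + 1/n2).
    """
    if n1 <= 0 or n2 <= 0:
        raise ValueError("group sizes must be positive")
    return t_value * sqrt(1.0 / n1 + 1.0 / n2)


# Group sizes from the abstract: 27 infants with pets, 21 without.
print(f"head: {cohens_d_from_independent_t(2.21, 27, 21):.2f}")      # prints head: 0.64
print(f"non-head: {cohens_d_from_independent_t(0.94, 27, 21):.2f}")  # prints non-head: 0.27
```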

Figure 3.

Mean looking time to each region for animal stimuli (averaged across dog and cat images) in s by pet group. Means that differ are indicated by an asterisk, p < .05.

Note that although infants generally looked longer at the heads of dogs than cats, the difference between infants with and without pets was observed when averaging across trials with dog and cat images. Inspection of the individual means revealed the same pattern for infants’ interest in the heads of dogs (infants with pets: M = .91, SD = .41 vs. infants without pets: M = .75, SD = .34) and cats (infants with pets: M = .78, SD = .35 vs. infants without pets: M = .54, SD = .30).

To further understand the relation between pet experience and infants’ visual inspection of these stimuli, we next analyzed the proportion of time infants spent looking at the heads. Given the differences in infants’ interest in dogs and cats, proportion scores would help evaluate infants’ interest in head regions while controlling for the baseline differences in infants’ levels of interest in the images of cats and dogs. In addition, as described later, proportion scores allow us to evaluate whether infants’ distribution of looking to the head region is greater than expected given the relative size of those regions.

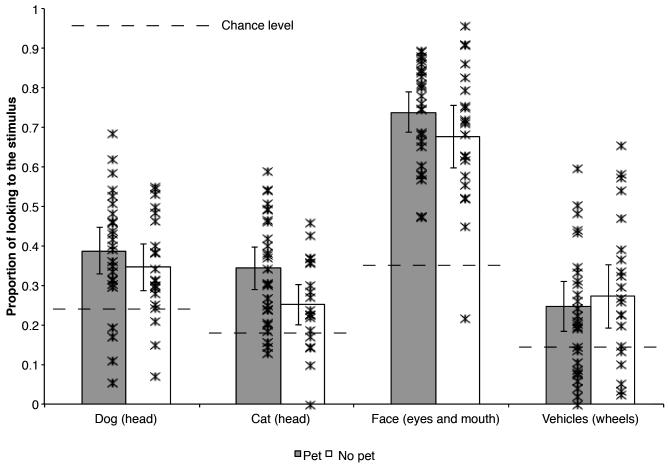

We created proportion scores for each type of animal by dividing the duration of infants’ looking to the head regions by their total looking to the animal (head and non-head combined). The proportion scores for dog and cat images are presented in the left portion of Figure 4; infants with pets devoted a greater proportion of their looking to the heads of both animals than did infants without pets. We also depict the mean proportion scores for each infant in this figure. In general, most individual infants with pets had a higher proportion of looking at the heads of dogs and cats than did most individual infants without pets.
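As a minimal sketch of the proportion score just described, the Python below divides head looking by total looking (head plus non-head) for each infant and averages within pet group. The records and field names are hypothetical illustrations, not data from the study, and returning no score for an infant with zero total looking is an assumption made here, not a rule stated in the text.

```python
from statistics import mean
from typing import Optional


def head_proportion(head_s: float, non_head_s: float) -> Optional[float]:
    """Proportion of looking to the head: head / (head + non-head)."""
    total = head_s + non_head_s
    if total <= 0:
        return None  # assumption: no score when the infant never looked
    return head_s / total


def group_mean_proportion(records: list, has_pet: bool) -> Optional[float]:
    """Mean head proportion for one pet group, skipping undefined scores."""
    scores = [
        head_proportion(r["head_s"], r["non_head_s"])
        for r in records
        if r["has_pet"] == has_pet
    ]
    scores = [s for s in scores if s is not None]
    return mean(scores) if scores else None


# Hypothetical looking durations in seconds (not data from the study).
records = [
    {"has_pet": True, "head_s": 1.0, "non_head_s": 1.0},
    {"has_pet": True, "head_s": 0.5, "non_head_s": 1.5},
    {"has_pet": False, "head_s": 0.5, "non_head_s": 1.5},
    {"has_pet": False, "head_s": 0.0, "non_head_s": 0.0},
]
print(group_mean_proportion(records, has_pet=True))   # prints 0.375
print(group_mean_proportion(records, has_pet=False))  # prints 0.25
```

Note that a mean of per-infant proportions generally differs from the ratio of group mean durations, so values computed from Table 2 means would not be expected to match the reported mean proportions exactly.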

Figure 4.

The mean proportion of infants’ looking devoted to the most informationally rich regions of each stimulus type by pet group (error bars represent 95% confidence intervals; individual infants are represented by individual asterisks). The lines bisecting the bars represent chance levels (i.e., the proportion of the actual image that each area of interest comprises) for each stimulus type (23% for dog heads, 19% for cat heads, 35% for the eyes and mouths (combined) of human faces, and 16% for the wheels of vehicles); all means were significantly greater than chance, ps ≤ .02.

An ANOVA conducted on these scores with animal type (dog, cat) as the within-subject factor and pet group as the between-subject factor revealed a main effect of animal, F(1, 46) = 13.39, p = .001, ηp2 = .23. Consistent with the analyses of looking time, when looking at dog images, infants devoted a greater proportion of their looking to the head regions (M = .37, SD = .14) than when they were looking at cat images (M = .32, SD = .14), presumably reflecting the difference in the relative size of dog and cat heads.

The predicted effect of pet group also was significant, F(1, 46) = 3.93, p = .05, ηp2 = .08. Infants with pets devoted a significantly greater proportion of their looking to the head regions (M = .37, SD = .13) than did infants without pets (M = .30, SD = .10), consistent with the analyses just described. Note that again although the effect was greater for cats than it was for dogs, there was no significant interaction between animal type and pet group, confirming that there were no significant differences between infants with and without pets as a function of stimulus type.

Proportion scores not only reveal differences between infants with and without pets, they also can provide insight into whether infants look at these regions more than expected by chance. Recall that Quinn and his colleagues (2009) observed that even naïve 6-month-old infants (i.e., those without pet experience) exhibited a “head bias” when looking at images similar to ours; therefore, we asked whether infants at 4 months similarly have such a bias, and whether this bias is observed both in infants with and without pet experience. Moreover, Quinn and colleagues observed that head bias on relatively long trials over a familiarization period. Here, we can uncover such a head bias when infants initially inspect novel dog and cat images.

To address these questions we compared the proportion of infants’ looking to the head regions of the dog and cat stimuli to the proportion of the area those regions comprised in the images (i.e., “chance” level, assuming that if infants’ looking is randomly distributed across the images, the proportion of time spent looking at the head will be equivalent to the relative size of that region). These analyses revealed that the proportion of looking distributed to the head regions of dogs was significantly different from the proportion of the images of dogs devoted to the head region for both infants with pets, t(26) = 5.54, p < .001, d = 1.07, and infants without pets, t(20) = 4.08, p = .001, d = .89. Similarly, the proportion of looking distributed to the head regions of cats was significantly different from the proportion of the images of cats devoted to the head region for both infants with pets, t(26) = 5.91, p < .001, d = 1.14, and infants without pets, t(20) = 2.52, p = .02, d = .55. Thus, although infants with and without pets devoted different proportions of their looking to the head region, both groups of infants looked at these informationally important regions more than is expected by the size of those regions relative to the size of the image as a whole, suggesting that the bias observed by Quinn et al. (2009) in 6-month-old infants is present by 4 months. Our results extend this previous finding; we found the bias in 4-month-old infants during their visual inspection of briefly presented stimuli regardless of pet experience, but this bias is significantly stronger if infants have daily experience with a pet.
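The comparison to chance can be sketched as a one-sample t test of the proportion scores against the relative area of the head region. The Python below assumes the conventional formulas d = (M − chance) / SD and t = d × sqrt(n); the scores are hypothetical, and only the 23% chance level for dog heads is taken from the Figure 4 caption.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_vs_chance(scores: list, chance: float) -> dict:
    """One-sample t statistic and Cohen's d for scores against a chance level."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("scores have zero variance")
    d = (mean(scores) - chance) / sd
    return {"t": d * sqrt(n), "df": n - 1, "d": d}


# Hypothetical head-proportion scores for dog images (not data from the study).
dog_scores = [0.41, 0.35, 0.28, 0.46, 0.33, 0.39]
result = one_sample_vs_chance(dog_scores, chance=0.23)
print(f"t({result['df']}) = {result['t']:.2f}, d = {result['d']:.2f}")
```

Under these formulas d equals t divided by the square root of the group size, which agrees with the values reported above: for example, 5.54 / sqrt(27) is approximately 1.07 and 4.08 / sqrt(21) is approximately .89.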

Comparison of infants with and without pets on “neutral” stimuli

One limitation of drawing conclusions from the analyses just reported is that it is impossible to determine whether infants with and without pets differ specifically in their visual inspection of pets, or whether they differ in their visual inspection of stimuli in general. That is, the same pattern of results may be obtained if infants with pets learn about scanning animal images in particular, or if infants with pets scan images differently than do infants without pets in general. It is possible that parents who raise infants with pets differ in their treatment of infants in a variety of ways that result in general differences in the emergence of visual inspection behavior by 4 months.

To test our hypothesis that the effects we observed are specific to infants’ investigation of images of animals, we interleaved our dog and cat trials with two types of trials with “neutral” stimuli with respect to pet experience. We reported the analysis of infants’ total looking on each trial in a previous section. As described in that section, and summarized in Table 1, infants with and without pets did not differ in their looking to human faces and vehicles, both of which should be equally relatively familiar regardless of pet experience. Consistent with other studies showing that faces effectively capture infants’ attention compared to other stimuli (Fagan, 1972; Fantz, 1961; Gliga & Csibra, 2007), infants generally preferred human faces to all the other stimuli. Infants’ looking to the vehicles did not differ from their looking to the dogs and cats.

To determine whether infants’ scanning of these stimuli differed, we conducted the same analyses as described earlier for infants’ looking to the regions of the cat and dog stimuli. We did not include all four stimulus types in a single analysis for several reasons. First, the relative familiarity and social significance of human faces may make infants scan those stimuli differently than other stimuli. Direct comparisons between human faces and other stimuli in terms of infants’ directing looking to the target AOI would be difficult to make. Second, given that the target AOIs comprised different proportions of each of the four types of stimuli, we expect by chance that infants will look longer at the target AOIs of some stimuli than others. Finally, direct comparisons of how infants scan the different stimuli are not theoretically meaningful in the current context. Our study was not designed with this in mind (i.e., the AOIs were different sizes, we had more animal stimuli than the other types of stimuli). For these reasons, we concentrated our analyses on comparisons of infants with and without pets for each type of stimulus separately.

Our first ANOVAs compared the duration of infants’ looking to the target and non-target regions of each stimulus type with pet group as the between-subjects comparison. The analysis of the human faces revealed only a main effect of region, F(1, 46) = 84.65, p < .001, ηp2 = .65. There was no effect of pet, p = .78. Comparison of the duration of their looking to those regions confirmed that infants with pets (M = 1.83 s, SD = .41) and without pets (M = 1.69 s, SD = .59) had similar levels of looking to the eyes and mouths of faces, t(46) = 1.01, p = .32, d = .29. Similarly, the analysis of the vehicles revealed only a main effect of region, F(1, 46) = 94.84, p < .001, ηp2 = .67, and comparison of the duration of looking to the wheels revealed no difference in the duration of looking by infants with pets (M = .49 s, SD = .34) and infants without pets (M = .63 s, SD = .48), t(46) = 1.12, p = .27, d = .33. Thus, for these “neutral” stimuli, infants with and without pets did not differ in how they distributed their looking.

We confirmed this conclusion with comparisons of the proportion of looking devoted to the target AOIs by the two groups of infants. The mean and individual proportion scores, presented in the right half of Figure 4, indicate a different pattern than was observed for dogs and cats. Infants with and without pets did not differ in the proportion of looking devoted to the eyes and mouths of human faces, t(46) = 1.41, p = .16, d = .41, or to the wheels of vehicles, t(46) = 1.24, p = .22, d = .36. Note that the means of individual infants suggest that there was one infant without pets whose proportion of looking at the eyes and mouth was lower than that of the other infants in the group; without this infant, the overall mean and the spread of scores for infants with and without pets are very similar (similarly, there was one infant without pets who never looked at the head of cats, but even when this infant is excluded from that mean, the overall mean and spread of proportion looking to the head regions of cats by infants without pets is still lower than that of infants with pets). Thus, unlike the distribution of their looking to animal stimuli, infants with and without pets did not differ in the proportion of their looking to the most informationally rich regions of the human faces and vehicle stimuli.

Finally, we tested infants’ biases to look at these regions. Comparison of the proportion of infants’ looking to these regions to chance (i.e., the proportion of the area the regions comprised), confirmed that the proportion of time infants spent looking at the eyes and mouths, relative to their total looking at the faces, was greater than expected given the relative size of those regions combined, for both infants without pets, t(20) = 8.59, p < .001, d = 1.88, and infants with pets, t(26) = 15.79, p < .001, d = 3.04, and that the proportion of time spent looking at the wheels, relative to their total looking at the vehicles, was greater than expected given the relative size of that region for both infants without pets, t(20) = 2.92, p = .008, d = .64, and infants with pets, t(26) = 2.83, p = .009, d = .56. This manipulation check is important for confirming that indeed we have identified significant and compelling regions of these stimuli. Thus, the lack of a difference between infants with and without pets for their preference for these regions does not reflect a general lack of attention to those regions. The point is that 4-month-old infants did have biases to look at the regions we identified as informationally rich, but there were no differences between infants with and without pets, supporting our conclusion that the differences in biases we observed for dog and cat stimuli reflected the effect of pet experience on their visual inspection of stimuli similar to that experience, and not the effect of pet experience on visual inspection of stimuli in general.

Discussion

These results suggest that by 4 months of age infants’ daily experiences with pets influenced their visual inspection of images of cats and dogs in the laboratory. Although even naïve 4-month-old infants had the kind of “head bias” observed by Quinn and his colleagues (2009) using a very different eye-tracking procedure with naïve 6-month-old infants, our results suggest that experience makes this bias stronger, at least in younger infants. We found that 4-month-old infants with pets spent a significantly higher proportion of their looking to the head regions of animals than did infants without pets. Thus, by 4 months infants appear to recognize which regions are most diagnostic in these stimuli, but infants who have more experience with dogs or cats find those diagnostic regions of dogs and cats even more compelling. These findings represent a significant step forward in our understanding of how experience over developmental time—in this case, daily exposure to a pet—interacts with behavior over much shorter timescales—in this case, attentional distribution during 3-s exposures to images. Indeed, it is remarkable that differences in visual scanning can be observed even during brief, 3-s exposures to the stimuli. This suggests that infants’ initial approach to novel images of cats and dogs differs as a function of their pet experience; these differences in experience may create perceptual biases that guide infants’ visual inspection of these stimuli.

The design of our experiment allows us to conclude that infants’ experience is related to their visual inspection of novel stimuli similar to that experience, but not to general differences in how infants visually inspect all stimuli. Specifically, we found that infants with and without pets differed in their visual inspection of images of animal stimuli, but not in their visual inspection of the more neutral stimuli of human faces and vehicles. Importantly, all infants devoted more of their looking than expected by chance to the informationally rich regions of all four types of stimuli.

Here we used experience as a proxy for development, complementing other work in which age differences were used as a proxy for differences in experience (Anzures, Quinn, Pascalis, Slater, & Lee, 2010; Kelly, Liu, et al., 2007). Combined, the studies reported in the literature suggest that a significant factor in infants’ perceptual development is the effect that experience looking at some types of items has on infants’ learning of items similar to their experience. We believe that experience with a particular type of item (in this case, pets) facilitates infants’ learning about how to distribute their attention when inspecting those types of stimuli, presumably maximally increasing their exposure to the most informative or diagnostic features of the stimuli. Previous work in which age and experience were confounded shows how the specific experience associated with age, even experience that is experimentally induced, is related to changes in infants’ learning of stimuli (Pascalis et al., 2005; Scott & Monesson, 2009). This study reveals the effect of experience on infants’ learning-related behavior even when age is held constant. Therefore, these results provide additional support for claims that perceptual development is influenced, in part, by the infants’ experience viewing and perceiving particular types of stimuli.

Our use of eye-tracking allowed us to draw conclusions about experience and infants’ distribution of attention because we measured the microstructure of infants’ looking behavior (Aslin, 2007). Using such methods, we could evaluate differences as a function of experience in 3-s trials; measures of overall looking are insensitive to differences in such short trials, instead revealing differences in overall looking time preferences as a function of experience in trials of 10-s or longer (Bar-Haim et al., 2006; Kelly, Liu, et al., 2007; Quinn et al., 2008). Moreover, because eye-tracking allows us to measure precisely where infants look, we could actually detect differences in patterns of visual inspection strategy infants adopted as they looked, rather than measuring how much looking time infants accumulated during trials. Thus, our results provide insight into the strategies infants use when presented with stimuli that make contact with differences in experience.

We propose that our results reflect a mechanism like that discovered by Smith and her colleagues (2002) in the context of language development. Smith and colleagues observed that young children’s experience with and knowledge of categories and category labels shaped their subsequent learning by directing attentional processes: over the course of their daily lives infants learn categories heavily defined by shape (e.g., cups, cars) and they come to selectively attend to shape when learning categories. Thus, the input determines what information children learn to focus on. This is conceptually similar to Adolph’s (2008) argument that infants learn to learn, a concept derived from theorizing in animal behavior (Harlow, 1949) and connectionism (Kehoe, 1988). That is, infants do not simply acquire milestones such as crawling, reaching, and walking; they learn how to adaptively adjust those abilities to changes in the context. We speculate that infants with pets have not simply formed a representation of dogs or cats that they apply when encountering new images, but that experience with dogs and cats induces the acquisition of strategies infants use to direct their attention when visually inspecting animal images. Indeed, the kinds of differences in scanning we observed here have been found in other contexts to be related to differences in what infants learn about stimuli, that is, the pattern of dishabituation they show to novel stimuli (Amso et al., 2010; Johnson et al., 2004). Thus, previous findings of differences in infants’ categorization of and memory for cat and dog stimuli (Kovack-Lesh et al., 2008; Kovack-Lesh et al., 2012) may reflect variations in how infants with and without pets sample information from the images. This general mechanism may also explain why infants can discriminate better between faces from a familiar race than an unfamiliar race (Kelly, Quinn, et al., 2007) and why infants’ categorization of familiar stimuli is not strongly influenced by the level of variability within the to-be-categorized items (Pauen, 2000). Each case may reflect infants’ past knowledge directing their attention to the most informationally rich or diagnostic portions of relatively familiar stimuli, allowing them to better learn about such stimuli than about other, less familiar stimuli.

We assume that infants’ exposure to pets has a particularly strong influence on the development of such strategies for learning how to learn about animals. Infants generally are highly attentive to dynamic, moving, animate stimuli (Hunnius & Reint, 2004; Shaddy & Colombo, 2004). Therefore, pets may be extremely compelling stimuli. For example, as cats walk across the room, jump up on a windowsill, or stand up and stretch unexpectedly, they may automatically capture infants’ attention. Saffran (2009) argued that learning is accomplished by recognizing statistical regularities in the environment, but mechanisms must be in place to allow children to attend to and recognize those regularities. Thus, infants who have pets can attend to those pets, extract information about features of animals, track statistical patterns, and adopt strategies for inspecting images similar to those animals not only because the pets are present in the environment, but because these animals are salient, compelling stimuli that attract attention. The point is that the kind of effects we observed here—as well as effects for the familiarity of the gender or race of faces on infants’ face processing—may reflect the effect of experience with highly salient, compelling items and not experience with any item. It would not be surprising, for example, if infants’ daily experience with stationary items to which their attention is not directed would have little or no influence on how they directed their attention to similar stimuli.

In summary, these results add to our understanding of how infants’ looking can reflect their existing knowledge. Rather than drawing conclusions about infants’ well-formed concepts from their post-familiarization responding to tests (Carey, 2000), we used procedures that allow us to draw conclusions about infants’ moment-to-moment visual inspection of stimuli in the laboratory. Our results show that such visual inspection can reflect infants’ past experience, suggesting that infants adopt different in-the-moment strategies for attending to, and ultimately learning about, stimuli as a function of how familiar they are with such stimuli. Thus, these results add to a growing literature showing that looking tasks can reflect learning over multiple timescales.

Acknowledgments

We thank Lisa Christoffer, Emily Spring, and Karen Leung and the undergraduate students at the Infant Cognition Lab at the UC Davis Center for Mind and Brain for their help in collecting and coding these data. This work was made possible by grant HD56018 from the Eunice Kennedy Shriver National Institute of Child Health & Human Development and Mars-Waltham and by grant BCS 0951580 from the National Science Foundation. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Eunice Kennedy Shriver National Institute Of Child Health & Human Development, the National Institutes of Health, Mars-Waltham, or the National Science Foundation.

References

- Adolph KE. Learning to move. Current Directions in Psychological Science. 2008:213–218. doi: 10.1111/j.1467-8721.2008.00577.x.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences. 2000:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Amso D, Fitzgerald M, Davidow J, Gilhooly T, Tottenham N. Visual exploration strategies and the development of infants’ facial emotion discrimination. Frontiers in Developmental Psychology. 2010. doi: 10.3389/fpsyg.2010.00180.

- Anzures G, Quinn PC, Pascalis O, Slater AM, Lee K. Categorization, categorical perception, and asymmetry in infants’ representation of face race. Developmental Science. 2010:553–564. doi: 10.1111/j.1467-7687.2009.00900.x.

- Aslin RN. What’s in a look? Developmental Science. 2007:48–53. doi: 10.1111/J.1467-7687.2007.00563.X.

- Aslin RN, McMurray B. Automated corneal-reflection eye tracking in infancy: Methodological developments and applications to cognition. Infancy. 2004:155–163. doi: 10.1207/s15327078in0602_1.

- Bar-Haim Y, Ziv T, Lamy D, Hodes M. Nature and nurture in own-race face processing. Psychological Science. 2006:159–163. doi: 10.1111/j.1467-9280.2006.01679.x.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society Series B Methodological. 1995:289–300.

- Bornstein MH, Mash C. Experience-based and on-line categorization of objects in early infancy. Child Development. 2010:884–897. doi: 10.1111/j.1467-8624.2010.01440.x.

- Carey S. The origin of concepts. Journal of Cognition and Development. 2000:37–41.

- Colombo J, Mitchell DW. Infant visual habituation. Neurobiology of Learning and Memory. 2009:225–234. doi: 10.1016/j.nlm.2008.06.002.

- Colombo J, Mitchell DW, Coldren JT, Freeseman LJ. Individual differences in infant visual attention: Are short lookers faster processors or feature processors? Child Development. 1991:1247–1257.

- Eimas PD, Quinn PC. Studies on the formation of perceptually based basic-level categories in young infants. Child Development. 1994:903–917.

- Fagan JF. Infants’ recognition memory for faces. Journal of Experimental Child Psychology. 1972:453–476. doi: 10.1016/0022-0965(72)90065-3.

- Fantz R. The origin of form perception. Scientific American. 1961:66–72. doi: 10.1038/scientificamerican0561-66.

- French RM, Mareschal D, Mermillod M, Quinn PC. The role of bottom-up processing in perceptual categorization by 3- to 4-month-old infants: Simulations and data. Journal of Experimental Psychology: General. 2004:382–397. doi: 10.1037/0096-3445.133.3.382.

- Gliga T, Csibra G. Progress in brain research. Vol. 164. Elsevier; 2007. Seeing the face through the eyes: A developmental perspective on face expertise; pp. 323–339.

- Gliga T, Elsabbagh M, Andravizou A, Johnson M. Faces attract infants’ attention in complex displays. Infancy. 2009:550–562. doi: 10.1080/15250000903144199.

- Haith M. Who put the cog in infant cognition? Is rich interpretation too costly? Infant Behavior and Development. 1998:167–179.

- Harlow HF. The formation of learning sets. Psychological Review. 1949:51–65. doi: 10.1037/h0062474.

- Heisz JJ, Shore DI. More efficient scanning for familiar faces. Journal of Vision. 2008:1–10. doi: 10.1167/8.1.9.

- Hunnius S, Reint HG. Developmental changes in visual scanning of dynamic faces and abstract stimuli in infants: A longitudinal study. Infancy. 2004:231–255. doi: 10.1207/s15327078in0602_5.

- Hurley KB, Kovack-Lesh KA, Oakes LM. The influence of pets on infants’ processing of cat and dog images. Infant Behavior and Development. 2010:619–628. doi: 10.1016/j.infbeh.2010.07.015.

- Jankowski JJ, Rose SA, Feldman JF. Modifying the distribution of attention in infants. Child Development. 2001:339–351. doi: 10.1111/1467-8624.00282. [DOI] [PubMed] [Google Scholar]

- Johnson SP, Slemmer JA, Amso D. Where infants look determines how they see: Eye movements and object perception performance in 3-month-olds. Infancy. 2004:185–201. doi: 10.1207/s15327078in0602_3. [DOI] [PubMed] [Google Scholar]

- Karatekin C. Eyetracking studies of normative and atypical development. Developmental Review. 2007:283–348. [Google Scholar]

- Kehoe EJ. A layered network model of associative learning: Learning to learn and configuration. Psychological Review. 1988:411–433. doi: 10.1037/0033-295x.95.4.411. [DOI] [PubMed] [Google Scholar]

- Kelly DJ, Liu S, Ge L, Quinn PC, Slater AM, Lee K, Pascalis O. Cross-race preferences for same-race faces extend beyond the African versus Caucasian contrast in 3-month-old infants. Infancy. 2007:87–95. doi: 10.1080/15250000709336871. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kelly DJ, Quinn PC, Slater AM, Lee K, Ge L, Pascalis O. The other-race effect develops during infancy: Evidence of perceptual narrowing. Psychological Science. 2007:1084–1089. doi: 10.1111/j.1467-9280.2007.02029.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kelly DJ, Quinn PC, Slater AM, Lee K, Gibson A, Smith M, Pascalis O. Three-month-olds, but not newborns, prefer own-race faces. Developmental Science. 2005:F31–F36. doi: 10.1111/j.1467-7687.2005.0434a.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kovack-Lesh KA, Horst J, Oakes LM. The cat is out of the bag: The joint influence of previous experience and looking behavior on infant categorization. Infancy. 2008:285–307. [Google Scholar]

- Kovack-Lesh KA, Oakes LM, McMurray B. Contributions of attentional style and previous experience to 4-month-old infants’ categorization. Infancy. 2012 doi: 10.1111/j.1532-7078.2011.00073.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu S, Quinn PC, Wheeler A, Xiao N, Ge L, Lee K. Similarity and difference in the processing of same- and other-race faces as revealed by eye tracking in 4- to 9-month-olds. Journal of Experimental Child Psychology. 2010a:180–189. doi: 10.1016/j.jecp.2010.06.008.

- Liu S, Quinn PC, Wheeler A, Xiao N, Ge L, Lee K. Similarity and difference in the processing of same- and other-race faces as revealed by eye tracking in 4- to 9-month-olds. Journal of Experimental Child Psychology. 2010b. doi: 10.1016/j.jecp.2010.06.008.

- Madole KL, Oakes LM. Making sense of infant categorization: Stable processes and changing representations. Developmental Review. 1999:263–296.

- Mandler JM. Seeing is not the same as thinking: Commentary on “Making sense of infant categorization”. Developmental Review. 1999:297–306.

- Mareschal D, French RM, Quinn PC. A connectionist account of asymmetric category learning in early infancy. Developmental Psychology. 2000:635–645. doi: 10.1037/0012-1649.36.5.635.

- Mareschal D, Quinn PC. Categorization in infancy. Trends in Cognitive Sciences. 2001:443–450. doi: 10.1016/s1364-6613(00)01752-6.

- Nelson CA. The development and neural bases of face recognition. Infant and Child Development. 2001:3–18.

- Oakes LM, Coppage DJ, Dingel A. By land and by sea: The role of perceptual similarity in infants’ categorization of animals. Developmental Psychology. 1997:396–407. doi: 10.1037//0012-1649.33.3.396.

- Oakes LM, Ribar R. A comparison of infants’ categorization in paired and successive presentation familiarization tasks. Infancy. 2005:85–98. doi: 10.1207/s15327078in0701_7.

- Oakes LM, Spalding TS. The role of exemplar distribution in infants’ differentiation of categories. Infant Behavior and Development. 1997:457–475.

- Pascalis O, de Haan M, Nelson CA. Is face processing species-specific during the first year of life? Science. 2002:1321–1322. doi: 10.1126/science.1070223.

- Pascalis O, Kelly DJ. The origins of face processing in humans: Phylogeny and ontogeny. Perspectives on Psychological Science. 2009:200–209. doi: 10.1111/j.1745-6924.2009.01119.x.

- Pascalis O, Scott LS, Kelly DJ, Shannon RW, Nicholson E, Coleman M, Nelson CA. Plasticity of face processing in infancy. Proceedings of the National Academy of Sciences of the United States of America. 2005:5297. doi: 10.1073/pnas.0406627102.

- Pauen S. Early differentiation within the animate domain: Are humans something special? Journal of Experimental Child Psychology. 2000:134–151. doi: 10.1006/jecp.1999.2530.

- Quinn PC, Doran MM, Reiss JE, Hoffman JE. Time course of visual attention in infant categorization of cats versus dogs: Evidence for a head bias as revealed through eye tracking. Child Development. 2009:151–161. doi: 10.1111/j.1467-8624.2008.01251.x.

- Quinn PC, Eimas PD. Perceptual cues that permit categorical differentiation of animal species by infants. Journal of Experimental Child Psychology. 1996:189–211. doi: 10.1006/jecp.1996.0047.

- Quinn PC, Eimas PD, Rosenkrantz S. Evidence for representations of perceptually similar natural categories by 3-month-old and 4-month-old infants. Perception. 1993:463–475. doi: 10.1068/p220463.

- Quinn PC, Uttley L, Lee K, Gibson A, Smith M, Slater AM, Pascalis O. Infant preference for female faces occurs for same- but not other-race faces. Journal of Neuropsychology. 2008:15–26. doi: 10.1348/174866407x231029.

- Quinn PC, Yahr J, Kuhn A, Slater AM, Pascalis O. Representation of the gender of human faces by infants: A preference for female. Perception. 2002:1109–1121. doi: 10.1068/p3331.

- Rakison DH, Butterworth GE. Infants’ attention to object structure in early categorization. Developmental Psychology. 1998:1310–1325. doi: 10.1037//0012-1649.34.6.1310.

- Rasband WS. ImageJ. U.S. National Institutes of Health; Bethesda, Maryland, USA: 1997-2001. http://imagej.nih.gov/ij/

- Saffran JR. What can statistical learning tell us about infant learning? In: Woodward A, Needham A, editors. Learning and the infant mind. Oxford University Press; Oxford: 2009.

- Scott LS, Monesson A. The origin of biases in face perception. Psychological Science. 2009:676–680. doi: 10.1111/j.1467-9280.2009.02348.x.

- Scott LS, Shannon RW, Nelson CA. Neural correlates of human and monkey face processing in 9-month-old infants. Infancy. 2006:171–186. doi: 10.1207/s15327078in1002_4.

- Shaddy DJ, Colombo J. Developmental changes in infant attention to dynamic and static stimuli. Infancy. 2004:355–365.

- Smith LB, Jones S, Landau B, Gershkoff-Stowe L, Samuelson LK. Object name learning provides on-the-job training for attention. Psychological Science. 2002:13–19. doi: 10.1111/1467-9280.00403.

- Spelke ES. Nativism, empiricism, and the origins of knowledge. Infant Behavior and Development. 1998:181.

- Spencer J, Quinn PC, Johnson MH, Karmiloff-Smith A. Heads you win, tails you lose: Evidence for young infants categorizing mammals by head and facial attributes. Early Development and Parenting. 1997:113–126.

- Stark L, Bowyer K. Function-based generic recognition for multiple object categories. CVGIP: Image Understanding. 1994:1–21.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Younger BA. The segregation of items into categories by ten-month-old infants. Child Development. 1985:1574–1583.

- Younger BA, Cohen LB. Developmental change in infants’ perception of correlations among attributes. Child Development. 1986:803–815.