Abstract

A major challenge for decision theory is to account for the instability of expressed preferences across time and context. Such variability could arise from specific properties of the brain system used to assign subjective values. Growing evidence has identified the ventromedial prefrontal cortex (VMPFC) as a key node of the human brain valuation system. Here, we first replicate this observation with an fMRI study in humans showing that subjective values of painting pictures, as expressed in explicit pleasantness ratings, are specifically encoded in the VMPFC. We then establish a bridge with monkey electrophysiology, by comparing single-unit activity evoked by visual cues between the VMPFC and the orbitofrontal cortex. At the neural population level, expected reward magnitude was only encoded in the VMPFC, which also reflected subjective cue values, as expressed in Pavlovian appetitive responses. In addition, we demonstrate in both species that the additive effect of prestimulus activity on evoked activity has a significant impact on subjective values. In monkeys, the factor dominating prestimulus VMPFC activity was trial number, which likely indexed variations in internal dispositions related to fatigue or satiety. In humans, prestimulus VMPFC activity was externally manipulated through changes in the musical context, which induced a systematic bias in subjective values. Thus, the apparent stochasticity of preferences might relate to the VMPFC automatically aggregating the values of contextual features, which would bias subsequent valuation because of temporal autocorrelation in neural activity.

Keywords: decision making, electrophysiology, fMRI, neuroeconomics, reward, ventromedial prefrontal cortex

Introduction

Decision theory in its elementary form assumes that, when making a choice, individuals compare the values of available options and select the highest (Samuelson, 1938; Von Neumann and Morgenstern, 1944). The assignment of subjective values on a common scale is therefore a key stage of the decision process. Yet subjective values, expressed through ratings or choices in the face of seemingly identical conditions, appear to be unreliable: they vary over very short periods of time, and can be easily manipulated, as seen in priming and framing effects (Kahneman and Tversky, 2000; McFadden, 2005; Bardsley et al., 2009). One source of this behavioral variability might be inherent to the biological properties of the brain system that we use to compute subjective value (Fehr and Rangel, 2011). Previous studies have identified the ventromedial prefrontal cortex (VMPFC) as providing a common neural currency for subjective valuation (Rangel et al., 2008; Haber and Knutson, 2010; Peters and Büchel, 2010; Levy and Glimcher, 2012; Bartra et al., 2013). VMPFC activity has been shown to encode the subjective values of various items (such as food, faces, paintings, or music) that were expressed in choice or rating behavior (Plassmann et al., 2007; Chib et al., 2009; Lebreton et al., 2009; Salimpoor et al., 2013). Thus, subjective valuation might reflect specific properties of VMPFC activity, which might be integrated in economic models for a better account of choice.

One key property of neural signals is their autocorrelation over time (Arieli et al., 1996). Spontaneous fluctuations in brain activity can account for trial-to-trial variations in the neural response elicited by identical stimuli (Fox et al., 2006; Becker et al., 2011), as well as in perceptual decisions and cognitive performance (Boly et al., 2007; Sadaghiani et al., 2009; Coste et al., 2011; Zhang et al., 2014). These considerations lead to the suggestion that trial-to-trial variations in prestimulus activity might persist into the stimulus-evoked response, thereby influencing the behavioral response. Prestimulus activity has been shown previously to predict choices that were independent of subjective value (Bode et al., 2012; Padoa-Schioppa, 2013; Soon et al., 2013). Here, we tested the mechanistic hypothesis that subjective values expressed in our behavioral measurements are related to the absolute level of evoked activity in the VMPFC, which in turn reflects the activity level in the period just preceding stimulus presentation (see Fig. 1). In an effort to extend this hypothesis, we intended to identify possible sources of baseline fluctuations, beyond mere stochasticity. Building on the notion that the VMPFC automatically encodes the values of various contextual features (Lebreton et al., 2009; Levy et al., 2011), we tested the assumption that these additional values might be incorporated in the ongoing VMPFC activity and thereby influence subsequent value judgments. In doing so, we intended to make sure that our results would not depend on a particular experimental approach by examining both the hemodynamic signal recorded with fMRI in humans and the electrophysiological activity of single neurons recorded in monkeys.

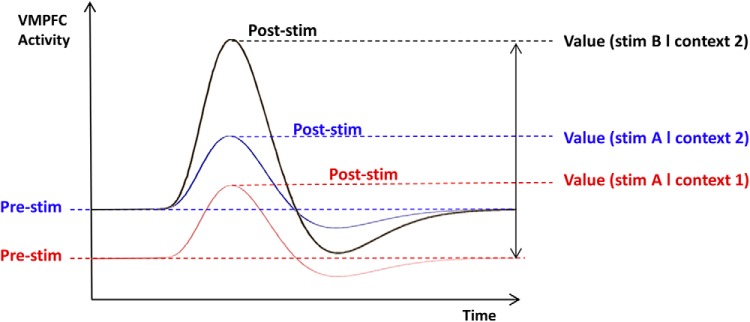

Figure 1.

Graphical explanation of how a contextual factor impacts subjective value. As usually assumed, a change of stimulus (stim B in black vs stim A in blue) modulates the magnitude of the VMPFC evoked response and, therefore, the subjective value assigned to the stimulus. The original hypothesis is that a difference in context (context 2 in blue vs context 1 in red) induces a shift in VMPFC baseline activity (Pre-stim), which would persist into poststimulus activity. This would impact the subjective value given to the same stimulus A because subjective value is encoded in the absolute peak of the evoked response, and not in the differential from baseline.

Materials and Methods

Monkeys

This study is a reanalysis of the data collected and previously reported by Bouret and Richmond (2010).

Animals.

Two male rhesus monkeys (Monkey D, 9.5 kg; and Monkey T, 6.5 kg) were used. The experimental procedures followed the Association for Assessment and Accreditation of Laboratory Animal Care Guide for the Care and Use of Laboratory Animals and were approved by the National Institute of Mental Health Animal Care and Use Committee.

Behavioral procedures.

Each monkey squatted in a primate chair positioned in front of a monitor on which visual stimuli were displayed. A touch-sensitive bar was mounted on the chair at the level of the monkey's hands. Liquid rewards were delivered from a tube positioned with care between the monkey's lips but away from the teeth. With this placement of the reward tube, monkeys did not need to protrude their tongue to receive rewards. The tube was equipped with a force transducer to monitor the movement of the lips (referred to as “lipping” as opposed to licking, which we reserve for the situation in which tongue protrusion is needed). Before each experiment, the amplitude of the signal evoked by the delivery of a drop of water through the spout was checked to ensure that it matched the observed lipping response.

Monkeys were trained to perform the task depicted in Figure 2a. Each trial began when the monkey touched the bar. One of three visual cues appeared, followed 500 ms later by the appearance of a red target (wait signal) in the center of the cue. After a random interval of 500–1500 ms, the target turned green (go signal). If the monkey released the touch bar 200–800 ms after the green target appeared, the target turned blue (feedback signal) and a liquid reward was delivered 400–600 ms later. The reward sizes of one, two, or four drops of liquid were related to the cues. If the monkey released the bar before the go signal appeared or after the go signal disappeared, an error was registered. No explicit punishment was given for an error in either condition, but the monkey had to perform a correct trial to move on in the task. That is, the monkey had to repeat the same trial with a given reward size until the trial was completed correctly.

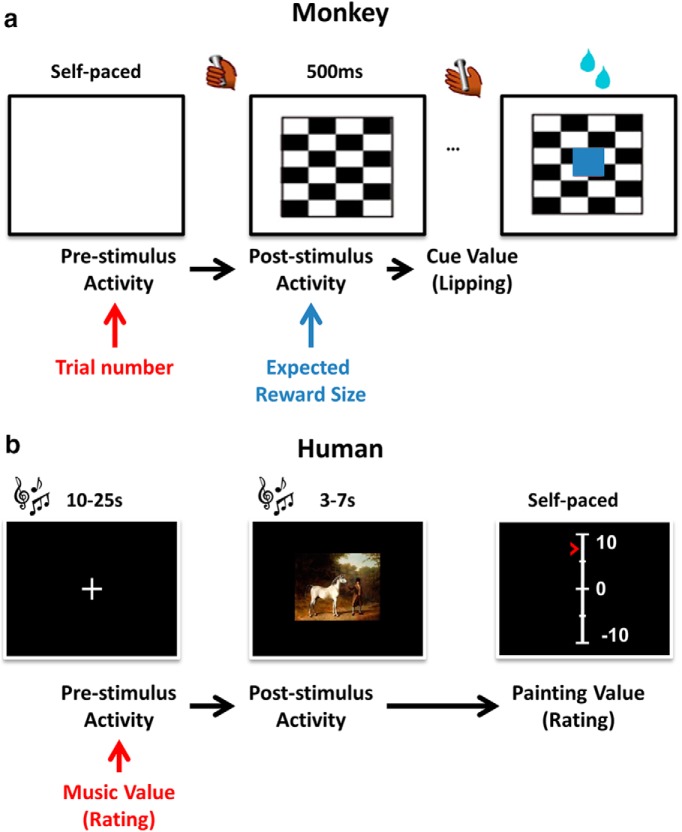

Figure 2.

Behavioral tasks and models. Tasks are illustrated with the successive screenshots displayed during a given trial. The links in the models below represent significant linear dependency. a, Once the monkey touched the bar, one of three visual cues (checkerboards) appeared, indicating reward size. Then a go signal appeared on screen (image not shown), and the monkey released the bar and received the reward accompanied by a visual feedback (blue square). b, After a long interval accompanied by a random musical context, a new painting was displayed on screen. Then subjects moved a cursor on an analog scale to indicate how much they liked the painting (regardless of musical context).

Data acquisition and analysis.

The lipping signal was monitored continuously and digitized at 1 kHz. For each trial, the latency of lipping response onset after cue onset was defined as the first of three successive windows in which the signal displayed a consistent increase in voltage of at least 2 SDs from a reference epoch of 250 ms taken right before cue onset. To avoid any confound due to differences in the way the tube was positioned between the monkey's lips from one day to another, lipping response latencies for each day were z-scored. Our lipping index is equal to minus lipping onset latency. The link between lipping index and the choice to perform the task (correct vs error trial) was assessed using logistic regression.
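The onset rule and the lipping index described above can be sketched as follows. This is a minimal illustration, not the original analysis code: the window length (`win_ms`, set here to 10 ms), the use of non-overlapping windows, the comparison of each window's mean to the threshold, and all function names are assumptions, since the text specifies only the 1 kHz sampling rate, the 250 ms reference epoch, the 2 SD criterion, and the three-window requirement.

```python
from statistics import mean, pstdev


def lipping_onset_latency(signal, cue_idx, fs=1000, ref_ms=250,
                          win_ms=10, n_win=3, n_sd=2.0):
    """Return lipping onset latency in ms after cue onset, or None.

    signal  : list of voltage samples digitized at fs Hz
    cue_idx : sample index of cue onset
    win_ms  : ASSUMED window length (not given in the text)
    """
    ref_len = int(ref_ms * fs / 1000)
    win_len = int(win_ms * fs / 1000)
    # Reference epoch: the 250 ms immediately before cue onset
    ref = signal[cue_idx - ref_len:cue_idx]
    threshold = mean(ref) + n_sd * pstdev(ref)
    # Mean voltage in consecutive non-overlapping windows after the cue
    n_total = (len(signal) - cue_idx) // win_len
    above = [
        mean(signal[cue_idx + k * win_len:cue_idx + (k + 1) * win_len]) > threshold
        for k in range(n_total)
    ]
    # Onset = first window that starts a run of n_win supra-threshold windows
    for k in range(n_total - n_win + 1):
        if all(above[k:k + n_win]):
            return k * win_len * 1000 / fs
    return None


def zscore(values):
    """Z-score a list of values (population SD; an assumption)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def lipping_index(latencies_one_day):
    """Lipping index = minus the onset latency, z-scored within one day."""
    return [-z for z in zscore(latencies_one_day)]
```

With this sign convention, faster lipping (shorter latency) yields a larger index, so the index increases with the inferred cue value.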

After initial behavioral training, a magnetic resonance (MR) image at 1.5T was obtained to determine the placement of the recording well. Then, a sterile surgical procedure was performed under general isoflurane anesthesia in a fully equipped and staffed surgical suite to place the recording well and head fixation post. The well was positioned so that the anteroposterior position was at the level of the genu of the corpus callosum, and laterally with an angle of 20° from the vertical in the coronal plane so that a straight electrode through the well would intersect the VMPFC (see Fig. 4a). Electrophysiological recordings were made with tungsten microelectrodes. The electrode was positioned using a stereotaxic plastic insert with holes 1 mm apart in a rectangular grid (Crist Instruments; 6-YJD-j1). The electrode was inserted through a guide tube. After several recording sessions, MR scans were obtained with the electrode at one of the recording sites; the positions of the recording sites were reconstructed based on relative position in the stereotaxic plastic insert and on the alternation of white and gray matter based on electrophysiological criteria during recording sessions. As seen in Figure 4a, VMPFC neurons were recorded in areas 10 and 14 (Carmichael and Price, 1994) and were mostly located along the medial wall. Orbitofrontal cortex (OFC) neurons were recorded more laterally along the orbital surface, in areas 11 and 13.

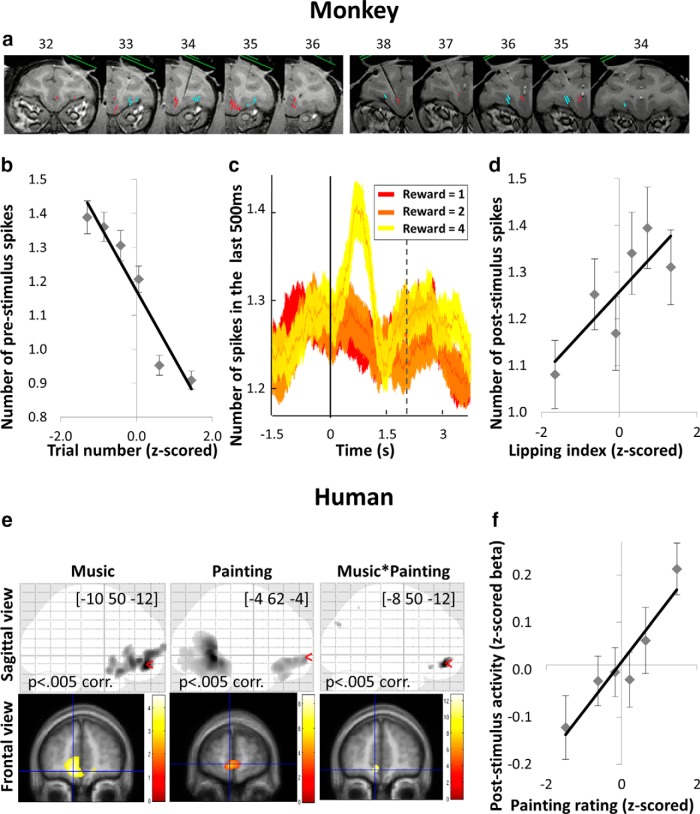

Figure 4.

Encoding of value-related factors in the VMPFC. a, Localization of recorded neurons (red represents VMPFC; blue represents OFC) in Monkey 1 (left) and Monkey 2 (right). Numbers indicate the distance in millimeters from the interaural line in the rostrocaudal axis. b, Evolution of prestimulus activity (number of spikes in the last 500 ms before cue onset) as trial number increases. Dots indicate 773 trials, ranked according to z-scored trial number. Error bars indicate intertrial SEM. c, Encoding of reward level over time around cue onset. At each time point, graph represents the average number of spikes over the last 500 ms. Solid and dashed lines indicate cue onset and reward delivery, respectively. d, Correlation between lipping index and poststimulus activity (number of spikes in the first 500 ms after cue onset). Dots indicate 773 trials, ranked according to lipping index. Error bars indicate intertrial SEM. e, Encoding of pleasantness rating in the VMPFC. x, y, z coordinates of the maxima refer to the MNI space. Color scales on the right indicate t values. A voxelwise threshold of p < 0.005 was used for displaying purposes. Top, Sagittal glass brain. Bottom, Frontal slice. Left, Correlation with music pleasantness over the duration of music display. Middle, Correlation with painting pleasantness 4 s after painting onset. Right, Conjunction between painting and music pleasantness. f, Correlation of painting pleasantness with poststimulus activity in independently defined ROI for VMPFC. Dots indicate 10 trials per subject, ranked according to painting rating. Error bars indicate intersubject SEM.

Because we collected lipping data for a limited number of neurons in the OFC (n = 22), we only report analyses concerning lipping index for VMPFC neurons (n = 84). However, we included data from all neurons (OFC: n = 93; VMPFC: n = 104) in our analysis of reward level and trial number encoding. All of the neurons recorded were included in the analyses whether they showed sensitivity to the task conditions or not. For characterizing firing and lipping activity, we used data from nonrepeated correct trials only; data from incorrect trials were excluded, as were trials that followed incorrect trials, because in this last case the cue was not informative to the monkey. At each time point, electrophysiological activity was calculated as the number of spikes recorded during the preceding 500 ms. Prestimulus activity was taken to be the spike count in the 500 ms preceding cue onset. Poststimulus activity was taken to be the spike count in the 500 ms following cue onset. Two sorts of regressions were performed across trials: (1) against the spike count for each individual neuron separately, and (2) to estimate the population activity, against the spike counts from all of the neurons (pooled as if they were recorded from the same single cell). Both spike counts and lipping data were z-scored on a per monkey basis to put the data on a scale that is relatively independent of individual differences. In the firing time-series (see Fig. 6a), a median-split on lipping index was done separately for each reward level. Therefore, each of the two time series includes the same number of low, medium, and large reward trials.
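The three spike-count measures defined above (sliding count over the preceding 500 ms, prestimulus count, poststimulus count) can be illustrated with a short sketch. The function names and the half-open interval convention (a spike exactly at the window start is counted, one exactly at the window end is not) are assumptions made for the example.

```python
from bisect import bisect_left


def spike_count(spike_times_ms, start_ms, stop_ms):
    """Number of spikes with start_ms <= t < stop_ms.

    spike_times_ms must be sorted in ascending order.
    """
    return bisect_left(spike_times_ms, stop_ms) - bisect_left(spike_times_ms, start_ms)


def pre_post_counts(spike_times_ms, cue_ms, window_ms=500):
    """Prestimulus and poststimulus spike counts around cue onset."""
    pre = spike_count(spike_times_ms, cue_ms - window_ms, cue_ms)
    post = spike_count(spike_times_ms, cue_ms, cue_ms + window_ms)
    return pre, post


def sliding_counts(spike_times_ms, time_points_ms, window_ms=500):
    """At each time point, the spike count over the preceding window."""
    return [spike_count(spike_times_ms, t - window_ms, t) for t in time_points_ms]


# Hypothetical spike train (ms) with cue onset at 1000 ms
spikes = [100, 400, 900, 1000, 1200, 1499, 1600]
pre, post = pre_post_counts(spikes, cue_ms=1000)
```

Because the prestimulus and poststimulus windows do not overlap, any correlation between the two counts reflects persistence of activity rather than shared spikes.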

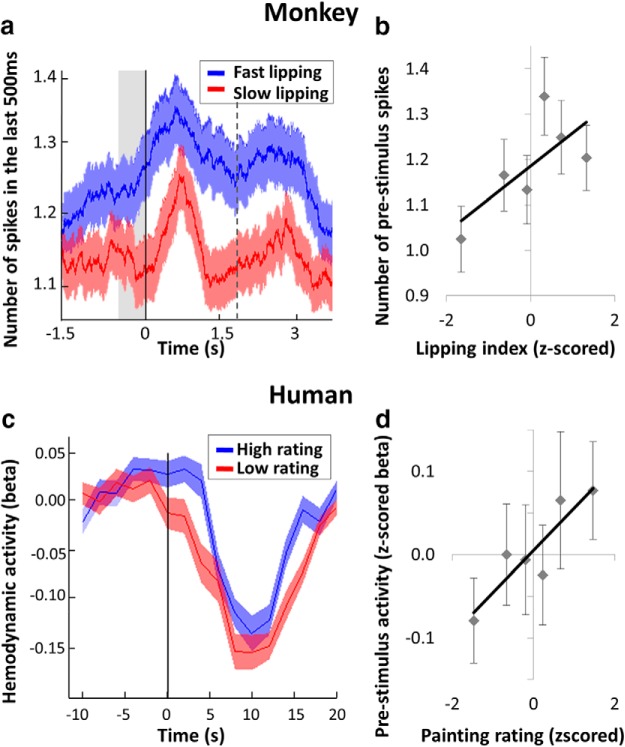

Figure 6.

Time course of value encoding in the VMPFC. a, Time course of peristimulus electrophysiological signal (number of spikes over the last 500 ms) in the VMPFC, shown separately for slow and fast lipping trials. Solid and dashed lines indicate cue onset and reward delivery, respectively. b, Correlation between prestimulus activity (spikes in the last 500 ms before cue onset) and lipping index. Dots indicate 773 trials, ranked according to lipping index. Error bars indicate intertrial SEM. c, Time course of peristimulus fMRI activity in the VMPFC ROI, shown separately for high and low rating trials. Black vertical line indicates painting onset. d, Correlation between painting ratings and regression coefficients (betas) extracted in the ROI at painting onset. Dots indicate 10 trials, ranked according to painting rating and averaged across subjects. Error bars indicate intersubject SEM.

Unless otherwise indicated, values labeled as β indicate coefficients obtained for multiple linear regression models that were estimated using the robustfit function of MATLAB, which underweights large outliers. The p values of β coefficients were estimated using bilateral t tests. All regressions were performed using the full dataset; bins shown on figures were made for display purposes only. All statistical analyses were performed with MATLAB Statistical Toolbox (MATLAB R2006b, MathWorks).

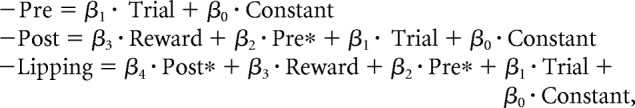

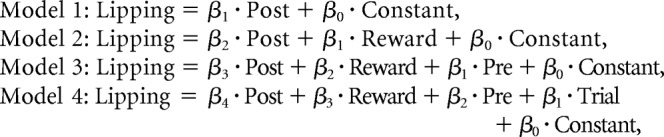

Neuro-behavioral model.

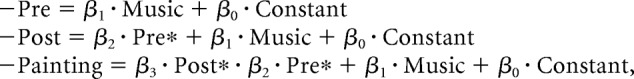

Our model assumes that subjective value (lipping index) is encoded in VMPFC poststimulus activity, which mediates the effect of both reward level and prestimulus activity, which itself is modulated by contextual factors, such as trial number (see Fig. 2a). The nodes in the model represent from left to right the independent variable (contextual factor) and then a succession of three dependent variables (prestimulus activity, poststimulus activity, and cue value). Arrows indicate linear dependencies. To test such a model, we needed to show that each dependent variable (1) integrates some variability induced by the previous node and (2) adds some predictive power in explaining the next node. We therefore used an iterative multiple regression procedure, adjusting the new variable for previous effects at each step. This means removing the variance explained by the previous nodes, with the following orthogonalization procedure. For instance, to adjust prestimulus activity (Pre) for trial number (Trial), first we regressed Pre against Trial to estimate the intercept â and slope b̂ that satisfy Pre = a + b * Trial; then we used these estimates, â and b̂, to remove the variance explained by Trial: Pre* = Pre − (â + b̂ * Trial). The successive multiple regression models were therefore as follows:

|
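Written out under the definitions of Pre* and Post* given below, and assuming ordinary linear forms with hypothetical coefficient labels and error terms, the three successive regressions can be sketched as:

```latex
\begin{aligned}
\mathrm{Pre}     &= \beta_0 + \beta_1\,\mathrm{Trial} + \varepsilon \\
\mathrm{Post}    &= \beta_0 + \beta_1\,\mathrm{Reward} + \beta_2\,\mathrm{Trial}
                    + \beta_3\,\mathrm{Pre}^{*} + \varepsilon \\
\mathrm{Lipping} &= \beta_0 + \beta_1\,\mathrm{Reward} + \beta_2\,\mathrm{Trial}
                    + \beta_3\,\mathrm{Pre}^{*} + \beta_4\,\mathrm{Post}^{*} + \varepsilon
\end{aligned}
```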

Where Pre* is prestimulus VMPFC activity adjusted for trial number effect and Post* the VMPFC poststimulus activity adjusted for reward level, trial number, and prestimulus activity (Pre*).
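The adjustment step (Pre* = Pre − (â + b̂ * Trial)) amounts to keeping the residuals of a simple regression. The sketch below uses ordinary least squares for brevity, which is a substitution: the analyses in the text relied on robust regression (robustfit). Function names are illustrative.

```python
from statistics import mean


def ols_fit(x, y):
    """Ordinary least-squares intercept and slope for y = a + b * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    return a, b


def adjust(y, x):
    """Remove from y the variance linearly explained by x.

    Returns y* = y - (a_hat + b_hat * x), i.e. the regression residuals.
    """
    a, b = ols_fit(x, y)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]


# Hypothetical data: prestimulus activity drifting with trial number
trial = [1, 2, 3, 4, 5]
pre = [2.0, 2.9, 4.2, 4.8, 6.1]
pre_star = adjust(pre, trial)  # Pre adjusted for Trial
```

With least squares, the adjusted variable is uncorrelated with the regressor by construction, so any predictive power of Pre* at the next step cannot be attributed to trial number.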

Because trial number could have been a proxy for either fatigue or satiety, we tested whether using cumulated reward (a more direct proxy for satiety) or cumulated time (a better proxy for fatigue) would improve the fit of our regression model. As Bayesian model comparison showed no significant improvement (trial number: ρ = 0.21; cumulated reward: ρ = 0.21; cumulated time: ρ = 0.58), we preferred to keep trial number for our contextual factor, so as to avoid biasing the interpretation in favor of either satiety or fatigue.

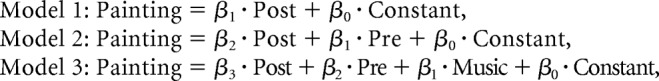

To test for mediation effects formally, we compared the following four nested models of lipping behavior (without orthogonalization of regressors):

|

The rationale is that, if VMPFC poststimulus activity mediates the effects of all the other factors, then adding these other factors as regressors should not improve the model fit beyond the cost of model complexity (due to adding free parameters); therefore, the simplest model (Model 1) should win the comparison. All models were first inverted using a variational Bayes approach under the Laplace approximation (Daunizeau et al., 2014). This iterative algorithm provides approximations for both the model evidence and the posterior density of free parameters. Because model evidence is difficult to track analytically, it is approximated using variational free energy (Friston and Stephan, 2007). This measure has been shown to provide a better proxy for model evidence compared with other approximations such as Bayesian Information Criterion or Akaike Information Criterion (Penny, 2012). In intuitive terms, the model evidence is the probability of observing the data given the model. This probability corresponds to the marginal likelihood, which is the integral over the parameter space of the model likelihood weighted by the priors on free parameters. This probability increases with the likelihood (which measures the accuracy of the fit) and is penalized by the integration over the parameter space (which measures the complexity of the model). The model evidence thus represents a trade-off between accuracy and complexity and can guide model selection. The log evidence was then converted to posterior probability (ρ), which is the probability that the model generated the data. This quantity must be compared with chance level (one over the number of models in the search space).
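The last step, converting log evidence into posterior model probability and comparing it with chance level, can be sketched as follows. This assumes a flat prior over models (consistent with a chance level of one over the number of models) and takes the log evidences as given, for instance free-energy approximations; it does not reproduce the variational Bayes inversion itself, and the function name is hypothetical.

```python
import math


def posterior_model_probabilities(log_evidences, priors=None):
    """Posterior probability of each model given its log evidence.

    priors defaults to a flat prior over models (an assumption).
    """
    n = len(log_evidences)
    if priors is None:
        priors = [1.0 / n] * n
    log_joint = [le + math.log(p) for le, p in zip(log_evidences, priors)]
    # Subtract the maximum before exponentiating for numerical stability
    m = max(log_joint)
    weights = [math.exp(v - m) for v in log_joint]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical log evidences for four nested models
rho = posterior_model_probabilities([-1200.0, -1203.0, -1205.0, -1206.0])
chance = 1.0 / len(rho)  # chance level: one over the number of models
```

Only differences in log evidence matter: adding a constant to every model's log evidence leaves the posterior probabilities unchanged.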

Humans

Subjects.

The study was approved by the Ethics Committee for Biomedical Research of the Pitié-Salpêtrière Hospital, where the study was conducted. Subjects were recruited via the Relais d'Information en Sciences Cognitives website and screened for exclusion criteria: age <18 or >39 years, regular use of drugs or medications, history of psychiatric or neurological disorders, and contraindications to MRI scanning (pregnancy, claustrophobia, metallic implants). Subjects were paid 100 € for their participation in the experiment. All subjects gave informed consent before partaking in the study. We scanned 27 subjects: 15 males (age 25.6 ± 2.8 years) and 12 females (age 24.6 ± 3.5 years). None of them had any neurological or psychiatric disease history. One subject was excluded from this study for declaring he had rated the stimuli at random in the scanner, which was corroborated by the very low consistency between his ratings and choices (42%). The remaining 26 subjects were all included in the behavioral results. Two subjects were excluded from fMRI analyses because they had excessive head movements in the scanner, leaving a total of 24 subjects.

Stimuli.

We used 120 painting images that were gathered from the internet and used in a previous study (Lebreton et al., 2009). They were chosen to cover a large variety of styles, such that different esthetic tastes could be expressed, for example, in terms of modern versus older periods as far back as the Middle Ages. We controlled that size and luminance were approximately matched between painting images. The 120 paintings were randomly assigned to the six scanning blocks, such that each block used 20 new stimuli.

We used 60 music extracts of 30 s each, to cover a large variety of styles, tonalities, and instruments (from medieval Gregorian polyphonies to modern hardcore grind music). To avoid dealing with confounding effects that could arise if there were lyrics, all extracts were strictly instrumental. We controlled that maximal sound volume was the same for all extracts. Music extracts were randomly assigned to the three musical blocks.

Tasks.

Tasks were programmed on a PC using the Cogent 2000 (Wellcome Department of Imaging Neuroscience, London) library of MATLAB functions for stimulus presentation.

Subjects completed six blocks of a pleasantness rating task in the MRI scanner. They were warned from the start that some blocks would contain music, whereas others would not. They were also instructed to ignore any musical context while assigning a pleasantness rating to the painting. All trials started with the appearance of a fixation cross that stayed on screen for a long delay (drawn from a uniform distribution between 10 and 25 s), and then the painting was displayed for a fixed duration of 5 s. In the musical condition, music started with the fixation cross and ended when the rating scale was displayed. The rating scale had 21 steps (10 values below and above the center, from very unpleasant to very pleasant), but only five reference graduations were shown. Subjects could move the cursor by pressing a button with the right index finger to go up or with the right middle finger to go down. They had to press a button with the left index finger to validate their responses and go to the next trial. The initial position of the cursor on the scale was randomized to avoid confounding pleasantness with movements. During this first session, painting pleasantness ratings were neutral on average but extended over a wide range along the scale (mean 0.08 ± 7.78).

After scanning, subjects were asked to rate the musical extracts they had previously heard along three dimensions: pleasantness, joyfulness, and familiarity. Musical extracts were presented in the same order and for the same duration as in the scanner. All trials started with the appearance of a fixation cross that stayed on screen while the music was played, and then subjects had to use the same scale as in the previous task to rate the pleasantness of the music they had just heard, then its joyfulness, and then its familiarity. Joyfulness and familiarity ratings were not used in this study because they did not show any correlation with the pleasantness ratings assigned to paintings in the scanner.

To check the reliability of the painting ratings further, subjects performed a choice task, in which two paintings were shown side by side, following a fixation cross. The relative position of the two paintings on the screen was randomized. Subjects were asked to indicate their preference by pressing one of two buttons corresponding to the left and right stimuli. Subjects performed 60 easy choices, then 60 hard choices, in a random order. Easy choices were those in which pictures ranked n were compared with pictures ranked n + 10, and hard choices were those in which pictures ranked n were compared with pictures ranked n + 1. We recorded not only choices but also response times (delays between picture presentation and button press).

Functional magnetic resonance imaging.

T2*-weighted echo planar images (EPIs) were acquired with BOLD contrast on a 3.0 Tesla magnetic resonance scanner. We used a tilted plane acquisition sequence designed to optimize functional sensitivity in the medial temporal lobes (Deichmann et al., 2003). To cover the whole brain with good spatial resolution, we used the following parameters: TR = 2 s, 35 slices, 2.5 mm slice thickness, 1.5 mm interslice gap. T1-weighted structural images were also acquired, coregistered with the mean EPI, normalized to a standard T1 template, and averaged across subjects to allow group level anatomical localization. EPI data were analyzed in an event-related manner, within a GLM, using the statistical parametric mapping software SPM8 (Wellcome Trust Centre for Neuroimaging, London) implemented in MATLAB. The first five volumes of each session were discarded to allow for T1 equilibration effects. Preprocessing consisted of spatial realignment, normalization using the same transformation as structural images, and spatial smoothing using a Gaussian kernel with a FWHM of 8 mm.

We used four GLMs to explain individual-level time series. To search for neural correlates of painting ratings, we preferred finite impulse response (FIR) models over hemodynamic response function (HRF) models, because FIR models allow analyzing both prestimulus and poststimulus activity.

A FIR model was built for whole-brain search of voxels encoding painting ratings (see Fig. 4e, middle). Each time point, from 5 TR (10 s) before to 6 TR (12 s) after painting onset, was parametrically modulated by painting pleasantness ratings.
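The structure of such a FIR design can be sketched as follows: one indicator regressor per time bin from −5 to +6 TR around painting onset, each paired with a rating-modulated copy. The sketch assumes that onsets fall on the scan grid and that ratings are mean-centered before modulation; both are illustrative simplifications, and the function and column names are hypothetical rather than taken from SPM.

```python
def fir_design(n_scans, onsets_scan, ratings, lags=range(-5, 7)):
    """Build FIR regressors with parametric modulation by rating.

    n_scans     : number of volumes in the session
    onsets_scan : painting onsets, in scan (TR) units (ASSUMED on the grid)
    ratings     : one pleasantness rating per painting
    Returns (columns, names); each column is a list of length n_scans.
    """
    mean_rating = sum(ratings) / len(ratings)
    centered = [r - mean_rating for r in ratings]  # ASSUMED mean-centering
    columns, names = [], []
    for lag in lags:
        main = [0.0] * n_scans       # indicator: a trial is at this lag
        modulated = [0.0] * n_scans  # same indicator scaled by the rating
        for onset, c in zip(onsets_scan, centered):
            t = onset + lag
            if 0 <= t < n_scans:
                main[t] += 1.0
                modulated[t] += c
        columns += [main, modulated]
        names += [f"fir_lag{lag:+d}", f"fir_lag{lag:+d}_x_rating"]
    return columns, names


# Hypothetical session: 40 scans, two paintings shown at scans 10 and 25
cols, names = fir_design(40, [10, 25], [2.0, -2.0])
```

The coefficient on each rating-modulated column estimates how strongly activity at that time bin scales with the rating, which is what allows prestimulus bins (negative lags) to be tested in the same model as poststimulus bins.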

A second FIR model was built to plot ROI activity for high versus low ratings (see Fig. 6c). Ratings were sorted using a median split within each block. Two different sets of regressors were used for trials with high and low ratings.

A third FIR model was built, this time with each trial treated as a separate regressor, to analyze trial-by-trial variations of the fMRI signal at painting onset. This model was used for correlating ratings with activity in the VMPFC ROI (see Figs. 4f, 5d–f, and 6d).

A final HRF model was built for whole-brain search of voxels encoding music pleasantness (see Fig. 4e, left). This model included stick functions for fixation cross onset, painting onset and rating scale onset, and a boxcar function over music duration. The boxcar function was parametrically modulated by music pleasantness. All the regressors of interest were convolved with both the canonical HRF and its first temporal derivative.

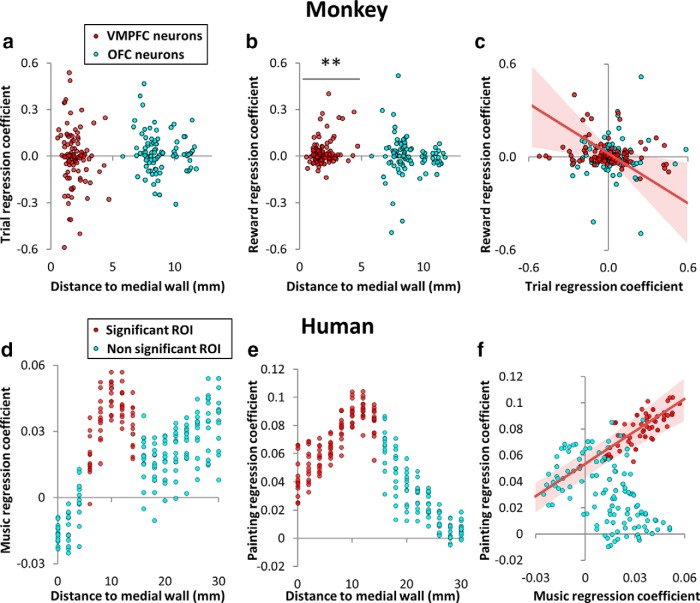

Figure 5.

Value encoding in individual neurons or voxels from medial to lateral prefrontal cortex. Top, Graphs represent, for individual neurons (red represents VMPFC; blue represents OFC), the regression coefficients obtained for encoding trial number in prestimulus activity (a), reward level in poststimulus activity (b), and the correlation between the two (c). **p < 0.01, when the distribution of regression coefficients is significantly shifted away from zero. All recorded neurons are shown according to their x-coordinate (distance along a line orthogonal to the medial wall). Bottom, Graphs represent, for individual voxels (red represents ROI showing significant effect at the group level; blue represents not significant), the regression coefficients (averaged across subjects) obtained for encoding music pleasantness in prestimulus activity (d), painting pleasantness in poststimulus activity (e), and the correlation between the two (f). Voxels were selected by moving the VMPFC ROI along a line orthogonal to the medial wall. For any given distance of x to the medial wall, we represent all voxels of coordinate x = x in an 8-mm-diameter sphere centered on x = x, y = 44, and z = −10 in the MNI space. Trend lines show robust regression fit across VMPFC neurons or voxels. Light red area represents 95% confidence interval of regression estimates.

To correct for motion artifacts, subject-specific realignment parameters were included as covariates of no interest in all models. Linear contrasts of regression coefficients were computed at the subject level and then taken to a group-level random-effect analysis using a one-sample t test. A conjunction analysis (Friston et al., 2005) was performed to find brain regions reflecting both painting and music pleasantness (see Fig. 4e, right). To avoid nonindependence issues (or “double-dipping”), we defined the VMPFC ROI as a 4-mm-radius sphere containing 33 voxels around the peak coordinate of parametric modulation by pleasantness ratings that was observed in a previous study (Lebreton et al., 2009).
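The reported voxel count can be checked against the sphere geometry. The sketch below assumes 2 mm isotropic voxels after normalization, which the text does not state; under that assumption, a 4-mm-radius sphere centered on a voxel contains exactly 33 voxel centers. The function name is hypothetical.

```python
def sphere_voxel_offsets(radius_mm, voxel_mm):
    """Voxel offsets (i, j, k) whose centers lie within radius_mm of the origin.

    voxel_mm is the ASSUMED isotropic voxel size.
    """
    r = int(radius_mm // voxel_mm)
    offsets = []
    for i in range(-r, r + 1):
        for j in range(-r, r + 1):
            for k in range(-r, r + 1):
                # Squared distance of the voxel center from the sphere center
                if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2:
                    offsets.append((i, j, k))
    return offsets


n_voxels = len(sphere_voxel_offsets(radius_mm=4.0, voxel_mm=2.0))
```

Adding each offset, scaled by the voxel size, to the peak coordinate gives the MNI coordinates of the voxels in the ROI.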

The results reported in the main text were taken from the blocks playing musical excerpts. In the blocks without music, whole-brain maps showed significant correlation with pleasantness rating, surviving a corrected statistical threshold, only in visual areas (the same as in the blocks with music). ROI analysis indicated that VMPFC activation with pleasantness rating was much weaker than in musical blocks, although still significant (p = 0.001, uncorrected). This might be due to subjects' mind wandering during long silent delays between task trials, and perhaps valuating other mental representations, which might have affected VMPFC activity. We therefore focused our analysis on musical blocks because this was sufficient to address our question about whether manipulating baseline activity could bias subsequent valuation.

Statistical analysis.

All statistical analyses were performed with MATLAB Statistical Toolbox (MATLAB R2006b, MathWorks). Painting and music ratings, as well as activity levels (β values) were z-scored at the subject level before entering into any statistical analysis. All values noted β indicate coefficients obtained for multiple linear regression models that were estimated using the “robustfit” function of MATLAB which underweights large outliers. To predict choices from pleasantness ratings, we used a logistic regression model. Given the difference in value between the ratings of any two paintings A and B, the probability of choosing A over B was estimated using the softmax rule: P(A) = 1/(1 + exp(−(VA − VB)/β)), with VA and VB the ratings for A and B, respectively. The temperature β captures the randomness of decision-making. This free parameter was adjusted to maximize the probability (or likelihood) of the actual choices under the model. Easy and hard choice conditions were pooled for the logistic regression fit presented in Figure 3c.
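The softmax rule and the likelihood-based adjustment of the temperature can be sketched as follows. The grid search is an illustrative stand-in for whatever optimizer was actually used, and the function names and the grid range (0.1 to 20 in steps of 0.1) are assumptions.

```python
import math


def p_choose_a(v_a, v_b, temperature):
    """Softmax probability of choosing A over B given ratings v_a and v_b."""
    return 1.0 / (1.0 + math.exp(-(v_a - v_b) / temperature))


def neg_log_likelihood(temperature, trials):
    """Negative log-likelihood of observed choices.

    trials: iterable of (v_a, v_b, chose_a) with chose_a a boolean.
    """
    nll = 0.0
    for v_a, v_b, chose_a in trials:
        p = p_choose_a(v_a, v_b, temperature)
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        nll -= math.log(p if chose_a else 1.0 - p)
    return nll


def fit_temperature(trials, grid=None):
    """Temperature maximizing the likelihood, by grid search (ASSUMED method)."""
    if grid is None:
        grid = [0.1 * k for k in range(1, 201)]
    return min(grid, key=lambda t: neg_log_likelihood(t, trials))


# Hypothetical data: A rated 2 points above B, chosen on 3 of 4 occasions
trials = [(2.0, 0.0, True)] * 3 + [(2.0, 0.0, False)]
best_temperature = fit_temperature(trials)
```

A low temperature means choices follow rating differences almost deterministically; a high temperature means choices approach chance regardless of the ratings.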

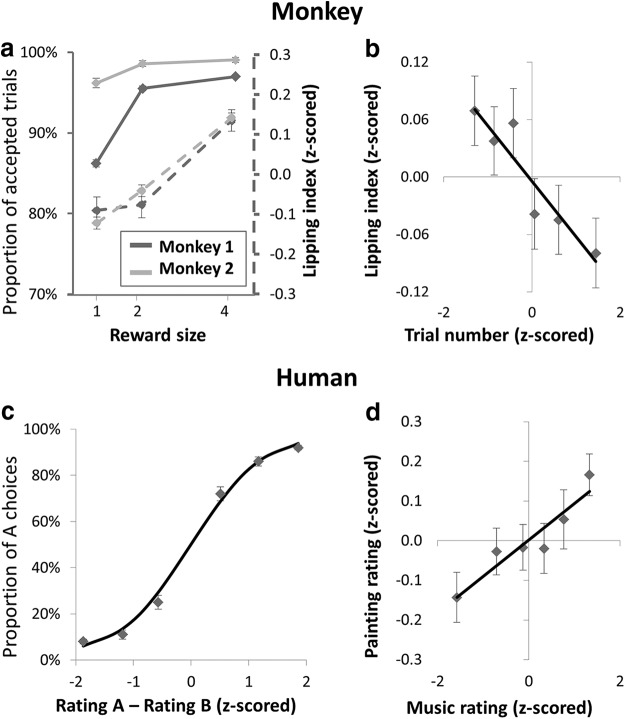

Figure 3.

Behavioral expression of subjective value. a, Proportion of accepted trials (full lines, left axis) and lipping index (dashed lines, right axis) as a function of reward level. Error bars indicate intertrial SEM. b, Lipping index as a function of trial number. Trial number was z-scored, ranked over all recording sessions and binned into data points representing 773 trials each. Straight line indicates linear regression fit. c, Probability of choosing painting A over painting B as a function of the differential between their in-scanner ratings (all choices pooled). Dots indicate 20 choices per subject, ranked according to pleasantness rating difference. Error bars indicate intersubject SEM. Sigmoid line indicates logistic regression fit. d, Correlation between painting and music pleasantness. Dots indicate 10 trials per subject, ranked according to music rating.

Unless otherwise indicated, we report coefficients (β) obtained for multiple linear regression models that were estimated using the “robustfit” function of MATLAB, which underweights large outliers. All regression coefficients were tested against 0 at the group level (across subjects) using two-tailed t tests, and not on the average data points shown in figures (bins shown were made for display purposes only).
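To give an intuition of how a robust fit underweights large outliers, here is a simplified Python sketch of iteratively reweighted least squares with bisquare weights, which is the default weighting scheme of MATLAB's `robustfit` (tuning constant 4.685). It is an assumption-laden illustration rather than a port: it handles a single regressor, estimates the scale from the median absolute residual, and omits the leverage adjustment applied by the MATLAB function. The function names and the toy data are hypothetical.

```python
import math
import statistics

def weighted_line_fit(x, y, w):
    """Weighted least-squares fit of y = intercept + slope * x."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def robust_line_fit(x, y, tune=4.685, n_iter=50):
    """Simplified IRLS with bisquare weights (no leverage correction)."""
    intercept, slope = weighted_line_fit(x, y, [1.0] * len(x))  # ordinary least-squares start
    for _ in range(n_iter):
        resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
        # Robust scale estimate from the median absolute residual
        scale = max(statistics.median([abs(r) for r in resid]) / 0.6745, 1e-12)
        u = [r / (tune * scale) for r in resid]
        # Bisquare weights: smoothly reduced for large residuals, zero beyond the cutoff
        w = [(1.0 - ui ** 2) ** 2 if abs(ui) < 1.0 else 0.0 for ui in u]
        intercept, slope = weighted_line_fit(x, y, w)
    return intercept, slope

# Toy data (not from the study): a line of slope 2 with small wiggles and one gross outlier
x = [float(i) for i in range(10)]
y = [2.0 * xi + 1.0 + 0.1 * math.sin(xi) for xi in x]
y[5] = 91.0
_, ols_slope = weighted_line_fit(x, y, [1.0] * len(x))
_, robust_slope = robust_line_fit(x, y)
```

In this toy case the ordinary least-squares slope is pulled away from 2 by the single outlier, whereas the reweighted fit assigns that point a weight of zero and recovers a slope close to 2.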

Neuro-behavioral model.

The model applied to human data was based on the same mechanism as seen in monkeys (see Fig. 1). The differences were that lipping index was replaced by painting pleasantness rating (as a proxy for subjective value), that trial number was replaced by music pleasantness (as an example of contextual factor), and that there was no factor attached to stimuli, such as reward level, because paintings had no objective value (see Fig. 2b). The successive multiple regression models were therefore the following:

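$$\mathrm{Pre} = \beta_{1}\,\mathrm{Music} + \mathrm{Pre}^{*}$$

$$\mathrm{Post} = \beta_{2}\,\mathrm{Music} + \beta_{3}\,\mathrm{Pre}^{*} + \mathrm{Post}^{*}$$

$$\mathrm{Rating} = \beta_{4}\,\mathrm{Music} + \beta_{5}\,\mathrm{Pre}^{*} + \beta_{6}\,\mathrm{Post}^{*} + \varepsilon$$

where Music is music pleasantness, Pre and Post are prestimulus and poststimulus VMPFC activity, Rating is painting pleasantness rating, Pre* is prestimulus VMPFC activity adjusted for music pleasantness (the residual of the first model), Post* is the VMPFC poststimulus activity adjusted for music pleasantness and prestimulus activity (Pre*; the residual of the second model), and ε is the residual error. Constant terms are omitted for brevity.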
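The successive regressions described above, in which each adjusted variable is the residual of the preceding model, can be sketched as follows. This Python example is a minimal illustration under explicit assumptions: it uses ordinary least squares instead of the robust fit reported in the paper, it runs on synthetic data, and all names (`simple_ols`, `sequential_models`, `simulate`) and generative coefficients are hypothetical.

```python
import random

def simple_ols(y, x):
    """Regress y on x (with intercept); return the slope and the residuals."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, [(yi - my) - slope * (xi - mx) for xi, yi in zip(x, y)]

def sequential_models(music, pre, post, rating):
    # Model 1: Pre ~ Music; the residual is Pre*
    b_pre_music, pre_star = simple_ols(pre, music)
    # Model 2: Post ~ Music + Pre*. Pre* is orthogonal to Music by construction,
    # so the two regressors can be fitted one after the other; the residual is Post*
    b_post_music, post_res = simple_ols(post, music)
    b_post_pre, post_star = simple_ols(post_res, pre_star)
    # Model 3: Rating ~ Music + Pre* + Post* (mutually orthogonal regressors)
    b_rating_music, r1 = simple_ols(rating, music)
    b_rating_pre, r2 = simple_ols(r1, pre_star)
    b_rating_post, _ = simple_ols(r2, post_star)
    return {"b_pre_music": b_pre_music, "b_post_music": b_post_music,
            "b_post_pre": b_post_pre, "b_rating_music": b_rating_music,
            "b_rating_pre": b_rating_pre, "b_rating_post": b_rating_post,
            "pre_star": pre_star, "post_star": post_star}

def simulate(n=5000, seed=0):
    """Synthetic chain Music -> Pre -> Post -> Rating (arbitrary coefficients)."""
    rng = random.Random(seed)
    music = [rng.gauss(0, 1) for _ in range(n)]
    pre = [0.3 * m + rng.gauss(0, 1) for m in music]
    post = [0.5 * p + 0.2 * m + rng.gauss(0, 1) for p, m in zip(pre, music)]
    rating = [0.4 * q + rng.gauss(0, 1) for q in post]
    return music, pre, post, rating

music, pre, post, rating = simulate()
out = sequential_models(music, pre, post, rating)
```

Because each adjusted regressor is orthogonal to the variables it was adjusted for, every coefficient reflects only the variance that is not already attributed to an earlier link in the chain. In the synthetic chain above, the coefficient of Rating on Post* should be close to the generative value of 0.4.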

To formally test for mediation effects, we compared between the three following nested models of painting pleasantness rating (without any orthogonalization of regressors):

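$$\text{Model 1: } \mathrm{Rating} = \beta_{1}\,\mathrm{Post} + \varepsilon$$

$$\text{Model 2: } \mathrm{Rating} = \beta_{1}\,\mathrm{Post} + \beta_{2}\,\mathrm{Pre} + \varepsilon$$

$$\text{Model 3: } \mathrm{Rating} = \beta_{1}\,\mathrm{Post} + \beta_{2}\,\mathrm{Pre} + \beta_{3}\,\mathrm{Music} + \varepsilon$$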

As in monkeys, models were compared using a toolbox for variational Bayesian analysis (Daunizeau et al., 2014). Because we found no evidence for a random effect at the group level, we performed a fixed-effect group-level Bayesian model comparison and compared models based on their posterior probability (ρ).
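The logic of a fixed-effect comparison between nested models can be illustrated with a simple approximation. The sketch below does not reproduce the variational Bayesian toolbox cited above; it swaps in the Bayesian information criterion (BIC) as a rough stand-in for the log model evidence, assumes Gaussian noise and equal prior probabilities for the models, sums the approximate log evidences over subjects, and normalizes them into posterior probabilities. The data are synthetic and all names are hypothetical.

```python
import math
import random

def ols_rss(y, regressors):
    """Residual sum of squares and parameter count for OLS of y on an intercept plus regressors."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in regressors] for i in range(n)]
    k = len(rows[0])
    # Augmented normal equations [X'X | X'y]
    m = [[sum(r[a] * r[b] for r in rows) for b in range(k)]
         + [sum(r[a] * yi for r, yi in zip(rows, y))] for a in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(k):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [u - f * v for u, v in zip(m[r], m[c])]
    coef = [m[a][k] / m[a][a] for a in range(k)]
    rss = sum((yi - sum(b * x for b, x in zip(coef, r))) ** 2 for r, yi in zip(rows, y))
    return rss, k

def log_evidence_bic(y, regressors):
    """Approximate log model evidence as -BIC/2."""
    n = len(y)
    rss, k = ols_rss(y, regressors)
    return -0.5 * (n * math.log(rss / n) + k * math.log(n))

def group_posteriors(subjects, models):
    """Fixed-effect comparison: sum log evidences over subjects, then normalize."""
    totals = [sum(log_evidence_bic(s["rating"], [s[name] for name in names]) for s in subjects)
              for names in models]
    top = max(totals)
    weights = [math.exp(t - top) for t in totals]
    return [w / sum(weights) for w in weights]

def simulate_subject(rng, n=200):
    """Synthetic subject in which the rating depends on poststimulus activity only."""
    music = [rng.gauss(0, 1) for _ in range(n)]
    pre = [0.3 * m + rng.gauss(0, 1) for m in music]
    post = [0.6 * p + rng.gauss(0, 1) for p in pre]
    rating = [0.5 * q + rng.gauss(0, 1) for q in post]
    return {"music": music, "pre": pre, "post": post, "rating": rating}

rng = random.Random(1)
subjects = [simulate_subject(rng) for _ in range(10)]
models = [["post"], ["post", "pre"], ["post", "pre", "music"]]
posteriors = group_posteriors(subjects, models)
```

When the synthetic rating is generated from poststimulus activity alone, the extra regressors improve the fit too little to offset the complexity penalty, so the simplest model should receive nearly all of the posterior probability. This mirrors the mediation logic of the test: a direct effect of prestimulus activity or context would instead favor one of the larger models.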

Results

The general mechanism that we intended to demonstrate in both monkeys and humans is illustrated in Figure 1. The idea was that: (1) contextual factors could shift VMPFC prestimulus activity, (2) this shift might persist in the peak of VMPFC stimulus-evoked response, and (3) this response peak might be reflected in the subjective value. By contextual factors, we mean factors independent of the characteristics of the image triggering subjective valuation: namely, trial number in monkeys, and music pleasantness in humans. Our model makes quantitative predictions about the relationships between contextual factors, neural data, and behavioral measures of value. These relationships are graphically depicted in Figure 2, where arrows indicate linear dependencies. Although the experimental factors and measurements differ between monkey and human studies, the abstract structure of the model is the same (with the only exception that in monkeys, reward level was included as an additional factor influencing poststimulus activity). The general structure can be seen as a double mediation: prestimulus and consequently poststimulus VMPFC activities were assumed to mediate the effects of contextual factors on subjective values.

All of the analyses are derived from this same general model and were applied in the same manner to the monkey and human data. In each species, we started by checking that our value measure was sensitive to context and was a reliable predictor of choices. Then we iteratively tested all the links in the model, from the ultimate cause (contextual factor) to the ultimate consequence (behavioral expression of subjective value). Our steps were to test the following: (1) whether contextual factors impact VMPFC prestimulus activity, (2) whether prestimulus activity predicts poststimulus activity, and (3) whether poststimulus activity translates into behaviorally expressed values. Last, we used nested Bayesian model comparison to formally test that VMPFC activity mediates the effect of contextual factor on subjective value.

Behavioral measure of subjective value predicts choices

Monkeys

Single units were recorded from VMPFC and OFC of two monkeys performing the task illustrated in Figure 2a (for more details, see Materials and Methods). They started trials by pressing a bar, which triggered the presentation of a visual cue indicating reward magnitude (1, 2, or 4 water drops). Then monkeys had to wait for the appearance of a Go signal, after which they could release the bar to obtain the reward. The subjective value of the cue was inferred by measuring an appetitive Pavlovian response named lipping (Bouret and Richmond, 2010; San-Galli and Bouret, 2014). This measure (lipping index, calculated as minus the lipping onset latency) was positively modulated by reward level (Fig. 3a; β = 0.11 ± 0.01, p = 3.0 × 10−13) and negatively modulated by trial number (Fig. 3b; β = −0.05 ± 0.02, p = 4.4 × 10−4), with no significant interaction between these two factors (p = 0.09). The absence of interaction suggests that the effect of trial number was a general decrease in subjective value, independent of reward level. The lipping index predicted the likelihood that the monkey would choose to release the bar, during presentation of the Go signal, to get the reward (logistic regression: β = 0.29 ± 0.06, p = 1.0 × 10−6).

Humans

Human subjects performed a pleasantness rating task during fMRI scanning (Fig. 2b). After a long and variable delay (10–25 s) that was accompanied by a musical extract, a painting image appeared on screen. Subjects then rated the pleasantness of the painting by moving a cursor on an analog scale. After the scanning session, subjects performed a choice task in which they had to indicate their preference between paintings presented in pairs. The difference between painting ratings was a reliable predictor of the choices made in this preference task (Fig. 3c; logistic regression: β = 0.92 ± 0.02, p < 10−27). The difference in ratings also predicted choice duration, with subjects being faster when this difference was greater (β = −0.07 ± 0.02, p = 0.0017).

Then, subjects rated the pleasantness of each musical extract that they had heard during scanning. Painting pleasantness was correlated to music pleasantness (β = 0.08 ± 0.04, p = 0.042; Fig. 3d), even though subjects had explicitly been told to rate the painting alone, regardless of the musical context. It appears that our manipulation was effective: the subjective value assigned to a painting was influenced by the pleasantness of the musical selection presented with the painting.

Thus, the behavioral results make it seem reasonable to use lipping index and pleasantness rating as measures of the subjective values that underpin choice behavior. They also demonstrate that our value measures were significantly modulated by contextual factors, such as trial number and music pleasantness. The following analyses are meant to explain such effects by VMPFC prestimulus and poststimulus activity.

Contextual factors influence VMPFC prestimulus activity

Monkeys

Because our goal was to provide an equivalent reading of values encoded in the prefrontal cortex across species, all analyses of electrophysiological activity include the whole population of recorded neurons (VMPFC, n = 105; OFC, n = 93; see locations in Fig. 4a), with no selection of particular neurons based on their physiological properties or on their response to task events. Prestimulus activity was defined as the number of spikes in the 500 ms period preceding cue onset (VMPFC, 1.64 ± 0.23; OFC, 2.61 ± 0.40 spikes on average). Single-unit analyses revealed that prestimulus activity was significantly (p < 0.05) related to trial number in 49 neurons (47% of total) in the VMPFC, and 40 neurons (43% of total) in the OFC (for the distribution of correlation coefficients, see Fig. 5a).

To identify global trends in the electrophysiological activity of these neuronal populations and facilitate the comparison with hemodynamic activity measured with fMRI, we computed population activity by pooling activity in the time period of interest over all recorded neurons in each region. This population measure of prestimulus activity was negatively correlated with trial number in the VMPFC (Fig. 4b; β = −0.04 ± 0.01, p = 1.2 × 10−6) but not in the OFC (p = 0.74). The dissociation between VMPFC and OFC makes it seem quite unlikely that the effect of trial number could be due to a drift in cell isolation within recording sessions because the same recording techniques were applied in both regions.

The link with the reward level of the previous trial, when included together with trial number in the linear regression model made to predict prestimulus activity, was not significant in either the VMPFC (p = 0.12) or the OFC (p = 0.08). Thus, VMPFC prestimulus activity appeared to integrate fatigue or satiety over a long period (not just the last trial). Yet the effect of trial number remained modest (3.3% of the variance in VMPFC baseline). The residual fluctuations in VMPFC baseline could be due to either unidentified factors or stochastic noise.

Humans

In humans, the linear correlation with music pleasantness (Fig. 4e, left; Table 1) was significant in the VMPFC after cluster-wise whole-brain correction for multiple testing, and in the associative auditory cortex at an uncorrected threshold. To avoid nonindependence issues (“double-dipping”), we defined a VMPFC ROI around an independently defined voxel (VMPFC group-level maximum reported in Lebreton et al., 2009). Activity in this ROI at the time of painting onset was significantly correlated to music pleasantness (β = 0.08 ± 0.03, p = 0.015), but not to previous trial painting rating (p = 0.51). As in the monkey results, the variance explained by the contextual factor was modest (13.9% of the variance in VMPFC baseline, on average across subjects), leaving most fluctuations across trials unexplained.

Table 1.

Brain activation reflecting music pleasantness<sup>a</sup>

| Region | Cluster size (voxels) | Cluster p value | Peak t value | Peak p value | x, y, z (mm) coordinates |
|---|---|---|---|---|---|
| VMPFC | 2462 | 0.006 | 4.51 | 7.83 × 10−5 | −10, 50, −12 |
| | | | 3.97 | 3.03 × 10−4 | 40, 34, −10 |
| | | | 3.96 | 3.13 × 10−4 | −8, 54, 2 |

<sup>a</sup>Activation was obtained using parametric modulation by pleasantness rating of a boxcar signal over musical playing. The table includes all regions surviving a threshold of p < 0.001 uncorrected at the voxel level and p < 0.05 after FWE whole-brain correction for multiple testing at the cluster level. The three main local maxima have been selected for each cluster. x, y, z coordinates refer to the MNI space.

Reward level influences poststimulus activity in monkey VMPFC

Poststimulus activity was defined as the number of spikes in the 500 ms window after cue onset. Single-unit analyses of poststimulus activity revealed a significant correlation (p < 0.05) with reward level in 10 neurons (9.5% of total) in the VMPFC, and 33 neurons (35% of total) in the OFC. To investigate the coherence of reward encoding within regions, we also performed a second-level random-effect analysis, using t tests to assess whether single-neuron regression coefficients were significantly positive across neurons. This analysis showed that reward level positively influenced poststimulus activity (mean coefficient ± interneuron SE: β = 0.02 ± 0.01, second-level t test: p = 0.0042) in the VMPFC but not in the OFC (second-level t test: p = 0.56). Thus, although more neurons significantly encode reward in the OFC, they do not do so consistently (some of them encode it negatively, some positively). In contrast, the effect of reward level is consistently positive across neurons in the VMPFC (for the distribution of reward coefficients, see Fig. 5b). This was further amplified by the fact that VMPFC neurons with a higher firing rate were also the ones that best encoded reward level (regression across neurons: β = 0.02 ± 0.002, p < 10−30), whereas this was not the case in the OFC (regression across neurons: p = 0.11).
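The distinction between the proportion of individually significant neurons and the consistency of coding across neurons can be made concrete with a toy second-level test. The Python sketch below computes a one-sample t statistic on per-neuron regression coefficients. The coefficient values are invented for illustration and do not come from the recordings, and the function name `one_sample_t` is hypothetical. Only the t statistic and its degrees of freedom are returned, since the Python standard library provides no Student t distribution for the p value.

```python
import math
import statistics

def one_sample_t(coefficients):
    """t statistic and degrees of freedom for testing the mean coefficient against 0."""
    n = len(coefficients)
    mean = statistics.mean(coefficients)
    sem = statistics.stdev(coefficients) / math.sqrt(n)  # standard error across neurons
    return mean / sem, n - 1

# Invented per-neuron reward coefficients (illustration only)
mixed_signs = [0.5, -0.5, 0.4, -0.4]    # strong individual effects, inconsistent sign
consistent = [0.02, 0.03, 0.01, 0.02]   # weak individual effects, consistent sign

t_mixed, df_mixed = one_sample_t(mixed_signs)
t_consistent, df_consistent = one_sample_t(consistent)
```

Strong coefficients of opposite signs cancel out at the second level, whereas weak but consistently positive coefficients yield a large t statistic. This is the pattern that a pooled population signal is sensitive to.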

The regression coefficients for trial number and reward level were negatively correlated across neurons in the VMPFC (β = −0.52 ± 0.18, p = 0.0052). This indicates that the more a neuron decreases its prestimulus activity with trial number, the more this neuron increases its poststimulus activity with reward level (Fig. 5c). This encoding pattern is consistent with the computing of subjective value as defined by economists because trial number and reward level had opposite effects on both lipping and choice behavior. In the OFC, regression coefficients for trial number and reward level were positively correlated across neurons (β = 0.26 ± 0.08, p = 0.0010). This could indicate that some OFC neurons are more sensitive to the experimental factors in general, but it is not consistent with the idea that they encode subjective value.

As described before for the prestimulus activity, we examined the population activity by pooling the spike counts in the poststimulus window of all recorded neurons, to establish a link with fMRI results. We found that the global population response was positively correlated with reward level in the VMPFC (β = 0.03 ± 0.01, p = 5.3 × 10−4) but not in the OFC (p = 0.48). A closer inspection of VMPFC poststimulus activity (Fig. 4c) suggests that the difference between 2-drop and 4-drop rewards was more pronounced than the difference between 1 and 2 drops. This pattern is consistent with that observed in lipping behavior (Fig. 3a) and therefore with subjective valuation.

Prestimulus activity predicts poststimulus activity

Monkeys

We investigated whether poststimulus activity was predicted by prestimulus activity, adjusted for trial number, which was also included in the GLM, as well as reward level. When testing single units individually, the link between prestimulus and poststimulus activity was significantly (p < 0.05) positive in 52 VMPFC neurons (50% of total) and 48 OFC neurons (52% of total), never negative. When considering the global population signal, VMPFC poststimulus activity was predicted by trial number (β = −0.04 ± 0.01, p = 2.9 × 10−10), prestimulus activity adjusted for trial number (β = 0.67 ± 0.01, p < 10−30), and reward level (β = 0.02 ± 0.01, p = 2.1 × 10−4). OFC poststimulus activity was predicted by prestimulus activity adjusted for trial number (β = 0.72 ± 0.01, p < 10−30), but not by reward level (p = 0.38) or trial number (p = 0.06). Thus, activity in both regions was autocorrelated over time, making prestimulus activity a significant predictor of poststimulus activity. However, the VMPFC seems unique in that its poststimulus activity was significantly influenced by trial number and reward level, in a manner that is consistent with encoding economic value (i.e., with opposite signs).

Humans

In humans, prestimulus activity was defined as the activity level measured in our ROI at the time of stimulus onset, and poststimulus activity as the response measured in our ROI at the activation peak, 4 s after stimulus onset. As seen with monkeys, poststimulus activity was regressed against prestimulus activity adjusted for the contextual factor (music pleasantness), which was also included in the regression model. We found that poststimulus activity was explained both by prestimulus activity adjusted for music pleasantness (β = 0.07 ± 0.04, p = 0.043) and by music pleasantness (β = 0.04 ± 0.03, p = 0.035).

Correlation between prestimulus and poststimulus activity was expected given the well-established autocorrelation of neural signals (Arieli et al., 1996; Fox et al., 2006). It was relevant to our purpose only if the influence of prestimulus activity translated into the behavioral response (Fig. 2, models), an effect that we examine in the next section.

VMPFC poststimulus activity encodes subjective value

Monkeys

Analyses regarding lipping index include all neurons for which we recorded lipping behavior (VMPFC, n = 84; OFC, n = 22), with no selection. Single-unit analyses showed limited relation between poststimulus activity and lipping index (p < 0.05 for 2 VMPFC and 1 OFC neuron). When taken as a population, the VMPFC response was a significant predictor of lipping index (Fig. 4d; β = 0.04 ± 0.02, p = 0.0098). We did not find any link between OFC activity and lipping behavior, but this should be taken with caution, given the lack of statistical power. We then checked that the lipping index was encoded in the absolute level of activity (response peak), and not in the difference from baseline. We found indeed that subtracting the baseline from poststimulus activity removed the correlation with lipping index (p = 0.53).

When all regressors were included in our GLM, we found that lipping index was significantly related to poststimulus activity adjusted for reward level, prestimulus activity, and trial number (β = 0.05 ± 0.02, p = 0.016), to prestimulus activity adjusted for trial number (β = 0.07 ± 0.02, p = 5.1 × 10−6), to reward level (β = 0.10 ± 0.01, p = 6.4 × 10−13), and marginally to trial number (p = 0.051). The fact that prestimulus activity remained a significant predictor of lipping index after adjusting for trial number suggests that unexplained VMPFC baseline fluctuations also affected subjective evaluation, as measured by lipping. In other words, prestimulus VMPFC activity was subject not only to slow changes linked to fatigue or satiety, but also to a trial-to-trial variability that contributed to influence behavior.

To illustrate the time course of value encoding in the global population activity (Fig. 6a), we performed a sliding window analysis on spike counts around cue onset, separately for trials followed by fast and slow lipping (sorted using a median split). The first 500 ms window exhibiting a significant difference between fast and slow lipping ended at 729 ms before cue onset, whereas average lipping latency was 457 ± 2 ms for Monkey 1 and 369 ± 4 ms for Monkey 2. The large gap (>1 s) between the start of neural encoding and lipping makes it seem unlikely that VMPFC activity was related to the motor aspects of lipping behavior. We also checked that the prestimulus activity (500 ms window ending at cue onset) was significantly correlated to lipping index (β = 0.04 ± 0.02, p = 0.019; Fig. 6b).
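The sliding window analysis with a median split can be sketched as follows. This Python example is a bare-bones illustration under assumptions: spike times are expressed in seconds relative to cue onset, trials at or below the median latency are labeled fast, and only the difference in mean spike count is returned, without the significance test applied in the actual analysis. The function names and the four toy trials are hypothetical.

```python
import statistics

def window_count(spike_times, start, width=0.5):
    """Number of spikes falling in the window [start, start + width)."""
    return sum(1 for t in spike_times if start <= t < start + width)

def sliding_window_difference(trials, starts, width=0.5):
    """trials: list of (spike_times, lipping_latency); returns (start, fast minus slow) pairs."""
    median_latency = statistics.median([latency for _, latency in trials])
    fast = [spikes for spikes, latency in trials if latency <= median_latency]
    slow = [spikes for spikes, latency in trials if latency > median_latency]
    result = []
    for start in starts:
        fast_mean = statistics.mean([window_count(s, start, width) for s in fast])
        slow_mean = statistics.mean([window_count(s, start, width) for s in slow])
        result.append((start, fast_mean - slow_mean))
    return result

# Toy trials (not recorded data): spike times and lipping latencies in seconds
trials = [([-0.4, -0.2, 0.1], 0.30),
          ([-0.3, 0.2], 0.35),
          ([0.1], 0.50),
          ([], 0.60)]
differences = sliding_window_difference(trials, starts=[-0.5, 0.0])
```

A positive difference in a window that precedes cue onset is the signature of interest here, because it indicates that activity already distinguishes fast from slow lipping trials before the stimulus appears.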

Humans

Whole-brain regression against painting pleasantness ratings, estimated at the peak of the evoked hemodynamic response (4 s after painting onset), revealed significant activation in the VMPFC and fusiform face area (FFA), after cluster-wise whole-brain correction for multiple testing (Fig. 4e, middle; Table 2). We verified that the response magnitude extracted from our independent VMPFC ROI was significantly related to painting pleasantness (β = 0.10 ± 0.03, p = 0.0011; Fig. 4f). Poststimulus activity was no longer correlated to pleasantness ratings (p = 0.084) after subtracting prestimulus activity, a result similar to that seen in monkeys. Thus, the measurements from both monkeys and humans seem to show that subjective value is encoded by the absolute level of poststimulus activity in the VMPFC, but not the differential from baseline.
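The contrast between absolute and baseline-subtracted activity can be illustrated with a toy simulation. The Python sketch below assumes, purely for illustration, that poststimulus activity is the sum of prestimulus activity and an independent evoked component, and that the behavioral value reads out the absolute poststimulus level plus noise. The variances, sample size, and names are arbitrary assumptions, and the simulation shows what such a mechanism would predict rather than providing evidence for it.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def simulate(n=2000, seed=0):
    rng = random.Random(seed)
    pre = [rng.gauss(0, 1) for _ in range(n)]          # baseline fluctuations
    evoked = [rng.gauss(0, 0.3) for _ in range(n)]     # stimulus-evoked component
    post = [p + e for p, e in zip(pre, evoked)]        # additive effect of the baseline
    value = [q + rng.gauss(0, 1) for q in post]        # behavior reads the absolute level
    differential = [q - p for q, p in zip(post, pre)]  # baseline-subtracted response
    return pearson(value, post), pearson(value, differential)

r_absolute, r_differential = simulate()
```

Under these assumptions, subtracting the baseline discards the part of the signal that carries most of the behaviorally relevant variance, so the correlation with the simulated value is markedly weaker for the differential response than for the absolute level.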

Table 2.

Brain activation reflecting painting pleasantness<sup>a</sup>

| Region | Cluster size (voxels) | Cluster p value | Peak t value | Peak p value | x, y, z (mm) coordinates |
|---|---|---|---|---|---|
| VMPFC | 1715 | 0.003 | 5.09 | 1.88 × 10−5 | −4, 62, −4 |
| | | | 4.77 | 4.13 × 10−5 | −10, 42, −8 |
| | | | 4.76 | 4.25 × 10−5 | 12, 34, −14 |
| FFA | 7165 | 9.72 × 10−9 | 8.71 | 4.81 × 10−9 | −20, −48, −4 |
| | | | 7.64 | 4.65 × 10−8 | −10, −52, 4 |
| | | | 6.84 | 2.81 × 10−7 | 16, −52, 10 |

<sup>a</sup>Activation was obtained using parametric modulation by pleasantness rating at 4 s following painting onset. The table includes all regions surviving a threshold of p < 0.001 uncorrected at the voxel level and p < 0.05 after FWE whole-brain correction for multiple testing at the cluster level. The three main local maxima have been selected for each cluster. x, y, z coordinates refer to the MNI space.

When all regressors were included in our GLM, we found that painting pleasantness rating was significantly related to poststimulus activity adjusted for prestimulus activity and music pleasantness (β = 0.10 ± 0.03, p = 0.0005), to prestimulus activity adjusted for music pleasantness (β = 0.04 ± 0.02, p = 0.043), and marginally to music pleasantness (p = 0.052). As in monkeys, these results suggest not only that prestimulus activity mediated the effect of context but also that trial-to-trial baseline fluctuation per se (regardless of music) biased subsequent valuation.

To verify that the same region was encoding value regardless of sensory modality (visual or auditory), we performed a formal conjunction between parametric regressors modeling painting and music pleasantness (Fig. 4e, right), which restricted the list of activated regions to the VMPFC alone. In parallel to the analysis performed at the single-unit level in monkeys, we computed regression coefficients for individual voxels within our ROI. When moving our ROI along a mediolateral axis, we were able to confirm that only the medial voxels (corresponding to the VMPFC) and not the lateral voxels (corresponding to the OFC) showed a significant correlation with painting ratings at the group level (across subjects; Fig. 5e). Within the ROIs that encoded both music and painting rating, we selected voxels along the mediolateral axis (with MNI coordinates y = 44, z = −10). Although we observed a trend for correlation between single-voxel regression coefficients obtained for painting and music ratings (Fig. 5f), this was not significant in the second-level analysis across subjects (p = 0.13).

To illustrate the time course of value encoding in the hemodynamic response, we separated trials followed by high and low ratings using a median split (Fig. 6c). The first significant difference between high and low rating was seen at time 0 (painting onset), and not at the preceding measure (2 s before). Prestimulus activity (at time 0) was also significantly correlated to painting rating (Fig. 6d; β = 0.09 ± 0.03, p = 0.003). We observed that the general shape of the VMPFC response has a larger undershoot compared with the canonical hemodynamic response function. This could be related to physiological properties of the VMPFC or it might reflect some psychological process, for example, indicating that subjects disliked performing the task at hand.

Testing for mediations: nested Bayesian model comparison

According to our model illustrated in Figures 1 and 2, the impact of VMPFC prestimulus activity on subjective value should be mediated by VMPFC poststimulus activity, and not by any other brain region. Thus, no direct link should be observed between prestimulus activity and behavioral expression of value, once poststimulus activity is taken into account. To test whether prestimulus activity accounts for additional variance in the behavioral data over and above poststimulus activity, we implemented a nested Bayesian model comparison.

Monkeys

In model 1, lipping index was explained by poststimulus activity. In model 2, lipping index was explained by poststimulus activity and reward level. In model 3, lipping index was explained by poststimulus activity, reward level, and prestimulus activity. In the full model 4, lipping index was explained by poststimulus activity, reward level, prestimulus activity, and trial number. A Bayesian model comparison pointed to the second model as the most plausible (model 1: ρ = 1.2 × 10−9; model 2: ρ = 0.91; model 3: ρ = 0.08; model 4: ρ = 0.01). This result supports the idea that the effect of VMPFC baseline fluctuations (including those induced by trial number) on subjective value was mediated by VMPFC poststimulus activity. However, the presence of reward level in the winning model leads us to believe that additional brain regions were involved in mediating the residual effect of reward level on lipping behavior.

Humans

In model 1, painting rating was explained by poststimulus activity. In model 2, painting rating was explained by poststimulus activity and prestimulus activity. In the full model 3, painting rating was explained by poststimulus activity, prestimulus activity, and music rating. A Bayesian model comparison performed at the group level revealed that the simplest model was by far the most plausible (model 1: ρ = 1; model 2: ρ < 1 × 10−30; model 3: ρ < 1 × 10−30). This result supports the idea that the effect of VMPFC baseline fluctuations (including those induced by music pleasantness) on subjective value was mediated by VMPFC poststimulus activity.

Discussion

This study presents convergent evidence from monkey electrophysiology and human fMRI that: (1) subjective values are encoded in the VMPFC population activity, (2) stimulus values are biased by fluctuations in VMPFC prestimulus activity, and (3) contextual dependency of value judgments arises from various internal or external features being aggregated in VMPFC baseline activity.

Even though we worked in two species, used different paradigms, and made distinct physiological measurements, whose relation is not completely defined (Goense and Logothetis, 2008; Sirotin and Das, 2009), the results from monkeys and humans obeyed the same neuronal mechanisms. Our findings provide equivalence not only between the brain micro- and macro-scales, but also between measures of firing rate and blood flow. For our purposes, we needed to establish a relationship between subjective values expressed in the behavior and a population signal emerging from the firing rates of VMPFC single neurons. To our knowledge, this had not been done in previous studies, either because they explored the lateral part of the orbitofrontal cortex (Tremblay and Schultz, 1999; Padoa-Schioppa and Assad, 2006; Kennerley et al., 2011), and not the medial part that is considered as homologous to human VMPFC (Öngür and Price, 2000; Mackey and Petrides, 2010), or because they focused on describing the functional properties of single neurons (Bouret and Richmond, 2010; Monosov and Hikosaka, 2012; Strait et al., 2014), and not on the emerging global activity that could potentially underlie the hemodynamic signal.

To examine whether single-unit activities could be translated into a population value signal, we focused on the correlation with reward level because association with reward size or probability is a classical way to manipulate stimulus value. In accordance with the literature, single-unit analysis showed that a significant proportion of neurons reflected reward levels in both the OFC and the VMPFC. The difference is that the distribution of correlation coefficients was shifted toward positive values in the VMPFC but not in the OFC. In addition, the neurons that exhibited greater sensitivity to rewards were also the neurons with higher spiking rate. Thus, when pooling neurons together, the emerging population signal was positively and significantly modulated by reward level in the VMPFC, and not in the OFC.

These results help reconcile the monkey and human literatures by showing that, when considering the global signal at the population level, and not just the proportion of significant neurons, positive value coding is observed in the monkey VMPFC as it has been in most human fMRI studies (Bartra et al., 2013). However, we are not suggesting that the BOLD signal simply reflects the sum of spikes emitted by single neurons. Several studies have provided evidence that the BOLD signal is better correlated with local field potentials than with firing activity (Goense and Logothetis, 2008; Magri et al., 2012). It is therefore possible that VMPFC local field potentials, which were not recorded here, would have been an even better predictor of reward level or lipping behavior.

We are not claiming either that the role of VMPFC neurons is necessarily reducible to a simple population code for subjective values. It is plausible that such population coding arises on top of various, more specific computations made by individual neurons. The population signal examined here ignores the identity of the neurons, meaning that each spike has one vote. We do not imply that such a signal is actually used by the brain to drive value-based behavior. It is likely that the pattern of connections between neurons weights some value-encoding cells more strongly than others in the processes leading to behavior. We only conclude that, in principle, a neuron that sums the single-unit activity from all of the neurons recorded in the VMPFC would be in a position to read subjective value.

The findings across signals and species suggest that the VMPFC encodes values related both to objective reward size and to subjective hedonic judgment. Human data confirmed two important functional properties of the VMPFC: generality and automaticity (Chib et al., 2009; Lebreton et al., 2009; Levy et al., 2011). Generality refers to the fact that the same VMPFC region was reflecting values assigned to items from two different sensory modalities (visual with paintings and auditory with music). We also addressed the question of generality in monkeys by showing that VMPFC, but not OFC, neurons encode internal (trial number) and external (expected reward size) motivational factors in a way that is consistent with value computation. This result supports views of the VMPFC as a region where all dimensions relevant to subjective value are aggregated on a common scale at the level of individual neurons, instead of having distinct neurons encoding the contribution of different features. This view fits well with the idea that the VMPFC could serve as a general valuation device, representing a “common neural currency” that could be used to arbitrate between alternatives from different categories (Levy and Glimcher, 2012).

Automaticity refers to the fact that, at the time of scanning, subjects had been explicitly instructed to ignore the musical context while evaluating the painting and did not know that they were going to rate the pleasantness of the musical extract at the end of the experiment. This suggests that the VMPFC computes the value of contextual features, even if these are irrelevant to the ongoing task. Automaticity cannot be assessed in monkeys because there was no explicit instruction to be compared with the observed behavior. Contrary to the case of music pleasantness in humans, it seems relevant for monkeys to integrate trial number (an index of satiety or fatigue level) in their willingness to perform the task.

Our analyses demonstrated that fluctuations in prestimulus activity could be a source of variability in subjective valuation. It may appear trivial that, because of the strong autocorrelation in neural activity, differences in evoked activity can be traced back to stimulus onset. Our results go beyond autocorrelation by showing that the influence of prestimulus on poststimulus activity is behaviorally relevant. The key finding is that subjective value is encoded in the absolute level of poststimulus activity, and not in the differential from the baseline. This means that there was no evidence for an interaction, beyond the additive effect, and goes against the possibility that music pleasantness would condition the response evoked by paintings, or that satiety level would condition the response evoked by rewards. Thus, a higher prestimulus activity was additively translated into a higher poststimulus activity and thereby into a higher subjective value. Most analyses of electrophysiological and fMRI data have so far focused on the differential response. In this framework, baseline activity is typically considered behaviorally irrelevant, and either averaged out or subtracted from evoked activity. Our results indicate, on the contrary, that the usual procedure of removing intertrial baseline variability, albeit attractive from a signal processing perspective, is not well suited to account for trial-to-trial behavioral response. Indeed, fluctuations in VMPFC prestimulus brain activity predicted trial-to-trial variations in subjective values, whether these were assigned to familiar, repeated items (in monkeys), or to unfamiliar, unrepeated items (in humans).

We identified two factors influencing VMPFC baseline activity and thereby value judgment: trial number in monkeys and music pleasantness in humans. These findings extend those of Bouret and Richmond (2010), showing that not only internal contextual factors (such as satiety), but also external contextual factors (such as music), are automatically integrated in the VMPFC valuation signal. The large variance in prestimulus activity that remained unexplained, but still impacted subjective valuation, might be due either to other unknown factors or to mere stochastic noise. Although trial number was not, strictly speaking, manipulated experimentally in the monkey study, musical context was intentionally varied in the human study, providing an argument for a causal influence of prestimulus activity on subsequent valuation. The behavioral effect is not a trivial consequence of the VMPFC properties highlighted above (generality and automaticity). Indeed, music and painting values could have been encoded in separate populations of neurons, preventing any interference. The impact of music on painting values indicates some spillover, or perhaps the representation of a global context value, that would explain misattribution.

Our study was designed to explain variability in value assignment rather than in choice, because neural activity was not investigated during decision making. However, the links that were established between our value measures and choice data suggest that the neural mechanism shown to explain contextual dependency of values could be extended to choices. We speculate that the impact of contextual features on prestimulus VMPFC activity might be the neural mechanism that underpins many priming effects observed on valuation and choice. Because it is generic and automatic, the VMPFC signal would aggregate the pleasantness of any feature that comes to attention. Then, because of the autocorrelation in the VMPFC signal, this aggregation of values from different origins would distort subsequent judgment and choice.
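The speculated mechanism (aggregation of contextual values followed by carryover through autocorrelation) can be sketched as a leaky integrator. This is only an illustration under strong assumptions, not a model fitted to our data: the function `leaky_rating`, the autocorrelation parameter `phi`, and the numbers of context and stimulus time steps are all hypothetical.

```python
def leaky_rating(context_value, item_value, phi, context_steps=5, stim_steps=2):
    """Read out a first-order autoregressive value signal after item presentation.

    phi is the assumed autocorrelation of the signal (0 = no memory).
    The signal first integrates the context value, then the item value.
    """
    signal = 0.0
    for _ in range(context_steps):
        signal = phi * signal + (1.0 - phi) * context_value
    for _ in range(stim_steps):
        signal = phi * signal + (1.0 - phi) * item_value
    return signal


item = 0.5  # same item value in both contexts

# With autocorrelation, the context value leaks into the item rating.
pleasant = leaky_rating(context_value=1.0, item_value=item, phi=0.8)
unpleasant = leaky_rating(context_value=-1.0, item_value=item, phi=0.8)
bias = pleasant - unpleasant

# Without autocorrelation, the rating depends on the item alone.
no_memory_pleasant = leaky_rating(context_value=1.0, item_value=item, phi=0.0)
no_memory_unpleasant = leaky_rating(context_value=-1.0, item_value=item, phi=0.0)

print(f"context bias with phi = 0.8: {bias:.3f}")
print(f"context bias with phi = 0.0: {no_memory_pleasant - no_memory_unpleasant:.3f}")
```

In this sketch the same item receives a higher value after a pleasant context than after an unpleasant one only when the signal is autocorrelated, and the bias shrinks as more stimulus time steps are integrated.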

Footnotes

This work was supported by a European Research Council Starting Grant (ERC-BioMotiv) and the National Institutes of Health Intramural Research Program. It has also benefited from the Fédération pour la Recherche Médicale. R.A. was supported by the French Direction Générale de l'Armement and Investissement d'avenir ANR-10-IAIHU-06.

The authors declare no competing financial interests.

References

- Arieli A, Sterkin A, Grinvald A, Aertsen A. Dynamics of ongoing activity: explanation of the large variability in evoked cortical responses. Science. 1996;273:1868–1871. doi: 10.1126/science.273.5283.1868.

- Bardsley N, Cubitt R, Loomes G, Moffatt P, Starmer C, Sugden R. Experimental economics: rethinking the rules. Princeton: Princeton UP; 2009.

- Bartra O, McGuire JT, Kable JW. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage. 2013;76:412–427. doi: 10.1016/j.neuroimage.2013.02.063.

- Becker R, Reinacher M, Freyer F, Villringer A, Ritter P. How ongoing neuronal oscillations account for evoked fMRI variability. J Neurosci. 2011;31:11016–11027. doi: 10.1523/JNEUROSCI.0210-11.2011.

- Bode S, Sewell DK, Lilburn S, Forte JD, Smith PL, Stahl J. Predicting perceptual decision biases from early brain activity. J Neurosci. 2012;32:12488–12498. doi: 10.1523/JNEUROSCI.1708-12.2012.

- Boly M, Balteau E, Schnakers C, Degueldre C, Moonen G, Luxen A, Phillips C, Peigneux P, Maquet P, Laureys S. Baseline brain activity fluctuations predict somatosensory perception in humans. Proc Natl Acad Sci U S A. 2007;104:12187–12192. doi: 10.1073/pnas.0611404104.

- Bouret S, Richmond BJ. Ventromedial and orbital prefrontal neurons differentially encode internally and externally driven motivational values in monkeys. J Neurosci. 2010;30:8591–8601. doi: 10.1523/JNEUROSCI.0049-10.2010.

- Carmichael ST, Price JL. Architectonic subdivision of the orbital and medial prefrontal cortex in the macaque monkey. J Comp Neurol. 1994;346:366–402. doi: 10.1002/cne.903460305.

- Chib VS, Rangel A, Shimojo S, O'Doherty JP. Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci. 2009;29:12315–12320. doi: 10.1523/JNEUROSCI.2575-09.2009.

- Coste CP, Sadaghiani S, Friston KJ, Kleinschmidt A. Ongoing brain activity fluctuations directly account for intertrial and indirectly for intersubject variability in Stroop task performance. Cereb Cortex. 2011;21:2612–2619. doi: 10.1093/cercor/bhr050.

- Daunizeau J, Adam V, Rigoux L. VBA: a probabilistic treatment of nonlinear models for neurobiological and behavioural data. PLoS Comput Biol. 2014;10:e1003441. doi: 10.1371/journal.pcbi.1003441.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage. 2003;19:430–441. doi: 10.1016/S1053-8119(03)00073-9.

- Fehr E, Rangel A. Neuroeconomic foundations of economic choice: recent advances. J Econ Perspect. 2011;25:3–30. doi: 10.1257/jep.25.4.3.

- Fox MD, Snyder AZ, Zacks JM, Raichle ME. Coherent spontaneous activity accounts for trial-to-trial variability in human evoked brain responses. Nat Neurosci. 2006;9:23–25. doi: 10.1038/nn1616.

- Friston KJ, Stephan KE. Free energy and the brain. Synthese. 2007;159:417–458. doi: 10.1007/s11229-007-9237-y.

- Friston KJ, Penny WD, Glaser DE. Conjunction revisited. Neuroimage. 2005;25:661–667. doi: 10.1016/j.neuroimage.2005.01.013.

- Goense JB, Logothetis NK. Neurophysiology of the BOLD fMRI signal in awake monkeys. Curr Biol. 2008;18:631–640. doi: 10.1016/j.cub.2008.03.054.

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35:4–26. doi: 10.1038/npp.2009.129.

- Kahneman D, Tversky A. Choices, values and frames. Cambridge: Cambridge UP; 2000.

- Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- Lebreton M, Jorge S, Michel V, Thirion B, Pessiglione M. An automatic valuation system in the human brain: evidence from functional neuroimaging. Neuron. 2009;64:431–439. doi: 10.1016/j.neuron.2009.09.040.

- Levy D, Glimcher PW. The root of all value: a neural common currency for choice. Curr Opin Neurobiol. 2012;22:1027–1038. doi: 10.1016/j.conb.2012.06.001.

- Levy I, Lazzaro SC, Rutledge RB, Glimcher PW. Choice from non-choice: predicting consumer preferences from blood oxygenation level-dependent signals obtained during passive viewing. J Neurosci. 2011;31:118–125. doi: 10.1523/JNEUROSCI.3214-10.2011.

- Mackey S, Petrides M. Quantitative demonstration of comparable architectonic areas within the ventromedial and lateral orbital frontal cortex in the human and the macaque monkey brains. Eur J Neurosci. 2010;32:1940–1950. doi: 10.1111/j.1460-9568.2010.07465.x.

- Magri C, Schridde U, Murayama Y, Panzeri S, Logothetis NK. The amplitude and timing of the BOLD signal reflects the relationship between local field potential power at different frequencies. J Neurosci. 2012;32:1395–1407. doi: 10.1523/JNEUROSCI.3985-11.2012.

- McFadden D. Revealed stochastic preference: a synthesis. Econ Theory. 2005;26:245–264. doi: 10.1007/s00199-004-0495-3.

- Monosov IE, Hikosaka O. Regionally distinct processing of rewards and punishments by the primate ventromedial prefrontal cortex. J Neurosci. 2012;32:10318–10330. doi: 10.1523/JNEUROSCI.1801-12.2012.

- Öngür D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cereb Cortex. 2000;10:206–219. doi: 10.1093/cercor/10.3.206.

- Padoa-Schioppa C. Neuronal origins of choice variability in economic decisions. Neuron. 2013;80:1322–1336. doi: 10.1016/j.neuron.2013.09.013.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Penny WD. Comparing dynamic causal models using AIC, BIC and free energy. Neuroimage. 2012;59:319–330. doi: 10.1016/j.neuroimage.2011.07.039.

- Peters J, Büchel C. Neural representations of subjective reward value. Behav Brain Res. 2010;213:135–141. doi: 10.1016/j.bbr.2010.04.031.

- Plassmann H, O'Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J Neurosci. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- Sadaghiani S, Hesselmann G, Kleinschmidt A. Distributed and antagonistic contributions of ongoing activity fluctuations to auditory stimulus detection. J Neurosci. 2009;29:13410–13417. doi: 10.1523/JNEUROSCI.2592-09.2009.

- Salimpoor VN, van den Bosch I, Kovacevic N, McIntosh AR, Dagher A, Zatorre RJ. Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science. 2013;340:216–219. doi: 10.1126/science.1231059.

- Samuelson PA. The numerical representation of ordered classifications and the concept of utility. Rev Econ Stud. 1938;6:65–70. doi: 10.2307/2967540.

- San-Galli A, Bouret S. Assessing value representation in animals. J Physiol Paris. 2014. doi: 10.1016/j.jphysparis.2014.07.003.

- Sirotin YB, Das A. Anticipatory haemodynamic signals in sensory cortex not predicted by local neuronal activity. Nature. 2009;457:475–479. doi: 10.1038/nature07664.

- Soon C, He AH, Bode S, Haynes JD. Predicting free choices for abstract intentions. Proc Natl Acad Sci U S A. 2013;110:6217–6222. doi: 10.1073/pnas.1212218110.

- Strait CE, Blanchard TC, Hayden BY. Reward value comparison via mutual inhibition in ventromedial prefrontal cortex. Neuron. 2014;82:1357–1366. doi: 10.1016/j.neuron.2014.04.032.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Von Neumann J, Morgenstern O. Theory of games and economic behavior. Princeton: Princeton UP; 1944.

- Zhang M, Wang X, Goldberg ME. A spatially nonselective baseline signal in parietal cortex reflects the probability of a monkey's success on the current trial. Proc Natl Acad Sci U S A. 2014;111:8967–8972. doi: 10.1073/pnas.1407540111.