Influenza is a contagious respiratory virus with the potential to cause serious illness and death, particularly in children younger than 5 years of age.1 The influenza vaccine has been shown to be effective in preventing infection and spread of the disease.2 In the United States, annual epidemics of influenza occur from late fall to early spring, with the peak of cases occurring in February,3 prompting the Centers for Disease Control and Prevention (CDC) to emphasize expanding the influenza vaccination season past the fall months to January and later.4 The national children's (<18 years of age) influenza immunization rate for the 2010–2011 season was 46.2%,5 well below the 80% vaccination goal of Healthy People 2020.6 To combat low immunization rates, CDC's Community Guide recommends various evidence-based practices and provider interventions.3 One method of delivering evidence-based education to practicing physicians is through academic detailing, a form of continuing education that has been shown to be an effective method for reaching providers.7

CONCEPTUAL FRAMEWORK FOR IMPROVING CLINIC IMMUNIZATION PERFORMANCE

Academic detailing is similar to other continuing medical education practices in presenting evidence-based information, but features a one-on-one or small group dynamic in which a medical or health educator visits physicians and/or their clinic staff in their office setting to conduct a brief educational session on designated topics. These interactive sessions have been shown to be effective in improving provider practices in many contexts including immunizations.8 One such interactive practice that has been shown to be effective in improving immunization levels is the “audit and feedback” approach.9 The audit and feedback approach consists of conducting medical chart audits to assess baseline immunization levels and reporting this information to clinic staff. At the same time, the health educators provide materials to implement evidence-based practices to improve clinical performance.

One example of the use of academic detailing for public health practices is a study conducted by Larson et al. in 2006, which focused on promoting essential preventive and disease management practices throughout New York City.10 The program was organized around outreach campaigns, each targeting a specific clinical topic, one of which was influenza vaccination. The outreach campaign provided action kits to clinics that received academic detailing visits. These action kits contained clinical tools to support the delivery of evidence-based care, such as flow sheets, reminder stickers, and patient education materials (e.g., brochures and posters). Peer-reviewed journal articles and guidelines were also included in the action kit. At the start of the influenza vaccination campaign, 54% of clinics reported using a provider reminder system for influenza vaccination of patients. This number increased significantly at the end of the campaign, where 67% reported the use of provider reminder systems, reminder postcards, and standing orders (p=0.038). Also, 80% of sites receiving visits in the influenza campaign reported using the patient education materials provided in the influenza vaccination action kit. This study not only demonstrates academic detailing to be an effective method of reaching providers and delivering key messages during a brief interaction, but also suggests it to be a feasible method of approach for public health agencies and acceptable to clinicians.

Another study demonstrating the efficacy of academic detailing was conducted by Margolis et al.11 This study aimed to improve processes for delivering preventive care to children, such as screening for tuberculosis, anemia, and lead, as well as administering immunizations. Clinics were allocated to intervention and control groups, with intervention clinics receiving four coaching visits from project staff. During these visits, project staff and intervention clinic physicians reviewed chart audit data for each clinic, identified evidence-based changes that could improve performance in low-performing areas specific to each site, and monitored and evaluated the impact of process changes. Chart audit feedback provided to intervention clinics covered each clinic's own performance as well as a comparison with other clinics in the study. Throughout the year of participation, project staff checked in with each intervention clinic by telephone every two to three months. After one year of implementation, the proportion of children per clinic with age-appropriate delivery of all four preventive services changed from 7% to 34% in intervention clinics and from 9% to 10% in controls. After adjusting for baseline differences between the study groups, the change in the prevalence of all four services between the beginning and the end of the study was 4.6 times greater in intervention clinics.

San Diego - Influenza Coverage Extension Project

The San Diego - Influenza Coverage Extension (SD-ICE) project was a multiyear (2008–2009 and 2010–2011) intervention study that assessed the effectiveness of an academic detailing intervention, with a special emphasis on promoting late-season vaccinations, in increasing childhood influenza vaccination rates in six San Diego County primary care clinics. The project was supported by a cooperative agreement with CDC, and project staff were part of the County of San Diego Health and Human Services Agency's Epidemiology and Immunization Branch. In San Diego, coverage for children <18 years of age was reported to be about 55% for the 2010–2011 influenza season,12 higher than the national children's influenza immunization rate of 46.2% for the same season but still considerably lower than the Healthy People 2020 goal of 80%.

METHODS

Medical clinic sample

A list of approximately 40 potential medical facilities was stratified by clinic type (i.e., community health clinic, medical group, and private office), medical specialty (i.e., pediatrics, internal medicine, and family medicine), and geographical region in San Diego County to assure a diverse sampling in primary care settings and patient population. Clinic managers and physicians were contacted by e-mail or telephone and invited to participate in the study. As a condition of inclusion in the study, participating clinics agreed to (1) facilitate on-site confidential patient interviews, (2) participate in key informant interviews, (3) allow random chart audits for influenza vaccination coverage, (4) allow verification of vaccine information when surveyed patients consented to a review of their chart, (5) receive feedback on clinic influenza coverage rates, and (6) consider enhancing vaccination policies to improve influenza vaccination in the late season.

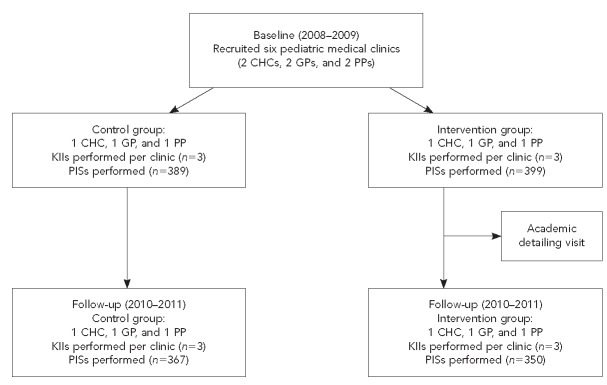

At the end of recruitment, six pediatric clinics agreed to participate, including two community health clinics, two medical group offices, and two private offices. Within each clinic type, one clinic was randomly designated as the intervention clinic and the other as the control. Baseline data were obtained from participating medical clinics through key informant interviews and patient intercept surveys, which are described later in this article. To avoid effects of the 2009–2010 H1N1 influenza vaccination campaign, follow-up data were collected during the 2010–2011 influenza season using the same data collection methods (Figure).

Figure.

The San Diego - Influenza Coverage Extension projecta study group stratification process, 2008–2011

aA multiyear intervention study to assess the effectiveness of an academic detailing visit on increasing influenza vaccination rates in children aged 6–59 months

CHC = community health center

GP = group practice

PP = private practice

KII = key informant interview

PIS = patient intercept survey
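The within-stratum assignment described above (one intervention and one control clinic per clinic type) can be sketched in a few lines of Python. This is only an illustration of the general technique: the clinic labels, the function name `assign_within_strata`, and the seed are hypothetical, and the article does not state how the random designation was actually carried out.

```python
import random


def assign_within_strata(strata, seed=None):
    """Randomly pick one clinic per stratum as intervention; the other is control."""
    rng = random.Random(seed)
    assignment = {}
    for clinic_type, pair in strata.items():
        if len(pair) != 2:
            raise ValueError(f"stratum {clinic_type!r} must contain exactly two clinics")
        intervention = rng.choice(pair)
        for clinic in pair:
            assignment[clinic] = "intervention" if clinic == intervention else "control"
    return assignment


# Hypothetical clinic labels; the study does not identify its sites this way.
strata = {
    "community health clinic": ["CHC-A", "CHC-B"],
    "medical group": ["GP-A", "GP-B"],
    "private office": ["PP-A", "PP-B"],
}

assignment = assign_within_strata(strata, seed=2008)
for clinic, group in sorted(assignment.items()):
    print(clinic, group)
```

Pairing clinics by type before randomizing guarantees that each clinic type contributes exactly one clinic to each study group, which a simple random draw of three clinics out of six would not.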

Patient sample

The source population for patient intercept surveys was a convenience sample of patients aged 6–59 months attending appointments at participating medical clinics. Appointments had to include being seen by a physician. Surveys were conducted during the baseline year (January 1 through March 31, 2009) and follow-up year (January 1 through March 31, 2011). Interviews with patients' parents were administered at each clinic until at least 130 interviews were completed or the end of the survey period was reached.

Intervention

A clinic consultation was scheduled with each intervention clinic's nursing staff and the physician responsible for immunization during the summer of the baseline year, after the 2008–2009 influenza season. Holding the consultation in advance of the next influenza vaccination season allowed adequate time to implement the new intervention activities. At the time of the consultation, study staff provided intervention clinics with a manual containing an overview of the SD-ICE project, summaries of evidence-based practices (EBPs), and reproducible materials to implement them. Strategies for increasing influenza vaccination rates included chart reminders, reminders and recalls, immunization registry or electronic medical record reminders, special vaccination clinics, and standing orders. These strategies were described in detail, with advantages, resource requirements, implementation steps, and evidence for effectiveness with references. Reproducible materials, provided in both English and Spanish, included sample waiting room screening questionnaires, reminder and recall scripts, and CDC influenza vaccine information statements. SD-ICE staff, consisting of the project's medical director and an intervention specialist, discussed the benefits of implementing these EBPs and baseline influenza vaccine performance results based on patient surveys and random chart audits, and compared each clinic's performance with other study participant clinics and CDC vaccination goals. This comparison was intended to motivate clinics to improve their immunization rates by implementing the outlined EBPs. Project staff offered intervention clinics technical assistance with implementing EBPs by telephone or e-mail.

Control clinics did not receive a manual or academic detailing intervention visit, and project staff did not provide them with feedback on influenza coverage rates from the baseline year of the study.

Patient surveys

The study team conducted surveys from January 1 through March 31, 2009, during the baseline year, and January 1 through March 31, 2011, during the follow-up year. The baseline survey contained 18 questions, while the follow-up survey contained 25 questions. Both surveys collected demographic data, flu vaccination date and status, location of vaccination, and receptivity to the flu shot. Follow-up surveys also included questions asking if the parents noticed flu vaccine posters in waiting rooms, if they received a flu vaccine notification, and other questions pertaining to awareness of flu vaccine promotion. Members of the survey team approached parents of patients in participating medical clinic waiting rooms after their visit with a physician. After parents were screened for eligibility by their child's age, given an overview of the study, and provided written consent, they received the survey. When applicable, trained interviewers administered Spanish versions of the surveys.

Key informant interviews

The project team conducted key informant interviews with each clinic's head physician and office staff responsible for vaccine management. The baseline key informant interview ascertained strategies already in use and gauged clinical prioritization of influenza issues, as well as willingness and ability to make changes to current procedures. Similarly, the follow-up key informant interview addressed strategies used in clinics pertaining to flu vaccination, but also had more focused questions on EBP implementation. The follow-up key informant interview also contained an extra set of questions for those clinics that were in the intervention group that asked whether staff remembered the intervention encounter with study staff and the resource binder detailing suggested EBPs.

Statistical analysis

We conducted data analysis using SAS® version 9.2 (SAS Institute, Inc., Cary, North Carolina).13 We examined descriptive statistics for all variables and conducted chi-square tests to compare the control and intervention groups within the baseline and follow-up years. We reported Pearson's chi-square values and p-values for all comparisons. Variables found to be significant in chi-square testing were adjusted for in further analysis.
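For readers unfamiliar with the test, the following Python sketch shows how Pearson's chi-square statistic and its p-value are obtained for a 2x2 comparison of study group by a binary characteristic. It is a minimal illustration, not the SAS procedure used in the study: the function name is ours and the counts are invented for demonstration and are not study data.

```python
import math


def pearson_chi_square_2x2(table):
    """Pearson's chi-square (no continuity correction) for a 2x2 table of counts."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Hypothetical counts (rows: control, intervention; columns: characteristic present, absent).
counts = [[60, 40], [45, 55]]
chi2, p = pearson_chi_square_2x2(counts)
print(f"chi-square = {chi2:.2f}, p = {p:.3f}")
```

A characteristic whose p-value falls below 0.05 in such a comparison would, under the approach described above, be carried forward as an adjustment variable.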

Generalized linear mixed models, which account for the cluster-randomized design of the study, were used to determine whether vaccination rates differed significantly between baseline and follow-up in the intervention and control groups. Odds ratios (ORs) with 95% confidence intervals (CIs) were reported, with statistical significance set at p<0.05. We repeated this analysis to determine changes in vaccination rates during the late season.
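As a simplified illustration of what an OR with a 95% CI expresses, the sketch below computes an unadjusted odds ratio and a Wald interval from a 2x2 table of vaccinated and unvaccinated children in two study years. It deliberately swaps in a simpler technique: it ignores clustering of patients within clinics, so it is not the mixed model used in the study and would generally give a narrower interval. The function name and the counts are hypothetical.

```python
import math


def odds_ratio_wald_ci(vacc_follow, unvacc_follow, vacc_base, unvacc_base, z=1.96):
    """Unadjusted odds ratio (follow-up vs. baseline) with a Wald confidence interval."""
    odds_ratio = (vacc_follow * unvacc_base) / (unvacc_follow * vacc_base)
    # Standard error of the log odds ratio from the four cell counts.
    se_log_or = math.sqrt(
        1.0 / vacc_follow + 1.0 / unvacc_follow + 1.0 / vacc_base + 1.0 / unvacc_base
    )
    log_or = math.log(odds_ratio)
    lower = math.exp(log_or - z * se_log_or)
    upper = math.exp(log_or + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, not study data.
or_estimate, ci_lower, ci_upper = odds_ratio_wald_ci(
    vacc_follow=120, unvacc_follow=80, vacc_base=100, unvacc_base=100
)
print(f"OR = {or_estimate:.2f} (95% CI {ci_lower:.2f}, {ci_upper:.2f})")
```

An interval whose lower bound sits at or just above 1.00 corresponds to a p-value near the 0.05 threshold.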

RESULTS

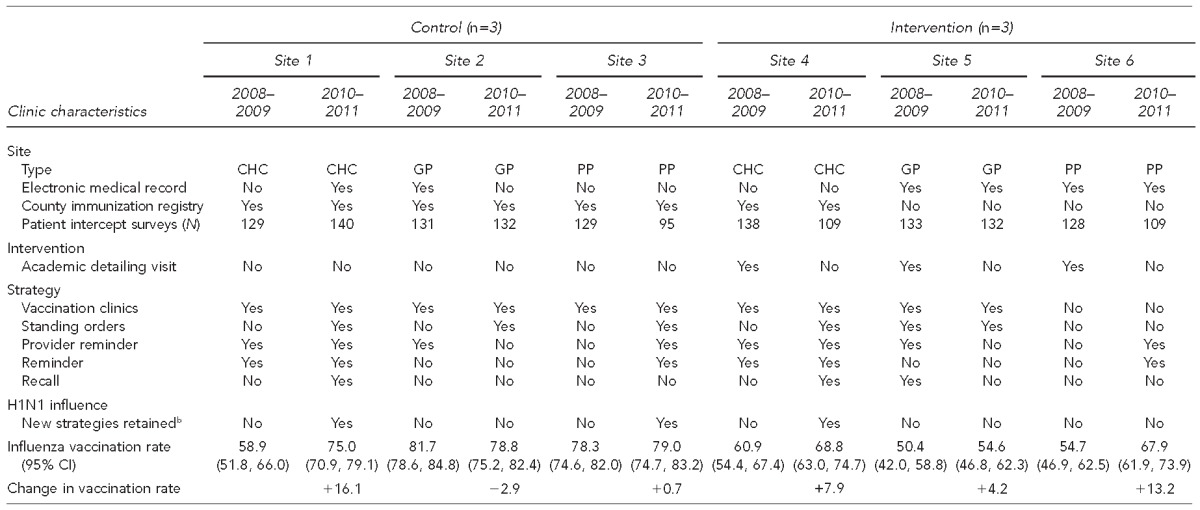

Table 1 presents the characteristics of the participating clinics in both study years, including study group assignment, immunization strategies employed, and change in influenza vaccination rate from baseline to follow-up. Only one clinic (Site 2) experienced a decrease (–2.9%) in its influenza vaccination rate from baseline to follow-up, although it also reported the highest baseline vaccination rate (81.7%). Two of the three intervention clinics and all three control clinics implemented immunization strategies during the follow-up year that were not used during the baseline year.

Table 1.

Comparison of six pediatric medical clinics in San Diego County participating in the San Diego - Influenza Coverage Extension project, by clinic type, use of selected vaccination strategies,a study year (baseline: 2008–2009, follow-up: 2010–2011), and study group

a2010–2011 key informant interview question: Did you implement any new strategies in 2009–2010 that carried into the 2010–2011 influenza season?

b“Yes” means used at site and “no” means not used at site.

CHC = community health center

GP = group practice

PP = private practice

CI = confidence interval

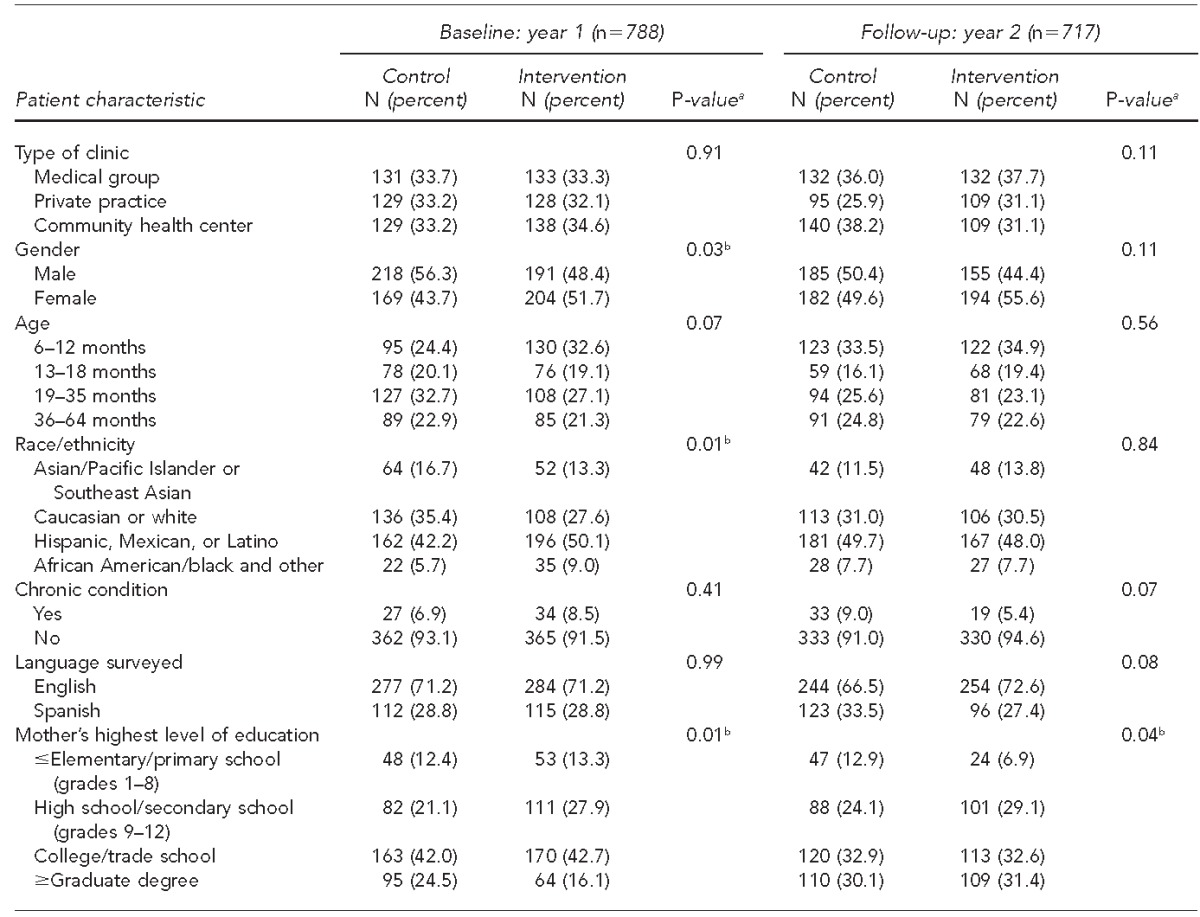

Table 2 displays the results of comparing the control and intervention groups within the baseline and follow-up year. A total of 788 patient intercept surveys were completed in the baseline year—389 from control clinics and 399 from intervention clinics. During the follow-up year, 367 control group surveys and 350 intervention group surveys were completed for a total of 717 patient intercept surveys. In both years, the vast majority of children had no recorded chronic conditions (baseline: 92.3%; follow-up: 92.8%). During baseline, control and intervention populations had significantly different patient genders (p=0.03), ethnicities (p=0.01), and mother's highest education levels (p=0.01). During the follow-up year, only mother's highest level of education was significantly different between the control and intervention groups (p=0.04).

Table 2.

Comparison of control and intervention group participants in six pediatric medical clinics in San Diego County participating in the San Diego - Influenza Coverage Extension project, by baseline study year (2008–2009) and follow-up year (2010–2011)

aP-values from Pearson's chi-square

bStatistically significant at p<0.05

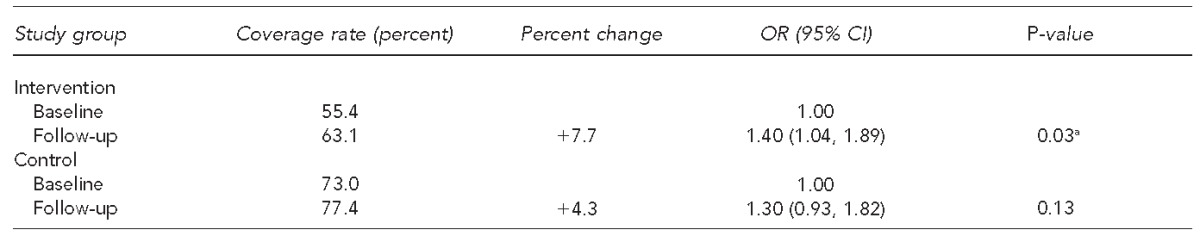

Table 3 displays the change in influenza vaccination coverage rates for the intervention and control group from baseline to follow-up as determined by univariate generalized linear mixed models. The intervention group experienced a significant increase in influenza vaccination rate from 55.4% to 63.1%, with p=0.03, whereas the control group did not experience a significant increase (p=0.13).

Table 3.

San Diego - Influenza Coverage Extension project clinics' influenza vaccination coverage rate changes and study group univariate ORs of vaccination, from baseline (2008–2009) to follow-up (2010–2011), in children aged 6–59 months

aStatistically significant at p<0.05

OR = odds ratio

CI = confidence interval
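The change reported for the intervention group (55.4% to 63.1%) can be restated as an absolute difference and as a crude odds ratio. The short sketch below does that arithmetic; it is unadjusted and ignores clinic-level clustering, so the result should not be read as the model-based OR in Table 3. The function names are ours.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1.0 - p)


def crude_odds_ratio(p_base, p_follow):
    """Odds at follow-up divided by odds at baseline."""
    return odds(p_follow) / odds(p_base)


# Intervention group coverage as reported in the text.
baseline_rate = 0.554
follow_up_rate = 0.631

absolute_change_points = (follow_up_rate - baseline_rate) * 100
crude_or = crude_odds_ratio(baseline_rate, follow_up_rate)
print(f"absolute change = {absolute_change_points:.1f} percentage points")
print(f"crude OR = {crude_or:.2f}")
```

The 7.7 percentage point rise corresponds to a crude OR of about 1.38.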

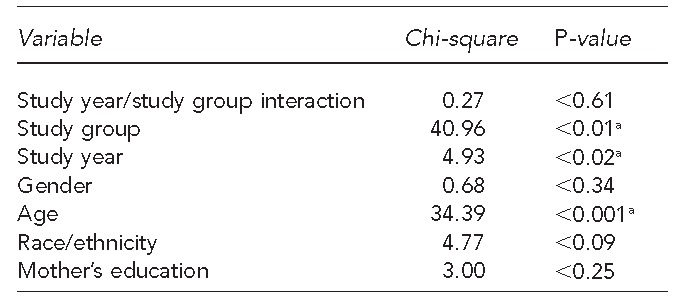

Table 4 displays the generalized linear mixed model used to determine whether the increase in influenza vaccination rates in the intervention group differed from the corresponding increase in the control group, adjusting for significant covariates. The study year by study group interaction in the type III test of fixed effects was not significant (p=0.61) after adjusting for gender, age, ethnicity, and mother's highest level of education, suggesting that the increase in vaccination rates from baseline to follow-up did not differ significantly between the intervention and control groups. Therefore, although coverage improved in the intervention group, it also improved in the control group, suggesting that the improvements may reflect temporal changes rather than the intervention.

Table 4.

Generalized linear mixed-model type III test of fixed effects for study year and study group interaction, adjusting for gender, age, race/ethnicity, and mother's highest level of education, of children aged 6–59 months in pediatric clinics in San Diego County participating in the San Diego - Influenza Coverage Extension project: 2008–2009 (baseline) and 2010–2011 (follow-up)

aStatistically significant at p<0.05

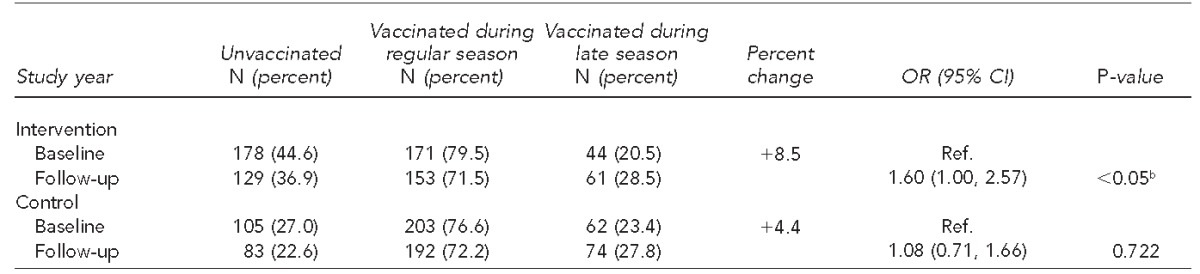

Table 5 displays study year comparisons of influenza vaccination coverage rates during the regular and late season for the intervention and control groups using generalized linear mixed models. In the intervention group, late-season vaccination increased from 20.5% to 28.5% from baseline to follow-up. This increase was borderline significant (p<0.05): the odds of being vaccinated in the late season were 1.60 times (95% CI 1.00, 2.57) as high in the follow-up year as in the baseline year for those in the intervention group. The control group also experienced an increase in late-season vaccination from 23.4% to 27.8%, but the increase was not significant (p=0.72).

Table 5.

Study year comparisons (baseline: 2008–2009, follow-up: 2010–2011) of influenza vaccination coverage rates during regular and late season for intervention and control group children aged 6–59 months in pediatric medical clinics in San Diego County participating in the San Diego - Influenza Coverage Extension projecta

aCalculated using generalized linear mixed models

bStatistically significant at p<0.05

OR = odds ratio

CI = confidence interval

Ref. = reference group
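Two quick checks help in reading the late-season figures reported above. The sketch below (1) converts the reported late-season percentages for each group into crude odds ratios and compares them, and (2) back-calculates an approximate standard error and two-sided p-value from the reported OR of 1.60 and its 95% CI. Both steps are rough approximations under stated assumptions: the crude ORs ignore clustering and covariates, and the back-calculation assumes a Wald-type interval on the log scale and relies on rounded published values. The function names are ours.

```python
import math


def odds(p):
    """Convert a proportion to odds."""
    return p / (1.0 - p)


def crude_odds_ratio(p_base, p_follow):
    """Odds at follow-up divided by odds at baseline."""
    return odds(p_follow) / odds(p_base)


def se_from_ci(lower, upper, z=1.96):
    """Approximate SE of log(OR), assuming a symmetric Wald interval on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2.0 * z)


def two_sided_p(odds_ratio, se_log_or):
    """Two-sided normal p-value for log(OR) = 0."""
    z_stat = math.log(odds_ratio) / se_log_or
    return math.erfc(abs(z_stat) / math.sqrt(2.0))


# (1) Late-season coverage as reported in the text.
or_intervention = crude_odds_ratio(0.205, 0.285)
or_control = crude_odds_ratio(0.234, 0.278)
ratio_of_ors = or_intervention / or_control

# (2) Reported model-based OR and 95% CI for the intervention group.
se_log_or = se_from_ci(1.00, 2.57)
approx_p = two_sided_p(1.60, se_log_or)

print(f"crude OR, intervention = {or_intervention:.2f}")
print(f"crude OR, control = {or_control:.2f}")
print(f"ratio of crude ORs = {ratio_of_ors:.2f}")
print(f"approximate p from CI = {approx_p:.3f}")
```

The crude ORs come out at roughly 1.5 for the intervention group and 1.3 for the control group, and the back-calculated p-value lands close to 0.05, which is consistent with describing the intervention group's late-season increase as borderline.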

DISCUSSION

While both study groups experienced an increase in vaccination rates from baseline to follow-up, only the increase experienced by the intervention group was significant. While this increase supports the idea that the intervention had some role in inducing an increase in influenza vaccination rates, this assumption cannot be confirmed with these results alone, as the control group also experienced some increase in vaccination rate. The baseline-to-follow-up increase in vaccination rate of the intervention group was not significantly different from the increase experienced by the control group. Thus, the extent to which the increase can be attributed to our academic detailing intervention remains unclear. This intervention's results mirror conflicting conclusions from previous academic detailing interventions aimed at increasing vaccination coverage rates. As mentioned previously, Margolis et al. performed a similar intervention aiming to improve process methods for delivery of childhood preventive care.11 Intervention clinics received four coaching visits and the percentage of children per clinic receiving each of the four preventive services was higher in intervention than in control clinics a year later. Significant differences in screenings for tuberculosis (32% vs. 54%), lead (30% vs. 68%), and anemia (71% vs. 79%) were noted, but immunization rates from baseline to follow-up were not significantly different between intervention and control clinics. This similarity in immunization rates between clinic study groups was possibly due to a new universal vaccine purchase program implemented at the time of the study, making it difficult for immunization rates to improve regardless of clinic study group.

We postulate that the 2009 H1N1 influenza pandemic had a similar effect on the nonsignificant difference found in study year ORs for influenza vaccination between our intervention and control clinics. The H1N1 pandemic occurred during the intended SD-ICE follow-up year. There were extensive CDC efforts to promote H1N1 vaccinations, leading to vast improvements in children's vaccination rates for the 2009–2010 season compared with the previous season.14 Our follow-up was postponed to the following influenza season because new influenza vaccine delivery strategies implemented that year would likely be short-lived.

The 2010–2011 follow-up patient intercept surveys asked if the H1N1 pandemic of the previous year influenced patients to get an influenza vaccine. More respondents stated that H1N1 did not influence their vaccination decision (54.5% control group, 57.8% intervention group) than stated that it did. This finding was counter to our expectation given the extensive media coverage and the observed increase in coverage rates for both control and intervention groups during follow-up compared with baseline. This suggests that the H1N1 pandemic and the academic detailing intervention were probably not the only causes of the increase in influenza vaccination rates from baseline to follow-up in both the control and intervention groups.

Because influenza recommendations for younger children have a short history, the observed increase in coverage rates for all clinics may have been due to the slow temporal increases that are commonly observed when any new vaccine is added to pediatric recommendations. Another possibility is that although the majority of parents reported not being influenced by H1N1 in being vaccinated for influenza during the follow-up year, the providers were. In follow-up key informant interviews, three of six clinics carried over new strategies implemented in the 2009–2010 influenza season into the next influenza season. Of those three clinics, one was an intervention clinic and two were control clinics. These changes likely led to the improvement in vaccination rates from baseline to follow-up.

Another study encountered a similar problem during the 2003–2004 influenza season in Denver, Colorado.15 An abnormally severe influenza season began in October 2003 and resulted in several pediatric deaths the following month, prompting widespread media coverage and attention to influenza vaccinations. The effects of the reminder/recall intervention implemented during the study may have been underestimated, as rates of influenza vaccination increased in all groups. In 6- to 11-month-olds, influenza vaccination rates in the intervention group were significantly higher than in the control group before media coverage but not significant afterward. Thus, it is plausible that the influenza event diminished the effect of the reminder/recall intervention, which may explain the SD-ICE results.

Another objective of this intervention project was to test the feasibility of extending the influenza vaccination season. The control clinics did not exhibit a significant increase in late-season vaccination rates. The increase in late-season vaccination from baseline to follow-up reflected a change in the timing of influenza vaccination, not a substantial change in vaccination practice. These results echo those of Suh et al., in which participating clinics reported an increase in late-season vaccinations but physicians were not vaccinating enough patients to raise overall vaccination coverage.16

Limitations

This intervention was subject to several limitations. The interruption caused by the H1N1 pandemic during the study's follow-up year resulted in pushing back the planned follow-up to the next season. Moreover, extensive H1N1 media coverage clouded the results in terms of whether or not academic detailing was solely responsible for increasing vaccination rates. H1N1 also influenced some clinics to adopt new immunization activities to keep up with vaccine demand, which included employing immunization-only clinics and standing orders. In this case, a reported increase in immunization practices during follow-up in comparison with the baseline may have been due to the clinic's reaction to H1N1, not the intervention.

Another concern was that clinics that were randomized to the control group had higher influenza vaccination rates during the baseline year. This artifact of randomization meant that control clinics had less potential for improvement of their influenza vaccination rates from baseline to follow-up, while the intervention group, which started with lower influenza vaccination rates during the baseline, had a greater potential for a larger increase. This large difference in baseline rates also makes it difficult to compare the control and intervention groups.

Finally, throughout the implementation of this intervention, it became apparent from key informant interviews during follow-up that a number of medical clinics experienced changes in important vaccination management staff between baseline and follow-up. These changes likely negatively affected clinics in the intervention group, as clinic staff familiar with the intervention and present at the academic detailing visit were no longer at the clinic. Key informant interviews were completed with the head physician or vaccine manager at the time of the follow-up interview, regardless of their involvement during the baseline year. This discontinuity of essential personnel potentially limited the effectiveness of this intervention, as replacement staff had limited knowledge of prior results and EBPs. Further, they were not introduced to the intervention, nor did they engage the intervention staff who were available for technical assistance.

CONCLUSION

This study assessed the effectiveness of an academic detailing intervention designed to increase influenza vaccination in children aged 6–59 months in six San Diego County primary care clinics. Analysis revealed that the baseline-to-follow-up increase in vaccination rates experienced by the intervention group did not differ significantly from that experienced by the control group. Thus, the academic detailing intervention did not appear to have an effect on influenza vaccination rates. We speculate that the observed increase reflects the year-by-year implementation of updated Advisory Committee on Immunization Practices recommendations. It is also possible that the H1N1 pandemic during the year between baseline and follow-up influenced providers' attitudes and clinic immunization activities. However, our follow-up key informant interviews documented only a limited increase in adopting EBPs. This intervention's attempt to increase influenza vaccination in the late season was also ineffective.

Although the results indicate that this intervention was unsuccessful, it was an attempt to address an unacceptably low influenza vaccination rate in a vulnerable population. Methods from this intervention could be scaled up to be more intensive and include more frequent interaction with participating providers to ensure its success in the future and progress toward the influenza immunization goals of Healthy People 2020.

Footnotes

This research protocol was approved by the University of California, San Diego (UCSD) School of Medicine Institutional Review Board.

REFERENCES

- 1. Fleming DM, Pannell RS, Cross KW. Mortality in children from influenza and respiratory syncytial virus. J Epidemiol Community Health. 2005;59:586–90. doi: 10.1136/jech.2004.026450.

- 2. Belshe RB, Mendelman PM, Treanor J, King J, Gruber WC, Piedra P, et al. The efficacy of live attenuated, cold-adapted, trivalent, intranasal influenza virus vaccine in children. N Engl J Med. 1998;338:1405–12. doi: 10.1056/NEJM199805143382002.

- 3. Guide to Community Preventive Services. Increasing appropriate vaccination [cited 2014 Oct 17]. Available from: URL: www.thecommunityguide.org/vaccines/index.html.

- 4. Stinchfield PK. Practice-proven interventions to increase vaccination rates and broaden the immunization season. Am J Med. 2008;121:S11–21. doi: 10.1016/j.amjmed.2008.05.003.

- 5. Centers for Disease Control and Prevention (US). Results from the March 2011 National Flu Survey—United States, 2010–11 influenza season [cited 2014 Oct 2]. Available from: URL: http://www.cdc.gov/flu/pdf/fluvaxview/fluvacsurvey.pdf.

- 6. Department of Health and Human Services (US). Healthy People 2020: objective IID-12: increase the percentage of children and adults who are vaccinated annually against seasonal influenza [cited 2014 Oct 17]. Available from: URL: http://www.healthypeople.gov/2020/topics-objectives/topic/immunization-and-infectious-diseases/objectives.

- 7. Soumerai SB, Avorn J. Principles of educational outreach (“academic detailing”) to improve clinical decision making. JAMA. 1990;263:549–56.

- 8. Boom JA, Nelson CS, Laufman LE, Kohrt AE, Kozinetz CA. Improvement in provider immunization knowledge and behaviors following a peer education intervention. Clin Pediatr (Phila). 2007;46:706–17. doi: 10.1177/0009922807301484.

- 9. Bordley WC, Chelminski A, Margolis PA, Kraus R, Szilagyi PG, Vann JJ. The effect of audit and feedback on immunization delivery: a systematic review. Am J Prev Med. 2000;18:343–50. doi: 10.1016/s0749-3797(00)00126-4.

- 10. Larson K, Levy J, Rome MG, Matte TD, Silver LD, Frieden TR. Public health detailing: a strategy to improve the delivery of clinical preventive services in New York City. Public Health Rep. 2006;121:228–34. doi: 10.1177/003335490612100302.

- 11. Margolis PA, Lannon CM, Stuart JM, Fried BJ, Keyes-Elstein L, Moore DE Jr. Practice based education to improve delivery systems for prevention in primary care: randomised trial. BMJ. 2004;328:388. doi: 10.1136/bmj.38009.706319.47.

- 12. San Diego Immunization Program. Influenza coverage rates: San Diego RDD surveys 2008–2010. 2010 [cited 2010 Jan 26]. Available from: URL: http://www.sdiz.org/data-stats/docs/RDD-stats-chart5.pdf.

- 13. SAS Institute, Inc. SAS®: Version 9.2. Cary (NC): SAS Institute, Inc.; 2008.

- 14. Update: influenza activity—United States, 2009–10 season. MMWR Morb Mortal Wkly Rep. 2010;59(29):901–8.

- 15. Kempe A, Daley MF, Barrow J, Allred N, Hester N, Beaty BL, et al. Implementation of universal influenza immunization recommendations for healthy young children: results of a randomized, controlled trial with registry-based recall. Pediatrics. 2005;115:146–54. doi: 10.1542/peds.2004-1804.

- 16. Suh C, McQuillan L, Daley MF, Crane LA, Beaty B, Barrow J, et al. Late-season influenza vaccination: a national survey of physician practice and barriers. Am J Prev Med. 2010;39:69–73. doi: 10.1016/j.amepre.2010.03.010.