Abstract

Diagnostic errors are major contributors to harmful patient outcomes, yet they remain a relatively understudied and unmeasured area of patient safety. Although they are estimated to affect about 12 million Americans each year in ambulatory care settings alone, both the conceptual and pragmatic scientific foundation for their measurement is under-developed. Health care organizations do not have the tools and strategies to measure diagnostic safety and most have not integrated diagnostic error into their existing patient safety programs. Further progress toward reducing diagnostic errors will hinge on our ability to overcome measurement-related challenges. In order to lay a robust groundwork for measurement and monitoring techniques to ensure diagnostic safety, we recently developed a multifaceted framework to advance the science of measuring diagnostic errors (The Safer Dx framework). In this paper, we describe how the framework serves as a conceptual foundation for system-wide safety measurement, monitoring and improvement of diagnostic error. The framework accounts for the complex adaptive sociotechnical system in which diagnosis takes place (the structure), the distributed process dimensions in which diagnoses evolve beyond the doctor's visit (the process) and the outcomes of a correct and timely “safe diagnosis” as well as patient and health care outcomes (the outcomes). We posit that the Safer Dx framework can be used by a variety of stakeholders including researchers, clinicians, health care organizations and policymakers, to stimulate both retrospective and more proactive measurement of diagnostic errors. The feedback and learning that would result will help develop subsequent interventions that lead to safer diagnosis, improved value of health care delivery and improved patient outcomes.

Keywords: Diagnostic errors; Health services research; Information technology; Medical error, measurement/epidemiology; Quality measurement

Introduction

Diagnostic errors are major contributors to harmful patient outcomes,1–7 yet they remain a relatively understudied and unmeasured area of patient safety.8 Diagnostic errors are estimated to affect about 12 million Americans each year in ambulatory care settings alone.9 Many studies, including those involving record reviews and malpractice claims, have shown that common conditions (ie, not just rare or difficult cases) are often missed, leading to patient harm.1 4 10 11 However, both the conceptual and pragmatic scientific foundation for measurement of diagnostic errors is underdeveloped. Healthcare organisations do not have the tools and strategies to measure diagnostic safety and most have not integrated diagnostic error into their existing patient safety programmes.12 Thus, diagnostic errors are generally underemphasised in the systems-based conversation on patient safety.

Many methods have been suggested to study diagnostic errors, including autopsies, case reviews, surveys, incident reporting, standardised patients, second reviews and malpractice claims.13 While most of these have been tried in research settings, most organisations do not use any of these systematically to measure and monitor diagnostic error in routine practice. Most methods look at a select sample of diagnostic errors and do not individually provide a comprehensive picture of the problem. Compared with other safety concerns, there are also fewer sources of valid and reliable data that could enable measurement. Furthermore, unique challenges to reliable measurement such as difficult conceptualisation of the diagnostic process and often blurred boundaries between errors related to diagnosis versus those in other healthcare processes (eg, screening, prevention, management or treatment) make measurement more difficult. Because improving measurement of patient safety is essential to reducing adverse events,14 further progress toward reducing diagnostic errors will hinge on our ability to overcome these measurement-related challenges.

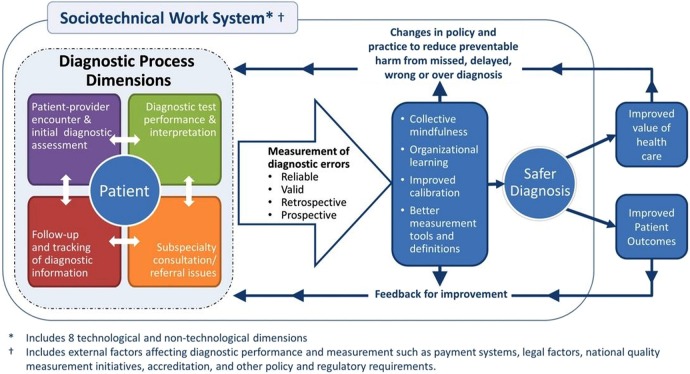

In 2015, the Institute of Medicine (IOM) will release a comprehensive report on diagnostic errors in continuation of the IOM Health Care Quality Initiative that previously published the landmark IOM reports on quality and safety.15 The complexity of measuring diagnostic errors is thus bound to gather widespread attention. In order to lay a robust groundwork for measurement and monitoring techniques to ensure diagnostic safety, we recently developed a multifaceted framework to advance the science of measuring diagnostic errors (Safer Dx framework: figure 1). This framework provides a conceptual foundation for system-wide safety measurement, monitoring and improvement and is further described in this paper. We cannot measure what we cannot define and thus for purposes of this paper, we used an operational definition of diagnostic error that has been extensively used in our work.16 In brief, we define diagnostic errors as missed opportunities to make a correct or timely diagnosis based on the available evidence, regardless of patient harm.16

Figure 1. The Safer Dx framework for measurement and reduction of diagnostic errors.

Framework rationale

We envision the need for the Safer Dx framework because current organisational processes, procedures and policies do not necessarily facilitate or focus on measurement of diagnostic safety, which is essential for creating future feedback and learning to improve patient care. The Safer Dx framework is expected to facilitate feedback and learning to help accomplish two short-term goals: (1) refine the science of measuring diagnostic error and (2) make diagnostic error an organisational priority by securing commitment from organisational leadership and refocusing the organisation's clinical governance structure. High-quality diagnostic performance requires both a well-functioning healthcare system and high-performing individual providers and care teams within that system.17 Therefore, the framework posits that ideal measurement and monitoring techniques should facilitate feedback and learning at all of these levels.

Operationalising the Safer Dx framework

Our framework follows Donabedian's Structure–Process–Outcome model,18 which approaches quality improvement in three domains: (1) structure (characteristics of care providers, their tools and resources, and the internal physical/organisational setting along with the external regulatory environment); (2) process (both interpersonal and technical aspects of activities that constitute healthcare); and (3) outcome (change in the patient's health status or behaviour). We made several modifications to adapt these three domains to diagnostic error-related measurement work.

Structure

Given the widespread adoption of health information technologies and their significant implications for both measuring and improving the distributed diagnostic process that evolves over multiple care settings, we need to understand the ‘sociotechnical’ context of diagnosis. Our work illustrates the many technical and non-technical (workflow, organisational, people and internal and external policy related) variables that are likely to affect diagnostic safety, supporting the need for a multifaceted sociotechnical approach to improve diagnosis-related performance and measurements.19 Examples of underlying vulnerabilities that require sociotechnical approaches include failure to track patients and their abnormal findings over time, failure to recognise ‘red flags’ in patient presentation even within health information technology-enabled care settings, and lack of reliable quality measurement and feedback systems to analyse diagnostic performance comprehensively. Within the sociotechnical context, many system-related factors could shape cognitive performance and critical thinking and make clinicians vulnerable to losing situational awareness.20 External structural factors that can affect diagnostic performance and measurement include clinical productivity and legal pressures, reimbursement issues, administrative demands and other confounding factors related to ongoing mandatory quality measurements. Thus, the framework includes factors such as payment systems, legal factors, national quality measurement initiatives, accreditation and other policy and regulatory requirements.

In our framework, structure reflects the entire complex adaptive sociotechnical system and has eight technical and non-technical dimensions21 as described in table 1.

Table 1. Sociotechnical dimensions21 comprising the ‘structure’ of the Safer Dx framework

| Dimension | Description |
|---|---|
| Hardware and software | Computing infrastructure used to support and operate clinical applications and devices |
| Clinical content | The text, numeric data and images that constitute the ‘language’ of clinical applications |
| Human–computer interface | All aspects of technology that users can see, touch or hear as they interact with it |
| People | Everyone who is involved with patient care and/or interacts in some way with healthcare delivery (including technology). This would include patients, clinicians and other healthcare personnel, information technology (IT) developers and other IT personnel and informaticians |
| Workflow and communication | Processes to ensure that patient care is carried out effectively |
| Internal organisational features | Policies, procedures, work environment and culture |
| External rules and regulations | Federal or state rules (eg, CMS's Physician Quality Reporting Initiative22 or requirements for associating a diagnosis with a request for a diagnostic test23) that facilitate or constrain preceding dimensions |
| Measurement and monitoring | Processes to evaluate both intended and unintended consequences |

CMS, Centers for Medicare & Medicaid Services.

Process

Processes in the Safer Dx framework account for the fact that diagnosis evolves over time and is not limited to events during a single provider visit. Accordingly, the framework includes five interactive process dimensions: (1) the patient–provider encounter (history, physical examination, ordering tests/referrals based on assessment); (2) performance and interpretation of diagnostic tests; (3) follow-up and tracking of diagnostic information over time; (4) subspecialty and referral-specific factors; and (5) patient-related factors.10

Outcome

Outcomes in our Safer Dx framework include intermediate outcomes, such as safe diagnosis (correct and timely as opposed to missed, delayed, wrong or overdiagnosis), and account for patient and healthcare delivery outcomes. In much of the measurement-related work, we envision that the intermediate outcome of a safe diagnosis would be the default primary outcome.

Prerequisites of safer diagnosis

In the absence of specific diagnosis-related measurements, organisations cannot maintain situational awareness of their diagnostic safety and thus our framework proposes that improved measurement of diagnostic error should lead to better collective mindfulness and organisational learning.24 Collective mindfulness is a concept from high-reliability organisations that emphasises awareness and action on the part of all involved. It describes the ability of everyone within an organisation to consistently focus on issues that have the potential to cause harm without losing sight of the ‘forest among the trees’25 and to act effectively upon data (even when signals are subtle) to positively transform the organisation.26

The Safer Dx framework is expected to lead to learning and feedback to improve diagnostic safety. While organisational safety and risk management personnel often do not have the tools to measure and address diagnostic error,27 achieving collective mindfulness acknowledges that organisations and practices will need to become ‘preoccupied’ with diagnostic errors to push measurement ahead. Health care organisations (HCOs) will need to gather ‘intelligence’ related to diagnostic safety through multiple retrospective and prospective surveillance methods to inform good measures and solutions. Organisational learning about diagnostic safety will lead to better calibration related to diagnostic performance at both provider and system level and better methods for assessment and feedback about what matters in improving safety.28 Provider calibration would lead to better alignment between actual and perceived diagnostic accuracy and system calibration would entail better alignment between what needs to be measured and what the organisation is actually measuring. Providers receive very little, if any, feedback on the accuracy of their diagnoses and are often unaware of their patients’ ultimate outcomes.29 This also applies to both small clinics and larger institutions where such feedback could be useful to change structures and processes involved in diagnosis and lead to changes in policy and practice to reduce diagnostic error.
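The idea of provider calibration can be illustrated with a small calculation. The sketch below is only one possible operationalisation and is not a method described in this paper: it assumes, hypothetically, that each case records the provider's stated confidence in the diagnosis (between 0 and 1) and whether the diagnosis was later confirmed as correct. The function name `calibration_gap` and the example data are invented for illustration.

```python
from typing import List, Tuple


def calibration_gap(cases: List[Tuple[float, bool]]) -> float:
    """Return mean stated confidence minus observed accuracy.

    Each case is (stated_confidence, was_correct). This definition is an
    assumption made for illustration. A positive value suggests
    overconfidence, a negative value underconfidence, and a value near
    zero suggests perceived and actual accuracy are aligned.
    """
    if not cases:
        raise ValueError("at least one case is required")
    mean_confidence = sum(conf for conf, _ in cases) / len(cases)
    accuracy = sum(1 for _, correct in cases if correct) / len(cases)
    return mean_confidence - accuracy


# Hypothetical feedback data for one provider (not real results).
example_cases = [(0.9, True), (0.9, False), (0.8, True), (0.8, False)]
gap = calibration_gap(example_cases)
print(f"calibration gap: {gap:+.2f}")
```

In this made-up example the provider states 85% confidence on average but is correct in half the cases, so the gap is positive. Feeding such a figure back to providers would still require answers to the questions about content, delivery and timing of feedback raised later in the paper.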

The application of the Safer Dx framework emphasises learning about diagnostic errors, which should lead to development of better measurement tools and rigorous definitions of what missed opportunities the organisation should focus on. Thus, it is expected to provide more evidence to support measurement including the iterative development of rigorous ‘sharp-end’ outcome measures to determine errors as they occur as well as ‘blunt-end’ measures of system-level diagnostic performance.

Challenges of real-world diagnostic error measurement

While the Safer Dx framework could provide a useful impetus for using both retrospective and prospective methods to improve the ‘basic science’ of measuring diagnostic safety,16 several challenges remain. Most measurement methods are still evolving and have limitations. In this section, we illustrate challenges of certain current methods to routinely measure diagnostic errors.

As Graber et al30 have emphasised, there is ‘not a single HCO in the United States that is systematically measuring the rate of diagnostic error in its clinics, hospitals, or emergency departments.’ One of the reasons is that HCOs do not know how to find these errors. Nevertheless, organisations will need to start somewhere before we arrive at better measurement and monitoring techniques.

While there have been some early successes with voluntary reporting by providers,31 32 there are no standardised mechanisms or incentives for providers to report diagnostic errors. Further, diagnostic error incident reports from providers are not included in the standardised error reporting protocols in the common formats developed by the Agency for Healthcare Research and Quality.33 It has also become obvious that quality improvement team support and local champions are needed to sustain reporting behaviour.32 At present, few HCOs allocate the ‘protected time’ that is essential for clinicians to report, analyse and learn from safety events. A recently implemented programme at Maine Medical Center was found to be resource intensive and faced challenges in sustainability as well as in identifying the underlying contributory factors and developing and implementing strategies to reduce error.30 Even if these challenges could be overcome, voluntary reporting alone cannot address the multitude of complex diagnostic safety concerns.34 Many times, physicians are unaware of errors they make.35 Although accounts from providers necessarily play an important role in understanding breakdowns in the diagnostic process, provider reporting can only be one aspect of a comprehensive measurement strategy. Patient reports could be a source of measurements about events not discovered through other means36 and recent studies have collected data about diagnostic errors from patients. For example, of 1697 patients surveyed in seven primary care practices, 265 reported physician mistakes and 227 of these involved a diagnostic error.37 Although patient reporting has significant future potential, it has not been validated as a method to measure diagnostic error yet.
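The patient survey figures above can be turned into proportions with simple arithmetic. The short sketch below uses only the three counts quoted from the cited study; the variable names are ours, and the resulting percentages are patient-reported proportions, not validated diagnostic error rates.

```python
# Counts quoted in the text from the cited patient survey.
surveyed = 1697          # patients surveyed in seven primary care practices
reported_mistake = 265   # patients who reported a physician mistake
diagnostic = 227         # reported mistakes that involved a diagnostic error

share_reporting_mistake = reported_mistake / surveyed
share_diagnostic_among_mistakes = diagnostic / reported_mistake
share_diagnostic_among_surveyed = diagnostic / surveyed

print(f"Reported any physician mistake: {share_reporting_mistake:.1%}")
print(f"Diagnostic errors among reported mistakes: {share_diagnostic_among_mistakes:.1%}")
print(f"Reported a diagnostic error, of all surveyed: {share_diagnostic_among_surveyed:.1%}")
```

Run as written, this gives roughly 15.6%, 85.7% and 13.4%, respectively.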

While medical record reviews are considered valuable for detecting diagnostic errors, non-selective record review is burdensome and resource intensive. To improve the yield of record reviews, we have developed ‘triggers’38 39 to alert personnel to potential patient safety events, which enables selective targeted review of high-risk patients.40 41 The robust electronic health record (EHR) and large clinical data warehouses within the Department of Veterans Affairs (VA) healthcare system have allowed us to pursue research on electronic trigger development, enabling us to identify preventable conditions likely to result in harm.40 42 Triggers can be used both retrospectively and prospectively, but they are yet to move beyond the research environment into real-world practice.

The use of administrative data (eg, use of billing data as proxy for evolution of clinical diagnosis) to identify possible diagnostic errors is not sufficiently sensitive to detect events of interest. Recent work has tried to refine approaches to use administrative datasets for measurement of diagnostic errors related to stroke and myocardial infarction.43 44 However, this approach alone is unlikely to become a viable basis for rigorous measurement in and of itself in part because of the absence of clinical details related to diagnostic evolution and corresponding care processes.

Prospective methods such as use of unannounced standardised patients help to overcome limitations of other methods, such as chart reviews, which often lack certain details including those related to patient context.45 However, direct observations and simulations, though relatively rigorous, have distinct drawbacks; direct observations are expensive, and simulations may not generalise well to real-world practice.13 An alternative strategy is to develop means of gaining more valid and actionable insights from existing data sources in a proactive fashion.15 For example, many organisations are conducting safety huddles46 and reviews of patient presentations, though we are unaware of any efforts to monitor these sources for diagnosis-related issues. Similarly, we found that peer review may be a useful tool for healthcare organisations when assessing their sharp-end clinical performance, particularly for diagnosis-related safety events.47 However, current peer review systems are inadequate and need to include root cause analysis approaches and performance improvement thinking to detect and address system issues.48 This enhancement of existing peer review programmes could greatly improve processes for diagnostic self-assessment, feedback and improvement.47

Once HCOs start to measure diagnostic safety, ideally using multiple methods (both retrospective and prospective), the findings would lead to further refinement of the science of measuring diagnostic error. This could lead to creation of standardised methods for measuring and monitoring diagnostic error and determining the effects of intervention or improvement. To make this a reality, external rules, regulations and policies would be necessary to make diagnostic error an organisational priority and to secure commitment from the organisational leadership. Once we have the focus of the organisation's clinical governance structure, the Safer Dx framework suggests that two additional challenges must be overcome for conducting and using measurements at the institutional level.

First, information must be directed to the right people in the appropriate organisational roles. Currently, most institutions do not have dedicated personnel who can analyse and act upon this information. Risk and safety managers routinely collect quality and safety data from multiple sources and are often privy to data unavailable to others, but they have little guidance on how to approach diagnostic errors.49 Second, measurements must generate feedback and learning to improve calibration. However, currently the mechanisms of generating and delivering feedback at the organisational level and provider level remain unknown.

To facilitate measurement and learning, policymakers working on monitoring and quality improvement and researchers would need to address the following questions about giving feedback: (1) what type of content is appropriate for feedback (eg, a return visit based on administrative data which may or may not be related to error vs a clear preventable diagnostic adverse event determined only after a comprehensive review of an incident), (2) what should be the method of delivery (eg, verbal vs written; in person vs by phone; individual vs group; anonymous vs identified), (3) what should be the timing and frequency (eg, delayed until an event is clear vs real-time as details become available) and (4) how do we create feedback that is unambiguous, non-threatening and non-punitive. Use of the Safer Dx framework should lead to shared accountability beyond the clinicians directly involved, including improving the processes and systems they practice in. Because the framework also focuses on value as an outcome, it should help address the challenge of mitigating unintended consequences of feedback-related hypervigilance, such as increased testing/treatment.

Suggested application of the Safer Dx framework and next steps

Use of the Safer Dx framework accounts for the complexity of diagnostic error and will lead to addressing the sociotechnical context while understanding and preventing error. We envision that it will help overcome some of the challenges in exploration, measurement and ultimately improvement of the diagnostic care process within a technology-enabled healthcare system. We have used the approach suggested by this framework in our current work on measuring and reducing delays from missed follow-up of abnormal diagnostic test results in EHR-enabled healthcare. The Safer Dx framework has enhanced our conceptual understanding of the problem and informed intervention development. For example, we often suspected specific aspects of the EHR led to missed follow-up (such as the software or the user interface)50 but we learnt that the user interface is only one, albeit an important, part of the problem. Conversely, we identified and fixed a software configuration error that prevented providers at our site from receiving notifications of abnormal faecal occult blood test results,51 but in doing so we also found problems related to organisational factors, workflow and human–computer interaction, all of which needed to be addressed to improve follow-up.52 In a different study, we found that the same technical intervention to improve test result follow-up in two different VA facilities led to improved outcomes in only one facility.53 The findings remind us about the importance of accounting for the local sociotechnical context as well as the external financial and regulatory environment in which safety innovations are embedded.54

The Safer Dx framework also encourages its users to leverage the transformational power of state-of-the-art health information technology and the EHR in developing interventions and feedback for improvement. Based on the Safer Dx framework, organisations should be harnessing a wealth of electronic data and these data need to be put to use to provide actionable information on diagnostic error. As an example, with funding from the VA,55 we are now developing an automated measurement and surveillance intervention to improve timely diagnosis and follow-up of cancer-related abnormal findings and applying the Safer Dx framework. We are using a large, national data warehouse to trigger medical records with evidence of potential delays in follow-up of cancer-related abnormal test results40 and have continually worked over the past 2 years to make measurements more valid and reliable. Additionally, to integrate near real-time surveillance and communication of information about at-risk patients into the point of care, we are adhering to many of the organisational learning elements of the Safer Dx framework. Safer Dx encourages the involvement of the organisation's existing safety/quality improvement (QI) infrastructure, including risk managers and safety managers, and more effective use of these personnel to take action and improve collective mindfulness. Through qualitative work, we are working with our organisational partners to ensure that the data we provide them will be used meaningfully, will lead to improvement in collective mindfulness and learning, and will be fed back to the providers on the frontlines to improve diagnostic processes. The framework reminds us that various internal and external policies and measurement procedures, if not designed and implemented with the entire sociotechnical context in mind, can affect diagnostic performance and measurements.

The Safer Dx framework could also help jump-start the improvement of diagnostic safety measurement by informing the design of proactive risk assessment strategies at the organisational or practice level. As used in other high-risk settings and industries, proactive risk assessment involves self-assessment to identify specific areas of vulnerability and ensure readiness to apply preventive measures to promote safety and reduce adverse events. Stakeholders, including policymakers, QI/safety personnel or researchers, could help develop tools for risk assessment based on the framework. For example, with support from the Office of the National Coordinator for Health Information Technology, we previously used a rigorous, iterative process to develop a set of nine self-assessment tools to optimise the safety and safe use of EHRs using our eight-dimension sociotechnical model.56–58 These tools, referred to as the Safety Assurance Factors for EHR Resilience guides, are each organised around a set of ‘recommended practices’.59 The goal is also to change local culture and foster the implementation of risk-reducing practices. Similar tools could be developed based on the Safer Dx framework, using a multifaceted approach to assess risk proactively and drive improvements in the quality and safety of diagnosis.58

Last, policymakers working on diagnostic error-related measurement issues would need more evidence on what structural, process and outcomes-related issues need to be measured for improving diagnostic safety. This groundwork could inform the development of future performance measures at a national level in partnership with the National Quality Forum.

Summary

Diagnostic errors are considered harder to tackle8 and remain elusive to improvement efforts in part because they are difficult to define and measure.60 A new conceptual framework (the Safer Dx framework) can be used by a variety of stakeholders including researchers, clinicians, policymakers and healthcare organisations to stimulate the development of local, regional and national strategies and tools for measuring and monitoring diagnostic error. The resulting knowledge would be instrumental in laying the foundation for both retrospective and more proactive measurement of diagnostic errors. The feedback and learning that would result will help develop subsequent interventions that lead to safer diagnosis, improved value of healthcare delivery and improved patient outcomes.

Footnotes

Contributors: HS and DFS have significantly contributed to the planning, conduct and reporting of the work reported in this article. Concept and design; analysis and interpretation; drafting and revision of the manuscript; administrative and technical support: HS, DFS.

Funding: HS is supported by the VA Health Services Research and Development Service (CRE 12-033; Presidential Early Career Award for Scientists and Engineers USA 14-274), the VA National Center for Patient Safety and the Agency for Health Care Research and Quality (R01HS022087). This work is supported in part by the Houston VA HSR&D Center for Innovations in Quality, Effectiveness and Safety (CIN 13-413). HS presented the Safer Dx framework to the Institute of Medicine Diagnostic Error in Health Care Consensus Study Committee on 6 August 2014.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1.Bishop TF, Ryan AM, Casalino LP. Paid malpractice claims for adverse events in inpatient and outpatient settings. JAMA 2011;305:2427–31. 10.1001/jama.2011.813 [DOI] [PubMed] [Google Scholar]

- 2.Gandhi TK. Fumbled handoffs: one dropped ball after another. Ann Intern Med 2005;142:352–8. 10.7326/0003-4819-142-5-200503010-00010 [DOI] [PubMed] [Google Scholar]

- 3.Gandhi TK, Kachalia A, Thomas EJ, et al. . Missed and delayed diagnoses in the ambulatory setting: a study of closed malpractice claims. Ann Intern Med 2006;145:488–96. 10.7326/0003-4819-145-7-200610030-00006 [DOI] [PubMed] [Google Scholar]

- 4.Phillips RL Jr, Bartholomew LA, Dovey SM, et al. . Learning from malpractice claims about negligent, adverse events in primary care in the United States. Qual Saf Health Care 2004;13:121–6. 10.1136/qshc.2003.008029 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Singh H, Thomas EJ, Khan MM, et al. . Identifying diagnostic errors in primary care using an electronic screening algorithm. Arch Intern Med 2007;167:302–8. 10.1001/archinte.167.3.302 [DOI] [PubMed] [Google Scholar]

- 6.Singh H, Weingart SN. Diagnostic errors in ambulatory care: dimensions and preventive strategies. Adv Health Sci Educ Theory Pract 2009;14(Suppl 1):57–61. 10.1007/s10459-009-9177-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Singh H, Graber M. Reducing diagnostic error through medical home-based primary care reform. JAMA 2010;304:463–4. 10.1001/jama.2010.1035 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Schiff GD, Kim S, Abrams R, et al. . Diagnosing diagnosis errors: Lessons from a multi-institutional collaborative project. Advances in Patient Safety: From Research to Implementation (Volume 2: Concepts and Methodology). Rockville, MD: Agency for Healthcare Research and Quality, AHRQ Publication Nos. 050021 (1-4), 2005:255–78. [PubMed] [Google Scholar]

- 9.Singh H, Meyer AN, Thomas EJ. The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Qual Saf 2014;23:727–31. 10.1136/bmjqs-2013-002627 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Singh H, Giardina TD, Meyer AN, et al. . Types and origins of diagnostic errors in primary care settings. JAMA Intern Med 2013;173:418–25. 10.1001/jamainternmed.2013.2777 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Schiff GD, Puopolo AL, Huben-Kearney A, et al. . Primary care closed claims experience of Massachusetts malpractice insurers. JAMA Intern Med 2013;173:2063–8. 10.1001/jamainternmed.2013.11070 [DOI] [PubMed] [Google Scholar]

- 12.Wachter RM. Why diagnostic errors don't get any respect—and what can be done about them. Health Aff (Millwood) 2010;29:1605–10. 10.1377/hlthaff.2009.0513 [DOI] [PubMed] [Google Scholar]

- 13.Graber ML. The incidence of diagnostic error in medicine. BMJ Qual Saf 2013;22(Suppl2):ii21–7. 10.1136/bmjqs-2012-001615 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Vincent C, Burnett S, Carthey J. Safety measurement and monitoring in healthcare: a framework to guide clinical teams and healthcare organisations in maintaining safety. BMJ Qual Saf 2014;23:670–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Measurement, Reporting, and Feedback on Diagnostic Error, report to the Committee on Diagnostic Error in Health Care, Institute of Medicine (IOM). Committee on Diagnostic Error in Health Care, Institute of Medicine, 14 August 7; 2014. [Google Scholar]

- 16.Singh H. Editorial: Helping Health Care Organizations to Define Diagnostic Errors as Missed Opportunities in Diagnosis. Jt Comm J Qual Patient Saf 2014;40:99–101. [DOI] [PubMed] [Google Scholar]

- 17.Henriksen K, Brady J. The pursuit of better diagnostic performance: a human factors perspective. BMJ Qual Saf 2013;22(Suppl 2):ii1–5. 10.1136/bmjqs-2013-001827

- 18.Donabedian A. The quality of care. How can it be assessed? JAMA 1988;260:1743–8. 10.1001/jama.1988.03410120089033

- 19.Sittig DF, Singh H. Eight Rights of Safe Electronic Health Record Use. JAMA 2009;302:1111–3. 10.1001/jama.2009.1311

- 20.Upadhyay DK, Sittig DF, Singh H. Ebola US Patient Zero: lessons on misdiagnosis and effective use of electronic health record. Diagnosis 2014 [cited 2014 Dec. 4]; ePub ahead of print. http://www.degruyter.com/view/j/dx.ahead-of-print/dx-2014-0064/dx-2014-0064.xml?format=INT

- 21.Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010;19(Suppl 3):i68–74. 10.1136/qshc.2010.042085

- 22.Stulberg J. The physician quality reporting initiative--a gateway to pay for performance: what every health care professional should know. Qual Manag Health Care 2008;17:2–8. 10.1097/01.QMH.0000308632.74355.93

- 23.Burken MI, Wilson KS, Heller K, et al. The interface of Medicare coverage decision-making and emerging molecular-based laboratory testing. Genet Med 2009;11:225–31. 10.1097/GIM.0b013e3181976829

- 24.Weick KE, Sutcliffe KM, Obstfeld D. Organizing for high reliability: processes of collective mindfulness. Res Organ Behav 1999;21:81–123.

- 25.Mayer D. High Reliability Series: On Collective Mindfulness. Web blog post. Educate the Young Wordpress 2012 [cited 2014 Oct. 1]. http://educatetheyoung.wordpress.com/2012/07/25/high-reliability-series-on-collective-mindfulness/

- 26.Hines S, Luna K, Loftus J, et al. Becoming a High Reliability Organization: Operational Advice for Hospital Leaders. (Prepared by the Lewin Group under Contract No. 290-04-0011.) AHRQ Publication No. 08-0022. Agency for Healthcare Research and Quality, 2008. 8-21-2014.

- 27.VHA Office of Quality Safety and Value (QSV). Report on the 2011 VHA Risk Management Performance Improvement Survey 2014.

- 28.Thomas EJ, Classen DC. Patient safety: let's measure what matters. Ann Intern Med 2014;160:642–3. 10.7326/M13-2528

- 29.Singh H, Sittig DF. Were my diagnosis and treatment correct? No news is not necessarily good news. J Gen Intern Med 2014;29:1087–9. 10.1007/s11606-014-2890-1

- 30.Graber ML, Trowbridge RL, Myers JS, et al. The next organizational challenge: finding and addressing diagnostic error. Jt Comm J Qual Patient Saf 2014;40:102–10.

- 31.Trowbridge RL, Dhaliwal G, Cosby KS. Educational agenda for diagnostic error reduction. BMJ Qual Saf 2013;22(Suppl 2):ii28–32. 10.1136/bmjqs-2012-001622

- 32.Okafor N, Payne VL, Chathampally Y, et al. Using voluntary physician reporting to learn from diagnostic errors in emergency medicine. Diagnostic Error in Medicine, 7th International Conference 2014.

- 33.Agency for Healthcare Research and Quality (AHRQ). Development of Common Formats. AHRQ 2014 [cited 2014 Oct. 1]. https://www.pso.ahrq.gov/common/development

- 34.Sittig DF, Classen DC. Safe electronic health record use requires a comprehensive monitoring and evaluation framework. JAMA 2010;303:450–1. 10.1001/jama.2010.61

- 35.Schiff GD. Minimizing diagnostic error: the importance of follow-up and feedback. Am J Med 2008;121(5 Suppl 1):S38–42. 10.1016/j.amjmed.2008.02.004

- 36.Weingart SN, Pagovich O, Sands DZ, et al. What can hospitalized patients tell us about adverse events? Learning from patient-reported incidents. J Gen Intern Med 2005;20:830–6. 10.1111/j.1525-1497.2005.0180.x

- 37.Kistler CE, Walter LC, Mitchell CM, et al. Patient perceptions of mistakes in ambulatory care. Arch Intern Med 2010;170:1480–7. 10.1001/archinternmed.2010.288

- 38.Agency for Healthcare Research and Quality. Patient Safety Network. AHRQ 2008 [cited 2014 Oct. 1]. http://psnet.ahrq.gov/

- 39.Szekendi MK, Sullivan C, Bobb A, et al. Active surveillance using electronic triggers to detect adverse events in hospitalized patients. Qual Saf Health Care 2006;15:184–90. 10.1136/qshc.2005.014589

- 40.Murphy DR, Laxmisan A, Reis BA, et al. Electronic health record-based triggers to detect potential delays in cancer diagnosis. BMJ Qual Saf 2014;23:8–16. 10.1136/bmjqs-2013-001874

- 41.Singh H, Giardina TD, Forjuoh SN, et al. Electronic health record-based surveillance of diagnostic errors in primary care. BMJ Qual Saf 2012;21:93–100. 10.1136/bmjqs-2011-000304

- 42.Schiff GD. Diagnosis and diagnostic errors: time for a new paradigm. BMJ Qual Saf 2014;23:1–3. 10.1136/bmjqs-2013-002426

- 43.Newman-Toker DE, Moy E, Valente E, et al. Missed diagnosis of stroke in the emergency department: a cross-sectional analysis of a large population-based sample. Diagnosis 2014;1:155–66.

- 44.Moy E. Missed Diagnosis of Acute Myocardial Infarction in the Emergency Department (Presented at the AHRQ 2010 Annual Conference). AHRQ Archive [Slide presentation] 2010 [cited 2014 Dec. 4]. http://archive.ahrq.gov/news/events/conference/2010/moy/index.html

- 45.Schwartz A, Weiner SJ, Weaver F, et al. Uncharted territory: measuring costs of diagnostic errors outside the medical record. BMJ Qual Saf 2012;21:918–24. 10.1136/bmjqs-2012-000832

- 46.Brady PW, Goldenhar LM. A qualitative study examining the influences on situation awareness and the identification, mitigation and escalation of recognised patient risk. BMJ Qual Saf 2014;23:153–61. 10.1136/bmjqs-2012-001747

- 47.Meeks DW, Meyer AN, Rose B, et al. Exploring new avenues to assess the sharp end of patient safety: an analysis of nationally aggregated peer review data. BMJ Qual Saf 2014;23:1023–30. 10.1136/bmjqs-2014-003239

- 48.Graber ML. Physician participation in quality management. Expanding the goals of peer review to detect both practitioner and system error. Jt Comm J Qual Improv 1999;25:396–407.

- 49.Groszkruger D. Diagnostic error: untapped potential for improving patient safety? J Healthc Risk Manag 2014;34:38–43. 10.1002/jhrm.21149

- 50.Sittig DF, Murphy DR, Smith MW, et al. Graphical Display of Diagnostic Test Results in Electronic Health Records: a Comparison of 8 Systems (under review) 2014.

- 51.Singh H, Wilson L, Petersen LA, et al. Improving follow-up of abnormal cancer screens using electronic health records: trust but verify test result communication. BMC Med Inform Decis Mak 2009;9:

- 52.Singh H, Thomas EJ, Sittig DF, et al. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med 2010;123:238–44. 10.1016/j.amjmed.2009.07.027

- 53.Laxmisan A, Sittig DF, Pietz K, et al. Effectiveness of an electronic health record-based intervention to improve follow-up of abnormal pathology results: a retrospective record analysis. Med Care 2012;50:898–904. 10.1097/MLR.0b013e31825f6619

- 54.Taylor SL, Dy S, Foy R, et al. What context features might be important determinants of the effectiveness of patient safety practice interventions? BMJ Qual Saf 2011;20:611–7. 10.1136/bmjqs.2010.049379

- 55.CRE 12-033 Automated Point-of-Care Surveillance of Outpatient Delays in Cancer Diagnosis (HSR&D Study). US Department of Veterans Affairs, Health Services Research & Development 2013 [cited 2014 Dec. 5]. http://www.hsrd.research.va.gov/research/abstracts.cfm?Project_ID=2141701900

- 56.SAFER electronic health records: Safety Assurance Factors for EHR Resilience. Sittig DF, Singh H, eds. Waretown, New Jersey: Apple Academic Press, 2015.

- 57.Singh H, Ash JS, Sittig DF. Safety Assurance Factors for Electronic Health Record Resilience (SAFER): study protocol. BMC Med Inform Decis Mak 2013;13:46. 10.1186/1472-6947-13-46

- 58.Sittig DF, Ash JS, Singh H. The SAFER Guides: empowering organizations to improve the safety and effectiveness of electronic health records. Am J Manag Care 2014;20:418–23.

- 59.HHS Press Office. HHS makes progress on Health IT Safety Plan with release of the SAFER Guides. New tools will help providers and health IT developers make safer use of electronic health records. 1-15-2014. Washington, DC: U.S. Department of Health & Human Services.

- 60.Graber M. Diagnostic errors in medicine: a case of neglect. Jt Comm J Qual Patient Saf 2005;31:106–13.