Abstract

It is widely acknowledged that individuals with elevated depressive symptoms exhibit deficits in interpersonal communication. Research has primarily focused on speech production in this population. Little is known about their speech perception, especially in challenging listening conditions. Here we examined speech perception in young adults with low or high depressive symptoms in the presence of a range of maskers. Maskers were selected to reflect various levels of informational masking (IM), which refers to cognitive interference due to signal and masker similarity, and energetic masking (EM), which refers to peripheral interference due to signal degradation by the masker. Speech intelligibility data revealed that individuals with high depressive symptoms did not differ from those with low depressive symptoms during EM, but exhibited a selective deficit during IM. Since IM is a common occurrence in real-world social settings, this listening deficit may exacerbate communicative difficulties.

Keywords: Depression, speech perception, informational masking, communication, CES-D

INTRODUCTION

Depression is a common but serious mental condition that is predictive of future suicide attempts, unemployment, and addiction (Kessler et al., 2003; Kessler & Walters, 1998). As per the World Health Organization, approximately 121 million individuals suffer from depression, making it one of the leading causes of disability worldwide. The socioeconomic costs of depression are a significant issue affecting the world population. It is widely recognized that depressed individuals have deficits in communication (Segrin, 1998), but much of the work on communicative competence has focused on depressed individuals’ speech output. Subjective perception of the speech of individuals with high depressive symptoms suggests that they show less prosodic variability and fluency (Andreasen & Pfohl, 1976; Fossati, Guillaume le, Ergis, & Allilaire, 2003) relative to those with low depressive symptoms. Relative to the literature on speech production, little is known about speech perception in individuals with elevated depressive symptoms.

Induced acute anxiety in non-depressed participants causes a modification in speech perception, wherein listeners focus more on higher-level lexical information at the cost of lower-level phonetic information (Mattys, Seymour, Attwood, & Munafò, 2013). This shift in perceptual focus as a function of induced anxiety has been attributed to a reduced ability to suppress lexical information and/or reduced global control of attentional processes (Mattys et al., 2013). Depression is highly comorbid with anxiety. Recent estimates suggest that as many as 60% of individuals with major depressive disorder report a lifetime history of an anxiety disorder (Zimmerman, McGlinchey, Chelminski, & Young, 2008). Further, meta-analyses indicate that there is a high genetic correlation between anxiety and depression (Cerdá, Sagdeo, Johnson, & Galea, 2010). The association is so strong that depression and anxiety are thought to be indistinguishable from each other at a genetic level (Flint & Kendler, 2014). The current study investigates whether similar impairments may affect speech perception in challenging listening environments in individuals with elevated depressive symptoms.

In typical social settings, speech perception often transpires in less than ideal listening conditions. One common type of noise that can interfere with speech perception is the speech of other unattended talkers. The challenge in such environments is in extracting a speech target from one or several simultaneous competing speech signals (i.e., the so-called “cocktail party effect”) (Cherry, 1953). Other noise conditions may also interfere with speech communication. For example, construction noise or airplane noise can be a source of communicative interference. In these cases, the noise may be relatively less distracting, but still impairs speech perception by masking the auditory signal.

Two general mechanisms, informational masking and energetic masking, have been defined to describe the interference caused by noise (Brungart, 2001). Energetic masking refers to masking that occurs in the auditory periphery, rendering portions of the target speech inaudible to the listener, while informational masking refers to interference in target processing that occurs at higher levels of auditory and cognitive processing. Informational masking is particularly an issue in speech-in-speech situations where the possible sources of such masking are numerous: misattribution of components of the noise to the target (and vice versa); competing attention from the masker; increased cognitive load; and linguistic interference (Cooke, Garcia Lecumberri, & Barker, 2008). Informational factors contribute most to masking when there are relatively few talkers in the masker. In such cases, a listener may be able to understand some of what is being said in the background. Energetic masking, however, increases as talkers are added to the masker since the masker becomes spectrally more complex with fewer ‘dips’ (see Figure 1). Non-fluctuating speech-shaped noise (SSN) (i.e., white noise filtered to match the long-term average spectral structure of speech) represents the acoustics of an infinite number of talkers. Behavioral and neuroimaging studies demonstrate at least a partial dissociation between energetic and informational masking during speech processing. A previous behavioral study showed that speech intelligibility during energetic masking was not associated with performance in high informational-masking environments (Van Engen, 2012). Further, a positron emission tomography study showed that energetic and informational masking are neurally dissociable (Scott, Rosen, Wickham, & Wise, 2004).

Figure 1.

Examples of target signal and noise conditions used in the current study. The waveform (top) and spectrogram (bottom) of the signal and the noise conditions (1-talker babble, 2-talker babble, 8-talker babble, and speech-shaped noise (SSN)). In the 1-talker babble condition, individual words by the competing talker are discernible, providing informational interference. In contrast, in SSN, individual lexical items are not discernible, but the masking is more continuous and allows for less signal ‘glimpsing’.

According to a recent model of speech perception in noise (Shinn-Cunningham, 2008), release from informational masking requires listeners to overcome at least two issues: segregating the target source from the maskers (i.e., who is talking?), and selectively listening to the target while ignoring competing maskers. These two mechanisms have been called “object formation” and “object selection” (Shinn-Cunningham, 2008), respectively. The difficulties during energetic masking can be largely attributed to disruption in “object formation” (Shinn-Cunningham, 2008). In contrast, coping with informational masking places greater demands on executive function, requiring the listener to selectively attend to the target and inhibit the influences from the background maskers.

The goal of the current paper is to examine the impact of depressive symptoms on speech perception under a variety of noise conditions. In individuals with elevated depressive symptoms, several of the cognitive skills critical to effectively ignoring irrelevant information have been found to be relatively impaired. Empirically, depressive symptoms have been shown to affect executive function (Austin et al., 1992; McDermott & Ebmeier, 2009), cognitive flexibility (Butters et al., 2004), and working memory (Clark, Chamberlain, & Sahakian, 2009). Also, depressive symptoms have been consistently shown to relate to a greater interference from irrelevant information (particularly those with a negative focus) (Disner, Beevers, Haigh, & Beck, 2011). Since inhibitory ability is more critical to speech perception during informational masking than energetic masking, we predict a listening condition-specific (i.e. informational masking) speech perceptual deficit in individuals with depressive symptoms. Importantly, since informational masking is extremely common in typical social settings (‘cocktail party’ situations), identification of a potential deficit could provide a better understanding of communicative deficits in depression.

Participants were divided into high depressive symptom (HD) or low depressive symptom (LD) groups based on a survey of depressive symptoms (Van Dam & Earleywine, 2011). Participants in both groups listened to sentences in background noise that varied with respect to informational and energetic masking. Specifically, sentence identification was examined in the context of babble(s) containing 1-talker, 2-talker, 8-talker, and speech-shaped noise (SSN). The 1-talker babble and the SSN conditions represent the ends of a continuum of maskers that range from primarily informational to purely energetic.

METHOD

Participants

Two-hundred-twenty-nine University of Texas undergraduates completed the Center for Epidemiological Studies Depression Scale (CES-D) (Radloff, 1977). All participants also completed a sentence-identification-in-noise task. Following previous studies and convention (Weissman, Sholomskas, Pottenger, Prusoff, & Locke, 1977), we classified participants as having elevated depressive symptoms if they scored 16 or greater. This score reflects mild or greater symptoms of depression (Radloff, 1977). We employed the CES-D in this study because this scale was developed to assess depressive symptoms in the general community, rather than in clinical populations. In the college population, the CES-D shows greater sensitivity as a screening tool than the Beck Depression Inventory (BDI), another widely used measure in the field (Santor, Zuroff, Ramsay, Cervantes, & Palacios, 1995). Based on this criterion, 22 participants demonstrated elevated depressive symptoms. From the 199 remaining participants, we selected a low depressive symptom group (n=22) matched for age and sex with the high depressive symptom group (details in Table 1). The matched control group was randomly selected by a research assistant who was blind to participant performance. We also examined the group with elevated depressive symptoms relative to all participants (see Footnotes). All participants were between the ages of 19 and 35 (average age = 25.60 years). Their hearing was screened to ensure thresholds < 25 dB SPL at 500 Hz, 1 kHz, and 2 kHz. Participants reported no history of language or hearing problems and were compensated for their participation as per a protocol approved by the University of Texas-Austin Institutional Review Board.

Table 1.

Demographic information for the two groups.

HD = high depression symptom group; LD = low depression symptom group. Each row shows one matched pair.

| Age (HD) | Sex (HD) | CES-D score (HD) | Age (LD) | Sex (LD) | CES-D score (LD) |
|---|---|---|---|---|---|
| 23 | F | 21 | 23 | F | 6 |
| 20 | M | 18 | 20 | M | 10 |
| 20 | F | 17 | 20 | F | 2 |
| 20 | M | 20 | 20 | M | 10 |
| 18 | F | 19 | 18 | F | 8 |
| 19 | F | 23 | 19 | F | 7 |
| 32 | M | 19 | 32 | M | 8 |
| 28 | F | 19 | 28 | F | 3 |
| 28 | F | 21 | 28 | F | 9 |
| 27 | M | 30 | 27 | M | 4 |
| 29 | M | 23 | 29 | M | 1 |
| 28 | F | 24 | 28 | F | 1 |
| 25 | M | 17 | 25 | M | 4 |
| 22 | M | 24 | 22 | M | 8 |
| 27 | M | 20 | 27 | M | 3 |
| 33 | M | 19 | 33 | M | 1 |
| 26 | M | 18 | 26 | M | 9 |
| 22 | F | 20 | 22 | F | 3 |
| 28 | F | 26 | 28 | F | 5 |
| 27 | M | 24 | 27 | M | 4 |
| 32 | F | 21 | 32 | F | 1 |
| 30 | F | 25 | 30 | F | 2 |
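The grouping and matching procedure can be illustrated with a minimal sketch. It assumes exact matching on age and sex (as the pairs in Table 1 suggest) and a seeded random choice among eligible controls; the records, field names, and the `match_controls` helper are hypothetical and are not the study's data or code.

```python
import random

CESD_CUTOFF = 16  # a score of 16 or greater counts as elevated symptoms


def classify(cesd_score):
    """Return 'HD' for scores at or above the cutoff, otherwise 'LD'."""
    return "HD" if cesd_score >= CESD_CUTOFF else "LD"


def match_controls(high_group, low_pool, seed=0):
    """Pair each high-symptom participant with one unused low-symptom
    participant of the same age and sex. The choice among candidates is
    random and never uses task performance."""
    rng = random.Random(seed)
    available = list(low_pool)
    matched = []
    for person in high_group:
        candidates = [c for c in available
                      if c["age"] == person["age"] and c["sex"] == person["sex"]]
        if not candidates:
            raise ValueError(f"no age/sex match for participant {person['id']}")
        choice = rng.choice(candidates)
        available.remove(choice)  # each control is used at most once
        matched.append((person, choice))
    return matched


# Hypothetical records for illustration only (not the study data)
participants = [
    {"id": "p01", "age": 23, "sex": "F", "cesd": 21},
    {"id": "p02", "age": 20, "sex": "M", "cesd": 18},
    {"id": "p03", "age": 23, "sex": "F", "cesd": 6},
    {"id": "p04", "age": 20, "sex": "M", "cesd": 10},
    {"id": "p05", "age": 23, "sex": "F", "cesd": 12},
]
high = [p for p in participants if classify(p["cesd"]) == "HD"]
low = [p for p in participants if classify(p["cesd"]) == "LD"]
pairs = match_controls(high, low)
for hd, ld in pairs:
    print(hd["id"], "matched with", ld["id"])
```

Fixing the seed makes the random selection reproducible, which is one way to document a blind selection step.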

Materials

Target sentences from the Revised Bamford-Kowal-Bench (BKB) Standard Sentence Test (Bamford & Wilson, 1979) were recorded by a female native speaker of American English in a sound-attenuated booth at Northwestern University (Van Engen, 2012). The BKB lists each contain 16 sentences and a total of 50 keywords for scoring. All sentence recordings were equalized for RMS amplitude. N-talker babble tracks were created as follows: eight female speakers of American English were recorded in a sound-attenuated booth at Northwestern University (Van Engen et al., 2008). Each talker produced 30 simple English sentences. For each talker, these sentences were equalized for RMS amplitude and then concatenated to create 30-sentence strings without silence between sentences. One of these strings was used as the single-talker masker track. To generate 2-talker babble, the string from a second talker was mixed with the first. Six more talkers were added to create 8-talker babble. Speech-shaped noise was generated by obtaining the long-term average spectrum from the full set of 240 sentences and shaping white noise to match that spectrum. All masker tracks were truncated to 50 s and equated for RMS amplitude. Each target sentence was mixed with a random sample of noise such that each stimulus was composed as follows: 400 ms of silence, 500 ms of noise, the target and noise together, and a 500 ms noise trailer. The signal-to-noise ratio was -5 dB (i.e., the noise was 5 dB higher than the targets).
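The stimulus layout described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' processing script: signals are plain lists of samples, the noise excerpt is scaled from its own RMS, the toy sample rate is 1000 Hz, and all function names are hypothetical. The SNR relation used is SNR(dB) = 20·log10(RMS_target / RMS_noise).

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def scale_to_rms(samples, target_rms):
    """Rescale samples so that their RMS equals target_rms."""
    gain = target_rms / rms(samples)
    return [s * gain for s in samples]


def noise_rms_for_snr(target_rms, snr_db):
    """Noise RMS that yields the requested SNR relative to the target."""
    return target_rms / (10 ** (snr_db / 20))


def build_stimulus(target, masker, sample_rate, snr_db=-5.0,
                   silence_ms=400, lead_ms=500, trail_ms=500, rng=random):
    """Silence, then noise alone, then target plus noise, then a noise trailer."""
    silence_n = sample_rate * silence_ms // 1000
    lead_n = sample_rate * lead_ms // 1000
    trail_n = sample_rate * trail_ms // 1000
    noise_n = lead_n + len(target) + trail_n
    if noise_n > len(masker):
        raise ValueError("masker track is too short for this target")
    # Draw a random excerpt of the masker track
    start = rng.randrange(len(masker) - noise_n + 1)
    noise = masker[start:start + noise_n]
    # Set the noise level relative to the target (assumption: excerpt-level RMS)
    noise = scale_to_rms(noise, noise_rms_for_snr(rms(target), snr_db))
    mixed = list(noise)
    for i, sample in enumerate(target):
        mixed[lead_n + i] += sample
    return [0.0] * silence_n + mixed


# Toy signals for illustration only
rng = random.Random(1)
sr = 1000
target = [math.sin(2 * math.pi * 5 * t / sr) for t in range(sr)]  # 1 s
masker = [rng.gauss(0, 1) for _ in range(5 * sr)]                 # 5 s
stim = build_stimulus(target, masker, sr, rng=rng)
print(len(stim))  # 400 + 500 + 1000 + 500 = 2400 samples
```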

Procedure

Listeners were instructed that they would be listening to sentences in noise. They were told that the target sentences would always begin one-half second after the noise, and their task was to type the target sentence using a computer keyboard. If they were unable to understand the entire sentence, they were asked to report any intelligible words and/or make their best guess. Sixteen sentences were presented in each of the four noise types, for a total of 64 trials. The noise types were randomly presented. These sentences were mixed, and each one was only presented once. The order of the sentences was randomized for each participant. Responses were scored by the number of keywords correctly identified. Keywords with added or omitted morphemes were scored as incorrect.
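The scoring rule can be sketched as an exact word match, so that a keyword with an added or omitted morpheme (for example a plural for a singular) earns no credit. Case folding, punctuation stripping, and order-insensitive matching are assumptions that the text does not specify, and the example sentence and keywords are hypothetical rather than taken from the BKB lists.

```python
import string


def normalize(text):
    """Lowercase, strip punctuation, and split a typed response into words."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return cleaned.split()


def score_response(response, keywords):
    """Count keywords that appear in the response as exact word matches.

    Each response word can satisfy at most one keyword, and inflected
    variants (e.g. 'dogs' for 'dog') are scored as incorrect.
    """
    remaining = normalize(response)
    correct = 0
    for keyword in keywords:
        keyword = keyword.lower()
        if keyword in remaining:
            remaining.remove(keyword)
            correct += 1
    return correct


# Hypothetical target: "The dog chased the ball"
keywords = ["dog", "chased", "ball"]
print(score_response("The dog chased the ball.", keywords))  # 3
print(score_response("the dogs chased a ball", keywords))    # 2
```

Dividing each participant's summed score by the number of keywords presented in a condition gives the proportion correct analyzed in the Results.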

RESULTS

Speech-in-noise performance

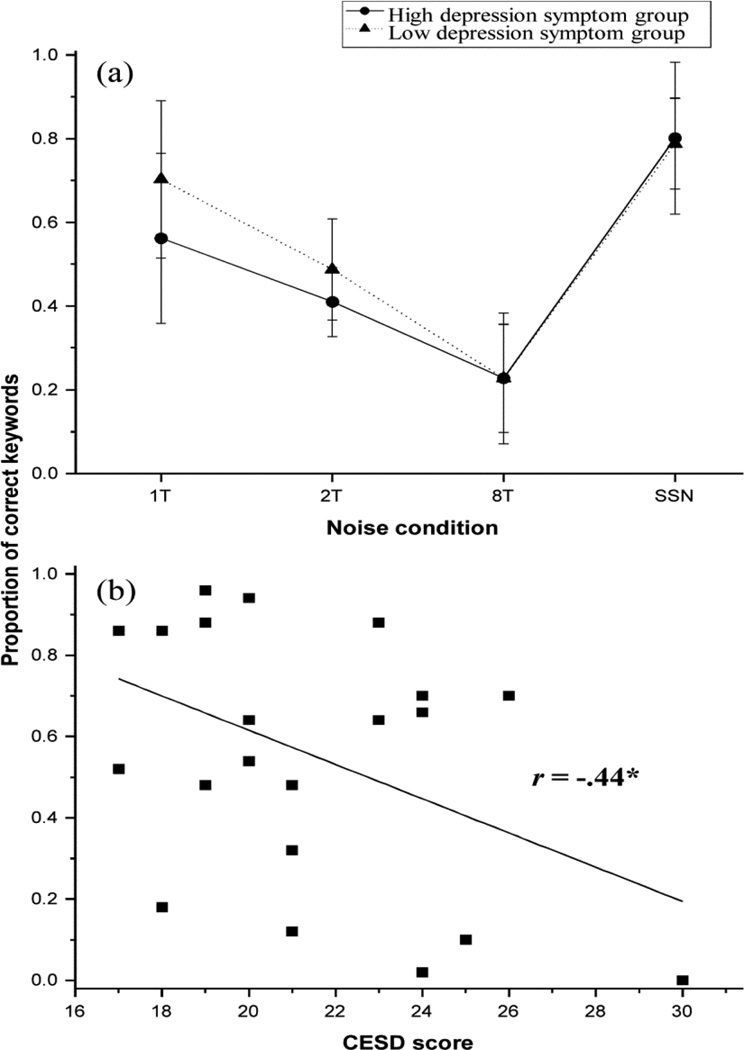

Prior to conducting inferential statistics, we first describe the overall findings. Figure 2a shows the group-mean proportion of correctly identified keywords across the four noise conditions (i.e., 1-talker babble, 2-talker babble, 8-talker babble, and SSN) in the high depression symptom (HD) group (solid line) and the low depression symptom (LD) group (dashed line). In both groups, the proportion of keywords correctly identified differed across noise conditions. In both groups, mean proportion was greatest for the SSN condition (HD: .80; LD: .79) and least for the 8-talker babble condition (HD: .23; LD: .23). For both groups, performance followed similar trajectories, with performance deteriorating with the addition of talkers (1-talker > 2-talker > 8-talker), but recovering in SSN (where there is minimal informational masking). This is consistent with previous findings that demonstrate that a confluence of energetic and informational masking in a multi-talker babble results in maximum deleterious effects on performance. Importantly, some critical differences were noted between groups. Qualitatively, the group with high depressive symptoms was less accurate when the masker was largely informational (1-talker babble). The two groups showed no differences in maskers that were increasingly more energetic.

Figure 2.

(a) Proportion of correctly identified keywords across the four noise conditions (i.e., 1-talker babble, 2-talker babble, 8-talker babble, and SSN) in the groups with high (solid line) and low (dashed line) depressive symptoms. Error bars represent standard deviation. (b) Proportion of correctly identified keywords in the 1-talker babble condition as a function of the extent of depressive symptoms indicated by CES-D score in the high depressive symptom group. The straight line represents the best-fitting line for the data points. r represents the correlation coefficient between proportion of correctly identified keywords and CES-D score. * denotes p < .05.

The data (see Footnotes) were analyzed with a linear mixed effects logistic regression where keyword identification (i.e., correct or incorrect) was the dichotomous dependent variable. Fixed effects included noise condition, group, and their interaction, with a by-subject random intercept. Noise condition and group were treated as categorical variables. Analysis was performed using the lme4 package in R (Bates, Maechler, & Bolker, 2012). The results of the regression are presented in Table 2.

Table 2.

Results of the linear mixed effects logistic regression on the intelligibility data across four noise conditions in the high and low depressive symptom groups.

| Fixed effects | Estimate | Std. error | z value | p |
|---|---|---|---|---|
| (Intercept) | 0.26 | 0.20 | 1.33 | .18 |
| noise_2T | −0.72 | 0.09 | −7.70 | <.001*** |
| noise_8T | −1.71 | 0.13 | −13.36 | <.001*** |
| noise_SSN | 1.36 | 0.10 | 14.24 | <.001*** |
| group_low-depressive | 0.69 | 0.28 | 2.48 | .013* |
| noise_2T:group_low-depressive | −0.29 | 0.13 | −2.19 | .029* |
| noise_8T:group_low-depressive | −0.59 | 0.18 | −3.23 | .01** |
| noise_SSN:group_low-depressive | −0.86 | 0.13 | −6.45 | <.001*** |

Note: The intercept represents the reference condition: the noise condition was 1-talker babble, and the group was the high depressive symptom group. The estimates for noise_2T, noise_8T, and noise_SSN were the differences in log odds of correct keyword identification in 2-talker babble, 8-talker babble, or SSN conditions for the high depressive symptom group, relative to the reference condition. The estimate for group_low-depressive was the difference in log odds of correct keyword identification in the low depressive symptom group, relative to the reference condition. The estimates for noise_2T:group_low-depressive, noise_8T:group_low-depressive, and noise_SSN:group_low-depressive were the differences in log odds of correct keyword identification in 2-talker babble, 8-talker babble, or SSN conditions for the low depressive symptom group, relative to the reference condition.

*** denotes p < .001, ** denotes p < .01, * denotes p < .05.
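Because logistic regression estimates are on the log-odds scale, the fixed effects in Table 2 can be summed and passed through the inverse logit to obtain a fitted probability for each group and noise condition. The sketch below does this with the rounded estimates from Table 2. The dictionary keys and function names are hypothetical, and the resulting values describe a participant whose random intercept is zero, so they need not equal the observed group means.

```python
import math

# Rounded fixed-effect estimates copied from Table 2
# (reference levels: 1-talker babble, high depressive symptom group)
COEF = {
    "intercept": 0.26,
    "noise_2T": -0.72,
    "noise_8T": -1.71,
    "noise_SSN": 1.36,
    "group_LD": 0.69,
    "noise_2T:group_LD": -0.29,
    "noise_8T:group_LD": -0.59,
    "noise_SSN:group_LD": -0.86,
}


def inv_logit(x):
    """Convert log odds to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def log_odds(noise, group):
    """Sum the treatment-coded terms that apply to one noise/group cell."""
    total = COEF["intercept"]
    if noise != "1T":
        total += COEF[f"noise_{noise}"]
    if group == "LD":
        total += COEF["group_LD"]
        if noise != "1T":
            total += COEF[f"noise_{noise}:group_LD"]
    return total


for noise in ("1T", "2T", "8T", "SSN"):
    for group in ("HD", "LD"):
        p = inv_logit(log_odds(noise, group))
        print(f"{noise:>3} {group}: log odds = {log_odds(noise, group):+.2f}, p = {p:.2f}")
```

Read this way, the positive group estimate is the LD advantage in the 1-talker reference condition, and the negative interaction terms shrink that advantage in the other conditions.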

A Wald test was used to test the overall effect of noise condition, group, and their interaction on the probability of keyword identification. Results showed that the overall effect of noise condition was significant, χ²(2) = 1289.71, p < .001, where the probability of correct keyword identification followed this trajectory: SSN > 1-talker babble > 2-talker babble > 8-talker babble, all p-values < .001. The effect of group was not significant, χ²(1) = 1.04, p = .31. The noise condition by group interaction was significant, χ²(3) = 44.86, p < .001. The nature of this interaction was examined by performing a second round of mixed effects logistic regressions on the four noise conditions individually. In the 1-talker babble condition, the group effect was significant, β = 1.12, SE = 0.56, Z = 1.98, p < .05, such that keyword identification in noise was better for the LD group than for the HD group. In the other three noise conditions, the group effect was not significant, 2-talker babble: β = 0.38, SE = 0.25, Z = 1.54, p = .12; 8-talker babble: β = −0.01, SE = 0.26, Z = −0.03, p = .97; SSN: β = −0.16, SE = 0.29, Z = −0.55, p = .58.
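The per-condition group effects reported above follow the usual Wald form, Z = β / SE, with a two-sided p-value from the standard normal distribution. The sketch below recomputes these from the rounded β and SE values in the text. Because the inputs are rounded, the recomputed Z values can differ slightly from the reported ones; the function names are hypothetical.

```python
import math


def wald_z(estimate, std_error):
    """Wald statistic for a single coefficient."""
    return estimate / std_error


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


# Rounded (beta, SE) pairs for the group effect in each noise condition
reported = {
    "1-talker babble": (1.12, 0.56),
    "2-talker babble": (0.38, 0.25),
    "8-talker babble": (-0.01, 0.26),
    "SSN": (-0.16, 0.29),
}

for condition, (beta, se) in reported.items():
    z = wald_z(beta, se)
    print(f"{condition}: Z = {z:.2f}, p = {two_sided_p(z):.3f}")
```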

Correlation between depressive symptoms and speech-in-noise performance

To investigate the continuous relationship between the extent of depressive symptoms (as measured by the CES-D score) and speech perception across various noise conditions, Pearson’s correlations were calculated for the HD and LD groups separately. In the HD group, a significant negative correlation (2-tailed) was found between extent of depressive symptoms and performance in 1-talker babble, r(22) = −.44, p < .05 (see Figure 2b); in contrast, associations between depressive symptoms and performance in 2-talker babble (r(22) = −.31, p = .16), 8-talker babble (r(22) = −.27, p = .23), and SSN (r(22) = .13, p = .55) were not significant. In the LD group, associations between depressive symptoms and performance in the four noise conditions were not significant, 1-talker babble: r(22) = −.27, p = .23; 2-talker babble: r(22) = −.24, p = .30; 8-talker babble: r(22) = −.21, p = .37; SSN: r(22) = −.06, p = .79.
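As a worked illustration of the correlation analysis, the sketch below computes Pearson's r from paired scores and converts a correlation into a t statistic with n − 2 degrees of freedom, t = r·sqrt((n − 2) / (1 − r²)). It assumes n = 22 participants per group; the paired scores are hypothetical and are not the study data.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for the null hypothesis of zero correlation (df = n - 2)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical CES-D scores and proportions correct, for illustration only
cesd = [17, 18, 19, 20, 21, 23, 24, 26, 30]
prop_correct = [0.66, 0.70, 0.58, 0.61, 0.63, 0.52, 0.57, 0.49, 0.45]
print(f"r = {pearson_r(cesd, prop_correct):.2f}")

# Reported HD correlation in 1-talker babble, assuming n = 22
t = t_from_r(-0.44, 22)
print(f"t(20) = {t:.2f}")
```

With 20 degrees of freedom the two-tailed critical value at α = .05 is about 2.09, so a t of this magnitude is consistent with the reported p < .05.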

DISCUSSION

The goal of this study was to examine speech perception in individuals with elevated depressive symptoms under various challenging listening conditions. Relative to individuals with low depressive symptoms, those with high depressive symptoms showed poorer performance in a noise condition that involved significant informational masking (1-talker babble). In contrast, when noise conditions were primarily energetic, there were no differences between groups. Finally, we also found a significant negative correlation between extent of depressive symptoms and performance under noise conditions that had significant informational masking (1-talker babble), whereas performance in primarily energetic masking conditions (8-talker babble and SSN) did not significantly correlate with the extent of depressive symptoms.

Taken together, our results demonstrate that elevated depressive symptoms interfere with the ability to cope with informational masking during speech perception. This finding has important practical implications since speech perception in typical social settings (‘cocktail party environments’) usually involves significant informational masking. Poorer comprehension leading to communication failures under such listening environments could seriously exacerbate social difficulties and reduce social competence in individuals with elevated depressive symptoms.

Mechanistically, there are several possibilities that could lead to a selective deficit during informational masking conditions. In energetic masking, competing noise renders portions of the target signal inaudible, requiring listeners to cope with the loss of acoustic-phonetic features within the speech signal. In contrast, during informational masking, the speech signal is audible but difficult to separate from the competing noise. A previous study found that the ability to cope with energetic masking was not predictive of performance in high informational-masking environments (Van Engen, 2012), suggesting that the cognitive-sensory resources required for the two masking types are at least partially dissociable. The difficulties during informational masking conditions could arise from failures in “object formation” as well as failures in “object selection”, whereas the difficulties during energetic masking are mainly due to failures in “object formation” (Shinn-Cunningham, 2008). Hence, relative to energetic masking, informational masking places greater demands on executive functions such as inhibitory control, working memory, and cognitive flexibility, to achieve “object selection” (i.e., selectively attend to the target speech and inhibit/ignore the influences from the maskers). Thus, the impairments in executive function (specifically, updating working memory content) that have been observed in individuals with elevated depressive symptoms (Clark et al., 2009; Joormann & Gotlib, 2008) are likely to underlie their poorer performance in informational masking environments.

A previous study showed that anxiety induction led to increased reliance on lexical cues relative to acoustic cues during a word segmentation task (Mattys, Seymour, Attwood, & Munafò, 2013). Mechanistically, this result was explained as arising from either a reduction in the inhibition of lexical activation or reduced attentional control (i.e., distraction from phonetic detail) due to anxiety. Given the overlap between depression and anxiety, it follows that speech perception mediated by effortful cognitive systems may be similarly disrupted among individuals with elevated depressive symptoms. In conjunction with the Mattys et al. study, our results suggest that reduced executive control, which can result from anxiety induction or from depressive symptoms, may have a direct impact on speech perception that can be observed both at the level of words and sentences.

To our knowledge, this is the first study to demonstrate selective difficulties in speech perception in individuals with elevated depressive symptoms. We do acknowledge several limitations of the current study. First, it is unclear whether individuals diagnosed with clinical depression would perform similarly to the group with high depressive symptoms. All individuals with high depressive symptoms exceeded a cut-point on the CES-D commonly used to screen for major depressive disorder (Radloff, 1977); however, many of these participants would not have met criteria for a major depressive episode. Second, there are several possible mechanisms that could lead to a selective speech perception deficit. We have highlighted these possibilities, but the current experimental design cannot identify the exact mechanism underlying the deficit. Third, while our data are indicative of a subtle effect of depressive symptoms on speech perception during informational masking, further converging evidence is needed. To establish a causal relationship, we would need to conduct a mood induction experiment and examine the effects of such induction on speech perception under informational masking conditions. While these findings have important practical and clinical value, further studies are needed to more precisely delineate the mechanisms underlying this selective listening difficulty in individuals with elevated depressive symptoms. We hypothesize that increased distractibility and reduced working memory resources may be particularly important underlying factors contributing to speech perception deficits in depressive individuals.

In summary, this study has shown that individuals with elevated depressive symptoms exhibit a selective deficit in speech perception under listening conditions that involve informational masking. Since informational maskers are ubiquitous in typical social situations, this selective deficit could lead to (or exacerbate) social and communicative difficulties in depressive individuals. Future experiments are needed to target a putative mechanism. Despite acknowledged limitations, this foundational work provides important new insight into the influence of elevated depressive symptoms in the domain of speech perception.

ACKNOWLEDGEMENT

This work was supported by NIDA grant DA032457 to WTM and CJB. Research reported in this publication was also supported by the National Institute On Deafness And Other Communication Disorders of the National Institutes of Health under Award Number R01DC013315 (awarded to BC). We thank the Maddox Lab RAs for help with data collection. Address correspondence to Bharath Chandrasekaran (bchandraaustin.utexas.edu).

Footnotes

We also ran an analysis including all the participants in the sample. Of 199 low-depressive individuals, 16 were excluded because of incomplete data on the speech-perception-in-noise task. Hence, the sample for this analysis included 22 high-depressive individuals and 183 low-depressive individuals. Results showed that in the 1-talker babble condition, keyword identification in noise was significantly better for the low-depressive group than for the high-depressive group, β = 0.92, SE = 0.39, Z = 2.38, p = .017. In the other three noise conditions, keyword identification in noise was not significantly different between the two groups, 2-talker babble: β = 0.30, SE = 0.19, Z = 1.57, p = .12; 8-talker babble: β = 0.07, SE = 0.22, Z = 0.33, p = .74; SSN: β = −0.12, SE = 0.18, Z = −0.67, p = .50. These patterns were consistent with the results from the selected high and low depression symptom groups.

REFERENCES

- Andreasen NC, Pfohl B. Linguistic analysis of speech in affective disorders. Archives of General Psychiatry. 1976;33(11):1361–1367. doi: 10.1001/archpsyc.1976.01770110089009.

- Austin M-P, Ross M, Murray C, O'Carroll R, Ebmeier KP, Goodwin GM. Cognitive function in major depression. Journal of Affective Disorders. 1992;25(1):21–29. doi: 10.1016/0165-0327(92)90089-o.

- Bamford J, Wilson I. Methodological considerations and practical aspects of the BKB sentence lists. In: Bench J, Bamford J, editors. Speech-hearing tests and the spoken language of hearing-impaired children. London: Academic Press; 1979. pp. 148–187.

- Bates D, Maechler M, Bolker B. lme4: Linear mixed-effects models using S4 classes. 2012.

- Brungart DS. Informational and energetic masking effects in the perception of two simultaneous talkers. The Journal of the Acoustical Society of America. 2001;109(3):1101–1109. doi: 10.1121/1.1345696.

- Butters MA, Whyte EM, Nebes RD, Begley AE, Dew MA, Mulsant BH, Becker JT. The nature and determinants of neuropsychological functioning in late-life depression. Archives of General Psychiatry. 2004;61(6):587–595. doi: 10.1001/archpsyc.61.6.587.

- Cherry EC. Some Experiments on the Recognition of Speech, with One and with 2 Ears. The Journal of the Acoustical Society of America. 1953;25(5):975–979.

- Clark L, Chamberlain SR, Sahakian BJ. Neurocognitive Mechanisms in Depression: Implications for Treatment. Annual Review of Neuroscience. 2009;32:57–74. doi: 10.1146/annurev.neuro.31.060407.125618.

- Cooke M, Garcia Lecumberri ML, Barker J. The foreign language cocktail party problem: Energetic and informational masking effects in non-native speech perception. The Journal of the Acoustical Society of America. 2008;123(1):414–427. doi: 10.1121/1.2804952.

- Disner SG, Beevers CG, Haigh EAP, Beck AT. Neural mechanisms of the cognitive model of depression. Nature Reviews Neuroscience. 2011;12(8):467–477. doi: 10.1038/nrn3027.

- Fossati P, Guillaume le B, Ergis AM, Allilaire JF. Qualitative analysis of verbal fluency in depression. Psychiatry Res. 2003;117(1):17–24. doi: 10.1016/s0165-1781(02)00300-1.

- Joormann J, Gotlib IH. Updating the contents of working memory in depression: Interference from irrelevant negative material. Journal of Abnormal Psychology. 2008;117(1):182–192. doi: 10.1037/0021-843X.117.1.182.

- Kessler RC, Berglund P, Demler O, Jin R, Koretz D, Merikangas KR, Wang PS. The epidemiology of major depressive disorder. JAMA: the journal of the American Medical Association. 2003;289(23):3095–3105. doi: 10.1001/jama.289.23.3095.

- Kessler RC, Walters EE. Epidemiology of DSM-III-R major depression and minor depression among adolescents and young adults in the national comorbidity survey. Depression and Anxiety. 1998;7(1):3–14. doi: 10.1002/(sici)1520-6394(1998)7:1<3::aid-da2>3.0.co;2-f.

- Mattys S, Seymour F, Attwood A, Munafò M. Effects of Acute Anxiety Induction on Speech Perception: Are Anxious Listeners Distracted Listeners? Psychological Science. 2013. doi: 10.1177/0956797612474323.

- McDermott LM, Ebmeier KP. A meta-analysis of depression severity and cognitive function. Journal of Affective Disorders. 2009;119(1):1–8. doi: 10.1016/j.jad.2009.04.022.

- Radloff LS. The CES-D scale: a self-report depression scale for research in the general population. Applied Psychological Measurement. 1977;1(3):385–401.

- Santor DA, Zuroff DC, Ramsay J, Cervantes P, Palacios J. Examining scale discriminability in the BDI and CES-D as a function of depressive severity. Psychological Assessment. 1995;7(2):131.

- Scott SK, Rosen S, Wickham L, Wise RJ. A positron emission tomography study of the neural basis of informational and energetic masking effects in speech perception. The Journal of the Acoustical Society of America. 2004;115(2):813–821. doi: 10.1121/1.1639336.

- Segrin C. Interpersonal communication problems associated with depression and loneliness. 1998.

- Shinn-Cunningham BG. Object-based auditory and visual attention. Trends in Cognitive Sciences. 2008;12(5):182–186. doi: 10.1016/j.tics.2008.02.003.

- Van Dam NT, Earleywine M. Validation of the Center for Epidemiologic Studies Depression Scale--Revised (CESD-R): pragmatic depression assessment in the general population. Psychiatry Res. 2011;186(1):128–132. doi: 10.1016/j.psychres.2010.08.018.

- Van Engen KJ. Speech-in-speech recognition: A training study. Language and Cognitive Processes. 2012;27(7–8):1089–1107.

- Van Engen KJ, Baese-Berk M, Baker RE, Choi A, Kim M, Bradlow AR. The Wildcat Corpus of native- and foreign-accented English: communicative efficiency across conversational dyads with varying language alignment profiles. Lang Speech. 2008;53(Pt 4):510–540. doi: 10.1177/0023830910372495.

- Weissman MM, Sholomskas D, Pottenger M, Prusoff BA, Locke BZ. Assessing depressive symptoms in five psychiatric populations: a validation study. American Journal of Epidemiology. 1977;106(3):203–214. doi: 10.1093/oxfordjournals.aje.a112455.