An interactive 3.0-T MR neurographic imaging atlas is described that combines AIM image annotations, the RadLex radiology ontology, and Web technologies to allow users to explore the imaging anatomy of the brachial plexus.

Abstract

Disorders of the peripheral nervous system have traditionally been evaluated using clinical history, physical examination, and electrodiagnostic testing. In selected cases, imaging modalities such as magnetic resonance (MR) neurography may help further localize or characterize abnormalities associated with peripheral neuropathies, and the clinical importance of such techniques is increasing. However, MR image interpretation with respect to peripheral nerve anatomy and disease often presents a diagnostic challenge because the relevant knowledge base remains relatively specialized. Using the radiology knowledge resource RadLex®, a series of RadLex queries, the Annotation and Image Markup standard for image annotation, and a Web services–based software architecture, the authors developed an application that allows ontology-assisted image navigation. The application provides an image browsing interface, allowing users to visually inspect the imaging appearance of anatomic structures. By interacting directly with the images, users can access additional structure-related information that is derived from RadLex (eg, muscle innervation, muscle attachment sites). These data also serve as conceptual links to navigate from one portion of the imaging atlas to another. With 3.0-T MR neurography of the brachial plexus as the initial area of interest, the resulting application provides support to radiologists in the image interpretation process by allowing efficient exploration of the MR imaging appearance of relevant nerve segments, muscles, bone structures, vascular landmarks, anatomic spaces, and entrapment sites, and the investigation of neuromuscular relationships.

©RSNA, 2015

Introduction

Disorders of the peripheral nervous system have a wide variety of causes, including traumatic injury, mechanical compression, tumoral involvement, inflammatory conditions, metabolic disorders, immune-mediated mechanisms, and genetic factors, among others. Clinical manifestations include motor, sensory, and/or autonomic symptoms. Diagnostic evaluation of peripheral neuropathies has traditionally relied on clinical history, physical examination, and electrodiagnostic techniques such as electromyography and nerve conduction studies. Nerve and muscle biopsy may also be used for diagnosis.

In cases requiring additional information regarding spatial localization of disease or severity of neuromuscular abnormalities, imaging may also be helpful. Beginning in the late 1980s, techniques for peripheral nerve imaging using ultrasonography (1) and magnetic resonance (MR) imaging (2) began to emerge, and subsequent technical advances have made progressive improvements in the visualization of nerves and surrounding structures possible (3–7).

Recent advances in nerve MR imaging (ie, neurography) include isotropic three-dimensional image acquisition and diffusion-weighted imaging (8–10), which have permitted improved visualization of small nerves, greater sensitivity to changes in nerve signal, and better delineation of the perineural soft tissues. The use of MR neurography in a wide range of anatomic locations has been described; these locations include the brachial plexus (11–14), lumbosacral plexus (15–17), and sites along the upper (6,18,19) and lower (7,16,20–22) extremities. Furthermore, surgical techniques for the repair of peripheral nerve injury have improved (23), and the use of postsurgical nerve imaging has been reported (24).

Despite these advances, however, interpretation of MR neurographic examinations may present a diagnostic challenge because knowledge of the morphology, course, branching, entrapment sites, and myotomal distributions of nerves remains relatively specialized. For example, nerve-muscle relationships form the basis for myotomal patterns of muscle denervation changes, which are well seen on MR images but are often unfamiliar to radiologists.

Many reference materials address the pertinent anatomy, including textbooks, Web sites, and Web-based imaging atlases (eg, 25–29). The anatomic structures relevant to MR neurography are characterized by features such as their connectivity, spatial relationships, and imaging appearance with a variety of MR imaging planes and sequences. This key knowledge constitutes information about the anatomy, or anatomic metadata. However, reference materials often either present these metadata in fragmented form or omit them entirely. For example, anatomy textbooks typically demonstrate muscles with images and illustrations while providing data about muscle attachment sites and innervating nerves separately in tabular form. Similarly, electronic imaging atlases typically illustrate the imaging appearance of structures with scrollable images and anatomic labels but without additional metadata (29,30).

The challenge for learners is to integrate related concepts from different resources and across a variety of formats. To facilitate this integration, we have developed a strategy for harmonizing imaging data and anatomic metadata using ontologic knowledge representation and “computable” image annotations.

Ontology-assisted Image Navigation

Our approach aims to facilitate learning by incorporating anatomic metadata and conceptual relationships within the images themselves. Our interface allows users to interactively select structures of interest within images, browse relevant metadata displayed within the images, and use the metadata as a conceptual link to related structures. This approach depends on three key technologies: (a) the RadLex® ontology, (b) ontology queries designed to extract relevant anatomic data from RadLex, and (c) the Annotation and Image Markup (AIM) standard for image annotation.

Ontologies

In the late 1990s and early 2000s, during a period of rapid development of the Web, technologies for structuring information to allow computers to parse the meaning of Web content began to emerge. These technologies came to be known as the Semantic Web (31) and were designed to enable computers to assist users in a variety of ways. Ontologies are one key part of Semantic Web technology. Essentially, ontologies encode terms or concepts and their relationships to one another to form a computer-based representation of knowledge. Within the realm of biomedicine, ontologies have been developed in areas such as biochemistry, genetics, infectious diseases, pharmacology, and neuroscience. These ontologies have been used to support clinical practice, facilitate education and research, and lower the barriers to interdisciplinary collaboration (32,33). Other Semantic Web technologies include mechanisms for querying ontologies and for correlating knowledge between ontologies (34).

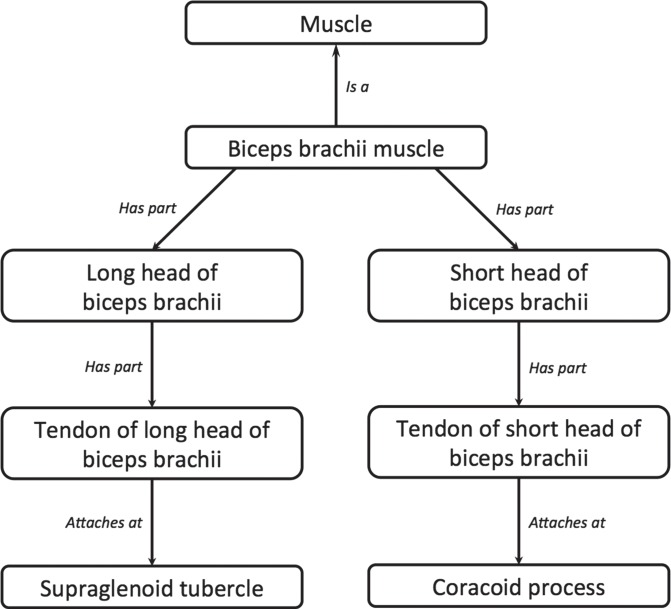

Since its inception in the mid-1990s, the Foundational Model of Anatomy (FMA) has grown to become the most comprehensive and widely used reference ontology of human anatomy (35). The FMA captures knowledge about the phenotypic organization of the human body using an ontologic framework that represents anatomic entities in an Aristotelian taxonomy and describes the spatiostructural properties of these entities (eg, as “is-a” and “part-of” relationships). The biceps brachii muscle, which relates to the brachial plexus as an innervation target of the musculocutaneous nerve, is composed of several parts and provides a convenient illustration of anatomic modeling in the FMA. For example, the tendon of the long head of the biceps brachii muscle “is-a” tendon and is “part-of” the biceps brachii muscle (Fig 1).

Figure 1.

Ontologic modeling of the biceps brachii muscle. (a) Drawing illustrates the biceps brachii muscle. (Reprinted, with permission, from reference 36.) (b) Chart illustrates a related ontology fragment, with relationships indicated by arrows and accompanying text (italics). Conceptual relationships may be interpreted as “subject-verb-object” sentences in which the subject is the concept at the origin of the arrow, the relationship itself constitutes the verb, and the object is the concept at the arrow’s destination. Different relationships are used to indicate parts (eg, biceps brachii muscle “has-part” long head of biceps brachii), types (eg, biceps brachii “is-a” muscle), and attachments (eg, tendon of long head of biceps brachii “attaches-at” supraglenoid tubercle). Note that ontologies also often encode reverse relationships (eg, long head of biceps brachii is “part-of” biceps brachii muscle) (not shown).

During the past several years, the radiology resource RadLex (37) has evolved from a list of standardized imaging terms (ie, a lexicon, or controlled terminology) to a more structured form (38), using the FMA as a template for the ontologic structure pertaining to anatomy, as well as a source of radiology-related anatomic content (39). RadLex now incorporates not only an extensive number of anatomic, pathologic, and imaging terms, but also a rich network of relationships connecting these concepts, making it an ontology in its own right. In particular, RadLex now incorporates detailed anatomic knowledge of the brachial plexus derived from the FMA, including relevant anatomic spaces, nerve branching relationships, and muscle innervation information.

Ontology Queries

Information is retrieved from ontologies such as RadLex by means of queries posed to the ontology. An ontology query consists of a logical function designed to extract specific knowledge. For example, RadLex contains information about synonyms for certain terms, and a simple query might be, “For a given RadLex term, what (if any) synonyms does it have?” For the term “interscalene triangle space,” a RadLex query for synonyms would return three items: “space of interscalene triangle,” “scalene triangle space,” and “interscalene triangle compartment space.”

To provide key anatomic metadata within an imaging atlas, we defined several RadLex queries. The first is a baseline query: “Given a specific RadLex term, retrieve its relationships with other structures.” This first query simply returns any and all relationships for the given term, with the relationships depending on the nature of the structure. This query could be used, for example, to determine that the biceps brachii muscle “has-innervation-source” musculocutaneous nerve.
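The baseline query can be pictured as a filter over subject-relation-object statements. The following Python sketch is illustrative only: the in-memory list, the function names `relationships_for` and `synonyms_for`, and the relation label `synonym` are assumptions made for this example, and the atlas itself retrieves this information from a remote ontology server rather than from a local data structure. The statements are taken from the examples in the text.

```python
from collections import defaultdict

# Toy ontology fragment as (subject, relation, object) statements.
# The "synonym" relation label is a hypothetical name for illustration.
TRIPLES = [
    ("biceps brachii muscle", "is-a", "muscle"),
    ("biceps brachii muscle", "has-part", "long head of biceps brachii"),
    ("biceps brachii muscle", "has-innervation-source", "musculocutaneous nerve"),
    ("interscalene triangle space", "synonym", "space of interscalene triangle"),
    ("interscalene triangle space", "synonym", "scalene triangle space"),
    ("interscalene triangle space", "synonym", "interscalene triangle compartment space"),
]


def relationships_for(term, triples=TRIPLES):
    """Baseline query: return every relationship whose subject is `term`,
    grouped by relation name."""
    grouped = defaultdict(list)
    for subject, relation, obj in triples:
        if subject == term:
            grouped[relation].append(obj)
    return dict(grouped)


def synonyms_for(term, triples=TRIPLES):
    """Synonym query: a special case of the baseline query."""
    return relationships_for(term, triples).get("synonym", [])
```

Because the baseline query returns only the immediate relationships of a term, anything stored on a constituent part (such as a tendon) is not visible in its result.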

However, more sophisticated queries are often needed, since it may not be possible to obtain the desired result directly from the immediate relationships for a given term. Consider a second query: “Given a muscle, retrieve its bone attachment sites.” Because of the ontologic modeling of muscles as illustrated in Figure 1b, use of the baseline query described earlier does not return any attachment metadata. A query designed to return muscle attachment sites would instead parse the muscle part hierarchy for the constituent tendons to determine bone attachment sites. Submitting the term “biceps brachii muscle” to this query returns three results: “supraglenoid tubercle,” “area of origin of short head of biceps brachii” (a part of the coracoid process), and “radial tuberosity.” A third query performs the inverse: “Given a bone attachment site, retrieve the muscles that attach there.” Knowledge of muscle attachments as provided by these queries facilitates the identification of muscles as anatomic landmarks and potential sites of denervation in evaluating the brachial plexus.
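A minimal Python sketch of the attachment query and its inverse follows. It assumes a toy part hierarchy held in dictionaries; apart from "tendon of long head of biceps brachii" and the three attachment sites named above, the part names are hypothetical stand-ins, and the traversal shown is one plausible way to walk the hierarchy rather than the query logic actually used on the ontology server.

```python
# Toy "has-part" hierarchy. Part names other than the long head tendon
# are hypothetical and chosen only to make the example complete.
HAS_PART = {
    "biceps brachii muscle": [
        "long head of biceps brachii",
        "short head of biceps brachii",
        "distal tendon of biceps brachii",
    ],
    "long head of biceps brachii": ["tendon of long head of biceps brachii"],
    "short head of biceps brachii": ["tendon of short head of biceps brachii"],
}

# Attachments are recorded on tendons, not on the muscle itself.
ATTACHES_AT = {
    "tendon of long head of biceps brachii": ["supraglenoid tubercle"],
    "tendon of short head of biceps brachii": [
        "area of origin of short head of biceps brachii"
    ],
    "distal tendon of biceps brachii": ["radial tuberosity"],
}


def attachment_sites(muscle):
    """Walk the part hierarchy depth-first and collect attachment sites."""
    sites, stack, seen = [], [muscle], set()
    while stack:
        part = stack.pop()
        if part in seen:
            continue
        seen.add(part)
        for site in ATTACHES_AT.get(part, []):
            if site not in sites:
                sites.append(site)
        # reversed() keeps the visiting order the same as the listing order.
        stack.extend(reversed(HAS_PART.get(part, [])))
    return sites


def muscles_attaching_at(site, muscles):
    """Inverse query: which of the given muscles attach at `site`?"""
    return [m for m in muscles if site in attachment_sites(m)]
```

In this toy model, looking up the muscle directly in `ATTACHES_AT` finds nothing, which mirrors the reason the baseline query is insufficient for attachment metadata.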

A fourth query relates to anatomic spaces: “Given an anatomic space, retrieve its bounding structures as well as the structures contained within the space.” This query would indicate that, for example, the interscalene triangle space is bounded by the anterior and middle scalene muscles and contains the superior, middle, and inferior trunks of the brachial plexus as well as a portion of the subclavian artery. Familiarity with anatomic spaces such as the interscalene triangle is important in assessing the course of nerves and possible entrapment sites.
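The anatomic space query can be sketched in Python as a lookup that returns two lists. This is an illustrative model only: the `AnatomicSpace` class, the `bounded-by` label, and the companion function `spaces_containing` are assumptions introduced here, and the single entry reproduces the interscalene triangle example from the text (the subclavian artery entry stands for the portion of the artery within the space).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnatomicSpace:
    name: str
    bounded_by: tuple  # structures forming the boundaries of the space
    contains: tuple    # structures that course through the space


INTERSCALENE = AnatomicSpace(
    name="interscalene triangle space",
    bounded_by=("anterior scalene muscle", "middle scalene muscle"),
    contains=(
        "superior trunk of brachial nerve plexus",
        "middle trunk of brachial nerve plexus",
        "inferior trunk of brachial nerve plexus",
        "subclavian artery",
    ),
)

SPACES = {INTERSCALENE.name: INTERSCALENE}


def space_query(name):
    """Return the bounding structures and contents of an anatomic space."""
    space = SPACES[name]
    return {"bounded-by": list(space.bounded_by), "contains": list(space.contains)}


def spaces_containing(structure):
    """Hypothetical companion lookup: spaces that a structure passes through."""
    return [s.name for s in SPACES.values() if structure in s.contains]
```

A lookup such as `spaces_containing("middle trunk of brachial nerve plexus")` is the kind of reverse question that arises when tracing a nerve through potential entrapment sites.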

AIM Standard

To link anatomic structures with their imaging appearance, a mechanism is needed for tagging structures within images. Clinical image review systems have long provided users with the ability to create textual notes (ie, annotations) and graphical marks (ie, markup) on images, allowing radiologists to highlight relevant findings or measurements. However, these notes and marks were typically stored in proprietary formats that were neither portable between systems nor easily accessible with external applications. Over time, researchers and clinical users recognized the potential for making use of these data outside of the traditional image interpretation process. For example, quantitative imaging applications for tracking lesion size over time could parse measurements made on serial imaging examinations. To enable these types of applications, the AIM standard was developed as part of the cancer Biomedical Informatics Grid (caBIG) project of the National Cancer Institute, National Institutes of Health (40,41).

AIM provides a mechanism for representing image notes and marks in a form that can be accessed computationally, thereby making the semantic content of images computable. Specifically, AIM defines a standard schema (or data structure) in Extensible Markup Language (XML) for storing annotations and graphical markup from Digital Imaging and Communications in Medicine (DICOM) images, which may then be processed further with other applications. (For convenience, both annotations and graphical markup will hereafter be referred to as “annotations.”) In this way, AIM annotations constitute the means of making the semantic meaning within images explicit and machine accessible. Thus, for example, a shape drawn around the anterior scalene muscle is no longer simply a polygon within an image, but a representation of a particular anatomic structure with specific properties that can be retrieved from an ontology. These capabilities are already being applied to problems in radiology reporting (42), oncologic lesion tracking (43), and automatic image retrieval based on lesion similarity (44).
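To make the idea of a computable annotation concrete, the Python sketch below parses a deliberately simplified XML fragment. The element and attribute names (`annotation`, `structure`, `imageReference`, `sopInstanceUID`, `polygon`, `point`) and the UID value are hypothetical and do not reproduce the actual AIM schema, which is considerably richer; the point is only that a drawn shape, once stored in structured form, carries both geometry and the identity of the structure it outlines.

```python
import xml.etree.ElementTree as ET

# Simplified stand-in for an annotation file. NOT the real AIM schema.
SIMPLIFIED_ANNOTATION = """
<annotation structure="anterior scalene muscle">
  <imageReference sopInstanceUID="1.2.3.4.5"/>
  <polygon>
    <point x="10" y="12"/>
    <point x="30" y="12"/>
    <point x="30" y="40"/>
    <point x="10" y="40"/>
  </polygon>
</annotation>
"""


def parse_annotation(xml_text):
    """Extract the labeled structure, the referenced image, and the outline."""
    root = ET.fromstring(xml_text.strip())
    return {
        "structure": root.get("structure"),
        "image_uid": root.find("imageReference").get("sopInstanceUID"),
        "polygon": [
            (float(p.get("x")), float(p.get("y"))) for p in root.iter("point")
        ],
    }
```

Once parsed, the `structure` value can serve as the key for an ontology lookup, which is what turns the polygon into a representation of a named anatomic entity.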

Ontology-based Imaging Atlas

By combining RadLex and ontology queries with the computable image annotations made possible with AIM, we link knowledge about anatomic structures with their imaging appearance to allow ontology-assisted image navigation. Using AIM annotations to identify specific structures within images, our imaging atlas presents the conceptual material of RadLex through the images themselves. The resulting application allows users to explore the MR imaging appearance of anatomic structures, investigate the relationships between these structures and surrounding structures, and use these relationships to navigate from one portion of the atlas to another. This is particularly relevant for peripheral neuropathies because the assessment of neuromuscular abnormalities often depends on structural connectivity (eg, muscle innervation) and spatial relationships such as potential nerve entrapment sites.

Atlas Implementation

To construct an ontology-based imaging atlas of the brachial plexus, we first identified a set of reference MR images. Next, we created a collection of AIM annotations and implemented the RadLex queries described earlier. A Web-based application architecture was developed to create an interface to these images, annotations, and ontologic material.

Reference MR Images

In an institutional review board–approved retrospective review, 29 consecutive 3.0-T MR imaging examinations of the brachial plexus performed on Magnetom Trio or Magnetom Verio systems (Siemens Medical Solutions, Erlangen, Germany) between July 2009 and January 2011 were identified. One particular examination was selected for its depiction of the normal MR imaging appearance of nerves, muscles, and anatomic spaces. Four specific sequences (total of 296 images) from this examination were selected for use in the imaging atlas: (a) a three-dimensional T2-weighted SPACE (sampling perfection with application optimized contrasts by using different flip angle evolutions) sequence (repetition time msec/echo time msec = 1000/97, voxel dimensions = 1.0 × 1.0 × 1.0 mm); (b) a three-dimensional T2-weighted spectral selection attenuated inversion-recovery sequence (1510/97, voxel dimensions = 0.98 × 0.98 × 1.0 mm); (c) a sagittal short inversion time inversion-recovery sequence (2600/18, inversion time = 220 msec, pixel dimensions = 0.86 × 0.86 mm, section thickness = 3 mm, section interval = 4 mm); and (d) an axial T1-weighted sequence (800/12, pixel dimensions = 0.40 × 0.40 mm, section thickness = 3 mm, section interval = 4 mm). In consultation with information technology staff, we identified a set of institution-specific DICOM tags that could contain patient information. These tags were removed using the DICOM processing package Ruby DICOM (45), which provides DICOM anonymization functionality.

Image Annotation Workflow

Based in part on the Radiological Society of North America’s structured reporting template for brachial plexus MR imaging (46), a list of 46 key anatomic structures relevant to the interpretation of these examinations was created. This list included 16 nerve segments, nine bone structures, nine muscles, four vascular segments, and eight anatomic spaces (Table). In addition, one ligament (the coracoclavicular ligament) and two bone structures (the coracoid process and the supraglenoid tubercle) were included as supplemental anatomic landmarks. All 49 structures were annotated in the reference images.

Key Anatomic Structures Seen at MR Imaging of the Brachial Plexus

Anonymized reference MR images were imported into the open source DICOM viewing application OsiriX (47). A plug-in within OsiriX called iPAD (48) provides AIM-compliant annotation capabilities and was used to create graphical regions of interest outlining specific anatomic structures. Each region of interest was named according to the RadLex identifier of the corresponding structure. (Note that RadLex assigns a unique RadLex identifier to each of its terms. For example, the anterior scalene muscle is associated with RadLex identifier 7496.) RadLex does not currently include level-by-level foraminal modeling for the spinal neural foramina, and a placeholder identifier was used instead for these anatomic spaces. Similarly, RadLex does not currently model the anterior ramus of the T1 spinal nerve, and a placeholder identifier was used for this structure as well. These placeholders serve to identify these structures in the atlas while also marking them as ineligible for ontology queries, since RadLex does not currently contain information about them. For each annotation, iPAD generated an AIM-compliant XML file with region-of-interest geometry and a corresponding reference to the relevant DICOM image. A total of 1047 AIM annotations were created.
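The naming convention lends itself to a simple eligibility check before any ontology query is issued. The Python sketch below is an assumption-laden illustration: the text does not state how placeholder identifiers are written, so the `PLACEHOLDER-` prefix and both function names are hypothetical.

```python
from typing import Optional

# Hypothetical convention: placeholder names carry this prefix.
PLACEHOLDER_PREFIX = "PLACEHOLDER-"


def radlex_id(annotation_name: str) -> Optional[int]:
    """Return the numeric RadLex identifier, or None for a placeholder."""
    if annotation_name.startswith(PLACEHOLDER_PREFIX):
        return None
    return int(annotation_name)


def queryable(annotation_names):
    """Keep only annotations that may be submitted to ontology queries."""
    return [name for name in annotation_names if radlex_id(name) is not None]
```

Under this convention, a placeholder annotation still appears in the atlas and can be selected, but it is filtered out before a request would reach the ontology server.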

Web-based Application Architecture

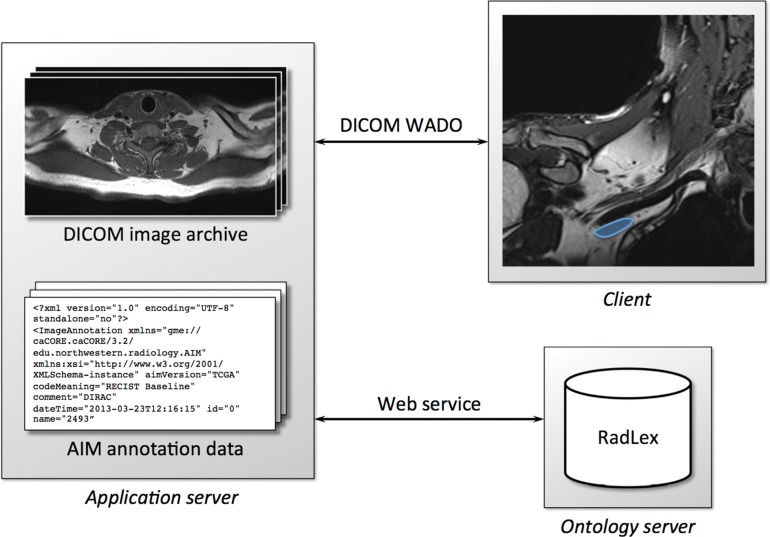

Using these reference images and AIM annotations, we developed a Web-based application architecture for ontology-assisted image navigation (Fig 2). A Windows Server 2008 R2 intranet server (Microsoft, Redmond, Wash) was configured with industry-standard Web tools (the Apache Web server, the MySQL database system, and the PHP scripting language).

Figure 2.

Web-based application architecture for an ontology-driven imaging atlas. The application server manages images in a DICOM archive and maintains a set of image annotations encoded with the AIM standard. The application server responds to client requests for images using the DICOM Web Access to DICOM Persistent Objects (WADO) protocol. RadLex data are dynamically retrieved from a separate ontology server through a Web services interface. In this example, the client has used DICOM WADO to display a coronal T2-weighted SPACE MR image in the region of the brachial plexus (blue = axillary vein).

The server portion of the system performs three primary functions. First, it manages the reference images and AIM annotations. Images are stored on the server using the open source DCM4CHE DICOM archive (49), and annotations are kept in a MySQL database. Second, the server responds to client queries using the WADO protocol (50,51) to communicate imaging data. WADO is part of the DICOM standard and provides for DICOM image transfer using Web services. Web services are a widespread form of programmatic interaction between applications using standard Web communications—specifically, the hypertext transfer protocol. This reliance on standard Web technology makes Web services robust and easy to use. Third, the server mediates client requests for ontologic data. When a client requests information about a structure of interest, the server invokes a Web-based ontology server, Query Integrator (52), which provides access to RadLex content and query functionality. Like WADO, Query Integrator uses a Web services interface, returning ontologic terms and relationships in XML format.
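As a rough illustration of the image retrieval step, the Python sketch below builds a URL-style WADO request. The query parameter names (`requestType`, `studyUID`, `seriesUID`, `objectUID`, `contentType`) are those defined for URI-based WADO in the DICOM standard; the base URL and the UID values are hypothetical, and the sketch only constructs the request without contacting any server.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def wado_url(base_url, study_uid, series_uid, object_uid,
             content_type="image/jpeg"):
    """Build a WADO request URL for a single DICOM object."""
    query = urlencode({
        "requestType": "WADO",       # fixed value required by the standard
        "studyUID": study_uid,
        "seriesUID": series_uid,
        "objectUID": object_uid,     # SOP instance UID of the image
        "contentType": content_type, # rendered format requested by the client
    })
    return f"{base_url}?{query}"


# Hypothetical server address and UIDs, for illustration only.
example = wado_url("http://atlas.example.org/wado", "1.2.3", "1.2.3.4", "1.2.3.4.5")
```

Requesting a rendered format such as JPEG lets a browser display the image directly, whereas a content type of `application/dicom` would return the original DICOM object.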

Atlas Interface

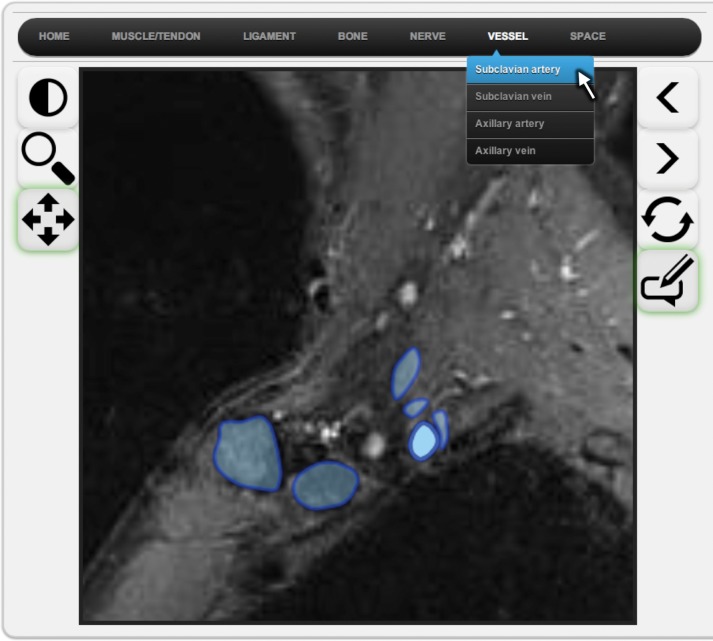

The user interface was developed with the JavaScript programming language for cross-platform functionality, using the Pixastic (53) and KineticJS (54) JavaScript libraries for image manipulation and annotation graphics. This browser-based interface provides several standard tools for image manipulation, including stack scrolling, window level adjustment, image zoom, image pan, and annotation toggle. The interface also provides annotation navigation functionality for viewing and selecting AIM annotations, which consists of three separate mechanisms. First, a series of six drop-down menus organized by anatomic category is arrayed along the top of the interface (Fig 3a). Each menu contains items corresponding to specific annotated structures, for a total of 49 selectable menu items. Selecting one of these menu items causes a representative image to be displayed and the corresponding annotation for the selected structure to be highlighted.

Figure 3.

Browser-based interface to ontology-driven imaging atlas. (a) Drop-down menus (top) provide a mechanism for selecting structures by category and name (the subclavian artery has been selected in this example). Image annotations are shown in the atlas in blue, with the currently selected structure highlighted in brighter opaque blue and other available annotations shown in darker transparent blue. (b) Any available annotation may be selected by moving the cursor over the structure of interest (the coracoclavicular ligament has been selected in this example). Annotations may be toggled on and off to more fully reveal the underlying imaging appearance. Additional information about a structure of interest may be obtained by means of a pop-up menu, which is invoked with the right mouse button. (c) Pop-up menu for the supraspinatus muscle with attachment information derived from RadLex.

Second, on any given image, users may explore annotations by graphically selecting structures (Fig 3b). Moving the cursor over an annotation, followed by a left mouse click, updates the display so that the selected region is highlighted.
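Graphical selection amounts to deciding which annotation outline contains the cursor position. The Python sketch below shows a generic ray-casting (even-odd) point-in-polygon test; it is offered as an illustration of the underlying geometry, not as a description of how the atlas or its graphics library performs hit detection, and the function names are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: count how many polygon edges a horizontal ray
    cast to the right of (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def annotation_at(x, y, annotations):
    """Return the name of the first annotation whose outline contains the
    cursor position, or None if the cursor is over no annotation."""
    for name, polygon in annotations:
        if point_in_polygon(x, y, polygon):
            return name
    return None
```

When annotations overlap, the order of the list determines which one is selected, so a real interface would need a rule such as preferring the smallest enclosing outline.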

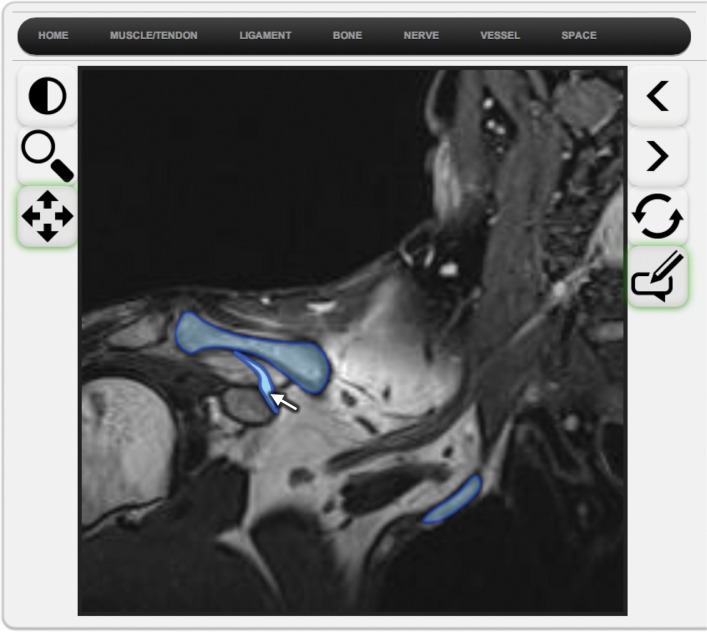

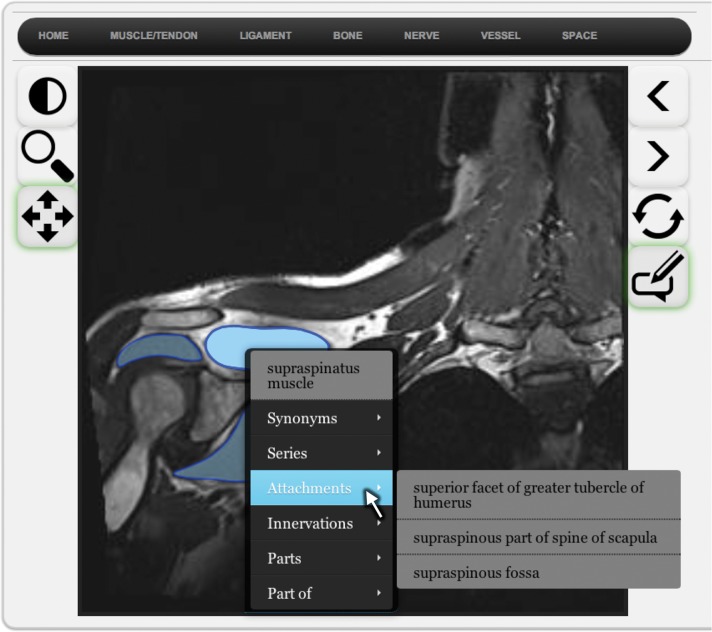

Third, for any annotation within a given image, information about the corresponding anatomic structure may be accessed with a right mouse click (Fig 3c). This action causes a pop-up menu to appear, the contents of which are dynamically assembled by the application server through Web service calls to Query Integrator. This pop-up menu shows the RadLex preferred name for the structure, any synonymous terms, and a list of the image series containing one or more annotations of the structure. Additional content within the pop-up menu varies with the type of structure (eg, attachment information for muscles, boundary information for anatomic spaces). Any item in the pop-up menu that itself corresponds to one of the 49 annotated structures is also selectable, and selecting an item causes the display to update with that item as the structure of interest. Similarly, selecting any of the image series names in a pop-up menu updates the display to reflect the selected imaging sequence. In this way, pop-up menus provide links to other relevant structures and images within the atlas.

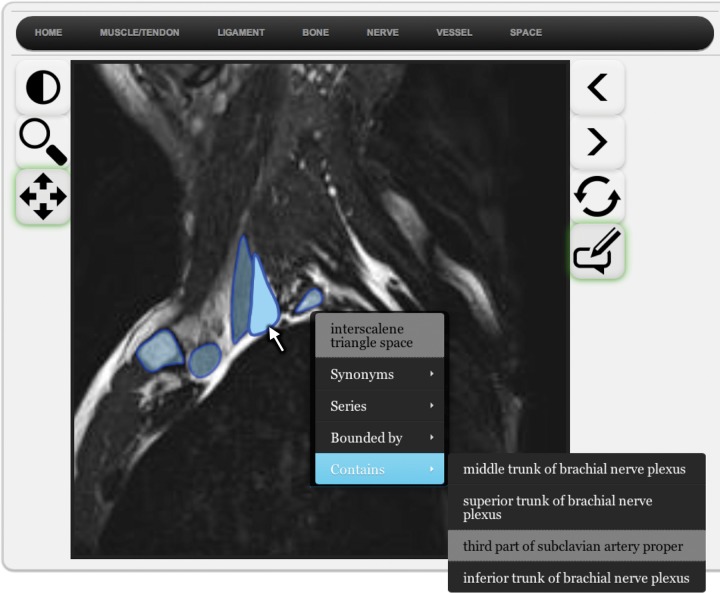

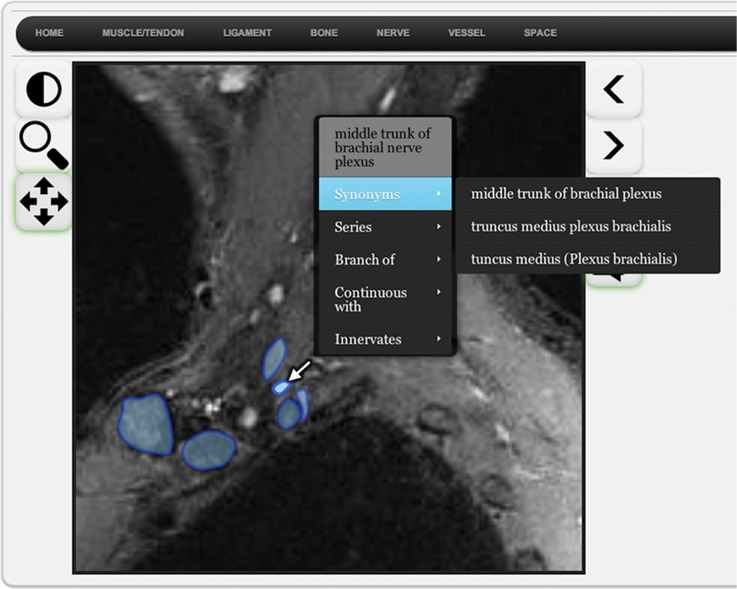

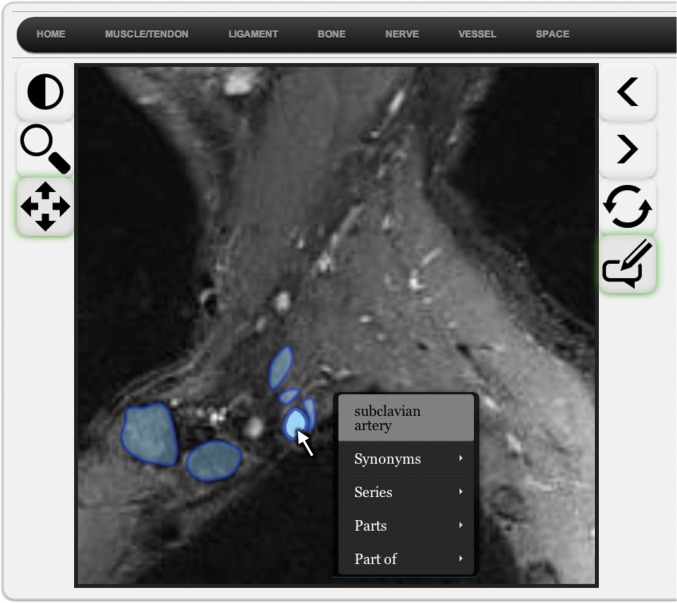

These three annotation navigation mechanisms enable complementary modes of image viewing. Suppose a user knows that the brachial plexus courses through the interscalene triangle and wishes to explore this space further. The drop-down menu system allows this space to be selected by name. The user may then invoke the right-click pop-up menu, revealing that the interscalene triangle contains the superior, middle, and inferior trunks of the brachial plexus, along with a portion of the subclavian artery. Selecting “middle trunk of brachial nerve plexus” from the Contains submenu (Fig 4a) then navigates to a representative image showing the middle trunk (Fig 4b). Graphical browsing may then be used in this image to show that the middle trunk courses superior to the subclavian artery (Fig 4c). In this way, the interface allows users to examine the imaging appearance of specific structures, review the ontologic and spatial relationships between the structures, and move from one structure of interest to other related areas of the atlas.

Figure 4.

Ontology-assisted image navigation. (a) Pop-up menu reveals that the superior, middle, and inferior trunks of the brachial plexus, as well as a portion of the subclavian artery, course through the interscalene triangle. (b) Entries in the pop-up menu system are themselves selectable, and choosing the middle trunk of the brachial plexus links to a representative image and annotation. (c) Graphical annotation browsing may then be used to demonstrate that the middle trunk of the brachial plexus lies superior to the subclavian artery. In this way, the application facilitates exploration of the ontologic and spatial relationships between structures.

Discussion

We have described an approach for integrating the anatomic knowledge in RadLex with a Web-based ontology server and AIM annotations to create an MR imaging atlas of the brachial plexus with ontology-assisted image navigation. The incorporation of RadLex terms into a musculoskeletal imaging atlas has been reported previously (30,55). Our use of anatomic relationships and query results builds on this earlier work by incorporating anatomic metadata into the atlas interface.

Web Services and Performance

Use of the Web-based Query Integrator ontology server constitutes a key design decision with benefits and drawbacks. Development of ontology-based applications requires access to the source ontology and a mechanism for specifying and executing query logic. Both ontology representation and query languages are components of Semantic Web technology. However, the ongoing evolution of existing standards for both representing and querying ontologies creates a challenge for ontology application development. By relying on the remote Query Integrator server to manage ontology formats, content version updates (there were five releases of RadLex between February 2013 and February 2014), and query semantics, our approach is decoupled from these technical issues.

The primary potential disadvantage of our approach is related to performance. Because it relies on a remote server for ontology content, the application is subject to latency related to Web-based transactions. For performance testing, we made a series of 14,200 test calls to the Query Integrator Web service interface and measured response times for each call. Mean response time was 217.6 msec (range, 189–1233 msec; standard deviation, 30.4 msec). Although this latency is not prohibitive, caching query results would improve the responsiveness of the interface.
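One simple form of the caching mentioned above is sketched here in Python. The class and method names are hypothetical, and `fetch` stands in for whatever function performs the remote Web service call; the sketch only demonstrates that repeated requests for the same query and term need not incur the network round trip more than once.

```python
class CachedOntologyClient:
    """Wrap a remote query function with an in-memory result cache."""

    def __init__(self, fetch):
        self._fetch = fetch      # callable(query_name, term) -> result
        self._cache = {}
        self.remote_calls = 0    # counts calls that actually hit the server

    def query(self, query_name, term):
        key = (query_name, term)
        if key not in self._cache:
            self.remote_calls += 1
            self._cache[key] = self._fetch(query_name, term)
        return self._cache[key]

    def clear(self):
        """Discard cached results, eg, after a new ontology release."""
        self._cache.clear()
```

With a mean response time on the order of 200 msec per call, every cache hit removes roughly that much delay from a pop-up menu. The trade-off is staleness: because the ontology content is updated several times a year, cached results would need to be cleared or expired periodically.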

Atlas Extensions

The ontology-based atlas approach should prove useful in other areas characterized by complex anatomic relationships. With a modularized implementation and its use of DICOM image handling mechanisms, the atlas can be extended to other anatomic sites, imaging modalities, and organ systems. New content modules are created by supplying new images and annotations. This material is uploaded to the server with a set of automated scripts, which makes the process straightforward. We are currently working on a knee MR imaging module.

Because the server uses a DICOM archive and AIM uses DICOM image references, the atlas is capable of accepting any standard medical images. Furthermore, non-DICOM images may be converted into DICOM format using a variety of tools (including OsiriX), enabling the atlas to make use of other types of images. Diagrams and illustrations are nonradiologic images that are widely used in anatomic atlases to simplify and emphasize specific anatomic features. Art applied to peripheral nerve MR imaging has been described (56), and we plan future work to incorporate annotated illustrations.

There is also potential for expanding the ontology queries to address specific clinical questions. For example, a classic clinical problem in neurology relates to the inference of a likely site of nerve injury based on a pattern of muscle deficits. Using the muscle innervation relationships now present in RadLex, we are developing a query to compute possible sites of nerve injury, given a specific distribution of muscle denervation changes.
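The kind of inference described above can be illustrated with a toy example. The sketch below is an assumption-laden simplification and not the query under development: the innervation table is a small hand-written sample of textbook relationships rather than content retrieved from RadLex, and the function name `candidate_nerves` is hypothetical.

```python
from typing import Dict, List, Set

# Illustrative, simplified innervation table (nerve -> muscles supplied).
# Hand-written for this sketch; not extracted from RadLex.
INNERVATION: Dict[str, Set[str]] = {
    "suprascapular nerve": {"supraspinatus", "infraspinatus"},
    "axillary nerve": {"deltoid", "teres minor"},
    "musculocutaneous nerve": {"coracobrachialis", "biceps brachii", "brachialis"},
}


def candidate_nerves(denervated: Set[str]) -> List[str]:
    """Return nerves whose territory contains every denervated muscle."""
    if not denervated:
        return []
    return sorted(
        nerve
        for nerve, muscles in INNERVATION.items()
        if denervated <= muscles  # subset test: all affected muscles are supplied
    )


single_nerve = candidate_nerves({"supraspinatus", "infraspinatus"})
no_single_nerve = candidate_nerves({"deltoid", "biceps brachii"})
```

Here `single_nerve` contains only the suprascapular nerve, whereas `no_single_nerve` is empty because no single terminal nerve in the table supplies both muscles. A clinically useful query would also need to follow the branching relationships proximally (cords, divisions, trunks, and roots) to find a shared segment, which this sketch does not attempt.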

Other Future Work

With regard to clinical evaluation, assessments of technology-based systems for education and training have often made use of quasi-experimental study designs (57), which typically involve one or more measurements over time. These measurements may rely on tests of knowledge (58), questionnaires to gauge user opinion (59), or direct measures of clinical performance (60,61) to assess the efficacy of an educational system. We are developing a quiz-based evaluation of the atlas to assess its impact on trainees’ understanding of brachial plexus MR imaging.

In addition, by allowing visual inspection of ontology content, this application provides a mechanism for ontology curation (ie, quality assurance). Although ontology management tools exist for this purpose (62), an image-based interface may allow greater participation by clinical experts in reviewing and developing RadLex content.

Conclusion

The interpretation of MR neurographic examinations depends on specialized anatomic knowledge, and RadLex now includes formal modeling of musculoskeletal anatomy. Using this RadLex content, together with AIM annotations, we have developed an ontology-based MR imaging atlas of the brachial plexus. The resulting application allows users to explore both images and anatomic relationships, thereby providing a reference resource for radiologists. Future work includes development of additional neuromuscular ontology queries, creation of new atlas modules, and incorporation of medical illustrations.

Acknowledgments

The authors gratefully acknowledge Avneesh Chhabra, MD, for his assistance with MR neurographic techniques and Beverly Collins, PhD, for her work in RadLex curation.

Recipient of a Trainee Research Prize for an education exhibit at the 2011 RSNA Annual Meeting.

K.C.W., D.L.R., and J.A.C. have provided disclosures (see “Disclosures of Conflicts of Interest”); all other authors have disclosed no relevant relationships.

Funding: The work was supported by the National Institutes of Health [grant number HHSN2682000800020C].

Disclosures of Conflicts of Interest. D.L.R.: Activities related to the present article: grant from the National Institutes of Health. Activities not related to the present article: disclosed no relevant relationships. Other activities: disclosed no relevant relationships. J.A.C.: Activities related to the present article: grant from the Society for Imaging Informatics in Medicine. Activities not related to the present article: grants from Siemens Medical Systems, Carestream Health, and Toshiba; fees from BestDoctors, BioClinica, Pfizer, Siemens Medical Systems, GE Healthcare, and Medtronic; nonfinancial support from Siemens Medical Systems, GE Healthcare, and Carestream Health. Other activities: disclosed no relevant relationships. K.C.W.: Activities related to the present article: grants from the Radiological Society of North America and the National Institute of Biomedical Imaging and Bioengineering (grant HHSN2682000800020C). Activities not related to the present article: grant from the Society for Imaging Informatics in Medicine, cofounder of DexNote. Other activities: disclosed no relevant relationships.

Abbreviations:

- AIM = Annotation and Image Markup

- DICOM = Digital Imaging and Communications in Medicine

- FMA = Foundational Model of Anatomy

- SPACE = sampling perfection with application optimized contrasts by using different flip angle evolutions

- WADO = Web Access to DICOM Persistent Objects

- XML = Extensible Markup Language

References

- 1. Fornage BD. Peripheral nerves of the extremities: imaging with US. Radiology 1988;167(1):179–182.

- 2. Blair DN, Rapoport S, Sostman HD, Blair OC. Normal brachial plexus: MR imaging. Radiology 1987;165(3):763–767.

- 3. Filler AG, Kliot M, Howe FA, et al. Application of magnetic resonance neurography in the evaluation of patients with peripheral nerve pathology. J Neurosurg 1996;85(2):299–309.

- 4. Maravilla KR, Bowen BC. Imaging of the peripheral nervous system: evaluation of peripheral neuropathy and plexopathy. AJNR Am J Neuroradiol 1998;19(6):1011–1023.

- 5. Stuart RM, Koh ES, Breidahl WH. Sonography of peripheral nerve pathology. AJR Am J Roentgenol 2004;182(1):123–129.

- 6. Andreisek G, Crook DW, Burg D, Marincek B, Weishaupt D. Peripheral neuropathies of the median, radial, and ulnar nerves: MR imaging features. RadioGraphics 2006;26(5):1267–1287.

- 7. Donovan A, Rosenberg ZS, Cavalcanti CF. MR imaging of entrapment neuropathies of the lower extremity. Part 2. The knee, leg, ankle, and foot. RadioGraphics 2010;30(4):1001–1019.

- 8. Zhang Z, Meng Q, Chen Y, et al. 3-T imaging of the cranial nerves using three-dimensional reversed FISP with diffusion-weighted MR sequence. J Magn Reson Imaging 2008;27(3):454–458.

- 9. Takahara T, Hendrikse J, Yamashita T, et al. Diffusion-weighted MR neurography of the brachial plexus: feasibility study. Radiology 2008;249(2):653–660.

- 10. Chhabra A, Soldatos T, Subhawong TK, et al. The application of three-dimensional diffusion-weighted PSIF technique in peripheral nerve imaging of the distal extremities. J Magn Reson Imaging 2011;34(4):962–967.

- 11. Qayyum A, MacVicar AD, Padhani AR, Revell P, Husband JE. Symptomatic brachial plexopathy following treatment for breast cancer: utility of MR imaging with surface-coil techniques. Radiology 2000;214(3):837–842.

- 12. Zhou L, Yousem DM, Chaudhry V. Role of magnetic resonance neurography in brachial plexus lesions. Muscle Nerve 2004;30(3):305–309.

- 13. Saifuddin A. Imaging tumours of the brachial plexus. Skeletal Radiol 2003;32(7):375–387.

- 14. Aralasmak A, Karaali K, Cevikol C, Uysal H, Senol U. MR imaging findings in brachial plexopathy with thoracic outlet syndrome. AJNR Am J Neuroradiol 2010;31(3):410–417.

- 15. Gierada DS, Erickson SJ, Haughton VM, Estkowski LD, Nowicki BH. MR imaging of the sacral plexus: normal findings. AJR Am J Roentgenol 1993;160(5):1059–1065.

- 16. Petchprapa CN, Rosenberg ZS, Sconfienza LM, Cavalcanti CF, Vieira RL, Zember JS. MR imaging of entrapment neuropathies of the lower extremity. Part 1. The pelvis and hip. RadioGraphics 2010;30(4):983–1000.

- 17. Soldatos T, Andreisek G, Thawait GK, et al. High-resolution 3-T MR neurography of the lumbosacral plexus. RadioGraphics 2013;33(4):967–987.

- 18. Husarik DB, Saupe N, Pfirrmann CW, Jost B, Hodler J, Zanetti M. Elbow nerves: MR findings in 60 asymptomatic subjects—normal anatomy, variants, and pitfalls. Radiology 2009;252(1):148–156.

- 19. Miller TT, Reinus WR. Nerve entrapment syndromes of the elbow, forearm, and wrist. AJR Am J Roentgenol 2010;195(3):585–594.

- 20. Lee EY, Margherita AJ, Gierada DS, Narra VR. MRI of piriformis syndrome. AJR Am J Roentgenol 2004;183(1):63–64.

- 21. Chhabra A, Williams EH, Subhawong TK, et al. MR neurography findings of soleal sling entrapment. AJR Am J Roentgenol 2011;196(3):W290–W297.

- 22. Chhabra A, Chalian M, Soldatos T, et al. 3-T high-resolution MR neurography of sciatic neuropathy. AJR Am J Roentgenol 2012;198(4):W357–W364.

- 23. Belkas JS, Shoichet MS, Midha R. Peripheral nerve regeneration through guidance tubes. Neurol Res 2004;26(2):151–160.

- 24. Chhabra A, Williams EH, Wang KC, Dellon AL, Carrino JA. MR neurography of neuromas related to nerve injury and entrapment with surgical correlation. AJNR Am J Neuroradiol 2010;31(8):1363–1368.

- 25. Stoller DW, ed. Stoller’s atlas of orthopaedics and sports medicine. Baltimore, Md: Lippincott Williams & Wilkins, 2008.

- 26. Manaster BJ, ed. Diagnostic and surgical imaging anatomy: musculoskeletal. Salt Lake City, Utah: Amirsys, 2007.

- 27. Mohana-Borges AVR, Chung C. Anatomy of upper extremity joints with cadaveric correlation. In: Chung CB, Steinbach LS, eds. MRI of the upper extremity: shoulder, elbow, wrist and hand. Philadelphia, Pa: Lippincott Williams & Wilkins, 2010; 2–184.

- 28. StatDx. http://my.statdx.com. Accessed July 11, 2014.

- 29. Imaios. http://www.imaios.com/en/. Accessed July 11, 2014.

- 30. Stanford MSK MRI atlas. http://www.xrayhead.com. Accessed July 11, 2014.

- 31. Berners-Lee T, Hendler J. The semantic web. Scientific American, May 2001; 29–37.

- 32. National Center for Biomedical Ontology. http://www.bioontology.org. Accessed July 11, 2014.

- 33. Arp R, Romagnoli C, Chhem RK, Overton JA. Radiological and biomedical knowledge integration: the ontological way. In: Chhem RK, Hibbert KM, Deven T, eds. Radiology education: the scholarship of teaching and learning. Berlin, Germany: Springer-Verlag, 2009; 87–104.

- 34. Staab S, Studer R, eds. Handbook on ontologies. 2nd ed. Berlin, Germany: Springer, 2009.

- 35. Rosse C, Mejino JL Jr. A reference ontology for biomedical informatics: the Foundational Model of Anatomy. J Biomed Inform 2003;36(6):478–500.

- 36. Teitz C, Graney D. A musculoskeletal atlas of the human body. Seattle, Wash: University of Washington, 2003.

- 37. Langlotz CP. RadLex: a new method for indexing online educational materials. RadioGraphics 2006;26(6):1595–1597.

- 38. Rubin DL. Creating and curating a terminology for radiology: ontology modeling and analysis. J Digit Imaging 2008;21(4):355–362.

- 39. Mejino JL, Rubin DL, Brinkley JF. FMA-RadLex: an application ontology of radiological anatomy derived from the Foundational Model of Anatomy reference ontology. In: Proceedings, American Medical Informatics Association Fall Symposium 2008. Washington, DC: American Medical Informatics Association, 2008; 465–469.

- 40. Channin DS, Mongkolwat P, Kleper V, Rubin DL. The annotation and image mark-up project. Radiology 2009;253(3):590–592.

- 41. Channin DS, Mongkolwat P, Kleper V, Sepukar K, Rubin DL. The caBIG Annotation and Image Markup project. J Digit Imaging 2010;23(2):217–225.

- 42. Zimmerman SL, Kim W, Boonn WW. Informatics in radiology: automated structured reporting of imaging findings using the AIM standard and XML. RadioGraphics 2011;31(3):881–887.

- 43. Abajian AC, Levy M, Rubin DL. Informatics in radiology: improving clinical work flow through an AIM database: a sample web-based lesion tracking application. RadioGraphics 2012;32(5):1543–1552.

- 44. Rubin DL, Napel S. Imaging informatics: toward capturing and processing semantic information in radiology images. Yearb Med Inform 2010:34–42.

- 45. Ruby DICOM. http://rubygems.org/gems/dicom. Accessed July 11, 2014.

- 46. RSNA Informatics Reporting. “MR Brachial Plexus” template. http://www.radreport.org/template/0000044. Accessed July 11, 2014.

- 47. OsiriX imaging software. http://www.osirix-viewer.com. Accessed July 11, 2014.

- 48. Rubin DL, Rodriguez C, Shah P, Beaulieu C. iPAD: semantic annotation and markup of radiological images. In: Proceedings, American Medical Informatics Association Fall Symposium 2008. Washington, DC: American Medical Informatics Association, 2008; 626–630.

- 49. Open source clinical image and object management. http://www.dcm4che.org. Accessed July 11, 2014.

- 50. DICOM Part 18: Web Access to DICOM Persistent Objects. http://medical.nema.org/Dicom/2011/11_18pu.pdf. Accessed July 11, 2014.

- 51. Lipton P, Nagy P, Sevinc G. Leveraging Internet technologies with DICOM WADO. J Digit Imaging 2012;25(5):646–652.

- 52. Brinkley JF, Detwiler LT; Structural Informatics Group. A query integrator and manager for the query web. J Biomed Inform 2012;45(5):975–991.

- 53. Pixastic. http://www.pixastic.com. Accessed July 11, 2014.

- 54. KineticJS. http://www.kineticjs.com. Accessed July 11, 2014.

- 55. Ismail A, Do B, Wu A, Maley J, Biswal S. Radiology atlas: a radiology ontology atlas based on RSNA’s RadLex [abstr]. In: Radiological Society of North America Scientific Assembly and Annual Meeting Program. Oak Brook, Ill: Radiological Society of North America, 2008; 914.

- 56. Trueblood E, Lees G, Wang KC, del Grande F, Carrino JA, Chhabra A. Art applied to magnetic resonance neurography: demystifying complex LS plexus branch anatomy [abstr]. In: Radiological Society of North America Scientific Assembly and Annual Meeting Program. Oak Brook, Ill: Radiological Society of North America, 2012; 353.

- 57. Harris AD, McGregor JC, Perencevich EN, et al. The use and interpretation of quasi-experimental studies in medical informatics. J Am Med Inform Assoc 2006;13(1):16–23.

- 58. Lieberman G, Abramson R, Volkan K, McArdle PJ. Tutor versus computer: a prospective comparison of interactive tutorial and computer-assisted instruction in radiology education. Acad Radiol 2002;9(1):40–49.

- 59. Arya R, Morrison T, Zumwalt A, Shaffer K. Making education effective and fun: stations-based approach to teaching radiology and anatomy to third-year medical students. Acad Radiol 2013;20(10):1311–1318.

- 60. Carney PA, Abraham L, Cook A, et al. Impact of an educational intervention designed to reduce unnecessary recall during screening mammography. Acad Radiol 2012;19(9):1114–1120.

- 61. Frederick-Dyer KC, Faulkner AR, Chang TT, Heidel RE, Pasciak AS. Online training on the safe use of fluoroscopy can result in a significant decrease in patient dose. Acad Radiol 2013;20(10):1272–1277.

- 62. Rubin DL, Noy NF, Musen MA. Protégé: a tool for managing and using terminology in radiology applications. J Digit Imaging 2007;20(suppl 1):34–46.