Abstract

Humans possess an ability to perceive and synchronize movements to the beat in music (‘beat perception and synchronization’), and recent neuroscientific data have offered new insights into this beat-finding capacity at multiple neural levels. Here, we review and compare behavioural and neural data on temporal and sequential processing during beat perception and entrainment tasks in macaques (including direct neural recording and local field potential (LFP)) and humans (including fMRI, EEG and MEG). These abilities rest upon a distributed set of circuits that include the motor cortico-basal-ganglia–thalamo-cortical (mCBGT) circuit, where the supplementary motor cortex (SMA) and the putamen are critical cortical and subcortical nodes, respectively. In addition, a cortical loop between motor and auditory areas, connected through delta and beta oscillatory activity, is deeply involved in these behaviours, with motor regions providing the predictive timing needed for the perception of, and entrainment to, musical rhythms. The neural discharge rate and the LFP oscillatory activity in the gamma- and beta-bands in the putamen and SMA of monkeys are tuned to the duration of intervals produced during a beat synchronization–continuation task (SCT). Hence, the tempo during beat synchronization is represented by different interval-tuned cells that are activated depending on the produced interval. In addition, cells in these areas are tuned to the serial-order elements of the SCT. Thus, the underpinnings of beat synchronization are intrinsically linked to the dynamics of cell populations tuned for duration and serial order throughout the mCBGT. We suggest that a cross-species comparison of behaviours and the neural circuits supporting them sets the stage for a new generation of neurally grounded computational models for beat perception and synchronization.

Keywords: beat perception, beat synchronization, neurophysiology, modelling

1. Introduction

Beat perception is a cognitive ability that allows the detection of a regular pulse (or beat) in music and permits synchronous responding to this pulse during dancing and musical ensemble playing [1,2]. Most people can recognize and reproduce a large number of rhythms and can move in synchrony to the beat by rhythmically timed movements of different body parts (such as finger or foot taps, or body sway). Beat perception and synchronization can be considered fundamental musical traits that, arguably, played a decisive role in the origins of music [1]. A large proportion of human music is organized by a quasi-isochronous pulse and frequently also in a metrical hierarchy, in which the beats of one level are typically spaced at two or three times those of a faster level (i.e. in the most simple Western cases the tempo of one level is 1/2 (march metre) or 1/3 (waltz metre) that of the other), and human listeners can typically synchronize at more than one level of the metrical hierarchy [3,4]. Furthermore, movement on every second versus every third beat of an ambiguous rhythm pattern (one, for example, that can be interpreted as either a march or a waltz) biases listeners to interpret it as either a march or a waltz, respectively [5]. Therefore, the concept of ‘beat perception and synchronization’ implies both that (i) the beat does not always need to be physically present in order to be ‘perceived’ and (ii) the pulse evokes a particular perceptual pattern in the subject via active cognitive processes. Interestingly, humans do not need special training to perceive and motorically entrain to the beat in musical rhythms; rather it appears to be a robust, ubiquitous and intuitive behaviour. Indeed, even young infants perceive metrical structure [6], and if an infant is bounced on every second beat or on every third beat of an ambiguous rhythm pattern, the infant is biased to interpret the metre of the auditory rhythm in a manner consistent with how they were moved to it. 
Thus, although rhythmic entrainment is a complex phenomenon that depends on a dynamic interaction between the auditory and motor systems in the brain [7,8], it emerges very early in development without special training [9].

Recent studies support the notion that the timing mechanisms used in the brain depend on whether the time intervals in a sequence can be timed relative to a steady beat (relative, or beat-based, timing) or not (absolute, or duration-based, timing) [10–12]. In relative timing, time intervals are measured relative to a regular perceived beat [12], to which individuals are able to entrain. In absolute timing, the absolute duration of individual time intervals is encoded discretely, like a stopwatch, and no entrainment is possible. In this regard, the recent ‘gradual audio-motor hypothesis’ suggests that the complex entrainment abilities of humans evolved gradually across primates, with a duration-based timing mechanism present across the entire primate order [13,14] and a beat-based mechanism that (i) is most developed in humans, (ii) shows some but not all the properties in monkeys and (iii) is present at an intermediate level in chimpanzees [7]. For example, a myriad of studies have demonstrated that humans rhythmically entrain to isochronous stimuli with almost perfect tempo and phase matching [15]. Tempo/period matching means that the period of movement precisely equals the musical beat period. Phase matching means that rhythmic movements occur near or at the onset times of musical beats.

Macaques were able to produce rhythmic movements with proper tempo matching during a synchronization–continuation task (SCT), where they tapped on a push-button to produce six isochronous intervals in a sequence, three guided by stimuli, followed by three internally timed (without the sound) intervals [16]. Macaques reproduced the intervals with only slight underestimations (approx. 50 ms), and their inter-tap interval variability increased as a function of the target interval, as does human subjects' variability in the same task [16,17]. Crucially, these monkeys produce isochronous rhythmic movements by timing the pauses between movements rather than the movements' duration [18], reminiscent of human results [19]. These observations suggest that monkeys use an explicit timing strategy to perform the SCT, where the timing mechanism controls the duration of the movement pauses, which also trigger the execution of stereotyped pushing movements across each produced interval in the rhythmic sequence. However, the taps of macaques typically occur about 250 ms after stimulus onset, whereas humans show asynchronies close to zero (perfect phase matching) or even anticipate stimulus onset, moving slightly ahead of the beat [16]. The positive asynchronies in monkeys are shorter than their reaction times in a control task with random inter-stimulus intervals, suggesting that monkeys do have some temporal prediction capabilities during the SCT, but that these abilities are not as finely developed as in humans [16]. Subsequent studies have shown that monkeys' asynchronies can be reduced to about 100 ms with a different training strategy [7] and that monkeys show tempo matching to periods between 350 and 1000 ms, similar to what has been seen in humans [7,20]. Hence, it appears that macaques possess some but not all the components of the brain machinery used in humans for beat perception and synchronization [21,22].
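
The distinction between tempo matching and phase matching can be made concrete with a small calculation. The Python sketch below is illustrative only: the function name, the 600 ms beat period and the tap times are hypothetical values chosen to mimic the pattern described above (taps near or slightly ahead of the beat versus taps about 250 ms late), not data from the cited studies.

```python
from statistics import mean


def tempo_and_phase_matching(beat_onsets_ms, tap_times_ms):
    """Return (mean inter-tap interval, mean asynchrony), both in ms.

    Assumes taps and beats are already paired one-to-one, in order.
    A mean inter-tap interval equal to the beat period indicates tempo
    matching; a mean asynchrony near zero indicates phase matching.
    """
    if len(beat_onsets_ms) != len(tap_times_ms) or len(tap_times_ms) < 2:
        raise ValueError("need at least two paired beats and taps")
    inter_tap = [b - a for a, b in zip(tap_times_ms, tap_times_ms[1:])]
    asynchronies = [tap - beat for tap, beat in zip(tap_times_ms, beat_onsets_ms)]
    return mean(inter_tap), mean(asynchronies)


# Hypothetical isochronous beat with a 600 ms period.
beats = [0, 600, 1200, 1800]
# Hypothetical taps: one series slightly ahead of the beat, one lagging by 250 ms.
anticipating_taps = [-20, 580, 1180, 1780]
lagging_taps = [250, 850, 1450, 2050]

for label, taps in (("anticipating", anticipating_taps), ("lagging", lagging_taps)):
    period, asynchrony = tempo_and_phase_matching(beats, taps)
    print(f"{label}: mean inter-tap interval {period} ms, mean asynchrony {asynchrony} ms")
```

In this toy example both tap series match the tempo (a 600 ms mean inter-tap interval), but only the first comes close to phase matching; the second shows tempo matching with a large positive asynchrony.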

In order to understand how motoric entrainment to a musical beat is accomplished in the brain, it is important to compare neurophysiological and behavioural data across humans and non-human primates. Because humans appear to have uniquely good abilities for beat perception and synchronization, it is important to examine the basis of these abilities using functional magnetic resonance imaging (fMRI), electroencephalogram (EEG) and magnetoencephalogram (MEG) data. Using these techniques, a complementary set of studies has described the neural circuits and the potential neural mechanisms engaged during both rhythmic entrainment and beat perception in humans. In addition, the recording of multiple-site extracellular signals in behaving monkeys has provided important clues about the neural underpinnings of temporal and sequential aspects of rhythmic entrainment. Most of the neural data in monkeys have been collected during the SCT described above. Hence, spiking responses of cells and local field potential (LFP) recordings of monkeys during the synchronization phase of the SCT must be compared and contrasted with neural studies of beat perception and synchronization in humans, which use more macroscopic techniques such as EEG and brain imaging. In this paper, we review both human and monkey data and attempt to synthesize these different neuronal levels of explanation. We end by providing a set of desiderata for neurally grounded computational models of beat perception and synchronization.

2. Functional imaging of beat perception and entrainment in humans

Although studies with humans have used the SCT, generally with isochronous sequences, humans can also spontaneously entrain to non-isochronous sequences if they have a temporal structure that induces beat perception [23–25]. Sequences that induce a beat are often termed metric, and neural activity in response to them can be compared with activity in response to sequences that do not induce a beat (non-metric). Different researchers use somewhat different heuristics and terminology for creating metric and non-metric sequences, but the underlying idea is similar: simple metric rhythms induce clear beat perception, complex metric rhythms less so and non-metric rhythms not at all.

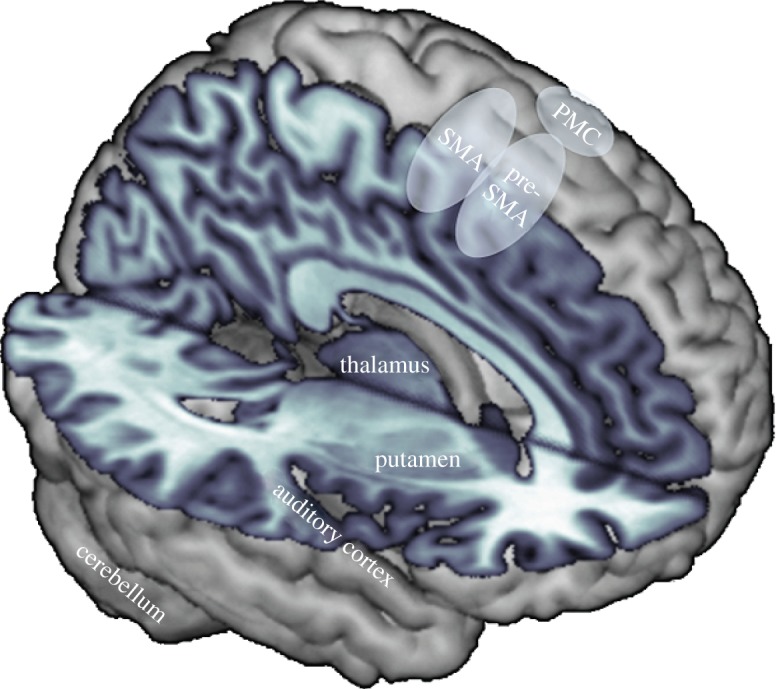

During beat perception and synchronization, activity is consistently observed in several brain areas. Subcortical structures include the cerebellum, the basal ganglia (most often the putamen, also caudate nucleus and globus pallidus) and thalamus, and cortical areas include the supplementary motor area (SMA) and pre-SMA, premotor cortex (PMC), as well as auditory cortex [12,26–37]. Less frequently, ventrolateral prefrontal cortex (VLPFC, sometimes labelled as anterior insula) and inferior parietal cortex activations are observed [30,34,38,39]. These areas are depicted in figure 1. Although the specific role of each area is still emerging, evidence is accumulating for distinctions between particular areas and networks. The basal ganglia and SMA appear to be involved in beat perception, whereas the cerebellum does not. Visual beat perception may be mediated by similar mechanisms to auditory beat perception. Beat perception also leads to greater functional connectivity, or interaction, between auditory and motor areas, particularly for musicians. We now consider this evidence in more detail.

Figure 1.

Brain areas commonly activated in functional magnetic resonance studies of rhythm perception (cut-out to show mid-sagittal and horizontal planes, tinted purple). Besides auditory cortex and the thalamus, many of the brain areas of the rhythm network are traditionally thought to be part of the motor system. SMA, supplementary motor area; PMC, premotor cortex. (Online version in colour.)

Several fMRI studies indicate that the cerebellum is more important for absolute than relative timing. During various perceptual judgement tasks, the cerebellum is more active for non-metric than metric rhythms [12,32]. Similarly, when participants tap along to sequences, cerebellar activity increases as metric complexity increases and the beat becomes more difficult to perceive [34]. The cerebellum also responds more during learning of non-metric than metric rhythms [40]. The fMRI findings are supported by findings from other methods: deficits in encoding of single durations, but not of metric sequences, occur when cerebellar function is disrupted, either by disease [41] or through transcranial magnetic stimulation [42]. Thus, although the cerebellum is commonly activated during rhythm tasks, the evidence indicates it is involved in absolute, not relative, timing and therefore does not play a significant role in beat perception or entrainment.

By contrast, relative timing appears to rely on the basal ganglia, specifically the putamen, and the SMA, as simple metric rhythms, compared with complex or non-metric rhythms, elicit greater putamen and SMA activity across various perception and production tasks [12,30,33,43,44]. For complex rhythms, SMA and putamen activity can be observed when a beat is eventually induced by several repetitions of the rhythm [45]. Importantly, increases in putamen and SMA activity during simple metric compared with non-metric rhythms cannot be attributed to non-metric rhythms simply being more difficult: even when task difficulty is manipulated to equate performance, greater putamen and SMA activity is still evident for simple metric rhythms [30]. Furthermore, the greater activity occurs even when participants are not instructed to attend to the rhythms [26], or when they attend to non-temporal aspects of the stimuli such as loudness [43] or pitch [32]. Thus, greater putamen activity cannot be attributed to metric rhythms simply facilitating performance on temporal tasks. Finally, when the basal ganglia are compromised, as in Parkinson's disease, discrimination performance with simple metric rhythms is selectively impaired. Overall, these findings indicate that the basal ganglia not only respond during beat perception, but are crucial for normal beat perception to occur.

Interestingly, basal ganglia activity does not appear to correlate with the speed of the beat that is perceived [28], instead showing maximal activity around 500 to 700 ms [46,47]. Findings from behavioural work suggest that beat perception is maximal at a preferred beat period near 500 ms [48,49]. Therefore, the basal ganglia are not simply responding equally to any perceived temporal regularity in the stimuli, but are most responsive to regularity at the frequency that best induces a sense of the beat.

Most fMRI studies of beat perception use auditory stimuli, as beat perception and synchronization occur more readily with auditory than visual stimuli [23,50–55]. Thus, it is unclear whether visual beat perception would also be mediated by basal ganglia structures. One study found that presenting visual sequences with isochronous timing elicited greater basal ganglia activity than randomly timed stimuli [56], although it is unclear if participants truly perceived a beat in the visual condition. Another way to induce visual beat perception is for visual rhythms to be ‘primed’, by earlier presentations of the same rhythm in the auditory modality. When metric visual rhythms are perceived after auditory rhythms, putamen activity is greater than when visual rhythms are presented without auditory priming. Moreover, the amount of that increase predicts whether a beat is perceived in the visual rhythm [44]. This suggests that when an internal representation of the beat is induced during the auditory presentation, the beat can be continued in subsequently presented visual rhythms, and this visual beat perception is mediated by the basal ganglia.

In addition to measuring regional activity, fMRI can be used to assess functional connectivity, or interactions, between brain areas. During beat perception, greater connectivity is observed between the basal ganglia and cortical motor areas, such as the SMA and PMC [32]. Furthermore, connectivity between PMC and auditory cortex was found in one study to increase as the salience of the beat in an isochronous sequence increased [29]. However, a later study using metric, not isochronous, sequences found that auditory–premotor connectivity increased as metric complexity increased (i.e. connectivity increased as perception of the beat decreased) [34]. Thus, further clarification is needed about the role of auditory–premotor connectivity in isochronous versus metric sequences. Musical training is also associated with greater connectivity between motor and auditory areas. During a synchronization task, musicians showed more bilateral patterns of auditory–premotor connectivity than non-musicians [28]. Musicians also showed greater auditory–premotor connectivity than non-musicians during passive listening, when no movement was required [32]. Thus, beat perception increases functional connectivity both within the motor system and between motor and auditory systems. One hypothesis is that increased auditory–premotor connectivity might be important for integrating auditory perception with a motor response [8,28], and perhaps this occurs even if the response is not executed. Interestingly, this hypothesis has been confirmed using EEG and MEG techniques, as reviewed below, suggesting a fundamental role of the audio–motor system in beat perception and synchronization.

Beat perception unfolds over time: initially, when a rhythm is first heard, the beat must be discovered. After beat-finding occurs, an internal representation of the beat rate can be formed, allowing prediction of future beats as the rhythm continues. Two fMRI studies have attempted to determine whether the role of the basal ganglia is finding the beat, predicting future beats, or both [33,34]. In the first study, participants heard multiple rhythms in a row that either did or did not have a beat. Putamen activity was low during the initial presentation of a beat-based rhythm, during which participants were engaged in finding the beat. Activity was high when beat-based rhythms followed one after the other, during which participants had a strong sense of the beat, suggesting that the putamen is more involved in predicting than finding the beat. The suggestion that the putamen and SMA are involved in maintaining an internal representation of beat intervals is supported by findings of greater putamen and SMA activation during the continuation phase, and not the synchronization phase, during synchronization–continuation tasks [35,57] similar to those described for macaques in the next section [17]. Patients with SMA lesions also show a selective deficit in the continuation phase but not the synchronization phase of the synchronization–continuation task [58]. However, a second fMRI study compared activation during the initial hearing of a rhythm, during which participants were engaged in beat-finding, to subsequent tapping of the beat as they heard the rhythm again. In contrast to the previous study, putamen activity was similar during finding and synchronized tapping [34]. These different fMRI findings may result from different experimental paradigms, stimuli, or analyses, but more research will be needed to determine the role of the basal ganglia in beat-finding and beat prediction.

In summary, a network of motor and auditory areas responds not only during tapping in synchrony with a beat, but also during perception of sequences that have a beat, to which one could synchronize, or entrain. Beat perception elicits greater activity than perception of rhythms without a beat in a subset of these motor areas, including the putamen and SMA. Motor and auditory areas also exhibit greater coupling during synchronization to and perception of the beat. A viable mechanism for this coupling may be oscillatory responses, not directly measurable with fMRI, but readily observed using EEG or MEG (discussed below).

3. Oscillatory mechanisms underlying rhythmic behaviour in humans: evidence from EEG and MEG

The perception of a regular beat in music can be studied in human adults and newborns [6,59], as well as in non-human primates [60], using a particular event-related brain potential (ERP) called mismatch negativity (MMN). MMN is a preattentive brain response reflecting cortical processing of rare unexpected (‘deviant’) events in a series of ongoing ‘standard’ events [61]. Using a complex repeating beat pattern, MMN was observed in human adults in response to changes in the pattern at strong beat locations, suggesting extraction of metrical beat structure. Similar results were found in newborn infants, but unfortunately the stimuli used with infants confounded MMN responses to beat changes with a general response to a change in the number of instruments sounding [6], so future studies are needed with newborns in order to verify these results. Honing et al. [60] also recorded ERPs from the scalp of macaque monkeys. This study demonstrated that an MMN-like ERP component could be measured in rhesus monkeys, both for pitch deviants and unexpected omissions from an isochronous tone sequence. However, the study also showed that macaques are not able to detect the beat induced by a varying complex rhythm, while being sensitive to the rhythmic grouping structure. Consequently, these results support the notion of different neural networks being active for interval- and beat-based timing, with a shared interval-based timing mechanism across primates as discussed in the Introduction [7]. A subsequent oddball behavioural study gave additional support for the idea that monkeys can extract temporal information from isochronous beat sequences but not from complex metrical stimuli. This study showed that macaques can detect deviants when they occur in a regular (isochronous) rather than an irregular sequence of tones, by showing changes of gaze and facial expressions to these deviants [62].
However, the authors found that the sensitivity for deviant detection is more developed in humans than monkeys. Humans detect deviants with high accuracy not only for the isochronous tone sequences but also in more complex sequences (with an additional sound with a different timbre), whereas monkeys do not show sensitivity to deviants under these conditions [62]. Overall, these findings suggest that monkeys have some capabilities for beat perception, particularly when the stimuli are isochronous, corroborating the hypothesis that the complex entrainment abilities of humans have evolved gradually across primates, with a primordial beat-based mechanism already present in macaques [7].

The MMN has also been used to examine another interesting feature of musical rhythm perception, namely that across cultures the basic beat tends to be laid down by bass-range instruments. Hove et al. [63] showed that when two tones are played simultaneously in a repeated isochronous rhythm, deviations in the timing of the lower-pitched tone elicit a larger MMN response compared with deviations in the timing of the higher-pitched tone. Furthermore, the results are consistent with behavioural responses. When one tone in an otherwise isochronous sequence occurs too early, and people are tapping along to the beat, they tend to tap too early on the tone following the early one, presumably as a form of error correction. Hove et al. [63] found that people adjusted the timing of their tapping more when the lower tone was too early compared with when the higher tone was too early. Finally, using a computer model of the peripheral auditory system, these authors showed that this low-voice superiority effect for timing has a peripheral origin in the cochlea of the inner ear. Interestingly, this effect is opposite to that for detecting pitch changes in two simultaneous tones or melodies [64,65], where larger MMN responses are found for pitch deviants in the higher than in the lower tone or melody, an effect found in infants as well as adults [66,67]. The high-voice superiority effect also appears to depend on nonlinear dynamics in the cochlea [68]. It remains unknown whether macaques also show a high-voice superiority effect for pitch and a low-voice superiority effect for timing.

In humans, extracting the beat structure from musical rhythms also propels us to move in time to the extracted beat. In order to move in time to a musical rhythm, the brain must extract the regularity in the incoming temporal information and predict when the next beat will occur. There is increasing evidence across sensory systems, using a range of tasks, that predictive timing involves interactions between sensory and motor systems, and that these interactions are accomplished by oscillatory behaviour of neuronal circuits across a number of frequency bands, particularly the delta (1–3 Hz) and beta (15–30 Hz) bands [69–72]. In general, oscillatory behaviour is thought to reflect communication between different brain regions and, in particular, influences of endogenous ‘top-down’ processes of attention and expectation on perception and response selection [73–75]. Importantly, such oscillatory behaviour can be measured in humans using EEG and MEG.

The optimal range for the perception of musical tempos is 1–3 Hz [76], which coincides with the frequency range of neural delta oscillations. Indeed, neural oscillations in the delta range have been shown to phase align with the tempo of incoming stimuli for musical rhythms [77,78] as well as for stimuli with less regular rhythms, such as speech [79]. Several researchers have recently suggested that temporal prediction is actually accomplished in the motor system, perhaps through some sort of movement simulation [69,80–83], and that this information feeds back to sensory areas (corollary discharges or efference copies) to enhance processing of incoming information at particular points in time. In particular, temporal expectations appear to align the phase of delta oscillations in sensory cortical areas, such that stimuli that happen to be presented at large delta oscillation peaks are processed more quickly [72] and more accurately [80] than stimuli presented at other times. This phase alignment of delta rhythms appears to provide a neural instantiation of the dynamic attending theory proposed by Large & Jones [3], whereby attention is drawn to particular points in time, and stimulus processing at those points is enhanced.

Metrical structure is derived in the brain based not only on periodicities in the input rhythm but also on expectations for regularity. Thus, in isochronous sequences of identical tones, some tones (e.g. every second or every third tone) can be perceived as accented. Moreover, beat locations can be perceived as metrically strong even in the presence of syncopation, in which the most prominent physical stimuli occur off the beat. Interestingly, phase alignment of delta oscillations in auditory cortex reflects the metrical interpretation of input rhythms rather than simply the periodicities in the stimulus [77,78], as predicted by resonance theory [84]. For example, when listeners are presented with a 2.4 Hz sequence of tones, a component at 2.4 Hz can be seen in the measured EEG response [77]. But when listeners imagine strong beats every second tone, oscillatory activity in the EEG at 1.2 Hz is also evident, whereas when they imagine strong beats every third tone, EEG oscillations at 0.8 and 1.6 Hz (harmonic of the ternary metre) are evident.
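
The arithmetic behind these metre-related frequencies can be sketched in a few lines of Python. This is only an illustration, under the assumption that imagining a strong beat every n-th tone adds components at the tone rate divided by n and at integer multiples of that frequency below the tone rate; the function name is hypothetical.

```python
def metre_frequencies(tone_rate_hz, group_size):
    """Frequencies (Hz) expected for an imagined metre.

    Assumption for illustration: accenting every `group_size`-th tone adds
    a component at tone_rate / group_size plus its harmonics that lie
    below the tone rate itself.
    """
    if tone_rate_hz <= 0 or group_size < 2:
        raise ValueError("tone rate must be positive and group size at least 2")
    beat_hz = tone_rate_hz / group_size
    # Harmonics k * beat_hz for k = 1 .. group_size - 1 stay below the tone rate.
    return [round(beat_hz * k, 3) for k in range(1, group_size)]


tone_rate = 2.4  # Hz, the tone sequence rate quoted above
print("binary metre:", metre_frequencies(tone_rate, 2))
print("ternary metre:", metre_frequencies(tone_rate, 3))
```

For the 2.4 Hz sequence this yields 1.2 Hz for the binary interpretation and 0.8 and 1.6 Hz for the ternary interpretation, matching the values given in the text.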

Neural oscillations in the beta range have also been shown to play an important role in predictive timing [70,71,85]. For example, Iversen et al. [85] showed that beta but not gamma (30–50 Hz) oscillations evoked by tones in a repeating pattern were affected by whether listeners imagined them as being on strong or weak beats. Interestingly, beta oscillations have long been associated with motor processes [86]. For example, beta amplitude decreases during motor planning and movement, recovering to baseline once the movement is completed [87–91].

Of importance in the present context are the findings that beta oscillations are crucial for predictive timing in auditory beat processing and that beta oscillations involve interactions between auditory and motor regions [70,71]. Fujioka et al. [71] recorded the MEG while participants listened without attending (while watching a silent movie) to isochronous beat sequences at three different tempos in the delta range (2.5, 1.7 and 1.3 Hz). Examining responses from auditory cortex, they found that the power of induced (non-phase-locked) activity in the beta-band decreased after the onset of each beat and reached a minimum at approximately 200 ms after beat onset regardless of tempo. However, the timing of the beta power rebound depended on the tempo, such that maximum beta-band power was reached just prior to the onset of the next beat (figure 2). Thus, the beta rebound tracked the tempo of the beat and predicted the onset of the following beat. While little developmental work has yet been done, one recent study indicates that similar beta-band fluctuations can be seen in children, at least for slower tempos [92]. Another study [70] found that if a beat in a sequence is omitted, the decrease in beta power does not occur, suggesting that the decrease in beta power might be tied to the sensory input, whereas the rebound in beta power is predictive of the expected onset of the next beat and is internally generated. The results suggest, further, that oscillations in beta and delta frequencies are connected, with beta power fluctuating according to the phase of delta oscillations, consistent with previous work [93].

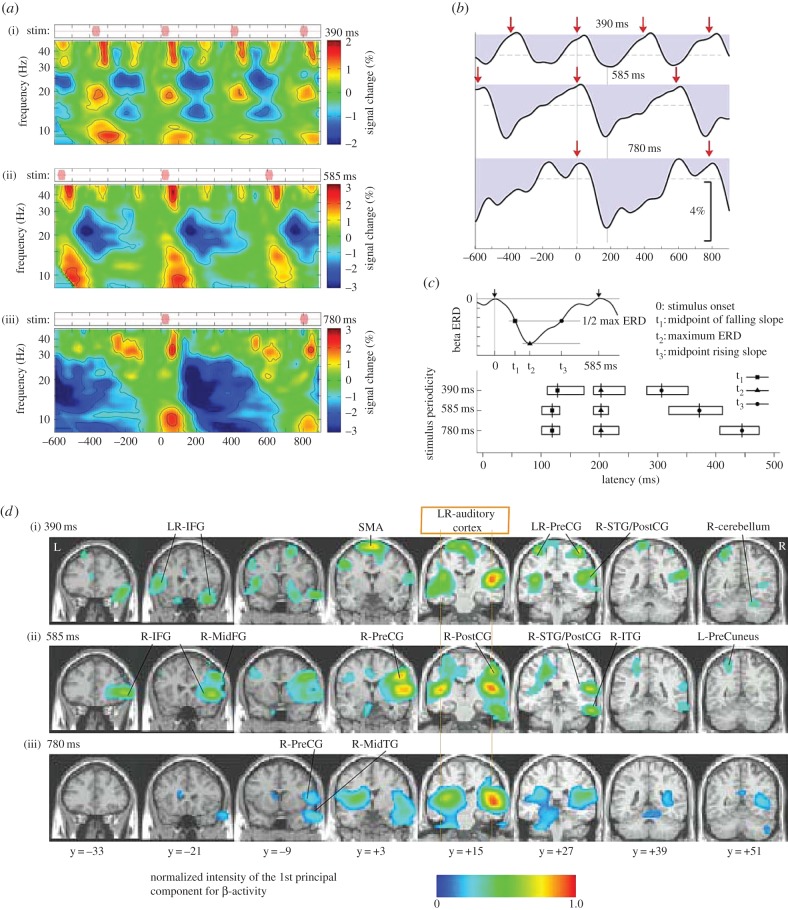

Figure 2.

Induced neuromagnetic responses to isochronous beat sequences at three different tempos. (a) Time-frequency plots of induced oscillatory activity in right auditory cortex between 10 and 40 Hz in response to a fast (390 ms onset-to-onset; upper plot), moderate (585 ms; middle plot) and slow (780 ms; lower plot) tempo (n = 12). (b) The time courses of oscillatory activity in the beta-band (20–22 Hz) for the three tempos, showing beta desynchronization immediately after stimulus onset (shown by red arrows), followed by a rebound with timing predictive of the onset of the next beat. The dashed horizontal lines indicate the 99% confidence limits for the group mean. (c) The time at which the beta desynchronization reaches half power (squares) and minimum power (triangles), and the time at which the rebound (resynchronization) reaches half power (circles). The timing of the desynchronization is similar across the three stimulus tempos, but the time of the rebound resynchronization depends on the stimulus tempo in a predictive manner. (d) Areas across the brain in which beta-activity is modulated by the auditory stimulus, showing involvement of both auditory cortices and a number of motor regions. (Adapted from Fujioka et al. [71].)
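
As a rough check on these numbers, the onset-to-onset intervals in the caption can be converted to beat rates. The short Python sketch below does only this arithmetic; the function name is hypothetical, and the 1–3 Hz bounds are simply the delta range quoted in the text, not part of the original analysis.

```python
def ioi_ms_to_hz(ioi_ms):
    """Convert an onset-to-onset interval in milliseconds to a rate in Hz."""
    if ioi_ms <= 0:
        raise ValueError("interval must be positive")
    return 1000.0 / ioi_ms


DELTA_RANGE_HZ = (1.0, 3.0)  # delta band as quoted in the text

for ioi in (390, 585, 780):  # fast, moderate and slow tempos from the caption
    rate = ioi_ms_to_hz(ioi)
    in_delta = DELTA_RANGE_HZ[0] <= rate <= DELTA_RANGE_HZ[1]
    print(f"{ioi} ms -> {rate:.2f} Hz (within delta range: {in_delta})")
```

The three intervals correspond to about 2.56, 1.71 and 1.28 Hz, all inside the delta range and close to the rounded tempos given in the main text.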

To examine the role of the motor cortex in beta power fluctuations, Fujioka et al. [71] looked across the brain for regions that showed fluctuations in beta power similar to those in the auditory cortices and found that this pattern of beta power fluctuation occurred across a wide range of motor areas. Interestingly, the beta power fluctuations appear to be in opposite phase in auditory and motor areas, which suggests that the activity might reflect some kind of sensorimotor loop connecting auditory and motor regions. This is consistent with a recent study in the mouse showing that axons from M2 synapse with neurons in deep and superficial layers of auditory cortex, and have a largely inhibitory effect on auditory cortex [94]. This is interesting in that interactions between excitatory and inhibitory neurons often give rise to neural oscillations [95]. Furthermore, axons from these same M2 neurons also extend to several subcortical areas important for auditory processing. Finally, Nelson et al. [94] found that stimulation of M2 neurons affected the activity of neurons in auditory cortex. Thus, the connections described by Nelson et al. [94] probably reflect the circuits giving rise to the delta-frequency oscillations in beta-band power described by Fujioka et al. [71]. Because the task of Fujioka et al. [71] involved listening without attention or motor movement, the results indicate that motor involvement in beat processing is obligatory and may provide a rationale for why music makes people want to move to the beat.

In sum, research is converging on the view that auditory and motor regions connect through oscillatory activity, particularly at delta and beta frequencies, with motor regions providing the predictive timing needed for the perception of, and entrainment to, musical rhythms.

4. Neurophysiology of rhythmic behaviour in monkeys

The study of the neural basis of beat perception in monkeys, and its comparison with humans, has begun only recently; the first attempts are the MMN experiments in macaques described previously. As far as we know, no neurophysiological study has been performed on a beat perception task in behaving monkeys. By contrast, a complete set of single cell and LFP experiments has been carried out in monkeys during the execution of the SCT. A critical aspect of these studies is that they have been performed in cortical and subcortical areas of the circuit for beat perception and synchronization described in fMRI and MEG studies in humans, specifically in the SMA/pre-SMA, as well as in the putamen of behaving monkeys. These areas are deeply interconnected and are fundamental processing nodes of the motor cortico-basal-ganglia–thalamo-cortical (mCBGT) circuit across all primates [96]. Hence, direct inferences of the functional associations between the different levels of organization measured in this circuit with diverse techniques can be performed across species. This is particularly true for data collected during the synchronization phase of the SCT in monkeys and for human studies of beat synchronization. In addition, cautious generalizations can also be made to the beat perception experiments in humans, as the mechanisms of beat perception seem to overlap substantially with those of beat synchronization, as described in the previous two sections.

The signals recorded from extracellular recordings in behaving animals can be filtered to obtain either single cell action potentials or LFPs. Action potentials last approximately 1 ms and are emitted by cells in spike trains, whereas LFPs are complex signals determined by the input activity of an area in terms of population excitatory and inhibitory postsynaptic potentials [97]. Hence, LFPs and spike trains can be considered as the input and output stages of information processing, respectively. Furthermore, LFPs are a local version of the EEG that is not distorted by the meninges and the scalp, and they provide important information about the input–output processing inside local networks [97]. The analysis of putaminal LFPs in monkeys performing the SCT revealed an orderly change in the power of transient modulations in the gamma (30–70 Hz) and beta (15–30 Hz) bands as a function of the duration of the intervals produced in the SCT (figure 3) [98]. The bursts of LFP oscillations showed different preferred intervals so that a range of recording locations represented all the tested durations. These results suggest that the putamen contains a representation of interval duration, where different local cell populations oscillate in the beta- or gamma-bands for specific intervals during the SCT. Therefore, the transient modulations in the oscillatory activity of different cell ensembles in the putamen as a function of tempo can be part of the neural underpinnings for beat synchronization. Indeed, LFPs tuned to the interval duration in the synchronization phase of the SCT could be considered an empirical substrate of the neural resonance hypothesis, which suggests that perception of pulse and metre results from rhythmic bursts of high-frequency neural activity in response to music [76].
Accordingly, high-frequency bursts in the gamma- and beta-bands may enable communication between neural areas in humans, such as auditory and motor cortices, during rhythm perception and production, as mentioned previously [99,100].
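As an illustration of how power in the two bands named above can be separated, the following minimal sketch computes band power from a discrete Fourier transform of a synthetic signal. The sinusoidal test signals, the 500 Hz sampling rate and the function names are hypothetical and are not taken from the recordings in [98]; assigning the shared 30 Hz edge to the gamma-band is likewise an assumption made here for definiteness.

```python
import cmath
import math

# Band limits quoted in the text; the 30 Hz edge is assigned to gamma (assumption).
BETA_BAND_HZ = (15.0, 30.0)
GAMMA_BAND_HZ = (30.0, 70.0)


def band_power(signal, sampling_rate_hz, band_hz):
    """Sum the squared, normalized DFT magnitudes for frequencies in [low, high)."""
    n = len(signal)
    low, high = band_hz
    power = 0.0
    for k in range(1, n // 2):
        freq = k * sampling_rate_hz / n
        if low <= freq < high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal)
            )
            power += abs(coeff) ** 2 / n ** 2
    return power


def synthetic_lfp(burst_hz, sampling_rate_hz=500.0, duration_s=1.0):
    """Hypothetical noiseless 'LFP': a pure sinusoid at burst_hz."""
    n = int(sampling_rate_hz * duration_s)
    return [math.sin(2 * math.pi * burst_hz * t / sampling_rate_hz) for t in range(n)]


beta_burst = synthetic_lfp(20.0)    # oscillation inside the beta-band
gamma_burst = synthetic_lfp(45.0)   # oscillation inside the gamma-band

beta_in_beta = band_power(beta_burst, 500.0, BETA_BAND_HZ)
beta_in_gamma = band_power(beta_burst, 500.0, GAMMA_BAND_HZ)
gamma_in_gamma = band_power(gamma_burst, 500.0, GAMMA_BAND_HZ)
```

In practice, time-resolved spectrograms such as those in figure 3 require windowed or wavelet-based estimates; this sketch only shows the band-separation step on a stationary signal.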

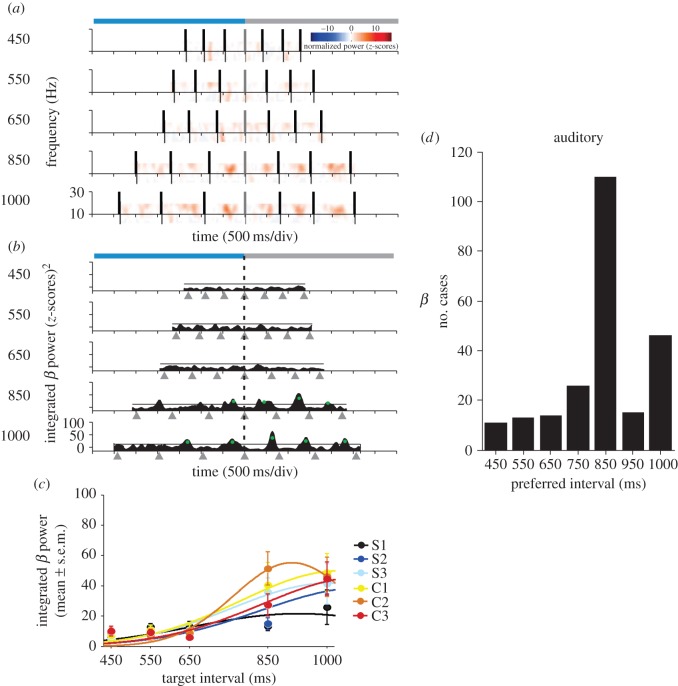

Figure 3.

Time-varying modulations in the beta power of an LFP signal that show selectivity to interval duration. (a) Normalized (z-scores, colour-code) spectrograms in the beta-band. Each plot corresponds to the target interval indicated on the left. The horizontal axis is the time during the performance of the task. The times at which the monkey tapped on the push-button are indicated by black vertical bars, and all the spectrograms are aligned to the last tap of the synchronization phase (grey vertical bar). Light-blue and grey horizontal bars at the top of the panel represent the synchronization and continuation phases, respectively. (b) Plots of the integrated power time-series for each target duration. Tap times are indicated by grey triangles below the time axis. Green dots correspond to power modulations above the 1 s.d. threshold (black solid line) for a minimum of 50 ms across trials. The vertical dotted line indicates the last tap of the synchronization phase. (c) Interval tuning in the integrated beta power. Dots are the mean ± s.e.m. and lines correspond to the fitted Gaussian functions. Tuning functions were calculated for each of the six elements of the task sequence (S1–S3 for synchronization and C1–C3 for continuation) and are colour-coded (see inset). (d) Distribution of preferred interval durations for all the recorded LFPs in the beta-band with significant tuning for the auditory condition. (Adapted from Bartolo et al. [98].)

In a series of elegant studies, Schroeder and colleagues have shown that when attention is allocated to auditory or visual events in a rhythmic sequence, delta oscillations of primary visual and auditory cortices of monkeys are entrained (i.e. phase-locked) to the attended modality [72,101]. As a result of this entrainment, the neural excitability and spiking responses of the circuit tend to coincide with the attended sensory events [72]. Furthermore, the magnitude of the spiking responses and the reaction time of monkeys responding to the attended modality in the rhythmic patterns of visual and auditory events are correlated with the delta phase entrainment on a trial-by-trial basis [72,101]. Finally, attentional modulation for one of the two modalities is accompanied by large-scale neuronal excitability shifts in the delta band across a large circuit of cortical areas in the human brain, including primary sensory and multisensory areas, as well as premotor and prefrontal areas [74]. Therefore, these authors raised the hypothesis that the attentional modulation of delta phase-locking across a large cortical circuit is a fundamental mechanism for sensory selection [102]. Delta entrainment across this large cortical circuit would therefore be expected during the SCT; however, the putaminal LFPs in monkeys did not show modulations in the delta band during this task. It is known that delta oscillations have their origin in thalamo-cortical interactions [103] and that they play a critical role in the long-range interactions between cortical areas in behaving monkeys [104]. Hence, a possible explanation for this apparent discrepancy is that delta oscillations are part of the mechanism of information flow within cortical areas but not across the mCBGT circuit, which in turn could use interval tuning in the beta- and gamma-bands to represent the beat.
Subcortical recordings in the relay nuclei of the mCBGT circuit in humans performing beat perception and synchronization could test this hypothesis.
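The notion of phase-locking used in this paragraph can be made concrete with a minimal sketch. It samples the phase of an idealized oscillation at a set of event times and computes the mean resultant length, which is 1 when every event falls at the same phase and 0 when phases are spread evenly around the cycle. The 1.25 Hz frequency, the event times and the function name are hypothetical; the cited studies estimate phase from recorded signals rather than from an ideal sinusoid.

```python
import cmath
import math


def phase_locking(event_times_s, oscillation_hz):
    """Mean resultant length of the oscillation phase sampled at the event times."""
    vectors = [
        cmath.exp(1j * 2 * math.pi * oscillation_hz * t)
        for t in event_times_s
    ]
    return abs(sum(vectors) / len(vectors))


delta_hz = 1.25  # hypothetical delta-band frequency (800 ms period)

# One event per oscillation cycle: every event lands on the same phase.
entrained_events = [0.8 * k for k in range(8)]

# Four events per cycle: phases fall at 0, 90, 180 and 270 degrees and cancel.
spread_events = [0.2 * k for k in range(8)]

locked = phase_locking(entrained_events, delta_hz)
unlocked = phase_locking(spread_events, delta_hz)
```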

Surprisingly, the transient changes in oscillatory activity also showed an orderly change as a function of the phase of the task, with a strong bias towards the synchronization phase for the gamma-band (when aligned to the stimuli), whereas there is a strong bias towards the continuation phase for the beta-band (when aligned to the tapping movements) [98]. These results are consistent with the notion that gamma-band oscillations predominate during sensory processing [105] or when changes in the sensory input or cognitive set are expected [86]. Thus, the local processing of visual or auditory cues in the gamma-band during the SCT may serve for binding neural ensembles that process sensory and motor information within the putamen during beat synchronization [98]. However, these findings seem to be at odds with the role of the beta oscillations in connecting the predictive timing signals from human motor regions to auditory areas needed for the perception of, and entrainment to, musical rhythms. In this case, it is also critical to consider that gamma oscillations in the putamen during beat synchronization seem to be local and more related to sensory cue selection for the execution of movement sequences [106,107], rather than associated with the establishment of a top-down predictive signal between motor and auditory cortical areas.

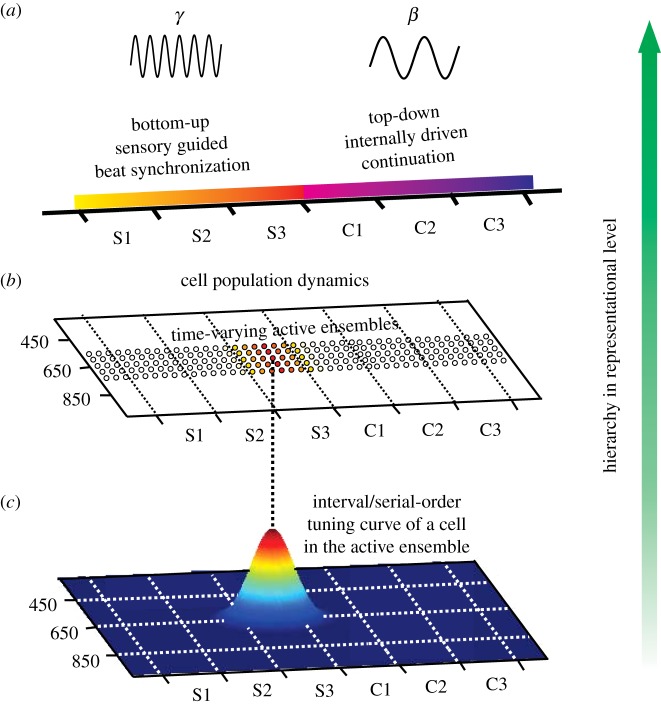

The beta-band activity in the putamen of non-human primates showed a preference for the continuation phase of the SCT, which is characterized by the internal generation of isochronic movements. Consequently, the global beta oscillations could be associated with the maintenance of a rhythmic set and the dominance of an endogenous reverberation in the mCBGT circuit, which in turn could generate internally timed movements and override the effects of external inputs (figure 4) [98]. Comparing the experiments in humans and macaques, it is clear that in both species the beta-band is deeply involved in prediction and the internal set linked with the processing of regular events (isochronic or with a particular metric) across large portions of the brain.

Figure 4.

Multiple layers of neuronal representation for duration, serial order and context in which the synchronization–continuation task is performed. (a) LFP activity that switches from gamma-activity during sensory driven (bottom-up) beat synchronization, to beta-activity during the internally generated (top-down) continuation phase. (b) Dynamic representation of the sequential and temporal structure of the SCT. Small ensembles of interconnected cells are activated in a consecutive chain of neural events. (c) A duration/serial-order tuning curve for a cell that is part of the dynamically activated ensemble on top. Dotted horizontal line links the tuning curve of the cell with the neural chain of events in (b). (Adapted from Merchant et al. [92,108–110].)

The functional properties of cell spiking responses in the putamen and SMA were also characterized in monkeys performing the SCT. Neurons in these areas show a graded modulation in discharge rate as a function of interval duration in the SCT [98,108,109,111,112]. Psychophysical studies on learning and generalization of time intervals predicted the existence of cells tuned to specific interval durations [113,114]. Indeed, large populations of cells in both areas of the mCBGT are tuned to different interval durations during the SCT, with a distribution of preferred intervals that covers all durations in the hundreds of milliseconds, although there was a bias towards long preferred intervals [109] (figure 3d). The bias towards the 800 ms interval in the neural population could be associated with the preferred tempo in rhesus monkeys, similar to what has been reported in humans for the putamen activation at the preferred human tempo of 500 ms [46,47]. Thus, the interval tuning observed in the gamma and beta oscillatory activity of putaminal LFPs is also present in the discharge rate of neurons in the SMA and the putamen, and quite probably across all the mCBGT circuit. The tuning association between spiking activity and LFPs is not always obvious across the central nervous system, because the latter is a complex signal that depends on the following factors: the input activity of an area in terms of population excitatory and inhibitory postsynaptic potentials; the regional processing of the microcircuit surrounding the recording electrode; the cytoarchitecture of the studied area; and the temporally synchronous fluctuations of the membrane potential in large neuronal aggregates [97]. Consequently, the fact that interval tuning is ubiquitous across areas and neural signals during the SCT underlines the role of this population signal in encoding tempo during beat synchronization and probably during beat perception too.
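To illustrate what interval tuning means operationally, the sketch below fits a Gaussian tuning function to the discharge rate of a single simulated cell and reads off its preferred interval. The target intervals, the noiseless simulated rates and the grid-search fitting procedure are all assumptions made for illustration; they are not the fitting method of the cited studies.

```python
import math


def gaussian_tuning(interval_ms, preferred_ms, width_ms, peak_rate):
    """Discharge rate of a cell tuned to preferred_ms with tuning width width_ms."""
    return peak_rate * math.exp(-0.5 * ((interval_ms - preferred_ms) / width_ms) ** 2)


def fit_tuning(intervals_ms, rates, preferred_grid, width_grid):
    """Least-squares grid search; the peak rate is solved in closed form per candidate."""
    best = None
    for preferred in preferred_grid:
        for width in width_grid:
            shape = [gaussian_tuning(x, preferred, width, 1.0) for x in intervals_ms]
            denom = sum(g * g for g in shape)
            if denom == 0.0:
                continue
            peak = sum(r * g for r, g in zip(rates, shape)) / denom
            error = sum((r - peak * g) ** 2 for r, g in zip(rates, shape))
            if best is None or error < best[0]:
                best = (error, preferred, width, peak)
    return {"preferred_ms": best[1], "width_ms": best[2], "peak_rate": best[3]}


# Hypothetical target intervals and a hypothetical cell that prefers 850 ms.
intervals_ms = [450, 550, 650, 850, 1000]
rates = [gaussian_tuning(x, 850, 150, 30.0) for x in intervals_ms]

fit = fit_tuning(intervals_ms, rates, range(400, 1101, 50), range(50, 401, 50))
```

Repeating such a fit across cells yields a distribution of preferred intervals of the kind described in the text.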

Beat perception and synchronization not only have a predictive timing component, but also are immersed in a sequence of sensory and motor events. In fact, the regular and repeated cycles of sound and movement during beat synchronization can be fully described by their sequential and temporal information. The neurophysiological recordings in SMA underscored the importance of sequential encoding during the SCT. A large population of cells in this area showed response selectivity to the sequential organization of the SCT, namely, they were tuned to one of the six serial-order elements of the SCT (three in the synchronization and three in the continuation phase; figure 3c) [109]. Again, all the possible preferred serial orders are represented across cell populations. Cell tuning is an encoding mechanism used by the cerebral cortex to represent different sensory, motor and cognitive features [115], which include the duration of the intervals and the serial order of movements produced rhythmically. These signals must be integrated as a population code, where the cells can vote in favour of their preferred interval/serial order to generate a neural ‘tag’. Hence, the temporal and sequential information is multiplexed in a cell population signal across the mCBGT that works as the notes of a musical score in order to define the duration of the produced interval and its position in the learned SCT sequence [111].
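The idea that tuned cells ‘vote’ for their preferred interval can be sketched as a rate-weighted average over a population. The read-out rule, the evenly spaced preferred intervals and the 100 ms tuning width below are hypothetical choices for illustration, not the decoding algorithm used in the cited work, and the sketch covers interval duration only, not serial order.

```python
import math


def population_vote(preferred_ms, rates):
    """Each cell votes for its preferred interval, weighted by its discharge rate."""
    total = sum(rates)
    return sum(p * r for p, r in zip(preferred_ms, rates)) / total


def population_response(interval_ms, preferred_ms, width_ms=100.0):
    """Gaussian-tuned responses of every cell to one produced interval."""
    return [
        math.exp(-0.5 * ((interval_ms - p) / width_ms) ** 2)
        for p in preferred_ms
    ]


# Hypothetical population: 15 cells with preferred intervals from 400 to 1100 ms.
preferred_ms = list(range(400, 1101, 50))

decoded_750 = population_vote(preferred_ms, population_response(750, preferred_ms))
decoded_600 = population_vote(preferred_ms, population_response(600, preferred_ms))
```

With this read-out, an interval at the centre of the represented range is recovered exactly, whereas intervals near the edge are pulled slightly towards the centre because fewer cells are available on one side.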

Interestingly, the multiplexed signal for duration and serial order is quite dynamic. Using encoding and decoding algorithms in a time-varying fashion, it was found that SMA cell populations represent the temporal and sequential structure of periodic movements by activating small ensembles of interconnected neurons that encode information in rapid succession, so that the pattern of active tuned neurons changes dramatically within each interval (figure 4b, top) [110]. The progressive activation of different ensembles generates a neural ‘wave’ that represents the complete sequence and duration of produced intervals during an isochronic tapping task such as the SCT [110]. This potential anatomofunctional arrangement should include the dynamic flow of information inside the SMA and through the loops of the mCBGT. Thus, each neuronal pool represents a specific value of both features and is tightly connected, through feed-forward synaptic connections, to the next pool of neurons, as in the case of synfire chains described in neural-network simulations [116].

A key feature of the SMA neural encoding and decoding for duration and serial order is that its width increased as a function of the target interval during the SCT [110]. The time window in which tuned cells represent serial order, for example, is narrow for short interval durations and becomes wider as the interval increases [110]. Thus, duration and serial order are represented in relative terms in SMA, which as far as we know, is the first single cell neural correlate of beat-based timing and is congruent with the functional imaging studies in the mCBGT circuit described above [12].
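A toy example may help to show what representation ‘in relative terms’ implies. If a fixed number of neuronal pools tile each produced interval, the window of each pool widens with the interval and the same pool is active at the same fraction of the interval at every tempo. The fixed pool count and the strictly linear scaling are simplifying assumptions; the source states only that the window widens as the interval increases.

```python
def window_width_ms(interval_ms, n_pools=10):
    """Width of each pool's active window when n_pools tile the interval evenly."""
    return interval_ms / n_pools


def active_pool(elapsed_ms, interval_ms, n_pools=10):
    """Index of the pool active at elapsed_ms after the previous tap."""
    index = int(elapsed_ms // window_width_ms(interval_ms, n_pools))
    return min(index, n_pools - 1)  # clamp the end point of the interval


# Halfway through a short and a long interval, the same pool is active.
pool_short = active_pool(225, 450)
pool_long = active_pool(500, 1000)
```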

The investigation of the neural correlates of beat perception and synchronization in humans has stressed the importance of the top-down predictive connection between motor areas and the auditory cortex. A natural next step in the monkey neurophysiological studies should be the simultaneous recording of premotor and auditory areas during tapping synchronization and beat perception tasks. Initial efforts in this direction have begun. However, it is important to emphasize that monkeys show a large bias towards visual rather than auditory cues to drive their tapping behaviour [7,16,117], which contrasts with the strong bias towards the auditory modality in humans during music and dance [1,7]. In fact, during the SCT, humans show smaller temporal variability and better accuracy with auditory rather than visual interval markers [54–56]. It has been suggested that the human perceptual system abstracts the rhythmic-temporal structure of visual stimuli into an auditory representation that is automatic and obligatory [118,119]. Thus, it is quite possible that the human auditory system has a privileged access to the temporal and sequential mechanisms working inside the mCBGT circuit in order to determine the exquisite rhythmic abilities of Homo sapiens [7,21]. The monkey brain seems not to have this strong audio-motor network, as revealed in comparative diffusion tensor imaging (DTI) experiments [96,120]. Hence, it is likely that the audio-motor circuit is less important than the visuo-motor network in monkeys during beat perception and synchronization. The tuning properties of monkey SMA cells multiplexing the temporal and sequential structure of the SCT were very similar across visual and auditory metronomes [109].
However, unpublished observations have shown that a larger number of SMA cells respond specifically to visual rather than to auditory cues during the SCT [121], giving the first support for a stronger visuo-motor association in monkeys during rhythmic behaviours. A final point regarding the modality issue is that human subjects improve their beat synchronization when static visual cues are replaced by moving visual stimuli [52,122], stressing the need to use moving visual stimuli to cue beat perception and synchronization in non-human primates [22,96].

Taken together, the discussed evidence supports the notion that the underpinnings of beat synchronization are intrinsically linked to the dynamics of cell populations tuned for duration and serial order throughout the mCBGT, which represent information in relative- rather than absolute-timing terms. Furthermore, the oscillatory activity of the putamen measured with LFPs showed that gamma-activity reflects local computations associated with sensory–motor (bottom-up) processing during beat synchronization, whereas beta-activity involves the entrainment of large putaminal circuits, probably in conjunction with other elements of the mCBGT, during internally driven (top-down) rhythmic tapping. A critical question regarding these empirical observations is how the different levels of neural representation interact and are coordinated during beat perception and synchronization. One approach to answering this question would involve integrating the data reviewed above with the results obtained from neurally grounded computational models.

5. Implications for computational models of beat induction

In the past decades, a considerable variety of models have been developed that are relevant in some way to the core neural and comparative issues in rhythm perception discussed above. Broadly speaking, they differ with respect to their purpose and the level of cognitive modelling (in David Marr's sense of algorithmic, computational and implementational levels [123]). These models include rule-based cognitive models, musical information retrieval (MIR) systems designed to process and categorize music files, and dynamical-systems models. Although we make no attempt to review all such models here in detail, we will make a few general observations about the usefulness of each model type for our central problem in this review: understanding the neural basis and comparative distribution of rhythmic abilities.

Rule-based models (e.g. [124,125]) are intended to provide a very high-level computational description of what rhythmic cognition entails, in terms of both pulse and metre induction (reviewed in [4]). Such models make little attempt to specify how, computationally or neurally, this is accomplished, but they do provide a clear description of what any neurally grounded model of human beat perception and synchronization and metre perception should be able to explain. Thus, Lerdahl & Jackendoff [124] emphasize the need to attribute both pulse and metre to a musical surface and propose various abstract principles in terms of well-formedness and preference rules to accomplish these goals. Longuet-Higgins & Lee [125] emphasize that any model of musical cognition must be able to cope with syncopated rhythms, in which some musical events do not coincide with the pulse or a series of events appear to conflict with the established pulse.

A central point made by many rule-based modellers concerns the importance of both bottom-up and top-down processes in determining the rhythmic inferences made by a listener [126]. As a simple example, the vast majority of drum patterns in popular music have bass drum hits on the downbeat (e.g. beats 1 and 3 of a 4/4 pattern) and snare hits on the upbeats (beats 2 and 4). While not inviolable, this statistical generalization leads listeners familiar with these genres to have strong expectations about how to assign musical events to a particular metrical position, thus using learned top-down cognitive processes to reduce the inherent ambiguity of the musical surface. Similarly, many genres have particular rhythmic tropes (e.g. the clave pattern typical of salsa music) that, once recognized, provide an immediate orientation to the pulse and metre in this style. This presumably allows experienced listeners to infer pulse and metre more rapidly (e.g. after a single measure) than would be possible for a listener relying solely on bottom-up processing. It would be desirable for models to allow this type of top-down processing to occur and to provide mechanisms whereby learning about a style can influence more stimulus-driven bottom-up processing.

A second class of potential models for rhythmic processing comes from the practical world of MIR systems. Despite their commercial orientation, such models must efficiently solve problems similar to those addressed in cognitively orientated models. Particularly relevant are beat-finding and tempo-estimation algorithms that aim to process recordings and accurately pinpoint the rate and phase of pulses (e.g. [127,128]). Because such models are designed to deal with real recorded music files, successful MIR systems have higher ecological validity than rule-based systems designed to deal only with notated scores or MIDI files. Furthermore, because their goal is practical and performance driven, and backed by considerable research money, engineering expertise and competitive evaluation (such as MIREX), we can expect this range of models to provide a ‘menu’ of systems able to do in practice what any normal human listener easily accomplishes. While we cannot necessarily expect such systems to show any principled connection to the computations humans in fact use to find and track a musical beat, such models should eventually provide insight into which computational approaches and algorithms do and don't succeed, and about which challenges are successfully addressed by which techniques. Some of these findings should be relevant to cognitive and neural researchers. Unfortunately, and perhaps surprisingly, no MIR system currently available can fully reproduce the rhythmic processing capabilities of a normal human listener [128]. At present, this class of models supports the contention that, despite its intuitive simplicity, human rhythmic processing is by no means trivial computationally.

The third class of models—dynamical-systems-based approaches—is most relevant to our purposes in this paper. Such models attempt to be mathematically explicit and to engage with real musical excerpts (not just scores or other symbolic abstractions). As for the other two classes, there is considerable variety within this class, and we will not attempt a comprehensive review. Rather, we will focus on a class of models developed by Edward Large and his colleagues over several decades, which represent the current state of the art for this model category.

Large and his colleagues [3,76,129,130] have introduced and developed a class of mathematical models of beat induction that can reproduce many of the core behavioural characteristics of human beat and metre perception. Because the model of Large [76] has served as the basis for subsequent improvements and is comprehensively and accessibly described, we describe it briefly here. Large's model is based on a set of nonlinear Hopf oscillators. Such oscillators have two main states, damped oscillation and self-sustained oscillation, where an energy parameter alpha determines which of these states the system is in. Sustained oscillation is the state of interest because only in this state can an oscillator maintain an implicit beat in the face of temporary silence or conflicting information (e.g. syncopation). In Large's model [76], a set of such nonlinear Hopf oscillators is assembled into a network in which each oscillator has inhibitory connections with the others. This leads to a network where each oscillator competes with the others for activation, and the winner(s) are determined by the rhythmic input signal fed into the entire network. Practically speaking, the Large model [76] had 96 such oscillators with fixed preferred periods spaced logarithmically from 100 ms (600 BPM) to 1500 ms (40 BPM) and thus covering the effective span of human tempo perception. Because each oscillator has its own preferred oscillation rate and a coupling strength to the input, as well as a specific inhibitory connection to the other N − 1 oscillators, this model overall has more than N² (in this case, more than 9000) free parameters. However, these parameters are mostly set based on a priori considerations of human rhythmic perception (e.g. for preferred pulse rate around 600 ms or the tempo range described above) to avoid ‘tweaking’ parameters to fit a given dataset.
In Large [76], this model was tested using ragtime piano excerpts, and the results compared to experimental data produced by humans tapping to these same excerpts [131]. A good fit was found between human performance and the model's behaviour.
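The two oscillator states and the size of the network described above can be illustrated with a minimal sketch. It uses the canonical Hopf normal form, dz/dt = z(alpha + i·omega − |z|²), whose amplitude r = |z| obeys dr/dt = r(alpha − r²); this textbook form is an assumption standing in for the full equations of [76], which also include input coupling and inhibitory interactions. Function names and numerical settings are hypothetical.

```python
def log_spaced_periods_ms(n=96, shortest_ms=100.0, longest_ms=1500.0):
    """Preferred periods spaced logarithmically between the two limits."""
    ratio = longest_ms / shortest_ms
    return [shortest_ms * ratio ** (k / (n - 1)) for k in range(n)]


def bpm(period_ms):
    """Convert a beat period in milliseconds to beats per minute."""
    return 60000.0 / period_ms


def parameter_count(n):
    """One preferred period and one input coupling per oscillator,
    plus n - 1 inhibitory connections each: n * (n + 1) = n**2 + n."""
    return n * (1 + 1 + (n - 1))


def hopf_amplitude(alpha, r0=0.5, duration_s=20.0, dt_s=0.001):
    """Euler-integrate dr/dt = r * (alpha - r**2) with no input and return r."""
    r = r0
    for _ in range(round(duration_s / dt_s)):
        r += dt_s * r * (alpha - r * r)
    return r


periods = log_spaced_periods_ms()
sustained = hopf_amplitude(alpha=1.0)    # settles at sqrt(alpha): self-sustained
damped = hopf_amplitude(alpha=-1.0)      # decays towards zero: damped
n_parameters = parameter_count(96)       # 9312, i.e. more than 96**2 = 9216
```

The count makes the ‘more than N²’ statement explicit: N(N − 1) inhibitory connections plus 2N oscillator-specific parameters give N² + N.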

Nonlinear oscillator networks of this sort exhibit a number of desirable properties important for any algorithm-level model of human beat and metre perception. First and foremost, the notion of self-sustained oscillation allows the network, once stimulated with rhythmic input, to persist in its oscillation in the face of noise, syncopation or silence. This is a crucial feature for any cognitive model of beat perception that is both robust and predictive. Second, the networks explored by Large and colleagues can exhibit hysteresis (meaning that the current behaviour of the system depends not only on the current input but on the system's past behaviour). Thus, once a given oscillator or group of oscillators is active, they tend to stay that way unless outcompeted by other oscillators, again providing resistance to premature resetting of tempo or phase inference due to syncopation or short-term deviations. Beyond these beat-based factors, these models also provide for metre perception, in that multiple related oscillators can be, and typically are, active simultaneously. Thus, the system as a whole represents not just the tactus (typically tapping rate) but also harmonics or subharmonics of that rate. Finally, these models have been tested and vetted against multiple sources of data, including both behavioural and more recently neural studies, and generally perform quite well at matching the specific patterns in data derived from humans [130].

Despite these many virtues, these models also have certain drawbacks for those seeking to understand the neural basis and comparative distribution of rhythmic capabilities. Most fundamentally, these models are implemented at a rather generic mathematical level. Because they have been designed to mirror cognitive and behavioural data, neither the oscillator nor the network properties have any direct connection to properties of specific brain regions, neural circuits or measurable neuronal properties. For example, both the human preference for a tactus period around 600 ms, and our species' preference for small integer ratios in metre, are hand-coded into the model, rather than deriving from any more fundamental properties of the brain systems involved in rhythm perception (e.g. refractory periods of neurons or oscillatory time constants of cortical or basal ganglia circuits). Large [76, p. 533] explicitly considers this generic mathematical framework, implemented ‘without worrying too much about the details of the neural system’, to be a desirable property. However, it makes the model and its parameters difficult to integrate with the converging body of neuroscientific evidence reviewed above.

Thus, for example, we would like to know why humans (and perhaps some other animals) prefer to have a tactus around 600 ms. Does this follow from some external property of the body (e.g. resonance frequency of the motor effectors) or some internal neural factor (e.g. preferred frequency for cortico-cortical sensorimotor oscillations, or preferred firing rates of basal ganglia neurons)? If the latter, are the observed behavioural preferences a result of properties of the oscillators themselves (as implied in [130]), of the network connectivity [76], or both? Similarly, a neurally grounded model should eventually be able to derive the human preference for small integer ratios in metre perception from some more fundamental neural and/or cognitive constraints (e.g. properties of coupling between cortical and basal ganglia circuits) rather than hard-coding them into the model. Ultimately, human cognitive neuroscientists need models that make more specific testable predictions about the neural circuitry, predictions that can be evaluated using modern brain imaging data.

Finally, the apparently patchy comparative distribution of rhythmic abilities among different animal species remains mysterious from the viewpoint of a generic model that, in principle, applies to the nervous system of any species possessing a set of self-sustaining nonlinear oscillators (e.g. virtually any vertebrate species). Why is it that macaques, despite their many similarities with humans, apparently find it so difficult to predictively time their taps to a beat, and show no evidence for grouping events into metrical structures [7,60]? Why is it that the one chimpanzee (out of three tested) who shows any evidence at all for beat perception and synchronization only does so for a single preferred tempo, and does not generalize this behaviour to other tempos [132]? What is it about the brain of parrots or sea lions that, in contrast to these primates, enables them to quite flexibly entrain to rhythmic musical stimuli [133–136]? Why is it that some very small and apparently simple brains (e.g. in fireflies or crickets) can accomplish flexible entrainment, while those of dogs apparently cannot [137]? To address any of these questions requires models more closely tied to measurable properties of animal brains than those currently available.

Another desideratum for the next generation of models of rhythmic cognition would include more explicit treatment of the motor output component observed in entrainment behaviour, to help understand when and why such movements occur. Humans can tap their fingers, nod their heads, tap their feet, sway their bodies or combine such movements during dancing, and all these output behaviours appear to be to some extent cognitively interchangeable. Musicians can do the same with either their voices or their hands and feet. Does this remarkable flexibility mean that rhythmic cognition is primarily a sensory and central phenomenon, with no essential connection to the details of the motor output? With different species, does it matter that in some species (e.g. non-human primates) we study finger taps as the motor output, whereas in others (e.g. birds or pinnipeds) we use head bobs or beak taps? Could other species do better with a vocal response? To what extent do different models predict that output mode should play an important role in determining success or failure in beat perception and synchronization tasks, or in preferred entrainment tempos? While incorporating a motor component in perceptual models does not pose a major computational challenge, it would allow us to evaluate such issues, and perhaps help understand the different roles of cortical, cerebellar and basal ganglia circuits in human and animal rhythmic cognition.

Several features outlined in the review above could provide fertile inspiration for implementational models at the neural level. First, the several independent loops involved in rhythmic cognition have different fundamental properties. Cortico-cortical loops (e.g. connecting premotor and auditory regions) clearly play an important role in rhythm perception, and a key characteristic of long-range connections in cortex is that they are excitatory—pyramidal cells exciting other pyramidal cells in a feedback loop that in principle can lead to uncontrolled positive feedback and seizure. This is avoided in cortex via local inhibitory neurons. Such inhibitory interneurons in turn provide a target for excitatory feedback projections to ‘sculpt’ ongoing local activity by stimulating local inhibition. This can be conceptualized as high-level motor systems making predictions that bias information processing in ‘lower’ auditory regions, via local inhibitory interneurons that tune local circuit oscillations. In sharp contrast, basal ganglia loops are characterized by pervasive inhibitory connections, where inhibition of inhibition is the rule. The computational character of these two loops is thus fundamentally different, in ways that are likely, if properly modelled, to have predictable consequences in terms of both temporal integration windows and the way in which cortico-cortical and basal ganglia loops interact with and influence one another. The basal ganglia are also a main locus for dopaminergic reward circuitry projections from the midbrain, which inject learning signals into the forebrain loops. Thus, this circuit may be a preferred locus for the rewarding effects of rhythmic entrainment and/or learning of rhythmic patterns.
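The contrast between the two loop types can be illustrated with a toy firing-rate sketch. Everything in it is an assumption made for illustration: the function names, the linear rate units, the weights, and the simplification that local inhibition acts instantaneously. It is not a model proposed in the literature reviewed here. The first function shows a mutually excitatory two-area loop that runs away unless local inhibition damps it. The second shows a chain of two inhibitory stages, as in the direct basal ganglia pathway, in which driving the first stage releases the final target (inhibition of inhibition).

```python
import numpy as np


def simulate_cortical_loop(w_ee, g_inh, drive=1.0, dt=0.01, steps=2000):
    """Euler-integrate two mutually exciting rate units (e.g. 'motor' and 'auditory').

    Each unit decays with unit time constant, is excited with weight w_ee by the
    other unit, and is damped by local feedback inhibition of strength g_inh
    (assumed instantaneous, so it acts as extra leak). Returns the final rates.
    """
    r = np.zeros(2)
    for _ in range(steps):
        recurrent = w_ee * r[::-1]          # each unit is excited by the other one
        inhibition = g_inh * r              # local interneurons track the local rate
        drdt = -r + recurrent - inhibition + drive
        r = np.maximum(r + dt * drdt, 0.0)  # firing rates cannot be negative
    return r


def direct_pathway(cortical_drive, tonic=1.0, w_inh=1.0):
    """Steady-state rates for cortex -> striatum -| pallidum -| thalamus."""
    striatum = max(cortical_drive, 0.0)
    pallidum = max(tonic - w_inh * striatum, 0.0)   # tonically active, inhibited by striatum
    thalamus = max(tonic - w_inh * pallidum, 0.0)   # released when pallidum is silenced
    return striatum, pallidum, thalamus


if __name__ == "__main__":
    print("loop without local inhibition:", simulate_cortical_loop(w_ee=1.2, g_inh=0.0))
    print("loop with local inhibition:   ", simulate_cortical_loop(w_ee=1.2, g_inh=1.0))
    for drive in (0.0, 0.5, 1.0):
        print("cortical drive", drive, "->", direct_pathway(drive))
```

In this sketch the excitatory loop is stable only while the recurrent gain stays below one plus the inhibitory gain, whereas the inhibitory chain is stable by construction and converts cortical excitation into thalamic release. Realistic models would need measured time constants, conduction delays and synaptic weights in place of these hypothetical values.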

Clearly, each of these classes of models addresses interesting questions, and one cannot expect any single model to span all of these levels. Models at the rule-based (computational) and algorithmic levels are currently the most mature and have already provided a good scaffolding for empirical research in cognition and behaviour. However, our progress in understanding the neurocomputational basis of rhythm perception in humans and other animals will require a more concerted focus on the computational properties of actual neural circuits, at an implementational level. We hope that the brief review and critique above help to encourage more attention to modelling at Marr's implementational level.

6. Conclusion

This paper describes and compares current knowledge concerning the anatomical and functional basis of beat perception and synchronization in human and non-human primates, ending by delineating how this knowledge could be used to build neurally grounded models. Hence, our review provides an integrated panorama across fields that have only been treated separately before [7,23,138,139]. It is clear that the human mCBGT circuit is engaged not only during motoric entrainment to a musical beat but also during the perception of simple metric rhythms. This indicates that the motor system is involved in the representation of the metrical structure of auditory stimuli. Furthermore, this motoric representation is predictive and can induce in auditory cortex an expectation process for metrical stimuli. The predictive signals are conveyed to the sensory areas via oscillatory activity, particularly at delta and beta frequencies. Non-invasive data from humans are complemented by direct recordings of single-cell and microcircuit activity in behaving macaques, showing that SMA, the putamen and probably all of the relay nuclei of the mCBGT circuit use different encoding strategies to represent the temporal and sequential structure of beat synchronization. Indeed, interval tuning could be a mechanism used by the mCBGT to represent the beat tempo during synchronization. As in humans, oscillatory activity in the beta-band is deeply involved in generating the internal set used to process regular events. There is a strong consensus that the motor system makes use of multiple levels of neural representation during beat perception and synchronization. However, implementation-level models, more tightly tied to properties of cells and neural circuits, are urgently needed to help describe and make sense of this (still incomplete) empirical information. Dynamical system approaches to model the neural representations of beat are successful at an algorithmic level, but incorporating single-cell intrinsic properties, cell tuning and ramping activity, microcircuit organization and connectivity, and the dynamic communication between cortical and subcortical areas in realistic models would be welcome. The predictions generated by neurally grounded models would help drive further empirical research to bridge the gap across different levels of brain organization during beat perception and synchronization.

Author contributions

H.M. wrote the introduction, the section on the neurophysiology of rhythmic behaviour in monkeys, and the conclusions; J.G. wrote the section on the functional imaging of beat perception and entrainment in humans; L.T. wrote the section on the oscillatory mechanisms underlying rhythmic behaviour in humans; M.R. and T.F. wrote the section on the computational models for beat perception and synchronization, and T.F. edited the whole manuscript. All authors contributed to the ideas and framework contained within the paper and edited the manuscript.

Funding statement

H.M. is supported by PAPIIT IN201214-25 and CONACYT 151223. J.A.G. is supported by the Natural Sciences and Engineering Research Council of Canada. L.J.T. is supported by grants from the Natural Sciences and Engineering Research Council of Canada and the Canadian Institutes of Health Research. W.T.F. thanks the ERC (Advanced Grant SOMACCA #230604) for support. M.R. has been supported by the MIT Department of Linguistics and Philosophy as well as the Zukunftskonzept at TU Dresden funded by the Exzellenzinitiative of the Deutsche Forschungsgemeinschaft.

Competing interests

The authors have no competing interests.

References

- 1. Honing H. 2013. Structure and interpretation of rhythm in music. In Psychology of music (ed. Deutsch D.), 3rd edn, pp. 369–404. London, UK: Academic Press.

- 2. Large EW, Palmer C. 2002. Perceiving temporal regularity in music. Cogn. Sci. 26, 1–37. (doi:10.1207/s15516709cog2601_1)

- 3. Large EW, Jones MR. 1999. The dynamics of attending: how people track time-varying events. Psychol. Rev. 106, 119–159. (doi:10.1037/0033-295X.106.1.119)

- 4. Drake C, Jones MR, Baruch C. 2000. The development of rhythmic attending in auditory sequences: attunement, referent period, focal attending. Cognition 77, 251–288. (doi:10.1016/S0010-0277(00)00106-2)

- 5. Phillips-Silver J, Trainor LJ. 2007. Hearing what the body feels: auditory encoding of rhythmic movement. Cognition 105, 533–546. (doi:10.1016/j.cognition.2006.11.006)

- 6. Winkler I, Haden G, Ladinig O, Sziller I, Honing H. 2009. Newborn infants detect the beat in music. Proc. Natl Acad. Sci. USA 106, 2468–2471. (doi:10.1073/pnas.0809035106)

- 7. Merchant H, Honing H. 2014. Are non-human primates capable of rhythmic entrainment? Evidence for the gradual audiomotor evolution hypothesis. Front. Neurosci. 7, 274 (doi:10.3389/fnins.2013.00274)

- 8. Zatorre RJ, Chen JL, Penhune VB. 2007. When the brain plays music. Auditory-motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. (doi:10.1038/nrn2152)

- 9. Phillips-Silver J, Trainor LJ. 2005. Feeling the beat in music: movement influences rhythm perception in infants. Science 308, 1430 (doi:10.1126/science.1110922)

- 10. Povel DJ, Essens P. 1985. Perception of temporal patterns. Music Percept. 2, 411–440. (doi:10.2307/40285311)

- 11. Yee W, Holleran S, Jones MR. 1994. Sensitivity to event timing in regular and irregular sequences: influences of musical skill. Percept. Psychophys. 56, 461–471. (doi:10.3758/BF03206737)

- 12. Teki S, Grube M, Kumar S, Griffiths TD. 2011. Distinct neural substrates of duration-based and beat-based auditory timing. J. Neurosci. 31, 3805–3812. (doi:10.1523/JNEUROSCI.5561-10.2011)

- 13. Merchant H, Battaglia-Mayer A, Georgopoulos AP. 2003. Interception of real and apparent circularly moving targets: psychophysics in human subjects and monkeys. Exp. Brain Res. 152, 106–112. (doi:10.1007/s00221-003-1514-5)

- 14. Mendez JC, Prado L, Mendoza G, Merchant H. 2011. Temporal and spatial categorization in human and non-human primates. Front. Integr. Neurosci. 5, 50 (doi:10.3389/fnint.2011.00050)

- 15. Repp BH, Su YH. 2013. Sensorimotor synchronization: a review of recent research (2006–2012). Psychon. Bull. Rev. 20, 403–452. (doi:10.3758/s13423-012-0371-2)

- 16. Zarco W, Merchant H, Prado L, Mendez JC. 2009. Subsecond timing in primates: comparison of interval production between human subjects and rhesus monkeys. J. Neurophysiol. 102, 3191–3202. (doi:10.1152/jn.00066.2009)

- 17. Merchant H, Zarco W, Pérez O, Prado L, Bartolo R. 2011. Measuring time with different neural chronometers during a synchronization-continuation task. Proc. Natl Acad. Sci. USA 108, 19 784–19 789. (doi:10.1073/pnas.1112933108)

- 18. Donnet S, Bartolo R, Fernandes JM, Cunha JP, Prado L, Merchant H. 2014. Monkeys time their movement pauses and not their movement kinematics during a synchronization-continuation rhythmic task. J. Neurophysiol. 111, 2138–2149. (doi:10.1152/jn.00802.2013)

- 19. Doumas M, Wing AM. 2007. Timing and trajectory in rhythm production. J. Exp. Psychol. 33, 442–455. (doi:10.1037/0096-1523.33.2.442)

- 20. Konoike N, Mikami A, Miyachi S. 2012. The influence of tempo upon the rhythmic motor control in macaque monkeys. Neurosci. Res. 74, 64–67. (doi:10.1016/j.neures.2012.06.002)

- 21. Honing H, Merchant H. 2014. Differences in auditory timing between human and non-human primates. Behav. Brain Sci. 37, 373–374.

- 22. Patel AD. 2014. The evolutionary biology of musical rhythm: was Darwin wrong? PLoS Biol. 12, e1001821 (doi:10.1371/journal.pbio.1001821)

- 23. Patel AD, Iversen JR, Chen Y, Repp BH. 2005. The influence of metricality and modality on synchronization with a beat. Exp. Brain Res. 163, 226–238. (doi:10.1007/s00221-004-2159-8)

- 24. Fitch WT, Rosenfeld AJ. 2007. Perception and production of syncopated rhythms. Music Percept. 25, 43–58. (doi:10.1525/mp.2007.25.1.43)

- 25. Repp BH, Iversen JR, Patel AD. 2008. Tracking an imposed beat within a metrical grid. Music Percept. 26, 1–18. (doi:10.1525/mp.2008.26.1.1)

- 26. Bengtsson SL, Ullén F, Henrik Ehrsson H, Hashimoto T, Kito T, Naito E, Forssberg H, Sadato N. 2009. Listening to rhythms activates motor and premotor cortices. Cortex 45, 62–71. (doi:10.1016/j.cortex.2008.07.002)

- 27. Chen JL, Penhune VB, Zatorre RJ. 2008. Listening to musical rhythms recruits motor regions of the brain. Cereb. Cortex 18, 2844–2854. (doi:10.1093/cercor/bhn042)

- 28. Chen JL, Penhune VB, Zatorre RJ. 2008. Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J. Cogn. Neurosci. 20, 226–239. (doi:10.1162/jocn.2008.20018)

- 29. Chen JL, Zatorre RJ, Penhune VB. 2006. Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. NeuroImage 32, 1771–1781. (doi:10.1016/j.neuroimage.2006.04.207)

- 30. Grahn JA, Brett M. 2007. Rhythm perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. (doi:10.1162/jocn.2007.19.5.893)

- 31. Grahn JA. 2009. The role of the basal ganglia in beat perception: neuroimaging and neuropsychological investigations. Ann. NY Acad. Sci. 1169, 35–45. (doi:10.1111/j.1749-6632.2009.04553.x)

- 32. Grahn JA, Rowe JB. 2009. Feeling the beat: premotor and striatal interactions in musicians and nonmusicians during beat perception. J. Neurosci. 29, 7540–7548. (doi:10.1523/JNEUROSCI.2018-08.2009)

- 33. Grahn JA, Rowe JB. 2013. Finding and feeling the musical beat: striatal dissociations between detection and prediction of regularity. Cereb. Cortex 23, 913–921. (doi:10.1093/cercor/bhs083)

- 34. Kung SJ, Chen JL, Zatorre RJ, Penhune VB. 2013. Interacting cortical and basal ganglia networks underlying finding and tapping to the musical beat. J. Cogn. Neurosci. 25, 401–420. (doi:10.1162/jocn_a_00325)

- 35. Lewis PA, Wing AM, Pope PA, Praamstra P, Miall RC. 2004. Brain activity correlates differentially with increasing temporal complexity of rhythms during initialisation, synchronisation, and continuation phases of paced finger tapping. Neuropsychologia 42, 1301–1312. (doi:10.1016/j.neuropsychologia.2004.03.001)

- 36. Schubotz RI, Friederici AD, von Cramon DY. 2000. Time perception and motor timing: a common cortical and subcortical basis revealed by fMRI. NeuroImage 11, 1–12. (doi:10.1006/nimg.1999.0514)

- 37. Ullén F, Bengtsson SL. 2003. Independent processing of the temporal and ordinal structure of movement sequences. J. Neurophysiol. 90, 3725–3735. (doi:10.1152/jn.00458.2003)

- 38. Vuust P, Ostergaard L, Pallesen KJ, Bailey C, Roepstorff A. 2009. Predictive coding of music – brain responses to rhythmic incongruity. Cortex 45, 80–92. (doi:10.1016/j.cortex.2008.05.014)

- 39. Vuust P, Roepstorff A, Wallentin M, Mouridsen K, Ostergaard L. 2006. It don't mean a thing…: keeping the rhythm during polyrhythmic tension, activates language areas (BA47). NeuroImage 31, 832–841. (doi:10.1016/j.neuroimage.2005.12.037)

- 40. Ramnani N, Passingham RE. 2001. Changes in the human brain during rhythm learning. J. Cogn. Neurosci. 13, 952–966. (doi:10.1162/089892901753165863)