Abstract

Since the original work on the Delphi technique, multiple versions have been developed and used in research and industry; however, very little empirical research has been conducted that evaluates the efficacy of using online computer, Internet, and e-mail applications to facilitate a Delphi method that can be used to validate theoretical models. The purpose of this research was to develop computer, Internet, and e-mail applications to facilitate a modified Delphi technique through which experts provide validation for a proposed conceptual model that describes the information needs for a mass-casualty continuum of care. Extant literature and existing theoretical models provided the basis for model development. Two rounds of the Delphi process were needed to satisfy the criteria for consensus and/or stability related to the constructs, relationships, and indicators in the model. The majority of experts rated the online processes favorably (mean of 6.1 on a seven-point scale). Using online Internet and computer applications to facilitate a modified Delphi process offers much promise for future research involving model building or validation. The online Delphi process provided an effective methodology for identifying and describing the complex series of events and contextual factors that influence the way we respond to disasters.

Keywords: Computerized processes, Delphi technique, Information systems, Mass-casualty incidents

The Delphi process, a methodology used for obtaining expert consensus on a particular topic,1 was developed in the 1950s by the Rand Corporation (Santa Monica, CA) to forecast the impact of technology on warfare.2 This technique differs from other group data collection processes through the use of (1) expert input, (2) anonymity, (3) exchange of ideas with controlled feedback, and (4) statistical group response.3,4 Although Delphi processes have been applied extensively in healthcare and used in developing the structure for models,5–8 little research has been conducted that evaluates the use of computer-mediated applications to facilitate a Delphi method applied to model building and validation of conceptual models.

The Delphi process is usually associated with mailed paper-and-pencil questionnaires or face-to-face interactions. Computer-mediated communication systems can be used to carry out a Delphi process in ways that may be superior to other forms of communication in situations of unusual complexity.9 Computer-mediated Delphis enable experts to communicate efficiently with one another and facilitate the preparation, distribution, and collection of questionnaires, the computation of quartiles, and the revision of questionnaires, all of which can reduce the turnaround time between rounds.8 The following study illustrates the use of a computer-mediated Delphi process to build and validate a conceptual model for mass-casualty triage.

THE MODEL

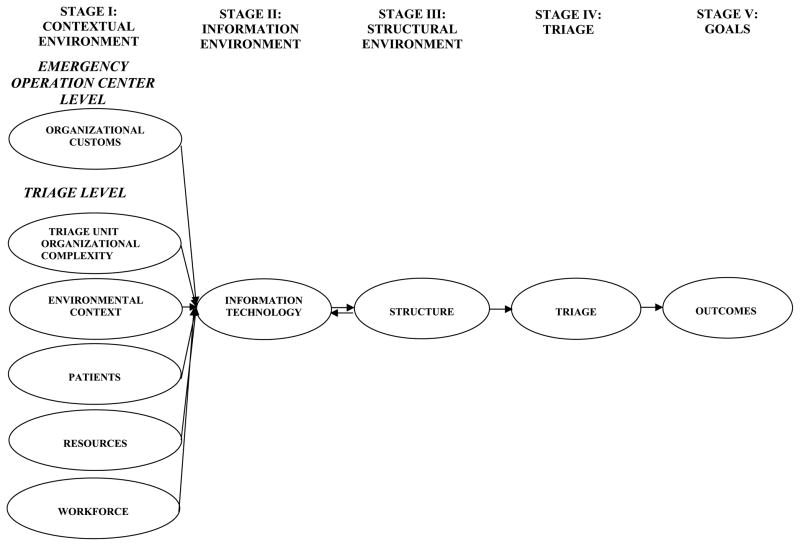

The hypothesized Mass Casualty Conceptual Model (MCCM) used in this study uses an open-systems approach to study the effects of context on the functioning and information needs of multidisciplinary teams during mass-casualty triage. This research examined key relationships among entities/factors needed to provide real-time visibility of data that track patients, personnel, resources, and potential hazards that influence outcomes of care during mass-casualty events. The conceptual model (Figure 1) represents five stages that impact the continuum of care during mass-casualty events. These five stages include 10 constructs, 10 relationships between the constructs, and 43 indicators or measures for the constructs. The constructs included in each stage include the following:

FIGURE 1.

Mass Casualty Conceptual Model. Reprinted with permission from the author.

stage I (contextual environment): environmental factors that initiate and influence mass-casualty events, patients affected by the events, and resources available to affect survivability; this stage includes the following constructs: organizational customs, triage unit organizational complexity, environmental context, patients, resources, and workforce;

stage II (informational environment): information and technology necessary to control and support an appropriate work-flow design that matches the skill mix and experience of the available workforce; this stage includes the information technology construct;

stage III (structural environment): ad hoc organizational structure used to organize a scalable multidisciplinary emergency response to incidents of any magnitude; this stage includes the structure construct;

stage IV (triage): the process used to classify and prioritize victims according to predetermined severity algorithms to ensure the greatest survivability with limited resources; this stage includes the triage construct; and

stage V (goals): outcomes for both patients and resources through the appropriate use of resources; this stage includes the outcomes construct.

This hypothesized model was presented to a panel of experts for validation.

BACKGROUND

There is a paucity of literature evaluating mass-casualty systems and no clear criterion standard for measuring the efficacy of information support systems used in mass-casualty events. The purpose of this research was to validate a conceptual model for a mass-casualty continuum of care that provides a framework for the development and evaluation of information systems for mass-casualty events. Research questions measured (1) the extent to which experts agreed that the constructs, relationships, and indicators represented predictors of outcomes of care during mass-casualty events; (2) the usefulness of the model to the further study of information and technology requirements during mass-casualty events; and (3) the usefulness of computer-mediated applications that facilitated the Delphi technique to build and validate the conceptual model. The entire study was conducted using computer, Internet, and e-mail applications to support the validation process.

The Delphi Technique

The Delphi technique typically involves the recruitment of a panel of experts on a specific topic. Each expert independently responds to a question(s) designed to elicit opinions, estimates, or predictions regarding the topic. Responses are then aggregated, tabulated, summarized, and returned to the experts in a series of data collection rounds.4 The traditional Delphi technique usually begins with an open-ended questionnaire followed by three to four rounds of feedback and modified questionnaires.4,10

A modified technique has been used in many studies to improve initial-round response rate.10,11 The modified technique begins with preselected items drawn from various sources including synthesized reviews of the literature to provide a context for responses.4,6 The number of rounds may be decreased to as few as two if experts are provided with a list of preselected items.4,11,12

One problem that is often encountered in Delphi studies is maintaining participants’ focus if questionnaires have large numbers of items.10 Delphi studies may contain 50 or more items for consideration. Modifications that involve the presentation of preselected items, purposeful sampling, and quick turnaround times between rounds may reduce panel response fatigue and enhance reliability of findings.

The Delphi technique is an iterative process with data collection rounds repeated until opinion consensus is reached. Consensus refers to the extent to which each respondent agrees with an idea, item, or concept that is rated on a numerical or categorical scale. There is no underlying statistical theory that defines an appropriate stopping point in a Delphi process.13 There is always a certain amount of oscillatory movement and change within the group, but respondents are sensitive to feedback of the scores from the whole group and tend to move toward the perceived consensus or centralize.

In the majority of Delphi applications, consensus is achieved when a percentage of opinions falls within a prescribed range, when the interquartile range is no larger than two units on a 10-unit scale,8 or when the interrater agreement among experts is 70% or greater.11 Establishing consensus provides a way to identify the central tendency of data.7 However, this method may not take into account all of the information in the distributions. For example, a distribution may be bimodal or may flatten out; such a distribution does not represent consensus but may indicate important areas of opinion. Measuring the stability of the experts’ opinion distribution curve over successive rounds may be preferred to methods that measure the amount of change in each individual’s opinion between rounds (the degree of convergence) because it considers variations from the norm.13 The use of stability measures helps to mitigate the effect of extreme or conflicting positions. A reasonable stopping point is reached when responses are unchanged and stable. A change level of 15% or less between rounds indicates stability.13 This study incorporated the use of interquartile ranges, interrater agreement, and stability measures to define consensus and the stopping point in the validation process.
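The stability check described above can be sketched in a few lines of Python. This is an illustration only, not the spreadsheet used in the study: it assumes that stability is assessed by comparing the histogram of responses from two successive rounds, taking half of the summed absolute differences in counts as the net number of changed responses, and expressing that as a percentage of the panel. The function names and the sample ratings are hypothetical.

```python
from collections import Counter


def percent_change(prev_round, next_round, scale=range(1, 8)):
    """Net percentage change in the response distribution between two rounds.

    Assumption: change is measured on the histogram of ratings, as half the
    summed absolute differences in counts per scale point, divided by the
    number of respondents.
    """
    if not prev_round or len(prev_round) != len(next_round):
        raise ValueError("both rounds need the same, nonzero number of responses")
    allowed = set(scale)
    if not allowed.issuperset(prev_round) or not allowed.issuperset(next_round):
        raise ValueError("rating outside the scale")
    prev_counts, next_counts = Counter(prev_round), Counter(next_round)
    changed_units = sum(abs(prev_counts[p] - next_counts[p]) for p in scale)
    return 100.0 * (changed_units / 2) / len(prev_round)


def is_stable(prev_round, next_round, threshold=15.0):
    """True when the change between rounds is at or below the threshold (15%)."""
    return percent_change(prev_round, next_round) <= threshold


# Hypothetical ratings from 10 panelists on a seven-point scale.
round_1 = [4, 5, 5, 5, 6, 6, 6, 6, 7, 7]
round_2 = [5, 5, 5, 6, 6, 6, 6, 6, 7, 7]

print(percent_change(round_1, round_2))
print(is_stable(round_1, round_2))
```

Because the comparison is made on the distribution rather than on individual panelists, two experts swapping positions would register as no change, which is consistent with the emphasis on the shape of the group response rather than individual convergence.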

The feedback provided to the experts typically includes (1) statistical summaries that provide measures of central tendency and dispersion such as mean, median, mode, and variance; (2) individual experts’ comments14,15; (3) ranking, percentages, and interquartile ranges7; and (4) subjective rationales summarized and provided as anonymous feedback.7,10

The expert panel is one of the most fundamental components of a Delphi study. Using a convenience sample allows researchers to purposefully select experts who can apply their knowledge and experience to the specific issue or problem under investigation.4,5 This is particularly useful when there are only a limited number of experts in a field of interest such as mass-casualty triage. The sample size in most Delphi studies has been study specific.5 If experts are selected who have similar training and general understanding of the problem of interest, a relatively small sample can be used.5 Self-rating scales can be used to identify expertise.8 Linstone and Turoff8 suggest the use of a five-point scale, where low numbers represent a low degree of expertise and high numbers represent a high degree of expertise.

Anonymity is considered an important component of most Delphis. The objective of anonymity is to remove some of the common biases normally occurring in the face-to-face group process. Anonymity can also have negative effects on the process. After repeated rounds, experts may no longer feel committed to the issues and may change their responses to bring a more expeditious end to the process.6

Computer-mediated applications can support the Delphi technique in ways that may be superior to other forms of communication in any of the following situations:

Individuals are busy and frequent meetings are difficult.

The group is spread out geographically.

Topics are complex and require reflection.8

Anonymity is important.

PROCEDURE

Approval for the research project was obtained from the University of Arizona’s institutional review board. Extant literature and existing theoretical models provided the basis for the MCCM development. A modified Delphi technique was used to present the proposed conceptual model to a panel of experts. The following study illustrates the use of computer-mediated applications combined with the Delphi technique to validate a conceptual model.

Panel Recruitment

In this study, a purposeful sample of 18 experts was recruited from a group of 26 individuals known to the researcher or recommended by an expert (snowballing). The individuals were contacted by e-mail and invited to participate. A disclaimer was e-mailed to 21 individuals who expressed interest in participating. Once the disclaimer was returned (via e-mail), a second e-mail was sent via SurveyMonkey (SurveyMonkey, Portland, OR), an online custom survey software program, inviting each individual to complete an online Panel Profile Survey.

Panel eligibility criteria included (1) a position title that reflected direct involvement in local, regional/county, state, federal, or military emergency preparedness, response, or research; (2) multiple provider and emergency planning, response, and/or research positions; (3) self-rating of expertise of three or higher on a five-point Likert scale (5 indicated high expertise); and (4) availability of a computer with audio, Internet, and e-mail access. The survey captured demographic and eligibility criteria information and included a self-rating scale of expertise in the area of emergency planning, response, and/or research. A total of 18 experts met the selection criteria and volunteered to be panel members.

Once the panel was selected, all members received an e-mail directing them to the Web page designed for this research. Panel members were given 1 week to review the content on the Web page. One panel member was not able to access the narrated presentations, so all files from the Web page were copied to a CD and overnight mailed to that panel member.

Computer-Mediated Process

SurveyMonkey16 was used to create and present the Panel Profile Survey and each round of questions in the Delphi process and also to collect responses. SurveyMonkey is an online survey tool that enables the user to (1) use his/her own Web browser to create custom surveys that include multiple choice, rating scales, and open-ended text; (2) validate text that is entered or require a particular number of answers; (3) send out a link to the survey via e-mail, or post the link on a Website; (4) track who responds; (5) manage participant lists; (6) create custom e-mail invitations; (7) set cutoff dates for each survey; (8) require a password, or restrict responses by IP address; (9) view results (including graphs and charts of the data) as soon as they are collected; (10) generate a public link to share survey results; and (11) export results to a spreadsheet such as Excel (Microsoft, Redmond, WA). Each panel member in the study was identified by a unique number assigned by SurveyMonkey. Panelists were sent customized e-mails through SurveyMonkey that included a hyperlink to specific questionnaires.

A secure Web page specifically designed for this study provided online access to the following:

- a narrated PowerPoint (Microsoft) presentation that provided information regarding the Delphi process;
- a narrated PowerPoint presentation explaining the model that included
  - an overview of the model and the theoretical underpinnings of the model and
  - information about each construct, relationship, and indicator in the model;
- a Microsoft Word (Microsoft) computer graphic file that included the proposed model with all indicators;
- a Microsoft Word Glossary of Terms related to the model;
- the Human Subjects Web site; and
- Excel spreadsheets with graphic displays that were uploaded to the study Web page to provide feedback from each round. The data provided comprehensive and up-to-date decision support to assist with decisions in subsequent rounds.

Data Collection Procedure

Panel members were sent customized e-mails through SurveyMonkey, notifying them when a round of questionnaires was available. Panel members had the option of leaving the survey uncompleted and then completing it at a later time. Experts were asked to evaluate the proposed constructs and the relationships between each construct using a seven-point Likert scale. Experts were also asked to identify and define any additional construct(s) or change(s) in relationship links needed to adequately assess the continuum of care during mass-casualty triage. Experts were then asked whether each indicator for constructs should be retained, modified, or deleted and, if appropriate, how they would modify the indicator. Experts were also asked to identify and define any additional indicators needed.

Experts were originally given 1 week to respond to the online questionnaires; however, the time frame was extended an extra week to allow more time for the majority of panel members to respond. Panel members who did not respond within 5 days of the posting were sent an e-mail reminding them that they had only 1 week remaining to complete the questionnaire. The use of e-mail enabled timely communication to extend time frames and send reminders that improved response rates during each round of the process.

Data from each round were analyzed. The use of computerized applications made possible a turnaround time of only 2 days to present the results after the close of each Delphi round. Feedback included means, dispersion, and summaries of comments. The model was modified based on expert consensus. The revised model and feedback from the previous questionnaire were available on the study Web page and formed the basis for the second round of questions. The process continued until the a priori criteria had been met for consensus and/or stability. During the second round of questions, experts were also asked to indicate the usefulness of the conceptual model using a seven-point Likert scale and answer six questions evaluating the computer-mediated applications used to facilitate the Delphi technique.

Data Analysis

Raw data were downloaded from SurveyMonkey and imported into an Excel spreadsheet. Excel was used to calculate medians, means, quartile ranges, percentage of agreement, and stability as well as to calculate descriptive statistics related to panel profile characteristics. Tracking of individual results was helpful in analyzing results,13 although results were reported only in aggregate form.

The criteria for consensus were satisfied when the interquartile range in scores was of no more than one scale point.17 Consensus for questions assessing retention, modification, or deletion of indicators for each construct was determined by calculating the percentage of agreement among the panel. An interrater agreement level of 70% or greater11 was required to reach consensus to retain, modify, or delete an indicator. Stability was calculated for items that did not reach the criteria for consensus. The condition of stability was satisfied when the change in the distribution of responses was less than 15% from one round to the next.13
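The interquartile-range criterion for the Likert-scale items can be illustrated with a short Python sketch. It is not the Excel workbook used in the study. Quartile conventions differ between tools, so the `"inclusive"` method of `statistics.quantiles` is used here purely as an assumption, and the two sets of ratings are hypothetical.

```python
from statistics import quantiles


def interquartile_range(ratings):
    """Q3 minus Q1, using the 'inclusive' quartile convention (an assumption)."""
    q1, _median, q3 = quantiles(ratings, n=4, method="inclusive")
    return q3 - q1


def meets_iqr_consensus(ratings, max_iqr=1.0):
    """Consensus when the interquartile range is no more than one scale point."""
    return interquartile_range(ratings) <= max_iqr


# Hypothetical seven-point ratings from 17 panelists for two items.
clustered = [5, 5, 6, 6, 6, 6, 6, 6, 6, 6, 6, 7, 7, 7, 7, 7, 7]
dispersed = [2, 2, 3, 3, 3, 4, 4, 4, 5, 5, 5, 5, 6, 6, 6, 7, 7]

print(interquartile_range(clustered), meets_iqr_consensus(clustered))
print(interquartile_range(dispersed), meets_iqr_consensus(dispersed))
```

An item such as `dispersed`, which fails this test, would then be assessed for stability between rounds, as described in the criteria above.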

RESULTS

Of the 18 panel members who agreed to participate in the study, one member did not respond to either round of questions; therefore, responses were based on 17 panel members who responded to both rounds of questionnaires. The original sample included an equal number of males (n = 9) and females (n = 9). The mean years employed in emergency preparedness and mean rating of expertise were higher for males than females, with the mean years employed for both groups at 12.5 years and the mean rating of expertise for both groups at 4 on a five-point scale. The majority of the panel (72%) represented the Northeast US geographical area, and position titles were very diverse representing local, federal, and national constituents.

Round 1

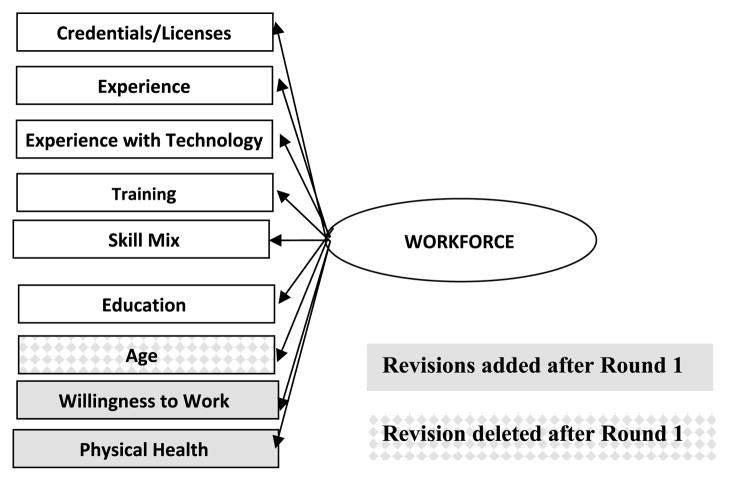

The response rate for round 1 was 94%. Once the round was completed, all panel members were sent an e-mail indicating that the data were available on the study Web page. Feedback included histograms depicting the responses for each construct, relationship, and indicator in the model; tables summarizing the median responses, spread of scale points, and status of consensus for each construct and relationship; tables summarizing the percentage of agreement and status of consensus for each indicator; all comments (deidentified) submitted from any of the questions; and a copy of the revised MCCM based on responses. Table 1 is an example of a summary table with selected comments related to the workforce construct and indicators available on the study Web page as feedback to the panel at the completion of round 1. Figure 2 is an example of the modified workforce construct and indicators portion of the model available on the study Web page for review during round 2. The panel reached consensus and/or sufficient stability to retain all 10 constructs, nine relationships, and 39 of 44 indicators. Changes to the model were based on individual comments that were subsequently supported by other panelists and/or by current literature or model underpinnings.

Table 1.

Round 1 Percentage of Agreement Related to Indicators for the Workforce Construct and Selected Examples of Respondent Comments

| Construct Indicators | Delete | Modify | Retain | Status of Consensus (≥70% Agreement) |
|---|---|---|---|---|
| Credentials/licenses | 12% | 25% | 63% | |
| Experience | 6% | 6% | 88% | Consensus met to retain |
| Experience with technology | 13% | 31% | 56% | |
| Training | 0% | 12% | 88% | Consensus met to retain |
| Skill mix | 19% | 25% | 56% | |
| Education | 12% | 25% | 63% | |
| Needs related to safety and health | 0% | 31% | 69% | Very close to consensus |
| Age | 47% | 47% | 6% | |

Selected examples of respondent comments: (1) Strongly encourage revising “needs related to safety and health” to “willingness to work.” A body of science is emerging that indicates healthcare personnel may not be willing to work in all disaster situations. Concerns about health and personal safety are only two of the reasons—others include concern for pets and family members, fear, and so on. (2) Perhaps physical health, disability, and handicap should be considered somewhere in the mix. (3) Age: I’m not sure there is evidence to support the statement that maturity may influence the ability to “handle” events. (4) Age is not critical… the ability to meet demanding (tiring, strength) operations, on the other hand, is. (5) Age can be problematic; depending on needs, workforce may require physical fitness as a subcategory versus age. (6) Recommend changing “needs related to safety and health” to “willingness to work in disaster.”
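As a worked illustration, the 70% agreement rule can be applied to the percentages reported in Table 1. The sketch below is hypothetical Python, not the study's analysis file; the dictionary simply transcribes the Delete, Modify, and Retain columns, and the function name is an assumption.

```python
CONSENSUS_THRESHOLD = 70  # percent agreement required by the study criteria

# Percentages transcribed from Table 1 as (delete, modify, retain).
table_1 = {
    "Credentials/licenses": (12, 25, 63),
    "Experience": (6, 6, 88),
    "Experience with technology": (13, 31, 56),
    "Training": (0, 12, 88),
    "Skill mix": (19, 25, 56),
    "Education": (12, 25, 63),
    "Needs related to safety and health": (0, 31, 69),
    "Age": (47, 47, 6),
}


def consensus_action(delete, modify, retain, threshold=CONSENSUS_THRESHOLD):
    """Return the action that reached the agreement threshold, or None."""
    shares = {"delete": delete, "modify": modify, "retain": retain}
    action, share = max(shares.items(), key=lambda item: item[1])
    return action if share >= threshold else None


for indicator, shares in table_1.items():
    action = consensus_action(*shares)
    print(f"{indicator}: {action or 'no consensus'}")
```

Only Experience and Training reach the threshold, matching the status column of Table 1; the remaining indicators, including the 69% result for needs related to safety and health, fall short and were carried into the next round.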

FIGURE 2.

Example of changes to the workforce construct based on input from rounds 1 and 2.

Round 2

The response rate for round 2 was 87%. All panel members were once again sent an e-mail indicating that the data were available for their review. The feedback for round 2 was the same as round 1, except in round 2 feedback also included (1) tables summarizing the percentage of agreement, stability percentage, and status of consensus/stability for each construct, relationship, and indicator from both rounds; (2) responses related to the usefulness of the model; and (3) evaluation of the computer-mediated applications.

Only two rounds of the Delphi process were needed to satisfy the criteria for consensus and/or stability related to the constructs, relationships, and indicators in the model. Modifications to the model were based on comments that were made and supported by panel members and that were also supported by current literature or the underpinnings of the model. Table 2 summarizes the validated constructs and indicators and identifies indicators that were either eliminated or added.

Table 2.

MCCM Validated Constructs and Indicators

| Construct | Indicators |
|---|---|
| Organizational Customs | Shared beliefs; Life cycle; Incentive structure<sup>b</sup>; Leadership style; Shared beliefs<sup>b</sup>; Disaster planning<sup>a</sup> |
| Triage Level Organizational Complexity | No. of specialties; Size; High tech; Team culture |
| Environmental Context | Nature of disaster; Geographical size; Warning systems; Duration; Setting<sup>a</sup>; Competing disasters<sup>a</sup> |
| Resources | Categories; Availability<sup>b</sup>; Location; Categories |
| Workforce | Credentials/licenses; Experience; Experience with technology; Training; Skill mix; Education; Safety/health needs; Age<sup>b</sup>; Willingness to work<sup>a</sup>; Physical health<sup>a</sup> |
| Information Technology (Technology) | Characteristics; Work flow; Rate of flow |
| Information Technology (Information) | Terminology; Flow; Security; Characteristics |
| Structure | Work flow variability; Search behaviors; Structure |
| Triage | Classification; Prioritization |
| Outcomes (Patients) | Survivability; Disability |
| Outcomes (Resources) | Overtriage |
| Outcomes (Safety)<sup>a</sup> | Patient injuries<sup>a</sup>; Worker injuries<sup>a</sup> |

<sup>a</sup>Added to final model.

<sup>b</sup>Deleted from final model.

During the second round of questions, experts were asked to answer questions evaluating the usefulness of the model and the computer-mediated Delphi process. Experts rated the usefulness of the model to the further study of information and technology requirements during mass-casualty events, with a mean response of 5.3 on the seven-point Likert scale (1 = not useful to the further study of information and technology requirements during mass-casualty events and 7 = very useful). The panel of experts validated a conceptual model that provides a foundation to better understand and study the complexity of mass-casualty triage.

Table 3 summarizes the mean responses related to the computer-mediated applications used in the study. Panel members rated the computer-mediated applications favorably, with an overall mean of 6.1 on the seven-point Likert scale (1 = not useful to the Delphi process and 7 = very useful). The mean response for the Web page, narrated explanation of the conceptual model, online glossary, online questionnaires, and online feedback was 6.2 on the seven-point Likert scale. The narrated instructions about the Delphi process scored a mean of 5.5. Respondents reported an average of 71 minutes to respond to the 45-question multipart round 1 questionnaire and 47 minutes to respond to the 39-question multipart round 2 questionnaire. These averages were higher than the log-in times recorded by SurveyMonkey, which were 64 and 22 minutes, respectively.

Table 3.

Mean Responses Related to the Usefulness of the Computer-Mediated Applications

| Online Processes Used in the Study | Mean Response<sup>a</sup> |
|---|---|
| Web page | 6.00 |
| Narrated instructions about the Delphi process | 5.50 |
| Narrated explanation of the MCCM | 6.20 |
| Online glossary | 6.40 |
| Online questionnaires | 6.10 |
| Online feedback | 6.20 |

<sup>a</sup>Rated from 1 = not useful to 7 = very useful.
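The summary figures quoted in the text can be reproduced from Table 3 with a small Python sketch. The article does not state how the overall mean was derived, so this assumes a simple unweighted average of the six item means; the variable names are hypothetical.

```python
from statistics import mean

# Item means transcribed from Table 3.
table_3 = {
    "Web page": 6.00,
    "Narrated instructions about the Delphi process": 5.50,
    "Narrated explanation of the MCCM": 6.20,
    "Online glossary": 6.40,
    "Online questionnaires": 6.10,
    "Online feedback": 6.20,
}

# Assumption: unweighted average across all six online processes.
overall_mean = mean(table_3.values())

# Average of the five items other than the narrated Delphi instructions.
mean_without_instructions = mean(
    score
    for item, score in table_3.items()
    if item != "Narrated instructions about the Delphi process"
)

print(round(overall_mean, 1))
print(round(mean_without_instructions, 1))
```

Under that assumption, the two rounded values agree with the 6.1 and 6.2 reported in the text.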

DISCUSSION

This study supports the research conducted by Linstone and Turoff8 that showed that the use of computer technology and applications can shorten the process between rounds. Only 2 days were needed between rounds, and the entire study was completed in less than 2 weeks. The use of the study Web page and SurveyMonkey questionnaires provided a flexible alternative to face-to-face meetings, thus ensuring anonymity among the expert panel, the inclusion of experts from across the country who were employed at all levels of emergency preparedness and response, and a format well suited for busy experts. Computer-mediated applications afford quick turnaround times that improve response rates, and narrated PowerPoint presentations make it possible to animate complex models and improve the understanding of complex material.

The hypothesized model is complex and included 10 constructs, 10 relationships between the constructs, and 43 indicators or measures of the constructs. All required validation. The availability of a study Web page to all panel members enhanced communication and provided links to (1) narrated PowerPoint presentations that introduced the model and facilitated orientation to the Delphi technique, (2) aggregated data from each round of the Delphi technique, and (3) a glossary of terms and a copy of the MCCM as adapted through opinion consensus. The majority of experts scored the resources available on the Web page very favorably. However, the exclusive use of computer-mediated processes may limit the inclusion of a representative sample. Additional research that evaluates the functionality of online resources would offer insights into the use of this modality for future studies. Online computer-mediated processes offer much promise for future research using the Delphi process or other questionnaire-based methods to study complex systems, model building, or theory validation.

References

1. Rowe G, Wright G, Bolger F. Delphi: a reevaluation of research and theory. Technol Forecast Soc Change. 1991;39:235–251.
2. Dalkey N, Helmer O. An experimental application of the Delphi method to the use of experts. Manage Sci. 1963;9:458–467.
3. Goodman CM. The Delphi technique: a critique. J Adv Nurs. 1987;12:729–734. doi: 10.1111/j.1365-2648.1987.tb01376.x.
4. Snyder-Halpern R, Thompson CP, Schaffer J. Comparison of mailed vs. Internet applications of the Delphi technique in clinical informatics research. Proc Am Med Inform Assoc. 2000:809–813.
5. Akins RB, Tolson H, Cole BR. Stability of response characteristics of a Delphi panel: application of bootstrap data expansion. BMC Med Res Methodol. 2005;5:37. doi: 10.1186/1471-2288-5-37. http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1318466. Accessed April 1, 2007.
6. deMeyrick J. The Delphi method and health research. Health Educ. 2003;103(1):7–16.
7. Jones J, Hunter DL. Consensus methods for medical and health services research. Br Med J. 1995;311(7001):376–380. InfoTrac OneFile via Thomson Gale. http://find.galegroup.com.ezproxy.library.arizona.edu/itx/infomark.do?&contentSet=IAC-Documents&type=retrieve&tabID=T002&prodId=ITOF&docId=A17262625&source=gale&srcprod=ITOF&userGroupName=uarizona_main&version=1.0. Accessed March 17, 2007.
8. Linstone HA, Turoff M, editors. The Delphi Method: Techniques and Applications. 2002. http://www.is.njit.edu/pubs/delphibook/delphibook.pdf. Accessed March 6, 2007.
9. Turoff M, Hiltz SR. Computer based Delphi processes. In: Adler M, Ziglio E, editors. Gazing Into the Oracle: The Delphi Method and Its Application to Social Policy and Public Health. London: Kingsley Publishers; 1995.
10. Custer RL, Scarcella JA, Stewart BR. The modified Delphi technique: a rotational modification. J Vocat Tech Educ. 1999;15(2). http://scholar.lib.vt.edu/ejournals/JVTE/v15n2/custer.html. Accessed March 12, 2007.
11. Snyder-Halpern R. Indicators of organizational readiness for clinical information technology/systems innovation: a Delphi study. Int J Med Inform. 2001;63(3):179–204. doi: 10.1016/s1386-5056(01)00179-4.
12. Martino JP. Technological Forecasting for Decision Making. 2nd ed. New York: Elsevier; 1983.
13. Scheibe M, Skutsch M, Schofer J. Experiments in Delphi methodology. In: Linstone HA, Turoff M, editors. The Delphi Method: Techniques and Applications [book on the Internet]. 2002 [cited September 25, 2008]. http://www.is.njit.edu/pubs/delphibook/delphibook.pdf. Accessed October 5, 2010.
14. Crisp J, Pelletier D, Duffield C, Adams A, Nagy S. The Delphi method. Nurs Res. 1997;46(2):116–118. doi: 10.1097/00006199-199703000-00010.
15. Alderson C, Gallimore I, Gorman R, Monahan M, Wojtasinski A. Research priorities of VA nurses: a Delphi study. Mil Med. 1992;157(9):462–465.
16. SurveyMonkey, homepage. Portland, OR: c1999–2008 [retrieved September 25, 2008]. http://www.surveymonkey.com. Accessed October 5, 2010.
17. Verran JA. Delineation of ambulatory care nursing practice. J Ambul Care Manage. 1981;(2):1–13. doi: 10.1097/00004479-198105000-00003.