Abstract

Prior theoretical and empirical research suggests that multiple aspects of an organization’s context are likely related to a number of factors, from their interest and ability to adopt new programming, to client outcomes. A limited amount of the prior research has taken a more community-wide perspective by examining factors that associate with community readiness for change, leaving how these findings generalize to community organizations that conduct prevention or positive youth development programs unknown. Thus, for the current study, we examined how the organizational context of the Cooperative Extension System (CES) associates with current attitudes and practices regarding prevention and evidence-based programming. Attitudes and practices have been found in the empirical literature to be key indicators of an organization’s readiness to adopt prevention and evidence-based programming. Based on multi-level mixed models, results indicate that organizational management practices distinct from program delivery may affect an organization’s readiness to adopt and implement new prevention and evidence-based youth programs, thereby limiting the potential public health impact of evidence-based programs. Openness to change, openness of leadership, and communication were the strongest predictors identified within this study. An organization’s morale was also found to be a strong predictor of an organization’s readiness. The findings of the current study are discussed in terms of implications for prevention and intervention.

Keywords: readiness, organizational context, management practices, translational research, evidence-based programming, positive youth development, community program settings

Over the last two decades, there has been significant growth in the number of prevention and health promotion programs with strong evidence of efficacy and effectiveness (National Research Council and Institute of Medicine, 2009). Recent budget shortfalls and associated concerns about social programming have increased calls to Federal and State funding agencies to require that funded organizations implement evidence-based youth prevention or health-promotion programs (Coalition for Evidence-Based Policy, 2011; Oliff, Mai, & Palacios, 2012; Statement of Jon Baron, 2013). State program delivery systems, such as the Pennsylvania Commission on Crime and Delinquency (Bumbarger & Campbell, 2011; Chilenski, Bumbarger, Kyler, & Greenberg, 2007), along with the US Department of Health and Human Services (Department of Health and Human Services, 2013a, 2013b) and other federal departments (Hallfors, Pankratz, & Hartman, 2007; Haskins & Baron, n.d.), are responding by requiring the delivery of evidence-based programs as a condition of funding.

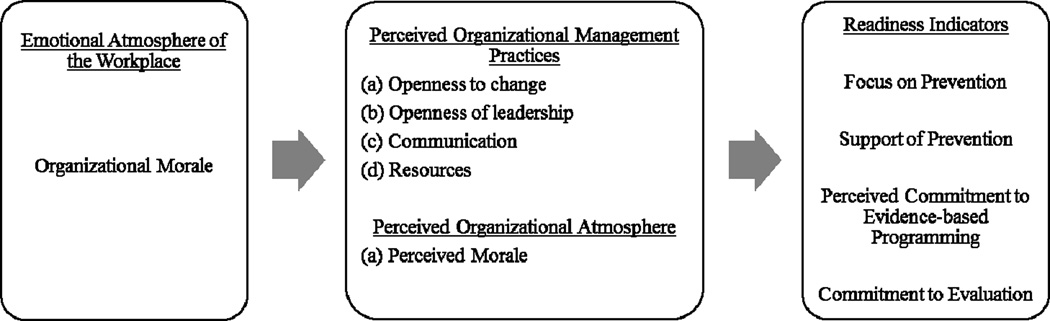

Despite the general movement incentivizing evidence-based youth prevention program implementation, many community organizations are hesitant to change their programming or are unsuccessful at implementing this sort of change effort (Hill & Parker, 2005; Perkins, Chilenski, Olson, Mincemoyer, & Spoth, in press). Prior research suggests that organizational context factors distinct from program delivery, such as an organization’s management practices and an organization’s morale, may affect an organization’s readiness to adopt and sustain the implementation of evidence-based programs (Armenakis, Harris, & Mossholder, 1993; Damschroder et al., 2009; Domitrovich et al., 2008; Finney & Moos, 1984). As a result, in this paper we used a multi-level analytic approach to examine the associations of a selected organization’s management practices and morale with that organization’s attitudes and perceived practices regarding prevention and evidence-based programs. We conceive of current attitudes and perceived practices regarding prevention and evidence-based programming as indicators of readiness for change, that change being the adoption of prevention and evidence-based programs.

Readiness to Implement Prevention and Evidence-Based Programs

Favorable attitudes toward and perceived practices of prevention and evidence-based programming are important to consider because of their consistent associations with the adoption or implementation of related programming. As such, these constructs are good indicators of readiness to adopt this type of programming. Multiple studies have found that positive attitudes regarding prevention and evidence-based programming are associated with the adoption and implementation of a new evidence-based program or even a community change effort (Aarons & Palinkas, 2007; Edwards, Jumper-Thurman, Plested, Oetting, & Swanson, 2000; Gottfredson & Gottfredson, 2002; Hagedorn & Heideman, 2010; Payne & Eckert, 2010; Plested, Smitham, Jumper-Thurman, Oetting, & Edwards, 1999; Plested, Edwards, & Jumper-Thurman, 2006; Ransford, Greenberg, Domitrovich, Small, & Jacobson, 2009). Other studies focused on program implementation quality in the early stages of collaborative prevention efforts and have shown that positive attitudes toward prevention facilitated high-quality implementation of the new effort (Feinberg, Chilenski, Greenberg, Spoth, & Redmond, 2007; Greenberg, Feinberg, Meyer-Chilenski, Spoth, & Redmond, 2007) and the programs implemented within this effort (Spoth, Clair, Greenberg, Redmond, & Shin, 2007; Spoth, Guyll, Redmond, Greenberg, & Feinberg, 2011). Therefore, we examined the following as indicators of readiness: focus on prevention; support of prevention; and perceived commitment to evidence-based programming.

In addition to attitudes and perceived practices regarding prevention and evidence-based programming, we also examined perceptions of an organization’s current evaluation practices. Commitment to evaluation is a commitment to the measurement of program outcomes, and may also be an indicator of readiness to adopt an evidence-based approach to programming (Becker & Domitrovich, 2011; Spoth, Schainker, & Ralston, in preparation). Though this construct has not been well researched, one case study of nine treatment-oriented organizations found that level of implementation was positively related, albeit not at a significant level, to perceived commitment to measurement and evaluation (Hagedorn & Heideman, 2010). In summary, empirical evidence has suggested that these four constructs are sound indicators of an organization’s readiness to adopt prevention and evidence-based programming, as these constructs have been consistently linked to ease of adoption and implementation quality.

Organizational Characteristics

An organization’s context (in other words, the customs, practices, and values of an organization, and how these attributes are perceived by employees) is theoretically linked to an organization’s ability to successfully adopt and implement a new intervention (Center for Mental Health in Schools at UCLA, 2014; Damschroder et al., 2009; Domitrovich et al., 2008; Glisson, 2002). A constructive context would likely support the development of the motivation and flexibility individuals need to complete the hard work that being successful with a new effort requires (Glisson, 2002). Prior research has found that an overall measure of an organization’s context related positively to client engagement in treatment or even better client outcomes (Broome, Flynn, Knight, & Simpson, 2007; Greener, Joe, Simpson, Rowan-Szal, & Lehman, 2007; Moos & Moos, 1998). Similar constructs measured in a community-school collaborative setting, rather than an organizational setting, have been found to predict the early implementation quality of an evidence-based school program (Halgunseth et al., 2012) and a community prevention effort (Chilenski, Greenberg, & Feinberg, 2007; Greenberg et al., 2007). In schools, higher levels of organizational support for school-based prevention programs or new teaching strategies have been related to higher implementation quality of those programs (Domitrovich, Gest, Gill, Jones, & DeRousie, 2009; Gottfredson & Gottfredson, 2002), yet a broader measure of the school’s organizational context had a limited predictive association with the implementation quality of new teaching strategies (Domitrovich et al., 2009). Thus, we examined how five aspects of an organization’s context are related to current attitudes and practices regarding evidence-based and prevention programming. Four measures describe an organization’s management practices: clear communication; openness of leadership; resources; and openness to change. One construct describes the organizational atmosphere: morale. In addition, we investigated morale at the organizational level by aggregating individual reports of morale, because we expect that in today’s low-resourced youth program environments, an organizational-level measure of morale may act differently than an individual-level measure and may be an important backdrop for understanding individual perceptions of management practices.

Clear communication and openness of leadership

Our constructs of clear communication and openness of leadership build on Lehman and colleagues’ concepts of mission and communication in the Organizational Readiness for Change measure (Lehman, Greener, & Simpson, 2002). We expect that organizations that lack a strong ability to communicate their mission clearly and to explain how current goals, objectives, and job duties relate to that mission, or that lack leaders who are open to new ideas, will score lower on the indicators of readiness assessed in this study. Simply put, an inability to communicate the value of current goals, objectives, and programs, together with a lack of openness to other ideas, may make it even less likely that organizational leadership would be open to and clearly communicate the value of moving towards implementing evidence-based programs and prevention programs.

Prior research in this specific area has related clarity of communication and openness of leadership to a variety of outcomes. One study investigated clarity of communication and openness of leadership within a broader construct of organizational context. The authors found that a positive organizational context related to stronger beliefs that evidence-based programs (EBPs) were relevant to their setting and population (Saldana, Chapman, Henggeler, & Rowland, 2007). Clear communication specifically has been related to better implementation of new programming (Fuller et al., 2007) and higher perceived quality of implementation of school-based prevention programs (Payne, Gottfredson, & Gottfredson, 2006). In other research, communication and openness of leadership were positively associated with better functioning organizations, as measured by self-reported outcomes by clients (Greener et al., 2007; Lehman et al., 2002). Given this research, it is more likely that youth-serving organizations with strong leadership and good communication will be able to communicate the congruence of prevention practices and evidence-based programs with their mission.

Resources

In addition to clear communication and openness of leadership, we also chose to investigate the perceptions of resources in community youth-serving organizations. This construct is drawn from the resources domain of the Organizational Readiness for Change measure (Lehman et al., 2002) and the Triethnic Center’s resources dimension within their model of community readiness (Edwards et al., 2000; Plested et al., 1999). There are varied perspectives about why resources may be important to understand when undertaking a new programming effort. From one perspective, resources may be important because they are needed to undertake any new effort: if there are limited resources available, or that is the perception, adopting and implementing a new prevention or evidence-based program that requires new resources would be expected to be difficult. Yet, from a different perspective, some prior research has found that organizations with more limited resources were more likely to undertake an organizational change effort (Courtney, Joe, Rowan-Szal, & Simpson, 2007). In this case, a lower resource environment may create motivation for an organization to change their operating procedures and programs. Yet, still other researchers have not found any association between the level of perceived resources and the attitudes regarding evidence-based programming and the level of implementation of a new evidence-based program (Hagedorn & Heideman, 2010; Saldana et al., 2007). Consequently, we included an individual-level measure of perceived resources to predict individual attitudes and reported practices of prevention and evidence-based programming. Given the mixture of prior results, we tentatively hypothesized that higher levels of perceived resources would relate to more positive attitudes and practices regarding prevention and evidence-based programs.

Openness to change

We also chose to measure perceptions of an organization’s openness to change. This work draws from the change scale within the Organizational Readiness for Change instrument (Lehman et al., 2002). This construct describes an organizational context that is open to trying new policies, procedures, or programs.

How openness to change relates to readiness has been investigated in different ways, from examining current attitudes and practices to measuring outcomes of those served by their programming. Results from this research have consistently suggested positive associations. For example, organizations perceived to have high levels of openness to change at pretest were more likely to be implementing more components of a new evidence-based program six months later as compared to organizations with low levels of openness to change (Hagedorn & Heideman, 2010). Other researchers have found that openness to change, as one construct within a broader measure of the organizational context had a small, but significant positive association with believing that evidence-based programs were relevant to the targeted setting and population (Saldana et al., 2007). Moreover, other research has found that levels of an organization’s openness to change positively associate with better functioning organizations, as measured by self-reported outcomes by clients (Greener et al., 2007; Lehman et al., 2002). Consequently, we expected that individuals working within an organization that has high levels of openness to change would have more positive attitudes regarding evidence-based and prevention programming, and a stronger current focus on this type of programming.

Morale

Morale can be described as the emotional or mental state of workers and is sometimes labeled as morale (Glisson, 2007), job satisfaction (Hage & Aiken, 1967), stress (TCU Institute of Behavioral Research, 2005), or even burnout (Courtney et al., 2007; Ransford et al., 2009) in prior research. Here, we consider morale a predictor of perceived organizational attitudes and practices regarding prevention and evidence-based programming.

Prior research has found that low levels of morale can be a motivator for organizational change. In one study, higher levels of reported work stress related to the organization being more likely to undertake an organizational change effort after attending a training about the organizational change effort (Courtney et al., 2007). Similarly, higher levels of reported stress related to increased use of treatment manuals and support for a change to more integrated health services (Fuller et al., 2007). These studies were based on a theory that the need for change would be perceived to be strong, due to the high levels of stress, which would then be supportive of the implementation of an organizational change effort. However, some studies have found that high levels of work stress can have a negative effect on organizational change. For example, a study assessing the role of employee stress and commitment towards organizational change demonstrated that employees who are highly stressed are less likely to commit to organizational change interventions (Vakola & Nikolaou, 2005). Research in school settings has been mixed. One study has shown that higher levels of burnout related to lower levels of self-reported implementation of a new evidence-based program (Ransford et al., 2009); another study did not show a link between job satisfaction/burnout and implementation quality (Domitrovich et al., 2009). On the other hand, a supportive work environment has been found to positively influence the adoption of an organizational change effort: employees with supportive colleagues were better able to deal with stress during organizational change (Shaw, Fields, Thacker, & Fisher, 1993; Woodward et al., 1999). Others have found job satisfaction to be an important factor in understanding attitudes towards organizational change, reporting a positive relationship between job satisfaction and organizational change (Hage & Aiken, 1967).

In the current study, we propose that individual perceptions of morale and the organizational aggregate of morale will predict current attitudes and practices regarding prevention and evidence-based programming. Aggregating individual-level values/scales has been suggested as helpful in understanding the possible influence of the organizational context (Glisson, 2002). Examining morale as an aggregate (i.e., organizational-level) variable may help us accurately consider how the organizational context relates to individual-level outcomes, rather than relying solely on perceptions of individuals to predict their own outcomes. Morale as a construct describes the emotional atmosphere within the workplace, whereas openness to change, resources, clarity of communication, and openness of leadership describe management practices. Consequently, we hypothesize that the emotional context within which individuals work will affect individuals’ perceptions of management practices.

Summary

Prior theoretical and empirical research suggests that multiple aspects of an organization’s context are likely related to a number of factors, from their interest and ability to adopt new programming, to client outcomes. However, most of this research has been conducted in treatment-oriented settings that serve adults or adolescents, rather than prevention and health-promotion settings (Aarons & Palinkas, 2007; Broome et al., 2007; Courtney et al., 2007; Fuller et al., 2007; Hagedorn & Heideman, 2010; Saldana et al., 2007) or community positive youth development or educational settings (Ransford et al., 2009). A limited amount of the prior research has taken a more community-wide perspective by examining factors that associate with community readiness for change (Chilenski et al., 2007; Edwards et al., 2000; Feinberg, Greenberg, & Osgood, 2004), leaving how these findings generalize to community organizations that conduct prevention or positive youth development programs unknown.

The Current Study: Organizational Context of the Extension System

In the current study, we examined how aspects of the organizational context were related to current attitudes and practices regarding prevention and evidence-based programming within the Cooperative Extension System (CES). CES is a non-credit educational network within U.S. state and territory land-grant universities. Simply put, CES is the outreach function of land-grant institutions. This mandate requires land-grant universities to "extend" their resources, addressing public needs with college or university resources through non-formal, non-credit programs focused on agriculture, marine, family and consumer sciences, and 4-H Youth Development. Programming is implemented at the community level through approximately 2,900 Extension offices nationwide. Historically, the CES has carried out its mission to encourage healthy development of youth and families through research-based community educational programs (Molgaard, 1997). Although CES programs were initially implemented exclusively in rural areas, the CES now addresses almost every aspect of people’s lives regardless of where they reside (Hill, McGuire, Parker, & Sage, 2009), making it a national organizational network that administers positive youth development programs to youth of all ages across the country. Consequently, the goal of this paper is to understand possible indicators of the CES’s readiness to adopt a more prevention-oriented and evidence-based approach to its youth and family programming, which is typically more positive youth development focused.

The CES is an appropriate context for this study for several reasons (Spoth et al., in preparation). First, Family or Youth Development Educators typically see their work as promoting positive family and youth development. Second, decisions about youth and family programming are made at the community level, grounding implemented programs in community needs. Yet, decisions about programming at the community level are informed by state-level (i.e., organizational-level) professional development activities and the dissemination of new research knowledge from state-level CES faculty. Bringing research to practice is integrated within Extension’s mission, yet states vary considerably in the degree to which youth programs are grounded in a risk and protection (i.e., prevention) framework, and in the degree to which evidence-based programs are implemented in communities (Hill et al., 2009). Prior research on this setting has found differences between CES regions in readiness to adopt prevention and evidence-based programming (Spoth et al., in preparation). The current study extends prior research to examine possible reasons for these differences. Specifically, we expect that undertaking this research within this organization will help us understand how perceptions of an organization’s management practices and atmosphere relate to readiness to adopt prevention and evidence-based programming within an organization committed to positive youth development. Consequently, the results of this study inform the translational research goal of improving the dissemination of evidence-based prevention programs (Spoth et al., 2013). We expect that what we learn about how the organizational context relates to readiness can inform dissemination efforts of prevention and evidence-based programs in other positive youth development-focused community organizations, and assist our understanding of how prevention and evidence-based programs may be integrated into these settings.

In this paper, we investigated the following hypotheses. First, we expected that organizational-level morale (i.e., state-level aggregates of individual values) in the CES would significantly predict individual-level reports of current attitudes and practices of prevention and evidence-based programming. Second, we expected that perceptions of organizational management practices (clear communication, openness of leadership, organizational resources, and openness to change) and perceptions of morale would also significantly predict individual-level reports of current attitudes and practices of prevention and evidence-based programming. Moreover, we expected these associations to remain significant after accounting for important individual-level demographic characteristics, since prior research has found that characteristics such as educational attainment and organizational position level may be important predictors of the work environment and related outcomes (Aarons, 2004; Jones & James, 1979). Third, we examined the possibility that the influence of state-level morale on indicators of readiness is mediated by individual reports of the organizational management practices.

Method

Participants

The participating Universities’ Institutional Review Boards approved the study before participant recruitment began. Study participants included a nationwide sample of Cooperative Extension administrators, faculty, coordinators, specialists, educators, and assistants involved in youth and family programming. Out of 4,181 possible participants, 946 (22.6%) completed web surveys, a rate consistent with similar types of surveys (Hamilton, 2009).

In the current study, our primary analyses were limited to the 891 Extension personnel who provided full responses on the variables of interest. Forty-eight states and Washington DC were represented in our analytic sample. On average, participants had been in their current positions for 10.6 years (SD = 9.4), and their tenure with their state’s Extension system averaged 13.6 years (SD = 10.3). Ninety-five percent of the sample had full-time positions. The educational status of the sample was high: 20.2% had a college degree or less, 68.5% had a master’s degree or bachelor’s degree with additional coursework, and 11.2% had a terminal degree. About 76.8% were community-based educators whose primary responsibility was to deliver family and/or youth programs. About 6.5% of the sample worked at a slightly broader regional level within their state, and 16.8% worked at the state level. Regional- and state-level positions tend to be more administrative in nature.

Procedures

Sample selection

In 2009, Extension personnel from each state and Washington DC were invited to participate in a web-based survey to assess their attitudes and knowledge regarding prevention, evidence-based programs, and collaboration and partnership activities. The sampling frame was created by compiling lists of CES youth and family programming personnel from all 50 states’ (and Washington DC’s) web-based employee rosters. Once rosters were collected (N=5,072), they were examined for completeness and size. To balance the sample, employee names were randomly selected from state systems that had over 100 names on their roster so that there was a maximum of 100 potential participants from each state. This process resulted in a final sampling frame that included 4,181 potential participants.
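The capping step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `cap_roster_per_state`, the fixed seed, and the two example rosters are hypothetical and do not reproduce the study's actual selection procedure or data.

```python
import random


def cap_roster_per_state(rosters, cap=100, seed=0):
    """Return a sampling frame with at most `cap` randomly chosen names per state.

    `rosters` maps a state name to its list of employee names (hypothetical data).
    States at or below the cap keep their full roster.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    frame = {}
    for state, names in rosters.items():
        if len(names) > cap:
            # Simple random sample without replacement
            frame[state] = rng.sample(names, cap)
        else:
            frame[state] = list(names)
    return frame


# Hypothetical rosters: one large state system and one small one
rosters = {
    "State A": [f"A-{i}" for i in range(250)],
    "State B": [f"B-{i}" for i in range(40)],
}
frame = cap_roster_per_state(rosters)
sizes = {state: len(names) for state, names in frame.items()}
print(sizes)
```

Capping large rosters in this way keeps any single state system from dominating the frame, which is the balancing goal the procedure describes.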

Recruitment

After the sampling frame was created, participants were first recruited through a series of letters to state and regional Extension administrators, which asked them to communicate support for participating in the confidential survey to all CES faculty and staff statewide. State Extension Directors were asked to send a notification letter to their staff about the survey before the survey invitation emails were sent. Participants were officially invited to take the survey via an email that came directly from data collection staff. This email included a consent letter, a survey link, and an individual access code. Surveys and reminders were sent to all potential participants over a 12-week period in fall 2009.

Incentives

A $2,000 incentive was offered to the one small, one medium, and one large-sized system that had the highest response rate within its size category. The small, medium, and large categories were created by ranking participating states by the number of employees and then dividing the states into three equally sized categories. In addition to the state-level incentive, one $500 award for professional development was offered to one randomly selected respondent within each state’s Extension System.

Measures

See Table 1 for a list of each independent and dependent variable involved in our analyses, including their items and sources. Our independent variables, which described the organization’s management practices and atmosphere, were assessed with five separate self-report indices: openness to change, openness of leadership, morale, clear communication, and perceptions of organizational resources. Dependent variables assessed attitudes and practices regarding prevention and evidence-based programming: focus on prevention, prevention program implementation, commitment to evidence-based programs, and commitment to evaluation. In addition, self-reports of the following covariates were included in all analyses: number of years with Cooperative Extension, level of education (i.e., college or less, Master’s degree or some post-college, or terminal degree), and level of reach (i.e., county, region, or state).

Table 1.

Self-Report Measures of Independent and Dependent Variables

| Scale name | Items | Source |
|---|---|---|
| Independent Variables | In the following series of questions we will ask about your experience working in your organization | |
| Openness to change* | | Organizational Readiness for Change: Change scale; Institute of Behavioral Research, 2005 |
| Openness of leadership* | | 1 item from Organizational Readiness for Change: Communication scale; Institute of Behavioral Research, 2005 |
| Morale* | | Theme endorsed by Glisson, 2007; Similar to Organizational Readiness for Change Stress scale; Institute of Behavioral Research, 2005 |
| Communication* | | Organizational Readiness for Change: Communication & Mission scales; Institute of Behavioral Research, 2005 |
| Organizational resources* | The following statements ask your opinion about training and staff development opportunities | Organizational Readiness for Change: Resources scale; Institute of Behavioral Research, 2005 |
| Dependent Variables | | |
| Focus on prevention+ | How important are each of the following areas of prevention for the communities in your state? | Expand ideas on Community Efforts theme from Tri-Ethnic Center’s Community Readiness interview procedure; Plested, Edwards & Jumper-Thurman, 2006 |
| Prevention program implementation* | Please state how much you agree or disagree with the following statements concerning family and youth programming | PROSPER: Workplace support for prevention scale; Chilenski, Greenberg & Feinberg, 2007 |
| Commitment to evidence-based programs (EBP)* | | Created by project researchers |
| Commitment to evaluation~ | | Expand on evaluation questions from: CYFAR Training & Development Survey |

Notes: Response options: * = (1) Strongly disagree to (5) Strongly agree; ~ = (1) Almost never to (5) Frequently; + = (1) Not important to (5) Very important.

Item created by project researchers

Analysis Plan

Data structure

The data had a two-level hierarchical structure: individuals (Level 1) were nested within states (Level 2). Moreover, the number of respondents within each state varied, creating an unbalanced sample, and the intraclass correlation coefficients (ICCs) for the dependent variables ranged from .02 to .10 (see Table 2). As a result, we estimated multilevel mixed models with random intercepts using PROC MIXED in SAS Version 9.2. Estimating a Level 2 random intercept accounts for the shared variance among participants within the same state; therefore, it is an appropriate and conservative analysis. Models were estimated with the restricted maximum likelihood (REML) estimator, as maximum likelihood tends to underestimate variances (Singer & Willett, 2003). In addition, because of both the theoretical considerations described earlier in the paper and the fact that the ICC for morale (.17) was markedly larger than the ICC for every other independent variable in this paper, we decided to test the association of a state-level morale variable with our dependent variables, in addition to testing the associations at the individual level.
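To make the ICC concrete, the sketch below estimates it from a one-way random-effects ANOVA that tolerates unequal group sizes. This is a swapped-in, simpler estimator chosen for illustration; the ICCs reported in this paper came from mixed models, and the ANOVA-based value need not match a REML estimate exactly. The function name `icc_oneway` and the morale scores are hypothetical.

```python
def icc_oneway(groups):
    """ICC from a one-way random-effects ANOVA; groups may differ in size.

    `groups` is a list of lists, one inner list of scores per state.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Between- and within-state sums of squares
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(sizes, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)

    # Effective group size, which adjusts for the unbalanced design
    n0 = (n_total - sum(n * n for n in sizes) / n_total) / (k - 1)

    # Between-state variance component, truncated at zero
    var_between = max((ms_between - ms_within) / n0, 0.0)
    return var_between / (var_between + ms_within)


# Hypothetical morale scores (1-5 scale) from three states of unequal size
morale_by_state = [
    [3.0, 3.5, 4.0, 3.5],
    [2.0, 2.5, 2.0],
    [4.0, 4.5, 3.5, 4.0, 4.5],
]
print(round(icc_oneway(morale_by_state), 3))
```

An ICC near zero means that knowing a respondent's state says little about their score, whereas larger values indicate that respondents in the same state answer more alike, which is what motivates the random intercept for state.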

Table 2.

Descriptive statistics for all study variables and correlations among measures of organizational context and dependent variables

| Measure | Mean (SD) | Reliability (ICC) | 1. | 2. | 3. | 4. | 5. | 6. | 7. | 8. |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Openness to change | 3.61 (0.70) | α = .74 (.04) | -- | | | | | | | |
| 2. Openness of leadership | 3.38 (0.84) | r = .46 (.07) | .653*** | -- | | | | | | |
| 3. Morale | 3.14 (0.89) | r = .54 (.17) | .537*** | .578*** | -- | | | | | |
| 4. Communication | 3.52 (0.77) | α = .81 (.08) | .590*** | .684*** | .545*** | -- | | | | |
| 5. Resources | 2.36 (0.87) | r = .52 (.05) | .236*** | .313*** | .415*** | .289*** | -- | | | |
| 6. Focus on prevention | 4.29 (0.65) | α = .83 (.02) | .085** | .063 | .010 | .087** | .023 | -- | | |
| 7. Prevention program implementation | 3.90 (0.76) | α = .81 (.09) | .311*** | .357*** | .256*** | .367*** | .137*** | .218*** | -- | |
| 8. Commitment to EBP | 3.64 (0.70) | α = .64 (.07) | .377*** | .433*** | .347*** | .471*** | .153*** | .195*** | .594*** | -- |
| 9. Commitment to evaluation | 2.86 (0.87) | α = .85 (.10) | .237*** | .290*** | .184*** | .304*** | .090** | .257*** | .420*** | .498*** |

Note: *p < .05; **p < .01; ***p < .001

Hypothesis testing

Hypothesis testing proceeded in a hierarchical fashion in order to best understand the independent and shared associations among the variables of interest. First, state-level morale was entered as a Level 2 predictor for each of our individual-level dependent variables. Second, individual-level demographic characteristics of years in Extension, education level, and level of position were added to each model. Third, the five individual-level independent variables were added to each model. Lastly, additional models drawing from Baron and Kenny (1986) were estimated because the first three steps suggested that cross-level mediation could be occurring (Krull & MacKinnon, 2001). Testing for cross-level mediation involved three additional analyses: (a) estimating multi-level regression models with a random intercept for state where state-level morale predicted each dependent variable; then (b) estimating multi-level regression models with a random intercept for state where state-level morale predicted each of the possible mediators; and finally, (c) using the Sobel test to test the significance of the mediation (Preacher & Leonardelli, 2012).
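As an illustration of the first step, the sketch below builds a state-level predictor by averaging individual morale scores within each state and attaching that average to every respondent. It assumes the state-level variable is a simple within-state mean, which the text does not state explicitly; the function name, field names, and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def add_state_means(records, group_key="state", value_key="morale",
                    out_key="state_morale"):
    """Return copies of the records with the group mean of value_key attached."""
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[group_key]].append(rec[value_key])
    group_means = {g: mean(vals) for g, vals in by_group.items()}
    # Each respondent receives the mean of his or her own state (Level 2 value).
    return [{**rec, out_key: group_means[rec[group_key]]} for rec in records]


# Hypothetical respondents from two states.
respondents = [
    {"state": "A", "morale": 2.0},
    {"state": "A", "morale": 4.0},
    {"state": "B", "morale": 5.0},
]
for row in add_state_means(respondents):
    print(row)
```

The aggregated score varies only between states, so in a random-intercept model it can explain Level 2 variance only, while the individual score remains available as a separate Level 1 predictor.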

Results

Preliminary analyses

Preliminary analyses were conducted to test for significant differences between the participants who had complete data available for analysis and those who did not have complete data and consequently were dropped from the sample. Multilevel mixed models with random intercepts using PROC MIXED in SAS Version 9.2 were used. Out of all of the scales and demographic characteristics, only one significant difference was found between the two groups: participants in this study tended to have been employed within their current CES about twice as long as those with incomplete data who were dropped from this study (M = 13.6 vs. M = 6.0, p = .0082).

Descriptive Data

Descriptive statistics for all independent and dependent variables are included in Table 2. Among independent variables, mean values suggest that participants disagreed slightly that they had enough resources to complete their jobs (M = 2.36, 1–5 scale), and that levels of morale (M = 3.14, 1–5 scale) and ratings of the openness of their leadership (M = 3.38, 1–5 scale) were close to the scale midpoint. Values of the other variables (i.e., openness to change and clear communication) suggest slightly more positive perceptions among respondents. Among dependent variables, participants reported a relatively high level of focus on prevention and a relatively low commitment to evaluation, with responses on the other variables (i.e., prevention program implementation and commitment to EBP) falling between these extremes.

Correlation analyses are presented in Table 2. Results suggest a relatively consistent pattern of significant correlations among the independent variables, among the dependent variables, and between each independent and each dependent variable. However, there are some exceptions: resources and focus on prevention have less consistent associations with the other variables. Hence, hypothesis testing proceeded by examining the contribution of each variable separately, rather than creating a global factor of the organizational context or a global factor for readiness for prevention and evidence-based programming.

Multilevel Models

Hypothesis 1: State-level morale

Results of the multi-level mixed models are presented in Table 3. In Model 1, each dependent variable was regressed on the state-level morale variable. Results suggest that state-level morale had a positive significant association with prevention program implementation (p=.0011), commitment to evidence-based practice (p<.0001), and commitment to evaluation (p=.0291), but not focus on prevention (p=.9782). In Model 2, we entered the three covariates, including years of experience in their CES, educational attainment, and level of reach (county, regional, and state), along with state-level morale. Results suggest an inconsistent pattern of relations among the covariates and dependent variables (see Table 3). Beyond the effects of the covariates, the significant positive effects of state-level morale were maintained for two variables: prevention program implementation (p=.0022) and commitment to evidence-based practice (p<.0001). However, the relation between state-level morale and commitment to evaluation dropped below our threshold for significance (p=.0507).

Table 3.

Results from Multi-Level Mixed Models Assessing Relations among Organizational Context and Dependent Variables

| | Focus on Prevention | | Prevention program implementation | | Commitment to EBP | | Commitment to Evaluation | |
|---|---|---|---|---|---|---|---|---|
| | Estimate | SE | Estimate | SE | Estimate | SE | Estimate | SE |
| Model 1 | | | | | | | | |
| Intercept | 4.29*** | 0.20 | 2.87*** | 0.29 | 2.48*** | 0.23 | 2.06*** | 0.36 |
| L2: State-level Morale | 0.00 | 0.06 | 0.32** | 0.09 | 0.37*** | 0.07 | 0.25* | 0.11 |
| Model 2 | | | | | | | | |
| Intercept | 4.41*** | 0.20 | 3.26*** | 0.30 | 2.56*** | 0.24 | 2.38*** | 0.37 |
| L2: State-level Morale | −0.02 | 0.06 | 0.30** | 0.09 | 0.35*** | 0.07 | 0.23 | 0.11 |
| Years of experience | 0.01* | 0.00 | 0.00 | 0.00 | −0.01* | 0.00 | 0.00 | 0.00 |
| Education: College or less | 0.01 | 0.09 | −0.23* | 0.11 | 0.16 | 0.10 | 0.00 | 0.12 |
| Education: College Plus / Master’s | −0.01 | 0.08 | −0.21* | 0.09 | 0.07 | 0.08 | −0.06 | 0.10 |
| Education: Terminal degree | 0.00 | | 0.00 | | 0.00 | | 0.00 | |
| Level: County | −0.16* | 0.07 | −0.17* | 0.08 | −0.05 | 0.07 | −0.20* | 0.09 |
| Level: Regional | 0.01 | 0.11 | 0.02 | 0.12 | 0.14 | 0.11 | −0.03 | 0.14 |
| Level: State | 0.00 | | 0.00 | | 0.00 | | 0.00 | |
| Model 3 | | | | | | | | |
| Intercept | 4.08*** | 0.22 | 2.35*** | 0.29 | 1.51*** | 0.24 | 1.51*** | 0.38 |
| L2: State-level Morale | −0.04 | 0.06 | 0.11 | 0.09 | 0.12 | 0.07 | 0.06 | 0.11 |
| L2: Pseudo R2 | .004 | | .163 | | .278 | | .089 | |
| Years of experience | 0.01* | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Education: College or less | −0.01 | 0.09 | −0.32** | 0.10 | 0.05 | 0.09 | −0.08 | 0.12 |
| Education: College Plus / Master’s | −0.02 | 0.08 | −0.22** | 0.08 | 0.05 | 0.07 | −0.08 | 0.10 |
| Education: Terminal degree | 0.00 | | 0.00 | | 0.00 | | 0.00 | |
| Level: County | −0.14* | 0.07 | −0.09 | 0.07 | 0.04 | 0.06 | −0.13 | 0.09 |
| Level: Regional | 0.01 | 0.11 | 0.03 | 0.11 | 0.15 | 0.10 | −0.03 | 0.14 |
| Level: State | 0.00 | | 0.00 | | 0.00 | | 0.00 | |
| L1: Openness to change | 0.08 | 0.04 | 0.10* | 0.05 | 0.10* | 0.04 | 0.06 | 0.05 |
| L1: Open. of leadership | −0.01 | 0.04 | 0.14*** | 0.04 | 0.13*** | 0.04 | 0.16** | 0.05 |
| L1: Morale | −0.05 | 0.04 | −0.00 | 0.04 | 0.04 | 0.03 | −0.03 | 0.04 |
| L1: Communication | 0.06 | 0.04 | 0.18*** | 0.04 | 0.26*** | 0.04 | 0.18*** | 0.05 |
| L1: Resources | 0.01 | 0.03 | 0.00 | 0.03 | −0.03 | 0.03 | −0.01 | 0.03 |
| L1: Pseudo R2 | .464 | | .410 | | .563 | | .154 | |

Note: * p < .05; ** p < .01; *** p < .001

Hypothesis 2: Individual-level measures of organizational context

In Model 3, we regressed each dependent variable on the covariates, state-level morale, and our five individual-level independent variables: perceptions of openness to change, openness of leadership, morale, communication, and resources (see Table 3). With both the covariates and individual-level independent variables entered in the equation, state-level morale was no longer a significant predictor of any of the dependent variables; the estimated variance accounted for at Level 2 (pseudo-R2; Snijders & Bosker, 2003) ranged from .00 to .24 in the four models. At the individual level, perceptions of openness to change were significantly associated with greater prevention program implementation (p=.0288) and commitment to evidence-based practice (p=.0322). Furthermore, openness of leadership and communication were significantly related to greater prevention program implementation (p=.0008 and p<.0001 respectively), commitment to evidence-based practice (p=.0006 and p<.0001 respectively), and commitment to evaluation (p=.0012 and p=.0004 respectively). The estimated variance accounted for at Level 1 (pseudo-R2; Snijders & Bosker, 2003) ranged from .15 to .56. These effect sizes for the significant models are similar to, or even higher than, those found in prior research (Aarons & Sawitzky, 2006; Broome et al., 2007; Greener et al., 2007).

Mediation analyses

After observing that the significant associations of state-level morale with prevention program implementation, commitment to evidence-based programs, and commitment to evaluation disappeared once individual-level predictors were added to the model, we tested whether a cross-level mediation was occurring, such that state-level morale predicted individual-level measures of the organizational context, which in turn predicted those three dependent variables. Results of additional multi-level mixed models showed that state-level morale significantly predicted each of the five possible mediators: openness to change (B = 0.3795, SE = 0.05, p < .0001); openness of leadership (B = 0.5402, SE = 0.06, p < .0001); morale (B = 1.000, SE = 0.06, p < .0001); communication (B = 0.4573, SE = 0.07, p < .0001); and resources (B = 0.4023, SE = 0.08, p < .0001). Moreover, results from the Sobel test (Preacher & Leonardelli, 2012), which combined information from these models and our final multi-level mixed models (Table 3, Model 3), showed that the influence of state-level morale on prevention program implementation and commitment to evidence-based programs was significantly mediated by openness to change, openness of leadership, and communication. The influence of state-level morale on commitment to evaluation was significantly mediated only by openness of leadership and communication. Clear communication seems to be an especially strong mediator of state-level morale. Test statistics, standard errors, and significance values of these final tests are located in Table 4.
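For readers who want to trace the arithmetic, the following Python sketch implements the standard first-order Sobel statistic, z = ab / sqrt(b²·SEa² + a²·SEb²), where a is the path from the predictor to the mediator and b is the path from the mediator to the outcome. The function name is hypothetical, and the inputs are the rounded coefficients printed in this paper, so results will only approximate the values in Table 4, which were presumably computed from unrounded estimates.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Return (z, se_indirect, p) for the indirect effect a * b.

    a, se_a: predictor -> mediator coefficient and its standard error
    b, se_b: mediator -> outcome coefficient and its standard error
    """
    se_indirect = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = (a * b) / se_indirect
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, se_indirect, p


# State-level morale -> communication (a), and
# communication -> commitment to EBP (b, Table 3, Model 3).
z, se, p = sobel_test(a=0.4573, se_a=0.07, b=0.26, se_b=0.04)
print(f"z = {z:.3f}, SE = {se:.3f}, p = {p:.6f}")
```

With these rounded inputs the statistic comes out at roughly 4.6 with a standard error of about 0.026, close to the 4.590 and 0.026 reported for communication and commitment to EBP in Table 4.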

Table 4.

Results of testing the significance of the indirect effect, in which (IV) state-level morale predicts (M) individual-level perceptions of the organizational context, which then predict attitudes and practices regarding prevention and evidence-based programming.

| | Prevention program implementation | | | Commitment to EBP | | | Commitment to Evaluation | | |
|---|---|---|---|---|---|---|---|---|---|
| Model 3: Significance of Indirect Effect | Test Statistic | SE | p-value | Test Statistic | SE | p-value | Test Statistic | SE | p-value |
| L1: Openness to change | 2.090 | 0.018 | 0.037* | 2.051 | 0.016 | 0.040* | 1.126 | 0.021 | 0.260 |
| L1: Open. of leadership | 3.126 | 0.025 | 0.002* | 3.200 | 0.022 | 0.001* | 3.033 | 0.029 | 0.002* |
| L1: Morale | −0.009 | 0.038 | 0.993 | 1.209 | 0.033 | 0.227 | −0.562 | 0.045 | 0.574 |
| L1: Communication | 3.413 | 0.024 | <0.001* | 4.590 | 0.026 | <0.001* | 3.108 | 0.027 | 0.002* |
| L1: Resources | 0.147 | 0.012 | 0.883 | −1.113 | 0.011 | 0.266 | −0.398 | 0.014 | 0.691 |

Note: * significant

Discussion

This study examined how the organizational context associates with current attitudes and practices regarding prevention and evidence-based programming, as attitudes and practices are key indicators of an organization’s readiness to adopt prevention and evidence-based programming (Aarons & Palinkas, 2007; Edwards et al., 2000; Feinberg et al., 2007; Greenberg et al., 2007; Hagedorn & Heideman, 2010; Spoth et al., 2007; Spoth et al., 2011). Based on multi-level mixed models, results indicate that organizational characteristics distinct from program delivery may affect an organization’s readiness to adopt and implement new prevention and evidence-based youth programs, thereby limiting the potential public health impact of evidence-based programs (Spoth et al., 2013). The management practices of openness to change, openness of leadership, and clarity of communication were the strongest predictors identified within this study. An organization’s morale was also found to be a strong predictor of an organization’s readiness.

The Importance of Openness of Leadership, Communication, and Openness to Change

The management practices measured in this study (perceptions of openness of leadership, the clarity of communication, and openness to change) most strongly and consistently predicted attitudes and practices regarding prevention and evidence-based programming. Specifically, these management practices were significant predictors of the perceived organizational support of prevention, commitment to evidence-based programming, and commitment to evaluation (openness of leadership and communication only) above and beyond the influence of perceptions of resources, individually reported morale, and demographic characteristics. These findings extend prior research in treatment-focused and school settings to community settings that support positive youth development efforts. Within treatment-focused settings, for example, greater openness of leadership and/or clear communication related to improved use of new treatment manuals, more support of an organizational change effort related to providing integrated health services, or improvements in client outcomes (Fuller et al., 2007; Lehman et al., 2002; Payne et al., 2006; Saldana et al., 2007). Research on school settings has focused on broader measures of organizational context, which often include different subcomponents than are studied here (Domitrovich et al., 2009; Gottfredson & Gottfredson, 2002; Payne & Eckert, 2010).

Whereas these and other constructs have been investigated as part of a broader organizational context factor in treatment-focused and sometimes school settings (Glisson, 2002; Payne & Eckert, 2010; Saldana et al., 2007), we examined their unique contributions in the current study. The two management practices most descriptive of transparent leadership, or in other words, the open sharing of ideas and rationale for decisions, were the strongest predictors of organizational readiness to adopt prevention and evidence-based youth and family programs. In some ways, this is not surprising, as transparency of goals, processes, and outcomes is embedded within the theory of evidence-based programs. At their core, evidence-based programs are explicit and open regarding the outcomes they will and will not achieve; they are typically also explicit about how outcomes are achieved. Evidence-based programs try to open what used to be considered the “black box” of successful prevention and intervention programs (Harachi, Abbott, Catalano, Haggerty, & Fleming, 1999). Given the cross-sectional nature of this study, it is possible that organizations with these characteristics are more likely to adopt a prevention and evidence-based approach to programming; it is also possible that leaders in organizations that start to successfully move in this direction begin to be perceived as more open and clear in their communication. Longitudinal research is needed to better address the causal sequences of relevance.

Openness to change was the third strongest predictor of readiness for prevention and evidence-based programming (Lehman et al., 2002). In other words, having an organization that was perceived to be innovative, or willing to try new things and take risks, was important for readiness to adopt prevention and evidence-based programming, as found in prior research in treatment-focused settings (Saldana et al., 2007). Indeed, adopting a prevention and evidence-based strategy for programming is an example of integrating an organizational change effort and involves risks. However, openness to change was not the most important management practice for the readiness indicators studied; its associations with the dependent variables were not as strong, and it related to only two of the four dependent variables, whereas leadership and communication each related to three. The correlations among openness to change, communication, and openness of leadership were strong; when openness of leadership was high, clear communication and perceptions of openness to change also tended to be high. Therefore, openness to change is related to prevention program implementation, commitment to EBP, and the use of evaluation practices, but these associations weaken after communication and leadership characteristics are controlled.

Given prior work that examined the specific links between openness to change, the implementation quality of a new evidence-based program six months after implementation began, and client outcomes (Hagedorn & Heideman, 2010), it is possible that there is a developmental process that connects these management practices to the adoption, implementation, and sustainability process. Perhaps clear communication and openness of leadership are most important early in the adoption cycle, whereas the influence of openness to change increases in importance for ongoing implementation quality and the sustainability of a change effort such as the adoption of a prevention and EBP approach to programming. Longitudinal research is needed to further explore the developmental process among these constructs.

The Importance of Organizational Morale

Prior research has found that, at times, high levels of morale associate with an organization’s ability to successfully adopt an organizational change strategy (Hage & Aiken, 1967; Shaw et al., 1993; Vakola & Nikolaou, 2005; Woodward et al., 1999), whereas other studies have found that low levels associate with adoption (Courtney et al., 2007; Fuller et al., 2007). Prior intervention research has even noted lower levels of stress in organizations that have successfully implemented a new EBP with a fidelity monitoring protocol, compared to treatment-as-usual programs with a new fidelity monitoring protocol (Aarons, Fettes, Flores, & Sommerfeld, 2009). In the current study, we found that individual-level reports of morale had small to moderate significant positive correlations with three of our four dependent variables, and that these associations were no longer significant after accounting for multiple constructs in a multivariate model. In addition, individual-level reports of morale had the highest level of shared variance at the state level compared to all other independent variables in this study, indicating a noteworthy amount of agreement among individuals within each state.

Aggregated state-level reports of morale had significant positive associations with three of the four dependent variables in the study; however, these associations were no longer significant after accounting for perceptions of communication and leadership. Mediation tests suggest that the influence of state-level morale on readiness was being mediated most strongly by individual-level perceptions of communication and leadership. That is, higher levels of organizational morale associated with more positive individual reports of leadership openness and communication, which in turn associated with more perceived support of prevention and commitment to evidence-based programs. Longitudinal data are needed to better clarify the ordering of these variables, but the combination of these results suggests that it is possible that morale may operate differently when aggregated at the organizational-level, compared to when it is considered only at the individual-level, and that the emotional atmosphere within the organization may affect individual perceptions of management practices.

An organizational-level measure of morale may tap into an underlying organizational property that affects how individuals perceive leadership, as well as practices and attitudes about programming, thereby affecting objective indicators of organizational functioning and productivity. Organizational morale is likely something that is quickly transferred to new and returning employees through a high proportion of verbal and nonverbal interactions. In this way, morale becomes a property of the organization that is continually reinforced, which may make it somewhat resistant to change.

Implications for Prevention and Intervention

The findings of the current study suggest several implications for prevention and intervention. There seem to be organizational management practices that may affect an organization’s readiness to adopt prevention and evidence-based programs. If these management practices are not addressed, they could become, and perhaps have already been, real barriers to prevention and evidence-based programs achieving impact generally (Damschroder & Hagedorn, 2011), and perhaps even more importantly a real public health impact (Spoth et al., 2013); prior research is beginning to make this link (Halgunseth et al., 2012; Hurlburt et al., 2014). Consequently, program developers, prevention researchers, program disseminators, and technical assistance providers should attend to these management practices when working with organizations that are considering adopting prevention and evidence-based programs. Adding items that assess an organization’s openness of leadership, clarity of communication, openness to change, and organizational morale to readiness assessment instruments and processes might very well provide practical direction for capacity to improve successful adoption, or even implementation, and sustainability.

These findings suggest that leaders of community-based youth-serving organizations should be open in their leadership style, meaning that it may be a good idea to truly consider alternative viewpoints from staff at all levels before making decisions and to keep an open mind about organizational change efforts. In addition, clear communication across the organization about the rationale for decisions is also indicated, given that both efforts are likely related to readiness to adopt prevention and evidence-based programming. Organizational leadership that fails to openly communicate about relevant concerns, whether it be hiding true motivations or their vision, or something else, may be counterproductive and hinder an organization’s ability to successfully integrate a change effort.

Morale is another aspect of the organizational context that could hinder the successful adoption of prevention and evidence-based programs. It describes the emotional atmosphere within the organization. It may be a crucial link that affects the perceptions of the openness of leadership, communication practices, and even perceived openness to change of an organization. More research outlining the interconnectedness of these constructs could be useful. Perhaps there are some strategies that could be integrated within organizations to promote morale and ultimately the organizational developmental process. However, it is quite likely that the interconnections among these and other constructs not assessed here are complicated and intertwined.

Ideally, training on the important issues identified in this study could be integrated into nonprofit leadership or educational leadership development programs and professional development curricula. In addition, interventions that have been developed to improve organizational context in treatment-oriented mental health settings may be appropriate, with some adaptation, for youth-serving prevention and positive youth development organizations (Glisson, 2002).

Limitations

The findings of the current study need to be considered along with a number of study limitations. First, these data were collected in the fall of 2009, shortly after the economic downturn, when budgets for social services, youth-serving organizations, community nonprofits, schools, and the Cooperative Extension System were suddenly decreased. The generalization of these findings to other organizations and times may be somewhat limited by that historical event. However, the budgets of these organizations have yet to fully recover, even as gains have been made in the larger economy. Because of that, it is likely that the same associations would be found today and that these findings remain relevant.

A second limitation is that these data were collected within one particular organizational setting, the CES, which is affiliated with each state-based land grant university in the United States. The CES is a sizeable provider of positive youth development and family skills-building programs in the United States. Drawing our sample from this organizational network made it relatively easy to collect a nationwide sample of positive youth development program providers. For that reason, these findings are likely to be useful for understanding important organizational management practices in non-treatment-oriented and non-school settings. However, it is possible that there are limitations due to features unique to this setting. Indeed, CES is directly linked to universities that may provide more capacity for ongoing problem-solving and implementation quality, and over the last 15 years CES has been progressing toward using more evidence-based programs. Limited prior research has found some differences in levels of readiness among different youth-serving systems (R. Spoth et al., in preparation). As a result, this paper examines more carefully possible reasons for differences in readiness.

As mentioned previously in this paper, these analyses rely on cross-sectional data. It is possible that the independent variables used in this paper are malleable and dependent on other factors not studied here. Longitudinal data are needed to better understand the interconnections and especially the ordering of these constructs. Similarly, this study did not include an actual implementation attempt. Longitudinal data and data from an intervention study will better inform implications for prevention and intervention. Lastly, though we had a sizeable sample and the response rate to this survey was consistent with previous web-based surveys, it was low and likely not fully representative of the targeted population. The sample drew from 48 of the 50 states and the District of Columbia, yet some states had stronger participation rates than others, which could have led to some sample selection bias. The response rate, though typical for a nationally targeted web-based survey of this size (Hamilton, 2009), is also a limiting factor, and preliminary analyses show there may be some selection bias, as participants in this study tended to be employed within their current CES twice as long as nonparticipants. Consequently, some selection effects among participants may have biased our results. Nevertheless, the strengths of this study, in terms of a national reach that extended investigation of these constructs to non-school and non-treatment-oriented youth-serving organizations, and the depth of the investigated issues, also should be considered in this context.

Conclusion

The results of this study indicate that management practices distinct from the program selection and implementation process may affect successful adoption of prevention and EBP in youth-serving organizations focused on positive youth development. Management practices found to be critical were: openness/open-mindedness of leadership, clarity of communication, perceived openness to change, and organizational morale. Addressing these practices in nonprofit and educational leadership development programs may be useful. It may also be worthwhile to adapt interventions that have been shown to positively impact the organizational context of treatment-oriented settings (Glisson, 2002). Attending to these translational issues is likely to improve the ability of prevention and evidence-based programs to make a true public health impact.

Figure 1.

Conceptual diagram showing the hypothesized associations among the constructs of interest

Highlights

- We examine how organizational management practices and the emotional context relate to indicators of readiness to implement prevention and evidence-based programs

- Clear communication and openness of leadership were most important

- Organizational-level morale was also an important distal predictor of readiness

Acknowledgements

This work was supported by the following grants: DA13709 National Institute on Drug Abuse; DP002279 Centers for Disease Control and Prevention; DA028879 National Institute on Drug Abuse. Data collection was funded by the Annie E. Casey Foundation.

Biographies

Sarah M. Chilenski, PhD is a Research Associate at the Prevention Research Center at the Pennsylvania State University. Her primary interest is examining how communities, schools, and universities can collaborate in the pursuit of quality youth prevention and health promotion programming. To this end, her focus includes researching the association of community characteristics with adolescent outcomes, the predictors and processes of community prevention team functioning and prevention systems. She frequently employs multiple methods in her research.

Jonathan R. Olson, PhD is on the faculty at The Pennsylvania State University. His research focuses on predictors of internalizing and externalizing outcomes among young people, positive youth development, and prevention programming for youth and families.

Jill A. Schulte, M.Ed., is an Implementation Specialist for the Clearinghouse for Military Family Readiness at The Pennsylvania State University. At the Clearinghouse, Jill provides proactive technical assistance around issues of implementation, evaluation, and sustainability. Additionally, she evaluates current research, programs, and best practices to interpret and synthesize findings for professionals working with military families.

Daniel F. Perkins, PhD is Professor of Family and Youth Resiliency and Policy at the Pennsylvania State University and is Director of the Penn State Clearinghouse for Military Family Readiness. Currently, Dr. Perkins is examining the transitioning of evidence-based programs and practices to their large-scale expansion into real-world settings. The Clearinghouse is an applied research center with a mission of fostering and supporting interdisciplinary applied research and evaluation, translational and implementation science, and outreach efforts that advance the health and well-being of Military service members and their families.

Richard Spoth, PhD is the F. Wendell Miller Senior Prevention Scientist and the Director of the Partnerships in Prevention Science Institute at Iowa State University. He provides oversight for an interrelated set of projects addressing a range of research questions on prevention program engagement, program effectiveness, culturally-competent programming, and dissemination of evidence-based programs through community-university partnerships. Among his NIH-funded projects, Dr. Spoth has received multiple awards for his work from the National Institute on Drug Abuse and The Society for Prevention Research.

Footnotes

We use the phrase "prevention and evidence-based programming" throughout this paper in order to be consistent with the measures that were included in the study. We acknowledge that not all prevention programs are evidence-based, and not all evidence-based programs are prevention programs.

References

- Aarons G. Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research. 2004;6(2):61–74. doi: 10.1023/b:mhsr.0000024351.12294.65.

- Aarons GA, Fettes DL, Flores LE, Sommerfeld DH. Evidence-based practice implementation and staff emotional exhaustion in children's services. Behaviour Research and Therapy. 2009;47(11):954–960. doi: 10.1016/j.brat.2009.07.006.

- Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: Service provider perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2007;34(4):411–419. doi: 10.1007/s10488-007-0121-3.

- Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services. 2006;3(1):61–72. doi: 10.1037/1541-1559.3.1.61.

- Armenakis AA, Harris SG, Mossholder KW. Creating Readiness for Organizational Change. Human Relations. 1993;46(6):681–703.

- Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology. 1986;51:1173–1182. doi: 10.1037//0022-3514.51.6.1173.

- Becker KD, Domitrovich CE. The Conceptualization, Integration, and Support of Evidence-Based Interventions in the Schools. School Psychology Review. 2011;40(4):582–589.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):149–158. doi: 10.1016/j.jsat.2006.12.030.

- Bumbarger B, Campbell E. A State Agency–University Partnership for Translational Research and the Dissemination of Evidence-Based Prevention and Intervention. Administration and Policy in Mental Health and Mental Health Services Research. 2011:1–10. doi: 10.1007/s10488-011-0372-x.

- Center for Mental Health in Schools at UCLA. Bringing New Prototypes into Practice: Dissemination, Implementation, and Facilitating Transformation: School Mental Health Project. UCLA: Dept. of Psychology; 2014.

- Chilenski SM, Bumbarger BK, Kyler S, Greenberg MT. Reducing Youth Violence and Delinquency in Pennsylvania: PCCD's Research-Based Programs Initiative. 2007:1–69.

- Chilenski SM, Greenberg MT, Feinberg ME. Community readiness as a multidimensional construct. Journal of Community Psychology. 2007;35(3):347–365. doi: 10.1002/jcop.20152.

- Coalition for Evidence-Based Policy. CBO/OMB Budget Scoring Guidance Could Greatly Accelerate Development of Rigorous Evidence About "What Works" To Achieve Major Budget Savings. Washington, DC: Coalition for Evidence-Based Policy; 2011. p. 3.

- Courtney KO, Joe GW, Rowan-Szal GA, Simpson DD. Using organizational assessment as a tool for program change. Journal of Substance Abuse Treatment. 2007;33(2):131–137. doi: 10.1016/j.jsat.2006.12.024.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- Damschroder LJ, Hagedorn HJ. A Guiding Framework and Approach for Implementation Research in Substance Use Disorders Treatment. Psychology of Addictive Behaviors. 2011;25(2):194–205. doi: 10.1037/a0022284.

- Department of Health and Human Services. Administration on Aging: Evidence-based Disease and Disability Prevention Program. 2013a Dec 31; 2012 Retrieved November 27, 2013, from http://www.aoa.gov/AoA_programs/HPW/Evidence_Based/index.aspx.

- Department of Health and Human Services. Office of Adolescent Health: Teen Pregnancy Prevention. 2013b Jul 19; 2013 Retrieved November 27, 2013, from http://www.hhs.gov/ash/oah/oah-initiatives/tpp/grantees/

- Domitrovich CE, Bradshaw CP, Poduska JM, Hoagwood K, Buckley JA, Olin S, Ialongo NS. Maximizing the implementation quality of evidence-based preventive interventions in schools: A conceptual framework. Advances in School Mental Health Promotion. 2008;1(3):6. doi: 10.1080/1754730x.2008.9715730.

- Domitrovich CE, Gest SD, Gill S, Jones D, DeRousie RS. Individual Factors Associated With Professional Development Training Outcomes of the Head Start REDI Program. Early Education and Development. 2009;20(3):402–430. doi: 10.1080/10409280802680854.

- Edwards RW, Jumper-Thurman P, Plested BA, Oetting ER, Swanson L. Community readiness: Research to practice. Journal of Community Psychology. 2000;28(3):291–307.

- Feinberg ME, Chilenski SM, Greenberg MT, Spoth RL, Redmond C. Community and team member factors that influence the operations phase of local prevention teams: The PROSPER project. Prevention Science. 2007;8(3):214–226. doi: 10.1007/s11121-007-0069-2.

- Feinberg ME, Greenberg MT, Osgood DW. Readiness, functioning, and perceived effectiveness in community prevention coalitions: A study of communities that care. American Journal of Community Psychology. 2004;33(3–4):163–176. doi: 10.1023/b:ajcp.0000027003.75394.2b.

- Finney JW, Moos RH. Environmental assessment and evaluation research: Examples from mental health and substance abuse programs. Evaluation and Program Planning. 1984;7(2):151–167. doi: 10.1016/0149-7189(84)90041-7.

- Fuller BE, Rieckmann T, Nunes EV, Miller M, Arfken C, Edmundson E, McCarty D. Organizational readiness for change and opinions toward treatment innovations. Journal of Substance Abuse Treatment. 2007;33(2):183–192. doi: 10.1016/j.jsat.2006.12.026.

- Glisson C. The organizational context of children's mental health services. Clinical Child and Family Psychology Review. 2002;5(4):233–253. doi: 10.1023/a:1020972906177.

- Glisson C. Assessing and changing organizational culture and climate for effective services. Research on Social Work Practice. 2007;17(6):736–747.

- Gottfredson DC, Gottfredson GD. Quality of school-based prevention programs: Results from a national survey. Journal of Research in Crime and Delinquency. 2002;39(1):3–35.

- Greenberg MT, Feinberg ME, Meyer-Chilenski S, Spoth RL, Redmond C. Community and team member factors that influence the early phase functioning of community prevention teams: The PROSPER project. Journal of Primary Prevention. 2007;28(6):485–504. doi: 10.1007/s10935-007-0116-6.

- Greener JM, Joe GW, Simpson DD, Rowan-Szal GA, Lehman WEK. Influence of organizational functioning on client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):139–147. doi: 10.1016/j.jsat.2006.12.025.

- Hage J, Aiken M. Program change and organizational properties: A comparative analysis. American Journal of Sociology. 1967;72(5):503–519. doi: 10.1086/224380.

- Hagedorn HJ, Heideman PW. The relationship between baseline Organizational Readiness to Change Assessment subscale scores and implementation of hepatitis prevention services in substance use disorders treatment clinics: a case study. Implementation Science. 2010;5:46. doi: 10.1186/1748-5908-5-46.

- Halgunseth L, Carmack C, Childs S, Caldwell L, Craig A, Smith E. Using the Interactive Systems Framework in Understanding the Relation Between General Program Capacity and Implementation in Afterschool Settings. American Journal of Community Psychology. 2012;50(3–4):311–320. doi: 10.1007/s10464-012-9500-3.

- Hallfors DD, Pankratz M, Hartman S. Does federal policy support the use of scientific evidence in school-based prevention programs? Prevention Science. 2007;8(1):75–81. doi: 10.1007/s11121-006-0058-x.

- Hamilton MB. Online Survey Response Rates and Times: Background and Guidance for Industry. 2009 http://www.supersurvey.com/papers/supersurvey_white_paper_response_rates.pdf.

- Harachi TW, Abbott RD, Catalano RF, Haggerty KP, Fleming CB. Opening the black box: Using process evaluation measures to assess implementation and theory building. American Journal of Community Psychology. 1999;27(5):711–731. doi: 10.1023/A:1022194005511.

- Haskins R, Baron J. Part 6: The Obama Administration's evidence-based social policy initiatives: An overview. (n.d.) http://www.brookings.edu/~/media/research/files/articles/2011/4/obama%20social%20policy%20haskins/04_obama_social_policy_haskins.pdf.

- Hill LG, McGuire JK, Parker LA, Sage RA. 4-H Healthy Living Literature Review and Recommendations for Program Planning and Evaluation: National 4-H Healthy Living Task Force. 2009.

- Hill LG, Parker LA. Extension as a Delivery System for Prevention Programming: Capacity, Barriers, and Opportunities. Journal of Extension. 2005;43(1) http://www.joe.org/joe/2005february/a1.php.

- Hurlburt M, Aarons GA, Fettes D, Willging C, Gunderson L, Chaffin MJ. Interagency Collaborative Team model for capacity building to scale-up evidence-based practice. Children and Youth Services Review. 2014;39:160–168. doi: 10.1016/j.childyouth.2013.10.005.

- Jones AP, James LR. Psychological climate: Dimensions and relationships of individual and aggregated work environment perceptions. Organizational Behavior and Human Performance. 1979;23(2):201–250.

- Krull JL, MacKinnon DP. Multilevel modeling of individual and group level mediated effects. Multivariate Behavioral Research. 2001;36:249–277. doi: 10.1207/S15327906MBR3602_06.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22(4):197–209. doi: 10.1016/s0740-5472(02)00233-7.

- Molgaard VK. The extension service as key mechanism for research and services delivery for prevention of mental health disorders in rural areas. American Journal of Community Psychology. 1997;25(4):515–544. doi: 10.1023/a:1024611706598.

- Moos RH, Moos BS. The Staff Workplace and the Quality and Outcome of Substance Abuse Treatment. Journal of Studies on Alcohol and Drugs. 1998;59(1):43–51. doi: 10.15288/jsa.1998.59.43.

- National Research Council and Institute of Medicine. Committee on the Prevention of Mental Disorders and Substance Abuse Among Children, Youth, and Young Adults: Research Advances and Promising Interventions. Mary Ellen O'Connell, Thomas Boat, and Kenneth E Warner, Editors. Board on Children, Youth, and Families, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press; 2009. Preventing Mental, Emotional, and Behavioral Disorders Among Young People: Progress and Possibilities.

- Oliff P, Mai C, Palacios V. States Continue to Feel Recession's Impact. Washington, DC: Center on Budget and Policy Priorities; 2012. p. 12.

- Payne A, Eckert R. The Relative Importance of Provider, Program, School, and Community Predictors of the Implementation Quality of School-Based Prevention Programs. Prevention Science. 2010;11(2):126–141. doi: 10.1007/s11121-009-0157-6.

- Payne A, Gottfredson D, Gottfredson G. School Predictors of the Intensity of Implementation of School-Based Prevention Programs: Results from a National Study. Prevention Science. 2006;7(2):225–237. doi: 10.1007/s11121-006-0029-2.

- Perkins DF, Chilenski SM, Olson JR, Mincemoyer CC, Spoth R. Knowledge, attitudes, and commitment towards evidence-based prevention programs: Differences across Family and Consumer Sciences and 4-H Youth Development Educators. Journal of Extension. (in press).

- Plested B, Smitham DM, Jumper-Thurman P, Oetting ER, Edwards RW. Readiness for drug use prevention in rural minority communities. Substance Use & Misuse. 1999;34(4–5):521–544. doi: 10.3109/10826089909037229.

- Plested BA, Edwards RW, Jumper-Thurman P. Community Readiness: A Handbook for Successful Change. Fort Collins, CO: Tri-Ethnic Center for Prevention Research, Sage Hall, Colorado State University; 2006. pp. 1–73.

- Preacher KJ, Leonardelli GJ. Calculation for the Sobel test: An interactive calculation tool for mediation tests. 2012 Retrieved March 14, 2012, from http://quantpsy.org/sobel/sobel.htm.

- Ransford CR, Greenberg MT, Domitrovich CE, Small M, Jacobson L. The Role of Teachers' Psychological Experiences and Perceptions of Curriculum Supports on the Implementation of a Social and Emotional Learning Curriculum. School Psychology Review. 2009;38(4):510–532.