It is well known that pain education in Canadian medical schools needs to be improved, and there have been many initiatives to improve pain education at the preprofessional stage of training. However, the majority of pain education still occurs in a classroom setting. The authors of this article implemented a novel interprofessional education-based teaching strategy in a tertiary care-based setting. This article presents a pilot study of this education model.

Keywords: Collaboration, Competencies, Education, Interprofessional, Interdisciplinary, Pain, Trainees

Abstract

BACKGROUND:

Health care trainees/students lack knowledge and skills for the comprehensive clinical assessment and management of pain. Moreover, most teaching has been limited to classroom settings within each profession.

OBJECTIVES:

To develop and evaluate the feasibility and preliminary outcomes of the ‘Pain-Interprofessional Education (IPE) Placement’, a five-week pain IPE implemented in the clinical setting. The utility (content validity, readability, internal consistency and practical considerations) of the outcome measures was also evaluated.

METHODS:

A convenience sample of 21 trainees from eight professions was recruited over three Pain-IPE Placement cycles. Pre- and postcurriculum assessment included: pain knowledge (Pediatric Pain Knowledge and Attitudes Survey), IPE attitudes (Interdisciplinary Education Perception Scale [IEPS]) and IPE competencies (Interprofessional Care Core Competencies Global Rating Scales [IPC-GRS]), and qualitative feedback on process/acceptability.

RESULTS:

Recruitment and retention met expectations. Qualitative feedback was excellent. IPE measures (IEPS and IPC-GRS) exhibited satisfactory utility. Postcurriculum scores improved significantly: IEPS, P<0.05; IPC-GRS constructs, P<0.01; and competencies, P<0.001. However, the Pediatric Pain Knowledge and Attitudes Survey exhibited poor utility in professions without formal pharmacology training. Scores improved in the remaining professions (n=14; P<0.01).

DISCUSSION:

There was significant improvement in educational outcomes. The IEPS and IPC-GRS are useful measures of IPE-related learning. At more advanced training levels, a single pain-knowledge questionnaire may not accurately reflect learning across diverse professions.

CONCLUSION:

The Pain-IPE Placement is a successful collaborative learning model within a clinical context that improved interprofessional competencies. The present study represents a first step toward defining and assessing change in interprofessional competencies gained from Pain-IPE.

The need to increase and improve the teaching of collaborative competencies for comprehensive clinical pain care in prelicensure and early postlicensure health care education has been well documented (1–8). There is a worldwide effort to develop interprofessional pain education at the preprofessional stage of training (5,9–13). The goal is to increase the student’s understanding of pain mechanisms and related biopsychosocial concepts, and to improve collaboration and communication among professions (9). However, there has been less progress in interprofessional pain education at the advanced ‘trainee’ stage of learning within the clinical sites. In addition, there has been little progress on integrating methodologies for teaching or assessing change in a participant’s competencies for collaborative pain care.

Interprofessional collaboration has been identified as a key factor for effective pain management (9). Competencies for future collaborative practice are best learned in an interprofessional education (IPE) setting (9,14,15) involving interactive, small group learning formats (16–18). This format enables participants from two or more health and/or social care professions to learn “about, from, and with each other” – an essential requirement for IPE (19,20). Although many new programs endeavor to include a small group IPE component, the realities of scheduling and cost issues have resulted instead in a ‘multi-professional’ large-group (18) learning situation and/or are provided early in the individual’s training, well before many participants have exposure to patients (21).

To address this gap, we adapted an existing, more general IPE learning model for trainee/participants within tertiary care settings – the ‘IPE-Placement model’ (22,23) – to focus on clinical pain in the pediatric tertiary care setting. The ‘Pain-IPE Placement’ was piloted at a large metropolitan pediatric academic tertiary care centre in Toronto (Ontario). The overall goal of the Pain-IPE Placement was to provide an opportunity for trainees from ≥3 professions to participate in a collaborative-learning model and apply theoretical pain concepts in the context of pediatric pain care. We were interested in developing not only pain knowledge but also core interprofessional competencies (24–26).

Because the present study was a pilot study of a model for pain IPE, our objectives were as follows: evaluate the feasibility of recruiting and retaining trainee-participants and facilitators; evaluate the content and process in terms of perceived acceptability of the program from perspectives of participants and facilitators; assess the trainee/student learning of IPE competencies – knowledge, attitudes and beliefs – for interprofessional pain care; and evaluate the utility of the chosen measures as indicators of whether the learning occurred.

METHODS

Overview

The Pain-IPE Placement curriculum consisted of five weekly 2 h tutorials, described below. A prospective descriptive mixed-methods study design was conducted in three distinct iterative five-week cycles (between October 2011 and June 2012), thus evaluating the experience of three different cohorts of students. Ethics approval for all aspects of the study was obtained from the hospital Research Ethics Board. Informed consent was obtained from all participants (trainees/students and facilitators) before the start of each five-week session (cycle).

The Pain-IPE Placement goals were to enable participants to develop an increased understanding of the expertise that each profession brings to pain assessment and management of pediatric pain including team members’ roles and responsibilities; learn and collectively develop clinical expertise specific to pain assessment and management; and build a critical understanding of team functioning within the context of collaborative pediatric pain care. Administratively, the aim was to enable trainees to meet their clinical placement requirements while also participating in the Pain-IPE Placement.

Trainees/students

Each of the three cycles included participants from at least three of the following programs: Child Life Studies, Medicine, Nursing, Occupational Therapy, Pharmacy, Physical Therapy and Radiation Sciences. Prelicensure/professional participants who were scheduled for clinical placements/internships at the participating academic pediatric care facility were informed about the Pain-IPE Placement opportunity and applied voluntarily to participate in the clinical placements. All participants met the following inclusion criteria: currently a clinical trainee/student (trainees include prelicensure/professional, as well as advanced training nurses, and medical interns, and residents); have a placement at the host institution such that timing coincided with the scheduled Pain-IPE Placement; agree to commit to attending the five weekly sessions; and have received approval from their clinical preceptor.

The Pain-IPE Placement curriculum and process

Each series of five weekly 2 h tutorials involved a small group of six to nine trainees/students and two facilitators, each from a different profession. Facilitators were clinical staff members who were recognized leaders in clinical pain (ie, a nurse practitioner from the acute pain service and a physical therapist from the chronic pain service). Before participation, facilitators all completed a 4 h faculty development session given by the Centre for Interprofessional Education at the University of Toronto.

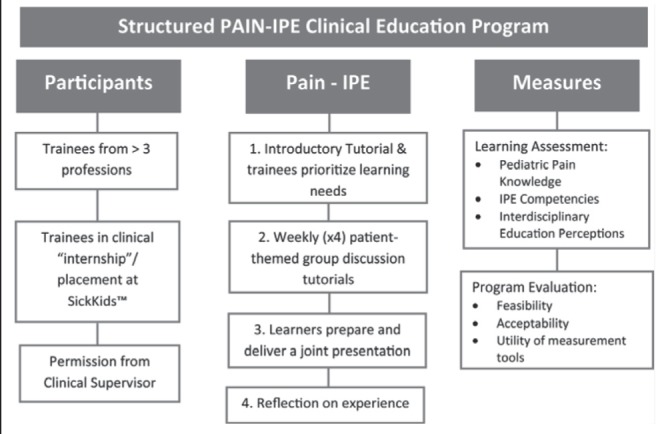

The Pain-IPE Placement was coordinated in partnership with clinical/education staff from the pediatric hospital. The five-week Pain-IPE Placement curriculum consisted of an introductory tutorial followed by four weekly facilitated tutorials (Figure 1). Tutorials were initially planned to be 1.5 h in length; however, during the first cycle tutorial sessions were increased to 2 h, as requested by participants. During the introductory session, participants, in collaboration with the facilitators, suggested and prioritized specific content for the remaining sessions. This was based on the collective learning needs of participants and the opportunities provided by the clinical setting. Each week the tutorial focused on one of the content suggestions (eg, assessing pain; setting goals; planning treatment; managing acute, persistent or neuropathic pain; transitioning/discharging pain cases; examining specific pain clinical syndromes). Participants were also encouraged to discuss issues related to interprofessional roles and collaborative practice in each of the tutorial sessions. At the fifth tutorial, trainees gave a formal group presentation on pain-related patient challenges relevant to the clinical setting, including the development of a comprehensive pain management care plan. The trainees’ preceptors as well as other members of the interprofessional acute and chronic pain teams were invited to attend these presentations.

Figure 1. Plan for structured pain interprofessional education (IPE) clinical placement

Evaluation of feasibility and acceptability of the Pain-IPE Placement: Trainees/students and facilitators

The clinical education coordinator for the tertiary hospital sent an email describing the learning opportunity and commitment to all trainees/students who were scheduled to be situated at the hospital during a planned five-week period. Anyone who was interested in participating and met the criteria for inclusion notified the education coordinator, who then explained and met with the trainee/student to complete the consent process. Ease of recruitment was based on clinical education coordinator feedback. The research assistant tracked the attendance rates of participants in the Pain-IPE Placement.

Qualitative interviews are well suited to evaluating impact and process and, although more time consuming than a feedback questionnaire, provided valuable data on these constructs (27). Immediately following the final session of the program, participants completed the same postprogram questionnaires (available on request) containing standard questions asking for feedback on content and process. Each trainee/student cohort also participated in a 30 min semistructured focus group at the end of each cycle to explore what they liked and did not like about the program, and what changes they recommended. All focus groups were conducted by one individual (MW) who was experienced in conducting focus groups. All focus group interviews were audiotaped, and field notes were made during or immediately following the interviews to record the interviewer’s impression of participant responses (verbal and nonverbal) to the questions and comfort level with the interviewing process (27). A general introductory question was asked, followed by broad questions and probes to encourage the participants to elaborate on their experiences with the Pain-IPE Placement. Questions were informed by the research literature and the experience of the study investigators in interprofessional pain curriculum development (Appendixes 1 and 2).

Assessment of learning: Pain knowledge and attitudes

The Pediatric Nurses Knowledge and Attitudes Survey Regarding Pain (PNKAS) (28) was chosen based on its established reliability and validity, and its focus on pediatric pain. The survey consists of 41 true/false and multiple-choice questions about general pain management, pain assessment, and the use of analgesics and nonpharmacological interventions for pain. The total score reflects the number of correct responses, with scores ranging from zero to 41. The PNKAS was originally designed for pediatric nurses and includes 16 pharmacological intervention-related questions that have not been assessed in non-nursing trainees/students. In the present feasibility study, the aim was to evaluate the validity of using the measure for several professions, including those without extensive past education or training in pharmacology (eg, child life specialists, occupational therapists, physiotherapists and social workers). The instructions were modified such that participants were instructed not to respond to the 16 pharmacological intervention-related questions if they believed that they did not pertain to their scope of practice. The PNKAS (modified) results were evaluated separately for the two subgroups of participants: group I consisted of participants from child life, occupational therapy, physical therapy and social work; and group II included all other professions. Incomplete surveys (responses to <75% of the items) were excluded.
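The scoring and exclusion rules described above can be illustrated with a minimal sketch. It assumes a hypothetical eight-item answer key (the real PNKAS key is not reproduced here), and it makes the simplifying assumption that a blank item counts as not correct. The function names `completion_rate` and `score_survey` are illustrative and do not come from the study.

```python
from typing import Optional, Sequence

# Exclusion threshold taken from the text: surveys with responses to
# fewer than 75% of the items are treated as incomplete.
MIN_COMPLETION = 0.75


def completion_rate(responses: Sequence[Optional[str]]) -> float:
    """Return the fraction of items answered (None marks a blank item)."""
    if not responses:
        raise ValueError("survey has no items")
    answered = sum(1 for r in responses if r is not None)
    return answered / len(responses)


def score_survey(
    responses: Sequence[Optional[str]], answer_key: Sequence[str]
) -> Optional[int]:
    """Return the number of correct responses, or None if the survey is
    excluded as incomplete. Blank items count as not correct (an assumption)."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer key differ in length")
    if completion_rate(responses) < MIN_COMPLETION:
        return None
    return sum(
        1 for r, k in zip(responses, answer_key) if r is not None and r == k
    )


# Hypothetical answer key and responses, for illustration only.
ANSWER_KEY = ["T", "F", "T", "b", "a", "F", "c", "T"]
complete = ["T", "F", "F", "b", "a", "T", "c", "T"]  # all 8 items answered
mostly_blank = ["T", None, None, "b", None, None, "c", "T"]  # 4 of 8 answered

print(score_survey(complete, ANSWER_KEY))
print(score_survey(mostly_blank, ANSWER_KEY))
```

With the full instrument, the same function would be applied to a 41-item key, or to the 25 nonpharmacological items alone for participants who skipped the pharmacology questions.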

Assessment of learning: Interprofessional attitudes

The 2007 version (29) of the Interdisciplinary Education Perception Scale (IEPS) (30) was used to measure interprofessional attitudes. The IEPS is composed of 12 items, each with a six-point Likert scale where 1 represented strong disagreement and 6 represented strong agreement. The revised model structure includes three subscales: Competency and Autonomy (five items), Perceived Need for Cooperation (two items) and Perception of Actual Cooperation (five items). Construct validity of the original IEPS was established by Luecht et al (30) with the help of several health care professionals representing nursing, occupational therapy, podiatry, physiotherapy, prosthetics, psychology, radiography and social work. McFadyen et al’s (29) revised IEPS has been reported to be a stable and reliable instrument (Cronbach’s α=0.86), with improved alpha values and a higher total scale homogeneity compared with the original version.

Assessment of learning: Interprofessional competencies

Trainees’/students’ perceived learning of the IPE core competencies was assessed using the Interprofessional Care Core Competencies Global Rating Scales (IPC-GRS). Competency self-rating scales were developed by the University of Toronto IPE Assessment Team (31–33), based on the Framework for the Development of IPE Values and Core Competencies (26,32) for health professional programs at the University of Toronto (25). This framework has three levels (exposure, immersion and competence) and three constructs (values and ethics, communication and collaboration). Across these levels and constructs, specific measurable IPE core competencies for knowledge, skills/behaviours and attitudes were developed. Pain-IPE Placement learning activities were mapped onto the IPE core competencies, and a resulting 15-item instrument was created using a five-point Likert-scale design, constructed with three item-specific qualifiers or anchors (Appendix 3).

Preliminary evaluation of utility of the chosen outcome measures

Because this was an innovative Pain-IPE experience, there was little evidence available to guide the selection of outcome questionnaires. The choice was, thus, based on the composition of the learner group and the purpose of the questionnaire. Chandratilake et al (34) and van der Vleuten et al (35,36) proposed a utility formula for measures to assess medical education based on the measurement characteristics as well as practical considerations (reliability, validity, educational impact, acceptability and feasibility). The evaluation of the chosen questionnaires (PNKAS, IEPS, IPC-GRS) was guided by the checklist published by Eechaute et al (37) and Lohr et al (38). Because the present study was a pilot study, content validity, readability, internal consistency and practical considerations of the chosen outcome measures were evaluated, as described in Appendix 4.

Analyses

All statistical analyses were conducted using SPSS version 20 (IBM Incorporated, USA) and Excel (Microsoft Corporation, USA). Data were analyzed according to total responses or subscale-specific responses, depending on respective instrumentation used. Tests and surveys included for analyses had any blank items coded as missing with values adjusted appropriately, unless stated otherwise. Cronbach’s alpha was calculated for subscales and overall tests to assess internal consistency in measurement of underlying constructs. Factor analysis was conducted to test item correlations. Initial data analysis included screening for assumptions of normality and homogeneity of variance of dependent variables. Analyses for data meeting these assumptions included paired Student’s t tests to compare matched pre- and post-test scores, with effect size noted. For data not meeting necessary assumptions, matched-pairs Wilcoxon signed-rank tests were used. All significant effects are reported at P<0.05. For the IPC-GRS, the constructs and the categories of competencies were each analyzed by means, SDs, Cronbach’s alphas, and significance of change in scores between pre- and post-self-assessment.
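As an illustration of the internal consistency calculation mentioned above, the following is a minimal sketch of Cronbach’s alpha using only the Python standard library. The study itself used SPSS. The ratings shown are made-up values on a six-point scale, and the function assumes complete data with no missing items.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    where k is the number of items. Sample variances are used throughout.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must have a score for every item")

    # Variance of each item across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: four respondents, three items (illustration only).
example_scores = [
    [5, 5, 6],
    [4, 4, 5],
    [6, 5, 6],
    [3, 4, 4],
]
alpha = cronbach_alpha(example_scores)
print(round(alpha, 3))
```

Items that rise and fall together across respondents push the total-score variance well above the sum of the item variances, which moves alpha toward 1.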

Interviews were audiotaped and transcribed verbatim. All transcripts were verified against the tapes by one author (MW) and imported into NVivo 8.0R (QSR International), a qualitative analysis software program that helps to organize, code and retrieve data. Field notes taken during the interviews were also transcribed and included in the analytical process. The analysis was conducted by one member of the research team (MW) and reviewed by a more senior qualitative research team member (JS). Qualitative simple content analysis, a dynamic process that summarizes the informational content of data, was used (39,40). Specifically, data for all participants were coded according to the study objectives and were organized into categories that reflected the emerging themes. The raw data were revisited on a regular basis throughout the analytic process to ensure that the codes and resulting themes were grounded in them (41).

RESULTS

Feasibility and acceptability

Recruitment and retention:

The Education Coordinator was able to find four time periods in a 12-month calendar in which ≥3 professions had clinical placements/internships that overlapped for a five-week period. Three dates were chosen for three consecutive cycles of the Pain-IPE Placement. A total of 21 (18 [86%] female) participants from eight different professions volunteered for three cohorts (Table 1). Nine of the trainees were from the University of Toronto and 12 were from professional programs in one of seven other universities (ie, University of Windsor, Memorial University, University of Saba, University of Waterloo, Ryerson University, Wilfrid Laurier University and Wheelock College). All 21 students completed the five-week Pain-IPE Placement.

TABLE 1.

Count of trainees by training program and year

| Profession | Program year | Cohort 1 | Cohort 2 | Cohort 3 | Total |
|---|---|---|---|---|---|
| Group 1 | | | | | |
| Social work | 1 | 0 | 1 | 1 | 2 |
| Social work | 2 | 1 | 0 | 1 | 2 |
| Occupational therapy | 1 | 0 | 0 | 1 | 1 |
| Physical therapy | 2 | 1 | 0 | 0 | 1 |
| Child life | 2 | 0 | 1 | 0 | 1 |
| Group 2 | | | | | |
| Pharmacy | 1 | 1 | 0 | 0 | 1 |
| Pharmacy | 2 | 0 | 0 | 1 | 1 |
| Pharmacy | 3 | 0 | 0 | 2 | 2 |
| Pharmacy | 4 | 0 | 2 | 0 | 2 |
| Nursing Bachelor of Science | 2 (2-year program) | 0 | 0 | 1 | 1 |
| Nursing Bachelor of Science | 3 (4-year program) | 0 | 0 | 1 | 1 |
| Nursing Bachelor of Science | 4 (4-year program) | 2 | 1 | 0 | 3 |
| Nurse practitioner | 2 | 1 | 0 | 0 | 1 |
| Medicine | | | | | |
| Pediatric fellowship | 4 | 0 | 1 | 0 | 1 |
| Medical doctor | n/a | 0 | 0 | 1 | 1 |
| Total | | 6 | 6 | 9 | 21 |

n/a Not applicable

Content and process:

The response rate was 95.2%, with 20 of the 21 participants completing the evaluation. Respondents rated facilitators very highly in all categories, with a mean rating of 91.4%. The highest rating was for the facilitators’ ability to foster a safe and comfortable environment for participation. Qualitative feedback from open-ended questions supported the appreciation of facilitators who were skilled in IPE.

Analysis of participant focus groups revealed themes specific to learning and themes specific to the format of the Pain-IPE Placement. Three emergent themes described the primary impact on learning: knowledge of other roles and scopes of practice; a knowledge base of resources and referrals; and greater understanding of pain and pain management. With respect to the benefits of the Pain-IPE Placement format, trainees/students appreciated being able to structure the content of their learning and topics for guest lecturers. However, they recommended more structured and directed learning before the start of self-directed learning because they believed that at first they “didn’t know what they didn’t know”. It was also suggested that a lecture followed by a case-study format occur during the first few weeks; participants also wished to have the opportunity to attend the placement at a time point toward the completion of their clinical practicum.

Assessment of learning

Pain knowledge and attitudes:

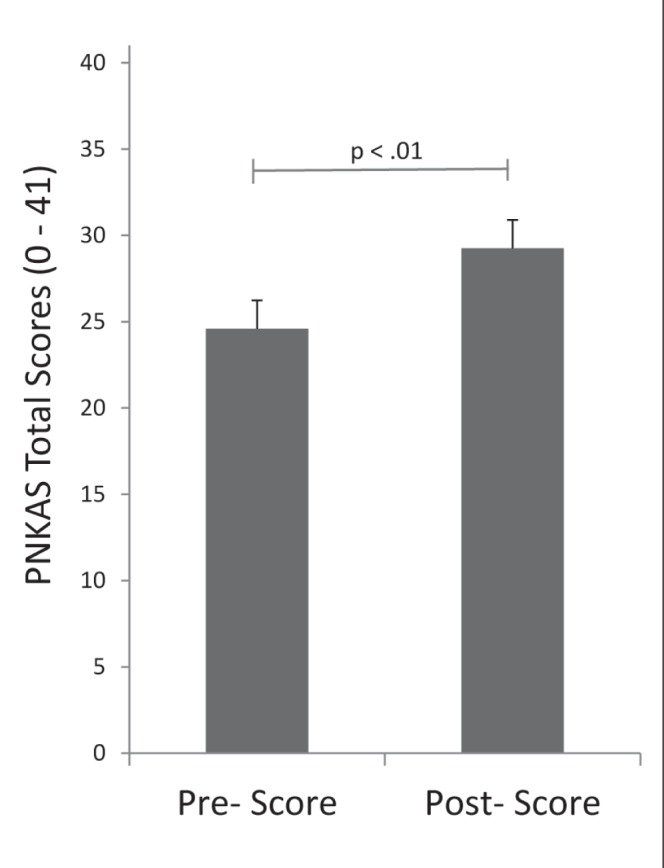

The PNKAS data from group I could not be analyzed (as described below). PNKAS scores for group II revealed excellent pain knowledge outcomes with a statistically significant mean change in correct responses from 62% (mean [± SD] 24.58±1.64) at pre-test to 73% (29.25±1.74) at post-test (P<0.01) (Figure 2).

Figure 2. Mean and SE Pediatric Nurses’ Knowledge and Attitudes Survey Regarding Pain (PNKAS) (modified) scores (P<0.01) of group II

Interprofessional attitudes:

Positive changes were demonstrated related to interprofessional attitudes with a statistically significant change in the overall IEPS scores (P<0.05). The full distribution of results with mean (± SD) item scores is presented in Table 2.

TABLE 2.

Interdisciplinary Education Perception Scale scores per item, n=19

| Item | Pre-Pain IPE Placement | Post-Pain IPE Placement |
|---|---|---|
| Individuals in my profession are well-trained | 5.10±1.12 | 5.48±0.68 |
| Individuals in my profession are able to work closely with individuals in other professions | 5.05±0.89 | 5.62±0.50 |
| Individuals in my profession are very positive about their goals and objectives | 4.79±0.98 | 5.00±0.75 |
| Individuals in my profession need to cooperate with other professions | 5.55±1.15 | 5.81±0.40 |
| Individuals in my profession are very positive about their contributions and accomplishments | 4.80±1.20 | 5.10±1.00 |
| Individuals in my profession must depend upon the work of people in other professions | 4.85±1.35 | 5.52±0.68 |
| Individuals in my profession trust each other’s professional judgment | 4.90±0.97 | 5.20±0.64 |
| Individuals in my profession are extremely competent | 5.10±1.17 | 5.52±0.60 |
| Individuals in my profession are willing to share information and resources with other professionals | 5.20±1.20 | 5.76±0.54 |
| Individuals in my profession have good relations with people in other professions | 4.80±1.11 | 5.52±0.60 |
| Individuals in my profession think highly of other related professions | 4.85±0.93 | 5.14±0.85 |
| Individuals in my profession work well with each other | 5.00±1.17 | 5.52±0.60 |

Data presented as mean ± SD

With respect to outcomes measured by subscale, the Perception of Actual Cooperation subscale showed the most strongly significant difference between pre and post scores (P<0.01). There was also a statistically significant improvement in the Perceived Need for Cooperation subscale (P<0.05). There was no statistically significant change in the Competency and Autonomy subscale scores (P=0.0567) (Table 3).

TABLE 3.

Interdisciplinary Education Perception Scale scores and Cronbach’s alpha coefficient, n=19

| Subscale | Pre-Pain IPE Placement: mean ± SD | Pre-Pain IPE Placement: Cronbach’s α | Post-Pain IPE Placement: mean ± SD | Post-Pain IPE Placement: Cronbach’s α |
|---|---|---|---|---|
| Competency and autonomy (n=30) | 24.68±5.09 | 0.96 | 26.16±2.95 | 0.84 |
| Perceived need for cooperation (n=12) | 10.40±2.21 | 0.72 | 11.33±1.02 | 0.80 |
| Perception of actual cooperation (n=30) | 24.90±4.90 | 0.95 | 27.57±2.48 | 0.85 |
| Overall total (n=72) | 59.95±11.88 | 0.97 | 64.95±5.55 | 0.90 |

IPE Interprofessional education

Interprofessional competencies:

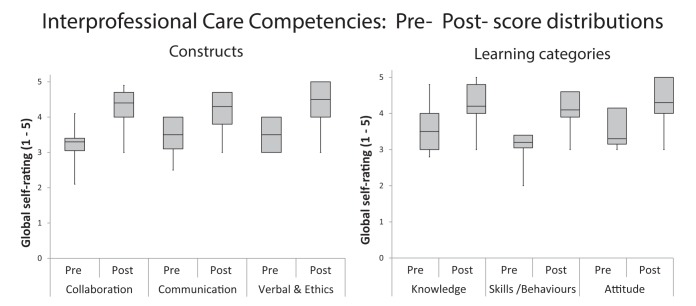

There was a statistically significant change in pre- to postprogram mean scores for all constructs and categories of competencies (Table 4). The distribution of subscale scores for constructs and categories of competencies is presented in Figure 3. The proportion of individuals who answered positively (4 or 5 on the five-point scale) is presented in Appendix 3. The mean percentage increases in positive responses per construct from pre- to postprogram were: collaboration (56.2%); values and ethics (42.9%); and communication (39.9%). The five items (33.3% of total) with the highest increase in percentages were all within the skills/behaviour category of competencies.
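The ‘positive response’ summary described above can be sketched as follows. The ratings are hypothetical, and the sketch assumes that the reported increase is a difference in percentage points between the pre- and postprogram proportions, which the text does not state explicitly. The function names are illustrative.

```python
from typing import Optional, Sequence


def proportion_positive(
    ratings: Sequence[Optional[int]], threshold: int = 4
) -> float:
    """Fraction of non-missing ratings at or above the threshold
    (4 or 5 on a five-point scale by default)."""
    rated = [r for r in ratings if r is not None]
    if not rated:
        raise ValueError("no ratings to summarize")
    return sum(1 for r in rated if r >= threshold) / len(rated)


def increase_in_positive(
    pre: Sequence[Optional[int]], post: Sequence[Optional[int]]
) -> float:
    """Percentage-point change in positive responses from pre to post
    (assumed definition of the reported increase)."""
    return 100 * (proportion_positive(post) - proportion_positive(pre))


# Hypothetical self-ratings for one item (illustration only).
pre_ratings = [3, 3, 4, 2, 5]
post_ratings = [4, 5, 4, 3, 5]

print(round(increase_in_positive(pre_ratings, post_ratings), 1))
```

Averaging this quantity over the items belonging to a construct would give a per-construct figure comparable in form to those reported above.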

TABLE 4.

Interprofessional Care Core Competencies – Global Rating Scale scores

| | Pre-Pain IPE Placement | Post-Pain IPE Placement | Z value | P | Cronbach’s α (pre) | Cronbach’s α (post) |
|---|---|---|---|---|---|---|
| Constructs | | | | | | |
| Collaboration | 3.3±0.45 | 4.2±0.54 | −3.83 | <0.001 | 0.84 | 0.92 |
| Communication | 3.5±0.62 | 4.3±0.52 | −3.41 | <0.01 | 0.92 | 0.85 |
| Values and ethics | 3.7±0.71 | 4.5±0.59 | −3.50 | <0.01 | 0.86 | 0.84 |
| Categories of competencies | | | | | | |
| Knowledge | 3.6±0.65 | 4.3±0.53 | −3.60 | <0.001 | 0.89 | 0.83 |
| Skills/behaviours | 3.3±0.45 | 4.2±0.54 | −3.73 | <0.001 | 0.90 | 0.91 |
| Attitudes | 3.7±0.63 | 4.4±0.54 | −3.60 | <0.001 | 0.83 | 0.85 |

Data presented as mean ± SD. IPE Interprofessional education

Figure 3. Pre- and postintervention distribution frequencies of Interprofessional Care Core Competencies Global Rating Scales scores grouped according to competency constructs (collaboration, communication, values and ethics; left graph) and grouped according to categories of learning the competencies (knowledge, skills, attitudes; right graph)

Utility of outcome measures

PNKAS:

There were mixed results for the utility of the PNKAS. In group I, six of the seven respondents did not respond to the 16 pharmacology-related items on the pre- or the post-test. One participant responded to 12 (75%) of the pharmacology items on the pretest but to none of them on the post-test. The 16 questions were, thus, excluded from analysis in this group. Analysis of the first 25 questions revealed instability of the 25-item PNKAS in group I, with a fluctuating alpha score for that group (pre α=0.74, post α=0.48; n=7) and for the group as a whole (pre α=0.68, post α=0.68; n=20), and 10 of 25 items displaying either a high percentage of correct responses or poor item-total correlation. The small sample size restricted further analyses.

The PNKAS tool performed well in group II. Of the surveys from the 14 participants in this group, one was incomplete and, therefore, excluded from analysis. Response rates were excellent for this group on the 16 pharmacology-related questions. Nine of the 13 participants had a response rate >90% to this section of questions pretest and 10 of the 13 participants had a response rate >90% post-test. The lowest response rate among respondents in this group was 31%. Reliability testing of the PNKAS tool for group II showed good internal consistency (preintervention α=0.86, postintervention α=0.86). Interestingly, five items (items 2, 5, 16, 18 and 23) had a 100% correct response rate both pre- and postintervention. An additional five items (4, 14, 21 and 22) had a 100% correct response rate postintervention only.

IEPS:

For the IEPS, there was a 95.2% response rate (20 of 21) for preprogram data and a 100% response rate (21 of 21) for postprogram data. Data from the one participant who did not complete the IEPS pretest were excluded from analysis. Subscale-specific results are reported as mean (± SD) total scores with Cronbach’s α. Internal consistency was high for all subscales postprogram (α ranged from 0.80 to 0.90) (Table 3).

IPC-GRS:

There was a 90.5% response rate (19 of 21) for preprogram data and 100% response rate (21 of 21) for postprogram data on the IPC-GRS across all professions. All constructs and categories of competencies achieved satisfactory Cronbach’s α scores (Table 4).

DISCUSSION

Overall, we were successful in developing and implementing the Pain-IPE Placement for trainees/students in the clinical setting, aimed primarily at improving IPE competencies for collaborative patient-centred pain care. To our knowledge, the present study is also the first to evaluate the utility of outcome measures for this level (trainee) of interprofessional pain education, including competencies specific to interprofessional care.

Feasibility and acceptability

Recruitment and retention met expectations and qualitative feedback and process evaluations were excellent. Practical considerations concerning the outcome measures selected (eg, time to administer, response rates, administration burden and student burden) were acceptable.

The literature on pedagogical constructs of how to teach interprofessionally to improve collaborative pain care is nonexistent. Although the University of Toronto model for IPE-Placements has previously been iteratively developed and evaluated for more general IPE, the modification of this format for pain teaching is novel. Participants appreciated the direct clinical application of their learning, but also requested additional didactic sessions to help with the initial cognitive orientation to the topic of children’s pain. Thus, although the general format of the Pain-IPE Placement was successful, we suggest that an additional one to two weeks be added up front for more specific directed learning on pain topics before the initiation of the self-directed format.

Pain knowledge

Learning outcome:

It is well established that participation in a pain course, based on the International Association for the Study of Pain curricula guidelines, can change trainee/student knowledge about pain, regardless of the length or format of the program (4,5,11,13,45–47). Our finding of improved pain knowledge and attitudes reflects similar findings from prelicensure/professional Pain-IPE initiatives (11–13,47). We can conclude that participants improved their pain knowledge. However, our pain knowledge measure had limitations, which are discussed below.

Interprofessional collaboration attitudes and knowledge

Learning outcome:

There was significant change in the subscales pertaining to cooperation (‘perception of actual cooperation’ and ‘perceived need for cooperation’). It can be concluded that the experience was beneficial to the development of the knowledge and attitudes for future collaborative pain care. Pain care appears to be an excellent topic on which to base IPE for collaborative care.

Utility of IEPS:

In addition, our findings agree with those of McFadyen et al (29) in that the revised IEPS instrument was a reliable tool for the evaluation of an IPE educational intervention for use with prelicensure/professional health and social care trainees/students. It was able to detect change in interprofessional attitudes and perceptions after the educational intervention. Interestingly, the two subscales that showed significant change addressed attitudes about interprofessional cooperation. This finding, along with the lack of change in the Competency and Autonomy subscale, may reflect the focus of case-based learning, but we can find no other studies with which to compare our findings.

Because we used the IEPS as a pre- and post-test, we were cognizant of the lack of evidence of the stability of the original (four sub-scale version) instrument (30) and of the test-retest reliability of the items and subscales when used with undergraduates (29). Our sample sizes were too small for definitive confirmation of internal consistency, but support the findings of McFadyen et al that the revised three sub-scale model had good test-retest reliability and internal consistency. For our purposes, the revised IEPS had good clinical utility to monitor changes in attitudes and perceptions about IPE in trainees/students participating in IPE-pain placements.

Core competencies for interprofessional pain care

Core competency outcomes:

The present study is the first Pain-IPE study to report outcomes specific to interprofessional education competencies for collaboration. We found significant improvement in all core competencies.

Utility of IPC-GRS:

The literature is sparse with regard to how to assess knowledge and skills competencies required for collaborative practice. Scales, such as the IEPS, successfully evaluate attitudinal shifts; however, as noted by Fragemann et al (5), there is a need to develop ways to measure all types of competencies (knowledge, skills/behaviours and attitudes) for interprofessional care. The IPC-GRS was developed by the University of Toronto IPE Assessment Team to assess health professional education competencies for interprofessional collaboration as follows: knowledge, eg, roles of other health professionals; skills/behaviours, eg, communicating with others, reflecting on own role and others; and attitudes, eg, mutual respect, willingness to collaborate and openness to trust. Each scale in the IPC-GRS is meant to assess the competencies of the three main IPE constructs or themes: collaboration, communication, and values and ethics. The benefit of this consistent framework is the common core competencies that create a shared understanding of the language and requirements needed to achieve interprofessional collaboration. To our knowledge, this is the first time the competency questionnaire has been used in a study investigating pain IPE.

IPE has a unique role in helping practitioners enhance their knowledge, skills, behaviours and attitudes to enable them to work together to actually change the culture of health care (14) and develop new models of care, especially for people with complex pain management issues. Based on our findings, the IPC-GRS measured competencies specifically related to this goal. It had good reliability (internal consistency), feasibility and face validity, and was sensitive to change. In addition, it appears to have very good educational impact; ie, the assessment is part of the learning process because it requires that trainees/students reflect on their IPE competencies. The IPC-GRS fulfills all utility elements of an educational assessment tool and can be considered psychometrically rigorous and sustainable (34,36) in a Pain-IPE context, such as that described here. Based on our results, it appears that this is a good measure of IPE-related outcomes and may be recommended for trainee/student or postgraduate level pain education programs that are interprofessional in nature.

Limitations: Selecting a pain knowledge outcome measure for diverse professions

The choice of a measure to assess changes in pain knowledge and attitudes following Pain-IPE is not simple. Different questionnaires may be required for different patient population settings, as well as for different student groups depending on their stage of education or the professions involved. This should also be balanced against the need to standardize results from different studies. We chose to use a standardized measure for pediatric pain knowledge (ie, PNKAS) to assess our outcomes because it was specific to our clinical setting, psychometrically sound and feasible (28). However, this measure was originally developed for nurses and did not perform well in an interprofessional setting because data from group I participants were not useable.

There is a need for a reliable, validated Pain-IPE knowledge and attitudes tool that can assess learning outcomes across professions at this advanced level of training. Watt-Watson et al (13) validated a pain knowledge and beliefs measure for an undergraduate multi/interprofessional program. Because our trainees/students were at a more advanced level and were applying the learning to the pediatric population, we chose the PNKAS. However, the PNKAS was previously validated in the nursing population only. Another common pain knowledge measure that is at a similar advanced level (KnowPain-50) (48) is validated only with physicians. Based on our findings, we propose that there may not be a single measure for a valid assessment of change in pain knowledge across professions. For advanced trainees/students and practicing clinicians, one must carefully consider the objectives of the learning experience; some pain knowledge is basic to all professions and some is unique for each profession. A fundamental value of IPE is the deliberate creation of heterogeneous groups of trainees/students from different professions with a diversity of knowledge and perspectives to learn to improve collaborative pain care. It should not be assumed that pain knowledge should be homogeneous before or after the learning. The desired pain-knowledge outcomes must be considered for each profession. Perhaps a single pain-knowledge questionnaire can/should not reflect the learning when advanced-level trainees from a wide range of professions are involved in pain-IPE.

The present study was a pilot study to determine the feasibility and preliminary outcomes of an innovative pain-IPE education model within the clinical site. Our findings have direct application for defining, evaluating and assessing change in interprofessional competencies and pain knowledge, and for comparison with other models of Pain-IPE for advanced trainees.

CONCLUSIONS

The Pain-IPE Placement was a useful learning experience for trainees/students to build on previous exposure to IPE and pain education and develop competencies for collaborative patient-centred pain care. Successful preparation of health care trainees/students for collaborative patient-centred pain care is complex and requires more than one education method or session (9). This model of Pain-IPE can successfully complement previous in-class Pain-IPE education that occurs earlier in the trainee/student’s formal education. The process feedback was positive and there was significant improvement in all educational outcomes.

As more pain education programs that are intended to be interprofessional are developed internationally, it will be critical to establish a framework for evaluating these new curricula, including the assessment of trainee/student-specific and interprofessional-specific competencies for improving collaboration for patients with pain. A rigorous assessment system is an essential requirement in enhancing quality and accountability of Pain-IPE. This is a first step toward defining and evaluating measures of Pain-IPE outcomes for advanced level trainee/student or postprofessional participants. Although, at more advanced stages of training, single measures can assess learning of collaborative competencies across professions, competencies specific to advanced pain knowledge vary between professions and may not be sufficiently reflected in a single pain-knowledge measure.

Acknowledgments

The authors thank Vera Gueorguieva RN MN, the Advanced Nursing Practice Educator who acted as education coordinator, and facilitators Anne Ayling-Campos BSc(PT) MSc and Lori Palozzi RN MN. This study was supported by an unrestricted grant from Pfizer Canada.

APPENDIX 1. Trainee Semistructured Interview Guide

Hello, my name is __________ and this is ______________. We are interested in learning more about your experience in the pain interprofessional education clinical placement at SickKids. We are going to start off with some general questions. We will then ask you some more specific questions about what you liked and did not like as well as any suggestions you might have for improving the program.

Broad Questions:

Can you tell me about your experience in the pain interprofessional education program at SickKids?

Probes: Can you tell me more about that?

More specific probes:

- What did you like best about the program?

  Probes: Can you tell me more about why you liked that best?

- What did you like least about the program?

  Probes: Can you tell me more about why you liked that the least? What could we change to make that better?

- What did you think about the length of the program?

  Probes: Was it too short, too long or just right? Can you tell me more about that?

- What did you think about the educational content regarding pediatric pain?

  Probes: Was it too much, not enough or just right? Can you tell me more about that? Did it meet your learning needs? What would you like to see added or removed?

- How do you think this experience will influence the way you will manage pain in children in your everyday clinical practice in the future?

  Probes: Can you tell me more about that? Can you give me an example?

- How do you think this experience will influence the way you work with other health care professionals in managing pain in children in your everyday clinical practice in the future?

  Probes: Can you tell me more about that? Can you give me an example?

- If you had to tell another student about the program, what would you tell them?

  Probes: Would you recommend the program to another student? Why or why not?

- What would you change about the program?

  Probes: Can you tell me more about that?

- Is there anything else you would like to tell us about the pain IPE program?

  Probes: Can you tell me more about that?

APPENDIX 2. Facilitator Semistructured Interview Guide

Hello, my name is __________ and this is ______________. We are interested in learning more about your experience as a facilitator in the pain interprofessional education clinical program at SickKids. We are going to start off with some general questions. We will then ask you some more specific questions about what you liked and did not like as well as any suggestions you might have for improving the program.

Broad Questions:

Can you tell me about your experience as a facilitator in the pain interprofessional education program at SickKids?

Probes: Can you tell me more about that?

More specific probes:

- What did you like best about facilitating the program?

  Probes: Can you tell me more about why you liked that best?

- What did you like least about facilitating the program?

  Probes: Can you tell me more about why you liked that the least?

- What did you think about the length of the program?

  Probes: Was it too short, too long or just right? Can you tell me more about that?

- What did you think about the educational content regarding pediatric pain?

  Probes: Was it too much, not enough or just right? Can you tell me more about that? Did it meet the students’ learning needs? What would you like to see added or removed?

- How do you think this experience will influence the way the students will manage pain in children in their everyday clinical practice in the future?

  Probes: Can you tell me more about that? Can you give me an example?

- How do you think this experience will influence the way the students work with other health care professionals in managing pain in children in their everyday clinical practice in the future?

  Probes: Can you tell me more about that? Can you give me an example?

- If you had to tell another student or staff member at the hospital about the program, what would you tell them?

  Probes: Would you recommend the program to another student? Why or why not?

- What would you change about the program?

  Probes: Can you tell me more about that?

- Is there anything else you would like to tell us about the pain IPE program?

  Probes: Can you tell me more about that?

APPENDIX 3. Interprofessional Care Core Competencies Global Rating Scales

| Category | Description | Pre % | Post % |
|---|---|---|---|
| Collaboration | | | |
| Knowledge (Exposure) | Able to clearly and thoroughly describe own role, responsibilities, values and scope of practice to clients, patients, families and other professionals | 42.1 | 90.5 |
| Knowledge (Exposure) | Able to thoroughly and accurately identify instances where IP care will improve client, patient and/or family outcomes | 42.1 | 90.5 |
| Skills/Behaviour (Immersion) | Able to comprehensively contribute to involving other professions in client/patient/family care appropriate to their roles and responsibilities | 31.6 | 90.5 |
| Skills/Behaviour (Immersion) | Able to comprehensively contribute to effective decision-making in IP teamwork utilizing judgment and critical thinking | 15.8 | 85.7 |
| Skills/Behaviour (Immersion) | Able to comprehensively contribute to team effectiveness through reflection on IP team function | 26.3 | 90.5 |
| Skills/Behaviour (Immersion) | Able to clearly and thoroughly describe others’ roles, responsibilities, values and scopes of practice | 15.8 | 76.2 |
| Skills/Behaviour (Immersion) | Able to comprehensively contribute to the establishment and maintenance of effective IP working relationships/partnerships | 47.4 | 90.5 |
| Communication | | | |
| Skills/Behaviour (Immersion) | Able to contribute accurately and effectively to effective IP communication by addressing conflict or difference of opinions | 36.9 | 81 |
| Attitude (Immersion) | Completely aware of and open to utilize and develop effective IP communication skills | 57.9 | 95.2 |
| Skills/Behaviour (Immersion) | Able to contribute accurately and effectively to effective IP communication by self-reflecting | 47.3 | 85.7 |
| Knowledge (Exposure) | Recognize and understand clearly and thoroughly how others’ own uniqueness, including power and hierarchy, may contribute to effective communication and/or IP tension | 52.6 | 95.2 |
| Knowledge (Exposure) | Understand how my own uniqueness may contribute to effective communication and/or IP tension | 57.9 | 85.7 |
| Skills/Behaviour (Immersion) | Able to contribute accurately and effectively to effective IP communication by giving and receiving feedback | 31.6 | 81 |
| Values and Ethics | | | |
| Attitude (Exposure) | Able to clearly reflect on own values (personal and professional) and to demonstrate respect for the values of other IP team members, clients and/or families | 47.4 | 95.2 |
| Attitude (Exposure) | Able to thoroughly clarify values of accountability, respect, confidentiality, trust, integrity, honesty and ethical behaviour, equity as they relate to IP team functioning to maximize quality, safe patient/client care | 52.6 | 90.5 |

IP: Interprofessional; Pre: Pre-Pain IP Education Placement; Post: Post-Pain IP Education Placement

APPENDIX 4. Checklist preliminary evaluation of utility of Pain-Interprofessional Education Placement evaluative measures

| Quality | Definition | Criteria to rate the quality |
|---|---|---|
| Content validity | The extent to which the domain of interest is comprehensively sampled by the items in the measure | Face validity by participants and research team |
| Readability | The questionnaire is understandable for all patients | Completed questionnaire; qualitative feedback |
| Internal consistency | The extent to which items in a subscale are inter-correlated; a measure of the homogeneity of the subscale | |
| Floor-ceiling effects | The measure fails to demonstrate a worse score in patients who were clinically deteriorated and/or an improved score in patients who clinically improved | |
| Responsiveness | The ability to detect important change over time in the concept being measured | Face evaluation by research team |
| Practicality considerations | Burden to student and administration | Time to complete; ease of scoring |

Footnotes

INSTITUTION AND FUNDING: This work originated in the Centre for the Study of Pain, University of Toronto. This work was partially supported by an educational grant from Pfizer Canada.

REFERENCES

- 1. Ferrell BR, McGuire DB, Donovan MI. Knowledge and beliefs regarding pain in a sample of nursing faculty. J Prof Nurs. 1993;9:79–88. doi: 10.1016/8755-7223(93)90023-6.

- 2. McCaffery M, Ferrell BR. Nurses’ knowledge of pain assessment and management: How much progress have we made? J Pain Symptom Manage. 1997;14:175–88. doi: 10.1016/s0885-3924(97)00170-x.

- 3. McCaffery M, Ferrell BR. Nurses’ knowledge about cancer pain: A survey of five countries. J Pain Symptom Manage. 1995;10:356–69. doi: 10.1016/0885-3924(95)00059-8.

- 4. Briggs EV, Carr EC, Whittaker MS. Survey of undergraduate pain curricula for healthcare professionals in the United Kingdom. Eur J Pain. 2011;15:789–95. doi: 10.1016/j.ejpain.2011.01.006.

- 5. Fragemann K, Meyer N, Graf BM, Wiese CH. Interprofessional education in pain management: Development strategies for an interprofessional core curriculum for health professionals in German-speaking countries. Schmerz. 2012;26:369–82. doi: 10.1007/s00482-012-1158-0.

- 6. Henry JL. The need for knowledge translation in chronic pain. Pain Res Manag. 2008;13:465–76. doi: 10.1155/2008/321510.

- 7. Lynch ME. Do we care about people with chronic pain? Pain Res Manag. 2008;13:463. doi: 10.1155/2008/523614.

- 8. Sessle BJ. The pain crisis: What it is and what can be done. Pain Res Treat. 2012;2012:703947. doi: 10.1155/2012/703947.

- 9. Carr E, Hughes J, Jamison R, et al. International Association of Pain (IASP) Interprofessional Pain Curriculum Outline. 2012. <www.iasp-pain.org/Education/CurriculumDetail.aspx?ItemNumber=2057> (Accessed November 12, 2012).

- 10. Carr EC, Brockbank K, Barrett RF. Improving pain management through interprofessional education: Evaluation of a pilot project. Learn Health Soc Care. 2003;2:6–17.

- 11. Hunter J, Watt-Watson J, McGillion M, et al. An interfaculty pain curriculum: Lessons learned from six years experience. Pain. 2008;140:74–86. doi: 10.1016/j.pain.2008.07.010.

- 12. McGillion M, Dubrowski A, Stremler R, et al. The Postoperative Pain Assessment Skills pilot trial. Pain Res Manag. 2011;16:433–9. doi: 10.1155/2011/278397.

- 13. Watt-Watson J, Hunter J, Pennefather P, et al. An integrated undergraduate pain curriculum, based on IASP curricula, for six health science faculties. Pain. 2004;110:140–8. doi: 10.1016/j.pain.2004.03.019.

- 14. Herbert CP. Changing the culture: Interprofessional education for collaborative patient-centred practice in Canada. J Interprof Care. 2005;19(Suppl 1):1–4. doi: 10.1080/13561820500081539.

- 15. Hammick M, Freeth D, Koppel I, Reeves S, Barr H. A best evidence systematic review of interprofessional education: BEME Guide no. 9. Med Teach. 2007;29:735–51. doi: 10.1080/01421590701682576.

- 16. Barr H, Schmitt M. Ten years on. J Interprof Care. 2002;16:5–6. doi: 10.1080/13561820220104113.

- 17. Reeves S, Zwarenstein M, Goldman J, et al. The effectiveness of interprofessional education: Key findings from a new systematic review. J Interprof Care. 2010;24:230–41. doi: 10.3109/13561820903163405.

- 18. Oandasan I, Reeves S. Key elements for interprofessional education. Part 1: The learner, the educator and the learning context. J Interprof Care. 2005;19(Suppl 1):21–38. doi: 10.1080/13561820500083550.

- 19. Canadian Interprofessional Health Collaborative (CIHC) College of Health Disciplines. Interprofessional Education & Core Competencies; 2007. <www.cihc.ca/files/publications/CIHC_IPELitReview_May07.pdf> (Accessed on November 8, 2012).

- 20. WHO Study Group on Interprofessional Education and Collaborative Practice. Framework for Action on Interprofessional Education and Collaborative Practice. 2010.

- 21. Barr H, Freeth D, Hammick M, Koppel I, Reeves S. The evidence base and recommendations for interprofessional education in health and social care. J Interprof Care. 2006;20:75–8. doi: 10.1080/13561820600556182.

- 22. Lumague M, Morgan A, Mak D, et al. Interprofessional education: The student perspective. J Interprof Care. 2006;20:246–53. doi: 10.1080/13561820600717891.

- 23. Sinclair L, Lowe M, Paulenko T, Walczak A. Facilitating Interprofessional Clinical Learning: A Handbook for Interprofessional Education Placements and Other Opportunities: Toronto. Toronto: University of Toronto, Office of Interprofessional Education; 2007. Developing IPE Facilitator Skills: Workshop Resources.

- 24. Centre for Interprofessional Education, University of Toronto. A Framework for the Development of Interprofessional Education Values and Core Competencies. 2012. <http://ipe.utoronto.ca/sites/default/files/2012CoreCompetenciesDiagram.pdf> (Accessed on November 12, 2012).

- 25. Centre for Interprofessional Education, University of Toronto. Values and Core Competencies for health professional programs at the University of Toronto. 2012. <www.rehab.utoronto.ca/PDF/IPE.pdf> (Accessed November 12, 2012).

- 26. Canadian Interprofessional Health Collaborative (CIHC). A National Interprofessional Competency Framework. 2010. <www.cihc.ca/files/CIHC_IPCompetencies_Feb1210.pdf> (Accessed July 7, 2014).

- 27. Morgan DL. Focus Groups as Qualitative Research. 2nd edn. Thousand Oaks: Sage; 1997.

- 28. Rieman MT, Gordon M, Marvin JM. Pediatric nurses’ knowledge and attitudes survey regarding pain: A competency tool modification. Pediatr Nurs. 2007;33:303–6.

- 29. McFadyen AK, Maclaren WM, Webster VS. The Interdisciplinary Education Perception Scale (IEPS): An alternative remodelled sub-scale structure and its reliability. J Interprof Care. 2007;21:433–43. doi: 10.1080/13561820701352531.

- 30. Luecht RM, Madsen MK, Taugher MP, Petterson BJ. Assessing professional perceptions: Design and validation of an Interdisciplinary Education Perception Scale. J Allied Health. 1990;19:181–91.

- 31. Simmons B, Wagner S. Assessment of continuing interprofessional education: Lessons learned. J Contin Educ Health Prof. 2009;29:168–71. doi: 10.1002/chp.20031.

- 32. Curran V, Hollett A, Casimiro LM, et al. Development and validation of the interprofessional collaborator assessment rubric (ICAR). J Interprof Care. 2011;25:339–44. doi: 10.3109/13561820.2011.589542.

- 33. Curran V, Casimiro L, Banfield V, et al. Research for interprofessional competency-based evaluation (RICE). J Interprof Care. 2009;23:297–300. doi: 10.1080/13561820802432398.

- 34. Chandratilake M, Davis M, Ponnamperuma G. Assessment of medical knowledge: The pros and cons of using true/false multiple choice questions. Natl Med J India. 2011;24:225–8.

- 35. van der Vleuten CP, Schuwirth LW. Assessing professional competence: From methods to programmes. Med Educ. 2005;39:309–17. doi: 10.1111/j.1365-2929.2005.02094.x.

- 36. van der Vleuten CP, Schuwirth LW, Scheele F, Driessen EW, Hodges B. The assessment of professional competence: Building blocks for theory development. Best Pract Res Clin Obstet Gynaecol. 2010;24:703–19. doi: 10.1016/j.bpobgyn.2010.04.001.

- 37. Eechaute C, Vaes P, Van AL, Asman S, Duquet W. The clinimetric qualities of patient-assessed instruments for measuring chronic ankle instability: A systematic review. BMC Musculoskelet Disord. 2007;8:6. doi: 10.1186/1471-2474-8-6.

- 38. Lohr KN, Aaronson NK, Alonso J, et al. Evaluating quality-of-life and health status instruments: Development of scientific review criteria. Clin Ther. 1996;18:979–92. doi: 10.1016/s0149-2918(96)80054-3.

- 39. Miles MB, Huberman AM. Qualitative Data Analysis: An Expanded Sourcebook. Thousand Oaks: SAGE Publications; 1994.

- 40. Morse JM, Field PA. Qualitative Research Methods for Health Professionals. Thousand Oaks: SAGE Publications; 1995.

- 41. Kvale S. Interviews: An Introduction to Qualitative Research Interviewing. Thousand Oaks: Sage Publications; 1996.

- 42. Reeves S, Lewin S, Zwarenstein M. Using qualitative interviews within medical education research: Why we must raise the ‘quality bar’. Med Educ. 2006;40:291–2. doi: 10.1111/j.1365-2929.2006.02468.x.

- 43. Zwarenstein M, Reeves S, Perrier L. Effectiveness of pre-licensure interprofessional education and post-licensure collaborative interventions. J Interprof Care. 2005;19(Suppl 1):148–65. doi: 10.1080/13561820500082800.

- 44. Zwarenstein M, Goldman J, Reeves S. Interprofessional collaboration: Effects of practice-based interventions on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2009;(3):CD000072. doi: 10.1002/14651858.CD000072.pub2.

- 45. Strong J, Meredith P, Darnell R, Chong M, Roche P. Does participation in a pain course based on the International Association for the Study of Pain’s curricula guidelines change student knowledge about pain? Pain Res Manag. 2003;8:137–42. doi: 10.1155/2003/263802.

- 46. Cameron A, Ignjatovic M, Langlois S, et al. An interprofessional education session for first-year health science students. Am J Pharm Educ. 2009;73:62. doi: 10.5688/aj730462.

- 47. Anderson E, Manek N, Davidson A. Evaluation of a model for maximizing interprofessional education in an acute hospital. J Interprof Care. 2006;20:182–94. doi: 10.1080/13561820600625300.

- 48. Harris JM, Jr, Fulginiti JV, Gordon PR, et al. KnowPain-50: A tool for assessing physician pain management education. Pain Med. 2008;9:542–54. doi: 10.1111/j.1526-4637.2007.00398.x.