Abstract

Behavior, perception and cognition are strongly shaped by the synthesis of information across the different sensory modalities. Such multisensory integration often results in performance and perceptual benefits that reflect the additional information conferred by cues from multiple senses that provide redundant or complementary information. The spatial and temporal relationships of these cues provide powerful statistical information about how they should be integrated or “bound” to create a unified perceptual representation. Much recent work has examined the temporal factors that are integral to multisensory processing, with much of it focused on the construct of the multisensory temporal binding window: the epoch of time within which stimuli from different modalities are likely to be integrated and perceptually bound. Emerging evidence suggests that this temporal window is altered in a series of neurodevelopmental disorders, including autism, dyslexia and schizophrenia. Beyond their impact on sensory processing, these deficits in multisensory temporal function may play an important role in the perceptual and cognitive weaknesses that characterize these clinical disorders. Within this context, a focus on improving the acuity of multisensory temporal function may have important implications for ameliorating the “higher-order” deficits that serve as the defining features of these disorders.

Introduction

We live in a world rich with information about the events and objects around us. This information comes in a variety of forms, which we generally ascribe to our different senses. Although neuroscience has generally approached the study of sensory processes on a modality-by-modality basis, our perceptual view of the world is an integrated and holistic one in which these sensory cues are blended seamlessly into a singular perceptual Gestalt. Such a multisensory perspective calls for an intensive investigation of how the brain combines information from the different senses to influence our behaviors and shape our perceptions. This field has emerged over the past 25 years and is now growing at an impressive pace.

Beyond being necessary for building our perceptual reality, the synthesis of multisensory information confers powerful behavioral and perceptual advantages (for recent reviews see [1-4]). Indeed, these adaptive benefits, seen whenever information is available from more than a single sense, were undoubtedly among the driving evolutionary forces that led to multisensory systems. For example, in animals, the presence of cues from multiple senses has been shown to improve stimulus detection, discrimination and localization, improvements that manifest as faster and more accurate responses. Similarly, human studies have revealed multisensory-mediated performance benefits in a host of behavioral and perceptual tasks. Among the most salient of these are the speeding of simple reaction times under paired visual-auditory stimulation and the increased intelligibility of a speech signal presented in a multisensory (i.e., audiovisual) context within a noisy environment [5-13].

A great deal of work has examined the neural correlates of these multisensory-mediated changes in behavior and perception. These studies have detailed the presence and organization of a number of cortical and subcortical structures within which information from multiple senses converges, and the neural integration that accompanies this convergence, in both humans [6, 7, 14-52] and animals [52-82]. In addition, much recent work has described the modulatory influences that a “non-dominant” modality can have on information processing within the “dominant” modality, for example how visual information can affect the processing of sounds within auditory cortex [83, 84]. Indeed, these observations have spurred a debate as to whether the entire cerebral cortex (and by extension the entire brain) can be considered “multisensory” [85, 86]. Collectively, these studies have greatly advanced our understanding of how information from the different senses interacts to influence neural and network responses, and of how these responses ultimately relate to behavior and perception.

A “principled” view into multisensory processing

Along with detailing how neuronal, behavioral and perceptual responses are altered under multisensory conditions, prior work has also revealed key operational characteristics of these multisensory interactions. Perhaps most important among these was the general finding that the physical characteristics of the stimuli to be combined are important determinants of the outcome of a multisensory interaction. First studied at the level of the individual neuron, these stimulus factors include space, time and effectiveness. With regard to space and time, multisensory (e.g., visual-auditory) stimuli that are spatially and temporally proximate typically result in the largest enhancements in neuronal response [56, 58, 66, 87-90]. In addition, stimuli that are weakly effective when presented on their own result in proportionately larger enhancements when combined [71, 91, 92], a relationship known as inverse effectiveness. These basic integrative principles make a great deal of intuitive sense: spatial and temporal proximity are powerful statistical indicators that stimuli arise from the same event, and a highly salient or effective stimulus in one modality needs little amplification. Recent work has added to our understanding of the role that these stimulus factors play in multisensory interactions by highlighting their interdependency [58, 93-97]. Thus, space, time and effectiveness cannot be viewed as independent entities, since manipulating one of them (for example, spatial location) will also affect the effectiveness of the stimuli and the temporal firing patterns they evoke.
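The finding that weakly effective stimuli yield proportionately larger enhancements (often called inverse effectiveness) is usually quantified with a multisensory enhancement index: the percentage change of the multisensory response relative to the best unisensory response. The sketch below assumes that commonly used definition; the spike counts are hypothetical values chosen for illustration, not data from the studies cited above.

```python
def enhancement_index(multisensory, best_unisensory):
    """Percent change of the multisensory response relative to the larger
    of the two unisensory responses (e.g., mean spikes per trial)."""
    if best_unisensory <= 0:
        raise ValueError("best unisensory response must be positive")
    return 100.0 * (multisensory - best_unisensory) / best_unisensory


# Hypothetical mean spike counts per trial (illustrative only).
# Weakly effective pair: best unisensory response of 2 spikes, combined response of 6.
weak = enhancement_index(multisensory=6.0, best_unisensory=2.0)
# Highly effective pair: best unisensory response of 20 spikes, combined response of 24.
strong = enhancement_index(multisensory=24.0, best_unisensory=20.0)

print(f"weak pair: {weak:.0f}% enhancement; strong pair: {strong:.0f}% enhancement")
```

Both hypothetical pairs gain the same absolute number of spikes (4), yet the proportional enhancement is ten times larger for the weakly effective pair, which is the sense in which weak stimuli produce "proportionately larger" enhancements.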

Following the description of these principles at the neuronal level, a number of studies have extended this work into the behavioral and perceptual realms and have shown that these principles often apply in these domains as well. Thus, behavioral and perceptual facilitations have been shown to be greatest for stimuli that are close together in space and time [90, 98-128], and the proportional benefits of combining stimuli across modalities appear to be greatest when the individual stimuli are weakly effective [18, 21, 22, 113]. In addition, and much like the neuronal data described above, recent studies have illustrated the interdependency of these principles in human performance and perception [20, 58, 129].

One area of very active research concerns how well these principles describe all aspects of human performance and perception. Although this research was first driven by studies showing exceptions to the spatial, temporal and effectiveness principles described above, more recent thinking is converging on a more dynamic and contextual view of how these principles apply [130, 131]. In addition to illustrating the flexibility inherent in multisensory processes, this work offers strong suggestions as to the mechanisms underlying such adaptive networks and integrative processes, including oscillatory phase resetting and divisive normalization [131]. As a more concrete example, in a task in which temporal factors are relatively unimportant (e.g., stimulus or target localization), one would expect little (if any) weight to be placed on the temporal structure of the stimulus complex. Thus, current thinking invokes a flexibly specified set of interactive rules or principles, tightly related to task demands, that ultimately dictate the outcome of a multisensory stimulus combination.
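To make divisive normalization more concrete, the sketch below uses a deliberately simplified, single-unit normalization rule: the weighted sum of visual and auditory drive is raised to an expansive exponent and divided by itself plus a semi-saturation constant. This is an illustrative assumption, not the specific model discussed in [131]; the equal weights, the exponent n = 2 and the constant alpha = 1 are arbitrary choices. Even this toy rule reproduces inverse effectiveness: combining weak inputs yields a large proportional gain, while combining strong inputs yields a small one.

```python
def normalized_response(i_vis, i_aud, w_vis=1.0, w_aud=1.0, n=2.0, alpha=1.0):
    """Toy single-unit normalization: R = E**n / (alpha**n + E**n),
    where E is the weighted linear sum of visual and auditory drive."""
    drive = w_vis * i_vis + w_aud * i_aud
    return drive ** n / (alpha ** n + drive ** n)


def percent_enhancement(intensity):
    """Multisensory gain relative to the best unisensory response for
    equal-intensity visual and auditory inputs (equal weights assumed)."""
    unisensory = normalized_response(intensity, 0.0)  # visual alone (same as auditory alone here)
    multisensory = normalized_response(intensity, intensity)
    return 100.0 * (multisensory - unisensory) / unisensory


for intensity in (0.2, 0.5, 1.0, 2.0):
    print(f"input {intensity:.1f}: enhancement {percent_enhancement(intensity):6.1f}%")
```

With these parameters, weak inputs combine superadditively (the multisensory response exceeds the sum of the two unisensory responses) and strong inputs combine subadditively, because the denominator grows with the summed drive.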

In the current review, we have chosen to focus on temporal factors, in large measure because of the recent accumulation of evidence outlining how multisensory temporal function changes during typical development, and because of the growing acknowledgment that multisensory temporal acuity is altered in a number of neurodevelopmental disabilities, three of which (autism, dyslexia and schizophrenia) are highlighted in this review. Although this review is framed from the perspective of temporal function for these reasons, we must point out that, as alluded to above, spatial and spatiotemporal factors are also powerful players in the construction of our multisensory perceptual gestalt. Indeed, much work has described how these spatial and spatiotemporal factors influence multisensory interactions at the neural, behavioral and perceptual levels [20, 58, 89, 90, 97, 116, 117, 128, 132-138], and any account of multisensory function is necessarily incomplete without acknowledging the important role these factors play as “filters” for multisensory systems.

The temporal principle expanded: the multisensory temporal binding window

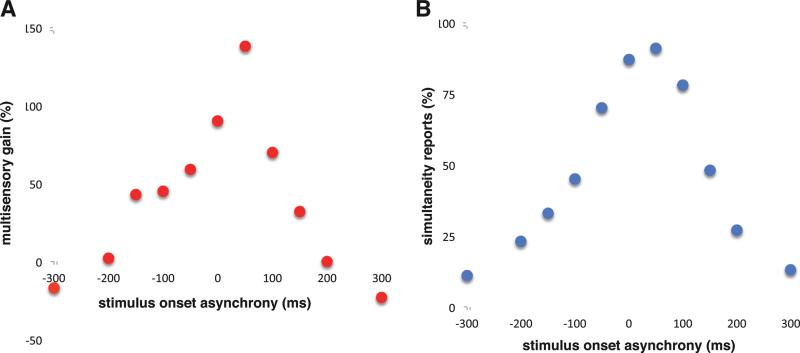

The temporal principle, originally defined on the basis of the temporal tuning functions of individual multisensory neurons (Figure 1A) [87], has been expanded to capture the effects of temporal factors on human psychophysical performance. Although the temporal properties of human performance closely resemble their neuronal counterparts (Figure 1B) [100], when these properties are examined in the context of a judgment about the unity of an audiovisual stimulus complex (i.e., did its components occur at the same time or not?), they point to a thresholding process in which the observer must make a probabilistic judgment about the nature of the stimulus complex. More concretely, in the example shown in Figure 1B, the subject is judging the simultaneity of a visual-auditory stimulus pair presented at varying stimulus onset asynchronies (SOAs), or delays. Note that when the stimuli are objectively simultaneous (i.e., an SOA of 0 ms), the subject has a high probability of correctly reporting this simultaneity. However, even with delays of 100 ms or more, the subject still reports on a high percentage of trials that the stimuli are simultaneous. Such a broad interval within which simultaneity continues to be reported suggests a degree of temporal tolerance for stimulus asynchrony, in essence creating a “window” of time within which multisensory stimuli are highly likely to be perceptually bound or integrated [99, 105, 115, 139-144].

Figure 1.

Representative multisensory temporal “filters” for neurons and perception. Panel on the left (A) shows the temporal tuning function for a neuron in the cat superior colliculus in response to paired audiovisual stimuli. Plotted is the gain in neuronal response (i.e., multisensory interactive gain) as a function of the stimulus onset asynchrony between the visual and auditory stimuli. Negative values represent conditions in which the auditory stimulus precedes the visual stimulus. Panel on the right (B) shows the responses of a representative human subject for a simultaneity judgment task. Plotted is the percentage of reports of simultaneity as a function of stimulus onset asynchrony. Note the similarities in the neuronal and psychophysical distributions.
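One way to turn a simultaneity-judgment curve like the one in Figure 1B into window estimates is to model the proportion of “simultaneous” reports as a Gaussian function of SOA and read off where the curve crosses a fixed criterion. The sketch below assumes that model, a criterion of 0.75 and hypothetical parameter values; in practice the parameters would come from fitting each participant's data, and published studies differ in the fitting function and criterion they use. SOA signs follow Figure 1: negative values mean the auditory stimulus leads.

```python
import math


def sj_curve(soa_ms, amp, pss_ms, sigma_ms):
    """Gaussian model of the proportion of 'simultaneous' reports at a given SOA.
    Negative SOA = auditory leads; positive SOA = visual leads."""
    return amp * math.exp(-((soa_ms - pss_ms) ** 2) / (2.0 * sigma_ms ** 2))


def binding_window(amp, pss_ms, sigma_ms, criterion=0.75):
    """Return (left_edge, right_edge, width) in ms: the SOAs at which the
    Gaussian falls to `criterion`, and the distance between them."""
    if not 0.0 < criterion < amp:
        raise ValueError("criterion must lie between 0 and the curve's peak")
    # Solve amp * exp(-(x - pss)^2 / (2 sigma^2)) = criterion for x.
    half_width = sigma_ms * math.sqrt(2.0 * math.log(amp / criterion))
    left, right = pss_ms - half_width, pss_ms + half_width
    return left, right, right - left


# Hypothetical participant: peak 95% "simultaneous", PSS 30 ms (visual leading), sigma 120 ms.
left, right, width = binding_window(amp=0.95, pss_ms=30.0, sigma_ms=120.0)
print(f"left (auditory-leading) edge: {left:.0f} ms")
print(f"right (visual-leading) edge: {right:.0f} ms")
print(f"total window width: {width:.0f} ms")
```

Because the hypothetical PSS is shifted toward visual-leading SOAs, the right window (measured from 0 ms) comes out wider than the left. A symmetric Gaussian cannot capture any asymmetry beyond this shift, which is one reason to fit the auditory-leading and visual-leading sides separately.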

This multisensory temporal binding window (TBW) is highly adaptive, in that it allows multisensory information to be bound even when it originates at differing distances from the observer. The biological utility of this tolerance is grounded in the substantial difference between the propagation times of visual and auditory energy. Consider a visual-auditory event happening 1 meter from you versus 34 meters away. In the first case, visual and auditory energy arrive at the eye and ear nearly simultaneously, whereas in the second case the auditory information arrives at the ear approximately 100 ms after the visual information impinges on the eye (sound travels at about 340 m/s, so it needs about 100 ms to cover 34 m, whereas light covers the same distance almost instantaneously). Additional evidence for the importance of these delays comes from measures of the point of subjective simultaneity (PSS), the temporal offset (measured at the sensory organ) at which an individual is most likely to perceive two inputs as synchronous. Intuitively, one might expect the PSS to be at 0 ms. However, in most individuals the PSS is typically observed when the auditory component of a stimulus pair slightly lags the visual component [for review, see 141].
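The arithmetic behind the 100 ms figure is simple enough to sketch. The snippet below assumes the approximate speed of sound given in the text (340 m/s) and a rounded value for the speed of light; it counts only physical propagation time and ignores differences in neural transduction and conduction between the visual and auditory systems.

```python
SPEED_OF_SOUND_M_PER_S = 340.0  # approximate value in air, as used in the text
SPEED_OF_LIGHT_M_PER_S = 3.0e8  # rounded


def audio_lag_ms(distance_m):
    """Milliseconds by which sound from an event reaches the ear after its light
    reaches the eye, counting physical propagation time only."""
    sound_travel_s = distance_m / SPEED_OF_SOUND_M_PER_S
    light_travel_s = distance_m / SPEED_OF_LIGHT_M_PER_S
    return 1000.0 * (sound_travel_s - light_travel_s)


for distance in (1.0, 10.0, 34.0):
    print(f"{distance:5.1f} m: sound lags light by {audio_lag_ms(distance):6.2f} ms")
```

At 1 m the lag is about 3 ms; at 34 m it reaches roughly 100 ms, the scale at which the tolerance of the TBW becomes functionally relevant.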

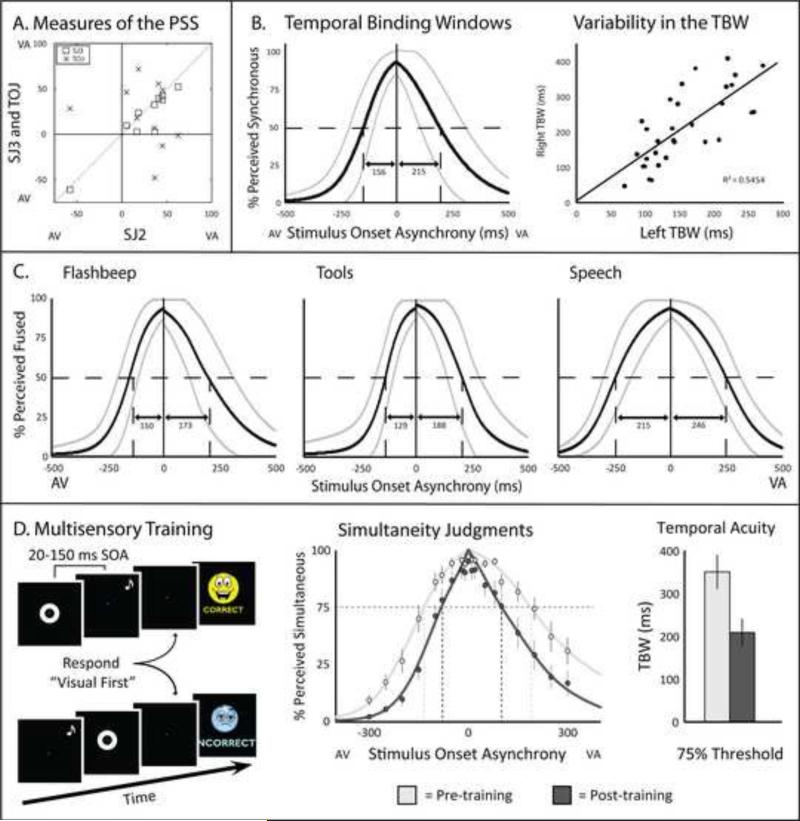

In recent years, a number of salient characteristics of this TBW have been discovered (Figure 2). First, the window differs in size for different stimuli: it is smallest for simple audiovisual stimulus pairs such as flashes and beeps, intermediate for more complex environmental stimuli such as a hammer hitting a nail, and largest for the most complex of naturalistic multisensory stimuli, speech [140, 141, 145-147]. Second, the TBW exhibits a marked degree of variability from subject to subject [148]. Third, the TBW continues to mature late into development, remaining broader than in adults well into adolescence [101, 120]. Finally, the TBW has been shown to be malleable in multiple ways: it adjusts to the temporal statistics of the environment (recalibration [122, 149-153]), and perceptual plasticity studies show that it can be substantially narrowed with feedback training [37, 103, 154, 155].

Figure 2.

Methods of characterizing multisensory temporal function in human subjects. A. Individual subjects’ points of subjective simultaneity (PSS) determined using three different temporal tasks: a two-alternative forced-choice simultaneity judgment (SJ2; “same time or different time?”), a three-alternative forced-choice simultaneity judgment (SJ3; “audio first, same time, or visual first?”), and a temporal order judgment (TOJ; “which came first?”). Note that with all three tasks, individuals’ PSS values fell in the visual-leading range. Adapted from van Eijk et al., 2008. B. The size of the multisensory temporal binding window (TBW) is highly variable between subjects, but within each subject the sizes of the left (auditory-leading) and right (visual-leading) windows are strongly correlated. Adapted from Stevenson, Zemtsov and Wallace, 2012. C. The width of the TBW depends strongly on the type of stimuli presented, with narrower windows (high temporal acuity) seen for simple stimuli (i.e., flashes and beeps) and the widest windows observed for speech stimuli. Adapted from Stevenson and Wallace, 2013. D. Perceptual learning paradigms have been shown to reliably increase individuals’ multisensory temporal acuity, as indexed by a narrowing of the TBW. Adapted from Schlesinger et al., In Press.
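For the temporal order judgment in panel A, the PSS is usually taken as the 50% point of a psychometric function relating SOA to the proportion of “visual first” responses. The sketch below assumes a cumulative Gaussian for that function, with hypothetical parameters, and uses the Python standard library's `statistics.NormalDist`. It also reports a just-noticeable difference (JND), defined here as half the SOA range between the 25% and 75% points, which is one common convention rather than the only one.

```python
from statistics import NormalDist


def toj_summary(mu_ms, sigma_ms):
    """Assume P('visual first') = Phi((SOA - mu) / sigma), with positive SOA
    meaning the visual stimulus leads. Return (PSS, JND) in ms."""
    psychometric = NormalDist(mu=mu_ms, sigma=sigma_ms)
    pss = psychometric.inv_cdf(0.50)  # SOA at which both orders are reported equally often
    jnd = (psychometric.inv_cdf(0.75) - psychometric.inv_cdf(0.25)) / 2.0
    return pss, jnd


def p_visual_first(soa_ms, mu_ms, sigma_ms):
    """Model probability of a 'visual first' response at a given SOA."""
    return NormalDist(mu=mu_ms, sigma=sigma_ms).cdf(soa_ms)


# Hypothetical observer whose PSS lies in the visual-leading range.
pss, jnd = toj_summary(mu_ms=20.0, sigma_ms=60.0)
print(f"PSS = {pss:.1f} ms, JND = {jnd:.1f} ms")
print(f"P('visual first') at physical synchrony: {p_visual_first(0.0, 20.0, 60.0):.2f}")
```

At physical synchrony this hypothetical observer is more likely to report the auditory stimulus as first, which is what a visual-leading PSS implies.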

Collectively, these studies point to the multisensory TBW as an important component of our perceptual view of the world, structured to make strong statistical inferences about the likelihood that multisensory stimuli originate from the same object or event. As highlighted below, individual differences in this window and alterations in its size are likely to have important implications for the construction of our perceptual (and cognitive) representations.

The neural correlates of multisensory temporal function

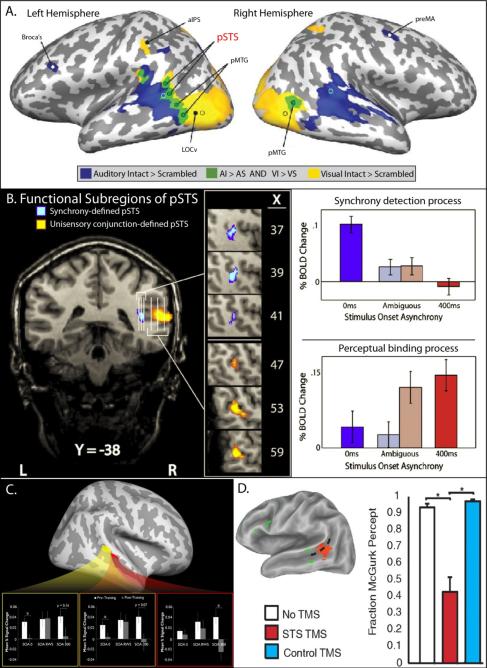

As alluded to earlier, much of the foundational work on the neural correlates of multisensory function, including its temporal constraints, has come from a midbrain structure, the superior colliculus (SC). The primary role of the SC is in the initiation and control of gaze (i.e., combined eye and head) movements toward a stimulus of interest. Following the principles described earlier, these movements are facilitated (i.e., are generally faster and more accurate) for multisensory stimuli that are spatially and temporally proximate [109, 113, 116, 117, 156-160]. However, the SC is unlikely to play a major role in perceptual judgments such as the evaluations of simultaneity described earlier. Rather, these perceptual (as opposed to sensorimotor) processes appear to be the domain of cortical regions likely to play a central role in stimulus “binding.” One of the central cortical hubs for processing audiovisual timing relations appears to be the cortex surrounding the posterior superior temporal sulcus (pSTS). The pSTS is well positioned for this role: it lies at the junction between occipital (visual) and temporal (auditory) cortex, and it receives substantial convergent input from visual and auditory cortical regions. Moreover, the pSTS is differentially active during the presentation of synchronous versus asynchronous audiovisual stimulus pairs, suggesting an important role in evaluating audiovisual timing [20, 24, 36, 37, 161]. The pSTS has also been shown to signal the perceptual binding of an audiovisual stimulus pair, responding more efficiently to a pairing with identical temporal relations when it is perceived as a single event than when it is perceived as two distinct events [36].

An additional piece of evidence for a central role of the pSTS in multisensory temporal function is that, following perceptual training that narrows the TBW, fMRI reveals activity changes in a cortical network centered on the pSTS [37]. Finally, numerous studies have shown the pSTS to be an important site for the processing of audiovisual speech cues [7, 15, 18, 19, 26, 162, 163], including work showing that disrupting the pSTS with transcranial magnetic stimulation (TMS) can markedly reduce the McGurk illusion, in which the pairing of discordant visual and auditory speech tokens typically results in a novel fused percept [162]. Collectively, these studies point to the pSTS as a key node for multisensory convergence and integration, and for the evaluation of temporal factors in the perceptual determination of stimulus binding.

The development of multisensory function

Somewhat surprisingly, although we know a great deal about the characteristics, function and behavioral/perceptual correlates of multisensory integration in adults, these processes have been less well described during development. Animal model studies have shown that multisensory neurons and their associated integrative properties mature over a protracted developmental period that extends well into “adolescence” [61, 164-167]. In addition, these studies have revealed remarkable plasticity in the development of these processes, such that changes in the statistical structure (i.e., the spatial and temporal stimulus relations) of the early sensory world result in integrative properties that match these statistics [53, 168-170].

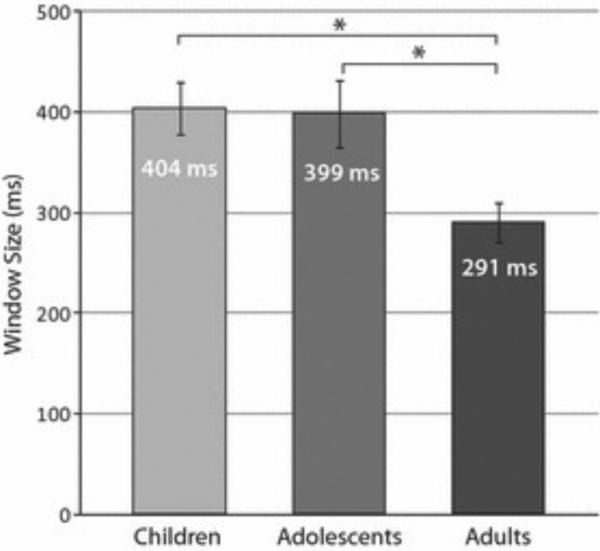

Much of the work examining multisensory function in human development has focused on the period soon after birth. These studies have revealed a beautiful sequential development in infants’ ability to evaluate (and likely bind) multisensory relations, with the capacity to evaluate simple features of a multisensory stimulus complex (e.g., duration) maturing before the ability to evaluate more complex features (e.g., rhythm) [171-173]. Examples most germane to the temporal dimension, the focus of the current review, include the findings that infants begin life with much larger temporal binding windows for both audiovisual non-speech and speech stimuli (with those for speech being largest [174-176]). In addition, the window for speech stimuli has been found not to begin narrowing until around 5 years of age [177]. More recent work from our group has shown that these developmental processes continue well beyond early childhood: the multisensory TBW remains larger than in adults well into adolescence (Figure 4) [101, 178]. Intriguingly, whether the window is enlarged appears to depend on the nature of the stimuli being combined. Thus, whereas the window is larger for pairings of simple low-level visual and auditory stimuli (i.e., flashes and beeps), it is of normal size in these children for more complex speech-related stimuli.

Figure 4.

The size of the multisensory temporal binding window (TBW) is smaller in adults than in children and adolescents. Bar graph displays mean window size for children (ages 6-11, left), adolescents (ages 12-17, middle) and adults (ages 18-23, right) (n = 15 participants per group). * = p < .05. Error bars indicate ±1 standard error of the mean (SEM). Adapted from Hillock-Dunn and Wallace, 2012.

Although far from providing a comprehensive characterization of how multisensory processes develop in the period leading up to adulthood, these studies have illustrated the long developmental interval over which these processes mature and the marked plasticity that characterizes this maturation. Against this backdrop, it is perhaps unsurprising that multisensory abilities are frequently altered in developmental disabilities.

Multisensory integration in developmental disabilities

As we have seen, the ability to perceptually bind sensory information confers significant behavioral benefits and serves to create a coherent and unified perception of the external world. If these processes develop atypically, detrimental behavioral, perceptual and cognitive consequences are likely to follow. Here, we discuss such atypical multisensory function in the context of three developmental disabilities: autism spectrum disorders, dyslexia and schizophrenia. In each case, we highlight the current behavioral and perceptual evidence for atypical multisensory temporal processing, describe the evidence for the possible neural correlates of these dysfunctions, and outline areas in which further work is needed.

Autism and emerging evidence for sensory dysfunction

Autism spectrum disorders (ASD) make up a constellation of neurodevelopmental disabilities characterized by deficits in social communicative skills and by the presence of restricted interests and/or repetitive behaviors. The most recent evidence suggests that the prevalence of ASD may be as high as 1 in 88 children [179], making it a substantial public health problem with large societal and economic costs. Although ASD was initially characterized and diagnosed on the basis of deficits in a “triad” of domains (language and communication, social reciprocity, and restricted/repetitive interests), the presence of sensory deficits is now widely acknowledged, warranting their inclusion in the recent revision of the DSM [180].

Describing and defining sensory dysfunction in ASD has been challenging in part because of the enormous heterogeneity of these changes, which range from striking hyporesponsivity (underreactivity) to hyperresponsivity, and from sensory aversions to sensory-seeking behaviors [180]. Despite this phenotypic variability, the fact that upwards of 90% of children with autism have some form of sensory alteration suggests that such alterations are a core component of autism.

One of the great challenges in assessing the nature of these sensory changes has been that the overwhelming majority of the data has come from anecdotal evidence, caregiver reports, or self-report surveys, limiting our ability to build a comprehensive and empirically grounded picture of these changes. This is now changing, as a number of studies are beginning to provide a more objective and systematic view of sensory function in autism. This work has bolstered the more subjective reports, reinforcing the presence of processing deficits in a number of sensory modalities, including vision [181-194], audition [184, 195-206], and touch [207-209].

However, and seemingly at odds with this evidence, a number of these studies have also revealed normal or even enhanced sensory function in certain children and in certain domains [210-230]. Although initially enigmatic, these normal or improved abilities appear to be restricted to tasks that tap into low-level sensory function or require extensive local (as opposed to global) processing, suggesting that early sensory processing and the neural architecture that subserves it may be preserved (or even enhanced) in the autistic brain. This finding fits within the theoretical framework in which local cortical organization and connectivity are preserved in autism, but processes that rely upon communication across brain networks are impaired (see the next section for more detail). As an elegant example, Bertone and colleagues found that in a visual grating orientation task in which the gratings were defined by luminance, children with autism outperformed typically developing children [223]. In contrast, when the gratings were defined by texture rather than luminance, the children with autism performed more poorly. Whereas the neural mechanisms for determining orientation from luminance are believed to reside in primary visual cortex (V1), the mechanisms for deriving orientation from texture are believed to operate at later stages of the visual hierarchy. This example provides evidence in support of just one of the many neurobiologically inspired models proposed to describe autism and its associated changes in sensory function.

Neurobiological models of autism

A multitude of brain-based theories of autism have been put forth, each with varying degrees of supporting evidence. Several of the more prominent of these, described briefly in this section, have been used to explain differences in sensory function in ASD (along with the more widely established changes in social communicative function).

The concept of weak central coherence is closely related to the observations described above, in that it suggests that communication across brain networks is preferentially impaired in autism [231-233]. In its simplest form, the concept posits strong deficits in holistic or “Gestalt” processing, in which individuals with autism have striking difficulties processing global features while the processing of local features is relatively intact or even enhanced. One of the hallmark tests used to differentiate local vs. global processing is the so-called “embedded figures” test, in which participants are asked to report on the number of simple shapes (e.g., triangles) contained within a larger image (e.g., the drawing of a clock). Numerous studies have shown that individuals with ASD outperform typically developing individuals on this task, but these studies disagree on the nature of the global deficits it reveals [234-237]. In many respects, weak central coherence can be subsumed within the view of autism as a functional disconnection syndrome or “connectopathy,” in which the core deficits stem from weaknesses in connectivity across brain networks; such weaknesses have been seen in both structural and functional connectivity studies [238-241]. Although framed at a different level, these changes in network function can also be seen as a consequence of changes in the excitatory/inhibitory balance, another prevailing model of the pathophysiology of autism [242]. In this model, the core deficit in autism is a disruption of the carefully balanced ratio of excitation and inhibition within and across brain networks, which can have dramatic effects on network communication and its functional correlates.

Another emerging model suggests an important role for increased noise, or a degraded signal-to-noise ratio, in the etiology of autism [243-246]. The presence of increased noise (which could come from a number of sources) would degrade the quality of information processing, with effects that grow as one ascends an information processing hierarchy and thus taps greater integrative abilities (since the noise would be cumulative). Finally, the temporal binding deficit hypothesis posits timing-related deficits as a core feature of autism [247]. Indeed, temporal integration is a core feature of processing within all sensory systems, and disruptions in timing-related circuits could give rise to supramodal or multisensory processing deficits. Although these theories have been espoused by different groups at different times, there are striking similarities among them that suggest marked commonalities and shared mechanisms. As just one example, the temporal deficit described above could result from alterations in connectivity, excitatory/inhibitory balance and/or noisy sensory and perceptual encoding.
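The cumulative-noise argument can be illustrated with a toy model, offered as an assumption rather than a model from the cited work: a signal passes unchanged through a chain of processing stages, each stage adds independent Gaussian noise with the same standard deviation, so noise variances add and discriminability (d′) falls with depth. The signal strength, noise levels, number of stages and the d′ ≥ 1 reliability criterion below are all hypothetical.

```python
import math


def d_prime_by_stage(signal, stage_noise_sd, n_stages):
    """d' after each of n_stages stages, assuming every stage adds independent
    Gaussian noise with the same SD (so the noise variance grows linearly)."""
    return [signal / math.sqrt(k * stage_noise_sd ** 2) for k in range(1, n_stages + 1)]


def deepest_reliable_stage(d_primes, criterion=1.0):
    """1-based index of the deepest stage whose d' still meets the criterion
    (0 if none does). d' falls monotonically with depth in this model."""
    reliable = [k for k, d in enumerate(d_primes, start=1) if d >= criterion]
    return max(reliable) if reliable else 0


typical = d_prime_by_stage(signal=3.0, stage_noise_sd=1.0, n_stages=10)
noisy = d_prime_by_stage(signal=3.0, stage_noise_sd=1.5, n_stages=10)  # hypothetical elevated noise
print("deepest reliable stage, typical noise:", deepest_reliable_stage(typical))
print("deepest reliable stage, elevated noise:", deepest_reliable_stage(noisy))
```

Elevated per-stage noise lowers d′ at every stage, and its functional consequence shows up as the system crossing the reliability criterion at an earlier stage; in this toy model, computations that depend on deeper, more integrative stages are the first to fail.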

Multisensory contributions to autism

The prevalence of observations highlighting deficits in multiple sensory systems, coupled with evidence that integrative functions across brain networks may be preferentially impacted, has led to an examination of the role that multisensory dysfunction may play in autism [248]. Although as highlighted above there is now clear evidence for changes in function within the individual sensory systems, this work is predicated on the view that these unisensory deficits may not completely capture the nature of the changes in processes that index integration across the different sensory systems. In recent years, a number of labs, including our own, have attempted to provide a better view into the nature of these changes in multisensory function in those with autism.

To date, the picture that has been generated by these studies has been a complex and confusing one. Thus, although a number of studies have reported changes in multisensory function that extend beyond those predicted on the basis of changes in unisensory function, others have found either normal multisensory abilities, or deficits that can be completely explained based on unisensory performance. One of the best illustrations of this complexity is in work that has explored the susceptibility of individuals with autism to the McGurk effect – the perceptual illusion that indexes the synthesis of visual and auditory speech signals [249]. Whereas some groups have found weaknesses in this perceptual fusion [250-256], others have found normal McGurk percepts [257, 258] or changes in McGurk reports that are accountable by changes in responsiveness to the visual or auditory speech tokens [259]. The likely explanations for the substantial disparities across studies include differences in the composition of the ASD cohort (with age and severity of symptoms being significant factors) and differences in how the specific tasks are structured. Thus, even for the McGurk effect, different stimuli and response modes have been used to assay the illusion.

Changes in multisensory temporal function in autism

Despite this confusion, one of the more robust findings in autism is poorer multisensory temporal acuity – a finding that typically manifests as a broadening of their multisensory TBW [146, 147, 196, 260, 261]. In addition to their concordance with the general finding of sensory changes in children with autism, these results are also in agreement with a substantial body of evidence pointing to deficits in timing or temporally-based processes in autism. Indeed, these deficits have been encapsulated within one of the neurobiologically-inspired theories of autism described earlier - namely the temporal binding deficit hypothesis [247].

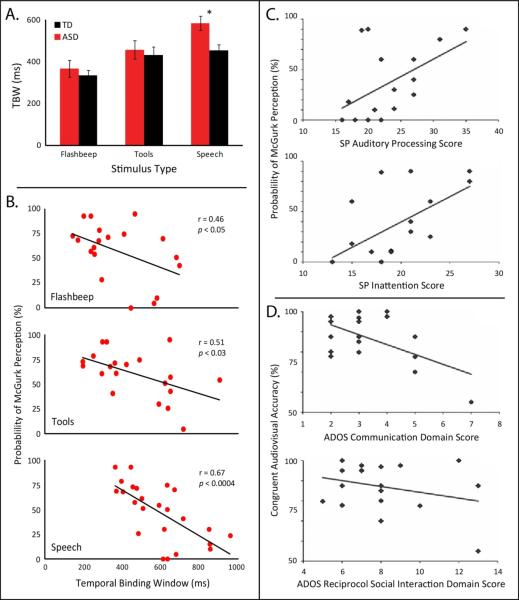

Changes in multisensory temporal function in autism have been found using a number of different tasks, including simultaneity judgments [147], temporal order judgments [146, 196], the perception of the sound-induced flash illusion [260], and preferential looking tasks [261] (Figure 5). In each of these studies, the basic finding is that individuals with ASD perceive paired visual-auditory stimuli as originating from the same event over longer time intervals than control groups do (i.e., they report simultaneity even when the stimuli are substantially asynchronous). One interesting and as yet unresolved difference between these studies is whether the TBW is extended for all types of visual-auditory stimuli or only for specific stimulus types more closely related to the well-established domains of weakness (e.g., speech). Thus, whereas some work supports differences only for speech-related stimuli [147, 261], other studies suggest more generalized temporal deficits that extend to pairs of very simple stimuli (i.e., flashes and beeps) [146]. Although future work will need to resolve these differences, it is important to point out that, although the brain networks supporting multisensory (or at least audiovisual) temporal function are likely to share common components, separate mechanisms are also likely to govern the integration of low- vs. higher-level audiovisual stimuli. For example, the integration of lower-level flashes and beeps (which can be considered an “arbitrary” pairing) is unlikely to involve brain regions concerned with contextual or semantic congruence (another important facet of multisensory binding), whereas the integration of higher-level stimuli such as object or speech cues will also engage network components performing such contextual computations.

Figure 5.

Differences in the multisensory temporal binding window are a characteristic feature of autism and relate to other domains of deficit. A. Temporal binding windows (TBWs) measured in individuals with and without ASD differ across stimulus types. A main effect of complexity was seen in both groups, with more complex stimuli associated with wider TBWs. An interaction showed that this effect of complexity was greater in individuals with ASD and, most notably, that the difference between the ASD and TD groups was specific to the processing of audiovisual speech stimuli. Adapted from Stevenson et al., J Neurosci. 2014. B. In individuals with ASD, the ability to perceive the McGurk effect is negatively correlated with the width of the TBW. That is, as individuals’ multisensory temporal acuity decreased (wider TBWs), so too did their ability to perceptually bind audiovisual speech in order to perceive the McGurk effect. This relationship was seen when the TBW was measured using simple (i.e., flash-beep), complex non-speech (i.e., tools) and speech stimuli, suggesting that it is based, at least in part, on low-level multisensory temporal processing. Adapted from Stevenson et al., J Neurosci. 2014. C. Individuals who showed more atypical auditory processing, as measured by the auditory processing score of the Sensory Profile Caregiver Questionnaire (SP), showed lower rates of McGurk perceptions (r = 0.51, p < 0.05). Similarly, individuals who showed atypical attention, as measured by the inattention score of the SP, showed weaker McGurk perceptions (r = 0.61, p = 0.01). Adapted from Woynaroski et al., J Autism Dev Disord. 2014. D. Individuals who showed greater difficulties with communication, as measured by the communication domain score of the Autism Diagnostic Observation Schedule (ADOS), were less likely to accurately perceive congruent audiovisual speech (r = −0.58, p < 0.05). A similar trend was seen with the ADOS reciprocal social interaction domain score but failed to reach significance (r = −0.29, p > 0.05), likely reflecting the relatively small sample size. Adapted from Woynaroski et al., J Autism Dev Disord. 2014.

Why would an enlarged TBW in autism necessarily be a bad thing? One reason is that the temporal fidelity, or tuning, of multisensory systems determines which stimuli should be bound and which should not. The binding of stimuli over longer temporal intervals is likely to result in poor or “fuzzy” multisensory perceptual representations in which there is a great deal of ambiguity about stimulus identity. Typically, the temporal relationship between two sensory inputs is an important cue as to whether those inputs should be bound. When the perception of this temporal relationship is less acute, subjective temporal synchrony loses its reliability as a cue for binding. The end result of losing such a salient and informative cue is that the individual shows weaker binding overall. In support of this idea, recent work from our laboratory has illustrated a strong relationship between the multisensory TBW and the strength of perceptual binding [147, 148]. In this work, the width of the TBW was found to be strongly negatively correlated with perceptual fusions as indexed by the McGurk effect (Figure 5). This finding lends strong support to the link between multisensory temporal function and the creation of perceptual representations, an area of inquiry that we believe will be extremely informative moving forward.
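The negative relationship described above is a correlation computed across individuals. A minimal sketch of the Pearson coefficient is shown below; the participant values are invented for illustration and are not data from [147, 148], and a real analysis would also require a significance test, which is omitted here.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    # Undefined (division by zero) if either variable has zero variance.
    return sxy / math.sqrt(sxx * syy)


# Invented values for five hypothetical participants.
tbw_ms = [180, 240, 300, 330, 420]
mcgurk_rate = [0.85, 0.70, 0.55, 0.60, 0.30]
print(round(pearson_r(tbw_ms, mcgurk_rate), 2))
```

A negative r here means that wider windows go with fewer fused (McGurk) percepts, which is the direction of the effect the text describes.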

Multisensory temporal function and the creation of veridical perceptual and cognitive representations

In our view, the importance of the work cited above extends well beyond the links that have currently been established. Sensory, and by extension multisensory, processes form the building blocks upon which perceptual and cognitive representations are created. These input streams are crucial not only for the “maps” that form the cornerstone of early subcortical and cortical sensory representations, but also for so-called “higher-order” processes that depend on the integrity of the information within the incoming sensory streams. Such a framework predicts that changes in sensory and multisensory processes will have cascading effects upon the information processing hierarchy, ultimately impacting cognitive domains such as attention, executive function, language and communication, and social interaction [147, 262-266].

Focusing on the social and communicative domains because of their relevance to autism, we note that both depend not only on sensory information but also on the integration of information across the different sensory modalities [266]. Language and communicative function are highly multisensory, depending not only upon the auditory channel but also upon associated visual cues, such as articulatory gestures, that provide vital information for the comprehension of the speech signal (particularly in noisy environments; see [5-13]). In a similar fashion, the interpretation of social cues is heavily dependent upon multisensory processes. Inflections of the voice, facial gestures, and touch convey important social information that must be properly integrated in order to fully understand the content of the social setting.

Although this framework is intuitively appealing, much work needs to be done to establish these critical links between sensory and multisensory function and higher-order abilities. Indeed, ongoing work in our laboratory is using large-scale correlational matrices to identify important associations between a battery of sensory and multisensory tasks that we now routinely use and a host of measures of cognitive abilities. Relatedly, we have recently examined multisensory speech perception in a cohort of ASD and typically developing (TD) children between the ages of 8 and 17 [147]. Consistent with our prior work, children with ASD showed a wider multisensory TBW, as well as differences in the degree to which they fused congruent audiovisual speech stimuli. Children with ASD also exhibited a strong relationship between the strength of their perceptual binding on congruent audiovisual trials and the communication subscore of the ADOS, with lower (i.e., more typical) scores being associated with greater binding [267] (Figure 5). Thus, the temporal acuity of individuals’ multisensory binding is directly correlated with their ability to integrate audiovisual speech, and the association between audiovisual speech binding and ADOS communication scores suggests that this relationship may extend into the clinical manifestations of ASD. Although this work suggests important links between some of the key diagnostic features of autism and multisensory function, much more needs to be done to fully elucidate the nature of these relationships.

In addition to the recent data linking multisensory temporal acuity, speech integration and ADOS communication scores in ASD, ongoing research has begun to examine these relationships with autistic-like traits in the general population (referred to as the broader or extended phenotype). Autistic traits are found to varying degrees in the population at large and can be indexed through scales such as the Autism-spectrum Quotient [ASQ; 268] or the Broad Autism Phenotype Questionnaire [269]. These trait measures can then be correlated with any number of perceptual measures. For example, a recent study by Donohue and colleagues [270] showed that the point of subjective simultaneity (PSS) varies with the (non-clinical) level of autistic traits an individual exhibits. The PSS, the point in time at which an individual perceives a visual and an auditory event to be perfectly synchronous, tends to occur when the auditory component slightly lags the visual component, reflecting the statistics of the natural environment (i.e., sound travels more slowly than light, so the auditory signal from an event arrives after the corresponding visual signal). Individuals showing greater levels of autistic traits, however, tend to have PSS measurements closer to physical synchrony, reflecting a decreased adaptation to the statistics of the external environment.
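The environmental statistic invoked above is simple arithmetic: sound travels at roughly 343 m/s in air at room temperature, whereas light is effectively instantaneous over everyday distances, so the acoustic signal from an event 10 m away arrives about 29 ms after the visual signal. The sketch below computes this physical lag only; it deliberately ignores modality differences in neural transduction and conduction time, so it is not a model of the PSS itself.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value in dry air at about 20 degrees C
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def audio_lag_ms(distance_m):
    """How much later (ms) sound arrives than light from a source distance_m away."""
    return 1000.0 * (distance_m / SPEED_OF_SOUND_M_PER_S
                     - distance_m / SPEED_OF_LIGHT_M_PER_S)


for d in (1, 10, 30):
    print(f"{d:>2} m: sound lags light by {audio_lag_ms(d):.1f} ms")
```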

The neurobiological substrate for an extended multisensory TBW in ASD

As described earlier, the cortex of the posterior superior temporal sulcus (pSTS) has been implicated as a major node in the computation of multisensory temporal relations. Hence, given the wealth of evidence suggesting alterations in multisensory temporal function in autism, a logical biological basis for these differences would be changes in the structure and/or function of the pSTS. Indeed, some of the most characteristic structural alterations in the brains of those with autism are differences in gray and white matter associated with the pSTS [271-275]. Furthermore, a number of functional studies (i.e., fMRI) have pointed to differences in the activation patterns within the pSTS in autism, as has work looking at both functional and structural connectivity of the pSTS [276-281]. Finally, our lab has shown that training focused on improving multisensory temporal acuity results in changes in activation and connectivity in a network centered on the pSTS [37]. Collectively, these studies suggest that changes in the pSTS in individuals with autism may represent the neural basis for altered multisensory temporal function, and that the pSTS may be a key node in networks responsible for the changes in social and communicative function.

Multisensory temporal contributions to developmental dyslexia

Although autism represents the clinical condition in which multisensory function has been best characterized, evidence suggests that multisensory deficits, and specifically those in the temporal domain, are not unique to autism. Both sensory and multisensory changes have been found to accompany dyslexia, a reading disability in which affected individuals have profound reading difficulties against a background of normal or even above-normal intelligence. As with autism, numerous neurobiological theories have been proposed for dyslexia, most of them centered on the substantial differences in phonological processing seen in these individuals. Although many of these theories focus on changes in brain structures responsible for the processing of phonology and phonological relations (e.g., see [282-286]), others have suggested that these phonological deficits may result from processing difficulties at earlier stages. One of the most well-developed of these views centers on the magnocellular layers of the thalamus [287, 288]. In this view, selective deficits in the magnocellular visual stream, which subserves the processing of motion, play a key role in dyslexia. Supporting evidence for this theory comes from reports of abnormal eye movements in dyslexia, and from altered activation patterns in areas of the cerebral cortex specialized for processing stimulus motion [289].

The evidence for changes in both visual and auditory function in dyslexia suggests that it may be fruitful to consider the disorder within a more pansensory or multisensory framework. Indeed, some of the original clinical descriptions of dyslexia from the neurologist Samuel Orton are rife with multisensory references [290], and to date the most widely adopted intervention approach, the Orton-Gillingham method, is founded on multisensory principles [291]. In addition, several early studies of reading-disabled and reading-delayed individuals found changes in cross-modal (visual-auditory) temporal function, consistent with a multisensory contribution to reading dysfunction [292, 293]. In order to attribute a specific multisensory contribution to the disorder, however, it is first necessary to show that the multisensory changes cannot be ascribed simply to changes in unisensory function. Stated differently, it would not be terribly surprising (or interesting) to see multisensory changes accompanying changes in visual (and/or auditory) function. The key question is whether these changes go beyond those that can be predicted from unisensory differences.

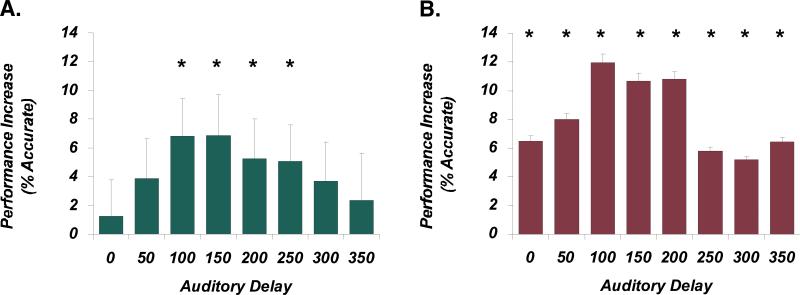

In an effort to examine specific multisensory alterations in dyslexia, we adopted a multisensory version of the familiar and frequently employed visual temporal order judgment (TOJ) task. Prior work in typical subjects had found that the introduction of a pair of task-irrelevant sounds during performance of the visual TOJ task could improve performance, most notably when the second sound lagged the appearance of the second light [294]. Taking advantage of this task, we were able to show striking differences between dyslexic and typical readers, specifically in the time window within which the second auditory stimulus could enhance visual performance (Figure 6) [142]. Dyslexic readers benefited from this sound over intervals more than twice as long as those of typical readers, suggesting that they are “binding” visual and auditory stimuli over unusually long periods of time. We speculate that such an extended TBW will present substantial difficulties for the construction of strong reading representations, in that it will create greater ambiguity as to which auditory elements of speech (i.e., phonemes) belong with which visual elements of the written word (i.e., graphemes). In support of this, EEG studies have shown that as readers progress to fluency, letters and speech sounds are combined early and automatically in auditory association cortex, and that this processing is strongly dependent on the relative timing of the paired stimuli [295, 296]. Furthermore, this progression to automaticity was found not to take place in dyslexic readers [297].
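The key dependent measure in this paradigm is the range of sound delays over which the accessory sound improves visual TOJ accuracy. A minimal sketch of how that range could be read off per-delay accuracies is shown below; the gain threshold, the contiguous-run rule, the function name `benefit_window` and the accuracy values are all illustrative assumptions, not the analysis used in [142].

```python
def benefit_window(delays_ms, acc_with_sound, acc_visual_only, min_gain=0.02):
    """Return (first, last) delay of the contiguous run of delays, around the
    largest gain, at which the accessory sound raised accuracy by more than
    min_gain over the visual-only baseline; None if no delay qualifies.

    delays_ms must be sorted in ascending order.
    """
    gains = [acc - acc_visual_only for acc in acc_with_sound]
    peak = max(range(len(gains)), key=gains.__getitem__)
    if gains[peak] <= min_gain:
        return None
    lo = hi = peak
    while lo > 0 and gains[lo - 1] > min_gain:
        lo -= 1
    while hi < len(gains) - 1 and gains[hi + 1] > min_gain:
        hi += 1
    return delays_ms[lo], delays_ms[hi]


# Invented accuracies for one hypothetical observer.
delays = [0, 50, 100, 150, 200, 250, 300, 350]
acc = [0.70, 0.71, 0.76, 0.78, 0.77, 0.75, 0.70, 0.70]
window = benefit_window(delays, acc, acc_visual_only=0.70)
print(window, "span:", window[1] - window[0], "ms")
```

Comparing this span between groups is one way to express the observation that dyslexic readers benefited from the sound over much longer intervals than typical readers.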

Figure 6.

Alterations in multisensory temporal function in developmental dyslexia. A. Typical readers show a pattern of benefits on a visual temporal order judgment (TOJ) task in which an accessory auditory stimulus can facilitate task performance, but only when presented with a specific temporal structure relative to the visual stimuli. Specifically, accuracy improvements are seen only when the second auditory stimulus is delayed by 100 to 250 ms. B. In contrast, for dyslexic readers both the magnitude and the temporal pattern of benefits differ substantially. Most importantly, performance improvements are now seen at all tested delays, suggesting an enlargement of the audiovisual temporal binding window. Adapted from Hairston et al., 2005.

Additional evidence from outside the domain of temporal function also supports multisensory alterations in dyslexia. For example, deficits in spatial attention to both visual and auditory stimuli have been linked to phonological skills in dyslexia [298]. In addition, Blau and colleagues have shown using fMRI that dyslexic readers underactivate regions of the superior temporal cortex when binding the auditory and visual components of a speech signal [299]. As highlighted earlier, the cortex surrounding the pSTS is a critical node for the convergence of auditory and visual information, and appears to play a key role in the temporal binding of these signals. Indeed, the pSTS and the adjacent superior temporal gyrus have been implicated as key regions of difference between typical and dyslexic readers (e.g., see [300-304]). Overall, these studies point to an important role for multisensory function in dyslexia, but much more work is needed to understand how these changes ultimately result in poor reading performance [305].

Evidence for multisensory abnormalities in schizophrenia

Schizophrenia is a complex psychiatric disorder best characterized by changes in thought and emotional reactivity. Delusions and hallucinations frequently accompany schizophrenia, the latter prompting research into the nature of sensory (i.e., auditory) processing differences and their contributions to the cognitive changes seen in the disorder [306-312]. Although these studies have highlighted changes in auditory and visual processes and cortical organization in schizophrenia, no clear picture has emerged of how sensory dysfunction contributes to the overall schizophrenia phenotype. Nonetheless, as for autism and dyslexia, the presence of these sensory changes across multiple modalities calls for an examination of multisensory function.

Clinical reports have long suggested changes in multisensory function in schizophrenia, most notably in the ability to match stimuli across the different sensory modalities (i.e., cross-modal matching; see [313]). More empirically directed work subsequently found deficits in the integration of audiovisual stimuli in schizophrenia cohorts; these deficits appeared to be restricted to speech-related audiovisual stimuli and were amplified under noisy conditions [314-318]. A subset of these studies also revealed differences in multisensory performance specifically when the tasks indexed the emotional valence of the auditory (voice) and visual (face) stimuli. Other work has suggested deficits in lower-level multisensory integration, demonstrating reduced facilitation of reaction times on a visual-auditory target detection task [319]. In a recent EEG study comparing individuals with schizophrenia and controls, the neural signatures associated with typical audiovisual integration were found to be absent or compromised in the patients with schizophrenia [320].

One crucial issue as it relates to the establishment of specific multisensory deficits in schizophrenia (or in any other clinical condition) is to show that changes in performance and/or perception are either unique to the multisensory conditions, or cannot be predicted based on the changes seen in unisensory function. For this reason, it is essential that measures of multisensory function are contrasted against unisensory measures. Although such unisensory-multisensory contrasts are becoming increasingly common, some of the earlier studies failed to test for unisensory changes, making it difficult to interpret the differences in multisensory performance.
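For reaction-time measures such as the target detection task mentioned above [319], one widely used way to ask whether multisensory facilitation exceeds what unisensory performance predicts is Miller's race model inequality: if auditory and visual signals were processed independently and the faster one simply won, the audiovisual RT distribution could not exceed the sum of the two unisensory distributions at any time point. The sketch below is offered as an illustration of that test, not as the analysis used in the cited studies; the reaction times are invented, and group-level statistics across participants are omitted.

```python
from bisect import bisect_right


def ecdf(sample, t):
    """Empirical probability that a reaction time in `sample` is <= t."""
    ordered = sorted(sample)
    return bisect_right(ordered, t) / len(ordered)


def race_model_violation(rt_a, rt_v, rt_av, times):
    """Difference between the audiovisual RT distribution and Miller's bound.

    At each time t the race-model bound is min(1, P(A <= t) + P(V <= t)).
    Positive values mean the audiovisual condition is faster than any race
    between independent unisensory processes could produce.
    """
    result = []
    for t in times:
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))
        result.append(ecdf(rt_av, t) - bound)
    return result


# Invented reaction times (ms) for one hypothetical participant.
rt_a = [300, 320, 340, 360]
rt_v = [310, 330, 350, 370]
rt_av = [250, 260, 270, 280]
print(race_model_violation(rt_a, rt_v, rt_av, times=[260, 300, 330]))
# prints [0.5, 0.75, 0.0]
```

Because the bound is built entirely from the unisensory conditions, a positive violation is evidence for integration that cannot be predicted from unisensory performance alone, which is the kind of contrast the paragraph above calls for.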

Numerous prior studies have suggested that in addition to sensory-based problems, individuals with schizophrenia have alterations in temporal perception [312, 321-323]. Indeed, prior work has merged these areas of inquiry, and has shown changes in both unisensory and multisensory temporal perception in schizophrenia, which manifest as a lessened acuity in judging the simultaneity between visual, auditory and combined visual-auditory stimulus pairs [322, 324]. In an effort to follow up on this work with an emphasis on the TBW and on the specificity of these effects for multisensory integration, we have recently embarked on a study designed to detail the nature of these changes and their relationships to the constellation of clinical symptoms. Although preliminary, this work is suggestive of changes in multisensory temporal function that we believe may be important factors in the schizophrenia phenotype.

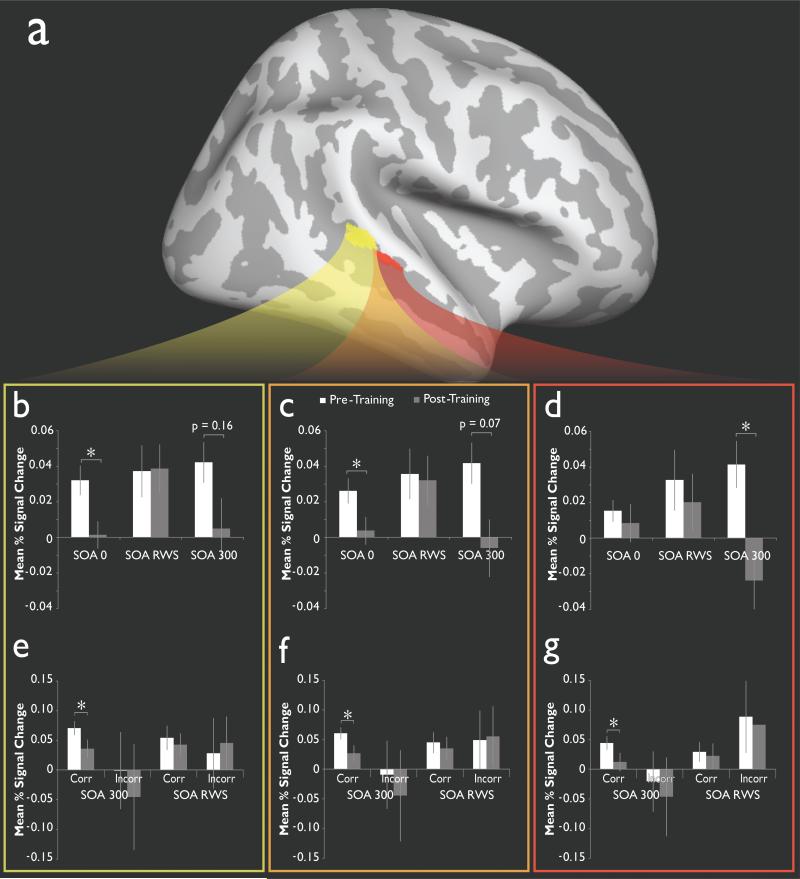

Training as a therapeutic tool to engage unisensory and multisensory plasticity

As alluded to in the prior section, ongoing work in our laboratory has focused on using approaches grounded in perceptual plasticity to train sensory and multisensory systems. In addition to their application for cochlear implant users, we believe that such methods also hold promise for clinical conditions such as autism and schizophrenia, most notably for improving sensory and multisensory temporal acuity. As highlighted in an earlier section, we have successfully trained individuals to narrow their TBW [103, 154], with these changes accompanied by changes in a brain network centered on the pSTS (Figure 7) [37]. Most encouraging in these normative studies was the finding that those who benefited most from training (i.e., showed the largest changes in the size of their TBW) were those whose TBW was largest prior to training [103, 154]. Hence, the findings of enlarged multisensory TBWs in autism, dyslexia and schizophrenia suggest that these individuals may be particularly responsive to perceptual training methods.

Figure 7.

Training on a multisensory temporal task results in activation changes in a network of areas centered on the pSTS. A. Red and yellow colors represent two ROIs in the pSTS that showed activation during visual and auditory conditions and that were altered following perceptual training. B-D. Mean percent signal change for all voxels in the posterior pSTS ROI (yellow box, B), the anterior pSTS ROI (red box, D) and the two combined (orange box, C). Note that significant decreases in the BOLD response were found following training for stimulus onset asynchronies (SOAs) that represent the easiest conditions (i.e., SOA 0 and SOA 300). In contrast, little change was seen for the intermediate SOA that defined the borders of each individual's TBW (SOA RWS). E-G. Mean percent signal change as a function of accuracy for SOA 300 and SOA RWS trials for the posterior (E), anterior (G) and combined ROIs (F). Adapted from Powers, Hevey and Wallace, 2012.

In preliminary work in autism, we have shown this to be the case, with several days of training resulting in a significant narrowing of the TBW. Although very exciting, this work needs to be extended to show that training produces changes beyond the trained task and domain. We are encouraged by results in our typical cohort, which have shown that training using low-level stimuli (i.e., flashes and beeps) on one task (i.e., simultaneity judgment) can change the TBW for higher-level (i.e., speech) stimuli in the context of a different task (i.e., perceptual fusions as indexed by the McGurk effect). Such generalization is extraordinarily exciting, but this work must now be extended to determine whether these training regimens can engender meaningful change in measures of real-world function, such as social skills and communication. Although still in their early stages, we feel that these perceptual plasticity-based approaches hold great promise as potential tools that can be incorporated into behaviorally based remediation methods.

Concluding remarks

Sensory and multisensory dysfunction accompanies many developmental disabilities. Although widely acknowledged, these deficits are often overlooked as potential sources of insight into, and contributors to, the characteristics considered defining for each disorder. Using autism as an example, it is only with the recent release of the DSM-5 that sensory problems have been considered a core feature of the disorder. Even with this important acknowledgment, little empirical evidence exists to characterize the nature of these sensory disturbances and, perhaps more importantly, to relate these changes to higher cognitive abilities. This landscape is changing, and emerging work is beginning to reveal the importance of a more integrated and holistic view of these interactions and interrelationships.

The current review focuses on but one facet of these sensory changes – multisensory temporal processes – and on but a few of the clinical conditions in which a picture is beginning to emerge. The presented evidence illustrates that both unisensory (i.e., within modality) and multisensory (i.e., across modality) processes are frequently affected in autism, dyslexia and schizophrenia. There is surprising commonality in the way in which multisensory function is altered in these three disorders, with the principal finding being an enlargement in the width of the multisensory temporal binding window – that epoch of time within which stimuli from different modalities interact and influence one another's processing. How such an enlarged time window ultimately impacts the perceptual and cognitive features that define each of these conditions, particularly given the striking phenotypic differences between them, remains to be determined. Nonetheless, these results bring into focus the critical importance of sensory and multisensory function, and the strong need to employ a battery of tasks designed to index various aspects of (multi)sensory function, and to relate performance on these tasks to cognitive and perceptual abilities in order to establish sensory-perceptual-cognitive links. In conjunction with brain-based physiological measures, such as EEG and MRI, and the associated connectivity and network analyses, these approaches will undoubtedly reveal key pathophysiological features for each of these clinical conditions.

Finally, in addition to providing a more integrated and detailed view of these behavioral, perceptual and neurobiological characteristics, the current work holds great promise from an interventional perspective. This promise rests on the view that (multi)sensory function forms the building blocks for higher-order representations. Thus, training methods that improve (multi)sensory function are also likely to have effects that cascade beyond the trained tasks and domains.

Highlights (NSY-D-14-00152).

The review focuses on altered multisensory function in developmental disabilities

Multisensory temporal acuity is altered in autism, dyslexia and schizophrenia

The construct of the multisensory temporal binding window is critical in perception

Perceptual training may have utility in improving multisensory function

Figure 3.

The cortex surrounding the posterior superior temporal sulcus (pSTS) is integrally involved in the integration of visual and auditory information. A. The pSTS, defined as the conjunction of brain regions functionally responsive to intact auditory stimuli over their scrambled equivalents AND to intact visual stimuli over their scrambled equivalents. The pSTS is located directly between auditory-only and visual-only responsive regions, making it a logical site for audiovisual convergence and integration. Adapted from James et al., 2012. B. Subregions of the pSTS appear to be engaged in different multisensory processes. The subregion of the pSTS defined as responding more to synchronous than to asynchronous stimuli does not respond differentially according to the individual's perceptual reports (pink and light blue bars). In contrast, the subregion of the pSTS defined as a conjunction of auditory and visual responsive regions (see Figure 3A) responds differentially according to the individual's perceptual reports (pink and light blue bars), even when the stimuli are identical. These data suggest that this may be the region of perceptual “binding” for the auditory and visual stimuli. Adapted from Stevenson et al., 2011. C. When participants are given perceptual feedback training to improve multisensory temporal processing, the neural analogs of this change are centered on the pSTS. The BOLD responses from these regions show more efficient processing of synchronous (and thus likely perceptually bound) stimuli. Adapted from Powers et al., 2012. D. When TMS is applied to the pSTS during presentation of stimulus pairs that typically result in the McGurk illusion, individuals’ ability to perceptually bind the auditory and visual components is impaired, resulting in decreased perception of the illusion. Adapted from Beauchamp et al., 2010.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Bibliography

- 1.Stein BE, Meredith MA. The Merging of the Senses. MIT Press; Cambridge, MA: 1993. [Google Scholar]

- 2.King AJ, Calvert GA. Multisensory integration: perceptual grouping by eye and ear. Curr Biol. 2001;11(8):R322–5. doi: 10.1016/s0960-9822(01)00175-0. [DOI] [PubMed] [Google Scholar]

- 3.Stein BE, et al. Multisensory integration. In: Ramachandran V, editor. Encyclopedia of the Human Brain. Elsevier; Amsterdam: 2002. pp. 227–241. [Google Scholar]

- 4.Calvert GA, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. The MIT Press; Cambridge, MA: 2004. [Google Scholar]

- 5.Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215. [Google Scholar]

- 6.Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: Inverse effectiveness and the neural processing of speech and object recognition. Neuroimage. 2009;44(3):1210–23. doi: 10.1016/j.neuroimage.2008.09.034. [DOI] [PubMed] [Google Scholar]

- 7.Bishop CW, Miller LM. A multisensory cortical network for understanding speech in noise. J Cogn Neurosci. 2009;21(9):1790–805. doi: 10.1162/jocn.2009.21118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Girin L, Schwartz J-L, Feng G. Audio-visual enhancement of speech in noise. J Acoust Soc Am. 2001;109:3007. doi: 10.1121/1.1358887. [DOI] [PubMed] [Google Scholar]

- 9.MacLeod A, Summerfield Q. Quantifying the contribution of vision to speech perception in noise. Br J Audiol. 1987;21(2):131–41. doi: 10.3109/03005368709077786. [DOI] [PubMed] [Google Scholar]

- 10.Grant KW, Walden BE, Seitz PF. Auditory-visual speech recognition by hearing-impaired subjects: consonant recognition, sentence recognition, and auditory-visual integration. J Acoust Soc Am. 1998;103(5 Pt 1):2677–90. doi: 10.1121/1.422788. [DOI] [PubMed] [Google Scholar]

- 11.Grant KW, Walden BE. Evaluating the articulation index for auditory-visual consonant recognition. J Acoust Soc Am. 1996;100(4 Pt 1):2415–24. doi: 10.1121/1.417950. [DOI] [PubMed] [Google Scholar]

- 12.Erber NP. Auditory-visual perception of speech. The Journal of speech and hearing disorders. 1975;40(4):481–92. doi: 10.1044/jshd.4004.481. [DOI] [PubMed] [Google Scholar]

- 13.Robert-Ribes J, et al. Complementarity and synergy in bimodal speech: auditory, visual, and audio-visual identification of French oral vowels in noise. J Acoust Soc Am. 1998;103(6):3677–89. doi: 10.1121/1.423069. [DOI] [PubMed] [Google Scholar]

- 14.Amedi A, et al. Functional imaging of human crossmodal identification and object recognition. Exp Brain Res. 2005;166(3-4):559–71. doi: 10.1007/s00221-005-2396-5. [DOI] [PubMed] [Google Scholar]

- 15. Baum SH, et al. Multisensory speech perception without the left superior temporal sulcus. Neuroimage. 2012. doi: 10.1016/j.neuroimage.2012.05.034.

- 16. Beauchamp MS. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol. 2005;15(2):145–53. doi: 10.1016/j.conb.2005.03.011.

- 17. Beauchamp MS, et al. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron. 2004;41(5):809–23. doi: 10.1016/s0896-6273(04)00070-4.

- 18. Nath AR, Beauchamp MS. Dynamic changes in superior temporal sulcus connectivity during perception of noisy audiovisual speech. J Neurosci. 2011;31(5):1704–14. doi: 10.1523/JNEUROSCI.4853-10.2011.

- 19. Nath AR, Beauchamp MS. A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. Neuroimage. 2012;59(1):781–7. doi: 10.1016/j.neuroimage.2011.07.024.

- 20. Macaluso E, et al. Spatial and temporal factors during processing of audiovisual speech: a PET study. Neuroimage. 2004;21(2):725–32. doi: 10.1016/j.neuroimage.2003.09.049.

- 21. Kim S, James TW. Enhanced effectiveness in visuo-haptic object-selective brain regions with increasing stimulus salience. Hum Brain Mapp. 2010;31(5):678–93. doi: 10.1002/hbm.20897.

- 22. Kim S, Stevenson RA, James TW. Visuo-haptic neuronal convergence demonstrated with an inversely effective pattern of BOLD activation. J Cogn Neurosci. 2012;24(4):830–42. doi: 10.1162/jocn_a_00176.

- 23. Stevenson RA, et al. Inverse effectiveness and multisensory interactions in visual event-related potentials with audiovisual speech. Brain Topogr. 2012:1–19. doi: 10.1007/s10548-012-0220-7.

- 24. Stevenson RA, et al. Neural processing of asynchronous audiovisual speech perception. Neuroimage. 2010;49(4):3308–18. doi: 10.1016/j.neuroimage.2009.12.001.

- 25. Stevenson RA, et al. Inverse effectiveness and multisensory interactions in visual event-related potentials with audiovisual speech. Brain Topogr. 2012;25(3):308–26. doi: 10.1007/s10548-012-0220-7.

- 26. Stevenson RA, Kim S, James TW. An additive-factors design to disambiguate neuronal and areal convergence: measuring multisensory interactions between audio, visual, and haptic sensory streams using fMRI. Exp Brain Res. 2009;198(2-3):183–94. doi: 10.1007/s00221-009-1783-8.

- 27. Calvert GA. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb Cortex. 2001;11(12):1110–23. doi: 10.1093/cercor/11.12.1110.

- 28. Calvert GA, et al. Response amplification in sensory-specific cortices during crossmodal binding. Neuroreport. 1999;10(12):2619–23. doi: 10.1097/00001756-199908200-00033.

- 29. Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol. 2000;10(11):649–57. doi: 10.1016/s0960-9822(00)00513-3.

- 30. Calvert GA, et al. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14(2):427–38. doi: 10.1006/nimg.2001.0812.

- 31. De Gelder B, Vroomen J, Pourtois G. Multisensory perception of emotion, its time course, and its neural basis. In: Calvert G, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. MIT Press; Cambridge, MA: 2004. pp. 581–597.

- 32. Lloyd DM, et al. Multisensory representation of limb position in human premotor cortex. Nat Neurosci. 2003;6(1):17–8. doi: 10.1038/nn991.

- 33. O'Doherty J, Rolls ET, Kringelbach ML. Neuroimaging studies of cross-modal integration for emotion. In: Calvert G, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. MIT Press; Cambridge, MA: 2004. pp. 563–580.

- 34. James TW, et al. Multisensory perception of action in posterior temporal and parietal cortices. Neuropsychologia. 2011;49(1):108–14. doi: 10.1016/j.neuropsychologia.2010.10.030.

- 35. Stevenson RA, Geoghegan ML, James TW. Superadditive BOLD activation in superior temporal sulcus with threshold non-speech objects. Exp Brain Res. 2007;179(1):85–95. doi: 10.1007/s00221-006-0770-6.

- 36. Stevenson RA, et al. Discrete neural substrates underlie complementary audiovisual speech integration processes. Neuroimage. 2011;55(3):1339–45. doi: 10.1016/j.neuroimage.2010.12.063.

- 37. Powers AR, 3rd, Hevey MA, Wallace MT. Neural correlates of multisensory perceptual learning. J Neurosci. 2012;32(18):6263–74. doi: 10.1523/JNEUROSCI.6138-11.2012.

- 38. Laurienti PJ, et al. Deactivation of sensory-specific cortex by cross-modal stimuli. J Cogn Neurosci. 2002;14(3):420–9. doi: 10.1162/089892902317361930.

- 39. Laurienti PJ, et al. Cross-modal sensory processing in the anterior cingulate and medial prefrontal cortices. Hum Brain Mapp. 2003;19(4):213–23. doi: 10.1002/hbm.10112.

- 40. Werner S, Noppeney U. Superadditive responses in superior temporal sulcus predict audiovisual benefits in object categorization. Cereb Cortex. 2009. doi: 10.1093/cercor/bhp248.

- 41. Werner S, Noppeney U. Distinct functional contributions of primary sensory and association areas to audiovisual integration in object categorization. J Neurosci. 2010;30(7):2662–75. doi: 10.1523/JNEUROSCI.5091-09.2010.

- 42. Werner S, Noppeney U. Superadditive responses in superior temporal sulcus predict audiovisual benefits in object categorization. Cereb Cortex. 2010;20(8):1829–42. doi: 10.1093/cercor/bhp248.

- 43. Werner S, Noppeney U. The contributions of transient and sustained response codes to audiovisual integration. Cereb Cortex. 2011;21(4):920–31. doi: 10.1093/cercor/bhq161.

- 44. Cappe C, et al. Selective integration of auditory-visual looming cues by humans. Neuropsychologia. 2009;47(4):1045–52. doi: 10.1016/j.neuropsychologia.2008.11.003.

- 45. Cappe C, et al. Auditory-visual multisensory interactions in humans: timing, topography, directionality, and sources. J Neurosci. 2010;30(38):12572–80. doi: 10.1523/JNEUROSCI.1099-10.2010.

- 46. Foxe JJ, et al. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10(1-2):77–83. doi: 10.1016/s0926-6410(00)00024-0.

- 47. Foxe JJ, et al. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88(1):540–3. doi: 10.1152/jn.2002.88.1.540.

- 48. Martuzzi R, et al. Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb Cortex. 2007;17(7):1672–9. doi: 10.1093/cercor/bhl077.

- 49. Molholm S, et al. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14(1):115–28. doi: 10.1016/s0926-6410(02)00066-6.

- 50. Murray MM, et al. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15(7):963–74. doi: 10.1093/cercor/bhh197.

- 51. Romei V, et al. Preperceptual and stimulus-selective enhancement of low-level human visual cortex excitability by sounds. Curr Biol. 2009;19(21):1799–805. doi: 10.1016/j.cub.2009.09.027.

- 52. Wallace MT, Murray MM, editors. Frontiers in the Neural Basis of Multisensory Processes. Taylor & Francis; London: 2011.

- 53. Carriere BN, et al. Visual deprivation alters the development of cortical multisensory integration. J Neurophysiol. 2007;98(5):2858–67. doi: 10.1152/jn.00587.2007.

- 54. Jiang W, et al. Two cortical areas mediate multisensory integration in superior colliculus neurons. J Neurophysiol. 2001;85(2):506–22. doi: 10.1152/jn.2001.85.2.506.

- 55. Kadunce DC, et al. Mechanisms of within- and cross-modality suppression in the superior colliculus. J Neurophysiol. 1997;78(6):2834–47. doi: 10.1152/jn.1997.78.6.2834.

- 56. Meredith MA, Wallace MT, Stein BE. Visual, auditory and somatosensory convergence in output neurons of the cat superior colliculus: multisensory properties of the tecto-reticulo-spinal projection. Exp Brain Res. 1992;88(1):181–6. doi: 10.1007/BF02259139.

- 57. Perrault TJ, Jr., et al. Superior colliculus neurons use distinct operational modes in the integration of multisensory stimuli. J Neurophysiol. 2005;93(5):2575–86. doi: 10.1152/jn.00926.2004.

- 58. Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp Brain Res. 2009;198(2-3):127–36. doi: 10.1007/s00221-009-1772-y.

- 59. Stein BE, Wallace MT. Comparisons of cross-modality integration in midbrain and cortex. Prog Brain Res. 1996;112:289–99. doi: 10.1016/s0079-6123(08)63336-1.

- 60. Stein BE, et al. Cortex governs multisensory integration in the midbrain. Neuroscientist. 2002;8(4):306–14. doi: 10.1177/107385840200800406.

- 61. Wallace MT, et al. The development of cortical multisensory integration. J Neurosci. 2006;26(46):11844–9. doi: 10.1523/JNEUROSCI.3295-06.2006.

- 62. Wallace MT, Meredith MA, Stein BE. Integration of multiple sensory modalities in cat cortex. Exp Brain Res. 1992;91(3):484–8. doi: 10.1007/BF00227844.

- 63. Wallace MT, Meredith MA, Stein BE. Converging influences from visual, auditory, and somatosensory cortices onto output neurons of the superior colliculus. J Neurophysiol. 1993;69(6):1797–809. doi: 10.1152/jn.1993.69.6.1797.

- 64. Wallace MT, Meredith MA, Stein BE. Multisensory integration in the superior colliculus of the alert cat. J Neurophysiol. 1998;80(2):1006–10. doi: 10.1152/jn.1998.80.2.1006.

- 65. Wallace MT, Stein BE. Cross-modal synthesis in the midbrain depends on input from cortex. J Neurophysiol. 1994;71(1):429–32. doi: 10.1152/jn.1994.71.1.429.

- 66. Wallace MT, Wilkinson LK, Stein BE. Representation and integration of multiple sensory inputs in primate superior colliculus. J Neurophysiol. 1996;76(2):1246–66. doi: 10.1152/jn.1996.76.2.1246.

- 67. Benevento LA, et al. Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Exp Neurol. 1977;57(3):849–72. doi: 10.1016/0014-4886(77)90112-1.

- 68. Allman BL, Keniston LP, Meredith MA. Subthreshold auditory inputs to extrastriate visual neurons are responsive to parametric changes in stimulus quality: sensory-specific versus non-specific coding. Brain Res. 2008;1242:95–101. doi: 10.1016/j.brainres.2008.03.086.

- 69. Allman BL, Meredith MA. Multisensory processing in “unimodal” neurons: cross-modal subthreshold auditory effects in cat extrastriate visual cortex. J Neurophysiol. 2007;98(1):545–9. doi: 10.1152/jn.00173.2007.

- 70. Meredith MA. On the neuronal basis for multisensory convergence: a brief overview. Brain Res Cogn Brain Res. 2002;14(1):31–40. doi: 10.1016/s0926-6410(02)00059-9.

- 71. Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221(4608):389–91. doi: 10.1126/science.6867718.

- 72. Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986;56(3):640–62. doi: 10.1152/jn.1986.56.3.640.

- 73. Stein BE, Meredith MA. The Merging of the Senses. MIT Press; Cambridge, MA: 1993. p. 224.

- 74. Stein BE, Meredith MA, Wallace MT. The visually responsive neuron and beyond: multisensory integration in cat and monkey. Prog Brain Res. 1993;95:79–90. doi: 10.1016/s0079-6123(08)60359-3.

- 75. Alvarado JC, et al. A neural network model of multisensory integration also accounts for unisensory integration in superior colliculus. Brain Res. 2008;1242:13–23. doi: 10.1016/j.brainres.2008.03.074.

- 76. Alvarado JC, et al. Multisensory integration in the superior colliculus requires synergy among corticocollicular inputs. J Neurosci. 2009;29(20):6580–92. doi: 10.1523/JNEUROSCI.0525-09.2009.

- 77. Alvarado JC, et al. Multisensory versus unisensory integration: contrasting modes in the superior colliculus. J Neurophysiol. 2007;97(5):3193–205. doi: 10.1152/jn.00018.2007.

- 78. Stein BE. Neural mechanisms for synthesizing sensory information and producing adaptive behaviors. Exp Brain Res. 1998;123(1-2):124–35. doi: 10.1007/s002210050553.

- 79. Stein BE, Meredith MA. Multisensory integration. Neural and behavioral solutions for dealing with stimuli from different sensory modalities. Ann N Y Acad Sci. 1990;608:51–65; discussion 65–70. doi: 10.1111/j.1749-6632.1990.tb48891.x.