Abstract

Bayesian model selection or averaging objectively ranks a number of plausible, competing conceptual models based on Bayes' theorem. It implicitly performs an optimal trade-off between performance in fitting available data and minimum model complexity. The procedure requires determining Bayesian model evidence (BME), which is the likelihood of the observed data integrated over each model's parameter space. The computation of this integral is highly challenging because it is as high-dimensional as the number of model parameters. Three classes of techniques to compute BME are available, each with its own challenges and limitations: (1) Exact and fast analytical solutions are limited by strong assumptions. (2) Numerical evaluation quickly becomes unfeasible for expensive models. (3) Approximations known as information criteria (ICs) such as the AIC, BIC, or KIC (Akaike, Bayesian, or Kashyap information criterion, respectively) yield contradicting results with regard to model ranking. Our study features a theory-based intercomparison of these techniques. We further assess their accuracy in a simplistic synthetic example where for some scenarios an exact analytical solution exists. In more challenging scenarios, we use a brute-force Monte Carlo integration method as reference. We continue this analysis with a real-world application of hydrological model selection. This is a first-time benchmarking of the various methods for BME evaluation against true solutions. Results show that BME values from ICs are often heavily biased and that the choice of approximation method substantially influences the accuracy of model ranking. For reliable model selection, bias-free numerical methods should be preferred over ICs whenever computationally feasible.

Keywords: Bayesian model selection, Bayesian model evidence, information criteria

Introduction

The idea of model validation is to objectively scrutinize a model's ability to reproduce an observed data set and then to falsify the hypothesis that this model is a good representation for the system under study [Popper, 1959]. If this hypothesis cannot be rejected, the model may be considered for predictive purposes. Modelers have been encouraged for centuries to create multiple such working hypotheses instead of limiting themselves to the subjective choice of a single conceptual representation, therewith avoiding the “dangers of parental affection for a favorite theory” [Chamberlin, 1890]. These dangers include a significant underestimation of predictive uncertainty due to the neglected conceptual uncertainty (uncertainty in the choice of a most adequate representation of a system). Recognizing conceptual uncertainty as a main contribution to overall predictive uncertainty [e.g., Burnham and Anderson, 2003; Gupta et al., 2012; Clark et al., 2011; Refsgaard et al., 2006] makes model selection an “integral part of inference” [Buckland et al., 1997]. The quantification of conceptual uncertainty is of importance in a variety of scientific disciplines, e.g., in climate change modeling [Murphy et al., 2004; Najafi et al., 2011], weather forecasting [Raftery et al., 2005], hydrogeology [Rojas et al., 2008; Poeter and Anderson, 2005; Ye et al., 2010a], geostatistics [Neuman, 2003; Ye et al., 2004], vadose zone hydrology [Wöhling and Vrugt, 2008], and surface hydrology [Ajami et al., 2007; Vrugt and Robinson, 2007; Renard et al., 2010], to name only a few selected examples from the field of water resources.

Different strategies have been proposed to develop alternative conceptual models, assess their strengths and weaknesses, and to test their predictive ability. Bayesian model averaging (BMA) [Hoeting et al., 1999] is a formal statistical approach which allows comparing alternative conceptual models, testing their adequacy, combining their predictions into a more robust output estimate, and quantifying the contribution of conceptual uncertainty to the overall prediction uncertainty. The BMA approach is based on Bayes' theorem, which combines a prior belief about the adequacy of each model with its performance in reproducing a common data set. It yields model weights that represent posterior probabilities for each model to be the best one from the set of proposed alternative models. Based on the weights, it allows for a ranking and quantitative comparison of the competing models. Hence, BMA can be understood as a Bayesian hypothesis testing framework, merging the idea of classical hypothesis testing with the ability to test several alternative models against each other in a probabilistic way. The principle of parsimony or “Occam's razor” [e.g., Angluin and Smith, 1983] is implicitly followed by Bayes' theorem, such that the posterior model weights reflect a compromise between model complexity and goodness of fit (also known as the bias-variance trade-off [Geman et al., 1992]). BMA has been adopted in many different fields of research, e.g., sociology [Raftery, 1995], ecology [Link and Barker, 2006], hydrogeology [Li and Tsai, 2009], or contaminant hydrology [Troldborg et al., 2010], indicating the general need for such a systematic model selection procedure.

The drawback of BMA is, however, that it involves the evaluation of a quantity called Bayesian model evidence (BME). This integral over a model's parameter space typically cannot be computed analytically, while numerical solutions come at the price of high computational costs. Various authors have suggested and applied different approximations to the analytical BMA equations to render the procedure feasible. Neuman [2003] proposes a Maximum Likelihood Bayesian Model Averaging approach (MLBMA), which reduces computational effort by evaluating Kashyap's information criterion (KIC) for the most likely parameter set instead of integrating over the whole parameter space. This is especially compelling for high-dimensional applications (i.e., models with many parameters). If prior knowledge about the parameters is not available or vague, a further simplification leads to the Bayesian information criterion or Schwarz' information criterion (BIC) [Schwarz, 1978; Raftery, 1995]. The Akaike information criterion (AIC) [Akaike, 1973] originates from information theory and is frequently applied in the context of BMA in social research [Burnham and Anderson, 2003] for its ease of implementation. Previous studies have revealed that these information criteria (IC) differ in the resulting posterior model weights or even in the ranking of the models [Poeter and Anderson, 2005; Ye et al., ,,; Tsai and Li, 2010, 2008; Singh et al., 2010; Morales-Casique et al., 2010; Foglia et al., 2013]. This implies that they do not reflect the true Bayesian trade-off between performance and complexity, but might produce an arbitrary trade-off which is not supported by Bayesian theory and cannot provide a reliable basis for Bayesian model selection. Burnham and Anderson [2004] conclude that “… many reported studies are not appropriate as a basis for inference about which criterion should be used for model selection with real data.” The work of Lu et al. [2011] has been a first step toward clarifying the so far contradictory results by comparing the KIC and the BIC against a Markov chain Monte Carlo (MCMC) reference solution for a synthetic geostatistical application.

Our study aims to advance this endeavor by rigorously assessing and comparing a more comprehensive set of nine different methods to evaluate BME. Specifically, we highlight their theoretical derivation, computational effort, and approximation accuracy. As representatives of mathematical approximations, we consider the AIC, AICc (bias-corrected AIC), and BIC in our comparison. We further include the KIC evaluated at the maximum likelihood parameter estimate (KIC@MLE) as introduced in MLBMA, and an alternative formulation that is evaluated at the maximum a posteriori parameter estimate instead (KIC@MAP). We also consider three types of Monte Carlo integration techniques (simple Monte Carlo integration, MC; MC integration with importance sampling, MC IS; MC integration with posterior sampling, MC PS) and a very recent approach called nested sampling (NS) as representatives of numerical methods. By pointing out and comparing the important features and assumptions of these mostly well-known techniques, we are able to argue which methods are truly suitable for BME evaluation, and which ones are suspected to yield inaccurate results. We then present a simplistic synthetic, linear test case where an exact analytical expression for BME exists. With this first-time benchmarking of the different BME evaluation methods against the true solution, we close a significant gap in the model selection literature.

The controlled setup in the simplistic example allows us to systematically investigate the factors which influence the value of BME and the approximation thereof by the nine featured evaluation methods. The two main factors investigated are (1) the size of the data set, which determines the “seriousness” of the goodness of fit rating, and (2) the shape of the parameter prior, which characterizes the robustness of a model. In a second step, we assess the performance of the different methods when confronted with low-dimensional nonlinear models. In this more challenging scenario of the synthetic example, no analytical solution to compute BME exists. We therefore generate a reference solution by brute-force MC integration, after having proven its suitability as reference solution in the linear case. In a third step, we present a real-world application of hydrological model selection. We chose this application such that the model selection task is still relatively simple and unambiguous. Even in this case, the deficiencies of some of the evaluation methods become apparent. Our systematic investigation of methods to determine BME takes an important next step toward robust model selection in agreement with Bayes' theorem, placing it on solid ground.

We summarize the statistical framework of BMA in section 2 and discuss assets and drawbacks of the available techniques to determine BME in section 3. In section 4, we present the first-time benchmarking of the featured methods on the simplistic test case. Section 5 compares the approximation performance in a real-world hydrological model selection problem. We summarize our findings and formulate recommendations on which methods to use for reliable model selection in even more complex situations in section 6.

Bayesian Model Averaging Framework

We formulate the BMA equations according to Hoeting et al. [1999]. All probabilities and statistics are implicitly conditional on the set of considered models. While the suite of models is a subjective choice that lies in the responsibility of the modeler, it is the starting point for a systematic procedure to account for model uncertainty based on objective likelihood measures.

Let us consider $N_m$ plausible, competing models $M_k$. The posterior predictive distribution of a quantity of interest $\phi$ given the vector of observed data $\mathbf{y}_o$ can be expressed as:

$$p(\phi \mid \mathbf{y}_o) = \sum_{k=1}^{N_m} p(\phi \mid \mathbf{y}_o, M_k)\, P(M_k \mid \mathbf{y}_o) \qquad (1)$$

with $p(\cdot \mid \cdot)$ representing a conditional probability distribution and $P(M_k \mid \mathbf{y}_o)$ being discrete posterior model weights. The weights can be interpreted as the Bayesian probability of the individual models to be the best representation of the system from the set of considered models.

The model weights are given by Bayes' theorem:

$$P(M_k \mid \mathbf{y}_o) = \frac{p(\mathbf{y}_o \mid M_k)\, P(M_k)}{\sum_{i=1}^{N_m} p(\mathbf{y}_o \mid M_i)\, P(M_i)} \qquad (2)$$

with $P(M_k)$ the prior probability (or rather subjective model credibility) that model $M_k$ could be the best one (the most plausible, adequate, and consistent one) in the set of models before any observed data have been considered. A “reasonable, neutral choice” [Hoeting et al., 1999] could be equally likely priors $P(M_k) = 1/N_m$ if there is little prior knowledge about the assets of the different models under consideration. The denominator in equation (2) is the normalizing constant of the posterior distribution of the models. It is easily obtained by determination of the individual weights. It could even be neglected, since all model weights are normalized by the same constant, so that the ranking of the individual models against each other is fully defined by the proportionality:

$$P(M_k \mid \mathbf{y}_o) \propto p(\mathbf{y}_o \mid M_k)\, P(M_k) \qquad (3)$$

$p(\mathbf{y}_o \mid M_k)$ represents the BME term as introduced in section 1 and is also referred to as marginal likelihood or prior predictive because it quantifies the likelihood of the observed data based on the prior distribution of the parameters:

$$p(\mathbf{y}_o \mid M_k) = \int_{\mathcal{U}_k} p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)\, p(\mathbf{u}_k \mid M_k)\, \mathrm{d}\mathbf{u}_k \qquad (4)$$

where $\mathbf{u}_k$ denotes the vector of parameters of model $M_k$ with dimension equal to the number $N_{p,k}$ of parameters, $\mathcal{U}_k$ is the corresponding parameter space, and $p(\mathbf{u}_k \mid M_k)$ denotes their prior distribution. $p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)$ is the likelihood or probability of the parameter set $\mathbf{u}_k$ of model $M_k$ to have generated the observed data set. The BME term can either be evaluated via integration over the full parameter space $\mathcal{U}_k$ (equation (4), referred to as Bayesian integral by Kass and Raftery [1995]), or via the posterior probability distribution of the parameters $p(\mathbf{u}_k \mid \mathbf{y}_o, M_k)$ by rewriting Bayes' theorem with respect to the parameter distribution (instead of the model distribution, equation (2)):

$$p(\mathbf{u}_k \mid \mathbf{y}_o, M_k) = \frac{p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)\, p(\mathbf{u}_k \mid M_k)}{p(\mathbf{y}_o \mid M_k)} \qquad (5)$$

$p(\mathbf{y}_o \mid M_k)$ acts as a model-specific normalizing constant for the posterior of the parameters $p(\mathbf{u}_k \mid \mathbf{y}_o, M_k)$. As a matter of fact, evaluating $p(\mathbf{y}_o \mid M_k)$ for any given model is a major nuisance in Bayesian updating, and MCMC methods have been developed with the goal to entirely avoid its evaluation. However, in order to evaluate BME, this normalizing constant has to be determined, which is the challenge addressed in the current study. Rearranging equation (5) yields the alternative formulation for equation (4):

$$p(\mathbf{y}_o \mid M_k) = \frac{p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)\, p(\mathbf{u}_k \mid M_k)}{p(\mathbf{u}_k \mid \mathbf{y}_o, M_k)} \qquad (6)$$

MacKay [1992] refers to the twofold evaluation of Bayes' theorem (equations (2) and (4) or (6)) as the “two levels of inference” in Bayesian model averaging: the first level is concerned with finding the posterior distribution of the models, the second level with finding the posterior distribution of each model's parameters (or rather its normalizing constant).
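The first level of inference (equation (2)) is simple to carry out once BME values are available. The following is a minimal sketch, assuming BME is supplied as natural-log values; the function name `posterior_model_weights` and the three example numbers are hypothetical and serve only as illustration.

```python
import numpy as np

def posterior_model_weights(log_bme, prior_weights=None):
    """Posterior model weights from ln(BME) values via Bayes' theorem (equation (2))."""
    log_bme = np.asarray(log_bme, dtype=float)
    if prior_weights is None:
        # Equally likely priors, the "neutral choice" mentioned in the text.
        prior_weights = np.full(log_bme.shape, 1.0 / log_bme.size)
    prior_weights = np.asarray(prior_weights, dtype=float)
    # Unnormalized log weights: the proportionality of equation (3).
    log_unnorm = log_bme + np.log(prior_weights)
    # Subtract the maximum before exponentiating to avoid numerical underflow.
    log_unnorm = log_unnorm - log_unnorm.max()
    weights = np.exp(log_unnorm)
    # Dividing by the sum applies the normalizing constant of equation (2).
    return weights / weights.sum()

# Hypothetical ln(BME) values for three competing models.
example_log_bme = [-120.3, -118.9, -125.0]
weights = posterior_model_weights(example_log_bme)
```

Working in log space is a design choice rather than part of the theory: BME values for realistic data sets are often too small to represent directly as floating-point numbers.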

The integration over the full parameter space in equation (4) can be an exhaustive calculation, especially for high-dimensional parameter spaces. The alternative of computing the posterior distribution of the parameters (defining the “calibrated” parameter space, equation (5)) is similarly demanding in high-dimensional applications. Analytical solutions are available only under strongly limiting assumptions. In general, mathematical approximations or numerical methods have to be drawn upon instead. We discuss and compare the nine different methods to compute BME in the following section and assess their accuracy in section 4.
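To make the integral in equation (4) concrete, it can be read as the expected likelihood under the prior, which a brute-force Monte Carlo average over prior samples estimates directly. The sketch below assumes a deliberately trivial toy model (one parameter with a standard normal prior, one observation with Gaussian noise); all function names and numbers are hypothetical. The toy case is chosen because its exact marginal likelihood is known, so the estimate can be checked.

```python
import numpy as np

def log_bme_monte_carlo(log_likelihood, prior_sampler, n_samples, rng):
    """Estimate ln(BME) as the log of the mean likelihood over prior samples."""
    samples = prior_sampler(n_samples, rng)       # shape (n_samples, n_params)
    log_like = log_likelihood(samples)            # shape (n_samples,)
    # Log-mean-exp: shift by the maximum for numerical stability.
    shift = log_like.max()
    return shift + np.log(np.mean(np.exp(log_like - shift)))

# Toy setup (assumed for illustration): y = u + noise, u ~ N(0, 1).
y_obs = 0.8
noise_var = 0.25

def toy_log_likelihood(u):
    # Gaussian log-likelihood of the single observation for each sample.
    resid = y_obs - u[:, 0]
    return -0.5 * np.log(2 * np.pi * noise_var) - 0.5 * resid**2 / noise_var

def toy_prior_sampler(n, rng):
    return rng.normal(0.0, 1.0, size=(n, 1))

rng = np.random.default_rng(0)
log_bme_mc = log_bme_monte_carlo(toy_log_likelihood, toy_prior_sampler, 200_000, rng)

# Exact value for this toy case: y_obs ~ N(0, 1 + noise_var).
log_bme_exact = (-0.5 * np.log(2 * np.pi * (1 + noise_var))
                 - 0.5 * y_obs**2 / (1 + noise_var))
```

The number of samples needed grows quickly when the likelihood is sharply peaked relative to the prior, which is the computational burden referred to in the text.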

Available Techniques to Determine BME

We will adopt the notation of Kass and Raftery [1995] for equation (4):

$$I_k = \int_{\mathcal{U}_k} p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)\, p(\mathbf{u}_k \mid M_k)\, \mathrm{d}\mathbf{u}_k \qquad (7)$$

and denote any approximation to the true BME value $I_k$ as $\hat{I}_k$. After explaining two formulations of the analytical solution in detail in section 3.1, we examine mathematical approximations in the form of ICs in section 3.2. Finally, we discuss assets and drawbacks of selected numerical evaluation methods in section 3.3 and summarize our preliminary findings from this theoretical comparison in section 3.4. All BME evaluation methods featured in this study are listed with their underlying assumptions in Table 4. All approximation methods (i.e., the nine nonanalytical approaches) follow equation (7) to evaluate BME. We do not use equation (6) here, since typically for medium to highly parameterized applications, the multivariate probability density of posterior parameter realizations cannot be estimated. Knowing the posterior parameter distribution up to its normalizing constant (as in MCMC methods, see section 3.3.3) does not suffice here since the normalizing constant is actually the targeted quantity itself.

Table 4. Overview of Methods to Evaluate Bayesian Model Evidence

| Evaluation Method | Abbreviation | Eq. | Underlying Assumptions | Comp. Effort | Performance in Linear Test Case | Performance in Nonlinear Test Cases | Recommended Use |
|---|---|---|---|---|---|---|---|
| Analytical solution | | | | | | | |
| Theoretical distribution of BME | - | 9 | Gaussian parameter prior and likelihood, linear model | Negligible | Exact | Not available | Whenever available |
| Normalizing constant of parameter posterior | - | 6 | Conjugate prior, linear model | Negligible | Exact | Not available | Whenever available |
| Mathematical approximations | | | | | | | |
| Kashyap's information criterion, evaluated at MLE | KIC@MLE | 14 | Gaussian parameter posterior, negligible influence of prior | Medium | Relatively accurate (assumptions mildly violated) | Inaccurate | KIC@MAP to be preferred |
| Kashyap's information criterion, evaluated at MAP | KIC@MAP | 15 | Gaussian parameter posterior | Medium | Exact (assumptions fulfilled) | Inaccurate | If assumptions fulfilled/numerical techniques too expensive |
| Bayesian information criterion | BIC | 16 | Gaussian parameter posterior, negligible influence of prior | Low | Potentially very inaccurate (depending on actual data set), ignores prior | Potentially very inaccurate (depending on actual data set), ignores prior | Not recommended for BMA |
| Akaike information criterion | AIC | 18 | (not derived as approximation to BME) | Low | Potentially very inaccurate (depending on actual data set), ignores prior | Potentially very inaccurate (depending on actual data set), ignores prior | Not recommended for BMA |
| Corrected Akaike information criterion | AICc | 19 | (not derived as approximation to BME) | Low | Potentially very inaccurate (depending on actual data set), ignores prior | Potentially very inaccurate (depending on actual data set), ignores prior | Not recommended for BMA |
| Numerical evaluation techniques | | | | | | | |
| Simple Monte Carlo integration | MC | 23 | None | Extreme | Slow convergence, but bias-free | Slow convergence, but bias-free | Whenever computationally feasible |
| MC integration with importance sampling | MC IS | 24 | None | High | Faster convergence, but (potentially) biased | Faster convergence, but (potentially) biased | As a more efficient alternative to MC |
| MC integration with posterior sampling | MC PS | 25 | None | High | Even faster convergence, but even more biased (due to harmonic mean approach) | Even faster convergence, but even more biased (due to harmonic mean approach) | Not recommended for BMA |
| Nested sampling | NS | 26 | None | High | Slow convergence for BME (due to uncertainty in prior mass shrinkage), but bias-free | Slow convergence for BME (due to uncertainty in prior mass shrinkage), but bias-free | Promising alternative to MC, more research needed |

Analytical Solution

The Bayesian integral or BME $I_k$ for model $M_k$ can be evaluated analytically for exponential family distributions with conjugate priors [see e.g., DeGroot, 1970]. Thus, analytical solutions for BME are available if the observed data $\mathbf{y}_o$ are measurements of the model parameters $\mathbf{u}_k$ or a linear function thereof and a conjugate prior (i.e., the prior parameter distribution is in the same family as the posterior parameter distribution) exists. This is generally not the case in realistic applications. However, we will briefly outline the analytical solution to BME under these restrictive and simple conditions, before we discuss other evaluation methods that are not limited by these strong assumptions in sections 3.2 and 3.3.

We will focus here on the special case of a linear model $M_k$ with a linear model operator $\mathbf{H}_k$ relating multi-Gaussian parameters $\mathbf{u}_k$ to multivariate Gaussian distributed variables $\mathbf{y}_k$:

$$\mathbf{y}_k = \mathbf{H}_k \mathbf{u}_k \qquad (8)$$

The prior parameter distribution $p(\mathbf{u}_k \mid M_k)$ is defined as a normal distribution $\mathcal{N}(\boldsymbol{\mu}_u, \mathbf{C}_{uu})$ with the prior mean $\boldsymbol{\mu}_u$ and the covariance matrix $\mathbf{C}_{uu}$. For simplicity of notation, the index k is dropped from the notation for the parameter covariance matrix.

The residuals $\boldsymbol{\varepsilon} = \mathbf{y}_o - \mathbf{y}_k$ signify any type of error associated with the data set and the models, e.g., measurement errors and model errors. Here we assume the models to be perfect (free of model errors) and only measurement errors to be relevant, and adopt a Gaussian model $\boldsymbol{\varepsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{R})$ with a diagonal matrix $\mathbf{R}$ representing the covariance matrix for uncorrelated measurement errors. This results in a Gaussian likelihood function $p(\mathbf{y}_o \mid M_k, \mathbf{u}_k) = \mathcal{N}(\mathbf{H}_k \mathbf{u}_k, \mathbf{R})$. Using the theory of linear uncertainty propagation [e.g., Schweppe, 1973] and the stated assumptions, BME can be directly evaluated for any given data set $\mathbf{y}_o$ from the Gaussian distribution:

$$p(\mathbf{y}_o \mid M_k) = (2\pi)^{-N_s/2}\, |\mathbf{C}_{yy}|^{-1/2} \exp\!\left[ -\tfrac{1}{2} (\mathbf{y}_o - \mathbf{H}_k \boldsymbol{\mu}_u)^{T} \mathbf{C}_{yy}^{-1} (\mathbf{y}_o - \mathbf{H}_k \boldsymbol{\mu}_u) \right] \qquad (9)$$

with $\mathbf{C}_{yy} = \mathbf{H}_k \mathbf{C}_{uu} \mathbf{H}_k^{T} + \mathbf{R}$ and $N_s$ the number of observations.
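The analytical solution under these assumptions amounts to evaluating a multivariate Gaussian density of the data, with the prior mean and covariance propagated linearly through the model operator and the measurement error covariance added. A minimal sketch follows; the function name, the argument names, and the straight-line example with five observations are assumptions made purely for illustration.

```python
import numpy as np

def log_bme_linear_gaussian(y_obs, H, prior_mean, prior_cov, R):
    """ln(BME) for y = H u + eps, with u ~ N(prior_mean, prior_cov), eps ~ N(0, R)."""
    y_obs = np.asarray(y_obs, dtype=float)
    # Residual of the data with respect to the prior-mean prediction.
    resid = y_obs - H @ prior_mean
    # Linear uncertainty propagation: covariance of the prior predictive.
    C_yy = H @ prior_cov @ H.T + R
    _, logdet = np.linalg.slogdet(C_yy)
    mahalanobis = resid @ np.linalg.solve(C_yy, resid)
    return -0.5 * (y_obs.size * np.log(2 * np.pi) + logdet + mahalanobis)

# Hypothetical example: straight-line model (intercept and slope), 5 data points.
x = np.linspace(0.0, 1.0, 5)
H = np.column_stack([np.ones_like(x), x])
prior_mean = np.zeros(2)
prior_cov = np.eye(2)
R = 0.1 * np.eye(5)                       # uncorrelated measurement errors
y_obs = np.array([0.1, 0.4, 0.5, 0.9, 1.1])

log_bme = log_bme_linear_gaussian(y_obs, H, prior_mean, prior_cov, R)
```

Using `slogdet` and `solve` instead of an explicit determinant and inverse is only a numerical convenience; the result is the same Gaussian density.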

As an alternative way to determine BME analytically, the posterior distribution of the parameters can be derived since the Gaussian distribution family is self-conjugate [Box and Tiao, 1973]. In general, the likelihood $p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)$ of the observed data given the prior parameter space of model $M_k$ can be written as a function of the parameters $\mathbf{u}_k$ [Fisher, 1922]. Note that the likelihood function is not necessarily a proper probability density function with respect to $\mathbf{u}_k$, because it does not necessarily integrate to one. With the assumptions of Gaussian measurement noise and a linear model, the likelihood can be expressed as a Gaussian function of the parameters, $\mathcal{N}(\hat{\mathbf{u}}_k, \hat{\mathbf{C}}_{uu})$, with $\hat{\mathbf{u}}_k = (\mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{H}_k)^{-1} \mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{y}_o$ and $\hat{\mathbf{C}}_{uu} = (\mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{H}_k)^{-1}$, where $\mathbf{H}_k$ is the linear model operator and $\mathbf{R}$ the measurement error covariance matrix (provided $\mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{H}_k$ is invertible). The mean of the distribution, $\hat{\mathbf{u}}_k$, is the maximum likelihood estimate (MLE) and (in this case) also the estimate obtained by ordinary least squares regression. It represents the parameter vector that yields the best possible fit to the observed data to be achieved by model $M_k$.

The combination of a Gaussian prior distribution and a Gaussian likelihood function yields an analytical expression for the posterior distribution $p(\mathbf{u}_k \mid \mathbf{y}_o, M_k)$, which is again Gaussian with $\tilde{\mathbf{u}}_k = \tilde{\mathbf{C}}_{uu} (\mathbf{C}_{uu}^{-1} \boldsymbol{\mu}_u + \mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{y}_o)$ and $\tilde{\mathbf{C}}_{uu} = (\mathbf{C}_{uu}^{-1} + \mathbf{H}_k^{T} \mathbf{R}^{-1} \mathbf{H}_k)^{-1}$, where $\boldsymbol{\mu}_u$ and $\mathbf{C}_{uu}$ are the prior mean and covariance. Under the current set of assumptions, the mean of the posterior distribution, $\tilde{\mathbf{u}}_k$, is the maximum a posteriori estimate (MAP). The MAP represents those parameter values that are the most likely ones for model $M_k$, taking into account both prior belief about the distribution of the parameters and the performance in fitting the observed data. For a derivation of these statistics, see e.g., Box and Tiao [1973].

With the posterior parameter distribution, the quotient in equation (6) (Bayes' theorem rewritten to solve for the normalizing constant, equivalent to the integral in equation (7)) can be determined for any given value $\mathbf{u}_k$ within the limits of $\mathcal{U}_k$.
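The statement that the quotient in equation (6) can be evaluated at any parameter value can be checked numerically. The sketch below assumes the standard conjugate update for a Gaussian prior and a linear model with Gaussian noise; the helper names and the straight-line example are hypothetical. It evaluates likelihood times prior divided by posterior at two arbitrary parameter vectors and compares both with the Gaussian prior predictive density of the data.

```python
import numpy as np

def log_gauss(x, mean, cov):
    """Log density of a multivariate normal N(mean, cov) evaluated at x."""
    d = x - mean
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (x.size * np.log(2 * np.pi) + logdet + d @ np.linalg.solve(cov, d))

def log_bme_from_quotient(u, y_obs, H, prior_mean, prior_cov, R):
    """ln(BME) as ln(likelihood) + ln(prior) - ln(posterior), all evaluated at u."""
    prior_prec = np.linalg.inv(prior_cov)
    R_inv = np.linalg.inv(R)
    # Conjugate Gaussian update (assumed standard form).
    post_cov = np.linalg.inv(prior_prec + H.T @ R_inv @ H)
    post_mean = post_cov @ (prior_prec @ prior_mean + H.T @ R_inv @ y_obs)
    log_like = log_gauss(y_obs, H @ u, R)
    log_prior = log_gauss(u, prior_mean, prior_cov)
    log_post = log_gauss(u, post_mean, post_cov)
    return log_like + log_prior - log_post

# Hypothetical straight-line example with 5 observations.
x = np.linspace(0.0, 1.0, 5)
H = np.column_stack([np.ones_like(x), x])
prior_mean = np.zeros(2)
prior_cov = np.eye(2)
R = 0.1 * np.eye(5)
y_obs = np.array([0.1, 0.4, 0.5, 0.9, 1.1])

# The quotient is evaluated at two arbitrary, very different parameter vectors.
log_bme_a = log_bme_from_quotient(np.array([0.0, 1.0]), y_obs, H, prior_mean, prior_cov, R)
log_bme_b = log_bme_from_quotient(np.array([3.0, -2.0]), y_obs, H, prior_mean, prior_cov, R)
# Reference: Gaussian prior predictive density of the data.
log_bme_ref = log_gauss(y_obs, H @ prior_mean, H @ prior_cov @ H.T + R)
```

In exact arithmetic the three values coincide; in practice, evaluating the quotient far out in the tails of the posterior can lose precision, so a point near the posterior mean is the safer choice.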

Mathematical Approximation

If no analytical solution exists to the application at hand, equation (7) can be approximated mathematically, e.g., by a Taylor series expansion followed by a Laplace approximation. We briefly outline this approach in section 3.2.1 and then discuss the derivation of the KIC (section 3.2.2) which is based on this approximation. In this context, it becomes more evident how Occam's razor works in BMA (section 3.2.3). The BIC (section 3.2.4) represents a truncated version of the KIC. Another mathematical approximation, which is based on information theory, results in the AIC(c) (section 3.2.5). We contrast the expected impact of the different IC formulations on model selection in section 3.2.6.

Laplace Approximation

The idea of the Laplace method [De Bruijn, 1961] is to approximate the integral by defining a simpler mathematical function for a subinterval of the original parameter space, assuming that the contribution of this neighborhood almost makes up the whole integral. Here a Gaussian posterior distribution is assumed as simplification to the unknown distribution. This is a suitable approximation if the posterior distribution is highly peaked around its mode (or maximum) $\tilde{\mathbf{u}}_k$. This assumption holds if a large data set with a high information content is available for calibration. Expanding the logarithm of the integrand in equation (7) by a Taylor series about the posterior mode $\tilde{\mathbf{u}}_k$ (i.e., the MAP), neglecting third-order and higher-order terms, exponentiating again, and finally performing the integration with the help of the Laplace approximation yields:

$$I_k \approx p(\mathbf{y}_o \mid M_k, \tilde{\mathbf{u}}_k)\, p(\tilde{\mathbf{u}}_k \mid M_k)\, (2\pi)^{N_{p,k}/2}\, |\tilde{\boldsymbol{\Sigma}}|^{1/2} \qquad (10)$$

with the likelihood function $p(\mathbf{y}_o \mid M_k, \tilde{\mathbf{u}}_k)$, the prior density $p(\tilde{\mathbf{u}}_k \mid M_k)$, and the number of parameters $N_{p,k}$. The $N_{p,k} \times N_{p,k}$ matrix $\tilde{\boldsymbol{\Sigma}}$ is the negative inverse Hessian matrix of second derivatives and represents an asymptotic estimator of the posterior covariance $\tilde{\mathbf{C}}_{uu}$. It is equal to $\tilde{\mathbf{C}}_{uu}$ for the case of an actually Gaussian posterior (see section 3.1). For details on the Laplace approximation in the field of Bayesian statistics and an analysis of its asymptotic errors, please refer to Tierney and Kadane [1986].
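The steps described above can be written out as a short derivation. This is a sketch in assumed notation: $g$ is a symbol introduced here for the logarithm of the integrand of equation (7), $\tilde{\mathbf{u}}_k$ is the posterior mode, $\tilde{\boldsymbol{\Sigma}}$ the negative inverse Hessian of $g$ at the mode, and $N_{p,k}$ the number of parameters. The first-order term of the expansion vanishes because the gradient of $g$ is zero at an interior mode, and the remaining integral is that of an unnormalized Gaussian taken over the whole real space.

```latex
\begin{align}
g(\mathbf{u}_k) &= \ln\!\left[ p(\mathbf{y}_o \mid M_k, \mathbf{u}_k)\, p(\mathbf{u}_k \mid M_k) \right] \\
g(\mathbf{u}_k) &\approx g(\tilde{\mathbf{u}}_k)
  - \tfrac{1}{2} (\mathbf{u}_k - \tilde{\mathbf{u}}_k)^{T}
    \tilde{\boldsymbol{\Sigma}}^{-1} (\mathbf{u}_k - \tilde{\mathbf{u}}_k),
  \qquad
  \tilde{\boldsymbol{\Sigma}}^{-1} =
  -\left. \frac{\partial^{2} g}{\partial \mathbf{u}_k\, \partial \mathbf{u}_k^{T}}
  \right|_{\mathbf{u}_k = \tilde{\mathbf{u}}_k} \\
I_k = \int e^{g(\mathbf{u}_k)}\, \mathrm{d}\mathbf{u}_k
  &\approx e^{g(\tilde{\mathbf{u}}_k)}
  \int \exp\!\left[ -\tfrac{1}{2} (\mathbf{u}_k - \tilde{\mathbf{u}}_k)^{T}
    \tilde{\boldsymbol{\Sigma}}^{-1} (\mathbf{u}_k - \tilde{\mathbf{u}}_k) \right]
  \mathrm{d}\mathbf{u}_k \\
  &= p(\mathbf{y}_o \mid M_k, \tilde{\mathbf{u}}_k)\,
     p(\tilde{\mathbf{u}}_k \mid M_k)\,
     (2\pi)^{N_{p,k}/2}\, |\tilde{\boldsymbol{\Sigma}}|^{1/2}
\end{align}
```

If the posterior is exactly Gaussian, the second-order expansion of $g$ is exact and so is the result, which is why the approximation reproduces the analytical solution in the linear Gaussian case.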

If the parameter prior is little informative, the expansion could also be carried out about the MLE instead of the MAP

instead of the MAP . This approximation will be less accurate in general, with the deterioration depending on the distance between the MAP and MLE estimators. However, the MLE may be easier to find than the MAP with standard optimization routines. The corresponding approximation takes the following form:

. This approximation will be less accurate in general, with the deterioration depending on the distance between the MAP and MLE estimators. However, the MLE may be easier to find than the MAP with standard optimization routines. The corresponding approximation takes the following form:

| (11) |

The inverse of the covariance matrix is the observed Fisher information matrix evaluated at the MLE,

is the observed Fisher information matrix evaluated at the MLE, , with l being the log-likelihood function [Kass and Raftery, 1995].

, with l being the log-likelihood function [Kass and Raftery, 1995].

If the normalized (per observation) Fisher information $\bar{\mathbf{F}}$ is used, $\hat{\boldsymbol{\Sigma}}$ equals $(N_s \bar{\mathbf{F}})^{-1}$ [Ye et al., 2008]:

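$$p(\mathbf{y}_0 \mid M_k) \approx p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k)\, p(\hat{\mathbf{u}}_k \mid M_k) \left(\frac{2\pi}{N_s}\right)^{N_{p,k}/2} |\bar{\mathbf{F}}|^{-1/2} \tag{12}$$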

For clarity in notation, model indices are omitted here for the covariance matrices and for the Fisher information matrix.

The presented mathematical approximations to the Bayesian integral (equation (7)) are typically known in the shape of ICs, i.e., as $-2 \ln p(\mathbf{y}_0 \mid M_k)$. We subsequently discuss the three most commonly used ICs in the BMA framework. They all generally aim at identifying the optimal bias-variance trade-off in model selection, but differ in their theoretical derivation and therefore in their accuracy with respect to the theoretically optimal trade-off according to Bayes' theorem.

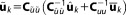

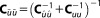

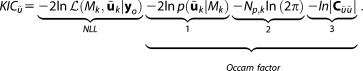

Kashyap Information Criterion

The Kashyap information criterion (KIC) directly results from the approximation defined in equation (12) by applying $-2\ln(\cdot)$ [Kashyap, 1982]:

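$$\mathrm{KIC}_k = -2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k) - 2\ln p(\hat{\mathbf{u}}_k \mid M_k) + N_{p,k}\ln\!\left(\frac{N_s}{2\pi}\right) + \ln|\bar{\mathbf{F}}| \tag{13}$$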

The KIC is applied within the framework of MLBMA [Neuman, 2002]. By means of this approximation, MLBMA is a computationally feasible alternative to full Bayesian model averaging if knowledge about the prior of parameters is vague. For applications of MLBMA, see Neuman [2003], Ye et al. [2004], Neuman et al. [2012], and references therein.

If an estimate of the postcalibration covariance matrix $\hat{\boldsymbol{\Sigma}}$ is obtainable, equation (11) can be drawn upon instead:

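$$\mathrm{KIC@MLE}_k = -2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k) - 2\ln p(\hat{\mathbf{u}}_k \mid M_k) - N_{p,k}\ln(2\pi) - \ln|\hat{\boldsymbol{\Sigma}}| \tag{14}$$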

Ye et al. [2004] point toward the close relationship of the KIC with the original Laplace approximation, but prefer the evaluation at the MLE, because it is in line with traditional MLE-based hydrological model selection and parameter estimation routines. Neuman et al. [2012] appreciate that MLBMA “admits but does not require prior information about the parameters” and include prior information in their likelihood optimization routine, which makes it de facto a MAP estimation routine. We strongly advocate the latter variant, because the Laplace approximation originally involves an expansion about the MAP instead of the MLE, and we understand prior information on the parameters as a vital part of Bayesian inference. Therefore, we propose to explicitly evaluate the KIC at the MAP:

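$$\mathrm{KIC@MAP}_k = \underbrace{-2\ln p(\mathbf{y}_0 \mid M_k,\tilde{\mathbf{u}}_k)}_{\text{NLL}} \; \underbrace{-\,2\ln p(\tilde{\mathbf{u}}_k \mid M_k)}_{\text{term 1}} \; \underbrace{-\,N_{p,k}\ln(2\pi)}_{\text{term 2}} \; \underbrace{-\,\ln|\tilde{\boldsymbol{\Sigma}}|}_{\text{term 3}} \tag{15}$$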

We will refer to this formulation as KIC@MAP as opposed to the KIC@MLE (equation (14)) to avoid any confusion within the MLBMA framework, which seems to admit both of the KIC variants discussed here. The evaluation at the MAP is consistent with the Laplace approach to approximate the Bayesian integral and, in case of an actually Gaussian parameter posterior, will yield accurate results; this does not hold if the approximation is evaluated at the MLE. If the assumption of a Gaussian posterior is violated, it needs to be assessed how the different evaluation points affect the already inaccurate approximation. We will investigate the differences in performance between the KIC@MLE and our proposed KIC@MAP in section 4.
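The difference between the two evaluation points can be made concrete with a small sketch. It assumes a hypothetical one-parameter conjugate Gaussian model (not the test case of section 4) with a deliberately informative prior, so that MAP and MLE differ noticeably; all names and numbers are illustrative. The exact value of minus twice the log BME is available here because the marginal distribution of the data is Gaussian.

```python
import math

# Hypothetical toy model (not from the paper): y_i = u + noise,
# noise ~ N(0, SIGMA^2), informative prior u ~ N(M, S^2).
Y = [1.2, 0.8, 1.1, 0.9, 1.0]
SIGMA, M, S = 0.2, 0.0, 0.2
N_S, N_P = len(Y), 1

def log_likelihood(u):
    sse = sum((yi - u) ** 2 for yi in Y)
    return -0.5 * N_S * math.log(2 * math.pi * SIGMA**2) - sse / (2 * SIGMA**2)

def log_prior(u):
    return -0.5 * math.log(2 * math.pi * S**2) - (u - M) ** 2 / (2 * S**2)

def kic(u_est, cov):
    """-2 ln L - 2 ln prior - N_p ln(2 pi) - ln|cov| at the given estimate."""
    return (-2 * log_likelihood(u_est) - 2 * log_prior(u_est)
            - N_P * math.log(2 * math.pi) - math.log(cov))

def exact_minus_2_ln_bme():
    """Data are marginally Gaussian with covariance SIGMA^2 I + S^2 11^T."""
    resid_sum = sum(yi - M for yi in Y)
    resid_sq = sum((yi - M) ** 2 for yi in Y)
    total_var = SIGMA**2 + N_S * S**2
    log_det = (N_S - 1) * math.log(SIGMA**2) + math.log(total_var)
    quad = (resid_sq - S**2 * resid_sum**2 / total_var) / SIGMA**2
    return N_S * math.log(2 * math.pi) + log_det + quad

# MLE and inverse Fisher information (likelihood only)
u_mle, cov_mle = sum(Y) / N_S, SIGMA**2 / N_S
# MAP and posterior variance (likelihood and prior)
precision = N_S / SIGMA**2 + 1 / S**2
u_map, cov_map = (sum(Y) / SIGMA**2 + M / S**2) / precision, 1 / precision

kic_map = kic(u_map, cov_map)
kic_mle = kic(u_mle, cov_mle)
exact = exact_minus_2_ln_bme()
print(kic_map - exact, kic_mle - exact)
```

In this Gaussian setting the KIC@MAP reproduces the exact value up to rounding, whereas the KIC@MLE is offset by an amount that grows with the distance between MLE and MAP.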

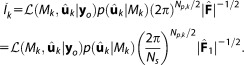

Interpretation Via Occam's Razor

In equation (15), we have distinguished different terms of the Laplace approximation in the formulation of the KIC@MAP. They can be interpreted within the context of BMA, and if the assumption of a Gaussian posterior parameter distribution is satisfied, this represents an interpretation of the ingredients of BME (or more specifically, minus twice the logarithm of BME). It incorporates a measure of goodness of fit (the negative log-likelihood term, NLL) and three penalty terms that account for model dimensionality (dimension of the model's parameter space). These three terms are referred to as Occam factor [MacKay, 1992].

The Occam factor reflects the principle of parsimony or Occam's razor: If any number of competing models shows the same quality of fit, the least complex one should be used to explain the observed effects. Any additional parameter is considered to be fitted to noise in the observed data and might lead to low parameter sensitivities and poor predictive performance (due to little robustness of the estimated parameters). Synthesizing the discussions by Neuman [,], Ye et al. [2004], and Lu et al. [2011], and explicitly transferring them to the expansion about the MAP, we make an attempt to explain the role of the three terms that are contained in the Occam factor.

The parameter prior $p(\tilde{\mathbf{u}}_k \mid M_k)$ (term 1) implicitly penalizes a growing complexity in that it gives a lower probability density to models with larger parameter spaces (larger $N_{p,k}$), since high-dimensional densities have to dilute their total probability mass of unity within a larger space. Thus, a more complex model with its smaller prior parameter probabilities will obtain a higher value of the criterion or a decreased value of BME, which will compromise its chances to rule out its competitors according to Occam's razor.

The opposite is true for $-N_{p,k}\ln(2\pi)$ (term 2): here, an increase in dimensionality yields a decrease of the KIC or an increase in model evidence. This term is actually part of the normalizing factor of a Gaussian prior distribution and thus partially compensates the effect of term 1.

Finally, $-\ln|\tilde{\boldsymbol{\Sigma}}|$ (term 3) accounts for the curvature of the posterior distribution. A strong negative curvature, i.e., a very narrow posterior distribution, represents a high information content in the data with respect to the calibration of the parameters. A narrow posterior leads to a low value for the determinant and thus to a decrease in model evidence or an increase of the KIC. This might seem counter-intuitive at first, but has to be interpreted from the viewpoint that if the data provide a high information content, the resulting likelihood function will be narrow, and thus, its peak value will also be high. The determinant is thus a partially compensating counterpart to the NLL term. If two competing models achieve the same likelihood, but differ in their sensitivity to the data, the one with a smaller sensitivity will be chosen because of its robustness [Ye et al., 2010b].

Bayesian Information Criterion

The Bayesian information criterion (BIC) or Schwarz information criterion [Schwarz, 1978] is a simplification to equation (13) in that it only retains terms that vary with Ns:

$$\mathrm{BIC}_k = -2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k) + N_{p,k}\ln N_s \tag{16}$$

Evaluating this criterion for the MAP $\tilde{\mathbf{u}}_k$ would not be consistent, because the influence of the prior is completely ignored in equation (16). Since only parts of the Occam factor are retained compared to equation (15), the BIC penalizes a model's dimensionality to a different extent. Those differences are not supported by any specific theory. However, the KIC@MLE reduces asymptotically to BIC with growing data set size Ns. The reason is that the prior probability of $\hat{\mathbf{u}}_k$ as well as the normalized Fisher information $\bar{\mathbf{F}}$ do not grow with data set size, but the likelihood term $-2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k)$ and $N_{p,k}\ln N_s$ do, rendering contributions that only grow with $N_{p,k}$ negligible [Neuman, 2003]. The error in approximation by the BIC is therefore expected to reduce to the error made by the KIC for large data set sizes. In section 3.2.6, we will compare the different IC approximations with regard to their penalty terms, and in section 4 we will investigate the convergence behavior of the KIC and BIC in more detail on a synthetic test case.

Applying the KIC or BIC for model selection (as opposed to averaging) is consistent as the assigned weight for the true model (if it is a member of the considered set of models) converges to unity for an infinite data set size. The truncated form (BIC) still seems to perform reasonably well for model identification or explanatory purposes [Koehler and Murphree, 1988]. It is also much less expensive to evaluate than the KIC for models with high-dimensional parameter spaces, since the evaluation of the covariance matrix is not required.

Akaike Information Criterion

The Akaike information criterion (AIC) or, as originally entitled, “an information criterion,” originates from information theory (as opposed to the Bayesian origin of the KIC and BIC), but has frequently been applied in the framework of BMA [e.g., Poeter and Anderson, 2005]. It is derived from the Kullback-Leibler (KL) divergence that measures the loss of information when using an alternative model Mk with a predictive density function $g(\mathbf{Y} \mid M_k)$ instead of the “true” model with predictive density function $f(\mathbf{Y})$, with Y being a random variable from the true density f of the same size Ns as the observed data set $\mathbf{y}_0$:

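$$\begin{aligned} D_{KL}\left[f(\mathbf{Y}),\, g(\mathbf{Y} \mid M_k)\right] &= \int f(\mathbf{Y}) \ln\frac{f(\mathbf{Y})}{g(\mathbf{Y} \mid M_k)}\, d\mathbf{Y} \\ &= E_f\left[\ln f(\mathbf{Y})\right] - E_f\left[\ln g(\mathbf{Y} \mid M_k)\right] \end{aligned} \tag{17}$$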

The first term in the second line of equation (17) is an unknown constant that drops out when comparing differences in the expected KL-information for the competing models in the set [e.g., Kuha, 2004]. Akaike [,] argued that the second term, called relative expected KL-information, can be estimated using the MLE. For reasons not provided here, this estimator is biased by $N_{p,k}$, the number of parameters in the model Mk. Correcting this bias and multiplying by −2 yields the AIC [Burnham and Anderson, 2004]. The AIC formulation contains the NLL term as an expression for the goodness of fit and a penalty term for the number of parameters:

$$\mathrm{AIC}_k = -2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k) + 2N_{p,k} \tag{18}$$

Compared to the BIC in equation (16), the penalty term for the number of parameters $N_{p,k}$ is less severe. For data set sizes $N_s > e^2 \approx 7.4$, BIC favors models with fewer parameters than AIC, since its penalty term $N_{p,k}\ln N_s$ becomes larger than the AIC's $2N_{p,k}$.

For a finite data set size Ns, a second-order bias correction has been suggested [Sugiura, 1978; Hurvich and Tsai, 1989]:

$$\mathrm{AICc}_k = \mathrm{AIC}_k + \frac{2N_{p,k}\,(N_{p,k}+1)}{N_s - N_{p,k} - 1} \tag{19}$$

Among others, Burnham and Anderson [2004] suggest using the corrected formulation for data set sizes $N_s / N_{p,k} < 40$. For increasing data set sizes, the AICc converges to the AIC.
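The three penalty formulations can be compared directly in a short sketch. The function names are illustrative; each function takes the NLL term (minus twice the maximized log-likelihood) and adds the respective penalty from the standard definitions of AIC, AICc, and BIC.

```python
import math

def aic(m2_log_like, n_p):
    """AIC: NLL term plus 2 * number of parameters."""
    return m2_log_like + 2 * n_p

def aicc(m2_log_like, n_p, n_s):
    """AIC with second-order bias correction for finite data set size."""
    if n_s - n_p - 1 <= 0:
        raise ValueError("AICc requires N_s > N_p + 1")
    return aic(m2_log_like, n_p) + 2 * n_p * (n_p + 1) / (n_s - n_p - 1)

def bic(m2_log_like, n_p, n_s):
    """BIC: NLL term plus N_p * ln(N_s)."""
    return m2_log_like + n_p * math.log(n_s)

# Smallest data set size at which the BIC penalty exceeds the AIC penalty
crossover = next(n for n in range(1, 100) if bic(0.0, 1, n) > aic(0.0, 1))
print(crossover)

# The AICc correction vanishes for large data sets
for n_s in (15, 100, 10_000):
    print(n_s, aicc(0.0, 2, n_s) - aic(0.0, 2))
```

Setting the NLL term to zero isolates the penalties, which is sufficient here because all three criteria share the same goodness-of-fit term.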

The posterior Akaike model weight is derived from:

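$$w_k = \frac{\exp\left(-\tfrac{1}{2}\Delta_k\right)}{\sum_{i=1}^{N_m} \exp\left(-\tfrac{1}{2}\Delta_i\right)} \tag{20}$$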

with $\Delta_k = \mathrm{AIC}_k - \mathrm{AIC}_{\min}$ or $\Delta_k = \mathrm{AICc}_k - \mathrm{AICc}_{\min}$, respectively. Based on its theoretical derivation, the absolute value of AICk or AICck has no explanatory power [Burnham and Anderson, 2004]; only the difference $\Delta_k$ with respect to the lowest AICk or AICck can be interpreted. The BIC or KIC, in contrast, are direct approximations to BME (equation (7)) and therefore yield values that are also meaningful in absolute terms.
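A minimal sketch of the weight computation, assuming equal prior model probabilities; the function name and the example values are illustrative. Subtracting the minimum before exponentiating also protects against numerical underflow for large criterion values.

```python
import math

def ic_weights(ic_values):
    """Model weights from IC differences with respect to the lowest value."""
    ic_min = min(ic_values)
    relative = [math.exp(-0.5 * (value - ic_min)) for value in ic_values]
    total = sum(relative)
    return [r / total for r in relative]

# Three hypothetical models with AIC values 100, 102, and 110
weights = ic_weights([100.0, 102.0, 110.0])
print(weights)

# Only differences matter: shifting all values leaves the weights unchanged
shifted = ic_weights([0.0, 2.0, 10.0])
print(shifted)
```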

The AIC seems to perform well for predictive purposes, with a tendency to over-fit observed data [see e.g., Koehler and Murphree, 1988; Claeskens and Hjort, 2008]. This tendency is supposedly less severe for the bias-corrected AICc. Both versions of the AIC do not converge to the true model for an infinite data set size. The reason is that, with an increasing amount of data, the model chosen by AIC(c) will increase in complexity, potentially beyond the complexity of the true model (if it exists) [Burnham and Anderson, 2004].

The KIC is expected to provide the most consistent results among the ICs investigated here because it is based on the approximation closest to the true equations. Applications and comparisons of KIC, BIC, and AIC can be found in Ye et al. [2008], Tsai and Li [2010], Singh et al. [2010], Riva et al. [2011], Morales-Casique et al. [2010], and Lu et al. [2011]. In the following, we will summarize the main theoretical differences in the BME approximation by these ICs.

Theoretical Comparison of IC Approximations to BME

Based on equation (10), the Laplace approximated BME can be divided into the likelihood and the Occam factor OF (see section 3.2.3):

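$$p(\mathbf{y}_0 \mid M_k) \approx p(\mathbf{y}_0 \mid M_k,\tilde{\mathbf{u}}_k) \cdot \underbrace{p(\tilde{\mathbf{u}}_k \mid M_k)\,(2\pi)^{N_{p,k}/2}\,|\tilde{\boldsymbol{\Sigma}}|^{1/2}}_{\mathrm{OF}} \tag{21}$$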

The ICs analyzed here all share the same approximation for the goodness of fit term based on the MLE. The only exception is the KIC@MAP, which is evaluated at the MAP instead of at the MLE. However, they all differ in their approximation to the OF. The OF represents the penalty for the dimensionality of a model or what we call the sharpness of Occam's razor. For the different ICs, it is given by:

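$$\mathrm{OF} \approx \begin{cases} p(\tilde{\mathbf{u}}_k \mid M_k)\,(2\pi)^{N_{p,k}/2}\,|\tilde{\boldsymbol{\Sigma}}|^{1/2} & \text{KIC@MAP} \\ p(\hat{\mathbf{u}}_k \mid M_k)\,(2\pi)^{N_{p,k}/2}\,|\hat{\boldsymbol{\Sigma}}|^{1/2} & \text{KIC@MLE} \\ N_s^{-N_{p,k}/2} & \text{BIC} \\ \exp\left(-N_{p,k}\right) & \text{AIC} \\ \exp\left(-\dfrac{N_{p,k}\,N_s}{N_s - N_{p,k} - 1}\right) & \text{AICc} \end{cases} \tag{22}$$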

The OF as approximated by KIC does not explicitly account for data set size, yet Ns typically influences the curvature of the posterior probability (or the likelihood function) and thus implicitly affects $\tilde{\boldsymbol{\Sigma}}$ (or $\hat{\boldsymbol{\Sigma}}$). In contrast, AICc and BIC explicitly take data set size Ns into account, but do not evaluate the sensitivity of the calibrated parameter set via the curvature. The effects of these differences on the accuracy of the BME approximation will be demonstrated by way of example on two test case applications in sections 4 and 5.
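The Occam factors of the BIC and AIC(c) follow from reading each criterion as minus twice the logarithm of an approximated BME, so that the penalty term maps to a multiplicative factor on the likelihood. The short derivation below is a sketch of that step; the label $\mathrm{penalty}_k$ is introduced here only for illustration.

```latex
\begin{align*}
\mathrm{IC}_k &= -2\ln p(\mathbf{y}_0 \mid M_k,\hat{\mathbf{u}}_k) + \mathrm{penalty}_k
  \;\Rightarrow\; \mathrm{OF}_k = \exp\!\left(-\tfrac{1}{2}\,\mathrm{penalty}_k\right) \\
\text{BIC:}\quad \mathrm{penalty}_k &= N_{p,k}\ln N_s
  \;\Rightarrow\; \mathrm{OF}_k = N_s^{-N_{p,k}/2} \\
\text{AIC:}\quad \mathrm{penalty}_k &= 2N_{p,k}
  \;\Rightarrow\; \mathrm{OF}_k = \exp\!\left(-N_{p,k}\right) \\
\text{AICc:}\quad \mathrm{penalty}_k &= 2N_{p,k} + \frac{2N_{p,k}(N_{p,k}+1)}{N_s - N_{p,k} - 1}
  = \frac{2N_{p,k}N_s}{N_s - N_{p,k} - 1}
  \;\Rightarrow\; \mathrm{OF}_k = \exp\!\left(-\frac{N_{p,k}N_s}{N_s - N_{p,k} - 1}\right)
\end{align*}
```

As a numerical illustration, $N_s = 15$ and $N_{p,k} = 2$ give Occam factors of about 0.067 (BIC), 0.135 (AIC), and 0.082 (AICc).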

Numerical Evaluation

Numerical evaluation offers a second alternative to determine BME, if no analytical solution is available or if one mistrusts the approximate character of the ICs. A comprehensive review of numerical methods to evaluate the Bayesian integral (equation (7)) is given by Evans and Swartz [1995]. In the following, we will briefly review selected state-of-the-art methods and discuss their strengths and limitations. Note that conventional efficient integration schemes (e.g., adaptive Gaussian quadrature) are limited to low-dimensional applications [Kass and Raftery, 1995]. In this study, we focus on numerical methods that can also be applied to highly complex models (models with large parameter spaces) in order to provide a useful discussion for a broad range of research fields and applications.

Monte Carlo Integration

Simple Monte Carlo integration [Hammersley, 1960] evaluates the integrand at randomly chosen points $\mathbf{u}_{k,i}$ in parameter space. These parameter sets $\mathbf{u}_{k,i}$ are randomly drawn from their prior distribution $p(\mathbf{u}_k \mid M_k)$. The integral (or expected value over parameter space, cf. equation (4)) is then determined as the mean value of the evaluated likelihoods (sometimes referred to as arithmetic mean approach):

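$$p(\mathbf{y}_0 \mid M_k) \approx \frac{1}{N}\sum_{i=1}^{N} p(\mathbf{y}_0 \mid M_k,\mathbf{u}_{k,i}) \tag{23}$$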

with the number of Monte Carlo (MC) realizations N. For large ensemble sizes N and a good overlap of the parameter prior and the likelihood function, this method will provide very accurate results. For high-dimensional parameter spaces, however, a sufficient (converging) ensemble might come at a high or even prohibitive computational cost. If the likelihood function is sharp compared to the prior distribution, only very few integration points will contribute a high likelihood value to the integral (making $p(\mathbf{y}_0 \mid M_k,\mathbf{u}_k)$ a very skewed variable), and the numerical uncertainty in the approximated integral might be large.
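The arithmetic mean approach can be sketched in a few lines. The one-parameter toy model, its values, and the function names are assumptions for illustration only. The average is taken in log space (log-sum-exp) to avoid numerical underflow for small likelihoods, and the result is compared with the analytical BME that exists for this linear-Gaussian toy case.

```python
import math
import random

# Hypothetical toy model (not from the paper): y_i = u + noise,
# noise ~ N(0, SIGMA^2), prior u ~ N(M, S^2).
Y = [1.2, 0.8, 1.1, 0.9, 1.0]
SIGMA, M, S = 0.2, 0.0, 1.0

def log_likelihood(u):
    sse = sum((yi - u) ** 2 for yi in Y)
    return -0.5 * len(Y) * math.log(2 * math.pi * SIGMA**2) - sse / (2 * SIGMA**2)

def log_mean_exp(values):
    """ln of the arithmetic mean of exp(values), computed stably."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values) / len(values))

def mc_log_bme(n_samples, rng):
    """Simple MC integration: average the likelihood over prior draws."""
    log_likes = [log_likelihood(rng.gauss(M, S)) for _ in range(n_samples)]
    return log_mean_exp(log_likes)

def exact_log_bme():
    """Analytical ln BME: data are Gaussian with cov SIGMA^2 I + S^2 11^T."""
    n = len(Y)
    resid_sum = sum(yi - M for yi in Y)
    resid_sq = sum((yi - M) ** 2 for yi in Y)
    total_var = SIGMA**2 + n * S**2
    log_det = (n - 1) * math.log(SIGMA**2) + math.log(total_var)
    quad = (resid_sq - S**2 * resid_sum**2 / total_var) / SIGMA**2
    return -0.5 * (n * math.log(2 * math.pi) + log_det + quad)

estimate = mc_log_bme(50_000, random.Random(1))
print(estimate, exact_log_bme())
```

Here the likelihood is much narrower than the prior, so only a small fraction of the prior draws contributes noticeably to the mean, which is the skewness issue described above.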

Monte Carlo Integration With Importance Sampling

To reduce computational effort and improve convergence, importance sampling [Hammersley et al., 1965] aims at a more efficient sampling strategy. Instead of drawing random realizations from the prior parameter distribution, integration points are drawn from any arbitrary distribution that is more similar to the posterior distribution. Thus, the mass of the integral will be detected more likely by the sampling points. When drawing from a different distribution q than the prior distribution p, the integrand in equation (7) must be expanded by q / q. This modifies equation (23) to:

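$$p(\mathbf{y}_0 \mid M_k) \approx \frac{1}{N}\sum_{i=1}^{N} w_i\, p(\mathbf{y}_0 \mid M_k,\mathbf{u}_{k,i}) \tag{24}$$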

with weights $w_i = p(\mathbf{u}_{k,i} \mid M_k) / q(\mathbf{u}_{k,i})$. The improvement achieved by importance sampling compared to simple MC integration will greatly depend on the choice of the importance function q.
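A sketch of importance sampling for the same kind of toy problem follows. The model, the names, and in particular the choice of q (a Gaussian centered at the MAP with three times the posterior standard deviation) are assumptions for illustration, not the settings used in this study. Each likelihood is weighted by the ratio of prior density to importance density.

```python
import math
import random

# Hypothetical toy model (not from the paper): y_i = u + noise,
# noise ~ N(0, SIGMA^2), prior u ~ N(M, S^2).
Y = [1.2, 0.8, 1.1, 0.9, 1.0]
SIGMA, M, S = 0.2, 0.0, 1.0

def log_normal_pdf(x, mean, std):
    return -0.5 * math.log(2 * math.pi * std**2) - (x - mean) ** 2 / (2 * std**2)

def log_likelihood(u):
    return sum(log_normal_pdf(yi, u, SIGMA) for yi in Y)

def log_mean_exp(values):
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values) / len(values))

def importance_log_bme(n_samples, q_mean, q_std, rng):
    """Average of likelihood * (prior / q) over draws from q."""
    terms = []
    for _ in range(n_samples):
        u = rng.gauss(q_mean, q_std)
        log_weight = log_normal_pdf(u, M, S) - log_normal_pdf(u, q_mean, q_std)
        terms.append(log_likelihood(u) + log_weight)
    return log_mean_exp(terms)

def exact_log_bme():
    """Analytical ln BME: data are Gaussian with cov SIGMA^2 I + S^2 11^T."""
    n = len(Y)
    resid_sum = sum(yi - M for yi in Y)
    resid_sq = sum((yi - M) ** 2 for yi in Y)
    total_var = SIGMA**2 + n * S**2
    log_det = (n - 1) * math.log(SIGMA**2) + math.log(total_var)
    quad = (resid_sq - S**2 * resid_sum**2 / total_var) / SIGMA**2
    return -0.5 * (n * math.log(2 * math.pi) + log_det + quad)

# Assumed importance function: centered at the MAP, wider than the posterior
precision = len(Y) / SIGMA**2 + 1 / S**2
u_map = (sum(Y) / SIGMA**2 + M / S**2) / precision
q_std = 3.0 / math.sqrt(precision)

estimate = importance_log_bme(5_000, u_map, q_std, random.Random(2))
print(estimate, exact_log_bme())
```

Choosing q somewhat wider than the posterior keeps the weighted integrand bounded, which is one common way to limit the variance of the estimate.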

Monte Carlo Integration With Posterior Sampling

Developing this idea further, it could be most advantageous to draw parameter realizations from the posterior distribution $p(\mathbf{u}_k \mid \mathbf{y}_0, M_k)$ in order to capture the full mass of the integral. Sampling from the posterior distribution is possible, e.g., with the MCMC method [Hastings, 1970].

For posterior sampling, the approximation to the integral reduces to the harmonic mean of likelihoods [Newton and Raftery, 1994], also referred to as the harmonic mean approach:

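$$p(\mathbf{y}_0 \mid M_k) \approx \left[\frac{1}{N}\sum_{i=1}^{N} \frac{1}{p(\mathbf{y}_0 \mid M_k,\mathbf{u}_{k,i})}\right]^{-1} \tag{25}$$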

Equation (25) can be subject to numerical instabilities, due to small likelihoods that may corrupt the evaluation of the harmonic mean.

According to Jensen's inequality [Jensen, 1906], sampling exclusively from the posterior parameter distribution yields a biased estimator that overestimates BME. Thus, the harmonic mean approach should be seen as a trade-off between accuracy and computational effort. In order to avoid the instabilities of the harmonic mean approach by solving equation (6) instead, it would be necessary to estimate the posterior parameter probability density from the generated ensemble through kernel density estimators [e.g., Härdle, 1991], which is only possible for low-dimensional parameter spaces. We therefore do not investigate this alternative option further within this study.
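The harmonic mean approach can be sketched as follows. As a simplifying assumption, posterior realizations are drawn directly from the known Gaussian posterior of a hypothetical conjugate toy model instead of being generated by MCMC; all names and values are illustrative. Working with negative log-likelihoods in log space mitigates, but does not remove, the sensitivity to rare small likelihood values.

```python
import math
import random

# Hypothetical toy model (not from the paper): y_i = u + noise,
# noise ~ N(0, SIGMA^2), prior u ~ N(M, S^2).
Y = [1.2, 0.8, 1.1, 0.9, 1.0]
SIGMA, M, S = 0.2, 0.0, 1.0

def log_likelihood(u):
    sse = sum((yi - u) ** 2 for yi in Y)
    return -0.5 * len(Y) * math.log(2 * math.pi * SIGMA**2) - sse / (2 * SIGMA**2)

def log_mean_exp(values):
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values) / len(values))

def harmonic_log_bme(n_samples, post_mean, post_std, rng):
    """ln of the harmonic mean of likelihoods over posterior draws."""
    neg_log_likes = [-log_likelihood(rng.gauss(post_mean, post_std))
                     for _ in range(n_samples)]
    # Harmonic mean = 1 / mean(1 / L), hence the leading minus sign
    return -log_mean_exp(neg_log_likes)

def exact_log_bme():
    """Analytical ln BME: data are Gaussian with cov SIGMA^2 I + S^2 11^T."""
    n = len(Y)
    resid_sum = sum(yi - M for yi in Y)
    resid_sq = sum((yi - M) ** 2 for yi in Y)
    total_var = SIGMA**2 + n * S**2
    log_det = (n - 1) * math.log(SIGMA**2) + math.log(total_var)
    quad = (resid_sq - S**2 * resid_sum**2 / total_var) / SIGMA**2
    return -0.5 * (n * math.log(2 * math.pi) + log_det + quad)

# Exact conjugate posterior stands in for an MCMC ensemble (assumption)
precision = len(Y) / SIGMA**2 + 1 / S**2
post_mean = (sum(Y) / SIGMA**2 + M / S**2) / precision
post_std = 1 / math.sqrt(precision)

estimate = harmonic_log_bme(20_000, post_mean, post_std, random.Random(3))
print(estimate, exact_log_bme())
```

With a prior that is much wider than the posterior, as here, the estimate converges slowly because the low-likelihood tails that dominate the sum are rarely sampled.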

Integration in Likelihood Space With Nested Sampling

The main challenge in evaluating BME lies in sufficiently sampling high-dimensional parameter spaces. A promising approach which avoids this challenge and instead samples the one-dimensional likelihood space is called nested sampling [Skilling, 2006]. The integral to obtain BME is written as:

$$p(\mathbf{y}_0 \mid M_k) = \int L(Z)\, dZ \tag{26}$$

where Z represents prior mass, with $dZ = p(\mathbf{u}_k \mid M_k)\, d\mathbf{u}_k$. It is solved by discretizing into m likelihood threshold values with the sequence $L_1 < L_2 < \dots < L_m$ and summing up over the corresponding prior mass pieces $Z_j$ according to a numerical integration rule. How to find subsequent likelihood thresholds is described in Skilling [2006].

One of the remaining challenges for this very recent approach lies in finding conforming samples above the current likelihood threshold. We follow Elsheikh et al. [2013] in utilizing a short random walk Markov chain starting from a random sample that overcame the previous threshold. However, rather than using the ratio of likelihoods as acceptance distribution, we take the ratio of prior probabilities, to ensure that new samples still conform with their prior. Another challenge lies in ending the procedure with a suitable termination criterion (e.g., stop if the increase in BME per iteration has flattened out or if the likelihood threshold cannot be overcome within a maximum number of MCMC steps).

If the prior mass enclosing a specific likelihood threshold was known, the value of BME could be determined as accurately as the integration scheme allows. However, the fact that the real prior mass pieces Zj are unknown introduces a significant amount of uncertainty into the procedure, which reduces its precision. To quantify the resulting numerical uncertainty, an MC simulation over randomly chosen prior mass shrinkage factors should be performed [Skilling, 2006].
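The following is a deliberately simplified sketch of nested sampling on a hypothetical one-parameter toy model. It departs from the procedure described above in two labeled ways: new samples above the threshold are found by plain rejection sampling from the prior rather than by a constrained random walk, and the prior mass is shrunk by its expected factor exp(−1/n_live) per iteration instead of by randomly drawn shrinkage factors. All names and values are assumptions for illustration.

```python
import math
import random

# Hypothetical toy model (not from the paper): y_i = u + noise,
# noise ~ N(0, SIGMA^2), prior u ~ N(M, S^2).
Y = [1.2, 0.8, 1.1, 0.9, 1.0]
SIGMA, M, S = 0.2, 0.0, 1.0
N_S, SUM_Y, SUM_Y2 = len(Y), sum(Y), sum(yi**2 for yi in Y)
LOG_NORM = -0.5 * N_S * math.log(2 * math.pi * SIGMA**2)

def log_likelihood(u):
    sse = SUM_Y2 - 2 * u * SUM_Y + N_S * u**2
    return LOG_NORM - sse / (2 * SIGMA**2)

def log_add_exp(a, b):
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    peak = max(a, b)
    return peak + math.log(math.exp(a - peak) + math.exp(b - peak))

def nested_sampling_log_bme(n_live, rng, tol=0.005, max_tries=200_000):
    # Only the log-likelihoods of the live points are needed for the integral
    live = [log_likelihood(rng.gauss(M, S)) for _ in range(n_live)]
    log_bme = -math.inf
    log_x = 0.0  # ln of the prior mass still enclosed by the threshold
    log_shell = math.log1p(-math.exp(-1.0 / n_live))  # ln(1 - shrinkage)
    while True:
        worst = min(range(n_live), key=live.__getitem__)
        threshold = live[worst]
        # Assumption: rejection sampling replaces the constrained random walk
        for _ in range(max_tries):
            candidate = log_likelihood(rng.gauss(M, S))
            if candidate > threshold:
                break
        else:
            break  # threshold could not be overcome: terminate
        # Add likelihood threshold times the prior mass piece just removed
        log_bme = log_add_exp(log_bme, threshold + log_x + log_shell)
        log_x -= 1.0 / n_live  # expected (deterministic) shrinkage
        live[worst] = candidate
        # Stop if even max likelihood * remaining mass adds less than tol
        if max(live) + log_x < log_bme + math.log(tol):
            break
    # Remaining live points share the remaining prior mass equally
    peak = max(live)
    log_mean_live = peak + math.log(sum(math.exp(v - peak) for v in live) / n_live)
    return log_add_exp(log_bme, log_mean_live + log_x)

def exact_log_bme():
    """Analytical ln BME: data are Gaussian with cov SIGMA^2 I + S^2 11^T."""
    resid_sum = sum(yi - M for yi in Y)
    resid_sq = sum((yi - M) ** 2 for yi in Y)
    total_var = SIGMA**2 + N_S * S**2
    log_det = (N_S - 1) * math.log(SIGMA**2) + math.log(total_var)
    quad = (resid_sq - S**2 * resid_sum**2 / total_var) / SIGMA**2
    return -0.5 * (N_S * math.log(2 * math.pi) + log_det + quad)

estimate = nested_sampling_log_bme(100, random.Random(0))
print(estimate, exact_log_bme())
```

Rejection from the prior becomes very inefficient as the enclosed prior mass shrinks, which is why a constrained sampler is needed in realistic, higher-dimensional applications.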

Conclusions From Theoretical Comparison

From our comparison of the underlying assumptions for the nine BME evaluation methods considered here, we conclude that out of the ICs, the KIC@MAP is the most consistent one with BMA theory. It represents the true solution if the assumptions of the Laplace approximation hold (i.e., if the posterior parameter distribution is Gaussian). The other ICs considered here represent simplifications of this approach or, in the case of the AIC(c), are derived from a different theoretical perspective and are therefore expected to show an inferior approximation quality. Among the numerical methods considered here, simple MC integration is the most generally applicable approach because it is bias-free and spares any assumptions on the shape of the parameter distribution, but is also the computationally most expensive one. The other numerical methods vary in their efficiency, but are expected to yield similarly accurate results, except for MC integration with posterior sampling, which yields a biased estimate. We will test these expectations on a synthetic setup in the following section. The underlying assumptions of the nine BME evaluation methods analyzed in this study are summarized in Table 4.

Benchmarking on a Synthetic Test Case

The nine methods to solve the Bayesian integral (equation (7)) differ in their accuracy and computational effort, as described in the previous section. To illustrate the differences in accuracy under completely controlled conditions, we apply the methods presented in section 3 to an oversimplified synthetic example. In a first step, we consider a setup with a linear model where an analytical solution exists. We create an ideal premise for the KIC@MAP and its variants (KIC@MLE and BIC) by using a Gaussian parameter posterior, which fulfills their core assumption. We designed this test case as a best-case scenario regarding the performance of these ICs: there is no less challenging case in which the information criteria could possibly perform better. In a second step, we also consider nonlinear models of different complexity that violate this core assumption. Since in this case no analytical solution exists, we use brute-force MC integration as reference for benchmarking. We designed this test case as an intermediate step toward real-world applications that typically entail nonlinear models and a higher number of parameters.

Setup and Implementation

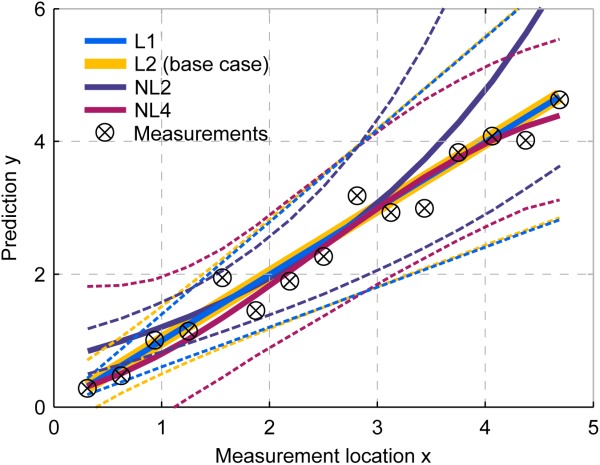

In the first step, a linear model relates bivariate Gaussian distributed parameters $\mathbf{u}$ (slope and intercept of a linear function) to multi-Gaussian distributed predictions y at measurement locations x. This linear model is tested against a synthetic data set. The synthetic truth underlying the data set is generated from the same model, but with slightly different parameter values than the prior mean. To obtain a synthetic data set, a random measurement error is added according to a Gaussian distribution $\mathcal{N}(\mathbf{0}, \mathbf{R})$.

For this artificial setup, BME can be determined analytically according to equation (9) (determining BME via linear uncertainty propagation) or equation (6) (solving Bayes' theorem for BME). This exact value is used as reference for the approximate methods presented in section 3. In this simple case, the MAP, $\tilde{\mathbf{u}}$, and the MLE, $\hat{\mathbf{u}}$, are known analytically. Also, the corresponding covariances $\tilde{\boldsymbol{\Sigma}}$ and $\hat{\boldsymbol{\Sigma}}$ are known. We allow the mathematical approximations to take advantage of this knowledge by evaluating them at these exact values. Normally, these quantities have to be approximated by optimization algorithms first. This initial step represents the computational effort needed to determine BME with mathematical approximations in the form of ICs, since the evaluation of their algebraic equations itself is very cheap. Here we are not concerned with the potential challenge of finding these parameter estimates, but merely wish to remind the reader of this fact.

The numerical evaluation schemes do not take advantage of the linearity of the test case. Their computational effort is determined by the number of required parameter realizations, and hence by the number of required model evaluations. To assess the expected improvement in accuracy by investing in computational effort, we repeat the determination of BME for increasing ensemble sizes (MC integration, importance sampling, sampling from the posterior) or increasing sizes of the active set (nested sampling). To determine the lowest reasonable ensemble size, we investigate the convergence of the BME approximation for each method.

Simple MC integration is performed based on ensembles of 2000–1,000,000 parameter realizations drawn from the Gaussian prior. MC integration with importance sampling is performed based on the same ensemble sizes. The sampling distribution for importance sampling is chosen to be Gaussian with a mean value equal to the MAP and a variance equal to the prior variance. Realizations of the posterior parameter distribution for MC integration with posterior sampling are generated by the adaptive MCMC scheme DREAM (differential evolution adaptive Metropolis) [Vrugt et al., 2008]. With DREAM, BME is approximated based on ensembles of 5000–1,000,000 parameter realizations. Convergence of the MCMC runs was monitored by the Gelman-Rubin criterion [Gelman and Rubin, 1992] and we chose to take the final 25% of the converged Markov chains as posterior ensemble.

Nested sampling is performed with initial ensemble sizes of 10–10,000. A nested sampling run is complete if one of the two following termination criteria is reached. The first termination criterion stops the calculation if the current likelihood threshold could not be overcome within 100 MCMC steps with a scaling vector . The second termination criterion stops the calculation if the current BME estimate would not increase by more than 0.5% even if the current maximum likelihood value were multiplied by the total remaining prior mass. This results in total ensemble sizes (summed over all iterations) of roughly 1000–1,000,000.

As a base case, we generate a synthetic data set of size Ns = 15. Figure 1 shows the setup for the synthetic test case. The parameters used in the synthetic example are summarized in Table 1.

Figure 1.

Synthetic test case setup. Measurements marked in black, prior estimate of linear (L1, L2) and nonlinear (NL2, NL4) models in solid lines, 95% Bayesian prediction confidence intervals in dashed lines of the respective color.

Table 1.

Definition of Parameters Used in Different Scenarios of the Synthetic Test Caseᵃ

| Parameter | Symbol | Value |
|---|---|---|
| Base Case (L2) | | |
| Prior mean | | |
| Prior covariance | | |
| Data set size | Ns | Ns = 15 |
| Meas. error covariance | R | ; Rij = 0 |
| Varied Data Set Size | | |
| Data set size | Ns | |
| Varied Prior Width | | |
| Prior covariance | | |
| Varied Prior/Likelihood Overlap | | |
| Prior mean | | |
| Varied Model Structure | | |
| Prior mean (L1) | | |
| Prior variance (L1) | s² | |
| Prior mean (NL2) | | |
| Prior covariance (NL2) | | |
| Prior mean (NL4) | | |
| Prior covariance (NL4) | | |

ᵃ For variations of the base case, only differences to the base case parameters are listed.

In our synthetic test case, the computational effort required for one model run is very low. This allows us to repeat the entire analysis for the base case and to average over 500 runs for each ensemble size in order to quantify the inherent numerical uncertainty in the results obtained from the numerical approximation methods. In the case of nested sampling, we additionally average over 200 random realizations of the prior mass shrinkage factor per run.
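The repetition strategy described above can be sketched as follows: for each ensemble size, the MC estimate is repeated many times, and the mean relative error and its 95% interval are reported. The one-parameter problem (standard normal prior, a single observation `d` with error standard deviation `s`), the ensemble sizes, and the number of repetitions are assumptions made for this sketch only.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical one-parameter problem: prior N(0, 1), one observation d with error sd s.
d, s = 0.3, 0.5

def likelihood(theta):
    """Gaussian likelihood of the observation d for each parameter realization."""
    return np.exp(-0.5 * ((d - theta) / s) ** 2) / np.sqrt(2.0 * np.pi * s**2)

# Analytical BME for this conjugate setup: d ~ N(0, 1 + s^2).
bme_exact = np.exp(-0.5 * d**2 / (1.0 + s**2)) / np.sqrt(2.0 * np.pi * (1.0 + s**2))

n_repeats = 200          # repetitions per ensemble size (assumption)
spread = {}
for n in (400, 4_000, 40_000):
    # One simple MC estimate per repetition, each with a fresh prior ensemble.
    estimates = np.array([likelihood(rng.standard_normal(n)).mean()
                          for _ in range(n_repeats)])
    rel_err = estimates / bme_exact - 1.0
    lo, hi = np.percentile(rel_err, [2.5, 97.5])
    spread[n] = rel_err.std()
    print(f"N = {n:6d}: mean rel. error = {rel_err.mean():+.4f}, "
          f"95% interval = [{lo:+.4f}, {hi:+.4f}]")
```

In this sketch, a hundredfold increase in ensemble size should narrow the interval by roughly a factor of ten, which is the behavior expected from simple MC integration.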

With the setup described above, we compare the performance of the different approximation methods in quantifying BME. Additionally, we study by scenario variations the impact of varied data set size and varied prior information (different mean values and variances of parameters) on the outcome of BME and on the performance of the different methods. The behavior of the mathematical approximations for small or large data set sizes has been touched upon in the literature [e.g., Burnham and Anderson, 2004; Lu et al., 2011]. We will underpin these discussions by systematically increasing the data set size from Ns = 2 to Ns = 50. Again, the same model is used to generate the synthetic truth as in the base case, and the measurements are taken at equidistant locations on the same interval of x. To show the general behavior of the approximation methods and to eliminate artifacts caused by a specific outcome of measurement error, we generate 200,000 perturbed data sets for each data set size and average over the results of these realizations.

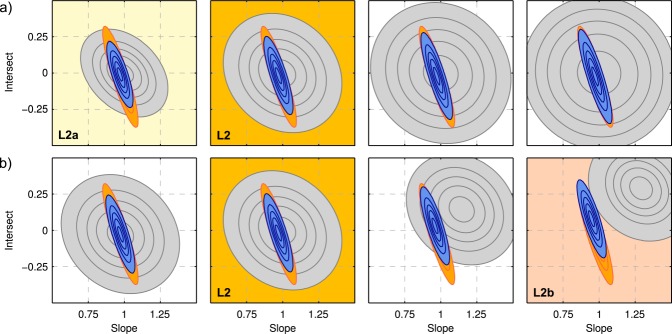

To our knowledge, the impact of prior information on the performance of BME approximation methods has not yet been studied in such a systematic approach. With the help of our synthetic test case, we can assess and then discuss this impact in a rigorous manner. Figure 2a visualizes the prior parameter densities, the likelihood function, and the posterior densities for a range of prior widths; Figure 2b shows the same for different prior/likelihood overlaps. The second column represents the base case as described above. Variations in prior width are expressed as fractions of the base case variances (the covariance is not varied); variations in the overlap of prior and likelihood are measured as the distance between the prior mean and the MLE. The varied parameter values are also listed in Table 1.

Figure 2.

Prior densities (gray), likelihood (orange), and posterior densities (blue) for the different scenarios of the synthetic test case. Contour lines represent 10–90% Bayesian confidence intervals: (a) variations of prior width (fractions of base case variance shown here: 0.5,…,5), (b) variations of prior/likelihood overlap (distance between prior mean and MLE shown here: 0,…,0.3).

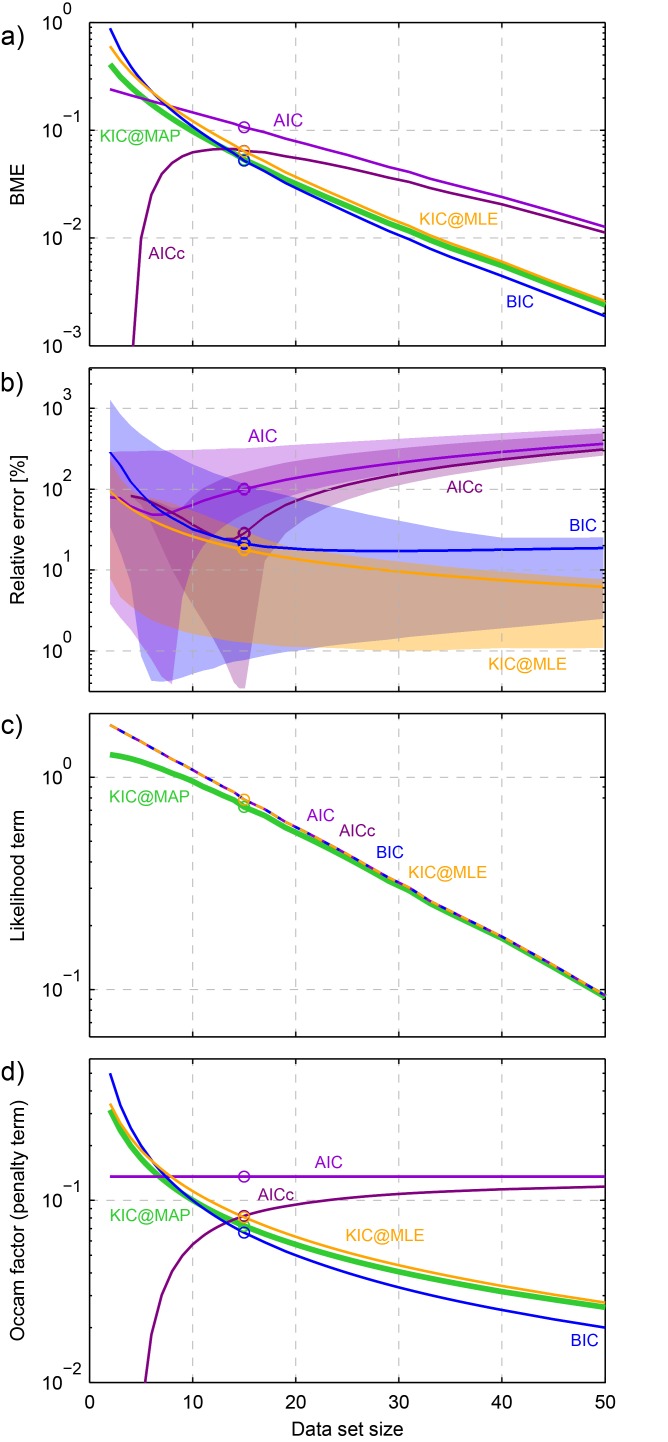

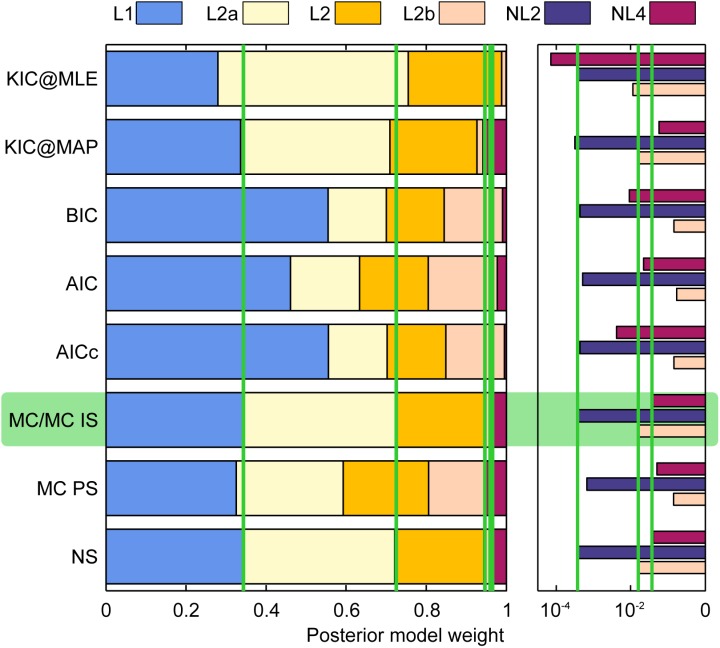

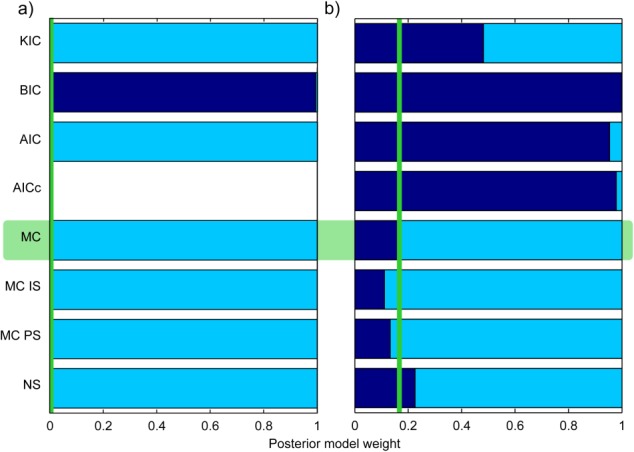

Besides the factors explained above, the model structure and the dimensionality of the models' parameter spaces are expected to influence the performance of the different approximation methods. In the first step, we consider varying complexity with regard to the allowed parameter ranges as defined by the prior. In the second step, we also consider models with varied structure. Differences in model structure can manifest themselves in differences in the dimensionality of the model (i.e., the number of parameters), in the type of model (linear versus nonlinear), or in both. We consider all of these options here by including a linear model with one parameter (smaller number of parameters, but same model type; L1), a weakly nonlinear model with two parameters (same number of parameters, but different model type; NL2), and a nonlinear model with four parameters (higher number of parameters and different model type; NL4) into the analysis. All prior distributions are chosen to be Gaussian with their mean and covariance values given in Table 1. For nonlinear models, no analytical solution exists. In order to still be able to assess the differences in approximation quality, we generate a reference solution with brute-force MC integration using a very large ensemble of 10 million realizations per model. We choose this exceptionally large number of realizations to obtain a very reliable estimate of BME as a reference. In a numerical convergence analysis (bootstrapping) [Efron, 1979], we determined the variance upon resampling of the ensemble members, which confirmed that the BME estimate varies by less than 0.001%. This variation is insignificant in relation to the lowest error produced by the compared BME evaluation methods, which is two orders of magnitude larger. The average BME approximation quality (and its scatter) achieved by the other numerical methods is based on 500 repeated runs with ensemble sizes of 50,000, which might be considered a reasonable compromise between accuracy and computational effort based on the findings from the first step of our synthetic test case. From the posterior parameter sample generated by DREAM, we determine the MAP and the covariance matrix needed for the evaluation of the KIC@MAP. We obtain the respective ML statistics for the KIC@MLE from a DREAM run with uninformative prior distributions to cancel out the influence of the prior. We also evaluate the AIC(c) and the BIC at this parameter set.
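A bootstrap check of this kind can be sketched as follows. The lognormal likelihood values are a hypothetical stand-in for the likelihoods of a brute-force MC ensemble, and the ensemble size and number of bootstrap replicates are assumptions kept small for illustration.

```python
import numpy as np

rng = np.random.default_rng(7)

# Hypothetical stand-in for the likelihood values of a brute-force MC ensemble.
n = 100_000
likelihoods = rng.lognormal(mean=-3.0, sigma=1.0, size=n)
bme_hat = likelihoods.mean()               # simple MC estimate of BME

# Bootstrap: resample ensemble members with replacement and re-estimate BME.
n_boot = 200
boot = np.empty(n_boot)
for b in range(n_boot):
    idx = rng.integers(0, n, size=n)
    boot[b] = likelihoods[idx].mean()

# Relative variability of the estimate upon resampling.
cv_boot = boot.std(ddof=1) / bme_hat
# Cross-check against the standard error expected from the Central Limit Theorem.
cv_clt = likelihoods.std(ddof=1) / np.sqrt(n) / bme_hat
print(f"bootstrap coefficient of variation: {cv_boot:.3%}")
print(f"CLT-based coefficient of variation: {cv_clt:.3%}")
```

If the two numbers agree, the resampling variability is a usable measure of how reliable the reference BME is; for heavy-tailed likelihood distributions the agreement can break down, and larger ensembles are then needed.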

For all cases (base case, varied data set size, varied prior information, varied model structure), the error in BME approximation is quantified as a relative error

| (27) |

with the subscript i representing any of the discussed methods. In the case of numerical techniques, the average Erel value and its Bayesian confidence interval over all repetitions are provided.
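For orientation, a relative error of this kind is commonly written in the form below. The symbols $\widehat{\mathrm{BME}}_i$ (approximation by method $i$) and $\mathrm{BME}_{\mathrm{ref}}$ (analytical solution or brute-force MC reference) are placeholders introduced here, and the use of a signed rather than an absolute difference is an assumption:

$$E_{\mathrm{rel},i} = \frac{\widehat{\mathrm{BME}}_i - \mathrm{BME}_{\mathrm{ref}}}{\mathrm{BME}_{\mathrm{ref}}}$$

Under this convention, a positive value indicates an overestimation of BME by method $i$ and a negative value an underestimation.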

Finally, we determine the impact of BME approximation errors on model weights based on the same setup and implementation details as described for the investigation of the influence of model structure (section 4.5).

Results for the Base Case

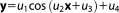

Figure 3 shows the relative error of BME approximations with respect to the analytical solution for the base case (see definition of parameters in section 4.1) as a function of ensemble size (number of model calls). Obviously, the accuracy of approximation improves for numerical methods when investing more computational effort, i.e., when increasing the numerical ensemble size. The improvement includes both a reduction in bias (error) and a reduction in variance (numerical uncertainty, shown as 95% Bayesian confidence intervals of the approximation error in Figure 3).

Figure 3.

Relative error of BME approximation with respect to the analytical solution for the synthetic base case as a function of ensemble size. IC solutions are plotted as horizontal lines, as they do not use realizations for BME evaluation. Results of the numerical evaluation schemes are presented with 95% Bayesian confidence intervals.

Simple MC integration (MC) and MC integration with importance sampling (MC IS) perform equally well for this setup. MC results improve linearly in quality in this log-log plot, which complies with the well-known convergence rate of MC integration of $\mathcal{O}(N^{-1/2})$, with $N$ denoting the ensemble size (Central Limit Theorem) [Feller, 1968]. MC integration with sampling from the posterior (MC PS), however, leads to a severe overestimation of BME as anticipated (see section 3.3.3) and does not improve linearly with ensemble size in log-log space, but shows a slower convergence. It also produces a much larger numerical uncertainty (keep in mind the logarithmic scale of the error axis). Note that the bias in BME approximation stems from the harmonic mean formulation and not from the sampling technique, because the posterior realizations generated with DREAM were checked to be consistent with the (in this case) known analytical posterior parameter distribution.
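The overestimation by the harmonic mean formulation can be reproduced in a small conjugate example in which exact posterior samples are available, so that any bias cannot be attributed to the sampler. The sketch below is an illustration under assumed values (one parameter, prior standard deviation `s0`, error standard deviation `sigma`, 100 observations); it does not use DREAM.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical conjugate setup: theta ~ N(0, s0^2), y_j = theta + error, error ~ N(0, sigma^2).
sigma, s0, n_obs = 1.0, 10.0, 100
y = 0.5 + sigma * rng.standard_normal(n_obs)

def log_norm(x, mean, sd):
    """Log density of a univariate Gaussian."""
    return -0.5 * np.log(2.0 * np.pi * sd**2) - 0.5 * ((x - mean) / sd) ** 2

def log_lik(theta):
    """Log-likelihood of all observations for each entry of theta."""
    theta = np.atleast_1d(theta)
    return log_norm(y[None, :], theta[:, None], sigma).sum(axis=1)

# Analytical Gaussian posterior of theta.
post_var = 1.0 / (1.0 / s0**2 + n_obs / sigma**2)
post_mean = post_var * y.sum() / sigma**2
post_sd = np.sqrt(post_var)

# Exact BME from Bayes' theorem rearranged: BME = likelihood * prior / posterior.
log_bme_exact = (log_lik(post_mean)[0]
                 + log_norm(post_mean, 0.0, s0)
                 - log_norm(post_mean, post_mean, post_sd))

# Harmonic mean estimator based on exact posterior realizations.
n = 20_000
theta_post = post_mean + post_sd * rng.standard_normal(n)
neg_ll = -log_lik(theta_post)
m = neg_ll.max()
log_mean_inv_lik = m + np.log(np.mean(np.exp(neg_ll - m)))   # log of mean(1 / L)
log_bme_hm = -log_mean_inv_lik

print(f"exact log BME:         {log_bme_exact:.3f}")
print(f"harmonic mean log BME: {log_bme_hm:.3f}")
```

The estimator is dominated by rare posterior realizations with very small likelihood, which a finite ensemble almost never contains; the sample mean of the inverse likelihood is therefore typically too small, and BME too large.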


Nested sampling (NS) shows a similar approximation quality to MC integration, but is shifted on the x axis, i.e., it is less efficient with regard to numerical ensemble sizes in this specific test case. The convergence behavior shown here might not be a general property of nested sampling, because we found that modifications in the termination criteria significantly influence its approximation quality and uncertainty bounds. For this synthetic linear test case, we conclude that nested sampling is not as efficient as simple MC integration. It is also less reliable due to its somewhat arbitrary formulation with respect to the search for a replacement realization and the choice of termination criteria. In principle, it offers an alternative to simple MC integration and might become more advantageous in high-dimensional parameter spaces. We will continue this discussion for the real-world hydrological test case (section 5) and draw some final conclusions in section 6.
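To make the role of the replacement search and the termination criterion concrete, the following minimal nested sampling sketch uses a one-parameter conjugate problem with a known BME. It deliberately simplifies the procedure: replacement realizations are found by rejection sampling from the prior instead of MCMC steps, the prior mass shrinkage is set to its expected value exp(-i/n_live) instead of being drawn at random, and only the 0.5% termination criterion is implemented. The observation `d`, its error `s`, and `n_live` are assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)

# Hypothetical problem: prior N(0, 1), one observation d with error sd s.
d, s = 0.5, 0.2

def log_lik(theta):
    return -0.5 * np.log(2.0 * np.pi * s**2) - 0.5 * ((d - theta) / s) ** 2

# Analytical reference: d ~ N(0, 1 + s^2).
log_bme_exact = -0.5 * np.log(2.0 * np.pi * (1.0 + s**2)) - 0.5 * d**2 / (1.0 + s**2)

def draw_above(threshold, batch=4096):
    """Rejection-sample one prior realization with log-likelihood above the threshold."""
    while True:
        cand = rng.standard_normal(batch)
        ll = log_lik(cand)
        hit = np.flatnonzero(ll > threshold)
        if hit.size:
            return cand[hit[0]], ll[hit[0]]

n_live = 200                                   # size of the active set (assumption)
live = rng.standard_normal(n_live)
live_ll = log_lik(live)
log_z, x_prev, i = -np.inf, 1.0, 0

while True:
    i += 1
    worst = np.argmin(live_ll)                 # current likelihood threshold
    x_i = np.exp(-i / n_live)                  # expected remaining prior mass
    log_z = np.logaddexp(log_z, live_ll[worst] + np.log(x_prev - x_i))
    live[worst], live_ll[worst] = draw_above(live_ll[worst])
    x_prev = x_i
    # Stop if max. likelihood times remaining prior mass adds less than 0.5% to BME.
    if live_ll.max() + np.log(x_i) < log_z + np.log(0.005):
        break

# Add the contribution of the remaining active set.
m = live_ll.max()
log_z = np.logaddexp(log_z, m + np.log(np.mean(np.exp(live_ll - m))) + np.log(x_prev))

print(f"iterations: {i}, nested sampling log BME: {log_z:.3f}, exact: {log_bme_exact:.3f}")
```

Rejection sampling from the prior is only workable in such a low-dimensional toy problem, because its acceptance rate equals the remaining prior mass; this is the step where practical implementations resort to MCMC and where the arbitrariness discussed above enters.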

Since the ICs do not use random realizations to approximate BME, they are plotted as horizontal lines. With its assumptions fully satisfied, the KIC@MAP is equal to the analytical solution in this case. Therefore, it does not produce any error to be plotted in Figure 3. Evaluating the KIC at the MLE (KIC@MLE), however, leads to a significant deviation from the exact solution. For this specific setup, the AICc (after the KIC@MAP) performs best out of the mathematical approximations with a tolerable error of 3%. However, we will demonstrate later that this is not a general result. Note that we assess the AIC(c)'s performance in approximating the absolute BME value here for illustrative reasons, although strictly speaking, it is only derived for comparing models with each other, i.e., only the resulting model weights should be assessed (see section 4.6). The other ICs yield approximation errors of 20–60%. Figure 3 shows that, except for MC integration with posterior sampling, the numerical methods outperform all of the ICs evaluated at the MLE, provided that enough realizations are used.
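The statement that the KIC@MAP reproduces the analytical solution for a linear Gaussian model can be checked with a short sketch. It uses the standard textbook forms of the AIC, AICc, and BIC, writes the KIC as a Laplace approximation with the posterior covariance, and converts each criterion to a BME approximation via exp(-IC/2); these forms and all numerical values (design matrix `F`, prior moments, error variance) are assumptions of this sketch and may differ in notation from the definitions used elsewhere in the text.

```python
import numpy as np

def log_gauss(x, mean, cov):
    """Log density of a multivariate Gaussian N(mean, cov) at a single point x."""
    diff = np.asarray(x, dtype=float) - mean
    _, log_det = np.linalg.slogdet(cov)
    quad = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (diff.size * np.log(2.0 * np.pi) + log_det + quad)

rng = np.random.default_rng(3)

# Hypothetical linear model y = F @ theta + error with k = 2 parameters.
n_s, k = 15, 2
x = np.linspace(0.0, 1.0, n_s)
F = np.column_stack([np.ones(n_s), x])
prior_mean = np.array([1.0, 1.0])
prior_cov = np.diag([0.25, 0.25])
R = 0.01 * np.eye(n_s)
y = F @ np.array([1.2, 0.8]) + rng.multivariate_normal(np.zeros(n_s), R)

R_inv, C_inv = np.linalg.inv(R), np.linalg.inv(prior_cov)
fisher = F.T @ R_inv @ F
theta_mle = np.linalg.solve(fisher, F.T @ R_inv @ y)             # maximum likelihood
post_cov = np.linalg.inv(C_inv + fisher)                          # posterior covariance
theta_map = post_cov @ (C_inv @ prior_mean + F.T @ R_inv @ y)     # maximum a posteriori

def log_lik(theta):
    return log_gauss(y, F @ theta, R)

aic = -2.0 * log_lik(theta_mle) + 2.0 * k
aicc = aic + 2.0 * k * (k + 1) / (n_s - k - 1)
bic = -2.0 * log_lik(theta_mle) + k * np.log(n_s)
kic_map = (-2.0 * log_lik(theta_map)
           - 2.0 * log_gauss(theta_map, prior_mean, prior_cov)
           - k * np.log(2.0 * np.pi)
           - np.linalg.slogdet(post_cov)[1])

# Analytical BME for the linear Gaussian model.
log_bme_exact = log_gauss(y, F @ prior_mean, F @ prior_cov @ F.T + R)

for name, ic in [("AIC", aic), ("AICc", aicc), ("BIC", bic), ("KIC@MAP", kic_map)]:
    rel_err = np.exp(-0.5 * ic - log_bme_exact) - 1.0
    print(f"{name:8s}: relative BME error = {rel_err:+.3e}")
```

The size of the errors of the AIC, AICc, and BIC in this sketch depends entirely on the assumed prior and data, so the printed values should not be compared with the percentages reported for Figure 3.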

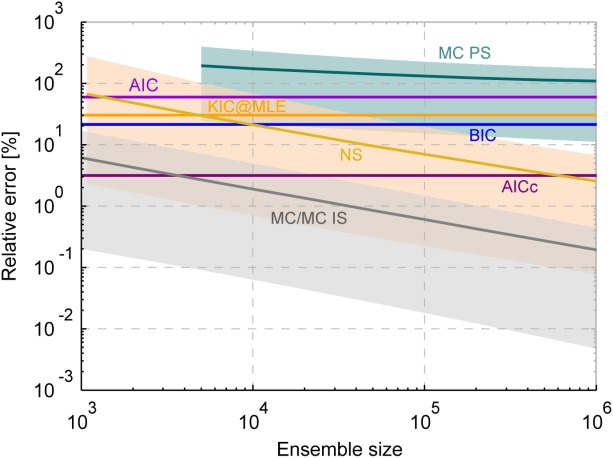

Results for Varied Data Set Size

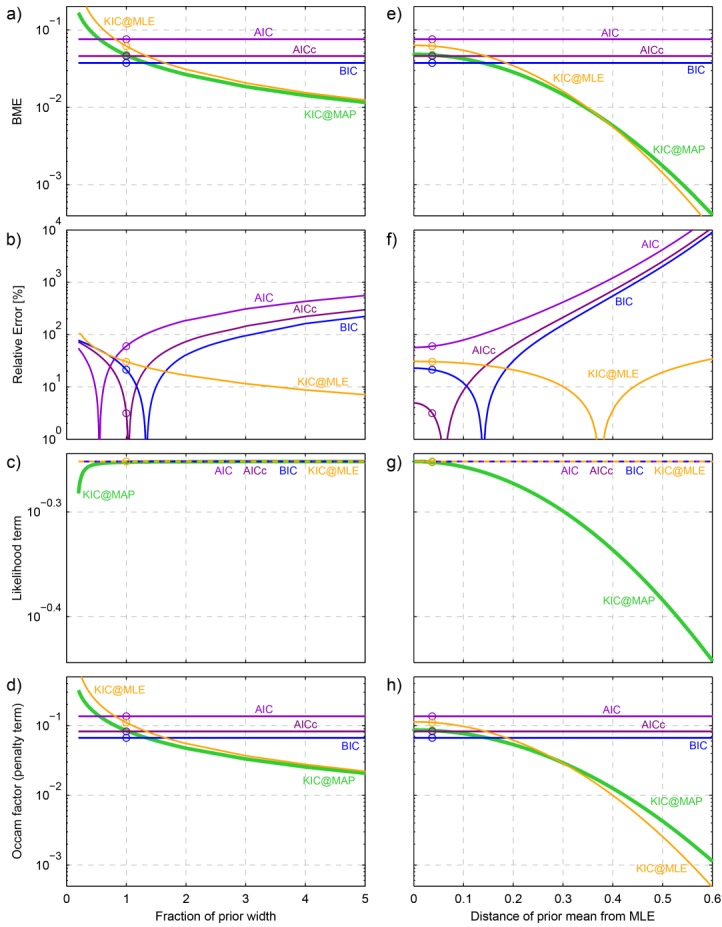

The approximation results as a function of data set size are shown in Figure 4. Since we have demonstrated that the numerical methods (except for posterior sampling) can approximate the true solution with arbitrary accuracy if only the invested computational power is large enough, we do not show their results here, as they would coincide with the solution of the KIC@MAP. Figure 4a shows the approximated BME values, while the relative error in percent with respect to the analytical solution is shown in Figure 4b.

Figure 4.

Synthetic test case results as a function of data set size: (a) approximation of BME, (b) relative error with respect to the analytical solution with 95% Bayesian confidence intervals, (c) likelihood term approximation, (d) Occam factor approximation. The result obtained from KIC@MAP represents the analytical solution in this case.

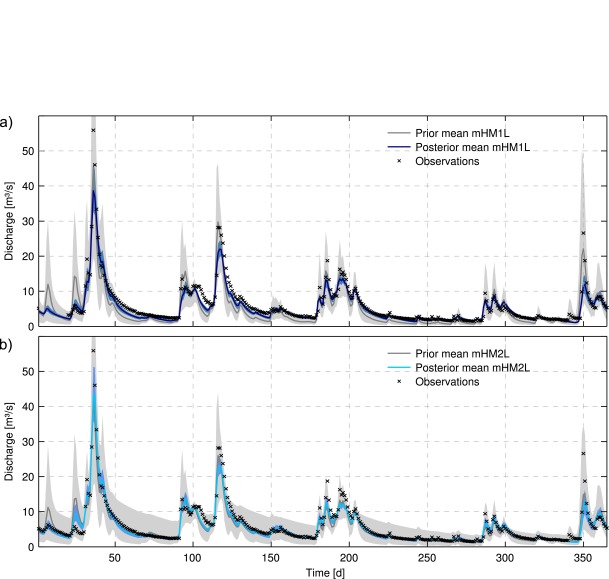

The true BME curve (represented by the KIC@MAP here) is approximated quite well by both the KIC@MLE and by the BIC. However, while the KIC@MLE converges to the KIC@MAP with increasing data set size, the BIC does not. Its relative error with respect to the analytical solution becomes stable at more than 20%. This result is not in agreement with the findings of Lu et al. [2011], who confirmed the general belief that the BIC approaches the KIC with increasing data set size. In our case, the contribution of the terms dismissed by the BIC (see section 3.2) is still significant and hence produces a relevant deviation between the two BME approximations.
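The persistent offset of the BIC can be made plausible with the generic Laplace approximation of BME around the MLE $\hat{\theta}$. The notation below ($k$ parameters, $N_s$ observations, $\mathbf{H}$ the negative Hessian of the log-likelihood at $\hat{\theta}$, and $\bar{\mathbf{H}} = \mathbf{H}/N_s$ its per-observation average) is introduced here for illustration and is an assumption that may differ from the notation of section 3.2:

$$-2 \ln \mathrm{BME} \approx -2 \ln p(\mathbf{y} \mid \hat{\theta}) - 2 \ln p(\hat{\theta}) - k \ln 2\pi + \ln \lvert \mathbf{H} \rvert$$

$$\ln \lvert \mathbf{H} \rvert = \ln \lvert N_s \bar{\mathbf{H}} \rvert = k \ln N_s + \ln \lvert \bar{\mathbf{H}} \rvert$$

$$\mathrm{BIC} = -2 \ln p(\mathbf{y} \mid \hat{\theta}) + k \ln N_s$$

The BIC thus omits the terms $-2 \ln p(\hat{\theta}) - k \ln 2\pi + \ln \lvert \bar{\mathbf{H}} \rvert$. For independent observations these terms do not grow with $N_s$, but they do not vanish either, so the ratio between the BIC-based and the Laplace-based BME approximations tends to a constant factor rather than to one.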