Abstract

Pigeons prefer an alternative that provides them with a stimulus 20% of the time that predicts 10 pellets of food and a different stimulus 80% of the time that predicts 0 pellets, over an alternative that provides them with a stimulus that always predicts 3 pellets of food, even though the preferred alternative provides them with considerably less food. It appears that the stimulus that predicts 10 pellets acts as a strong conditioned reinforcer, in spite of the fact that the stimulus that predicts 0 pellets occurs four times as often. In the present research we tested the hypothesis that early in training conditioned inhibition develops to the 0-pellet stimulus but later in training it dissipates. We trained pigeons with a hue as the 10-pellet stimulus and a vertical line as the 0-pellet stimulus. To assess the inhibitory value of the vertical line, we compared responding to the 10-pellet hue to responding to the compound of the 10-pellet hue and the vertical line early in training and once again late in training, using both a within subject design (Experiment 1) and a between groups design (Experiment 2) and found that there was a significant reduction in inhibition between the Early test (when pigeons chose optimally) and Late test (when choice was suboptimal). Thus, the increase in suboptimal choice may result from the decline in inhibition to the 0-pellet stimulus. Implications for human gambling behavior are considered.

It has been estimated that problem gambling affects as much as 5% of the population (Shaffer, Hall, & Vander Bilt, 1999). Given the increased availability of legalized gambling and its rising popularity, attention to the mechanisms involved in this kind of suboptimal behavior is of interest, especially considering the negative biological, psychiatric, and social consequences oftentimes associated with it (Fong, 2005).

In an attempt to understand why gambling problems exist, several theories have attempted to model the risk factors that contribute to the acquisition and the degree or severity of a gambling habit (Blaszczynski & Nower, 2002; Sharpe, 2002). For example, a number of studies have found that higher behavioral impulsivity is associated with more severe problem gambling than lower baseline levels of impulsivity (see Nower & Blaszczynski, 2006, for a review and descriptive model). However, the causal relations are unclear because it is difficult to experimentally manipulate these variables with humans. Thus, for example, is impulsivity merely a marker of the gambling problem or does it play a causal role in pathological gambling? Researchers working with humans also acknowledge the role of learning in ongoing gambling but, to our knowledge, the means by which a gambling habit is acquired over repeated exposures has not been systematically studied in humans. In other words, research with humans has largely focused on the outcomes of a single gambling session, but it may be important to understand the learning processes that lead to the acquisition of a gambling habit. Fortunately, animal models allow one to attempt to identify the factors that contribute to the kind of suboptimal choice characteristic of human gambling and to study the acquisition of gambling habits over time. To shed light on some of these questions, we have developed a model of the decision-making process observed in human gamblers using pigeons as subjects (Zentall & Laude, 2013; Zentall & Stagner, 2011).

We have found that animals, like humans who gamble, choose suboptimally under similar choice conditions. Pigeons show an impaired ability to objectively assess overall probabilities and amounts of reinforcement when an infrequent, high-value outcome (analogous to a jackpot in human gambling) is presented in the context of more frequently occurring losses. For example, pigeons reliably prefer an alternative that signals a low-probability, high-payoff outcome (i.e., gambling), even when this preference results in less overall reinforcement than an alternative that signals a high-probability, low-payoff outcome, in which losses never occur (i.e., not gambling; see Zentall & Stagner, 2011).

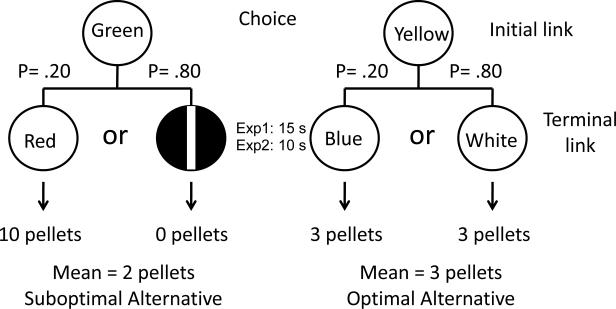

More specifically, for the low-probability, high-payoff alternative, a stimulus that always predicts 10 pellets of food (S10) is presented with a probability of .20, and a stimulus that always predicts the absence of food (S0) is presented with a probability of .80. The S10 is associated with, for example, either a fixed time schedule (FT-10s), in which reinforcement is provided after a fixed time, or a fixed interval schedule (FI-10s), in which the first response after a fixed interval is reinforced; the S0 is always associated with an FT-10s schedule. Although no responses are required on FT schedules, pigeons typically peck at rates related to the “value” of the stimulus. The mean reinforcement per trial associated with this discriminative-stimulus alternative is 2 pellets (.20 × 10 + .80 × 0). Choice of the other (high-probability, low-payoff) alternative produces one of two stimuli on each trial, both of which always predict 3 pellets (S3; either an FT-10s or FI-10s schedule; see the design in Figure 1). In that experiment the pigeons preferred the suboptimal alternative that provided them with an average of 2 pellets of food over the optimal alternative that provided them with 3 pellets of food (Zentall & Stagner, 2011).
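The per-trial averages for the two alternatives follow from weighting each outcome by its probability. The following is a minimal Python sketch of that arithmetic; the function name `expected_pellets` and the list-of-pairs layout are illustrative assumptions, not part of the original procedure.

```python
def expected_pellets(outcomes):
    """Mean pellets per trial for a list of (probability, pellets) pairs."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * pellets for p, pellets in outcomes)


# Discriminative-stimulus (suboptimal) alternative:
# S10 with probability .20, S0 with probability .80.
suboptimal = expected_pellets([(0.20, 10), (0.80, 0)])

# Nondiscriminative (optimal) alternative: every terminal stimulus
# predicts 3 pellets, so it is treated here as a single certain outcome.
optimal = expected_pellets([(1.0, 3)])

print(f"suboptimal: {suboptimal:.1f} pellets/trial")
print(f"optimal:    {optimal:.1f} pellets/trial")
```

On these values the gambling-like alternative yields two thirds of the food available from the optimal alternative, which is what makes the eventual preference for it suboptimal.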

Figure 1.

Design of Experiment 1: Pigeons were given a choice between two alternatives. Choice of one alternative produced either a stimulus (e.g., red) on 20% of the trials that produced 10 pellets or a white vertical line on the remaining 80% of the trials that was followed by 0 pellets (discriminative stimulus/suboptimal alternative). Choice of the other alternative was followed by one of two stimuli (e.g., green or white) each of which yielded 3 pellets (nondiscriminative stimulus/optimal alternative).

Similar results were found when the alternatives led to stimuli that differed in terms of the probability of reinforcement (Stagner & Zentall, 2010). Specifically, when pigeons were given a choice between one alternative that on 20% of the trials provided a stimulus that predicted reinforcement 100% of the time but otherwise provided a stimulus that always predicted the absence of reinforcement (on average 20% reinforcement) and an alternative that provided a stimulus that always predicted reinforcement 50% of the time, they showed a strong preference for 20% reinforcement over 50% reinforcement.

Furthermore, manipulations presumed to increase impulsivity are also thought to be associated with increased suboptimal choice. Such manipulations include maintaining pigeons at higher levels of motivation for food (Laude, Pattison, & Zentall, 2012) and housing pigeons in individual cages (compared to pigeons that spent time in a larger social setting; Pattison, Laude, & Zentall, 2012). Those manipulations presumably function to increase attraction to the signal for the high-reward outcomes. More recently, we have found that greater degrees of impulsivity, as indexed by a delay-discounting task, were associated with increased choice of the gambling-like alternative (Laude, Beckmann, Daniels, & Zentall, 2013). Interestingly, parallel findings exist in the human literature. For instance, people of lower socioeconomic status tend to gamble proportionally more than those of higher socioeconomic status (Lyk-Jensen, 2010). It has also been found that, for human gamblers, increased rates of delay discounting of monetary rewards are associated with impulsive choice and severity of problem gambling (Alessi & Petry, 2003; Dixon, Marley, & Jacobs, 2003; MacKillop, Anderson, Castelda, Mattson, & Donovick, 2006).

Further support that our model is an appropriate analog of human gambling behavior comes from results we have obtained with self-reported human gamblers who were trained with a procedure similar to the one we used with pigeons (Molet, Miller, Laude, Kirk, Manning & Zentall, 2012). In this study we found that humans who reported gambling chose the low-probability high-payoff outcome significantly more than those who reported that they did not gamble.

One reason for the general finding of suboptimal choice under these conditions is that stimuli that are better predictors of reinforcement when they are present than when they are absent become conditioned reinforcers (S+) to which animals are particularly attracted (Dinsmoor, 1983). With regard to the procedure used by Zentall and Stagner (2011), it is proposed that choice depends on the very strong attraction to the signal for 10 pellets even though it occurs for those choices only 20% of the time. Despite the appeal of the conditioned reinforcement hypothesis, it fails to consider the presumed conditioned inhibition that should accrue to the stimulus associated with the absence of reinforcement, which should reduce the preference for the suboptimal alternative, especially given that it occurs four times as often as the conditioned reinforcer. That is, the frequent appearance of the presumed conditioned inhibitor should diminish the attraction of the alternative associated with the infrequently occurring conditioned reinforcer. If the stimulus associated with the absence of reinforcement is inhibitory, it should come to control a tendency that subtracts from that of the conditioned reinforcer and thus should decrease choice of that discriminative stimulus alternative (see Rescorla, 1969).

Perhaps the conditioned inhibitor does not gain sufficient inhibitory value because when it appears, the pigeon turns away from it. For example, there is evidence that the effectiveness of conditioning for pigeons depends on the length of time that the pigeon spends in the presence of the stimulus (Roberts, 1972). To test this hypothesis, Stagner, Laude, and Zentall (2011) repeated the Stagner and Zentall (2010) procedure and included a condition in which a diffuse houselight (that the pigeons presumably could not avoid) replaced the localized stimulus associated with the absence of reinforcement; however, that manipulation did not decrease the pigeons’ preference for the suboptimal alternative. Although reduced observation of the conditioned inhibitor does not appear to be responsible for the suboptimal choice, it is clear that the stimulus associated with the absence of reinforcement did not acquire sufficient inhibition to counteract the conditioned reinforcement associated with the conditioned reinforcer, therefore resulting in suboptimal choice.

Traditional theories of discrimination learning posit that as the amount of discrimination training increases, so too should the negativity of the stimulus associated with the absence of reinforcement (Spence, 1936; Sutherland, 1964; Mackintosh, 1965). That is, conditioned inhibition associated with S0 should increase monotonically as a function of amount of discrimination training. Alternatively, it is possible that repeated nonreinforced exposure to the conditioned inhibitor could reduce its inhibitory value, a prediction that has some empirical support. For example, Biederman (1968) trained pigeons on two simultaneous discriminations, one that received 100 training trials (more trained) and the other 50 training trials (less trained). When he then presented the pigeons with a choice between the more trained and less trained negative stimuli (S-), he found a preference for the more trained S- (see also Biederman, 1967, 1970; Deutsch & Biederman, 1965). Thus, the relation between strength of conditioning to the S- and amount of training may not be monotonic. In fact, consistent with the hypothesis that inhibition to the S- decreases with training is the finding with our gambling-like task that pigeons sometimes choose optimally early in training, before they choose suboptimally (see Laude, Pattison, & Zentall, 2012; Pattison, Laude, & Zentall, 2013).

The purpose of the present experiment was to assess whether the inhibitory value of a stimulus associated with the absence of reinforcement decreases with training relative to the strength of the conditioned reinforcer. To accomplish this, a modified version of the procedure used by Stagner and Zentall (2011) was used to test for inhibition at two different points in training (early and late). We used a within subject design in Experiment 1 and a between subjects design in Experiment 2.

Because we were interested in changes in conditioned inhibition as a function of training and we have found that low levels of food restriction result in an initial preference for the optimal alternative (Laude et al., 2012), the present experiment was conducted with pigeons that were minimally food restricted. The prediction was that early in training the pigeons would show relatively strong conditioned inhibition to the stimulus associated with the absence of reinforcement but that the conditioned inhibition would decline as the pigeons’ preference shifted from the optimal, average 3-pellet, nondiscriminative stimulus alternative to the suboptimal, average 2-pellet, discriminative stimulus alternative.

To assess the value of the conditioned inhibitor, a combined-cue test (referred to by Rescorla, 1971, as a summation test) was conducted in which the S0 was presented together with a stimulus that had previously been associated with reinforcement (the S10). These stimuli were on an FT or FI-10s schedule, for example. When the two cues were presented in compound, we asked how responding would be altered relative to responding to S10 alone. The negative value of the conditioned inhibitor should be demonstrated by its capacity to reduce responding to S10 below the level occurring in the absence of S0 (see Hearst, 1972; Hearst, Beasley, & Farthing, 1970). If a reduction in inhibition to the stimulus associated with the absence of food is responsible for the eventual preference for the discriminative stimulus alternative, then when pigeons have an initial preference for the nondiscriminative alternative, we should see a large decrement in responding to the S10S0 compound relative to responding to S10 alone. On the other hand, later in training, when the pigeons prefer the discriminative stimulus alternative, we should see a smaller decrement in responding to the S10S0 compound relative to responding to S10 alone.

Method

Subjects

The subjects were five White Carneau pigeons that were retired breeders purchased from the Palmetto Pigeon Plant (Sumter, SC). Throughout the experiment, the pigeons were fed to 90% of their free-feeding weight and were individually housed in wire cages, with free access to water and grit, in a colony room that was maintained on a 12:12-h light:dark cycle. The pigeons were cared for in accordance with University of Kentucky animal care guidelines.

Apparatus

A Med Associates (St Albans, VT) ENV–008 modular operant test cage was used for this research. The response panel in the chamber had a horizontal row of three response keys. Behind each key was a 12-stimulus inline projector (Industrial Electronics Engineering, Van Nuys, CA) that projected a vertical white line on a black background as well as red, yellow, blue, white, and green hues. Reinforcement (45 mg pigeon pellets, Bio-Serv, Frenchtown, NJ) was delivered from a pellet dispenser that was mounted behind the response panel (Med Associates ENV–45). A 28 V, 0.1 A houselight was centered above the response panel. A microcomputer in the adjacent room controlled the experiment.

Procedure

Pretraining

All pigeons were trained to peck each of the stimuli when it was illuminated on the left or right response key. The first peck after a specified duration (fixed interval, FI, schedule of reinforcement) resulted in 2 pellets of reinforcement. The pigeons were first trained on an FI 1s schedule for 2 sessions and then on an FI 10s schedule for 2 sessions. Each stimulus was presented 10 times in each session (60 trials per session).

Training

There were four different types of forced trial. At the start of each trial either a blue or yellow hue was presented on one of the side keys (the initial link). Each hue appeared equally often on the left and right. One peck to this stimulus changed the stimulus to the terminal link. If blue was presented (10 trials per session), on 20% of the trials one peck changed it to, for example, red (S10; 2 trials per session), and after 15s (a fixed time, FT-15s, response-independent schedule) 10 pellets were delivered. On the remaining 80% of the trials on which the blue stimulus was presented, one peck changed it to the vertical line (S0; 8 trials per session), and after 15s no pellets were delivered. If yellow was presented (10 trials per session), a peck changed the stimulus to green on 20% of the trials (S3-2; 2 trials per session) and to white on 80% of the trials (S3-8; 8 trials per session). In each case, after 15s, 3 pellets were delivered. The colors of the initial link stimuli (yellow and blue) were counterbalanced over subjects, as were the 10-pellet stimulus (S10) and the less frequently presented 3-pellet stimulus (S3-2) (always either red or green). The 0-pellet stimulus (S0) was always the vertical white line and the more frequently presented 3-pellet stimulus (S3-8) was always white (the design of the experiment appears in Figure 1). A 10-s intertrial interval illuminated by the houselight separated the trials.

Each session also included 20 choice trials in which blue and yellow hues were presented simultaneously, one on the left key the other on the right key. A single peck to either color resulted in presentation of one of the stimuli associated with that choice, with the same probabilities and outcomes as on forced trials. The unchosen key was darkened. The choice trials were presented randomly among the forced trials. All pigeons received experience with forced and choice trials with this procedure for a minimum of 35 sessions.
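The composition of a training session described above can be laid out explicitly. The sketch below is only an illustration of the trial counts: the names `FORCED_TRIALS`, `PELLETS`, and `build_session`, the fixed random seed, and the use of a simple shuffle are assumptions (the procedure states only that choice trials were presented randomly among the forced trials), and left/right key counterbalancing is omitted.

```python
import random

# Forced trials per session, by terminal-link stimulus (from the procedure).
FORCED_TRIALS = {"S10": 2, "S0": 8, "S3-2": 2, "S3-8": 8}
# Pellets delivered after each terminal-link stimulus.
PELLETS = {"S10": 10, "S0": 0, "S3-2": 3, "S3-8": 3}
N_CHOICE_TRIALS = 20


def build_session(rng):
    """Return one session as a shuffled list of (trial_type, stimulus) pairs.

    The terminal stimulus on a choice trial depends on the pigeon's choice,
    so it is left as None here.
    """
    trials = [("forced", stim)
              for stim, count in FORCED_TRIALS.items()
              for _ in range(count)]
    trials += [("choice", None)] * N_CHOICE_TRIALS
    rng.shuffle(trials)  # assumed ordering rule; the source says only "randomly"
    return trials


session = build_session(random.Random(0))  # seed chosen arbitrarily
n_forced = sum(1 for kind, _ in session if kind == "forced")
print(len(session), "trials:", n_forced, "forced,", len(session) - n_forced, "choice")
```

Counting the forced trials this way makes the asymmetry visible: S0 appears four times as often as S10 within the discriminative-stimulus alternative.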

The criterion for testing was twofold. First, a pigeon had to have had at least 3 sessions of training. Second, to ensure adequate stimulus discrimination, the discrimination ratio (responses per trial to S10 divided by the sum of responses per trial to S10 and S0) had to be 0.80 or better.
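The two-part testing criterion can be expressed as a short check. This is a minimal sketch under stated assumptions: the function names and the example peck rates (20 and 4 pecks per trial) are hypothetical and are not data from the experiment; only the 3-session minimum and the 0.80 threshold come from the text.

```python
def discrimination_ratio(s10_pecks_per_trial, s0_pecks_per_trial):
    """Pecks to S10 divided by the sum of pecks to S10 and S0."""
    total = s10_pecks_per_trial + s0_pecks_per_trial
    if total == 0:
        raise ValueError("no responding to either stimulus")
    return s10_pecks_per_trial / total


def meets_test_criterion(sessions_completed, ratio,
                         min_sessions=3, min_ratio=0.80):
    """True when both parts of the testing criterion are satisfied."""
    return sessions_completed >= min_sessions and ratio >= min_ratio


# Hypothetical peck rates, for illustration only.
ratio = discrimination_ratio(20.0, 4.0)
ready = meets_test_criterion(sessions_completed=3, ratio=ratio)
print(f"discrimination ratio = {ratio:.2f}, ready for test: {ready}")
```

A ratio of 0.50 indicates equal responding to the two stimuli, so values near 1.0 indicate that pecking is largely confined to S10.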

Early test of inhibition

To assess the degree of conditioned inhibition associated with S0 early in training, a test session was conducted in which compound stimulus trials (S10S0) were presented, as well as trials of each forced trial type from training (S10, S0, S3-2, S3-8; 40 total trials per session).

On the eight compound stimulus trials, following illumination of the blue initial link stimulus, one peck was required to illuminate a compound consisting of the S10 and S0 terminal link stimuli (S10S0) for 15s. Pecking the blue initial link also resulted in the S10 terminal link stimulus on eight trials and the S0 terminal link stimulus on eight trials. Upon illumination of the yellow initial link stimulus, either the S3-2 (8 trials) or the S3-8 (8 trials) terminal link stimuli would appear. On test sessions, all trial types were presented in extinction and in random order. Of interest was the measure of inhibition (i.e., the degree to which the number of pecks during the 15s presentation of S10S0 was less than the number of pecks to the S10).

Late test of inhibition

To assess the degree of conditioned inhibition associated with S0 later in training, a test session similar to the Early test session was conducted. Because the schedule associated with the terminal-link stimuli was FT (i.e., response independent), the rate of pecking the terminal-link stimuli associated with reinforcement declined with training. However, it was important for the rate of pecking the S10 stimulus to be reasonably high to adequately conduct the combined-cue test. For this reason, if for any pigeon responding to the 10-pellet stimulus (S10) was below an average of 20 pecks/trial at Session 35, the schedule associated with the terminal-link stimuli (with the exception of the S0 stimulus) was changed from an FT-15s schedule to an FI-15s schedule until that pigeon had reached a discrimination ratio of 0.80 or better. The number of each trial type and the outcomes associated with each for the Late test of inhibition were the same as for the Early test of inhibition.

Results

Acquisition of Suboptimal Choice

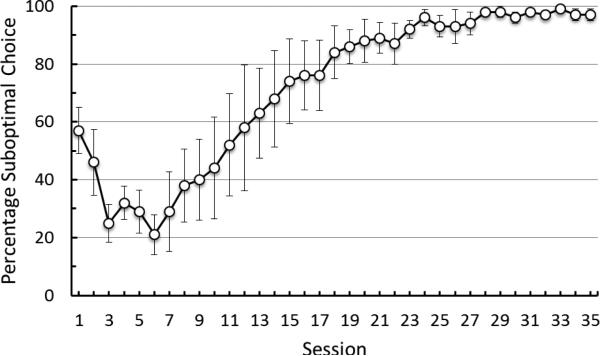

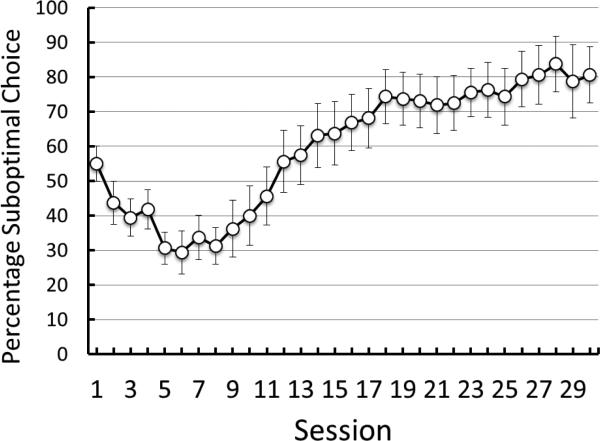

Pigeons showed a clear early preference for the nondiscriminative stimulus alternative with 3 pellets of reinforcement and it was statistically reliable at Session 3, t(4) = 3.84, p = 0.02 (M = 68.00, SE = 5.83) (see Figure 2). Preference for the nondiscriminative alternative was also reliable through Sessions 4-6, all ps < .05. With continued experience with the task, however, the preference for the 3-pellet alternative began to reverse to a preference for the discriminative alternative. The preference for the discriminative stimulus alternative was reliable at Session 18, t(4) = 3.70, p = 0.02, (M = 84, SE = 9.14), and remained so throughout Phase 1 training, all ps < .05.

Figure 2.

Experiment 1: Choice of the suboptimal alternative as a function of training. Error bars indicate ± one standard error of the mean. All of the pigeons received at least 35 sessions of training.

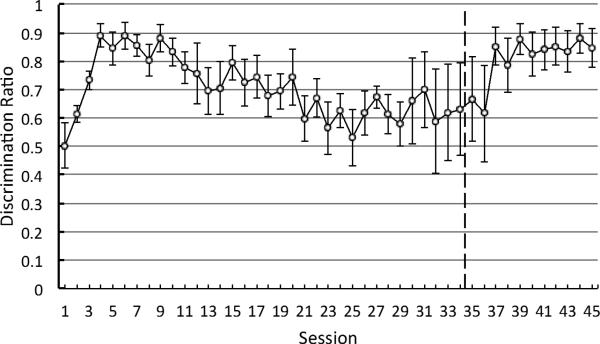

Discrimination Ratios

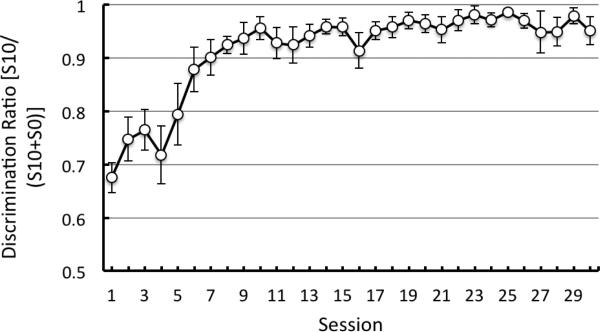

Overall, the pigeons’ discrimination ratios (the ratio of responding to the S10 divided by the sum of responding to both the S10 and the S0) were reliably above 0.50 as early as Session 2, t(4) = 3.94, p = 0.02 (see Figure 3). This was the case until Session 12, at which point the discrimination ratio was not significantly better than .50, t(4) = 2.40, p = 0.07, because pecking to the stimulus associated with 10 pellets had declined. When the schedule of reinforcement associated with S10 and the two S3 stimuli was changed from FT to FI (response dependent), responding to the conditioned reinforcers increased, as did the discrimination ratio.

Figure 3.

Experiment 1: Discrimination ratio [pecks to S10/(pecks to S10 + pecks to S0)] as a function of training. Dashed line indicates the point in training at which the schedule of reinforcement associated with S10 and S3 changed from fixed time (response independent) to fixed interval (response dependent). Error bars indicate ± one standard error. The figure ends at 45 sessions because all of the pigeons received at least this much training; the average from all five pigeons is presented.

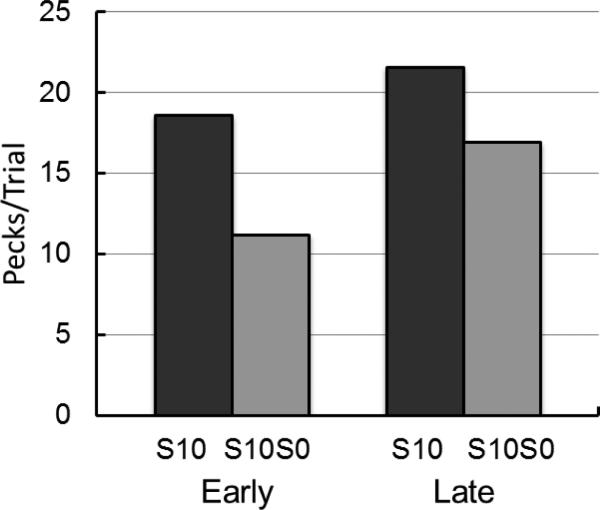

Tests of Inhibition

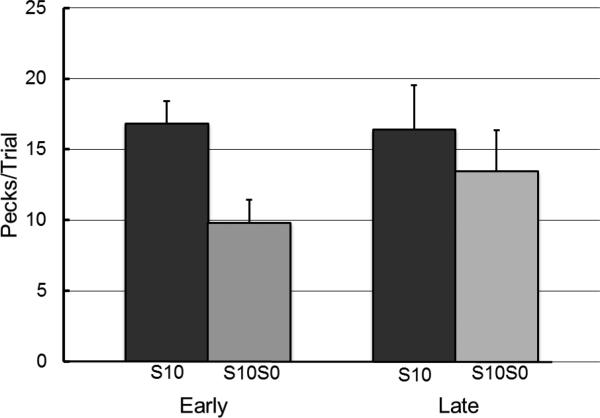

The individual pigeons were given the Early test of inhibition at Sessions 3, 4, 4, 4, and 6, depending on when each pigeon reached the discrimination criterion, at which point there was an overall preference for the 3-pellet alternative of 77% (SE = 8.75). The average discrimination ratio on the session prior to the Early test of inhibition was 0.89 (SE = 0.04). For the Late test of inhibition, because the discrimination ratios for four of the pigeons did not meet the 0.80 criterion on Session 35, only one of the pigeons was given the Late test on Session 36. The other four pigeons were given the Late test on Sessions 46, 46, 48, and 49. On the session prior to the Late test, choice of the discriminative stimulus alternative was 100% for all of the pigeons. The average discrimination ratio for the session before the Late test of inhibition was 0.88 (SE = 0.02). Neither the change in peck rate from the Early to Late test for the S10 stimulus (M = 18.60, SE = 3.92; M = 21.60, SE = 2.99, respectively), p > .05, nor the change in peck rate from the Early to Late test for the S0 stimulus (M = 2.3, SE = 0.80; M = 3.52, SE = 1.23, respectively) was statistically significant, p > .05. Furthermore, the discrimination ratios in the session prior to the Early and Late tests were comparable (M = .90, SE = .03; M = .87, SE = .03, respectively), p > .05.

Inhibition was defined as the proportional decrease in responding to S10 resulting from presentation of the combined cue S10S0 [1 - (responses to S10S0/responses to S10)]. A paired-samples t-test revealed that there was a significant decrease in inhibition from the Early test (M = 0.38, SE = 0.14) to the Late test (M = 0.12, SE = 0.21), t(4) = -2.99, p = .04, d = .66, 95% CI(d) = 0.50 ≤ d ≤ 5.70, indicating that inhibition had declined between the Early and Late tests (see Figure 4).
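The inhibition measure defined above is a simple proportion. The sketch below shows the computation; the function name `inhibition_ratio` and the peck counts used in the example are hypothetical and are not data from the experiment.

```python
def inhibition_ratio(compound_pecks, s10_pecks):
    """1 - (responses to S10S0 / responses to S10).

    0 means the compound was pecked as much as S10 alone (no inhibition);
    values approaching 1 mean S0 almost completely suppressed pecking.
    A negative value means the compound was pecked more than S10 alone.
    """
    if s10_pecks <= 0:
        raise ValueError("responding to S10 must be positive")
    return 1.0 - compound_pecks / s10_pecks


# Hypothetical pecks per trial for one pigeon, for illustration only.
early = inhibition_ratio(compound_pecks=12.0, s10_pecks=20.0)
late = inhibition_ratio(compound_pecks=18.0, s10_pecks=20.0)
print(f"early = {early:.2f}, late = {late:.2f}, change = {late - early:.2f}")
```

Because the measure is relative to each pigeon's own S10 rate, it can be compared across tests even if the overall rate of pecking differs between them.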

Figure 4.

Experiment 1: Test of inhibition early and late. S10 = pecks to the conditioned reinforcer (S10). S10S0 = pecks to the combined cue. Error bars indicate one standard error of the mean.

Discussion

The results of Experiment 1 are consistent with the hypothesis that although considerable inhibition develops early in training, as assessed by the combined-cue test, inhibition decreases with further training. Furthermore, the decrease in inhibition was accompanied by a decrease in preference for the optimal (3-pellet) initial link alternative. Thus, it is likely that the decreased inhibition associated with S0 contributed to the shift in preference in the initial link stimuli from the optimal alternative to the suboptimal alternative.

It may be, however, that because the Early and Late tests of inhibition were conducted as a repeated measure, the first test may have had an effect on the second test. In anticipation of the possibility that a decrease in inhibition would occur between the Early and Late tests, we ran the tests in extinction. That is, by conducting the tests in extinction, the effect of the first test on the second test should have been to increase inhibition on the second test due to the association of the combined cue with the absence of reinforcement. Thus, any effect of extinction on the combined cue should have actually decreased responding to it and thus, increased the measure of inhibition. In fact, however, pecking to the nonreinforced compound actually increased between the Early and Late tests and the increase was proportionally more than the increase in responding to S10. On the other hand, in spite of our efforts to counter the potential artifact that might have resulted from the test-retest procedure, it was still the case that the combined cue was not as novel on the second test as it was on the first test.

To control for the possibility that the reduction in inhibition from the Early test to the Late test found in Experiment 1 resulted from testing the pigeons twice, in Experiment 2 we used a between groups design in which one group of pigeons was tested for conditioned inhibition early and the other late. That is, in Experiment 2 each pigeon was tested only once.

Subjects

Subjects were 8 unsexed White Carneau pigeons (ages 3-6) that were naïve to suboptimal choice procedures. The pigeons were maintained under the same conditions as the subjects in Experiment 1.

Apparatus

The apparatus was the same as in Experiment 1.

Procedure

Training

Both the pretraining and training procedures were the same as they were in Experiment 1 (see Figure 1), with two exceptions: FI schedules were used for the terminal links from the outset of the experiment (except for the stimulus associated with 0 pellets, which was on an FT schedule), and the terminal links were 10s rather than 15s in duration. All the pigeons were trained for at least 30 sessions.

Tests for inhibition

Four pigeons were assessed for their degree of conditioned inhibition associated with S0 at an early point in training and the remaining four pigeons were tested at a later point in training. The criterion for testing and the testing procedure itself were the same as they were in Experiment 1, with the exception that each pigeon’s discrimination ratio had to be 0.85 or better before it was tested.

Assignment to Early or Late group

Discrimination ratios were assessed after each training session. Early and Late groups were matched for sessions to reach a discrimination ratio of 0.85 or better such that once a pigeon met criterion, it was randomly assigned to one of the groups and the next pigeon that met criterion was assigned to the other group.

Results

Acquisition of Suboptimal Choice

As in Experiment 1, pigeons in Experiment 2 showed an early preference for the more optimal alternative associated with 3 pellets, which was statistically reliable at Session 5, t(7) = 4.14, p = .004 (M = 69.38, SE = 4.67) (see Figure 5). Preference for the optimal alternative remained reliable through Session 8, ps < .05, at which point we observed a trend towards choice of the suboptimal alternative. The preference for the suboptimal alternative was reliable at Session 18, t(4) = 3.70, p = 0.02 (M = 74.4, SE = 7.80), and remained so throughout training, ps < .05.

Figure 5.

Experiment 2: Choice of the suboptimal alternative as a function of training. Error bars indicate ± one standard error of the mean.

Discrimination Ratios

In examining the discrimination ratios, overall, the pigeons were reliably above a 0.50 discrimination ratio as early as Session 1, t(7) = 6.30, p < .001 (see Figure 6) and performance remained at this level or higher throughout training (ps < .05). As a group, there was a sharp decrease in responding to the S0 stimulus and responding did not reliably increase with extended training. Responding to the S10 stimulus was high from the first session on.

Figure 6.

Experiment 2: Discrimination ratio [pecks to S10/(pecks to S10 + pecks to S0)] as a function of training. Error bars indicate ± one standard error of the mean.

Dissipation of Inhibition

On average, the pigeons were tested for conditioned inhibition at Session 6, at which point there was an overall preference for the 3-pellet alternative of 68.8% (SE = 6.25). Individually, pigeons were given the Early test on Sessions 3, 6, 6, and 8. The average discrimination ratio on the session before the Early test of inhibition was 0.91 (SE = 0.02). On average, pigeons were given the Late test of inhibition after 35 sessions of training, at which point there was an overall preference for the discriminative stimulus alternative (M = 95.0%, SE = 2.04). Individually, pigeons were given the Late test on Sessions 32, 35, 37, and 34. The average discrimination ratio for the session before the Late test of inhibition was 0.94 (SE = 0.03). The difference in peck rate to the S10 stimulus from the Early to the Late test was not significant (M = 16.84, SE = 1.59; M = 16.44, SE = 3.08), nor was the difference in peck rate to the S0 stimulus from the Early to the Late test (M = 2.66, SE = 1.52; M = 3.13, SE = 2.54), both ps > .05. Discrimination ratios for the session prior to test and during test were all comparable, ps > .05. Analysis of the inhibition ratio [1 - (responses to S10S0/responses to S10)] with an independent-samples t-test revealed that there was a significant decrease in inhibition from the Early test (M = .43, SE = .04) to the Late test (M = .19, SE = .04), t(6) = -3.88, p = 0.008, d = 2.67, 95% CI(d) = 0.86 ≤ d ≤ 6.599 (see Figure 7).

Figure 7.

Experiment 2: Test of inhibition early and late. S10 = pecks to the conditioned reinforcer (S10). S10S0 = pecks to the combined cue. Error bars indicate one standard error of the mean.

Correlation between Inhibition Ratios and Suboptimal Choice

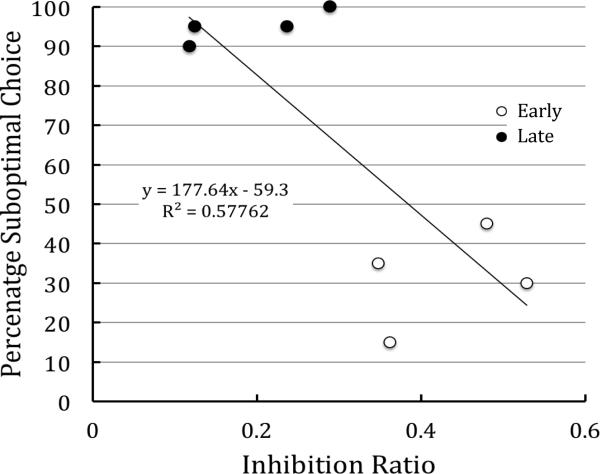

When the data from the two groups were pooled, a reliable correlation was found between inhibition ratios and suboptimal choice on the session prior to testing, r = -0.76, t(6) = 2.86, p < 0.05, CI(r) = -0.95 ≤ r ≤ -0.12 (see Figure 8).
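The t statistic reported for the correlation can be recovered from r and the sample size with the standard conversion t = r√(n − 2)/√(1 − r²). The sketch below applies that formula to the reported r for the 8 pooled pigeons; the function name `t_from_r` is an illustrative assumption, and the check is offered only as arithmetic, not as a reanalysis of the data.

```python
import math


def t_from_r(r, n):
    """Convert a Pearson correlation to a t statistic with n - 2 degrees of freedom."""
    if n < 3 or abs(r) >= 1:
        raise ValueError("need n >= 3 and |r| < 1")
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    return t, df


# Reported correlation for the 8 pigeons pooled across the Early and Late groups.
t_stat, df = t_from_r(r=-0.76, n=8)
print(f"t({df}) = {t_stat:.2f}")
```

The computed value carries the sign of r; its magnitude agrees with the reported t(6) = 2.86 to two decimal places.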

Figure 8.

Experiment 2: Correlation between degree of inhibition as assessed early and late in training and degree of choice of the suboptimal alternative.

Discussion

The results of Experiment 2 confirmed the findings from Experiment 1. Although there was considerable inhibition found on the Early test of inhibition, there was a significant reduction of inhibition on the Late test of inhibition. The between groups design of Experiment 2 avoided the potential problem with the test-retest design that may have biased the results of Experiment 1; however, the similarity of findings between the two experiments suggests that retesting in Experiment 1 probably did not play an important role in the effects found.

General Discussion

The purpose of the present experiments was to test the hypothesis that conditioned inhibition to the S0 stimulus would be large when there was a preference for the 3-pellet (nondiscriminative stimulus) alternative and would decline once a preference for the discriminative stimulus alternative developed. This hypothesis was based on our frequent finding that pigeons show an initial preference for the optimal (3-pellet) alternative before they develop the strong preference that they eventually have for the suboptimal alternative. Support for our hypothesis was the earlier finding that extended training with a simultaneous discrimination can lead to reduced inhibition as assessed by a preference test between an overtrained and undertrained S- stimulus (Biederman, 1968; see also D'Amato & Jagoda, 1961).

In two experiments we assessed the possibility that a similar decline might be observed in a successive discrimination using a compound-cue test of inhibition. Consistent with this hypothesis we found a significant decrease in inhibition from the Early test of inhibition, when the pigeons chose optimally, to the Late test of inhibition, when the pigeons chose suboptimally. Furthermore, in the second experiment, when we pooled the data from the Early and Late-test groups, we found a significant negative correlation between the level of inhibition and degree of suboptimal choice. This finding supports the conclusion that when pigeons prefer the alternative that is associated with 3 pellets of reinforcement, they tend to have greater levels of inhibition than when they prefer the suboptimal alternative associated with 2 pellets overall. The results of both experiments indicate that the reduction in inhibition likely plays a role in the reversal in preference from the nondiscriminative stimuli associated with the 3-pellet alternative to the discriminative stimuli associated with the mean of 2 pellets overall.
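The "2 pellets overall" figure follows directly from the outcome probabilities of the discriminative stimulus alternative. A minimal Python sketch of that arithmetic is given below; the function and variable names are ours, and exact fractions are used only to avoid rounding.

```python
from fractions import Fraction


def expected_pellets(outcomes):
    """Expected pellets per choice from (probability, pellets) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if total_probability != 1:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * pellets for p, pellets in outcomes)


# Discriminative stimulus alternative: S10 on 20% of trials, S0 on 80%.
discriminative = [(Fraction(1, 5), 10), (Fraction(4, 5), 0)]
# Nondiscriminative alternative: 3 pellets on every trial.
nondiscriminative = [(Fraction(1), 3)]

print(expected_pellets(discriminative), expected_pellets(nondiscriminative))
```

On this accounting the nondiscriminative alternative yields 50% more food per choice than the alternative the pigeons come to prefer.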

It is interesting to consider how the rates of acquisition of excitatory and inhibitory conditioning in this task may have interacted to produce choice behavior. Specifically, it is possible that conditioned inhibition developed at a faster rate than it normally would have in a more typical design with equal frequencies of the stimuli. This is because in our experiment, the conditioned inhibitor S0 was presented four times as often as the conditioned reinforcer S10. Early in training the inhibition would have subtracted from the slowly developing conditioned reinforcement to the S10 stimulus. On the other alternative, the conditioned reinforcers (S3-2 and S3-8) occurred 100% of the time, and thus, early in training, conditioned reinforcement associated with that alternative would have been greater than the conditioned reinforcement associated with the gambling-like alternative. For this reason one would expect an initial preference for the optimal (3-pellet) alternative. However, with extended training, conditioned inhibition declines and conditioned reinforcement associated with the signal for 10 pellets on the discriminative stimulus alternative would reach an asymptotic level. At this later point in training, the conditioned reinforcement associated with the discriminative stimulus alternative would be greater than that of the 3-pellet alternative, which would result in suboptimal choice.

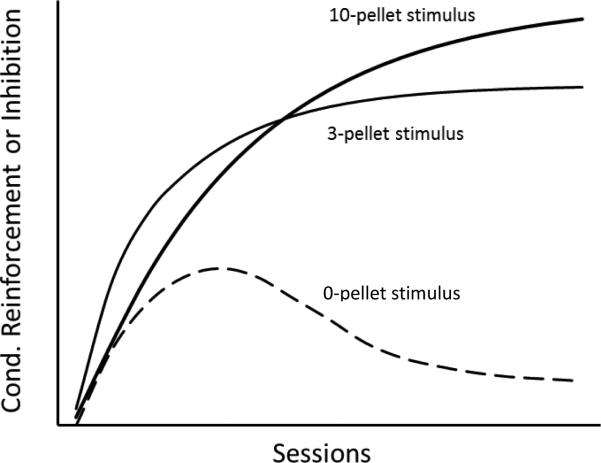

A plot of the hypothetical growth of conditioned reinforcement associated with the S10 stimulus and the S3 stimulus, and conditioned inhibition associated with the S0 stimulus appears in Figure 9. Note that conditioned reinforcement associated with the S3 stimulus rises at a faster rate than the S10 stimulus because on forced trials there are many more presentations of the S3 stimulus (although there were two S3 stimuli it is assumed that the net level of conditioned reinforcement associated with that alternative grew at a rate of at least that of the more frequently occurring stimulus, S3-8) but that at asymptote, conditioned reinforcement associated with the S10 stimulus is higher than conditioned reinforcement associated with the S3 stimulus. Note also that conditioned inhibition (indicated by the dashed line) first rises and then falls as we found in the present research.

Figure 9.

Hypothetical growth of conditioned reinforcement associated with the 10-pellet and 3-pellet stimuli, and conditioned inhibition associated with the 0-pellet stimulus. Note that the response strength associated with the 3-pellet stimulus is hypothesized to grow faster than the response strength associated with the 10-pellet stimulus because it occurs five times as often (on forced trials) but it reaches a lower asymptote. Conditioned inhibition associated with the 0-pellet stimulus first rises and then falls as assessed in the present research.
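The qualitative pattern described for Figure 9 can be reproduced with a purely illustrative Python sketch. Everything quantitative here is an assumption made for illustration and is not taken from the present data: the negatively accelerated growth functions, the rise-and-fall form of the inhibition curve, the simple subtraction of inhibition from S10 excitation, and all constants (asymptotes, rate, and peak). Only the ordering of asymptotes and the fivefold difference in presentation frequency come from the text.

```python
from math import exp

RATE = 0.1              # assumed growth rate per session for S10
S10_ASYMPTOTE = 1.0     # assumed; higher than the S3 asymptote, as in the text
S3_ASYMPTOTE = 0.6      # assumed
INHIBITION_PEAK = 0.4   # assumed peak height of inhibition to S0
PEAK_SESSION = 6.0      # assumed session at which inhibition peaks


def s10_strength(session):
    """Conditioned reinforcement to S10: slow growth to a high asymptote."""
    return S10_ASYMPTOTE * (1 - exp(-RATE * session))


def s3_strength(session):
    """Conditioned reinforcement to S3: grows five times faster (five times
    as many forced-trial presentations) but to a lower asymptote."""
    return S3_ASYMPTOTE * (1 - exp(-5 * RATE * session))


def s0_inhibition(session):
    """Conditioned inhibition to S0: rises to a peak and then dissipates."""
    x = session / PEAK_SESSION
    return INHIBITION_PEAK * x * exp(1 - x)


def discriminative_value(session):
    """Assumed net value of the suboptimal alternative: S10 minus S0."""
    return s10_strength(session) - s0_inhibition(session)


for session in (6, 35):
    print(f"session {session}: discriminative = "
          f"{discriminative_value(session):.2f}, "
          f"3-pellet = {s3_strength(session):.2f}")
```

With these assumed values, the 3-pellet alternative has the higher net value around the time of the Early test and the discriminative stimulus alternative has the higher net value around the time of the Late test, which is the reversal the hypothesis describes.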

One might argue that because the peck rate to S10 was comparable on the Early and Late tests, we do not have evidence that with extended training excitation to S10 increases. However, it is very likely that a behavioral ceiling had been reached: the pigeons were already responding at a high rate by that point, so we were not able to detect an increase in excitation with the response rate measure. On the other hand, the continued increase in choice of the suboptimal alternative suggests that S10 excitation continued to grow for some time after the first test. Thus, the increase in excitation to S10 as a function of additional training appears to have generalized to the initial choice link.

In the present experiment, although inhibition appears to have dissipated with continued training, one may ask why excitation did not dissipate as well. Evidence dating back to Pavlov suggests that excitation also may dissipate (see also Urcelay, Witnauer, & Miller, 2012). However, in our experiment, because the S10 occurred relatively infrequently as compared to the S0, there may not have been sufficient opportunity for excitation to dissipate. In any case, an asymmetry in the effects of overtraining on excitation and inhibition is reasonable given the differing probabilities of the occurrence of S10 and S0 in our task.

The question remains as to why inhibition diminishes with continued training. One possibility is that the amount of inhibition depends on the contrast between what is expected at the time of choice of the initial link and the stimulus that appears in the terminal link. Early in training, reinforcement is uncertain, so the absence of reinforcement upon the appearance of S0 produces contrast and inhibition. Later in training, however, the high probability of the occurrence of S0 should raise the expectation of nonreinforcement and reduce the contrast that occurs on nonreinforced trials, thus reducing inhibition. What is paradoxical about this account is that it leads to the conclusion that the pigeons are choosing to gamble with the expectation of losing. However, it is likely that the strong positive contrast that results whenever S10 occurs accounts for choice of the suboptimal alternative, especially if the appearance of S0 is associated with very little inhibition.

Alternatively, the reduction of inhibition with continued training may result from the mechanism that Capaldi (1967) proposed to account for the partial reinforcement extinction effect, an account that he called sequential theory. According to sequential theory, whenever reinforcement occurs following choice of the suboptimal alternative, it serves to reinforce all of the immediately past nonreinforced choices of the suboptimal alternative (back to the last reinforced choice of that alternative). Thus, if one represents a reinforced choice of the suboptimal alternative as R and a nonreinforced choice of the suboptimal alternative as N, one could imagine a series of choices of the suboptimal alternative as, for example, NNNNR (or others such as NNNNNR or NNNR). According to Capaldi, the R reinforces the series of Ns that preceded it, and thus the Ns come to take on some degree of higher-order conditioned reinforcing value.

Once inhibition has declined and conditioned reinforcement to the S10 stimulus has fully developed, it appears that the overall probability of reinforcement associated with each alternative does not play an important role in the pigeons’ choice. In fact, recent findings from our lab suggest that the frequency with which positive conditioned stimuli occur generally does not play an important role in choice behavior for pigeons (Stagner, Laude & Zentall, 2012). In that experiment we manipulated the probability of reinforcement between two alternatives that each resulted in the presentation of discriminative stimuli. If the pigeon chose one alternative, on 20% of the trials it received an S+ (always followed by reinforcement) and on the remaining trials, an S- (never followed by reinforcement). If the pigeon chose the other alternative, on 50% of the trials it received an S+ (always followed by reinforcement) and on the remaining trials, an S- (never followed by reinforcement). Thus, the choice was between 20% reinforcement and 50% reinforcement. Under these conditions, although there was a clear difference in the probability of reinforcement associated with the two alternatives, the pigeons were indifferent between them. The results of this experiment suggest that the pigeons’ choice is based on the comparison of the best outcome associated with each alternative, in both cases a stimulus associated with 100% reinforcement, rather than the probability of reinforcement associated with choice of either of the initial link stimuli.

The purpose of the present experiments was to compare inhibition at a point at which inhibition was thought to be maximal with inhibition later in training (at asymptote). However, the point in training of maximal inhibition could only be approximated. Examination of individual choice functions suggests that the point at which several of the pigeons were tested early was not the point at which they had a maximum preference for the 3-pellet alternative. For this reason we may not have tested the pigeons at the point at which we hypothesized that the greatest amount of inhibition occurred, and we may have underestimated the maximum amount of inhibition.

On the other hand, the amount of inhibition assessed with the combined-cue test may actually overestimate the inhibition to the S0 stimulus because there may have been some decline in responding to the combined cue due to its novelty. However, in the between groups design of Experiment 2, the fact that the results of the Early inhibition test were compared with the results of the Late inhibition test should have controlled for the novelty of the test stimuli. Although in Experiment 1 the late combined-cue test would have been less novel than the early combined-cue test, the similarity of results between the two experiments suggests that the novelty of the combined cues did not play a significant role in the amount of inhibition found.

A similar argument can be made for the possibility that reduced responding to the test stimulus compound occurred because of a generalization decrement, but once again those effects should have been similar on the Early and Late tests in Experiment 2, so comparison of the difference between inhibition on the Early and Late tests should have been unbiased. Furthermore, in both experiments, responding to the S10 alone was comparable at the time of the two tests; thus it is appropriate to compare the amount of inhibition at the two test times. The only variation in responding we found on the two tests within each experiment was in the compound-cue test of inhibition between Early and Late tests. Moreover, in Experiment 1, although responding did decline to the S10 stimulus on the FT schedule following the Early test, causing us to switch to an FI schedule, at the time of the Late test, responding was comparable to what it was when an FI schedule was used for both Early and Late tests in Experiment 2, ps > .05. Responding to S0 was also comparable across experiments for both tests, ps > .05.

With regard to the proposal that the present task is a reasonable analog of a human gambling task, it could be argued that the procedures used in the present experiment are unlike those that gamblers encounter because of the presence of conditioned reinforcers and conditioned inhibitors in the present research that may not be present in the games of chance that gamblers typically encounter. However, on reflection one can see that both conditioned excitors and conditioned inhibitors exist in human gambling contexts, albeit perhaps more subtly, in the form of the symbols that appear on the wheels of a slot machine and the numbers that appear on a lottery ticket. To better appreciate the presence of those conditioned stimuli, one need only imagine the effect on human gambling of the absence of such cues (i.e., if the slot machine wheels were covered and money merely appeared, or if there were no numbers on a lottery ticket and the winners merely received a check in the mail).

The high probability of signals for nonreinforcement in the present experiments is analogous to what happens typically when humans gamble. Thus, a similar decline in conditioned inhibition to stimuli that signal a losing outcome could contribute to the acquisition and maintenance of a gambling problem in humans. Furthermore, our results with pigeons suggest that this suboptimal behavior can emerge even in humans who are not initially attracted to suboptimal alternatives if they are exposed to gambling environments for an extended time. That is, this behavior may emerge by way of conditioning even when losing is initially aversive and even in the absence of certain risk factors such as increased behavioral impulsivity (Laude et al., 2013; Nower & Blaszczynski, 2006).

The results of the present experiments contribute to the understanding of the mechanisms underlying suboptimal choice in pigeons. With the present procedure, it appears that conditioned inhibition develops at a rapid rate because of the more frequent occurrence of the S0 stimulus, resulting in choice of the 3-pellet alternative. However, as inhibition declines and conditioned reinforcement increases, the pigeons come to prefer the discriminative stimulus alternative. Thus, the net result is that choice is not based on the overall probabilities of reinforcement associated with the two alternatives. In this regard, it is noteworthy that Breen and Zuckerman (1999; see also Blanco, Ibáñez, Sáiz-Ruiz, Blanco-Jerez, & Nunes, 2000) found that humans who gamble regularly attend more to their wins and less to their considerably more frequent losses than occasional gamblers. Thus, the finding that the declining aversiveness of nonreinforcement and the increase in conditioned reinforcement affect suboptimal choice by pigeons may have implications for human gambling behavior.

References

- Alessi SM, Petry NM. Pathological gambling severity is associated with impulsivity in a delay discounting procedure. Behavioural Processes. 2003;64:345–354. doi: 10.1016/s0376-6357(03)00150-5.

- Biederman GB. The overlearning reversal effect. A function of the non-monotonicity of S- during discriminative training. Psychonomic Science. 1967;7:385–386.

- Biederman GB. Stimulus function in simultaneous discrimination. Journal of the Experimental Analysis of Behavior. 1968;11:459–463. doi: 10.1901/jeab.1968.11-459.

- Biederman GB. Continuity theory revisited: A failure in a basic assumption. Psychological Review. 1970;77:255–256.

- Blanco C, Ibanez A, Saiz-Ruiz J, Blanco-Jerez C, Nunes E. Epidemiology, pathophysiology and treatment of pathological gambling. CNS Drugs. 2000;13:397–407.

- Blaszczynski A, Nower L. A pathways model of problem and pathological gambling. Addiction. 2002;97:487–499. doi: 10.1046/j.1360-0443.2002.00015.x.

- Bradshaw CM, Szabadi E. Choice between delayed reinforcers in a discrete-trials schedule: the effect of deprivation. The Quarterly Journal of Experimental Psychology. 1992;44:1–16. doi: 10.1080/02724999208250599.

- Breen RB, Zuckerman M. ‘Chasing’ in gambling behavior: Personality and cognitive determinants. Personality and Individual Differences. 1999;27:1097–1111.

- D'Amato MR, Jagoda H. Analysis of the role of over-learning in discrimination learning. Journal of Experimental Psychology. 1961;61:45–50. doi: 10.1037/h0047757.

- Deutsch JA, Biederman GB. The monotonicity of the negative stimulus during learning. Psychonomic Science. 1965;3:391–392.

- Dinsmoor JA. Observing and conditioned reinforcement. The Behavioral and Brain Sciences. 1983;6:693–728.

- Dixon MR, Marley J, Jacobs EA. Delay discounting by pathological gamblers. Journal of Applied Behavior Analysis. 2003;36:449–458. doi: 10.1901/jaba.2003.36-449.

- Eisenberger R, Masterson FA, Lowman K. Effects of previous delay of reward, generalized effort, and deprivation on impulsiveness. Learning and Motivation. 1982;13:378–389.

- Field M, Cox WM. Attentional bias in addictive behaviors: A review of its development, causes, and consequences. Drug and Alcohol Dependence. 2008;97:1–20. doi: 10.1016/j.drugalcdep.2008.03.030.

- Gipson CD, Alessandri JD, Miller HC, Zentall TR. Preference for 50% reinforcement over 75% reinforcement by pigeons. Learning & Behavior. 2009;37:289–298. doi: 10.3758/LB.37.4.289.

- Hearst E, Besley S, Farthing GW. Inhibition and the stimulus control of operant behavior. Journal of the Experimental Analysis of Behavior. 1970;14:373–409. doi: 10.1901/jeab.1970.14-s373.

- Hearst E. Some persistent problems in the analysis of conditioned inhibition. In: Boakes RA, Halliday MS, editors. Inhibition and learning. Academic Press; London: 1972. pp. 5–39.

- Herrnstein RJ. Self-control as response strength. In: Bradshaw CM, Szabadi E, Lowe CF, editors. Quantification of steady-state operant behaviour. Elsevier North-Holland; Amsterdam: 1981. pp. 3–20.

- Holland PC, Rescorla RA. The effect of two ways of devaluing the unconditioned stimulus after first- and second-order appetitive conditioning. Journal of Experimental Psychology: Animal Behavior Processes. 1975;1:355–363. doi: 10.1037//0097-7403.1.4.355.

- Holst RJ, Brink W, Veltman DJ, Goudriaan AE. Why gamblers fail to win: A review of cognitive and neuroimaging findings in pathological gambling. Neuroscience and Biobehavioral Reviews. 2010;34:87–107. doi: 10.1016/j.neubiorev.2009.07.007.

- Hull CL. Principles of behavior. Appleton-Century-Crofts; New York: 1943.

- Laude JR, Pattison KF, Zentall TR. Hungry pigeons make suboptimal choices, less hungry pigeons do not. Psychonomic Bulletin & Review. 2012;19:884–891. doi: 10.3758/s13423-012-0282-2.

- Lyk-Jensen SV. New evidence from the grey area: Danish results for at-risk gambling. Journal of Gambling Studies. 2010;26:455–467. doi: 10.1007/s10899-009-9173-5.

- MacKillop J, Anderson EJ, Castelda BA, Mattson RE, Donovick PJ. Divergent validity of measures of cognitive distortions, impulsivity, and time perspective in pathological gambling. Journal of Gambling Studies. 2006;22:339–354. doi: 10.1007/s10899-006-9021-9.

- Mackintosh NJ. Overtraining, reversal and extinction in rats and chicks. Journal of Comparative and Physiological Psychology. 1965;59:31–26. doi: 10.1037/h0021620.

- Molet M, Miller HC, Laude JR, Kirk C, Manning B, Zentall TR. Decision making by humans in a behavioral task: Do humans, like pigeons, show suboptimal choice? Learning & Behavior. 2012;40:439–447. doi: 10.3758/s13420-012-0065-7.

- Nower L, Blaszczynski A. Impulsivity and pathological gambling: A descriptive model. International Gambling Studies. 2006;6:61–75.

- Rescorla RA. Summation and retardation tests of latent inhibition. Journal of Comparative and Physiological Psychology. 1971;75:77–81. doi: 10.1037/h0030694.

- Rescorla RA. Pavlovian conditioned inhibition. Psychological Bulletin. 1969;72:77–94.

- Roberts WA. Short-term memory in the pigeon: Effects of repetition and spacing. Journal of Experimental Psychology. 1972;94:74–83.

- Roper KL, Zentall TR. Observing behavior in pigeons: The effect of reinforcement probability and response cost using a symmetrical choice procedure. Learning and Motivation. 1999;30:201–220.

- Spence KW. The nature of discriminative learning in animals. Psychological Review. 1936;43:427–449.

- Shaffer HJ, Hall MN, Vander Bilt J. Estimating the prevalence of disordered gambling in the United States and Canada: a research synthesis. American Journal of Public Health. 1999;89:1369–1376. doi: 10.2105/ajph.89.9.1369.

- Shannon CE, Weaver W. The Mathematical Theory of Communication. University of Illinois Press; Champaign, IL: 1949.

- Sharpe L. A reformulated cognitive-behavioral model of problem gambling: A biopsychosocial perspective. Clinical Psychology Review. 2002;22:1–25. doi: 10.1016/s0272-7358(00)00087-8.

- Snyderman M. Optimal prey selection: the effects of food deprivation. Behavior Analysis Letters. 1983;3:359–369.

- Stagner JP, Laude JR, Zentall TR. Effect of non-reinforced stimulus saliency on suboptimal choice in pigeons. Learning and Motivation. 2011;42:282–287. doi: 10.1016/j.lmot.2011.03.002.

- Stagner JP, Laude JR, Zentall TR. Pigeons prefer discriminative stimuli independently of the overall probability of reinforcement and of the number of presentations of the conditioned reinforcer. Journal of Experimental Psychology: Animal Behavior Processes. 2012;38:446–452. doi: 10.1037/a0030321.

- Stagner JP, Zentall TR. Suboptimal choice behavior by pigeons. Psychonomic Bulletin & Review. 2010;17:412–416. doi: 10.3758/PBR.17.3.412.

- Stephens DW, Krebs JR. Foraging theory. Princeton University Press; Princeton, NJ: 1986.

- Sutherland NS. The learning of discrimination by animals. Endeavour. 1964;23:148–152. doi: 10.1016/0160-9327(64)90007-9.

- Thorndike EL. Animal intelligence. Macmillan; New York: 1911.

- Urcelay, Witnauer, Miller. The dual role of the context in postpeak performance decrements resulting from extended training. Learning & Behavior. 2012;40:476–493. doi: 10.3758/s13420-012-0068-4.

- Williams BA. Conditioned reinforcement: Neglected or outmoded explanatory construct? Psychonomic Bulletin & Review. 1994;4:257–475. doi: 10.3758/BF03210950.

- Wagner AR, Rescorla RA. Inhibition in Pavlovian conditioning: Application of a theory. In: Boakes RA, Halliday MS, editors. Inhibition and learning. Academic Press; London: 1972. pp. 301–336.

- Wyckoff LB Jr. The role of observing responses in discrimination learning: Part I. Psychological Review. 1952;59:431–442. doi: 10.1037/h0053932.

- Zentall TR, Laude JR. Do pigeons gamble? I wouldn't bet against it. Current Directions in Psychological Science. in press.

- Zentall TR, Stagner JP. Maladaptive choice behavior by pigeons: An animal analog of gambling (sub-optimal human decision making behavior). Proceedings of the Royal Society: Biological Sciences. 2011;278:1203–1208. doi: 10.1098/rspb.2010.1607.